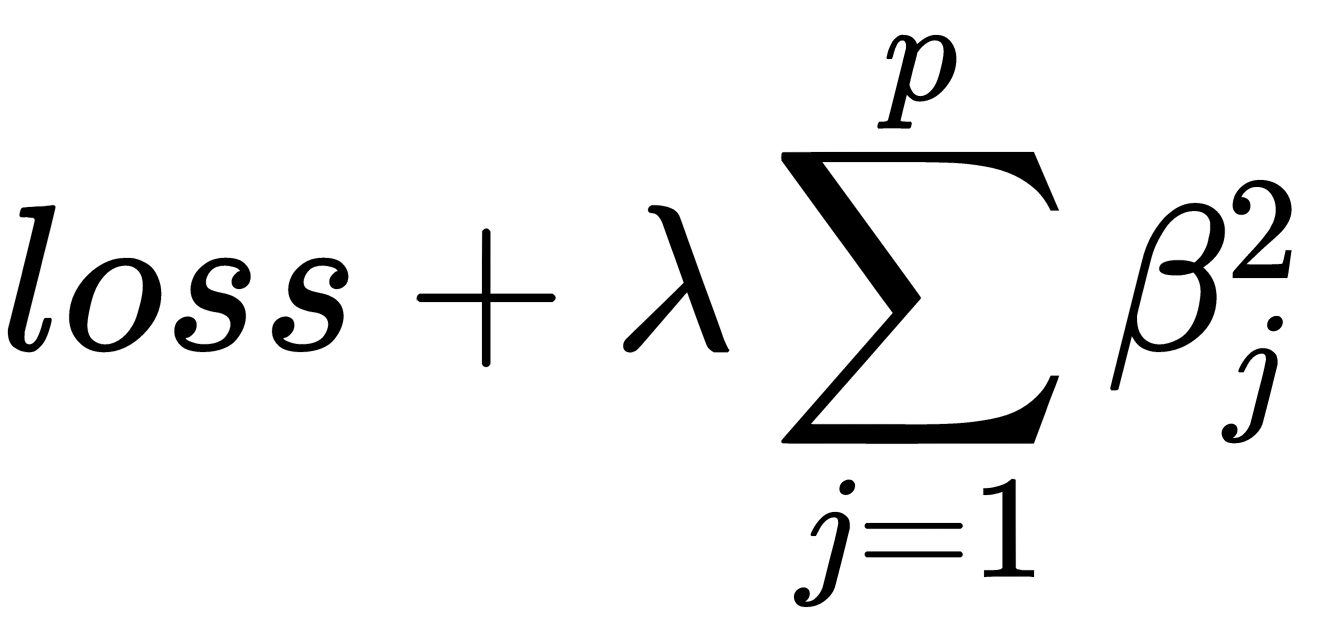

L2 regularization also adds a term to the loss function:
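
In one common notation (the symbols $L$, $w_j$, and $m$ are assumptions here, and some texts scale the penalty by $\frac{1}{2}$ or by the number of samples), the regularized loss can be sketched as:

$$
L_{\text{reg}}(w) = L(w) + \lambda \sum_{j=1}^{m} w_j^2
$$

Here $L(w)$ is the original loss, $w_1, \dots, w_m$ are the model parameters, and $\lambda \ge 0$ is the regularization coefficient. Under this convention, the penalty contributes $2 \lambda w_j$ to the partial derivative with respect to $w_j$.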

This additional term adds the squared magnitude of the parameters as a penalty. As with L1 regularization, λ is the regularization coefficient: higher values lead to stronger regularization and can cause underfitting. Another name for this regularization type is Ridge regularization. Unlike L1 regularization, this type does not perform feature selection, because it shrinks parameters toward zero without setting them exactly to zero. Instead, we can interpret it as a way to control model smoothness. In addition, L2 regularization is convenient for gradient descent-based optimizers because the squared penalty is differentiable everywhere and its gradient has a simple closed form that is proportional to the parameters themselves.
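
To illustrate how the penalty enters a gradient descent update, the following is a minimal sketch in plain C++ that does not use Shark-ML or Shogun. It fits a one-parameter model y ≈ w * x by minimizing the mean squared error plus λw². The function names `l2_penalty_gradient` and `fit_ridge_1d`, the default learning rate, and the iteration count are all hypothetical choices made for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Gradient of the L2 penalty lambda * w^2 with respect to w.
double l2_penalty_gradient(double w, double lambda) {
  return 2.0 * lambda * w;
}

// Fits y ~ w * x with plain gradient descent on
//   (1/n) * sum_i (w * x_i - y_i)^2 + lambda * w^2.
// Illustrative helper only; not part of any library.
double fit_ridge_1d(const std::vector<double>& x,
                    const std::vector<double>& y,
                    double lambda,
                    double learning_rate = 0.05,
                    int iterations = 1000) {
  assert(x.size() == y.size() && !x.empty());
  const double n = static_cast<double>(x.size());
  double w = 0.0;
  for (int it = 0; it < iterations; ++it) {
    // Gradient of the mean squared error term.
    double grad = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
      grad += 2.0 * (w * x[i] - y[i]) * x[i] / n;
    }
    // The regularizer only adds a term proportional to w itself.
    grad += l2_penalty_gradient(w, lambda);
    w -= learning_rate * grad;
  }
  return w;
}
```

Calling `fit_ridge_1d` on the same data with a larger `lambda` yields a smaller weight, which is the shrinkage effect described above. The weight moves toward zero but does not become exactly zero, so no feature is removed.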

The Shark-ML library provides the shark::TwoNormRegularizer class, whose instances can be added to trainers to perform L2 regularization. In the Shogun library, regularization is usually built into the model (algorithm) classes and cannot be changed. An example of such an algorithm is the CKernelRidgeRegression class, which implements kernel ridge regression, a regression model with L2 regularization (with a linear kernel, it reduces to linear ridge regression).