
The player’s primary logic operates within the known possibilities of physics. Keep in mind gravity, weight, mass, density, force, buoyancy, elasticity, etc. Use this as the starting point, but do not be limited by it.

Matt Allmer

An understanding of motion and the driving forces thereof is crucial in understanding games. Most objects in games move. That is what makes them dynamic. Whether it be a two-dimensional (2D) character such as the original Mario or a fully-fledged three-dimensional (3D) character such as Geralt in The Witcher 3, they and their game environments are in constant motion.

To grasp the concept of motion, especially in computer games, a foundational knowledge of vector mathematics is required. Vectors are used extensively in game development. They describe positions, speed, acceleration, and direction, and within 3D models they also specify UV texturing, lighting properties, and other special effects.

Before leaping into the use of vectors in games for defining motion, a crash course in essential vector mathematics for game environments is presented in the next section.

In Chapter 1, a vector was introduced as a line with a length (magnitude) and a direction (indicated by an arrow). Vectors can be used to represent measurements such as displacement, velocity, and acceleration. In 2D, a vector has x and y coordinates. In 3D, it has x, y, and z coordinates. In pure mathematics, a vector is not a point in space but a set of instructions for changing coordinates. It can be likened to the instructions on a fictional pirate’s treasure map, for example "take three steps to the east and seven steps to the south." As shown in Figure 2.1, the instruction "three steps to the east" can be interpreted as the vector (3,0). This means move 3 in the positive x direction and nothing in the y direction. The instruction "seven steps to the south" becomes the vector (0,−7), which means move 7 in the negative y direction only.

To determine the final location, the vector’s x and y values are added to the starting point’s x and y values. For example, in Figure 2.1 the pirate ship lands at (4,8), and moving (3,0) will place the pirates at (4 + 3, 8 + 0) = (7,8). Then moving (0,−7) will put them at (7 + 0, 8 − 7) = (7,1). They can also take a shortcut by going directly to the treasure in a straight line. In this case, the two instruction vectors (3,0) and (0,−7) are added together to become (3,−7). By adding this new vector to the starting location, they will end up in the same place: (4 + 3, 8 − 7) = (7,1).

To travel from the treasure back to the ship, the pirates can follow the same line in the opposite direction. This is achieved by flipping the vector, which means multiplying all its coordinate values by −1. In this case, to get back to the ship, they follow the vector (−3,7).

FIG 2.1 A pirate’s treasure map illustrating the use of vectors.

It might also be useful for the pirates to know how far the treasure is from the ship. The length of a vector v = (x, y) is called its magnitude and is written |v|. It is found using the Pythagorean theorem:

$$|\mathbf{v}| = \sqrt{x^2 + y^2} \tag{2.1}$$

In 3D, the z coordinate is included in the same way: $|\mathbf{v}| = \sqrt{x^2 + y^2 + z^2}$.

For the pirates, this means that their journey has a length of 7.62 (kilometers, if the units being used are kilometers), that is,

$$|(3,-7)| = \sqrt{3^2 + (-7)^2} = \sqrt{58} \approx 7.62$$

Sometimes it is necessary to scale a vector so that it has a length equal to 1. This process is called normalizing, and the resultant vector, which still points in the same direction, is called a unit vector. To find the unit vector, each coordinate of the vector is divided by the vector’s length. For the pirate’s journey, this gives (3/7.62, −7/7.62) = (0.39,−0.92). If the pirate takes 0.39 steps to the east and 0.92 steps to the south, he will end up a distance of 1 from his starting position, right on the original vector, as shown in Figure 2.2. The vectors (3,−7) and (0.39,−0.92) are parallel, and the magnitude of (0.39,−0.92) is 1 (allowing for rounding).

The unit vectors for north, south, east, and west as they would be overlaid on a map of the earth* are (0,1), (0,−1), (1,0), and (−1,0).
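The pirate’s arithmetic can be reproduced in a few lines of C#. The sketch below does not depend on any engine. It uses a hypothetical `Vec2` struct written only for illustration. This is not Unity’s `Vector2`, although that type offers comparable operations such as `magnitude` and `normalized`. The sketch shows addition, flipping, magnitude (Equation 2.1), and normalization.

```csharp
using System;

// Hypothetical 2D vector type for illustration only (not Unity's Vector2).
public readonly struct Vec2
{
    public readonly double X;
    public readonly double Y;

    public Vec2(double x, double y) { X = x; Y = y; }

    // Component-wise addition: follow one set of "steps" after another.
    public static Vec2 operator +(Vec2 a, Vec2 b) => new Vec2(a.X + b.X, a.Y + b.Y);

    // Flipping a vector multiplies every coordinate by -1.
    public static Vec2 operator -(Vec2 a) => new Vec2(-a.X, -a.Y);

    // Length via the Pythagorean theorem (Equation 2.1).
    public double Magnitude => Math.Sqrt(X * X + Y * Y);

    // Divide each coordinate by the length to get a unit vector (assumes a non-zero vector).
    public Vec2 Normalized()
    {
        double len = Magnitude;
        return new Vec2(X / len, Y / len);
    }

    public override string ToString() => $"({X:0.##}, {Y:0.##})";
}
```

For example, `new Vec2(4, 8) + new Vec2(3, -7)` gives (7, 1). `-(new Vec2(3, -7))` gives (−3, 7). `new Vec2(3, -7).Magnitude` is √58, about 7.62.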

Two further important calculations can be performed with vectors: the dot product and the cross product. Their use in computer graphics and games programming will become overwhelmingly clear later. Among other things, the dot product can be used to calculate the angle between two vectors. The cross product can be used to determine the direction of the turn from one to the other.

FIG 2.2 A normalized vector has a length of 1.

FIG 2.3 A pirate facing (1, 0) and the vector to the treasure.

The dot product is calculated by taking two vectors, v and w, and multiplying their respective coordinates together and then adding them. The dot product results in a single value. It can be calculated using the following equation:

$$\mathbf{v} \cdot \mathbf{w} = v_x w_x + v_y w_y \tag{2.2}$$

Take the vectors v = (1,0) and w = (3,−7), which are the direction the pirate is facing and the direction to the treasure (shown in Figure 2.3). Their dot product is 1 × 3 + 0 × (−7) = 3.

But what does this mean? The most useful application of the dot product is working out the angle between two vectors. In a moment, we will work out the actual value of the angle, but for now, by just knowing the value of the dot product you can determine how the vectors sit in relation to each other. If the dot product is greater than zero, the vectors are less than 90° apart; if the dot product equals zero, then they are at right angles (perpendicular); and if the dot product is less than zero, then they are more than 90° apart.

To find the exact angle the pirate must turn to face the treasure, we calculate the arccosine of the dot product of the two unit vectors. As already established, the unit vector for (3,−7) is (0.39,−0.92), and (1,0) is already a unit vector. The angle between the vectors is therefore:

$$
\begin{aligned}
\theta &= \arccos\left[(1,0)\cdot(0.39,-0.92)\right]\\
&= \arccos\left[(1 \times 0.39) + (0 \times -0.92)\right]\\
&= \arccos(0.39)\\
&\approx 67^\circ
\end{aligned}
$$

You can always check the result of your calculation by looking at a plot of the vectors and measuring them with a protractor. In this case, by taking a visual estimate, the angle is larger than 45° and less than 90°; therefore, the calculation appears to be correct.
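The following sketch covers the dot product, the sign test, and the arccosine step. The class and method names are hypothetical, and C# value tuples stand in for 2D vectors. The cosine is clamped to [−1, 1] before calling `Math.Acos`, to guard against rounding error. Unity’s `Vector3.Angle` also returns an unsigned angle in degrees.

```csharp
using System;

// Hypothetical helpers illustrating Equation 2.2 and the angle calculation.
public static class DotProductDemo
{
    // Multiply matching coordinates and add the results (Equation 2.2).
    public static double Dot((double X, double Y) v, (double X, double Y) w) =>
        v.X * w.X + v.Y * w.Y;

    public static (double X, double Y) Normalize((double X, double Y) v)
    {
        double len = Math.Sqrt(v.X * v.X + v.Y * v.Y);
        return (v.X / len, v.Y / len);
    }

    // Unsigned angle in degrees between two non-zero vectors.
    public static double AngleDegrees((double X, double Y) v, (double X, double Y) w)
    {
        double cos = Dot(Normalize(v), Normalize(w));
        cos = Math.Max(-1.0, Math.Min(1.0, cos));   // keep Acos in its valid domain
        return Math.Acos(cos) * 180.0 / Math.PI;
    }

    // The sign of the dot product alone tells how the vectors sit relative to each other.
    public static string Relationship((double X, double Y) v, (double X, double Y) w)
    {
        double d = Dot(v, w);
        if (d > 0) return "less than 90 degrees apart";
        if (d < 0) return "more than 90 degrees apart";
        return "perpendicular";
    }
}
```

This version does not round the unit vector first, so `AngleDegrees((1, 0), (3, -7))` returns about 66.8°. That value rounds to the 67° found above.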

Now imagine the pirate is told to turn 67° and walk for 7.62 km to get to the treasure. Which way does he turn? Figure 2.3 shows that he must decide whether to turn right or left.

By the usual mathematical convention, a positive angle indicates a counterclockwise turn. A counterclockwise turn of 67° in this case would leave the pirate facing away from the treasure. The angle calculated from the dot product is always positive, so another method is needed to determine the turn direction. This is where the cross product comes into play.

The cross product of two vectors results in another vector, which is perpendicular (at 90°) to both of the initial vectors. This sounds odd in 2D, because a vector at right angles to two vectors lying in the page would point straight out of the page. For this reason, the cross product is defined only for 3D vectors. The formula for the cross product is a little obtuse and requires further knowledge of vector mathematics, but we will try to make it as painless as possible.

The cross product of two vectors, v and w, denoted v × w is shown in the following equation:

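$$\mathbf{v} \times \mathbf{w} = (v_y w_z - v_z w_y)(1,0,0) + (v_z w_x - v_x w_z)(0,1,0) + (v_x w_y - v_y w_x)(0,0,1) \tag{2.3}$$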

The equation is defined in terms of standard 3D unit vectors. These vectors are three unit-length vectors oriented in the directions of the x, y, and z axes. If you examine Equation 2.3 you will notice that there are three parts added together. The first part determines the value of the x coordinate of the vector, as the unit vector (1,0,0) only has a value for the x coordinate. The same occurs in the other two parts for the y and z coordinates.

To find the cross product of two 2D vectors, the vectors first need to be converted into 3D coordinates. This is as easy as adding a zero z value. For example, v = (1,0) becomes v = (1,0,0), and w = (0.39,−0.92) becomes w = (0.39,−0.92,0). Then

$$
\begin{aligned}
\mathbf{v}\times\mathbf{w} &= (0 \times 0 - 0 \times -0.92)(1,0,0) + (0 \times 0.39 - 1 \times 0)(0,1,0) + (1 \times -0.92 - 0 \times 0.39)(0,0,1)\\
&= 0(1,0,0) + 0(0,1,0) - 0.92(0,0,1)\\
&= (0,0,-0.92)
\end{aligned}
$$

This vector has only a z coordinate, so it is directed along the z axis, perpendicular to the page.

Note that if the order of the vectors in the cross product is reversed, the resulting vector is different. w × v equals (0,0,0.92) (check this out!). This vector has the same length as the one produced by v × w, but it points in exactly the opposite direction. The dot product is different: it gives the same answer regardless of the order of the vectors.

How does this help the pirate determine the direction in which to turn?

If he starts by facing in the direction of v and wishes to turn to face w, we can calculate v × w. Figure 2.3 shows that w is on the pirate’s right, so reaching it requires a clockwise turn. The previous example showed that this clockwise turn produces a cross product with a negative z value. The opposite is true for a counterclockwise turn. Therefore, with x pointing right and y pointing up as in Figure 2.3, a positive z means a counterclockwise turn and a negative z means a clockwise turn.

The pirate now knows to turn to his right 67° clockwise and travel 7.62 km in a straight line to reach the treasure.
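Equation 2.3 and the turn-direction rule translate directly into code. This sketch assumes the orientation of Figure 2.3, with x pointing right and y pointing up. The names are hypothetical. 2D vectors are extended with z = 0 exactly as described above.

```csharp
using System;

// Hypothetical helpers illustrating Equation 2.3 and the turn-direction rule.
public static class CrossProductDemo
{
    // Each component of v x w, as in Equation 2.3.
    public static (double X, double Y, double Z) Cross(
        (double X, double Y, double Z) v, (double X, double Y, double Z) w) =>
        (v.Y * w.Z - v.Z * w.Y,
         v.Z * w.X - v.X * w.Z,
         v.X * w.Y - v.Y * w.X);

    // For 2D vectors, add z = 0; only the z component of the result can be non-zero.
    public static double CrossZ((double X, double Y) v, (double X, double Y) w) =>
        Cross((v.X, v.Y, 0), (w.X, w.Y, 0)).Z;

    // Positive z: counterclockwise turn; negative z: clockwise turn (x right, y up).
    public static string TurnDirection((double X, double Y) facing, (double X, double Y) target)
    {
        double z = CrossZ(facing, target);
        if (z > 0) return "counterclockwise";
        if (z < 0) return "clockwise";
        return "none";  // parallel: already facing the target or facing directly away
    }
}
```

`TurnDirection((1, 0), (3, -7))` returns `"clockwise"`, which matches the pirate’s turn to the right. Swapping the arguments returns `"counterclockwise"`.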

This may all seem obvious by looking at the map. However, objects in a game environment that have no visual point of reference, such as artificially controlled bots or vehicles, require these very calculations in order to move around successfully in a virtual environment.


Unity Specifics

Vectors

Every object in Unity has a number of vectors associated with it. A game object’s Transform component shows three: position, rotation (displayed as three Euler angles), and scale. Figure 2.4 shows the layout of a typical game environment with a robot model as a game object. In 3D, the y axis usually represents up, the x axis sideways, and the z axis forward. Both the world and each game object have their own set of axes. The axes are displayed in the Scene as red, green, and blue arrowed lines, as shown in Figure 2.4. The y/up axis is green, the x/side axis is red, and the z/forward axis is blue.

FIG 2.4 Vectors in the Unity 3D environment.

The world has its own axes. How they appear in the Scene depends on how you navigate the Scene view to look at different objects. In the Game, the orientation depends on the camera’s orientation. Each game object has its own local orientation, shown by a set of axes that appears in the Scene when the object is selected. So while the world y axis may be vertical in a scene, an object’s local y axis could be horizontal if the object is lying down.

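Unity provides vector structures including Vector2 and Vector3. A game object’s position and scale are stored as Vector3 values. Its rotation is stored internally as a Quaternion, although its Euler angles (the values shown in the Inspector) are available as a Vector3 through transform.eulerAngles. Vector2 is useful for storing 2D vector information.

A game object’s transform also provides a unit vector for each of its local x, y, and z axes: transform.right, transform.up, and transform.forward, respectively. These are useful for moving an object along its own axes without needing to work out its orientation. By contrast, Vector3.right, Vector3.up, and Vector3.forward are the fixed vectors (1,0,0), (0,1,0), and (0,0,1).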


On the Website

Vector2 and Vector3 Class Definitions

Detailed information about the Unity vector classes can be found in the Script Reference here:

http://unity3d.com/support/documentation/ScriptReference/Vector2.html

http://unity3d.com/support/documentation/ScriptReference/Vector3.html

Whether in 2D or 3D space, the principles of vectors are applied in the same way. As we saw with vectors, a 3D coordinate is just a 2D coordinate with one more value. In 3D game engines such as Unity, 2D games are created by ignoring one of the axes. In the rocket ship application in Section 2.4, all game objects are positioned in the same plane, with a z position value initially set to 0. All subsequent movements only move the objects in x and y and rotate them about the z axis. This is the same principle as moving objects around on a flat tabletop. In the rocket ship game, the camera looks straight down on the game objects, and an orthographic projection removes perspective to give the illusion of a truly 2D world.

The camera in a game is a critical component, as it presents the action to the player. It is literally the lens through which the game world is perceived. Understanding how the camera moves and how to set what it looks at is essential knowledge.

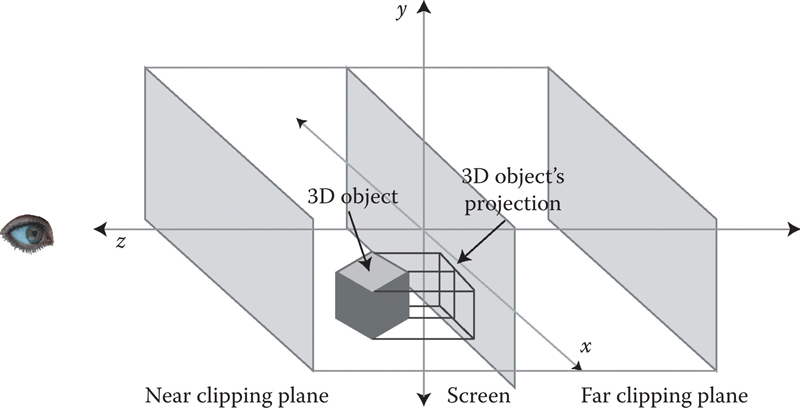

The camera in a game defines the visible area on the screen. In addition to defining the height and width of the view, the camera also sets the depth of what can be seen. The entire space visible to a camera is called the view volume. If an object is not inside the view volume, it is not drawn on the screen. The shape of the view volume can be set to orthographic or perspective. Both views are constructed from an eye position (representing the viewer’s location), a near clipping plane, the screen, and a far clipping plane.

An orthographic camera projects all points of 3D objects between the clipping planes in parallel onto a screen plane, as shown in Figure 2.5. The screen plane is the view the player ends up seeing. The viewing volume of an orthographic camera is the shape of a rectangular prism.

A perspective camera projects all points of 3D objects between the clipping planes back toward the eye, as shown in Figure 2.6. The near clipping plane becomes the screen. The viewing volume of a perspective camera is called the frustum, because it has the shape of a pyramid with the top cut off. The eye is located at the apex of the pyramid.
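The difference between the two projections shows up when a single point is projected. This is a simplified sketch, not how Unity implements its cameras. It assumes the point is already expressed relative to the eye, with the eye at the origin looking along +z. It also assumes the screen sits at the near-plane distance. The perspective case uses similar triangles and divides by depth, while the orthographic case simply drops depth. The names are hypothetical.

```csharp
using System;

// Hypothetical sketch: project a camera-space point (eye at the origin, looking along +z).
public static class ProjectionDemo
{
    // Orthographic: points travel parallel to the view direction, so depth is dropped.
    public static (double X, double Y) Orthographic((double X, double Y, double Z) p) =>
        (p.X, p.Y);

    // Perspective: the point is projected along a line toward the eye. By similar
    // triangles, x and y shrink in proportion to the point's depth z.
    public static (double X, double Y) Perspective((double X, double Y, double Z) p, double screenDistance)
    {
        if (p.Z <= 0) throw new ArgumentException("Point must be in front of the eye.");
        double scale = screenDistance / p.Z;
        return (p.X * scale, p.Y * scale);
    }
}
```

Take two building corners 5 units high, at depths 10 and 20. Orthographically, both project to a height of 5. With a perspective screen at distance 1, they project to 0.5 and 0.25. This is why distant buildings shrink with a perspective camera.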

The result of using a perspective and orthographic camera on the same scene in Unity is illustrated in Figure 2.7. A perspective camera is used in Figure 2.7a. The way in which perspective projections best show depth is evident from the line of buildings getting smaller as they disappear into the distance. This is not the case for the orthographic camera shown in Figure 2.7b. Depth can only be determined by which objects are drawn in front. The buildings appear to be flattened with no size difference between buildings in the distance. Figure 2.7c and d illustrate the way in which the camera view volume is displayed in Unity’s Editor Scene. If an object is not inside the view volume in the Scene, it will not appear on the screen in the Game.

FIG 2.5 Orthographic projection.

FIG 2.6 Perspective projection.

FIG 2.7 A 3D scene in Unity using (a) a perspective camera and (b) an orthographic camera. (c) The perspective camera’s frustum as displayed in the Unity scene. (d) The orthographic camera’s frustum as displayed in the Unity scene.


Unity Specifics

Cameras

When you first create a new project in Unity, it will come with a Main Camera in the Hierarchy. Selecting a camera reveals the settings in the Inspector. Examples for the orthographic and perspective cameras are shown in Figure 2.8a and b, respectively.

As you set the near and far plane values in the Inspector, you can watch the resulting frustum change in the Scene. For a perspective camera, you change the width and height of the viewing volume by modifying the field of view (FOV). The greater the field of view, the more the player will be able to see around their immediate area. To get a feel for the field of view, hold your arms out to the side and look straight ahead, as if to make a cross figure with your body. Slowly bring your arms around to your front until you can just see both hands out of the corners of your eyes (while still looking straight ahead). When your hands come into your peripheral vision, the angle your arms make is your field of view. The average human forward-facing field of view is close to 180°, while some birds can see almost 360°.

FIG 2.8 Settings for the Unity camera. a) Orthographic Camera b) Perspective Camera.

For an orthographic camera in Unity, the equivalent of the field of view is set by the Size property. This is half the height of the viewing volume in world units.
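Unity’s `Camera.fieldOfView` is the vertical viewing angle in degrees. `Camera.orthographicSize` is half the vertical size of the orthographic viewing volume, in world units. The sketch below does not depend on Unity, and its method names are hypothetical. It converts a vertical field of view to the corresponding horizontal angle for a given aspect ratio. It also works out how much of the world an orthographic camera of a given size shows.

```csharp
using System;

// Hypothetical helpers relating camera settings to what is visible.
public static class CameraViewDemo
{
    // Horizontal angle covered by a perspective camera whose field of view is
    // measured vertically, for a screen with the given aspect ratio (width / height).
    public static double HorizontalFovDegrees(double verticalFovDegrees, double aspect)
    {
        double halfV = verticalFovDegrees * Math.PI / 360.0;   // half-angle in radians
        double halfH = Math.Atan(Math.Tan(halfV) * aspect);    // half-angle across the width
        return halfH * 360.0 / Math.PI;                        // full angle in degrees
    }

    // World-space width and height visible to an orthographic camera, where
    // `size` is half of the vertical extent of the view volume.
    public static (double Width, double Height) OrthographicExtent(double size, double aspect)
    {
        double height = 2.0 * size;
        return (height * aspect, height);
    }
}
```

A 60° vertical field of view on a 16:9 screen covers roughly 91.5° horizontally. An orthographic size of 5 shows a region 10 units high and about 17.8 units wide.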


Unity Hands On

Getting Comfortable Behind the Camera

Step 1: Download Chapter Two/CameraPlay.zip from the website, unzip, and open in Unity. In the Project, double-click on street in the Scenes folder to open the scene. The Scene will appear with a row of medieval houses on a terrain.

Step 2: Modify the window tabs if necessary to enable viewing of the Game and Scene at the same time. Select Main Camera from the Hierarchy. Zoom in or out in the Scene so that the camera and its frustum are in full view. If you cannot find the camera, double-click on it in the Hierarchy to bring it into the center of the Scene.


Note

To set the Game view camera to look at the environment from the same location set in the Scene, select the Main Camera in the Hierarchy and choose GameObject > Align With View from the main menu. This repositions the camera so that it looks at the scene from your point of view.

To move independently around in the Scene, leaving the camera where it is, hold down the Q key and drag the mouse to pan and hold down the ALT key and drag the mouse to rotate.

Step 3: Locate the Camera component in the Inspector. Find the Field of View slider. Move the slider left and right to change the viewing angle. Take note of how the frustum is affected in the Scene and the resulting Game view.

Step 4: Set the Field of View to 60°. This is a popular FOV setting in many games. In Unity, this value is the camera’s vertical viewing angle. The horizontal angle depends on the aspect ratio of the screen, and on a widescreen display it is larger.

Step 5: Change the Far clipping plane to 90. Note that half of the background trees are now missing in the Game. This is because they are beyond the far plane and outside the frustum. The background color you see is set by the Background property in the camera settings.

Step 6: To watch the effect of changing the far plane distance continuously, place the mouse over the word Far, hold down the left mouse button, and drag left and right. This decreases and increases the value in the Far box. You can use this method to change the values of most numeric properties in the Unity Editor.

Step 7: Now, do the same for the Near clipping plane and observe how the view in the Game is modified.

Step 8: Change the camera to Orthographic by changing the Projection property of the Camera component. Try modifying the size property to see how it affects the Game view.

Step 9: Change the camera back to a perspective projection. Set the FOV to 60° and the Near and Far planes to 1 and 200, respectively.

Step 10: Create a sphere Game Object and position it at (620,15,715).

Step 11: Select GameObject > Camera from the main menu. Rename it to SphereCam. This camera will become the viewing camera automatically.

Step 12: Locate the Depth property in the SphereCam’s Camera component. Cameras with a larger depth are drawn after, and therefore on top of, cameras with a smaller depth. So if SphereCam’s depth is larger than the Main Camera’s, SphereCam’s view is the one seen in the Game. Change the depths so that SphereCam’s depth is −1 and the Main Camera’s depth is 2. The Main Camera will take control again.

Step 13: Set the SphereCam’s position to (0,0,0).

Step 14: Drag and drop the SphereCam onto Sphere in the Hierarchy as shown in Figure 2.9.

FIG 2.9 SphereCam attached to the scene.

Step 15: If you try to play the scene at this point, there will be an error reported saying “There are two audio listeners in the scene. Please ensure there is always exactly one audio listener in the scene.” This is because there is an audio listener attached to all cameras by default. As with the Highlander, in the end there can be only one in your game. The audio listener is the component that listens for audio sources in the game environment and ensures that they play at the right volume and in the correct speakers. To resolve the error, remove the audio listener from the SphereCam.

Step 16: Create a new C# file and call it Orbit. Enter the code in Listing 2.1.

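Listing 2.1 Script to make a game object move in a circular path around its starting position

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class Orbit : MonoBehaviour {

    float radius = 30;          // distance from the starting position
    Vector3 startPosition;      // centre of the circular path
    int speed = 3;              // angular speed in radians per second

    void Start() {
        // Remember where the object began; it orbits around this point.
        startPosition = transform.position;
    }

    void Update()
    {
        // Sin and Cos of the same angle trace a circle in the x-z plane;
        // y is left unchanged, so the object keeps its original height.
        // Time.time (rather than Time.fixedTime) advances every frame,
        // which gives smoother motion when the position is set in Update.
        transform.position = new Vector3(
            radius * Mathf.Sin(Time.time * speed) + startPosition.x,
            transform.position.y,
            radius * Mathf.Cos(Time.time * speed) + startPosition.z);
    }
}
```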

Step 17: Attach the Orbit script to the Sphere.

Step 18: Select SphereCam in the Hierarchy so that the Camera Preview window opens in the Scene.

Step 19: Ensure that you can see both Game and Scene views and press play.

Step 20: The camera preview for the SphereCam will display a moving scene as the Sphere moves about within the environment.

Step 21: Create a Plane and position and orient it as shown in Figure 2.10.

Step 22: In Project, select Create > Render Texture. Rename it to sphereCamView.

Step 23: Select SphereCam in the Hierarchy and in the Target Texture property of the Camera component, select and set sphereCamView. If you select sphereCamView from the Project, you will see the view captured by SphereCam on this new texture’s surface in the Inspector.

Step 24: Drag and drop the sphereCamView render texture onto the new plane in the Scene. With the plane selected, change the shader of the material now applied to it (which uses sphereCamView) to Unlit/Texture.

FIG 2.10 Adding a new plane to the scene.

Step 25: Play to see the view from SphereCam play out on the big screen in your scene. This technique can be used in your games for surveillance cameras, minimaps, or reflections in mirrors or water.
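The motion produced by Listing 2.1 can be checked outside Unity. This sketch repeats the listing’s arithmetic with plain doubles, and its class and method names are hypothetical. It makes explicit that every position lies exactly `radius` units from the starting point in the x-z plane.

```csharp
using System;

// Hypothetical sketch of the arithmetic in Listing 2.1.
public static class OrbitDemo
{
    public static (double X, double Y, double Z) PositionAt(
        (double X, double Y, double Z) start, double radius, double speed, double time)
    {
        double angle = time * speed;  // radians, so one lap takes 2*pi/speed seconds
        return (start.X + radius * Math.Sin(angle),
                start.Y,                                // height is left unchanged
                start.Z + radius * Math.Cos(angle));
    }
}
```

With the values from Listing 2.1 (radius 30, speed 3), one full lap takes 2π/3 seconds, or about 2.09 seconds.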

Cameras can create many different visual effects, but they are also important for optimizing a game’s performance. For example, the camera’s view volume should not be treated as a trivial setting. As mentioned previously, all objects inside the view volume get drawn to the screen, and the more objects there are to draw, the lower the frame rate. Even objects hidden behind other objects, and therefore not visible, are still processed by the game engine as something to be drawn, unless extra techniques such as occlusion culling are used. So even though an object does not appear on the screen, it will be processed if it is inside the camera’s view volume. Suppose you have a narrow back-street scene in a European city where the player will never see beyond the immediate buildings. In that case, the camera’s far plane can be brought forward to exclude buildings that cannot be seen anyway.
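Whether something lies inside the view volume, and therefore gets processed, can be illustrated with a single-point test. Engines typically test an object’s bounding volume rather than individual points. This hypothetical helper assumes camera-space coordinates, with the eye at the origin looking along +z. Like Unity, it measures the field of view vertically.

```csharp
using System;

// Hypothetical sketch: is a camera-space point inside a perspective view volume?
public static class ViewVolumeDemo
{
    public static bool InFrustum((double X, double Y, double Z) p,
        double verticalFovDegrees, double aspect, double near, double far)
    {
        // Closer than the near plane or beyond the far plane: outside.
        if (p.Z < near || p.Z > far) return false;

        // The frustum widens with depth: half-height = z * tan(fov / 2).
        double halfHeight = p.Z * Math.Tan(verticalFovDegrees * Math.PI / 360.0);
        double halfWidth = halfHeight * aspect;
        return Math.Abs(p.Y) <= halfHeight && Math.Abs(p.X) <= halfWidth;
    }
}
```

Consider a 60° field of view with the far plane at 90, as in Step 5 of the hands-on exercise. A point 120 units in front of the camera is rejected, which is why the distant trees disappeared from the Game view.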

Whether the camera is looking at an orthographic or a perspective view, the coordinate system within the game environment remains the same.

2.3.2 Local and World Coordinate Systems

There are two coordinate systems at work in game environments: local and world. The local system is relative to a single game object, and the world system specifies the orientation and coordinates for the entire world. It is like having a map for the local layout of a city versus the longitude and latitude system used for the entire earth.

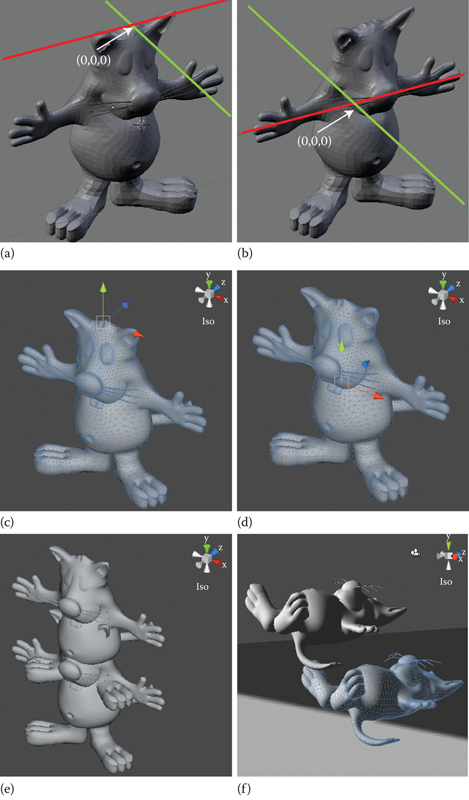

A game object can move and rotate relative to its local coordinate system or to the world. How it moves locally depends on the position of the origin, the (0,0,0) point, within the model. Figure 2.11a shows a 3D model in Blender with the origin at the top of the head, and Figure 2.11b shows it in the center of the body. In Blender, the default vertical axis is the z axis. The red and green lines in Figure 2.11a and b represent the x and y axes, respectively. When the model is imported into Unity, it is converted from Blender’s z-up convention to Unity’s y-up convention, making y the new vertical axis. As shown in Figure 2.11c and d, the origin of the model is carried across into Unity. When a model is selected, its origin is evident from the location of the translation handles used for moving the model around in the Scene. The location of the model’s central point becomes an issue in Unity when positioning and rotating it. In Figure 2.11e, both models are placed in the world at (0,0,0), as set in the Inspector. As you can see, the models end up in different positions, because each is placed according to its own origin. Figure 2.11f demonstrates how rotation is also affected by the model’s origin. The model from Figure 2.11a rotates about the point at the center top of its head, whereas the model in Figure 2.11b rotates about its abdomen.

Figure 2.12 illustrates the effect of rotations in local and world coordinate systems. Consider an object at the world origin whose local axes are aligned with the world axes. It ends up oriented the same way whether it is rotated about its local axes or the world axes. However, when the model is not at the world origin, a rotation about the world axes (which pass through the world origin) will move the model as well as reorient it. Local rotations are not affected by the model’s location in the world.
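The effect in Figure 2.12 comes down to which point the rotation is centered on. This 2D sketch rotates a point about an arbitrary pivot, counterclockwise for positive angles, and its names are hypothetical. Passing the model’s own origin as the pivot mimics a local rotation. Passing (0,0) mimics a rotation about world axes through the world origin. Unity’s `Transform.RotateAround(point, axis, angle)` performs the 3D equivalent on a game object.

```csharp
using System;

// Hypothetical sketch: rotate a point counterclockwise about a chosen pivot.
public static class PivotRotationDemo
{
    public static (double X, double Y) RotateAbout((double X, double Y) p, (double X, double Y) pivot, double degrees)
    {
        double r = degrees * Math.PI / 180.0;
        double dx = p.X - pivot.X, dy = p.Y - pivot.Y;          // move the pivot to the origin
        return (pivot.X + dx * Math.Cos(r) - dy * Math.Sin(r),  // rotate, then move back
                pivot.Y + dx * Math.Sin(r) + dy * Math.Cos(r));
    }
}
```

Take a point at (5,1) on a model whose origin is at (5,0). Rotated 90° about the model’s origin, it moves to (4,0). Rotated 90° about the world origin, it moves to (−1,5).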

2.3.3 Translation, Rotation, and Scaling

Three transformations can be performed on an object whether it be in 2D or 3D: translation, rotation, and scaling.

Translation refers to moving an object and is specified by a vector in the same way the pirate in Section 2.2 moved across the island. A translation occurs whenever the x, y, or z values of an object are modified. They can be modified all at once with a vector or one at a time. To move an object in the x direction by 5, the Unity C# is:

```csharp
this.transform.Translate(5, 0, 0);
```

FIG 2.11 The effect of transformations based on local coordinates. (a) A model in Blender with the origin at the center top, (b) a model in Blender with the origin in the abdomen, (c) translation axes positioned in Unity for the model in (a), (d) translation axes positioned in Unity for the model in (b), (e) both models positioned at the world origin in a Unity scene, and (f) both models rotated 90° about their local x axes.

FIG 2.12 Local and world rotations affected by a model’s position.

To move the object by 3 in the x, 5 in the y, and 8 in the z, in Unity C# it could be written as

```csharp
this.transform.Translate(3, 5, 8);
```

Several examples of the Translate function are shown in Figure 2.13.

Rotation turns an object about a given axis by a specified number of degrees. An object can rotate about its own x, y, or z axes or about the world x, y, or z axes. Rotations can also be combined, causing an object to rotate around arbitrary axes defined by vector values. The Unity C# to rotate an object 90° about its y axis is

```csharp
this.transform.Rotate(Vector3.up, 90);
```

To rotate 90° around the x axis, the script is

```csharp
this.transform.Rotate(Vector3.right, 90);
```

and to rotate 90° around the z axis, the script is

```csharp
this.transform.Rotate(Vector3.forward, 90);
```

Some of these rotations are illustrated in Figure 2.14.

FIG 2.13 Using the translate function to modify the position of a game object.

FIG 2.14 Rotating a game object with the rotate function.

FIG 2.15 Scaling an object.

Finally, scaling changes the size of an object, as shown in Figure 2.15. An object can be scaled along its x, y, or z axis. All three can be set at once in C# using a vector:

```csharp
// 0.5f: Vector3 takes float arguments, and C# does not implicitly convert a double literal to float.
this.transform.localScale = new Vector3(3, 0.5f, 10);
```

Scale values are always multiplied against the original size of the object. Therefore, a scale of zero collapses the object flat along that axis and should be avoided. A negative scale value mirrors the object along that axis. For example, setting the y axis scale to −1 will turn the object upside down.
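The three transformations can be applied to a single vertex by hand. This sketch works in the x-y plane, as the rocket ship game in Section 2.4 does. It applies scale first, then rotation about z (counterclockwise for positive angles), then translation. This is the order conventionally used for a translate-rotate-scale (TRS) transform. The names are hypothetical, and in Unity the Transform component does this work for you.

```csharp
using System;

// Hypothetical sketch: take a vertex from local coordinates into the world.
public static class TransformDemo
{
    public static (double X, double Y) LocalToWorld((double X, double Y) v,
        (double X, double Y) scale, double degrees, (double X, double Y) translation)
    {
        double sx = v.X * scale.X, sy = v.Y * scale.Y;     // 1. scale (negative values mirror)
        double r = degrees * Math.PI / 180.0;
        double rx = sx * Math.Cos(r) - sy * Math.Sin(r);   // 2. rotate about z
        double ry = sx * Math.Sin(r) + sy * Math.Cos(r);
        return (rx + translation.X, ry + translation.Y);   // 3. translate
    }
}
```

Scaling the vertex (1,0) by (3,0.5), rotating it 90°, and translating it by (5,0) gives (5,3). Performing the same steps in a different order generally gives a different result.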

Taking some time to orient yourself with both 2D and 3D space is necessary to understanding how objects will move around within your game. Fortunately, Unity takes the hard mathematics and hides it behind many easy-to-use functions. However, when something goes wrong in your game, it’s nice to have some idea where to start looking.

Chapter 1 introduced polygons as the small shapes, usually triangles and sometimes squares, that make up 2D and 3D meshes (or models). A polygon in a mesh also represents a plane. A plane is a 3D object that has a width and height but no depth. It is completely flat and can be oriented in any direction, but not twisted.

The sides of planes are defined by straight edges between vertices. Each vertex has an associated point in space. In addition, planes are drawn on one side only, so a plane can only be seen from the front (for Unity’s default plane, from above). This is known as backface culling. To see this, open Unity and create a plane game object, then rotate the plane so that its other side faces you. It will become invisible, but it will still be there.

In order to define the visible side of a plane, it has an associated vector called a normal. This is not to be confused with normalization of a vector into a unit vector. A normal is a vector that is orthogonal (90°) to the plane as shown in Figure 2.16.

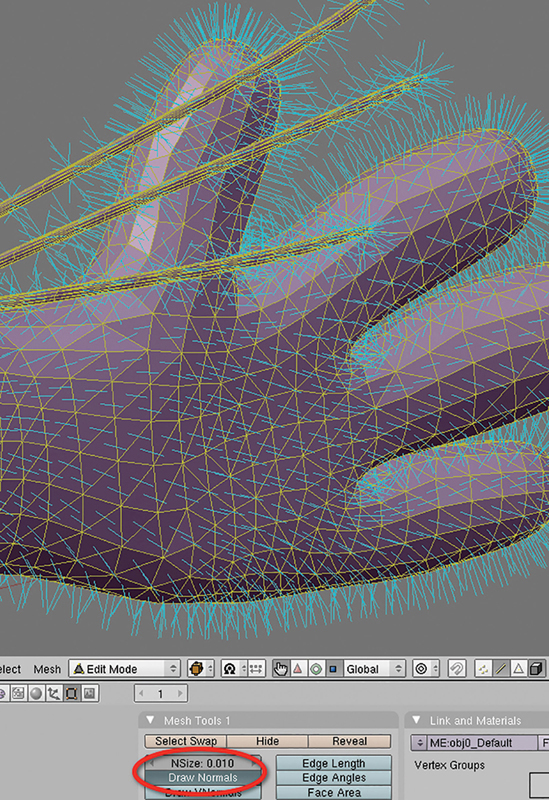

Knowing the normal to a plane is critical in determining how textures and lighting affect a model. It is the side the normal comes out of that is visible and therefore textured and lit. When a model is created in a 3D modeling package such as Blender, the normals are usually facing outward from the object. Figure 2.17 shows a model in Blender with the normals for each plane shown in blue.

The angle that a normal makes with any rays from light sources is used to calculate the lighting effect on the plane. Figure 2.18 illustrates the vectors and normal used in calculating the effect of light on a plane. The closer the normal becomes to being parallel with the vector to the light source, the brighter the plane will be drawn. This lighting model is called Lambert shading and is used in computer graphics for diffuse lighting.
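Lambert’s diffuse term is the dot product of the unit normal and the unit vector toward the light, which is the cosine of the angle between them. It is clamped at zero so that planes facing away from the light get no diffuse contribution. The vector to the viewer in Figure 2.18 is not needed for this diffuse term. A minimal sketch, with hypothetical names:

```csharp
using System;

// Hypothetical sketch of Lambert (diffuse) shading for a single plane.
public static class LambertDemo
{
    // Brightness factor in [0, 1]: cosine of the angle between the unit normal and the
    // unit vector toward the light, clamped so back-facing light contributes nothing.
    public static double Diffuse((double X, double Y, double Z) normal, (double X, double Y, double Z) toLight)
    {
        var n = Normalize(normal);
        var l = Normalize(toLight);
        double nDotL = n.X * l.X + n.Y * l.Y + n.Z * l.Z;
        return Math.Max(0.0, nDotL);
    }

    static (double X, double Y, double Z) Normalize((double X, double Y, double Z) v)
    {
        double len = Math.Sqrt(v.X * v.X + v.Y * v.Y + v.Z * v.Z);
        return (v.X / len, v.Y / len, v.Z / len);
    }
}
```

A light 60° away from the normal gives a brightness of 0.5. That is half the brightness of a light shining straight along the normal.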

FIG 2.16 A plane and its normal.

FIG 2.17 A 3D model in Blender showing normals for each plane in blue. To turn this on, select the object and, in edit mode, click on Draw Normals in the mesh tools 1 panel.

FIG 2.18 Vectors used in calculating the strength of lighting on a plane; a vector to the viewer, the normal and a vector to the light source.


Unity Hands On

Meshes and Normals

Step 1: Download Chapter Two/PolyNormals.zip from the website, unzip, and open.

Step 2: In the Scene, create two plane objects and place them side by side. Use GameObject > 3D Object > Plane to generate each new object.

Step 3: Add a directional light and rotate it, so the light is hitting the planes at about 30°.

Step 4: Take the CrumpleMesh.cs script and attach it to both planes.

Step 5: Select one of the planes in the Hierarchy. In the Inspector, tick the box next to Recalculate Normals in its Crumple Mesh (Script) component.

Step 6: Play. You will be able to see the planes deforming as shown in Figure 2.19.

Step 7: Examine the CrumpleMesh script in the editor. The code itself is complex for a beginner programmer, but a few lines are worth pointing out. First, the script uses a class called Perlin. The complete code for this can be found in the Plugin folder in the Project. Its specifics are not important at this time, but it causes the smooth deformation movement of the planes. Perlin noise is essentially a noise algorithm that produces random yet smoothly varying values. Each vertex of the plane’s mesh is captured and translated using a value from the Perlin function.

```csharp
Mesh mesh = GetComponent<MeshFilter>().mesh;
```

This line is the standard Unity way of getting the mesh of the object to which the script is attached. The option to recalculate the normals is at the very bottom of the script, and the script does this when the tick box you selected earlier is ticked. This is why one plane has updated shading and the other does not. The plane without recalculated normals keeps the shading it started with on each polygon. As far as the rendering system is concerned, the angle between the light source and the normal has not changed. It is possible to move vertices around and leave the normals where they were. In this case, the original normals are no longer orthogonal to their polygons.

FIG 2.19 Two planes, a directional light, and a script to deform the surfaces. The plane on the left has its normal recalculated.

```csharp
mesh.RecalculateNormals();
```

This line ensures that all normals are recalculated and made orthogonal to their polygons after the polygons' vertices are moved. In this case, recalculating the normals is essential to getting the correct lighting effect on the surface.
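To see numerically why the unticked plane keeps its old shading, the sketch below computes a triangle's normal as the normalized cross product of two of its edges, raises one vertex, and checks whether the old normal is still orthogonal to the moved edge. It is an illustration only: it uses .NET's System.Numerics rather than UnityEngine so that it runs outside the editor, and the names NormalDemo, FaceNormal, and Misalignment are hypothetical.

```csharp
using System.Numerics;

// Hypothetical helper for illustration; not part of Unity's API.
public static class NormalDemo
{
    // Unit normal of triangle (a, b, c): the cross product of two edges,
    // normalized. The winding order decides which side it points to.
    public static Vector3 FaceNormal(Vector3 a, Vector3 b, Vector3 c)
    {
        return Vector3.Normalize(Vector3.Cross(b - a, c - a));
    }

    // Dot product of a normal with the unit direction of an edge.
    // 0 means the normal is orthogonal to that edge; anything else
    // means the normal no longer matches the moved geometry.
    public static float Misalignment(Vector3 normal, Vector3 from, Vector3 to)
    {
        return Vector3.Dot(normal, Vector3.Normalize(to - from));
    }
}
```

For the flat triangle (0, 0, 0), (0, 0, 1), (1, 0, 0), FaceNormal() returns (0, 1, 0). Raising the second vertex to (0, 0.5, 1) makes the old normal's dot product with the moved edge about 0.45 instead of 0, while a freshly computed normal gives 0 again. Conceptually, mesh.RecalculateNormals() repeats this kind of calculation across the whole mesh.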

2.4 Two-Dimensional Games in a 3D Game Engine

Although 3D game engines are not often written to support 2D game creation, many developers use them for this purpose because (a) they are familiar with them, (b) they do not have to purchase software licenses for other development platforms, and (c) the engines provide support for multiple platforms and game types. When earlier versions of Unity added the ability to port to Android and iOS platforms, it became attractive to developers for the creation of 2D content. At the time, Unity was still strictly 3D, and 2D had to be faked by ignoring one of the axes in the 3D world.

Nowadays, Unity supports 2D game creation by putting the camera into orthographic mode and looking straight down the z axis. This setup happens by default when a new project is created in 2D mode. The scene is still essentially 3D, but all the action appears and operates in the x-y plane.


Unity Hands On

A 2D Vector Based Space Shoot ‘em Up

In this hands-on exercise we will create a simple 2D environment in which a rocket ship shoots at the earth. It will demonstrate how to program an object to automatically move around a target and shoot at it.

Step 1: Download Chapter Two/Basic2DStarter.zip from the website, unzip, and open in Unity. The new scene will have a Main Camera set to orthographic with a size of 5. Do not change these settings. In the Project you will see a couple of textures and materials. One is of the earth and one of a rocket ship.

FIG 2.20 Creating a new sprite and assigning a texture.

Step 2: Add a new sprite to the Scene with GameObject > 2D Object > Sprite from the main menu. Call this new sprite “Earth.” Select this sprite in the Hierarchy and drag and drop the earth texture from the project onto the sprite property of the Sprite Renderer as shown in Figure 2.20.

Step 3: Create another sprite in the same way as Step 2, name it “Rocket” and give it the rocket texture. The rocket will appear on top of the earth sprite. With the rocket selected in the Hierarchy, press the W key and then drag the rocket to one side, away from the earth in the Scene.

Step 4: Create a new C# Script in the Project. Recall that to do this, you select the small drop-down Create menu in the Project window and choose C# Script. Rename the file Attack.cs and enter the code in Listing 2.2.

Listing 2.2 Script to make one game object face another

```csharp
//Attack.cs
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class Attack : MonoBehaviour {

    public GameObject target;
    public float turnSpeed = 5.0f;

    // Smoothly rotates this object about the z axis so that its
    // local y axis points at targetPos.
    void LookAt2D(Vector3 targetPos)
    {
        Vector3 dir = targetPos - this.transform.position;
        // Angle of dir measured from the x axis, in degrees;
        // -90 because the sprite faces along its y axis.
        float angle = Mathf.Atan2(dir.y, dir.x) * Mathf.Rad2Deg - 90;
        Quaternion q = Quaternion.AngleAxis(angle, Vector3.forward);
        // Cover only part of the remaining turn each frame.
        this.transform.rotation = Quaternion.Slerp(transform.rotation, q,
                                                   Time.deltaTime * turnSpeed);
    }

    void Update ()
    {
        LookAt2D(target.transform.position);
    }
}
```

The code in Listing 2.2 uses a function called LookAt2D(), which makes the object the script is attached to smoothly turn to face the target object. Unity has a built-in LookAt() function that works on 3D objects: it turns a game object so that the object's z axis points toward the target. If you use it in the 2D environment, the object in question will turn on its side and become invisible, because a flat sprite seen edge-on has no visible width. In this case, we want to turn the object so that its y axis faces the target. You will notice that the code uses something called quaternions. These will be discussed in the next section.

In the angle calculation you will see a “−90” on the end of the calculation. This is assuming the game object travels in the direction of its y axis. If your object needs to move along the x axis instead, remove the “−90” from the calculation.

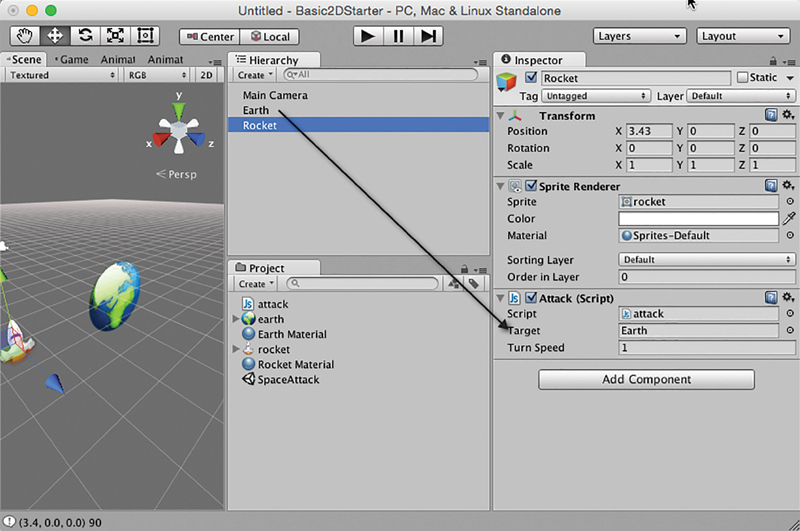

Attach the Attack script to the rocket. Drag and drop the Earth sprite onto its exposed Target variable, as shown in Figure 2.21.

Play. The rocket will turn slowly to face the earth. No matter where the rocket is placed in the Scene, it will always turn to face the target. If you want to change the turn rate, adjust the exposed Turn Speed variable of the Attack script.

Step 5: Now that the rocket turns toward the earth, we want to make it move forward. This takes only a single extra line of code, as shown in Listing 2.3.

FIG 2.21 Attaching the code and assigning a target game object.

Listing 2.3 Script to make an object move toward its target

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class Attack : MonoBehaviour {

    public GameObject target;
    public float turnSpeed = 5.0f;
    public float flightSpeed = 0.3f;

    void LookAt2D(Vector3 targetPos)
    {
        Vector3 dir = targetPos - this.transform.position;
        float angle = Mathf.Atan2(dir.y, dir.x) * Mathf.Rad2Deg - 90;
        Quaternion q = Quaternion.AngleAxis(angle, Vector3.forward);
        this.transform.rotation = Quaternion.Slerp(transform.rotation, q,
                                                   Time.deltaTime * turnSpeed);
    }

    void Update ()
    {
        LookAt2D(target.transform.position);
        // New line: move forward along the rocket's local y axis
        // by flightSpeed units each frame.
        this.transform.Translate(Vector3.up * flightSpeed);
    }
}
```

Save and play. The rocket will settle into an orbit around the earth, because it is constantly turning toward the earth while moving forward. For a tighter orbit, increase the Turn Speed value.

Step 6: To make the rocket move away from the planet when it gets too close, we can measure the rocket's distance from the planet and, once it comes within a specific distance, stop steering it toward the planet so that it flies straight on and away. To do this, modify your script to match Listing 2.4.

Listing 2.4 Testing rocket’s distance to planet

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class Attack : MonoBehaviour {

    public GameObject target;
    public float turnSpeed = 5.0f;
    public float flightSpeed = 0.3f;
    float distanceToTarget;
    string state = "ATTACK";

    void LookAt2D(Vector3 targetPos)
    {
        Vector3 dir = targetPos - this.transform.position;
        float angle = Mathf.Atan2(dir.y, dir.x) * Mathf.Rad2Deg - 90;
        Quaternion q = Quaternion.AngleAxis(angle, Vector3.forward);
        this.transform.rotation = Quaternion.Slerp(transform.rotation, q,
                                                   Time.deltaTime * turnSpeed);
    }

    void Update ()
    {
        distanceToTarget = (target.transform.position -
                            this.transform.position).magnitude;

        // Far away: attack again. Too close: retreat.
        // Between 2 and 10 units the current state is kept.
        if(distanceToTarget > 10)
        {
            state = "ATTACK";
        }
        else if (distanceToTarget < 2)
        {
            state = "RETREAT";
        }

        if(state == "ATTACK")
        {
            // Steer toward the target while flying forward.
            LookAt2D(target.transform.position);
            this.transform.Translate(Vector3.up * flightSpeed);
        }
        else
        {
            // Stop steering and fly straight on, away from the target.
            this.transform.Translate(Vector3.up * flightSpeed);
        }
    }
}
```

Save and play. When the rocket comes within a distance of 2 of the earth, it will start retreating until it is more than a distance of 10 away, and then it will start attacking again. At this stage, you can play with the flightSpeed and turnSpeed values to get the behavior you want from the rocket.
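The two calculations that drive Listing 2.4 can be tried outside Unity. The sketch below, written against plain System types rather than UnityEngine, reproduces the heading formula from LookAt2D() and the distance test that switches between attacking and retreating. RocketLogic, HeadingDegrees(), and NextState() are hypothetical names, and the enum is an assumed alternative to the "ATTACK"/"RETREAT" strings: a mistyped enum value fails to compile, whereas a mistyped string silently never matches.

```csharp
using System;

// Hypothetical, Unity-free version of the logic in Listing 2.4.
public static class RocketLogic
{
    // Same formula as LookAt2D(): the angle of the direction vector measured
    // from the +x axis, in degrees, minus 90 because the rocket sprite
    // points along its local +y axis.
    public static double HeadingDegrees(double dx, double dy)
    {
        return Math.Atan2(dy, dx) * (180.0 / Math.PI) - 90.0;
    }

    // Assumed replacement for the string states.
    public enum State { Attack, Retreat }

    // Retreat inside 2 units, attack again beyond 10 units,
    // and keep the current state anywhere in between.
    public static State NextState(State current, double distance)
    {
        if (distance > 10) return State.Attack;
        if (distance < 2) return State.Retreat;
        return current;
    }
}
```

A target straight above the rocket, direction (0, 1), gives a heading of 0°, so no turn is needed; a target to the right, (1, 0), gives −90°. Because the rocket keeps its current state anywhere between 2 and 10 units, it does not flicker between attacking and retreating while hovering near a single threshold.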

At this point, it is worth pausing for a discussion of quaternions, the mathematical construct at the heart of turning the rocket ship around.

In 3D space, there are three axes around which an object can rotate. These rotations are analogous to the rotational movements of an aircraft, as shown in Figure 2.22: a rotation about the x axis creates pitch, a rotation about the z axis creates roll, and a rotation about the y axis creates yaw.

The angles used to specify how far to rotate objects around these axes are called Euler angles. Euler angles are often used in 3D software and game engines because they are intuitive to use. For example, if someone asked you to rotate around your vertical axis by 180° you would know this meant to turn around and look in the opposite direction.

However, there is a fundamental flaw in using Euler angles for rotations in software that can cause unexpected rotational effects. These angles are applied one after the other and therefore have a mathematical compounding effect. The same consequence can be seen in a mechanical device used to stabilize aircraft, ships, and spacecraft: the gyroscope.

FIG 2.22 (a–d) Individual rotations about the x, y, and z axes in 3D space.

A simple gyroscope is illustrated in Figure 2.23. It consists of three discs, called gimbals, attached to the outer structure of a vehicle (in this example, a plane) and to each other at pivot points, each pivot representing rotation around the x, y, or z axis. As the plane yaws, pitches, and rolls, the gimbals respond to the forces by rotating about their pivot points. The idea is that a plate attached to the central, third gimbal always remains upright. Navigational systems attached to the gyroscope monitor this plate to determine the orientation of the vehicle. For example, if the vehicle were on autopilot, the objective would be to keep it upright and level with the ground, and any change in the gyroscope's orientation assists with pitch, yaw, or roll corrections.

FIG 2.23 A simple gyroscope. (a) The gimbals and rotation points. (b) Attaching the gyroscope to a plane. (c) A clockwise yaw of the plane forces the first gimbal to move with it, as it is attached and has no freedom of movement in that direction, whereas the second gimbal is free to move in response in the opposite direction. (d) A 90° yaw will cause the first and second gimbals to align thereby entering a locked state. (e) After the first and second gimbals become locked, a pitch will retain the integrity of the third gimbal. (f) However, a roll can cause erratic behavior from gimbal 3.

A situation can occur in which two of the gimbals become aligned, as shown in Figure 2.23d. This is called gimbal lock. At this point, the situation can either be corrected with the right maneuver (Figure 2.23e) or lead to erratic behavior. In Figure 2.23f, the third gimbal cannot rotate back so that the central plate faces upward, because its pivot points do not allow it. In some circumstances, alignment of the first and second gimbals can also cause the third gimbal to flip upside down, even if the vehicle itself is not upside down. When this occurs, the navigational system becomes very confused as it attempts to realign the vehicle.

While this is a simplistic examination of gimbals, gimbal lock is a real problem. It is a mechanical reality that was experienced by the astronauts of the Apollo missions, and it is a virtual reality when using Euler angles to rotate 3D objects. Just as the gyroscope compounds the rotations of the outer gimbal inward, multiplying x, y, and z angle rotations one after the other in software produces the same errors.
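The lost degree of freedom can be demonstrated in a few lines. This sketch assumes .NET's System.Numerics, whose Quaternion.CreateFromYawPitchRoll() applies roll (about z), then pitch (about x), then yaw (about y); GimbalLockDemo and its methods are hypothetical names. With pitch at 90°, a 30° yaw and a 30° roll cancel out, so two different sets of Euler angles describe exactly the same orientation: the yaw and roll rotations now act around the same axis, and only their difference has any effect.

```csharp
using System;
using System.Numerics;

// Hypothetical demonstration of gimbal lock with Euler angles.
public static class GimbalLockDemo
{
    static float ToRadians(float degrees) => degrees * (float)Math.PI / 180f;

    // Orientation from Euler angles in degrees. System.Numerics applies
    // roll (z) first, then pitch (x), then yaw (y).
    public static Quaternion FromEuler(float yawDeg, float pitchDeg, float rollDeg)
    {
        return Quaternion.CreateFromYawPitchRoll(
            ToRadians(yawDeg), ToRadians(pitchDeg), ToRadians(rollDeg));
    }

    // True when two quaternions describe (essentially) the same orientation.
    // q and -q are the same rotation, hence the absolute value.
    public static bool SameOrientation(Quaternion a, Quaternion b)
    {
        return Math.Abs(Quaternion.Dot(a, b)) > 0.99999f;
    }
}
```

With pitch at 0°, the same 30° yaw and 30° roll produce a genuinely different orientation from the identity, so SameOrientation() returns false; at 90° pitch it returns true.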

2.4.2 Quaternions to the Rescue

Quaternions are mathematical constructs that allow rotations around the three axes to be calculated all at once, in contrast to Euler angles, which are applied one after the other. A quaternion has four components: x, y, and z, which together encode the axis of rotation, and w, which encodes the amount of rotation around that axis.
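As a concrete illustration, a rotation of angle θ around a unit axis is stored as (x, y, z) = axis × sin(θ/2) and w = cos(θ/2). The sketch below builds that quaternion by hand and compares it with .NET's System.Numerics.Quaternion.CreateFromAxisAngle(). It assumes System.Numerics as a stand-in for Unity, whose Quaternion.AngleAxis() (used in Listing 2.2) takes its angle in degrees rather than radians; the AxisAngle class is hypothetical.

```csharp
using System;
using System.Numerics;

// Hypothetical helper showing how an axis-angle rotation becomes a quaternion.
public static class AxisAngle
{
    // (x, y, z) = axis * sin(angle / 2), w = cos(angle / 2).
    public static Quaternion Manual(Vector3 axis, float angleDegrees)
    {
        float half = angleDegrees * (float)Math.PI / 360f; // half the angle, in radians
        Vector3 v = Vector3.Normalize(axis) * (float)Math.Sin(half);
        return new Quaternion(v.X, v.Y, v.Z, (float)Math.Cos(half));
    }

    // The library version for comparison; it expects radians.
    public static Quaternion Library(Vector3 axis, float angleDegrees)
    {
        return Quaternion.CreateFromAxisAngle(
            Vector3.Normalize(axis), angleDegrees * (float)Math.PI / 180f);
    }
}
```

A 90° turn about the z axis gives approximately (0, 0, 0.7071, 0.7071), and rotating the x axis by it with Vector3.Transform() yields the y axis.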

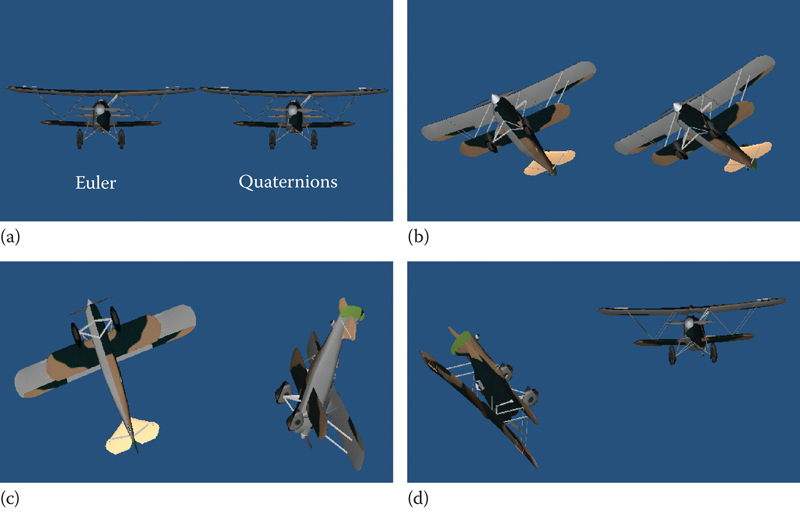

In games using Euler angles, rotations can cause erratic orientations of objects. This is illustrated in Figure 2.24, where the rotations of two planes are compared. Each plane is continually rotated around the x, y, and z axes by 1° every frame. The plane on the left in Figure 2.24 is rotated using Euler angles and the other uses quaternions. The movement of the quaternion plane is smoother.

FIG 2.24 Two planes rotated 1° around each axis every frame. One uses Euler angle calculations, the other quaternions. (a) Planes begin aligned, (b) planes remain aligned at first, (c) once the x angle becomes 90° for the Euler plane, its rotations go off course with respect to the quaternion plane, (d) even further in the simulation.

Quaternions are used throughout modern game engines, including Unity, as they do not suffer from gimbal lock.


For Research

Quaternions

If you are interested in the complex inner workings of quaternions check out Visualizing Quaternions by Andrew J. Hanson (2006, ISBN: 978-0120884001).

Although the rotation value for the transform component of game objects appears as Euler x, y, and z values in the Inspector, Unity uses quaternions for storing orientations. The most commonly used quaternion functions are LookRotation(), Angle(), and Slerp().

Given a vector, LookRotation() calculates the quaternion that turns an object to look along that vector. This means that this.transform.rotation = Quaternion.LookRotation(target.transform.position - this.transform.position); achieves the same result as this.transform.LookAt(target.transform.position).

The Angle() function calculates the angle between two rotations. It might be used to determine if an enemy is directly facing toward or away from the player.
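The angle between two orientations can be computed from their quaternions as 2 × acos(|q1 · q2|); taking the absolute value treats q and −q, which describe the same orientation, as equal. The sketch below assumes System.Numerics in place of Unity's Quaternion.Angle(), and the RotationAngle class is a hypothetical name.

```csharp
using System;
using System.Numerics;

// Hypothetical helper: angle in degrees between two orientations.
public static class RotationAngle
{
    public static double Degrees(Quaternion a, Quaternion b)
    {
        double dot = Math.Abs(Quaternion.Dot(Quaternion.Normalize(a), Quaternion.Normalize(b)));
        dot = Math.Min(1.0, dot); // guard against rounding just above 1
        return 2.0 * Math.Acos(dot) * 180.0 / Math.PI;
    }
}
```

Comparing the identity rotation with a 90° turn about the y axis returns about 90. For the enemy example, one possible approach would be to compare the enemy's rotation with the rotation that looks at the player and treat results above a chosen threshold as facing away.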

Slerp() (short for spherical linear interpolation) takes a starting rotation, an ending rotation, and a fraction, and returns the rotation that lies that fraction of the way between them along the shortest arc. Called every frame, it cuts a turn into small arcs, which makes it extremely effective for changing a rotating object's direction in a smooth motion. Without it, the rocket ship created earlier would flip back and forth as it changed direction, because it would go from its original facing direction to the goal facing direction in a single frame. Slerp() breaks this rotation up so that a small part of it occurs with each frame instead of all in one go.

Now that you have a little understanding of quaternions, we can continue with the 2D game. Its script uses two quaternion functions: AngleAxis() and Slerp(). AngleAxis() takes an angle and a vector and returns a rotation of that angle around the vector. Here it calculates the rotation that would make the rocket face the earth. That rotation is passed to Slerp(), which uses the rotation speed and the time between the drawing of frames (Time.deltaTime) to carve the complete turn angle into smaller pieces, making the rocket ship turn smoothly.
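Calling Slerp() every frame with a fraction of Time.deltaTime * turnSpeed does not turn the rocket at a constant speed: each call covers that fraction of the angle still remaining, so the turn slows as it nears its goal. The sketch below simulates this with System.Numerics.Quaternion.Slerp() standing in for Unity's version, assuming a fixed frame time; SmoothTurn and RemainingDegrees() are hypothetical names.

```csharp
using System;
using System.Numerics;

// Hypothetical simulation of the per-frame Slerp() used in LookAt2D().
public static class SmoothTurn
{
    // Starts at the identity, targets a 90-degree turn about z, applies
    // current = Slerp(current, target, deltaTime * turnSpeed) once per frame,
    // and returns the angle (degrees) still left to turn.
    public static double RemainingDegrees(int frames, float deltaTime, float turnSpeed)
    {
        Quaternion current = Quaternion.Identity;
        Quaternion target = Quaternion.CreateFromAxisAngle(Vector3.UnitZ, (float)(Math.PI / 2));
        float t = deltaTime * turnSpeed; // fraction of the remaining turn per frame
        for (int i = 0; i < frames; i++)
        {
            current = Quaternion.Slerp(current, target, t);
        }
        double dot = Math.Min(1.0, Math.Abs(Quaternion.Dot(current, target)));
        return 2.0 * Math.Acos(dot) * 180.0 / Math.PI;
    }
}
```

With turnSpeed = 5 and 0.02 s frames, each frame covers 10% of the remaining turn: a 90° turn has about 81° left after one frame and about 31.4° after ten (90 × 0.9¹⁰).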


Unity Hands On

Bullets and Explosions

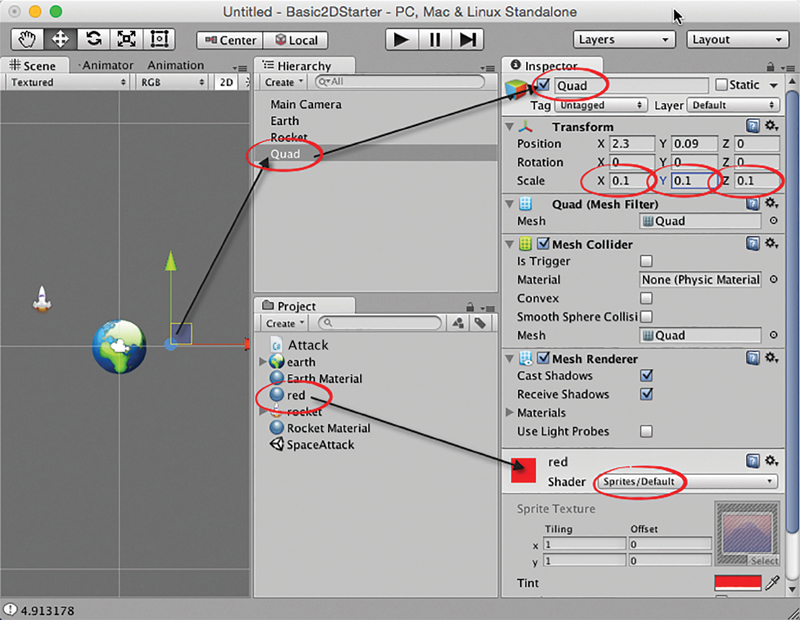

Step 1: To create bullets that come from the rocket, add a new quad to the Scene by selecting GameObject > 3D Object > Quad from the main menu. You will not be able to see it until a material is added.

Step 2: Create a new material in the Project by selecting Material from the drop-down Create menu. Set the main color of the material to red, its Shader to Sprites/Default, and rename it. Drag and drop the red material onto the new quad as shown in Figure 2.25.

Step 3: The bullet needs to be small, so scale the quad down by setting its x, y, and z scale values to 0.1 in the Inspector.

Step 4: Create a new C# file called MoveBullet.cs. Add the code in Listing 2.5. Attach this script to the bullet (called Quad in the Hierarchy).

FIG 2.25 Creating a quad and coloring it red.

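```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class MoveBullet : MonoBehaviour {

    float speed = 0.1f;

    // Called by Unity when the bullet's renderer is no longer
    // visible to any camera.
    void OnBecameInvisible()
    {
        Destroy(this.gameObject);
    }

    void Update ()
    {
        // Move along the bullet's local y axis, speed units per frame.
        this.transform.Translate(Vector3.up * speed);
    }
}
```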

Save and play. The bullet will move up the screen along its local up (y) axis. If you wanted it to move sideways along its x axis, you would change Vector3.up to Vector3.right.

The OnBecameInvisible() function ensures that the game engine destroys the bullet once it moves out of the camera's view. This allows us to create many bullets that clean themselves up, so the game engine does not keep processing bullets that have left the screen. If you do not do this, you can end up with a million bullet game objects clogging up memory and eventually crashing your game.

Step 5: Next, select the bullet in the Hierarchy and remove the Mesh Collider by deleting the component in the Inspector. At the very bottom of the Inspector, click on the Add Component button. From this new menu select Physics 2D > Circle Collider 2D. This will add a 2D collider to the bullet object allowing us to detect its collisions in 2D space. If you zoom in on the bullet in the Scene you will be able to see the new collider as a circle around the red quad as shown in Figure 2.26.

Step 6: Drag the bullet from the Hierarchy and into the Project. This will create a new Prefab from the Quad. This bullet prefab can be used as a template to create multiple bullets at run time. They will all look the same, have the same properties, and have the same script attached. A prefab appears as a blue cube in the Project as shown in Figure 2.27.

Step 7: Select the bullet that remains in the Scene and delete it, leaving only the prefab.

FIG 2.26 Adding a 2D physics collider to the bullet object.

Step 8: Return to the Attack.cs script and open it in the editor. We will now give the script access to the bullet so that the rocket can shoot multiple copies of it. Modify the Attack script as shown in Listing 2.6.

FIG 2.27 Creating a prefab.

Listing 2.6 Giving the script access to the bullet prefab

```csharp
public class Attack : MonoBehaviour {
…
    public float flightSpeed = 0.3f;
    public GameObject bullet;
…
    void Update ()
    {
        Instantiate(bullet, this.transform.position, this.transform.rotation);
        distanceToTarget = (target.transform.position -
                            this.transform.position).magnitude;
        if(distanceToTarget > 10)
        …
    }
```

The Instantiate() call now in Update() creates a copy of the bullet from the prefab we created and places it in the game world at the rocket's position, with the same rotation as the rocket. This means that when the bullet's script pushes it forward, it travels in the direction the rocket was facing.

Before you can see this in action you need to drag and drop the bullet prefab from the Project and deposit it onto the exposed bullet variable on the rocket. This is shown in Figure 2.28.

Save and play. Bullets will now come out of the rocket and move forward in the direction they were facing when created, until they move off the screen. You will notice that the bullets travel more slowly than the rocket and therefore fall behind it. To fix this, locate the bullet's speed in the MoveBullet script and change it from 0.1 to 1.

Step 9: To make the rocket look like it is controlled by some half-intelligence, we should make it shoot only when it is likely to hit the target. Therefore, when it is facing away from the earth it should not be shooting at all. We do this again by calculating the angle between the rocket’s facing direction and the direction to the target. If the angle is small, then the rocket should shoot. Modify the Attack.cs code as shown in Listing 2.7.

FIG 2.28 Assigning a prefab to be used by a script.

Listing 2.7 Controlling the shooting based on the rocket’s facing direction

```csharp
…
    void Update ()
    {
        //MOVE INSTANTIATE LINE FROM HERE TO BELOW
        distanceToTarget = (target.transform.position -
                            this.transform.position).magnitude;
        if(distanceToTarget > 10)
        {
            state = "ATTACK";
        }
        else if (distanceToTarget < 2)
        {
            state = "RETREAT";
        }

        if(state == "ATTACK")
        {
            LookAt2D(target.transform.position);
            this.transform.Translate(Vector3.up * flightSpeed);
            Vector3 vectorToTarget = target.transform.position -
                                     this.transform.position;
            // Only shoot when the target is within 30 degrees of the rocket's nose.
            if(Vector3.Angle(vectorToTarget, this.transform.up) < 30)
            {
                Instantiate(bullet, this.transform.position,
                            this.transform.rotation);
            }
        }
        else
        …
```

Save and play. The rocket will now only instantiate bullets when there is less than 30° between the direction it is facing and the direction to the target. You can play around with the value of 30 as you wish. If you use a smaller angle, you might have to increase the rocket's turn rate so that it can approach the target at an angle small enough to trigger shooting.

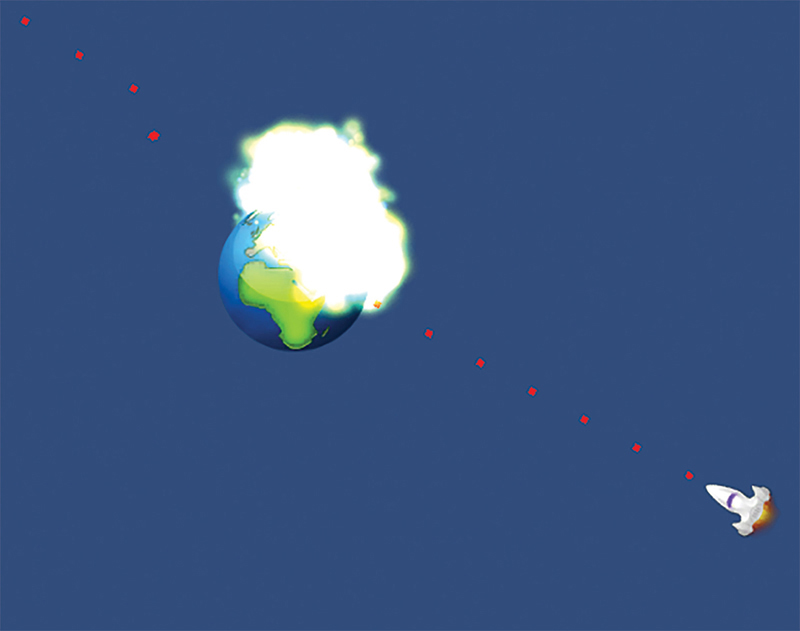

Step 10: The final part of this hands-on is to create small explosions when the bullets hit the target. The first step is to import a particle system. You will find one called fireworks.unitypackage on the website with the starter files for this chapter. To bring the file into Unity, select Assets > Import Package > Custom Package from the main menu, then locate the fireworks.unitypackage file and open it. This creates a new folder in the Project with a Fireworks prefab in Standard Assets > Particle Systems > Misc.

Step 11: For the bullet to detect when it has hit another collider, we need to attach a Rigidbody to it. With the Quad prefab selected in the Project, click the Add Component button in the Inspector and select Physics 2D > Rigidbody 2D. A Rigidbody 2D component will be added to the Quad. Set its Gravity Scale to 0, as shown in Figure 2.29.

FIG 2.29 Adding a Rigidbody to an object.

Step 12: Next, we need to add a collider to the Earth in order to detect when the bullet has hit it. With the Earth object selected in the Hierarchy, click the Add Component button in the Inspector and select Physics 2D > Circle Collider 2D.

Step 13: Now modify the MoveBullet.cs script to include a Unity function that detects collisions. When the bullet hits the earth, it will instantiate a fireworks object. Make the changes shown in Listing 2.8.

Listing 2.8 Adding 2D collision detection function

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class MoveBullet : MonoBehaviour {

    float speed = 0.1f; // use 1 if you changed it in Step 8
    public GameObject explosion;

    // Called by Unity when this object's 2D collider starts touching another.
    void OnCollisionEnter2D(Collision2D collisionObj)
    {
        if (collisionObj.gameObject.name == "Earth")
        {
            // Spawn the fireworks where the bullet hit, then remove the bullet.
            Instantiate(explosion, this.transform.position, this.transform.rotation);
            Destroy(this.gameObject);
        }
    }

    void OnBecameInvisible()
    {
        Destroy(this.gameObject);
    }

    void Update ()
    {
        this.transform.Translate(Vector3.up * speed);
    }
}
```

Ensure that the earth game object is called "Earth," with a capital "E," exactly like the name tested for in the if statement in Listing 2.8; otherwise, the explosion will not be instantiated.

Step 14: Before the explosion will work, you need to assign the fireworks prefab to the exposed explosion property of the MoveBullet.cs script. Locate the fireworks prefab in the Project and drag and drop it onto the explosion property as shown in Figure 2.30.

FIG 2.30 Assigning a prefab to be used by a script.

FIG 2.31 The game in play.

Step 15: Save and play. Each time the rocket's bullets strike the earth, a firework object will be instantiated, as shown in Figure 2.31.
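Two geometric checks carry this hands-on: Listing 2.7 fires only when the angle between the rocket's facing direction and the direction to the earth is below 30°, and OnBecameInvisible() removes bullets that leave the camera's view. The sketch below reproduces the angle test as acos(a · b / (|a||b|)) and adds a hypothetical manual off-screen test for an orthographic camera, whose vertical half-extent equals its Size (5 in the starter scene) and whose horizontal half-extent is Size × aspect ratio. It assumes System.Numerics rather than UnityEngine, and ShootingChecks and its methods are illustrative names only.

```csharp
using System;
using System.Numerics;

// Hypothetical, Unity-free versions of the checks used by the rocket and bullets.
public static class ShootingChecks
{
    // Unsigned angle in degrees between two vectors: acos(a . b / (|a||b|)).
    public static double AngleDegrees(Vector3 a, Vector3 b)
    {
        double lengths = (double)a.Length() * b.Length();
        if (lengths == 0.0) return 0.0; // undefined for a zero vector; treat as 0
        double cos = Vector3.Dot(a, b) / lengths;
        cos = Math.Max(-1.0, Math.Min(1.0, cos)); // clamp rounding error
        return Math.Acos(cos) * 180.0 / Math.PI;
    }

    // Fire only when the target lies within coneDegrees of the facing direction.
    public static bool ShouldFire(Vector3 facing, Vector3 toTarget, double coneDegrees = 30.0)
    {
        return AngleDegrees(facing, toTarget) < coneDegrees;
    }

    // True when p lies outside an orthographic view centred on cameraPos.
    public static bool OutsideOrthographicView(Vector3 p, Vector3 cameraPos,
                                               float orthoSize, float aspect)
    {
        return Math.Abs(p.Y - cameraPos.Y) > orthoSize ||
               Math.Abs(p.X - cameraPos.X) > orthoSize * aspect;
    }
}
```

A target at (0.2, 1) relative to a rocket facing straight up is about 11° off the nose, so ShouldFire() returns true; one at (1, 1) is 45° off, so it returns false. In a Unity project, OnBecameInvisible() is normally the simpler choice; a manual test like this is mainly useful when bullets should be removed at some boundary other than the screen edge.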

Game players bring their own experience of the real world to a game environment. They understand that gravity makes things drop, acceleration changes the speed of an object, and when an object hits another object, a reaction, such as bouncing or exploding, will occur based on the object’s composition.

These expectations within game environments are essential to establishing the player's suspension of disbelief: a psychological state in which a player accepts the limitations of a medium in order to engage with the content. This can include anything from low-quality graphics to flying aliens. Anything that messes with the player's suspension of disbelief causes them to disengage and lose interest, like being woken from a dream. A big part of ensuring a believable game environment is making the virtual world act and react like the real one.

The laws of physics are a set of complex rules that describe the physical nature of the universe. Adhering to these rules when creating a game is key to creating a believable environment for the player to interact with. Sometimes the laws are bent to suit the narrative; however, they essentially remain constant throughout the game. For example, players in a modern warfare game set on earth would expect gravity to act on their character as it does on them in the real world. In science fiction, the effects of gravity may be altered to fit the story.

Physics is a fundamental element in games as it controls the way in which objects interact with the environment and how they move.

Although physics covers topics such as Einstein’s theory of relativity and thermodynamics, the key ones used in game environments are Newton’s three laws of motion and the law of gravity.

Although it is a myth that an apple fell on Newton's head, Newton did later recount that watching an apple fall from a tree set him thinking about gravity. In Newton's publication the Principia, the force of gravity is defined thus:

Every particle of matter in the universe attracts every other particle with a force that is directly proportional to the product of the masses of the particles and inversely proportional to the square of the distance between them.

In short, this means that the more massive an object, the more strongly it attracts other objects, and that this attraction gets stronger the closer the objects are. The law also explained Kepler's laws of planetary motion, which Kepler had published decades before the Principia.
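The law can be written as F = G × m1 × m2 / r², where G ≈ 6.674 × 10⁻¹¹ N·m²/kg². The sketch below evaluates it for a 1 kg mass on the earth's surface, assuming the standard values of about 5.972 × 10²⁴ kg for the earth's mass and 6.371 × 10⁶ m for its mean radius. The result, roughly 9.8 N, corresponds to the familiar 9.8 m/s² acceleration. The Gravitation class is a hypothetical name.

```csharp
// Hypothetical helper evaluating Newton's law of universal gravitation.
public static class Gravitation
{
    // Gravitational constant in N·m²/kg².
    public const double G = 6.674e-11;

    // F = G * m1 * m2 / r^2, in newtons (masses in kg, distance in m).
    public static double Force(double m1, double m2, double r)
    {
        return G * m1 * m2 / (r * r);
    }
}
```

Gravitation.Force(5.972e24, 1.0, 6.371e6) comes out at about 9.82 N. Doubling r reduces the force to a quarter, which is the inverse-square part of the law.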

In game environments, applying a downward velocity to an object simulates gravity. The y coordinate of the object’s position is updated with each game loop to make it move in a downward direction. If you were to code this in Unity, the C# would look something like this:

```csharp
transform.Translate(0,-1,0);
```

Unfortunately, the actual calculation for real gravity is a little more complex than subtracting one, as the effect of earth's gravity is a downward acceleration of 9.8 meters per second per second (9.8 m/s²). This means that the downward speed of an object increases by 9.8 meters per second with each second. An object starting with a speed of 0 will be moving at 9.8 meters per second after 1 second, at 19.6 meters per second after 2 seconds, and at 29.4 meters per second after 3 seconds.

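In addition, one pass through the game loop does not take exactly 1 second, or any other fixed amount of time; it usually takes a small fraction of a second that varies from frame to frame. This will throw out any calculation that assumes a fixed amount of time per loop update.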
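One common way to make the gravity calculation independent of loop timing is to scale it by the time each update actually took: add g × Δt to the velocity on every update. The sketch below assumes a fixed Δt for simplicity; in Unity that value would come from Time.deltaTime. The FallingBody class and SpeedAfter() method are hypothetical names.

```csharp
using System;

// Hypothetical sketch of time-step-independent falling speed.
public static class FallingBody
{
    // Runs updates of dt seconds until totalSeconds have passed, adding g * dt
    // to the downward speed each time. Returns the speed in m/s.
    public static double SpeedAfter(double totalSeconds, double dt, double g = 9.8)
    {
        double speed = 0.0;
        int steps = (int)Math.Round(totalSeconds / dt);
        for (int i = 0; i < steps; i++)
        {
            speed += g * dt; // speed gained during this update
        }
        return speed;
    }
}
```

SpeedAfter(3, 1.0 / 30) and SpeedAfter(3, 1.0 / 60) both return 29.4 m/s, matching the figures above, even though the second runs twice as many updates. Position can be advanced the same way, by adding velocity × Δt on each update.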

Fortunately, game engines take care of all the mathematics and allow you to set just one gravity value for your environment. Let us take a look at how Unity does it.


Unity Specifics

Gravity

From the main menu, select Edit > Project Settings > Physics. The Physics properties will open in the Inspector, as shown in Figure 2.32. The default setting is a downward acceleration of 9.81 meters per second squared (m/s²).

FIG 2.32 Unity’s physics properties.

As you can see, depending on your own game environment, gravity can be set in any direction including upwards and sideways.


Unity Hands On

Applying Gravity

Step 1: Start Unity and create a new 3D project. Note that from now on, unless specified otherwise, all new projects should be created as 3D. Orient the Scene so that the y axis is pointing upward. Position the camera so that it has the same view as the Scene. To do this, select the camera in the Hierarchy and then choose GameObject > Align with View from the main menu.

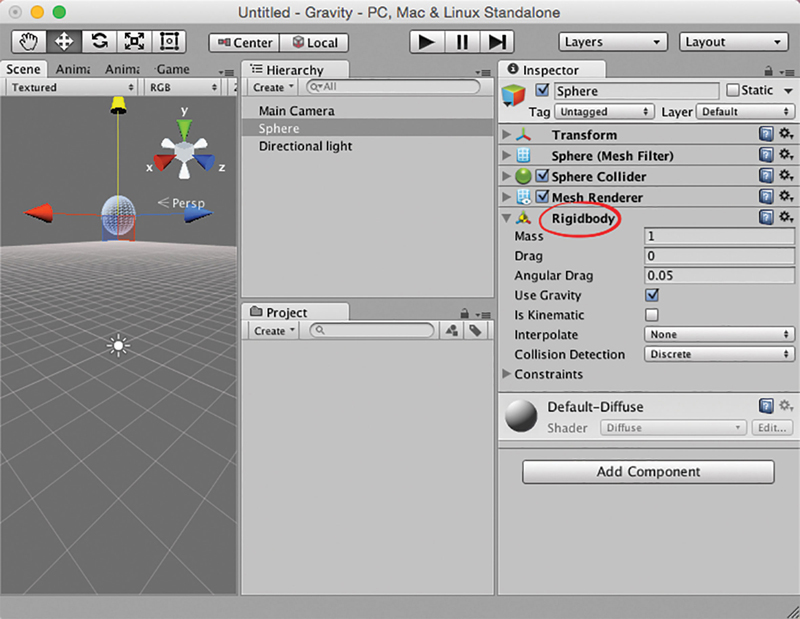

Step 2: Add a sphere to the Scene and position it at the top of the camera view. With the sphere selected in the Hierarchy, click the Add Component button in the Inspector and choose Physics > Rigidbody. A new component will be added to the sphere in the Inspector, as shown in Figure 2.33. The Rigidbody component makes the sphere part of Unity's physics processing, so gravity will be applied to it.

Step 3: Press play. The sphere will fall downward.

FIG 2.33 A Scene with a sphere that has a Rigidbody attached.

Step 4: Press stop and change the gravity via the main menu’s Edit > Project Settings > Physics; in the Inspector click on Gravity to expand. You may want to set it to a positive y value or even have it go to the side with a change to the x. You choose.

Step 5: Press play to see the effect of the new gravity settings.

Every body continues in its state of rest, or of uniform motion in a straight line, unless it is compelled to change that state by forces impressed upon it.

This means that a stationary object will remain stationary, and a moving object will keep moving in the same direction at the same speed, unless pushed or pulled. In the real world, a number of different forces act to move or slow objects, including gravity and friction. In addition, objects colliding with each other also change each other's movement.


Unity Hands On

Newton’s First

Step 1: Create a new Unity Project. Ensure that the y axis is pointing up and position the Main Camera to the same view.

Step 2: Select GameObject > 3D Object > Plane from the main menu. Resize the plane to twice its original size to create a large ground area. You can do this by pressing the R key while the plane is selected, or by changing the scale x, y, and z values in the Inspector to 2. Add a directional light. Add a grass or dirt seamless texture to the plane.

Step 3: Select GameObject > 3D Object > Cube from the main menu. Zoom in to center the cube in the Scene. Lift the cube so that it is slightly above the ground.

Step 4: With the cube selected in the Hierarchy, use the Add Component button in the Inspector to add a Physics > Rigidbody to it.

Step 5: Press play. The cube will fall until it hits the ground and stops. Although the plane does not have a Rigidbody attached, it does have a Collider: select the plane in the Hierarchy and look for the Mesh Collider in the Inspector. This collider is used by the physics system. The ground plane is not affected by the cube hitting it, but the cube, because it has a Rigidbody, is stopped by the plane's collider.

Step 6: Lift the cube higher above the plane. Add a Rigidbody to the plane. You may get an error after adding the Rigidbody to the plane. It will say something like "Compute mesh inertia tensor failed…" Ignore this for now; we will deal with it in a moment.

Step 7: Press play. Note that the plane and the cube both fall at the same rate.

Step 8: Select the plane from the Hierarchy and find its Rigidbody component in the Inspector. To add air friction, set the value of Drag to 10.

Step 9: Press play. The plane will fall away more slowly than the cube. When the cube hits the plane, the plane will speed up and possibly flip, depending on where you have placed the cube with respect to the plane.

Step 10: Remove the Rigidbody from the plane by selecting the small drop-down menu as shown in Figure 2.34.

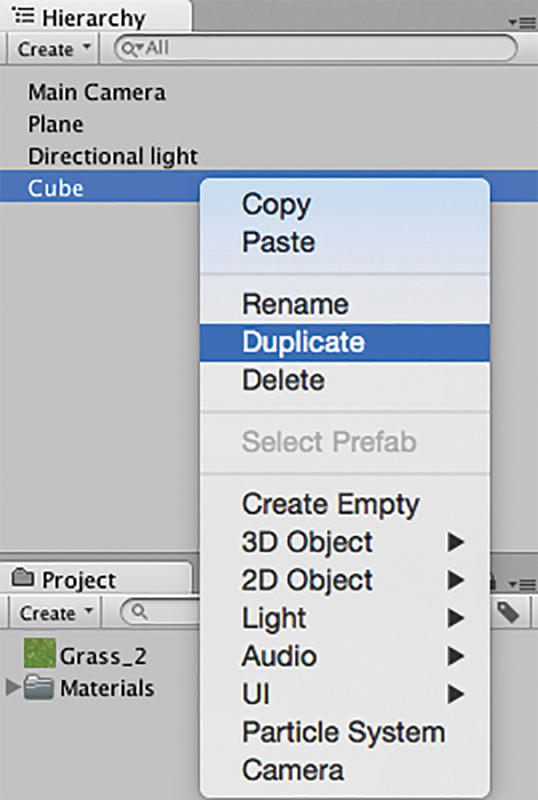

Step 11: Select the cube in the Hierarchy, right-click on it, and select Duplicate as shown in Figure 2.35. Duplicating a GameObject after it has had components, such as a Rigidbody, attached to it will ensure that the duplicate has all the same attachments. Move the duplicate cube, which will be in the exact same location as the original. You can also duplicate with Ctrl+D (Windows) or Cmd+D (Mac).

Step 12: Continue duplicating and moving to build a stack of cubes as shown in Figure 2.36.

FIG 2.34 Removing a component from a game object.

FIG 2.35 Duplicating a game object in the Inspector.

FIG 2.36 A stack of duplicated cubes, all with Rigidbodies.

Step 13: In the Project, create a new C# file and call it Fire, so that the file name matches the class name in the script. Open it in the script editor and enter the code in Listing 2.9. Save the script and attach it to the Main Camera.

Listing 2.9 Script to create a sphere as a projectile on a left mouse click

```csharp
//Fire.cs
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class Fire : MonoBehaviour {

    void Update ()
    {
        // "Fire1" includes the left mouse button by default.
        if(Input.GetButtonDown("Fire1"))
        {
            // Ray from the camera through the mouse position.
            Ray ray = Camera.main.ScreenPointToRay(Input.mousePosition);
            GameObject sphere = GameObject.CreatePrimitive(PrimitiveType.Sphere);
            sphere.transform.position = ray.origin;
            // Primitives have no Rigidbody, so add one before pushing.
            sphere.AddComponent<Rigidbody>();
            sphere.GetComponent<Rigidbody>().AddForce(ray.direction * 1000);
        }
    }
}
```

Step 14: Save and play. A click in the Game view will create a sphere at the click location and launch it away from the camera, through the point you clicked. ScreenPointToRay() takes the mouse click location and turns it into a ray: a starting point on the camera's near clipping plane under the cursor, plus a direction pointing into the scene. The sphere is placed at the ray's origin. Because a primitive sphere has no Rigidbody by default, AddComponent() adds one before AddForce() pushes the sphere along the ray's direction, propelling it forward.

Step 15: Gravity still affects the sphere. To see it in action, change the multiplier for the AddForce() function from 1000 to 100.
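Why does the smaller multiplier make gravity so noticeable? The following is a rough worked sketch in plain C# (not a Unity script). It assumes the default Rigidbody mass of 1, Unity's default fixed timestep of 0.02 s, and that AddForce() with its default ForceMode.Force changes velocity by force × timestep ÷ mass during a single physics step. It also assumes a horizontal launch, no drag, and gravity of 9.81 m/s².

```csharp
using System;

// Assumptions: default mass 1, default fixed timestep 0.02 s, one physics step of ForceMode.Force,
// horizontal launch, no drag, Unity's default gravity of 9.81 m/s^2.
const float Mass = 1f;
const float FixedDeltaTime = 0.02f;
const float Gravity = 9.81f;

// Velocity change from one step of a continuous force: dv = F * dt / m
float LaunchSpeed(float force) => force * FixedDeltaTime / Mass;

// How far the sphere has fallen by the time it has travelled a given horizontal distance
float DropAfter(float horizontalDistance, float speed)
{
    float time = horizontalDistance / speed;   // time of flight to cover that distance
    return 0.5f * Gravity * time * time;       // d = 1/2 g t^2
}

foreach (float multiplier in new[] { 1000f, 100f })
{
    float speed = LaunchSpeed(multiplier);
    Console.WriteLine($"force {multiplier}: launch speed {speed} m/s, drop after 10 m = {DropAfter(10f, speed):F2} m");
}
```

A tenth of the launch speed means ten times the flight time to cover the same distance and, because the drop grows with the square of time, a hundred times the fall. In the scene, the sphere simply reaches the ground much sooner.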

Note

Mesh Inertia Failure

From time to time, when adding rigid bodies to meshes, you may get the error: Actor::updateMassFromShapes: Compute mesh inertia tensor failed for one of the actor’s mesh shapes! Please change mesh geometry or supply a tensor manually! When this occurs, it means that you have tried to put a rigid body onto a mesh that has no depth and therefore no mass. A plane is so flat that it has literally 0 height: no volume and no mass. To fix this, remove the mesh collider from the object and replace it with something like a box or sphere collider.

2.5.3 The Second Law of Motion

The acceleration produced by a particular force acting on a body is directly proportional to the magnitude of the force and inversely proportional to the mass of the body.

This means that a larger force is required to give a heavier object the same acceleration as a lighter one. In addition, a heavier object with the same acceleration as a lighter object will cause more destruction when it hits something, as the force will be greater. Imagine throwing a bowling ball and a tennis ball at a wall with the same acceleration. Which one is going to leave a bigger hole?
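The law is usually written as F = ma, or a = F/m. The plain C# sketch below applies it to the bowling ball and tennis ball example; the masses (about 7 kg and 58 g) and the 10 m/s² acceleration are illustrative assumptions, not values from the text.

```csharp
using System;

// Illustrative values (assumptions): a bowling ball of about 7 kg, a tennis ball of about 0.058 kg
const double BowlingBallMass = 7.0;   // kg
const double TennisBallMass = 0.058;  // kg
const double Acceleration = 10.0;     // m/s^2, the same for both throws

// Newton's second law: F = m * a
double ForceFor(double mass, double acceleration) => mass * acceleration;

// Rearranged: a = F / m, so the same push accelerates the lighter ball far more
double AccelerationFor(double force, double mass) => force / mass;

double bowlingForce = ForceFor(BowlingBallMass, Acceleration);
double tennisForce = ForceFor(TennisBallMass, Acceleration);
Console.WriteLine($"Force needed: bowling ball {bowlingForce:F1} N, tennis ball {tennisForce:F2} N");
Console.WriteLine($"A 10 N push accelerates the tennis ball {AccelerationFor(10, TennisBallMass) / AccelerationFor(10, BowlingBallMass):F0} times more");
```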

Unity Hands On

Newton’s Second

Step 1: Begin by opening the Unity project from Section 2.5.2. Open the Fire script and ensure that the AddForce() multiplier is set to 1000, that is, AddForce(ray.direction * 1000).

Step 2: In the Hierarchy, select each cube in turn, locate the Rigidbody component in the Inspector, and set the Mass to 10.

Step 3: Press play. Note that the heavier cubes are now more difficult to knock over with the sphere.

Step 4: Edit Fire.cs to increase the mass of the sphere as shown in Listing 2.10. Save and play.

Listing 2.10 Script to change the mass of a Rigidbody

```csharp
//Fire.cs
void Update ()
{
    if(Input.GetButtonDown("Fire1"))
    {
        …
        sphere.AddComponent<Rigidbody>();
        // Make the sphere ten times heavier than the default mass of 1
        sphere.GetComponent<Rigidbody>().mass = 10;
        sphere.GetComponent<Rigidbody>().AddForce(ray.direction * 1000);
    }
}
```

Step 5: Because the sphere now has ten times the default mass, the same force has a tenth of the effect, and you may only be able to get the sphere to roll along the ground. However, its extra mass will be enough to knock over the cubes.

Step 6: Try setting the AddForce() multiplier to 10000, that is, AddForce(ray.direction * 10000). Note that because the mass has increased 10-fold, the force required to move the sphere in the same way must also increase 10-fold.
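Steps 5 and 6 can be checked with a simple rule of thumb. Assuming the default fixed timestep of 0.02 s and that AddForce() with ForceMode.Force acts for one physics step, the sphere's launch speed is force × 0.02 ÷ mass. This plain C# sketch tabulates the three force and mass combinations used in this exercise.

```csharp
using System;

// Assumptions: default fixed timestep of 0.02 s, ForceMode.Force applied for a single physics step
const float FixedDeltaTime = 0.02f;

// Velocity gained by the sphere: dv = F * dt / m
float VelocityChange(float force, float mass) => force * FixedDeltaTime / mass;

var trials = new (float Force, float Mass)[] { (1000f, 1f), (1000f, 10f), (10000f, 10f) };
foreach (var (force, mass) in trials)
{
    Console.WriteLine($"force {force,6}, mass {mass,3} -> launch speed {VelocityChange(force, mass)} m/s");
}
```

Multiplying the mass by 10 divides the launch speed by 10, and multiplying the force by 10 restores it, which is the 10-fold relationship described in Step 6.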

2.5.4 The Third Law of Motion

To every action there is always opposed an equal reaction; or, the mutual actions of two bodies upon each other are always equal, and directed to contrary parts.

This is usually rephrased as the well-known adage: for every action, there is an equal and opposite reaction. When a truck hits a car, energy from the movement of the truck is transferred to the car, and the car is propelled away from the truck. If a car hits another car of a similar size, some of the energy is transferred to the second car, whereas some goes back into the first car. If a car hits a brick wall, chances are most of the energy will go back into the car. This energy needs to go somewhere; in the case of cars, specially designed crumple zones absorb it. For a tennis ball, some of the energy is absorbed by the rubbery nature of the ball and the rest is used to propel the ball away. In other words, collisions in the real world affect the environment as well as the object.
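The exchange described above can be sketched with the standard one-dimensional collision formulas, in which a coefficient of restitution e (0 for bodies that stick together, 1 for a perfectly bouncy collision) controls how much bounce survives. The truck and car masses, the speed, and e = 0.2 below are illustrative assumptions. The impulses on the two vehicles come out equal and opposite, as the third law requires, while the lost kinetic energy stands in for the energy a crumple zone must absorb. This is plain C#; in Unity, the physics engine performs the equivalent work.

```csharp
using System;

// 1D collision of two bodies with coefficient of restitution e.
// Returns both velocities after impact; total momentum is conserved.
(double V1, double V2) Collide(double m1, double u1, double m2, double u2, double e)
{
    double momentum = m1 * u1 + m2 * u2;
    double v1 = (momentum + m2 * e * (u2 - u1)) / (m1 + m2);
    double v2 = (momentum + m1 * e * (u1 - u2)) / (m1 + m2);
    return (v1, v2);
}

double KineticEnergy(double m, double v) => 0.5 * m * v * v;

// Illustrative numbers (assumptions): a 10,000 kg truck at 10 m/s hits a parked 1,000 kg car
double truckMass = 10000, carMass = 1000, truckSpeed = 10, restitution = 0.2;
var (truckAfter, carAfter) = Collide(truckMass, truckSpeed, carMass, 0, restitution);

// Third law: the change in momentum (impulse) on each body is equal in size and opposite in sign
double impulseOnTruck = truckMass * (truckAfter - truckSpeed);
double impulseOnCar = carMass * (carAfter - 0);

double energyBefore = KineticEnergy(truckMass, truckSpeed);
double energyAfter = KineticEnergy(truckMass, truckAfter) + KineticEnergy(carMass, carAfter);

Console.WriteLine($"truck {truckAfter:F2} m/s, car {carAfter:F2} m/s");
Console.WriteLine($"impulse on truck {impulseOnTruck:F0} N s, on car {impulseOnCar:F0} N s");
Console.WriteLine($"kinetic energy absorbed by the collision: {energyBefore - energyAfter:F0} J");
```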

In the Unity examples examined so far, the energy from an initial force is either transferred to, or completely absorbed by, the objects. A heavy sphere knocks the cubes over easily, whereas a light sphere hits the cubes and drops straight to the ground. This rarely happens in the real world, where things tend to bounce to some degree. In Unity, adding a physics material to an object can simulate these extra effects.

Unity Specifics

Physics Materials

In Unity, a physics material is created in the Project and then added to the object’s Collider component. A physics material sets values for bounce and friction. The bounciness of an object can be set between 0 (no bounce) and 1 (full bounce) to simulate how much of a collision’s energy comes back to the object; for example, a tennis ball would have a high bounciness value. The friction values determine how well an object holds onto another surface when the two rub together. For example, rubber on concrete has a high friction value, whereas stone on ice has a low friction value. Friction is a force that resists sliding movement. Unity provides separate Dynamic Friction (used while an object is already moving) and Static Friction (used while it is at rest) values. Friction can be set from 0 (no friction) upward, with larger values giving more grip.
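Unity describes a Bounciness of 1 as bouncing without any loss of energy. A simple way to reason about values in between, assumed here rather than taken from Unity's solver, is to treat bounciness as a coefficient of restitution e: each rebound leaves with e times the impact speed, and since the height reached grows with the square of speed (h = v²/2g), each bounce reaches e² times the previous height. The 2 m drop height in this plain C# sketch is arbitrary.

```csharp
using System;

// Idealized model (an assumption): bounciness acts as a coefficient of restitution e,
// so each bounce reaches e^2 times the height of the previous one.
double[] BounceHeights(double dropHeight, double bounciness, int bounces)
{
    var heights = new double[bounces];
    double h = dropHeight;
    for (int i = 0; i < bounces; i++)
    {
        h *= bounciness * bounciness;  // e^2 of the previous height
        heights[i] = h;
    }
    return heights;
}

foreach (double e in new[] { 1.0, 0.8, 0.5, 0.0 })
{
    string[] formatted = Array.ConvertAll(BounceHeights(2.0, e, 3), h => h.ToString("F2"));
    Console.WriteLine($"bounciness {e}: {string.Join(", ", formatted)} m");
}
```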

Unity Hands On

Newton’s Third

Step 1: Create a new Unity project with a sloping plane and a cube as shown in Figure 2.37. Attach a Rigidbody to the cube. Press play and watch the cube drop until it hits the plane and then tumble the rest of the way down. Try to rotate the cube so that one side is parallel to the plane, as shown.

FIG 2.37 The initial scene required to demonstrate physics materials.

FIG 2.38 Creating a physics material.

Step 2: To create a physics material, in the Project, select the Create menu and choose Physics Material, as shown in Figure 2.38. Rename the material box.

FIG 2.39 The Inspector view of physics material.

Step 3: Select the cube in the Hierarchy and locate its Box Collider in the Inspector. The first property will be Material. Click on the small circle to the very right of it and select the box physics material from the pop-up window. If you play the application at this point, there will be very little effect. Select box from the Project. The view of this physics material will appear in the Inspector as shown in Figure 2.39. Set the Bounciness value to 1. Press play to see the effect.

Step 4: To get a higher bounce, set the Bounce Combine value to Maximum. These combine values tell the physics engine how to work out the effect when two objects collide. When set to Maximum, the engine applies the larger of the two objects’ bounciness values. If you set it to Minimum, the cube will not bounce, as the plane has a bounciness of 0.
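The combine rules can be sketched as a small function. Unity's documentation lists four modes (Average, Minimum, Multiply, Maximum) and states that when two colliders use different modes, the one with the higher priority (Average < Minimum < Multiply < Maximum) is applied. The plain C# function below follows that description; it is not Unity's source code, and the plane is assumed to keep the default material values (bounciness 0 with a Bounce Combine of Average).

```csharp
using System;

// Combine modes in documented priority order, lowest first: when two colliders use
// different modes, the one that appears later in this array is applied.
string[] priority = { "Average", "Minimum", "Multiply", "Maximum" };

// Effective value (bounciness or friction) for a contact between two colliders
double Combine(double a, string modeA, double b, string modeB)
{
    string mode = Array.IndexOf(priority, modeA) >= Array.IndexOf(priority, modeB) ? modeA : modeB;
    return mode switch
    {
        "Average" => (a + b) / 2,
        "Minimum" => Math.Min(a, b),
        "Multiply" => a * b,
        "Maximum" => Math.Max(a, b),
        _ => throw new ArgumentException($"Unknown combine mode: {mode}")
    };
}

// Cube: box material with Bounciness 1. Plane: assumed default material (0, Average).
foreach (string cubeMode in priority)
{
    Console.WriteLine($"Bounce Combine {cubeMode}: effective bounciness {Combine(1.0, cubeMode, 0.0, "Average")}");
}
```

Under these assumptions, the default Average mode combines the cube's 1 and the plane's 0 into 0.5, Maximum gives the full 1, and Minimum and Multiply both give 0.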

Step 5: Now try setting all the box physics material values to 0 and the Friction Combine to Minimum. Press play. With no bounce or friction, the box will fall to the plane and then slide down as if the surface were made of ice.
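In an idealized model of sliding friction (Coulomb friction, an assumption here; Unity's physics engine approximates friction differently in detail), a box starting at rest on a slope of angle θ accelerates down it at g(sin θ − μ cos θ), where μ is the friction coefficient, and stays put if that value is negative. Setting μ to 0, as in this step, leaves the full g sin θ, which is why the box glides as if on ice. The 30 degree slope and the friction values in this plain C# sketch are arbitrary.

```csharp
using System;

// Idealized Coulomb friction on an incline (an assumption, not Unity's solver)
const double G = 9.81;

// Acceleration down the slope for a box starting at rest: a = g (sin(theta) - mu cos(theta))
double SlideAcceleration(double slopeDegrees, double friction)
{
    double theta = slopeDegrees * Math.PI / 180.0;
    double a = G * (Math.Sin(theta) - friction * Math.Cos(theta));
    return Math.Max(0.0, a);   // friction can hold the box in place but never push it uphill
}

foreach (double mu in new[] { 0.0, 0.3, 0.6 })
{
    Console.WriteLine($"30 degree slope, friction {mu}: {SlideAcceleration(30, mu):F2} m/s^2");
}
```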

2.6 Physics and the Principles of Animation

In their 1981 book, The Illusion of Life, Disney animators Ollie Johnston and Frank Thomas introduced 12 rules to be applied when creating animated films. These are:

Squash and stretch: The deformation of objects in reaction to the laws of physics; for example, a tennis ball hitting a wall squashes on collision.

Anticipation: Presenting short actions or hints to a viewer of what is about to happen; for example, a person about to jump in the air will bend their knees first.

Staging: Presenting an idea such that no mistake can be made as to what is happening; for example, viewing an angry person’s face gives a better impression of their mood than the back of their head.

Straight-ahead action and pose to pose: These are animation drawing methods. Straight-ahead action refers to drawing out a scene frame by frame. Pose to pose refers to drawing key frames or key moments in a scene and filling in the gaps later.

Follow-through and overlapping action: This is the way in which momentum acts on a moving object to cause extra motion even after the initial force has stopped; for example, a baseball pitcher’s arm does not stop moving the moment the ball leaves his hand. In addition, his legs and body also move in response to the action. Overlapping action occurs when secondary objects move with the main object.

Slow in and out: Natural movement in which there is a change in direction decelerates into the change and accelerates out; for example, a car turning a corner slows into the corner and accelerates out. A person jumping will slow into the impact with the ground and speed up as he pushes off the ground with his legs.

Arcs: Motion in animals and humans occurs along curved paths. This includes the rotation of limbs and the rise and fall of a body when walking. The same curved movement is also found in the trajectory of thrown objects.

Secondary actions: These animations support the principal animation. They give a scene more realism; for example, a person walking along the street would not just be moving his legs. His arms might swing, he may be talking, and his hair could be flowing with the breeze.

Timing: This refers to the speed of actions. It is essential for establishing mood and realism; for example, a fast-moving character will appear to be in a hurry, whereas a slow-moving character portrays lethargy or disinterest. For realism, the correct timing of actions with motion and sound is critical. Slow animated walking characters can look like they are slipping across the ground if their forward movement and leg cycles are not matched. A delay between an action and a sound, such as a bomb exploding and the associated sound effect, adds to the suspension of disbelief.

Exaggeration: Perfect imitations of the real world in animation can appear dull and static. Often it is necessary to make things bigger, faster, and brighter to present them in an acceptable manner to a viewer. Exaggeration is also applied to the physical features of characters and to the effects of physics; for example, in Warner Brothers’ coyote and roadrunner films, when the coyote is about to fall from a great height, the time he spends in the air realizing his predicament is exaggerated far beyond what normal gravity would allow.

Solid drawing: This is the term given to an animator’s ability to consider and draw a character with respect to anatomy, weight, balance, and shading in a 3D context. A character must have a presence in the environment, and being able to establish volume and weight in an animation is crucial to believing the character is actually in and part of the environment.

Appeal: This relates to an animator’s ability to bring a character to life. It must be able to appeal to an audience through physical form, personality, and actions.
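Slow in and out is often implemented in code as an easing curve. The plain C# sketch below compares linear interpolation with the smoothstep curve 3t² − 2t³, which starts and ends with zero speed; Unity's Mathf.SmoothStep() provides a similar smoothed interpolation. The movement from 0 to 10 over 10 frames is arbitrary.

```csharp
using System;

// t runs from 0 (start of the move) to 1 (end of the move)
double Linear(double t) => t;                        // constant speed throughout
double EaseInOut(double t) => t * t * (3 - 2 * t);   // smoothstep: slow in, fast middle, slow out

// Position between start and end for a progress value s in [0, 1]
double Lerp(double start, double end, double s) => start + (end - start) * s;

for (int frame = 0; frame <= 10; frame++)
{
    double t = frame / 10.0;
    Console.WriteLine($"t={t:F1}  linear x={Lerp(0, 10, Linear(t)):F2}  eased x={Lerp(0, 10, EaseInOut(t)):F2}");
}
```

The eased object covers little ground in the first and last frames and makes up for it in the middle, which is the deceleration into, and acceleration out of, a change that the principle describes.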

All but a couple of the preceding principles of animation can be conveyed in a game environment through the physics system. They are consequences of physics acting in the real world. We subconsciously see and experience them every day, albeit not with as much exaggeration as a game, and come to expect them in the virtual environment.

In the following hands-on sections, you will get a chance to see how these principles can be applied in your own games.

2D Boy’s two-dimensional adventure World of Goo features many moving balls of Goo. Each time a ball of Goo accelerates, it becomes elongated along the direction of movement, and when it collides with another object, it decelerates and squashes. Such movement occurs in the real world and is explained by Newton’s laws.

While game-based physics engines do allow for the creation of bouncy objects, typically they do not provide real-time squashing and stretching algorithms for the actual game object. The rigid body attached to a game object to simulate physics, by its very definition, remains rigid even though its movement suggests otherwise. In most cases, it is too processor intensive in 3D environments to squash and stretch all objects, but, just for fun, this hands-on session will show you how to do it in Unity.
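One common way to fake squash and stretch, assumed here rather than taken from the downloadable project, is to scale an object by s along its direction of travel and by 1/√s on the other two axes, which keeps its volume constant (s × 1/√s × 1/√s = 1). The speed-to-stretch mapping and its tuning constants below are hypothetical. In Unity, the resulting vector could be applied to the transform.localScale of a mesh aligned with the Rigidbody's velocity; the sketch itself is plain C# using System.Numerics.Vector3.

```csharp
using System;
using System.Numerics;

// Hypothetical tuning values: stretch gained per unit of speed, and the largest stretch allowed
const float StretchPerSpeed = 0.05f;
const float MaxStretch = 2.0f;

// Volume-preserving scale: s along the travel axis (local z here), 1/sqrt(s) on x and y.
// s > 1 stretches the object; s < 1 squashes it, as on impact.
Vector3 VolumePreservingScale(float s)
{
    float side = 1f / MathF.Sqrt(s);
    return new Vector3(side, side, s);
}

// Hypothetical mapping from speed to stretch, capped so fast objects do not become needles
float StretchFromSpeed(float speed) => Math.Clamp(1f + StretchPerSpeed * speed, 1f, MaxStretch);

foreach (float speed in new[] { 0f, 10f, 40f })
{
    Vector3 scale = VolumePreservingScale(StretchFromSpeed(speed));
    Console.WriteLine($"speed {speed}: scale {scale}, volume {scale.X * scale.Y * scale.Z:F3}");
}

Vector3 impact = VolumePreservingScale(0.6f);   // squashed on collision
Console.WriteLine($"impact squash: scale {impact}, volume {impact.X * impact.Y * impact.Z:F3}");
```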

Unity Hands On

Squash and Stretch

Step 1: Download Chapter Two/AnimPrinciples.zip from the website, unzip, and open in Unity. In the Project, double-click on squash in the Scenes folder to open the scene. The warehouse scene from one of the Unity online resources will be visible.

Step 2: To move around inside the warehouse, we need to add a first person controller (FPC). Select Assets > Import Package from the main menu, and select Characters (Figure 2.40).

Note

Locating Standard Assets

In some of the more recent installations of Unity, the Standard Assets are missing or cannot be downloaded. You can get them from the Asset Store by searching for Standard Assets. Alternatively, the Standard Assets for Unity 2018 are available with the resource files for this book.

FIG 2.40 The Unity packages folder location on a Mac.

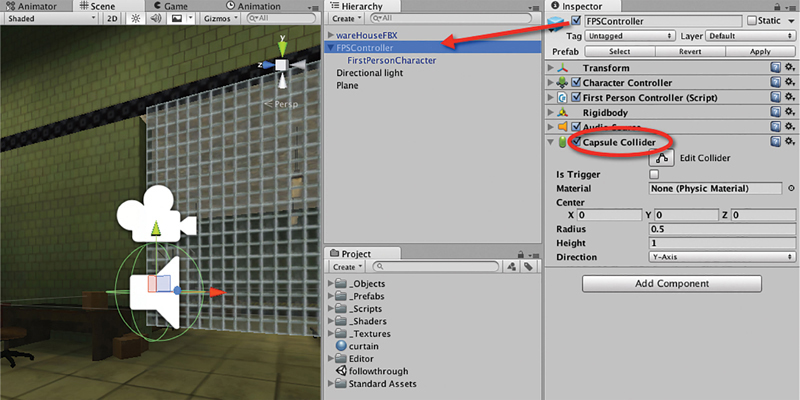

Step 3: Unity will decompress the package and then show you the files it is about to add to your project. Select all and click on Import. A folder called Standard Assets will appear in the Project. Inside this folder locate the RigidBodyFPSController and drag it into the Scene. A capsule-shaped object with a camera attached will show in the Scene, as illustrated in Figure 2.41.

Step 4: Delete Main Camera from the Hierarchy. There is already a camera attached to the FPC and the Main Camera is no longer needed.