Animation is about creating the illusion of life. And you can’t create it if you don’t have one.

Brad Bird

Animation is the underlying mechanic on which the visual aspect of computer games is built. It is the illusion of movement created through the display of a rapid succession of images, each slightly different from the other, creating the impression of motion. Animation is possible because of biological phenomena involving the human eye.

Originally, the perception of motion was explained by a theory known as persistence of vision, which refers to the afterimage that appears on the retina for approximately 1/25 of a second. You will notice this effect after staring at something and then closing your eyes. A negative type of imprint will be apparent. This effect is exaggerated if you look at a high-contrast image such as the shadowed side of a tree with the sun shining through from the other side. It was initially thought that humans saw animation when the afterimage from one still shot merged with the next. Although persistence of vision is a term still used to explain our ability to see movement when there is none in film and cinema, the theory was discredited as the main explanation by German Gestalt psychologist Max Wertheimer in 1912. Rather he proposed that the perception of motion was a psychological phenomenon called phi. Phi, in short, is the way in which the human brain automatically fills in the gaps between the images we see and therefore creates a perception of seamless motion.

The traditional technique for producing animation was to hand draw each image, known as a frame, and display them one after the other. Early Disney cartoons were produced in this manner. To provide smooth motion, film is shown at 24 frames per second. The drawings are usually shot on twos, meaning that each still image is displayed on two frames of film, so 12 drawings are required for 1 second of film.

For modern computer games, frame rates between 30 and 100 frames per second are acceptable. Of course, if there were no motion on the screen, a frame rate of 1 would be adequate. The frame rate in a computer game will vary depending on the background processing that occurs during any given game loop. Ironically, fast-paced action games with lots of moving objects need to run at a higher frame rate for the player to take in all the action, yet all the extra processing taxes the processor and can lead to low frame rates.

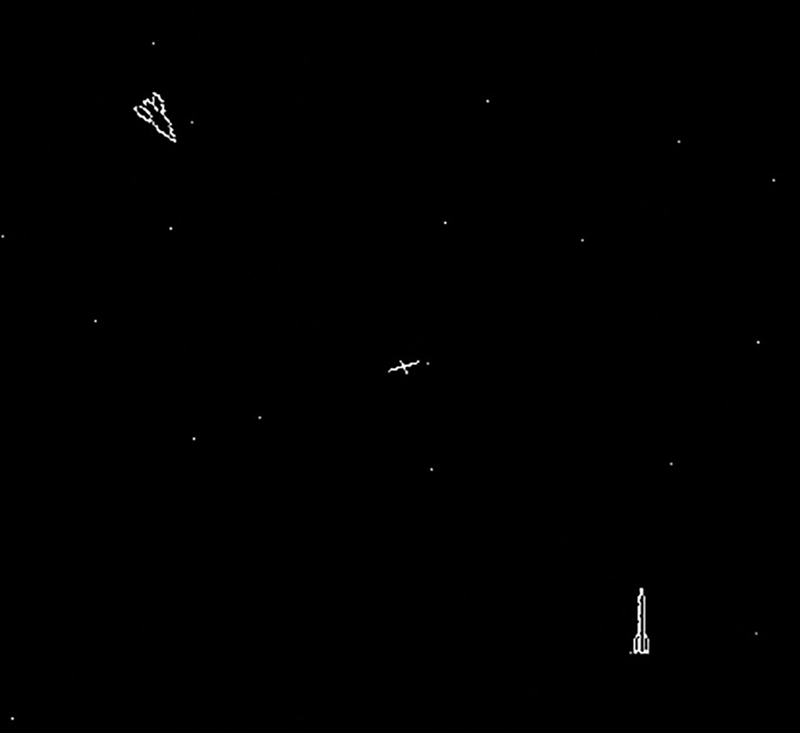

Animation in the very first computer games was the result of using vector graphics to draw an object on the screen, clearing the screen, and redrawing the object at a slightly different location and rotation. Essentially, each frame was being drawn on the fly by an algorithm—the reason being that the computers of this time did not have memory available for storing art assets created by others, not to mention the absence of digital paint programs to produce them. For very simplistic graphics, this worked effectively. The use of real-time drawing in one of the first computer games, Spacewar! (produced in 1962), is shown in Figure 3.1.

FIG 3.1 Spacewar!

When read-only memory was introduced to arcade games in 1974, it allowed for the storage of predrawn graphics along with the game’s program. The game could then load the various graphical assets and integrate them into the animation on the screen. These two-dimensional bitmaps were first referred to as sprites.

Loading a 2D image onto the screen and redrawing it along a path will create a simple animation. This very principle is illustrated in the rocket ship workshop from Chapter 2, in which the static rocket ship image is moved around the screen. The rocket ship and planet are sprites. In these examples, sprites are dealt with by placing a texture representing the graphic element over a plane game object. The plane is then moved around the screen to create an animation. The rocket ship and the planet each have their own material representing the different images.

The more materials used in a game, the less efficiently it will run. This is closely related to the way in which power-of-two images are processed. In the case of Unity, each material adds an extra load inside each game loop. This load is called a draw call. A draw call occurs when rendering commands are processed by the computer’s graphics processor. Each material used equals one draw call. Therefore, if you have 100 materials, it will cost you 100 draw calls.

For Research

Rendering Statistics

For more details about rendering statistics in Unity see http://docs.unity3d.com/Manual/RenderingStatistics.html.

As the number of draw calls increases, your game will run more slowly, although a great number of draw calls would be required to make any noticeable difference on a high-performance gaming machine. However, if you port your application to a mobile device, a dramatic effect on performance is seen after around 15 draw calls.

Materials are not the only things that will increase the number of draw calls. As the polycounts of the meshes in the game environment increase, so too will the draw calls. However, polycounts do not have a one-to-one relationship with performance as materials do. Therefore, it is essential to consider the way in which sprite materials are handled.

Considering that a single material made from a texture that is 512 × 512 will take the same number of draw calls as one that is 32 × 32, it seems a waste to use only a 32 × 32 texture. Therefore, if the 32 × 32 image were put into a 512 × 512 image, there would be plenty of space in that image for other small images. Combining images into one texture is a common technique in games and is called a texture atlas.

In a texture atlas, each smaller image has its own set of pixel coordinates and a width and height. To create such an atlas, you can use a paint program such as Photoshop or GIMP to combine smaller images into a single larger one. Figure 3.2 shows an example texture map in GIMP. Each image is placed such that a bounding box around it does not overlap with any other image. This allows for easy extraction of single images from the texture map in the game engine. In this example, the bounding box for the small car starts at (0,0) pixels and ends at (40,40) pixels. GIMP is useful for creating texture atlases; the pixel location of the mouse cursor is shown in the lower left-hand corner of the window. This makes it easier to extract the bounding boxes for each image. In GIMP, however, (0,0) is in the upper left-hand corner of the image. If you were to use Adobe Illustrator, (0,0) is in the bottom-left corner. Keep this in mind when you are grabbing pixel coordinates. If your texture appears upside down, it could be because the y axis is inverted. This is not a problem, just something that needs to be taken into consideration in your game scripts.

The coordinates of the bounding box are then used to manipulate the UV values of a mesh such that only the pixels inside the bounding box appear on the mesh as its texture. This requires a thorough understanding of vertices and UVs.

While the vertices of a mesh can have any value in 2D or 3D space, UVs are always specified between 0 and 1.
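As a rough illustration of that mapping, the sketch below converts a pixel bounding box measured in a paint program into UV values. The `AtlasUV` class and its method name are made up for this example and are not part of Unity. It assumes the box was measured with (0,0) in the upper left-hand corner, as in GIMP, and that UV (0,0) is the bottom-left corner of the texture, as in Unity.

```csharp
using System;

// Hypothetical helper, not part of Unity: converts a pixel bounding box
// from a texture atlas into UV values between 0 and 1.
public static class AtlasUV
{
    // left, top, right, bottom are pixel coordinates measured with (0,0)
    // in the upper left-hand corner of the image (as GIMP reports them).
    // The returned UV rectangle has (0,0) in the bottom-left corner.
    public static (float uMin, float vMin, float uMax, float vMax) FromPixels(
        int left, int top, int right, int bottom, int atlasWidth, int atlasHeight)
    {
        float uMin = (float)left / atlasWidth;
        float uMax = (float)right / atlasWidth;
        // Flip the y axis: a pixel row near the top of the image has a v near 1.
        float vMax = 1.0f - (float)top / atlasHeight;
        float vMin = 1.0f - (float)bottom / atlasHeight;
        return (uMin, vMin, uMax, vMax);
    }
}
```

For the small car, whose bounding box runs from (0,0) to (40,40) pixels, and assuming purely for illustration that the atlas is 512 × 512, `AtlasUV.FromPixels(0, 0, 40, 40, 512, 512)` gives u running from 0 to 0.078125 and v running from 0.921875 to 1. If the coordinates were measured from the bottom-left corner instead, the flip would not be needed.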

FIG 3.2 A texture atlas in GIMP showing the boundaries of a small car sprite image.

Unity Hands On

Modifying Mesh UVs for Sprite mapping

Step 1: Download Chapter Three/StaticSprites.zip from the website. Unzip and open the project with Unity. Open the scene called spriteland. You will see a view of a 2D street scene.

Step 2: Locate the texture called townatlas in the Textures folder under Project. Click on the texture to reveal its properties in the Inspector. To use the image for sprite creation, set the Texture Type to Sprite (2D and UI) as shown in Figure 3.3.

Step 3: Once the Texture Type is set, you will see a setting for Sprite Mode beneath it. Set this to Multiple.

Step 4: Still in the Inspector and looking at the settings for the townatlas image, click on the button Sprite Editor.

FIG 3.3 Inspector texture settings for creating a sprite.

Step 5: Drag the mouse over one of the smaller images, such as the house, to draw a square around it. This square defines the UV settings of the sprite. When you release the mouse, a sprite is created called townatlas_0 as shown in Figure 3.4.

Step 6: Drag the mouse around the tree to make another sprite. This will automatically be named townatlas_1.

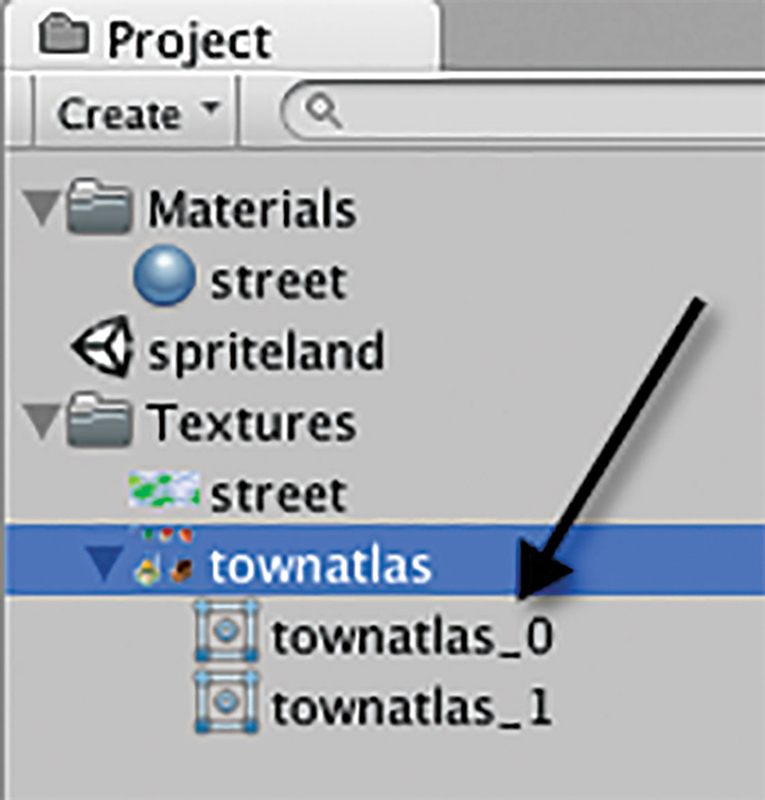

Step 7: When you are finished defining the sprites, click on the Apply button at the top of the window and close it. In the Project, each sprite will appear as a child object of the texture. This is illustrated in Figure 3.5.

FIG 3.4 Creating individual sprites from a texture.

FIG 3.5 Sprites displayed as children of the texture to which they belong.

FIG 3.6 Putting the sprite texture onto an object in the scene.

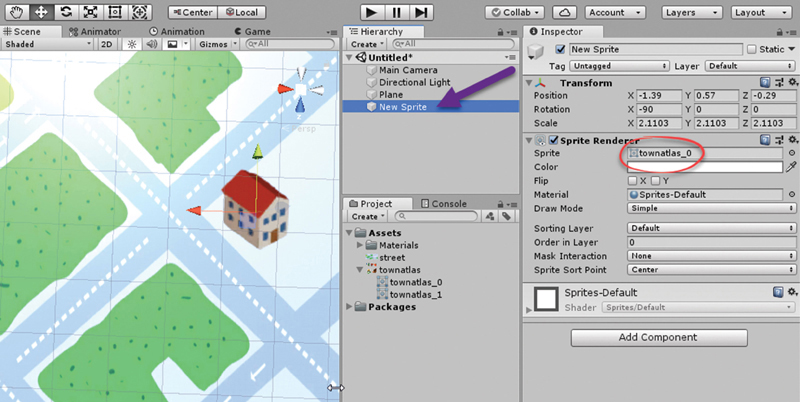

Step 8: From the main menu, select GameObject > 2D Object > Sprite. A sprite object will be added to the Scene. You may have to move it forward on the z axis to see it, as it could be behind the existing street scene quad. To place one of the sprites you just created on it as the texture, drag and drop the desired one onto the Sprite Renderer for the new sprite as shown in Figure 3.6.

If you ever go back to the Sprite Editor and adjust the UV settings for an individual sprite texture, it will be immediately reflected on any objects using that texture.

Step 9: Complete this exercise by setting UVs around each item in the townatlas and applying them to sprites in the scene.

Technically, any 2D image used as an object or character in a game is a sprite. However, the term is associated more often with a set of 2D animations representing specific movements of a game object. In this case, a sprite consists of a set of images that combine to animate specific gestures, such as walking, running, and jumping. The sprites are imported into a game engine, and code is used to control how the frames are presented in the game environment to give the illusion of character movement.

The animation images for a sprite are drawn based on the movement cycles originally developed for cartoon characters. Preston Blair, an acclaimed animator who worked for Disney, Warner Brothers, and Hanna-Barbera, published many illustrations demonstrating various character poses through a series of movement cycles.

FIG 3.7 Half of a walk cycle where the other half would be a reversal of the arm and leg positions.

The first of these cycles often referred to when teaching elementary animation is the walk cycle shown in Figure 3.7. Note the use of motion arcs for natural movement in the walk cycle here and the others by Preston Blair. The character rises and falls with each step.

Each of the images in Figure 3.7 becomes a single frame for the sprite. When these frames are cycled through in the game, it gives the illusion of a walking character.
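A minimal sketch of that cycling, assuming a fixed playback rate: the frame to display is found from the elapsed time, wrapping back to the first frame at the end of the cycle. The `FrameCycle` class is a made-up name for this example and is not Unity API.

```csharp
using System;

// Hypothetical helper: picks which frame of a looping sprite animation to show.
public static class FrameCycle
{
    // Returns the index of the frame to show after elapsedSeconds (assumed
    // non-negative) for a looping animation of frameCount frames played
    // at framesPerSecond.
    public static int FrameAt(float elapsedSeconds, float framesPerSecond, int frameCount)
    {
        if (frameCount <= 0) throw new ArgumentException("frameCount must be positive");
        int framesElapsed = (int)Math.Floor(elapsedSeconds * framesPerSecond);
        // The modulo wraps the count so the cycle repeats.
        return framesElapsed % frameCount;
    }
}
```

With an assumed cycle of 9 frames played at 12 frames per second, `FrameCycle.FrameAt(0.5f, 12f, 9)` selects frame 6, and `FrameCycle.FrameAt(1.0f, 12f, 9)` has wrapped around to frame 3.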

Unity Hands On

Creating a Texture Atlas with Code and Animated Sprite

Step 1: Create a new 2D Unity project.

Step 2: Download FBI_walk_cycle.png from the website. This is a freely available animated sprite sheet at opengameart.org created by FeralFantom. Add the texture to your Unity project.

Step 3: With the texture selected in the Project, set the Texture Type to Sprite (2D and UI), and set the Sprite Mode to Multiple.

Step 4: Click on the Sprite Editor button in the Inspector. At the top of the Sprite Editor window, select Slice and then choose Grid By Cell Size as shown in Figure 3.8. In the case of this sprite, each frame of the animation is 64 × 64. Use this as the pixel size for the grid. Click on the Slice button when you are ready to create all the sprites and then click Apply.

Note that in order for this type of texture atlas UV mapping to work, each sprite has to be arranged in sequential and equal-sized squares. This is typical of animated sprite sheets and something you should consider when making your own assets.

Step 5: Back in the project, the original texture will now have all the sprites shown as child objects. They will be named sequentially from FBI_walk_cycle_0 to FBI_walk_cycle_35.

Step 6: Take the Project tab and drag and drop it away from Unity to create a new window. Stretch it out horizontally and then right-click on the tab title and select Two Column Layout. Click the right icon on the sprite texture to expand; all the individual images will be visible as shown in Figure 3.9.

FIG 3.8 Slicing up a texture atlas for animation.

FIG 3.9 Displaying all the animation frames in the project window.

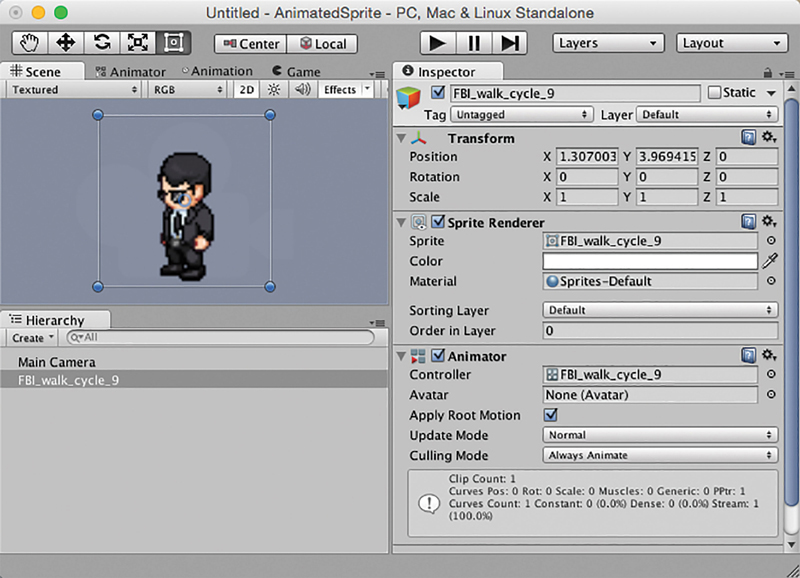

Step 7: Shift-select all the frames for the walking-left animation in the Project. These are frames 9 through 17. Drag them into the Hierarchy. On dropping them, Unity will ask for an animation name. Call this new animation WalkingLeft. Click on Save.

FIG 3.10 A newly created animated sprite from single frames.

A new animation will be created in the scene complete with a Sprite Renderer and Animator as shown in Figure 3.10.

Press play and watch the animation play. The character will cycle through its walking to the left animation. You can make the character bigger by selecting it in the Scene, pressing the R key, and using the axes gizmos to drag out a new size.

Step 8: Download Chapter Three/background.png from the website. Add a new 3D Quad to the scene behind the character and put this new texture on it. Ensure the new texture is just a texture and not a sprite. Stretch the quad out to accommodate the texture. Move the background quad away from the camera so the character is visible.

Step 9: Create a new C# script named Scrolling.cs. Add the image scrolling code from Chapter 2, which is repeated here in Listing 3.1 for your convenience. Attach the script to the background quad.

Listing 3.1 Code to scroll a texture over the surface of a mesh

    //Scrolling.cs
    using UnityEngine;
    using System.Collections;

    public class Scrolling : MonoBehaviour {
        // UV units scrolled per second along each texture axis
        public Vector2 uvSpeed = new Vector2(0.0f, -1.0f);
        // Accumulated offset applied to the texture
        public Vector2 uvOffset = Vector2.zero;

        void LateUpdate()
        {
            // Advance the offset by the time elapsed since the last frame
            uvOffset += (uvSpeed * Time.deltaTime);
            this.GetComponent<Renderer>().materials[0].SetTextureOffset("_MainTex", uvOffset);
        }
    }

Note that the background must be a 3D object, and the texture applied must be a basic texture, otherwise the code will not work. In other words, do not use sprites for this.

Step 10: With the background quad selected, in the Inspector change the UVSpeed to (−0.1,0).

Step 11: Play. The background will scroll making the character appear to walk along the street.

Step 12: To move the character itself with the arrow keys, create a new C# script called Walk.cs, add the code in Listing 3.2, and attach it to the character’s game object.

Listing 3.2 Code to move the character with the arrow keys

    //Walk.cs
    using System.Collections;
    using System.Collections.Generic;
    using UnityEngine;

    public class Walk : MonoBehaviour {
        // Distance moved along the x axis on each frame a key is held
        public float speed = 0.1f;

        // Use this for initialization
        void Start () {
        }

        // Update is called once per frame
        void Update () {
            if(Input.GetKey("right"))
            {
                this.transform.Translate(speed,0,0);
            }
            if(Input.GetKey("left"))
            {
                this.transform.Translate(-speed,0,0);
            }
        }
    }

Step 13: Play. The arrow keys will move the sprite back and forth in front of the background. Remove the scrolling script from the background to get a better idea of how the character is moving.

Step 14: With the walking speed set to 0.1, the character appears to slide across the ground. This is a common error made by beginner animators when creating walk cycles and placing the character into an environment whether it be in 2D or 3D. The idea is to get the walk cycle speed to match with the motion speed so that each foot appears to be planted into the ground. For this character, a speed of about 0.04 works well. Try this out.
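One way to reason about a matching speed, rather than finding it by trial and error, is to divide the distance the feet should cover in one walk cycle by the time the cycle takes. The sketch below is plain C# under that assumption. The `WalkSpeed` class and the numbers in the usage note are hypothetical and were not measured from this sprite.

```csharp
using System;

// Hypothetical helper for matching movement speed to a walk cycle.
public static class WalkSpeed
{
    // Ground speed in world units per second that keeps the feet planted:
    // the distance one full walk cycle should cover divided by its duration.
    public static float UnitsPerSecond(float distancePerCycle, float cycleSeconds)
    {
        return distancePerCycle / cycleSeconds;
    }

    // The same speed expressed per rendered frame, which is the form the
    // speed variable in Walk.cs uses. This assumes a steady frame rate.
    public static float UnitsPerFrame(float distancePerCycle, float cycleSeconds, float framesPerSecond)
    {
        return UnitsPerSecond(distancePerCycle, cycleSeconds) / framesPerSecond;
    }
}
```

For example, if one cycle should cover 1.8 world units, lasts 0.75 seconds, and the game renders at a steady 60 frames per second, the result is about 2.4 units per second, or roughly 0.04 units per frame. Because Walk.cs moves the character a fixed amount per frame, the apparent speed changes with the frame rate; multiplying a per-second speed by Time.deltaTime, as Listing 3.1 does for the scrolling, is one way to avoid that.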

Note

Making your own sprite frames

One of the easiest ways to create your own sprites, instead of drawing each frame by hand, is to use a software package that will do it for you. Adobe Flash has an export to image option that will allow you to create single frames from an animation and save them as a sequence of PNG files. Anime Studio Pro is a 2D animation software package that will take an image of a character and allow you to add your own bones (see Figure 3.11). You can then manipulate these bones to pose the character. After an animation has been created, Anime Studio Pro provides export features that will create a sequence of JPG or PNG files that can then be used on sprites in Unity.

On the Web

Advanced Sprite Editor

Advanced use of the Unity Sprite Editor:

http://unity3d.com/learn/tutorials/modules/beginner/2d/sprite-editor

More Sprites: https://opengameart.org/

GIMP: http://www.gimp.org

FIG 3.11 Manipulating character poses in Anime Studio Pro.

Calculating animations in real time through kinematics is a very processor-costly method for animating game characters. While this method can certainly produce spontaneous interactions with characters, far more artificially intelligent controls need to be programmed to make them feel real. If you examine game characters closely, you will notice that they repeat the same actions in exactly the same way over and over. This is because it takes less effort on the part of the animator: why make five different walk animations when just one will suffice? Letting characters select from different walking styles as they see fit adds little to a game.

If you stop to observe ancillary characters in the crowd such as those in Assassin’s Creed or Skyrim, you will be able to spot their walk cycles and other repeated actions. Because the purpose of the game in these cases is not to eye the crowd, the same repeated walk cycle is not that important. You may also find a game hero who climbs a drainpipe in the same way as he climbs a rope. Reusing animations is just a trick to get better performance out of a game and a way to keep the development budget down. In the end, what matters is how these animations are used and how the game environment is designed around these limitations. For example, the animation for climbing a ladder could be used for scaling a building or a trellis if the objects are designed to have handholds and footholds in similar positions to a ladder.

When animations are fixed and not manipulated in real time by the program, they are called baked. This means that the entire animation sequence is calculated beforehand and that the program receives a set of model transformations for each frame.

Because the character modeler cannot possibly know at the time of animating how the player is going to direct and move the character, it is impossible to create one long, strung-out animation, for example, showing the character running up the road, jumping over a fence, and rolling through a window. If the player decides that the character should not jump over the fence but rather hop on the nearest motorbike, a single long animation cannot show it. Instead, animations are broken into short action segments that can later be put together in any order to facilitate fluid animation. Such segments might include walk, run, and jump cycles. Depending on how the player wants the character to move, the character can then run, jump, run, and walk, or walk, jump, jump, run, and walk, seamlessly.

This means that each animation segment should start and end with the character in the same pose. When the next segment is added, there is no obvious gap in the sequence. As shown in Figure 3.12, the initial frame for the character’s idle, run, walk, and shoot down animations has the character’s feet and legs in exactly the same position. No matter what the sequence of actions, the full movement of the character will appear fluid. In a situation where the animation switches to the shoot down, the arms and shoulders will move away from the initial poses of the others. If this is a very small movement, and given the legs do not move, the change in pose will appear as a simple frame change in any other segment.

If a situation arises where the character needs to go from a walking to a crawling action, two in-between sequences are required to stitch the animations together: one for getting down and one for standing up. Sometimes a single animation is reused for its reverse action. For example, getting down is reused for the standing up, and walking forward is reused for walking backward. However, if you have a close look at both these examples, the results are usually very unconvincing and ruin the entire effect.
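The rule that segments must meet on a shared pose can be sketched as a simple check. Everything in the block below is hypothetical: the `Segment` and `SegmentChain` names and the use of a text label to stand for a pose are assumptions made for illustration. A real engine would compare actual joint transformations.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical description of a baked animation segment: only the poses at
// its first and last frames matter for deciding what can follow it.
public class Segment
{
    public string Name;
    public string StartPose;
    public string EndPose;

    public Segment(string name, string startPose, string endPose)
    {
        Name = name;
        StartPose = startPose;
        EndPose = endPose;
    }
}

public static class SegmentChain
{
    // Returns true when every segment starts in the pose the previous one
    // ended in, so the sequence plays without a visible jump.
    public static bool IsSeamless(IList<Segment> sequence)
    {
        for (int i = 1; i < sequence.Count; i++)
        {
            if (sequence[i - 1].EndPose != sequence[i].StartPose) return false;
        }
        return true;
    }
}
```

With walk, run, and jump segments that all start and end on a "standing" pose, any ordering passes the check. A crawl segment that starts and ends "prone" fails when placed directly after walk, and passes once a getting-down segment (standing to prone) is placed between them.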

FIG 3.12 Starting frames for four different animations performed by the same character.

Baked animations can be made in software modeling and animation tools such as Autodesk’s 3DS Max and Maya. The native format for animations in Unity is FBX, which can be created with both these applications. Once the FBX sequences have been imported into Unity, scripts can be used to control how they play.

Unity Hands On

Controlling Character Animation

Step 1: Download Chapter Three/WalkingGranny.zip from the website. Unzip and open the project in Unity. Open the scene called animations. In the Game, you will see a character called Sporty_Granny.

Note that in the Project > Granny folder, you will find a number of files named Sporty_Granny@*** where *** is an action such as idle or jump. Each one of these files is an FBX animation.

Step 2: Select the Sporty_Granny.fbx object in the Project as shown in Figure 3.13. In the Inspector, select the Rig tab and set the Animation Type to Humanoid. Click on Apply. If you now select the Sporty_Granny object in the Hierarchy, you will notice that the object has an Animator component attached. This component requires an Animator Controller. The Animator Controller will link all available animations to the model. You will need to repeat the rig setup for all the FBX files: shift-select the remaining FBX files (i.e., Sporty_Granny@idle, etc.), set the Animation Type to Humanoid, and click Apply.

FIG 3.13 Setting up a newly imported FBX model for animating.

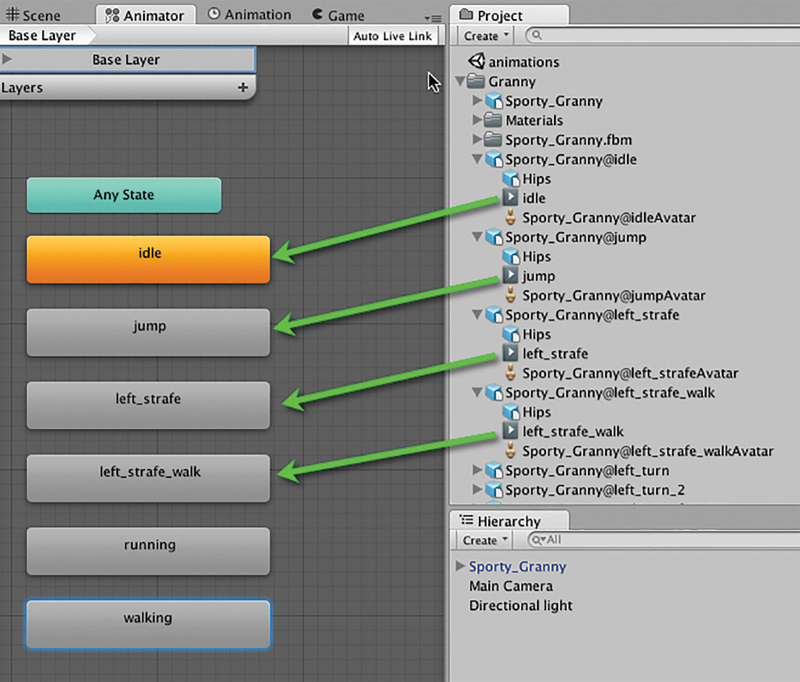

Step 3: In the Project, create a new Animator Controller as shown in Figure 3.14. Rename it Granny Controller. Having it selected in the Project window, you will see a small Open button appearing in the controller. Click on this to add the animations. The Animator window will open. It has a gridded background, and there will be a green round-cornered square with Any State written on it.

Step 4: With the Animator window open next to the Project, locate all the animation files and drag them from the Project into the Animator. The files you are looking for have a small blue “play” icon next to them. The first one you add will appear in orange. This will become the default animation for the model. The other animations will appear in gray. What you can expect to see during this process is illustrated in Figure 3.15.

Step 5: When you have finished dragging the animations to the controller, select the Sporty_Granny object in the Hierarchy. This is the model you can see in the scene. In the Inspector, the Animator component will be visible. Drag the newly created Granny Controller and drop it onto the Controller property of the animator as shown in Figure 3.16.

FIG 3.14 Creating a new animator controller in the project.

FIG 3.15 Adding individual models to an animator controller.

FIG 3.16 Attaching an animator controller to a model.

Save what you have done so far and press Play. The first animation you dragged into the Animator (the one in orange) will play on the model. To change the default animation, return to the Granny Controller editing window, right-click on one of the animations, and set it as the default.

Step 6: Next we are going to control the movement and animations of Sporty_Granny with script. Begin by creating a new C# file named AnimControls. Into this file, type the code shown in Listing 3.3.

Listing 3.3 Script to control a model’s movement and animation

    //AnimControls.cs
    using System.Collections;
    using System.Collections.Generic;
    using UnityEngine;

    public class AnimControls : MonoBehaviour {
        Animator anim;
        float speed = 0.05f;     // distance moved per frame
        float rotSpeed = 50.0f;  // turning speed

        // Use this for initialization
        void Start () {
            anim = gameObject.GetComponent<Animator>();
        }

        // Update is called once per frame
        void Update () {
            if(Input.GetKey("up"))
            {
                anim.Play("walking",0);
                this.transform.position += this.transform.forward * speed;
            }
            else if(Input.GetKey("down"))
            {
                anim.Play("walking",0);
                this.transform.position -= this.transform.forward * speed;
            }
            else if(Input.GetKeyUp("up") || Input.GetKeyUp("down"))
            {
                anim.Play("idle",0);
            }
        }
    }

Attach this script file to the Sporty_Granny game object in the Hierarchy. When run, the script accesses the object’s Animator and plays the “walking” animation when the up or down arrow keys are pressed.

The Play function takes two parameters. The first is the name of the animation. This must be written exactly as the animation is named in the Animator window (i.e., the word written on the orange or gray squares). The second, which in this case is 0, is the layer the animation is on. In the Animator window, you may have noticed all the animations went into the Base Layer. This is layer 0. We will use only layer 0, so always set the second parameter to this.

Play and use the arrow keys to move Sporty Granny around. She can only go forward and back at this stage.

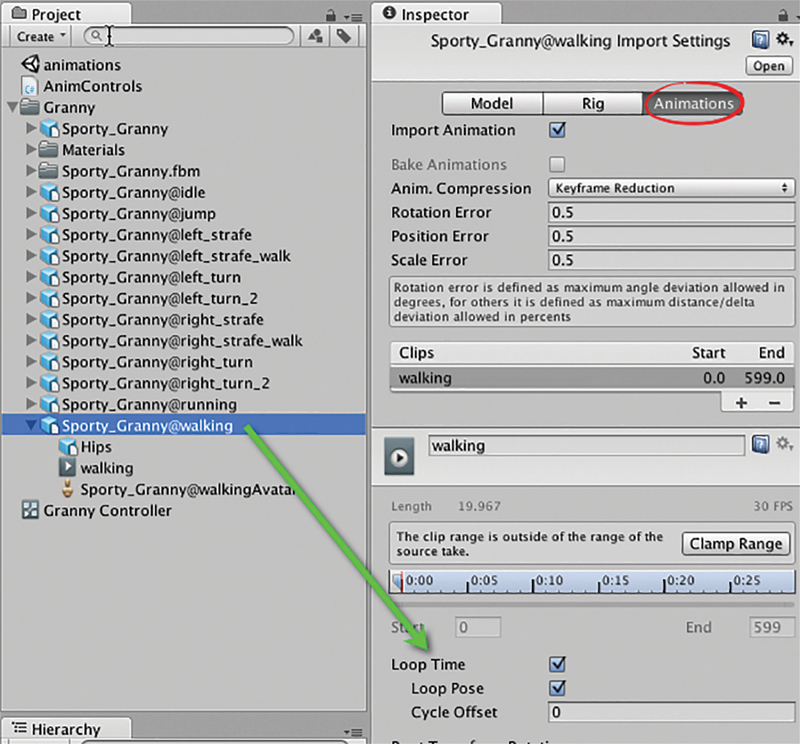

Step 7: In moving the character in the previous step, you may have noticed that the walk animation cycles once and then stops. The reason is that the animation is not set to loop. To fix this, select the animation you want to loop, in this case “walking,” from the Project. In the Inspector, select the Animation tab and then tick the boxes for Loop Time and Loop Pose as shown in Figure 3.17. Ensure you scroll to the bottom of the Inspector and click on the Apply button.

FIG 3.17 Setting an animation to automatically loop.

FIG 3.18 Adding transitions between animation states.

Step 8: Play again and try walking back and forth. The walk animation will now loop.

This is much better, but the animations still snap between idle and walk. To fix this, we need to create transitions in the Animator window.

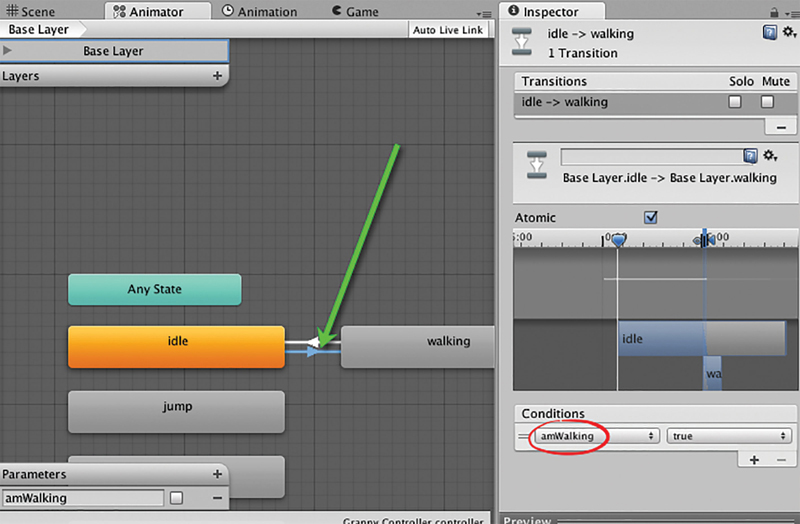

Step 9: Double-click on the Granny Controller in the Project to open the Animator window. Bring the Idle and Walking animation boxes next to each other. Right-click on the Idle animation to bring up a menu with Make Transition as an option. Select this and then click on Walking. A white line with an arrow will link the two animations. Because we want to smoothly transition from Idle to Walking and back again, right-click on Walking and make a transition line from Walking to Idle. This process is illustrated in Figure 3.18.

With these animations linked, you will be able to create smooth transitions from one to the other; however, to make this happen, you need to set up some variables.

Step 10: In the Animator window is a small box titled Parameters. Click on the + button and create a new Bool parameter called “amWalking.” This value will now be used to control when the transitions between the animations occur.

Step 11: Select the arrow that points from Idle to Walking. It will become highlighted in blue. In the Inspector, you will see the details about this transition—untick Has Exit Time. At the bottom, in the Conditions area, you want to add the amWalking parameter as a condition and set its value to true as illustrated in Figure 3.19.

By adding this parameter, you are telling Unity that when the value of amWalking is set to true, it should play a transition animation from Idle to Walking.

You will want to do the exact same thing for the transition going from Walking back to Idle. But this time, add the parameter amWalking but make its value false.

Step 12: Transitions between animations do not just happen by themselves. You need to code them. In this case, both are determined by the value of amWalking; therefore, we set the value of amWalking to true or false in the code to control what the character does. Modify the Update function in AnimControls.cs to reflect that in Listing 3.4.

FIG 3.19 Adding a parameter to control animation transitions.

Listing 3.4 Updated AnimControls

    //AnimControls.cs
    using System.Collections;
    using System.Collections.Generic;
    using UnityEngine;

    public class AnimControls : MonoBehaviour {
        Animator anim;
        float speed = 0.05f;     // distance moved per frame
        float rotSpeed = 50.0f;  // turning speed

        // Use this for initialization
        void Start () {
            anim = gameObject.GetComponent<Animator>();
        }

        // Update is called once per frame
        void Update () {
            if(Input.GetKey("up"))
            {
                // The Idle to Walking transition fires when amWalking is true
                anim.SetBool("amWalking",true);
                this.transform.position += this.transform.forward * speed;
            }
            else if(Input.GetKey("down"))
            {
                anim.SetBool("amWalking",true);
                this.transform.position -= this.transform.forward * speed;
            }
            else if(Input.GetKeyUp("up") || Input.GetKeyUp("down"))
            {
                // The Walking to Idle transition fires when amWalking is false
                anim.SetBool("amWalking",false);
            }
        }
    }

Step 13: Play. The character will now smoothly transition between animation states.

Step 14: To make the camera follow the character, in the Scene, move the view around until you look at the character from behind in a position slightly above as shown in Figure 3.20. With the camera selected in the Hierarchy, from the main menu select GameObject > Align With View. Once you have the camera in a place you would like, drag and drop it in the Hierarchy onto the Sporty_Granny game object. The camera will become a child object of the model and follow it wherever it moves.

FIG 3.20 Position the camera for a third-person view.

At this point, you might want to add in a ground plane, otherwise there will be nothing that Sporty_Granny is moving relative to and it will not appear as though she is going anywhere.

Step 15: To add turning to the character, the same format as the existing code is used to test for the left and right arrow keys. While turning, the model’s transform is simply rotated. Modify AnimControls.cs to add a couple of lines to the bottom of the Update function as shown in Listing 3.5.

Listing 3.5 Updated AnimControls

    //AnimControls.cs
    …
    float rotSpeed = 50.0f;
    …
    // Update is called once per frame
    void Update () {
        …
        if(Input.GetKey("left"))
        {
            transform.Rotate(-Vector3.up * Time.deltaTime * rotSpeed);
        }
        else if(Input.GetKey("right"))
        {
            transform.Rotate(Vector3.up * Time.deltaTime * rotSpeed);
        }
    }

Step 16: Play. The left and right arrows will turn Sporty_Granny. There are many other animations that come with the character. Try mapping these to keys in AnimControls.cs for yourself.

For more detailed tutorials on Unity’s Mecanim system, see the author’s online video series at http://bit.ly/unityanimation and https://holistic3d.com/udemy/mastering-3d-animation/.
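To picture what the Animator is doing with the amWalking parameter in the exercise above, it can help to think of it as a small state machine. The class below is a hand-rolled stand-in written for illustration only. It is not Unity's Animator, it performs no blending, and the `WalkStateMachine` name and its methods are assumptions.

```csharp
using System;

// A hand-rolled stand-in for the two-state controller built in the exercise.
// It only mirrors the Idle/Walking transitions and their conditions.
public class WalkStateMachine
{
    public string State { get; private set; } = "Idle";
    bool amWalking = false;

    // Plays the role of anim.SetBool("amWalking", value) in Listing 3.4.
    public void SetAmWalking(bool value)
    {
        amWalking = value;
    }

    // Evaluated once per frame: follow a transition whose condition is met.
    public void Update()
    {
        if (State == "Idle" && amWalking) State = "Walking";
        else if (State == "Walking" && !amWalking) State = "Idle";
    }
}
```

Setting the parameter to true and calling Update moves the machine from Idle to Walking, where it stays until the parameter is set back to false. The script only ever changes the parameter; the choice of which animation plays is left to the transitions.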

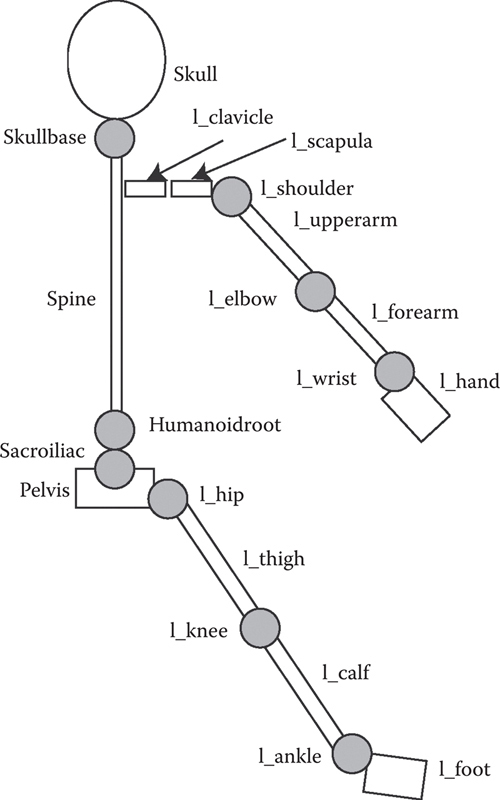

Biomechanics is a field of study that examines the mechanical movement of biological systems such as humans, plants, and animals. It plays an important part in animation describing the way in which natural hierarchical systems such as the human skeleton move. The hierarchy for a skeleton begins at the skull and divides into a number of segments, including arms, legs, hands, and feet that connect to one another by joints. When higher-level joints—such as the shoulder—move, any connected lower level segments, such as the hand, move too. In contrast, when a lower level joint or segment moves, such as a finger, any higher-level segments, such as the skull, do not move. Aristotle first wrote about biomechanics in De Motu Animalium (On the Movement of Animals).
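The parent and child relationship described above can be sketched with a very small joint class. This is a simplification that handles positions only and ignores rotation. The `Joint` class and the offsets in the usage note are hypothetical.

```csharp
using System;

// Hypothetical minimal joint: a position offset relative to its parent.
public class Joint
{
    public string Name;
    public Joint Parent;          // null for the root of the hierarchy
    public float LocalX, LocalY;  // offset from the parent joint

    public Joint(string name, Joint parent, float localX, float localY)
    {
        Name = name;
        Parent = parent;
        LocalX = localX;
        LocalY = localY;
    }

    // World position is the parent's world position plus the local offset,
    // so moving a parent carries every joint below it along.
    public (float x, float y) WorldPosition()
    {
        if (Parent == null) return (LocalX, LocalY);
        var p = Parent.WorldPosition();
        return (p.x + LocalX, p.y + LocalY);
    }
}
```

Suppose a skull at (0,10) is the root, a shoulder is offset from it by (2,−2), and a hand is offset from the shoulder by (0,−5). The hand then sits at (2,3). Shifting the shoulder's offset by 1 along x moves the hand to (3,3) while the skull stays at (0,10); changing the hand's own offset leaves both the shoulder and the skull where they were.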

There are a number of ways in which a skeletal structure can be specified for animation. The Humanoid Animation Working Group (H-Anim; http://www.web3d.org/documents/specifications/19774/V1.0/index.html) is an international project with the goal of providing a standard profile for a humanoid skeleton so it can be used consistently across a number of applications. A partial view of the standard is illustrated in Figure 3.21. Such a standard makes the process of swapping characters in and out of games and virtual environments more streamlined. Imagine being able to take your favorite character from Halo and use it in The Sims. As things stand this would not work; however, if both games followed the same rules from the H-Anim specification, it would.

FIG 3.21 A partial view of the H-Anim project’s specifications for a humanoid skeletal hierarchy showing minimal joints.

The premise behind H-Anim and any other skeletal representation is the connection of straight segments and rotatable joints. The way in which these structures move is analyzed by the applied mechanics discipline of kinematics.

Kinematics describes the motion of objects without consideration of the causes leading to the motion. It examines linear and rotational movement with respect to distance, direction, and velocity. These are the very same concepts developed in Chapter 2 through the examination of vector mathematics. Kinematics can be examined from two points of view: forward and inverse.

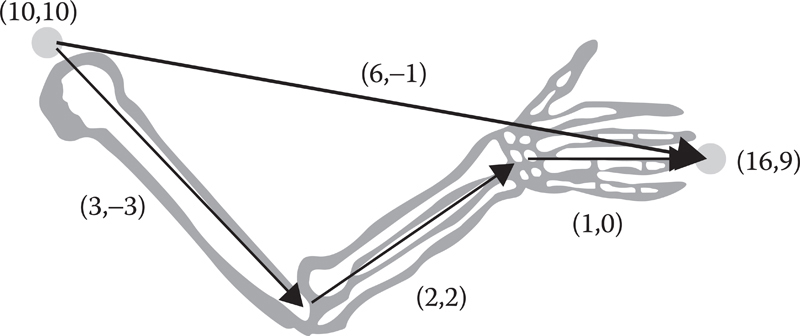

Forward kinematics calculates the final position of the end of an articulated object, given the angle and rotation of the joints and the length of the segments. To exemplify, forward kinematics can calculate the position of a character’s hand, given the rotation and angles of joints and the length of the bone segments. The hand in this case is what is known in kinematics as the end effector. To solve such a problem, simple vector mathematics is employed. Each bone has a length and direction that are specified as a vector. Adding all the vectors together will give the final destination. As illustrated in Figure 3.22, if the shoulder is positioned at (10,10) with the humerus (upper arm bone) making a vector of (3,−3), the radius and ulna (lower arm bones) making a vector of (2,2), and the hand with a vector of (1,0), the final position of the finger tips will be at (16,9).
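The arm example can be checked with a few lines of code. The following is a minimal sketch in plain C# (using System.Numerics rather than Unity's own Vector2, so it can run outside the engine); the class and method names are made up for illustration. It adds each bone vector to the shoulder position in turn, and also shows how a bone vector could be built from a length and an angle.

```csharp
using System;
using System.Numerics;

// Hypothetical helper class, not part of Unity or the book's project files.
public static class ForwardKinematics2D
{
    // Builds a bone vector from its length and its angle in degrees,
    // measured counterclockwise from the positive x axis.
    public static Vector2 Bone(double length, double angleDegrees)
    {
        double radians = angleDegrees * Math.PI / 180.0;
        return new Vector2((float)(length * Math.Cos(radians)),
                           (float)(length * Math.Sin(radians)));
    }

    // Returns the position of every joint along the chain, starting at the
    // root. The last entry is the end effector.
    public static Vector2[] JointPositions(Vector2 root, params Vector2[] bones)
    {
        var positions = new Vector2[bones.Length + 1];
        positions[0] = root;
        for (int i = 0; i < bones.Length; i++)
        {
            // each joint sits at the previous joint plus the bone vector
            positions[i + 1] = positions[i] + bones[i];
        }
        return positions;
    }

    // Convenience wrapper that returns only the end effector position.
    public static Vector2 EndEffector(Vector2 root, params Vector2[] bones)
    {
        Vector2[] positions = JointPositions(root, bones);
        return positions[positions.Length - 1];
    }
}
```

Calling EndEffector with a root of (10,10) and the bones (3,−3), (2,2), and (1,0) returns (16,9), matching Figure 3.22. The humerus vector (3,−3) is the same as Bone(Math.Sqrt(18), -45), up to floating-point rounding.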

Inverse kinematics is used in games to ensure that characters connect with the environment. For example, in The Sims, when a character interacts with an object, the game must ensure that the character is standing in the correct position to pick the object up. Although the bending over and picking up an object are premade animations, the character still needs to be positioned in the correct location to perform a convincing connection with the object. For that reason, if a Sim is required to pick up a guitar, the character will walk over to the item first and position itself such that when the pickup animation plays, it looks as though the object is being picked up.

FIG 3.22 A forward kinematic example with an arm in 2D.

Inverse kinematics is somewhat more complex than forward kinematics. It performs the reverse operation, asking what the angles of the joints must be to position the end effector at a specific location. For example, if the shoulder is at (10,10), how should the bones in the arm be positioned to place the hand at (16,9)? This is not as simple as it might first appear because, when you think about it, the joints in the arm can make a variety of angles, as shown in Figure 3.23.

Try it for yourself. Grab hold of a doorknob and keeping your shoulder at the same location in space, see how many different positions you can manipulate your arm into without removing your hand from the doorknob. These are just the movements for your arm, which consists of three basic joints and three principal segments. Imagine calculating the inverse kinematics for something with 10 or more joints.
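For the simplest case, an arm of only two segments in 2D, the problem can be solved directly with the law of cosines, and even then there are two answers (elbow bent one way or the other). The sketch below is a simplification of the three-segment arm in the text, written in plain C#; the class name, method names, and the segment lengths used in the note that follows are assumptions made for illustration. Both segment lengths are assumed to be greater than zero.

```csharp
using System;

// Hypothetical helper class illustrating analytic two-segment IK in 2D.
public static class TwoBoneIK2D
{
    // Returns the (shoulder, elbow) angle pairs, in radians, that place the
    // end of a two-segment arm on the target. The shoulder angle is measured
    // from the positive x axis; the elbow angle is relative to the upper
    // segment. An empty array means the target cannot be reached.
    public static (double shoulder, double elbow)[] Solve(
        double rootX, double rootY,
        double upperLength, double lowerLength,
        double targetX, double targetY)
    {
        double dx = targetX - rootX;
        double dy = targetY - rootY;
        double distance = Math.Sqrt(dx * dx + dy * dy);

        // too far away, or too close for the segments to fold onto it
        if (distance > upperLength + lowerLength ||
            distance < Math.Abs(upperLength - lowerLength))
        {
            return new (double, double)[0];
        }

        // law of cosines gives the size of the elbow bend
        double cosElbow = (distance * distance
                           - upperLength * upperLength
                           - lowerLength * lowerLength)
                          / (2.0 * upperLength * lowerLength);
        cosElbow = Math.Max(-1.0, Math.Min(1.0, cosElbow)); // guard rounding
        double bend = Math.Acos(cosElbow);

        var solutions = new (double, double)[2];
        double[] signs = { 1.0, -1.0 }; // elbow bent one way, then the other
        for (int i = 0; i < 2; i++)
        {
            double elbow = signs[i] * bend;
            double shoulder = Math.Atan2(dy, dx)
                - Math.Atan2(lowerLength * Math.Sin(elbow),
                             upperLength + lowerLength * Math.Cos(elbow));
            solutions[i] = (shoulder, elbow);
        }
        return solutions;
    }

    // Forward kinematics for the same arm, used to check a solution.
    public static (double x, double y) EndEffector(
        double rootX, double rootY,
        double upperLength, double lowerLength,
        double shoulder, double elbow)
    {
        double x = rootX + upperLength * Math.Cos(shoulder)
                         + lowerLength * Math.Cos(shoulder + elbow);
        double y = rootY + upperLength * Math.Sin(shoulder)
                         + lowerLength * Math.Sin(shoulder + elbow);
        return (x, y);
    }
}
```

With the shoulder at (10,10), assumed segment lengths of 4 and 3, and a target of (16,9), Solve returns two angle pairs whose elbow angles are equal and opposite. Passing either pair to EndEffector gives back (16,9) to within floating-point error. Each extra joint adds further freedom, which is why longer chains are usually solved iteratively rather than with a closed-form expression like this one.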

Although we consider the shoulder as a single joint, for the purposes of inverse kinematics, it is actually three. If you hold your arm straight out to the side, you will be able to raise and lower it (like flapping to fly), move it from side to side (like waving traffic past), and also rotate it (as though your outstretched hand is turning a knob). Each distinct movement is called a degree of freedom (DOF). Therefore, the shoulder has three DOFs: two that swing the attached segment and one that rotates it about its own axis.

FIG 3.23 Three solutions to an inverse kinematic problem.

For Research

Inverse Kinematics

For further in-depth examination of inverse kinematic systems in Unity check out http://docs.unity3d.com/Manual/InverseKinematics.html.

In the preceding examples of 3D animations, character action sequences were split into segments. In the case of 2D sprites, only one sequence was given (walking); in the 3D examples, each action was contained in its own separate file.

It is not always the case that animations come to game developers in this way. If you were to download a fully animated character from a site such as TurboSquid, the character may come as a single file containing any number of animation sequences. In the case of 2D sprites, it is not uncommon to find all the action sequences for one character in a single texture atlas.

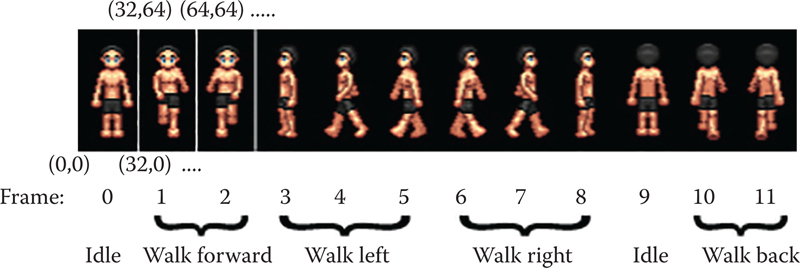

3.7.1 Single 2D Sprite Actions

As we have seen, a texture atlas is a convenient and efficient way to store sprites. More often than not, all the animations for a single character are kept in one texture atlas. This requires pixel management on the part of the programmer to ensure that the correct part of the texture is displayed at the right time. Figure 3.24 shows a texture atlas with several idle and walking animation frames. Although it is not strictly necessary to have the frames belonging to the same animation next to each other in the texture, it is far easier to program if they are in sequence and packed together. It is also easier if each frame is the same size. In the image shown, each frame is 32 × 64.

Individual animations are specified with a starting frame and a number of frames; for example, the walk left animation starts at frame 3 and is three frames in length. By knowing the fixed width for a frame, the exact pixel value for the start of an animation sequence can be calculated. In this case, the walk left animation begins at frame 3, and therefore the starting x pixel location would be 3 × 32 = 96.
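The arithmetic above can be sketched as a small helper. This is an illustration in plain C#, not code from the SpriteManager project; the class and method names are hypothetical. It assumes that the frames sit in a single row, that frames are numbered from 0, and that elapsed time is never negative.

```csharp
using System;

// Hypothetical helper for locating frames in a single-row texture atlas.
public static class SpriteAtlas
{
    // x pixel at which a frame starts, given a fixed frame width
    public static int FrameStartX(int frameIndex, int frameWidth)
    {
        return frameIndex * frameWidth;
    }

    // Pixel rectangle (x, y, width, height) covering one frame.
    public static (int x, int y, int width, int height) FrameRect(
        int frameIndex, int frameWidth, int frameHeight)
    {
        return (FrameStartX(frameIndex, frameWidth), 0, frameWidth, frameHeight);
    }

    // Atlas frame to display for a looping animation that began
    // elapsedSeconds ago and plays at framesPerSecond.
    public static int CurrentFrame(
        int startFrame, int frameCount,
        double framesPerSecond, double elapsedSeconds)
    {
        int step = (int)Math.Floor(elapsedSeconds * framesPerSecond) % frameCount;
        return startFrame + step;
    }
}
```

For the walk left animation, FrameStartX(3, 32) returns 96, and FrameRect(3, 32, 64) describes the 32 × 64 block of pixels that starts there. CurrentFrame steps through frames 3, 4, and 5 and then wraps back to frame 3.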

FIG 3.24 Joined frames for four separate animations. (Sprite art thanks to Dezire Soft).

Unity Specifics

Sprite Dimensions

If the sprite atlas does not have power of 2 dimensions, Unity will squash and stretch it to make it so. This will produce an undesirable effect with the frame locations. To correct this, select the texture in the Project and in the Inspector set the Texture Type to Advanced and the Non Power of 2 to None.

Unity Hands On

Managing Animated Sprite Sequences

Step 1: Download Chapter Three/SpriteManager.zip from the website. Open the project in Unity and open the WalkingSprite scene. You will find a scene with four animated sprites in it. These sprites were created from a sprite sheet using a grid to cut the images up as was performed in a previous hands-on session.

Play. You will notice that all the sprites are shown and the animations are playing. Further investigation will reveal that all the sprites are positioned in the scene at exactly the same position.

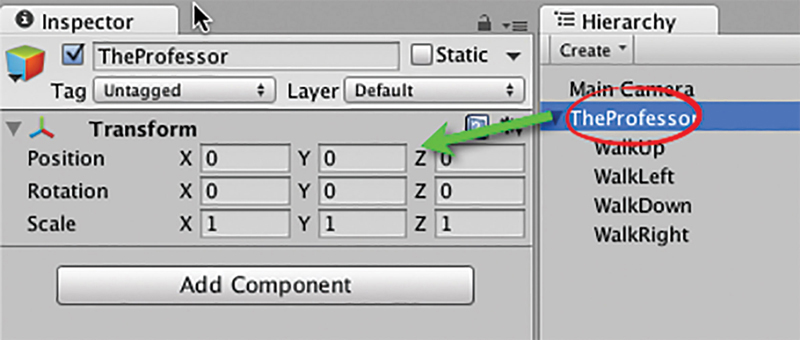

Step 2: Create an empty game object in the Hierarchy and child all the sprites to it as shown in Figure 3.25. Rename the empty object TheProfessor. The position in space of TheProfessor does not matter as long as each sprite is at position (0,0,0). This will ensure that, relative to TheProfessor, the sprites are at its origin.

Wherever we move TheProfessor, the sprites will now automatically follow.

Step 3: Create a new C# file called SpriteManagement.cs. Open SpriteManagement.cs in the script editor and add the code in Listing 3.6.

FIG 3.25 Adding sprite objects to an empty game object.

Listing 3.6 Controlling the movement of a sprite with the arrow keys

    //SpriteManagement.cs
    using System.Collections;
    using System.Collections.Generic;
    using UnityEngine;

    public class SpriteManagement : MonoBehaviour {
        float speed = 0.01f;

        // Update is called once per frame
        void Update () {
            if(Input.GetKey (KeyCode.UpArrow))
            {
                this.transform.Translate(0,speed,0);
            }
            else if(Input.GetKey (KeyCode.DownArrow))
            {
                this.transform.Translate(0,-speed,0);
            }
            else if(Input.GetKey (KeyCode.LeftArrow))
            {
                this.transform.Translate(-speed,0,0);
            }
            else if(Input.GetKey (KeyCode.RightArrow))
            {
                this.transform.Translate(speed,0,0);
            }
        }
    }

Step 4: Attach the script to TheProfessor and Play. The sprite will be moveable with the arrow keys. You will still see all sprites playing.

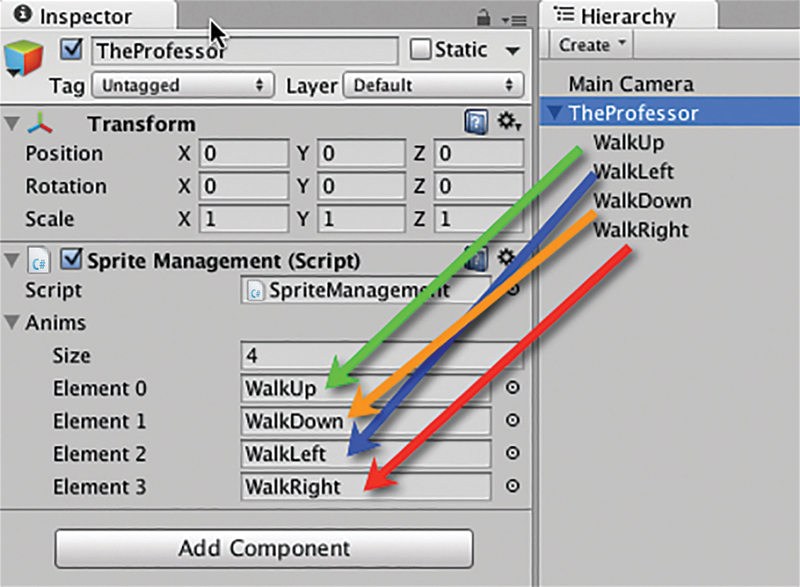

Step 5: Next we need to turn the sprites on and off depending on the key being pressed. For example, if the up arrow is being pressed and the character is moving up the screen, then that particular animated sprite should be the only one visible. To achieve this, our code needs access to each animation. We can put them into a simple array by modifying SpriteManagement.cs as shown in Listing 3.7.

Listing 3.7 Creating an animation array

    public class SpriteManagement : MonoBehaviour {
        float speed = 0.01f;
        public GameObject[] anims;

        // Update is called once per frame
        void Update () {
            …

Save the code and return to the Inspector. You will find the new array visible in the script properties. As shown in Figure 3.26, set the array size to 4 and then drag and drop each sprite from the Hierarchy into a position in the array. The order the sprites appear in the array will matter to the way you program their appearance, therefore add them in the same order as shown in Figure 3.26.

Step 6: Now that the script knows about the animated sprites, we can turn them on and off as needed. A simple function can be employed to turn off all the sprites, which are then turned on as required. If no keys are being pressed, we should pause the current sprite animation on its last displayed frame so the character does not simply disappear. To achieve this, modify SpriteManagement.cs by adding the code shown in Listing 3.8.

FIG 3.26 Creating an array for sprite animations.

Listing 3.8 Creating class structures for strong values

    // Updated SpriteManagement.cs
    using System.Collections;
    using System.Collections.Generic;
    using UnityEngine;

    public class SpriteManagement: MonoBehaviour {
        float speed = 0.01f;
        public GameObject[] anims;
        int currentAnim = -1;

        // Use this for initialization
        void Start () {
            TurnAllAnimationsOff();
        }

        void TurnAllAnimationsOff()
        {
            foreach(GameObject i in anims)
            {
                i.SetActive(false);
            }
        }

        // Update is called once per frame
        void Update () {
            //if there is a current animation set its speed to 1
            if(currentAnim != -1)
                anims[currentAnim].GetComponent<Animator>().speed = 1;

            if(Input.GetKey (KeyCode.UpArrow))
            {
                //before turning on this animation
                //turn off the previous if it is a different one
                if(currentAnim != 0 && currentAnim != -1)
                    anims[currentAnim].SetActive(false);
                //move the character
                this.transform.Translate(0,speed,0);
                //make the appropriate sprite active and start playing
                anims[0].SetActive(true);
                //update the number of the current animation
                currentAnim = 0;
            }
            else if(Input.GetKey (KeyCode.DownArrow))
            {
                if(currentAnim != 1 && currentAnim != -1)
                    anims[currentAnim].SetActive(false);
                this.transform.Translate(0,-speed,0);
                anims[1].SetActive(true);
                currentAnim = 1;
            }
            else if(Input.GetKey (KeyCode.LeftArrow))
            {
                if(currentAnim != 2 && currentAnim != -1)
                    anims[currentAnim].SetActive(false);
                this.transform.Translate(-speed,0,0);
                anims[2].SetActive(true);
                currentAnim = 2;
            }
            else if(Input.GetKey (KeyCode.RightArrow))
            {
                if(currentAnim != 3 && currentAnim != -1)
                    anims[currentAnim].SetActive(false);
                this.transform.Translate(speed,0,0);
                anims[3].SetActive(true);
                currentAnim = 3;
            }
            else
            {
                //if there is no key being pressed, set the
                //current animation speed to 0
                //in other words, pause it.
                if(currentAnim != -1)
                    anims[currentAnim].GetComponent<Animator>().speed = 0;
            }
        }
    }

Save and Play. The character will now switch between animations as it moves around the screen. To add more animations, you would insert extra sprites into the array and then more if statements testing for key presses.

3.7.2 Single-Filed 3D Animations

Early 3D FPS games such as Quake 3 Arena (Q3A) use a single track of 3D animation that defines many separate animations in the same way as an animated sprite texture atlas. Animations for a character in Q3A, a Quakebot, for example, are played from specified frames; some are looped and some are not. To create a series of animations for a Quake character, a number of different animations need to be specified and then glued together. For example, the running animation might go from frame 30 to frame 45, and the swimming animation might go from frame 46 to frame 57.

Animations in Q3A must be set up in a specific order. The order and length of the animations are displayed in Table 3.1.

Table 3.1 Order and Frame Size of Animations Used in Q3Aᵃ

| Animation | Length (in frames) | Description |
|---|---|---|
| BOTH_DEATH1 | ~30 | Full body animation: death scenes and final death poses. |
| BOTH_DEAD1 | ~1 | ″ |
| BOTH_DEATH2 | ~30 | ″ |
| BOTH_DEAD2 | ~1 | ″ |
| BOTH_DEATH3 | ~30 | ″ |
| BOTH_DEAD3 | ~1 | ″ |
| Category: Upper Body | | |
| TORSO_GESTURE | ~45 | e.g., taunting |
| TORSO_ATTACK | 6* | Attack other player |
| TORSO_ATTACK2 | 6* | ″ |
| TORSO_DROP | 5* | Drop arms as to change weapon |
| TORSO_RAISE | 4* | Lift up new weapon |
| TORSO_STAND | 1* | Idle pose for upper body |
| TORSO_STAND2 | 1* | ″ |
| Category: Lower Body | | |
| LEGS_WALKCR | ~10 | Crouched while walking forward |
| LEGS_WALK | ~15 | Walking forward |
| LEGS_RUN | ~12 | Running forward |
| LEGS_BACK | ~10 | Back pedaling |
| LEGS_SWIM | ~10 | Swimming |
| LEGS_JUMP | ~10 | Jumping up forward |
| LEGS_LAND | ~6 | Landing after jump |
| LEGS_JUMPB | ~10 | Jumping up backwards |
| LEGS_LANDB | ~6 | Landing after backwards jump |
| LEGS_IDLE | ~10 | Idle pose for lower body |
| LEGS_IDLECR | ~10 | Crouched idle pose for lower body |
| LEGS_TURN | ~8 | Turning on the spot |

ᵃ All animation lengths are approximations, with the exception of those marked with a *, which need to be exact.

Table 3.2 A Partial Animation Configuration File

| Animation | First Frame | Number of Frames | Times to Loop | Frames per Second |
|---|---|---|---|---|
| BOTH_DEATH1 | 0 | 30 | 0 | 25 |
| BOTH_DEAD1 | 29 | 1 | 0 | 25 |
| TORSO_GESTURE | 90 | 40 | 0 | 15 |
| TORSO_ATTACK | 130 | 6 | 0 | 15 |

As shown in Table 3.1, upper and lower animations are separate with the exception of death scenes. Therefore, movement of the upper body is independent of the lower body. This allows for different animation effects by combining differing animation parts. However, this can be a slight problem when two unrelated animations are combined; for example, an upper TORSO_ATTACK combined with a LEGS_SWIM would look strange. Although this system of animation has the drawback of creating inappropriate movements, it does provide for an overall greater number of animations.

Because many of the animation sequences do not have a defined length, an animation configuration file needs to be generated for the Q3A game engine so that it can locate the correct animation progressions. The configuration file is called animation.cfg and is loaded into the Q3A engine with the appropriate model. The configuration file contains information on the first frame of the sequence, the length, in frames, of the sequence, the number of times to loop the animation, and how fast to play it. The file contains this information for each animation sequence in the order shown in Table 3.2.
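To make the role of the configuration file concrete, here is a minimal sketch of how such a file could be read. It is plain C# written for illustration, not code from the Q3A engine. It assumes each entry is a line of four whitespace-separated integers in the column order of Table 3.2, optionally followed by a // comment naming the animation; the class and property names are hypothetical.

```csharp
using System;
using System.Collections.Generic;

// One animation sequence as described by a line of the configuration file.
public sealed class AnimationEntry
{
    public int FirstFrame;
    public int FrameCount;
    public int Loop;
    public int FramesPerSecond;

    // last frame of the sequence on the shared animation track
    public int LastFrame => FirstFrame + FrameCount - 1;

    // time taken to play the sequence once
    public double DurationSeconds => (double)FrameCount / FramesPerSecond;
}

// Hypothetical reader for the assumed file layout described above.
public static class AnimationConfig
{
    public static List<AnimationEntry> Parse(string text)
    {
        var entries = new List<AnimationEntry>();
        foreach (string rawLine in text.Split('\n'))
        {
            // strip a trailing comment such as "// BOTH_DEATH1"
            string line = rawLine;
            int comment = line.IndexOf("//", StringComparison.Ordinal);
            if (comment >= 0) line = line.Substring(0, comment);

            string[] fields = line.Split(
                new[] { ' ', '\t', '\r' }, StringSplitOptions.RemoveEmptyEntries);
            if (fields.Length != 4) continue; // not an animation entry

            var values = new int[4];
            bool allNumbers = true;
            for (int i = 0; i < 4; i++)
            {
                allNumbers &= int.TryParse(fields[i], out values[i]);
            }
            if (!allNumbers) continue;

            entries.Add(new AnimationEntry
            {
                FirstFrame = values[0],
                FrameCount = values[1],
                Loop = values[2],
                FramesPerSecond = values[3]
            });
        }
        return entries;
    }
}
```

Parsing the four rows of Table 3.2 in this form gives four entries in file order. The TORSO_GESTURE entry, for instance, covers frames 90 to 129, and BOTH_DEATH1 plays for 30 / 25 = 1.2 seconds. Because the entries carry no names of their own in this layout, the engine relies on the fixed ordering of Table 3.1 to know which line belongs to which animation.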

A model is defined as three separate parts: head, torso, and legs. Each part of the model is linked internally by what is known as a tag. Tags control the locations at which the parts of the model are connected. Because each part is dealt with separately, the tags essentially join them together. There are three principal tags in a Q3A model: tag_head (which joins the head to the torso), tag_torso (which joins the upper body to the lower body), and tag_weapon (which provides a location to attach the weapon model). For games such as Q3A that allow players to modify and create their own character models, having a standard format such as this is crucial in ensuring that the models are animated and rendered consistently.

Secondary animation refers to movement that occurs as a result of primary animation. For example, when a character walks, in response to the movement, his hair might move and his muscles ripple. If a character shoots a weapon, you would expect a recoil action. Secondary animation follows from the laws of physics, in particular Newton's third law, which states that every action has an equal and opposite reaction. Without the extra animation, a character can appear static and unrealistic.

Depending on the level of secondary animation, it can be processor intensive and not feasible in a real-time game environment. The avatars used in Quake 3 Arena and Unreal Tournament are very simple, with tight-fitting clothing and close-cut hair. This is not because the artist could not be bothered or at the time did not have the technology to create highly realistic models—it is simply because animating all the extra elements with secondary animation was not feasible on the hardware available at the time these games were released. Even today, hair and cloth in 3D real-time environments are limited. A very nice example of the secondary animation of cloth can be seen in Assassin’s Creed. The cloak and ropes of the main character move in response to the character’s movements and the wind.

As hardware capabilities increase, so will the quantity and quality of secondary animation.

Other animations that could also be considered secondary to the main character are those that bring the environment to life, for example, trees swaying in the breeze, water running in a river, other characters walking down the street, or even the main character’s own clothing, such as a cape.

Unity Hands On

Adding Secondary Motion

In this exercise, we will add a cape to a model using the cloth component and a preexisting cape model.

Download the file Chapter Three/SecondaryAnimation.zip. Open the scene called secondaryAnim.

In the Project, you will find an asset called cape. Drag this into the scene next to the character and reposition it to sit on the character’s back. Make the cape a child of the Sporty_Granny > Hips > Spine > Spine1 > Spine2 > Neck object. This will ensure the cape is attached to the character and moves when it does.

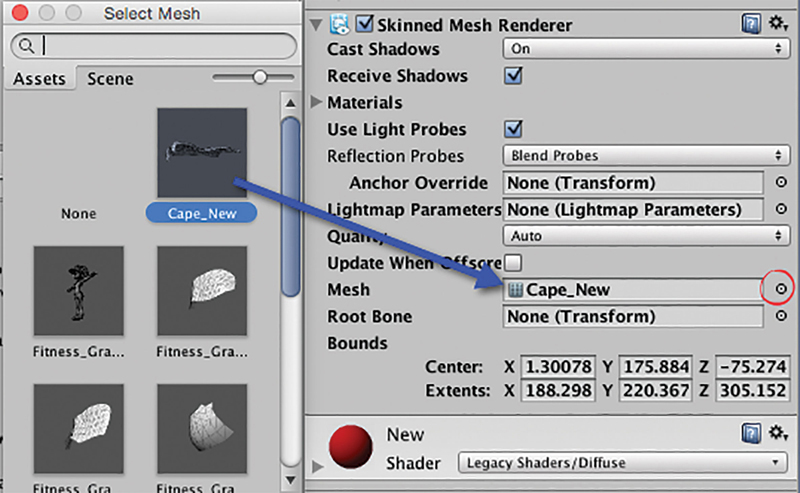

With the cape selected in the Hierarchy, use the Add Component button in the Inspector to add a Physics > Cloth component. A new Skinned Mesh Renderer will also be added. If the old Mesh Renderer is still there, delete it. At this point, the cape will turn invisible. That is because the old renderer is gone. The new Skinned Mesh Renderer needs to know about the mesh it is drawing. Set its value to the cape’s mesh as shown in Figure 3.27. You can do this by selecting the small round icon to the right of the property and then locating the cape’s mesh in the window that pops open. Once assigned, the cape will reappear in the Scene.

With the cape selected, drag Sporty_Granny into the Cloth Capsule Collider as shown in Figure 3.28. This will cause the character’s body to impact the movement of the cape.

FIG 3.27 Assigning a mesh to a cloth’s skinned mesh renderer.

FIG 3.28 Setup for a character and cloth.

Next, select the Cloth’s Edit Constraints button. From the window that opens, select Paint. The cloth’s vertices will now be black dots. Use the mouse to paint over the vertices at the top of the cloth. They will turn green. These are the fixed vertices that will ensure the cloth remains pinned to the character.

Save and play. The character will be able to run around followed by a billowing cloth. If you find the cloth at an unnatural distance from the character, you may have to shrink the character’s capsule collider.

This chapter examined 2D and 3D animation principles and techniques. As moving and animated objects are key elements in games, understanding how a game engine manages and manipulates these assets is essential to including them effectively in games.