This book has come from a place where open source software is a great success and a transformational technology force. It would be hard to credibly argue that open source ideas and practices haven’t greatly influenced how software gets developed and how cooperative innovation happens more broadly.

But even the most positive storylines embed other narratives that don’t make it into press releases. Some of these are just blemishes. Others are more fundamental questions about balancing the desires of users and communities, creating sustainable development models, and continuing to flourish in an IT landscape that has fundamentally changed since open source first came onto the scene.

A Positive Feedback Loop

Perhaps the most cursory critique of the open source model is to point out that it’s not always effective or that it has failed to make significant inroads in many areas.

Or, as Matt Asay has observed, that most open source projects rely on a small group of core contributors—often working for a vendor. The open source community development model is something of an idealization in many cases.1

There’s never going to be a singular approach to creating things that are useful to individuals and organizations. But it’s worth asking which aspects of open source work less well than they could.

The Linux Foundation’s Jim Zemlin describes open source software development in terms of a positive feedback loop linking projects, solutions, and value. Maintaining the links in this feedback loop depends on there being answers to some important questions.

One concerns successful business models. I’ve already touched on this question but will come back to it in depth later in this chapter, because it’s a defining question for open source success or failure. Other questions relate to aligning with communities, maintaining appropriate levels of control, and finding the right balance between sharing and consuming.

Projects

We’ve already covered many of the aspects of projects and their associated communities that it’s important to get right. But, as Zemlin notes, there are always ways to improve.

“How do we create more secure code in upstream projects? How do we take the responsibility of this code being used in important systems that impact the privacy or the health of millions of individuals?” he asks. He adds that you may want to think explicitly about how your project fits into this feedback loop. “When I build my next project or a product, I should say, that project will be in line with, in a much more effective way, the products that I’m building.”

Perhaps the biggest mindset shift over the past few years has been a broader recognition of the relationship of projects to value. As Zemlin puts it: “To get the value, it’s not just consumed, it is to share back. There’s not [just] some moral obligation, although I would argue that that’s also important. There’s an actual incredibly large business benefit to sharing as well.” That said, it’s hard to argue that free riding on the open source contributions of others is a solved problem for the open source model, given how one-sided the participation of many organizations, including large IT vendors and cloud providers, can be.

Solutions and Products

Other questions relate to how products and other solutions work as part of an open source ecosystem. For example, there are many nuances involved in balancing competing requirements for stability and new technology across projects and commercial products. There is no single right answer here either. Tensions will always exist between upstream projects and downstream products—which in turn creates frictions that wouldn’t exist in an idealized open source development model.

At the same time, commercial adoption and a community of support providers often need to move forward together and create a stable base upon which users and buyers are comfortable putting a project into production. Balancing the requirements for projects and products is hard. But finding a balance that works (whatever the compromises required) is necessary.

Successful products and other commercial solutions are often the necessary economic input into the open source feedback loop. Without that input, that is, customers paying for something they need, the organizations in a position to supply what moves a project forward, such as developer salaries, may see no value created for themselves.

Value

It’s a feedback loop and, as Zemlin argues, all three cogs are important. However, even if open source as a development model and means for cooperation isn’t solely about business value, value is an important point to probe if open source is going to remain one of the ultimate game changers.

Benefits such as societal good, better interoperability, and the creation of new companies are all well and good. But systematic success for open source depends on contributors to projects and their users realizing value (whether efficiency, speed of innovation, or reliability) that increases their opportunity to deliver profits. It also depends on those contributors and users reinvesting patches, features, and resources into the project community, including by hiring more developers and others with expertise.

Value creation and reinvestment are central to the forces and market trends that could impinge on the viability of open source as an ongoing development model. These include macro changes in the IT industry, the shifting expectations and preferences of software users, and the effectiveness of business models that include open source software (or maybe even software more generally) as a central element.

The IT Industry Has Changed

Open source remains an instrument for preserving user options, portability, and sustainability.

When everything is proprietary, software can’t really evolve in the same way to be a platform for other platforms. Look at the way Linux has evolved to be the foundation for new types of software and new types of open source platforms and technologies. And this in turn has helped sustain the value of open source overall, which arguably comes most of all from its effectiveness as a software innovation accelerator.

How can open source software continue to sustain itself moving forward?

We live in a world increasingly distant in time from the Unix wars, which played a significant role in the genesis of open source. Of course, open source has continued to grow and thrive even though the importance of source code for supporting a fragmented collection of hardware platforms is mostly far behind us. Horizontal software stacks built on standardized hardware are the norm. But the IT industry of 2018 is a much different one from that of 2000 or even, really, 2010.

The Rise of the “Cloud”

Google’s then-CEO Eric Schmidt is often credited with coining the term “cloud computing” in 2006, although its first appearance was probably in a Compaq Computer business plan a decade earlier. As is so often the case with technology, though, closely related concepts had been germinating for decades. For example, in a 1961 speech given to celebrate MIT’s centennial, artificial intelligence pioneer John McCarthy introduced the idea of a computing utility.

As recounted by, among others, Nick Carr in his book The Big Switch: Rewiring the World, from Edison to Google (W. W. Norton & Company, 2013), the utility take on cloud computing metaphorically mirrored the evolution of power generation and distribution. Industrial Revolution factories in the late 19th century built largely customized, and decentralized, systems to run looms and other automated tools, powered by water or steam turbines.

These power generation and distribution systems, such as the machine works of Richard Hartmann in Figure 6-1, were a competitive differentiator; the more power you could produce, the more machines you could run, and the more goods you could manufacture for sale. The contrast to the electric grid and huge centralized power generation plants, such as the Hoover Dam shown in Figure 6-2, is stark.

Until recently, we’ve been living in an era in which the pendulum had clearly swung in favor of similarly distributed computing. Computers increasingly migrated from the “glass house” of IT out to the workgroups, small offices, and desktops on the periphery. Even before Intel and Microsoft became early catalysts for this trend’s growth, computers had been dispersing to some degree for much of the history of computing. The minicomputer and Unix revolutions were among the earlier waves headed in the same general direction.

You could think of these as analogs to the distributed power systems of the Industrial Revolution. They were also part and parcel of the environment that gave rise to open source. Where infrastructure is a competitive differentiator, the parts and knowledge to construct that infrastructure are valuable.

Machine works of Richard Hartmann in Chemnitz, Germany. The factories of the Industrial Revolution were built around localized and customized power sources that, in turn, drove local mechanical systems deriving from those power sources. Source: Wikimedia, in the public domain.

The Hoover Dam (formerly Boulder Dam). Electric power as a utility has historically depended on large centralized power sources. Source: Across the Colorado River, 1942, by Ansel Adams. In the public domain from the National Archives.

Why the Cloud Matters to Open Source

This shift back toward centralized computing creates a new type of vertically integrated stack. Greg Papadopoulos, one-time chief technology officer of Sun Microsystems, suggested, one suspects hyperbolically and with an eye toward something IBM founder Thomas J. Watson probably never said, that “the world only needs five computers,” which is to say there would be “more or less, five hyperscale, pan-global broadband computing services giants” each on the order of a Google.

Open source projects are ubiquitous throughout this new stack. Even Microsoft, now under CEO Satya Nadella, supports Linux workloads and has dropped the overt hostility to open source that once characterized the company. (Former CEO Steve Ballmer once likened Linux to a “cancer” on the basis of its copyleft GPL license.)

However, remember the feedback loop. Some cloud giants have indeed made significant contributions to open source projects. For example, Google created Kubernetes, the leading open source project for managing software containers, based on the infrastructure it had built for its internal use. Facebook has open sourced both software and hardware projects.

But, for the most part, these dominant companies use open source to create what are largely proprietary platforms far more than they reinvest to perpetuate ongoing development in the commons. And they’re sufficiently large and well-resourced that they mostly don’t depend on cooperative invention at this point.

It’s easy to dismiss free riding as a problem given that organizations are missing out in some ways if they do so. However, to the degree that large tech companies, both cloud providers and others such as Apple, take far more from the open source commons than they contribute back, this at least raises concerns about open source sustainability.

Out at the Edge

It’s not wholly accurate to say that computing is recentralizing though. You probably have something in your pocket that has the capabilities of a supercomputer of not that many years ago. You may also have a talking assistant in your kitchen. Or a thermostat that’s connected to the Internet. Most computers these days aren’t in a datacenter.

However, all that distributed computing has important differences from the personal computer era of distributed computing.

Your iPhone is a walled garden that only lets you browse the Web or install Apple-approved applications on an Apple-developed software stack. The nature of the process effectively makes open source, at least in the sense of an iterative open development process, impossible.

Android phones run a variant of Linux, but you still don’t have the ability to modify them in the same manner as a PC. Sensors and other “edge” devices within the broad Internet-of-Things space can similarly run open source software but often depend on centralized services and, in any case, are usually designed as black boxes that resist user tinkering.

Thus, we increasingly find ourselves in a computing landscape composed of, on the one hand, monolithic computing services at the core and, on the other hand, mostly locked-down appliances on the edge. Neither is really a great recipe for the sort of cooperative infrastructure software development where open source has thrived.

Where’s the Money?

The commercial value that can be extracted from software has also just declined more broadly. This might seem an odd assertion given how software and other technologies are indisputably central to more and more business processes and customer services. Stephen O’Grady explores this apparent contradiction in The Software Paradox: The Rise and Fall of the Commercial Software Market (O’Reilly Media, 2015). He argues that “Software, once an enabler rather than a product, is headed back in that direction. There are and will continue to be large software licensing revenue streams available, but traditional high margin, paid upfront pricing will become less dominant by the year, gradually giving way to alternative models.”

In other words, software enables organizations to extract value from other things that they sell. Apple has been so successful, in part, because it sells a complete integrated product of which the operating system is an incidental part. And, indeed, app developers for iOS find it a challenging business because offering apps solely through up-front purchases rarely works.

Open source models arguably exacerbate the issue. Stephen O’Grady has also written that “The numbers, on the surface, would indicate that the various economic models embraced by open source firms are not in fact favorable to the firms that embrace them. Closed source vendors are typically appalled at the conversion rates of users/customers at firms like JBoss (3%) and MySQL (around 1%, anecdotally, on higher volume)—and those firms are more or less the most popular in their respective product categories. Even with the understanding that these are volume rather than margin plays, there are many within the financial community that remain skeptical of the long term prospects for open source (even as VC’s pour money in in hopes of short term returns).”2

He wrote those words over 10 years ago, but Red Hat’s success notwithstanding, there remains a dearth of companies that have been able to turn pure open source plays into significant revenues and profits. Red Hat has done many things right in terms of both strategy and execution. Analyst Krish Subramanian attributes it “to 1) Being the first to understand OSS model and establish themselves well before the industry woke up to OSS 2) Picking the right OSS projects and contributing code to these projects. You can’t win in OSS without code contribution.”

Over that period, the industry has probably also developed a better appreciation for some approaches to business using open source that don’t work. Developer tools have always been a tough business, even with expensive proprietary products. Selling open source subscriptions for client devices is also hard; the lack of good business models isn’t the only reason that we’ve never seen “The Year of the Linux Desktop,” but it hasn’t helped. We see similar dynamics with the Internet-of-Things. The webcams, temperature sensors, and smoke alarms may be running open source software, but essentially no one is willing to pay for that, especially in the consumer market.

In any case, building a significant business around selling open source software is clearly not straightforward, or a lot more companies would have done so. It’s also worth observing that even Red Hat, at about $3 billion in annual revenues as of 2018, is still quite small compared to either traditional proprietary software vendors or the new cloud vendors who depend upon open source. Amazon Web Services alone has over $20 billion in (rapidly growing) annual revenue.

What Users Want

User needs have also changed. In some respects, this applies more to individual consumers than to corporate buyers. But, as IT consumerizes, the boundaries between those groups blur and fade to the point where it can sometimes make sense to think about users broadly.

The New Bundles

Bundling is a broad concept, and there’s perhaps no better historical example than newspapers.

Newspapers bundle various news topics like syndicated and local news, sports, and political reporting, along with advertising, classifieds, weather, comic strips, shopping coupons, and more. Many of the economic woes of the newspaper can be traced to the splitting of this bundle. Craigslist took over the classifieds, and made them mostly free. The move online severed the connection between news and local ads. While ads run online as well, the economics are something along the lines of print dollars devalued to digital dimes.

As NYU professor Clay Shirky wrote in 2008: “For a long time, longer than anyone in the newspaper business has been alive in fact, print journalism has been intertwined with these economics. The expense of printing created an environment where Wal-Mart was willing to subsidize the Baghdad bureau. This wasn’t because of any deep link between advertising and reporting, nor was it about any real desire on the part of Wal-Mart to have their marketing budget go to international correspondents. It was just an accident. Advertisers had little choice other than to have their money used that way, since they didn’t really have any other vehicle for display ads.”3

Software, both historically and today, has also been a bundle in various respects. As O’Grady observed in The Software Paradox, software used to be mostly something that you wrote in order to be able to sell hardware. It wasn’t something considered valuable on its own. In many respects, we’ve increasingly returned to that way of thinking whether we’re talking iOS on an iPhone or Linux on a webcam.

One of the core challenges around business models built directly on open source software is that open source breaks software business models that depend on bundling. If all you care about is the code in the upstream project, that’s available to download for free. An open source subscription model doesn’t require you to keep paying in order to retain access to an application as a proprietary subscription does. You’ll lose access to professional support and other benefits of the subscription, but the software itself is gratis. In fact, open source is arguably the ultimate force for unbundling. Mix and match. Modify. Tinker. Move.

Online consumer services in particular have also just led to a mindset that you don’t have to directly pay for many things. Your data is being mined and you’re being targeted by advertising, but your credit card isn’t being billed monthly. Or, you’re buying something else from the company in question and the software you use is simply in support of that. There’s a widely held expectation that, if it’s digital, it should be free. This echoes The Software Paradox again.

Users Want Convenience

There’s another aspect of bundles worth exploring.

Bundles, like other aspects of packaging, are prescriptive. They can be seen as a response to The Paradox of Choice: Why More Is Less (HarperCollins), a 2004 book by American psychologist Barry Schwartz, in which he argues that consumers don’t seem to be benefiting psychologically from all their autonomy and freedom of choice. Whether or not one accepts Schwartz’s disputed hypothesis, it’s certainly the case that technology options sometimes seem to proliferate endlessly with less and less real benefit to choosing one tech over another.

When sellers create bundles they also do so for a variety of reasons that often have to do with getting people to pay for stuff that they don’t really want to pay for. The auto manufacturer who will only install leather seats if you also buy a sunroof and an upgraded trim package isn’t doing so primarily because it wants to make life easier for the buyer. It’s doing so because not everyone who really wants leather seats would normally be willing to also pay for those other options given the choice.

But the experience and convenience of a well-designed bundle that extends beyond the core product are at least a side effect in many cases.

Unbox a computer a couple of decades ago and, if you were lucky, you might find a sheet of paper easily identifiable as a “Quick Start” guide. (Which itself was an improvement over simply needing a field engineer to swing by.)

Today the unboxing experience of consumer goods like Apple’s iPhone has become almost a cliché, but it’s no less real for that. In the words of Grant Wenzlau, “Packaging is no longer simply about packaging the object—it is about the unboxing experience and art directing. This is where the process starts for designers today: you work backward from the Instagram image to the unboxing moment to the design that serves it.”4

Stephen O’Grady also writes about the power of convenience that bundled services can help deliver. “One of the biggest challenges for vendors built around traditional procurement patterns is their tendency to undervalue convenience. Developers, in general, respond to very different incentives than do their executive purchasing counterparts. Where organizational buyers tend to be less price sensitive and more focused on issues relating to reliability and manageability, as one example, individual developers tend to be more concerned with cost and availability—convenience, in other words.”5

He argues that, while Napster became popular in part because people could download music for free, it was also more convenient than driving to the mall and buying an album on a CD. Today, streaming accounts for the majority of music industry revenue with over 30 million subscribers in the United States.

Open source software can still have a good user experience, of course. And some communities provide bundles, such as Fedora for Linux and OpenShift Origin for container platforms. However, these are (at least in part) best efforts by the community and, though reasonably stable and satisfactory for some use cases, they have no support model ensuring that the parts work well together or that integration problems will be fixed promptly, as the commercial products deriving from these projects do.

Using open source software doesn’t need to be about self-supporting a bag of parts downloaded off some repository on the Internet. That’s what commercial software subscriptions are for: turning unsupported projects into supported and curated products. Nonetheless, there’s at least a tension between the idea of open source software as malleable, customizable, and free (in either meaning of the word) and a bundle that, by design, is prescriptive, abstracts away underlying complexity, and explicitly excludes technologies not deemed sufficiently mature for the target buyer.

Hiding Complexity

The history of the computer industry has been one of continuously layering abstractions. The operating system was one of the first. Others have included virtual memory, logical addressing in disks, and virtualization in its many forms.

This can lead to abstracting away the infrastructure components where active open source communities have historically created so much software value.

We see an example in one of the most recent software trends, nascent as of this writing: what usually goes by the monikers “serverless computing” and Functions-as-a-Service. (There’s still a server, of course, but the idea is to make it invisible to developers.) This abstracts away underlying infrastructure to an even greater degree than containers, allowing suitable functions (think encoding an uploaded video file) to run in response to events and other triggers. As with many technologies, the general concept isn’t exactly a new one. For example, IBM CICS, invented 50 years ago, provides services that extend or replace the built-in functions of the operating system.

Although serverless is currently most associated with Lambda at Amazon Web Services, a variety of open source projects in this space, such as OpenWhisk and Knative, are also underway. The overall goal can be thought of as almost making the packaging invisible while letting developers implement an idea with as little friction as possible.

The idea is a sound one. In some respects, serverless extends the container concept to further mask complexities that aren’t relevant to many developers writing applications. However, at the same time, it at least raises the question of whether ongoing community investments in platform software will decline as the value and attention shift to code that’s specific to individual businesses.
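To make the event-triggered model concrete, here is a minimal Python sketch, assuming a toy in-process registry rather than any real platform. The names `register`, `emit`, and `encode_video` and the `video.uploaded` event type are hypothetical illustrations, not the API of Lambda, OpenWhisk, or Knative; an actual Functions-as-a-Service platform would also provision, scale, and meter the servers behind the scenes, which is precisely the part the developer no longer sees.

```python
from typing import Callable, Dict, List

# Hypothetical registry mapping event types to handler functions.
# A real FaaS platform would also provision and scale servers;
# this sketch models only the "run a function when an event arrives" idea.
_handlers: Dict[str, List[Callable[[dict], dict]]] = {}


def register(event_type: str):
    """Decorator that subscribes a function to a (hypothetical) event type."""
    def wrap(fn: Callable[[dict], dict]) -> Callable[[dict], dict]:
        _handlers.setdefault(event_type, []).append(fn)
        return fn
    return wrap


def emit(event_type: str, payload: dict) -> List[dict]:
    """Invoke every function registered for event_type and collect the results."""
    return [fn(payload) for fn in _handlers.get(event_type, [])]


@register("video.uploaded")
def encode_video(event: dict) -> dict:
    # Placeholder for real transcoding work: just derive an output file name.
    name = event["key"]
    stem = name.rsplit(".", 1)[0]
    return {"source": name, "output": stem + ".mp4"}


if __name__ == "__main__":
    print(emit("video.uploaded", {"key": "holiday.mov"}))
    # -> [{'source': 'holiday.mov', 'output': 'holiday.mp4'}]
```

The only code a developer writes here is `encode_video`; `register` and `emit` stand in for the infrastructure that a serverless platform would hide.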

It’s Not the End

If this chapter so far has been a bit of a downer, it’s because it’s important to poke at the open source model as a way to better understand strengths and weaknesses and to guide future actions. To that end, consider this hand-wringing in the context of both the IT industry and the broader market forces at play.

IT Is Hybrid

One context is simply the observation that the adoption of technology takes place over a period of years and even decades in different places and at different rates. Science fiction author William Gibson once said that “The future is already here—it’s just not very evenly distributed.”

That pretty much describes technology adoption patterns. The Silicon Valley startup all-in on Amazon Web Services may have little in common with the regional bank that’s still getting its virtualization workflows in order—much less the local pizza shop running an ancient version of Windows on its point of sale system.

The Big Switch metaphor for cloud computing, by which electric power centralized and became a commodity from the perspective of users, arguably became a staple of many a conference presentation because of such absolutes. The advantages of centrally generated electricity over complex arrays of belts and pulleys that dictated how factories needed to be arranged, however inefficient from a workflow perspective, were profound. Electricity could be sent through wires to motors attached to individual pieces of machinery. The thousands of motors in a modern manufacturing plant range from those in huge gantry cranes to numerous small ones in power tools.

As a result, although the changeover was slow at first because of the initial expense and the novelty of electric power, factories began to switch to electricity in earnest during the first decade of the 20th century. In The Big Switch: Rewiring the World, from Edison to Google, Nick Carr writes that “By 1905, a writer for Engineering magazine felt comfortable declaring that ‘no one would now think of planning a new plant with other than electric driving.’ In short order, electric power had gone from exotic to commonplace.”

It’s something of an irony that, while cloud computing was coming onto the scene, electricity generation was actually starting to decentralize with the increased use of solar panels in particular. But the more important point is that the idea of computing as a new utility on the order of the electric grid is mostly wrong, at least on any time scale that we care about as a practical matter.

Consider all the reasons businesses, especially large ones, might want or need to continue to run applications in-house: control and visibility, compliance with regulations, integration with various existing software and hardware, proximity to data, and so forth. In short, we should expect a future that is a hybrid of many things, not just one big switch. This doesn’t make for a tidy narrative in which some specific approach or technology conquers all. But it’s far more consistent with the observed history of the computer industry in which, as we’ve seen, there may be certain overarching ebbs and flows, but messiness generally wins out over winner takes all.

Stipulate, if you will, both that public clouds will increase in their share of the computing market and that the major global public clouds, as they operate today, don’t necessarily have incentives to significantly invest in open source. Even if that’s the case, many players in the IT industry will continue to have those incentives, including smaller cloud and other service providers who can take advantage of open source projects like OpenStack to compete on a more equal footing.

Centers of Gravity Shift

Where has open source software development had the greatest impact? The easy response is the operating system—notably Linux. You wouldn’t necessarily be wrong. Linux brought a Unix-like operating system to mass market hardware and unified the non-Microsoft Windows computing world to a degree that would almost certainly never have happened otherwise. It’s big in mobile, by way of Android, and it is relentlessly filling other niches that were once reserved for proprietary operating systems.

A more complete response would take a broader view that included software such as the Apache web server, innovations in software-defined storage and software-defined networking, OpenStack, container platforms, and more. However, whether we take the narrow view or the broader one, it’s true that the focus of open source development has been on infrastructure software, the software plumbing of datacenters that’s largely independent of an organization’s industry or specific application requirements.

It’s then a short step to argue that some of this base plumbing is very well understood and mature. It may not exactly be a commodity; for example, security incidents highlight the need for ongoing operating system support and updates. But it’s hard to argue with a general assertion that, as we’ve seen, software value is shifting to applications that businesses use to create differentiated product and service offerings. Furthermore, computing infrastructure is precisely what cloud providers offer. It’s not necessary to install and operate it yourself.

This is all true. However, open source software development already has a long history of moving into new areas. In part, it’s about moving up the stack from the operating system layer to software that builds on and expands on the operating system, such as container orchestration. It’s also about expanding into middleware for both traditional enterprise applications and new areas such as the Internet-of-Things.

The Development Model Is Effective

And, if the open source development model is effective—as it certainly appears to be in many cases—why wouldn’t we expect to see its continued use? True, we can point to examples of very successful companies that extensively use open source software but don’t participate much in the open development process and others that are simply mostly proprietary. However, as we’ve seen, many companies—including many who aren’t traditional IT vendors—do participate in various aspects of open source development because it makes business sense for them to do so.

It’s possible, perhaps even probable, that the focus of open source development will broaden and shift over time. For example, with Automotive Grade Linux, we see specialized development that builds on a horizontal platform to better meet the needs of a particular industry. We see a corresponding involvement by companies that we would have historically categorized as end-users in Industrial Internet-of-Things software projects.

There’s no shortage of software needed across many industries and a relatively limited population of skilled developers to write it. The landscape won’t stay static. Different organizations will participate in different areas. Who profits directly and indirectly from open source will change.

But even with the changed and changing landscape in computing, there really aren’t good reasons to think that open source as a software development model is something that only made sense for a relatively fleeting window of time.

Ecosystems Matter

It’s also worth remembering where some of the impetus behind open source comes from.

Vertical integration was a common model for 20th-century industrial companies. As The Economist notes, “Some of the best known examples of vertical integration have been in the oil industry. In the 1970s and 1980s, many companies that were primarily engaged in exploration and the extraction of crude petroleum decided to acquire downstream refineries and distribution networks. Companies such as Shell and BP came to control every step involved in bringing a drop of oil from its North Sea or Alaskan origins to a vehicle’s fuel tank.”6 In its heyday, Eastman Kodak owned its own chemical company to supply the vast quantities of ingredients needed to manufacture and process film.

We’ve already seen how commonplace this model was in the computer industry with mainframes; minicomputers; and, to an only somewhat lesser degree, Unix systems.

Vertical integration could be an effective way to control access to inputs. It could also increase the market power of a dominant supplier. Indeed, regulators and lawmakers have at times restricted certain forms of vertical integration, as well as other ways of tying products and services together. For example, manufacturers have often tried to make using hardware such as a printer contingent on buying a profitable stream of supplies such as ink from them, rather than from a discount third party.

Today, though, we see the rise of coopetition. We see markets demanding interoperability and standards. We see more specialization and disaggregation.

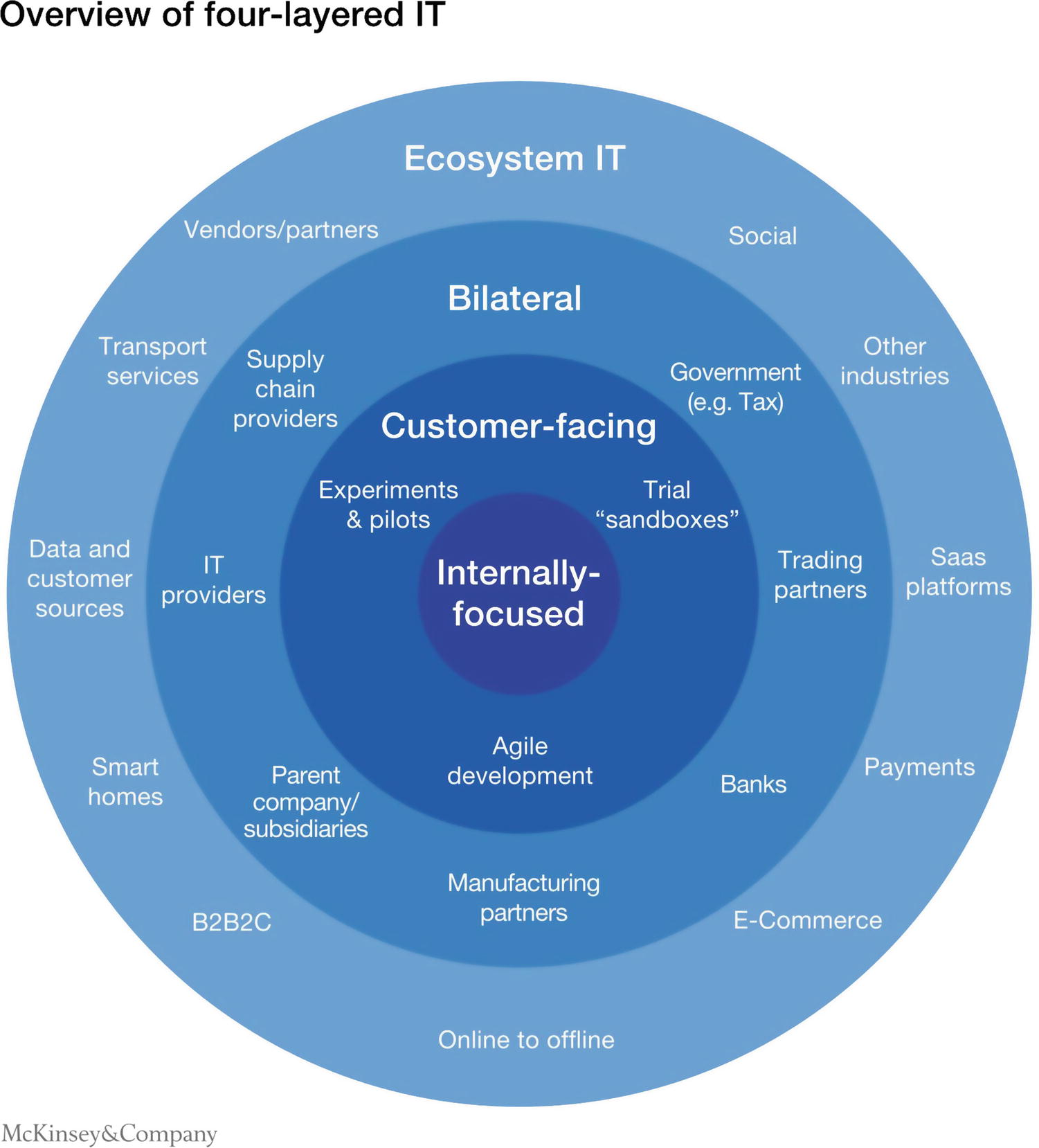

McKinsey argues that to fully benefit from new business technology, CIOs need to adapt to emerging technology ecosystems. Source: Adopting an ecosystem view of business technology, February 2017.

Together, these suggest an environment that naturally aligns with organizations working together on technologies and other projects for mutually beneficial reasons. Open source software isn’t the only way to do so. But much of today’s technology is software. Standards are increasingly developed first through code. Open source development can almost be thought of as a communication platform for disparate companies working together to achieve business objectives.

It’s Not Just About the Code

I’ve laid out some of the challenges that open source faces in today’s world. IT organizations can increasingly use global cloud providers rather than operate their own infrastructure. That same infrastructure is being commoditized in many cases, and community code, downloaded for free, is good enough for many purposes. This, in turn, can choke off the reinvestment that the open source development model needs to work.

Furthermore, the viability of software as a stand-alone business seems to be declining. Users increasingly don’t want to pay explicitly, out of pocket, for software. They expect it as part of a bundle, whether bundled with hardware or paid for implicitly through advertising and other forms of monetization that make their attention something of a product to be sold.

And users want convenience. Open source was born in an era when using software meant installing it on a computer someplace. Today, users are more likely to acquire some sort of service that runs on software that is invisible to them. Software abstracted in this way can be less valuable to a company looking to profit from it and to reinvest some of those proceeds in its ongoing development.

At the same time, it’s not all doom and gloom. Open source is a good fit with developing ecosystems and businesses that need to work together on technology.

However, there’s a bigger point, which is the topic of this book’s final chapter. Open source principles have indeed led to an extremely effective software development model that can form the basis for equally effective enterprise software products: products that bring customers not just code but also the expertise, support, and other capabilities needed to solve business problems. That model has also started to influence the way that software is developed more broadly.

It also goes beyond software. Many of these principles, or at least analogs to them, can influence approaches to data, to education, and to organizations. There’s more inertia in many of these areas than in software. But there are also enormous opportunities.