Introduction

This chapter gives a brief overview of the input/output (I/O) channel architecture and introduces the connectivity options available on IBM Z platforms. It includes the following sections:

Note: The link data rates discussed throughout this book do not represent the performance of the links. The actual performance depends on many factors including latency through the adapters and switches, cable lengths, and the type of workload using the connection.

1.1 I/O channel overview

I/O channels are components of the IBM mainframe system architecture (IBM z/Architecture®). They provide a pipeline through which data is exchanged between systems, or between a system and external devices (storage or network devices). z/Architecture channel connections, referred to as channel paths, have been a standard attribute of all IBM Z platforms dating back to the IBM S/360. Over the years, numerous extensions have been made to the z/Architecture to improve I/O throughput, reliability, availability, and scalability.

One of the many key strengths of the Z platform is the ability to deal with large volumes of simultaneous I/O operations. The channel subsystem (CSS) enables Z platforms to communicate with external I/O and network devices and manages the flow of data between those external devices and system memory. A system assist processor (SAP) connects the CSS to the external devices.

The SAP uses the I/O configuration definitions loaded in the hardware system area (HSA) of the system to identify the external devices and the protocol they support. The SAP also monitors the queue of I/O operations passed to the CSS by the operating system.

Because the SAP handles communication with the devices, the processing units (PUs) are relieved of that task, so data processing can proceed concurrently with I/O processing.
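The offload described above can be pictured as a producer/consumer model. This is a toy sketch under stated assumptions, not how CSS firmware works: one Python thread stands in for a PU that queues I/O requests and keeps computing, and a second thread stands in for the SAP that drains the queue. The names IORequest, sap_worker, and run_workload are hypothetical.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass
class IORequest:
    device: str  # hypothetical device identifier
    block: int   # hypothetical block number


def sap_worker(requests: queue.Queue, completed: list) -> None:
    """Toy 'SAP': drain queued I/O requests until a None sentinel arrives."""
    while True:
        req = requests.get()
        if req is None:
            break
        # Stand-in for driving the device over a channel path.
        completed.append(f"{req.device}:{req.block}")


def run_workload(n_requests: int) -> tuple:
    """Toy 'PU': queue I/O requests without waiting, and keep computing."""
    requests: queue.Queue = queue.Queue()
    completed: list = []
    sap = threading.Thread(target=sap_worker, args=(requests, completed))
    sap.start()

    checksum = 0
    for i in range(n_requests):
        requests.put(IORequest(device="DASD01", block=i))  # hand off the I/O
        checksum += i * i                                   # computation continues
    requests.put(None)  # no more work for the SAP thread
    sap.join()
    return checksum, completed


if __name__ == "__main__":
    total, done = run_workload(5)
    print(total, len(done))
```

The queue is the only point of contact between the two threads, which mirrors the idea that the operating system passes I/O operations to the CSS and then continues with other work.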

Increased system performance demands higher I/O and network bandwidth, speed, and flexibility, so the CSS evolved along with the advances in scalability of the Z platforms. The z/Architecture provides functions for scalability in the form of multiple CSSes that can be configured within the same IBM Z platform, for example:

•IBM z14 and z13 support up to six CSSes

•IBM z13s supports up to three CSSes

•IBM zEnterprise EC12 (zEC12) supports up to four CSSes

•IBM zEnterprise BC12 (zBC12) supports up to two CSSes

All of these Z platforms deliver a significant increase in I/O throughput. For more information, see Chapter 2, “Channel subsystem overview” on page 15.

1.1.1 I/O hardware infrastructure

I/O hardware connectivity is implemented through several I/O features, some of which support the Peripheral Component Interconnect Express (PCIe) Gen2 and Gen3¹ standards. The PCIe-based features are housed in PCIe I/O drawers, whereas the others are housed in either I/O drawers or I/O cages.

In an I/O cage, features are installed in I/O slots, with four I/O slots forming an I/O domain. Each I/O domain uses an InfiniBand Multiplexer (IFB-MP) in the I/O cage and a copper cable that is connected to a Host Channel Adapter (HCA-C) fanout on the front of the book. The interconnection speed is 6 GBps. Each I/O cage supports up to seven I/O domains, for a total of 28 I/O slots. An eighth IFB-MP is installed to provide an alternative path to I/O features in slots 29, 30, 31, and 32 in case of a failure in domain 7.

I/O drawers provide more I/O granularity and capacity flexibility than the I/O cage. The I/O drawer supports two I/O domains, for a total of eight I/O slots, with four I/O features in each I/O domain. Each I/O domain uses an IFB-MP in the I/O drawer and a copper cable to connect to an HCA-C fanout in the CPC drawer. The interconnection speed is 6 GBps. Because I/O drawers can be added and removed concurrently in the field, they simplify planning, which is an advantage over I/O cages. I/O drawers were first offered with the IBM z10™ BC.

Note: I/O drawers are not supported on z14 and later Z platforms.

PCIe I/O drawers allow a higher number of features (four times more than the I/O drawer and a 14% increase over the I/O cage) and increased port granularity. Each drawer can accommodate up to 32 features in any combination. They are organized in four hardware domains per drawer, with eight features per domain. The PCIe I/O drawer is attached to a PCIe fanout in the CPC drawer, with an interconnection speed of 8 GBps with PCIe Gen2 and 16 GBps with PCIe Gen3. PCIe I/O drawers can be installed and repaired concurrently in the field.
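The drawer comparisons in the paragraph above follow directly from the domain and slot counts given in this section. The sketch below reproduces that arithmetic; the ENCLOSURES table and the slots() helper are illustrative names, and the I/O cage figure counts only the seven primary domains (28 slots), as the text does.

```python
# (domains, feature slots per domain), taken from this section.
ENCLOSURES = {
    "I/O cage": (7, 4),
    "I/O drawer": (2, 4),
    "PCIe I/O drawer": (4, 8),
}


def slots(name: str) -> int:
    """Total feature slots in one enclosure of the given type."""
    domains, per_domain = ENCLOSURES[name]
    return domains * per_domain


pcie = slots("PCIe I/O drawer")                  # 4 x 8 = 32
vs_drawer = pcie / slots("I/O drawer")           # 32 / 8 = 4.0 (four times more)
cage = slots("I/O cage")                         # 7 x 4 = 28
vs_cage_pct = (pcie - cage) / cage * 100         # 4 / 28, about 14%

print(pcie, vs_drawer, round(vs_cage_pct, 1))
```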

1.1.2 I/O connectivity features

The most common attachment to a Z I/O channel is a storage control unit (CU), which can be accessed through a Fibre Connection (IBM FICON) channel, for example. The CU controls I/O devices such as disk and tape drives.

System-to-system communications are typically implemented by using the IBM Integrated Coupling Adapter (ICA SR), Coupling Express Long Reach (CE LR), InfiniBand (IFB) coupling links, Shared Memory Communications, and FICON channel-to-channel (FCTC) connections.

The Internal Coupling (IC) channel, IBM HiperSockets™, and Shared Memory Communications can be used for communications between logical partitions within the Z platform.

The Open Systems Adapter (OSA) features provide direct, industry-standard Ethernet connectivity and communications in a networking infrastructure.

The 10GbE RoCE Express2 and 10GbE RoCE Express features provide high-speed, low-latency networking fabric for IBM z/OS-to-z/OS shared memory communications.

As part of system planning activities, decisions are made about where to locate the equipment (for distance reasons), how it will be operated and managed, and the business continuity requirements for disaster recovery, tape vaulting, and so on. The types of software (operating systems and applications) that will be used must support the features and devices on the Z platform.

From a hardware point of view, all of the features in the PCIe I/O drawers, I/O drawers, and I/O cages are supported by the Z Support Elements. This support covers installing and updating Licensed Internal Code (LIC) on the features, as well as other operational tasks.

Many features have an integrated processor that handles the adaptation layer functions required to present the necessary features to the rest of the system in a uniform manner. Therefore, all the operating systems have the same interface with the I/O subsystem.

The z14, z13, z13s, zEC12, and zBC12 support industry-standard PCIe adapters called native PCIe adapters. For native PCIe adapter features, there is no adaptation layer; instead, the device driver resides in the operating system. The adapter management functions (such as diagnostics and firmware updates) are provided by Resource Groups.

There are four Resource Groups on z14, and there are two Resource Groups on z13, z13s, zEC12, and zBC12. The Resource Groups are managed by an integrated firmware processor that is part of the system’s base configuration.

The following sections briefly describe connectivity options for the I/O features available on the Z platforms.

1.2 FICON Express

The Fibre Channel connection (FICON) Express features were originally designed to provide access to extended count key data (IBM ECKD™) devices, and FICON channel-to-channel (CTC) connectivity. Then came support for access to Small Computer System Interface (SCSI) devices. This innovation was followed by support for High-Performance FICON for z Systems (zHPF) for online transaction processing (OLTP) I/O workloads that transfer small blocks of fixed-size data. These OLTP I/O workloads include IBM DB2® database, Virtual Storage Access Method (VSAM), partitioned data set extended (PDSE), and IBM z/OS file system (zFS).

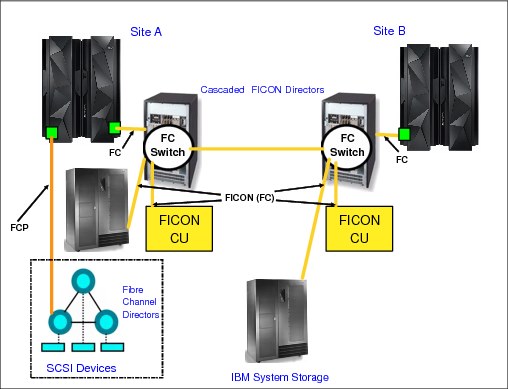

IBM Z platforms build on this I/O architecture by offering high-speed FICON connectivity, as shown in Figure 1-1.

Figure 1-1 FICON connectivity

The FICON implementation enables full-duplex data transfer. In addition, multiple concurrent I/O operations can occur on a single FICON channel. FICON link distances can be extended by using various solutions. For more information, see 10.2.2, “FICON repeated distance solutions” on page 167.

The FICON features on Z also support full fabric connectivity for the attachment of SCSI devices by using the Fibre Channel Protocol (FCP). Software support is provided by IBM z/VM®, IBM z/VSE®, and Linux on z Systems operating systems.

1.3 zHyperLink Express

IBM zHyperLink is a technology that provides a low-latency point-to-point connection from a z14 to an IBM DS8880. The transport protocol is defined for reading and writing ECKD data records. It provides a 5-fold reduction in I/O service time for read requests.

The zHyperLink Express feature works as a native PCIe adapter and can be shared by multiple LPARs.

On the z14, the zHyperLink Express feature is installed in the PCIe I/O drawer. On the IBM DS8880 side, the fiber optic cable connects to a zHyperLink PCIe interface in the I/O bay.

The zHyperLink Express feature has the same qualities of service as do all Z I/O channel features.

Figure 1-2 depicts a point-to-point zHyperLink connection with a z14.

Figure 1-2 zHyperLink physical connectivity

1.4 Open Systems Adapter-Express

The Open Systems Adapter-Express (OSA-Express) features are the pipeline through which data is exchanged between the Z platforms and devices in the network. OSA-Express3, OSA-Express4S, OSA-Express5S, and OSA-Express6S features provide direct, industry-standard LAN connectivity in a networking infrastructure, as shown in Figure 1-3.

Figure 1-3 OSA-Express connectivity for IBM Z platforms

The OSA-Express features bring the strengths of the Z family, such as security, availability, and enterprise-wide access to data, to the LAN environment. OSA-Express provides connectivity for the following LAN types:

•1000BASE-T Ethernet (10/100/1000 Mbps)

•1 Gbps Ethernet

•10 Gbps Ethernet

1.5 HiperSockets

IBM HiperSockets technology provides seamless network connectivity between servers that are consolidated within a single IBM Z platform, in the form of an intraserver network. HiperSockets creates multiple independent, integrated, virtual LANs within an IBM Z platform.

This technology provides high-speed connectivity between combinations of logical partitions or virtual servers. It eliminates the need for any physical cabling or external networking connection between these virtual servers. This network within the box concept minimizes network latency and maximizes bandwidth capabilities between z/VM, Linux on z Systems, IBM z/VSE, and IBM z/OS images, or combinations of these. HiperSockets use is also possible under the IBM z/VM operating system, which enables establishing internal networks between guest operating systems, such as multiple Linux servers.

The z/VM virtual switch can transparently bridge a guest virtual machine network connection on a HiperSockets LAN segment. This bridge allows a single HiperSockets guest virtual machine network connection to directly communicate with other guest virtual machines on the virtual switch and with external network hosts through the virtual switch OSA UPLINK port.

Figure 1-4 shows an example of HiperSockets connectivity with multiple logical partitions (LPARs) and virtual servers.

Figure 1-4 HiperSockets connectivity with multiple LPARs and virtual servers

HiperSockets technology is implemented by Z LIC. The communication path is in system memory, and information is transferred between the virtual servers at memory speed.

1.6 Parallel Sysplex and coupling links

IBM Parallel Sysplex is a clustering technology that represents a synergy between hardware and software. It consists of the following components:

•Parallel Sysplex-capable servers

•A coupling facility

•Coupling links (CS5, CL5, IFB, IC)

•Server Time Protocol (STP)

•A shared direct access storage device (DASD)

•Software, both system and subsystem

These components are all designed for parallel processing, as shown in Figure 1-5.

Figure 1-5 Parallel Sysplex connectivity

Parallel Sysplex cluster technology is a highly advanced, clustered, commercial processing system. It supports high-performance, multisystem, read/write data sharing, which enables the aggregate capacity of multiple z/OS systems to be applied against common workloads.

The systems in a Parallel Sysplex configuration are linked and can fully share devices and run the same applications. This capability enables you to harness the power of multiple IBM Z platforms as though they were a single logical computing system.

The architecture is centered around the implementation of a coupling facility (CF) that runs the coupling facility control code (CFCC) and high-speed coupling connections for intersystem and intrasystem communications. The CF provides high-speed data sharing with data integrity across multiple Z platforms.

Parallel Sysplex technology provides high availability for business-critical applications. The design is further enhanced with the introduction of System-Managed Coupling Facility Structure Duplexing, which provides the following additional benefits:

•Availability: Structures do not need to be rebuilt if a coupling facility fails.

•Manageability and usability: A consistent procedure is established to manage structure recovery across users.

•Configuration benefits: A sysplex can be configured with internal CFs only.

Parallel Sysplex technology supports connectivity between systems that differ by up to two generations (n-2). For example, an IBM z14 can participate in an IBM Parallel Sysplex cluster with z13, z13s, zEC12, and zBC12 platforms.
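The n-2 rule can be expressed as a simple pairwise check across sysplex members. In this sketch the generation numbers are an assumption made for illustration (zEC12 and zBC12 treated as one generation, z13 and z13s as the next, and z14 as the newest), the helper names are hypothetical, and a real configuration has further requirements that this check ignores.

```python
# Illustrative generation numbers: an assumption for this sketch,
# not an IBM-defined attribute of the platforms.
GENERATION = {"zEC12": 12, "zBC12": 12, "z13": 13, "z13s": 13, "z14": 14}


def within_n_minus(a: str, b: str, max_gap: int = 2) -> bool:
    """True if platforms a and b differ by at most max_gap generations."""
    return abs(GENERATION[a] - GENERATION[b]) <= max_gap


def violating_pairs(members: list, max_gap: int = 2) -> list:
    """Return every pair of members that breaks the n-max_gap rule."""
    return [
        (a, b)
        for i, a in enumerate(members)
        for b in members[i + 1:]
        if not within_n_minus(a, b, max_gap)
    ]


if __name__ == "__main__":
    sysplex = ["z14", "z13", "z13s", "zEC12", "zBC12"]
    print(violating_pairs(sysplex))             # n-2 rule from the text
    print(violating_pairs(sysplex, max_gap=1))  # stricter, hypothetical n-1 rule
```

With the default gap of two, the z14 example from the text yields no violating pairs; tightening the gap to one flags the z14 pairings with zEC12 and zBC12.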

1.7 Shared Memory Communications

Shared Memory Communications (SMC) on Z platforms is a technology that can improve throughput by accessing data faster with less latency. SMC reduces CPU resource consumption compared to traditional TCP/IP communications. Furthermore, applications do not need to be modified to gain the performance benefits of SMC.

SMC allows two peers to send and receive data by using system memory buffers that each peer allocates for its partner’s use. Two types of SMC protocols are available on the Z platform:

•SMC-Remote Direct Memory Access (SMC-R)

SMC-R is a protocol for Remote Direct Memory Access (RDMA) communication between TCP socket endpoints in LPARs in different systems. SMC-R runs over networks that support RDMA over Converged Ethernet (RoCE). It allows existing TCP applications to benefit from RDMA without requiring modifications. SMC-R provides dynamic discovery of the RDMA capabilities of TCP peers and automatic setup of RDMA connections that those peers can use.

The 10GbE RoCE Express2 and 10GbE RoCE Express features provide the RoCE support needed for LPAR-to-LPAR communication across Z platforms.

•SMC-Direct Memory Access (SMC-D)

SMC-D implements the same SMC protocol that is used with SMC-R to provide highly optimized intra-system communications. Where SMC-R uses RoCE for communicating between TCP socket endpoints in separate systems, SMC-D uses Internal Shared Memory (ISM) technology for communicating between TCP socket endpoints in the same system.

ISM provides adapter virtualization (virtual functions (VFs)) to facilitate the intra-system communications. Hence, SMC-D does not require any additional physical hardware (no adapters, switches, fabric management, or PCIe infrastructure). Therefore, significant cost savings can be achieved when using the ISM for LPAR-to-LPAR communication within the same Z platform.

Both SMC protocols use shared memory architectural concepts, eliminating TCP/IP processing in the data path, yet preserving TCP/IP quality of service (QoS) for connection management purposes.

Figure 1-6 shows the connectivity for SMC-D and SMC-R configurations.

Figure 1-6 Connectivity for SMC-D and SMC-R configurations
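The following toy model illustrates two ideas from the SMC description in this section: each peer writes directly into a buffer that its partner allocated, and the shared-memory path is used only when both peers support SMC; otherwise the connection stays on ordinary TCP. It is a conceptual sketch, not the SMC-R or SMC-D wire protocol, and the Peer and Connection classes and their fields are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Peer:
    name: str
    smc_capable: bool
    # Buffer this peer allocates for its partner to write into.
    recv_buffer: bytearray = field(default_factory=bytearray)
    # Stand-in for data that arrives over the ordinary TCP/IP path.
    tcp_inbox: list = field(default_factory=list)


@dataclass
class Connection:
    a: Peer
    b: Peer
    path: str = "unset"

    def establish(self) -> str:
        # Setup starts as TCP; switch to shared memory only if both peers
        # advertise SMC support, otherwise keep using TCP.
        self.path = "smc" if self.a.smc_capable and self.b.smc_capable else "tcp"
        return self.path

    def send(self, src: Peer, data: bytes) -> None:
        dst = self.b if src is self.a else self.a
        if self.path == "smc":
            dst.recv_buffer.extend(data)  # direct write into partner's buffer
        elif self.path == "tcp":
            dst.tcp_inbox.append(data)    # traditional TCP/IP data path
        else:
            raise RuntimeError("call establish() first")


if __name__ == "__main__":
    lpar1, lpar2 = Peer("LPAR1", True), Peer("LPAR2", True)
    conn = Connection(lpar1, lpar2)
    print(conn.establish())
    conn.send(lpar1, b"hello")
    print(bytes(lpar2.recv_buffer))
```

Keeping the capability decision in establish() reflects the point that applications need no modification: the caller uses send() either way, and only the data path underneath changes.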

1.8 I/O feature support

Table 1-1 lists the I/O features that are available on Z platforms. Not all I/O features can be ordered on all systems, and certain features are available only for a system upgrade. Depending on the type and version of Z, there might be further restrictions (for example, on the maximum number of supported ports).

Table 1-1 IBM Z I/O features

| I/O feature | Feature codes | Max. ports, z14 | Max. ports, z13 | Max. ports, z13s | Max. ports, zEC12 | Max. ports, zBC12 | Ports per feature |
|---|---|---|---|---|---|---|---|
| zHyperLink Express | | | | | | | |
| zHyperLink | 0431 | 16 | N/A | N/A | N/A | N/A | 2 |
| FICON Express | | | | | | | |
| FICON Express16S+ LX | 0427 | 320 | 320 | 128 | N/A | N/A | 2 |
| FICON Express16S+ SX | 0428 | 320 | 320 | 128 | N/A | N/A | 2 |
| FICON Express16S LX | 0418 | 320 | 320 | 128 | N/A | N/A | 2 |
| FICON Express16S SX | 0419 | 320 | 320 | 128 | N/A | N/A | 2 |
| FICON Express8S 10 KM LX | 0409 | N/A | 320 | 128 | 320 | 128 | 2 |
| FICON Express8S SX | 0410 | N/A | 320 | 128 | 320 | 128 | 2 |
| FICON Express8 10 KM LX | 3325 | N/A | 64 | 64 | 176 | 32/64 [1] | 4 |
| FICON Express8 SX | 3326 | N/A | 64 | 64 | 176 | 32/64 [1] | 4 |
| FICON Express4 10 KM LX | 3321 | N/A | N/A | N/A | 176 | 32/64 [1] | 4 |
| FICON Express4 SX | 3322 | N/A | N/A | N/A | 176 | 32/64 [1] | 4 |
| FICON Express4-2C SX | 3318 | N/A | N/A | N/A | N/A | 16/32 [1] | 2 |
| OSA-Express | | | | | | | |
| OSA-Express6S 10 GbE LR | 0424 | 48 | 48 | 48 | 48 | 48 | 1 |
| OSA-Express6S 10 GbE SR | 0425 | 48 | 48 | 48 | 48 | 48 | 1 |
| OSA-Express5S 10 GbE LR | 0415 | N/A | 48 | 48 | 48 | 48 | 1 |
| OSA-Express5S 10 GbE SR | 0416 | N/A | 48 | 48 | 48 | 48 | 1 |
| OSA-Express4S 10 GbE LR | 0406 | N/A | 48 | 48 | 48 | 48 | 1 |
| OSA-Express4S 10 GbE SR | 0407 | N/A | 48 | 48 | 48 | 48 | 1 |
| OSA-Express3 10 GbE LR | 3370 | N/A | N/A | N/A | 48 | 16/32 [1] | 2 |
| OSA-Express3 10 GbE SR | 3371 | N/A | N/A | N/A | 48 | 16/32 [1] | 2 |
| OSA-Express6S GbE LX | 0422 | 96 | 96 | 96 | 96 | 96 | 2 [2] |
| OSA-Express6S GbE SX | 0423 | 96 | 96 | 96 | 96 | 96 | 2 [2] |
| OSA-Express5S GbE LX | 0413 | N/A | 96 | 96 | 96 | 96 | 2 [2] |
| OSA-Express5S GbE SX | 0414 | N/A | 96 | 96 | 96 | 96 | 2 [2] |
| OSA-Express4S GbE LX | 0404 | N/A | 96 | 96 | 96 | 96 | 2 [2] |
| OSA-Express4S GbE SX | 0405 | N/A | 96 | 96 | 96 | 96 | 2 [2] |
| OSA-Express3 GbE LX | 3362 | N/A | N/A | N/A | 96 | 32/64 [1] | 4 [2] |
| OSA-Express3 GbE SX | 3363 | N/A | N/A | N/A | 96 | 32/64 [1] | 4 [2] |
| OSA-Express3-2P GbE SX | 3373 | N/A | N/A | N/A | N/A | 16/32 [1] | 2 [2] |
| OSA-Express2 GbE LX | 3364 | N/A | N/A | N/A | N/A | N/A | 2 |
| OSA-Express2 GbE SX | 3365 | N/A | N/A | N/A | N/A | N/A | 2 |
| OSA-Express5S 1000BASE-T | 0417 | N/A | 96 | 96 | 96 | 96 | 2 [2] |
| OSA-Express6S 1000BASE-T | 0426 | 96 | 96 | 96 | 96 | 96 | 2 [2] |
| OSA-Express4S 1000BASE-T Ethernet | 0408 | N/A | 96 | 96 | 96 | N/A | 2 [2] |
| OSA-Express3 1000BASE-T Ethernet | 3367 | N/A | N/A | N/A | 96 | 32/64 [1] | 4 [2] |
| OSA-Express3-2P 1000BASE-T Ethernet | 3369 | N/A | N/A | N/A | N/A | 16/32 [1] | 2 [2] |
| RoCE Express | | | | | | | |
| 10GbE RoCE Express2 | 0412 | 8 | 16 | 16 | 16 | 16 | 2 |
| 10GbE RoCE Express | 0411 | 8 | 16 | 16 | 16 | 16 | 1 |
| HiperSockets | | | | | | | |
| HiperSockets | N/A | 32 | 32 | 32 | 32 | 32 | N/A |
| Coupling links | | | | | | | |
| IC | N/A | 32 | 32 | 32 | 32 | 32 | N/A |
| CE LR | 0433 | 64 | 32 | 4/8 [1] | N/A | N/A | 2 |
| ICA SR | 0172 | 80 | 40 | 4/8 [1] | N/A | N/A | 2 |
| HCA3-O (12x IFB or 12x IFB3 [3]) | 0171 | 32 | 32 | 4/8 [1] | 32 | 16 | 2 |
| HCA3-O LR (1x IFB) | 0170 | 64 | 64 | 8/16 [1] | 64 | 32 | 4 |
| HCA2-O (12x IFB) | 0163 | N/A | N/A | N/A | 32 | 16 | 2 |
| HCA2-O LR (1x IFB) | 0168 | N/A | N/A | N/A | 32 | 12 | 2 |
| ISC-3 (2 Gbps) [4] | 0217, 0218, 0219 | N/A | N/A | N/A | 48 | 32/48 [1] | 4 |

[1] RPQ 8P2733 is required to carry forward the second I/O drawer on upgrades.

[2] Both ports are on one CHPID.

[3] For use of the 12x IFB3 protocol, a maximum of four CHPIDs per port can be used, and the port must connect to an HCA3-O port. The 12x IFB3 protocol is auto-configured when the conditions for it are met.

[4] There are three feature codes for ISC-3: feature code 0217 is the ISC Mother card (ISC-M), feature code 0218 is the ISC Daughter card (ISC-D), and individual ISC-3 port activations are ordered with feature code 0219. (RPQ 8P2197 is available for the ISC-3 Long-Distance Option (up to 20 km) and has an increment of 2 without extra LIC-CC.)
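Because Table 1-1 lists both the maximum port count and the ports per feature, dividing one by the other gives an implied upper bound on the number of features of that type. The sketch below applies that arithmetic to three z14 rows copied from the table; Z14_ROWS and implied_feature_limit() are illustrative names, and other constraints, such as available slots and drawers, can be stricter, so treat the result as a bound rather than an ordering rule.

```python
# (max ports on z14, ports per feature), copied from three rows of Table 1-1.
Z14_ROWS = {
    "FICON Express16S+ LX": (320, 2),
    "OSA-Express6S 10 GbE LR": (48, 1),
    "OSA-Express6S GbE SX": (96, 2),
}


def implied_feature_limit(max_ports: int, ports_per_feature: int) -> int:
    """Largest feature count whose ports still fit within the port maximum."""
    return max_ports // ports_per_feature


if __name__ == "__main__":
    for name, (ports, per_feature) in Z14_ROWS.items():
        print(f"{name}: at most {implied_feature_limit(ports, per_feature)} features")
```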

1.9 Special-purpose feature support

In addition to the I/O connectivity features, several special-purpose features are available that can be installed in the PCIe I/O drawers, such as:

•Crypto Express

•Flash Express

•zEDC Express

1.9.1 Crypto Express features

Integrated cryptographic features provide leading cryptographic performance and functions. Reliability, availability, and serviceability support is unmatched in the industry, and the cryptographic solution received the highest standardized security certification (FIPS 140-2 Level 4).

Crypto Express6S, Crypto Express5S, and Crypto Express4S are tamper-sensing and tamper-responding programmable cryptographic features that provide a secure cryptographic environment. Each adapter contains a tamper-resistant hardware security module (HSM). The HSM can be configured as a secure IBM Common Cryptographic Architecture (CCA) coprocessor, as a secure IBM Enterprise PKCS #11 (EP11) coprocessor, or as an accelerator.

Each Crypto Express6S and Crypto Express5S feature occupies one I/O slot in the PCIe I/O drawer. Crypto Express6S is supported on the z14. Crypto Express5S is supported on the z13 and z13s platforms. Crypto Express4S is supported on the zEC12 and zBC12, but not supported by z14.

1.9.2 Flash Express feature

Flash Express is an innovative optional feature that was introduced with the zEC12 and is also available on the zBC12, z13, and z13s platforms. It improves performance and availability for critical business workloads that cannot afford any reduction in service levels. Flash Express is easy to configure, requires no special skills, and produces rapid time to value.

Flash Express implements storage-class memory (SCM) through an internal NAND Flash solid-state drive (SSD) in a PCIe adapter form factor. Each Flash Express feature is installed exclusively in the PCIe I/O drawer and occupies one I/O slot.

For availability reasons, the Flash Express feature must be ordered in pairs. A feature pair provides 1.4 TB of usable storage. A maximum of four pairs (4 x 1.4 = 5.6 TB) is supported in a system. One PCIe I/O drawer supports up to two Flash Express pairs.
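The ordering rules in the paragraph above (1.4 TB usable per pair, at most four pairs per system, at most two pairs per PCIe I/O drawer) can be turned into a small capacity-planning helper. plan_flash() is a hypothetical name, and the helper ignores every configuration constraint other than these three.

```python
import math

# Figures from this section.
TB_PER_PAIR = 1.4
MAX_PAIRS = 4
PAIRS_PER_DRAWER = 2


def plan_flash(required_tb: float) -> tuple:
    """Return (feature pairs, PCIe I/O drawers, usable TB) for a capacity target."""
    pairs = math.ceil(required_tb / TB_PER_PAIR)
    if pairs > MAX_PAIRS:
        raise ValueError(
            f"{required_tb} TB exceeds the {MAX_PAIRS * TB_PER_PAIR:.1f} TB maximum"
        )
    drawers = math.ceil(pairs / PAIRS_PER_DRAWER)
    return pairs, drawers, round(pairs * TB_PER_PAIR, 1)


if __name__ == "__main__":
    print(plan_flash(3.0))
```

For example, a 3 TB target needs three pairs (4.2 TB usable), and because one PCIe I/O drawer holds at most two pairs, those three pairs span two drawers.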

Flash Express storage is allocated to each partition in a manner similar to main memory allocation. The allocation is specified at the Hardware Management Console (HMC). z/OS can use the Flash Express feature as SCM for paging store to minimize the supervisor call (SVC) memory dump duration.

The z/OS paging subsystem supports a mix of Flash Express and external disk storage. Flash Express can also be used by Coupling Facility images to provide extra capacity for particular structures (for example, IBM MQ shared queues application structures).

Note: Starting with the z14, Flash Express was replaced by Virtual Flash Memory (VFM). VFM implements Extended Asynchronous Data Mover (EADM) architecture by using HSA-like memory instead of Flash card pairs.

1.9.3 zEDC Express feature

zEDC Express is an optional feature that is exclusive to the z14, z13, z13s, zEC12, and zBC12 systems. It provides hardware-based acceleration of data compression and decompression. That capability improves cross-platform data exchange, reduces processor use, and saves disk space.

A minimum of one feature can be ordered, and a maximum of eight can be installed in the PCIe I/O drawers of a system. Up to two zEDC Express features can be installed per domain. Each feature has one PCIe adapter/compression coprocessor, which implements compression as defined by RFC 1951 (DEFLATE). For more information, see RFC 1951, DEFLATE Compressed Data Format Specification Version 1.3.
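To show what RFC 1951 (DEFLATE) data looks like in practice, the sketch below uses Python's software zlib module to produce and read a raw DEFLATE stream; a negative wbits value tells zlib to omit its own header and trailer. It runs in software only and does not use or detect zEDC Express; deflate_raw() and inflate_raw() are illustrative helper names.

```python
import zlib


def deflate_raw(data: bytes, level: int = 6) -> bytes:
    """Compress to a bare RFC 1951 DEFLATE stream (no zlib header/trailer)."""
    comp = zlib.compressobj(level, zlib.DEFLATED, -15)
    return comp.compress(data) + comp.flush()


def inflate_raw(stream: bytes) -> bytes:
    """Decompress a bare RFC 1951 DEFLATE stream."""
    return zlib.decompress(stream, -15)


if __name__ == "__main__":
    record = b"ACCOUNT=000123;STATUS=OK;" * 200  # repetitive sample data
    packed = deflate_raw(record)
    assert inflate_raw(packed) == record
    print(len(record), len(packed))
```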

A zEDC Express feature can be shared by up to 15 LPARs. z/OS version 2.1 and later supports the zEDC Express feature. z/VM 6.3 with PTFs also provides guest exploitation.

Table 1-2 lists the special-purpose features that are available on Z platforms. Not all special-purpose features can be ordered on all systems, and certain features are available only with a system upgrade. Except for the Crypto Express3 features, all special-purpose features are in the PCIe I/O drawer.

Table 1-2 IBM Z special-purpose features

| Special-purpose feature | Feature codes | Max. features, z14 | Max. features, z13 | Max. features, z13s | Max. features, zEC12 | Max. features, zBC12 |
|---|---|---|---|---|---|---|
| Crypto Express | | | | | | |
| Crypto Express6S | 0893 | 16 | 16 | 16 | N/A | N/A |
| Crypto Express5S | 0890 | N/A | 16 | 16 | N/A | N/A |
| Crypto Express4S | 0865 | N/A | N/A | N/A | 16 | 16 |
| Crypto Express3 | 0864 | N/A | N/A | N/A | 16 | 16 |
| Flash Express | | | | | | |
| Flash Express [1] | 0402, 0403 [2] | N/A | 8 | 8 | 8 | 8 |
| zEDC Express | | | | | | |
| zEDC Express | 0420 | 8 | 8 | 8 | 8 | 8 |

[1] Features are ordered in pairs.

[2] Available on the z13 and z13s.

1 PCIe Gen3 is not available for the Z family earlier than IBM z13 and z13s. Previously, IBM zEnterprise Systems used PCIe Gen2.
