IBM DB2 for i

This chapter describes the following enhancements to the DB2 for i functions in IBM i version 7.2:

For more information about the IBM i 7.2 database enhancements, see the IBM i Technology Updates developerWorks wiki:

8.1 Introduction to DB2 for i

DB2 for i is a member of the DB2 family. This database is integrated and delivered with the IBM i operating system. DB2 for i has been enhanced from release to release of IBM i. In addition, DB2 for i is also enhanced by using the DB2 PTF Groups, where PTF Group SF99702 applies to IBM i 7.2 for DB2. This group does not change in V7R2.

IBM i 7.2 brings many DB2 functional, security, performance, and management enhancements. Most of these enhancements are implemented to support agility or what is referred to as a data-centric approach for the platform.

A data-centric paradigm requires business application designers, architects, and developers to focus on the database as a central point of modern applications for better data integrity, security, business agility, and a lower time to market factor. This is in contrast to an application-centric approach, where data manipulation, security, and integrity is, at least partially, governed by the application code. A data-centric concept moves these responsibilities over the underlying database.

A data-centric approach ensures consistent and secure data access and manipulation regardless of the application and the database interface that are used. Implementing security patterns for business critical data, and data access and manipulation procedures within DB2 for i, is now easier than ever, and more importantly, it removes code complexity and a security-related burden from business applications.

This approach is especially crucial for contemporary IT environments where the same data is being manipulated by web, mobile, and legacy applications at the same time. Moving data manipulation logic over the DB2 for i also uses all the current and future performance enhancements, database monitoring capabilities, and performance problem investigation tools and techniques.

The following key IBM i 7.2 database enhancements support this data-centric approach:

•Row and column access control (RCAC)

•Separation of duties

•DB2 for i services

•Global variables

•Named and default parameter support on user-defined functions (UDFs) and user-defined table functions (UDTFs)

•Pipelined table functions

•SQL plan cache management

•Performance enhancements

•Interoperability and portability

•LIMIT x OFFSET y clause

This chapter describes all the major enhancements to DB2 for i in IBM i 7.2.

8.2 Separation of duties concept

Separation of duties is a concept that helps companies to stay compliant with standards and government regulations.

One of these requirements is that users doing administration tasks on operating systems (usually they have *ALLOBJ special authority) should not be able to see and manipulate customer data in DB2 databases.

Before IBM i 7.2, when users had *ALLOBJ special authority, they could manipulate DB2 files on a logical level and see the data in these database files. Also, there was a problem because someone who was able to grant permission to other users to work with data in the database had also authority to see and manipulate the data inside the databases.

To separate out the operating system administration permissions and data manipulation permissions, DB2 for i uses the QIBM_DB_SECADM function. For this function, authorities to see and manipulate of data can be set up for individual users or groups. Also, a default option can be configured. For more information about the QIBM_DB_SECADM function, see 8.2.1, “QIBM_DB_SECADM function usage” on page 323.

Although the QIBM_DB_SECADM function usage can decide who can manipulate DB2 security, there is another concept in DB2 for i 7.2, which helps to define which users or groups can access rows and columns. This function is called Row and Column Access Control (RCAC). For more information about RCAC, see 8.2.2, “Row and Column Access Control support” on page 325.

8.2.1 QIBM_DB_SECADM function usage

IBM i 7.2 now allows management of data security without exposing the data to be read or modified.

Users with *SECADM special authority can grant or revoke privileges to any object even if they do not have these privileges. This can be done by using the Work with Function Usage (WRKFCNUSG) CL command and specifying the authority of *ALLOWED or *DENIED for particular users of the QIBM_DB_SECADM function.

From the Work with Function Usage (WRKFCNUSG) panel (Figure 8-1), enter option 5 (Display usage) next to QIBM_DB_SECADM and press Enter.

|

Work with Function Usage

Type options, press Enter.

2=Change usage 5=Display usage

Opt Function ID Function Name

QIBM_DIRSRV_ADMIN IBM Tivoli Directory Server Administrator

QIBM_ACCESS_ALLOBJ_JOBLOG Access job log of *ALLOBJ job

QIBM_ALLOBJ_TRACE_ANY_USER Trace any user

QIBM_WATCH_ANY_JOB Watch any job

QIBM_DB_DDMDRDA DDM & DRDA Application Server Access

5 QIBM_DB_SECADM Database Security Administrator

QIBM_DB_SQLADM Database Administrator

|

Figure 8-1 Work with Function Usage panel

In the example that is shown in Figure 8-2, in the Display Function Usage panel, you can see that default authority for unspecified user profiles is *DENIED. Also, both the DEVELOPER1 and DEVELOPER2 user profiles are specifically *DENIED.

|

Display Function Usage

Function ID . . . . . . : QIBM_DB_SECADM

Function name . . . . . : Database Security Administrator

Description . . . . . . : Database Security Administrator Functions

Product . . . . . . . . : QIBM_BASE_OPERATING_SYSTEM

Group . . . . . . . . . : QIBM_DB

Default authority . . . . . . . . . . . . : *DENIED

*ALLOBJ special authority . . . . . . . . : *NOTUSED

User Type Usage User Type Usage

DEVELOPER1 User *DENIED

DEVELOPER2 User *DENIED

|

Figure 8-2 Display Function Usage panel

If you return back to the Work with Function Usage panel (Figure 8-1 on page 323) and enter option 2 (Change usage) next to QIBM_DB_SECADM and press Enter, you see the Change Function Usage (CHGFCNUSG) panel, as shown in Figure 8-3. You can use the CHGFCNUSG CL command to change who is authorized to use the QIBM_DB_SECADM function.

|

Change Function Usage (CHGFCNUSG)

Type choices, press Enter.

Function ID . . . . . . . . . . > QIBM_DB_SECADM

User . . . . . . . . . . . . . . Name

Usage . . . . . . . . . . . . . *ALLOWED, *DENIED, *NONE

Default authority . . . . . . . *DENIED *SAME, *ALLOWED, *DENIED

*ALLOBJ special authority . . . *NOTUSED *SAME, *USED, *NOTUSED

|

Figure 8-3 Change Function Usage panel

In the example that is shown in Figure 8-4, the DBADMINS group profile is being authorized for the QIBM_DB_SECADM function.

|

Change Function Usage (CHGFCNUSG)

Type choices, press Enter.

Function ID . . . . . . . . . . > QIBM_DB_SECADM

User . . . . . . . . . . . . . . DBADMINS Name

Usage . . . . . . . . . . . . . *ALLOWED *ALLOWED, *DENIED, *NONE

Default authority . . . . . . . *DENIED *SAME, *ALLOWED, *DENIED

*ALLOBJ special authority . . . *NOTUSED *SAME, *USED, *NOTUSED

|

Figure 8-4 Allow the DBADMINS group profile access to the QIBM _DB_SECADM function

Showing the function usage for the QIBM_DB_SECADM function now shows that all users in the DBADMINS group now can manage DB2 security. See Figure 8-5.

|

Display Function Usage

Function ID . . . . . . : QIBM_DB_SECADM

Function name . . . . . : Database Security Administrator

Description . . . . . . : Database Security Administrator Functions

Product . . . . . . . . : QIBM_BASE_OPERATING_SYSTEM

Group . . . . . . . . . : QIBM_DB

Default authority . . . . . . . . . . . . : *DENIED

*ALLOBJ special authority . . . . . . . . : *NOTUSED

User Type Usage User Type Usage

DBADMINS Group *ALLOWED

DEVELOPER1 User *DENIED

DEVELOPER2 User *DENIED

|

Figure 8-5 Display Function Usage panel showing the authority that is granted to DBADMINS group profile

8.2.2 Row and Column Access Control support

IBM i 7.2 is now distributed with option 47, IBM Advanced Data Security for IBM i. This option must be installed before using Row and Column Access Control (RCAC).

RCAC is based on the DB2 for i ability to define rules for row access control (by using the SQL statement CREATE PERMISSION) and to define rules for column access control and creation of column masks for specific users or groups (by using the SQL statement CREATE MASK).

|

Note: RCAC is described in detail in Row and Column Access Support in IBM DB2 for i, REDP-5110. This section only briefly describes the basic principles of RCAC and provides a short example that shows the ease of implementing the basic separation of duties concept.

|

Special registers that are used for user identifications

To implement RCAC, special registers are needed to check which user is trying to display or use data in a particular table. To be able to identify users for the RCAC, IBM i uses several special registers that are listed in Table 8-1.

Table 8-1 Special registers for identifying users

|

Special register

|

Definition

|

|

USER

or

SESSION_USER

|

The effective user of the thread is returned.

|

|

SYSTEM_USER

|

The authorization ID that initiated the connection is returned.

|

|

CURRENT USER

or

CURRENT_USER

|

The most recently program adopted authorization ID within the thread is returned. When no adopted authority is active, the effective user of the thread Is returned.

|

|

CURRENT_USER: The CURRENT_USER special register is new in DB2 for IBM i 7.2. For more information, see IBM Knowledge Center:

|

VERIFY_GROUP_FOR_USER function

The VERIFY_GROUP_FOR_USER function has two parameters: userid and group.

For RCAC, the userid parameter is usually one of special register values (SESSION_USER, SYSTEM_USER, or CURRENT_USER), as described in “Special registers that are used for user identifications” on page 326.

This function returns a large integer value. If the user is member of group, this function returns a value of 1; otherwise, it returns a value of 0.

RCAC example

This section provides a brief example of implementing RCAC. This example uses an employee database table in a library called HR. This employee database table contains the following fields:

•Last name

•First name

•Date of birth

•Bank account number

•Employee number

•Department number

•Salary

Another database table that is called CHIEF contains, for each employee number, the employee number of their boss or chief.

You must create the following group profiles, as shown in Example 8-1:

•EMPGRP, which contains members of all employees

•HRGRP, which contains members from the HR department

•BOARDGRP, which contains members of all bosses or chiefs in the company

Example 8-1 Create group profiles for the RCAC example

CRTUSRPRF USRPRF(EMPGRP)

PASSWORD(*NONE)

TEXT('Employee group')

CRTUSRPRF USRPRF(HRGRP)

PASSWORD(*NONE)

TEXT('Human resources group')

CRTUSRPRF USRPRF(BOARDGRP)

PASSWORD(*NONE)

TEXT('Chiefs group')

Next, create the user profiles, as shown in the Example 8-2. For simplicity, this example assumes the following items:

•The user profile password is the same as the user profile name.

•For all employees, the user profile name is the same as employee’s last name.

Example 8-2 Create user profiles for the RCAC example

CRTUSRPRF USRPRF(JANAJ)

TEXT('Jana Jameson')

GRPPRF(EMPNO)

SUPGRPRF(HRGRP)

-- Jana Jameson works in the HR department

CRTUSRPRF USRPRF(JONES)

TEXT('George Jones - the company boss')

GRPPRF(EMPGRP)

SUPGRPPRF(BOARDGRP)

-- George James is a boss in the company and is an employee as well.

CRTUSRPRF USRPRF(FERRANONI)

TEXT('Fabrizion Ferranoni')

GRPPRF(EMPGRP)

CRTUSRPRF USRPRF(KADLEC)

TEXT('Ian Kadlec')

GRPPRF(EMPGRP)

CRTUSRPRF USRPRF(NORTON)

TEXT('Laura Norton')

GRPPRF(EMPGRP)

CRTUSRPRF USRPRF(WASHINGTON)

TEXT('Kimberly Washington')

GRPPRF(EMPGRP)

Now, create the DB2 schema (collection) HR and employee table EMPLOYEE. The primary key for the employee table is the employee number. After creating the database table EMPLOYEE, create indexes EMPLOYEE01 and EMPLOYEE02 for faster access. Then, add records to the database by using the SQL insert command. See Example 8-3.

|

Note: When entering SQL commands, you can use either the STRSQL CL command to start an interactive SQL session or use IBM Navigator for i. If you use STRSQL, make sure to remove the semicolon marks at the end of each SQL statement.

|

Example 8-3 Create the schema, database table, and indexes, and populate the table with data

CREATE TABLE HR/EMPLOYEE

(FIRST_NAME FOR COLUMN FNAME CHARACTER (15 ) NOT NULL WITH DEFAULT,

LAST_NAME FOR COLUMN LNAME CHARACTER (15 ) NOT NULL WITH DEFAULT,

DATE_OF_BIRTH FOR COLUMN BIRTHDATE DATE NOT NULL WITH DEFAULT,

BANK_ACCOUNT CHARACTER (9 ) NOT NULL WITH DEFAULT,

EMPLOYEE_NUM FOR COLUMN EMPNO CHARACTER(6) NOT NULL WITH DEFAULT,

DEPARTMENT FOR COLUMN DEPT DECIMAL (4, 0) NOT NULL WITH DEFAULT,

SALARY DECIMAL (9, 0) NOT NULL WITH DEFAULT,

PRIMARY KEY (EMPLOYEE_NUM)) ;

CREATE INDEX HR/EMPLOYEE01 ON HR/EMPLOYEE

(LAST_NAME ASC,

FIRST_NAME ASC) ;

CREATE INDEX HR/EMPLOYEE02 ON HR/EMPLOYEE

(DEPARTMENT ASC,

LAST_NAME ASC,

FIRST_NAME ASC);

-- Now created the table CHIEF and add data

CREATE TABLE HR/CHIEF

(EMPLOYEE_NUM FOR COLUMN EMPNO CHARACTER(6 ) NOT NULL WITH DEFAULT,

CHIEF_NO CHARACTER(6 ) NOT NULL WITH DEFAULT,

PRIMARY KEY (EMPLOYEE_NUM)) ;

INSERT INTO HR/CHIEF VALUES

(

'G76852', 'G00012') ;

INSERT INTO HR/CHIEF VALUES

(

'G12835', 'G00012');

INSERT INTO HR/CHIEF VALUES

(

'G23561', 'G00012');

INSERT INTO HR/CHIEF VALUES

(

'G00012', 'CHIEF ');

INSERT INTO HR/CHIEF VALUES

(

'G32421', 'G00012');

-- Load exapmple data into EMPLOYEE table

INSERT INTO HR/EMPLOYEE

VALUES('Fabrizio', 'Ferranoni',

DATE('05/12/1959') , 345723909, 'G76852','0001', 96000) ;

INSERT INTO HR/EMPLOYEE

VALUES('Ian', 'Kadlec',

DATE('11/23/1967') , 783920125, 'G23561', '0001', 64111) ;

INSERT INTO HR/EMPLOYEE

VALUES('Laura', 'Norton',

DATE('02/20/1972') , 834510932, 'G00012', '0001', 55222) ;

INSERT INTO HR/EMPLOYEE

VALUES('Kimberly', 'Washington',

DATE('10/31/1972') , 157629076, 'G12435', '0005' , 100000);

INSERT INTO HR/EMPLOYEE

VALUES('George', 'Jones',

DATE('10/11/1952') , 673948571, 'G00001', '0000' , 1100000);

INSERT INTO HR/EMPLOYEE

VALUES('Jana ', 'Jameson',

DATE('10/11/1952') , 643247347, 'G32421', '0205' , 30000);

Now comes the most important and most interesting part of this example, which is defining the row and column access rules. First, specify the row permission access rules, as shown in Example 8-4. The following rules are implemented:

•Each employee should see only their own record, except for employees from the human resources department (group HRGRP).

•Chiefs (group BOARDGRP) should have access to all rows.

Example 8-4 Example of row access rule

CREATE PERMISSION ACCESS_TO_ROW ON HR/EMPLOYEE

FOR ROWS WHERE

(VERIFY_GROUP_FOR_USER(SESSION_USER,'BOARDGRP') = 1)

OR

(VERIFY_GROUP_FOR_USER(SESSION_USER,'EMPGRP') = 1

AND UPPER(LAST_NAME) = SESSION_USER)

OR

(VERIFY_GROUP_FOR_USER(SESSION_USER,'HRGRP') = 1)

ENFORCED FOR ALL ACCESS

ENABLE ;

-- Activate row access control

ALTER TABLE HR/EMPLOYEE ACTIVATE ROW ACCESS CONTROL ;

Here is an explanation of Example 8-4 on page 329:

1. This example creates a rule that is called ACCESS_ON_ROW.

2. First, a check is done by using the VERIFY_GROUP_FOR_USER function to see whether the user profile of the person who is querying the employee table (SESSION_USER) is part of the group BOARDGRP. If the user is a member of BOARDGRP, the function returns a value of 1 and they are granted access to the row.

3. If the user is member of the EMPGRP group, a check is done to see whether this user has records in the file (UPPER(LAST_NAME) = SESSION_USER). For example, employee Ian Kadlec cannot see the row (record) of the employee Laura Norton.

4. Another verification is done to see whether the user profile is a member of the group HRGRP. If yes, the user profile has access to all employee records (rows).

5. If none of conditions are true, the user looking at the table data sees no records.

6. Finally, using the SQL command ALTER TABLE....ACTIVATE ROW ACCESS CONTROL, the row permission rules are activated.

Example 8-5 shows an example of using column masks. The SQL CREATE MASK statement is used to hide data in specific columns that contain sensitive personal information, such as a bank account number or salary, and present that data to only authorized people.

Example 8-5 Example of creating column masks

-- Following are masks for columns, BANK_ACCOUNT and SALARY

CREATE MASK BANKACC_MASK ON HR/EMPLOYEE FOR

COLUMN BANK_ACCOUNT RETURN

CASE

WHEN (VERIFY_GROUP_FOR_USER(SESSION_USER, 'BOARDGRP' ) = 1

AND UPPER(LAST_NAME) = SESSION_USER )

THEN BANK_ACCOUNT

WHEN VERIFY_GROUP_FOR_USER(SESSION_USER, 'HRGRP' ) = 1

THEN BANK_ACCOUNT

WHEN UPPER(LAST_NAME) = SESSION_USER

AND

VERIFY_GROUP_FOR_USER(SESSION_USER, 'EMPGRP' ) = 1

THEN BANK_ACCOUNT

ELSE '*********'

END

ENABLE;

CREATE MASK SALARY_MASK ON HR/EMPLOYEE FOR

COLUMN SALARY RETURN

CASE

WHEN UPPER(LAST_NAME) = SESSION_USER

AND VERIFY_GROUP_FOR_USER(SESSION_USER, 'EMPNO') = 1

THEN SALARY

WHEN VERIFY_GROUP_FOR_USER(SESSION_USER, 'BOARDGRP' ) = 1

THEN SALARY

WHEN VERIFY_GROUP_FOR_USER(SESSION_USER, 'HRGRP' ) = 1

THEN SALARY

ELSE 0

END

ENABLE;

-- Activate both column masks

ALTER TABLE HR/EMPLOYEE ACTIVATE COLUMN ACCESS CONTROL ;

Here is an explanation of Example 8-5 on page 330:

1. This example is creating a column mask that is called BANKACC_MASK, which is related to the BANK_ACCOUNT column.

2. If the user accessing the data is a chief (member of the BORADGRP group), then show only their bank account data.

3. If the user accessing the data is an HR department employee (member of the HRGRP group), then show all employee bank account numbers.

4. All other users should not see the bank account number, so the data is masked with ‘*********’.

5. Next, create the column mask that is called SALARY_MASK, which is related to the SALARY column.

6. If the user accessing the data is an employee (member of the EMPGRP group), then show only their salary data.

7. If the user accessing the data is an HR employee (member of the HRGRP group), then show all the salary data.

8. If the user accessing the data is a chief (member of the BOARDGRP group), then show all the salary data.

9. All other users should not see the salary data, so the data is masked with ‘*********’.

10. Finally, using the SQL command ALTER TABLE....ACTIVATE Column ACCESS CONTROL, the column mask rules are activated.

Results

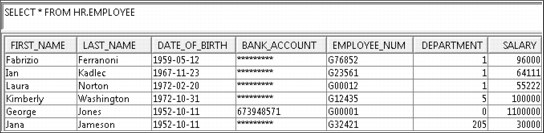

When user JONES logs on (they are a member of the BOARDGRP group), they see the results from the EMPLOYEE table, as shown in Figure 8-6. A chief can see all employees and their salaries, but can see only their own bank account number.

Figure 8-6 EMPLOYEE table as seen by the JONES user profile (Chief)

When any employee (in this example, the KADLEC user profile) logs on, they see the results from the EMPLOYEE table, as shown in Figure 8-7. In this case, each employee sees all data, but only data that is related to their person. This is a result of the ACCESS_TO_ROW row permission rule.

Figure 8-7 EMPLOYEE table as seen by the KADLEC user profile

When a human resources employee (in this example, the JANAJ user profile) logs on, they see the results from EMPLOYEE table, as shown in Figure 8-8. A human resources employee sees the bank accounts and salaries of all employees.

Figure 8-8 EMPLOYEE table as seen by the JANAJ user profile (human resources employee)

All other user profiles see an empty database file.

Adding the OR REPLACE clause to masks and permissions

Similar to other Data Definition Language (DDL) statements, the OR REPLACE clause was added to the following SQL commands:

•CREATE MASK

•REPLACE MASK

•CREATE PERMISSION

•REPLACE PERMISSION

The use of the OR REPLACE clause makes it easier to redeploy the RCAC rule text. For more information about the OR REPLACE clause, see IBM Knowledge Center:

8.3 DB2 security enhancements

This section describes the following DB2 security-related enhancements in IBM i 7.2:

8.3.1 QIBM_DB_ZDA and QIBM_DB_DDMDRDA function usage IDs

The QIBM_DB_ZDA and QIBM_DB_DDMDRDA function usage IDs block database server inbound connections. These function usage IDs ship with the default authority of *ALLOWED:

•You can use the QIBM_DB_ZDA function usage ID to restrict ODBC and JDBC Toolbox from the server side, including Run SQL Scripts, System i Navigator, and DB2 specific portions of Systems Director Navigator for i.

•You can use the QIBM_DB_DDMDRDA function usage ID to lock down DDM and IBM DRDA application server access.

These function usage IDs can be managed by the Work with Function Usage (WRKFCNUSG) CL command or by using the Change Function Usage (CHGFCNUSG) CL command. For an example of using the CHGFCNUSG CL command, see Example 8-6.

Example 8-6 Deny user profile user1 the usage of the DDM and DRDA application server access

CHGFCNUSG FCNID(QIBM_DB_DDMDRDA) USER(user1) USAGE(*DENIED)

8.3.2 Authorization list detail added to authorization catalogs

Using an authorization list eases security management and also improves performance because user profiles do not need to contain a list of objects to which they are authorized. They need to contain the authorization list that contains these objects.

Table 8-2 lists the DB2 for i catalogs that were updated to include the AUTHORIZATION_LIST column.

|

Note: Catalogs QSYS2/SYSXSROBJECTAUTH and QSYS2/SYSVARIABLEAUTH were already updated in IBM i 7.1 to have the AUTHORIZATION_LIST column.

|

Table 8-2 Catalogs containing the AUTHORIZATION_LIST column

|

Catalog

|

Catalog description|

|

|

QSYS2/SYSPACKAGEAUTH

|

*SQLPKG - Packages

|

|

QSYS2/SYSUDTAUTH

|

*SQLUDT - User-defined types

|

|

QSYS2/SYSTABAUTH

|

*FILE - Tables

|

|

QSYS2/SYSSEQUENCEAUTH

|

*DTAARA - Sequences

|

|

QSYS2/SYSSCHEMAAUTH

|

*LIB - Schema

|

|

QSYS2/SYSROUTINEAUTH

|

*PGM and *SRVPGM - Procedures and Functions

|

|

QSYS2/SYSCOLAUTH

|

Columns

|

|

QSYS2/SYSXSROBJECTAUTH

|

*XSROBJ - XML Schema Repositories

|

|

QSYS2/SYSVARIABLEAUTH

|

*SRVPGM - Global Variables

|

8.3.3 New user-defined table function: QSYS2.GROUP_USERS()

QSYS2.GROUP_USERS() is a new UDTF that you can use to create queries that can specify a group profile as its parameter and list user profiles in this group as the output.

The QSYS2.GROUP_USERS() function returns user profiles that have the specified group set up as their main or supplemental group. See Figure 8-9.

Figure 8-9 Example of the QSYS2.GROUP_USERS() UDTF

8.3.4 New security view: QSYS2.GROUP_PROFILE_ENTRIES

The new QSYS2.GROUP_PROFILE_ENTRIES security view returns a table with the following three columns:

•GROUP_PROFILE_NAME

•USER_PROFILE_NAME

•USER_TEXT

It accounts for both group and supplemental group profiles.

Figure 8-10 shows an example of using the QSYS2.GROUP_PROFILE_ENTRIES security view.

Figure 8-10 Example of the QSYS2.GROUP_PROFILE_ENTRIES security view

8.3.5 New attribute column in the SYSIBM.AUTHORIZATIONS catalog

The SYSIBM.AUTHORIZATIONS view is a DB2 family compatible catalog that contains a row for every authorization ID.

The SYSIBM.AUTHORIZATIONS catalog is extended to include a new column that is called ALTHORIZATION_ATTR, which differentiates users from groups. See Figure 8-11 on page 335.

Figure 8-11 SYSIBM.AUTHORIZATIONS catalog showing the new ALTHORIZATION_ATTR column

8.3.6 New QSYS2.SQL_CHECK_AUTHORITY() UDF

The QSYS2.SQL_CHECK_AUTHORITY() UDF is used to determine whether the user calling this function has the authority to object that is specified. The object type must be *FILE. The first parameter is the library and the second parameter is the object name. The result type is SMALLINT.

There are two possible result values:

•1: User has authority

•0: User does not have authority

Figure 8-12 shows the user that has authority to the CZZ62690/QTXTSRC object.

Figure 8-12 Example of user of the QSYS2.SQL_CHECK_AUTHORITY UDF

8.3.7 Refined object auditing control on QIBM_DB_OPEN exit program

Previously, an exit program control (*OBJAUD) was added to limit exit program calls. Because of the wide use of *CHANGE object auditing in some environments, the *OBJAUD control did not reduce the calls to the exit program enough in some environments.

The Open Data Base File exit program was enhanced to support two new values for the exit program data: OBJAUD(*ALL) and OBJAUD(*CHANGE).

Example 8-7 shows that three approaches are now possible.

Example 8-7 Object auditing control on the QIBM_DB_OPEN exit program

First example: The exit program is called when using any object auditing.

ADDEXITPGM EXITPNT(QIBM_QDB_OPEN) FORMAT(DBOP0100) PGMNBR(7) PGM(MJATST/OPENEXIT2) THDSAFE(*YES) TEXT('MJA') REPLACE(*NO)PGMDTA(*JOB *CALC '*OBJAUD')

Second example: The exit program is called when using *ALL object auditing.

ADDEXITPGM EXITPNT(QIBM_QDB_OPEN) FORMAT(DBOP0100) PGMNBR(7) PGM(MJATST/OPENEXIT2) THDSAFE(*YES) TEXT('MJA') REPLACE(*NO) PGMDTA(*JOB *CALC 'OBJAUD(*ALL)')

Third example: The exit program is called when using *CHANGE object auditing.

ADDEXITPGM EXITPNT(QIBM_QDB_OPEN) FORMAT(DBOP0100) PGMNBR(7) PGM(MJATST/OPENEXIT2) THDSAFE(*YES) TEXT('MJA') REPLACE(*NO) PGMDTA(*JOB *CALC 'OBJAUD(*CHANGE)')

8.3.8 Simplified DDM/DRDA authentication management by using group profiles

The new function of simplified DDM/DRDA authentication management by using group profiles enhances the authentication process when users connect to a remote database by using DDM or DRDA, for example, by using the SQL command CONNECT TO <database>.

Before this enhancement, any user connecting to a remote database needed to have a server authentication entry (by using the Add Server Authentication Entry (ADDSVRAUTHE) CL command) with a password for their user profile.

|

Note: To use the ADDSRVAUTE CL command, you must have the following special authorities:

•Security administrator (*SECADM) user special authority

•Object management (*OBJMGT) user special authority

|

You can use this enhancement to have a server authentication entry that is based on a group profile instead of individual users. When a user is a member of a group profile, or has a group profile set up as supplemental in their user profile, then only the group profile must have a server authentication entry. See Example 8-8.

Example 8-8 Use a group profile authentication entry to access a remote database

First create a group profile called TESTGROUP:

CRTUSRPRF USRPRF(TESTGROUP) PASSWORD(*NONE)

Then create a user profile TESTUSR and make it a member of TESTGROUP:

CRTUSRPRF USRPRF(TESTUSR) PASSWORD(somepasswd) GRPPRF(TESTTEAM)

Create the server authentication entry:

ADDSVRAUTE USRPRF(TESTGROUP) SERVER(QDDMDRDASERVER or <RDB-name>) USRID(sysbusrid) PASSWORD(sysbpwd)

User TESTUSR then uses the STRSQL command to get to an interactive SQL session and issues the following command, where RDB-name is a remote relational database registered using the command WRKRDBDIRE:

CONNECT TO <RDB-name>

The connection attempt is done to the system with <RDB-name> using <sysbusrid> user profile and <sysbpwd> password.

The following steps define the process of group profile authentication:

1. Search the authentication entries where USRPRF=user profile and SERVER=application server name.

2. Search the authentication entries where USRPRF=user profile and SERVER='QDDMDRDASERVER'.

3. Search the authentication entries where USRPRF=group profile and SERVER=application server name.

4. Search the authentication entries where USRPRF=group profile and SERVER='QDDMDRDASERVER'.

5. Search the authentication entries where USRPRF=supplemental group profile and SERVER= application server name.

6. Search the authentication entries where USRPRF=supplemental group profile and SERVER='QDDMDRDASERVER'.

7. If no entry was found in all the previous steps, a USERID only authentication is attempted.

|

Note: When the default authentication entry search order (explained in the previous steps) is used, the search order ceases at connect time if a match is found for QDDMDRDASERVER.

|

The QIBM_DDMDRDA_SVRNAM_PRIORITY environment variable can be used to specified whether an explicit server name order is used when searching for authentication entries.

Example 8-9 shows an example of creating the QIBM_DDMDRDA_SVRNAM_PRIORITY environment variable.

Example 8-9 Create the QIBM_DDDMDRDA_SVRNAM_PRIORITY environment variable

ADDENVVAR ENVVAR(QIBM_DDMDRDA_SVRNAM_PRIORITY) VALUE(’Y’) LEVEL(*JOB or *SYS)

For more information about how to set up authority for DDM and DRDA, see IBM Knowledge Center:

8.3.9 IBM InfoSphere Guardium V9.0 and IBM Security Guardium V10

IBM Security Guardium® (formerly known as IBM InfoSphere® Guardium) is an enterprise information database audit and protection solution that helps enterprises protect and audit information across a diverse set of relational and non-relational data sources. It allows companies to stay compliant with wide range of standards. Here are some of security areas that are monitored:

•Access to sensitive data (successful or failed SELECTs)

•Schema changes (CREATE, DROP, ALTER TABLES, and so on)

•Security exceptions (failed logins, SQL errors, and so on)

•Accounts, roles, and permissions (GRANT and REVOKE)

The Security Guardium product captures database activity from DB2 for i by using the AIX based IBM S-TAP® software module running in PASE. Both audit information and SQL monitor information is streamed to S-TAP. Audit details that are passed to S-TAP can be filtered for both audit data and also DB2 monitor data for performance reasons.

Figure 8-13 shows how Security Guardium collects data from DB2 monitors and the audit journal.

Figure 8-13 InfoSphere Guardium V9.0 Collection Data flow

With InfoSphere Guardium V9.0, DB2 for IBM i can now be included as a data source, enabling the monitoring of accesses from native interfaces and through SQL. Supported DB2 for i data sources are from IBM i 6.1, 7.1, and 7.2.

The Data Access Monitor feature is enhanced in V9.0 with the following capabilities:

•Failover support for IBM i was added.

•Support for multiple policies (up to nine), with IBM i side configuration.

•Filtering improvements, with IBM i side configuration.

For more information about InfoSphere Guardium V9.0 and DB2 for i support, see the following website:

The successor version of InfoSphere Guardium V9.0 is IBM Security Guardium V10.

Version 10 has the Data Access Monitor feature and has a new Vulnerability Assessment (VA) feature. Vulnerability Assessment with IBM i contains a comprehensive set of more than 130 tests that show weaknesses in your IBM i security setup. It allows reporting for IBM i 6.1, 7.1, and 7.2 partitions.

Version 10 has the Data Access Monitor feature and has a new Vulnerability Assessment (VA) feature. Vulnerability Assessment with IBM i contains a comprehensive set of more than 130 tests that show weaknesses in your IBM i security setup. It allows reporting for IBM i 6.1, 7.1, and 7.2 partitions.

Data Access Monitor Enhancements in IBM Security Guardium V10

The Data Access Monitor feature has the following functions for the IBM i client:

•Failover support for IBM i was added.

•Support for multiple policies (up to nine), with IBM i side configuration.

•Filtering improvements, with IBM i side configuration.

•Support for S-TAP load balancing (non-enterprise).

•Encrypted traffic between S-TAP and collector.

•Closer alignment with other UNIX S-TAP.

Figure 8-14 shows the revised S-TAP architecture and enhanced Guardium topology for

IBM Security Guardium V10.

IBM Security Guardium V10.

Figure 8-14 IBM Security Guardium V10 Collection Data flow

For more information about Security Guardium V10 and DB2 for i support, see the following website:

There is also an article in IBM developerWorks covering more details of IBM Security Guardium V10:

8.4 DB2 functional enhancements

This section introduces the functional enhancements of DB2 for i 7.2, which include the following items:

8.4.1 OFFSET and LIMIT clauses

In SQL queries, it is now possible to specify OFFSET and LIMIT clauses:

•The OFFSET clause specifies how many rows to skip before any rows are retrieved as part of a query.

•The LIMIT clause specifies the maximum number of rows to return.

This support is useful when programming a web page containing data from large tables and the user must page up and page down to see additional records. You can use OFFSET to retrieve data only for a specific page, and use LIMIT to see how many records a page has.

The following considerations apply:

•This support is possible only when LIMIT is used as part of the outer full select of a DECLARE CURSOR statement or a prepared SELECT statement.

•The support does not exist within the Start SQL (STRSQL) command.

For more information about the OFFSET and LIMIT clauses, see IBM Knowledge Center:

8.4.2 CREATE OR REPLACE table SQL statement

DB2 for i now allows optional OR REPLACE capability to the CREATE TABLE statement. This improvement gives users the possibility of a much better approach to the modification of tables strategy. Before this support, the only way to modify a table was to use an ALTER TABLE statement and manually remove and re-create dependent objects.

The OR REPLACE capability specifies replacing the definition for the table if one exists at the current server. The existing definition is effectively altered before the new definition is replaced in the catalog.

Definition for the table exists if the following is true:

•FOR SYSTEM NAME is specified and the system-object-identifier matches the system-object-identifier of an existing table.

•FOR SYSTEM NAME is not specified and table-name is a system object name that matches the system-object-identifier of an existing table.

If a definition for the table exists and table-name is not a system object name, table-name can be changed to provide a new name for the table.

This option is ignored if a definition for the table does not exist in the current server.

When the table already exists, the CREATE OR REPLACE TABLE SQL statement can specify behavior that is related to the process of changing the database table by using the ON REPLACE clause.

There are three options:

•PRESERVE ALL ROWS

The current rows of the specified table are preserved. PRESERVE ALL ROWS is not allowed if WITH DATA is specified with result-table-as.

All rows of all partitions in a partitioned table are preserved. If the new table definition is a range partitioned table, the defined ranges must be able to contain all the rows from the existing partitions.

If a column is dropped, the column values are not preserved. If a column is altered, the column values can be modified.

If the table is not a partitioned table or is a hash partitioned table, PRESERVE ALL ROWS and PRESERVE ROWS are equivalent.

•PRESERVE ROWS

The current rows of the specified table are preserved. PRESERVE ROWS is not allowed if WITH DATA is specified with result-table-as.

If a partition of a range partitioned table is dropped, the rows of that partition are deleted without processing any delete triggers. To determine whether a partition of a range partitioned table is dropped, the range definitions and the partition names (if any) of the partitions in the new table definition are compared to the partitions in the existing table definition. If either the specified range or the name of a partition matches, it is preserved. If a partition does not have a partition-name, its boundary-spec must match an existing partition.

If a partition of a hash partition table is dropped, the rows of that partition are preserved.

If a column is dropped, the column values are not preserved. If a column is altered, the column values can be modified.

•DELETE ROWS

The current rows of the specified table are deleted. Any existing DELETE triggers are not fired.

REPLACE rules

When a table is re-created by REPLACE by using PRESERVE ROWS, the new definition of the table is compared to the old definition and logically, for each difference between the two, a corresponding ALTER operation is performed. When the DELETE ROWS option is used, the table is logically dropped and re-created; if objects that depend on the table remain valid, any modification is allowed.

For more information, see Table 8-3. For columns, constraints, and partitions, the comparisons are performed based on their names and attributes.

Table 8-3 Behavior of REPLACE compared to ALTER TABLE functions

|

New definition versus existing definition

|

Equivalent ALTER TABLE function

|

|

Column

|

|

|

The column exists in both and the attributes are the same.

|

No change

|

|

The column exists in both and the attributes are different.

|

ALTER COLUMN

|

|

The column exists only in new table definition.

|

ADD COLUMN

|

|

The column exists only in existing table definition.

|

DROP COLUMN RESTRICT

|

|

Constraint

|

|

|

The constraint exists in both and is the same.

|

No change

|

|

The constraint exists in both and is different.

|

DROP constraint RESTRICT and ADD constraint

|

|

The constraint exists only in the new table definition.

|

ADD constraint

|

|

The constraint exists only in the existing table definition.

|

DROP constraint RESTRICT

|

|

materialized-query-definition

|

|

|

materialized-query-definition exists in both and is the same.

|

No change

|

|

materialized-query-definition exists in both and is different.

|

ALTER MATERIALIZED QUERY

|

|

materialized-query-definition exists only in the new table definition.

|

ADD MATERIALIZED QUERY

|

|

materialized-query-definition exists only in the existing table definition.

|

DROP MATERIALIZED QUERY

|

|

partitioning-clause

|

|

|

partitioning-clause exists in both and is the same.

|

No change

|

|

partitioning-clause exists in both and is different.

|

ADD PARTITION, DROP PARTITION, and ALTER PARTITION

|

|

partitioning-clause exists only in the new table definition.

|

ADD partitioning-clause

|

|

partitioning-clause exists only in the existing table definition.

|

DROP PARTITIONING

|

|

NOT LOGGED INITIALLY

|

|

|

NOT LOGGED INITIALLY exists in both.

|

No change

|

|

NOT LOGGED INITIALLY exists only in the new table definition.

|

NOT LOGGED INITIALLY

|

|

NOT LOGGED INITIALLY exists only in the existing table definition.

|

Logged initially

|

|

VOLATILE

|

|

|

The VOLATILE attribute exists in both and is the same.

|

No change

|

|

The VOLATILE attribute exists only in the new table definition.

|

VOLATILE

|

|

The VOLATILE attribute exists only in the existing table definition.

|

NOT VOLATILE

|

|

media-preference

|

|

|

media-preference exists in both and is the same.

|

No change

|

|

media-preference exists only in the new table definition.

|

ALTER media-preference

|

|

media-preference exists only in the existing table definition.

|

UNIT ANY

|

|

memory-preference

|

|

|

memory-preference exists in both and is the same.

|

No change

|

|

memory-preference exists only in the new table definition.

|

ALTER memory-preference

|

|

memory-preference exists only in the existing table definition.

|

KEEP IN MEMORY NO

|

Any attributes that cannot be specified in the CREATE TABLE statement are preserved:

•Authorized users are maintained. The object owner might change.

•Current journal auditing is preserved. However, unlike other objects, REPLACE of a table generates a ZC (change object) journal audit entry.

•Current data journaling is preserved.

•Comments and labels are preserved.

•Triggers are preserved, if possible. If it is not possible to preserve a trigger, an error is returned.

•Masks and permissions are preserved, if possible. If it is not possible to preserve a mask or permission, an error is returned.

•Any views, materialized query tables, and indexes that depend on the table are preserved or re-created, if possible. If it is not possible to preserve a dependent view, materialized query table, or index, an error is returned.

The CREATE statement (including the REPLACE capability) can be complex. For more information, see IBM Knowledge Center:

8.4.3 DB2 JavaScript Object Notation store

Support for JavaScript Object Notation (JSON) documents was added to DB2 for i. You can store any JSON documents in DB2 as BLOBs. The current implementation for DB2 for i supports the DB2 JSON command-line processor and the DB2 JSON Java API.

The current implementation of DB2 for i matches the subset of support that is available for DB2 for Linux, UNIX, and Windows, and DB2 for z/OS.

The following actions are now supported:

•Use the JDB2 JSON Java API in Java applications to store and retrieve JSON documents as BLOB data from DB2 for i tables.

•Create JSON collections (single BLOB column table).

•Insert JSON documents into a JSON collection.

•Retrieve JSON documents.

•Convert JSON documents from BLOB to character data with the SYSTOOLS.BSON2JSON() UDF.

For details and examples, see the following article in IBM developerWorks:

8.4.4 Remote 3-part name support on ASSOCIATE LOCATOR

Before this enhancement, programmers could not consume result sets that were created on behalf of a remote procedure CALL. With this enhancement, the ASSOCIATE LOCATOR SQL statement can target RETURN TO CALLER style result sets that are created by a procedure that ran on a remote database due to a remote 3-part CALL.

This style of programming is an alternative to the INSERT with remote subselect statement.

Requirements:

•The application requestor (AR) target must be IBM i 7.1 or higher.

•The application server (AS) target must be IBM i 6.1 or higher.

For more information about how to use this enhancement, see IBM Knowledge Center:

8.4.5 Flexible views

Before the flexible views enhancement, programmers could build static views only by using the CREATE VIEW SQL statement, which created a logical file. If a different view or a view with different parameters (WHERE clause) was needed, a new static view had to be created again by using the CREATE VIEW SQL statement.

This enhancement provides a way to build views that are flexible and usable in many more situations just by changing the parameters. The WHERE clause of the flexible view can contain both global built-in variables and the DB2 global variables. These views also can be used for inserting and updating data.

8.4.6 Increased time stamp precision

In IBM i 7.2, up to 12 digits can be set as the time stamp precision. Before IBM i 7.2, only six digits were supported as the time stamp precision. This enhancement applies to DDL and DML. You can specify the time stamp precision in the CREATE TABLE statement. You can also adjust existing tables by using the ALTER TABLE statement.

Example 8-10 Increased time stamp precision

CREATE TABLE corpdb.time_travel(

old_time TIMESTAMP,

new_time TIMESTAMP(12),

no_time TIMESTAMP(0),

Last_Change TIMESTAMP NOT NULL IMPLICITLY HIDDEN FOR EACH ROW ON UPDATE AS

ROW CHANGE TIMESTAMP);

INSERT INTO corpdb.time_travel

VALUES(current timestamp, current timestamp,

current timestamp);

INSERT INTO corpdb.time_travel

VALUES(current timestamp, current timestamp(12),

current timestamp);

SELECT old_time,

new_time,

no_time,

last_change

FROM corpdb.time_travel;

SELECT new_time - last_change as new_minus_last,

new_time - old_time as new_minus_old,

new_time - no_time as new_minus_no

FROM corpdb.time_travel;

Figure 8-15 shows the results of the first SELECT statement in Example 8-10. Notice that the precision of the data of each field varies depending on the precision digits.

Figure 8-15 Results of the first SELECT statement

The second SELECT statement in Example 8-10 calculates the difference of NEW_TIME and each other field. Figure 8-16 shows the results of this SELECT statement. The second record of the result is expressed with the precision as 12 digits because the value that is stored in NEW_TIME has 12 digits as its precision.

Figure 8-16 Results of the second SELECT statement

|

Note: You can adjust the precision of the time stamp columns of existing tables by using an ALTER TABLE statement. However, the existing data in those columns has the remaining initial digits because precision of time stamp data is determined at the time of generation.

|

For more information about timestamp precision, see IBM Knowledge Center:

8.4.7 Named and default parameter support for UDF and UDTFs

Similar to named and default parameters for procedures in IBM i 7.1 TR5, IBM i 7.2 adds this support for SQL and external UDFs. This enhancement provides the usability that is found with CL commands to UDF and UDTFs.

Example 8-11 shows an example of a UDF that specifies a default value to a parameter.

Example 8-11 Named and default parameter support on UDF and UDTFs

CREATE OR REPLACE FUNCTION DEPTNAME(

P_EMPID VARCHAR(6) , P_REQUESTED_IN_LOWER_CASE INTEGER DEFAULT 0)

RETURNS VARCHAR(30)

LANGUAGE SQL

D:BEGIN ATOMIC

DECLARE V_DEPARTMENT_NAME VARCHAR(30);

DECLARE V_ERR VARCHAR(70);

SET V_DEPARTMENT_NAME = (

SELECT CASE

WHEN P_REQUESTED_IN_LOWER_CASE = 0 THEN

D.DEPTNAME

ELSE

LOWER(D.DEPTNAME)

END CASE

FROM DEPARTMENT D, EMPLOYEE E

WHERE E.WORKDEPT = D.DEPTNO AND

E.EMPNO = P_EMPID);

IF V_DEPARTMENT_NAME IS NULL THEN

SET V_ERR = 'Error: employee ' CONCAT P_EMPID CONCAT ' was not found';

SIGNAL SQLSTATE '80000' SET MESSAGE_TEXT = V_ERR;

END IF ;

RETURN V_DEPARTMENT_NAME;

END D;

Example 8-12 shows the VALUES statement that uses the example UDF in Example 8-11 as specifying different values to the P_REQUESTED_IN_LOWER_CASE parameter.

Example 8-12 VALUES statement specifying a different value to the parameter in the UDF

VALUES(DEPTNAME('000110'),

DEPTNAME('000110', 1 ),

DEPTNAME('000110', P_REQUESTED_IN_LOWER_CASE=>1));

Figure 8-17 on page 347 shows the results of Example 8-12.

Figure 8-17 Results of the VALUES statement that uses the UDF

8.4.8 Function resolution by using casting rules

Before IBM i 7.2, function resolution looked for only an exact match with the following perspectives:

•Function name

•Number of parameters

•Data type of parameters

With IBM i 7.2, if DB2 for i does not find an exact match when using function resolution, it looks for the best fit by using casting rules. With these new casting rules, if CAST() is supported for the parameter data type mismatch, the function is found.

For more information about rules, see IBM Knowledge Center:

Example 8-13 describes a sample function and a VALUES statement that uses that function.

Example 8-13 Sample function that casting might have occurred

CREATE OR REPLACE FUNCTION MY_CONCAT(

FIRST_PART VARCHAR(10),

SECOND_PART VARCHAR(50))

RETURNS VARCHAR(60)

LANGUAGE SQL

BEGIN

RETURN(FIRST_PART CONCAT SECOND_PART);

END;

VALUES(MY_CONCAT(123,456789));

Figure 8-18 shows the results of the VALUES statement with an SQLCODE-204 error before IBM i 7.2.

Figure 8-18 Results of the VALUES statement with an SQLCODE-204 error before IBM i 7.2

Figure 8-19 shows the results with IBM i 7.2, in which the function is found with casting rules.

Figure 8-19 Results of the VALUES statement with the FUNCTION found with casting rules

8.4.9 Use of ARRAYs within scalar UDFs

IBM i 7.2 can create a type that is an array and use it in SQL procedures. In addition to this array support, an array can be used in scalar UDFs and in SQL procedures.

Example 8-14 shows an example of creating an array type and using it in a UDF.

Example 8-14 Array support in scalar UDFs

CREATE TABLE EMPPHONE(ID VARCHAR(6) NOT NULL,

PRIORITY INTEGER NOT NULL,

PHONENUMBER VARCHAR(12),

PRIMARY KEY (ID, PRIORITY));

INSERT INTO EMPPHONE VALUES('000001', 1, '03-1234-5678');

INSERT INTO EMPPHONE VALUES('000001', 2, '03-9012-3456');

INSERT INTO EMPPHONE VALUES('000001', 3, '03-7890-1234');

CREATE TYPE PHONELIST AS VARCHAR(12) ARRAY[10];

CREATE OR REPLACE FUNCTION getPhoneNumbers (EmpID CHAR(6)) RETURNS VARCHAR(130)

BEGIN

DECLARE numbers PHONELIST;

DECLARE i INTEGER;

DECLARE resultValue VARCHAR(130);

SELECT ARRAY_AGG(PHONENUMBER ORDER BY PRIORITY) INTO numbers

FROM EMPPHONE

WHERE ID = EmpID;

SET resultValue = '';

SET i = 1;

WHILE i <= CARDINALITY(numbers) DO

SET resultValue = TRIM(resultValue) ||','|| TRIM(numbers[i]);

SET i = i + 1;

END WHILE;

RETURN TRIM(L ',' FROM resultValue);

END;

VALUES(getPhoneNumbers('000001'));

Figure 8-20 shows the results of creating an array type and using it in a UDF.

Figure 8-20 Results of a scalar UDF that uses an array

8.4.10 Built-in global variables

New built-in global variables are supported in IBM i 7.2, as shown in Table 8-4. These variables are referenced-purpose-only variables and maintained by DB2 for i automatically. These variables can be referenced anywhere a column name can be used. Global variables fit nicely into view definitions and RCAC masks and permissions.

Table 8-4 DB2 built-in global variables that are introduced in IBM i 7.2

|

Variable name

|

Schema

|

Data type

|

Size

|

|

CLIENT_IPADDR

|

SYSIBM

|

VARCHAR

|

128

|

|

CLIENT_HOST

|

SYSIBM

|

VARCHAR

|

255

|

|

CLIENT_PORT

|

SYSIBM

|

INTEGER

|

-

|

|

PACKAGE_NAME

|

SYSIBM

|

VARCHAR

|

128

|

|

PACKAGE_SCHEMA

|

SYSIBM

|

VARCHAR

|

128

|

|

PACKAGE_VERSION

|

SYSIBM

|

VARCHAR

|

64

|

|

ROUTINE_SCHEMA

|

SYSIBM

|

VARCHAR

|

128

|

|

ROUTINE_SPECIFIC_NAME

|

SYSIBM

|

VARCHAR

|

128

|

|

ROUTINE_TYPE

|

SYSIBM

|

CHAR

|

1

|

|

JOB_NAME

|

QSYS2

|

VARCHAR

|

28

|

|

SERVER_MODE_JOB_NAME

|

QSYS2

|

VARCHAR

|

28

|

Example 8-15 shows an example of a SELECT statement querying some client information.

Example 8-15 Example of a SELECT statement to get client information from global variables

SELECT SYSIBM.client_host AS CLIENT_HOST,

SYSIBM.client_ipaddr AS CLIENT_IP,

SYSIBM.client_port AS CLIENT_PORT

FROM SYSIBM.SYSDUMMY1

As for getting client information, TCP/IP services in QSYS2, which were introduced in

IBM i 7.1, can be also used, as shown in Example 8-16.

IBM i 7.1, can be also used, as shown in Example 8-16.

Example 8-16 Get client information from TCP/IP services in QSYS2

SELECT * FROM QSYS2.TCPIP_INFO

For more information about DB2 built-in global variables, see IBM Knowledge Center:

8.4.11 Expressions in PREPARE and EXECUTE IMMEDIATE statements

In IBM i 7.2, expressions can be used in the PREPARE and EXECUTE IMMEDIATE statements in addition to variables.

Example 8-17 and Example 8-18 are the statements for getting the information about advised indexes from QSYS2 and putting them into a table in QTEMP. Example 8-17 is an example for before IBM i 7.2 and Example 8-18 is the example for IBM i 7.2. With this enhancement, many SET statements can be eliminated from the generation of the WHERE clause.

Example 8-17 Example of EXECUTE IMMEDIATE with concatenating strings before IBM i 7.2

SET INSERT_STMT = 'INSERT INTO QTEMP.TMPIDXADV SELECT * FROM

QSYS2.CONDENSEDINDEXADVICE WHERE ';

IF (P_LIBRARY IS NOT NULL) THEN

SET WHERE_CLAUSE = 'TABLE_SCHEMA = ''' CONCAT

RTRIM(P_LIBRARY) CONCAT ''' AND ';

ELSE

SET WHERE_CLAUSE = '';

END IF;

IF (P_FILE IS NOT NULL) THEN

SET WHERE_CLAUSE = WHERE_CLAUSE CONCAT ' SYSTEM_TABLE_NAME = ''' CONCAT

RTRIM(P_FILE) CONCAT ''' AND ';

END IF;

IF (P_TIMES_ADVISED IS NOT NULL) THEN

SET WHERE_CLAUSE = WHERE_CLAUSE CONCAT ' TIMES_ADVISED >= ' CONCAT

P_TIMES_ADVISED CONCAT ' AND ';

END IF;

IF (P_MTI_USED IS NOT NULL) THEN

SET WHERE_CLAUSE = WHERE_CLAUSE CONCAT 'MTI_USED >= ' CONCAT

P_MTI_USED CONCAT ' AND ';

END IF;

IF (P_AVERAGE_QUERY_ESTIMATE IS NOT NULL) THEN

SET WHERE_CLAUSE = WHERE_CLAUSE CONCAT ' AVERAGE_QUERY_ESTIMATE >= ' CONCAT

P_AVERAGE_QUERY_ESTIMATE CONCAT ' AND ';

END IF;

SET WHERE_CLAUSE = WHERE_CLAUSE CONCAT ' NLSS_TABLE_NAME = ''*HEX'' ';

SET INSERT_STMT = INSERT_STMT CONCAT WHERE_CLAUSE;

EXECUTE IMMEDIATE INSERT_STMT;

Example 8-18 Example of EXECUTE IMMEDIATE with concatenating strings for IBM i 7.2

EXECUTE IMMEDIATE 'INSERT INTO QTEMP.TMPIDXADV SELECT * FROM

QSYS2.CONDENSEDINDEXADVICE WHERE ' CONCAT

CASE WHEN P_LIBRARY IS NOT NULL THEN

' TABLE_SCHEMA = ''' CONCAT RTRIM(P_LIBRARY) CONCAT ''' AND '

ELSE '' END CONCAT

CASE WHEN P_FILE IS NOT NULL THEN

' SYSTEM_TABLE_NAME = ''' CONCAT RTRIM(P_FILE) CONCAT ''' AND '

ELSE '' END CONCAT

CASE WHEN P_TIMES_ADVISED IS NOT NULL THEN

' TIMES_ADVISED >= ' CONCAT P_TIMES_ADVISED CONCAT ' AND '

ELSE '' END CONCAT

CASE WHEN P_MTI_USED IS NOT NULL THEN

' MTI_USED >= ' CONCAT P_MTI_USED CONCAT ' AND '

ELSE '' END CONCAT

CASE WHEN P_AVERAGE_QUERY_ESTIMATE IS NOT NULL THEN

' AVERAGE_QUERY_ESTIMATE >= ' CONCAT P_AVERAGE_QUERY_ESTIMATE CONCAT ' AND '

ELSE '' END CONCAT

' NLSS_TABLE_NAME = ''*HEX'' ';

8.4.12 Autonomous procedures

In IBM i 7.2, an autonomous procedure was introduced. An autonomous procedure is run in a unit of work that is independent from the calling application. A new AUTONOMOUS option is supported in the CREATE PROCEDURE and ALTER PROCEDURE statements.

By specifying a procedure as autonomous, any process in that procedure can be performed regardless of the result of the invoking transaction.

Example 8-19 shows an example of creating an autonomous procedure that is intended to write an application log to a table. In this example, procedure WriteLog is specified as AUTONOMOUS.

Example 8-19 Autonomous procedure

CREATE TABLE TRACKING_TABLE (LOGMSG VARCHAR(1000),

LOGTIME TIMESTAMP(12));

COMMIT;

CREATE OR REPLACE PROCEDURE WriteLog(loginfo VARCHAR(1000))

AUTONOMOUS

BEGIN

INSERT INTO TRACKING_TABLE VALUES(loginfo, current TIMESTAMP(12));

END;

CALL WriteLog('*****INSERTION 1 START*****');

INSERT INTO EMPPHONE VALUES('000002', 1, '03-9876-5432');

CALL WriteLog('*****INSERTION 1 END*****');

CALL WriteLog('*****INSERTION 2 START*****');

INSERT INTO EMPPHONE VALUES('000002', 2, '03-8765-4321');

CALL WriteLog('*****INSERTION 2 END*****');

/**roll back insertions**/

ROLLBACK;

SELECT * FROM EMPPHONE WHERE ID = '000002';

SELECT * FROM TRACKING_TABLE;

Figure 8-21 show the results of creating an array type and using it in a UDF.

Figure 8-21 Results of an AUTONOMOUS procedure

For more information about AUTONOMOUS, see the IBM Knowledge Center:

8.4.13 New SQL TRUNCATE statement

A new SQL TRUNCATE statement deletes all rows from a table. This statement can be used in an application program and also interactively.

The TRUNCATE statement has the following additional functions:

•DROP or REUSE storage

•IGNORE or RESTRICT when delete triggers are present

•CONTINUE or RESTART identity values

•IMMEDIATE, which performs the operation without commit, even if it is running under commitment control

Figure 8-22 shows the syntax of the TRUNCATE statement.

Figure 8-22 Syntax of the TRUNCATE statement

For more information about the TRUNCATE statement, see IBM Knowledge Center:

8.4.14 New LPAD() and RPAD() built-in functions

Two new built-in functions, LPAD() and RPAD(), are introduced in IBM i 7.2. These functions return a string that is composed of strings that is padded on the left or right.

Example 8-20 shows an example of the LPAD() built-in function. This example assumes that the CUSTOMER table, which has a NAME column with VARCHAR(15), contains the values of Chris, Meg, and John.

Example 8-20 LPAD() built-in function

CREATE TABLE CUSTOMER(CUSTNO CHAR(6), NAME VARCHAR(15));

INSERT INTO CUSTOMER VALUES('000001', 'Chris');

INSERT INTO CUSTOMER VALUES('000002', 'Meg');

INSERT INTO CUSTOMER VALUES('000003', 'John');

SELECT LPAD(NAME, 15, '.') AS NAME FROM CUSTOMER;

Figure 8-23 show the results of the SELECT statement, which pads out a value on the left with periods.

Figure 8-23 Results of the SELECT statement with LPAD()

Example 8-21 shows the example of RPAD() built-in function.

Example 8-21 SELECT statement with RPAD()

SELECT RPAD(NAME, 10, '.') AS NAME FROM CUSTOMER;

Opposite to LPAD(), the results of the SELECT statement, which is shown in Figure 8-24, pads out a value on the right with periods. Note that 10 is specified as the length parameter in RPAD() so that the result is returned as a 10-digit value.

Figure 8-24 Results of the SELECT statement with RPAD()

Both LPAD() and RPAD() can be used on single-byte character set (SBCS) data and double-byte character set (DBCS) data, such as Japan Mix CCSID 5035. DBCS characters can also be specified as a pad string, as can SBCS characters.

Figure 8-25 shows an example of LPAD() for DBCS data within a column that is defined as VARCHAR(15) CCSID 5035 and aligned each row at the right end. A double-byte space (x’4040’ in CCSID 5035) is used as a pad string in this example. As shown in Figure 8-25, specifying DBCS data as a pad string is required for aligning DBCS data at the right or left end.

Figure 8-25 Results of an example of LPAD() for DBCS data

For more information about LPAD() and RPAD(), including considerations when using Unicode, DBCS, or SBCS/DBCS mixed data, see IBM Knowledge Center:

•LPAD()

•RPAD()

8.4.15 Pipelined table functions

Pipelined table functions provide the flexibility to create programmatically virtual tables that are greater than what the SELECT or CREATE VIEW statements can provide.

Pipelined table functions can pass the result of an SQL statement or process and return the result of UDTFs. Before IBM i 7.2 TR1, creating an external UDTF is required for obtaining the same result as the pipelined table function.

Example 8-22 shows an example of creating a pipeline SQL UDTF that returns a table of CURRENT_VALUE from QSYS2.SYSLIMITS.

Example 8-22 Example of pipeline SQL UDTF

CREATE FUNCTION producer()

RETURNS TABLE (largest_table_sizes INTEGER)

LANGUAGE SQL

BEGIN

FOR LimitCursor CURSOR FOR

SELECT CURRENT_VALUE

FROM QSYS2.SYSLIMITS

WHERE SIZING_NAME = 'MAXIMUM NUMBER OF ALL ROWS'

ORDER BY CURRENT_VALUE DESC

DO

PIPE (CURRENT_VALUE);

END FOR;

RETURN;

END;

Example 8-23 shows an example of creating an external UDTF that returns the same result of a pipeline SQL UDTF, as shown in Example 8-22. These examples show that pipelined functions allow the flexibility to code more complex UDTFs purely in SQL without having to build and manage a compiled program or service program.

Example 8-23 Example of external UDTF

CRTSQLCI OBJ(applib/producer) SRCFILE(appsrc/c80) COMMIT(*NONE) OUTPUT(*PRINT) OPTION(*NOGEN)

CRTCMOD MODULE(applib/producer) SRCFILE(qtemp/qsqltemp) TERASPACE(*YES) STGMDL(*INHERIT)

CRTSRVPGM SRVPGM(applib/udfs) MODULE(applib/producer) EXPORT(*ALL) ACTGRP(*CALLER)

CREATE FUNCTION producer() RETURNS TABLE (int1 INTEGER) EXTERNAL NAME applib.udfs(producer) LANGUAGE C PARAMETER STYLE SQL

Pipelined table functions can be used in the following cases:

•UDTF input parameters

•Ability to handle errors and warnings

•Application logging

•References to multiple databases in a single query

•Customized join behavior

These table functions are preferred in the case where only a subset of the result table is consumed in big data, analytic, and performance scenarios, rather than building a temporary table. Using pipelined table functions provides memory savings and better performance instead of creating a temporary table.

For more information about pipelined table functions, see the following website:

8.4.16 Regular expressions

A regular expression in an SQL statement is supported by IBM i 7.2. A regular expression is one of the ways of expressing a collection of strings as a single string and is used for specifying a pattern for a complex search.

Table 8-5 shows the built-in functions and predicates for regular expressions in DB2 for i.

Table 8-5 Function or predicate for regular expressions

|

Function or predicate

|

Description

|

|

REGEXP_LIKE predicate

|

Searches for a regular expression pattern in a string and returns True or False.

|

|

REGEXP_COUNT

REGEXP_MATCH_COUNT

|

Returns a count of the number of times that a pattern is matched in a string.

|

|

REGEXP_INSTR

|

Returns the starting or ending position of the matched substring.

|

|

REGEXP_SUBSTR

REGEXP_EXTRACT

|

Returns one occurrence of a substring of a string that matches the pattern.

|

|

REGEXP_REPLACE

|

Returns a modified version of the source string where occurrences of the pattern found in the source string are replaced with the specified replacement string.

|

Example 8-24 shows an example of a regular expression in a pipelined table function that searches and returns URL candidates from a given string.

Example 8-24 Example of a regular expression in a pipelined table function

CREATE OR REPLACE FUNCTION FindHits(v_search_string CLOB(1M), v_pattern varchar(32000) DEFAULT '(w+.)+((org)|(com)|(gov)|(edu))')

RETURNS TABLE (website_reference varchar(512))

LANGUAGE SQL

BEGIN

DECLARE V_Count INTEGER;

DECLARE LOOPVAR INTEGER DEFAULT 0;

SET V_Count = REGEXP_COUNT(v_search_string, v_pattern,1,'i');

WHILE LOOPVAR < V_Count DO

SET LOOPVAR = LOOPVAR + 1;

PIPE(values(REGEXP_SUBSTR(v_search_string,v_pattern, 1, LOOPVAR, 'i')));

END WHILE;

RETURN;

END;

SELECT * FROM TABLE(FindHits('Are you interested in any of these colleges: abcd.EDU or www.efgh.Edu. We could even visit WWW.ijkl.edu if we have time.')) A;

For more information about regular expressions, see the following website:

8.4.17 JOB_NAME and SERVER_MODE_JOB_NAME built-in global variables

New built-in global variables JOB_NAME and SERVER_MODE_JOB_NAME are supported by IBM i 7.2. JOB_NAME contains the name of the current job and SERVER_MODE_JOB_NAME contains the name of the job that established the SQL server mode connection.

Each of these global variables has the following characteristics:

•Read-only, with values maintained by the system.

•Type is VARCHAR(28).

•Schema is QSYS2.

•Scope of this global variable is session.

If there is no server mode connection, the value of SERVER_MODE_JOB_NAME is NULL. This means that SERVE_MODE_JOB_NAME is valid for only QSQSRVR and if it referenced outside of a QSQSRVR job, the NULL value is returned.

For more information about these global variables, see the following links:

•IBM i Technology Updates:

•IBM Knowledge Center:

8.4.18 RUNSQL control of output listing

The parameters that are shown in Figure 8-26 are now available in the Run SQL (RUNSQL) CL command. The RUNSQL CL command can be used to run a single SQL statement. These new parameters work the same as described in the RUNSQLSTM CL command.

|

Run SQL (RUNSQL)

Type choices, press Enter.

Source listing options . . . . . OPTION *NOLIST

Print file . . . . . . . . . . . PRTFILE QSYSPRT

Library . . . . . . . . . . . *LIBL

Second level text . . . . . . . SECLVLTXT *NO

|

Figure 8-26 New parameters on the RUNSQL CL command

For more information about the RUNSQL CL command, see the following website:

8.4.19 LOCK TABLE ability to target non-*FIRST members

The LOCK TABLE SQL statement is enhanced to allow applications to target specific members within a multiple-member physical file. An application can use the Override Database File (OVRDBF) or the CREATE ALIAS SQL statements to identify the non-*FIRST member.

For more information about LOCK TABLE SQL statement, see IBM Knowledge Center:

8.4.20 QUSRJOBI() retrieval of DB2 built-in global variables

The QUSRJOBI() API JOBI0900 format is extended to allow for direct access to the following DB2 for i built-in global variable values that are mentioned in 8.4.10, “Built-in global variables” on page 351:

•CLIENT_IPADDR

•CLIENT_HOST

•CLIENT_PORT

•ROUTINE_TYPE

•ROUTINE_SCHEMA

•ROUTINE_NAME

For more information about this enhancement, including the position of each value in JOBI0900, see the following website:

8.4.21 SQL functions (user-defined functions and user-defined table functions) parameter limit

The maximum number of parameters for user-defined functions and user-defined table functions was increased from 90 to 1024. Also, the maximum number of returned columns was increased from (125 minus the number of parameters) to (1025 minus the number of parameters).

8.4.22 New binary scalar functions

New built-in scalar functions were introduced in IBM i 7.2 to support interaction with Universal Unique Identifiers (UUIDs) across distribute systems:

•VARBINARY_FORMAT scalar function: This scalar function converts character data and returns a binary string.

•VARCHAR_FORMAT_BINARY scalar function: This scalar function converts binary data and returns a character string.

Both scalar functions can specify a format-string that is used to interpret a given expression and then convert from or into the UUIDs standard format.

For more information, see IBM Knowledge Center:

8.4.23 Adding and dropping partitions spanning DDS logical files

In previous IBM i versions, if you wanted to add a partition or drop a partition of a DDS logical file, you had to delete the logical file or all members in that logical file had to be removed before running the ALTER TABLE statement.

In IBM i 7.2, the ALTER TABLE ADD PARTITION and DROP PARTITION statements allow spanning of DDS logical files. If the DDS logical file was created over all existing partitions, DB2 for i manages the automatic re-creation of the logical file to compensate for removed partitions and to include new partitions.

Figure 8-27 shows the ALTER TABLE ADD PARTITON support for spanning DDS logical files.

Figure 8-27 Spanning DDS logical files

8.4.24 Direct control of system names for global variables

The optional clause FOR SYSTEM NAME was added to the CREATE VARIABLE SQL statement. The FOR SYSTEM NAME clause directly defines the name for these objects.

In Example 8-25, LOC is the system-object-identifier, which is assigned as a system object name instead of an operating system-generated one. However, selecting this system-object-identifier has some considerations:

•The system name must not be the same as any global variable already existing on the same system.

•The system name must be unqualified.

Example 8-25 Example of a CREATE VARIABLE SQL statement using the FOR SYSTEM NAME clause

CREATE VARIABLE MYSCHEMA.MY_LOCATION FOR SYSTEM NAME LOC CHAR(20) DEFAULT (select LOCATION from MYSCHEMA.locations where USER_PROFILE_NAME = USER);

For more information about this topic, see the IBM Knowledge Center:

8.4.25 LOCK TABLE ability to target non-FIRST members

The LOCK TABLE SQL statement is enhanced to allow applications to target specific members within a multiple-member physical file. The application can use the Override Database File (OVRDBF) command or the CREATE ALIAS SQL statement to identify the non-*FIRST member.

8.5 DB2 for i services and catalogs

DB2 for i now allows programmers to use SQL as a query language and a traditional programming language. Over time, the SQL language has gained new language elements that allow traditional programming patterns. Using UDFs, stored procedures, triggers, and referential integrity support, it is now easy to keep part of the programming logic encapsulated within the database itself, reducing the complexity of programming in third-generation programming languages, such as ILE RPG, ILE C/C++, ILE COBOL, and Java.

Moreover, DB2 for i provides a service that allows programmers and system administrators to access system information by using the standard SQL interface. This allows programming and system management to be managed in an easier way.

The following topics are covered in this section:

8.5.1 DB2 for i catalogs

DB2 for i catalogs contain information about DB2 related objects, such as tables, indexes, schemas, columns, fields, labels, and views.

There are three classes of catalog views:

•DB2 for i catalog tables and views (QSYS2)

The catalog tables and view contain information about all tables, parameters, procedures, functions, distinct types, packages, XSR objects, views, indexes, aliases, sequences, variables, triggers, and constraints in the entire relational database.

•ODBC and JDBC catalog views (SYSIBM)

The ODBC and JDBC catalog views are designed to satisfy ODBC and JDBC metadata API requests.

•ANS and ISO catalog views (QSYS2)

The ANS and ISO catalog views are designed to comply with the ANS and ISO SQL standard. These views will be modified as the ANS and ISO standard is enhanced or modified.

|

Note: IBM i catalogs contain tables or views that are related only to the IBM i operating system.

|

DB2 for i catalogs can be grouped by related objects, as shown in the following tables.

Table 8-6 lists the catalogs.

Table 8-6 Catalogs

|

Name

|

Catalog type

|

Description

|

|

SYSCATALOGS

|

DB2

|

Information about relational database

|

|

INFORMATION_SCHEMA_CATALOG_NAME

|

ANS/ISO

|

Information about relational database

|

Table 8-7 lists the schemas.

Table 8-7 Schemas

|

Name

|

Catalog type

|

Description

|

|

SYSSCHEMAS

|

DB2

|

Information about schemas

|

|

SQLSCHEMAS

|

ODBC/JDBC

|

Information about schemas

|

|

SCHEMATA

|

ANS/ISO

|

Statistical information about schemas

|

Table 8-8 lists the database support.

Table 8-8 Database support

|

Name

|

Catalog type

|

Description

|

|

SQL_FEATURES

|

ANS/ISO

|

Information about the feature that is supported by the database manager

|

|

SQL_LANGUAGES

|

ANS/ISO

|

Information about the supported languages

|

|

SQL_SIZING

|

ANS/ISO

|

Information about the limits that are supported by the database manager

|

|

CHARACTER_SETS

|

ANS/ISO

|

Information about supported CCSIDs

|

Table 8-9 lists the tables, views, and indexes.

Table 8-9 Tables, views, and indexes

|

Name

|

Catalog type

|

Description

|

|

SYSCOLUMS

|

DB2

|

Information about column attributes

|

|

SYSCOLUMS2

|

DB2

|

Information about column attributes

|

|

SYSFIELDS

|

DB2

|

Information about field procedures

|

|

SYSINDEXES

|

DB2

|

Information about indexes

|

|

SYSKEYS

|

DB2

|

Information about indexes keys

|

|

SYSTABLEDEP

|

DB2

|

Information about materialized query table dependencies

|

|

SYSTABLES

|

DB2

|

Information about tables and views

|

|

SYSVIEWDEP

|

DB2

|

Information about view dependencies on tables

|

|

SYSVIEWS

|

DB2

|

Information about definition of a view

|

|

SQLCOLUMNS

|

ODBC/JDBC

|

Information about column attributes

|

|

SQLSPECIALCOLUMNS

|

ODBC/JDBC

|

Information about the columns of a table that can be used to uniquely identify a row

|

|

SQLTABLES

|

ODBC/JDBC

|

Information about tables

|

|

COLUMNS

|

ANS/ISO

|

Information about columns

|

|

TABLES

|

ANS/ISO

|

Information about tables

|

|

VIEWS

|

ANS/ISO

|

Information about views

|

Table 8-10 lists the constraints.

Table 8-10 Constraints

|

Name

|

Catalog type

|

Description

|

|

SYSCHKCST

|

DB2

|

Information about check constraints

|

|

SYSCST

|

DB2

|

Information about all constraints

|

|

SYSCSTCOL

|

DB2

|

Information about columns referenced in a constraint

|

|

SYSCSTDEP

|

DB2

|

Information about constraint dependencies on tables

|

|

SYSKEYCST

|

DB2

|

Information about unique, primary, and foreign keys

|

|

SYSREFCST

|

DB2

|

Information about referential constraints

|

|

SQLFOREIGNKEYS

|

ODBC/JDBC

|

Information about foreign keys

|

|

SQLPRIMARYKEYS

|

ODBC/JDBC

|

Information about primary keys

|

|

CHECK_CONSTRAINTS

|

ANS/ISO

|

Information about check constraints

|

|

REFERENTIAL_CONSTRAINTS

|

ANS/ISO

|