Chapter 1

Interdomain Routing and Internet Multicast

This chapter explains the fundamental requirements for interdomain multicast and the three pillars of interdomain design: the control plane for source identification, the control plane for receiver identification, and the downstream control plane.

Introduction to Interdomain Multicast

Applications may require support for multicasting over large and diverse networks, such as the Internet. It is possible that a sender may exist on one side of a diverse network, far away from potential receivers. Multicast receivers could exist on any network, including networks that are altogether foreign to the sender’s network, being under completely different administrative control with different policies governing multicast forwarding.

In such scenarios, there is no reasonable expectation that all Layer 3 devices in the forwarding path will share similar configurations or policies. This is certainly the reality of multicast applications that use the Internet. Therefore, additional protocols and configuration outside basic multicast transport are required to provide internetwork multicast service. Why is this the case? Aren’t large internetworks, such as the Internet, using the same Internet Protocol (IP) for multicasting? If each network is administered using different rules, how does anything on the Internet work? As discussed in the book IP Multicast, Volume 1, the de facto standard forwarding protocol for IP Multicast is Protocol Independent Multicast (PIM). If PIM is universal, why are different policies required? A brief introduction to Internet principles will answer these questions and help you understand why additional consideration is needed when designing multicast applications across internetworks.

Internet Protocol was created as a “best effort” service. Even when IP was expanded to enable the World Wide Web (WWW) and multinetwork connectivity, best effort was a universal maxim. This maxim still exists; it dictates the forwarding behavior of today’s Internet and also, therefore, that of any other large multidomain network. Best effort in this context means that as IP traffic passes from one network to the next, it is assumed that each transit network is properly configured for optimal forwarding to any appropriate destinations. There are no guarantees of optimal forwarding—or even that packets will make it to the final destination at all.

Note: The concept of best-effort forwarding is universal and applies to all IP traffic: unicast, multicast, and broadcast. This introduction briefly explores this concept, which further exposes the need for additional forwarding mechanisms for multicast traffic across diverse networks. While basic in nature, this review establishes the foundation for multicast forwarding across internetworks. An advanced understanding of unicast Internet forwarding, including the use of Border Gateway Protocol (BGP), is assumed throughout this chapter.

If you examine the Internet from a macro perspective, you see that it is essentially a mesh of connections between many disparate networks. Typically, Internet service providers (ISPs) connect to each other for transit and/or peering to provide interconnection to other service providers or customers.

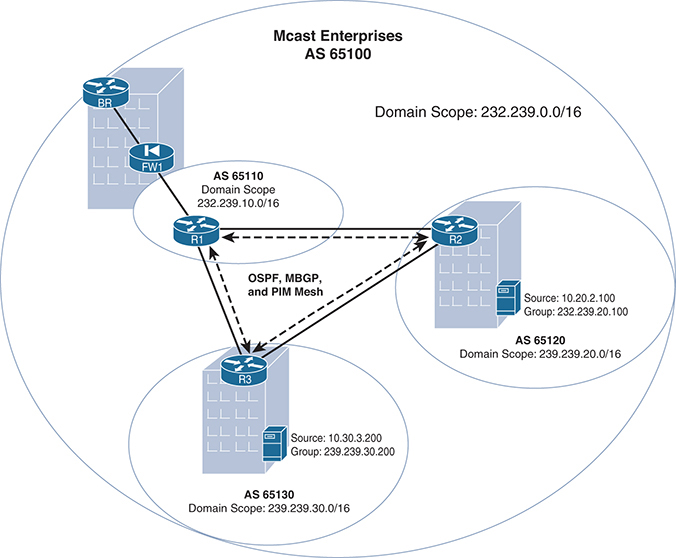

End customers include all manner of connections, including homes, cellular networks, small and medium-sized businesses, research institutions, hospitals, governments, enterprise businesses, and others. Figure 1-1 shows a small, isolated example of how this interconnectivity works, using a fictional company called Mcast Enterprises.

Figure 1-1 Mcast Enterprises Interconnectivity

Each of the network entities (surrounded by an oval) shown in Figure 1-1 is known as an autonomous system (AS)—that is, a network that has administrative and operational boundaries, with clear demarcations between itself and any other network AS. Like IP addresses, autonomous systems are numbered, and the assignment of numbers is controlled by the Internet Assigned Numbers Authority (IANA). Figure 1-2 shows the previous Internet example network represented as simple AS bubbles using private autonomous system numbers (ASNs).

Figure 1-2 The Mcast Enterprises Network as a System of Connected Autonomous Systems

Note: ASNs, as defined by the IETF, are public, just like IP addresses. However, as with IP addresses, there is a private number range that is reserved for use in non-public networks. The standard 16-bit private ASN range is 64512–65535, which is defined by RFC 6996 (a 2013 update to RFC 1930). Even though Internet functions may be discussed, all numbers used in this text (for IP addressing, ASNs, and multicast group numbers) are private to prevent confusion with existing Internet services and to protect public interest.

Some routing information is shared between the interconnected ASs to provide a complete internetwork picture. Best-effort forwarding implies that as routers look up destination information and traffic transits between ASs, each AS has its own forwarding rules. To illustrate this concept, imagine that a home user is connected to ISP Red (AS 65001) and sends an IP web request to a server within Mcast Enterprises (AS 65100). The enterprise does not have a direct connection to ISP Red. Therefore, ISP Red must forward the packets to ISP Blue, and ISP Blue can then forward the traffic to the enterprise AS with the server. ISP Red knows that ISP Blue can reach the enterprise web server at address 10.10.1.100 because ISP Blue shared that routing information with all the ASs it is connected to. ISP Red does not control the network policy or functions of ISP Blue and must trust that the traffic can be successfully passed to the server. In this situation, ISP Blue acts as a transit network. Figure 1-3 illustrates this request.

Figure 1-3 Best-Effort Forwarding from One AS to Another

Some protocols are designed to make best-effort forwarding between ASs more precise. This set of protocols is maintained by the IETF, an international organization that governs all protocols related to IP forwarding, including PIM and BGP. The IETF—like the Internet—is an open society in which standards are formed collaboratively. This means there is no inherent administrative mandate placed on network operating system vendors or networks connecting to the Internet to follow protocol rules precisely or even in the same way.

This open concept is one of the more miraculous and special characteristics of the modern Internet. Networks and devices that wish to communicate across network boundaries should use IETF-compliant software and configurations. Internet routers that follow IETF protocol specifications should be able to forward IP packets to any destination on any permitted IP network in the world. This assumes that every Internet-connected router shares some protocols with its neighbors, and those protocols are properly implemented (as discussed further later in this chapter); each router updates neighbor ASs with at least a summary of the routes it knows about. This does not assume that every network is configured or administered in the same way.

For example, an AS is created using routing protocols and policies as borders and demarcation points. In fact, routing protocols are specifically built for these two purposes. Internal routing is handled by an Interior Gateway Protocol (IGP). Examples of IGPs include Open Shortest Path First (OSPF) and Intermediate System-to-Intermediate System (IS-IS). Routers use a chosen IGP on all the routed links within the AS. When routing protocols need to share information with another AS, an External Gateway Protocol (EGP) is used to provide demarcation. This allows you to use completely separate routing policies and security between ASs that might be obstructive or unnecessary for internal links.

Border Gateway Protocol version 4 (BGPv4) is the EGP that connects all Internet autonomous systems together. Figure 1-4 shows an expanded view of the Mcast Enterprises connectivity from Figure 1-3, with internal IGP connections and an EGP link with ISP Blue. Mcast Enterprises shares a single summary route for all internal links to ISP Blue via BGP.

Figure 1-4 AS Demarcation: IGP Links Using OSPF and EGP Links Using BGP

The separation between internal (IGP) and external (EGP) routes provides several important benefits. The first is protection of critical internal infrastructure from outside influence, securing the internal routing domain. Both security and routing policies can be applied to the EGP neighborship with outside networks. Another clear benefit is the ability to better engineer traffic. Best-effort forwarding may be acceptable for Internet connections and internetwork routing. However, you definitely need more finite control over internal routes. In addition, if there are multiple external links that share similar routing information, you may want to control external path selection or influence incoming path selection—without compromising internal routing fidelity. Finally, you may choose to import only specific routes from external neighbors or share only specific internal routes with outside neighbors. Selective route sharing provides administrators control over how traffic will or will not pass through each AS.

Why is all this relevant to multicast? PIM is the IETF standard for Any-Source Multicast (ASM) and Source-Specific Multicast (SSM) in IP networks. Many people refer to PIM as a multicast routing protocol. However, PIM is unlike any IGP or EGP. It is less concerned with complex route sharing policy than with building loop-free forwarding topologies or trees. PIM uses the information learned from IP unicast routing protocols to build these trees. PIM networking and neighborships have neither an internal nor an external characteristic. PIM neighborships on a single router can exist between both IGP neighbors and EGP neighbors. If multicast internetworking is required between two ASs, PIM is a requirement. Without this relationship, a tree cannot be completed.

Crossing the administrative demarcation point from one AS to another means crossing into a network operating under a completely different set of rules and with potentially limited shared unicast routing information. Even when all the routers in two different networks are using PIM for forward multicasting, forming a forwarding tree across these networks using PIM alone is virtually impossible because Reverse Path Forwarding (RPF) information will be incomplete. In addition, it is still necessary to secure and create a prescriptive control plane for IP Multicast forwarding as you enter and exit each AS. The following sections explore these concepts further and discuss how to best forward multicast application traffic across internetworks and the Internet.

What Is a Multicast Domain? A Refresher

Before we really discuss interdomain multicast forwarding, let’s clearly define the characteristics of a multicast domain. Just like the unicast routing protocols OSPF, IS-IS, and EIGRP, PIM routers have the capability to dynamically share information about multicast trees. Most networks use only one IGP routing protocol for internal route sharing and routing table building, and there are some similarities between multicast domains and unicast domains.

When an IGP network is properly designed, routers in the network have the same routes in their individual routing information base (RIB). The routes may be summarized into larger entries on some routers—even as far as having only one summary (default) route on stub routers. Often times this process is controlled by the use of configured route sharing policy. Link-state routing protocols, such as IS-IS or OSPF, can use regions or areas to achieve summarization and route selection. The routing protocol dynamically provides the necessary routing information to all the segmented routers that need the information, allowing network architects to create boundaries between various portions of a network.

The deployed IGP also has a natural physical boundary. When the interface of an IGP router is not configured to send/receive IGP information, that interface bounds the IGP. This serves the purpose of preventing internal routing information from leaking to routers that should not or do not need internal information.

As discussed earlier in this chapter, routes between two autonomous systems are shared through the use of an EGP, and BGP is the EGP of the Internet. Most administrators configure BGP to share only essential IGP-learned routes with external routers so that only internal networks meant for public access are reachable by external devices. The routing process of the IGP is kept separate and secure from external influence. For most networks, the natural boundary of the internal routing domain lies between the IGP and the EGP of the network.

Multicast networks must also have boundaries. These boundaries may be drastically different from those of the underlying unicast network. Why? It is important to remember that IP Multicast networks are overlays on the IGP network, and most network routers can have only one PIM process. PIM uses the information found in the RIB from router to router to build both a local multicast forwarding tree and a networkwide forwarding tree. Let’s quickly review how this works.

As you know, when a router forwards unicast IP traffic, it is concerned only with the destination of the packet. The router receives a packet from an upstream device, reads the IP destination from the packet header, and then makes a path selection, forwarding the packet toward the intended destination. The RIB contains the destination information for the router to make the best forwarding decision. As explained in IP Multicast, Volume 1, multicast forwarding uses this same table but completely different logic to get packets from the source to multiple receivers on multiple paths. Receivers express interest in a multicast stream (a flow of IP packets) by subscribing to a particular group address. PIM tracks these active groups and subscribed receivers. Because receivers can request the stream from nearly any location in the network, there are many possible destination paths.

The multicast router needs to build a forwarding tree that includes a root at the source of the stream and branches down any paths toward joined receivers. The branches of the tree cannot loop back to the source, or the forwarding paradigm of efficiency will be entirely broken. PIM uses the RIB to calculate the source of packets rather than the destination. Forwarding of this nature, away from a source and toward receivers, with source route verification, is called Reverse Path Forwarding (RPF).

When a multicast packet arrives at a router, the first thing the router does is perform an RPF check. The IP source of the packet header and the interface on which it was received are compared to the RIB. If the RIB contains a proper best route to the source on the same interface on which the packet was received, the RPF check is successful. PIM then forwards that packet, as long as it has a proper tree constructed (or can construct a new tree) for the destination group.

If the required entries are not in the unicast RIB and the RPF table, the router drops any multicast packets to prevent a loop. The router doesn’t just make these RPF checks independently for every packet. RPF checks are performed by the PIM process on the router, and they are also used for building the multicast forwarding tree. If a packet fails the RPF check, the interface on which the packet is received is not added to any trees for the destination group. In fact, PIM uses RPF in almost all tree-building activities.

In addition, PIM as a protocol is independent of any unicast routing protocols or unicast forwarding. Proper and efficient multicast packet forwarding is PIM’s main purpose; in other words, PIM is designed for tree building and loop prevention. As of this writing, most PIM domains run PIM Sparse-Mode (PIM–SM). A quick review of PIM–SM mechanics provides additional aid in the exploration of multicast domains.

There are two types of forwarding trees in PIM–SM: the shortest-path tree (also known as a source tree) and the shared tree. The source tree is a tree that flows from the source (the root of the tree) to the receivers (the leaves) via the shortest (most efficient) network path. You can also think of the source as a server and the receivers as clients of that server. As mentioned earlier, clients must be connected to the Layer 3 network to be included in the tree. This allows the router to see the best path between the source and the receivers.

The subscribed clients become a “group,” and, in fact, clients use a multicast group address to perform the subscription. Internet Group Management Protocol (IGMP) is the protocol that manages the client subscription process. IGMP shares the IP group subscriptions with PIM. PIM then uses those groups to build the source tree and shares the tree information with other PIM neighbors.

Note: This review is only meant to be high level as it is germane to understanding why additional protocols are required for interdomain routing. If any of these topics are more than a simple review for you, you can find a deeper study of IGMP, PIM mechanics, and tree building in additional texts, such as IP Multicast, Volume 1.

Keep in mind that there may be many locations in a network that have a receiver. Any time a router has receivers that are reached by multiple interfaces, the tree must branch, and PIM must RPF check any received sources before adding forwarding entries to the router’s Multicast Forwarding Information Base (MFIB). A source tree in a multicast forwarding table is represented by an (S, G) entry (for source, group). The (S, G) entry contains RPF information such as the interface closest to the source and the interfaces in the path of any downstream receivers.

The branches in the entry are displayed as a list of outgoing interfaces, called an outgoing interface list (OIL). This is essentially how PIM builds the source tree within the forwarding table. Neighboring PIM routers share this information with each other so that routers will independently build proper trees, both locally and across the network. Example 1-1 shows what a completed tree with incoming interfaces and OILs look like on an IOS-XE router by using the show ip mroute command.

Example 1-1 Completed Trees

R1# show ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route,

x - VxLAN group

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.1.1.1), 2d01h/00:03:22, RP 192.168.100.100, flags: SJC

Incoming interface: Ethernet2/0, RPF nbr 10.1.5.1

Outgoing interface list:

Ethernet0/0, Forward/Sparse, 2d01h/00:03:22

(10.1.1.1, 239.1.1.1), 00:04:53/00:02:31, flags: T

Incoming interface: Ethernet1/0, RPF nbr 10.1.3.1

Outgoing interface list:

Ethernet0/0, Forward/Sparse, 00:04:53/00:03:22

It would be very difficult for every router to manage this process completely independently in a very large network, or if there were a great number of sources (which is a benefit of opting for a source tree multicast design that provides an optimal path for fast convergence). In addition, distribution of the PIM tree information could become very cumbersome to routers that are in the path of multiple source, receivers, or groups. This is especially true if sources only send packets between long intervals, consequently causing repeated table time-outs and then subsequent tree rebuilding and processing. The shared tree is the answer to this problem in the PIM–SM network, giving routers a measure of protocol efficiency.

The main function of the shared tree is to shift the initial tree-building process to a single router. The shared tree flows from this single router toward the receivers. An additional benefit of the shared tree occurs when clients are subscribed to a group but there is not yet any active source for the group. The shared tree allows each router in the path to maintain state for the receivers only so that reprocessing of the tree branches does not need to occur again when a source comes online. The central processing location of the shared tree is known as the rendezvous point (RP).

When a receiver subscribes to a group through IGMP, the PIM process on the local router records the join, and then RPF checks the receivers against the location of the RP. If the RP is not located in the same direction as the receiver, PIM creates a local tree with the RP as the root and the receiver-bound interfaces in the OIL as the leaves or branches. The shared tree is represented in the Multicast Routing Information Base (MRIB) and MFIB as a (*, G) entry. PIM shares this information with its neighbors in the direction of the RP.

When the source begins sending packets to a group, the source-connected router registers the source with the RP and forwards the packets to the RP via unicast tunneling. The RP acts as a temporary conduit to the receivers, with packets flowing down the shared tree toward the receivers from the RP. Meanwhile, the RP signals the source-connected router to begin building a source tree. PIM on the source-connected router builds the tree and then shares the (S, G) information with its neighbors. (For more on this concept, see IP Multicast, Volume 1.)

If a PIM neighbor is in the path of the multicast flow, the router joins the source tree while also sharing the information via PIM to its neighbors. Only the routers in the path must build the source tree, which improves processing efficiency. The RP is removed from any forwarding for the (S, G) once the source tree is complete, unless of course the RP happens to be in the path of the flow. (This process is very quick, taking only milliseconds on geographically small domains.) All routers in the path must use the same RP for each group in order for this to work. Each router maintains a table of group-to-RP mappings to keep RP information current. If any router in the path has a group mapping that is incongruent with that of its neighbor, the shared-tree construction fails. RP-to-group mappings are managed through either static RP configurations or through dynamic RP protocols, such as Cisco’s Auto-RP or the open standard protocol Bootstrap Router (BSR).

Figure 1-5 illustrates the differences between a source tree and the shared tree in a basic network.

Figure 1-5 Basic Source Tree versus Shared Tree

With this reminder and understanding of RPF and PIM mechanics, let’s establish that a PIM router must have three critical components to build a complete multicast forwarding tree. These three components must be present in every multicast domain and are the primary pillars of interdomain forwarding. Let’s call them the three pillars of interdomain design:

![]() The multicast control plane for source identification: The router must know a proper path to any multicast source, either from the unicast RIB or learned (either statically or dynamically) through a specific RPF exception.

The multicast control plane for source identification: The router must know a proper path to any multicast source, either from the unicast RIB or learned (either statically or dynamically) through a specific RPF exception.

![]() The multicast control plane for receiver identification: The router must know about any legitimate receivers that have joined the group and where they are located in the network.

The multicast control plane for receiver identification: The router must know about any legitimate receivers that have joined the group and where they are located in the network.

![]() The downstream multicast control plane and MRIB: The router must know when a source is actively sending packets for a given group. PIM–SM domains must also be able to build a shared tree from the local domain’s RP, even when the source has registered to a remote RP in a different domain.

The downstream multicast control plane and MRIB: The router must know when a source is actively sending packets for a given group. PIM–SM domains must also be able to build a shared tree from the local domain’s RP, even when the source has registered to a remote RP in a different domain.

So far, this chapter has covered a lot of basics—multicast 101, you might say. These basics are inherently fundamental to understanding the critical components of interdomain multicast forwarding. In order to route multicast packets using PIM, the following must occur: (1) Routers must be able to build proper forwarding trees (2) independent of any remote unicast routing processes and (3) regardless of the location of the source or (4) the receivers for a given multicast group. This includes instances where sources and receivers are potentially separated by many administrative domains.

Inside an AS, especially one with only a single multicast domain, network administrators could use many methods for fulfilling these four criteria. All of the network is under singular control, so there is no concern about what happens outside the AS. For example, a network administrator could deploy multiple routing processes to satisfy the first requirement. He or she could even use multiple protocols through the use of protocol redistribution. The sophisticated link-state protocols OSPF and IS-IS provide built-in mechanisms for very tightly controlling route information sharing. PIM routers, on the other hand, can share-tree state information with any PIM neighbors and have no similar structures, needing only to satisfy the four criteria. A PIM domain forms more organically through both PIM configuration and protocol interaction. This means there are many ways to define a multicast domain. In its simplest terms, a multicast domain could be the administrative reach of the PIM process. Such a domain would include any router that is configured for PIM and has a PIM neighbor(s), IGMP subscribed receivers, or connected sources. A multicast domain could mirror exactly the administrative domain or autonomous system of the local network.

To understand this concept, let’s look more closely at Mcast Enterprises, the fictional example from earlier in this chapter. Figure 1-6 shows Mcast Enterprises using a single AS, with one IGP and one companywide multicast domain that includes all IP Multicast groups (224.0.0.0/10). If there is no PIM neighborship with the border ISP (Router SP3-1 from Figure 1-1), the PIM domain ends naturally at that border.

In this case, the domain exists wherever PIM is configured, but what if PIM were configured between the AS border router (BR) of Mcast Enterprises and ISP Blue (SP3-1)? Wouldn’t the domain then encompass both autonomous systems?

Such a broad definition of a domain may not be very useful. If a domain encompasses any PIM-connected routers, a single multicast domain could extend across the global Internet! While that might be convenient for the purposes of multicast forwarding, it would not be secure or desirable.

A domain can also be defined by the potential reach of a multicast packet, encompassing all the paths between sources and receivers. However, to have all multicast sources and groups available to all routers within a given domain, even inside a single AS may not be the best design. It may also not be very efficient, depending on the locations of sources, receivers, and RPs.

Clearly, PIM networks can use very flexible definitions for domains. The scope and borders of a multicast domain are essentially wherever an administrator designs them to be. This means that multicast domains also need much tighter and more distinctly configured boundaries than their unicast counterparts. For example, organizations that source or receive multicast flows over the global Internet need a secure boundary between internal and external multicast resources, just like they have for unicast routing resources. After all, an Internet multicast application could potentially use RPF information or send/receive packets from nearly anywhere.

PIM Domain Design Types

Because the definitions of multicast domains are fluid, it is important to define those types of domains that make logical sense and those most commonly deployed. You also need to establish some guidelines and best practices around how best to deploy them. In almost all cases, the best design is the one that matches the particular needs of the applications running on the network. Using an application-centered approach means that administrators are free to define domains according to need rather than a set prescriptive methodology.

The primary focus of defining a domain is drawing borders or boundaries around the desired reach of multicast applications. To accomplish this, network architects use router configurations, IP Multicast groups and scoping, RP placement strategies, and other policy to define and create domain boundaries. If the multicast overlay is very simple, then the domain may also be very simple, even encompassing the entire AS (refer to Figure 1-6). This type of domain would likely span an entire IGP network, with universal PIM neighbor relationships between all IGP routers. A single RP could be used for all group mappings. In this type of domain, all multicast groups and sources would be available to all systems within the domain.

These types of domains with AS-wide scope are becoming more and more rare in practice. Application and security requirements often require tighter borders around specific flows for specific applications. An administrator could use scoping in a much more effective way.

Domains by Group, or Group Scope

In many cases, the best way to scope a domain is by application. It is best practice for individual applications to use different multicast groups across an AS. That means that you can isolate an application by group number and scope the domain by group number. This is perhaps the most common method of bounding a domain in most enterprise networks.

It is also very common to have numerous applications with similar policy requirements within a network. They may need the same security zoning, or the same quality of service (QoS), or perhaps similar traffic engineering. In these cases, you can use group scopes to accomplish the proper grouping of applications for policy purposes. For example, a local-only set of applications with a singular policy requirement could be summarized by a summary address. Two applications using groups 239.20.2.100 and 239.20.2.200 could be localized by using summary address 239.20.0.0/16. To keep these applications local to a specific geography, the domain should use a single RP or an Anycast RP pair for that specific domain.

Figure 1-7 shows just such a model—a domain scoping in the Mcast Enterprises network using a combination of geography and application type, as defined by a group scope. Assuming that this network is using PIM–SM, each domain must have at least one active RP mapped to the groups in the scope that is tied to the local region-specific application or enterprise-specific groups. (Operational simplicity would require separate nodes representing RPs for different scope.) In this case, R1, R2, and R3 are RPs/border routers for their respective domains.

Figure 1-7 Domain Scope Using Application and Geography Type

Chapter 5, “IP Multicast Design Considerations and Implementation,” in IP Multicast, Volume 1 explains domain scoping at length and provides additional examples of how to scope a domain based on application, geography, or other properties. Most IP Multicast problems that occur in networks happen because the networks haven’t been properly segmented. (This is actually often true of unicast networks as well.) A well-defined scope that is based on applications can be very helpful in achieving proper segmentation.

Domains by RP Scope

In some larger networks, the location and policy of the applications are of less concern than the resiliency of network resources. If there are many applications and the multicast network is very busy, RP resources could simply be overloaded. It may be necessary to scope on RP location rather than on application type or group.

Scoping by RP allows the network architect to spread multicast pathing resources across the network. It is not uncommon to use Anycast RP groupings to manage such large deployments. For example, with the network shown in Figure 1-7, instead of using each router as a single RP, all three routers could be grouped together for Anycast RP in a single large domain. Figure 1-8 shows an updated design for Mcast Enterprises for just such a scenario.

Figure 1-8 A Single Domain Scoped with Anycast RP

The advantage of this design is that no one RP can become a single point of failure, and there is geographic resistance to overutilization of RP resources. This design is common among enterprises with many applications, senders, and receivers spread out across large geographies. There is no limitation to the way in which scoping can occur, and multiple domains can overlap each other to achieve the best of both the RP domain scope and the group scope design methodologies.

Overlapping Domains and Subdomains

If the network design requires multiple types of domains and accompanying policies, it is likely that a hybrid design model is required. This type of design might include many multicast domains that overlap each other in certain places. There could also be sub-domains of a much larger domain.

Let’s look one more time at the Mcast Enterprises network, this time using a hybrid design (see Figure 1-9). In this case, an application is sourced at R2 with group 239.20.2.100. That application is meant to be a geographically local resource and should not be shared with the larger network. In addition, R2 is also connected to a source for group 239.1.2.200, which has an AS-wide scope.

Remember that each domain needs a domain-specific RP or RP pair. In this case, R2 is used as the local domain RP and R1 as the RP for an AS-wide scoped domain. Because the 239.20/16 domain is essentially a subset of the private multicast group space designated by 239.0.0.0/8, the 239.20/16 domain is a subdomain of the much larger domain that uses R1 as the RP. This design also uses two additional subdomains, with R1 as local RP and R3 as local RP.

For further domain resiliency, it would not be unreasonable to use R1 as a backup or Anycast RP for any of the other groups as well. In these cases, you would use very specific source network filters at the domain boundaries. Otherwise, you may want to look more closely at how to accomplish forwarding between these domains. Table 1-1 breaks down the scope and RP assignments for each domain and subdomain.

Table 1-1 Overlapping Domain Scopes for Figure 1-9

Domain |

RP Assignment |

Group Mapping/Scope |

Global domain |

R1 |

239.0.0.0/8 |

Subdomain 1 |

R1 |

239.10.0.0/16 |

Subdomain 2 |

R2 |

239.20.0.0/16 |

Subdomain 3 |

R3 |

239.30.0.0/16 |

In a hybrid design scenario, such as the example shown in Figure 1-9, it may be necessary to forward multicast traffic down a path that is not congruent with the unicast path; this is known as traffic engineering. In addition, Mcast Enterprises may have some multicast applications that require forwarding to the Internet, as previously discussed, or forwarding between the domains outlined in Figure 1-9, which is even more common. Additional protocols are required to make this type of interdomain forwarding happen.

Forwarding Between Domains

Now that you understand the three pillars of interdomain design and the types of domains typically deployed, you also need to meet these pillar requirements in order to forward traffic from one domain to another domain. If any of these elements are missing from the network design, forwarding between domains simply cannot occur. But how does a network build an MRIB for remote groups or identify sources and receivers that are not connected to the local domain?

Each domain in the path needs to be supplemented with information from all the domains in between the source and the receivers. There are essentially two ways to accomplish this: statically or dynamically. A static solution involves a network administrator manually entering information into the edge of the network in order to complete tree building that connects the remote root to the local branches. Consider, for example, two PIM–SM domains: one that contains the source of a flow and one that contains the receivers.

The first static tree-building method requires the source network to statically nail the multicast flow to the external interface, using an IGMP static-group join. Once this is accomplished, if it is a PIM–SM domain, the receiver network can use the edge router as either a physical RP or a virtual RP to which routers in the network can map groups and complete shared and source trees. This is shown in Figure 1-10, using the Mcast Enterprises network as the receiver network and ISP Blue as the source network. Both networks are using PIM–SM for forwarding.

When the edge router is used for RP services, it can pick up the join from the remote network and automatically form the shared and source trees as packets come in from the source network. If Mcast Enterprises does not wish to use the edge router as an RP but instead uses a centralized enterprise RP like R1, tree building will fail as the edge router will not have the shared-tree information necessary for forwarding. The second interdomain static tree-building method solves this problem by using a PIM dense-mode proxy (see Figure 1-11), which normally provides a proxy for connecting a dense-mode domain to a sparse-mode domain.

Static methods are fine for certain network situations. For example, a services company that has an extranet connection and unidirectional flows could create a simple interdomain solution like the ones shown previously. Not only is such a solution simple, it provides a clear demarcation between two domains, leaving each domain free to use any domain design type desired.

Every static configuration in networking also has weaknesses. If any scale to the solution is required, it is likely that these static methods simply will not work. For example, scaling statically across three or more domains requires that each flow be nailed up statically at each edge. With a large number of flows, this can become an administrative nightmare that grows exponentially with the number of connections and domains. In addition, the source domain will have almost no control over subscriptions and bandwidth usage of flows as they pass outside the source domain.

If scale and sophisticated flow management are required, dynamic information sharing for the three pillars is also required. Dynamic methods use existing IGP/EGP protocols in conjunction with three additional multicast-specific protocols that an architect can implement between domains to share information: Multicast BGP (MBGP), PIM, and Multicast Source Discovery Protocol (MSDP). The remainder of this chapter deals with the configuration and operation of these three protocols in a multidomain environment.

Autonomous System Borders and Multicast BGP

As discussed earlier, each Internet-connected organization has unique needs and requirements that drive the implementation of IETF protocol policies and configurations. A unique Internet-connected network, with singular administrative control, is commonly referred to as an autonomous system (AS). The Internet is essentially a collection of networks, with ASs connected together in a massive, global nexus. A connection between any two ASs is an administrative demarcation (border) point. As IP packets cross each AS border, a best-effort trust is implied; that is, the packet is forwarded to the final destination with reasonable service.

Each border-connecting router needs to share routing information through a common protocol with its neighbors in any other AS. This does not mean that the two border routers use the same protocols, configuration, or policy to communicate with other routers inside the AS. It is a near certain guarantee that an ISP will have a completely different set of protocols and configurations than would an enterprise network to which it provides service.

The AS border represents a policy- and protocol-based plane of separation between routing information that an organization wishes to make public and routing information that it intends to keep private. Routing protocols used within the AS are generally meant to be private.

The modern Internet today uses Border Gateway Protocol (BGP)—specifically version 4, or BGPv4—as the EGP protocol for sharing forwarding information between AS border routers. Any IP routing protocol could be used internally to share routes among intra-AS IP routers. Internal route sharing may or may not include routes learned by border routers via BGP. Any acceptable IP-based IGP can be used within the AS.

Figure 1-12 expands on the AS view and more clearly illustrates the demarcation between internal and external routing protocols. In this example, not only is there an AS demarcation between Mcast Enterprises and ISP Blue but Mcast Enterprises has also implemented a BGP confederation internally. Both AS border routers share an eBGP neighborship. All Mcast Enterprises routes are advertised by the BR to the Internet via SP3-1, the border router of ISP Blue. Because Mcast Enterprises is using a confederation, all routes are advertised from a single BGP AS, AS 65100. In this scenario, BR advertises a single summary prefix of 10.0.0.0/8 to the Internet via ISP Blue.

Note: BGP confederations are not required in this design. The authors added confederations in order to more fully illustrate multicast BGP relationships.

As mentioned earlier, the practice of best-effort networking also applies to internetworks that are not a part of the Internet. Large internetworks may wish to segregate network geographies for specific policy or traffic engineering requirements. Many enterprises use BGP with private AS numbers to accomplish this task. In some ways, this mirrors the best-effort service of the Internet but on a much smaller scale. However, what happens when multicast is added to tiered networks or interconnected networks such as these? Can multicast packets naturally cross AS boundaries without additional considerations?

Remember the three pillars of interdomain design. Autonomous system borders naturally create a problem for the first of these requirements: themulticast control plane for source identification. Remember that the router must know a proper path to any multicast source, either from the unicast RIB or learned (either statically or dynamically) through a specific RPF exception.

There is no RPF information from the IGP at a domain boundary. Therefore, if PIM needs to build a forwarding tree across a domain boundary, there are no valid paths on which to build an OIL. If any part of the multicast tree lies beyond the boundary, you need to add a static or dynamic RPF entry to complete the tree at the border router.

As discussed in IP Multicast, Volume 1, static entries are configured by using the ip mroute command in IOS-XE and NX-OS. For IOS-XR, you add a static entry by using the static-rpf command. However, adding static RPF entries for large enterprises or ISPs is simply not practical. You need a way to transport this information across multiple ASs, in the same way you transport routing information for unicast networks. Autonomous system border routers typically use BGP to engineer traffic between ASs. The IETF added specific, multiprotocol extensions to BGPv4 for this very purpose.

RFC 2283 created multiprotocol BGPv4 extensions (the most current RFC is RFC 4760, with updates in RFC 7606). This is often referred to as Multiprotocol Border Gateway Protocol (MBGP). MBGP uses the same underlying IP unicast routing mechanics inherent in BGPv4 to forward routing and IP prefix information about many other types of protocols. Whereas BGPv4 in its original form was meant to carry routing information and updates exclusively for IPv4 unicast, MBGP supports IPv6, multicast, and label-switched virtual private networks (VPNs) (using MPLS VPN technology, as discussed in Chapter 3, “Multicast MPLS VPNs”), and other types of networking protocols as well. In the case of multicast, MBGP carries multicast-specific route prefix information against which routers can RPF check source and destination multicast traffic. The best part of this protocol arrangement is that MBGP can use all the same tuning and control parameters for multicast prefix information sharing that apply to regular IPv4 unicast routing in BGP.

The MBGP RFC accomplishes extends BGP reachability information by adding two additional path attributes, MP_REACH_NLRI and MP_UNREACH_NLRI. NLRI, or Network Learning Reachability Information, is essentially a descriptive name for the prefix, path, and attribute information shared between BGP speakers. These two additional attributes create a simple way to learn and advertise multiple sets of routing information, individualized by an address family. MBGP address families include IPv4 unicast, IPv6 unicast, Multiprotocol Label Switching labels, and, of course, IPv4 and IPv6 multicast.

The main advantage of MBGP is that it allows AS-border and internal routers to support noncongruent (traffic engineered) multicast topologies. This is discussed at length in Chapter 5 in IP Multicast, Volume 1. This concept is particularly relevant to Internet multicast and interdomain multicast. Multicast NLRI reachability information can now be shared with all the great filtering and route preferencing of standard BGP for unicast, allowing Internet providers to create a specific feed for multicast traffic.

Configuring and Verifying MBGP for Multicast

Configuring and operating MBGP is extraordinarily easy to do, especially if you already have a basic understanding of BGP configurations for IPv4 unicast. The routing information and configuration is essentially identical standard BGPv4 for unicast prefixes. The differences between a unicast BGP table and multicast BGP table occur because of specific filtering or sharing policies implemented per address family, leading to potentially noncongruent tables.

MBGP configuration begins by separating BGP neighbor activations by address family. A non-MBGP configuration typically consists of a series of neighbor statements with filter and routing parameters. Example 1-2 is a non-MBGP-enabled configuration on the BR between the other routers in Mcast Enterprises and ISP Blue from Figure 1-12.

Example 1-2 Standard BGP Configuration

BR(config)# router bgp 65100 BR(config-router)# neighbor 10.0.0.1 remote-as 65100 BR(config-router)# neighbor 10.0.0.1 update-source Loopback0 BR(config-router)# neighbor 10.0.0.2 remote-as 65100 BR(config-router)# neighbor 10.0.0.2 update-source Loopback0 BR(config-router)# neighbor 10.0.0.3 remote-as 65100 BR(config-router)# neighbor 10.0.0.3 update-source Loopback0 BR(config-router)# neighbor 172.23.31.1 remote-as 65003 BR(config-router)# network 10.0.0.0

MBGP commands can be separated into two configuration types: BGP neighborship commands and BGP policy commands. In IOS-XE and NX-OS, all neighbors and neighbor-related parameters (for example, remote-as, MD5 authentication, update-source, AS pathing info, timers, and so on) must be configured and established under the global BGP routing process subconfiguration mode. BGP policy commands (such as route-map filters, network statements, and redistribution), are no longer configured globally but by address family.

Let’s look more closely at an example of an MBGP configuration. Figure 1-13 shows a snapshot of just the external, unicast, BGP connection between the BR and the border route in ISP Blue, SP3-1.

Example 1-3 shows the same router configuration on the BR from above but this time using address families for IPv4 unicast and IPv4 multicast, with the additional multicast configuration. In this example, BR is using IOS-XE.

Example 1-3 MBGP Address-Family Configuration on the BR

BR(config)# router bgp 65100 BR(config-router)# neighbor 10.0.0.1 remote-as 65100 BR(config-router)# neighbor 10.0.0.1 update-source Loopback0 BR(config-router)# neighbor 172.23.31.1 remote-as 65003 BR(config-router-af)# address-family ipv4 BR(config-router-af)# network 10.0.0.0 BR(config-router-af)# neighbor 10.0.0.1 activate BR(config-router-af)# neighbor 172.23.31.1 activate BR(config-router-af)# exit-address-family BR(config-router)# address-family ipv4 multicast BR(config-router-af)# network 10.0.0.0 BR(config-router-af)# neighbor 10.0.0.1 activate BR(config-router-af)# neighbor 172.23.31.1 activate BR(config-router-af)# exit-address-family

The activate command option under each configured address family is critical and is required for any peer to activate information sharing for a specific NLRI—in this case IPv4 unicast and multicast. It is important to note that a neighbor does not have to have consistent address-family activation. For example, a neighbor may be activated for multicast NLRI sharing, but not for unicast. Once the neighbor is activated, all policy commands must be entered under an address family.

Note: BGP neighbor commands must be consistent across peers, regardless of address-family activation. This means that a peer must be established with a neighbor router in compliance with all the explicit RFC neighborship requirements for BGP. For example, you must use consistent AS numbers between global peers. You cannot have two peerings with the same node in multiple ASs. The standard rules of BGP still apply, regardless of the policy configuration.

In IOS-XR (a service provider–oriented operating system), multiprotocol usage is essentially assumed in the configuration. Address families are required for almost all protocol configurations, and policy commands are entered per neighbor and per address family. Example 1-4 shows the same configuration as before, for the BR at Mcast Enterprises using IOS-XR.

Example 1-4 BGP Address-Family Configuration for IOS-XR on the BR

RP/0/0/CPU0:BR(config)# router bgp 65100 RP/0/0/CPU0:BR(config-bgp)# address-family ipv4 unicast RP/0/0/CPU0:BR(config-bgp-af)# network 10.0.0.0 RP/0/0/CPU0:BR(config-bgp-af)# exit RP/0/0/CPU0:BR(config-bgp)# address-family ipv4 multicast RP/0/0/CPU0:BR(config-bgp-af)# network 10.0.0.0 RP/0/0/CPU0:BR(config-bgp-af)# exit RP/0/0/CPU0:BR(config-bgp)# neighbor 10.0.0.1 RP/0/0/CPU0:BR(config-bgp-nbr)# remote-as 65100 RP/0/0/CPU0:BR(config-bgp-nbr)# address-family ipv4 unicast RP/0/0/CPU0:BR(config-bgp-nbr-af)# exit RP/0/0/CPU0:BR(config-bgp-nbr)# address-family ipv4 multicast RP/0/0/CPU0:BR(config-bgp)# neighbor 172.23.31.1 RP/0/0/CPU0:BR(config-bgp-nbr)# remote-as 65003 RP/0/0/CPU0:BR(config-bgp-nbr)# address-family ipv4 unicast RP/0/0/CPU0:BR(config-bgp-nbr-af)# exit RP/0/0/CPU0:BR(config-bgp-nbr)# address-family ipv4 multicast RP/0/0/CPU0:BR(config-bgp-nbr-af)# commit RP/0/0/CPU0:BR(config-bgp-nbr-af)# end

This method of policy configuration provides unique and potentially incongruent tables between NLRI address families in BGP. Activation and policy do not need to be consistent between NLRI (address family) instances. It also naturally stands to reason that checking the multicast BGP table requires different commands from checking the unicast table. To learn more about this type of BGP configuration, check out the many Cisco Press books written about BGP and BGP design. Internet Routing Architectures by Sam Halabi would be a good place to start.

Any BGP commands that are used to check on neighborship status and establishment parameters are universal. Commands like show ip bgp neighbors in IOS-XE do not change. In fact, this is still the best command to use to check on the neighborship status of an MBGP peer. However, additional information about new NLRI address families is also included. The BR output in Example 1-5 shows this in action.

Example 1-5 show ip bgp neighbors (Truncated) on the BR

BR# sh ip bgp neighbors

BGP neighbor is 10.0.0.1, remote AS 65100, internal link

BGP version 4, remote router ID 10.0.0.1

BGP state = Established, up for 00:31:41

Last read 00:00:14, last write 00:00:48, hold time is 180, keepalive interval is

60 seconds

Neighbor sessions:

1 active, is not multisession capable (disabled)

Neighbor capabilities:

Route refresh: advertised and received(new)

Four-octets ASN Capability: advertised and received

Address family IPv4 Unicast: advertised and received

Address family IPv4 Multicast: advertised

Enhanced Refresh Capability: advertised and received

Multisession Capability:

Stateful switchover support enabled: NO for session 1

Message statistics:

InQ depth is 0

OutQ depth is 0

Sent Rcvd

Opens: 1 1

Notifications: 0 0

Updates: 9 1

Keepalives: 35 36

Route Refresh: 0 0

Total: 45 38

Default minimum time between advertisement runs is 0 seconds

For address family: IPv4 Unicast

Session: 10.0.0.1

BGP table version 18, neighbor version 18/0

Output queue size : 0

Index 4, Advertise bit 1

4 update-group member

Slow-peer detection is disabled

Slow-peer split-update-group dynamic is disabled

Sent Rcvd

Prefix activity: ---- ----

Prefixes Current: 4 0

Prefixes Total: 7 0

Implicit Withdraw: 0 0

Explicit Withdraw: 3 0

Used as bestpath: n/a 0

Used as multipath: n/a 0

Outbound Inbound

Local Policy Denied Prefixes: -------- -------

Total: 0 0

Number of NLRIs in the update sent: max 2, min 0

Last detected as dynamic slow peer: never

Dynamic slow peer recovered: never

Refresh Epoch: 1

Last Sent Refresh Start-of-rib: never

Last Sent Refresh End-of-rib: never

Last Received Refresh Start-of-rib: never

Last Received Refresh End-of-rib: never

Sent Rcvd

Refresh activity: ---- ----

Refresh Start-of-RIB 0 0

Refresh End-of-RIB 0 0

For address family: IPv4 Multicast

BGP table version 2, neighbor version 1/2

Output queue size : 0

Index 0, Advertise bit 0

Uses NEXT_HOP attribute for MBGP NLRIs

Slow-peer detection is disabled

Slow-peer split-update-group dynamic is disabled

Sent Rcvd

Prefix activity: ---- ----

Prefixes Current: 0 0

Prefixes Total: 0 0

Implicit Withdraw: 0 0

Explicit Withdraw: 0 0

Used as bestpath: n/a 0

Used as multipath: n/a 0

Outbound Inbound

Local Policy Denied Prefixes: -------- -------

Total: 0 0

Number of NLRIs in the update sent: max 0, min 0

Last detected as dynamic slow peer: never

Dynamic slow peer recovered: never

Refresh Epoch: 1

Last Sent Refresh Start-of-rib: never

Last Sent Refresh End-of-rib: never

Last Received Refresh Start-of-rib: never

Last Received Refresh End-of-rib: never

Sent Rcvd

Refresh activity: ---- ----

Refresh Start-of-RIB 0 0

Refresh End-of-RIB 0 0

Additional verification commands for the IPv4 multicast address family are generally found using the ipv4 multicast keyword in many standard BGP commands. For example, the command for showing the standard IPv4 unicast BGP prefix table is show ip bgp. Notice in Example 1-6 what happens to the BGP multicast prefix table when ipv4 multicast is added. In this instance, the two tables are not congruent, as indicated by the highlighted multicast table output.

Example 1-6 show ip bgp versus show ip bgp ipv4 multicast

BR# show ip bgp

BGP table version is 18, local router ID is 10.0.0.4

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale, m multipath, b backup-path, f RT-Filter,

x best-external, a additional-path, c RIB-compressed,

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path

*> 10.0.0.0 0.0.0.0 0 32768 i

*> 172.21.0.0 172.23.31.1 0 65003 65001 i

*> 172.22.0.0 172.23.31.1 0 65003 65002 i

*> 172.23.0.0 172.23.31.1 0 0 65003 i

BR# show ip bgp ipv4 multicast

BGP table version is 2, local router ID is 10.0.0.4

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale, m multipath, b backup-path, f RT-Filter,

x best-external, a additional-path, c RIB-compressed,

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path

*> 10.0.0.0 0.0.0.0 0 32768 i

* 172.23.0.0 172.23.31.1 0 0 65003 i

Note: This is only a basic introduction to MBGP configuration. It is beyond the scope of this text to delve deeply into the interworking of the BGP protocol stack. If you are planning to configure interdomain multicast, it is recommend that you spend time getting acquainted with BGP. It will also help you in better understanding the material presented in Chapter 3.

Domain Borders and Configured Multicast Boundaries

As mentioned earlier, most autonomous systems use IGPs and EGPs to establish clear demarcation points and borders. The easiest way to create a clear border around a PIM domain is to simply configure the router to not apply PIM–SM to border interfaces. Without PIM–SM configuration for those interfaces, no neighborships form between the border router inside the AS and the border router of the neighboring AS.

What does this mean for the PIM–SM domain border in an interdomain forwarding model? If PIM is not sharing join/leave information between the neighboring border routers, the second critical element in the three pillars of interdomain design—the multicast control plane for receiver identification—becomes a problem. Remember that—the router must know about any legitimate receivers that have joined the group and where they are located in the network.

Here then, is the big question: Is a PIM–SM relationship required between ASs in order to perform multicast interdomain forwarding? The short answer is yes! There are certainly ways to work around this requirement (such as by using protocol rules), but the best recommendation is to configure PIM on any interdomain multicast interfaces in order to maintain PIM neighborships with external PIM routers.

Why is this necessary? The biggest reason is that the local domain needs the join/prune PIM–SM messages for any receivers that may not be part of the local domain. Remember that without the (*, G) information, the local RP cannot help the network build a shared tree linking the source and receivers through the RP. Let’s examine this relationship in action in an example.

Let’s limit the scope to just the PIM relationship between the BR in Mcast Enterprises and SP3-1. In this particular instance, there is a receiver for an Internet multicast group, 239.120.1.1, connected to R2, as shown in Figure 1-14, with additional PIM configuration on the interface on BR. SP3-1 does not have PIM configured on the interface facing the BR, interface Ethernet0/0.

Figure 1-14 Network Diagram for Examples 1-7 Through 1-10

As you can see from the show ip mroute 239.120.1.1 command output in Example 1-7, router SP3-1 has no entries for 239.120.1.1. It does not have a PIM relationship to the BR in Mcast Enterprises, so there is no way for the BR to share that information.

Example 1-7 No ip mroute Entries

SP3-1# show ip mroute 239.120.1.1 Group 239.120.1.1 not found

If PIM–SM is configured on SP3-1, a neighborship forms between the BR and SP3-1. Once this is up, SP3-1 learns the (*, G) entry from the BR. This can be seen by debugging PIM with the command debug ip pim. Example 1-8 shows output for this command on SP3-1.

Example 1-8 debug ip pim on SP3-1

SP3-1(config-if)# int e0/0 SP3-1(config-if)# do debug ip pim SP3-1(config-if)# ip pim sparse-mode SP3-1(config-if)# *Feb 19 22:34:49.481: %PIM-5-NBRCHG: neighbor 172.23.31.4 UP on interface Ethernet0/0 *Feb 19 22:34:49.489: PIM(0): Changing DR for Ethernet0/0, from 0.0.0.0 to 172.23.31.4 SP3-1(config-if)# *Feb 19 22:34:49.489: %PIM-5-DRCHG: DR change from neighbor 0.0.0.0 to 172.23.31.4 on interface Ethernet0/0 SP3-1(config-if)# *Feb 19 22:34:50.622: PIM(0): Check DR after interface: Ethernet0/0 came up! SP3-1(config-if)# *Feb 19 22:34:56.239: PIM(0): Building Triggered (*,G) Join / (S,G,RP-bit) Prune message for 239.120.1.1 *Feb 19 22:34:56.239: PIM(0): Check RP 172.23.0.2 into the (*, 239.120.1.1) entry

As you can see, once the neighbor is up, router BR sends a PIM join/prune message to SP3-1, indicating that a receiver for group 239.120.1.1 is registered to the Mcast Enterprises multicast domain. SP3-1 now uses this message to build a state entry for the (*, 239.120.1.1) tree. Without this message, there is no way for SP3-1 or any other upstream routers to know that a receiver is located down the E0/0 path toward the BR of Mcast Enterprises.

There’s also another problem here. Have you already identified it? SP3-1 is looking for an RP on which to build a shared tree for the (*, G) join that it just received. However, as you can see from the last message in the debug output, the RP for this domain is 172.23.0.2, which is located through a different interface, interface E0/1. This means the RPF check for the (*, G) is going to fail, and the outgoing interface list for (*, G) will have an outgoing interface list (OIL) of NULL. Until that issue is resolved, all the packets from the domain are dropped in the bit bucket.

You can verify all this with the show ip mroute 239.120.1.1 command on SP3-1 and by pinging the group from the ISP-Blue domain. Pinging 239.120.1.1 from SP3-1 should make SP3-1 E0/0 a source for that group, and the ping should fail because it is unable to cross the domain. Example 1-9 shows this behavior in action.

Example 1-9 Multicast Reachability Failure for 239.120.1.1

SP3-1# sh ip mroute 239.120.1.1

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

G - Received BGP C-Mroute, g - Sent BGP C-Mroute,

N - Received BGP Shared-Tree Prune, n - BGP C-Mroute suppressed,

Q - Received BGP S-A Route, q - Sent BGP S-A Route,

V - RD & Vector, v - Vector, p - PIM Joins on route

Outgoing interface flags: H - Hardware switched, A - Assert winner, p - PIM Join

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.120.1.1), 00:00:20/stopped, RP 172.23.0.2, flags: SP

Incoming interface: Ethernet0/1, RPF nbr 172.23.1.2

Outgoing interface list: Null

(172.23.31.1, 239.120.1.1), 00:00:20/00:02:39, flags: PT

Incoming interface: Ethernet0/0, RPF nbr 0.0.0.0

Outgoing interface list: Null

SP3-1# ping 239.120.1.1

Type escape sequence to abort.

Sending 1, 100-byte ICMP Echos to 239.120.1.1, timeout is 2 seconds:

...

You can see in Example 1-9 that the ping does fail, as expected. It is important to remember that BGP is included in this network configuration—in particular, MBGP for the IPv4 multicast NLRI address family. The issue is not that the RPF check for the source has failed but rather that the RPF check against the RP has failed because the RP is in a different direction and has no knowledge of the (*, G) to marry with the source. Something else is needed to complete the tree. This is addressed this in the next section.

Note: There is also an interesting relationship between using PIM interfaces at domain borders and MBGP. If an external multicast MBGP peer is configured on a router on an interface that has no PIM configuration, you get some very odd behavior in BGP.

One of the many rules of BGP states that for a route to be valid, the route and interface to the next hop must also be valid and learned via the routing table and not recursively through BGP. In the case of multicast MBGP, BGP refers to the PIM topology table in the router to determine if the next-hop interface is valid. If the interface on which the external MBGP (E-MBGP) route was learned is not enabled in the PIM topology, the route fails the best-path selection algorithm and shows in the BGP table as “inaccessible.” If you are using MBGP to carry multicast NLRI data across domains, make sure the cross-domain interfaces on which BGP is configured are also configured for an interdomain PIM neighborship. This is shown in Example 1-10, using the IOS-XE command show ip bgp ipv4 multicast. Example 1-10 reveals that the prefix is received from the peer but is not installed in the RPF table.

Example 1-10 BGP IPv4 Multicast Prefix Acceptance With and Without Active PIM on the External Peer Interface

BR# show ip bgp ipv4 multicast

BGP table version is 2, local router ID is 10.0.0.4

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale, m multipath, b backup-path, f RT-Filter,

x best-external, a additional-path, c RIB-compressed,

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path

*> 10.0.0.0 0.0.0.0 0 32768 i

* 172.23.0.0 172.23.31.1 0 0 65003 i

BR# show ip bgp ipv4 multicast 172.23.0.0

BGP routing table entry for 172.23.0.0/16, version 0

Paths: (1 available, no best path)

Flag: 0x820

Not advertised to any peer

Refresh Epoch 2

65003, (received & used)

Next, you need to add PIM to the MBGP peering interface, as shown in Example 1-11.

Example 1-11 Adding PIM to Complete the RPF Check for BGP

BR(config)# interface ethernet0/1 BR(config-if)# ip pim sparse-mode *Feb 19 20:46:06.252: %PIM-5-NBRCHG: neighbor 172.23.31.1 UP on interface Ethernet0/1 *Feb 19 20:46:07.640: %PIM-5-DRCHG: DR change from neighbor 0.0.0.0 to 172.23.31.4 on interface Ethernet0/1 BR# show ip bgp ipv4 multicast BGP table version is 2, local router ID is 10.0.0.4 Status codes: s suppressed, d damped, h history, * valid, > best, i - internal, r RIB-failure, S Stale, m multipath, b backup-path, f RT-Filter, x best-external, a additional-path, c RIB-compressed, Origin codes: i - IGP, e - EGP, ? - incomplete RPKI validation codes: V valid, I invalid, N Not found Network Next Hop Metric LocPrf Weight Path *> 10.0.0.0 0.0.0.0 0 32768 i * 172.23.0.0 172.23.31.1 0 0 65003 i

Clearing the BGP neighbors, using the command clear ip bgp *, immediately clears the prefix table, as shown in Example 1-12.

Example 1-12 Clearing the BGP Table

BR# clear ip bgp * *Feb 19 20:46:29.014: %BGP-5-ADJCHANGE: neighbor 10.0.0.1 Down User reset *Feb 19 20:46:29.014: %BGP_SESSION-5-ADJCHANGE: neighbor 10.0.0.1 IPv4 Multicast topology base removed from session User reset *Feb 19 20:46:29.014: %BGP_SESSION-5-ADJCHANGE: neighbor 10.0.0.1 IPv4 Unicast topology base removed from session User reset *Feb 19 20:46:29.014: %BGP-5-ADJCHANGE: neighbor 172.23.31.1 Down User reset *Feb 19 20:46:29.014: %BGP_SESSION-5-ADJCHANGE: neighbor 172.23.31.1 IPv4 Multicast topology base removed from session User reset *Feb 19 20:46:29.014: %BGP_SESSION-5-ADJCHANGE: neighbor 172.23.31.1 IPv4 Unicast topology base removed from session User reset BR# *Feb 19 20:46:29.929: %BGP-5-ADJCHANGE: neighbor 10.0.0.1 Up *Feb 19 20:46:29.942: %BGP-5-ADJCHANGE: neighbor 172.23.31.1 Up

Once the neighbor is up, the prefix is received again and is chosen as a BGP best path, as shown in Example 1-13, again using the show ip bgp ipv4 multicast command.

Example 1-13 RPF and BGP Alignment

BR# show ip bgp ipv4 multicast

BGP table version is 2, local router ID is 10.0.0.4

Status codes: s suppressed, d damped, h history, * valid, > best, i - internal,

r RIB-failure, S Stale, m multipath, b backup-path, f RT-Filter,

x best-external, a additional-path, c RIB-compressed,

Origin codes: i - IGP, e - EGP, ? - incomplete

RPKI validation codes: V valid, I invalid, N Not found

Network Next Hop Metric LocPrf Weight Path

*> 10.0.0.0 0.0.0.0 0 32768 i

*> 172.23.0.0 172.23.31.1 0 0 65003 i

Multicast Source Discovery Protocol

The first and second pillars of interdomain design are addressed extensively in the previous section. Let’s look more closely at the third pillar: the downstream multicast control plane and MRIB. Remember that the router must know when a source is actively sending packets for a given group. PIM–SM domains must also be able to build a shared tree from the local domain’s RP, even when the source has registered to a remote RP in a different domain.

For multidomain or interdomain forwarding, you need ways to deal specifically with these requirements. If a source is in a remote SM domain and actively sending packets, it is registered to an RP that is also not in the domain. That domain will have built the appropriate tree(s) to distribute the packets via PIM. Without the RP in the local domain knowing about the source, no shared tree can be built by the local RP. In addition, how can you RPF check the source tree if you don’t know about that source?

The IETF created Multicast Source Discovery Protocol (MSDP) to address this specific issue. The IETF defined MSDP in RFC 3618 as a stop-gap measure for bridging PIM–SM domains until IETF members could establish other PIM extensions to accomplish the same thing. MSDP soon became more than a stop-gap; it is now an industry standard and requirement for PIM–SM interdomain forwarding. As discussed in IP Multicast, Volume 1, the usefulness of MSDP was even extended to allow for RP redundancy via Anycast RP, as initially defined in RFC 3446. MSDP’s main purpose is to allow a local RP to notify RPs in other domains about the active sources about which it knows.

MSDP accomplishes this by allowing an RP to peer with other RPs via a Transport Control Protocol (TCP) connection to share active multicast source information. This is very similar to the way BGP routers use TCP connections to create neighborships for sharing prefix and path information across autonomous systems. In fact, MSDP, like BGP, uses ASNs in its calculations for building active source tables.

Configuring a basic MSDP peer is quite simple. For the most part, the configuration steps are similar to those for configuring basic BGP, though with some exceptions. Tables 1-2 through 1-4 show the configuration commands needed to enable peering between RPs in IOS-XE, NX-OS, and IOS-XR.

Table 1-2 IOS-XE MSDP Peering Commands

Router(Config)#ip msdp [ vrf vrf-name ] peer { peer-name | peer-address } [ connect-source interface-type interface-number ] [ remote-as as-number ] |

|

Router(config)#no ip msdp [ vrf vrf-name ] peer { peer-name | peer-address } |

|

Syntax Options |

Purpose |

vrf |

(Optional) Supports the multicast VPN routing and forwarding (VRF) instance. |

vrf-name |

(Optional) Specifies the name assigned to the VRF. |

peer-name peer-address |

Specifies the Domain Name System (DNS) name or IP address of the router that is to be the MSDP peer. |

(Optional) Specifies the interface type and number whose primary address becomes the source IP address for the TCP connection. This interface is on the router being configured. |

|

remote-as as-number |

(Optional) Specifies the autonomous system number of the MSDP peer. This keyword and argument are used for display purposes only. |

Table 1-3 NX-OS MSDP Peering Commands

Nexus(config)#ip msdp peer peer-address connect-source if-type if-number [ remote-as asn ] |

|

Nexus(config)#no ip msdp peer |

|

Syntax Options |

Purpose |

connect-source |

Configures a local IP address for a TCP connection. |

if-type |

Specifies the interface type. For more information, use the question mark (?) online help function. |

if-number |

Specifies the interface or subinterface number. For more information about the numbering syntax for the networking device, use the question mark (?) online help function. |

remote-as asn |

(Optional) Configures a remote autonomous system number. |

connect-source |

Configures a local IP address for a TCP connection. |

Table 1-4 IOS-XR MSDP Peering Commands

RP/0/RP0/CPU0:router(config)#router msdp |

|

RP/0/RP0/CPU0:router(config-msdp)#(no)originator-id |

|

RP/0/RP0/CPU0:router(config-msdp)#(no) peer |

|

Syntax Options |

Purpose |

type |

Specifies the interface type. For more information, use the question mark (?) online help function. |

interface-path-id |

Specifies the physical interface or virtual interface. |

peer-address |

Specifies the IP address or DNS name of the router that is to be the MSDP peer. |

Example 1-14 shows a sample MSDP peering configuration on router SP3-2 to peer with the RP and border router, SP2-1 (172.22.0.1), in ISP Green, as depicted in the mock-Internet example.

Example 1-14 Basic MSDP Configuration, SP3-1 to SP2-1

SP3-2>en SP3-2# config t Enter configuration commands, one per line. End with CNTL/Z. SP3-2(config)# ip msdp peer 172.22.0.1 connect-source lo0

Figure 1-15 shows the mock-Internet map expanded. (The configuration snippet in Example 1-14 is from a router using IOS-XE.)

Figure 1-15 Mock-Internet Map

As you know, when a source-connected router sees new packets coming from a source, it registers that source with its local RP. If the local RP is enabled for MSDP, it completes the shared tree and, if validated, it creates a special state entry called a source active (SA). The local RP then shares that SA information with any MSDP peer RPs in other domains.

A remote RP that receives this SA advertisement validates the source against its own RPF table. Once validated, the remote RP uses the source and location information to calculate an interdomain forwarding tree, if it has subscribed receivers for that source’s group in its local PIM–SM domain. It also forwards the SA entry to any other peers to which it is connected, except for the peer from which it received the SA advertisement. This processing allows a remote RP to learn about remote active sources, while facilitating the shared-tree and source-tree building process for the local domain, completing multicast forwarding trees across multiple domains. This means that in Example 1-14, the RP in ISP Blue (SP3-1) receives SA advertisements from the configured MSDP peer and RP in ISP Green (SP2-1, 172.22.0.1).

Aside from being necessary to interdomain multicast forwarding, MSDP has some inherent advantages. For one, because a single RP for the entire Internet is not required, no one entity is responsible for the quality of local domain forwarding. Each AS can implement its own RP strategy and connect to other domains in a best-effort manner, just as with unicast routing. Administrators can also use policy to control MSDP sharing behavior, which means autonomous systems can use a single domain strategy for both internal and external forwarding. Shared multicast trees always stay local to the domain, even when join messages are shared with neighboring domains. When receivers are downstream from the remote domain, a (*, G) entry is created only for that domain, with branches at the border edge interface. Because RP resources are only required within the local domain, there are no global resource exhaustion problems or global reliability issues.

MSDP uses TCP port 639 for peering sessions. Also like BGP, MSDP has a specific state machine that allows it to listen for peers, establish TCP connections, and maintain those connections while checking for accuracy and authorization. Figure 1-16 illustrates the MSDP state machine described in RFC 3618.

Figure 1-16 MSDP State Machine

Table 1-5 explains the Events (EX) and Actions (AX) of the state machine shown in Figure 1-16.

Table 1-5 MSDP State Machine Events and Actions

Event/Action |

Description |

E1 |

MSDP peering is configured and enabled. |

E2 |

The peer IP address is less than the MSDP source address. |

E3 |

The peer IP address is greater than the MSDP source address. |

E4 |

TCP peering is established (active/master side of the connection). |

E5 |

TCP peering is established (active/master side of the connection). |

E6 |

The connect retry timer has expired. |

E7 |

MSDP peering is disabled. |

E8 |

The hold timer has expired. |

E9 |

A TLV format error has been detected in the MSDP packet. |

E10 |

Any other error is detected. |

A1 |

The peering process begins, with resources allocated and peer IP addresses compared. |

A2 |

TCP is set to Active OPEN and the connect retry timer is set to [ConnectRetry-Period]. |

A3 |

TCP is set to Passive OPEN (listen). |

A4 |

The connect retry timer is deleted, and the keepalive TLV is sent, while the keepalive and hold timer are set to configured periods. |

A5 |