7 Networks and Positive Feedback

The industrial economy was populated with oligopolies: industries in which a few large firms dominated their markets. This was a comfortable world, in which market shares rose and fell only gradually. This stability in the marketplace was mirrored by lifetime employment of managers. In the United States, the automobile industry, the steel industry, the aluminum industry, the petroleum industry, various chemicals markets, and many others followed this pattern through much of the twentieth century.

In contrast, the information economy is populated by temporary monopolies. Hardware and software firms vie for dominance, knowing that today’s leading technology or architecture will, more likely than not, be toppled in short order by an upstart with superior technology.

What has changed? There is a central difference between the old and new economies: the old industrial economy was driven by economies of scale; the new information economy is driven by the economics of networks. In this chapter we describe in detail the basic principles of network economics and map out their implications for market dynamics and competitive strategy. The key concept is positive feedback.

The familiar if sad tale of Apple Computer illustrates this crucial concept. Apple has suffered of late because positive feedback has fueled the competing system offered by Microsoft and Intel. As Wintel’s share of the personal computer market grew, users found the Wintel system more and more attractive. Success begat more success, which is the essence of positive feedback. With Apple’s share continuing to decline, many computer users now worry that the Apple Macintosh will shortly become the Sony Beta of computers, orphaned and doomed to a slow death as support from software producers gradually fades away. This worry is cutting into Apple’s sales, making it a potentially self-fulfilling forecast. Failure breeds failure: this, too, is the essence of positive feedback.

Why is positive feedback so important in high-technology industries? Our answer to this question is organized around the concept of a network. We are all familiar with physical networks such as telephone networks, railroad networks, and airline networks. Some high-tech networks are much like these “real” networks: networks of compatible fax machines, networks of compatible modems, networks of e-mail users, networks of ATM machines, and the Internet itself. But many other high-tech products reside in “virtual” networks: the network of Macintosh users, the network of CD machines, or the network of Nintendo 64 users.

In “real” networks, the linkages between nodes are physical connections, such as railroad tracks or telephone wires. In virtual networks, the linkages between the nodes are invisible, but no less critical for market dynamics and competitive strategy. We are in the same computer network if we can use the same software and share the same files. Just as a spur railroad is in peril if it cannot connect to the main line, woe to those whose hardware or software is incompatible with the majority of other users. In the case of Apple, there is effectively a network of Macintosh users, which is in danger of falling below critical mass.

Whether real or virtual, networks have a fundamental economic characteristic: the value of connecting to a network depends on the number of other people already connected to it.

This fundamental value proposition goes under many names: network effects, network externalities, and demand-side economies of scale. They all refer to essentially the same point: other things being equal, it’s better to be connected to a bigger network than a smaller one. As we will see below, it is this “bigger is better” aspect of networks that gives rise to the positive feedback observed so commonly in today’s economy.

Throughout this book we have stressed the idea that many aspects of the new economy can be found in the old economy if you look in the right places. Positive feedback and network externalities are not a creation of the 1990s. To the contrary, network externalities have long been recognized as critical in the transportation and communications industries, where companies compete by expanding the reach of their networks and where one network can dramatically increase its value by interconnecting with other networks. Anyone trying to navigate the network economy has much to learn from the history of the postal service, railroads, airlines, and telephones.

In this chapter we introduce and illustrate the key economic concepts that underlie market dynamics and competitive strategy in both real and virtual networks. Based on these concepts, we identify four generic strategies that are effective in network markets. We then show how these concepts and strategies work in practice through a series of historical case studies.

In the two chapters that follow this one, we build on the economic framework developed here, constructing a step-by-step strategic guide to the key issues facing so many players in markets for information technology. In Chapter 8 we discuss how to work with allies to successfully establish a new technology—that is, to launch a new network. As you might expect, negotiations over interconnection and standardization are critical. In Chapter 9, we examine what happens if these negotiations break down: how to fight a standards war, how to get positive feedback working in favor of your technology in a battle against an incompatible rival technology.

POSITIVE FEEDBACK

The notion of positive feedback is crucial to understanding the economics of information technology. Positive feedback makes the strong get stronger and the weak get weaker, leading to extreme outcomes. If you have ever experienced feedback while talking into a microphone, where a loud noise becomes deafening through repeated amplification, you have witnessed positive feedback in action. Just as an audio signal can feed on itself until the limits of the system (or the human ear) are reached, positive feedback in the marketplace leads to extremes: dominance of the market by a single firm or technology.

The backward cousin of positive feedback is negative feedback. In a negative-feedback system, the strong get weaker and the weak get stronger, pushing both toward a happy medium. The industrial oligopolies listed at the beginning of this chapter exhibited negative feedback, at least in their mature phase. Attempts by the industry leader to capture share from smaller players would often trigger vigorous responses as smaller players sought to keep capacity utilization from falling. Such competitive responses prevented the leading firm from obtaining a dominant position. Furthermore, past a certain size, companies found growth difficult owing to the sheer complexity of managing a large enterprise. And as the larger firms became burdened with high costs, smaller, more nimble firms found profitable niches. All of these ebbs and flows represent negative feedback in action: the market found a balanced equilibrium rather than heading toward the extreme of a single winner. Sometimes sales fell below a critical mass, and companies like Studebaker went out of business or were acquired by more efficient rivals. But by and large, dramatic changes in market share were uncommon and oligopoly rather than monopoly was the norm.

Positive feedback should not be confused with growth as such. Yes, if a technology is on a roll, as is the Internet today, positive feedback translates into rapid growth: success feeds on itself. This is a virtuous cycle. But there is a dark side of this force. If your product is seen as failing, those very perceptions can spell doom. The Apple Macintosh is now in this danger zone, where “positive” feedback does not feel very positive. The virtuous cycle of growth can easily change to a vicious cycle of collapse. A death spiral represents positive feedback in action; “the weak get weaker” is the inevitable flip side of “the strong get stronger.”

When two or more firms compete for a market where there is strong positive feedback, only one may emerge as the winner. Economists say that such a market is tippy, meaning that it can tip in favor of one player or another. It is unlikely that all will survive. It was clear to all parties in the battle over 56Kbps modem standards that multiple, incompatible modems could not coexist for long; the only question was which protocol would triumph or if a single, compromise standard could be negotiated. Other examples of tippy markets were the video recorder market in the 1980s (VHS v. Beta) and the personal computer operating systems market of the 1990s (Wintel v. Apple). In its most extreme form, positive feedback can lead to a winner-take-all market in which a single firm or technology vanquishes all others, as has happened in several of these cases.

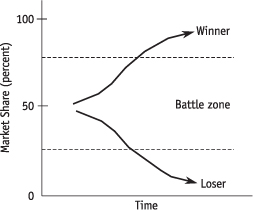

Figure 7.1 shows how a winner-take-all market evolves over time. The technology starting with an initial lead, perhaps 60 percent of the market, grows to near 100 percent, while the technology starting with 40 percent of the market declines to 10 percent. These dynamics are driven by the strong desire of users to select the technology that ultimately will prevail—that is, to choose the network that has (or will have) the most users. As a result, the strong get stronger and the weak get weaker; both effects represent the positive feedback so common in markets for information infrastructure.
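The tipping dynamic described above can be sketched numerically. The following is a minimal illustration, not the model behind Figure 7.1: it assumes a hypothetical update rule in which each technology's attractiveness grows with the square of its current share (the `strength` parameter), so a lead compounds over time. Because the rule is stylized, the trailing technology here falls toward zero rather than leveling off at 10 percent as in the figure.

```python
def share_path(initial_share, periods=10, strength=2.0):
    """Market-share path for one of two rival technologies.

    The update rule is an illustrative assumption: attractiveness is
    share ** strength, and next-period share is relative attractiveness.
    With strength > 1, a lead feeds on itself (positive feedback).
    """
    path = [initial_share]
    for _ in range(periods):
        s = path[-1]
        own = s ** strength            # attractiveness of this technology
        rival = (1.0 - s) ** strength  # attractiveness of the rival
        path.append(own / (own + rival))
    return path


leader = share_path(0.60)   # starts with 60 percent of the market
laggard = share_path(0.40)  # starts with 40 percent of the market

for period, (a, b) in enumerate(zip(leader, laggard)):
    print(period, round(a, 3), round(b, 3))
```

Under these assumptions, a technology that starts at exactly 50 percent stays there, which is the knife-edge case: any small nudge in either direction is amplified in later periods.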

The biggest winners in the information economy, apart from consumers generally, are companies that have launched technologies that have been propelled forward by positive feedback. This requires patience and foresight, not to mention a healthy dose of luck. Successful strategies in a positive-feedback industry are inherently dynamic. Our primary goal in this part of the book is to identify the elements of winning strategies in network industries and to help you craft the strategy most likely to succeed in your setting.

Figure 7.1. Positive Feedback

Nintendo is a fine example of a company that created enormous value by harnessing positive feedback. When Nintendo entered the U.S. market for home video games in 1985, the market was considered saturated, and Atari, the dominant firm in the previous generation, had shown little interest in rejuvenating the market. Yet by Christmas 1986, the Nintendo Entertainment System (NES) was the hottest toy on the market. The very popularity of the NES fueled more demand and enticed more game developers to write games to the Nintendo system, making the system yet more attractive. Nintendo managed that most difficult of high-tech tricks: to hop on the positive-feedback curve while retaining strong control over its technology. Every independent game developer paid royalties to Nintendo. They even promised not to make their games available on rival systems for two years following their release!

Our focus in this chapter is on markets with significant positive feedback resulting from demand-side or supply-side economies of scale. These scale economies apply most directly to the market leaders in an industry. But smaller players, too, must understand these same principles, whether they are planning to offer their own smaller differentiated networks or to hook into a larger network sponsored by an industry leader.

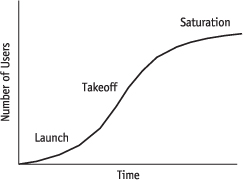

Positive-feedback systems follow a predictable pattern. Again and again, we see adoption of new technologies following an S-shaped curve with three phases: (1) flat during launch, then (2) a steep rise during takeoff as positive feedback kicks in, followed by (3) leveling off as saturation is reached. The typical pattern is illustrated in Figure 7.2.

Figure 7.2. Adoption Dynamics

This S-shaped, or “logistic,” pattern of growth is also common in the biological world; for example, the spread of viruses tends to follow this pattern. In the information technology arena, the S-shaped pattern can be seen in the adoption of the fax machine, the CD, color TV, video game machines, e-mail, and the Internet (we can assure you that current growth rates will slow down; it is just a matter of when).
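As a rough sketch of the logistic pattern, the function below evaluates the standard logistic curve at a few points in time. The parameter names (`rate`, `midpoint`, `saturation`) and their default values are assumptions chosen for illustration, not estimates for any real technology.

```python
import math


def adoption_share(t, rate=1.0, midpoint=0.0, saturation=1.0):
    """Logistic curve: the level of adoption reached by time t.

    rate       - how steep the takeoff is (assumed value)
    midpoint   - the time at which half of saturation is reached (assumed)
    saturation - the eventual ceiling on adoption
    """
    return saturation / (1.0 + math.exp(-rate * (t - midpoint)))


# Sample the three phases: launch (flat), takeoff (steep), saturation (flat).
for t in (-6, -3, 0, 3, 6):
    print(t, round(adoption_share(t), 3))
```

The printed values stay near zero early, climb fastest around the midpoint, and flatten out near the saturation level, which matches the three phases described above.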

DEMAND-SIDE ECONOMIES OF SCALE

Positive feedback is not entirely new; virtually every industry goes through a positive feedback phase early in its evolution. General Motors was more efficient than the smaller car companies in large part because of its scale. This efficiency fueled further growth by General Motors. This source of positive feedback is known as economies of scale in production: larger firms tend to have lower unit costs (at least up to a point). From today’s perspective, we can refer to these traditional economies of scale as supply-side economies of scale.

Despite its supply-side economies of scale, General Motors never grew to take over the entire automobile market. Why was this market, like many industrial markets of the twentieth century, an oligopoly rather than a monopoly? Because traditional economies of scale based on manufacturing have generally been exhausted at scales well below total market dominance, at least in the large U.S. market. In other words, positive feedback based on supply-side economies of scale ran into natural limits, at which point negative feedback took over. These limits often arose out of the difficulties of managing enormous organizations. Owing to the managerial genius of Alfred Sloan, General Motors was able to push back these limits, but even Sloan could not eliminate negative feedback entirely.

In the information economy, positive feedback has appeared in a new, more virulent form based on the demand side of the market, not just the supply side. Consider Microsoft. As of May 1998, Microsoft had a market capitalization of about $210 billion. This enormous value is not based on the economies of scale in developing software. Oh, sure, there are scale economies in designing software, as for any other information product. But there are several other available operating systems that offer comparable (or superior) performance to Windows 95 and Windows NT, and the cost of developing rival operating systems is tiny in comparison with Microsoft’s market capitalization. The same is true of Microsoft’s key application software. No, Microsoft’s dominance is based on demand-side economies of scale. Microsoft’s customers value its operating systems because they are widely used, the de facto industry standard. Rival operating systems just don’t have the critical mass to pose much of a threat. Unlike the supply-side economies of scale, demand-side economies of scale don’t dissipate when the market gets large enough: if everybody else uses Microsoft Word, that’s even more reason for you to use it too.

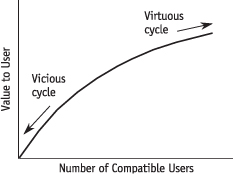

The positive relationship between popularity and value is illustrated in Figure 7.3. The arrow in the upper-right portion of the curve depicts a virtuous cycle: the popular product with many compatible users becomes more and more valuable to each user as it attracts ever more users. The arrow in the lower-left portion of the curve represents a vicious cycle: a death spiral in which the product loses value as it is abandoned by users, eventually stranding those diehards who hang on the longest, because of their unique preference for the product or their high switching costs.

Lotus 1-2-3 took great advantage of demand-side scale economies during the 1980s. Based on superior performance, Lotus 1-2-3 enjoyed the largest installed base of users among spreadsheet programs by the early 1980s. As personal computers became faster and more companies appreciated the power of spreadsheets, new users voted overwhelmingly for Lotus 1-2-3, in part so they could share files with other users and in part because many users were skilled in preparing sophisticated Lotus macros. This process fed on itself in a virtuous cycle. Lotus 1-2-3 had the most users, and so attracted yet more devotees. The result was an explosion in the size of the spreadsheet market. At the same time, VisiCalc, the pioneer spreadsheet program for personal computers, was stuck in a vicious cycle of decline, suffering from the dark side of positive feedback. Unable to respond quickly by introducing a superior product, VisiCalc quickly succumbed.

Figure 7.3. Popularity Adds Value in a Network Industry

Suppose your product is poised in the middle of the curve in Figure 7.3. Which way will it evolve? If consumers expect your product to become popular, a bandwagon will form, the virtuous cycle will begin, and consumers’ expectations will prove correct. But if consumers expect your product to flop, your product will lack momentum, the vicious cycle will take over, and again consumers’ expectations will prove correct. The beautiful if frightening implication: success and failure are driven as much by consumer expectations and luck as by the underlying value of the product. A nudge in the right direction, at the right time, can make all the difference. Marketing strategy designed to influence consumer expectations is critical in network markets. The aura of inevitability is a powerful weapon when demand-side economies of scale are strong.

Demand-side economies of scale are the norm in information industries. In consumer electronics, buyers are wary of products that are not yet popular, fearing they will pick a loser and be left stranded with marginally valuable equipment. Edsel buyers at least had a car they could drive, but PicturePhone customers found little use for their equipment when this technology flopped in the 1970s. As a result, many information technologies and formats get off to a slow start, then either reach critical mass and take off or fail to do so and simply flop.

We do not mean to suggest that positive feedback works so quickly, or so predictably, that winners emerge instantly and losers give up before trying. Far from it. There is no shortage of examples in which two (or more) technologies have gone head-to-head, with the outcome very much in the balance for years. Winner-take-all does not mean give-up-if-you-are-behind. Being first to market usually helps, but there are dozens of examples showing that a head start isn’t necessarily decisive: think of WordStar, VisiCalc, and DR-DOS.

Nor are demand-side economies of scale so strong that the loser necessarily departs from the field of battle: WordPerfect lost the lion’s share of the word processor market to Microsoft Word, but is still a player. More so than in the past, however, in the information economy the lion’s share of the rewards will go to the winner, not the number two player who just manages to survive.

Positive feedback based on demand-side economies of scale, while far more important now than in the past, is not entirely novel. Any communications network has this feature: the more people using the network, the more valuable it is to each one of them. The early history of telephones in the United States, which we discuss in detail later in the chapter, shows how strong demand-side scale economies, along with some clever maneuvering, can lead to dominance by a single firm. In the case of telephony, AT&T emerged as the dominant telephone network in the United States during the early years of this century, fending off significant competition and establishing a monopoly over long-distance service.

Transportation networks share similar properties: the more destinations a network can reach, the more valuable it becomes. Hence, the more developed network tends to grow at the expense of smaller networks, especially if the smaller networks are not able to exchange traffic with the larger network, a practice generally known as interlining in the railroad and airline industries.

Both demand-side economies of scale and supply-side economies of scale have been around for a long time. But the combination of the two that has arisen in many information technology industries is new. The result is a “double whammy” in which growth on the demand side both reduces cost on the supply side and makes the product more attractive to other users—accelerating the growth in demand even more. The result is especially strong positive feedback, causing entire industries to be created or destroyed far more rapidly than during the industrial age.

NETWORK EXTERNALITIES

We said earlier that large networks are more attractive to users than small ones. The term that economists use to describe this effect, network externalities, usefully highlights two aspects of information systems that are crucial for competitive strategy.

First, focus on the word network. As we have suggested, it is enlightening to view information technologies in terms of virtual networks, which share many properties with real networks such as communications and transportation networks. We think of all Macintosh users as belonging to the “Mac network.” Apple is the sponsor of this network. The sponsor of a network creates and manages that network, hoping to profit by building its size. Apple established the Mac network in the first place by introducing the Macintosh. Apple controls the interfaces that govern access to the network: for example, through its pricing of the Mac, by setting the licensing terms on which clones can be built, and by bringing infringement actions against unauthorized hardware vendors. And Apple is primarily responsible for making architectural improvements to the Mac.

Apple also exerts a powerful influence on the supply of products that are complementary to the Mac machine, notably software and peripheral devices, through its control over interfaces. Computer buyers are picking a network, not simply a product, when they buy a Mac, and Apple must design its strategy accordingly. Building a network involves more than just building a product: finding partners, building strategic alliances, and knowing how to get the bandwagon rolling can be every bit as important as engineering design skills.

Second, focus on one of economists’ favorite words: externalities. Externalities arise when one market participant affects others without compensation being paid. Like feedback, externalities come in two flavors: negative and positive. The classic example of a negative externality is pollution: my sewage ruins your swimming or drinking water. Happily, network externalities are normally positive, not negative: when I join your network, the network is bigger and better, to your benefit.

Positive network externalities give rise to positive feedback: when I buy a fax machine, the value of your fax machine is enhanced since you can now send faxes to me and receive faxes from me. Even if you don’t have a fax machine yet, you are more tempted to get one yourself since you can now use it to communicate with me.

Network externalities are what lie behind Metcalfe’s law, named after Bob Metcalfe, the inventor of Ethernet. (Metcalfe tells us it was George Gilder who attributed this law to him, but he’s willing to take credit for it.)

Metcalfe’s law is more a rule of thumb than a law, but it does arise in a relatively natural way. If there are n people in a network, and the value of the network to each of them is proportional to the number of other users, then the total value of the network (to all the users) is proportional to n × (n − 1) = n² − n. If the value of a network to a single user is $1 for each other user on the network, then a network of size 10 has a total value of roughly $100. In contrast, a network of size 100 has a total value of roughly $10,000. A tenfold increase in the size of the network leads to a hundredfold increase in its value.
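The arithmetic behind this rule of thumb is easy to check. The short sketch below computes n × (n − 1) for the two network sizes mentioned, using the $1 per other user figure from the text; the function and parameter names are our own.

```python
def total_network_value(n, value_per_connection=1.0):
    """Total value to all n users when each user values every other user equally."""
    return n * (n - 1) * value_per_connection


small = total_network_value(10)    # 10 * 9 = 90, roughly $100
large = total_network_value(100)   # 100 * 99 = 9,900, roughly $10,000
ratio = large / small              # 110.0, on the order of a hundredfold

print(small, large, ratio)
```

The exact figures are $90 and $9,900, so the "roughly $100" and "roughly $10,000" in the text are the n² approximation, which becomes more accurate as the network grows.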

COLLECTIVE SWITCHING COSTS

Network externalities make it virtually impossible for a small network to thrive. But every new network has to start from scratch. The challenge to companies seeking to introduce new but incompatible technology into the market is to build network size by overcoming the collective switching costs—that is, the combined switching costs of all users.

As we emphasized in Chapter 5, switching costs often stem from durable complementary assets, such as LPs and phonographs, hardware and software, or information systems and the training to use them. With network effects, one person’s investment in a network is complementary to another person’s similar investments, vastly expanding the number of complementary assets. When I invest by learning to write programs for the Access database language, then Access software, and investments in that language, become more valuable for you.

In many information industries, collective switching costs are the biggest single force working in favor of incumbents. Worse yet for would-be entrants and innovators, switching costs work in a nonlinear way: convincing ten people connected in a network to switch to your incompatible network is more than ten times as hard as getting one customer to switch. But you need all ten, or most of them: no one will want to be the first to give up the network externalities and risk being stranded. Precisely because various users find it so difficult to coordinate to switch to an incompatible technology, control over a large installed base of users can be the greatest asset you can have.
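The coordination problem can be made concrete with a toy payoff calculation. Everything in the sketch below is a hypothetical assumption for illustration: ten users, a benefit of 1 per peer on the same network, a quality bonus of 4 for the new technology, and an individual switching cost of 2.

```python
def payoff(on_new, others_on_new, n_users=10, benefit_per_peer=1.0,
           quality_bonus=4.0, switching_cost=2.0):
    """Payoff to one user, given how many of the other users are on the new network.

    All numbers are hypothetical. A user on the new network enjoys its
    quality bonus but pays the switching cost and can only interact with
    peers who have also switched.
    """
    others = n_users - 1
    if on_new:
        return benefit_per_peer * others_on_new + quality_bonus - switching_cost
    return benefit_per_peer * (others - others_on_new)


stay_put = payoff(False, 0)       # nobody moves: 9 peers on the old network
switch_alone = payoff(True, 0)    # first mover is stranded on an empty network
switch_together = payoff(True, 9) # everyone moves at once

print(stay_put, switch_alone, switch_together)
```

With these numbers, everyone would be better off if all ten switched together (11 versus 9), yet the first user to move alone gets only 2. Switching pays for an individual only once at least four others have already moved, which is why the incumbent's installed base is so hard to dislodge.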

The layout of the typewriter keyboard offers a fascinating example of collective switching costs and the difficulties of coordinating a move to superior technology. The now-standard keyboard configuration is known as the QWERTY keyboard, since the top row starts with letters QWERTY. According to many reports, early promoters of the Type Writer brand of machine in the 1870s intentionally picked this awkward configuration to slow down typists and thus reduce the incidence of jamming, to which their machines were prone. This was a sensible solution to the commercial problem faced by these pioneers: to develop a machine that would reliably be faster than a copyist could write. QWERTY also allowed salesmen to impress customers by typing their brand name, Type Writer, rapidly, using keys only from the top row.

Very soon after QWERTY was introduced, however, the problem of jamming was greatly reduced through advances in typewriter design. Certainly, today, the jamming of computer keyboards is rare indeed! And sure enough, alternative keyboards developed early in the twentieth century were reputed to be superior. The Dvorak layout, patented in 1932 with a home row of AOEUIDHTNS that includes all five vowels, has long been used by speed typists. All this would suggest that QWERTY should by now have given way to more efficient keyboard layouts.

Why, then, are we all still using QWERTY keyboards? One answer is straightforward: the costs we all would bear to learn a new keyboard are simply too high to make the transition worthwhile. Some scholars assert that there is nothing more than this to the QWERTY story. Under this story, Dvorak is just not good enough to overcome the individual switching costs of learning it. Other scholars claim, however, that we would collectively be better off switching to the Dvorak layout (this calculation should include our children, who have yet to be trained on QWERTY), but no one is willing to lead the move to Dvorak. Under this interpretation, the collective switching costs are far higher than all of our individual switching costs, because coordination is so difficult.

Coordination costs were indeed significant in the age of the typewriter. Ask yourself this question: in buying a typewriter for your office, why pick the leading layout, QWERTY, if other layouts are more efficient? Two reasons stand out. Both are based on the fact that the typewriter keyboard system has two elements: the keyboard layout and the human component of the system, namely, the typist. First, trained typists you plan to hire already know QWERTY. Second, untrained typists you plan to hire will prefer to train on a QWERTY keyboard so as to acquire marketable skills. Human capital (training) is specific to the keyboard layout, giving rise to network effects. In a flat market consisting mostly of replacement sales, buyers will have a strong preference to replace old QWERTY typewriters with new ones. And in a growing market, new sales will be tilted toward the layout with the larger installed base. Either way, positive feedback rules. We find these coordination costs less compelling now, however. Typists who develop proficiency on the Dvorak layout can use those skills in a new job simply by reprogramming their computer keyboard. Thus, we find the ongoing persistence of the QWERTY keyboard in today’s computer society at odds with the strongest claims of superiority of the Dvorak layout.

IS YOUR INDUSTRY SUBJECT TO POSITIVE FEEDBACK?

We do not want to leave the impression that all information infrastructure markets are dominated by the forces of positive feedback. Many companies can compete by adhering to widely accepted standards. For example, many companies compete to sell telephone handsets and PBXs; they need only interconnect properly with the public switched telephone network. Likewise, while there are strong network effects in the personal computer industry, there are no significant demand-side economies of scale within the market for IBM-compatible personal computers. If one person has a Dell and his coworker has a Compaq, they can still exchange files, e-mail, and advice. The customer-level equipment in telephony and PC hardware has been effectively standardized, so that interoperability and its accompanying network effects are no longer the problem they once were.

Another example of a high-tech industry that currently does not experience large network effects is that of Internet service providers. At one time, America Online, CompuServe, and Delphi attempted to provide proprietary systems of menus, e-mail, and discussion groups. It was clumsy, if not impossible, to send e-mail from one provider to another. In those days there were network externalities, and consumers gravitated toward those networks that offered the best connections to other consumers.

The commercialization of the Internet changed all that. The availability of standardized protocols for menus/browsers, e-mail, and chat removed the advantage of being a larger ISP and led to the creation of thousands of smaller providers. If you are on AOL, you can still exchange e-mail with your sister in Boston who is an IBM network customer.

This situation may well change in the future as new Internet technology allows providers to offer differential quality of service for applications such as video conferencing. A large ISP may gain an advantage based on the technological fact that it is easier to control quality of service for traffic that stays on a single network. Video conferencing with your sister in Boston could be a lot easier if you are both on the same network—creating a significant network externality that could well alter the structure of the ISP industry and lead to greater consolidation and concentration. A number of observers have expressed concern that the proposed acquisition of MCI by Worldcom will permit Worldcom to gain dominance by providing superior service to customers whose traffic stays entirely on Worldcom’s network.

Our point is that you need to think carefully about the magnitude and significance of network externalities in your industry. Ford used to offer costly rebates and sell thousands of Tauruses to Hertz (which it owns) to gain the title of best-selling car. But was it really worth it? Who buys a car just because other people buy it? Don’t let the idea of positive feedback carry you away: not every market tips.

Will your market tip toward a single dominant technology or vendor? This is a critical question to ask before forging ahead with any of the basic strategies we have just described. If your market is a true winner-take-all market subject to such tipping, standardization may be critical for the market to take off at all. Plus, these same positive feedback conditions make it very risky to compete because of the dark side of positive feedback: a necessary implication of “winner-take-all” is “loser-gets-nothing.” On the other hand, if there is room for several players in your industry, competition takes on a different tone than if there will be only one survivor in a standards war.

Table 7.1. Likelihood of Market Tipping to a Single Technology

| | Low Economies of Scale | High Economies of Scale |
|---|---|---|
| Low demand for variety | Unlikely | High |
| High demand for variety | Low | Depends |

Whether a market tips or not depends on the balance between two fundamental forces: economies of scale and variety. See Table 7.1 for a classification.
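As a rough illustration, the classification in Table 7.1 can be written as a simple lookup. This is only a sketch: the function name and the "low"/"high" string encoding are our own assumptions for the example, and real markets rarely sort so cleanly into two levels.

```python
# Table 7.1 as a lookup:
# (demand for variety, economies of scale) -> likelihood of tipping.
# The "low"/"high" keys are an illustrative encoding, not part of the table.
TIPPING_LIKELIHOOD = {
    ("low", "low"): "Unlikely",
    ("low", "high"): "High",
    ("high", "low"): "Low",
    ("high", "high"): "Depends",
}


def tipping_likelihood(demand_for_variety: str, economies_of_scale: str) -> str:
    """Return the Table 7.1 entry for the given market characteristics."""
    key = (demand_for_variety.lower(), economies_of_scale.lower())
    if key not in TIPPING_LIKELIHOOD:
        raise ValueError("both arguments must be 'low' or 'high'")
    return TIPPING_LIKELIHOOD[key]


# A market with uniform tastes and strong scale economies is the tippy case.
print(tipping_likelihood("low", "high"))
```

The "Depends" cell is the interesting one: when both forces are strong, the table alone cannot settle the question, and you have to weigh scale against variety case by case.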

Strong scale economies, on either the demand or the supply side of the market, will make a market tippy. But standardization typically entails a loss of variety, even if the leading technology can be implemented with a broad product line. If different users have highly distinct needs, the market is less likely to tip. In high-definition television (HDTV), different countries use different systems, both because of the legacy of earlier incompatible systems and because of the tendency to favor domestic firms over foreign ones. As a result, the worldwide market has not tipped, although each individual country has. The fact is, most network externalities in television do not cross national or regional borders: not very many people want to take a TV from the United States to Japan, so little is lost when different regions use incompatible transmission standards.

We’ve emphasized demand-side scale economies, but tippiness depends on the sum total of all scale economies. True, the strongest positive feedback in information industries comes on the demand side, but you should not ignore the supply side in assessing tipping. Traditional economies of scale that are specific to each technology will amplify demand-side economies of scale. So, too, will dynamic scale economies that arise based on learning-by-doing and the experience curve.

Even though we started this section by saying that there are no significant demand-side economies of scale for IBM-compatible personal computers, it doesn’t follow that this market is immune from positive feedback since there may well be significant economies of scale on the production side of the market. Four companies, Compaq, Dell, HP, and IBM, now control 24 percent of the market for personal computers, and some analysts expect this fraction to grow, claiming that these companies can produce desktop boxes at a smaller unit cost than their smaller competitors. This may be so, but it is important to recognize that this is just old-fashioned supply-side economies of scale; these different brands of personal computers interoperate well enough that demand-side economies of scale are not particularly important.

Information goods and information infrastructure often exhibit both demand-side and supply-side economies of scale. One reason Digital Equipment Corporation has had difficulty making its Alpha chip fly as an alternative to Intel chips, despite its impressive performance, is that Digital lacks the scale to drive manufacturing costs down. Digital is now hoping to overcome that obstacle by sourcing its chips from Intel and Samsung, which can operate chip fabrication facilities at far greater scale than Digital has achieved. Still, whether Digital can attract enough partners to generate positive feedback for the Alpha chip remains to be seen. The United States and Europe are currently competing to convince countries around the world to adopt their HDTV formats. Tipping may occur for HDTV not based on network effects but because of good old-fashioned economies of scale in making television sets.

We have emphasized the network nature of information technology, with many of our examples coming from the hardware side. The same effects occur on the software side. It is hard for a new virtual reality product to gain market share without people having access to a viewer for that product … but no one wants to buy a viewer if there is no content to view.

However, the Internet has made this chicken-and-egg problem a lot more manageable. Now you can download the viewer prior to, or even concurrently with, downloading the content. Want to read a PDF file? No problem—click over to Adobe’s site and download the latest version of Acrobat. New technologies like Marimba even allow your system to upgrade its viewers over the Internet automatically. If your viewer is written in Java, you can download the viewer along with the content. It’s like using your computer to download the fax machine along with the fax!

The Internet distribution of new applications and standards is very convenient and reduces some of the network externalities for software by reducing switching costs. Variety can be supported more easily if an entire system can be offered on demand. But the Internet certainly doesn’t eliminate network externalities in software. Interoperability is still a big issue on the production side: even if users can download the appropriate virtual reality viewer, producers won’t want to produce to half-a-dozen different standards. In fact, it’s because of this producer resistance that Microsoft and Netscape agreed on a Virtual Reality Markup Language standard, as we discuss in Chapter 8.

IGNITING POSITIVE FEEDBACK: PERFORMANCE VERSUS COMPATIBILITY

What does it take for a new technology to succeed in the market? How can a new technology get into a virtuous cycle rather than a vicious one? Philips and Sony certainly managed it when they introduced compact disks in the early 1980s. Fifteen years later, phonographs and long-playing records (LPs) are scarce indeed; our children hardly know what they are.

How can you make network externalities work for you to launch a new product or technology? How can you overcome collective switching costs and build a new network of users? Let there be no doubt: building your own base of users for a new technology in the face of an established network can be daunting. There are plenty of failures in consumer electronics alone, not to mention more arcane areas. Indeed, Sony and Philips have had more than a little trouble duplicating their CD feat. They teamed up to introduce digital audio tape (DAT) in 1987, which offered the sound quality of CD along with the ability to record music. But DAT bombed, in part because of the delays based on concerns about copy protection.

Philips tried on its own with the digital compact cassette (DCC) in 1992. These cassettes had the advantage that DCC machines (unlike DAT machines) could play conventional cassettes, making the new technology backward compatible. But the sound quality of the DCC offered no big improvement over conventional CDs. Without a compelling reason to switch, consumers refused to adopt the new technology. Sony, too, had its own offering around this time, the minidisk. While minidisks are still around (especially in Japan), this product never really got on the positive feedback curve, either.

There are two basic approaches for dealing with the problem of consumer inertia: the evolution strategy of compatibility and the revolution strategy of compelling performance. Combinations are possible, but the key is to understand these two fundamental approaches. These strategies reflect an underlying tension when the forces of innovation meet up with network externalities: is it better to wipe the slate clean and come up with the best product possible (revolution) or to give up some performance to ensure compatibility and thus ease consumer adoption (evolution)?

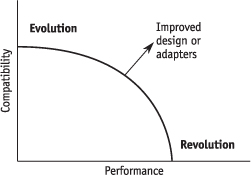

Figure 7.4. Performance versus Compatibility

Figure 7.4 illustrates the trade-off. You can improve performance at the cost of increasing customer switching costs, or vice versa. An outcome of high compatibility with limited performance improvement, in the upper-left corner of the figure, characterizes the evolution approach. An outcome of little or no compatibility but sharply superior performance, in the lower-right corner of the figure, characterizes the revolution approach. Ideally, you would like to have an improved product that is also compatible with the installed base, but technology is usually not so forgiving, and adapters and emulators are notoriously buggy. You will inevitably face the trade-off in Figure 7.4.

EVOLUTION: OFFER A MIGRATION PATH

The history of color television in the United States, discussed later in the chapter, teaches us that compatibility with the installed base of equipment is often critical to the launch of a new generation of technology. The CBS color system, incompatible with existing black-and-white sets, failed despite FCC endorsement as the official standard. When compatibility is critical, consumers must be offered a smooth migration path to a new information technology. Taking little baby steps toward a new technology is a lot easier than making a gigantic leap of faith.

The evolution strategy, which offers consumers an easy migration path, centers on reducing switching costs so that consumers can gradually try your new technology. This is what Borland tried to do in copying certain commands from Lotus 1-2-3. This is what Microsoft did by including in Word extensive, specialized help for WordPerfect users, as well as making it easy to convert WordPerfect files into Word format. Offering a migration path is evolutionary in nature. This strategy can be employed on a modest scale, even by a relatively small player in the industry.

In virtual networks, the evolution strategy of offering consumers a migration path requires an ability to achieve compatibility with existing products. In real networks, the evolution strategy requires physical interconnection to existing networks. In either case, interfaces are critical. The key to the evolution strategy is to build a new network by linking it first to the old one.

One of the risks of following the evolution approach is that one of your competitors may try a revolution strategy for its product. Compromising performance to ensure backward compatibility may leave an opening for a competitor to come in with a technologically superior product. This is precisely what happened to the dBase program in 1990 when it was challenged by Paradox, FoxPro, and Access in the market for relational database software.

Intel is facing this dilemma with its Merced chip. The 32-bit architecture of Intel’s recent chips has been hugely successful for Intel, but to move to a 64-bit architecture the company will have to introduce some incompatibilities—or will it? Intel claims that its forthcoming Merced chip will offer the best of both worlds, running both 32-bit and 64-bit applications. There is a lot of speculation about the Merced architecture, but Intel is keeping quiet about strategy, since it recognizes that it will be especially vulnerable during this transition.

Can you offer your customers an attractive migration path to a new technology? To lure customers, the migration path must be smooth, and it must lead somewhere. You will need to overcome two obstacles to execute this strategy: technical and legal.

Technical Obstacles

The technical obstacles you’ll face have to do with the need to develop a technology that is at the same time compatible with, and yet superior to, existing products. Only in this way can you keep customers’ switching costs low, by offering backward compatibility, and still offer improved performance. We’ll see in our example of high-definition television how this strategy can go awry: to avoid stranding existing TV sets in the early 1990s, the Europeans promoted a standard for the transmission of high-definition signals that conventional TV sets could decipher. But they paid a high price: the signal was not as sharp as true HDTV, and the technology bombed despite strong government pressure on the satellite industry to adopt it.

The thorny compatibility/performance trade-off is not unique to upstart firms trying to supplant market leaders. Those same market leaders face it as well. Microsoft held back the performance of Windows 95 so that users could run old DOS applications. Microsoft has clearly stated that Windows 95 is a transition operating system and that its eventual goal is to move everyone to Windows NT.

One way to deal with the compatibility/performance trade-off is to offer one-way compatibility. When Microsoft offered Office 97 as an upgrade to Office 95, it designed the file formats used by Office 97 to be incompatible with the Office 95 formats. Word 97 could read files from Word 95, but not the other way around. With this tactic, Microsoft could introduce product improvements while making it easy for Word 97 users to import the files they had created using older versions.
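One-way compatibility can be sketched in a few lines. The version numbers and function names below are hypothetical stand-ins for the two Word file formats, chosen only to illustrate the logic: a newer reader accepts older files, but not the reverse.

```python
# Hypothetical version numbers standing in for the two file formats.
FORMAT_95 = 1
FORMAT_97 = 2


def can_open(reader_version: int, file_version: int) -> bool:
    """A reader opens files in its own format or any earlier format."""
    return file_version <= reader_version


def can_share_both_ways(version_a: int, version_b: int) -> bool:
    """Two users can exchange files freely only if each can open the other's."""
    return can_open(version_a, version_b) and can_open(version_b, version_a)


print(can_open(FORMAT_97, FORMAT_95))             # upgrader reads old files
print(can_open(FORMAT_95, FORMAT_97))             # laggard cannot read new files
print(can_share_both_ways(FORMAT_97, FORMAT_95))  # mixed pair cannot share freely
```

Under this rule, full two-way sharing requires that both users run the same version, which is exactly the pressure that one-way compatibility puts on a mixed organization.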

This one-way compatibility created an interesting dynamic: influential early adopters had a hard time sharing files with their slower-to-adopt colleagues. Something had to give. Microsoft surely was hoping that organizations would shift everyone over to Office 97 to ensure full interoperability. However, Microsoft may have gone too far. When this problem became widely recognized, and potential users saw the costs of a heterogeneous environment, they began to delay deployment of Office 97. Microsoft’s response was to release two free applications: Word Viewer, for viewing Word 97 files, and Word Converter, for converting Word 97 files to Word 95 format.

Remember, your strategy with respect to selling upgrades should be to give the users a reason to upgrade and then to make the process of upgrading as easy as possible. The reason to upgrade can be a “pull” (such as desirable new features) or a “push” (such as a desire to be compatible with others). The difficulty with the push strategy is that users may decide not to upgrade at all, which is why Microsoft eventually softened its “incompatibility” strategy.

In some cases, the desire to maintain compatibility with previous generations has been the undoing of market leaders. The dBase programming language was hobbled because each new version of dBase had to be able to run programs written for all earlier versions. Over time, layers of dBase programming code accumulated on top of each other. Ashton-Tate, the maker of dBase, recognized that this resulted in awkward “bloatware,” which degraded the performance of dBase. Unable to improve dBase in a timely fashion, and facing competition from Borland’s more elegant, object-oriented, relational database program, Paradox, Ashton-Tate saw dBase’s fortunes fall sharply. Ashton-Tate was slain by the dark side of positive feedback. Ultimately, Borland acquired Ashton-Tate with the idea of migrating the dBase installed base to Paradox.

We offer three strategies for helping to smooth user migration paths to new technologies:

Use creative design. Good engineering and product design can greatly ease the compatibility/performance trade-off. As shown in Figure 7.4, improved designs shift the entire trade-off between compatibility and performance favorably. Intensive effort in the early 1950s by engineers at NBC enabled them to offer a method of transmitting color television signals so that black-and-white sets could successfully receive these same signals. The breakthrough was the use of complex electronic methods that converted the three color signals (red, green, and blue) into two signals (luminance and color).

Think in terms of the system. Remember, you may be making only one component, but the user cares about the whole system. To ease the transition to digital television, the FCC is loaning broadcasters extra spectrum space so they can broadcast both conventional and HDTV digital signals, which will ease the burden of switching costs.

Consider converters and bridge technologies. HDTV is again a good example: once broadcasters cease transmitting conventional TV signals, anyone with an analog television will have to buy a converter to receive digital over-the-air broadcasts. This isn’t ideal, but it still offers a migration path to the installed base of analog TV viewers.

Legal Obstacles

The second kind of obstacle you’ll find as you build a migration path is legal and contractual: you need to have or obtain the legal right to sell products that are compatible with the established installed base of products. Sometimes this is not an issue: there are no legal barriers to building TV sets that can receive today’s broadcast television signals. But sometimes this kind of barrier can be insurmountable. Incumbents with intellectual property rights over an older generation of technology may have the ability to unilaterally blockade a migration path. Whether they use this ability to stop rivals in their tracks, or simply to extract licensing revenues, is a basic strategy choice for these rights holders. For example, no one can sell an audio machine in the United States that will play CDs without a license from Philips and Sony, at least until their patents expire. Sony and Philips used their power over CD technology in negotiating with Time Warner, Toshiba, and others over the DVD standard. As a result, the new DVD machines will be able to read regular audio CDs; they will also incorporate technology from Sony and Philips.

REVOLUTION: OFFER COMPELLING PERFORMANCE

The revolution strategy involves brute force: offer a product so much better than what people are using that enough users will bear the pain of switching to it. Usually, this strategy works by first attracting customers who care the most about performance and working down from there to the mass market. Sony and Philips appealed first to the audiophiles, who then brought in the more casual music listeners when prices of machines and disks fell. Fax machines first made inroads in the United States for exchanging documents with Japan, where the time and language differences made faxes especially attractive; from this base, the fax population exploded. HDTV set manufacturers are hoping to first sell to the so-called vidiots, those who simply must have the very best quality video and the largest TV sets available. The trick is to offer compelling performance to first attract pioneering and influential users, then to use this base to start up a bandwagon propelled by self-fulfilling consumer beliefs in the inevitable success of your product.

How big a performance advance must you offer to succeed? Andy Grove speaks of the “10X” rule of thumb: you need to offer performance “ten times better” than the established technology to start a revolution. We like the idea, and certainly agree that substantial improvements in performance are necessary to make the revolution strategy work. But in most applications performance cannot easily be reduced to a single measure, as implied by the 10X rule. Also, as economists, we must point out that the magnitude of switching costs enters into the calculation, too. Sega’s ability to make inroads against Nintendo in the video game business in the early 1990s was aided by the presence of lots of customers with low switching costs: there is a new crop of ten-year-old boys every year who are skilled at convincing Mom and Dad that they just have to get the system with the coolest new games or graphics.

Likewise, a growing market offers more opportunities to establish a beachhead against an established player. New customers alone can provide critical mass. More generally, a rapidly growing market tends to enhance the attractiveness of the revolution strategy. If the market is growing rapidly, or if consumer lock-in is relatively mild, performance looms larger relative to backward compatibility.

The revolution strategy is inherently risky. It cannot work on a small scale and usually requires powerful allies. Worse yet, it is devilishly difficult to tell early on whether your technology will take off or crash and burn. Even the successful technologies start off slowly and accelerate from there, following the logistic, or S-shaped, growth pattern we noted earlier.
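To see why early results are so hard to read, it helps to trace an S-shaped curve numerically. The sketch below uses the standard logistic function; the midpoint and rate parameters are arbitrary assumptions chosen for illustration, not estimates for any real technology.

```python
import math


def adoption_share(t: float, midpoint: float = 5.0, rate: float = 1.0) -> float:
    """Fraction of eventual users who have adopted by time t (logistic curve).

    midpoint: time at which half of the eventual users have adopted (assumed).
    rate: steepness of the takeoff (assumed).
    """
    return 1.0 / (1.0 + math.exp(-rate * (t - midpoint)))


# Growth is barely visible at first, fastest near the midpoint,
# and flattens as the market saturates.
for year in range(0, 11, 2):
    print(year, round(adoption_share(year), 3))
```

With these assumed parameters, adoption in the first year is a tiny fraction of what it is in the year just before the midpoint, so a technology headed for success and one headed for failure can look much alike early on.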

IGNITING POSITIVE FEEDBACK: OPENNESS VERSUS CONTROL

Anyone launching a new technology must also face a second fundamental trade-off, in addition to the performance/compatibility trade-off. Do you choose an “open” approach by offering to make the necessary interfaces and specifications available to others, or do you attempt to maintain control by keeping your system proprietary? This trade-off is closely related to our discussion of lock-in in Chapters 5 and 6.

Proprietary control will be exceedingly valuable if your product or system takes off. As we discussed in Chapter 6, an installed base is more valuable if you do not face rivals who can offer products to locked-in customers. Likewise, your network is far more valuable if you can control the ability of others to interconnect with you. Intel’s market capitalization today would be far less if Intel had previously agreed to license all the intellectual property embodied in its Pentium chips to many rival chip makers.

However, failure to open up a technology can spell its demise, if consumers fear lock-in or if you face a strong rival whose system offers comparable performance but is nonproprietary. Sony faced precisely this problem with its Beta video cassette recorder system and lost out to the more open VHS system, which is now the standard. Openness will bolster your chances of success by attracting allies and assuring would-be customers that they will be able to turn to multiple suppliers down the road.

Which route is best, openness or control? The answer depends on whether you are strong enough to ignite positive feedback on your own. Strength in network markets is measured along three primary dimensions: existing market position, technical capabilities, and control of intellectual property such as patents and copyrights. In Chapter 9 we will explore more fully the key assets that determine companies’ strengths in network markets.

Of course, there is no one right choice between control and openness. Indeed, a single company might well choose control for some products and openness for others. Intel has maintained considerable control over the MMX multimedia specification for its Pentium chips. At the same time, Intel recently promoted new, open interface specifications for graphics controllers, its accelerated graphics port (AGP), so as to hasten improvements in visual computing and thus fuel demand for Intel’s microprocessors. Intel picked control over MMX, but openness for AGP.

In choosing between openness and control, remember that your ultimate goal is to maximize the value of your technology, not your control over it. This is the same point we discussed in the case of intellectual property rights in Chapter 4. Ultimately, your profits will flow from the competitive advantages you can retain while assembling enough support to get your technology off the ground.

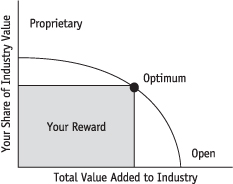

Think of your reward using this formula:

Your reward = Total value added to industry × your share of industry value

The total value added to the industry depends first on the inherent value of the technology—what improvement it offers over existing alternatives. But when network effects are strong, total value also depends on how widely the technology is adopted—that is, the network size. Your share of the value added depends on your ultimate market share, your profit margin, any royalty payments you make or receive, and the effects the new technology has on your sales of other products. Does it cannibalize or stimulate them?

Roughly speaking, strategies to achieve openness emphasize the first term in this formula, the total value added to the industry. Strategies to achieve control emphasize the second term, your share of industry value. We will focus on openness strategies in Chapter 8 and on control strategies in Chapter 9.
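The reward formula lends itself to a quick back-of-the-envelope comparison. In the sketch below, every number is hypothetical and chosen purely for illustration; the point is only that a small share of a large total can beat a large share of a small one.

```python
def reward(total_value_added: float, your_share: float) -> float:
    """Your reward = total value added to industry x your share of that value."""
    if not 0.0 <= your_share <= 1.0:
        raise ValueError("your_share must be between 0 and 1")
    return total_value_added * your_share


# Hypothetical scenarios (all figures invented for illustration):
# control keeps a big share of a small pie, openness a small share of a big pie.
scenarios = {
    "control": reward(total_value_added=100.0, your_share=0.80),
    "openness": reward(total_value_added=1000.0, your_share=0.15),
}

best = max(scenarios, key=scenarios.get)
print(scenarios, best)
```

With different assumed numbers the ranking flips, which is why the choice depends on how much openness actually enlarges the total and how much share you must give up to get it.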

The fundamental trade-off between openness and control is shown in Figure 7.5: you can have a large share of a small market (the upper-left portion of the diagram), or a small share of a large market (the lower-right portion of the diagram). Unless you have made a real technical breakthrough or are extremely lucky, it is almost impossible to have it both ways. At the optimum, you choose the approach that maximizes your reward—that is, the total value you receive.

Figure 7.5. Openness versus Control

This trade-off is fundamental in network markets. To maximize the value of your new technology, you will likely need to share that value with other players in the industry. This comes back to the point we have made repeatedly: information technology is composed of systems, and an increase in the value of one component necessarily spills over to other components. Capturing the value from improvements to one component typically requires the cooperation of those providing other components. Count on the best of those suppliers to insist on getting a share of the rewards as a condition for their cooperation.

Unless you are in a truly dominant position at the outset, trying to control the technology yourself can leave you a large share of a tiny pie. Opening up the technology freely can fuel positive feedback and maximize the total value added of the technology. But what share of the benefits will you be able to preserve for yourself? Sometimes even leading firms conclude that they would rather grow the market quickly, through openness, than maintain control. Adobe did this with its PostScript language, and Sun followed its example with Java.

The boundary between openness and control is not sharp; intermediate approaches are frequently used. For example, a company pursuing an openness strategy can still retain exclusive control over changes to the technology, as Sun is attempting to do with Java. Likewise, a company pursuing a control strategy can still offer access to its network for a price, as Nintendo did by charging royalties to game developers who were writing games for its Nintendo Entertainment System.

Openness

The openness strategy is critical when no one firm is strong enough to dictate technology standards. Openness also arises naturally when multiple products must work together, making coordination in product design essential.

Openness is a more cautious strategy than control. The underlying idea is to forsake control over the technology to get the bandwagon rolling. If the new technology draws on contributions from several different companies, each agrees to cede control over its piece in order to create an attractive package: the whole is greater than the sum of the parts.

The term openness means many things to many people. The Unix X/Open consortium defines open systems as “systems and software environments based on standards which are vendor independent and commonly available.”

As we emphasized in our discussion of lock-in, beware vague promises of openness. Openness may be in the eye of the beholder. Netscape insists that it is congenitally open, but some observers detect efforts by Netscape to keep control. Cisco is often lauded for using open Internet standards for its routers and switches, but, again, some see a deep proprietary streak there, too.

Openness involves more than technical specifications; timing is also important. Microsoft has been accused of keeping secret certain application programming interfaces (APIs), in violation of its earlier promises that Windows would be open. Even harder to assess, independent software vendors (ISVs) have at times been very concerned that Microsoft provides APIs for new versions of Windows to its in-house development team before giving them to the ISVs. To some extent this seems inevitable as part of improving the operating system and making sure it will work smoothly with new applications. On the other hand, ISVs are justifiably unhappy when placed at a competitive disadvantage relative to Microsoft’s own programmers, especially since they already face the threat of having their program’s functionality subsumed into the operating system itself.

Within the openness category, we can fruitfully distinguish between a full openness strategy and an alliance strategy for establishing new product standards. We study full openness and alliance strategies in Chapter 8 in the context of standards negotiations.

Under full openness, anybody has the right to make products complying with the standard, whether they contributed to its development or not. Under an alliance approach, each member of the alliance contributes something toward the standard, and, in exchange, each is allowed to make products complying with the standard. Nonmembers can be blocked from offering such products or charged for the right to do so. In other words, the alliance members all have guaranteed (usually free) access to the network they have created, but outsiders may be blocked from accessing it or required to pay a special fee for such access.

In some industries with strong network characteristics, full openness is the only feasible approach. For years, basic telecommunications standards have been hammered out by official standard-setting bodies, either domestically or internationally. The standard-setting process at the International Telecommunication Union (ITU), for example, has led to hundreds of standards, including those for fax machines and modems. The ITU, like other formal standard-setting bodies, insists, as a quid pro quo for endorsement of a standard, that no single firm or group of firms maintains proprietary control over the standard. We will discuss tactics in formal standard setting in detail in Chapter 8.

The full openness strategy is not confined to formal standard setting, however. Whatever the institutional setting, full openness is a natural way of overcoming a stalemate in which no single firm is in a position to drive home its preferred standard without widespread support.

One way to pursue a full openness strategy is to place the technology in the hands of a neutral third party. Even this approach can be plagued with difficulties, however. Is the third party really neutral, or just a cover operation for the company contributing the technology? Doubts have arisen, for example, over whether Microsoft has really ceded control of ActiveX. We’ll address ActiveX more fully in the next chapter.

In the end, it’s worth asking who really wants openness and how everyone’s interests are likely to evolve as the installed base grows or competition shifts. Usually, the upstart wants openness to neutralize installed-base disadvantages or to help assemble allies. In the Internet arena, where Microsoft was a latecomer, it initially pushed for open standards. Open Internet standards, at least initially, shift competition to marketing, brand name, and distribution, where Microsoft is strong. In desktop applications, where Microsoft is the dominant player, the company has not pushed for open standards and, it is claimed, has actively resisted them.

Alliances are increasingly commonplace in the information economy. We do not mean those so-called strategic alliances involving widespread cooperation between a pair of companies. Rather, we mean an alliance formed by a group of companies for the express purpose of promoting a specific technology or standard. Alliances typically involve extensive wheeling and dealing, as multiple players negotiate based on the three key assets: control of the existing installed base, technical superiority, and intellectual property rights.

The widely heralded convergence between the computer and telecommunications industries offers many opportunities for alliances. Recently, for example, Compaq, Intel, and Microsoft announced a consortium for setting standards for digital subscriber line (DSL) technology, which promises to offer high-speed access to the Internet over residential phone lines. These three superstars of the information industry have partnered with seven of the eight regional Bell operating companies to promote unified hardware and software interfaces.

Alliances come in many forms, depending on the assets that the different players bring to the table. Some of them operate as “special interest groups” (SIGs) or “task forces,” groups of independent companies that meet to coordinate product standards, interfaces, protocols, and specifications. Cross-licensing of critical patents is common in this context, as is sharing of confidential design information under nondisclosure agreements. Some players hope to achieve royalty income and negotiate royalty arrangements that will attract critical allies. Others hope to gain from manufacturing skills or time-to-market prowess, so long as they are not blocked by patents or excessive royalty payments.

Alliances span the distance between full openness and control. At one end of the spectrum is an alliance that makes the technology freely available to all participants, but not (necessarily) to outsiders. Automatic teller machine networks and credit card networks work this way. For example, Visa and MasterCard both require merchant banks to make payments to card-issuing banks in the form of “interchange fees” as a means of covering the costs and risks borne by card-issuing banks, but the Visa and MasterCard associations themselves impose only modest fees on transactions to cover their own costs. And membership in Visa and MasterCard is generally open to any bank, so long as that bank does not issue rival cards, such as the Discover card.

At the other end of the spectrum is an alliance built like a web around a sponsor, a central actor that collects royalties from others, preserves proprietary rights over a key component of the network, and/or maintains control over the evolution of the technology. We described how Apple is the sponsor of the Macintosh network. Likewise, Sun is the sponsor of Java. If the sponsor charges significant royalties or retains exclusive rights to control the evolution of the technology, we would classify that situation as one of control, not openness. Sun is walking a thin line, wanting to retain its partners in the battle with Microsoft but also wanting to generate revenues from its substantial investment in Java.

Control

Only those in the strongest position can hope to exert strong control over newly introduced information technologies. Usually these are market leaders: AT&T was a prime example in its day; Microsoft, Intel, TCI, and Visa are examples today. In rare cases, strength flows from sheer technical superiority: at one time or another, Apple, Nintendo, Sony, Philips, and Qualcomm have all been in this privileged position.

Companies strong enough to unilaterally control product standards and interfaces have power. Even if they are not being challenged for supremacy, however, they have much to lose by promoting poorly conceived standards. For example, Microsoft is not about to lose its leadership position in desktop operating systems, even if it slips up when designing new APIs between its operating system and applications or makes some design errors in its next release of Windows. But this is not to say that Microsoft can be reckless or careless in this design process: Microsoft still needs to attract independent software developers to its platform, it still has powerful incentives to improve Windows to drive upgrade sales and reach new users, and it wants the overall Windows “system” to improve to make further inroads against Unix-based workstations.

GENERIC STRATEGIES IN NETWORK MARKETS

We are now ready to introduce the four generic strategies for companies seeking to introduce new information technology into the marketplace. These four strategies for igniting positive feedback follow logically from the two basic trade-offs discussed in the previous sections: (1) the trade-off between performance and compatibility, as reflected in the choice between revolution and evolution, and (2) the trade-off between openness and control. Combining these two trade-offs yields the four generic strategies shown in Table 7.2.

The first row in Table 7.2 represents the choice of compatibility, the evolution strategy. The second row represents the choice to accept incompatibility in order to maximize performance, the revolution strategy. Either of these approaches can be combined with openness or control. The left-hand column in Table 7.2 represents the decision to retain proprietary control, the right-hand column the decision to open up the technology to others.

Table 7.2. Generic Network Strategies

| | Control | Openness |
| --- | --- | --- |
| Compatibility | Controlled migration | Open migration |
| Performance | Performance play | Discontinuity |

The four generic network strategies that emerge from this analysis can be found in Table 7.2: performance play, controlled migration, open migration, and discontinuity. In the next few pages, we describe the four strategies, say a bit about their pros and cons, and give examples of companies that have pursued them. We offer a more in-depth discussion of how the generic strategies work and when to use them in Chapters 8 and 9.
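
The two choices behind Table 7.2 can also be written out as a simple lookup. What follows is only an illustrative sketch in Python: the names `Path`, `Stance`, and `generic_strategy` are hypothetical labels introduced here, not part of any established tool, and the sample calls reuse examples discussed in this chapter.

```python
from enum import Enum


class Path(Enum):
    """Row of Table 7.2: evolution (compatibility) or revolution (performance)."""
    EVOLUTION = "compatibility"
    REVOLUTION = "performance"


class Stance(Enum):
    """Column of Table 7.2: proprietary control or openness."""
    CONTROL = "control"
    OPENNESS = "openness"


# The four cells of Table 7.2, keyed by (row, column).
GENERIC_STRATEGIES = {
    (Path.EVOLUTION, Stance.CONTROL): "controlled migration",
    (Path.EVOLUTION, Stance.OPENNESS): "open migration",
    (Path.REVOLUTION, Stance.CONTROL): "performance play",
    (Path.REVOLUTION, Stance.OPENNESS): "discontinuity",
}


def generic_strategy(path: Path, stance: Stance) -> str:
    """Return the generic strategy implied by the two trade-off choices."""
    return GENERIC_STRATEGIES[(path, stance)]


# Examples taken from the chapter's own discussion:
nintendo_nes = generic_strategy(Path.REVOLUTION, Stance.CONTROL)   # incompatible, proprietary
windows_98 = generic_strategy(Path.EVOLUTION, Stance.CONTROL)      # compatible, proprietary
fax_machines = generic_strategy(Path.EVOLUTION, Stance.OPENNESS)   # compatible, many vendors
cd_audio = generic_strategy(Path.REVOLUTION, Stance.OPENNESS)      # incompatible, many vendors
```

The point of the sketch is simply that a strategy is fully determined once both choices are made; in practice, of course, each choice is a matter of degree rather than a clean either/or.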

These four generic strategies arise again and again. The players and the context change, but not these four strategies. Incumbents may find it easier to achieve backward compatibility, but entrants and incumbents alike must choose one of our generic strategies. In some markets, a single firm or coalition is pursuing one of the generic strategies. In other cases, two incompatible technologies are engaged in a battle to build their own new networks. In these standards wars, which we explore in Chapter 9, the very nature of the battle depends on the pair of generic strategies employed by the combatants.

Performance Play

Performance play is the boldest and riskiest of the four generic strategies. A performance play involves the introduction of a new, incompatible technology over which the vendor retains strong proprietary control. Nintendo followed this approach when it introduced its Nintendo Entertainment System in the mid-1980s. More recently, U.S. Robotics used the performance play with its Palm Pilot device. Iomega did likewise in launching its Zip drive.

Performance play makes the most sense if your advantage is primarily based on the development of a striking new technology that offers users substantial advantages over existing technology. Performance play is especially attractive to firms that are outsiders, with no installed base to worry about. Entrants and upstarts with compelling new technology can more easily afford to ignore backward compatibility and push for an entirely new technology than could an established player who would have to worry about cannibalizing sales of existing products or stranding loyal customers.

Even if you are a new entrant to the market with “way cool” technology, you may need to consider sacrificing some performance so as to design your system to reduce consumer switching costs; this is the controlled migration strategy. You also need to assess your strength and assemble allies as needed. For example, you might agree to license your key patents for small or nominal royalties to help ignite positive feedback. The more allies you need and the more open you make your system, the closer you are to the discontinuity strategy than to the performance play.

Controlled Migration

In controlled migration, consumers are offered a new and improved technology that is compatible with their existing technology, but is proprietary. Windows 98 and the Intel Pentium II chip are examples of this strategy. Upgrades and updates of software programs, like the annual release of TurboTax by Intuit, tend to fit into this category as well. Such upgrades are offered by a single vendor, they can read data files and programming created for earlier versions, and they rely on many of the same skills that users developed for earlier versions.

If you have secure domination in your market, you can introduce the new technology as a premium version of the old technology, selling it first to those who find the improvements most valuable. Thus, controlled migration often is a dynamic form of the versioning strategy described in Chapter 3. Controlled migration has the further advantage of making it harder for an upstart to leapfrog ahead of you with a performance play.

Open Migration

Open migration is very friendly to consumers: the new product is supplied by many vendors and requires little by way of switching costs. Multiple generations of modems and fax machines have followed the open migration model. Each new generation conforms to an agreed-upon standard and communicates smoothly with earlier generations of machines.

Open migration makes the most sense if your advantage is primarily based on manufacturing capabilities. In that case, you will benefit from a larger total market and an agreed-upon set of specifications, which will allow your manufacturing skills and scale economies to shine. Owing to its fine engineering and skill in manufacturing, Hewlett-Packard has commonly adopted this strategy.

Discontinuity

Discontinuity refers to the situation in which a new product or technology is incompatible with existing technology but is available from multiple suppliers. The introduction of the CD audio system and the 3½″ floppy disk are examples of discontinuity. Like open migration, discontinuity favors suppliers that are efficient manufacturers (in the case of hardware) or that are best placed to provide value-added services or software enhancements (in the case of software).

HISTORICAL EXAMPLES OF POSITIVE FEEDBACK

The best way to get a feel for these strategies is to see them in action. In practice, the revolution versus evolution choice emerges in the design of new product standards and negotiation over those standards. The openness versus control choice arises when industry leaders set the terms on which their networks interconnect.

Fortunately, positive feedback and network externalities have been around for a while, so history can be our guide. As we have stressed, while information technology is hurtling forward at breathtaking speeds, the underlying economic principles are not all that novel. Even in this consummately high-tech area of standards, networks, interfaces, and compatibility, there is much to learn from history.

The case studies that follow illustrate the generic strategies and foreshadow some of the key strategic points we will develop in the next two chapters. All of our examples illustrate positive feedback in action: the triumph of one technology over others, in some cases by virtue of a modest head start or a fleeting performance advantage. One of the great attractions of historical examples is that we can see what happened after the dust finally settles, giving us some needed perspective in analyzing current battles.

When you stop to think about it, compatibility and standards have been an issue for as long as human beings have used spoken language or, more to the point, multiple languages. The Tower of Babel reminds us that standardization is hard. You don’t hear Esperanto spoken very much (though its promoters do have a site on the Web). English has done remarkably well as an international language for scientific and technical purposes and is being given an extra boost by the Internet, but language barriers have hardly been eliminated.

Turning from biblical to merely historical times, Eli Whitney amazed President John Adams in 1798 by disassembling a dozen muskets, scrambling the parts, and then reassembling them in working order. As a result, Whitney received a government contract for $134,000 to produce 10,000 army muskets using his “uniformity system.” This standardization of parts allowed for mass production and ushered in the American industrial revolution.

A humorous standards battle of sorts was triggered by the invention of the telephone. The early telephone links involved a continuously open line between two parties. Since the phone did not ring, how was the calling party to get the attention of those on the other end of the line? Thomas Edison consciously invented a brand-new word designed to capture the attention of those on the other end: “Hello!” This was a variant of the English “Hallow!” but reengineered by Edison to make it more effective. Edison, who was hard of hearing, estimated that a spoken “Hello!” could be heard ten to twenty feet away.

Soon thereafter, when telephones were equipped with bells to announce incoming calls, the more pressing issue was how to answer the telephone. This was a touchy issue; in the 1870s it was considered impolite to speak to anyone else unless you had been introduced! When Edison opened the first public telephone exchange (in New Haven, Connecticut, on January 28, 1878), his operating manuals promoted “Hello!” as the proper way to answer the phone. (“What is wanted?” was noted as a more cautious alternative.) At the same time, Alexander Graham Bell, the inventor of the telephone, proclaimed that “Ahoy!” was the correct way to answer the telephone. By 1880, “Hello” had won this standards battle. This is an early example of how control over distribution channels, which Edison had through his manuals, can lead to control over interface standards.

Railroad Gauges

A more instructive example of standards battles involves the history of railroad gauges in the United States during the nineteenth century.

As railroads began to be built in the early nineteenth century, tracks of varying widths (gauges) were employed. Somewhat arbitrary early choices had major, lasting impacts. One of the first railroads in the South, for example, the South Carolina, picked 5-foot gauge tracks. Over time, other railroads all over the South followed suit. In the North, by contrast, the “standard” gauge of 4′8½″, popular in England for mining, was common. Evidently, this was about the width of cart track in Roman times, being the most efficient width of a loaded vehicle that could be pulled by a flesh-and-blood (not iron) horse. The persistence of the 4′8½″ gauge, which now is standard in the United States, is a good reminder that inertia is a powerful and durable force when standards are involved and that seemingly insignificant historical events can lead to lasting technological lock-in.

By 1860, seven different gauges were in use in America. Just over half of the total mileage was of the 4′8½″ standard. The next most popular was the 5-foot gauge concentrated in the South. As things turned out, having different gauges was advantageous to the South, since the North could not easily use railroads to move its troops to battle in southern territory during the Civil War. Noting this example, the Germans were careful to ensure that their railroads used a gauge different from the Russian railroads! The rest of Europe adopted the same gauge, which made things easy for Hitler during World War II: a significant fraction of German troop movements in Europe were accomplished by rail.