Chapter 7. Intercloud Security

This chapter covers the following topics:

Customer Adoption Challenges for Cloud Computing: In this section, we will discuss the core concerns organizations have about cloud adoption.

Understanding the Security Landscape: In this section, we will discuss the current state of security threats related to cloud architectures and what organizations should do to address those threats.

Building a Cloud-Focused Security Program: In this section, we will discuss the ways organizations can build a comprehensive security program to address cloud adoption challenges.

Intercloud Security Architecture: In this section, we will present the security architecture of Intercloud and discuss different components of this architecture.

Intercloud Identity Architecture: In this section, we will present the identity architecture of a federated Intercloud partner ecosystem.

Cloud computing services have existed in the industry for some time now. However, each of these offerings bears the unique mark of its origins. Google App Engine is a PaaS, programming-API-based vision of cloud computing that is arguably designed to bring the developer to the Google “OS.” Amazon’s services are closer to the IaaS model, providing APIs to storage and machine images and bringing the user to the VM model of cloud.

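Of course, from within one of these clouds, code can explicitly issue calls through an API from one cloud provider to another. For example, code executing within Google App Engine can also reference storage residing on AWS Simple Storage Service (S3). Each such connection, however, is an ad hoc integration written against one provider’s proprietary API rather than a systematic way for clouds to work together.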

The Intercloud addresses this challenge by defining a systematic approach for clouds to interoperate. Standards groups, industry associations, and consortia are now accelerating efforts to create open interoperability specifications that define methods for connecting clouds together.

Security, a subject briefly discussed in earlier chapters, is one of the biggest hindrances to customers adopting cloud.1 The Cloud Security Alliance has been assessing cloud security scenarios; however, from a cloud security perspective, the Intercloud is still rather nascent.

Customer Adoption Challenges for Cloud Computing

Security is a unique domain that applies both horizontally and vertically across all defined architectures. A comprehensive, integrated security architecture that provides security controls at all levels, from access through physical and virtual infrastructure to applications, is imperative for any services delivery platform. Such an architecture gives CISOs (chief information security officers) and CSOs (chief security officers) a complete view of security across their IT organizations. As the threat landscape keeps evolving and organizations continue to struggle with operationalizing their security infrastructure, having adaptive security embedded in every aspect (element, segment, edge, virtual, and even transport) is critical.

One of the biggest customer challenges for cloud adoption is security. Organizations are concerned about the confidentiality, integrity, and sensitivity of their data once information is moved into the cloud. According to a study conducted by the Cloud Security Alliance, security concerns are cited as the number-one reason for not adopting cloud.2 This gives service and cloud providers a significant opportunity to offer industry- and standards-compliant infrastructures while providing end-to-end secure hosted IaaS, PaaS, and SaaS services. Aside from having to keep up with ever-changing security challenges and threats, organizations also struggle to manage their own security because of the growing number and complexity of security tools, operations, and management processes. The combination of complex threat vectors across all layers of the IT enterprise and the vast range of tooling options has also increased the need for large, highly skilled, and highly paid security staffs.

Additional security challenges related to cloud adoption include the following:

Threat protection: The IT infrastructure must be protected from both external and internal threats. Externally, infrastructure and applications must be protected from data loss, network and application attacks, and other sophisticated attacks using state-of-the-art malware and techniques. Once these threats are detected, they must be remediated as soon as possible to avoid data and productivity loss.

Challenges posed by new technology transitions: Multitenancy is a good example. Instead of having a physically dedicated infrastructure (servers, switches, storage) for each application, business unit, or function, large virtual and cloud infrastructures use multitenancy to logically separate those business groups that require a protected and trusted virtual computing environment. Data flow between these segmented environments must be secure, so that it goes into and out of only its assigned tenant and only persons or services with approved access to that tenant can add or retrieve the data.

Visibility: Maintaining compliance and providing visibility into the virtual and cloud data center are of primary concern. Customers want to ensure that the security controls that are used in the physical world are also present in the virtual domain.

Proliferation of mobile devices: IT departments are searching for standard or proven approaches that make bring your own device (BYOD) access to enterprise resources both secure and reliable. The task is dauntingly complex, and new and unforeseen consequences of BYOD crop up regularly.
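The multitenancy requirement above (data flows into and out of only its assigned tenant, and only approved persons or services can add or retrieve it) can be expressed as a simple access check. The following is a minimal Python sketch, not a product API: the `Request` fields, the tenant names, and the in-memory `APPROVED` table are hypothetical stand-ins for whatever identity and policy services a real platform would use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    principal: str        # user or service identity making the request
    tenant: str           # tenant the caller is acting within
    resource_tenant: str  # tenant that owns the data being read or written


# Hypothetical approval lists: which principals may touch each tenant's data.
APPROVED = {
    "tenant-a": {"alice", "billing-svc"},
    "tenant-b": {"bob"},
}


def is_allowed(req: Request) -> bool:
    """Allow access only inside the caller's own tenant and only for approved principals."""
    if req.tenant != req.resource_tenant:
        return False  # cross-tenant flow: data must stay within its assigned tenant
    return req.principal in APPROVED.get(req.tenant, set())
```

Denying by default (unknown tenants map to an empty set) keeps a misconfigured or newly created tenant closed until someone explicitly approves access.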

Taking an architectural approach addresses most of these security challenges by providing guidelines to

1. Harden an infrastructure before a security threat

2. Perform forensics and mitigation during a security incident

3. Perform postmortem procedures after an event to avoid similar incidents in the future

Customer Adoption Challenges for the Intercloud

As we move into the Intercloud, where data can theoretically reside with any cloud provider, security concerns increase tremendously. The security controls in your private cloud might differ from those of your preferred cloud provider, which in turn might be completely different from those of a cloud provider in another geography. How do you ensure that security controls are applied consistently across all providers within the Intercloud? Customers want to move to the cloud to ease the overall management of their infrastructure, but they also want a simple user experience when accessing their applications and data. If the data resides within the Intercloud among many cloud partners, customers want to ensure that

There is close identity integration with the Intercloud so that users do not have to authenticate multiple times while accessing their data

The security controls and policies are consistently applied even when data is being moved from one cloud provider to another within this ecosystem

Data movement does not impact the user experience, and users don’t have to manually decide which cloud to connect to

Understanding the Security Landscape

We all agree that the security landscape has changed. Everyone in the organization is a target. Users can act as a stepping stone for attackers to achieve their goals: accessing and exfiltrating your sensitive data. To defeat attackers, you must stay one step ahead of them by implementing the proper security controls to

Visualize the activities in your cloud environment

Mitigate malicious activities when they are discovered

The attackers are after your data; it is your responsibility to secure your house to protect your belongings. Even if your data is with a cloud provider, it is still your responsibility to ensure that the cloud provider has all the necessary controls in place to safeguard your data.

Attacker Goals

An attacker may attempt to use the Intercloud framework to launch various attacks against other systems. The Intercloud framework provides various functions that an attacker can abuse.

In general, an attacker may wish to do the following:

1. Compromise the confidentiality, authenticity, and availability of network functions and data flowing over the network.

2. Compromise the confidentiality, authenticity, and availability of network-attached devices and data on those devices.

3. Compromise the access control or availability of devices used by the Intercloud framework.

4. Compromise the access control or availability of services provided by the Intercloud framework.

5. Obtain services or resources under false pretenses.

The value of the affected assets can range from trivial to very large amounts of money.

An attacker is assumed to have access to network links and can therefore act as a man-in-the-middle, able to observe and modify all traffic on those links. The attacker could modify any portion of a message, including the address, header, and payload, and could selectively insert and delete messages. The attacker may not have access to all links simultaneously; however, the attacker can capture a packet at one location and replay it at another, either immediately or at some later time. Some links may be difficult to compromise because of physical security and other topological restrictions; however, such restrictions typically limit the ways in which the system can be deployed and are undesirable. If such restrictions are part of the solution, the design still needs to evaluate how attacks on other links can compromise the protected link (off-path attacks). The amount of resources you should spend on protecting data depends on the security of the link and the criticality of your data.

Resource-Based Attacks

Because the Intercloud framework exposes functions that can be abused, an attacker may also target or misuse the resources that make up the framework. The following subsections provide a few examples of such resource-based attacks.

Attacks on Devices and Hosts

An attacker may be able to compromise devices and hosts that make up the solution. In general, given enough resources, an attacker can compromise any host. However, not all hosts present the same risk of compromise. A host may be compromised remotely because of software design or implementation vulnerabilities. A host may also be compromised through local physical access. A host compromise may be complete, in which case all information on the host and all functions of the host are available to an attacker. A host may be compromised so that it can be used in an unauthorized manner without all of its information being exposed. A host may also be rendered unavailable without the functions it performs or the data it contains being compromised. A risk-based analysis should be conducted to understand the impact of a host being compromised across the different types of use cases. This helps identify which hosted functions and data are the most important to protect. Some hosts will be critical and require more resource expenditure by the customer and vendor to assure security. The analysis should also consider whether an attacker can use an authorized host in an inappropriate way.

A host or device may be partially or completely compromised by an attacker. If an attacker fully compromises a host, he or she can use the host or device to perform any function for which it is authorized and as a result can obtain and modify any data that is on the host. A compromise of the host, regardless of how minor it is, will result in loss of its data integrity and trustworthiness.
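The risk-based analysis recommended above is often done with a qualitative likelihood-times-impact score. The sketch below is one simple way to rank hosts that way; the 1-to-5 scales, the `Host` fields, and any host names used with it are illustrative assumptions rather than a prescribed methodology.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    likelihood: int  # 1 = hard to compromise ... 5 = exposed and weakly protected
    impact: int      # 1 = trivial functions/data ... 5 = critical functions/data


def risk_score(host: Host) -> int:
    """Qualitative risk score: likelihood of compromise multiplied by impact."""
    return host.likelihood * host.impact


def prioritize(hosts: list[Host]) -> list[Host]:
    """Order hosts from highest to lowest risk so protection effort goes to the top first."""
    return sorted(hosts, key=risk_score, reverse=True)
```

Hosts at the top of the resulting list are the ones where extra resource expenditure by the customer and vendor is most likely to pay off.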

Hypervisor Security

The hypervisor is the brain of the cloud infrastructure. It allows organizations and cloud providers to run multiple operating systems on a single host by sharing physical components such as memory, CPU, and disk. It also controls and monitors the overall system modules and their interactions with each other. One of the most concerning attacks is on the hypervisor of a host running production VMs. If the hypervisor itself is compromised, the integrity of the data and of the VMs hosting that data is called into question. Can you imagine a scenario where the hypervisor of a cloud provider becomes compromised? If it does, can you trust the integrity of the system and its data? Unfortunately, the answer is no. Once someone has access to the hypervisor, it is game over, and you must assume that all systems and data are compromised. Even if your virtual system is fully patched and secured, a hypervisor compromise trumps all system-level security controls. For example, a rogue VM may gain access to a host’s physical resources (memory, processor) because of a hypervisor misconfiguration. The rogue machine could have a rootkit installed to compromise the hypervisor and attack other VMs hosted on the same machine. Many security professionals do not realize that a network IDS does not provide visibility and control at the VM level, because traffic between VMs on the same host may never cross the physical network the IDS monitors. Hence, if the hypervisor is compromised, the owner of the VM may not recognize the breach for months.

Hypervisors, like any other software, are open to programming flaws that could allow someone with malicious intent to exploit a vulnerability and gain unauthorized access to the host and other virtual systems. It is fundamental that cloud providers treat hypervisor vulnerabilities as a priority. They must deploy the tools needed to detect and mitigate unauthorized access to the host. If end customers doubt the security of hypervisors, they will certainly doubt the foundation of cloud computing.

When evaluating a cloud provider, ensure that the provider has security controls in place to limit the attack surface. Examples of such security controls include but are not limited to

Granular access control policies

Monitoring of allowed drivers that can run emulated code

Monitoring of hardware resources

Patch and vulnerability management
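As one illustration of monitoring allowed drivers, a provider could compare a cryptographic hash of each loaded driver image against an approved allowlist. This Python sketch assumes the driver images are already available as bytes; how a real hypervisor exposes or measures them is platform specific and not shown, and any driver names used with it are hypothetical.

```python
import hashlib


def fingerprint(image: bytes) -> str:
    """SHA-256 digest of a driver image, as a hex string."""
    return hashlib.sha256(image).hexdigest()


def unapproved_drivers(loaded: dict[str, bytes], allowlist: dict[str, str]) -> list[str]:
    """Return the names of loaded drivers that are unknown or whose image has changed."""
    flagged = []
    for name, image in sorted(loaded.items()):
        if allowlist.get(name) != fingerprint(image):
            flagged.append(name)  # not on the allowlist, or hash mismatch (possible tampering)
    return flagged
```

Checking the hash rather than only the name matters: a rootkit that replaces an approved driver keeps the familiar name but cannot keep the approved digest.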

Cloud Storage Security

As mentioned earlier, organizations conduct cloud security assessments to identify the risks associated with sensitive data residing in the cloud. Many of them hold data back for fear of data leakage. A CSP may store data outside the customer’s home country, and law enforcement agencies in the country where the data is hosted may issue warrants to access it. Other issues related to cloud storage include the following:

Intermingling of data with other tenants: Because resources in a multitenant environment are shared among multiple parties, data leakage can occur through an accident, a malicious attack, or both.

Encryption of data in motion and at rest: Since the cloud provider controls the multitenant environment, it is best to assume that the cloud provider is susceptible to data breaches. It is highly recommended to send only encrypted files to the cloud, which also protects your data against snooping while it is in transit. Once data is in the cloud, ensure that it is also encrypted at rest on the provider’s storage disks using strong, current algorithms.

Backup of data and offsite storage: In some instances, organizational data could be intermingled with other tenants’ data during the backup process. A number of cloud providers back up their tenants’ data to different cloud locations and offsite facilities for high availability. Encrypting data before it leaves your control helps ensure that it remains encrypted at offsite and other backup locations.
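Residency and backup concerns can be checked mechanically if the provider gives you an inventory of where every copy of your data, including backups, is stored. The sketch below assumes such an inventory exists; the `Replica` record, the region names, and the policy shape are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replica:
    object_id: str
    region: str      # where this copy (primary or backup) physically resides
    encrypted: bool  # whether this copy is encrypted at rest


def policy_violations(replicas: list[Replica], allowed_regions: set[str]) -> list[str]:
    """Report residency and encryption problems for primary and backup copies alike."""
    problems = []
    for r in replicas:
        if r.region not in allowed_regions:
            problems.append(f"{r.object_id}: stored in {r.region}, outside allowed regions")
        if not r.encrypted:
            problems.append(f"{r.object_id}: copy in {r.region} is not encrypted")
    return problems
```

Treating backup copies exactly like primaries is the point of the check: an offsite replica in an unexpected jurisdiction is as much a residency problem as the primary would be.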

Attack Vectors

This section describes potential vectors an attacker can use to reach his or her goals. The analysis in the following sections focuses on vectors that make use of the Intercloud framework. We do not cover threats and mitigations outside the Intercloud framework, other than to describe how they could be used as attack vectors against or through the Intercloud framework.

Since the Intercloud framework has a protocol that is carried over the Internet, its messages can potentially be intercepted, observed, and manipulated by an attacker. An attacker may

Generate, manipulate, or delete messages that control the workloads

Manipulate responses from end systems to falsify data so that the administrator believes a resource is something other than what it is, or that a workload is in a different state than it actually is

Observe protocol messages and extract information, such as topology information, performance information, and physical location, that may be useful in planning physical or cyber attacks

Attempt to manipulate protocol messages to attack a workload through the network

Observe, generate, and manipulate protocol messages in order to attack the Intercloud framework or other systems

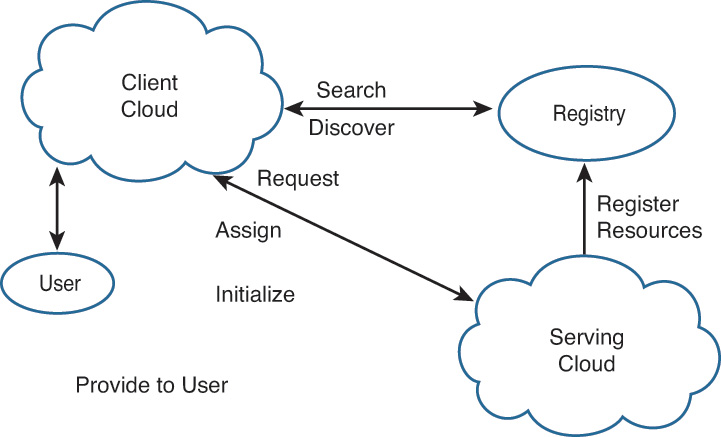

A participant within the Intercloud ecosystem uses a discovery mechanism so that the Intercloud framework can discover its services. This allows the endpoints of the Intercloud framework to create an inventory of services. These messages will contain identity and attribute information. If these messages are spoofed, the endpoints will have an incorrect understanding of what services are available. Therefore, these messages should be accompanied by entity authentication and integrity protection.
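A minimal sketch of integrity-protecting a discovery message follows. It assumes the participant and the registry already share a secret key and serializes the message canonically so that both ends compute the MAC over identical bytes. The message fields are hypothetical. Because an HMAC key is shared, this authenticates "a holder of the key" rather than proving which single party sent the message; certificate-based signatures would be needed for that.

```python
import hashlib
import hmac
import json


def canonical(message: dict) -> bytes:
    """Serialize deterministically so sender and receiver MAC identical bytes."""
    return json.dumps(message, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign(message: dict, key: bytes) -> dict:
    """Wrap a discovery message with an HMAC-SHA-256 tag over its canonical form."""
    tag = hmac.new(key, canonical(message), hashlib.sha256).hexdigest()
    return {"body": message, "tag": tag}


def verify(envelope: dict, key: bytes) -> bool:
    """Accept the message only if the tag matches; compare in constant time."""
    expected = hmac.new(key, canonical(envelope["body"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, envelope["tag"])
```

A spoofed or altered advertisement (for example, one that adds a service the provider never offered) fails verification, so endpoints do not build their service inventory from it.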

Currently no specific protocols or APIs have been defined for the registration, discovery, request, assign, and initialize mechanisms, or for the channel that transports packets between the cloud and the resources. Once the protocols are chosen, the means of securing them can be defined more precisely. There has been some discussion within the SNIA work group3 on clouds about using HTTP with RESTful interfaces. Under this proposal, HTTP over SSL/TLS (HTTPS) could be used to secure traffic in transit. The entities should perform mutual authentication to assure the identity of all parties involved in a transaction and should provide some type of automated key management. Automated key management can provide stronger keys and allow entities to join the system securely.
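If the HTTP-over-SSL/TLS proposal were adopted, mutual authentication could be configured with standard TLS libraries. The following sketch uses Python's standard `ssl` module; the certificate, key, and CA file arguments are placeholders for credentials issued out of band, and the TLS 1.2 floor is an assumption rather than a requirement from any Intercloud specification.

```python
import ssl


def server_context(cert_file=None, key_file=None, ca_file=None) -> ssl.SSLContext:
    """TLS context for a serving cloud that insists on a client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # this is what makes the authentication mutual
    if cert_file and key_file:
        ctx.load_cert_chain(cert_file, key_file)  # this cloud's own identity
    if ca_file:
        # Trust only the community CA, not the system store, so a certificate
        # from an unrelated public CA is not accepted as a peer cloud.
        ctx.load_verify_locations(cafile=ca_file)
    return ctx


def client_context(cert_file=None, key_file=None, ca_file=None) -> ssl.SSLContext:
    """TLS context for a client cloud that verifies the server and presents its own certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)  # verifies server cert and hostname by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file and key_file:
        ctx.load_cert_chain(cert_file, key_file)
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)
    return ctx
```

Key management (issuing, rotating, and revoking these certificates) is outside the sketch; it only shows where the credentials plug in.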

It may not be possible to have all aspects of the framework upgraded at the same time to support the same credentials and algorithms. Therefore, it is necessary to have secure negotiation mechanisms that allow different algorithms and procedures while minimizing the chance of an insecure rollback.
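One way to approach secure negotiation is sketched below. Each side picks the strongest algorithm suite they have in common but never goes below an agreed floor, and both sides then MAC the full list of offers together with the choice, similar in spirit to the Finished message in TLS. The suite names and the floor are hypothetical, and the sketch assumes the MAC key comes from an authenticated exchange that an attacker cannot influence.

```python
import hashlib
import hmac

# Hypothetical suites, strongest first, and the weakest one either side will accept.
PREFERENCE = ["suite-v3", "suite-v2", "suite-v1"]
MINIMUM = "suite-v2"


def choose(offered: list[str], supported: set[str]) -> str:
    """Pick the strongest mutually supported suite, refusing anything below the floor."""
    floor = PREFERENCE.index(MINIMUM)
    for suite in PREFERENCE[: floor + 1]:
        if suite in offered and suite in supported:
            return suite
    raise ValueError("no mutually acceptable suite at or above the minimum")


def transcript_tag(key: bytes, offered: list[str], chosen: str) -> bytes:
    """MAC over everything that was offered plus what was chosen."""
    data = ",".join(offered).encode() + b"|" + chosen.encode()
    return hmac.new(key, data, hashlib.sha256).digest()
```

If an attacker strips the strong suites from the offer in transit, the two ends compute different transcript tags and can abort instead of silently rolling back.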

Building a Cloud-Focused Security Program

The implementation of security controls for data protection within the Intercloud is not too different from the security controls for data within an enterprise domain. However, the security challenges are often different when data resides in an infrastructure that the enterprise does not own. The operational and management models are usually different, thus posing risks that are new within the Intercloud compared to a traditional IT environment. In a cloud computing environment, the risk is around the transmission, storage, and maintenance of data (who can see the data and who has access to it). However, the protection of data always remains the responsibility of the data owner. The data owners need to know where the data processing occurs to ensure compliance with local and global legislation.

Trust Between the Intercloud Participants

Peer authentication establishes the identity of a peer as the first step in determining what level of trust and authorization to place in it. In order to perform peer entity authentication, it is necessary to assign an identity to each entity that can be cryptographically verified. It is desirable for authentication to be mutual. Different types of entities can make use of different types of credentials to establish identity. Some types of credentials that are typically supported are pre-shared keys, certificates, passwords, tokens, and smart cards. Peer authentication forms the basis for providing identity-based accounting and auditing. The authentication credentials or authentication mechanism may provide a way to establish cryptographic key material to provide message authentication, integrity protection, and encryption. Credential-based authorization provides a mechanism that allows an entity to prove it is authorized for some purpose. The authorization credential can be directly tied to an identity authenticated during peer entity authentication. Some examples of this include attribute certificates and SAML assertions. In other cases, it may be a separately validated credential such as a group symmetric key that proves membership in a group. In the latter case, it may not be possible to uniquely identify any one peer in a group, so identity-based accounting and auditing are more of a challenge. The mechanism for proving possession of the authorization credentials may provide a way to establish cryptographic key material to provide message authentication, integrity protection, and encryption.
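With pre-shared keys, mutual authentication can be achieved with a challenge-response exchange in which each side proves knowledge of the key over a fresh random challenge from the other. The Python sketch below is illustrative only. The identity strings are hypothetical and are assumed not to contain the `|` separator, and challenges are assumed to be the fixed 16-byte length that `new_challenge` produces. Binding both identities into each response prevents an attacker from reflecting one peer's challenge back to it.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> bytes:
    """Fresh, unpredictable 16-byte challenge; never reused across sessions."""
    return secrets.token_bytes(16)


def respond(psk: bytes, challenge: bytes, responder_id: str, verifier_id: str) -> bytes:
    """Prove knowledge of the pre-shared key over the peer's challenge.

    Both identities are bound into the MAC so that a response computed by one
    side cannot be reflected back to it as if the other side had produced it.
    """
    msg = challenge + responder_id.encode() + b"|" + verifier_id.encode()
    return hmac.new(psk, msg, hashlib.sha256).digest()


def check(psk: bytes, challenge: bytes, response: bytes,
          responder_id: str, verifier_id: str) -> bool:
    """Verify a response to a challenge this side issued, in constant time."""
    expected = respond(psk, challenge, responder_id, verifier_id)
    return hmac.compare_digest(expected, response)
```

Each peer issues its own challenge and checks the other's response, so authentication runs in both directions.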

The current peer authentication proposal defines three entities:

1. The CSP that is acting in the role of a client cloud and whose interface is represented by the CSU of the service management framework

2. The CSP that is acting in the role of a serving cloud and whose interface is represented by the CSP of the service management framework

3. The cloud registry service that allows for enrollment of CSPs and their resources, and for discovery of those resources by other CSPs that desire to request additional resources

There will be a need for strong authentication that each entity can use to authenticate to the other. The authentication credentials will be the technical basis for, and the enforcement of, the business trust relationship. Currently, customers of cloud services create relationships with cloud providers on a one-to-one basis. As cloud providers begin to provision resources from other cloud providers, they will establish trust through bilateral agreements and contracts. The credentials used for these bilateral relationships can be shared secrets, public/private key pairs, or X.509 digital certificates. As the number of relationships increases, the better scaling properties of X.509 digital certificates from public key infrastructures (PKIs) make them the favored credential.
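The scaling argument can be made concrete with a little arithmetic. If every pair of n providers maintains its own bilateral shared secret, the number of secrets grows quadratically, whereas with a common PKI each provider needs only its own certificate plus the trusted CA certificate. The two functions below simply compute these counts; they deliberately ignore cross-certification and revocation overhead.

```python
def pairwise_secrets(n: int) -> int:
    """Distinct shared secrets if every pair of n providers has its own bilateral key."""
    return n * (n - 1) // 2


def pki_certificates(n: int) -> int:
    """Provider certificates needed if each of n providers holds one from a common CA."""
    return n
```

For 100 providers, bilateral secrets require 4,950 distinct keys, compared with 100 certificates under a shared PKI.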

There is an evolution to more complex and scalable relationships that has been seen in other types of federation. For users and cloud providers over time there will be movement toward group or community membership that provides the trust relationship among community members. For example, higher education already has an identity federation, eduroam, among colleges and universities. There will be diversity in the communities formed. Each community may grow in isolation from other communities. There may also be some overlap when members belong to multiple communities, but the trust relationships remain separate.

These communities will most likely support the use of registries among their members. The trust relationships are now grounded in membership in the community rather than in a one-to-one relationship between members. Although any of the previously mentioned credentials could be used, this situation benefits from the use of X.509 digital certificates between its members.

Due to the diversity of communities and the challenge of any single entity being able to be an umbrella organization, there will be the need to allow federation between the different communities. This will most likely be accomplished through many different organizations cross-federating together. Eventually, a hierarchy may appear with several federated communities coming together to create a higher-level authority to allow for better scaling. It is hard to impose such a structure from the beginning, but it is the path that other entities have followed.

Components of Building Trust

A trust model is helpful in accessing and monitoring the data that organizations keep in the cloud. The model also allows them to certify and comply with the relevant laws and standards while addressing complex security and privacy challenges. Components used in building trust with CSPs include encryption, key management, enrollment, revocation, and message integrity and authentication, as described in the following subsections.

Encryption

Encryption is used to protect messages from unauthorized viewing. Encryption keys can be based on peer authentication credentials or exchanges, in which case they protect the data from unauthorized viewing by anyone but the communicating parties. If the encryption keys are based directly on authorization credentials or exchanges, any authorized party can observe the messages.

Key Management

Key management is necessary to make sure that fresh strong keys are available for use when needed. Successful key management strategies are tied to mutual authentication and authorization mechanisms. Different credentials can be used with these mechanisms.
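A common way to obtain fresh keys from longer-lived credentials is to run a key derivation function over a shared secret and per-session nonces. The sketch below implements HKDF (RFC 5869) with HMAC-SHA-256 using only Python's standard library and derives separate keys for each direction of traffic. The labels and the use of concatenated nonces as the salt are illustrative assumptions, not part of any Intercloud specification.

```python
import hashlib
import hmac

HASH_LEN = 32  # SHA-256 output size in bytes


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869) with SHA-256: extract a pseudorandom key, then expand it."""
    if length > 255 * HASH_LEN:
        raise ValueError("requested output too long for HKDF-SHA256")
    # Extract: an empty salt is replaced by HashLen zero bytes, as RFC 5869 specifies.
    prk = hmac.new(salt or bytes(HASH_LEN), ikm, hashlib.sha256).digest()
    # Expand: T(i) = HMAC(PRK, T(i-1) || info || i), concatenated until long enough.
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def session_keys(shared_secret: bytes, nonce_a: bytes, nonce_b: bytes) -> tuple[bytes, bytes]:
    """Derive fresh, direction-specific keys for one session between peers A and B."""
    salt = nonce_a + nonce_b  # fresh nonces make every session's keys different
    key_a_to_b = hkdf_sha256(shared_secret, salt, b"intercloud a->b")
    key_b_to_a = hkdf_sha256(shared_secret, salt, b"intercloud b->a")
    return key_a_to_b, key_b_to_a
```

Using distinct keys per direction means a message sent by A cannot be reflected back to A as if B had sent it.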

Enrollment

It is often necessary to bring untrusted entities into the system as trusted members. These mechanisms fall under the classification of enrollment.

Revocation

In some cases, a member of the system becomes untrusted due to compromise or normal operational lifecycle. The revocation process takes the entity from trusted status to untrusted status.
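Enrollment and revocation can be pictured as state changes in a registry, such as the cloud registry service described earlier. The toy Python class below is a sketch under stated assumptions: vetting is reduced to a boolean flag, credentials are identified by a fingerprint string, and a revoked credential can never be re-enrolled, so a compromised member must obtain a new credential.

```python
from dataclasses import dataclass, field


@dataclass
class TrustRegistry:
    """Toy registry: enrolled members are trusted until revoked."""
    members: dict[str, str] = field(default_factory=dict)  # member id -> credential fingerprint
    revoked: set[str] = field(default_factory=set)         # fingerprints that are no longer valid

    def enroll(self, member_id: str, fingerprint: str, approved_by_admin: bool) -> None:
        """Move an entity from untrusted to trusted status after out-of-band vetting."""
        if not approved_by_admin:
            raise PermissionError(f"{member_id} has not been vetted for enrollment")
        if fingerprint in self.revoked:
            raise PermissionError("credential was revoked; a new credential is required")
        self.members[member_id] = fingerprint

    def revoke(self, member_id: str) -> None:
        """Move a member from trusted to untrusted status and blacklist its credential."""
        fp = self.members.pop(member_id, None)
        if fp is not None:
            self.revoked.add(fp)

    def is_trusted(self, member_id: str, fingerprint: str) -> bool:
        return self.members.get(member_id) == fingerprint and fingerprint not in self.revoked
```

Refusing to re-enroll a revoked fingerprint reflects the compromise case: once a credential may be in an attacker's hands, restoring the member must not restore that credential.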

Message Integrity and Authentication

Message integrity and authentication techniques ensure that data transmitted between peers has not been modified. These mechanisms are rooted in key material based on peer entity authentication or authorization credentials. If the authenticated key material is based on peer entity authentication, then only the communicating peers have the authorization to modify or generate messages. If the key material is based directly on an authorization credential, then any authorized party can modify or generate messages. Message integrity should also take care to mitigate replay attacks.
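The following sketch shows message authentication combined with replay protection. Every message carries a sequence number that is covered by an HMAC, and the receiver rejects anything whose tag does not verify or whose sequence number it has already seen. It assumes a per-session key (for example, one derived from peer entity authentication) and strictly in-order delivery; protocols that tolerate reordering, such as IPsec ESP, use a sliding anti-replay window instead.

```python
import hashlib
import hmac


class Sender:
    def __init__(self, key: bytes):
        self.key, self.seq = key, 0

    def seal(self, payload: bytes) -> tuple[bytes, bytes, bytes]:
        """Attach a fixed-width sequence number and an HMAC over header and payload."""
        self.seq += 1
        header = self.seq.to_bytes(8, "big")
        tag = hmac.new(self.key, header + payload, hashlib.sha256).digest()
        return header, payload, tag


class Receiver:
    def __init__(self, key: bytes):
        self.key, self.highest = key, 0

    def accept(self, header: bytes, payload: bytes, tag: bytes) -> bool:
        expected = hmac.new(self.key, header + payload, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, tag):
            return False  # modified in transit, or forged without the key
        seq = int.from_bytes(header, "big")
        if seq <= self.highest:
            return False  # replayed (or reordered) message
        self.highest = seq
        return True
```

Because the sequence number is inside the MAC, an attacker can neither replay an old message unchanged nor bump its sequence number to make it look new.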

Security, Privacy, and Compliance Risks

Moving data into the cloud does not reduce its associated risk. In fact, it increases both the overall risk and your obligation to protect sensitive information. Organizations must conduct information risk assessments to comprehend the complexities of both cloud and privacy regulations. An assessment allows you to determine your risk tolerance and build strategies before moving data into the cloud. If you have not fully committed to adopting cloud, start by moving less sensitive data into the cloud and keep more sensitive data locally within your private data centers. Once you are confident that your CSP has enough controls in place to safeguard your data, build a roadmap of applications and data that can eventually be moved. When deciding how to prioritize data, ask these simple questions:

1. If data is breached, what will be the impact to your organization?

2. What will be your mitigation/response in a data breach event?

3. How can you improve the overall visibility of your data in the cloud?

4. What steps can you take to reduce the overall risk associated with data in the cloud?

5. Do the mitigation steps provide value when data is moved to the cloud?

A number of risk assessment tools are available to help you develop a cloud migration strategy. The Cloud Controls Matrix from the Cloud Security Alliance is a good place to start if you don’t already have a strategy.4 Another tool, the Cloud Computing Risk Assessment5 by ENISA, is a great resource, especially for European organizations. The goal of this chapter is not to provide a complete catalog of the risks associated with cloud computing but to prompt you to recognize that such risks are critical and that you must have a game plan to tackle them.

Risk of Device Compromise

Any device may be partially or completely compromised in a network; however, in general some hosts have a higher risk of compromise than others. This may be due to their physical location, vulnerabilities in their software, number and type of users that operate the system, and type of hardware among other things. Endpoints have varying levels of risk associated with being compromised; some will be heavily protected and others may be out in the open with almost no protection. They will be implemented on a wide variety of systems with a wide variety of usage models. A general assumption for the Intercloud framework is that endpoints as a general class have a high risk of compromise as they are directly interfaced to the Internet.

Risk of Privacy

We all agree that cloud computing is here to stay. It provides an opportunity for IT teams (network and security operations) to enable business agility while maintaining privacy and compliance. However, moving critical information to the cloud may leave organizations with little to no control over it. Even if organizations decide against moving private information to the cloud, a lack of security controls may allow it to become mixed with other organizational data. A risk assessment allows the enterprise to understand the complexities of privacy regulations. At the end of the day, organizations are still responsible for their data, even if it resides in the cloud. Organizations must keep a check on their cloud providers for the following reasons:

A CSP could move information among multiple geographically scattered data centers in order to meet its SLAs and data availability requirements.

A CSP might not have a good decommissioning process. It is possible that the CSP would end up keeping an organization’s personally identifiable information (PII) even after the termination of the contract.

A CSP could use some of the information for its own purposes.

Organizations must have well-defined clauses in their CSP contracts to protect against data leaks and unauthorized data usage. If they do not, they must either accept the risk of losing data or implement adequate safeguards for its protection. It is important that the data owner be compliant with data protection regulations. Compliance is not only a matter of the cloud provider putting procedures in place; it is the duty of the data owner to make sure that private data cannot be misused.

Risk of Compliance

One of the biggest hurdles in cloud adoption is adherence to industry and regulatory directives. Before a cloud adoption decision is made, organizations should conduct a thorough assessment of where they stand with regard to compliance requirements. Legal issues around data storage location further complicate compliance for data in the cloud. If your data resides in one geographical area, it might be subject to one regulation; if it is moved to a different place, it might be subject to a totally different regulation. Therefore, if you use or plan to use a multinational cloud provider or the Intercloud ecosystem of partners, you must do your due diligence to check whether your cloud provider meets the regulations and certifications that apply to you (such as HIPAA, SOX, ISO/IEC 27001, PCI DSS, and FISMA, to name a few). For example, EU data protection law restricts transfers of personal data outside the EU to countries that do not provide an adequate level of protection. If you picked a cloud provider with geographically dispersed data centers and you hold PII about EU residents, the most straightforward way to comply is often to ensure that the data resides on servers physically located in EU countries. You must work with the cloud provider to show where your data resides (in both normal and failed states) and ensure that appropriate documentation is available to prove its location.
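Verifying location “in both normal and failed states” means checking failover copies as well as primary placement. The Python sketch below assumes a policy of keeping EU PII in an approved set of regions; the region names, the `Placement` record, and the inventory are hypothetical stand-ins for whatever placement data your provider actually exposes.

```python
from dataclasses import dataclass

# Hypothetical region names; substitute your provider's region identifiers.
APPROVED_EU_REGIONS = {"eu-west", "eu-central"}


@dataclass(frozen=True)
class Placement:
    dataset: str
    contains_eu_pii: bool
    primary_region: str
    failover_regions: tuple = ()


def residency_violations(placements, approved=frozenset(APPROVED_EU_REGIONS)):
    """List every (dataset, region) pair where EU PII could leave approved regions."""
    violations = []
    for p in placements:
        if not p.contains_eu_pii:
            continue
        # Check the failed state too: failover copies count as data location.
        for region in (p.primary_region, *p.failover_regions):
            if region not in approved:
                violations.append((p.dataset, region))
    return violations


inventory = [
    Placement("crm", True, "eu-west", ("eu-central", "us-east")),
    Placement("web-logs", False, "us-east"),
    Placement("billing", True, "eu-central"),
]
print(residency_violations(inventory))  # [('crm', 'us-east')]
```

The output of such a check is the kind of evidence you would ask the provider to back with documentation.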

Secure Cloud Architecture

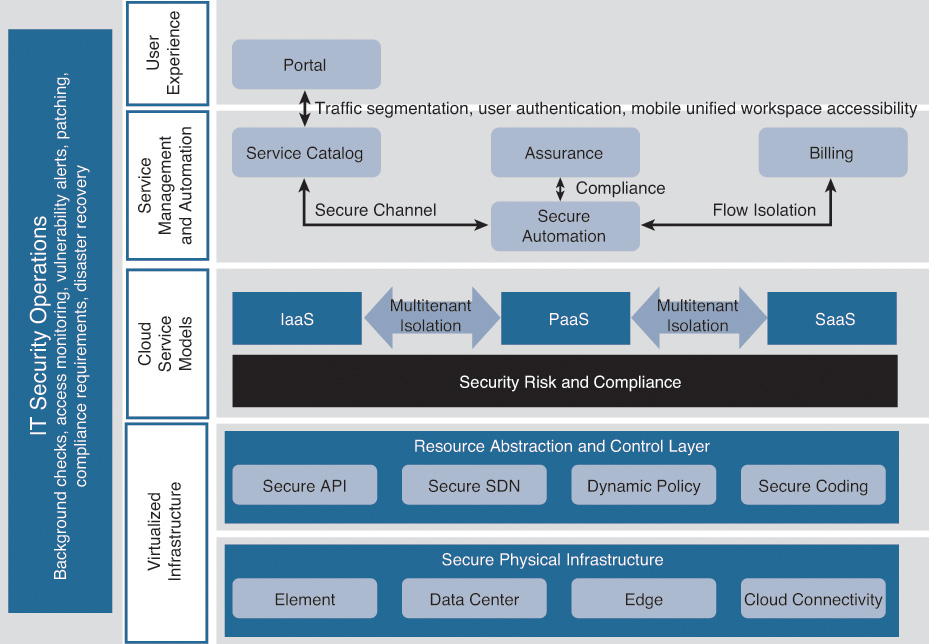

As discussed in Chapter 4, “Intercloud Architecture(s) and Provisioning,” the Cisco Customer Solution Architecture (CSA) is a transformational customer-facing blueprint that delivers IT-based services for enterprises and service providers to achieve their business goals. Each layer serves the layer above, and APIs connect each layer for fulfillment, assurance, and metering/billing. Typically, fulfillment flows from top to bottom, and assurance and billing information flows from bottom layers to top layers. As you can imagine, security resides at each layer to deliver secure cloud services to the customers. Security is the underpinning and embedded DNA of any good cloud architecture. End-to-end security is critical in assuring data confidentiality, integrity, and availability. This architecture can be further divided into the following sub-components as shown in Figure 7-1: secure physical infrastructure layer, secure resource abstraction and control layer, secure cloud services model, secure management and automation, and secure portal and user experience.

Secure Physical Infrastructure Layer

At the bottom of the secure Cisco CSA is the physical infrastructure layer. This layer covers securing the physical cloud infrastructure products and the connectivity to the cloud from customer networks, customer partners, or other cloud brokers. Element security covers the traffic that can pass through, terminate on, or be blocked by each element. The data center component ensures that all interconnected entities and products in the data center are fully secured. The cloud edge component ensures that you are employing all the necessary tools and technologies to protect your internal infrastructure from outside attacks, and the secure cloud connectivity component ensures that you are securing all the external connections into your data center. These may include connections from customer networks, connections to other data centers, or connections to partners or external clouds (or brokers or exchanges).

Secure Resource Abstraction and Control Layer

This layer looks at the physical layer more holistically by allowing the orchestration, network, and security controllers to enforce appropriate policies on the traffic and the underlying network infrastructure. As network programmability becomes more mainstream and devices offer API support (read-only or read/write) for flexible and automated deployment, new security vulnerabilities will be introduced. It is imperative that these API calls be secured to avoid disruption of traffic. Because a controller potentially has access to a significant network and application footprint, the controller itself must also be secured.

Secure Cloud Services Model

Regardless of the cloud services model, the most important thing to consider is the protection or segmentation of customer environments. Segmentation applies at multiple levels: the first is segmentation within a customer (line-of-business, or LOB, segmentation), and the second is segmentation between customers in a multitenant environment. Within the same customer, be sure to apply security controls when a host (such as a web server) makes calls to another host (such as a database) or when an administrator logs in to a network entity. Providing multitenant and host-level traffic segregation is imperative in alleviating customers’ concerns about the integrity of their data and information. Security controls alone are not enough, however; make sure that you are also monitoring and inspecting all data and activities. Detecting anomalies in traffic patterns, in data, and in content access (who accessed what, and when) is extremely important.
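The two levels of segmentation can be sketched as a default-deny flow check: cross-tenant traffic is always refused, and traffic between hosts of the same tenant must match an explicit allow-list. Denied flows are recorded so they can feed anomaly detection. The tier names, ports, and `permit` function in this Python example are hypothetical illustrations.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src_tenant: str
    src_tier: str
    dst_tenant: str
    dst_tier: str
    port: int


# Hypothetical allow-list of (source tier, destination tier, port).
INTRA_TENANT_ALLOWED = {
    ("web", "app", 8443),
    ("app", "db", 5432),
}


def permit(flow: Flow, denied_log: list) -> bool:
    if flow.src_tenant != flow.dst_tenant:
        # Multitenant segregation: traffic never crosses tenant boundaries.
        allowed = False
    else:
        # Host-level segregation within a tenant: default deny.
        allowed = (flow.src_tier, flow.dst_tier, flow.port) in INTRA_TENANT_ALLOWED
    if not allowed:
        # Keep denied flows for monitoring and anomaly detection.
        denied_log.append(flow)
    return allowed
```

Note that the web tier cannot reach the database directly in this sketch; it must go through the application tier.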

Secure Management and Automation

In the service management and automation layer, it is imperative to encrypt traffic communication from the automation controller to all the other components (such as services catalog, assurance, and billing) of the architecture. If the utility-based (pay-as-you-use) billing system is used, the traffic statistics to the billing server need to be encrypted as well and completely isolated from other customer traffic.

Secure Portal and User Experience

This top layer of the architecture ensures that communication (in and out) is encrypted and the entities are mutually authenticated by trusting a common authentication framework. Since the portal may serve multiple customers, ensuring complete traffic and data isolation is critical. User experience on both fixed and mobile devices is imperative.
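Mutual authentication of this kind is commonly implemented with TLS client certificates. The Python sketch below uses the standard `ssl` module to build a server context that refuses any client without a certificate issued by a trusted CA; the TLS 1.2 floor and the optional file arguments are assumptions, and the same pattern would apply to the encrypted controller-to-billing channel described above.

```python
import ssl
from typing import Optional


def mutual_tls_server_context(cert_file: Optional[str] = None,
                              key_file: Optional[str] = None,
                              client_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Server-side TLS context that insists on a verified client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED makes the handshake fail unless the client presents a
    # certificate that chains to a trusted CA: this is the "mutual" part.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own certificate, which clients verify in turn.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # CA of the common authentication framework that issues client certs.
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx
```

In a real portal, the context would be loaded with certificate files and applied to each accepted connection with `ctx.wrap_socket(conn, server_side=True)`.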

Security Framework

As mentioned earlier, moving data into the cloud does not remove its associated risk. Before applying blanket security controls or policies, you need to know what your business goals and objectives are. You need to understand which resources are considered critical and valuable in your enterprise. If someone gets hold of those resources (whether data or physical boxes), what implications does it have for your business? Will your company be in the news if a security incident leads to a data breach and customer information ends up on the darknet? Therefore, it is important that you identify threats to those goals and objectives.

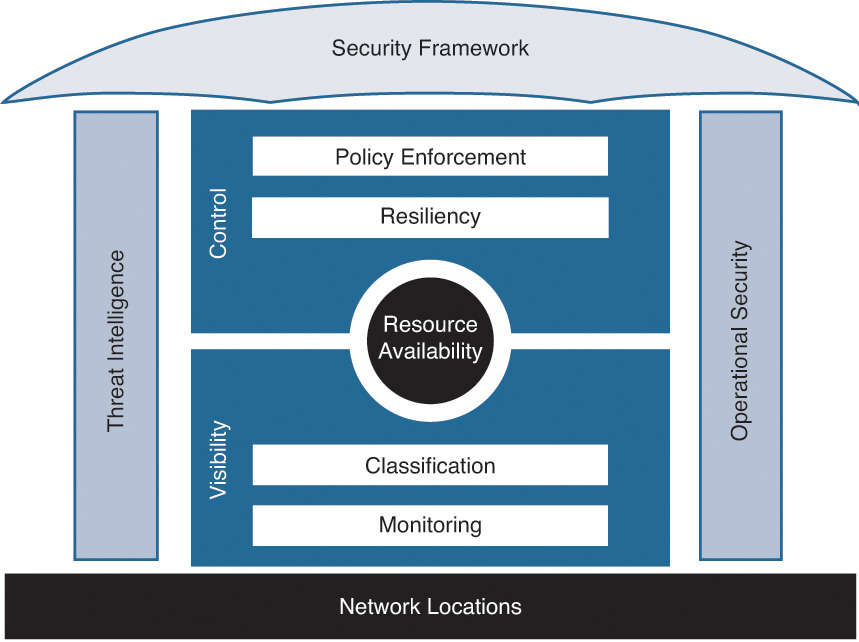

A security framework can be leveraged as a guide to secure a company’s critical resources by involving all major stakeholders to identify the assets, their risk exposure, and the security controls that need to be applied. The framework should be looked at as the beginning of a continuous enhancement process by incorporating emerging elements, and by considering the lessons learned from its real-world application.6 A security framework, at a minimum, should have the elements described in the following subsections, as shown in Figure 7-2.

Asset Visibility

Asset visibility is one of the core components of this framework. It allows you to have full visibility into your IT infrastructure and allows you to answer questions such as these: Who is accessing your network? What are they doing after getting access? Where are they coming from? What devices are they using? How are they hopping from one component of the architecture to another? With network virtualization, cloud adoption, and proliferation of devices, it is imperative to look at the entire context of the connection before allowing access to the critical data.

Asset Classification

One of the more difficult tasks in any fraud, revenue assurance, security, or compliance risk assessment is classifying assets and tagging them with a financial value. You must ensure that assets are identified, properly classified, and protected throughout their lifecycles. Information, like other assets, must be properly managed from its creation to disposal. As with other assets, not all information has the same value or importance in a network architecture, and therefore information requires different levels of protection. Information asset classification and data management are critical to ensure that the business’s information assets have a level of protection corresponding to the sensitivity and value of the information asset.

The provisions of the classification policy apply to all information assets, regardless of medium, including but not limited to paper, electronic, and film. Within this framework, the term “information asset” refers to the information itself, not to the technology that is used to store, process, access, or manipulate it.
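The idea that protection should correspond to sensitivity can be captured as a mapping from classification level to required controls, with each level inheriting the controls of the levels below it. The four levels and the control names in this Python sketch are a hypothetical baseline, not a scheme defined by the framework.

```python
from enum import IntEnum


class Classification(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical baseline: controls introduced at each level.
CONTROLS_ADDED_AT = {
    Classification.PUBLIC: {"integrity-check"},
    Classification.INTERNAL: {"access-control", "encryption-in-transit"},
    Classification.CONFIDENTIAL: {"encryption-at-rest", "access-logging"},
    Classification.RESTRICTED: {"mfa", "secure-disposal"},
}


def required_controls(level: Classification) -> set:
    """All controls for a level, including those inherited from lower levels."""
    required = set()
    for lower in Classification:
        if lower <= level:
            required |= CONTROLS_ADDED_AT[lower]
    return required
```

Tying a control such as secure disposal to the classification reflects the requirement to manage information from creation to disposal.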

Asset Monitoring

Accounting and auditing are used to monitor and validate correct operation of the framework. All authorized transactions should be logged along with the identities and authorizations involved. Note that if the logged identities are not based on peer entity authentication, it may not be possible to trace a problem directly back to the misbehaving entity.

In this framework, the purpose of the visibility principle is to evaluate the capabilities of a network infrastructure in identifying who or what is using the infrastructure, in observing and monitoring the activities occurring on the network, and in building the intelligence from activities that you are monitoring.
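The note about peer entity authentication suggests recording, for every authorized transaction, not only who acted but whether that identity was actually proven. The Python sketch below emits one JSON audit line per transaction; the field names and the `audit_record` helper are hypothetical.

```python
import json
import logging
import time

audit_log = logging.getLogger("audit")


def audit_record(identity: str, peer_authenticated: bool,
                 authorization: str, action: str, resource: str) -> str:
    """Build and emit one audit entry for an authorized transaction."""
    record = {
        "ts": time.time(),
        "identity": identity,
        # Flag identities that were only asserted, not proven by peer entity
        # authentication; such entries cannot be traced back reliably.
        "identity_basis": "peer-authenticated" if peer_authenticated else "asserted",
        "authorization": authorization,
        "action": action,
        "resource": resource,
    }
    line = json.dumps(record, sort_keys=True)
    audit_log.info(line)
    return line
```

Structured entries like these are what a monitoring pipeline would later correlate to build intelligence about activity on the network.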

Security Controls

Once you have a comprehensive context of a connection request, you can apply appropriate security controls to authorize or reject the session. The security controls are designed to enforce actions to keep a cloud infrastructure resilient, safe, and secure.

Asset Resiliency

Similarly, the purpose of the control principle is to evaluate the capabilities of a network infrastructure in withstanding and recovering from security anomalies; in separating and creating boundaries around users, traffic, and devices; and in ensuring that the network activities conform to a desired state or behavior.

Asset Policy Enforcement

There is always a risk that part of the system may be compromised. An actionable policy attempts to contain the risk of compromise of a given component as much as possible to limit how far the compromise can propagate throughout the system. Some of the policy enforcement principles are listed here:

Principle of least privilege: A component should not be given more privilege than is necessary for it to perform its function. This prevents a compromised component from overstepping its bounds and directly affecting unrelated areas of the system.

Separation of duties: The more functions a single entity performs, the more valuable that entity becomes and the more resources are needed to protect it. Separation of duties goes hand in hand with least privilege to prevent compromised components from overstepping their bounds. Separating out highly privileged components helps to reduce management costs by allowing security administrators to focus their resources.

Trust, but verify and validate: In order for the system to operate, participating entities must trust one another to perform various functions correctly. However, not all entities are trusted equally for the same function. Entities should validate that their peers are not overstepping the boundaries of least privilege or performing beyond their duties. For example, an endpoint that is generating messages should make sure that peers that are authorized only to send response messages do not send request messages. The verifier is responsible for parameter validation, filtering out messages that fail validation, and alerting the system through logs or other means when this happens.
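The verifier’s job in the example above can be sketched as a filter that checks each message type against what the sender’s role permits, drops violations, and logs an alert. The role names, message types, and `filter_messages` function in this Python example are hypothetical; the key assumption is that `sender_role` comes from peer authentication rather than from a claim inside the message.

```python
import logging
from dataclasses import dataclass, field

log = logging.getLogger("verifier")

# Hypothetical role model: the message types each peer role may originate.
ALLOWED_TYPES = {
    "client": {"request"},
    "server": {"response"},
}


@dataclass
class Message:
    sender: str
    sender_role: str  # must come from peer authentication, not the message
    msg_type: str
    body: dict = field(default_factory=dict)


def filter_messages(messages):
    """Drop messages a peer is not entitled to send, logging each one."""
    accepted = []
    for m in messages:
        if m.msg_type not in ALLOWED_TYPES.get(m.sender_role, set()):
            log.warning("dropped %r from %s acting as %s",
                        m.msg_type, m.sender, m.sender_role)
            continue
        accepted.append(m)
    return accepted
```

Unknown roles get an empty set of permitted types, so they are denied by default, which is least privilege applied at the message level.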

Operational Security

Many people think that information and network security are just about technology and products, focusing on their reliability and sophistication. They often neglect to assess their business goals against the security risks to their assets. Unfortunately, in many cases, they become victims of data breaches or hacking attempts in which confidential information is lost and business continuity is disrupted. The lack of credible and relevant security operational processes typically contributes to this outcome. For example, in case of a data breach, how long does it take you to detect that something malicious is happening? How long does it take you to contain the incident? What steps does the operations staff take to detect anomalies? What does the lifecycle of an incident look like? How is the auditing of network and security logs done? What is the patch management or incident management process and how well is it followed?

These are just some examples; the list can be much longer. The goal is to define a set of sub-processes for each high-level process (or operational area), then build metrics for each sub-process. More importantly, assemble these metrics into a model that can be used to track operational improvement.
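As an example of a metric for the incident management process, the first two questions above translate into mean time to detect and mean time to contain. The Python sketch below computes both from incident timestamps; the metric names and the `Incident` record are common conventions assumed here, not metrics the chapter defines.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    started: datetime    # best estimate of when the malicious activity began
    detected: datetime
    contained: datetime


def _mean(deltas) -> timedelta:
    return sum(deltas, timedelta()) / len(deltas)


def incident_metrics(incidents) -> dict:
    """Mean time to detect and mean time to contain for one reporting period."""
    return {
        "mean_time_to_detect": _mean([i.detected - i.started for i in incidents]),
        "mean_time_to_contain": _mean([i.contained - i.detected for i in incidents]),
    }
```

Computing these per quarter and comparing the results over time is one way to track the operational improvement the model is meant to show.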

Security Intelligence

Leveraging security intelligence is one of the most important factors in discovering the external posture of your cloud instance. Two main sources of threat intelligence are available to an organization:

1. The CSP can build security analytics by observing and monitoring the traffic passing through its cloud infrastructure.

2. External threat intelligence providers can provide a view into active threats or attacks, even, in some cases, before they happen to an organization’s cloud instance. These providers correlate global threat and attack data and provide a threat-centric view to an organization by analyzing indicators of compromise. In some cases, these providers use proprietary heuristics or preconfigured rules and advanced correlation techniques to determine anomalous or suspicious activities.

Regardless of the source of your security intelligence, it is important to validate that the anomalous activity is indeed malicious and that it actually targets your sensitive data in the cloud.
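A first step in that validation is to check whether reported indicators actually appear in your own traffic to sensitive assets. The Python sketch below matches IP-address indicators (single addresses or CIDR blocks) against flow-log records; the flow-log format and asset names are hypothetical, and real indicators also include domains, file hashes, and other artifacts.

```python
import ipaddress


def confirm_indicators(indicators, flow_logs, sensitive_assets):
    """Return flows from a reported-bad address to one of your sensitive assets."""
    bad_networks = [ipaddress.ip_network(i) for i in indicators]
    confirmed = []
    for flow in flow_logs:
        src = ipaddress.ip_address(flow["src"])
        if flow["dst"] in sensitive_assets and any(src in net for net in bad_networks):
            confirmed.append(flow)
    return confirmed


indicators = {"203.0.113.7", "198.51.100.0/24"}
flows = [
    {"src": "203.0.113.7", "dst": "db-payroll"},
    {"src": "203.0.113.7", "dst": "web-public"},
    {"src": "198.51.100.20", "dst": "db-payroll"},
    {"src": "192.0.2.1", "dst": "db-payroll"},
]
hits = confirm_indicators(indicators, flows, {"db-payroll"})
```

Here only two of the four flows survive: they involve both a reported address and a sensitive asset, so they merit investigation first.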

Network Locations

We tend to put our jewels in a safe place by building a secure perimeter around them. What we fail to realize is that thieves might try a different approach to steal our belongings. They will not always try to come in from the outside. They could already be inside your safe house or could already have access to your jewels through other means. The framework needs to identify all the entry points to your critical resources. With increased adoption of cloud services, some data could be accessed outside of your control points. It is also possible that the services hosted in the cloud could have access to data in your data center.

Depending on the nature of your business, you may have an ecosystem of partners that need to access certain data. Do you know where they connect from or how they access your data? Are you sure they don’t have access to data that they are not authorized to access? The flexibility within the framework allows you to logically break apart a location into specific areas based on your organizational structure and need.

Intercloud Security Architecture

The Intercloud is built to address the biggest customer need—the simplicity of the overall user experience when accessing applications and data. This enables a customer and an end user to spin up multiple systems in no time across different geographies and different cloud providers. The Intercloud architecture is composed of a user-interfacing cloud, called the client cloud, that discovers and requests additional resources from the cloud partners, called the serving clouds. With this close integration, the appropriate resources are made available to users as if they were part of their own cloud infrastructure. This scenario is presented in Figure 7-3.

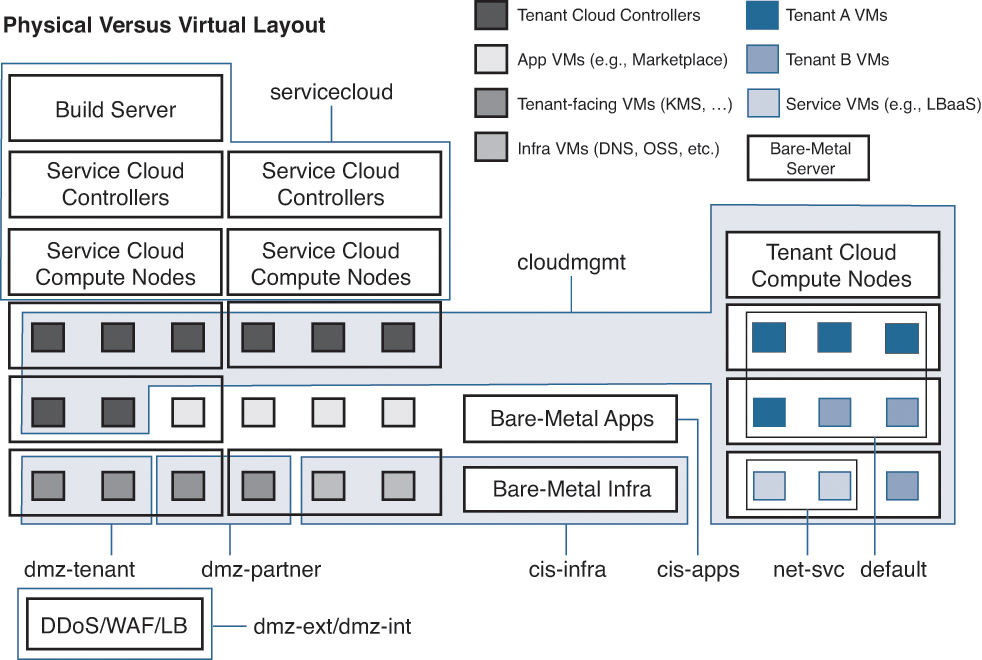

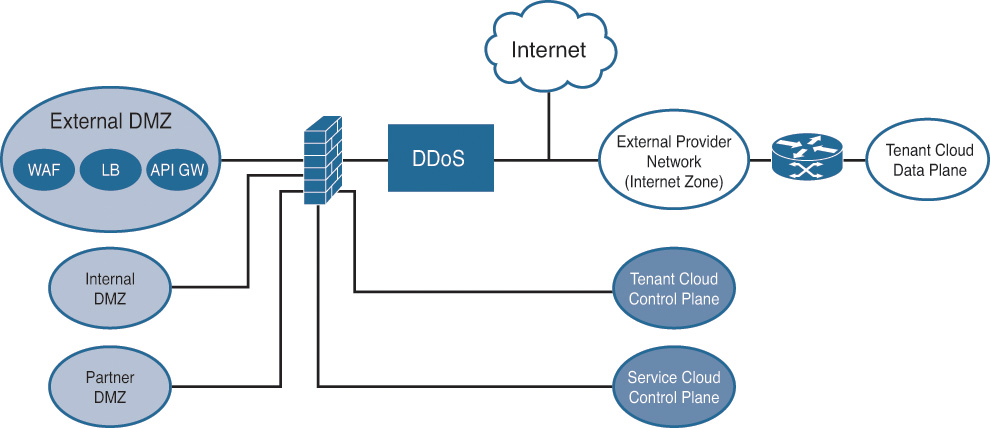

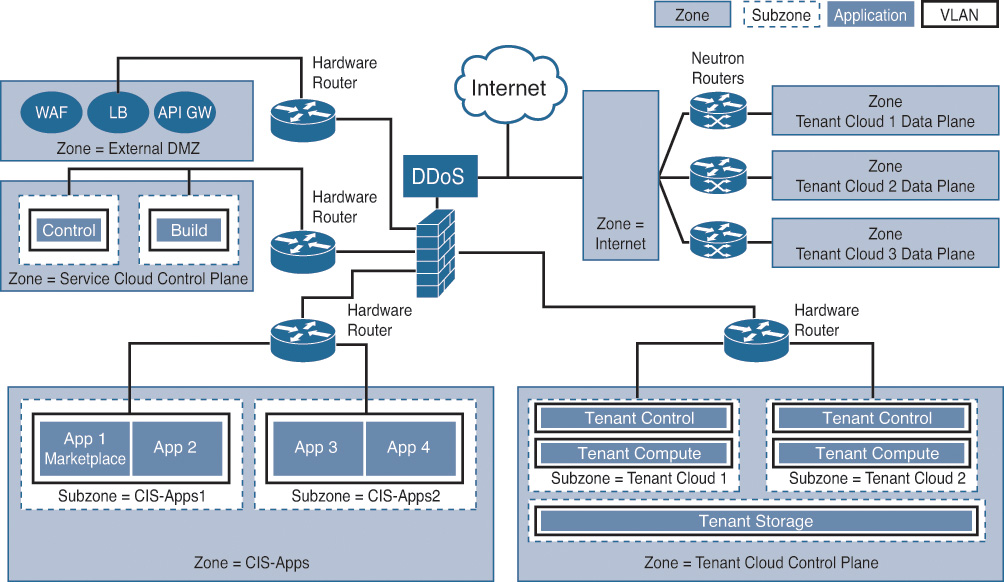

The Cisco Intercloud design uses a nested OpenStack architecture. The service cloud acts as a “controller cloud” to manage the tenants. A tenant instance includes not only generic common components, such as the Prime Service Catalog and the identity management system, but also OpenStack components such as Nova, Neutron, and Cinder, to name a few. A set of network firewalls and web application firewalls (WAFs) creates a protected zone in front of OpenStack modules like Horizon, Keystone, and the onboarding APIs. This standard design, shown in Figure 7-4, requires all traffic from the outside to be inspected in order to protect the critical elements of the core architecture.

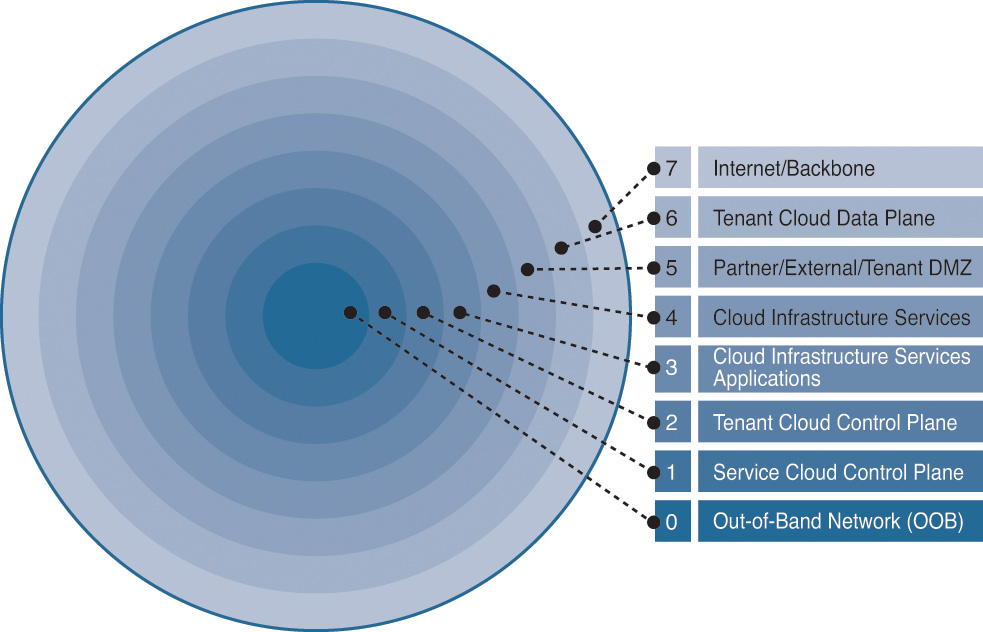

Security Zones

The Cisco Intercloud architecture is based on a layered security model creating different levels of trust when traffic is flowing from one part of the network to another. At the center of this model is the most trusted and most secure function of the network—the out-of-band management channel—and the outermost layer (or zone) is the most untrusted network—the Internet—as shown in Figure 7-5. The layers between the outermost and the center zones apply different sets of access control rules and security policies to inspect and authorize the appropriate flows. Typically, there is a coarse-grained boundary for the traffic flowing within the same security layer.

Figure 7-6 shows a logical representation of different zones of the Intercloud architecture. As you can see, the tenant cloud control plane resides in a different security zone from the tenant cloud data plane through the use of logical firewall instances. Security for the control plane of a tenant and its resources is more critical than the security of its data plane. In some cases, the cloud provider cannot inspect or protect the data traffic for a tenant due to geographic laws or industry regulations.

In some cases, subzones are created within each security layer to further segment traffic. These subzones provide fine-grained boundaries around different types of components or services offered within the same security zone. The idea is to segment specific services, applications, or modules to prevent unauthorized activity and to limit the spread of a security compromise within a zone. This segmentation is achieved by applying specific access control policies and security groups. For example, the tenant cloud control plane zone can be further divided into tenant-specific subzones to provide complete isolation for each cloud tenant. This ensures traffic separation and allows you to meet compliance requirements around data segmentation. Figure 7-7 shows a logical representation of how subzones lie within each security zone.
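The zone and subzone model can be sketched as a two-step decision: traffic that crosses zones needs an explicit access rule, and traffic within a zone is confined to its own subzone (for example, one subzone per tenant). The zone names, the single port-based rule format, and the `allowed` function in this Python example are hypothetical simplifications of real firewall and security-group policy.

```python
# Hypothetical zone names, listed from least to most trusted.
ZONES = ("internet", "dmz", "tenant-data", "tenant-control", "oob-management")

# Explicit cross-zone rules: (source zone, destination zone, port).
CROSS_ZONE_RULES = {
    ("internet", "dmz", 443),
    ("dmz", "tenant-control", 5000),  # e.g., API traffic after WAF inspection
}


def allowed(src, dst, port: int) -> bool:
    """src and dst are (zone, subzone) pairs; a subzone may be a tenant ID."""
    (src_zone, src_sub), (dst_zone, dst_sub) = src, dst
    for zone in (src_zone, dst_zone):
        if zone not in ZONES:
            raise ValueError(f"unknown zone {zone!r}")
    if src_zone == dst_zone:
        # Fine-grained boundary: subzones in the same zone are isolated.
        return src_sub == dst_sub
    # Between zones: every flow needs an explicit rule (default deny).
    return (src_zone, dst_zone, port) in CROSS_ZONE_RULES
```

Because no rule names the out-of-band management zone, nothing outside it can reach it, which matches its position at the center of the model.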

Security Monitoring

Going back to the security framework discussed earlier in the chapter, you need to have full visibility into the architecture to be able to understand security threats and risks. Within the Intercloud, security monitoring is achieved by inspecting and analyzing traffic at various security zones as shown in Figure 7-7. The inspection and monitoring solutions leveraged within the Intercloud include an intrusion prevention system for content inspection and analysis, network firewalls for data segmentation and customer zones, traditional VLANs and security groups between the subzones, web inspection firewalls for deep web content analysis, and distributed denial-of-service (DDoS) prevention technologies to protect the entire architecture from internal and external attacks.

Intercloud Identity Architecture

In many cases, authorization is performed by using an identity that is authenticated during peer entity authentication to look up authorizations in an access control list (ACL) or database. Note that other identities besides peer identities may be used to look up entries in a database; however, if these are not tied to peer entity authentication in some way, identity-based accounting and auditing will be difficult.
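A minimal version of this lookup keys the ACL by the identity proven during peer entity authentication and refuses requests that lack one. The ACL contents and the `authorize` function in this Python sketch are hypothetical.

```python
from typing import Optional

# Hypothetical ACL keyed by the identity proven during peer entity authentication.
ACL = {
    "alice@company-a.example": {"project-x": {"read", "write"}},
    "svc-billing": {"metering": {"read"}},
}


def authorize(authenticated_id: Optional[str], resource: str, action: str) -> bool:
    if authenticated_id is None:
        # No authenticated identity: refuse rather than fall back to an
        # unverified name that accounting and auditing could not trace.
        return False
    return action in ACL.get(authenticated_id, {}).get(resource, set())
```

Refusing unauthenticated lookups outright is a design choice in this sketch; the text only notes that identities not tied to authentication make accounting and auditing difficult.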

Identity Challenges in Multitiered Cloud Models

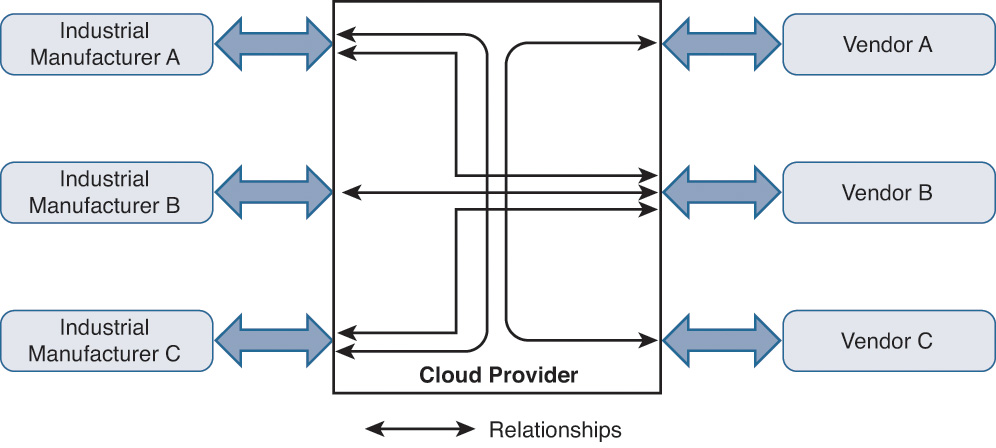

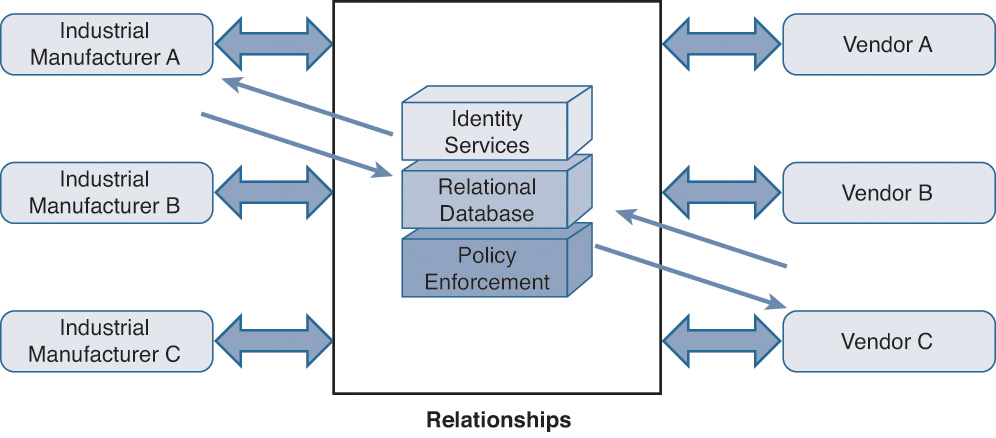

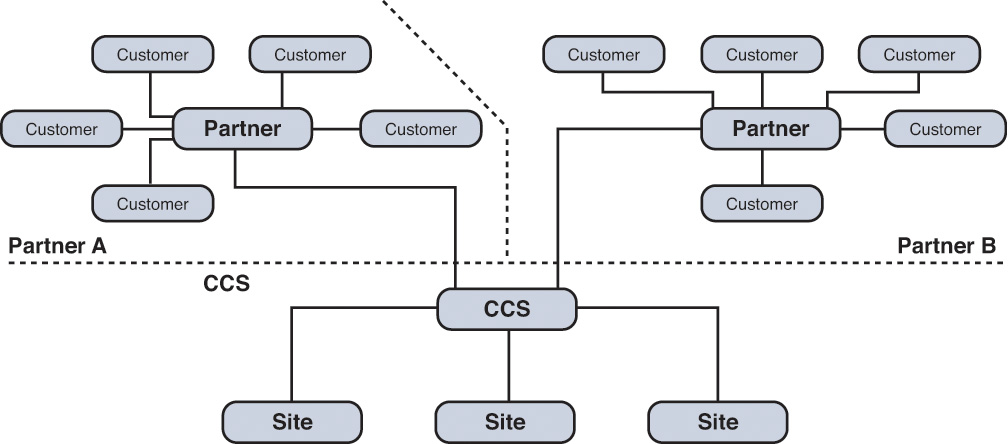

Almost all enterprises have business relationships with their partners. A network is now composed of various vendors that work collaboratively to provide a solution for a customer’s needs. As an example, consider a large industrial manufacturer where you can find appliances from Vendor A, robotic automation from Vendor B, and industrial software from Vendor C. Employees from these vendors regularly visit the manufacturing plant to service their onsite devices or perhaps troubleshoot technical problems. Figure 7-8 shows the high-level topology where some of the partners connect virtually through a cloud provider.

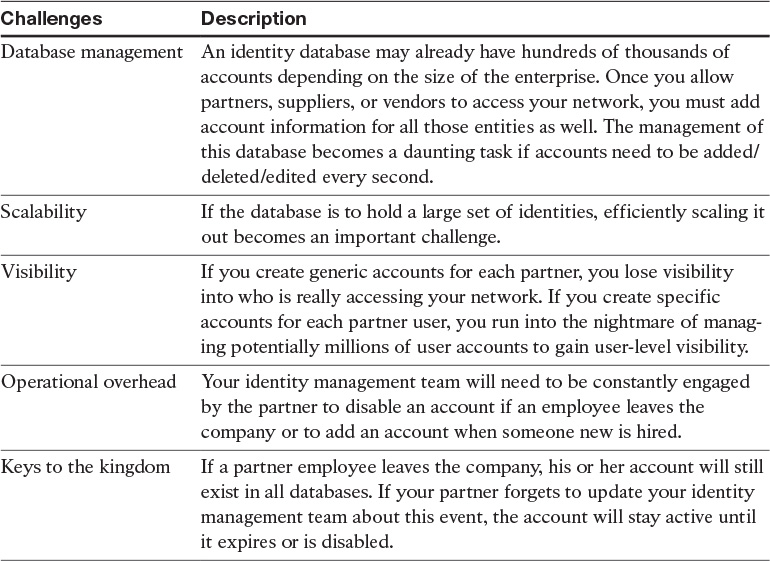

Every time a partner employee needs remote access to the company’s network, the IT team ends up creating specific accounts for all those vendors in their identity system (Active Directory, LDAP, RADIUS, to name a few). This model presents a number of challenges for the customer to efficiently manage this large partner community within its own identity infrastructure. Table 7-1 lists a few important challenges of this deployment model.

The most efficient method to solve identity challenges in a partner ecosystem space is to provide a policy-driven identity brokerage infrastructure where customers can establish identity brokerage services between their business partners in a trust relationship. Individual companies will benefit from authenticating into the partner networks while maintaining their own identity infrastructure and database. By using a closely knit identity federation service, users from one customer (or administrative domain) can access the resources and devices when they roam in the networks that are owned by different customers (or another administrative domain).

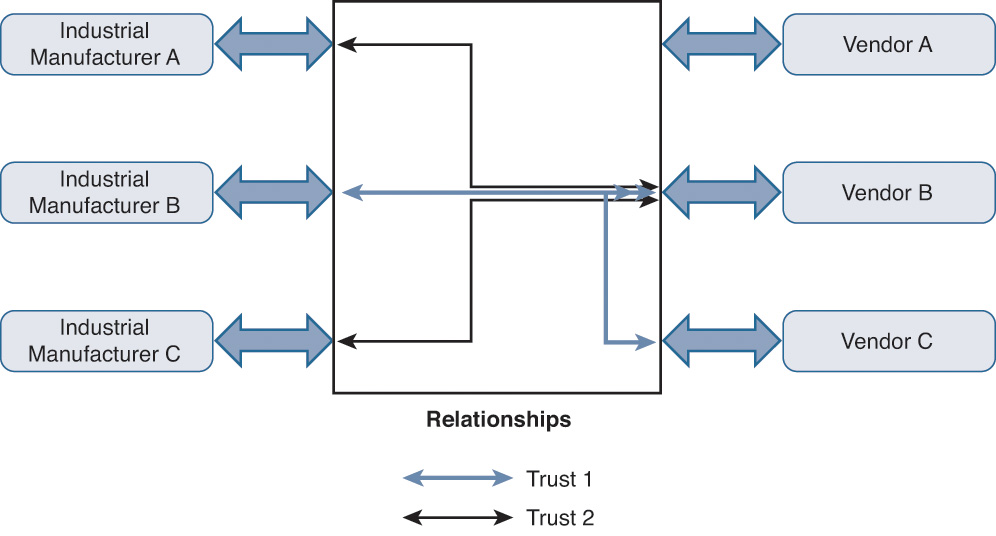

The trust relationship between two partners (or companies) can be defined based on the level of access you want to provide to your business partner, and it can vary from partner to partner. For example, you might provide full access to your infrastructure to one partner but very restricted access to another. You can also apply the same authorization level to multiple partners based on your trust relationship with them, as shown in Figure 7-9.

Figure 7-10 shows two cloud-based identity federations leveraging the same identity infrastructure. The first federation focuses on Company A and all of its potential partners, and the second federation focuses on Company B and all of its potential partners. As you may have noticed, a number of partners exist in both federations and consequently belong to two different trust domains.

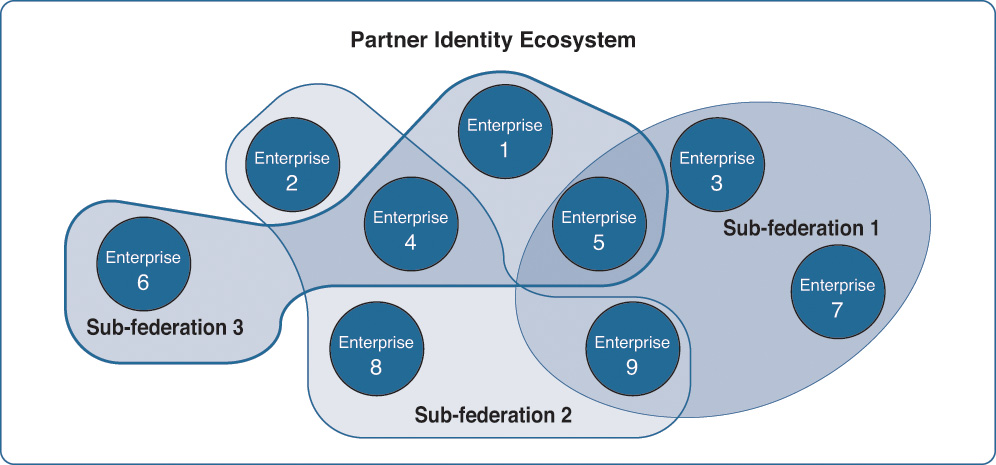

When you start building different trust levels and relationships through a common platform, you are in a way building an ecosystem where entities can register and can communicate with other entities. The level of communication, again, is based on a predefined trust between them. The boundaries and policy enforcement can be controlled within this ecosystem. As can be seen in Figure 7-11, you can define unlimited trust relationships (sub-federations) among the subscribed companies of the identity system. An enterprise can be a part of one (such as Enterprise 2 in Figure 7-11) or can be a part of two or more trust relationships (such as Enterprise 4).
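The federations in Figures 7-10 and 7-11 can be modeled as a mapping from each owning company to its partners and their access levels, so that one partner can hold different levels in different federations. The company names, access levels, and actions in this Python sketch are hypothetical.

```python
# Hypothetical federations: owning company -> {partner: access level}.
FEDERATIONS = {
    "company-a": {"vendor-a": "full", "vendor-b": "restricted", "vendor-c": "restricted"},
    "company-b": {"vendor-b": "full", "vendor-d": "restricted"},
}

# Hypothetical actions permitted at each access level.
LEVEL_ACTIONS = {
    "restricted": {"view-telemetry"},
    "full": {"view-telemetry", "remote-console", "push-firmware"},
}


def partner_may(owner: str, partner: str, action: str) -> bool:
    """Is the partner allowed this action in the owner's federation?"""
    level = FEDERATIONS.get(owner, {}).get(partner)
    return level is not None and action in LEVEL_ACTIONS[level]


def memberships(partner: str) -> list:
    """Every federation (trust domain) the partner belongs to."""
    return sorted(owner for owner, partners in FEDERATIONS.items() if partner in partners)
```

For example, `vendor-b` belongs to both federations but may open a remote console only in `company-b`, mirroring a partner that sits in two trust domains at different trust levels.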

Intercloud User Identity Management

The concept of federation has been around for a number of years. It allows users to access and use resources owned by a different entity. Security researchers have built several architectural models to satisfy the need for the required degree of isolation between the users of the infrastructure, and the nature of the federation relationship between the different domain owners.

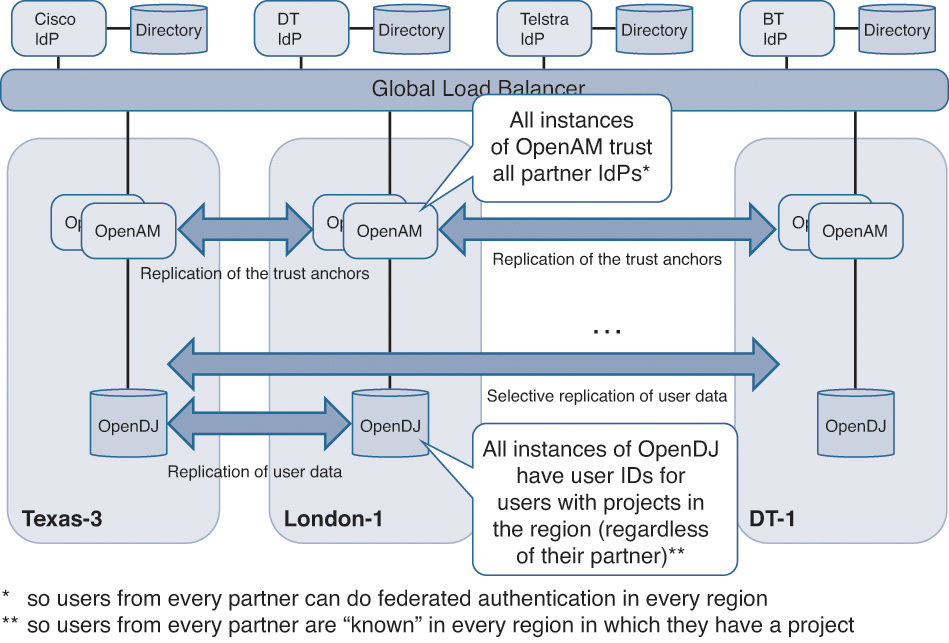

In order to provide a scalable and trusted OpenStack environment, an identity gateway broker is implemented between the Intercloud partner’s environment and the organizations that need to access cloud resources. In the Intercloud, all partners have connectivity in every data center to access resources. The beauty of the Intercloud federation is its simplicity, which establishes a one-time trust, on the one hand, between a particular organization’s identity provider (IdP) and a client cloud and, on the other hand, between the client cloud and the other serving clouds. This eliminates the need for creating all the possible combinations of trust relationships between the IdPs and Intercloud data centers. We achieve that by having all OpenAM deployments act as one single entity, through load balancers, to the IdPs, as illustrated in Figure 7-12. This allows all OpenAM servers to have the same view of the world and the same set of trust relationships.

We want to store user data within the OpenDJ instances in their respective home region(s). The unique user identifier is replicated to the OpenDJ instances for the regions in which a user is a member of a project. This allows a fully operational IdM (identity management) stack in all Intercloud data centers so it can federate with all partners (and through them their customers).

The trust is established by exchanging identity-specific metadata between the providers through out-of-band channels. OpenAM is leveraged to send a user to the right IdP. For example, when a user who hasn’t been authenticated by the home organization’s IdP needs to access a resource, OpenAM determines the right IdP and redirects the authentication request to it. Access is eventually granted based on the user’s membership in a project. This is where the end-to-end trust model within the Intercloud plays a critical role. End users are authenticated within their own cloud provider based on mutual trust. When the user needs to access resources, the cloud provider that authenticated the user sends an identity assertion to the cloud provider that provides the resources, as shown in Figure 7-13.
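The two decisions in this flow, choosing the right IdP for an unauthenticated user and granting access by project membership, can be sketched as follows. This Python example is a simplified illustration only: it does not use OpenAM’s API or real SAML messages, discovery by email domain is an assumed strategy, and the domain-to-IdP table, the `return_to` parameter, and the project data are hypothetical. It also assumes the asserted user name has already been taken from a verified assertion.

```python
from urllib.parse import urlencode

# Hypothetical metadata exchanged out of band: email domain -> IdP login URL.
IDP_BY_DOMAIN = {
    "company-a.example": "https://idp.company-a.example/sso",
    "company-b.example": "https://login.company-b.example/saml",
}

# Hypothetical project membership, replicated to each region's directory.
PROJECT_MEMBERS = {"project-x": {"alice@company-a.example"}}


def login_redirect(user_hint: str, return_to: str) -> str:
    """Pick the user's home IdP and build the redirect URL."""
    domain = user_hint.rsplit("@", 1)[-1].lower()
    idp = IDP_BY_DOMAIN.get(domain)
    if idp is None:
        raise LookupError(f"no trusted IdP for domain {domain!r}")
    return f"{idp}?{urlencode({'return_to': return_to})}"


def grant_access(asserted_user: str, project: str) -> bool:
    """Authorize a user named in an already verified assertion."""
    return asserted_user in PROJECT_MEMBERS.get(project, set())


url = login_redirect("Alice@Company-A.example", "https://cloud.example/p/x")
# https://idp.company-a.example/sso?return_to=https%3A%2F%2Fcloud.example%2Fp%2Fx
```

A domain with no exchanged metadata raises an error rather than falling back to some default IdP, so trust exists only where it was explicitly established.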

Summary

This chapter discussed best practices in security for the cloud and its various implementations. In the context of the Intercloud, the identity and security elements were discussed in detail. We examined the work of key industry groups like the Cloud Security Alliance and how the security models applied to today’s public, private, and hybrid clouds set the foundation for the Intercloud. We also presented a security framework that leverages two important security principles: asset/data visibility and security controls. Finally, we discussed how the security framework can help to correlate data by incorporating threat intelligence and operational processes.

Key Messages

Security is still a major concern in cloud adoption.

Before adopting cloud, perform a cloud security assessment to determine the risks associated with privacy, compliance, and data loss, especially when data is moved outside of certain geographic boundaries.

A secure customer solutions architecture can act as a blueprint for both CSPs and CSUs to ensure that security is fully baked into their infrastructure.

Intercloud leverages scalable and federated OpenStack components to simplify the identity management process.

Intercloud security architecture addresses the challenges that organizations are concerned about.

Intercloud user identity management demonstrates how identity information is passed from one cloud partner to another leveraging the federation services.

2. https://downloads.cloudsecurityalliance.org/initiatives/surveys/financial-services/Cloud_Adoption_In_The_Financial_Services_Sector_Survey_March2015_FINAL.pdf.

4. https://cloudsecurityalliance.org/download/cloud-controls-matrix-v3-0-1/.

5. www.enisa.europa.eu/activities/risk-management/files/deliverables/cloud-computing-risk-assessment.

6. www.enisa.europa.eu/activities/Resilience-and-CIIP/cloud-computing/governmental-cloud-security/security-framework-for-govenmental-clouds.