Estimation discussions, in my experience, have one very valuable outcome. It is not the story points, but the conversations and interactions that happen around the story details. As the team builds familiarity with the user context, business goals, existing framework, technology options, assumptions, and so on, valuable ideas take shape.

Breaking down a user story into tasks (or things to do) helps the team align on the best implementation approach. The pros and cons of an approach are discussed. The trade-offs (what we will not get by following this plan) are discussed. Assumptions are challenged. Risks are identified. Ideation kicks in. The most important outcomes from an estimation session are these ideas, tasks, and questions.

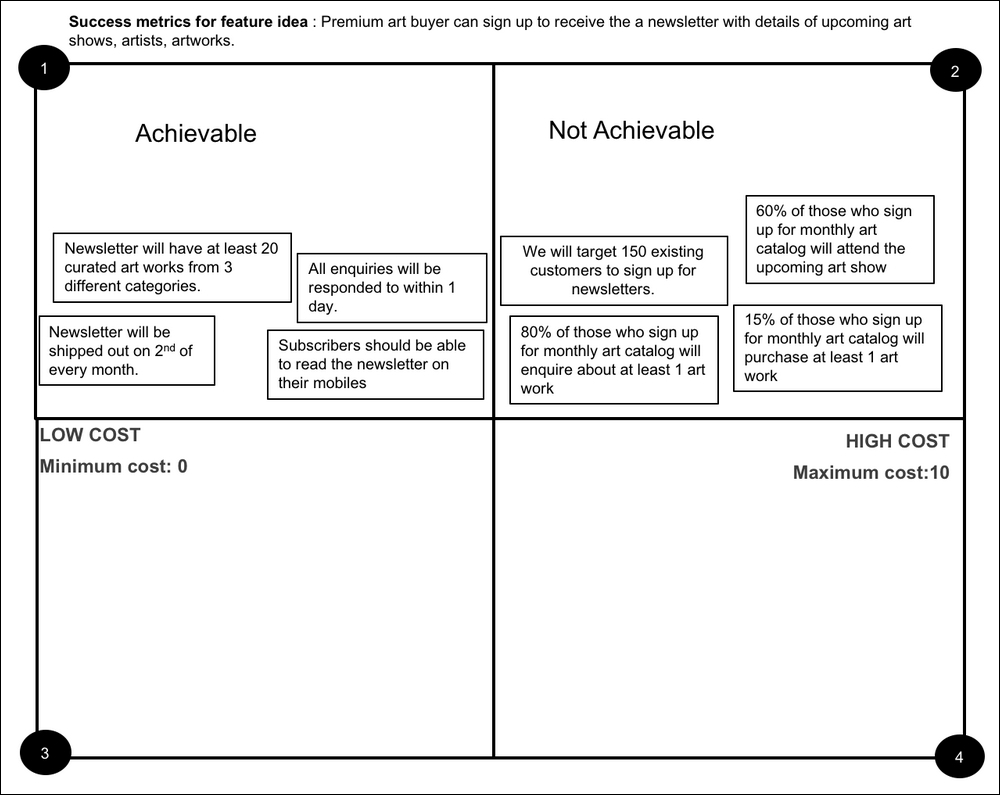

So, if we flip this around and conduct implementation discussions instead of estimation sessions, we can expect that estimates will be just one minor detail to derive. We saw in Chapter 5, Identify the Impact-Driven Product, that each feature idea can be rated on a scale of 0-10, based on the cost to build that feature idea. The rating was based not just on estimates but also on the complexity, technology readiness, risks, dependencies, available skill sets (technical and supporting business functions), and resources (hardware and software).

In order for us to arrive at this cost rating, we can follow this process:

- Break down a feature idea into success metrics: As discussed in Chapter 5, Identify the Impact-Driven Product, we can identify the SMART success metrics, owners, and timelines to measure success.

- Identify success metrics that need technical implementation: The cost for activities that can best be handled manually (for instance, content creation in the case of the ArtGalore art catalog newsletter) can be evaluated differently. Other business functions can evaluate their implementation costs for the success metrics that they own. We can decide when a feature is done (can fully deliver impact) based on which success criteria must be fulfilled in the product for us to launch it. In the case of time-bound feature ideas (where we are required to launch by a certain date or within a certain time period due to market compulsions, such as in the case of ArtGalore), we can identify which success criteria can deliver the most impact and arrive at a doable / not doable decision, as described here in step 6.

- Add details for every success metric: The intent of the details is to increase familiarity with the user context, business outcomes, and internal/external constraints.

- Facilitate an implementation discussion for one feature idea, across all its success metrics: The smaller the number of items to discuss, the fewer the chances of decision fatigue. Enable teams to ideate on implementation, and arrive at tasks to do, risks, dependencies, open questions, assumptions, and so on.

- Call out assumptions: Team size, definition of done (are we estimating for development complete or testing complete or production ready) and so on, also need to be agreed upon.

- In the case of time-bound feature ideas: Arrive at a doable / not doable decision. Can we meet the timeline with the current scope/implementation plan? If not, can we reduce the scope or change the implementation plan? What support do we need to meet the timeline? The intent should be to answer how to meet a success criterion. The intent is not to answer when we will be able to deliver. Team velocity itself becomes irrelevant, since it is no longer about maintaining a predictable pace of delivery, but it is now about being accountable for delivering impact.

Capture these details from the discussion. They form the basis on which we identify a cost rating for the feature idea.

On a scale of 0-10, a feature idea effectively falls into one of two categories: the 0-5 low-cost bucket (doable), which would give us the quick wins and nice to haves, and the 5-10 high-cost bucket (not doable), which would give us the strategic wins and deprioritize buckets. So, while we evaluate the implementation details, the question we're trying to answer is: which box in the impact-cost matrix does this feature idea fall into?
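The bucketing described above can be sketched in a few lines of Python. This is only an illustration, not a prescribed tool: the names `Quadrant` and `classify` are hypothetical, the impact score is assumed to be on the same 0-10 scale as the cost rating, the impact cutoff of 5 is an assumption, and a cost of exactly 5 is arbitrarily treated as low cost because the text leaves that boundary open.

```python
from enum import Enum


class Quadrant(Enum):
    """The four boxes of the impact-cost matrix."""
    QUICK_WIN = "quick win"
    NICE_TO_HAVE = "nice to have"
    STRATEGIC_WIN = "strategic win"
    DEPRIORITIZE = "deprioritize"


def classify(impact: float, cost: float,
             impact_cutoff: float = 5, cost_cutoff: float = 5) -> Quadrant:
    """Place a feature idea (or a single success metric) in a quadrant.

    Assumptions (not from the text): impact is rated 0-10, anything above
    impact_cutoff counts as high impact, and a cost equal to cost_cutoff
    counts as low cost.
    """
    if not (0 <= impact <= 10 and 0 <= cost <= 10):
        raise ValueError("impact and cost must both be between 0 and 10")

    low_cost = cost <= cost_cutoff
    high_impact = impact > impact_cutoff

    if low_cost:
        # Low-cost (doable) bucket: quick wins and nice to haves.
        return Quadrant.QUICK_WIN if high_impact else Quadrant.NICE_TO_HAVE
    # High-cost bucket: strategic wins and deprioritize.
    return Quadrant.STRATEGIC_WIN if high_impact else Quadrant.DEPRIORITIZE
```

For example, `classify(impact=8, cost=3)` lands in the quick win box, while `classify(impact=2, cost=9)` lands in deprioritize. The cutoffs are parameters so that a team can adjust them to its own rating conventions.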

So, we can do the same for each success metric under a feature idea, as shown in the following image. A feature idea could have many success metrics, some of which could be quick wins and some of which could be strategic wins. Some could be nice to haves, and some could fall under deprioritize (depending on the impact score of the feature idea):

We can then evaluate if the feature idea can still deliver value with the quick wins and strategic wins success metrics. We can assess dependencies and so on and then arrive at the overall cost rating for the feature idea. We can arrive at a doable / not doable (within given constraints) decision with a much higher level of confidence.

This allows us to set time-bound goals for developers to work on. The measure of success for product teams becomes the outcomes they have been instrumental in delivering, not the number of story points they have delivered. This can help us to structure teams based on the shorter-term quick-win work streams and longer-term strategic work streams. It also takes away the need to track velocity, and we can now focus on outcomes and success metrics.