All right, we've got Nose installed, so what's it good for? Nose looks through a directory structure, finds the test files, sorts out the tests that they contain, runs the tests, and reports the results back to you. That's a lot of work that you don't have to do each time you want to run your tests—which should be often.

Nose recognizes test files based on their names. Any file or directory whose name contains test or Test, either at the beginning or following any of the characters _ (underscore), . (dot), or - (dash), is recognized as a place where tests might be found. So are Python source files and package directories. Any file that might contain tests is checked for unittest TestCases, as well as for any functions whose names indicate that they're tests. Nose can also find and execute doctest tests, whether they are embedded in docstrings or written in separate test files. By default, it won't look for doctest tests unless we tell it to. We'll see how to change the default settings shortly.
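The naming rule described above can be approximated with a short regular expression. This is only a sketch of the rule as stated in the text, not Nose's own pattern, and the names `TEST_NAME` and `looks_like_test` are invented for this illustration:

```python
import re

# Approximation of the naming rule described above (an assumption, not
# copied from Nose): "test" or "Test" at the start of the name, or right
# after an underscore, dot, or dash.
TEST_NAME = re.compile(r"(?:^|[_.-])[Tt]est")


def looks_like_test(name):
    """Return True if the name matches the approximate naming rule."""
    return TEST_NAME.search(name) is not None


for name in ("test_pid.py", "pid_Test.py", "inline_doctest.py", "contest.py"):
    print(name, looks_like_test(name))
# test_pid.py True
# pid_Test.py True
# inline_doctest.py False
# contest.py False
```

Note that a name failing this check is not necessarily skipped: as the text says, Python source files and package directories are examined as well.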

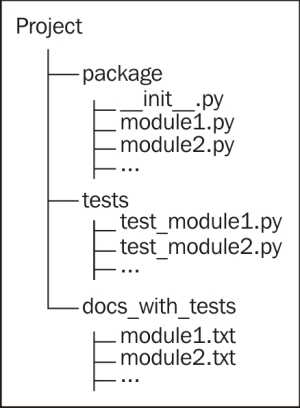

Since Nose is so willing to go looking for our tests, we have a lot of freedom with respect to how we can organize them. It often turns out to be a good idea to separate all of the tests into their own directory or, for larger projects, into a whole tree of directories. A big project can end up having many thousands of tests, so organizing them for easy navigation is a big benefit. If doctests are being used as documentation as well as testing, it's probably a good idea to store them in yet another separate directory with a name that communicates that they are documentary. For a moderately-sized project, this recommended structure might look like the following:

This structure is only a recommendation... it's for your benefit, not for Nose. If you feel that a different structure will make things easier for you, go ahead and use it.

We're going to take some of our tests from the previous chapters and organize them into a tree of directories. Then, we're going to use Nose to run them all.

The first step is to create a directory that will hold our code and tests. You can call it whatever you like, but I'll refer to it as project here.

Copy the pid.py, avl_tree.py, and employees.py files from the previous chapters into the project directory. Also place test.py from Chapter 2, Working with doctest, here, but rename it to inline_doctest.py. We want it to be treated as a source file, not as a test file, so you can see how Nose handles source files with doctests in their docstrings. Modules and packages placed in the project directory will be available for tests no matter where the test is placed in the tree.

Create a subdirectory of project called test_chapter2, and place the AVL.txt and test.txt files from Chapter 2, Working with doctest, into it.

Create a subdirectory of project called test_chapter3, and place PID.txt into it.

Create a subdirectory of project called test_chapter5, and place all of the test_* modules from Chapter 5, Structured Testing with unittest, into it.
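If you prefer to script the directory creation, the steps above could be sketched roughly as follows. The helper name `create_layout` is made up for this example, and the layout is built in a temporary directory purely for demonstration. Copying the source and test files is left out, since where your copies from the earlier chapters live is not known here:

```python
from pathlib import Path
import tempfile

# Subdirectory names taken from the steps above.
TEST_DIRS = ("test_chapter2", "test_chapter3", "test_chapter5")


def create_layout(root):
    """Create project/ and its test subdirectories under root."""
    project = Path(root) / "project"
    for name in TEST_DIRS:
        (project / name).mkdir(parents=True, exist_ok=True)
    return project


# Built in a throwaway directory here; pass a real path to keep the result.
with tempfile.TemporaryDirectory() as scratch:
    project = create_layout(scratch)
    created = sorted(p.name for p in project.iterdir())

print(created)  # ['test_chapter2', 'test_chapter3', 'test_chapter5']
```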

Now, we're ready to run our tests using the following code:

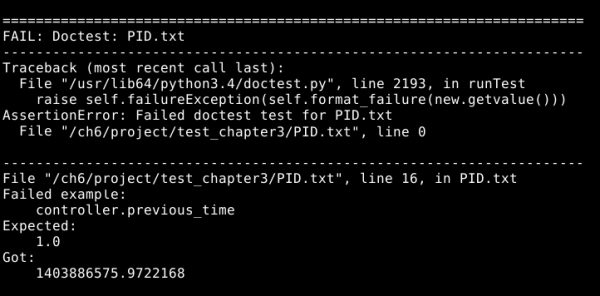

    python3 -m nose --with-doctest --doctest-extension=txt -v

All of the tests should run. We expect to see a few failures, since some of the tests from the previous chapters were intended to fail, for illustrative purposes. There's one failure, as shown in the following screenshot, though, that we need to consider:

The first part of this error report can be safely ignored: it just means that the whole doctest file is being treated as a failing test by Nose. The useful information comes in the second part of the report. It tells us that where we were expecting to get a previous time of 1.0, instead we're getting a very large number (this will be different, and larger, when you run the test for yourself, as it represents the time in seconds since a point several decades in the past). What's going on? Didn't we replace time.time for that test with a mock? Let's take a look at the relevant part of PID.txt:

    >>> import time
    >>> real_time = time.time
    >>> time.time = (float(x) for x in range(1, 1000)).__next__
    >>> import pid
    >>> controller = pid.PID(P = 0.5, I = 0.5, D = 0.5, setpoint = 0,
    ...                      initial = 12)
    >>> controller.gains
    (0.5, 0.5, 0.5)
    >>> controller.setpoint
    [0.0]
    >>> controller.previous_time
    1.0
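In case the replacement for time.time in that excerpt looks cryptic, here is the same trick in isolation. The name `fake_time` is used only for this illustration:

```python
# A generator expression yields 1.0, 2.0, 3.0, ... in order. Its bound
# __next__ method is a zero-argument callable, the same calling shape
# as time.time, so each call returns the next "timestamp".
fake_time = (float(x) for x in range(1, 1000)).__next__

first = fake_time()
second = fake_time()
print(first, second)  # 1.0 2.0
```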

We mocked time.time, sure enough (although it would be better to use the unittest.mock patch function). How is it that from time import time in pid.py is getting the wrong (which is to say, real) time function? What if pid.py had already been imported before this test ran? Then from time import time would already have been run before our mock was put in place, and it would never know about the mock. So, was pid.py imported by something else, before PID.txt imported it? As it happens, it was: Nose itself imported it, when it was scanning for tests to be executed. If we're using Nose, we can't count on our import statements actually being the first to import any given module. We can fix the problem easily, though, by using patch to replace the time function where our test code finds it:

    >>> from unittest.mock import Mock, patch
    >>> import pid
    >>> with patch('pid.time', Mock(side_effect = [1.0, 2.0, 3.0])):
    ...     controller = pid.PID(P = 0.5, I = 0.5, D = 0.5, setpoint = 0,
    ...                          initial = 12)
    >>> controller.gains
    (0.5, 0.5, 0.5)
    >>> controller.setpoint
    [0.0]
    >>> controller.previous_time
    1.0

Don't get confused: we switched to using unittest.mock for this test because it's a better tool for mocking objects, not because it solves the problem. The real solution is that we switched from replacing time.time to replacing pid.time. Nothing in pid.py refers to time.time, except for the import line. Every other place in the code that references time looks it up in the module's own global scope. That means it's pid.time that we really need to mock, and it always was. The fact that pid.time is another name for time.time is irrelevant; we should mock the object where it's found, not where it came from.
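The difference between the two patch targets can be seen in a small, self-contained experiment. The module `demo_pid` and its function `now` are hypothetical stand-ins built on the fly; they only mimic the from time import time line in pid.py, and are not part of the real code:

```python
import time
import types
from unittest.mock import Mock, patch

# Build a throwaway module whose only job is to do `from time import time`
# and then call that name, as pid.py does. (Hypothetical stand-in.)
demo_pid = types.ModuleType("demo_pid")
exec(
    "from time import time\n"
    "\n"
    "def now():\n"
    "    return time()\n",
    demo_pid.__dict__,
)

# Patch the name where it came from: demo_pid already holds its own
# reference to the real function, so now() is unaffected.
with patch.object(time, "time", Mock(return_value=1.0)):
    patched_at_source = demo_pid.now()

# Patch the name where it is looked up: now() sees the mock.
with patch.object(demo_pid, "time", Mock(return_value=1.0)):
    patched_where_used = demo_pid.now()

print(patched_at_source == 1.0)   # False
print(patched_where_used == 1.0)  # True
```

This sketch uses patch.object because the stand-in module is not importable by name. For a real module such as pid, the string form patch('pid.time', ...) shown earlier targets the same attribute.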

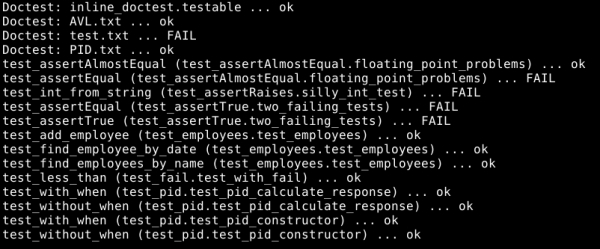

Now, when we run the tests again, the only failures are the expected ones. Your summary report (that we get because we passed -v to Nose on the command line) should look like this:

We just saw how hidden assumptions can break tests, just as they can break the code being tested. Until now, we've been assuming that, when one of our tests imports a module, that's the first time the module has been imported. Some of our tests relied on this assumption to replace library objects with mocks. Now that we're dealing with running many tests aggregated together, with no guaranteed order of execution, this assumption isn't reliable. On top of that, the module that we had trouble with actually had to be imported to search it for tests, before any of our tests were run. A quick switch of the affected tests to use a better approach, and we were good to go.

So, we just ran all of these tests with a single command, and we can spread our tests across as many directories, source files, and documents as we need to keep everything organized. That's pretty nice. We're getting to the point where testing is useful in the real world.

We can store our tests in a separate and well-organized directory structure, and run them all with a single, quick, and simple command. We can also easily run a subset of our tests by passing the filenames, module names, or directories containing the tests we want to run as command-line parameters.
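As a rough sketch of running a subset, the command line can be assembled in Python by appending the targets to the options we used earlier. The helper `nose_command` is invented for this example, and the two directory names are the ones created above:

```python
import shlex
import sys


def nose_command(*targets):
    """Return the argument list for running Nose on the given targets."""
    base = [sys.executable, "-m", "nose",
            "--with-doctest", "--doctest-extension=txt", "-v"]
    return base + list(targets)


# Only the tests under these two directories would be collected.
command = nose_command("test_chapter2", "test_chapter5")
print(shlex.join(command))
```

The printed line can be pasted into a shell from inside the project directory, or the list can be handed to subprocess.run with cwd set to that directory. Filenames and module names can be passed in the same way as the directories.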