9

Content- and Entertainment-Centric Software

9.1 iClouds and MyClouds

In this chapter we review how content and entertainment software has changed over the past five to seven years and set this in the context of contemporary events, including announcements made as this chapter was being written in June 2011.

These include the announcement by Apple of iCloud,1 a set of free cloud services intended to encourage people to store data and run applications remotely (in the cloud) on demand, with access from any device, including the iPhone, iPad, iPod touch, Mac and PC. The assumption is that you might own all of these and that a change made on any one device is reflected on all of them.

iCloud is served from three data centres, the latest of which2 is a 500 000 square foot facility in Maiden, North Carolina, almost five times the size of an existing 109 000 square foot facility in Newark, California, and represents an investment of somewhere between $500 million and $1 billion.

At the same time Microsoft announced upgrades to the Xbox 360, including the ability to watch live TV programmes from Sky TV in the UK, Canal+ in France and Foxtel in Australia, presumably with the intention of inking similar deals with providers in the USA, and in parallel started marketing Microsoft My Cloud.3

In May 2011 Microsoft spent $8.5 billion buying the Skype internet telephony service, and rumours started circulating in the press about their intention to buy the handset business of Nokia, a company whose market value had by then fallen below that of HTC, the Taiwan-based smart-phone manufacturer.

Five years earlier this would have seemed an unlikely scenario, with Nokia consolidating its dominance of the handset market. Unfortunately for Nokia, the company proved over-reliant on Symbian, its home-grown operating system, and failed to realise how much the introduction of the iPhone would affect the smart-phone market and Nokia's position in it. Competition at the lower end of the market in developing countries further eroded market share and margin.

In 2010 Stephen Elop, formerly President of Microsoft's Business Division and before that COO of Juniper Networks, Adobe Systems and Macromedia, joined Nokia as President and CEO.

Microsoft were themselves trying to work out a response to the shift that had occurred in the last quarter of 2010, when smart phones started outselling personal computers (approximately 100 million smart phones against 94 million personal computers).

This had, and has, two implications. First, the added value for Microsoft is close to $40 per PC shipped but only $15 per mobile phone; second, the phone market has to be shared with Apple and Android. Both are problematic. Apple has a first-mover advantage that at the time of writing appears unshakeable. Android is effectively open source and is therefore likely to force down the value that can be realised from an operating system.
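A quick worked comparison makes the scale of the problem concrete. The sketch below simply multiplies the Q4 2010 unit figures by the per-unit values quoted above. Treating every unit shipped as a Microsoft unit is an assumption made purely for illustration, so the phone figure is an upper bound that Apple and Android would in practice erode further.

```python
# Upper-bound comparison of quarterly value at stake, using the figures above.
# Assumption (for illustration only): Microsoft captures every unit shipped.

PCS_SHIPPED = 94_000_000           # personal computers, Q4 2010
SMARTPHONES_SHIPPED = 100_000_000  # smart phones, Q4 2010
VALUE_PER_PC = 40                  # US dollars of Microsoft added value per PC
VALUE_PER_PHONE = 15               # US dollars of Microsoft added value per phone


def value_at_stake(units: int, value_per_unit: int) -> int:
    """Total value in dollars if every unit carried the given added value."""
    return units * value_per_unit


pc_value = value_at_stake(PCS_SHIPPED, VALUE_PER_PC)
phone_value = value_at_stake(SMARTPHONES_SHIPPED, VALUE_PER_PHONE)

print(f"PCs:    ${pc_value / 1e9:.2f} bn")     # PCs:    $3.76 bn
print(f"Phones: ${phone_value / 1e9:.2f} bn")  # Phones: $1.50 bn
```

Even with more phones than PCs shipped, the lower per-unit value leaves the phone opportunity at well under half the PC figure before any market-share losses are counted.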

Buying Nokia might be a solution, but that depends on what happens next to handset hardware and software, in terms of both form factor and functionality. It also depends on the market mix: if everyone bought $500 smart phones, more software value would be realised. What we are trying to establish is how value will be split between hardware and software in future and who will own that value. The answer is hidden somewhere in the hardware and software changes of the last five years, the changes happening today and those likely over the next five years, and in the impact these will have on the smart-phone, TV, games and content and entertainment markets and on application added value. We just need to know where to look. The journey takes us from camera phones via gaming platforms to a future that may be uncertain but, in theory at least, should be predictable. The analysis needs to include failures as well as successes, and the accuracy of any prediction depends on the amount of detail we can capture and the depth of analysis we apply to it. So here we go.

9.2 Lessons from the Past

At CeBIT in March 2005 Samsung launched its V770 camera phone with the stated aim of proving that mid-tier digital camera capability could be packaged into a mobile phone form factor. The V770 was a 7-megapixel phone with interchangeable lenses. In common with many other camera phones, it also supported basic camcorder capability. The product was enthusiastically hailed as a breakthrough by the technical press, but relatively few were sold. The problem was that the product attempted to be a digital camera with a mobile phone added as an extra.

Given that there was no real enthusiasm among operators to provide additional subsidy for the product, it fell between two stools, overtaken by digital cameras that worked better and by mobile phones that worked better and cost less, albeit with less exotic imaging functionality. It also looked odd and felt heavy, bulky and unbalanced, and for all these reasons combined it failed to set a trend.

Six years later Samsung's Galaxy S II smart phone4 offers a similar resolution (8 megapixels) together with LED flash, autofocus, touch focus, face detection, smile shot and image stabilisation, plus a video capability that can record full 1080p HD video and a preinstalled image and video editor. The success of this product suggests that early disappointments (in relative terms) can serve to calibrate later market introductions in a positive way.

Although most high-end cameras will now also shoot HD video, digital camcorders still exist as a discrete product sector, but, as with still cameras, their functionality has changed over time. A Canon digital camcorder priced at around £600 to £700 six years ago (2005) typically had an 800 000-pixel or 1.2-megapixel CCD sensor. The default (DV) video resolution was usually 720 by 480 pixels (345 600 pixels), with the additional sensor pixels used for image stabilisation, digital zoom and still photography. Camcorders with 3- or 4-megapixel CCD sensors were also sold on their ability to produce quality still images in addition to video. Video was typically recorded on to tape or disk and still images on to plug-in memory.

9.2.1 Resolution Expectations

The top-of-the-range Samsung V770 seven-megapixel camera phone could ‘only’ handle QVGA 320 by 240 pixel video (76 800 pixels). Users of camcorders expected 720 by 480 pixel DV resolution (345 600 pixels), more or less equivalent to 640 by 480 pixel VGA (307 200 pixels), which gave adequate quality when displayed on a computer monitor.

The other format found in video phones in 2005 was 176 by 144 pixel QCIF (25 344 pixels) derived from 352 by 288 pixel CIF (101 376 pixels), which is one quarter the size of a standard 704 by 576 pixel PAL TV image known as 4CIF (405 504 pixels).

Note that CIF (common intermediate format) is a standard originally from the TV industry, whereas VGA (video graphics array) was introduced in 1987 as a standard for describing computer monitor resolution. VGA itself is 640 by 480 pixels; the computer display resolutions that followed it now extend to and beyond 1920 by 1080 pixel high-definition TV (2.07 megapixels), which in 1987 was an aspiration but is now a common reality.
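The pixel counts quoted above follow directly from multiplying width by height. The short sketch below reproduces them and checks the quarter-size relationships between QCIF, CIF and 4CIF; the format labels in the dictionary are informal names chosen for this example.

```python
# Pixel counts for the video and display formats discussed above.
# Each entry is (width, height) in pixels.
FORMATS = {
    "QCIF": (176, 144),
    "QVGA": (320, 240),
    "CIF": (352, 288),
    "VGA": (640, 480),
    "DV (NTSC)": (720, 480),   # PAL DV frames are 720 x 576
    "4CIF / PAL": (704, 576),
    "1080 HD": (1920, 1080),
}


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in one frame."""
    return width * height


for name, (w, h) in FORMATS.items():
    print(f"{name:<12} {w:>4} x {h:<4} = {pixel_count(w, h):>9,} pixels")

# Each step from QCIF to CIF to 4CIF doubles both dimensions, i.e. four times the pixels.
assert pixel_count(*FORMATS["CIF"]) == 4 * pixel_count(*FORMATS["QCIF"])
```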

Today HD video recorders are available for around £300; they will work in conditions of almost total darkness (around one and a half lux) and, when required, can capture still images of seven or eight megapixels. Just as digital cameras can now perform as video camcorders, video camcorders can also perform as still cameras. A contemporary product such as Panasonic's HDC-SD90 camcorder5 is a 1080-line HD device that shoots at up to 50 frames a second, with an optional additional lens for capturing 3D images.

9.2.2 Resolution, Displays and the Human Visual System

Just as in audio systems, where you would not generally marry a £5000 amplifier to a pair of £5.00 speakers, there is not much point in capturing high-quality video and showing it on a low-resolution display.

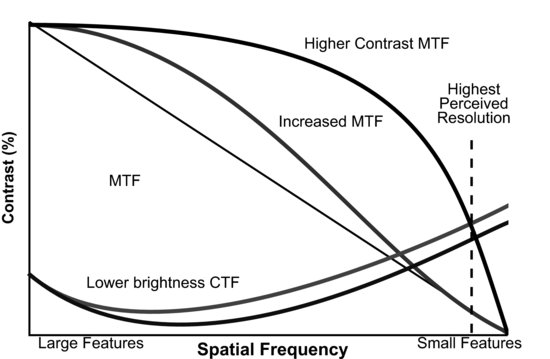

From an end-user-experience perspective the most useful measure of display quality is perceived picture quality. This can be assessed subjectively, by establishing a ‘subjective quality factor’ that is more or less equivalent to the mean opinion score used for voice, or more objectively, using a method known as the modulation transfer function area (MTFA). This is illustrated in Figure 9.1.

The quality of a video stream is partly a function of frame rate and colour depth, but also of the contrast ratio, resolution and brightness of the display. Contrast ratio defines the dynamic range of the display (the luminance of the brightest white the system can generate divided by that of the darkest black). Resolution defines the display's ability to resolve fine detail and is expressed as the number of horizontal and vertical pixels. Brightness is, well, brightness, measured in foot-lamberts or candelas per square metre.
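Both quantities are simple to compute. The sketch below defines contrast ratio as white luminance over black luminance and converts foot-lamberts to candelas per square metre using the standard definition (1 foot-lambert is 1/π candela per square foot). The display values are hypothetical; 14 fL is a commonly quoted cinema screen luminance.

```python
import math

# 1 foot-lambert = (1/pi) cd per square foot, and 1 square foot = 0.09290304 m^2,
# so 1 fL is about 3.426 cd/m^2.
CD_PER_M2_PER_FOOT_LAMBERT = 1 / (math.pi * 0.09290304)


def contrast_ratio(white_luminance: float, black_luminance: float) -> float:
    """Brightest white divided by darkest black (both in the same units)."""
    if black_luminance <= 0:
        raise ValueError("black luminance must be positive")
    return white_luminance / black_luminance


def foot_lamberts_to_nits(fl: float) -> float:
    """Convert foot-lamberts to candelas per square metre (nits)."""
    return fl * CD_PER_M2_PER_FOOT_LAMBERT


# Hypothetical display: 250 cd/m^2 white, 0.25 cd/m^2 black.
print(contrast_ratio(250.0, 0.25))             # 1000.0
print(round(foot_lamberts_to_nits(14.0), 1))   # 48.0
```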

Brightness captures attention, contrast conveys information.

To be meaningful, brightness and contrast need to be characterised across the range of spatial frequencies being displayed. Spatial frequency describes how finely detail is spaced across an image, usually expressed in cycles (line pairs) per millimetre or per degree of visual angle: the smaller the features in an image, the higher the spatial frequency. The overall number of pixels in the display determines the limiting resolution. The modulation transfer function (MTF) describes how contrast varies with spatial frequency: as features get smaller, the contrast the system can reproduce falls. However, this measure does not take into account the limitations of human vision. We need a minimum contrast for an image feature to become distinguishable, and this is measured using a contrast threshold function (CTF).

Figure 9.1 Contrast threshold function and MTFA. Reproduced by permission of Planar Systems Inc. (Clarity Visual Specialist Display Technologies).6

Plotting the modulation transfer function of the imaging system on the same axes as the contrast threshold function of human vision yields a crossover point that determines the highest perceptible resolution; the area between the two curves up to that point is the MTFA.
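The crossover and the area between the curves can be found numerically. The sketch below uses two made-up curves: an exponentially falling display MTF and a contrast threshold that rises with frequency. Real threshold data for the eye is more complex (it has a minimum at mid frequencies), so the functions `mtf` and `ctf` and their constants are hypothetical and the numbers illustrative only. The limiting resolution is where the MTF falls to the threshold, and the MTFA is the area between the curves up to that frequency.

```python
import math


def mtf(f: float) -> float:
    """Hypothetical display MTF: modulation falls exponentially with frequency."""
    return math.exp(-f / 10.0)


def ctf(f: float) -> float:
    """Hypothetical eye contrast threshold: rises with spatial frequency."""
    return 0.005 * math.exp(f / 8.0)


def crossover(lo: float = 0.0, hi: float = 100.0, tol: float = 1e-9) -> float:
    """Bisection for the frequency where the MTF falls to the contrast threshold."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if mtf(mid) > ctf(mid):
            lo = mid  # still above threshold: crossover lies higher
        else:
            hi = mid
    return (lo + hi) / 2


def mtfa(f_max: float, steps: int = 10_000) -> float:
    """Trapezoidal area between the MTF and CTF curves from 0 to f_max."""
    h = f_max / steps
    total = 0.0
    for i in range(steps + 1):
        f = i * h
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * (mtf(f) - ctf(f))
    return total * h


f_limit = crossover()
print(f"limiting resolution ~ {f_limit:.2f} cycles/degree")
print(f"MTFA ~ {mtfa(f_limit):.3f}")
```

Raising contrast (lifting the MTF curve) moves the crossover to the right and enlarges the area, which is the behaviour the text describes.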

Increasing the brightness does not make much difference to the maximum perceived resolution, whereas increasing the contrast ratio significantly increases the amount of image content conveyed to the viewer.

The example in Figure 9.1 applies to benchmarking quality in larger display systems, but the same rule set applies to smaller displays. These performance metrics are important because they define the user's real-world experience: a better-quality image makes for a more immersive experience, and the more immersive the experience, the longer a session will last and the more valuable it should be.

9.2.3 Frame-Rate Expectations

Six years ago the Samsung V770 recorded at 15 or 30 frames per second. Thirty frames per second would normally be considered adequate for video (cinema film runs at 24 fps, PAL at 25 frames or 50 fields per second and NTSC at 30 fps), but a number of streaming applications in 3G networks were being trialled in 2005 at 35 fps (the faster frame rate hides some of the quality issues). Even then, some of the higher-tier camcorders could shoot at 240 frames per second. Camera phones have therefore not destroyed the compact camera, digital SLR or camcorder: these product sectors all remain alive and well, partly due to continuous innovation and the pull-through effect of big-screen HDTV. All of these products have had an impact on memory footprint and memory technologies.

9.2.4 Memory Expectations Over Time

Six years ago the Samsung V770 had 32 MB of internal memory and an MMC micro card slot. The Canon camcorder had 11 GB of storage on its DV cassette plus 8 MB on its SD card. A Hitachi DVD camcorder of the time had 9.4 GB available on its DVD plus an SD card. Both the tape and DVD formats were capable of storing half an hour of DV-quality video and audio.

9.3 Memory Options

9.3.1 Tape

Tape storage is rapidly becoming a historical curiosity, but back in 2005 it was a relatively mainstream option for camcorders. The Mini DV tape usually used had a number of advantages: it was relatively reliable, robust and low cost, at less than $4 for 11 GB of storage. There was, however, a mechanical process involved. The tape moved at 18.9 mm a second past a read/write head drum rotating at 9000 rpm. The heads laid down tracks diagonally across the tape, 12 tracks per frame for PAL and 10 tracks per frame for NTSC. The mechanism meant that the cassette was quite bulky (12.2 mm by 48 mm by 66 mm) and that recording was sequential: random access requires the tape to be mechanically wound forward or back. Tape is thus not suitable (if used on its own) for mixed-media (video, audio and data) applications.
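The helical-scan figures above can be cross-checked against each other. The sketch multiplies frame rate by tracks per frame and divides by the drum's revolutions per second. The result, about two tracks per revolution for both PAL and NTSC, is consistent with a drum carrying two heads; that is an inference from the arithmetic, not a figure given in the text.

```python
DRUM_RPM = 9000  # head drum speed quoted for Mini DV


def tracks_per_second(frames_per_second: float, tracks_per_frame: int) -> float:
    """Diagonal tracks that must be written each second."""
    return frames_per_second * tracks_per_frame


def tracks_per_drum_revolution(frames_per_second: float, tracks_per_frame: int,
                               drum_rpm: float = DRUM_RPM) -> float:
    """Tracks laid down per revolution of the head drum."""
    revolutions_per_second = drum_rpm / 60.0
    return tracks_per_second(frames_per_second, tracks_per_frame) / revolutions_per_second


pal = tracks_per_drum_revolution(25.0, 12)          # PAL: 25 frames/s, 12 tracks/frame
ntsc = tracks_per_drum_revolution(30000 / 1001, 10)  # NTSC: 29.97 frames/s, 10 tracks/frame
print(f"PAL:  {pal:.3f} tracks per revolution")     # PAL:  2.000 tracks per revolution
print(f"NTSC: {ntsc:.3f} tracks per revolution")    # NTSC: 1.998 tracks per revolution
```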

9.3.2 Optical Storage

DVDs, in comparison, can be configured for random access (DVD-RAM) or sequential access (DVD-RW and/or DVD+RW). DVDs come in three sizes: four and a half inch, three-inch mini DVDs and Sony's two and a half inch Universal Media Disc (UMD). Data is stored in a spiral of pits (up to 48 kilometres long for a double-sided DVD) embedded within the disk. On rewritable disks the reflectivity of the recording layer can be changed by heat from a red (650 nanometre) laser that is also used to read the data. A DVD-RAM can be rewritten about 10 000 times and a DVD-RW or DVD+RW about 1000 times (the whole disk gets erased on the write cycle). The disks rotate at between 200 and 500 rpm. Although DVD-RW and DVD+RW are optimised for sequential storage, zone storage and search techniques mean that they can be used for storing and reading a mix of video, audio and data. The spin speed changes to keep the read speed constant as the laser moves out from the centre of the disk. The transfer rate is typically 10 Mbps and the seek time on a DVD-RAM disk is typically about 75 ms.

A standard four and a half inch DVD holds 4.7 GB of data (9.4 GB on a double-sided disk), a mini DVD holds 1.4 GB and a UMD holds 1.8 GB. And, as stated in an earlier chapter, moving to a blue laser at 405 nanometres rather than a red laser (Blu-ray Disc) increases available storage to 25 GB on a single-layer disk, enough for a two-hour HD movie.
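The capacity gain from a shorter wavelength can be estimated to first order. The focused spot diameter scales roughly with wavelength divided by the numerical aperture (NA) of the lens, so areal density, and hence capacity per layer, scales with (NA / wavelength) squared. The optical parameters below (650 nm at NA 0.60 for DVD, 405 nm at NA 0.85 for Blu-ray) are standard published values assumed for this sketch rather than figures from the text, and the model ignores coding and format differences.

```python
def scaled_capacity(base_gb: float, base_nm: float, base_na: float,
                    new_nm: float, new_na: float) -> float:
    """First-order capacity estimate: density scales with (NA / wavelength)^2."""
    density_gain = (new_na / base_na) ** 2 * (base_nm / new_nm) ** 2
    return base_gb * density_gain


dvd_layer_gb = 4.7
# Assumed optics: DVD 650 nm at NA 0.60; Blu-ray 405 nm at NA 0.85.
wavelength_only = scaled_capacity(dvd_layer_gb, 650, 0.60, 405, 0.60)
wavelength_and_na = scaled_capacity(dvd_layer_gb, 650, 0.60, 405, 0.85)
print(f"blue laser, DVD optics:   {wavelength_only:.1f} GB")    # 12.1 GB
print(f"blue laser, higher NA:    {wavelength_and_na:.1f} GB")  # 24.3 GB
```

The wavelength change alone gives only about 12 GB; the higher-aperture lens accounts for the rest of the step to roughly the 25 GB of a single-layer Blu-ray disc.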

The Sony Universal Media Disc was used (as a read-only medium) in the original Sony PlayStation Portable (PSP), released in 2004. The PSP was significant in that it could deliver PS2-quality graphics on a high-brightness screen. The PS2, the second generation of PlayStation produced by Sony, had been released in March 2000 in Japan and competed with Microsoft's Xbox, the Nintendo GameCube and Sega's Dreamcast. The PS2 outsold all other consoles, reaching 150 million units shipped by early 2011, with over 2000 titles available and over 1.5 billion copies sold since launch.

In 2007 Sony introduced a new portable device, the PSP-2000, also known as the PSP Slim because it was 33% lighter and 19% slimmer. In 2008 the PSP-3000 was introduced, with an improved LCD screen offering five times the contrast ratio and a built-in microphone. A redesigned and smaller model, the PSP Go, was launched in 2009. This removed the UMD drive and relied on WiFi connectivity for game downloading and on Bluetooth for headsets and connection to mobile handsets.

The PSP2, also called the Sony NGP (Next Generation Portable) and subsequently named the PlayStation Vita, was announced in June 2011 with an OLED7 multitouch front display and a multitouch pad on the back. The product included WiFi and 3G connectivity, with a ‘Near’ application that allows users to discover other Vita users nearby and ‘Party’, which allows users to voice chat or text chat while they are gaming.

Figure 9.2 Sony PlayStation Vita. Reproduced with permission of Sony Computer Entertainment Inc.

The device has front and rear cameras with a frame rate of 60 or 120 frames per second, a six-axis motion-sensing system (three-axis gyroscope and three-axis accelerometer), a three-axis electronic compass, built-in GPS and Bluetooth. It is shown in Figure 9.2.

9.4 Gaming in the Cloud and Gaming and TV Integration

Taking the Sony portable offerings as a reference, over a period of five years this product sector has moved from a business model based on shipping products on an optical disk to one based on paying for games downloaded over the internet and stored in local memory.

It seems reasonable to assume that this model will now evolve into downloading games on demand as and when needed, effectively cloud-based gaming. Similarly, the Microsoft Xbox is evolving into a dual-purpose entertainment platform that can store, record and play TV programmes and/or download games from the internet. The Xbox therefore needs a substantial amount of hard-disk space, of the order of 250 GB.

A desktop hard disk is now typically a three and a half inch device spinning at 7200 rpm, or at between 10 000 and 15 000 rpm for high-performance drives. Capacity in 2005 was typically 100 GB; six years later it is typically between 750 and 1000 GB (one terabyte). The power budget of these devices (8 to 10 W) precludes their use in portable products.

Laptops typically use two and a half inch hard disks offering 250 GB of capacity. A spin speed of 10 000 rpm produces a seek time of under 5 milliseconds, but power consumption is still over 5 W. Reducing the spin speed to 5000 rpm increases the seek time to about 15 milliseconds but reduces the power to about 3 W.

In 2005 iPods used 1.8-inch hard disks with between 20 and 60 GB of storage and a variable spin speed of 3990 to 4200 rpm. Power consumption was about 1.3 W but, as with other portable products, solid-state memory has since taken over. In 2005 vendors were promoting either 1-inch drives (Hitachi) or 0.8-inch ‘microdrives’ (Toshiba and Samsung) for cellular phones.

The one-inch drives fitted into a Type II PC card form factor (5.2 mm) and weighed 16 g. Microdrives had a depth of 3.3 mm and weighed 10 g. One-inch disks typically held about 8 to 10 GB and microdrives either 2 or 4 GB. Both had a spin speed of 3600 rpm, giving a seek time of 12 to 15 milliseconds and a transfer rate of 4 or 5 MB/s. A one-inch drive had a power consumption of just under one watt and a 0.85-inch drive about 700 milliwatts.
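Part of the access-time penalty of a slower spindle is rotational latency: on average the head has to wait half a revolution for the wanted sector to come round. The helper below computes only that component for the spin speeds mentioned above. The quoted seek times are larger because they also include moving the head arm across the tracks.

```python
def average_rotational_latency_ms(rpm: float) -> float:
    """Mean wait for a sector to arrive: half a revolution, in milliseconds."""
    seconds_per_revolution = 60.0 / rpm
    return seconds_per_revolution / 2 * 1000


for rpm in (15_000, 10_000, 5_000, 4_200, 3_600):
    print(f"{rpm:>6} rpm: {average_rotational_latency_ms(rpm):.2f} ms")
```

At 3600 rpm the rotational component alone is over 8 ms, which helps explain why the small drives could not match desktop access times.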

Some cellular handsets became available with hard disks (the Samsung V5400, for example, and the 3-GB SGH-i300 launched in the UK), and Toshiba brought forward the availability of a 4-GB 0.85-inch hard disk, but in practice over the past six years solid-state memory has eclipsed all other options for mobile applications. A hard drive remains the most economical and space-efficient way to put a terabyte of storage on your desktop but is not well suited to a mobile product form factor.

9.4.1 DV Tape versus DVD versus Hard-Disk Cost and Performance Comparisons

Tapes may well continue to disappear from the market, but they still offer good value for money. A Mini DV tape costing $4 implies a storage cost of less than 0.04 of a cent per megabyte, but the medium cannot be used for random-access applications.

An optical disk could potentially offer similar cost/performance ratios, with the added benefit of random access as well as sequential storage.

A 4-GB microdrive can provide sequential storage and fast random access (15 milliseconds rather than the 75 milliseconds of a DVD), but at 2005 prices it cost about 10 cents per megabyte and, as we have stated, it is not well suited to portable products.
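The per-megabyte figures can be checked with simple arithmetic, treating 1 GB as 1000 MB (an assumption; binary units would shift the numbers slightly). At these 2005 prices the microdrive works out at roughly 275 times the cost per megabyte of tape, which is the price paid for random access.

```python
def cents_per_megabyte(price_dollars: float, capacity_mb: float) -> float:
    """Storage cost in US cents per megabyte."""
    return price_dollars * 100 / capacity_mb


tape = cents_per_megabyte(4.0, 11_000)   # Mini DV tape: $4 for 11 GB
microdrive = 10.0                        # cents per MB, as quoted for a 4-GB microdrive
microdrive_price = microdrive / 100 * 4_000

print(f"Mini DV tape: {tape:.4f} cents/MB")                     # 0.0364 cents/MB
print(f"Implied 4-GB microdrive price: ${microdrive_price:.0f}")  # $400
print(f"Microdrive / tape cost ratio: {microdrive / tape:.0f}x")  # 275x
```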

9.4.2 Hard-Disk Shock Protection and Memory Backup

A number of manufacturers (Toshiba, for example) build MEMS-based accelerometers into hard-disk drives to detect when a product is being dropped, so that the head can be kept clear of the disk to prevent damage. Analog Devices (low-g iMEMS) and STMicroelectronics have digital accelerometers targeted at hard-disk protection.
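The detection principle is that a device in free fall reads close to zero acceleration on all three axes, so the magnitude of the measured vector drops well below 1 g. A minimal sketch follows; the 0.3 g threshold and five-sample confirmation window are hypothetical values, not taken from any vendor's part. The `fall_time_s` helper shows how little time is available: a drop from about desk height (0.75 m) lasts under 0.4 s.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def fall_time_s(height_m: float) -> float:
    """Time to fall a given height from rest, ignoring air resistance."""
    return math.sqrt(2 * height_m / G)


def detect_free_fall(samples, threshold_g=0.3, min_samples=5):
    """Return the sample index at which free fall is declared, or None.

    samples: sequence of (x, y, z) accelerations in units of g.
    Free fall is declared after min_samples consecutive readings whose
    vector magnitude is below threshold_g (hypothetical tuning values).
    """
    run = 0
    for i, (x, y, z) in enumerate(samples):
        if math.sqrt(x * x + y * y + z * z) < threshold_g:
            run += 1
            if run >= min_samples:
                return i  # in a drive, this is where the head would be parked
        else:
            run = 0
    return None


# Hypothetical trace: at rest (1 g on the z axis), then dropped.
trace = [(0.0, 0.0, 1.0)] * 10 + [(0.02, -0.01, 0.05)] * 10
print(detect_free_fall(trace))  # 14
print(f"{fall_time_s(0.75) * 1000:.0f} ms to fall 0.75 m")
```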

This is now standard in most laptops, though if you have dropped a laptop your problems may be more fundamental than just a damaged memory. Also, laptops are increasingly moving to solid-state memory linked to cloud connectivity, what used to be called the thin-client model.

9.5 Solid-State Storage

This thin-client model has mixed implications for the solid-state memory market. On the one hand, there is an increasing need for short-term storage; on the other, thin clients linked to cloud connectivity imply that neither the application nor the content is stored in the device, both being downloaded on demand.

The future of both embedded memory and plug-in memory is thus open to debate. The debate benefits from an understanding of the various solid-state memory options and their relative advantages and disadvantages.

9.5.1 Flash (NOR and NAND), SRAM and SDRAM

Flash-based solid-state memory can be configured to look and behave like a hard disk (given its ability to retain data when powered down) and has the big advantage of having no moving parts. It is, however, more expensive and comes in a bewildering range of form factors.

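Embedded nonvolatile (flash) memory in a cellular handset is typically a mix of NOR for code storage and NOR and NAND for data storage. The names refer to how the cells are connected: NOR cells are wired in parallel to the bit line, like the inputs of a NOR gate, which allows fast random reads and lets code execute in place; NAND cells are wired in series strings, like a NAND gate, and are read and written a page at a time. NAND costs less per bit than NOR because the cells in a string share contacts and select transistors, so each cell occupies less silicon. NAND is more error prone, but the cost benefits make it the preferred option for most embedded data-storage applications. Wear-levelling techniques, which spread write and erase cycles evenly across the device, have also increased effective endurance to typically 100 000 write cycles or more, even on extreme-geometry devices.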
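Wear levelling works by spreading erase cycles across all blocks so that no single block reaches its endurance limit early. The toy sketch below implements one simple policy: because flash cannot be overwritten in place, each write goes to the least-erased free block and the old copy is erased and returned to the pool. The class and its structure are hypothetical; real flash controllers are considerably more elaborate.

```python
class WearLeveller:
    """Toy dynamic wear leveller: each write goes to the least-erased free block."""

    def __init__(self, num_blocks: int):
        self.erase_counts = [0] * num_blocks
        self.free = set(range(num_blocks))
        self.mapping = {}  # logical block -> physical block

    def write(self, logical: int) -> int:
        # Flash cannot be overwritten in place, so pick a fresh physical block,
        # preferring the least-erased one (ties broken by block number).
        physical = min(self.free, key=lambda b: (self.erase_counts[b], b))
        self.free.remove(physical)
        # Retire the previous copy: it must be erased before it can be reused.
        old = self.mapping.get(logical)
        if old is not None:
            self.erase_counts[old] += 1
            self.free.add(old)
        self.mapping[logical] = physical
        return physical


wl = WearLeveller(num_blocks=8)
for _ in range(1000):
    wl.write(0)  # hammer a single logical block
print(wl.erase_counts)
```

Without levelling, all 999 erases would fall on one physical block; with it, they are shared almost equally across the eight blocks.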

Flash memory, invented at Toshiba and developed commercially by Intel among others, stores each bit in a transistor with two gates: a ‘control gate’ and a ‘floating gate’, the latter isolated by a thin oxide layer. The state of the floating gate is changed by a process known as tunnelling: a voltage applied to the control gate pushes electrons through the oxide layer on to the floating gate (or pulls them off it when the cell is erased). A sense circuit then measures the current passing through the cell: if it is more than 50% of a reference value the state is 1; if less, the state is 0. The charge stays on the floating gate until another voltage is applied. In other words the memory is nonvolatile: it retains its state even when power is not applied to the device.

The embedded (nonvolatile) flash memory sits alongside volatile but faster SRAM (static random access memory) and DRAM (dynamic random access memory). Random access means just that: the memory is optimised for fast random access rather than steady sequential access.

SRAM offers very fast read/write cycles (a few nanoseconds), but the data is held in a volatile flip-flop. Because the flip-flop holds its state for as long as power is applied, SRAM needs no refreshing (hence the description static), but it uses several transistors per bit and so is relatively expensive and power hungry. SRAM is used for temporary cache memory.

DRAM holds each bit as charge on a capacitor in a storage cell rather than in a flip-flop; because that charge leaks away and has to be refreshed, it is called dynamic. DRAM offers the lowest cost per bit of the random-access solid-state memories. A typical DRAM device will store up to 64 MB (512 Mb). SDRAM is synchronous DRAM: synchronous means the device synchronises its interface with the system clock that also drives the CPU (central processing unit).

DRAM products are inherently power hungry in that the storage cells leak and require a periodic refresh to maintain the information stored in the device. There are techniques such as temperature-compensated refresh, partial array self-refresh and deep power down that help reduce power drain; products using them are sometimes described as mobile DRAM or mobile SDRAM. The volatility and related power drain of these devices, together with their optimisation for random rather than sequential access, make them unsuitable for storing large amounts of video requiring sequential access.
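The burden of refresh can be estimated from the retention window and the number of refresh operations needed to cover the whole array. The sketch assumes a 64 ms window and 8192 refresh commands, typical of JEDEC-style SDRAM parts, and a hypothetical 100 ns per refresh command. It shows the device being interrupted every few microseconds whether or not it is in use, which is why techniques such as partial array self-refresh matter for battery life.

```python
def refresh_interval_us(retention_ms: float, refresh_commands: int) -> float:
    """Average spacing between refresh commands if the whole array is
    refreshed once per retention window (distributed refresh)."""
    return retention_ms * 1000 / refresh_commands


def refresh_overhead(retention_ms: float, refresh_commands: int,
                     refresh_time_ns: float) -> float:
    """Fraction of time the device is busy refreshing rather than serving accesses."""
    interval_ns = refresh_interval_us(retention_ms, refresh_commands) * 1000
    return refresh_time_ns / interval_ns


# Assumed figures: 64 ms window, 8192 refresh commands, 100 ns per refresh (hypothetical).
print(f"{refresh_interval_us(64, 8192):.2f} us between refreshes")       # 7.81 us
print(f"{refresh_overhead(64, 8192, 100):.2%} of time spent refreshing")  # 1.28%
```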

It has therefore become increasingly common to supplement embedded memory with plug-in memory. Most plug-in memory consists of one or more NAND flash devices packaged with a dedicated microcontroller.

In the context of a cellular handset, plug-in memory can be summarised in terms of when the technology was introduced.

9.5.2 FLASH Based Plug-In Memory – The SIM card as an Example

9.5.2.1 The SIM Card

The SIM card (subscriber identity module) became an integral part of the GSM specification process in the mid to late 1980s. The original idea was that it should be embedded in a full-size ISO card (the standard form factor for credit cards), but as phone form factors became smaller through the 1990s it became normal to use the device on its own. The device is 0.8 mm high, which apart from the Smart Media card (see below) makes it the thinnest of the plug-in memories, and it is one of the smallest (25 mm × 15 mm). Early SIMs consisted of an 8-bit microcontroller, 8 KB of ROM and 250 or so bytes of RAM. They were used primarily to store the subscriber's identity (IMSI) and temporary identity (TMSI) and to run the A3 and A8 challenge-and-response authentication and cipher-key generation algorithms; the A5 encryption algorithm itself runs in the handset. Early SIMs also had a limited amount of data bus bandwidth, typically 100 kbps.
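The challenge-and-response idea can be sketched as follows. The network sends a 128-bit random challenge (RAND); the SIM combines it with its secret 128-bit key Ki to produce a 32-bit signed response (SRES) and a 64-bit cipher key (Kc), and Ki never leaves the card. HMAC-SHA256 stands in for A3/A8 here purely as an assumption for illustration: it is not the algorithm GSM SIMs used (operators chose their own, such as COMP128), and the function name `sim_a3_a8` is hypothetical.

```python
import hashlib
import hmac
import os


def sim_a3_a8(ki: bytes, rand: bytes) -> tuple[bytes, bytes]:
    """Stand-in for the SIM's A3/A8 step (HMAC-SHA256 used for illustration only).

    Returns (SRES, Kc): a 32-bit response and a 64-bit cipher key.
    """
    digest = hmac.new(ki, rand, hashlib.sha256).digest()
    return digest[:4], digest[4:12]


# Network side: the authentication centre shares Ki with the SIM and issues a challenge.
ki = os.urandom(16)    # 128-bit subscriber key, held only in the SIM and the network
rand = os.urandom(16)  # 128-bit random challenge sent over the air
expected_sres, network_kc = sim_a3_a8(ki, rand)

# Handset side: the SIM computes the response; only SRES is sent back to the network.
sres, sim_kc = sim_a3_a8(ki, rand)

authenticated = hmac.compare_digest(sres, expected_sres)
print("authenticated:", authenticated)  # authenticated: True
# Both sides now hold the same Kc, which the handset passes to the A5 cipher.
```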

A SIM in 2005 had the same mechanical form factor but used a 16-bit or 32-bit microcontroller. Most vendor product roadmaps then suggested that one gigabyte of data storage would be technically and economically feasible within two to three years, coupled with a faster bus (up to 5 Mbit/s) and a faster clock (up to 5 MHz). These devices were termed HC SIMs (high-capacity SIMs), MegaSIMs or SuperSIMs, depending on the vendor, and were targeted at the image storage market. A SIM used with a UMTS phone was known as a USIM (universal subscriber identity module), with additional functionality in terms of device and service identification. An ISIM was a SIM optimised to work with the IP multimedia subsystem (IMS) and intended to provide the basis for IP quality-of-service (QoS) management and control.

Although the SIM remains a mandated component within UMTS and a mandated part of the 3GPP1 and 3GPP2 standardisation process, its longer-term role in managing authentication, access and service control is being challenged by microprocessor and memory manufacturers.

ARM11 microprocessors, for example, were promoted in terms of their ability to manage user keys and as a mechanism for providing network virus and m-commerce protection. ARM/TI dual-core microprocessor/DSPs were promoted in terms of their ability to provide end-to-end security, multimedia and digital rights management.

Intel were promoting their 2005 offering ‘Bulverde’ on the basis of its built-in hardware-based security capabilities (DES, triple DES and AES encryption) and its conformance with IT industry security standards (EAL4+/EAL5+ evaluation assurance levels) rather than telco industry security standards.

The SD and MMC cards available at the time also promoted enhanced security and authentication features as part of their future network-operator-focused value-added proposition. Smart card/SIM/storage card combinations such as MOPASS (a Hitachi-led consortium working on SIM card/memory card functional integration) strengthened this story. These products were sometimes described as ‘bridge media’ products.

It was thus possible that some of the functionality proposed for the USIM/ISIM would migrate on to competing device platforms, particularly in markets with no prior SIM/USIM experience. A 1-GB SIM could also be expected to be insufficient for many of the evolving imaging applications, particularly video storage and movie image management and/or any associated gaming applications.

And that's how it has turned out so far. SIM cards have not made significant inroads into new markets and still predominantly perform the functions that they have always performed.

9.5.2.2 Compact Flash8

In 1994 SanDisk introduced Compact Flash, a 4-MB flash card measuring 3.3 mm (Type I PC card depth) by 36.4 mm by 42.8 mm and weighing 11 g. Compact Flash was compatible with the Integrated Drive Electronics (IDE) standard, which meant that the card looks like a (small) hard disk to the operating system. Capacities have ranged from 16 MB to 12 GB, and the products have been widely used in digital cameras. The devices could withstand a shock of 2000 g, equivalent to a 10-foot drop, and were claimed to be able to retain data for up to 100 years. Specification revisions included an increase in data transfer rate from 16 MB/s to 66 MB/s.

9.5.2.3 Smart Media9

In 1995 Toshiba launched Smart Media, an 8-MB device. This was the slimmest of the plug-in memories, with a depth of only 0.76 mm, but it had the disadvantage of depending on the host controller to manage memory reads and writes (it had no built-in controller and was simply a NAND chip in a thin package). This, together with a relatively high pin count, limited its application in smaller form factor devices, although it found its way into a number of digital cameras and camcorders (for still-image storage).

9.5.2.4 Multimedia Cards10

Introduced in 1997 by Siemens and SanDisk, multimedia cards were targeted from the start at small form factor cell phones and (not surprisingly, given their name) multimedia applications. Standard MMC cards had a depth of 1.4 mm and were 24 mm wide and 32 mm long. An MMC micro card was 18 mm long.

There was an MMC Plus and HS (high speed) road map with a variable-width bus (1, 4 or 8 bits), a 52-MHz clock rate (a multiple of the GSM 13-MHz clock), a 52-MB/s transfer rate and an access time of 12 milliseconds (equivalent to a hard disk at the time). Capacities ranged from 32 MB to 2 GB. A standards group working with the Consumer Electronics Advanced Technology Attachment (CE-ATA) standards body was established to address how an MMC card would interoperate with disk-drive memory and looked at future optimisation of MMC devices for audio and video stream management.
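The 52-MB/s figure follows directly from the bus width and clock rate. The sketch assumes one bit per data line per clock cycle (single data rate), so peak throughput in bytes per second is width times clock divided by eight; sustained rates in practice are lower because of command and file-system overhead.

```python
def peak_transfer_mb_per_s(bus_width_bits: int, clock_mhz: float) -> float:
    """Peak rate for a single-data-rate bus: one bit per data line per clock."""
    return bus_width_bits * clock_mhz / 8


for width in (1, 4, 8):
    print(f"{width}-bit bus at 52 MHz: {peak_transfer_mb_per_s(width, 52):.1f} MB/s")
# 1-bit: 6.5 MB/s, 4-bit: 26.0 MB/s, 8-bit: 52.0 MB/s
```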

9.5.2.5 Memory Stick11

The Memory Stick was introduced by Sony in 1998. It had a depth of 2.8 mm and a width of 21.5 mm and was 50 mm long (the same length as an AA battery). The top-of-the-range model in 2005 was the Memory Stick Pro, providing 2 GB of storage with a maximum transfer rate of 20 MB/s and a minimum write speed of 15 Mbps. A thinner version, the Memory Stick Pro Duo, had a depth of 1.6 mm and a capacity of up to 512 MB. These devices were optimised for video (and other types of media including images, audio, voice, maps and games). The high transfer speeds needed for video did, however, have a cost in terms of power drain: a Memory Stick used at full throttle drew upwards of 360 milliwatts. Sony had a product road map showing 4-GB devices available by the end of 2005 and 8-GB devices by the end of 2006. Five years later, in 2011, a 32-GB Memory Stick costs £40 and a 2-GB one costs £4.00. Meanwhile most of us now use the generic USB ‘memory sticks’ given away as promotional items at conferences, which was probably not the business model that Sony originally anticipated.

9.5.2.6 SD (Secure Digital) Cards12

Introduced by a group of manufacturers including SanDisk, Toshiba and Panasonic in 2000/2001, the SD card was developed to be backwards compatible with (most) MMC cards, but with a nine-pin rather than a seven-pin interface and (as the name implies) extended digital rights management capabilities.

The standard SD card was the same width and length as the standard MMC card (24 by 32 mm) but thicker (2.1 mm) and therefore able to support slightly more storage, with a road map of 2- to 8-GB devices. The device weighed 2 g and had two smaller cousins: the miniSD card, which was 1.4 mm by 21.5 mm by 20 mm and weighed 1 g, and the microSD card, which was 1 mm by 11 mm by 15 mm and weighs virtually nothing. As you may have noticed, miniSD and microSD cards were not compatible with MMC micro cards. Access times were similar to MMC. Rather like the Memory Stick, the SD card had separate file directories for voice (SD voice), audio (SD audio) and video (SD video).

9.5.2.7 xD Picture Cards13

Introduced by Toshiba, Fuji and Olympus in 2002, xD Picture Cards had a depth of 1.7 mm, a width of 20 mm and a length of 25 mm, weighed 2 g and were at the time available in capacities up to 1 GB, with a 2- to 8-GB product road map similar to that of the Memory Stick. A 1-GB card would store about 18 minutes of QuickTime movie at VGA resolution (640 by 480) at 15 fps including sound, or 9 minutes at 30 fps.
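Working backwards from the recording times gives the average bit rate the camera must have been writing. The sketch assumes decimal units (1 GB = 10^9 bytes) and ignores file-system overhead. Halving the recording time when the frame rate doubles corresponds to the bit rate doubling, from roughly 7.4 to 14.8 Mbit/s.

```python
def implied_bitrate_mbps(capacity_gb: float, minutes: float) -> float:
    """Average bit rate in Mbit/s implied by filling a card in a given time.

    Assumes 1 GB = 10**9 bytes and ignores file-system overhead.
    """
    return capacity_gb * 1e9 * 8 / (minutes * 60) / 1e6


print(f"15 fps: {implied_bitrate_mbps(1, 18):.1f} Mbit/s")  # 15 fps: 7.4 Mbit/s
print(f"30 fps: {implied_bitrate_mbps(1, 9):.1f} Mbit/s")   # 30 fps: 14.8 Mbit/s
```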

9.5.2.8 USB Flash Drives/Portable Hard Drive14

USB flash drives were slightly different in that they were specifically designed for moving data files to or from a hard disk and plugged straight into a USB port. This meant that the host device needed a 12 mm by 4 mm USB port, but it delivered flexibility in terms of plug-in I/O functionality (hot swapping, USB hubs and all the other conveniences that USB ports deliver). The objective was to have devices compatible with the high-speed USB 2.0 standard,15 which included transfer rates of up to 480 Mbps. USB 2.0 remains a ubiquitous method of providing connectivity with computing devices and of delivering power to devices that need recharging.
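The 480 Mbps figure is a raw signalling rate, so it sets a lower bound on transfer time rather than a typical one. The sketch below computes that bound; real USB 2.0 throughput is noticeably lower because of protocol overhead, so actual copies take longer than these figures.

```python
def min_transfer_seconds(size_bytes: float, signalling_mbps: float = 480) -> float:
    """Lower bound on transfer time at the raw signalling rate (no protocol overhead)."""
    return size_bytes * 8 / (signalling_mbps * 1e6)


print(f"{min_transfer_seconds(1e9):.1f} s for 1 GB")  # 16.7 s for 1 GB
```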

The lessons from this are, however, salutary. The adoption of plug-in solid-state memory has not been helped by the bewildering choice of formats and form factors, which resulted in a loss of scale economy and an offer that was completely incomprehensible to most of the buying public. Incompatibility between formats and an inability to export and import data between them compounded the problem.

This has almost certainly helped build the case for cloud computing on the basis of simplicity, ease of use and cost, irrespective of whether the application is content or entertainment centric.

iCloud may be the next evolution in this cloud connectivity story, perhaps with an iGame console yet to be announced (at the time of writing).

1 http://www.apple.com/uk/icloud/?cid=mc-uk-g-clb-icloud&sissr=1.

2 http://www.datacenterknowledge.com/archives/2010/02/22/first-look-apples-massive-idatacenter/.

3 http://www.microsoft.com/en-us/cloud/default.aspx?fbid=_7o8c0rKG39.

4 http://www.samsung.com/global/microsite/galaxys2/html/.

5 http://www.panasonic.co.uk/html/en_GB/Products/Camcorders/1MOS+HD+Camcorders/HDC-SD90/Overview/6828169/index.html?gclid=CMHi-rvMnKoCFcxzfAodzDpSyg.

6 http://www.clarityvisual.com/.

7 Organic light-emitting diode display.

9 http://eu.computers.toshiba-europe.com/.

11 http://www.sony.co.uk/product/rec-memory-stick.

12 http://www.sdcard.org/home/.