7

Device-Centric Software

7.1 Battery Drain – The Memristor as One Solution

The last six chapters have looked at how user hardware influences network value and user value. The previous chapter ended with smart televisions and 3D-capable high-end laptops.

If only one application had to be supported at any one time, life would be reasonably simple, but in practice users expect the device, and the network to which it is connected, to support multiple tasks simultaneously.

An example would be a laptop or tablet user texting or sending emails while watching a video on YouTube with a Skype session running in the background. Then the phone rings. At this point the weakest link in the system will cause some form of instability that becomes noticeable to the user. This might be caused by a processor bandwidth limitation, a memory bandwidth limitation, an interconnect limitation, a network bandwidth limitation or an energy bandwidth limitation (the battery goes flat). The laptop user forums referenced in the last chapter, for example, are mainly focused on how to disable functionality to reduce battery drain, which rather defeats the point of the product.

There are potential solutions, one of which is the memristor.

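The theory of resistance with memory was put forward in 1971 in a paper1 by Professor Leon Chua of the University of California, Berkeley. It was premised on the observation that circuit theory has three passive elements (the resistor, capacitor and inductor) but four fundamental variables (voltage, current, charge and magnetic flux). Of the possible relationships between those variables, the one linking charge and flux was not accounted for by the existing elements, and Chua argued that it implied a fourth element, a resistor with memory or ‘memristor’, whose behaviour could not be reproduced by any combination of the other three.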

The device remained a theoretical concept until 2008, when HP Labs2 demonstrated a nanoscale titanium dioxide structure in which atoms (more precisely, oxygen vacancies) moved when a voltage was applied, changing the device's resistance. The device opens up the prospect of a step change in the energy efficiency of computing and switching systems and the opportunity to create memories that retain information without the need for power.
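Chua defined memristance as M(q) = dφ/dq, the rate of change of flux with charge. A minimal sketch of the linear ion-drift model used to describe the HP device treats the oxide film as a doped (low-resistance) region of width w in series with an undoped (high-resistance) region, with w drifting in proportion to the current. The parameter values below (R_ON, R_OFF, film thickness D and dopant mobility MU_V) are illustrative assumptions, not measured values for the HP device.

```python
import math

# Illustrative parameters (assumed, not measured HP device values).
R_ON = 100.0       # ohms, resistance if the whole film were doped
R_OFF = 16_000.0   # ohms, resistance if the whole film were undoped
D = 10e-9          # metres, oxide film thickness
MU_V = 1e-14       # m^2/(V*s), average dopant (oxygen vacancy) mobility


def memristance(w: float) -> float:
    """Series combination of doped and undoped regions for doped width w."""
    x = w / D
    return R_ON * x + R_OFF * (1.0 - x)


def simulate(v0=1.0, freq=1.0, w0=0.1 * D, steps=20_000, periods=1.0):
    """Euler-integrate the linear drift model under a sinusoidal voltage.

    Returns a list of (time, voltage, current, memristance) samples.
    """
    dt = periods / freq / steps
    w = w0
    samples = []
    for n in range(steps):
        t = n * dt
        v = v0 * math.sin(2 * math.pi * freq * t)
        m = memristance(w)
        i = v / m
        samples.append((t, v, i, m))
        # The doped region moves in proportion to the current (charge flow).
        w += MU_V * R_ON / D * i * dt
        w = min(max(w, 0.0), D)  # the doped region cannot leave the film
    return samples


trace = simulate()
m_values = [m for _, _, _, m in trace]
print(f"memristance range: {min(m_values):.0f} to {max(m_values):.0f} ohms")
```

Plotting current against voltage from `trace` gives the pinched hysteresis loop that is the signature of a memristor: the resistance seen at a given voltage depends on how much charge has already flowed.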

It would appear that very small devices constructed as 3D structures at the molecular level may well be key enablers for large and/or complex products connected to large and/or complex networks. They may also make the delivery of 3D images, voice, audio, text and positioning information to portable and mobile devices over mobile broadband networks more economical, in both cost and energy terms, than presently expected.

The only problem is that it will probably be at least twenty years before these components are available commercially, so in the meantime we will just need to make do with existing available technologies. So let's look at a present high-end product and see how the hardware form factor determines software form factor and how the combination of hardware and software form factor determines the user experience.

7.2 In-Plane Switching, Displays and Visual Acuity

Unsurprisingly, Japanese and Korean TV and PC manufacturers have been working to translate the innovations applied in large-screen displays to small-screen displays, including in-plane switching. Introduced by Hitachi in 1996, in-plane switching uses two transistors for each pixel, with an electrical field applied through each end of the crystal so that the molecules move along the horizontal axis of the crystal rather than at an oblique angle. This reduces light scatter and improves picture uniformity and colour fidelity at wide viewing angles. The two-transistor structure also allows for faster response times.

In-plane switching is now used in a wide range of products, including the iPad, which has a 9.7-inch, 1024-by-768 resolution LED-backlit LCD screen with a viewing angle of up to 178 degrees and 8-bit colour depth. The iPhone 4 uses similar technology (on a smaller screen, obviously), as does the iMac. Both the iPhone 4 and the iPad have ambient light sensing and an 800 to 1 contrast ratio.

Anyway, back to LG. In 1995 LG partnered with Philips to invest in display technologies and have since captured significant market share in high-definition and, more recently, 3D displays. Their first 3D smart phone, and probably the world's first, the Optimus 3D, was introduced in February 2011. The device has a 4.3-inch WVGA display that allows users to view 2D content (up to 1080p) and 3D content (up to 720p). LG claim the display is 50% brighter than the iPhone 4's, producing 700 nits of brightness.
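Screen size and resolution together set pixel density, which is what matters for visual acuity at a given viewing distance. A quick sketch of the arithmetic, assuming the WVGA panel is 800 by 480 pixels (the usual WVGA format, not stated above), a 12-inch viewing distance, and the conventional figure of one arcminute for normal visual acuity:

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density from resolution and diagonal screen size."""
    return math.hypot(width_px, height_px) / diagonal_in


def acuity_limit_ppi(viewing_distance_in: float, arcminutes: float = 1.0) -> float:
    """Density above which adjacent pixels subtend less than the eye resolves.

    One arcminute is the conventional figure for normal visual acuity.
    """
    pixel_pitch_in = viewing_distance_in * math.tan(math.radians(arcminutes / 60))
    return 1 / pixel_pitch_in


ipad = pixels_per_inch(1024, 768, 9.7)    # figures from the text
optimus = pixels_per_inch(800, 480, 4.3)  # assumes WVGA = 800 x 480
limit = acuity_limit_ppi(12)              # assumed 12-inch viewing distance
print(f"iPad {ipad:.0f} ppi, Optimus 3D {optimus:.0f} ppi, "
      f"acuity limit at 12 in about {limit:.0f} ppi")
```

On these assumptions the smaller phone screen packs considerably more pixels per inch than the tablet, though both remain below the density at which individual pixels stop being resolvable at arm's length.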

A nit, by the way, is not just something your children catch at school but is a US measurement term for luminance, from the Latin ‘nitere’, to shine. A nit quantifies the amount of visible light leaving a point on a surface in a given direction. This can be a physical surface or an imaginary plane, and the light leaving the surface can be due to reflection, transmission and/or emission. A nit is equivalent to one candela per square metre, and you do wonder what was wrong with candelas as a measurement term, even if candles do seem a touch archaic as a base for comparing luminance levels. Other measurement terms used in the past include the apostilb, blondel, skot, stilb and footlambert.
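For reference, the older units can all be expressed in candelas per square metre. The sketch below converts between them using the standard definitions (the footlambert is 1/π candela per square foot, the apostilb or blondel 1/π candela per square metre, the skot one thousandth of an apostilb and the stilb one candela per square centimetre); the 700-nit input is LG's claim quoted above.

```python
import math

# Conversion factors to candela per square metre (nits).
CD_PER_M2 = {
    "nit": 1.0,
    "stilb": 1e4,                               # 1 cd per square centimetre
    "apostilb": 1 / math.pi,                    # also called the blondel
    "blondel": 1 / math.pi,
    "skot": 1e-3 / math.pi,                     # one thousandth of an apostilb
    "footlambert": 1 / (math.pi * 0.3048**2),   # (1/pi) cd per square foot
}


def convert_luminance(value: float, from_unit: str, to_unit: str) -> float:
    """Convert a luminance value between the named units."""
    return value * CD_PER_M2[from_unit] / CD_PER_M2[to_unit]


print(f"700 nits = {convert_luminance(700, 'nit', 'footlambert'):.0f} footlamberts")
```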

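Luminous flux is measured in lumens and illuminance in lux, with one lux equal to one lumen per square metre. Lumens and candelas are themselves directly related (one lumen is the flux a one-candela source emits into one steradian), but there is no fixed conversion between these photometric units and radiant power in watts, because perceived luminosity also depends on the wavelengths making up the light. A conversion is therefore only possible if the spectral composition of the light is known.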

For example, the human eye is most sensitive at a wavelength of about 555 nanometres, which is green to you and me and presumably designed so that we can spot edible greenery at a distance. The luminosity function falls to zero for wavelengths outside the visible spectrum. As an interesting if only tangentially relevant aside, the Institute of Cognitive and Evolutionary Anthropology,3 having measured skulls from around the world collected over the last 100 years and stored in a dusty basement in Oxford, has discovered that people living at high latitudes have bigger eyes and brains. The bigger eyes are so that they can see better in low light conditions. The bigger brains do not make them more intelligent but are needed to provide the additional visual processing power required. Animals that forage at night similarly have bigger eyes and brains than animals that forage during the day.
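The point about spectral composition can be made concrete. Luminous flux is radiant power weighted by the eye's luminosity function V(λ) and scaled by 683 lumens per watt, the value the SI definition of the candela fixes at 540 THz (approximately 555 nm). The sketch uses a coarse table of CIE 1924 photopic V(λ) values at 50 nm intervals plus the 555 nm peak; this is an approximation, and a real calculation would interpolate the full tabulated function.

```python
# Coarse CIE 1924 photopic luminosity values V(lambda), wavelength in nm.
# A real calculation would interpolate the full tabulated function.
V_PHOTOPIC = {
    400: 0.0004, 450: 0.038, 500: 0.323, 550: 0.995,
    555: 1.000, 600: 0.631, 650: 0.107, 700: 0.0041,
}
LUMENS_PER_WATT_AT_PEAK = 683.0


def luminous_flux(spectrum: dict[int, float]) -> float:
    """Lumens for a spectrum given as {wavelength_nm: radiant watts}.

    Wavelengths outside the table are treated as invisible (V = 0).
    """
    return LUMENS_PER_WATT_AT_PEAK * sum(
        V_PHOTOPIC.get(wl, 0.0) * watts for wl, watts in spectrum.items()
    )


green = luminous_flux({555: 1.0})  # 1 W of green light
red = luminous_flux({650: 1.0})    # 1 W of deep red light
print(f"green: {green:.0f} lm, red: {red:.0f} lm")
```

The same watt of radiant power yields roughly nine times more lumens in green than in deep red, which is why a lumen figure cannot be turned back into watts without knowing the spectrum.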

Anyway, we use nits to describe display brightness and lux to describe the light conditions that dictate whether we can take pictures with our mobile phone. On the Optimus 3D these pictures are captured through two five-megapixel cameras set apart to allow for stereoscopic processing, producing 3D images and high-definition video that can be displayed on the phone or on an HDTV, or uploaded to the YouTube 3D channel, rather slowly if you happen to be using a 3G connection.

7.3 Relationship of Display Technologies to Processor Architectures, Software Performance and Power Efficiency

Actually, before you get this far the phone has to process all this stuff, which it does via the Texas Instruments OMAP4 dual-core, dual-channel, dual-memory architecture.

The concept of dual core is to have identical processing subsystems, including general-purpose and special-purpose processors, running the same instruction set, with each processor having equal access to memory and input/output (I/O) and a single copy of the operating system controlling all cores. Any of the processors can run any thread. Some specialised processors, including graphics processors, may be handling several thousand threads in parallel, but the tasks are largely repetitive and similar. Video processing, image analysis and signal processing are all inherently parallel tasks.
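Whether a second (or fourth) core actually speeds a task up depends on how much of the task can run in parallel. Amdahl's law, which the text does not discuss but which is the standard first-order estimate, captures this: with a parallel fraction p spread across n cores, the speedup is 1 / ((1 - p) + p / n). The parallel fractions below are illustrative assumptions, not measurements.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of a task can be parallelised."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Illustrative workloads: mostly serial application logic versus
# video decoding, which is close to fully parallel.
for name, p in [("app logic", 0.3), ("video decode", 0.95)]:
    print(name, [round(amdahl_speedup(p, n), 2) for n in (1, 2, 4)])
```

The serial fraction quickly dominates: an inherently parallel task such as video decoding benefits from extra cores, while mostly serial work gains little.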

Power consumption is managed by implementing adaptive power-down modes driven by a policy database. These trade task latency against power drain, taking into account that some tasks are latency and delay sensitive and some are not. Note that it is not just the delay but the amount by which that delay varies over time that can be problematic, particularly for tasks that are intrinsically deterministic. User experience expectations will include things like boot time, how long applications take to launch and execute, and whether the system appears stable and predictable when multitasking.
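A minimal sketch of how such a policy might pick an operating point, assuming a hypothetical table of frequency/voltage pairs and the textbook approximation that dynamic power scales with C·V²·f. Because energy per cycle scales with V², running each task at the lowest frequency that still meets its deadline minimises energy. The operating points, capacitance and task figures are all invented for illustration and are not OMAP4 values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    freq_hz: float
    volts: float


# Hypothetical operating points, lowest first (not real OMAP4 figures).
OPERATING_POINTS = [
    OperatingPoint(300e6, 0.93),
    OperatingPoint(600e6, 1.10),
    OperatingPoint(1000e6, 1.35),
]
SWITCHED_CAPACITANCE_F = 1e-9  # assumed effective switched capacitance


def dynamic_power(op: OperatingPoint) -> float:
    """Approximate dynamic power P = C * V^2 * f, in watts."""
    return SWITCHED_CAPACITANCE_F * op.volts**2 * op.freq_hz


def choose_point(cycles: float, deadline_s: float) -> OperatingPoint:
    """Lowest-frequency (and so lowest-energy) point meeting the deadline.

    Falls back to the fastest point if no point can meet the deadline.
    """
    for op in OPERATING_POINTS:
        if cycles / op.freq_hz <= deadline_s:
            return op
    return OPERATING_POINTS[-1]


# A latency-tolerant background sync versus a 20 ms audio frame deadline.
for name, cycles, deadline in [("sync", 1.2e8, 1.0), ("audio", 1.5e7, 0.02)]:
    op = choose_point(cycles, deadline)
    energy_j = dynamic_power(op) * cycles / op.freq_hz
    print(f"{name}: {op.freq_hz / 1e6:.0f} MHz, {energy_j * 1e3:.1f} mJ")
```

A real governor would also account for static leakage, the cost of switching between points and the delay-variability concern raised above.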

All of this then has to be shown to work with legacy Symbian systems, Linux systems including Android and LiMo, and Microsoft Windows Mobile. Anecdotally, most software engineers would admit, though not generally in public, that most smart phones ship with several hundred software faults. Fixing these faults delays time to market and creates a different set of faults that are generally just as problematic.

These applications processors can draw the best part of 5 watts at full steam, so they have to be carefully managed, as these products would ideally have an eight- to ten-hour duty cycle. Memory and interface specifications also have to be adequately dimensioned to avoid problems with task latency and task interrupts.
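The arithmetic shows why. Assuming a hypothetical 1500 mAh battery at a nominal 3.7 V (the text does not give a capacity), a processor drawing 5 W flat out would exhaust it in just over an hour, so an eight- to ten-hour duty cycle implies a much lower average draw across the whole device.

```python
def battery_energy_wh(capacity_mah: float, nominal_volts: float) -> float:
    """Stored energy in watt-hours."""
    return capacity_mah / 1000.0 * nominal_volts


def runtime_hours(energy_wh: float, average_watts: float) -> float:
    """Runtime at a constant average power draw."""
    return energy_wh / average_watts


def max_average_power(energy_wh: float, target_hours: float) -> float:
    """Average power budget (whole device) needed to reach a target runtime."""
    return energy_wh / target_hours


energy = battery_energy_wh(1500, 3.7)  # assumed battery, not from the text
print(f"At 5 W: {runtime_hours(energy, 5.0):.2f} h")
for hours in (8, 10):
    print(f"{hours} h needs <= {max_average_power(energy, hours):.2f} W average")
```

This ignores conversion losses and battery ageing, both of which shorten real runtimes.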

As of 2011, high-end devices such as the LG phone mentioned above and the iPad use low-power double data rate 2 (LPDDR2) mobile DRAM. This has a peak data throughput of 8.5 Gbytes/second at a power consumption of 360 mW. Note that this is memory power consumption, not processor power consumption, and that the memory is actually four devices housed in a package-on-package (PoP) stack. The clock speed ranges from 100 to 533 MHz, with 800 MHz proposed for LPDDR3. Clock speeds of this order are close to the radio frequencies the device is using and therefore have to be carefully managed to avoid interference.
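The 8.5 Gbytes/second figure can be reproduced from the clock rate, assuming two 32-bit channels (consistent with the dual-channel OMAP4 architecture mentioned earlier) and two transfers per clock cycle, which is what double data rate means. The bus width and channel count are assumptions on our part.

```python
def peak_bandwidth_bytes(clock_hz: float, bus_width_bits: int,
                         channels: int, transfers_per_clock: int = 2) -> float:
    """Theoretical peak DRAM throughput in bytes per second.

    transfers_per_clock is 2 for double data rate (DDR) memory.
    """
    return clock_hz * transfers_per_clock * (bus_width_bits / 8) * channels


lpddr2 = peak_bandwidth_bytes(533e6, bus_width_bits=32, channels=2)
lpddr3 = peak_bandwidth_bytes(800e6, bus_width_bits=32, channels=2)
print(f"LPDDR2 @ 533 MHz: {lpddr2 / 1e9:.1f} GB/s")
print(f"LPDDR3 @ 800 MHz: {lpddr3 / 1e9:.1f} GB/s")
```

These are theoretical peaks; sustained throughput is lower once refresh, bank conflicts and bus turnaround are taken into account.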

There are various contenders for next-generation mobile DRAM, either evolved versions of LPDDR or alternatives promoted by vendors such as Rambus, Micron and Samsung. Samsung, incidentally, have almost a 50% share of this multibillion dollar market and support an option called SPMT that replaces the traditional 32 data lanes with four channels of 128 lanes each, giving a total of 512 I/Os and a total bandwidth of 12 Gbytes/second, with a target consumption of 500 mW by 2013.

The average DRAM content in a smart phone or tablet is heading towards a gigabyte. A PC hosts about 3.5 Gbytes, but many more smart phones than PCs are now shipped every year, and people don't keep them as long. Also, mobile DRAM costs two to four times more than PC DRAM because of the more stringent size and power demands.

A high-end applications processor such as Qualcomm's Snapdragon APQ8064 is claimed to reach speeds of up to 2.5 GHz per core and is available in single-, dual- and quad-core versions.

A trawl back through old RTT technology topics unearthed a study of media processor capabilities from 2004. Back then an applications processor was considered to be a media processor with a Java hardware accelerator. The processor supported media-related applications such as gaming, 3D processing and authentication, and the housekeeping needed for J2ME personal profiles and MIDP (Mobile Information Device Profile).

Most of these devices were ARM based (and still are today), with the exception of Renesas (the SH-Mobile, widely used in Japanese FOMA phones). Essentially, there were two approaches to delivering performance and power efficiency. Vendors such as Intel and Samsung used fast clock speeds to get performance and then (taking Intel as an example) implemented voltage and frequency scaling to reduce power drain. The applications processors were usually clocked at a multiple of the 13 MHz GSM clock reference, on the basis that the devices would be used in dual-mode GSM/UMTS handsets. The Intel device frequency scaled from 156 to 312 MHz (12 and 24 times 13 MHz) and the Samsung device scaled up to 533 MHz (41 times 13 MHz). Considering that most baseband processors ticked along at 52 MHz (4 times 13 MHz), this represented a significant additional processor load introduced to support multimedia functionality.
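The arithmetic behind these clock choices is easy to check: each frequency is an integer multiple of the 13 MHz GSM reference, and the ratio to the baseband clock gives a rough sense of the extra load.

```python
GSM_REFERENCE_MHZ = 13


def clock_multiple(clock_mhz: float) -> float:
    """How many times the 13 MHz GSM reference a clock represents."""
    return clock_mhz / GSM_REFERENCE_MHZ


clocks = {"baseband": 52, "Intel (low)": 156, "Intel (high)": 312,
          "Samsung": 533}
for name, mhz in clocks.items():
    print(f"{name}: {mhz} MHz = {clock_multiple(mhz):g} x 13 MHz")

# Media processor clock relative to the baseband clock.
print(f"Samsung/baseband: {533 / 52:.1f}x")
```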

Given the diversity of media processors being sold or sampled to handset manufacturers and the diversity of vendor solutions, there was an obvious need to try to standardise the hardware and software interfaces used in these devices and to provide some form of common benchmarking for processor performance. This resulted in the formation of the MIPI (Mobile Industry Processor Interface) Alliance.4 It was established by TI, ARM, ST and Nokia to develop industry standards for power management, memory interfaces, hardware/software partitioning, peripheral devices and, more recently, RF/baseband interfaces.

In parallel, the Embedded Microprocessor Benchmark Consortium5 was established to work on a J2ME benchmark suite with a set of standardised processor tasks (image decoding, chess, cryptography) so that processors could be compared in terms of delay, delay variability and multitasking performance. This work continues today.
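Delay and delay variability are straightforward to measure once a task is defined. The sketch below times repeated runs of a stand-in workload and reports the mean, the spread (standard deviation) and the worst case; the `workload` function is a hypothetical placeholder loosely in the spirit of a cryptography task, not one of the EEMBC benchmarks.

```python
import hashlib
import statistics
import time
from typing import Callable


def workload() -> None:
    """Hypothetical stand-in task: hash a 4 kbyte buffer repeatedly."""
    data = b"x" * 4096
    for _ in range(50):
        data = hashlib.sha256(data).digest() * 128  # back to 4096 bytes


def measure(task: Callable[[], None], runs: int = 200) -> dict[str, float]:
    """Return mean latency, jitter (std dev) and worst case, in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        task()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "mean_ms": statistics.mean(samples),
        "jitter_ms": statistics.stdev(samples),
        "worst_ms": max(samples),
    }


print(measure(workload))
```

Repeating the measurement while other tasks compete for the processor is a crude way to see the multitasking effects such benchmarks aim to capture.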

Part of the benchmarking process had to address video quality, which in turn was complicated by the fact that different vendors used different error-concealment techniques. One reason GSM mobile-to-mobile voice calls are reasonably consistent is that error concealment is carefully specified across the full rate, enhanced full rate and adaptive multirate codecs. MPEG-4, however, permits substantial vendor differentiation in the way that error concealment is realised, which introduced a number of additional quality management challenges.

Six years on, the story has moved on but the narrative stays the same. Headline clock speeds do not translate directly into task speed and/or task consistency and stability. Take as an example Samsung's applications processor, originally called Hummingbird but now called Exynos, a name apparently derived from the Greek words for smart (exypnos) and green (prasinos). This is an ARM-based device and competes directly with the Qualcomm Snapdragon device (also ARM based), TI OMAP and Intel and AMD processors. Intel has a power-optimised product codenamed Moorestown that at the time of writing is yet to be launched.

The ARM-based devices all build on the reduced instruction set computing (RISC) architecture used in the original Acorn computer. It is reassuring to know that, rather like acorns and oak trees, big ideas can still originate from small beginnings. Both the Snapdragon device and the Samsung device (used in Samsung's Galaxy tablet) are optimised for high speed and low power but achieve that in different ways. Snapdragon chips are optimised to achieve more instructions per cycle and integrate additional functionality, including an FM and GPS receiver, an HSPA Plus and EVDO modem, and Bluetooth and WiFi baseband. The Samsung device instead uses a custom logic design intended to speed up binary calculations.

Both devices can be underclocked or overclocked, and the 1-GHz versions of the chip sets used in present products can process about 2000 million instructions per second (MIPS). However, the number of instructions needed to perform a task can be very different. This can be due to how well the software has been compiled, to hardware constraints such as the memory and interface limitations referenced earlier, or, more usually, to a combination of both.

This can mean that certain tasks, such as floating-point calculations and 2D or 3D graphics, run faster on one device than on another. In general, software design rather than hardware capability is usually the dominant performance differentiator, and a processor rated at 1 GHz will not necessarily be run at 1 GHz.
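A first-order model makes the point: task time is the instruction count divided by the product of instructions per cycle (IPC) and the clock rate actually sustained. The two hypothetical devices below are both rated at 1 GHz but differ in compiled instruction count, IPC and the clock they sustain under power management; all figures are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    rated_hz: float
    sustained_hz: float            # clock actually run, after power management
    instructions_per_cycle: float


def task_seconds(instructions: float, device: Device) -> float:
    """Execution time = instructions / (IPC * sustained clock)."""
    return instructions / (device.instructions_per_cycle * device.sustained_hz)


# Hypothetical devices and compiled instruction counts for the same task.
a = Device("A", rated_hz=1e9, sustained_hz=1e9, instructions_per_cycle=2.0)
b = Device("B", rated_hz=1e9, sustained_hz=0.8e9, instructions_per_cycle=1.5)
instructions = {"A": 4.0e9, "B": 1.8e9}  # e.g. different compilers/libraries

for dev in (a, b):
    t = task_seconds(instructions[dev.name], dev)
    print(f"{dev.name}: rated {dev.rated_hz / 1e9:.1f} GHz, task takes {t:.2f} s")
```

Device B finishes first despite a lower IPC and a throttled clock, because its software needs less than half as many instructions: the software, not the headline clock, decides the outcome.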

Code bandwidth has increased in parallel with processor and memory bandwidth.

In ‘3G Handset and Network Design’ (page 122) we had a table showing how code bandwidth increased between 1985 and 2002 from 10 000 lines of code to one million lines of code, implying that code bandwidth had increased by an order of magnitude roughly every eight years.

Today (nine years later) an Android operating system in a smart phone consists of 12 million lines of code including 3 million lines of XML, 2.8 million lines of C, 2.1 million lines of Java and 1.75 million lines of C++, suggesting that the rule still more or less applies.

The impact of this code bandwidth expansion on income and costs is still unclear. Almost inevitably, more software complexity implies more software faults, but in addition the Android applications store is open to any developer. This means that a handset vendor cannot test applications supplied by third parties for quality and efficiency, either in terms of user experience or in terms of processor and memory bandwidth consumption. This can result, and has resulted, in an increase in product returns on the basis of poor performance, a source of frustration for a handset vendor that has had no direct income from the supply of the application but has to absorb the associated product support cost.

There may be a rule by which an increase in processor bandwidth, memory bandwidth, code bandwidth and application bandwidth can be related to an increase in offered traffic volume and value, but the effect is composite. It would be neither valid nor supported by observation to claim that an order of magnitude increase in code bandwidth results in an order of magnitude increase in per-user bandwidth over the same period of time.

The present rate of increase, for example, is significantly higher, with some operators reporting a fourfold annual increase, and present forecasts are generally proving to be on the low side (as discussed in earlier chapters). However, if the opportunity cost of delivering this data in a fully loaded network exceeds the realisable value, it could be assumed that price increases will choke this growth in future unless value can be realised in some other way. A compromised or inconsistent user experience, including a short duty cycle, may similarly limit future growth rates. Also, the value may be realised by the content owners or, as stated above, the application vendors rather than by the entities delivering or storing the content.
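The growth rates in this section can be compared on a common footing by converting each into years per order of magnitude. The code figures come from the text above (10 000 to one million lines between 1985 and 2002, then one million to 12 million over the following nine years); the fourfold annual traffic growth is the operator figure just quoted. A constant compound growth rate is assumed throughout.

```python
import math


def years_per_decade(start: float, end: float, years: float) -> float:
    """Years needed for a tenfold increase at a constant compound rate."""
    return years / math.log10(end / start)


code_1985_2002 = years_per_decade(10_000, 1_000_000, 17)
code_since_2002 = years_per_decade(1_000_000, 12_000_000, 9)
traffic = years_per_decade(1, 4, 1)  # fourfold growth in one year

print(f"code 1985-2002: {code_1985_2002:.1f} years per 10x")
print(f"code since 2002: {code_since_2002:.1f} years per 10x")
print(f"traffic at 4x/year: {traffic:.1f} years per 10x")
```

On these figures code grows tenfold roughly every eight and a half years while traffic does so in well under two, which is why one cannot simply be read across to the other.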

Apple has managed this by establishing, and then holding on to, control of application added value. No other vendor has managed this, including Nokia, who have made progress with their Ovi application store, though with a focus on developing-market rather than developed-market applications. The theory, of course, is that application value has a pull-through effect both on product sales and on monthly revenues and per-subscriber margin.

This may well be true, but the counterbalance is that application value incurs application cost, at least part of which shows up in product-return costs or product-support costs. As stated above, product-return costs will generally fall on the handset vendor. Product-support costs, for example customer help lines, may well fall on the operator. Although deferred, these costs can be substantial.

Present smart-phone sales practice has at least partially translated these costs into additional revenue. This has been achieved by implementing tariff schemes that are so impenetrably complicated that most of us end up paying more per month than we need to. Many of us also have smart phones with functions that we never discover or use, which supports the view that the most profitable user is the one who pays £25 a month and never uses the phone. If the user also pays £8.00 a month to insure the phone and never leaves the house, that is even better.

It is, however, reasonable to state that the functionality of device-centric software, and the application value realisable from that software, are intrinsically determined by the hardware form factor of the device, which in turn determines uplink and downlink offered traffic value and delivery cost.

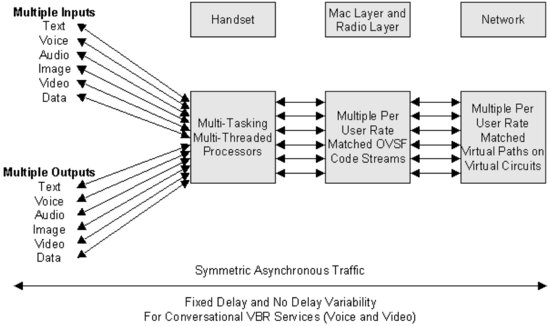

Consider a power-optimised multitasking multithreaded media processor in a mobile broadband device. An example is shown in Figure 7.1.

Figure 7.1 Multithreaded processor functional block diagram.

The core has to be capable of handling multiple inputs and outputs that may combine text, voice, audio (including high-quality audio), image, video and data. For conversational variable bit rate traffic (a small but significant percentage of the future traffic mix), the processor has to maintain the time interdependency between the input and output data streams. In effect, the processor must act as a real-time operating system in the way that it handles multiple media streams into, across and out of the processor core.
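One small part of preserving time interdependency can be sketched in code: interleaving several media streams strictly by presentation timestamp so that, for example, audio, video and text leave the processor in step. The packet format and stream names are hypothetical, and a real-time system would also have to guarantee deadlines, which this sketch does not attempt.

```python
import heapq
from typing import Iterable, NamedTuple


class Packet(NamedTuple):
    timestamp_ms: int   # presentation time
    stream: str
    payload: bytes


def interleave(*streams: Iterable[Packet]) -> list[Packet]:
    """Merge per-stream packet sequences (each already in time order)
    into one sequence ordered by presentation timestamp."""
    return list(heapq.merge(*streams, key=lambda p: p.timestamp_ms))


audio = [Packet(t, "audio", b"a") for t in range(0, 100, 20)]  # 20 ms frames
video = [Packet(t, "video", b"v") for t in range(0, 100, 33)]  # ~30 fps
text = [Packet(45, "text", b"hello")]

for packet in interleave(audio, video, text):
    print(packet.timestamp_ms, packet.stream)
```

`heapq.merge` is lazy and never reorders packets within a stream, which suits continuous media where each stream is already time ordered.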

The same level of determinism then needs to be applied to the MAC and physical radio layers, preserving the time interdependency of the multiple per-user rate-matched OVSF code streams, and then on into the network with multiple per-user rate-matched virtual paths and circuits.
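The OVSF (orthogonal variable spreading factor) codes mentioned here are generated by a simple recursive tree: each code c of length n spawns two children of length 2n, (c, c) and (c, -c). Codes of the same length are mutually orthogonal, which is what allows one user's rate-matched streams to share the channel. A minimal sketch:

```python
def ovsf_codes(spreading_factor: int) -> list[list[int]]:
    """All OVSF codes of the given length (a power of two), as +1/-1 chips."""
    if spreading_factor < 1 or spreading_factor & (spreading_factor - 1):
        raise ValueError("spreading factor must be a power of two")
    codes = [[1]]
    while len(codes[0]) < spreading_factor:
        next_level = []
        for c in codes:
            next_level.append(c + c)                 # child (c, c)
            next_level.append(c + [-x for x in c])   # child (c, -c)
        codes = next_level
    return codes


def correlate(a: list[int], b: list[int]) -> int:
    """Chip-by-chip correlation; zero means the codes are orthogonal."""
    return sum(x * y for x, y in zip(a, b))


codes = ovsf_codes(8)
for code in codes:
    print(code)
```

Shorter codes (lower spreading factors) carry higher data rates. A code can only be allocated if none of its ancestors or descendants in the tree is already in use, which is how variable-rate streams are kept orthogonal.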

Note that, together, the handset media processor, MAC layer, radio layer and network transport have to deliver a tightly managed fixed delay with no delay variability, a requirement inconsistent with many existing processor cores. Processor loading is also generally considered in terms of asymmetric loading – for instance, a higher processor load on the downlink (from network to mobile).

This assumption is really not appropriate for conversational variable bit rate services, which are, and always will be, symmetric in terms of their processor load – a balanced, though highly asynchronous, uplink and downlink.

Note also that a balanced uplink/downlink implies a higher RF power budget for the handset. Most RF power budgets assume that the handset will be receiving more data than it is transmitting, which is not necessarily the case. A higher RF power budget in the handset places additional emphasis on processor and RF PA power efficiency. One of the most useful benchmarks for a video handset should therefore be its milliamp-hours (mAh) per megabyte performance under specified channel conditions. Given that this metric effectively determines network loading and margin, it could turn out to be one of the more important measures of phone (and processor) performance.
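The proposed metric is easy to compute from a measured average current and throughput. The current and data rates below are hypothetical test values, not measurements of any handset, and a megabyte is taken as 8000 kbit.

```python
def mah_per_megabyte(avg_current_ma: float, throughput_kbps: float) -> float:
    """Charge drawn per megabyte transferred, in milliamp-hours.

    1 megabyte is taken as 8000 kbit (decimal units).
    """
    seconds_per_mb = 8000.0 / throughput_kbps
    return avg_current_ma * seconds_per_mb / 3600.0


# Hypothetical: the same 400 mA draw at two channel-dependent uplink rates.
for rate in (384.0, 1000.0):
    print(f"{rate:.0f} kbit/s: {mah_per_megabyte(400.0, rate):.2f} mAh/MB")
```

Poorer channel conditions lower the throughput (and usually raise the current), so the same handset scores worse; this is why the metric needs to be quoted against specified channel conditions.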

7.4 Audio Bandwidth Cost and Value

There should also, at least in theory, be some relationship between quality and value, and there is certainly a relationship between quality and delivery and storage cost. Audio bandwidth, for example, is determined by the codec being used.6 The adaptive multirate narrowband speech codec (AMR-NB) has a sampling frequency of 8000 Hz and maps to a constant channel rate of 4.75, 5.15, 5.90, 6.70, 7.40, 7.95, 10.2 or 12.2 kbit/s. The adaptive multirate wideband speech codec (AMR-WB) samples at 16 000 Hz and maps to a constant channel rate of 6.60, 8.85, 12.65, 14.25, 15.85, 18.25, 19.85, 23.05 or 23.85 kbit/s. The MPEG-4 Advanced Audio Coding (AAC) codec samples at 48 000 Hz and can be either mono or stereo, mapping to a channel rate of 24, 48, 56 or 96 kbit/s.

Any of the above can be made variable bit rate by switching the codec rate during a session.
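Since storage and delivery cost scale with bit rate, a rough comparison is data volume per minute at each codec rate. The sketch counts codec payload only and ignores packetisation overheads such as IP/UDP/RTP headers, which add substantially at low bit rates.

```python
AMR_NB_KBPS = [4.75, 5.15, 5.90, 6.70, 7.40, 7.95, 10.2, 12.2]
AMR_WB_KBPS = [6.60, 8.85, 12.65, 14.25, 15.85, 18.25, 19.85, 23.05, 23.85]
AAC_KBPS = [24, 48, 56, 96]


def kbytes_per_minute(rate_kbps: float) -> float:
    """Payload volume for one minute of audio at a constant bit rate."""
    return rate_kbps * 60 / 8


for name, rates in [("AMR-NB", AMR_NB_KBPS), ("AMR-WB", AMR_WB_KBPS),
                    ("AAC", AAC_KBPS)]:
    low, high = kbytes_per_minute(min(rates)), kbytes_per_minute(max(rates))
    print(f"{name}: {low:.1f} to {high:.1f} kbytes per minute")
```

A minute of top-rate AAC is roughly eight times the volume of a minute of 12.2 kbit/s AMR-NB, so any quality premium has to cover that difference in delivery and storage cost.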

7.5 Video Bandwidth Cost and Value

For video coding, the mandatory H.263 codec supports a QCIF frame size of 176 by 144 pixels or sub-QCIF 128 by 96 pixels at up to 15 frames per second. MPEG-4 is similar. Both map to a channel of at most 64 kbit/s.

In ‘3G Handset and Network Design’ we suggested that users might be prepared to pay extra for better-quality images and video. Image and video quality is usually already degraded as a result of compression, with the loss of quality determined by a quality (Q) factor.

In JPEG (image encoding), 8 × 8 pixel blocks are transformed into the frequency domain and expressed as digital coefficients. A Q factor of 100 means that pixel blocks need to be identical to be coded as ‘the same’. A Q factor of 50 means that pixel blocks can be quite different from one another, but the differences are ignored by the encoder. The result is a reduction in image ‘verity’: the image resembles the original but has incurred loss of information. In digital cameras, a Q factor of 90 equates to fine camera mode and a Q factor of 70 equates to standard camera mode.
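The mechanism can be sketched in a few lines. Each 8 × 8 block is transformed with a two-dimensional discrete cosine transform, and each coefficient is divided by an entry in a quantisation table and rounded. The Q factor scales that table, so a lower Q rounds more coefficients to zero, and those zeros are what the entropy coder then compresses. The sketch uses the example luminance table from the JPEG standard and the quality-scaling rule of the widely used IJG libjpeg library; camera makers may scale differently, and the test block is invented.

```python
import math

# Example luminance quantisation table from the JPEG standard (Annex K).
BASE_TABLE = [
    [16, 11, 10, 16, 24, 40, 51, 61],
    [12, 12, 14, 19, 26, 58, 60, 55],
    [14, 13, 16, 24, 40, 57, 69, 56],
    [14, 17, 22, 29, 51, 87, 80, 62],
    [18, 22, 37, 56, 68, 109, 103, 77],
    [24, 35, 55, 64, 81, 104, 113, 92],
    [49, 64, 78, 87, 103, 121, 120, 101],
    [72, 92, 95, 98, 112, 100, 103, 99],
]


def scaled_table(quality: int) -> list[list[int]]:
    """Scale the base table for quality 1-100 (IJG libjpeg convention)."""
    if not 1 <= quality <= 100:
        raise ValueError("quality must be between 1 and 100")
    scale = 5000 // quality if quality < 50 else 200 - 2 * quality
    return [[min(max((q * scale + 50) // 100, 1), 255) for q in row]
            for row in BASE_TABLE]


def dct_2d(block: list[list[int]]) -> list[list[float]]:
    """Orthonormal 8x8 DCT-II of a level-shifted pixel block."""
    def c(k: int) -> float:
        return math.sqrt(1 / 8) if k == 0 else math.sqrt(2 / 8)
    out = [[0.0] * 8 for _ in range(8)]
    for u in range(8):
        for v in range(8):
            total = 0.0
            for x in range(8):
                for y in range(8):
                    total += (block[x][y]
                              * math.cos((2 * x + 1) * u * math.pi / 16)
                              * math.cos((2 * y + 1) * v * math.pi / 16))
            out[u][v] = c(u) * c(v) * total
    return out


def quantise(block: list[list[int]], quality: int) -> list[list[int]]:
    """Level-shift, transform and quantise an 8x8 block of 0-255 pixels."""
    shifted = [[p - 128 for p in row] for row in block]
    coeffs = dct_2d(shifted)
    table = scaled_table(quality)
    return [[round(coeffs[u][v] / table[u][v]) for v in range(8)]
            for u in range(8)]


# Invented test block: a diagonal gradient with a little texture.
BLOCK = [[x * 16 + y * 8 + (x * y) % 7 * 5 for y in range(8)] for x in range(8)]

for q in (90, 70, 50, 5):
    nonzero = sum(c != 0 for row in quantise(BLOCK, q) for c in row)
    print(f"Q={q}: {nonzero} of 64 coefficients survive")
```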

These compression standards have generally been developed to maximise storage bandwidth: you can store more Q70 pictures in your camera than Q90 pictures. However, the choice of Q also has a significant impact on transmission bandwidth. An image with a Q of 90 (a 172-kbyte file) would take just over 40 seconds to send on a 33 kbit/s (uncoded) channel. The same picture with a Q of 5 would be 12 kbytes and could be sent in under 3 seconds. The delivery cost has been reduced by an order of magnitude. The question is how much value has been lost from the picture. The question also arises as to who sizes the image and decides on its original quality: the user, the application, the user's device or the network. Similar issues arise with video encoding, except that in addition to the resolution, colour depth and Q covered by JPEG, we have to add in frame rate.
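The transmission times quoted can be checked directly, taking 1 kbyte as 1000 bytes and ignoring protocol overhead and retransmissions:

```python
def send_seconds(file_kbytes: float, channel_kbps: float) -> float:
    """Time to send a file over a channel, ignoring overheads."""
    return file_kbytes * 8 / channel_kbps


q90 = send_seconds(172, 33)
q5 = send_seconds(12, 33)
print(f"Q90: {q90:.1f} s, Q5: {q5:.1f} s, ratio {q90 / q5:.1f}x")
```

The ratio of send times is simply the ratio of file sizes, so on a fixed channel delivery cost falls in direct proportion to the compressed size.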

Perceptions of quality will, however, be quite subtle. A CCD imaging device can produce a 3-megapixel image at 24 bits per pixel. Consider sending these at a frame rate of 15 frames per second and you have a recipe for disaster. The image can be compressed and/or the frame rate can be slowed down. Interestingly, with fast-moving action (which looks better with faster frame rates) we become less sensitive to colour depth. Table 7.1 shows how we can exploit this. In this example, frame rate is increased but colour depth is reduced from 10 bits to 8 bits (and processor clock speed is doubled).
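The scale of the problem is easy to quantify. Uncompressed bit rate is width × height × bits per pixel × frame rate; the sketch compares the 3-megapixel case with the QCIF format mentioned earlier and works out the compression ratio each would need to fit a 64 kbit/s channel. A 2048 × 1536 frame is assumed for ‘3 megapixels’, and 24 bits per pixel is used for both cases.

```python
def raw_bitrate(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed video bit rate in bits per second."""
    return width * height * bits_per_pixel * fps


CHANNEL_BPS = 64_000  # the 64 kbit/s video channel discussed above

for name, (w, h) in {"3 megapixel": (2048, 1536), "QCIF": (176, 144)}.items():
    rate = raw_bitrate(w, h, 24, 15)
    print(f"{name}: {rate / 1e6:.1f} Mbit/s raw, "
          f"needs {rate / CHANNEL_BPS:,.0f}:1 compression")
```

Halving the frame rate or dropping from 24 to 16 bits per pixel each helps, but neither comes close to the two to four orders of magnitude needed, which is why compression does most of the work.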

Note also how frame rates can potentially increase with decreasing numbers of pixels.

Table 7.1 Pixel resolution and frame rate

| Resolution (pixels) | Frames per second at 10 bits per pixel (@ 16 MHz) | Frames per second at 8 bits per pixel (@ 32 MHz) |
|---|---|---|
| 1280 × 1024 | 9.3 | 18.6 |
| 1024 × 768 | 12.4 | 24.8 |
| 800 × 600 | 15.9 | 31.8 |
| 640 × 480 | 19.6 | 39.2 |
| 320 × 240 | 39.2 | 78.4 |

This gives us a wider range of opportunities for image scaling. The problem is to decide on proportionate user ‘value’ as quality increases or decreases.

Table 7.2 shows as a further example the dynamic range of colour depth that we can choose to support.

Table 7.2 Colour depth

| Colour depth (bits per pixel) | Number of possible colours |
|---|---|
| 1 | 2 (i.e. black and white) |
| 2 | 4 (grey scales) |
| 4 | 16 (grey scales) |
| 8 | 256 |
| 16 | 65 536 |
| 24 | 16 777 216 |
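Each extra bit of depth doubles the number of colours but adds only linearly to the data. The sketch shows the uncompressed frame size at each depth in Table 7.2, using the iPad's 1024 by 768 resolution as an example and taking 1 kbyte as 1000 bytes.

```python
def colours(bits: int) -> int:
    """Number of distinct values representable at a given colour depth."""
    return 2 ** bits


def frame_kbytes(width: int, height: int, bits: int) -> float:
    """Uncompressed frame size in kbytes (1 kbyte = 1000 bytes)."""
    return width * height * bits / 8 / 1000


for bits in (1, 2, 4, 8, 16, 24):
    print(f"{bits:>2} bits: {colours(bits):>10,} colours, "
          f"{frame_kbytes(1024, 768, bits):8.1f} kbytes")
```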

The MPEG-4 core visual profile specified by 3GPP1 covers 4- to 12-bit colour depth. 24-bit colour depth is generally described as true colour (16-bit being high colour), and 32-bit colour depth is presently only used for high-resolution scanning applications.

Similarly, handset hardware determines (or should determine) downlink traffic. There is not much point in delivering CD-quality audio to a standard handset with low-quality audio drivers, or a 24-bit colour depth image to a handset with a grey-scale display. Nor is there much point in delivering a 15 frame per second video stream to a display driver and display that can only handle 12 frames per second.

Intriguingly, quality perceptions can also be quite subtle on the downlink. Smaller displays can provide the illusion of better quality. Also, good-quality displays more readily expose source coding and channel impairments, or, put another way, we can get away with sending poor-quality pictures provided they are being displayed on a poor-quality display.

These hardware issues highlight the requirement for device discovery: we need to know the hardware and software form factor of the device to which we are sending content. They also highlight the number of factors, both actual and perceived, that can influence quality in a multimedia exchange. These include the imaging bandwidth of the device, how those images are processed and how those images are managed to create user experience value.
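Once the device's form factor is known, the sender can clamp each content parameter to what the device can actually render. The sketch assumes a hypothetical capability record of the kind device discovery might return; the field names are illustrative and do not correspond to any particular capability-description standard.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class MediaParams:
    width: int
    height: int
    colour_bits: int
    fps: float
    audio_kbps: float


def adapt(source: MediaParams, device: MediaParams) -> MediaParams:
    """Never send more than the device can display or play back.

    Resolution is scaled down proportionally (exact fractions avoid
    rounding drift) so the picture fits the display.
    """
    scale = min(Fraction(1),
                Fraction(device.width, source.width),
                Fraction(device.height, source.height))
    return MediaParams(
        width=int(source.width * scale),
        height=int(source.height * scale),
        colour_bits=min(source.colour_bits, device.colour_bits),
        fps=min(source.fps, device.fps),
        audio_kbps=min(source.audio_kbps, device.audio_kbps),
    )


source = MediaParams(640, 480, 24, 15, 96)
# Hypothetical basic handset: QCIF display, 4-bit grey scale, 12 frames/s.
basic_phone = MediaParams(176, 144, 4, 12, 12.2)
print(adapt(source, basic_phone))
```

Preserving the aspect ratio rather than clamping width and height independently is a design choice; a real adaptation service would also re-encode the content, which this sketch does not do.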

7.6 Code Bandwidth and Application Bandwidth Value, Patent Value and Connectivity Value

The developing narrative is that bandwidth can be described in different ways. As code bandwidth has increased, application bandwidth has increased.

Application bandwidth value can be realised from end users and/or from patent income. As this book was being finished, Nortel raised $4.4 billion from the sale of 6000 patents to a consortium that included Apple, RIM and Microsoft. Some of the patents were in traditional areas such as semiconductors, wireless, data networking and optical communications, but many were application based.

As application bandwidth has increased, the way in which devices interact with the network and the way that people use their phones and interact with one another have changed. The result has been a significant increase in network loading, though quite how effectively this is being translated into operator-owned network value is open to debate. The risk is that the operator ends up absorbing the direct cost of delivery and the indirect cost of customer help lines and product returns but misses out on the associated revenue.

However, it is too early to get depressed about this.

A substantial amount of application value is dependent on connectivity. Connectivity value does not seem to be increasing as fast as connectivity cost, but this may be because operators are filling up underutilised network bandwidth and can therefore regard additional loading as incremental revenue. Also, we are fundamentally lazy and often do not bother to change from inappropriate tariffs where we are paying more than we need for services that we never use, which is always good for operator profits.

In the longer term it is also interesting to consider the similarities between telecoms and the tobacco industry. Both industries are built on a business model of addiction and dependency. In telecoms, and specifically in mobile communications, addiction and dependency increase as application bandwidth increases. This should yield increasing returns over time. Let us hope there are no other similarities.

1 http://inst.eecs.berkeley.edu/~ee129/sp10/handouts/IEEE_SpectrumMemristor1201.pdf.

3 http://www.ox.ac.uk/media/news_stories/2011/112707.html.

5 http://www.eembc.org/home.php.

6 http://library.forum.nokia.com/index.jsp?topic=/Design_and_User_Experience_Library/GUID-D56F2920-1302-4D62-8B39-06C677EC2CE1.html.