13

Wireless Core Network Hardware

13.1 The Need to Reduce End-to-End Delivery Cost

In Chapter 12 we suggested a brave new world in which telecom networks including mobile broadband networks would deliver social, political, intellectual, economic and possibly environmental value. However, this can only be achieved if the networks are financially sustainable.

This might seem back to front, but in Chapter 21 we review a series of forecasts including our own that suggest that one consequence of the transition from telecom networks to information networks is that there will be a 30-fold increase in data volume over the next five years over mobile broadband networks and a threefold increase in value.

This implies a substantial need to reduce end-to-end delivery cost: if volume grows 30-fold while value grows only threefold, the value realised per bit falls by a factor of ten. Backhaul costs presently represent 30% of this cost and are increasing over time. This cost of delivery is not just a function of volume but is also influenced by traffic mix and the degree of multiplexing gain that can be achieved.

13.1.1 Multiplexing Gain

Multiplexing gain is a function of single users having variable bit rate requirements that are then averaged over multiple users. Some of the bits are not latency sensitive and can be buffered. Additionally, there may be more than one point-to-point routing option that provides additional multiplexing gain. Mobile broadband offered traffic is highly asynchronous (bursty).
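One way to make the idea concrete is to compare the capacity needed if every user were provisioned for their own peak with the capacity needed for the peak of the aggregate. The sketch below uses two hypothetical sets of per-user traces (the numbers are illustrative, not measurements): one where bursts fall in different time slots and one where they coincide.

```python
def multiplexing_gain(traces):
    """Ratio of the sum of per-user peaks to the peak of the aggregate."""
    sum_of_peaks = sum(max(trace) for trace in traces)
    aggregate = [sum(slot) for slot in zip(*traces)]
    return sum_of_peaks / max(aggregate)

# Hypothetical per-user demand in Mbit/s over six time slots.
staggered = [
    [10, 0, 0, 1, 0, 0],
    [0, 10, 0, 0, 1, 0],
    [0, 0, 10, 0, 0, 1],
]
aligned = [
    [10, 0, 0, 1, 0, 0],
    [10, 0, 0, 1, 0, 0],
    [10, 0, 0, 1, 0, 0],
]

print(multiplexing_gain(staggered))  # bursts fall in different slots
print(multiplexing_gain(aligned))    # bursts coincide
```

A gain of 1 means aggregation has saved nothing, which is the situation the persistent burstiness argument is concerned with.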

On the one hand, it could be argued that this makes multiplexing more rather than less effective. The counterargument is that bursty traffic remains bursty even after aggregation (persistent burstiness). What this means is that bursty traffic behaves in different ways and is affected by the characteristics of the paths over which it travels.

Generally, it can be stated that conversational, streamed and interactive traffic costs more to deliver than best effort and can be shown not to aggregate efficiently. The offered traffic can also be highly asymmetric. These characteristics together mean that backhaul has to be overprovisioned in order to meet defined user QoS and QoE expectations. This increases capital and operational cost. However, we have also stated in previous chapters that although best-effort traffic can be used to increase delivery bandwidth utilisation, this is only achieved by buffering, which itself introduces cost.

Backhaul, strictly defined, is a delivery network, not a buffered network. Buffering will be applied at the edge of the network. Delivery options include point-to-point microwave, point-to-multipoint microwave, multipoint-to-multipoint microwave (also known as mesh networks), free-space optics, fibre, cable and copper.

Many markets are growing at a rate where traditional fibre or copper backhaul cannot be deployed fast enough. Point-to-point or point-to-multipoint microwave or free-space optic links are alternative options. These offer a deployment timescale advantage and easy through-life reconfigurability but have different optimum fiscal- and energy-efficiency thresholds that are dependent on the technology and topology used and the characteristics of the offered traffic. In addition to the offered traffic mix, the trade-off points for each option will be determined by network density, local geography, the mix between urban and rural coverage and local economic and business model considerations. The assumption is that future peak and average throughput requirements can be met either by more aggressive deployment of higher-order modulation schemes into existing bands and/or by implementing links in unlicensed or lightly licensed spectrum at 60, 70 and 80 GHz.

13.2 Microwave-Link Economics

Microwave-link economics and the choice of channel bandwidth and modulation schemes are also influenced directly and indirectly by spectral allocation and regulatory policy. A typical licensed spectrum product offer from a vendor such as Ericsson includes options at 6, 8, 11, 13, 18, 23, 26, 28, 32 and 38 GHz with a range of dish antennas from 0.2 to 3.7 m. The products use 4 to 256 QAM adaptive modulation and scale from 3.5 to 56 MHz channels. RF output power scales from +30 dBm (C-QPSK at 6 GHz) to +16 dBm (256 QAM at 38 GHz), the receiver sensitivity thresholds for a one in 10⁶ bit error rate scale from –59 dBm (256 QAM in a 56 MHz channel at 38 GHz) to –91 dBm (C-QPSK at 6 GHz) and the throughput scales from 4.1 Mbps to 345 Mbps. NSN have a matching portfolio, though scaling to 450 Mbps per carrier. These systems are usually frequency division duplexed.
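As a rough sanity check on these figures, the net spectral efficiency can be compared with the raw bits per symbol of the modulation. The sketch below assumes that the lowest quoted throughput pairs with the narrowest channel and the lowest-order modulation, and the highest with the widest channel and 256 QAM; the text implies this pairing but does not state it.

```python
import math

def spectral_efficiency(throughput_mbps, channel_mhz):
    """Net throughput per unit of channel bandwidth, in bit/s/Hz."""
    return throughput_mbps / channel_mhz

def bits_per_symbol(qam_order):
    """Raw bits carried by one symbol of an M-ary constellation."""
    return math.log2(qam_order)

# Assumed pairings of the quoted end points (see lead-in).
low = spectral_efficiency(4.1, 3.5)     # 4 QAM in a 3.5 MHz channel
high = spectral_efficiency(345.0, 56.0)  # 256 QAM in a 56 MHz channel

print(f"low end:  {low:.2f} bit/s/Hz against {bits_per_symbol(4):.0f} bits/symbol")
print(f"high end: {high:.2f} bit/s/Hz against {bits_per_symbol(256):.0f} bits/symbol")
```

The gap between the two numbers at each end is presumably accounted for by coding, framing overhead and filter roll-off, none of which are itemised here.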

Other options include 5-GHz systems using the unlicensed 5-GHz spectrum (802.11a) and/or the 60-GHz unlicensed band and/or the ‘lightly licensed’ 80-GHz band. These systems are usually time division duplexed.

As frequency increases bandwidth increases and antennas get smaller for a given gain. A one degree beam width antenna for example gives about 50 dBi of gain. Conversely at a given frequency, the bigger the dish the higher the gain. This translates into higher throughput but mast costs will increase, a function of weight and wind loading.
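The relationship between dish size, frequency and gain can be sketched with the standard parabolic aperture formula, G = η(πD/λ)². The aperture efficiency η of 0.55 used below is an assumption (a commonly quoted ballpark), not a figure from any vendor data sheet.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def dish_gain_dbi(diameter_m, freq_ghz, efficiency=0.55):
    """Approximate gain of a parabolic dish; efficiency is an assumed value."""
    wavelength_m = SPEED_OF_LIGHT / (freq_ghz * 1e9)
    gain_linear = efficiency * (math.pi * diameter_m / wavelength_m) ** 2
    return 10 * math.log10(gain_linear)

for diameter in (0.3, 0.6):
    for freq in (10.5, 26.0, 28.0):
        print(f"{diameter} m dish at {freq} GHz: {dish_gain_dbi(diameter, freq):.1f} dBi")
```

Doubling either the diameter or the frequency adds about 6 dB, which is why a small dish at a high frequency can match a large dish at a low one. With the assumed efficiency, the results land within a decibel or so of the 0.3-m and 0.6-m dish figures quoted for point-to-multipoint hardware later in this chapter.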

The problem (and opportunity) with microwave links is that as you move into the millimetre bands (30 to 300 GHz) the wavelength becomes comparable to the size of a raindrop.1 This causes nonresonant absorption that has to be accommodated in the link budget and/or by rerouting in exceptional but localised weather events (thunderstorms). There are also resonant absorption peaks for water at 22 and 183 GHz and oxygen at 60 and 119 GHz. The oxygen peak at 60 GHz is what allows this band to be made available as unlicensed spectrum, as the absorption, of the order of 15 dB per kilometre, provides protection against system-to-system interference. Note that these are line-of-sight systems. With fibre, cable or copper you can turn a corner but with microwave you can only do that by installing another microwave dish and/or repeater.
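Two pieces of arithmetic sit behind this paragraph: the wavelength at the edges of the millimetre band, and the extra loss that oxygen absorption adds with distance at 60 GHz. The sketch below uses the 15 dB per kilometre figure from the text; treating it as a constant per-kilometre rate is a simplifying assumption.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def wavelength_mm(freq_ghz):
    """Free-space wavelength in millimetres."""
    return SPEED_OF_LIGHT / (freq_ghz * 1e9) * 1000

def oxygen_loss_db(distance_km, db_per_km=15.0):
    """Extra path loss from oxygen absorption, assumed linear in distance."""
    return db_per_km * distance_km

for freq in (30, 60, 80, 300):
    print(f"{freq} GHz: wavelength {wavelength_mm(freq):.1f} mm")

for distance in (0.5, 1.0, 2.0, 5.0):
    print(f"{distance} km at 60 GHz: {oxygen_loss_db(distance):.1f} dB extra loss")
```

Because the absorption grows linearly in decibels with distance, a wanted link of a few hundred metres pays a modest penalty while an interferer several kilometres away is heavily attenuated.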

Either way, line-of-sight point-to-point engineers argue that narrow beam width dish antennas are the most effective way to achieve link budget gain (and by implication, throughput) with the wide choice of available frequency bands, meaning that an optimum choice of system can usually be made.

13.2.1 Point-to-Multipoint

Another alternative option is point-to-multipoint microwave.

In point-to-multipoint a sectored antenna with, for example, a 90-degree beam width will service multiple sites, typically of the order of nine or so. The band options are ETSI standard 10.5 GHz, 26 GHz and 28 GHz. Bandwidths are presently 7, 14 and 28 MHz and throughput scales to 150 Mbps per sector with modulation options ranging from QPSK to 256 QAM. The systems are normally duplex with a duplex spacing of the order of 350 MHz at 10.5 GHz or 1008 MHz at 26 and 28 GHz. Output power is +20 dBm at 10.5 GHz and +18 dBm at 26 or 28 GHz. A 90-degree sectored antenna at the base station provides 15 dBi of gain at 10.5 GHz and 17 dBi of gain at 26 or 28 GHz. A 0.3-m dish at the other end gives 28 dBi of gain at 10.5 GHz, 36 dBi at 26 GHz and 37 dBi at 28 GHz. Increasing the dish size to 0.6 m increases the gain to 33 dBi at 10.5 GHz, 40.5 dBi at 26 GHz and 42 dBi at 28 GHz.2

The spectral efficiency argument is based on the multiplexing and scheduling gain achievable from point-to-multipoint and its impact on backhaul efficiency and throughput. A microwave point-to-point connection achieves a similar scheduler gain to the one-to-one gain achieved in the RAN.

A microwave point-to-multipoint connection achieves the same one-to-one scheduler gain but has additional gain from multiuser diversity. Point-to-multipoint backhaul is therefore conceptually similar to a sectored Node B or eNode B. Energy consumption in terms of joules per bit may also be lower, offering operational cost benefits, for example in solar-powered or diesel-powered applications. Point-to-multipoint could potentially be more broadly deployed but limited vendor support and limited spectral availability may constrain market adoption.

13.3 The Backhaul Mix

So how will the backhaul mix between fibre, cable, copper and wireless change over time? Let's try and answer the question initially in terms of mobile broadband access backhaul economics.

It seems likely that backhaul from femtocells will continue to be carried for at least part of the way over copper twisted pair with the caveat that fibre over utility poles may change this over time, a topic addressed in the next chapter.

Microwave vendors argue that microwave transport will meet the foreseeable capacity needs for mobile backhaul for many years to come using a combination of licensed, lightly licensed or unlicensed spectrum. The argument is based on the assumption that a 10-MHz LTE radio channel will require a backhaul of the order of 150 Mbit/s.

The number of channels supported depends on the configuration of the base station on the site including the number of sectors and number of channels per sector, the channel spacing used (5, 10, 20 MHz or bonded channels up to 100 MHz) and cell geometry. Cell geometry is the term used to describe the distribution of users in a cell relative to the cell centre. The further away from the cell centre the weaker the link budget and therefore the lower the data throughput.

The IP packet throughput is therefore likely to be significantly less than the headline physical layer throughput figures would imply. A counterargument is that if operators start sharing the radio access network, or if neutral host networks become more prevalent, and if legacy GSM voice channels and HSPA and LTE data channels (and ultimately, presumably, LTE IP voice) get aggregated together, then this implies several hundred MHz of instantaneously available bandwidth. A 13-band base station, if such a beast existed, would have access to more than 1100 MHz of radio bandwidth, potentially available on each of three or more sectors with an average efficiency of between 2 and 3 bits per Hz. This is a gigabit rather than megabit discussion.
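The gigabit claim follows directly from multiplying the figures in the paragraph above. The short sketch below does the arithmetic for a three-sector site; it ignores cell geometry, overheads and any statistical multiplexing between sectors, so it should be read as an upper bound rather than a dimensioning rule.

```python
def site_throughput_mbps(bandwidth_mhz, bits_per_hz, sectors=3):
    """Upper-bound site throughput: bandwidth x efficiency x sectors."""
    return bandwidth_mhz * bits_per_hz * sectors

low = site_throughput_mbps(1100, 2)
high = site_throughput_mbps(1100, 3)

print(f"13-band site: {low / 1000} to {high / 1000} Gbit/s")
```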

The offered traffic limit in this case would probably be the battery and heat-rise limitations of the user devices accessing the network and/or the number of users you would need to pack together to use the bandwidth in a small to medium-size cell. You may as well deliver television to each site too, adding a few more gigabits to the backhaul mix, at least in one direction. Microwave vendors might argue that whatever the loading, microwave can provide enough capacity just by adding more bandwidth. However, given that the dish antennas are band specific, this implies a lot of antenna hardware on a site/mast that might be space- and weight-loading constrained. Line-of-sight problems would also shift the balance towards copper or fibre.

13.4 The HRAN and LRAN

The relative technology economics of wireless versus copper and fibre are therefore complex with many interdependencies. Ericsson makes things simpler3 by dividing mobile broadband backhaul into two parts, the lower radio access network (LRAN) and the high radio access network (HRAN). The HRAN aggregates traffic from several LRAN networks using fibre or microwave. The LRAN provides the last mile of connectivity to anything between 10 and 100 radio base station sites normally, though not always, using microwave but with fibre and copper as alternative options.

They make the completely obvious point that microwave will generally have the lowest cost of ownership unless an alternative is already there or physically close. However, as you would expect from a vendor with very positive ambitions for LTE the argument is made that at some point in time some base stations may need gigabit backhaul links. The regulated licensed spectrum between 6 GHz and 38 GHz supports channel bandwidths up to 50 MHz, which with high-level modulation can just about deliver 500 Mbps under ideal conditions. Adding polarisation multiplexing and multiple input/output techniques increases this and adding channels multiplies the capacity.

As of 2011 Ericsson were offering 14 MHz or 28 MHz channels for LTE backhaul with the longer-term option of migrating to aggregated 28 MHz channels. The lightly licensed 71 to 76 GHz and 81 to 86 GHz bands, known as E band, together provide 10 GHz of bandwidth but use simpler modulation on a single carrier. The light licensing has been adopted in the US and UK, takes a few minutes online and costs about $100 a year. The bands became available in the US in 2003 and in 2006 in Europe. Using these bands, however, depends on local weather conditions. A tropical rainstorm every afternoon will seriously affect availability and throughput, but then we don't get a lot of tropical rainstorms in the UK, though actually we had one yesterday. There are some applications where throughput becomes more important when it rains. For instance, there are generally more traffic accidents and cars tend to break down, so calls to the emergency and breakdown services will increase. Snow storms can also be a problem at some frequencies, as can sand storms, though we don't get a lot of those in the UK either. For all of these weather-related events availability can be provided in other ways, for example by having multiple routing options or by having other microwave links at other frequencies.

The copper offer (reviewed way back in Chapter 2) includes high bit rate DSL (HDSL), enhanced high bit rate DSL (HDSL2) and in some cases single pair HDSL (SHDSL).

If there is a fibre to the curb nearby then this allows enhanced very high speed DSL (VDSL2) to be used providing 100 Mbps of upstream and downstream bandwidth. The 100 Mbps can be increased by implementing a crosstalk cancellation technique called vectoring and line bonding (multiple twisted pairs dedicated to the link). The vector cancellation characterises the crosstalk from the adjacent VDSL twisted pairs by knowing the transmitted symbols and calculating the crosstalk coupling gains then predistorting the transmitted symbol stream on the wanted channel to eliminate the crosstalk.
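The predistortion step can be illustrated with a toy two-pair example. This is a simplified zero-forcing style sketch, not the standardised vectoring procedure: it uses real-valued gains on a single tone, whereas practical systems work per tone with complex coefficients. The coupling matrix `H` and the symbols are hypothetical values chosen for illustration.

```python
def invert_2x2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def matvec(m, v):
    """Matrix-vector product."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]

def precode(channel, symbols):
    """Predistort symbols so each pair receives only its own scaled symbol."""
    wanted = [channel[i][i] * s for i, s in enumerate(symbols)]
    return matvec(invert_2x2(channel), wanted)

# Hypothetical coupling: diagonal = direct gain, off-diagonal = crosstalk.
H = [[1.0, 0.10],
     [0.08, 1.0]]
symbols = [1.0, -1.0]

received_plain = matvec(H, symbols)
received_vectored = matvec(H, precode(H, symbols))

print("without precoding:", received_plain)
print("with precoding:   ", received_vectored)
```

Without precoding each receiver sees its own symbol plus a fraction of its neighbour's; with precoding the transmitted stream is adjusted so that the crosstalk terms cancel at the receiver.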

Microwave vendors are, however, keen to point out that whatever you might do to copper, it can suffer the same fate as fibre: it can be taken out by accident when roads are dug up and can take several days to restore. They make the case that a combination of backhaul options provides the best guarantee of availability.

The microwave economic case is, however, dependent on the link budget including the fade margin (see weather events above). Link budgets are determined by the system gain, which is a composite of the transmitted output power and TX/RX antenna gains minus the receiver threshold. The receiver threshold is set as the minimum received power required to support a bit error rate of less than one in 10¹². The system gain for a commercial backhaul radio will be of the order of between 160 and 200 dB. This implies that a range of up to 3 km is practical, but not more. This in turn implies these systems can only be used in urban or suburban applications, with systems in the lower bands being better suited to rural applications.

With that caveat, Ericsson provide an example of a radio that supports 1.25 Gbps in both directions (effectively an optical-rate interface) with a latency of less than a microsecond, transmitting in the higher band (81 to 86 GHz) and receiving in the lower band (71 to 76 GHz) on 5 GHz channels using differential binary phase shift keying (DBPSK). The system gain is 162 dB. This would support 1.5-km hop lengths in heavy rain.
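The system gain definition given earlier, and the margin left over for rain on the 1.5-km hop, can be sketched as follows. The free-space loss expression is the standard one for distance in kilometres and frequency in gigahertz. The split of the 162 dB into power, antenna gains and threshold is hypothetical, as is the use of the mid-band frequency of 83.5 GHz; other losses (feeders, radomes, atmospheric gases) are ignored.

```python
import math

def system_gain_db(tx_power_dbm, tx_antenna_dbi, rx_antenna_dbi, rx_threshold_dbm):
    """TX power plus both antenna gains minus the receiver threshold."""
    return tx_power_dbm + tx_antenna_dbi + rx_antenna_dbi - rx_threshold_dbm

def free_space_loss_db(distance_km, freq_ghz):
    """Free-space path loss for distance in km and frequency in GHz."""
    return 92.45 + 20 * math.log10(distance_km) + 20 * math.log10(freq_ghz)

def fade_margin_db(gain_db, distance_km, freq_ghz):
    """What is left of the system gain after free-space loss."""
    return gain_db - free_space_loss_db(distance_km, freq_ghz)

# Hypothetical split that happens to add up to the quoted 162 dB.
gain = system_gain_db(20, 45, 45, -52)

margin = fade_margin_db(gain, 1.5, 83.5)
print(f"system gain {gain} dB, fade margin {margin:.1f} dB")
print(f"implied allowance {margin / 1.5:.1f} dB per km")
```

Under these assumptions the quoted hop length leaves a little under 30 dB for rain and other impairments, which is the budget that has to cover the 'heavy rain' case.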

The point-to-point microwave market is served both by Tier 1 vendors including Ericsson, NSN and Huawei and more specialist vendors such as Bridgewave, Proxim, Aviat and Ceragon. The Tier 1 vendors have an advantage in that they have greater visibility to the future form factor of mobile broadband base stations, but essentially there should be room for both.

Ron Nadiv the VP of Technology and Systems Engineering at Ceragon has written a comprehensive book on Wireless Wireline and Photonic Networks published by John Wiley4 that treats the topic of microwave backhaul in substantially greater detail than attempted here. A supporting White Paper5 covers some of the same topics.

A summary of some of the points made about topology is, however, useful and relevant.

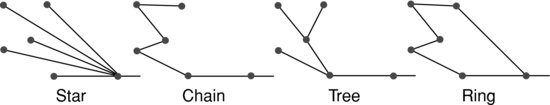

Most radio networks have a backhaul topology that is a combination of trees and rings, the tree being a combination of chain and star topologies, as shown in Figure 13.1.

Figure 13.1 Network topologies. Reprinted with permission of John Wiley & Sons, Inc.

Star topologies use a separate link from a hub to each site, which is simple but requires longer radio links and line-of-sight for each link that may or may not be possible. Interference is also more likely as all links originate at the same point so opportunities to achieve frequency reuse are limited.

Chain topologies don't have these problems but if one link goes they all go, just like those cheap Christmas lights you bought last year.

Putting the chain and star together produces a tree. In a tree topology fewer links will cause a major network failure and therefore fewer links require protection schemes (hardware duplication for example). Closing the chain produces the ring that provides the most protection.
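The resilience argument can be checked by brute force: remove each link in turn and count how many sites lose their path to the hub. The sketch below does this for a four-site star, chain and ring; the site names and the four-site size are arbitrary choices for illustration.

```python
from collections import deque

def reachable(links, start):
    """Set of nodes reachable from start over undirected links."""
    adjacency = {}
    for a, b in links:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbour in adjacency.get(node, ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen

def worst_single_failure(links, sites, hub="hub"):
    """Largest number of sites cut off from the hub by any one link failure."""
    worst = 0
    for failed in links:
        remaining = [link for link in links if link != failed]
        alive = reachable(remaining, hub)
        worst = max(worst, sum(1 for site in sites if site not in alive))
    return worst

sites = ["s1", "s2", "s3", "s4"]
star = [("hub", site) for site in sites]
chain = [("hub", "s1"), ("s1", "s2"), ("s2", "s3"), ("s3", "s4")]
ring = chain + [("s4", "hub")]

for name, links in (("star", star), ("chain", chain), ("ring", ring)):
    print(name, worst_single_failure(links, sites))
```

The chain's worst case is the loss of the link nearest the hub, which takes every site with it; the ring survives any single failure because traffic can go the other way round.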

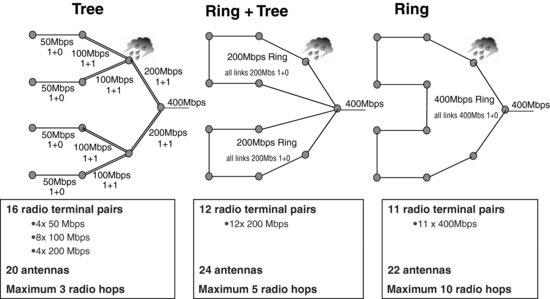

Ceragon then develop a test case in which ten cell sites each requiring 50 Mbps aggregate to a total of 400 Mbps with every link that supports more than one site being protected. The options are shown in Figure 13.2.

Figure 13.2 Aggregation topologies test case. Reprinted with permission of John Wiley & Sons, Inc.

The trade-offs are as follows. The ring topology requires fewer microwave links but the links have to be higher capacity and use more spectrum, though since the ring has no more than two links to every node, good frequency reuse can probably be achieved. The ring also requires some additional antennas but has inherently good availability due to the fact that a service failure would only occur when both paths fail. However, a ring will introduce additional latency and delay variation that might affect synchronisation signals and traffic latency. Against that, some of the tree links have lower bandwidth than those in the ring, which may also introduce delay variation. Statistical multiplexing is more effective in a ring topology as the total capacity of the links in a ring is usually greater than the links in the branches of a tree. Rings are, however, more expensive to upgrade as each link needs an identical upgrade.

A practical example is then given of a Latin American operator transitioning from a TDM network to an IP network. Each cell site requires 45 Mbps of bandwidth for Ethernet traffic with additional E1 lines (2.048 Mbit/s, made up of 32 timeslots of 64 kbit/s). All wireless links were required to have hardware redundancy.

An analysis suggested that the shift towards Ethernet improved the Capex and Opex economics of the ring topology compared to other options and offered better resiliency. The ring topology is combined with ring-based protection using the Rapid Spanning Tree Protocol. Apparently, this meets the Next Generation Mobile Network requirement of path restoration within a range of 50 to 250 milliseconds.

The other wireless option briefly referenced in Chapter 2 was free-space optics at 800 nm or 1550 nm with 1550 nm being the preferred option for safety reasons. These systems can support high bandwidth but are subject to scintillation and fog attenuation that precludes their use in many more mainstream applications.

13.5 Summary – Backhaul Options Economic Comparisons

In a voice-centric network a single T1 (1.544 Mbps) or E1 (2.048 Mbps) backhaul connection would have been sufficient to support a moderate size cell. Simplistically, an LTE channel with a peak data rate of 150 Mbit/s with three sectors needs up to 450 Mbit/s of capacity. Cell geometry reduces this. Adding channels per sector and/or implementing neutral host networks with shared backhaul increases this. Gigabit rather than megabit backhaul requirements are therefore at least plausible. Practical limits with copper are presently of the order of 100 Mbit/s but essentially this is a bandwidth-limited medium with propagation limitations and significant interference constraints (crosstalk).

Copper is usually fully amortised but lease costs, for example for an E1 or T1 line, can be substantial both in terms of a set-up charge and monthly rental, and this cost increases linearly with capacity: you don't get a discount for multiple T1/E1 lines, which means that 10 Mbps of bandwidth costs ten times as much as 1 Mbps. So, for example, a T1/E1 circuit in 2008 would cost anywhere between $150 and $750 per month with a set-up charge of over $500.

Fibre using higher-order modulation can deliver multigigabit throughput but is often not available as a legacy installation and therefore has to be aggressively amortised. A leased fibre capacity of 155 Mbps would be in the region of $4000 to $7500 per month with a set-up charge of around $7000, assuming of course that the fibre is available, which it may not be.
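The lease figures quoted above are easier to compare when normalised to cost per Mbps per month. The sketch below uses the T1 rate for the copper case and ignores set-up charges; note also that the T1/E1 prices are dated 2008 while the text gives no date for the fibre prices, so the comparison is indicative only.

```python
import math

T1_MBPS = 1.544
FIBRE_MBPS = 155

def cost_per_mbps(monthly_usd, capacity_mbps):
    """Monthly lease cost normalised to one Mbps of capacity."""
    return monthly_usd / capacity_mbps

def t1_lines_needed(capacity_mbps):
    """Number of T1 circuits needed to reach a given capacity."""
    return math.ceil(capacity_mbps / T1_MBPS)

t1_low, t1_high = cost_per_mbps(150, T1_MBPS), cost_per_mbps(750, T1_MBPS)
fibre_low, fibre_high = cost_per_mbps(4000, FIBRE_MBPS), cost_per_mbps(7500, FIBRE_MBPS)

print(f"T1:    ${t1_low:.0f} to ${t1_high:.0f} per Mbps per month")
print(f"Fibre: ${fibre_low:.0f} to ${fibre_high:.0f} per Mbps per month")

lines = t1_lines_needed(FIBRE_MBPS)
print(f"{lines} T1 lines to match 155 Mbps: ${lines * 150} to ${lines * 750} per month")
```

Because the copper cost scales linearly with the number of lines, matching a single 155 Mbps fibre lease with T1 circuits costs several times more per month even at the bottom of the quoted price range.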

The T1/E1 costs and bandwidth limitations and fibre cost and availability limitations together determine the market share of microwave systems in backhaul provision. After factoring in hardware costs, cable costs and licensing costs the payback for microwave compared to multiple T1/E1 lines is somewhere in the order of 4 to 12 months. The availability of 10-Mbps Ethernet over copper over distances of up to 1.5 miles has changed this trade-off point in some markets, but these services do not support TDM services.

The microwave versus fibre economics calculation is very much determined by distance and population density with the cost of digging the trench directly dependent on distance. The capex cost for fibre is definitely higher, up to about 2 Gbps, but the exact crossover point will be dependent on local factors. If the fibre can be shared amongst multiple operators the cost per bit will of course be substantially lower.

13.6 Other Topics

So now I have noticed that I had also said I would address core network hardware, router hardware, server hardware and cost and energy economics in this chapter. I am going to offer several excuses for not doing this at this point.

Core network hardware has already been addressed in 3G Handset and Network Design and subsequently in more extensive and accurate detail by other more technically literate authors, including Harri Holma and Antti Toskala in their LTE for UMTS book (John Wiley, ISBN 978-0-470-66000-3).

My own take on the changing shape of core network architecture eight years ago was that the overall complexity would increase rather than decrease over time but would become distributed and that cost would shift to routers that would be surprisingly hardware centric in order to realise throughput and latency metrics.

Lots of high-performance memory would also be needed in each router. The ability to buffer at router nodes meant that network bandwidth utilisation could be substantially increased but there were two associated costs, the memory and the bandwidth occupied by the signalling needed to avoid buffer overflow that would result in packet loss. The section on deep packet examination (Chapter 18, page 406) has proved particularly prescient.

Anyway, that's enough bragging for the moment.

The problem about server architectures is that I know very little about the subject but it is the sheer scale of the server farm phenomenon that is by far the most interesting thing about them. In an earlier chapter we mused on the fact that Google now have over one million servers humming away in order to ensure we can become instant experts on everything, something I have never had a problem with, even before the internet was invented.

The initial bright idea was to install server farms in places like Nevada until it was realised that the heat generated from the servers combined with the heat generated from the noon day sun was not an energy-economic proposition. So the latest trend is to choose places like Iceland that are both cold and have plenty of geothermal power to waste.

There are measures that can be implemented to make servers more efficient. This includes the use of the techniques used in mobile handset application processor hardware such as dynamic voltage frequency scaling, clock gating and improving utilisation. The concept is that power consumption should be proportional to the activity level. The devices are sometimes described as energy proportional machines.6

However, the argument that telecommunications networks are saving the planet needs to be approached with caution. Apparently we are travelling less on business due to effortless teleconferencing, but somehow I think the ease with which we can now book a weekend flight to Prague and back may have more than offset this trend.

Better just to accept that telecommunications makes us more socially, economically and intellectually efficient and leave it at that. But now it is time to do telegraph poles.

1 See 3G Handset and Network Design, Chapter 15, page 368.

2 Point-to-multipoint hardware examples, with thanks to Cambridge Broadband Networks.

3 http://www.ericsson.com/ericsson/corpinfo/publications/review/2009_02/files/Backhaul.pdf.

4 http://onlinelibrary.wiley.com/doi/10.1002/9780470630976.fmatter/pdf.

http://eu.wiley.com/WileyCDA/WileyTitle/productCd-0470543566,descCd-description.html

5 http://www.ceragon.com/files/Ceragon_Wireless_Backhaul_Topologies_%20Tree_vs_%20Ring_%20White%20Paper.pdf.

6 The Case for Energy-Proportional Computing, Barroso and Hölzle, IEEE Computer, December 2007.