Key Concepts

Plan the layout of how the hardware and software are to be deployed

Ensure the e-commerce applications can coexist on the same hardware in the same environment

Anticipate both typical and peak demand for each site

Avoid single point of failure

Plan for maintenance

Sharing the same infrastructure among multiple sites invariably creates technical problems that need to be solved. After all, the sites differ from each other precisely because they have different requirements, and fitting them onto the same platform can be a challenge.

From a technical point of view, there can be three forms of sharing: sharing hardware, sharing software, and sharing data. With a shared hardware arrangement, the same set of servers would be used for handling the requests coming from all the sites. However, although the actual computers are shared, the software applications used by the sites remain different.

Sharing software would imply that the sites not only make use of the same hardware, but also the same software. With this arrangement, a single installation of software would benefit all the sites.

Sharing data is the next step, where multiple sites not only make use of the same application, but also use the same database. With this approach, the data of all sites is managed together, and data can also be shared among sites.

We will consider the opportunities that exist for these forms of sharing and describe some of the typical methodologies that can be used and the challenges that must be overcome.

Before exploring the ways of sharing hardware and software among commerce sites, we must first give an overview of what a commerce application looks like when it is deployed. This overview is clearly not meant to give a comprehensive guide to all the possible ways to set up sites, and we don’t describe all the necessary equipment or the required software. Instead, we describe one typical way that the company’s computing environment can be set up to enable a commerce site. We then examine what portions of this environment can be shared among different sites, under different sharing arrangements.

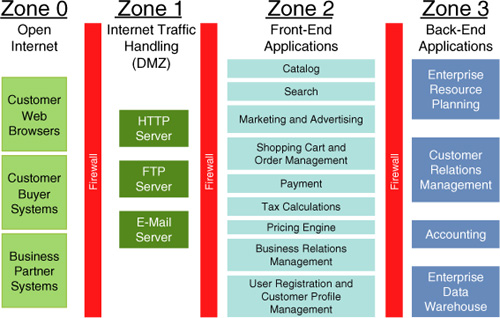

Generally, you can view the overall environment as being partitioned into zones. The division into zones is normally done for security reasons to protect the company’s internal systems from possibly malicious traffic coming from the Internet. This is illustrated in Figure 14.1.

Zone 3 is the most protected zone, and it contains the company’s internal systems and back-end applications. The common characteristic of these applications is that they cannot be directly accessed by customers or by anyone else from the Internet. Instead, zone 3 applications are used only by the company’s internal staff, such as employees or contractors.

Zone 2 contains front-end applications, which are accessed by the company’s customers or business partners. This includes the applications that handle traffic on the commerce sites, such as presenting the catalog, capturing orders, and presenting marketing content.

Frequently, the commerce applications in zone 2 are integrated with the back-end applications in zone 3. For example, sometimes the commerce system might have a standalone pricing engine deployed in zone 3 that is responsible for determining the prices of products. In other cases, the commerce system needs to retrieve prices and inventory from the company’s Enterprise Resource Planning (ERP) system located in zone 3. Similarly, when the commerce system in zone 2 captures an order, it transfers the order to the back-end systems in zone 3 to initiate the fulfillment and billing processes.

Even though the applications in zone 2 are used by the customers from the Internet, it is a good idea for this zone to still be somewhat isolated from the unpredictable and often malicious Internet traffic. Commerce applications handle vast amounts of monetary transactions and contain sensitive data, such as customer profiles, orders, and pricing; and these must be protected from attacks.

To protect the company’s internal systems, typically the computing environment includes a special zone, called the Demilitarized Zone (zone 1 or DMZ in Figure 14.1). A connection can never be made directly between a customer computer and a system in zone 2. Instead, the connection is made to the handling application in zone 1, which then forwards the request to the relevant application in zone 2, as shown in Figure 14.2.

When a customer clicks on a product on the company’s Web site, the web browser on the customer’s laptop computer would make a connection to the corresponding web server in zone 1. Based on various characteristics of the request, such as the URL, the customer’s cookie, or the HTTP header, the web server delegates the request to the relevant application in zone 2. The commerce application retrieves the product description and other relevant information from its own database. Depending on the system, some information, such as price and inventory, might need to be retrieved from a back-end application located in zone 3. When all the information is gathered, the commerce application returns it to the web server, which in turn presents the resultant web page to the customer’s web browser.
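The delegation step can be illustrated with a minimal sketch. It assumes the web server chooses the zone 2 application purely from the URL path; the routing table, the path prefixes, and the application names are hypothetical and are not taken from any particular web server product.

```python
from urllib.parse import urlsplit

# Hypothetical routing table: URL path prefix -> zone 2 application.
ROUTES = {
    "/checkout/pay": "payment-app",
    "/": "commerce-app",  # catch-all for catalog, search, cart, and so on
}


def pick_application(url: str) -> str:
    """Return the name of the zone 2 application that should handle the URL."""
    path = urlsplit(url).path or "/"
    # The longest matching prefix wins, so specific routes beat the catch-all.
    for prefix in sorted(ROUTES, key=len, reverse=True):
        if path.startswith(prefix):
            return ROUTES[prefix]
    raise LookupError(f"no application configured for {path}")
```

A real web server would typically also consult cookies and HTTP headers, as described above; the sketch shows only the URL-based part of the decision.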

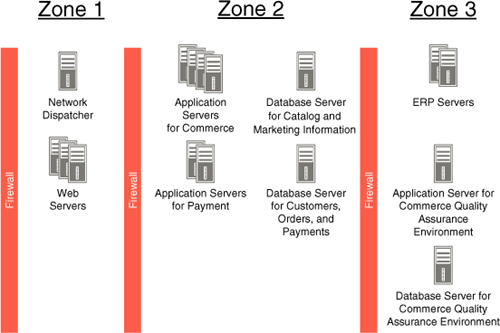

Let’s now explore the computers that would be required in each zone. For this, we use a much simplified example; in real life the setup would require many more applications and many more computers than we show here. Figure 14.3 shows an example of the components in each zone.

In this example, there are two front-end applications in zone 2 that are responsible for the site, namely the commerce application and the payment application. The commerce application is responsible for most aspects of the site, such as search, catalog presentation, shopping cart, marketing and advertising, and user profile. The payment application is responsible only for processing payments, such as validating the customer’s credit card number or authorizing payments when an order is submitted.

The front-end applications interact with the back-end applications in zone 3. For example, the commerce application interacts with the company’s back-end ERP system to retrieve product inventory or to transfer captured orders for fulfillment.

Also in zone 3, we placed the quality assurance environment. Catalog updates, presentation changes, or new marketing campaigns are not made directly on the front-end applications. Instead, the changes are first made in the quality assurance environment. After the changes are tested and approved, the corresponding modifications to data are moved, or “promoted,” to the production environment. The commerce application in the quality assurance environment is usually similar to the front-end commerce application, except that it is available only to the company’s internal staff.

The quality assurance environment holds the master copy of the site, so the data that resides in this environment is considered to be the most current and correct version. If anything happens to the production site, this quality assurance environment can be used to rebuild it; therefore, it is a good idea to protect this environment as much as possible from threats coming over the Internet. That is why we put the quality assurance environment in the protected zone 3, in which it is accessible only by the company’s line of business or IT staff.

In real life, there are typically many different applications that must come together to be used as a single site. Typical examples include product searches, shopping carts, and order captures, which often are handled by separate front-end applications. There can also be applications that are dedicated to advertising, order status tracking, customer profile management, and many other commerce functions.

Now see how these components in our simple application configuration look when they are deployed on actual hardware, as shown in Figure 14.4.

We see in the diagram that zone 1 contains two types of servers: one server responsible for the dispatch of customer requests, and three web servers that actually handle the requests. One reason for having multiple servers is to handle a large volume of traffic; normally, detailed performance studies would determine the actual number of servers necessary and the number of processors in each server. Another reason to have multiple servers is to ensure redundancy, so that if one server goes down, the others can still continue providing service to the customers and hence the site stays up.

In zone 2, we have the application and database servers for the commerce and payment applications. Frequently in real deployments, there is an extra firewall between application and database servers, which ensures extra security for the database. However, for simplicity we omitted this detail here.

In Figure 14.4, for both redundancy and performance reasons, there are four commerce application servers and two payment application servers. However, each database resides only on a single server because it is sufficient to satisfy the site’s performance needs. Nevertheless, the two database servers could also provide redundancy for each other. In other words, if the payment database server goes down, the commerce database server can take over. This, of course, assumes that the corresponding databases are mirrored on both servers.

In zone 3, there are two ERP servers to provide redundancy. This is necessary because the ERP system is needed by the front-end commerce systems to serve customers. The ERP system contains the product inventory and pricing, and hence it is important to make sure that this data is always available to customers. If one of the ERP servers goes down, the other one is still available to service customer requests.

On the other hand, the quality assurance environment is accessed only by internal staff rather than by customers. If the quality assurance environment is temporarily unavailable, there is no disruption of service to the customers, and therefore, there is no need for ensuring redundancy. The amount of traffic on the quality assurance environment is also relatively small because it is used only for administration and for testing. Therefore, a single application server and a single database server suffice for the quality assurance environment of the commerce application.

For simplicity, we ignored many other types of hardware that would be needed, such as network routers, firewalls, system monitoring, backups, and so on. So in reality, our example underestimates the actual need for hardware for a commerce site.

Overall, we can count 12 machines necessary for this site, plus 2 machines used for the ERP system. This might seem like a large quantity of hardware, but it is not extraordinary for a large site. Frequently companies use many dozens of machines to run all the necessary applications and to handle the necessary volume of traffic on their site.

In Figure 14.4, the commerce application uses two separate databases: one for catalog and marketing data, and another one for orders and customer data. This is sometimes done because the characteristics of these two kinds of data are different. Catalog and marketing information is generally updated relatively infrequently, whereas order and payment information is updated constantly, with every transaction. It can be convenient to keep this data separate from each other so that each database can be managed separately; for example, backup schedules, archival of stale data, and performance tuning can all be geared to the needs of each kind of data.

Another reason to separate this data from each other is to simplify updates. The servers in zone 2 hold the “production” copy of marketing and catalog data, whereas the master copy resides in the quality assurance environment in zone 3. At the same time, the primary copy of customer and order data resides in the operational database in zone 2. Therefore, it is convenient to keep this data separate from each other so that the operational copy of catalog and marketing information can be updated easily at any time, without disturbing the order and customer information.

Depending on the way the applications are designed, a single database server might actually be holding multiple databases. In our example, the database server for catalog and marketing holds only a single database. However, the other database server might have up to three databases: one for customer data, one for orders, and one for payment. The essential characteristic here is that each database might be used by multiple applications, and each application might be using multiple databases. This database layout is illustrated in Figure 14.5.

Notice that in this example the commerce application, both in production and in the quality assurance environments, uses three databases: Catalog and marketing, Customers, and Orders. The catalog and marketing data in production is regularly synchronized with the master copy in quality assurance. However, the customer and orders databases are needed only in production. In the quality assurance environment, these hold only sample data, enough to enable the company’s personnel to test the site. There is no need to update these databases with real data; all that is needed is to create a few user profiles and a few sample orders.
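The many-to-many relationship between applications and databases can be written down as a small data structure. The sketch below uses the database names from the text; the server names are hypothetical, and the assumption that the payment application also reads the orders database is ours, made only to show a database used by more than one application.

```python
from collections import defaultdict

# Databases held by each zone 2 database server (server names are hypothetical).
DATABASES_ON_SERVER = {
    "db-server-1": ["catalog_marketing"],
    "db-server-2": ["customers", "orders", "payment"],
}

# Databases used by each application.
APP_USES = {
    "commerce": ["catalog_marketing", "customers", "orders"],
    "payment": ["payment", "orders"],  # use of "orders" here is an assumption
}


def apps_per_database(app_uses):
    """Invert the mapping: for each database, list the applications using it."""
    result = defaultdict(list)
    for app, databases in app_uses.items():
        for database in databases:
            result[database].append(app)
    return dict(result)
```

Inverting the mapping in this way makes it easy to see which databases are touched by more than one application, and therefore which ones need coordinated maintenance.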

The next step is to determine the software that must be acquired for the commerce site. Again, this will clearly vary for each company, and in reality there will be a need for much more software than we list here; but for the sake of simplicity, let’s just focus on the following small list of software:

• Operating system for each of the machines

• Database for the commerce and payment applications

• Web server

• Application server

• Commerce and payment applications (possibly purchased in a single package from one vendor, or separate packages from different vendors)

Figure 14.6 illustrates how each of the software packages is associated with the various applications. Because this book focuses on front-end commerce systems, we have not expanded upon the software required for the ERP application.

Usually the price of the database and the commerce applications depends on how big the installation is. For example, frequently vendors charge per machine or per processor so that larger sites end up paying more for the same software, when compared to smaller sites.

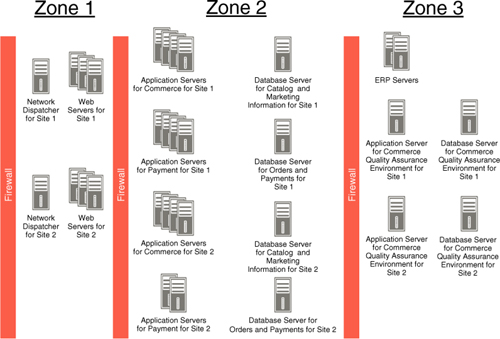

If a company has multiple sites, each site’s installation is deployed within the three zones as we have described. In the worst-case scenario, when neither hardware nor software is shared, the cost of installation and maintenance is directly proportional to the number of sites. Figure 14.7 shows this arrangement.

We see that each site needs its own set of servers in each zone, so that now with two sites, the company needs 24 servers. We assume that both sites use the same ERP systems; in real life, it is common that even such back-end systems are also different. For example, if each site is created for an independent division of the company, it is likely that each division also has its own ERP systems.

Another factor to consider is that potentially the hardware and software used for the different sites could be completely different. For example, one site could be using Windows® servers, and hence the servers are all Intel®-based machines. The other site could be using AIX® servers, and hence its servers are IBM machines.

This gets even more complicated when software is taken into account. An Intel-based server could be using the Microsoft® Windows operating system with .NET-based commerce applications. The same server could instead be used to run the Linux operating system and IBM WebSphere-based commerce applications. Even within the same choice of hardware and software, the database packages from a single vendor could run on different hardware; for example, both Oracle and DB2® databases have versions that run on Microsoft Windows and on Linux.

The worst-case scenario is that each site uses completely different hardware and software so that even the skills necessary to maintain all the facilities are different. This also means that the company has to find IT staff that are qualified to operate each of the kinds of machines, operating systems, databases, and applications. We see that without an arrangement to share hardware and software, the cost of having multiple sites can quickly become large.

To promote reuse of hardware and software resources, the company’s IT management could try to standardize its computing environment. For example, it might mandate that all the sites’ hardware comes from the same vendor, that the same operating systems are used, and that a single type of database is used for all applications of all the sites.

This standardization is useful even if all the sites have their own completely independent installations. One key benefit is that it reduces the complexity of maintenance. For example, if all databases are of the same version, a single set of skills is necessary to maintain them so that one group of people could look after the databases of multiple sites. Similarly, a common set of administrative procedures can be instituted, making the business and technical processes easier to understand. As a result of the simplified maintenance procedures, the company could also expect to reduce the total operating cost of all sites.

Although the standardization of IT infrastructure is certainly a good idea, it is only the first step leading to the goal of reduced cost and simplified administration. The bigger benefit would come from actual sharing of infrastructure among sites. Fundamentally, the sharing of infrastructure can be achieved at multiple levels, depending on how much hardware and software standardization is achieved among sites. To focus the discussion, we consider the opportunities for sharing of hardware and software within each of the three zones where the sites must be deployed.

In the example that we started with in Figure 14.1, the equipment in zone 1 is responsible for handling Internet traffic that is coming to the company’s commerce sites. The two components that we discussed were a network dispatcher and the web servers that accept requests and send responses to the customers’ computers. To reply to each request, the web server can retrieve information from its own file system, such as images or HTML files. If necessary, the web server can also create corresponding requests to the business logic residing in the application servers in zone 2.

Depending on the characteristics of the company’s installation, it might be possible for multiple sites to share the same web server, as shown in Figure 14.8.

This arrangement could reduce the total number of machines necessary for the web servers. This is especially true if the peak demand of site 1 happens during a different period compared to the peak demand of site 2. For example, let’s say that site 1 is a B2B site, mostly busy during the regular business hours of 9 a.m. to 5 p.m., whereas site 2 is a B2C site, whose peak demand is 5 p.m. to 11 p.m. In this case, the capacity freed up by the B2B site’s low usage in the evening is automatically available to the B2C site.
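A small calculation makes the saving concrete. The load figures below are invented for illustration: we assume each site needs three web servers during its peak hours and one server at all other times.

```python
def hourly_load(peak_start, peak_end, peak, off_peak):
    """Servers needed in each hour 0..23; the peak covers [peak_start, peak_end)."""
    return [peak if peak_start <= hour < peak_end else off_peak
            for hour in range(24)]


# Hypothetical demand: the B2B site peaks 9:00-17:00, the B2C site 17:00-23:00.
b2b = hourly_load(9, 17, peak=3, off_peak=1)
b2c = hourly_load(17, 23, peak=3, off_peak=1)

# Separate installations must each be sized for their own peak.
separate_servers = max(b2b) + max(b2c)

# A shared pool only has to cover the busiest combined hour.
shared_servers = max(x + y for x, y in zip(b2b, b2c))
```

Under these assumed numbers, the separate installations need six web servers in total, whereas the shared pool needs four. If the two peaks overlapped completely, the shared pool would need the same six servers and sharing would bring no saving in machine count.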

On the other hand, sharing the web servers while keeping application servers separate could introduce several complexities that must be overcome. One typical issue to deal with is that the security requirements of site 1 and site 2 might be different, yet they must be satisfied by the same web server. For example, site 1 might be open only to registered users, and all pages including product pages are encrypted with SSL, whereas site 2 is available to any guest, and product pages are clear-text. Hence, the web server must be configured to distinguish the requests to site 1 and to site 2 and make sure that each request is handled correctly. This is usually possible because the two sites have different URLs, and even different IP addresses.
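As a rough sketch of this kind of configuration, a shared web server can keep one security policy per site and select it by the host name of the incoming request. The host names, the policy fields, and the returned action names are all hypothetical; real web servers express the same idea in their own configuration syntax.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SitePolicy:
    require_tls: bool       # every page must be served over SSL/TLS
    registered_only: bool   # guests are not allowed to browse


# Hypothetical host names for site 1 (B2B) and site 2 (B2C).
POLICIES = {
    "b2b.example.com": SitePolicy(require_tls=True, registered_only=True),
    "shop.example.com": SitePolicy(require_tls=False, registered_only=False),
}


def check_request(host: str, scheme: str, logged_in: bool) -> str:
    """Return 'allow', 'redirect-to-https', or 'login-required'."""
    policy = POLICIES[host.lower()]
    if policy.require_tls and scheme != "https":
        return "redirect-to-https"
    if policy.registered_only and not logged_in:
        return "login-required"
    return "allow"
```

The same guest request over plain HTTP is therefore accepted on one site and redirected on the other, even though a single web server handles both.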

Another complexity has to do with the gathering of statistics about customer visits to each site. The statistics are necessary for operational purposes, such as to measure the level of activity on each area of the site and to track the performance and response time of the site. Some of the necessary statistics are accumulated by web servers based on the requests coming in to the site. The IT administrators must, therefore, be careful to ensure that the necessary statistics are captured in the context of each site, rather than for the web server as a whole. Again, this is usually possible because web servers log their traffic based on incoming URLs, and the different sites are easily distinguished by their URLs.
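The following sketch shows how statistics from a shared web server can be kept per site. It assumes a simplified, made-up log format of `<host> <path> <status> <response_ms>`; actual web server log formats differ and would need a matching parser.

```python
from collections import Counter, defaultdict


def per_site_stats(lines):
    """Count hits and average response time for each site (host name)."""
    hits = Counter()
    total_ms = defaultdict(int)
    for line in lines:
        host, _path, _status, response_ms = line.split()
        hits[host] += 1
        total_ms[host] += int(response_ms)
    return {
        host: {"hits": hits[host], "avg_ms": total_ms[host] / hits[host]}
        for host in hits
    }


# Hypothetical log excerpt from one shared web server.
SAMPLE_LOG = [
    "b2b.example.com /catalog/pumps 200 120",
    "shop.example.com /catalog/taps 200 80",
    "b2b.example.com /order/submit 200 180",
]
```

Grouping by host name before aggregating is what keeps the busy site from hiding a slowdown on the quieter one.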

Problem tracing is another issue that is addressed by tracking URL information. If any problem occurs on one of the sites, the administrators must trace the request’s trail throughout all the systems touched by it. The environment must be adapted so that the processing of each request can be traced; for example, to determine the application servers that were invoked for a request.
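One common way to make requests traceable, sketched here under our own assumptions, is to attach an identifier to each request when it first arrives and to have every server that touches the request record itself against that identifier. The record layout and the server names are hypothetical.

```python
import uuid


def start_trace(site):
    """Create a trace record when the web server first accepts a request."""
    return {"request_id": uuid.uuid4().hex, "site": site, "hops": []}


def record_hop(trace, server):
    """Append the name of a server that processed the request."""
    trace["hops"].append(server)
    return trace


def servers_touched(traces, request_id):
    """Look up the trail of one request among all collected traces."""
    for trace in traces:
        if trace["request_id"] == request_id:
            return trace["hops"]
    return []
```

In a real environment the identifier would be passed between servers, for example in an HTTP header, and each server would write it to its own log rather than to a shared in-memory record.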

Even though it can be beneficial to share web servers among multiple sites, this is not always possible. Probably the most difficult issue to resolve is the incompatibility of application servers. For example, if one site’s application servers are based on .NET technology, while the other site is using J2EE, adapting a single web server to both sites might be tricky. Setting up a sharing arrangement in such a case would require IT architects to have a good understanding of, and experience with, both sets of technologies and also to spend time testing to ensure that this works well.

What we see here is yet another reason for the company to try to standardize its application infrastructure. Even if hardware is currently not shared, it is a good idea to at least ensure that the same basic technology is used throughout the operations, so sharing can be introduced later.

In our example, zone 2 is used for various commerce applications, such as catalog presentation, marketing, order capture, payment, and so on. Sharing of this infrastructure between sites should be studied in the scope of each application.

Referring to the example in Figure 14.1, there are two applications: one commerce application, and one payment application. From the technical planning point of view, it is often difficult to share all the applications among multiple sites. For example, the different commerce applications might have a lot of complex business logic unique to the products for sale and the business rules of the corresponding company divisions, and merging them into one site would require much research and development. On the other hand, the payment application is much more confined in its scope, and it might be much easier, at least initially, to focus on sharing just that application.

Let’s say that the company manages to share the payment application among the multiple sites, whereas the commerce applications remain independent. Figure 14.9 illustrates the infrastructure that results in this case.

In Figure 14.9, each site still has its own set of servers for its commerce application. However, there is only one shared set of servers for the payment application, which is used by all the commerce sites. This is an improvement over the arrangement in Figure 14.7, where each site had its own set of payment servers.

With this approach of sharing applications incrementally, it might also be possible to create a plan where the first shared application is delivered rapidly, whereas subsequent applications can wait until the future phases of the project. As we discussed in Part II, “Implementer’s Considerations for Efficient Multisite Commerce,” project managers and executives are usually interested in building such a plan for incremental delivery. We discussed that a plan must deal with such organizational aspects as governance of the project, requirements management, and the fears and resistance of various lines of business. However, in addition to dealing with these organizational issues, phased delivery needs a technical approach that allows breaking up development into logical parts, where each phase of development yields a reasonably complete platform that can be used to deploy at least some of the sites.

Application-level sharing creates the technical means of delivering such a phased development plan. The idea is that each phase would include adding one or more shared applications to the platform, or expanding the capabilities of existing shared applications. As a result, in each phase a few sites can move to make use of these shared applications. As the number of shared applications grows, and as their capabilities improve, the infrastructure used by the sites is more and more shared.

Over time, the more applications are shared, the more savings are achieved. Therefore, the IT goal should be to ultimately share all the applications among the sites. It is important to view the sharing of individual applications only as a temporary step on the way to the fully shared platform used by all the company’s sites.

The applications that reside within zone 3 are not accessible to external customers but are used only by the company’s internal IT and LOB staff. Examples of these applications include the quality assurance environment for the sites and the company’s back-end systems.

Sharing the quality assurance environment for an application among sites is possible only if the application itself is shared, as shown in Figure 14.10.

In this example, the hardware and software for the commerce application are shared by all sites. Correspondingly, the same quality assurance environment is also used for all sites.

Not all applications deployed in zone 2 require a quality assurance environment in zone 3. In our example, the payment application does not have a quality assurance environment. Instead, when the application needs to be updated, it is temporarily shut down, and the updates are applied offline. This is possible because the payment application is not critical to the commerce sites: orders can continue to be taken even while the payment application is down.

Such decisions on the needs and the configuration of the quality assurance environment depend on the technical capabilities of each application and the business requirements. However, from the point of view of sharing hardware and software among sites, the general rule is to follow the deployment arrangement in zone 2. If the production environment can share hardware and software, so can the quality assurance environment. On the other hand, if the production environment has an independent installation for each site, it is a good idea to keep the quality assurance environments separate as well.

In the context of a single site, however, it might be possible to use the same hardware for the quality assurance environment for multiple applications. In our example, if the company decides to have a quality assurance environment for the payment application, a single server could be used for quality assurance of both commerce and payment applications. Of course, this assumes that the applications are fundamentally compatible; for example, they use the same operating system and the same application server. If such an arrangement is possible, it presents a way to significantly reduce operational costs. After all, the quality assurance environment is used only for testing and does not need to provide high performance or high availability. Therefore, it might be technically feasible to deploy multiple applications on one physical machine, even if in production this is undesirable.

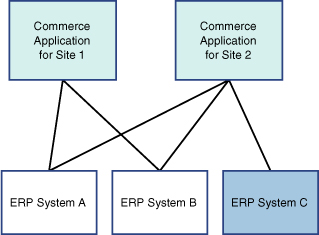

ERP systems are generally not tied to a single front-end system, but rather they handle orders coming from many channels. For example, a single ERP system could be responsible for processing orders coming via online Web sites, call center, fax and e-mail, and automated orders from B2B channels. Thus, the commerce site typically sees only a portion of all the orders in the company. Furthermore, multiple sites might use inventory from a single ERP system, and orders from multiple sites might be transferred to the same ERP system. This could be the case, for example, if the different sites sell largely the same products to different market segments, such as a site for plumbers, a site for building contractors, and a site for do-it-yourself consumers.

By the same token, in many cases different sites might use different ERP systems. For example, if the sites serve the needs of different brands or different divisions, most likely they have to deal with correspondingly different ERP systems as well.

In some cases, the whole picture can be extremely complex, where each site must integrate with multiple ERP systems. For example, if a site presents products from multiple divisions, the order must be transferred to the ERP systems of the divisions responsible for the products in the order. An illustration of this complexity is shown in Figure 14.11.

ERP integration is complex and requires significant computing resources, both from the site’s application and from the ERP systems. To simplify the computing environment, it would make more sense to employ the concept of an Enterprise Service Bus (ESB). With this idea, all sites standardize on their messaging with the ESB, based on a companywide protocol. The service bus is then responsible for delivery of the message to each system, in accordance with the technical characteristics and the format that is supported, as shown in Figure 14.12.
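The idea can be sketched in a few lines. We assume a simple companywide order message in which every order line names the division that owns the product; the two ERP formats, the adapter functions, and the division names are invented for illustration and do not describe any real ESB product.

```python
import json


def to_erp_a(line):
    """Hypothetical ERP A expects a JSON document with short field names."""
    return json.dumps({"sku": line["sku"], "qty": line["quantity"]})


def to_erp_b(line):
    """Hypothetical ERP B expects a pipe-delimited record."""
    return f"{line['sku']}|{line['quantity']}"


# Which ERP system serves each division, and how to map messages for it.
ADAPTERS = {
    "plumbing": ("erp-a", to_erp_a),
    "tools": ("erp-b", to_erp_b),
}


def route_order(order):
    """Split an order by division and return (target system, payload) pairs."""
    deliveries = []
    for line in order["lines"]:
        target, adapt = ADAPTERS[line["division"]]
        deliveries.append((target, adapt(line)))
    return deliveries
```

The sites only ever produce the companywide message. Adding a new ERP system then means adding one adapter to the bus rather than changing every site.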

From a deployment point of view, the Enterprise Service Bus is likely implemented on a set of computers; that is, the ESB requires the maintenance of a number of additional machines in the company’s infrastructure. However, in many cases, the ESB not only provides configurable message mappings and guaranteed delivery, but also offloads much messaging and mapping logic from the applications. Therefore, it is possible that the use of an ESB will reduce the load on these systems, and hence the total number of systems in the company’s inventory would remain the same or be reduced.

In the example in Figure 14.13, an ESB machine is added to zone 3, but at the same time there is one fewer machine necessary for the commerce application in zone 2.

Having introduced the concept of the ESB, we will not elaborate on it, but we do recommend that you research this topic and the larger topic of Service Oriented Architecture. The understanding and employment of both of these concepts is important to the technical implementation of sharing hardware and software for multiple sites. There is much literature available on this subject, such as Enterprise Service Bus by David A. Chappell, or SOA in Practice by Nicolai Josuttis.

Now that we have discussed what the shared infrastructure would look like, we look at some of the typical techniques that can be used to share hardware or software. We have seen that each site requires a multitude of servers deployed in different zones that are responsible for different aspects of running the site.

In this discussion, we focus on the application servers, which are typically deployed in zone 2. The reason for this is that these commerce applications are usually the ones that contain the most logic and data that is specifically used for the commerce sites. After all, the web servers are usually quite generic in nature and contain relatively little site-specific logic. Although each site might put some images or web pages on the web server, fundamentally the web server requires relatively little customization for each site.

The back-end systems deployed in zone 3 are usually not site-specific. Typically these systems are used not just by the company’s Web sites, but also by many other activities within the company. In many cases, these systems predate the company’s web strategy, and little effort is made to adapt them to the needs of the company’s Web sites.

You can see that the bulk of the commerce business logic and data falls within the commerce applications; we, therefore, must consider the problem of sharing these commerce applications among sites. Depending on the level of sharing, this might mean using the same hardware at multiple sites, or having the different sites make use of the same software. In the most effective commerce platform, multiple sites also share the data.

One seemingly simple way to use the same hardware for the applications used by multiple sites is server partitioning. With this technique, the server machine is partitioned, or divided into what looks like multiple servers or segments, and each segment is used to run the applications necessary for a single site. In this way, the different applications do not interfere with each other because each site’s application effectively has its own image of a machine.

Partitioning can be done so that the machine is segmented at the hardware level, in which case each segment is assigned the computing resources that it can use. With hardware partitioning, different segments can even use different operating systems, as shown in Figure 14.14. This method provides the most independence of the operation of each site on shared hardware.

Partitioning can also be done at the operating system level. In this case, all the applications on the machine must use the same version of the operating system, but they effectively run independently of each other, as shown in Figure 14.15.

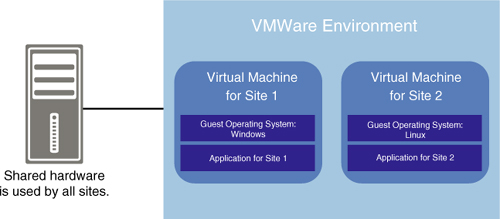

Finally, partitioning can be accomplished by using a virtualization application, such as VMware. In this case, the application allows the creation of multiple images of the machine, and each image runs independently of the other, as shown in Figure 14.16.

The advantage of hardware partitioning is that the operation of each site is the least likely to affect the other sites. Also, with hardware partitioning, it is possible for sites to share the same hardware, even if they require different operating systems because each segment might have a different operating system on it.

However, there are a number of disadvantages to this technique. First, hardware partitioning allows only a few sites to operate on a single machine, because each segment is assigned a specific set of resources, such as a number of processors. You can create only a few segments on a single machine, based on the number of processors available. Another disadvantage of hardware partitioning is poor resource sharing; it is possible that while one site is mostly idle, the other sites run out of resources because processing power is never shared among the segments.

With operating system partitions, resource sharing is better because the same operating system manages processors for all partitions and allocates processing power as needed. However, this form of deploying multiple sites on a single server is still not ideal. One problem is that it is difficult to administer because each site requires the full software stack installed on its partition. This means that each site needs to be maintained completely separately from the other, even if some of the software is exactly the same.

Another big administrative problem with server partitioning is that this technique makes it difficult to create additional sites. Each time a new site is needed, the existing hardware or operating system must be reconfigured, which is a nontrivial task that severely disrupts the operation of existing sites.

With virtualization technology, it would be easier to create additional sites because the creation of a virtual machine does not need to disrupt the existing virtual machines. However, this technique still suffers from the administrative disadvantages of server partitioning. Each site still requires the maintenance of the full software stack needed by the commerce applications. In addition, creation of a new site is still a complex task that can be carried out only by highly qualified IT personnel.

For these reasons, we do not recommend employing server partitioning as the way to share hardware among multiple sites.

Another way for multiple sites to share the same hardware is for their applications to be deployed within the same operating system. You can see an illustration of this arrangement in Figure 14.17.

To make it possible for the applications to coexist, they must use the same operating system, with the same maintenance level. However, merely matching the versions of the operating system supported by the applications is usually not sufficient because the coexistence of applications can create other dependencies. For example, an application might require the installation of additional software on the same machine. Even though the applications might coexist, it is possible that the additional software is not compatible with the hardware or the operating system, and therefore, requires separate hardware.

Another difficult situation could arise if initially all applications are compatible, but then a future version of one of the applications requires a different maintenance level of the operating system from the other applications. All these dependencies can be difficult to sort out through the lifecycle of the applications and the operating system.

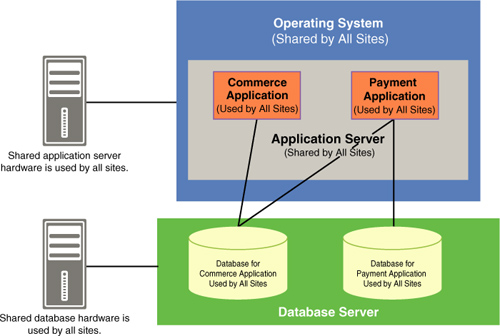

One common approach to simplify the dependencies is to make sure that different applications make use of the same application server, as shown in Figure 14.18.

In this setup, the same application server, running on a single machine, is used by applications pertaining to multiple sites. This means that the different applications must not only support the same operating system, but also the same application server.

Similarly, the different applications can use the same database server. This means that, whereas the actual databases are distinct and the data is not shared between the sites, the databases are all deployed within the same server, as shown in Figure 14.19.

To make this arrangement possible, you need to make sure that the different applications all support the same versions of the application server and the same version of the database.

At first glance, this matching of versions seems to be a trivial check with the application vendors. However, in reality, this match-up is not always easy to achieve. For example, sometimes the application vendors nominally claim compatibility with the same brand of application server, but a closer examination reveals that they require different maintenance levels, which for some inexplicable reason cannot coexist within the same installation. Another example is where the applications can coexist perfectly well within the same application server, but for some reason, they need different levels of the database. Such issues can be difficult to resolve, and the result is that in many cases, they effectively force the applications of different sites to use different hardware.
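
The version match-up described above can be sketched as a simple set intersection. The following is a minimal illustration, not a vendor tool: the application names, component names, and version numbers are all hypothetical, and a real check would also have to cover maintenance levels and any additional prerequisite software.

```python
def common_versions(requirements):
    """Return, per component, the versions that every application supports.

    `requirements` maps an application name to a dict of
    component -> set of supported versions (all values are illustrative).
    """
    shared = {}
    for stack in requirements.values():
        for component, versions in stack.items():
            if component in shared:
                shared[component] &= set(versions)
            else:
                shared[component] = set(versions)
    return shared


def conflicts(requirements):
    """List the components for which no single version suits all applications."""
    shared = common_versions(requirements)
    return sorted(c for c, versions in shared.items() if not versions)


# Hypothetical support matrix for three applications on one machine.
requirements = {
    "b2b_commerce": {"app_server": {"6.1"}, "database": {"9.1", "9.5"}},
    "b2c_commerce": {"app_server": {"6.1", "7.0"}, "database": {"9.5"}},
    "payment": {"app_server": {"7.0"}, "database": {"9.5"}},
}

print(common_versions(requirements)["database"])  # the one database level all three accept
print(conflicts(requirements))                    # components that force separate installations
```

In this made-up matrix the three applications agree on a database level but not on an application server level, which is the kind of mismatch that would push them onto separate installations.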

As the number of sites used by the company grows, this problem of application compatibility will only get worse. New sites will likely need additional applications, making the entire environment more and more complicated.

In some cases, the problem might not be due to the application vendor but self-inflicted by the additional improvements and customizations done by the company. For example, let’s say that the company has two sites, one for the B2B market and one for the B2C market, and uses the same commerce application for both of them. For the B2B market, the commerce application was modified to support additional order approval workflow and business contract-based pricing rules, which were not supported in the original vendor’s application.

Depending on the implementation of these customizations, it could turn out that they only work on one particular version of the application server and database and do not work with the most current versions. At the same time, the B2C application wants to use the most current level of application server and database. As a result of this conflict, the B2B and B2C sites cannot share the same application server, or even the same hardware, even though they started out from the same vendor’s application!

Carefully research and document the entire software stack, and get confirmation from the vendors and the implementers to ensure that the proposed configuration works. Even if the application vendors officially support the configuration, it is a good idea to do additional system testing before deploying the application to ensure that unexpected problems do not show up.

There is one more approach to allow multiple sites to share the same hardware and software, and that is where all the sites use the same set of applications. In this arrangement, an application is inherently designed to support multiple sites, as is shown in Figure 14.20.

With this approach, there is no need to have separate installations for each site, but rather all the sites share the entire software stack. This makes the maintenance of the software a lot easier and less time-consuming. At the same time, the problem of having to match the versions of operating systems and databases becomes much less complicated to manage. Because a single commerce application is used for all sites, there is no need to worry about its compatibility with each site’s environment.

In the example in Figure 14.20, we see that there are two applications used in the same environment: a commerce application and a payment application. In this case, make sure that these two applications can safely coexist. However, there are only two applications involved for all sites, and this problem will not get worse as the number of sites increases.

When multiple sites are created and managed within the same application, they are able to share not only the hardware and software, but also the data. In our example, there is a single commerce database and a single payment database, both managing data for all the sites. Similarly, the application could be designed so that multiple sites make use of the same catalog or present the same advertisements. This means that there is no longer any need to copy the same catalog or marketing data to each site’s database. Rather, the single modification of data is automatically reflected in all the company’s sites. At the same time, with this approach data is maintained together. This means that you would not back up or archive data for one site, but for all sites at once.
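
As a rough illustration of the single-catalog idea, the sketch below uses an in-memory SQLite database. The table names, site names, and product data are hypothetical and are not taken from any particular commerce application; the point is only that each site refers to the shared catalog row instead of holding its own copy.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- One catalog row per product, stored once for all sites.
    CREATE TABLE product (id INTEGER PRIMARY KEY, name TEXT, price REAL);
    -- Each site only records which shared products it offers.
    CREATE TABLE site_product (
        site TEXT,
        product_id INTEGER REFERENCES product(id)
    );
    INSERT INTO product VALUES (1, 'Cordless drill', 89.0);
    INSERT INTO site_product VALUES ('b2b', 1), ('b2c', 1);
""")


def catalog_for(site):
    """Return (name, price) pairs for the products offered on one site."""
    rows = conn.execute(
        "SELECT p.name, p.price FROM product p "
        "JOIN site_product sp ON sp.product_id = p.id "
        "WHERE sp.site = ? ORDER BY p.id",
        (site,),
    )
    return rows.fetchall()


# A single update to the shared row is visible on both sites.
conn.execute("UPDATE product SET price = 79.0 WHERE id = 1")
print(catalog_for("b2b"))
print(catalog_for("b2c"))
```

Because neither site holds a copy of the product row, there is nothing to synchronize after the price change, and one backup of this database covers both sites.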

In practice, designing an application to allow sharing data among sites is not trivial, and not all commerce applications are capable of sharing data among sites. For example, some applications duplicate each site’s data, even though all the data is managed within the same database. Clearly, this is not true data sharing, and you must look carefully at application design to make sure that data truly is shared among sites. The rest of the chapters in this book are dedicated to the topic of data sharing and to the techniques that a commerce application can use to make data sharing as easy as possible.

Thanks to the capability to share data, this approach usually presents the opportunity for designing the most cost-effective and scalable multisite platform. As we described in previous chapters, when multiple sites use the same data, the company not only saves on IT costs of maintaining the platform, but also saves on its line of business administrative costs. Furthermore, sharing data can often open additional business opportunities so that the different sites can leverage each other’s data to improve their offerings or provide additional services to the customer. We discussed several examples of such opportunities in Part I, “Business Perspective—Opportunities and Challenges of Multisite Commerce”; one typical example is the capability for a company to cross-sell products from one division on another division’s sites.

At the same time, sharing applications and data among multiple sites carries the most technical risk. Due to programming errors, an action seemingly relating to only one site could unexpectedly affect other sites. In the worst case, the activity of one site could theoretically even damage or destroy the data pertaining to other sites. This is all possible because fundamentally all sites use the same application and the same database.

Therefore, the applications that are shared by multiple sites must be carefully designed to make sure that they operate correctly. Furthermore, extensive testing of these applications must be done so that operational stability of the platform can be ensured.

The basic problem of sharing hardware or software is that this arrangement creates interdependencies between the sites that make site management more difficult. To illustrate this, consider a typical situation. Imagine that a company has two sites: a B2B site and a B2C site.

Now suppose that the B2B site requires a new capability to be added, perhaps allowing customers to create multiple shopping carts, or allowing customer service representatives to give customers discounts. Enabling such new capabilities requires an update to the software, which means that the site needs to be taken down for the duration of the update. The expected downtime could take several hours. However, this downtime is completely unacceptable to the B2C site, which is in the middle of the holiday campaign. Therefore, the B2B site will have to wait several weeks or months for the upgrade, until the line of business responsible for the B2C site considers the necessary downtime to be tolerable by its business.

Be aware of the typical interdependencies that are created by introducing a shared platform so that the technical designers can plan for them. We describe some of the most common and critical interdependencies that the platform designers must be aware of.

Determining the correct computing resource to allocate to each application is not an easy task, and it becomes even harder if multiple applications use the same hardware. One advantage of shared hardware is that during peak demand for one site, hardware resources can be diverted from other less active sites. Normally, hardware is sized to accommodate not only typical load, but also to handle peak demand, which can be much larger. If the sites use separate hardware, each site must accommodate peak demand on its own. However, if hardware is shared and peak demand periods do not overlap, the same spare capacity can be applied to multiple sites, thereby reducing the total hardware sizing. An example of this is shown in Figure 14.21.

In this example, site 1 has its peak load in the afternoon, and by 5 p.m. the load falls off considerably. By contrast, the load on site 2 becomes heavy around 6 p.m. Therefore, the spare capacity needed by each site to accommodate its peak demand can be used to complement the needs of the other site. Hence, the total processing power necessary when using shared hardware is less than it would be if each site had employed its own hardware.
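
The sizing argument can be made concrete with a small calculation. The hourly load figures below are invented for illustration (arbitrary load units, one value per hour of the day) and are not read from Figure 14.21; they only mimic an afternoon peak for one site and an evening peak for the other.

```python
def required_capacity(profiles):
    """Compare hardware sizing for separate versus shared deployment.

    `profiles` maps a site name to 24 hourly load values.
    Returns (separate, shared): the sum of each site's own peak, and
    the peak of the combined hour-by-hour load.
    """
    separate = sum(max(load) for load in profiles.values())
    combined = [sum(hour) for hour in zip(*profiles.values())]
    shared = max(combined)
    return separate, shared


# Hypothetical profiles: site 1 peaks from noon to 5 p.m.,
# site 2 peaks from 6 p.m. to 10 p.m.
profiles = {
    "site_1": [10] * 12 + [60] * 5 + [15] * 7,
    "site_2": [10] * 18 + [50] * 4 + [10] * 2,
}

separate, shared = required_capacity(profiles)
print(separate, shared)  # 110 70
```

With these made-up numbers, separate hardware must be sized for 110 units, whereas shared hardware needs only 70, because the two peaks do not overlap. If the peaks coincided, the two figures would be equal and the saving would disappear.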

The big question, though, is how much processing power is necessary. If the platform needs to satisfy the needs of many sites, it is necessary to estimate the typical and peak demands on all sites at all times, and then come up with the overall expected CPU utilization chart. However, what if for some reason, a few of the sites have sudden spikes in customer traffic? In other words, what is the real spare capacity that is necessary on a shared platform? This determination requires much technical expertise and extensive experience to account for the combined needs of all the sites that depend on the same hardware.

When the same hardware is shared for multiple sites, there is the danger of failure on one site affecting all the other sites. For example, let’s say an undiscovered software defect in unique circumstances not only kills the application for that site, but somehow also crashes the entire application server or even the operating system that the site is on. This would affect all the sites in which applications are deployed on that machine.

Even with a single site, you need to try to avoid such failures, and normally the site’s computing environment includes multiple servers that replicate each other’s functions. If one server goes down, the others can pick up the load.

The same concept applies when multiple sites share the same set of machines: if one machine goes down, the others can handle the customer requests in its place. However, from the overall cost point of view, there is an advantage to the case where multiple sites use the same hardware. Building a resilient infrastructure where no single server can take the site down is expensive. When the applications of many sites share the same hardware, the company needs to invest only in building one such infrastructure, which saves costs.

The same cost advantage applies when dealing with a denial-of-service attack, in which malicious hackers from the Internet bombard the site with millions of access requests, simply to try to load the network or the hardware beyond its capacity.

During such an attack, the site could become too slow, or even completely inaccessible to legitimate customers. The danger is that if the same hardware and the same network resources are used by other sites, even though the attack focused on only one site, it inadvertently puts all the sites out of business!

Creating network protection against such an attack is quite expensive, and when a secure environment is created, it is much more cost-effective to employ the same infrastructure for many sites. Nevertheless, you must realize that in the end, sharing of hardware does introduce dependencies among the sites. There will always be extreme and rare scenarios where the failure of one site affects other sites. Sites that are built upon independent hardware do provide the maximum resilience, provided that the company can invest in the necessary infrastructure, with duplicated services, for each site.

However, for the vast majority of businesses, the cost savings of shared infrastructure, such as reduced hardware and software costs, allow the company to create a single resilient infrastructure, which in the end works sufficiently well for the company’s needs.

The problem of maintenance arises even if each site is deployed in its own independent environment with no sharing of software or hardware with other sites. The issue is that when it is necessary to upgrade the hardware, it is common that the site would have to be taken down for the duration of the upgrade, causing a temporary outage of the site.

One way to avoid this temporary outage is to upgrade the hardware in stages. For example, if there are four machines that all run the same application, it might be possible to take down the machines one by one so that at any given time, there are still three machines available to run the site. This procedure, however, is probably more expensive and makes the entire upgrade process last longer.
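
A staged upgrade of this kind can be sketched as a simple plan that checks, before each step, whether the machines left running can still carry the expected load. The machine names, the per-machine capacity, and the load figure are hypothetical, and the sketch assumes identical machines.

```python
def rolling_upgrade_plan(machines, capacity_each, expected_load, batch_size=1):
    """Plan an upgrade that takes `batch_size` machines offline at a time.

    Returns a list of (batch, remaining_capacity) steps. Raises ValueError
    if any step would leave too little capacity for `expected_load`.
    """
    plan = []
    for start in range(0, len(machines), batch_size):
        batch = machines[start:start + batch_size]
        remaining = (len(machines) - len(batch)) * capacity_each
        if remaining < expected_load:
            raise ValueError(
                f"taking {batch} offline leaves capacity {remaining}, "
                f"below the expected load {expected_load}"
            )
        plan.append((batch, remaining))
    return plan


# Four identical machines, upgraded one by one while three stay online.
machines = ["m1", "m2", "m3", "m4"]
for batch, remaining in rolling_upgrade_plan(machines, 100, 250):
    print(batch, remaining)
```

Upgrading two machines at a time would halve the number of steps, but with these illustrative numbers it would leave only 200 units of capacity against a load of 250, so the function rejects that plan.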

If the upgrade is not for the improvement of each machine, but the replacement of all of them, a temporary outage might be unavoidable. The least disruptive procedure to follow is to set up the new servers in the production environment, and when everything is ready, to do a quick switch-over from the old servers to the new servers. This switch-over process might not be instantaneous, however, and a short outage of the site is often required; for example, to allow final testing that ensures that the new installation works before making it available to customers.

A similar issue applies to software maintenance, such as installing fixes, or installing a new version of any of the software, such as the operating system, application server, or application. This upgrade process must take place during a scheduled maintenance window when the site is temporarily taken down.

When the same hardware and application server are used by multiple sites, the issue of maintenance is complicated by the conflicting interests of the different sites. During the maintenance window, all the sites will be down, and it might be more difficult to find a time window that is acceptable to all sites.

For example, a maintenance window is frequently scheduled on weekends in the middle of the night, when the usage of the site is lowest. However, if the common installation is used to operate sites around the world, there are always some sites that serve regions where the chosen window falls at the peak time of the day!

There are three common ways to deal with this problem of scheduling a maintenance window that affects all sites. The first is to avoid the need for such a window by making sure that at least a portion of the production environment is always available to handle customer traffic. As we described, this makes the upgrade process more complicated and takes more time, but it does ensure the best availability of the sites.

The second way is to create several server farms, each one located in a large geographical area. For example, one large manufacturer created three server farms: one for America, one for Europe, and one for Asia. This approach increases the costs of the sites, but it does have advantages. The hardware in each server farm is used by customers in the same geographical area with a limited variation in their local time. Hence, it is possible to find a time window when it is night in all the countries that make use of these servers, and scheduling an acceptable maintenance window becomes much easier.
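
The effect of grouping sites by geography can be sketched by intersecting each site's quiet hours, expressed in UTC. The offsets and the 1 a.m. to 5 a.m. quiet period are assumptions chosen for illustration; the sketch uses whole-hour offsets and ignores daylight saving time and the day of the week.

```python
def common_quiet_hours_utc(utc_offsets, quiet_start=1, quiet_end=5):
    """Return the UTC hours that fall inside every site's local quiet period.

    `utc_offsets` holds each site's whole-hour offset from UTC. The quiet
    period is the local hours quiet_start <= h < quiet_end.
    """
    common = set(range(24))
    for offset in utc_offsets:
        # Local hour h corresponds to UTC hour (h - offset) mod 24.
        quiet_in_utc = {(h - offset) % 24 for h in range(quiet_start, quiet_end)}
        common &= quiet_in_utc
    return sorted(common)


# One regional server farm: offsets vary by only two hours.
print(common_quiet_hours_utc([0, 1, 2]))    # [1, 2]

# One worldwide installation: no hour is quiet everywhere.
print(common_quiet_hours_utc([-5, 1, 9]))   # []
```

With a narrow spread of offsets there is a two-hour window that is night for every site, whereas the worldwide set has no common quiet hour at all, which is the scheduling problem that regional server farms avoid.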

Another advantage of having multiple server installations is improved resilience. One of the installations can go entirely down for a reason beyond anyone’s control, such as a natural disaster or a major power outage in that area. In this case, the other server farms can keep the affected sites up and available to customers while service is restored in the affected area.

The increased resilience of multiple server farms also allows for smoother upgrade processes. The server farms are upgraded one by one, while the traffic to the sites that they normally serve is handled by the other server farms that are still available online.

If multiple server farms or machine-by-machine upgrades are not an option, the company needs to deal with the issue in an administrative manner. Essentially, either by negotiation or by imposition, a single common maintenance window is imposed on all the sites at the same time. With a compromise, this window would be found at a time that might be inconvenient for some of the sites but at least does not occur during the peak usage and does not violate the business-critical requirements. The business then has to deal with the ramifications of this decision by making sure that all site outages are planned for and are advertised well in advance.

We have seen that for multiple sites to share the same platform, it is important to plan the layout of the hardware and software within the overall IT infrastructure. This planning must take into account such aspects as security and performance requirements, and the administration needs of the platform and of the sites.

The first step to creating a shared platform is to standardize the company’s computing environment, making sure that all sites use the same types of hardware, same operating systems, same application servers, and the same databases.

The next step is to deploy the site applications within the same operating system, and even within the same application server. With a truly shared platform, however, all the sites use the same set of applications, and also the sites share the same data.

For this arrangement to work, the installation and maintenance must be carefully planned. You need to ensure that the applications of all the sites can coexist within the same environment. Make sure that the hardware is sized correctly; that is, that sufficient processing and database resources are available to handle the load of all the sites. Another important consideration is to avoid a single point of failure in which a problem on one site causes outage in other sites. Finally, the operation of multiple sites on the same hardware and software infrastructure requires the careful planning of maintenance and upgrades so that the maintenance procedures and schedule are acceptable to the requirements and the business-critical needs of all the affected sites.

To summarize the discussion, Table 14.1 lists the typical opportunities for sharing hardware or software among sites. Table 14.2 shows the typical methods that can be employed to share the infrastructure. Table 14.3 shows the typical challenges that must be overcome when multiple sites share the same platform.