Chapter 15

gRPC, Protobuf, and gNMI

In the previous two chapters, you learned about two network management protocols, NETCONF and RESTCONF. These two protocols form the foundation of network programmability and are the two most ubiquitous protocols in today’s networks, in enterprises, service providers, and data centers alike. However, the development of network technologies, including network automation protocols, never stands still; as new challenges arise, new solutions need to be developed to meet them. This chapter introduces a newer protocol that has been developed to solve some of these emerging challenges: gRPC. This protocol relies on a different data serialization format named Protocol buffers (Protobuf for short). A new transport also needs a specification, that is, a set of calls and messages, that takes full advantage of it. This role is taken by gNMI, which stands for gRPC Network Management Interface.

Requirements for Efficient Transport

One of the challenges that originated in the data center world and then later became applicable to enterprise and service provider networks as well involves multiple requirements in different dimensions that may seem, initially, contradictory:

On one hand, there is ever-growing utilization of interfaces in data centers and service provider networks, where typical traffic rates today are on the order of hundreds of gigabits per second. Hence, there is an ever-growing need to reduce any overhead traffic, including management plane traffic, as much as possible.

On the other hand, there is a need to collect as much operational data as possible from the network elements, including counters, routing protocols states, and the contents of MPLS FIB and MAC address tables. The need extends to analyzing this data in real time—or as close to real time as possible. In other words, there is a need for this telemetry to be continuously streamed from network devices.

Streaming telemetry brings both a benefit and an additional complexity. It avoids the unnecessary load on network element resources, as well as the unnecessary traffic on the transport media, caused by the request/response operations that are required if streaming telemetry is not used. Originally, the concept of subscriptions was covered in RFC 5277, which addresses NETCONF event notifications. However, early production implementations of NETCONF did not implement subscriptions. Later, Cisco extended the capability of NETCONF subscriptions to some Cisco IOS XE platforms. (Refer to RFC 8640 for further details.) However, NETCONF subscriptions involve significant overhead on the wire due to XML encoding and therefore are not very efficient.

These requirements collectively drove research for a solution that would both fulfill the industry requirement and mitigate the shortcomings of the solutions implemented then. As you have already learned, network automation and programmability employs similar technologies and protocols to those used for application development and interaction. For example, NETCONF was inspired by SOAP/XML and is based on XML, and RESTCONF was inspired by and based on REST. To network programmability researchers, this indicated that a solution probably existed in the applications domain and just needed to be ported to the network programmability domain. And as expected, such a solution was found: gRPC.

History and Principles of gRPC

Some of the most complex applications shaping the Internet today, such as search engines, social media networks, and cloud infrastructures, are highly distributed by nature. This distribution is a prerequisite to provide the ability to scale and provide a sufficient level of resilience. Modern distributed applications are built using a microservices architecture (see https://microservices.io for more details). The microservices architecture basically refers to the splitting of a complex multicomponent application into multiple smaller applications, with each application (called a microservice) performing its own small subset of functions. This approach paves the way to simplify each application and remove the dependencies and spaghetti code often seen in monolithic applications, where different parts of the applications are bundled very tightly. On the other hand, in order for an overall application to work, the microservices communicate with each other using remote-procedure calls (RPCs) over a network, as each microservice has an associated IP address and TCP or UDP port. NETCONF is an RPC-based protocol (refer to Chapter 14, “NETCONF and RESTCONF”). So is gRPC. gRPC is a recursive acronym that stands for gRPC remote-procedure call. It is also possible to find other interpretations of the g part of the name gRPC, such as general-purpose or Google. Both of those are possible, as Google is the developer and core contributor/maintainer of gRPC. Currently, gRPC is a project within the Cloud Native Computing Foundation (CNCF).

Google made gRPC publicly available in 2015 but had been using the ideas of quick and highly performant RPC to manage the microservices in its data centers since the early 2000s. The name of the protocol back then was Stubby, and it was tightly bundled with Google’s service architecture, so it could neither be generalized nor reused by others. (For more details, see https://grpc.io/blog/principles/.) At the same time, in the public space, multiple developments, such as HTTP/2 and SPDY, introduced latency-reducing enhancements and optimization of handling of the requests competing for the same resources. As a result, Google reworked its Stubby protocol into gRPC, leveraging HTTP/2 and its features geared toward enhanced performance (such as binary framing, header compression, and multiplexing; see Chapter 8, “Advanced HTTP,” for details) and created an open-source project that was eventually adopted by CNCF and that can be used by a wider audience.

The following concepts form the basis of gRPC:

Performance and speed: One of the core goals of Stubby and, hence, gRPC is to provide fast connectivity between services, and the overall system architecture was developed to implement this concept. One example is the implementation of static paths toward resources rather than dynamic paths such as those implemented by RESTCONF. With a dynamic path, it is possible to include multiple optional queries in the URI, and they need to be parsed before call processing. In contrast, gRPC implements a static path, and all the queries must be part of the message body.

Microservices oriented: gRPC was created to interconnect microservices that may be highly distributed across a data center or even between different data centers. It takes into account the networking components of an application, such as delays and losses.

Platform agnostic: gRPC can be used on any platform or operating system, even those that have limited CPU and memory, such as mobile devices and IoT sensors.

Open source: Open-source software is booming now, and for a system to be popular and widely adopted, it is important that its core functionality be open source and free to use. gRPC is open source.

Language independent: gRPC was developed to be available for use in all the programming languages that have wide user bases, such as Python, Go, C/C++, Java, and Ruby. In addition, cross-platform implementation is possible, where the client and server sides are implemented in different languages (for example, a Python client and C++ servers).

General purpose: Although it was built with a focus on microservices and Google architecture, gRPC is generic enough to be used as a communication system between different applications and in different scenarios (for example, the gNMI specification for network management or streaming).

Streaming: gRPC supports various communication patterns, such as basic request/response operations, unidirectional streaming, and bidirectional streaming. It supports both synchronous and asynchronous operations.

Payload agnostic: Originally, gRPC relied on Protocol buffers (discussed in detail later in this chapter) for both data serialization and encoding. Today, it supports any other data encoding, such as JSON or XML. However, Protocol buffers have very dense data encoding and may provide better efficiency compared to other data encodings.

Metadata support: A lot of applications, especially those communicating over the Internet (which is not a secure environment), require authentication. Application authentication is typically implemented using metadata, which is also the case with gRPC. Generally, gRPC provides the facility to transmit any metadata, which is usually a very useful feature.

Flow control: Network connectivity bandwidth is often unequal inside and outside a data center. For example, the servers inside a data center might be connected with 10 Gbps interfaces, whereas customers connected to the data center from the outside may be connected to low-speed interfaces. gRPC has a built-in mechanism to be able to handle these differences to allow stable connectivity and service operation.
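The static-path idea from the "Performance and speed" item can be illustrated with a short sketch. gRPC over HTTP/2 addresses each call with a fixed path of the form /service/method (the service name is prefixed with the package name if the proto file declares one), whereas a RESTCONF URI may carry optional query parameters that must be parsed before the call is processed. The host name and RESTCONF URI below are made-up examples, the helper function names are hypothetical, and the RouteData service with its CollectRoutes call is borrowed from Example 15-3 later in this chapter.

```python
from urllib.parse import urlsplit, parse_qs


def grpc_path(service: str, method: str, package: str = '') -> str:
    """Return the fixed HTTP/2 path that identifies one gRPC call."""
    full_service = f'{package}.{service}' if package else service
    return f'/{full_service}/{method}'


def restconf_target(uri: str):
    """Split a RESTCONF URI into its resource path and its optional query parameters."""
    parts = urlsplit(uri)
    return parts.path, parse_qs(parts.query)


# Hypothetical RESTCONF request: the query string changes what is returned,
# so the server has to parse it before it can process the call.
path, query = restconf_target(
    'https://router1.example.net/restconf/data/ietf-interfaces:interfaces'
    '?depth=2&content=config'
)
print(path)
print(query)

# The gRPC path never changes; every parameter travels in the message body.
print(grpc_path('RouteData', 'CollectRoutes'))
```

The point of the comparison is that the gRPC path is a constant string per call, so the server can dispatch on it directly and leave all variable input to the message decoder.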

gRPC as a Transport

As you have already seen in this chapter, gRPC is very flexible. gRPC has the following characteristics:

No fixed port: gRPC works over TCP; however, gRPC doesn’t have any predefined port. The port is defined solely by the application or vendor. For example, the TCP port that is used for management of network elements via gRPC on Cisco is different from the port used by Arista, which is different from the port used by Nokia. On the one hand, such flexibility provides an advantage in terms of security (as there are no fixed attack vectors). On the other hand, it makes managing a multivendor network more complicated.

No predefined calls and messages: gRPC is a fast RPC framework. Unlike NETCONF, it doesn’t have any predefined structure for its messages. Each application uses its own set of calls and messages, called a specification. For example, gNMI is a gRPC specification, as it defines its own set of RPC calls and associated messages.

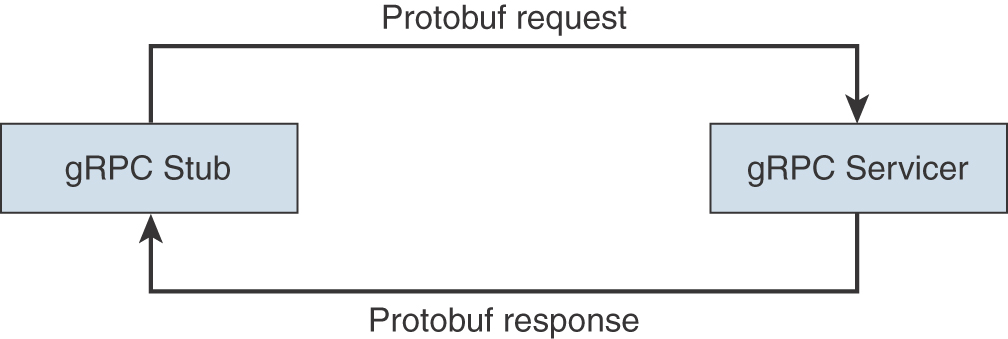

In a nutshell, gRPC gives you great flexibility to deploy any service you need, with very few limitations. Figure 15-1 provides a high-level overview of the communications flow with gRPC.

Figure 15-1 The General gRPC Communications Flow

In gRPC terminology, servicer refers to the server side of the application. Basically, it is the side that listens to customer requests, processes them, and provides responses. The gRPC client side is called stub, and it is the side that typically originates the requests and receives the responses from the servicer. The communication between the stub and the servicer is called a channel. The channel is specified by the target host address (for example, domain name, IPv4 or IPv6 addresses) and TCP port, and it is established for the duration of the communication and is typically short-lived; however, in some circumstances, it lives for a longer time.

Note

The term servicer is a Python-specific term and refers to the interface generated from the service definition. More specifically, a servicer Python class is generated for each service and acts as the superclass of a service implementation. A function is generated in the servicer class for each method in the service. This will make more sense as you progress through the chapter. The majority of gRPC documentation refers to the two ends of the gRPC communication as stub and server or client and server. To avoid confusion and to keep things simple, the term server is replaced by servicer throughout the chapter.

In terms of communication patterns, gRPC supports the following scenarios:

Unary RPC: This is one of the simplest communication methods between the stub and the servicer. It involves a single request from the stub to the servicer and a single response back from the servicer to the stub. It is the same as any NETCONF or RESTCONF request/response operation.

Server-side streaming RPC: This scenario starts as a unary RPC with the stub’s request; however, in the response, the servicer streams a number of messages (sometimes quite a large number of them).

Client-side streaming RPC: In this scenario, the stub streams a number of messages to the servicer, and the servicer responds back with a single message.

Bidirectional RPC: Both the stub and the servicer can stream a number of messages to each other. It is important for the streams to be independent of each other so that they can be implemented in an asynchronous manner. The streams may be confirmed by some sort of acknowledgment message from each side.

In addition, gRPC supports the transmission of metadata with each message, much as NETCONF and RESTCONF do. One of the popular use cases for metadata is authentication of the messages; this is a mandatory part of the gNMI specification, which is based on the gRPC transport.
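As a rough illustration of what such metadata looks like in Python: the gRPC library accepts call metadata as a sequence of (key, value) tuples, and keys are restricted to lowercase letters, digits, and the characters "-", "_", and ".". The sketch below only builds and validates such a list; the helper name build_metadata, the username and password keys, and the credentials themselves are assumptions made for illustration.

```python
import re

# Allowed characters for a gRPC metadata key.
_KEY_PATTERN = re.compile(r'^[0-9a-z_.-]+$')


def build_metadata(pairs: dict):
    """Turn a dictionary into the list of (key, value) tuples used as call metadata."""
    metadata = []
    for key, value in pairs.items():
        if not _KEY_PATTERN.match(key):
            raise ValueError(f'invalid metadata key: {key!r}')
        metadata.append((key, str(value)))
    return metadata


# Hypothetical credentials, for illustration only.
metadata = build_metadata({'username': 'admin', 'password': 'example-password'})
print(metadata)
```

With a real stub, a list like this would typically be handed over through the metadata keyword argument of the call, for example stub.CollectRoutes(request_message, metadata=metadata).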

gRPC is a programming language–neutral technology, which means it can be implemented in virtually any language. It is supported in C++, Go, Ruby, Python, Java, and many other languages. Because gRPC is language independent, the stub and the servicer can be developed and implemented in different languages and interact seamlessly with each other, as long as they follow the same specification. In this book, we focus on Python, and later in this chapter you will see Python scripts to manage network elements using gRPC from the stub’s perspective.

One of the key aspects of any protocol or framework used to manage network elements is the set of calls and messages of that protocol. gRPC is very flexible, and it allows you to define your own set of calls and messages. Obviously, to make it work for management of network elements, the messages and RPC calls should be implemented in the network elements’ software, which requires access to source code. Later in this chapter, you will learn about gNMI, which is the specification (that is, the set of the calls and messages) used over gRPC transport. But before that, you need to understand Protocol buffers, which are discussed next.

The Protocol Buffers Data Format

Google developed Protocol buffers (or Protobuf for short) to serve as the main language to define both the gRPC message format and RPC calls. Protocol buffers are one of the core technologies developed and used by Google to serialize data for communication between the elements of highly loaded systems. The reason they are so efficient has to do with the way the data is encoded for transmission: Only key indexes, data types, and values are converted to binary format and sent over the wire. Example 15-1 shows a sample Protobuf message.

Example 15-1 Simple Protobuf Message

syntax = "proto3";

message DeviceRoutes {
  int32 id = 1;
  string hostname = 2;
  int64 routes_number = 3;
}

Example 15-1 consists of two parts: syntax and message. The syntax section defines which version of Protocol buffers is to be used. The most recent and widely used version is Version 3; hence, the syntax variable is set to proto3. The second section is the message section, which is effectively a schema, like a JSON schema or a YANG file, that defines the following:

Variables: The schema defines the names of the variables that may exist in the schema. All variables are optional and may or may not exist in the actual message. In Example 15-1, id, hostname, and routes_number are the names of the variables.

Data types: The schema associates each variable with a certain data type. In Example 15-1, int32, int64, and string are the data types. These data types are built-in types (see https://developers.google.com/protocol-buffers/docs/proto3#json); however, if required, you can create your own data types. For instance, you can create an enum type with some options or even use another message defined in the same file as a data type.

Indexes: The schema identifies an index associated with each variable name, as the names aren’t included in the Protobuf message sent over the wire. It is the indexes that are included. This is very different from XML and JSON data formats, where the actual key names are transferred. The indexes are both an advantage and a disadvantage of the Protobuf: On the one hand, they allow you to save a lot of bandwidth on the wire, especially in bandwidth-hungry applications such as streaming telemetry. On the other hand, the sender and receiver must have the same schema, or it will be impossible to decode the data out of the binary stream. Indexes must be unique within the level of the message—such as 1, 2, and 3 in Example 15-1. In each nested level, though, they can start with 1 again.
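To make the role of the indexes more tangible, the following sketch encodes the DeviceRoutes message from Example 15-1 by hand. It follows the Protobuf wire format for the two field kinds involved: each field starts with a key that combines the index with a wire type, integers are written as base-128 varints, and strings are written as a length followed by the UTF-8 bytes. This is a simplified illustration, not the protobuf library: it handles only non-negative integers and strings, it does not omit default values the way proto3 does, and the field values are invented.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint (7 bits per byte)."""
    if value < 0:
        raise ValueError('this sketch handles non-negative integers only')
    out = bytearray()
    while True:
        low_bits = value & 0x7F
        value >>= 7
        if value:
            out.append(low_bits | 0x80)  # set the "more bytes follow" bit
        else:
            out.append(low_bits)
            return bytes(out)


def encode_key(index: int, wire_type: int) -> bytes:
    """The key on the wire is the field index combined with a wire type."""
    return encode_varint((index << 3) | wire_type)


def encode_int(index: int, value: int) -> bytes:
    """Wire type 0: the value is a varint."""
    return encode_key(index, 0) + encode_varint(value)


def encode_string(index: int, text: str) -> bytes:
    """Wire type 2: a length prefix followed by the raw bytes."""
    data = text.encode('utf-8')
    return encode_key(index, 2) + encode_varint(len(data)) + data


# DeviceRoutes from Example 15-1: id = 1, hostname = 2, routes_number = 3
wire = encode_int(1, 1) + encode_string(2, 'router1') + encode_int(3, 10)
as_json = json.dumps({'id': 1, 'hostname': 'router1', 'routes_number': 10}).encode('utf-8')

print(wire.hex())
print(f'Protobuf: {len(wire)} bytes, JSON: {len(as_json)} bytes')
```

The encoded message is 13 bytes long, and none of the names id, hostname, or routes_number appear in it. A receiver that does not have the schema sees only the indexes 1, 2, and 3, which is exactly the trade-off described above.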

With all these details in mind, take a look at the more complicated Protobuf messages in Example 15-2.

Example 15-2 Complex Protobuf Schema with Multiple Messages and User-Defined Data Types

syntax = "proto3";

enum AddressFamily {
  IPV4 = 0;
  IPV6 = 1;
  VPNV4 = 2;
  VPNV6 = 3;
  L2VPN = 4;
}

enum SubAddressFamily {
  UNICAST = 0;
  MULTICAST = 1;
  EVPN = 2;
}

message Routes {
  AddressFamily afi = 1;
  SubAddressFamily safi = 2;
  message Route {
    string route = 1;
    string next_hop = 2;
  }
  repeated Route route = 3;
}

message DeviceRoutes {
  int32 id = 1;
  string hostname = 2;
  int64 routes_number = 3;
  Routes routes = 4;
}

Although Example 15-2 is much longer than Example 15-1, it strictly follows the guidelines mentioned previously. You can see the named user-defined data types AddressFamily and SubAddressFamily created using the enum (enumeration) built-in data type. Each of the new data types has some allowed values, each associated with an index; the index rules stated previously are applied. These data types in turn are used in the new message Routes, where they are associated with the variables afi and safi and the corresponding indexes 1 and 2. Inside the message Routes, a nested message Route is created, and it must be called in the parent message in order to be used. It is called with the index 3 because 1 and 2 are already used by afi and safi. The message name Route is put in the data type position and is prepended by the keyword repeated, which means the variable route can be defined several times; this is, effectively, the Protobuf’s implementation of lists or arrays.

It is also possible to call one message from another message that is not nested. Hence, you can see in the original DeviceRoutes message the new variable routes, which has data type Routes (after the message Routes {}) and index 4.
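If you find it easier to reason about the schema in Python terms, the structure of Example 15-2 can be mirrored with standard-library enumerations and data classes. This is only a mental model built on the assumption that each message maps to a class and each repeated field maps to a list; it is not the code that the Protobuf tooling generates. The default values mimic the proto3 behavior in which an unset field reads as zero, an empty string, or the first enum value.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List


class AddressFamily(IntEnum):
    IPV4 = 0
    IPV6 = 1
    VPNV4 = 2
    VPNV6 = 3
    L2VPN = 4


class SubAddressFamily(IntEnum):
    UNICAST = 0
    MULTICAST = 1
    EVPN = 2


@dataclass
class Route:
    route: str = ''
    next_hop: str = ''


@dataclass
class Routes:
    afi: AddressFamily = AddressFamily.IPV4
    safi: SubAddressFamily = SubAddressFamily.UNICAST
    # "repeated Route route = 3" behaves like a list of Route entries
    route: List[Route] = field(default_factory=list)


@dataclass
class DeviceRoutes:
    id: int = 0
    hostname: str = ''
    routes_number: int = 0
    # A message used as a data type becomes a nested object
    routes: Routes = field(default_factory=Routes)


# Sample values, invented for illustration.
msg = DeviceRoutes(id=1, hostname='router1', routes_number=2)
msg.routes.route.append(Route('192.168.1.0/24', '10.0.0.1'))
msg.routes.route.append(Route('192.168.2.0/24', '10.0.0.1'))
print(msg)
```

Nested variables are reached with the dot notation (msg.routes.route), which is also how the generated Python classes are used later in this chapter.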

As mentioned earlier in this chapter, Protocol buffers are used not only to define the message structure within gRPC but also to identify the structure of the RPC operations: which request message is associated with which operation type and what response message is sent back, as demonstrated in Example 15-3.

Example 15-3 Sample gRPC Specification in Protobuf

syntax = "proto3";

enum AddressFamily {
  IPV4 = 0;
  IPV6 = 1;
  VPNV4 = 2;
  VPNV6 = 3;
  L2VPN = 4;
}

enum SubAddressFamily {
  UNICAST = 0;
  MULTICAST = 1;
  EVPN = 2;
}

message Routes {
  AddressFamily afi = 1;
  SubAddressFamily safi = 2;
  message Route {
    string route = 1;
    string next_hop = 2;
  }
  repeated Route route = 3;
}

message DeviceRoutes {
  int32 id = 1;
  string hostname = 2;
  int64 routes_number = 3;
  Routes routes = 4;
}

message RouteRequest {
  string hostname = 1;
  AddressFamily afi = 2;
  SubAddressFamily safi = 3;
}

service RouteData {
  rpc CollectRoutes(RouteRequest) returns (DeviceRoutes) {}
}

Besides the additional message RouteRequest, you can see something completely new in Example 15-3: the service part. The service part is an abstract definition that ultimately contains the set of rpc operations. There should be at least one rpc operation per service. In Example 15-3, the rpc operation is called CollectRoutes, and it states that the client side, which is called stub in gRPC, should send the RouteRequest message, whereas the servicer should respond with the DeviceRoutes message. This is an example of the definition of unary RPC; however, there are three more types, as outlined earlier, and they can be defined in the following manner:

Server-side streaming RPC: rpc CollectRoutes(RouteRequest) returns (stream DeviceRoutes) {}

Client-side streaming RPC: rpc CollectRoutes(stream RouteRequest) returns (DeviceRoutes) {}

Bidirectional RPC: rpc CollectRoutes(stream RouteRequest) returns (stream DeviceRoutes) {}
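The four definitions differ only in where the keyword stream appears, and that difference maps naturally onto plain Python functions and generators: a streamed request becomes an iterator that the handler consumes, and a streamed response becomes a generator that yields messages. The sketch below shows these four shapes without any network or gRPC library involved. The RouteRequest and DeviceRoutes data classes are simplified stand-ins for the real messages, and the function names and returned values are invented for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class RouteRequest:      # simplified stand-in for the Protobuf message
    hostname: str


@dataclass
class DeviceRoutes:      # simplified stand-in for the Protobuf message
    hostname: str
    routes_number: int


def unary(request: RouteRequest) -> DeviceRoutes:
    """One request in, one response out."""
    return DeviceRoutes(request.hostname, 10)


def server_streaming(request: RouteRequest) -> Iterator[DeviceRoutes]:
    """One request in, a stream of responses out."""
    for count in (10, 11, 12):
        yield DeviceRoutes(request.hostname, count)


def client_streaming(requests: Iterable[RouteRequest]) -> DeviceRoutes:
    """A stream of requests in, one summarizing response out."""
    hostnames = [request.hostname for request in requests]
    return DeviceRoutes(','.join(hostnames), 10 * len(hostnames))


def bidirectional(requests: Iterable[RouteRequest]) -> Iterator[DeviceRoutes]:
    """A stream of requests in, a stream of responses out."""
    for request in requests:
        yield DeviceRoutes(request.hostname, 10)


two_requests = [RouteRequest('router1'), RouteRequest('router2')]
print(unary(RouteRequest('router1')))
print(list(server_streaming(RouteRequest('router1'))))
print(client_streaming(iter(two_requests)))
print(list(bidirectional(iter(two_requests))))
```

For simplicity, the bidirectional function answers each request with exactly one response; in a real bidirectional RPC the two streams are independent, so either side may send any number of messages at any time.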

Altogether, the set of messages and services is called the specification and is stored in a proto file. This file is named after its format and has the extension .proto, as shown in Example 15-4.

Example 15-4 Sample Protocol Buffers Message

$ cat npaf.proto
syntax = "proto3";

enum AddressFamily {
  IPV4 = 0;
  IPV6 = 1;
  VPNV4 = 2;
  VPNV6 = 3;
  L2VPN = 4;
}

// Further output is truncated for brevity

In Example 15-3, the specification is developed with the idea of route distribution between the stub and servicer. Despite the fact that the specification is application dependent, there should be some standard specifications to allow interoperability between the devices in the real world. One of the most popular specifications in the network automation world is gNMI, which is widely used in data centers. You will learn about gNMI at the end of this chapter.

Working with gRPC and Protobuf in Python

Like gRPC, Protocol buffers are programming language independent. This means that Protocol buffers can be used from any popular programming language, such as C++, Go, Java, or Python. On the other hand, each programming language has its own consumption model for converting proto files into a language-specific structure. In Python, for example, a single proto file is converted into two files with metaclasses. Two files are generated because the messages and the service (RPC) part are generated separately.

This conversion is done using a tool developed by Google. This tool, which is called protoc (Protocol Buffers Compiler), can create the proper output of the proto file in any supported programming language, including Python. There are multiple ways to get protoc, but in the case of Python, the most sensible way is to install the Python package with the gRPC tools, as shown in Example 15-5.

Note

All the examples in the rest of the chapter use Python Version 3.7, and backward compatibility with earlier versions isn’t guaranteed.

Example 15-5 Installing protoc for Python

$ pip install grpcio grpcio_tools
Collecting grpcio
  Using cached grpcio-1.30.0-cp37-cp37m-macosx_10_9_x86_64.whl (2.8 MB)
Collecting grpcio_tools
  Using cached grpcio_tools-1.30.0-cp37-cp37m-macosx_10_9_x86_64.whl (2.0 MB)
! Some output is truncated for brevity
Successfully installed grpcio-1.30.0 grpcio-tools-1.30.0 protobuf-3.12.2 six-1.15.0

As part of the dependency resolution, grpcio_tools also installs the protobuf package, which is used by protoc. At this point, you can convert the proto file into Python metaclasses by using the protoc module from grpc_tools, as shown in Example 15-6.

Example 15-6 Converting the proto File into Python Metaclasses

$ ls
npaf.proto requirements.txt venv
$ python3.7 -m grpc_tools.protoc -I=. --python_out=. --grpc_python_out=. npaf.proto
$ ls
npaf.proto npaf_pb2.py npaf_pb2_grpc.py requirements.txt

Although the package installed with pip is named grpcio_tools, the module you run in Python is called grpc_tools. (This different naming can be confusing, but both names refer to the same software.) You need to provide a number of arguments to this module:

-I: Specifies the source folder that contains the proto file.

--python_out: Specifies the path where the file containing the Python classes for Protobuf messages (ending with _pb2.py) is stored.

--grpc_python_out: Specifies the directory where the file containing the Python classes for the gRPC service (ending with _pb2_grpc.py) is stored.
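If you prefer to drive this conversion from a Python script, for instance as part of a build step, the same command line can be assembled programmatically. The following sketch only builds the argument list and predicts the names of the generated files based on the naming shown in Example 15-6; the helper names protoc_command and generated_files are hypothetical, and nothing is executed until you pass the list to a process runner.

```python
import sys
from pathlib import Path


def protoc_command(proto_file: str, out_dir: str = '.'):
    """Build the argument list that runs grpc_tools.protoc with the current interpreter."""
    proto = Path(proto_file)
    return [
        sys.executable, '-m', 'grpc_tools.protoc',
        f'-I={proto.parent}',             # folder that contains the proto file
        f'--python_out={out_dir}',        # where the *_pb2.py file goes
        f'--grpc_python_out={out_dir}',   # where the *_pb2_grpc.py file goes
        proto.name,
    ]


def generated_files(proto_file: str):
    """Return the two file names expected from one proto file."""
    stem = Path(proto_file).stem
    return [f'{stem}_pb2.py', f'{stem}_pb2_grpc.py']


command = protoc_command('npaf.proto')
print(' '.join(command))
print(generated_files('npaf.proto'))
```

Running subprocess.run(command, check=True) would then perform the conversion, provided that the grpcio_tools package is installed in the same Python environment.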

As mentioned earlier, each of the resulting files has its own set of associated information. The file with messages is the most complicated, as it contains the conversion of the Protobuf message format in the Python data structure using various descriptors, as demonstrated in Example 15-7.

Example 15-7 The Auto-generated Python File with Protobuf Messages

$ cat npaf_pb2.py | grep '= _descriptor'
DESCRIPTOR = _descriptor.FileDescriptor(
_ADDRESSFAMILY = _descriptor.EnumDescriptor(
_SUBADDRESSFAMILY = _descriptor.EnumDescriptor(
_ROUTES_ROUTE = _descriptor.Descriptor(
_ROUTES = _descriptor.Descriptor(
_DEVICEROUTES = _descriptor.Descriptor(
_ROUTEREQUEST = _descriptor.Descriptor(
_ROUTEDATA = _descriptor.ServiceDescriptor(

As you can see in Example 15-7, the names of the variables are in line with the names of the messages shown in Example 15-3. The file in Example 15-7 is generated automatically and should not be modified manually. The second auto-generated file contains the methods and classes used on the stub and the servicer sides, as you can see in Example 15-8.

Example 15-8 The Auto-generated Python File with Protobuf Services

$ cat npaf_pb2_grpc.py | grep -E 'class|def'
class RouteDataStub(object):
    def __init__(self, channel):
class RouteDataServicer(object):
    def CollectRoutes(self, request, context):
def add_RouteDataServicer_to_server(servicer, server):
class RouteData(object):
    def CollectRoutes(request,

The names of the classes are auto-generated from the name of the service—in this case, RouteData—and the keyword Stub or Servicer. As you can imagine, RouteDataStub is a class used on the client side, and RouteDataServicer is used on the server side, which is listening for the customer requests. RouteDataServicer also has the method CollectRoutes, which is further defined inside the server-side script to perform any activity necessary based on the logic.

The best way to explain the logic of gRPC in Python is to show the creation of a simple application that has both servicer and stub parts. By now you should be familiar with Python and should be able to read the code of the gRPC stub provided in Example 15-9.

Example 15-9 Sample gRPC Client

#!/usr/bin/env python

# Modules
import grpc
from npaf_pb2_grpc import RouteDataStub
from npaf_pb2 import RouteRequest, DeviceRoutes

# Variables
server_data = {
    'address': '127.0.0.1',
    'port': '51111'
}

# User-defined function
def build_message():
    # Fill in the RouteRequest message defined in the proto file
    msg = RouteRequest()
    msg.hostname = 'router1'
    msg.afi = 0
    msg.safi = 0
    return msg

# Body
if __name__ == '__main__':
    # Open an unencrypted channel to the servicer
    with grpc.insecure_channel(f'{server_data["address"]}:{server_data["port"]}') as channel:
        stub = RouteDataStub(channel)
        request_message = build_message()
        print(f'Sending the CollectRequest to {server_data["address"]}:{server_data["port"]}...')
        # Unary RPC: one request, one response
        response_message = stub.CollectRoutes(request_message)
        print(f'Received the response to CollectRequest from {server_data["address"]}:{server_data["port"]}:\n')
        print(response_message)

Example 15-9 shows a generic gRPC client that allows you to connect to a gRPC-speaking server. It requires the module called grpc (installed in Example 15-5 as grpcio) and the building blocks of your Protobuf service and messages:

RouteDataStub: This class is used to send the request CollectRoutes to the gRPC servicer and get the response.

RouteRequest and DeviceRoutes: These messages are used to structure and serialize/deserialize messages sent from the stub to the servicer and from the servicer to the stub.

In the Variables section of Example 15-9, you can see the simple Python dictionary server_data, which contains the IP address and TCP port of the gRPC server. Next is a user-defined function that constructs the data structure using the schema associated with a certain Protobuf message. The data structure, as you can see, is a number of properties of the class RouteRequest, which strictly follows the RouteRequest message in the original proto file: the names of the class’s properties are exactly the same as the names of the variables inside the proto file (shown in Example 15-3). This data is saved within the function in the object msg, which is returned as a result of the function’s execution.

In the main part of the application, you can see the object channel created using the function insecure_channel from the grpc module. insecure in this function name means that the channel is not protected by encryption (for example, an SSL certificate), and the information is transmitted and received in plaintext. Unlike RESTCONF, gRPC doesn’t have an option to skip certificate verification for self-signed certificates. Therefore, you need to think about the certificates’ distribution for self-signed certificates or PKI if you want to deploy secure_channel; this approach is recommended for production networks. The argument for insecure_channel is a string with the IP address and port of the gRPC servicer.
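For comparison with insecure_channel, the following sketch outlines how an encrypted channel might be opened. It assumes that the servicer presents a certificate signed by a CA whose certificate is available in a local PEM file; the file name, the host name, and the helper names build_target and open_secure_channel are hypothetical. The grpc module is imported inside the function so that the target-building helper can be tried even on a machine where the grpcio package is not installed.

```python
import ipaddress


def build_target(address: str, port: int) -> str:
    """Return a host:port target string; IPv6 literals are wrapped in brackets."""
    try:
        is_ipv6 = ipaddress.ip_address(address).version == 6
    except ValueError:
        is_ipv6 = False  # not an IP literal, so it is treated as a domain name
    host = f'[{address}]' if is_ipv6 else address
    return f'{host}:{port}'


def open_secure_channel(address: str, port: int, ca_file: str):
    """Open a TLS-protected channel that trusts the CA certificate stored in ca_file."""
    import grpc  # third-party package (grpcio), imported lazily on purpose

    with open(ca_file, 'rb') as handle:
        root_certificates = handle.read()
    credentials = grpc.ssl_channel_credentials(root_certificates=root_certificates)
    return grpc.secure_channel(build_target(address, port), credentials)


print(build_target('127.0.0.1', 51111))
print(build_target('::1', 51111))
print(build_target('router1.example.net', 51111))
```

The returned channel can be used in the same way as the insecure one, for example with open_secure_channel('router1.example.net', 51111, 'ca.pem') as channel, followed by the creation of the stub. The server side needs a matching secure port and its own certificate and key, which this sketch does not cover.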

Over the gRPC channel, you need to invoke the stub itself; how it is invoked is application specific. In this case, it is invoked using the class RouteDataStub, which is auto-generated from the service part of the Protobuf specification. From a Python perspective, the stub is also an object that has methods named after the RPC calls in the original proto file for this service. The input for the method is a message in the proper format for the proto file (RouteRequest in this case), and the output is the response message (DeviceRoutes in this case). As the response is provided, the result of the method execution is saved in the variable response_message, which is printed afterward.

Once you have familiarized yourself with Python’s gRPC client, you should do the same with the server side; however, to be fair, it is a little bit more complicated, as you can see in Example 15-10.

Example 15-10 Sample gRPC Server

#!/usr/bin/env python

# Modules
import grpc
from npaf_pb2 import RouteRequest, DeviceRoutes
import npaf_pb2_grpc
from concurrent import futures

# Variables
server_data = {
    'address': '127.0.0.1',
    'port': '51111'
}

# Classes
class RouteDataServicer(npaf_pb2_grpc.RouteDataServicer):
    def CollectRoutes(self, request, context):
        print(request)
        return self.__constructResponse(request.hostname, request.afi, request.safi)

    def __constructResponse(self, hostname, afi, safi):
        msg = DeviceRoutes()
        msg.hostname = hostname
        msg.id = 1
        msg.routes_number = 10
        msg.routes.afi = afi
        msg.routes.safi = safi
        msg.routes.route.add(route='192.168.1.0/24', next_hop='10.0.0.1')
        msg.routes.route.add(route='192.168.2.0/24', next_hop='10.0.0.1')
        msg.routes.route.add(route='192.168.3.0/24', next_hop='10.0.0.2')
        return msg

# Body
if __name__ == '__main__':
    server = grpc.server(thread_pool=futures.ThreadPoolExecutor(max_workers=10))
    npaf_pb2_grpc.add_RouteDataServicer_to_server(RouteDataServicer(), server)
    print(f'Starting gRPC service at {server_data["port"]}...')
    server.add_insecure_port(f'{server_data["address"]}:{server_data["port"]}')
    server.start()
    server.wait_for_termination()

The code in Example 15-10 is a bit more complicated than the code on the client side, mainly because you need to define on the server side what should be done upon receiving the customers’ requests. Let’s analyze this Python script from the beginning.

To import external artifacts to the server’s script, you need the same grpc module as in Example 15-9 because it handles the gRPC connectivity. In addition, you need the structure of the RPC calls, so the whole auto-generated file npaf_pb2_grpc is imported as well. The messages used with this operation are also needed; therefore, the RouteRequest and DeviceRoutes classes are imported from the file npaf_pb2. Finally, you should also import the futures module from the concurrent package. gRPC was developed with performance and scalability in mind, so the server is built with a pool of worker threads that process the calls in parallel. Following the imports, you define the IP address and port on which the server listens for gRPC sessions. The server_data variable in Example 15-10 has the same values as in Example 15-9 to allow communication between the stub and the servicer.
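To get a feeling for what the thread pool contributes, you can experiment with it in isolation, without gRPC. In the sketch below, a pool with the same max_workers=10 setting processes five simulated calls; the handle_call function and its 0.1-second delay are invented stand-ins for real RPC handling, such as reading a routing table.

```python
import threading
import time
from concurrent import futures


def handle_call(hostname: str) -> str:
    """Stand-in for one RPC handler; the sleep imitates waiting for I/O."""
    time.sleep(0.1)
    return f'{hostname} handled by {threading.current_thread().name}'


pool = futures.ThreadPoolExecutor(max_workers=10)
start = time.monotonic()
# map() submits all five calls at once and returns the results in request order
results = list(pool.map(handle_call, [f'router{n}' for n in range(1, 6)]))
elapsed = time.monotonic() - start
pool.shutdown()

for line in results:
    print(line)
print(f'5 calls finished in {elapsed:.2f} s')
```

Because the five calls are spread across worker threads, the total time should be close to the duration of a single call rather than the sum of all five, which is the effect the gRPC server relies on when several stubs send requests at the same time.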

The next section of Example 15-10 defines a class that controls how the server behaves upon receiving calls from the client. This class is a child of the auto-generated class RouteDataServicer from the imported file npaf_pb2_grpc. This approach allows you to focus on only the relevant business logic in your script while benefiting from the session handling that is auto-generated using the protoc tool. Within this class, you need methods named after the RPC operations of the service defined in the proto file. The method CollectRoutes takes two inputs besides the instance itself (self): request and context. The request variable contains the message body received from the client, and context contains various administrative information (for example, metadata, if used). In Example 15-10, this method just prints the received message and sends back the response.

The response is generated using an additional method that doesn’t exist in the original specification. This is a very important concept because nothing prevents you from adding your own methods and attributes according to your requirements. This is why you see the additional private method __constructResponse created to build the response message. The response message must have the DeviceRoutes format, according to the proto file. Therefore, the object msg is instantiated from the imported DeviceRoutes class. The logic is as follows:

The properties of the object following the Protobuf message are named after the variables from the proto file.

If there are multiple levels of nesting, variables are stacked and interconnected with the . symbol.

Whenever you deal with an entry defined as repeated in the proto file, you use the function .add(), which takes the fields of the new entry as arguments. Those arguments (route and next_hop in Example 15-3) are defined in the appropriate Protobuf message in the proto file.

The __constructResponse method returns the msg object. Ultimately, this object contains a Protobuf message; hence, the method is called in the original method CollectRoutes to generate the reply message.

The last piece of the code actually brings up the gRPC servicer. To do that, the object server is instantiated using the server function from the grpc module, where the mandatory argument is a thread pool; its max_workers value sets the number of workers created to serve the gRPC requests. Afterward, the auto-generated function add_RouteDataServicer_to_server from npaf_pb2_grpc binds the servicer class RouteDataServicer you created to the gRPC object server. Once this is done, the method add_insecure_port associates the TCP socket with the server, and the server listens for incoming requests on this socket. Besides add_insecure_port, you can also use add_secure_port, which involves SSL/TLS certificates. At this point, the servicer is ready for operation. It is started and then put in the mode wait_for_termination(), which keeps it running until it is explicitly terminated by the operator.
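The role of the thread pool handed to grpc.server can be illustrated without gRPC itself. The following is a minimal sketch that uses only the Python standard library; handle_call is a hypothetical stand-in for a servicer method, and the pool size of 10 simply mirrors Example 15-10.

```python
from concurrent import futures
import threading


def handle_call(hostname):
    """Hypothetical stand-in for a servicer method such as CollectRoutes."""
    return f'{hostname} served by {threading.current_thread().name}'


# The same kind of executor that Example 15-10 passes to grpc.server().
pool = futures.ThreadPoolExecutor(max_workers=10, thread_name_prefix='worker')

# Submit several "calls"; the pool can run up to 10 of them in parallel.
pending = [pool.submit(handle_call, f'router{i}') for i in range(1, 4)]
results = [task.result() for task in pending]
pool.shutdown()

for line in results:
    print(line)
```

A larger max_workers value lets more calls be processed at the same time, at the cost of more threads on the server.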

By now you should understand how the basic client and server sides of a gRPC application with your own specification work. To make this even clearer, Example 15-11 shows the launch of the gRPC server.

Example 15-11 Launching a Sample gRPC Server

$ python npaf_server.py

Starting gRPC service at 51111...

The server is now listening for client requests, and you can execute the client-side script, as demonstrated in Example 15-12.

Example 15-12 Testing the Sample gRPC Client

$ python npaf_client.py

Sending the CollectRequest to 127.0.0.1:51111...

Received the response to CollectRequest from 127.0.0.1:51111:

id: 1

hostname: "router1"

routes_number: 10

routes {

route {

route: "192.168.1.0/24"

next_hop: "10.0.0.1"

}

route {

route: "192.168.2.0/24"

next_hop: "10.0.0.1"

}

route {

route: "192.168.3.0/24"

next_hop: "10.0.0.2"

}

}

Immediately after the client’s script is executed, the gRPC stub sends the CollectRoutes RPC to the server’s address, 127.0.0.1, and port 51111, as defined in the variables. The servicer processes the request and responds with the generated message. The server’s script contains the print(request) instruction, which prints the client’s messages, as shown in Example 15-13.

Example 15-13 Logging gRPC Processing at the Servicer

$ python npaf_server.py

Starting gRPC service at 51111...

hostname: "router1"

The server’s method CollectRoutes prints the incoming message, which has the RouteRequest format, according to the proto file. There is an interesting detail here: If you look at Example 15-3, earlier in this chapter, you can see three variables in this message (hostname, afi, and safi), but the printed output has only one (hostname). You can still read the other two variables as properties inside the script, and they will have a value of zero. This is the behavior of proto3: If a variable holds its default value, such as zero, it is not sent. Always think about efficiency when you work with Protocol buffers.
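The omission of default values can be mimicked in a few lines of plain Python. This is a sketch of the behavior only, not the Protobuf library itself: the dictionaries stand in for messages, and the defaults (an empty string and zeros) are assumptions about the field types of RouteRequest.

```python
# Assumed defaults for the RouteRequest fields: an empty string for the
# string field and zero for the numeric or enum fields.
DEFAULTS = {'hostname': '', 'afi': 0, 'safi': 0}


def fields_on_wire(message):
    """Return only the fields proto3 would serialize (non-default values)."""
    return {name: value for name, value in message.items()
            if value != DEFAULTS[name]}


def read_field(received, name):
    """Reading an absent field yields its default, as it does in proto3."""
    return received.get(name, DEFAULTS[name])


request = {'hostname': 'router1', 'afi': 0, 'safi': 0}
received = fields_on_wire(request)

print(received)                     # only hostname is present
print(read_field(received, 'afi'))  # the default value
```

The receiver cannot tell whether a zero was set explicitly or never set at all, which is worth remembering when zero is a meaningful value in your own specification.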

The gNMI Specification

In this chapter, you have learned about gRPC, including the transport mechanism and how it operates. One of the key things demonstrated earlier in the chapter is that gRPC provides only the transport and type of communication, and the name of the RPCs and format of the messages are application specific. Together, the gRPC services, RPCs, and messages related to a certain application are called the specification. gRPC Network Management Interface (gNMI) is a gRPC specification that defines the gRPC service name, RPCs, and messages.

Before we look at the details of the gNMI specification, it is worth understanding what problems it aims to solve and why it has been developed in a particular way.

Originally, the only way to manage network elements was to use the CLI over Telnet or SSH. That approach is not suitable for collecting operational data, so SNMP was introduced. For decades, SNMP has been a major mechanism for polling the various types of operational states from the network elements, and it has been used in most networks. However, SNMP’s ability to change the configuration of the network elements is very limited. Some attempts were made across the industry to build XML-based network management (for example, NX-API in Cisco Nexus), but they were typically limited to particular platforms rather than being widespread.

The next major step in network management was the introduction of YANG and the module-based approach to managing the network elements. The configuration of network elements and their operational data following YANG modules became structured in hierarchical trees consisting of key/value pairs; this approach is much more suitable for management using programmability. The first standard protocol to support YANG was NETCONF; it standardized the programmable management of network elements across different vendors, and this allowed for API-based interaction with network elements, much as in any other distributed application (for example, communication between a database and a back-end server in a web application). However, the collection of operational data using NETCONF is a bit complicated, as the management system must poll the network elements for it. Some early implementations of NETCONF agents caused very high CPU utilization on routers during requests, which made those agents suitable for configuration changes but not for continuous data polling.

The industry experimented with various protocols to distribute operational data modeled in YANG from the network elements to the network management system, relying on UDP or TCP with proprietary sets of messages and communication flows. As gRPC was developed and became available to the wider public, network vendors added gRPC as another mechanism to distribute or stream the operational data in a process known as streaming telemetry.

As had been the case years before, the networking industry had to rely on two protocols to manage network functions in a programmable way. However, this time it was different: gRPC provided a broad framework for various communication types, including the unary type that is suitable for a traditional request/response operation, such as a configuration, and streaming in any direction, which is suitable for telemetry. The big consumers of network technologies became developers themselves and started looking at how the capability of gRPC could be further applied to network management to find a single protocol that would be suitable for both configuration and data collection. This development process took a couple of years and resulted in gNMI. To paraphrase the official gNMI specification, gNMI is an attempt to use a single gRPC service definition to cover both configuration and telemetry, to simplify the implementation of an agent on the network devices, and to allow a single network management system (NMS) driver to configure network devices and collect their operational state.

The Anatomy of gNMI

All the details of the gNMI specification are located in the single proto file gnmi.proto, which you can find in the official OpenConfig/gNMI repository at GitHub (https://github.com/openconfig/gnmi/blob/master/proto/gnmi/gnmi.proto). This file has very good and detailed documentation in the form of internal comments that help you easily understand all the parts of the proto file. Example 15-14 shows the structure of the gNMI service and RPCs.

Example 15-14 gNMI Specification: Service and RPCs

$ cat gnmi.proto

! Some output is truncated for brevity

service gNMI {

rpc Capabilities(CapabilityRequest) returns (CapabilityResponse);

rpc Get(GetRequest) returns (GetResponse);

rpc Set(SetRequest) returns (SetResponse);

rpc Subscribe(stream SubscribeRequest) returns (stream SubscribeResponse);

}

! Further output is truncated for brevity

As you can see in Example 15-14, the gNMI specification consists of only one gRPC service with four RPCs, which is fewer RPC types than in NETCONF and fewer API calls than in RESTCONF. However, in terms of functionality, it can perform all the same operations. These are the four gNMI RPCs:

Capabilities: This RPC aims to collect the list of supported capabilities by the network device. This list includes the version of gNMI (the most recent at this writing is 0.7.0) and the supported YANG modules so that the gNMI client knows what can be configured on the target network element or collected from it. From a results perspective, this RPC is similar to the capability exchange that happens during the NETCONF hello process described earlier in this book. This is a unary gRPC operation, which means it has a single client request followed by a single response from the server side.

Get: This RPC implements a mechanism to collect some data from a network device. The information can be limited by scope (for example, only configuration, only state, or only operational data), or it is possible to collect all the information available along a certain path that is constructed based on a particular YANG module. Much like Capabilities, Get is a unary RPC operation. In NETCONF terms, this gNMI RPC unites get-config and get requests.

Set: This RPC is used to change the configuration of the target network device. The scope of configuration change can be quite broad; hence, to clarify it, you can either update the configuration (that is, change the value of a key) along the provided path or replace or delete it completely. Therefore, Set is comparable to NETCONF’s edit-config operation with either the merge, replace, or delete option. Like Capabilities and Get, Set is a unary gRPC operation.

Subscribe: This RPC creates the framework for streaming and event-driven telemetry. It allows the client to signal to the server its interest in receiving information (stream) about the values from a certain path on a regular basis. Once the subscription is established, the server starts sending information until the client sends a request to unsubscribe. There is no analogue of this communication type among the basic NETCONF (or RESTCONF) operations. From the gRPC operation’s type standpoint, this is bidirectional streaming.

One of the key concepts in gNMI is Path, which is used in Get, Set, and Subscribe RPCs. Effectively, Path is very similar to a URI (refer to Chapter 14). Within any application that uses gNMI, the Path setting looks as shown in Example 15-15.

Example 15-15 gNMI Specification: A Sample Path Value in the RESTCONF Format

openconfig-interfaces:interfaces/interface[name=Loopback0]

The Path value starts with the name of the YANG module (in this case, openconfig-interfaces) followed by the colon separator, :, and then the YANG tree, using the / separator for a parent/children relationship. When a list is part of the path, a specific entry from the list is chosen with an element identification that consists of a key/value pair in square brackets (in this case, [name=Loopback0]). In the early days of the gNMI specification, the Path value was in the string format; Example 15-15 shows a single value of the string format. However, this is not the case anymore, and in the current version of the gNMI specification, Path is serialized following specific Protocol buffers messages. Example 15-16 demonstrates this gNMI format.

Example 15-16 gNMI Specification: gNMI Path Format

$ cat gnmi.proto

! Some output is truncated for brevity

message Path {

repeated string element = 1 [deprecated=true];

string origin = 2;

repeated PathElem elem = 3;

string target = 4;

}

message PathElem {

string name = 1;

map<string, string> key = 2;

}

! Further output is truncated for brevity

The original string format has been deprecated. A gNMI Path value may contain origin, which refers to the YANG module in use, and multiple entries (called elems) of the PathElem messages. The PathElem messages define two elements of Path: name, which is the name of the relevant YANG leaf, leaf-list, container, or list in string format, and key, which is a key/value pair used when it is necessary to select a specific element from a list. Using this specification, Path is now serialized as a Protobuf binary message; printed in text form, it looks as shown in Example 15-17.

Example 15-17 gNMI Specification: Path in the Protobuf Binary

origin: "openconfig-interfaces"

elem {

name: "interfaces"

}

elem {

name: "interface"

key {

key: "name"

value: "Loopback0"

}

}
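The relationship between the string path in Example 15-15 and the structured form in Example 15-17 can be sketched as a small parser. This is an illustrative helper, not part of any gNMI library: parse_path and split_elements are hypothetical names, and plain dictionaries stand in for the Path and PathElem messages.

```python
import re


def split_elements(tree):
    """Split a path on '/', ignoring any '/' inside [key=value] selectors."""
    parts, current, depth = [], '', 0
    for char in tree:
        if char == '[':
            depth += 1
        elif char == ']':
            depth -= 1
        if char == '/' and depth == 0:
            parts.append(current)
            current = ''
        else:
            current += char
    parts.append(current)
    return [part for part in parts if part]


def parse_path(text):
    """Convert a string path into an origin plus a list of elem entries."""
    origin = ''
    # The origin, if present, precedes the first ':' of the first element.
    head = text.split('/', 1)[0].split('[', 1)[0]
    if ':' in head:
        origin, text = text.split(':', 1)
    elems = []
    for part in split_elements(text):
        name = part.split('[', 1)[0]
        keys = dict(re.findall(r'\[([^=\]]+)=([^\]]*)\]', part))
        elems.append({'name': name, 'key': keys})
    return {'origin': origin, 'elem': elems}


path = parse_path('openconfig-interfaces:interfaces/interface[name=Loopback0]')
print(path['origin'])
for elem in path['elem']:
    print(elem['name'], elem['key'])
```

Tracking the bracket depth keeps interface names that contain a slash, such as Ethernet1/1, inside a single elem.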

The Get RPC

As we continue to look at the gNMI specification, let’s now consider the Get RPC. As you saw earlier, in Example 15-14, the Get request is defined by the GetRequest Protocol buffer message, as shown in Example 15-18.

Example 15-18 gNMI Specification: GetRequest Message

$ cat gnmi.proto

! Some output is truncated for brevity

message GetRequest {

Path prefix = 1;

repeated Path path = 2;

enum DataType {

ALL = 0;

CONFIG = 1;

STATE = 2;

OPERATIONAL = 3;

}

DataType type = 3;

Encoding encoding = 5;

repeated ModelData use_models = 6;

repeated gnmi_ext.Extension extension = 7;

}

message ModelData {

string name = 1;

string organization = 2;

string version = 3;

}

! Further output is truncated for brevity

In Example 15-18, the variables prefix and path are combined to provide a unique path to the resource that is being polled. Depending on the gNMI client implementation, it might be that only one of the two is provided or that both are provided:

An empty prefix and the path value openconfig-interfaces:interfaces/interface[name=Loopback0] result in openconfig-interfaces:interfaces/interface[name=Loopback0].

The prefix value openconfig-interfaces:interfaces and the path value interface[name=Loopback0] result in openconfig-interfaces:interfaces/interface[name=Loopback0] as well.

Finally, the prefix value openconfig-interfaces:interfaces/interface[name=Loopback0] and an empty path also result in openconfig-interfaces:interfaces/interface[name=Loopback0].

The reason for this flexibility is that a single GetRequest can query multiple resources simultaneously, which allows multiple path entries per message. However, gNMI, like gRPC, is all about efficiency. Therefore, the prefix value may contain the part of the path that is common for all the requested resources, whereas the path value will have the part that is different. Ultimately, prefix is a single entry per message, whereas path is a list.
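The combination of prefix and path described above can be sketched with string paths. Both helper functions are hypothetical, and the sketch assumes that key values contain no / character, so that a simple split is sufficient.

```python
def full_paths(prefix, paths):
    """Join the single prefix with every entry of the path list."""
    return ['/'.join(part for part in (prefix, path) if part)
            for path in paths]


def factor_prefix(paths):
    """Move the leading elements shared by all paths into a common prefix."""
    split = [path.split('/') for path in paths]
    common = []
    for elements in zip(*split):
        if len(set(elements)) != 1:
            break
        common.append(elements[0])
    rest = ['/'.join(elements[len(common):]) for elements in split]
    return '/'.join(common), rest


target = 'openconfig-interfaces:interfaces/interface[name=Loopback0]'

# The three combinations from the text resolve to the same resource.
print(full_paths('', [target]))
print(full_paths('openconfig-interfaces:interfaces',
                 ['interface[name=Loopback0]']))
print(full_paths(target, ['']))

# Two requested interfaces share a prefix that is then sent only once.
prefix, rest = factor_prefix([
    'openconfig-interfaces:interfaces/interface[name=Ethernet1]',
    target,
])
print(prefix, rest)
```

The longer the shared part of the requested paths, the more bytes the single prefix saves per message.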

Besides the path, two more elements are mandatory from a logical standpoint: the type, which defines the scope of the information to be collected, and the encoding, which defines the encoding of the information that is expected to be received. The following scopes are available for the requested information:

ALL for all the information available at the provided path.

CONFIG for the read/write elements in the YANG modules used.

STATE for the read-only elements in the YANG modules used.

OPERATIONAL for elements marked in the schema as operational. This refers to data elements whose values relate to the state of processes or interactions running on a device.

In terms of the encoding, although gNMI is defined in Protobuf, it supports other types of encoding, as shown in Example 15-19.

Example 15-19 gNMI Specification: Types of Data Encoding

$ cat gnmi.proto

! Some output is truncated for brevity

enum Encoding {

JSON = 0;

BYTES = 1;

PROTO = 2;

ASCII = 3;

JSON_IETF = 4;

}

! Further output is truncated for brevity

Example 15-19 doesn’t mean, though, that the whole message is structured in the binary (BYTES) or JSON format. It means that the specific part of the response message that contains data extracted from the device will be in one of these formats. The most popular formats today, implemented by the vast majority of network vendors, are JSON and JSON_IETF; the latter refers to JSON as defined in RFC 7951.

These values are mandatory from a logical standpoint because they are required to identify what you want to collect and how to represent it. However, as you learned earlier, if a key has the value 0, which is also applicable for enum data, then it is not sent inside the gRPC/Protobuf. Ultimately, this means that the default encoding is JSON, and the default type is ALL.
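The effect of the zero-valued enumerators can be sketched by mirroring the two enums from Examples 15-18 and 15-19 in plain Python. The function effective_options is a hypothetical helper, and a dictionary stands in for a received GetRequest in which unset fields are simply absent.

```python
from enum import IntEnum


class DataType(IntEnum):
    ALL = 0
    CONFIG = 1
    STATE = 2
    OPERATIONAL = 3


class Encoding(IntEnum):
    JSON = 0
    BYTES = 1
    PROTO = 2
    ASCII = 3
    JSON_IETF = 4


def effective_options(request):
    """Resolve type and encoding, falling back to the enum value 0."""
    return (DataType(request.get('type', 0)),
            Encoding(request.get('encoding', 0)))


# A request that sets neither field, such as the one in Example 15-20.
print(effective_options({}))

# A request that asks for configuration data only, encoded per RFC 7951.
print(effective_options({'type': 1, 'encoding': 4}))
```

Placing the most common choice at value 0 means that the typical request carries no bytes at all for these two fields.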

You can use the optional use_models variable to further narrow down the specific YANG module by providing the full name, organization, and even version. According to the specification, the GetRequest message could look as shown in Example 15-20.

Example 15-20 gNMI Specification: Sample GetRequest Message

path {

origin: "openconfig-interfaces"

elem {

name: "interfaces"

}

elem {

name: "interface"

key {

key: "name"

value: "Ethernet1"

}

}

}

path {

origin: "openconfig-interfaces"

elem {

name: "interfaces"

}

elem {

name: "interface"

key {

key: "name"

value: "Loopback0"

}

}

}

The message in Example 15-20 contains two paths and uses default encoding and type parameters. If the network device that receives this request has gRPC enabled, the gNMI client may receive the response shown in Example 15-21.

Example 15-21 gNMI Specification: Sample GetResponse Message

notification {

update {

path {

elem {

name: "interfaces"

}

elem {

name: "interface"

key {

key: "name"

value: "Ethernet1"

}

}

}

val {

json_ietf_val: "{"openconfig-interfaces:config": {"description": "",

"enabled": true,"loopback-mode": false, "mtu": 0, "name": "Ethernet1",

"openconfig-vlan:tpid": "openconfig-vlan-types:TPID_0X8100", "type":

"iana-if-type:ethernetCsmacd"}, "openconfig-if-ethernet:ethernet":

{"config": {"openconfig-hercules-interfaces:forwarding-viable": true,

"mac-address": "00:00:00:00:00:00", "port-speed": "SPEED_UNKNOWN"},

"state": {"auto-negotiate": false, "counters": {"in-crc-errors": "0",

"in-fragment-frames": "0", "in-jabber-frames": "0",

"in-mac-control-frames": "0", "in-mac-pause-frames": "0",

"in-oversize-frames": "0", "out-mac-control-frames": "0",

"out-mac-pause-frames": "0"}, "duplex-mode": "FULL",

"enable-flow-control": false,

"openconfig-hercules-interfaces:forwarding-viable": true,

"hw-mac-address": "08:00:27:86:de:54", "mac-address": "08:00:27:86:de:54",

"negotiated-port-speed": "SPEED_UNKNOWN", "port-speed": "SPEED_UNKNOWN"}},

"openconfig-interfaces:hold-time": {"config": {"down": 0, "up": 0}, "state":

{"down": 0, "up": 0}}, "openconfig-interfaces:name": "Ethernet1",

"openconfig-interfaces:state": {"admin-status": "UP", "counters":

{"in-broadcast-pkts": "0", "in-discards": "0", "in-errors": "0",

"in-fcs-errors": "0", "in-multicast-pkts": "0", "in-octets": "0",

"in-unicast-pkts": "0", "out-broadcast-pkts": "0", "out-discards":

"0", "out-errors": "0", "out-multicast-pkts": "305", "out-octets":

"29890", "out-unicast-pkts": "0"}, "description": "", "enabled": true,

"openconfig-platform-port:hardware-port": "Port1", "ifindex": 1,

"last-change": "1595167904282637056", "loopback-mode": false, "mtu":

0, "name": "Ethernet1", "oper-status": "UP", "openconfig-vlan:tpid":

"openconfig-vlan-types:TPID_0X8100", "type": "iana-if-type:ethernetCsmacd"},

"openconfig-interfaces:subinterfaces": {"subinterface": [{"config":

{"description": "", "enabled": true, "index": 0}, "index": 0,

"openconfig-if-ip:ipv4": {"config": {"dhcp-client": false, "enabled":

false, "mtu": 1500}, "state": {"dhcp-client": false, "enabled": false,

"mtu": 1500}}, "openconfig-if-ip:ipv6": {"config": {"dhcp-client":

false, "enabled": false, "mtu": 1500}, "state": {"dhcp-client": false,

"enabled": false, "mtu": 1500}}, "state": {"counters":

{"in-broadcast-pkts": "0", "in-discards": "0", "in-errors": "0",

"in-fcs-errors": "0", "in-multicast-pkts": "0", "in-octets": "0",

"in-unicast-pkts": "0", "out-broadcast-pkts": "0", "out-discards": "0",

"out-errors": "0", "out-multicast-pkts": "305", "out-octets": "29890",

"out-unicast-pkts": "0"}, "description": "", "enabled": true, "index": 0}}]}}"

}

}

}

notification {

}

In Example 15-21, you can see that there are two notification sections:

The first one contains information which indicates that the interface named in that section is configured.

The second one is empty, which means that the second interface requested in Example 15-20 (Loopback0) doesn’t exist on the device.

Even without looking into the details of the corresponding GetResponse message, you can figure out the message structure. However, to prevent you from having any doubts, Example 15-22 shows the relevant Protocol buffers messages.

Example 15-22 gNMI Specification: Messages Associated with the GetResponse Message

$ cat gnmi.proto

! Some output is truncated for brevity

message GetResponse {

repeated Notification notification = 1;

Error error = 2 [deprecated=true];

repeated gnmi_ext.Extension extension = 3;

}

message Notification {

int64 timestamp = 1;

Path prefix = 2;

string alias = 3;

repeated Update update = 4;

repeated Path delete = 5;

}

message Update {

Path path = 1;

Value value = 2 [deprecated=true];

TypedValue val = 3;

uint32 duplicates = 4;

}

message TypedValue {

oneof value {

string string_val = 1;

int64 int_val = 2;

uint64 uint_val = 3;

bool bool_val = 4;

bytes bytes_val = 5;

float float_val = 6;

Decimal64 decimal_val = 7;

ScalarArray leaflist_val = 8;

google.protobuf.Any any_val = 9;

bytes json_val = 10;

bytes json_ietf_val = 11;

string ascii_val = 12;

bytes proto_bytes = 13;

}

}

! Further output is truncated for brevity

At a high level, the GetResponse message consists of multiple notification entries, which have the format of a Notification message. (This is why Example 15-21 shows two notification entries.) The Notification message is a multipurpose message that is used not only in GetResponse but also in SubscribeResponse (which is used in streaming). It may contain a timestamp setting (depending on whether the network device vendor has implemented it), which provides the time, in nanoseconds, from the beginning of the epoch (January 1, 1970, 00:00:00 UTC). It may also include prefix, explained earlier, and alias, which may be used if supported by the network device to compress the path; when an alias is created for a path, the messages contain the value of the alias instead of a prefix, which helps reduce the length of the transmitted message. However, all those variables are optional. What is mandatory is either the update or delete variable; update is used to provide the information per GetResponse if the information exists, and delete contains the paths of the elements that were deleted (delete is not used in the current version of the specification for GetRequest and is used for SetRequest only).

Finally, the Update message contains the path to the resource being polled and the value stored in the variable val, which is further defined by the message TypedValue. Inside this message is a oneof named value, which offers a long list of data types, exactly one of which is set in a given message. (You might notice in Example 15-21 that the response is in the json_ietf_val data type.)
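Once a notification arrives, a client typically decodes the JSON payload and converts the nanosecond timestamp. The following sketch uses dictionaries in place of the Protobuf messages; the helper names are hypothetical, the payload is a heavily shortened variant of Example 15-21, and the timestamp value is borrowed from Example 15-25 purely for illustration.

```python
import json
from datetime import datetime, timezone


def decode_typed_value(val):
    """Return the Python value of a TypedValue-like dict with one field set."""
    (kind, raw), = val.items()   # exactly one member of the oneof is set
    if kind in ('json_val', 'json_ietf_val'):
        return json.loads(raw)   # bytes that hold a JSON document
    return raw


def to_datetime(nanoseconds):
    """Convert nanoseconds since the epoch into an aware UTC datetime."""
    seconds, remainder = divmod(nanoseconds, 10**9)
    moment = datetime.fromtimestamp(seconds, tz=timezone.utc)
    return moment.replace(microsecond=remainder // 1000)


notification = {
    'timestamp': 1595182804100815323,
    'update': [{
        'path': 'interfaces/interface[name=Ethernet1]',
        'val': {'json_ietf_val':
                b'{"openconfig-interfaces:name": "Ethernet1", '
                b'"openconfig-interfaces:state": {"oper-status": "UP"}}'},
    }],
}

for update in notification['update']:
    data = decode_typed_value(update['val'])
    print(update['path'], data['openconfig-interfaces:state']['oper-status'])

received_at = to_datetime(notification['timestamp'])
print(received_at.isoformat())
```

Integer division is used for the timestamp because a float cannot hold a 19-digit nanosecond value without losing precision.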

The Set RPC

The Set RPC operation is generally similar to the Get, as it is also a unary gRPC type; however, it has its own set of messages. Example 15-23 shows the details of the SetRequest message.

Example 15-23 gNMI Specification: SetRequest Message Format

$ cat gnmi.proto

! Some output is truncated for brevity

message SetRequest {

Path prefix = 1;

repeated Path delete = 2;

repeated Update replace = 3;

repeated Update update = 4;

repeated gnmi_ext.Extension extension = 5;

}

! Further output is truncated for brevity

Although the message in Example 15-23 is very compact, it allows you to choose the necessary operation, and it is possible to have multiple operations in a single message due to the repeated keyword. These are the variables of the message and the operations they represent:

prefix: This variable is not an operation itself; it holds the common part of the paths, as covered earlier in this chapter, in the section “The Get RPC.”

delete: This operation contains the paths that are to be removed. You use this operation when you delete a part of the configuration on a network device.

replace: This operation contains the data in the Update message format (refer to Example 15-22). Because the Update message contains the path toward the resource and val with the actual data to be set along the path, the logic of this operation causes the data provided to overwrite what already exists in the destination node.

update: This operation also relies on the Update message format. However, the logic of the operation is different: The data from the val entry is merged with what already exists in the node that has the provided path. If nothing exists, then a new entry is created.
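The difference between the three operations can be sketched on a nested dictionary that stands in for the configuration tree of a device. This is a simplified model with hypothetical helper names: omitted values simply disappear on replace, and the path is reduced to a single dictionary key.

```python
import copy


def apply_update(node, data):
    """Merge data into node (update): existing siblings are kept."""
    for key, value in data.items():
        if isinstance(value, dict) and isinstance(node.get(key), dict):
            apply_update(node[key], value)
        else:
            node[key] = copy.deepcopy(value)
    return node


def apply_replace(tree, key, data):
    """Overwrite the node at key (replace): omitted values disappear."""
    tree[key] = copy.deepcopy(data)
    return tree


def apply_delete(tree, key):
    """Remove the node at key (delete); a missing node is not an error here."""
    tree.pop(key, None)
    return tree


original = {'Loopback0': {'config': {'description': 'old', 'enabled': True}}}
change = {'config': {'description': 'Test IF 2'}}

updated = copy.deepcopy(original)
apply_update(updated['Loopback0'], change)

replaced = apply_replace(copy.deepcopy(original), 'Loopback0', change)
deleted = apply_delete(copy.deepcopy(original), 'Loopback0')

print(updated)    # description changed, enabled preserved
print(replaced)   # only the values from the request remain
print(deleted)    # the interface entry is gone
```

Choosing update is the safer default when you want to touch a single leaf, whereas replace suits cases where the request describes the complete desired state of the node.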

Example 15-20 shows a GetRequest that includes the Loopback0 interface; however, that interface is not configured on the target network function. Hence, in the GetResponse from Example 15-21, the notification for this path is empty. Example 15-24 illustrates the use of SetRequest to configure the Loopback0 interface at the target network function.

Example 15-24 gNMI Specification: Sample SetRequest Message

update {

path {

origin: "openconfig-interfaces"

elem {

name: "interfaces"

}

elem {

name: "interface"

key {

key: "name"

value: "Loopback0"

}

}

}

val {

json_val: "{"name": "Loopback0", "config": {"name": "Loopback0",

"enabled": true, "type": "iana-if-type:softwareLoopback", "description":

"Test IF 2"}, "subinterfaces": {"subinterface": [{"index": 0, "config":

{"index": 0, "enabled": true}, "openconfig-if-ip:ipv4": {"addresses":

{"address": [{"ip": "10.1.255.62", "config": {"ip": "10.1.255.62",

"prefix-length": 32, "addr-type": "PRIMARY"}}]}}}]}}"

}

}

In Example 15-24, you can see the update entry that sets the path for the node to be configured and val, which contains the entry json_val. This entry has a value that is a JSON-encoded string containing all the key/value pairs to be set on the target device. The corresponding SetResponse message might look as shown in Example 15-25.

Example 15-25 gNMI Specification: Sample SetResponse Message

response {

path {

origin: "openconfig-interfaces"

elem {

name: "interfaces"

}

elem {

name: "interface"

key {

key: "name"

value: "Loopback0"

}

}

}

op: UPDATE

}

timestamp: 1595182804100815323

This message follows the SetResponse Protobuf message specification, as shown in Example 15-26.

Example 15-26 gNMI Specification: SetResponse Message Format

$ cat gnmi.proto

! Some output is truncated for brevity

message SetResponse {

Path prefix = 1;

repeated UpdateResult response = 2;

Error message = 3 [deprecated=true];

int64 timestamp = 4;

}

message UpdateResult {

enum Operation {

INVALID = 0;

DELETE = 1;

REPLACE = 2;

UPDATE = 3;

}

int64 timestamp = 1 [deprecated=true];

Path path = 2;

Error message = 3 [deprecated=true];

Operation op = 4;

}

! Further output is truncated for brevity

The parent message SetResponse contains prefix, timestamp, and response, formatted according to the UpdateResult message. In the current version of the gNMI specification (0.7.0), the response message contains the path of the affected resource and the result of the operation in the variable op, which corresponds to the SetRequest type: DELETE, REPLACE, or UPDATE.

The Capabilities RPC

You have learned details about some complicated RPCs and their associated messages. The Capabilities RPC is significantly easier to understand. As mentioned earlier, the Capabilities RPC is used to collect information about the YANG modules supported by the target network device. The CapabilityRequest message in Example 15-27 is the simplest Protobuf message described in this book.

Example 15-27 gNMI Specification: CapabilityRequest Message Format

$ cat gnmi.proto

! Some output is truncated for brevity

message CapabilityRequest {

}

! Further output is truncated for brevity

This message effectively doesn’t have a variable at all. In fact, this is quite logical: When the client queries a network device about what capabilities it supports, it doesn’t need to specify anything else. Example 15-28 shows the format of the CapabilityResponse message.

Example 15-28 gNMI Specification: CapabilityResponse Message Format

$ cat gnmi.proto

! Some output is truncated for brevity

message CapabilityResponse {

repeated ModelData supported_models = 1;

repeated Encoding supported_encodings = 2;

string gNMI_version = 3;

}

! Further output is truncated for brevity

The ModelData message and the Encoding enum (both explained in the section “The Get RPC,” earlier in this chapter) are provided with the keyword repeated because a network device might (and typically does) support multiple YANG modules or encoding formats. The variable gNMI_version is not repeated, though, as the network device would have a single version of the gNMI specification implemented.

A sample CapabilityRequest message is not shown, as this type of message doesn’t contain any data. However, a CapabilityResponse message can be rather interesting, as shown in Example 15-29.

Example 15-29 gNMI Specification: Sample CapabilityResponse Message

supported_models {

name: "openconfig-packet-match"

organization: "OpenConfig working group"

version: "1.1.1"

}

supported_models {

name: "openconfig-hercules-platform"

organization: "OpenConfig Hercules Working Group"

version: "0.2.0"

}

supported_models {

name: "openconfig-bgp"

organization: "OpenConfig working group"

version: "6.0.0"

}

!

! Some output is truncated for brevity

!

supported_encodings: JSON

supported_encodings: JSON_IETF

supported_encodings: ASCII

gNMI_version: "0.7.0"

From Example 15-29, you can see that the YANG modules provided in the supported_models variable have a format similar to the format shown in Chapter 14 for NETCONF. Close to the end of the message are multiple supported_encodings entries, which hold information about the encoding types supported by the network device. Finally, gNMI_version contains the version of the gNMI specification implemented on the device.
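A client would typically inspect this response before sending any Get or Set requests. The following sketch works on a dictionary that holds the values from Example 15-29; supports_model and pick_encoding are hypothetical helpers, and the preference order of the encodings is an assumption.

```python
capabilities = {
    'supported_models': [
        {'name': 'openconfig-packet-match',
         'organization': 'OpenConfig working group', 'version': '1.1.1'},
        {'name': 'openconfig-hercules-platform',
         'organization': 'OpenConfig Hercules Working Group',
         'version': '0.2.0'},
        {'name': 'openconfig-bgp',
         'organization': 'OpenConfig working group', 'version': '6.0.0'},
    ],
    'supported_encodings': ['JSON', 'JSON_IETF', 'ASCII'],
    'gNMI_version': '0.7.0',
}


def supports_model(caps, name):
    """Check whether the device announced the given YANG module."""
    return any(model['name'] == name for model in caps['supported_models'])


def pick_encoding(caps, preferred=('JSON_IETF', 'JSON')):
    """Return the first preferred encoding that the device supports."""
    for encoding in preferred:
        if encoding in caps['supported_encodings']:
            return encoding
    return None


print(supports_model(capabilities, 'openconfig-bgp'))
print(pick_encoding(capabilities))
print(pick_encoding(capabilities, preferred=('PROTO',)))
```

Checking the capabilities first lets the client fail early with a clear message instead of sending a request that the device cannot serve.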

The Subscribe RPC

The Subscribe RPC is used in streaming or event-driven telemetry. Per the gNMI specification, both the client and the server can stream information bidirectionally. The general flow is as follows:

The client signals its interest to the gNMI server, which resides on a network device, to receive the information from a certain path.

The server streams the information for the subscribed categories until the client unsubscribes.

The SubscribeResponse message format is quite simple, as it relies on the Notification message, which you are already familiar with (see Example 15-30).

Example 15-30 gNMI Specification: SubscribeResponse Message Format

$ cat gnmi.proto

! Some output is truncated for brevity

message SubscribeResponse {

oneof response {

Notification update = 1;

bool sync_response = 3;

Error error = 4 [deprecated=true];

}

repeated gnmi_ext.Extension extension = 5;

}

! Further output is truncated for brevity

This message contains the single entry response, which is defined with the keyword oneof, so exactly one of its variants is present in a given message. The variable update, in the Notification message format, is used to distribute the actual data of the corresponding paths, whereas the Boolean sync_response indicates that all data values corresponding to the paths specified in SubscriptionList have been transmitted at least once. The error variant is marked as deprecated.
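On the client side, the practical consequence is that everything received before sync_response forms the initial snapshot, and everything after it is a subsequent change. The sketch below models this with a hypothetical FakeSubscribeResponse class and plain dictionaries in place of the real Notification messages; the paths and values are invented for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class FakeSubscribeResponse:
    """Hypothetical stand-in for SubscribeResponse: exactly one field is set."""
    update: Optional[dict] = None
    sync_response: bool = False


def split_initial_sync(responses: Iterable[FakeSubscribeResponse]):
    """Separate the updates received before sync_response from those after it."""
    initial, later, synced = [], [], False
    for response in responses:
        if response.sync_response:
            synced = True  # every subscribed path has now been sent at least once
        elif synced:
            later.append(response.update)
        else:
            initial.append(response.update)
    return initial, later


stream = [
    FakeSubscribeResponse(update={"path": "interface[name=Gi1]/state/oper-status", "val": "UP"}),
    FakeSubscribeResponse(update={"path": "interface[name=Gi2]/state/oper-status", "val": "DOWN"}),
    FakeSubscribeResponse(sync_response=True),
    FakeSubscribeResponse(update={"path": "interface[name=Gi2]/state/oper-status", "val": "UP"}),
]
initial, later = split_initial_sync(stream)
print(len(initial), len(later))
```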

You are probably wondering what SubscriptionList is. It is part of SubscribeRequest, which is quite a complicated message, as you can see in Example 15-31.

Example 15-31 gNMI Specification: SubscribeRequest Message Format

$ cat gnmi.proto

! Some output is truncated for brevity

message SubscribeRequest {

oneof request {

SubscriptionList subscribe = 1;

Poll poll = 3;

AliasList aliases = 4;

}

}

message Poll {

}

message SubscriptionList {

Path prefix = 1;

repeated Subscription subscription = 2;

bool use_aliases = 3;

QOSMarking qos = 4;

enum Mode {

STREAM = 0;

ONCE = 1;

POLL = 2;

}

Mode mode = 5;

bool allow_aggregation = 6;

repeated ModelData use_models = 7;

Encoding encoding = 8;

bool updates_only = 9;

}

message Subscription {

Path path = 1;

SubscriptionMode mode = 2;

uint64 sample_interval = 3;

bool suppress_redundant = 4;

uint64 heartbeat_interval = 5;

}

enum SubscriptionMode {

TARGET_DEFINED = 0;

ON_CHANGE = 1;

SAMPLE = 2;

}

message QOSMarking {

uint32 marking = 1;

}

! Further output is truncated for brevity

SubscribeRequest carries one of three possible message types: SubscriptionList, Poll, or AliasList. AliasList is used to create a mapping of the paths to short names to improve the efficiency of the communication by reducing the data sent between the gNMI client (the NMS) and the gNMI server (the network device). The aliases are optional. The Poll message, as you can see in Example 15-31, doesn’t have any further parameters. It is used in poll-based information collection, where, once the subscription has been created, the client sends Poll messages to trigger the network device to send updates.

The subscription is created inside the SubscriptionList message, which has the following fields:

prefix: This field contains the part of the path that is common for all the subscriptions.

subscription: This repeated field contains one entry per path of interest. Each entry holds the path that the client wants to receive information from and a subscription mode (TARGET_DEFINED, where the target chooses the mode; ON_CHANGE, where updates are sent when the value changes; or SAMPLE, where the value is sent at a fixed interval). In addition, sample_interval, expressed in nanoseconds, identifies how often updates are sent from the server to the client; suppress_redundant optimizes the transmission so that updates aren’t sent at a sample time if the value has not changed; and heartbeat_interval modifies the behavior of suppress_redundant so that an update is sent at least once per heartbeat_interval, even if nothing has changed, to notify the client that the server is up and running.

use_aliases: This field notifies the server about whether the aliases should be used.

qos: This field tells the server which QoS marking it should use for the telemetry packets.

mode: This field sets the type of the subscriptions for all the paths. The available options are STREAM (which means the server streams the data on the defined sample_interval), POLL (which means the server sends the updates per the client’s Poll message), and ONCE (which means the server sends the response once per the client’s request and then terminates the gRPC session).

allow_aggregation: This field tells the server whether it may combine the data paths that are eligible for aggregation into a single update message and send a bulk update to the client.

use_models: This field makes it possible to narrow the search for the paths specified in Subscription to certain data models.

encoding: This field sets the data format that the server should use to send updates to the client.

updates_only: This field changes the way the information from the server is sent; instead of sending the state of the identified paths, it sends only updates to the states compared to the previous information distribution.
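The interaction between sample_interval, suppress_redundant, and heartbeat_interval is easier to follow as a decision made at each sample time. The following sketch models that decision in plain Python. The function should_send and its parameters are hypothetical names chosen for this illustration, and an actual gNMI server may implement the logic differently.

```python
SECOND = 1_000_000_000  # gNMI intervals are expressed in nanoseconds


def should_send(value, last_sent_value, now_ns, last_sent_ns,
                suppress_redundant=False, heartbeat_interval_ns=0):
    """Decide whether a SAMPLE subscription emits an update at a sample time."""
    if not suppress_redundant:
        return True   # plain sampling: send at every sample time
    if value != last_sent_value:
        return True   # the value has changed since the last update
    if heartbeat_interval_ns and now_ns - last_sent_ns >= heartbeat_interval_ns:
        return True   # unchanged, but the heartbeat is due
    return False      # unchanged and no heartbeat due: stay silent


# Unchanged counter, 5 seconds after the last update, no heartbeat configured
print(should_send(10, 10, 5 * SECOND, 0, suppress_redundant=True))
# Unchanged counter, but a 60-second heartbeat has expired
print(should_send(10, 10, 60 * SECOND, 0, suppress_redundant=True,
                  heartbeat_interval_ns=60 * SECOND))
```

The first call returns False and the second returns True: suppression keeps the link quiet while nothing changes, and the heartbeat guarantees that the client still hears from the server periodically.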

Sample SubscribeRequest and SubscribeResponse messages are omitted for brevity, but you can infer their content from the examples of other messages in this chapter.
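As a rough orientation only, the fields described above could be combined as in the following sketch. It uses a plain Python dictionary whose keys follow the field names in Example 15-31; it is not output captured from a device, and the interface name and the interval values are assumptions made for illustration.

```python
import json

SECOND_NS = 1_000_000_000  # sample_interval and heartbeat_interval use nanoseconds

# Keys mirror the field names of SubscribeRequest, SubscriptionList, and
# Subscription; the concrete values are hypothetical
subscribe_request = {
    "subscribe": {
        "prefix": {"elem": [{"name": "interfaces"}]},
        "subscription": [
            {
                "path": {"elem": [
                    {"name": "interface", "key": {"name": "GigabitEthernet1"}},
                    {"name": "state"},
                    {"name": "counters"},
                ]},
                "mode": "SAMPLE",
                "sample_interval": 10 * SECOND_NS,
                "suppress_redundant": True,
                "heartbeat_interval": 60 * SECOND_NS,
            }
        ],
        "mode": "STREAM",
        "encoding": "JSON",
        "updates_only": False,
    }
}

print(json.dumps(subscribe_request, indent=2))
```

Note that the common part of the path (interfaces) is placed in prefix once, so each subscription entry needs to carry only the remainder of its path.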

Note

In some of the messages shown in this chapter, you might have noticed references to the gNMI extensions. These extensions are available for future possible development of gNMI functionality and are optional to any of the messages. They aren’t widely used today.

Managing Network Elements with gNMI/gRPC

Earlier in this chapter, you saw Python code that can be used to create the client side (the stub) and the server side (the servicer) of a gRPC-based application. In the case of gNMI, the servicer is already deployed on a network device. Therefore, you just need to enable it in either secure mode (using SSL certificates and/or PKI) or insecure mode (without encryption) and create the code for the stub part. Depending on the network operating system, some of the modes (such as insecure) may not be available.

Before you enable gNMI, you need to ensure that your software supports it. If it does, you can enable gNMI on Cisco IOS XE network devices as shown in Example 15-32.

Example 15-32 Enabling gNMI in Insecure Mode in Cisco IOS XE

NPAF_R1> enable
NPAF_R1# configure terminal
NPAF_R1(config)# gnmi-yang
NPAF_R1(config)# gnmi-yang server
NPAF_R1(config)# gnmi-yang port 57400
NPAF_R1(config)# end

gNMI doesn’t have a predefined port number; hence, different vendors implement different default values for the gNMI service. You can set this parameter to the value you like. However, if you don’t set the value, Cisco IOS XE uses the default, which is TCP/50052. Refer to the official Cisco documentation to enable the secure gNMI server on Cisco IOS XE network devices. You can verify whether the service is operational as shown in Example 15-33.

Example 15-33 Verifying That gNMI Is Operational in Cisco IOS XE

NPAF_R1# show gnmi-yang state
State       Status
--------------------------------
Enabled     Up
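From the management station, a quick TCP reachability test of the configured port can complement the device-side verification. The following sketch uses only the Python standard library. The helper name tcp_port_open is an assumption made for this illustration, and 192.0.2.1 is a documentation address standing in for the real device. A successful connection shows only that something is listening on the port, not that the gNMI service itself is healthy.

```python
import socket


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts
        return False


# Example call (placeholder address; 57400 is the port set in Example 15-32):
# tcp_port_open("192.0.2.1", 57400)
```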

Besides Cisco IOS XE, gNMI is supported in the latest releases of Cisco IOS XR (starting from 6.0.0) and Cisco NX-OS (starting from 7.0(3)I5(1)). Moreover, the vast majority of other network operating systems, such as Nokia SR OS, Arista EOS, and Juniper Junos, support gNMI as well. Therefore, you can use it as the main protocol to manage your network.

There are currently no Python libraries that work well with gNMI specifically. However, the library grpc (used earlier in this chapter) can help you create the proper Python scripts. If you cloned the gNMI specification from the official repository, you have the gnmi.proto file, and by using the grpc_tools.protoc module, you can convert the file into a set of Python metaclasses as shown in Example 15-34.

Example 15-34 Creating Python Metaclasses for the gNMI Specification

$ ls
gnmi.proto

$ python -m grpc_tools.protoc -I=. --python_out=. --grpc_python_out=. gnmi.proto

$ ls
gnmi_pb2_grpc.py  gnmi_pb2.py  gnmi.proto

Earlier in this chapter, in Example 15-9, you saw how to create the gRPC stub for an arbitrary specification. In this section, you will create it by using the gNMI specification, leveraging explanations provided earlier in this chapter. Example 15-35 shows a script to use the Get RPC from the gNMI specification.

Example 15-35 Sample gNMI Get Python Script

$ cat gNMI_Client.py

#!/usr/bin/env python

# Modules
import json
import re
import sys

import grpc
from gnmi_pb2 import GetRequest, Path
from gnmi_pb2_grpc import gNMIStub

# Variables
path = {'inventory': 'inventory/inventory.json',
        'network_functions': 'inventory/network_functions'}


# User-defined functions
def json_to_dict(path):
    with open(path, 'r') as f:
        return json.load(f)


def gnmi_path_generator(path_in_question):
    gnmi_path = Path()

    # Split on '/' only outside [key=value] predicates, so that key values
    # containing '/' (for example, interface names) stay intact
    path_elements = [pe for pe in re.split(r'/(?![^\[]*\])', path_in_question) if pe]
    names = [pe.split('[', 1)[0] for pe in path_elements]

    if len(names) < 2 and not any(':' in name for name in names):
        sys.exit("You haven't specified either YANG module or the top-level "
                 f"container in '{path_in_question}'.")

    for name, pe_entry in zip(names, path_elements):
        # Collect every [key=value] predicate attached to this element
        keys = dict(re.findall(r'\[([^=\]]+)=([^\]]*)\]', pe_entry))

        # A 'module:container' element sets the origin of the path
        if ':' in name:
            gnmi_path.origin, name = name.split(':', 1)

        gnmi_path.elem.add(name=name, key=keys)

    return gnmi_path


# Body
if __name__ == '__main__':
    inventory = json_to_dict(path['inventory'])

    for td_entry in inventory['network_functions']:
        print(f'Getting data from {td_entry["ip_address"]} over gNMI...\n')

        # Credentials are sent as gRPC metadata with each call
        metadata = [('username', td_entry['username']),
                    ('password', td_entry['password'])]

        channel = grpc.insecure_channel(f'{td_entry["ip_address"]}:{td_entry["port"]}')
        grpc.channel_ready_future(channel).result(timeout=5)

        stub = gNMIStub(channel)

        device_data = json_to_dict(
            f'{path["network_functions"]}/{td_entry["hostname"]}.json')

        gnmi_message = []
        for itc_entry in device_data['intent_config']:
            intent_path = gnmi_path_generator(itc_entry['path'])
            gnmi_message.append(intent_path)

        # In gnmi.proto, type=0 is ALL and encoding=0 is JSON
        gnmi_message_request = GetRequest(path=gnmi_message, type=0, encoding=0)
        gnmi_message_response = stub.Get(gnmi_message_request, metadata=metadata)

        print(gnmi_message_response)

The script shown in Example 15-35 was used to generate the GetRequest and GetResponse messages shown earlier in this chapter.
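The step in such a script that deserves the most care is the conversion of an XPath-like string into gNMI path elements with keys. The following sketch isolates that step using only the standard library, so you can experiment with it without a device or the generated gnmi_pb2 module. The function name parse_gnmi_path and the returned tuple layout are assumptions made for this illustration; in a real client, each (name, keys) pair would be passed to Path.elem.add().

```python
import re


def parse_gnmi_path(path):
    """Split an XPath-like string into (origin, [(name, keys), ...])."""
    # Split on '/' only outside [key=value] predicates, so that key values
    # such as GigabitEthernet0/0/1 are not broken apart
    elements = [e for e in re.split(r'/(?![^\[]*\])', path) if e]
    origin, parsed = '', []
    for element in elements:
        name = element.split('[', 1)[0]
        # An element may carry several predicates, e.g. [a=1][b=2]
        keys = dict(re.findall(r'\[([^=\]]+)=([^\]]*)\]', element))
        if ':' in name:
            # 'module:container' notation: the module name becomes the origin
            origin, name = name.split(':', 1)
        parsed.append((name, keys))
    return origin, parsed


origin, elems = parse_gnmi_path(
    'openconfig-interfaces:interfaces/interface[name=GigabitEthernet0/0/1]/state')
print(origin)
print(elems)
```

Splitting on every slash would cut the interface name GigabitEthernet0/0/1 into three separate elements, which is why the split is restricted to slashes outside the square brackets.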

Summary

This chapter describes the gRPC transport, Protobuf data encoding, and the gNMI specification, including the following points:

gRPC is a fast and robust framework created by Google to allow communication between applications.

gRPC supports four types of communication: unary RPC, server-based streaming, client-based streaming, and bidirectional streaming.

gRPC doesn’t define any message types or formats. Each application may have its own set of messages and RPC operations.

Protocol buffers are used to specify the services, the RPCs, and the message formats for the gRPC services.