To view the results, we need to grab the files off each host machine and concatenate them into a single composite file, which we can then view in a JMeter GUI client. To grab the files, we can use any SSH-based file transfer tool of our choice, such as an SFTP client. If you are on a Unix-flavored machine, chances are that you already have the scp command-line utility handy; that's what we will use here. First, we need the host name of the machine we want to connect to. To get it, type the exit command on the console of the first virtual machine.

You will see a line similar to the following:

ubuntu@ip-10-190-237-149:~$ exit
logout
Connection to ec2-23-23-1-249.compute-1.amazonaws.com closed.

The ec2-xxxxxx.compute-1.amazonaws.com part of the last line is the host name of the machine. We can now connect to the box using our keypair file and retrieve the results file. At the console, issue the following command:

scp -i [PATH TO YOUR KEYPAIR FILE] ubuntu@[HOSTNAME]:"*.csv" [DESTINATION DIRECTORY ON LOCAL MACHINE]

As an example, on our machine the keypair file, named book-test.pem, is stored under the .ec2 directory in our home directory, and we want to place the results file in the /tmp directory. Therefore, we run the following command:

scp -i ~/.ec2/book-test.pem ubuntu@ec2-23-23-1-249.compute-1.amazonaws.com:"*.csv" /tmp

This transfers all the .csv files in the ubuntu user's home directory on the AWS instance to the /tmp directory on our local machine.
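The machines were launched with Vagrant, so each host name can also be looked up without logging in and out of the machine. The following is a minimal sketch. It assumes that the provider (for example, vagrant-aws) reports the instance's public DNS name in the HostName field of `vagrant ssh-config` output. The function name `hostname_from_ssh_config` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `vagrant ssh-config` output on stdin and print the
# value of its HostName entry. Assumes the provider (e.g. vagrant-aws) puts the
# EC2 public DNS name there; check the output for your own setup.
hostname_from_ssh_config() {
  # ssh-config output is indented "Key Value" pairs; awk's default field
  # splitting ignores the leading spaces, so $1 is the key.
  awk '$1 == "HostName" { print $2; exit }'
}

# Usage (requires Vagrant and a running VM aliased vm2):
#   vagrant ssh-config vm2 | hostname_from_ssh_config
```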

Repeat the command for each of the three remaining virtual machines, substituting its host name.
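The repetition can be scripted as a loop over the host names. This is a sketch, not a definitive tool. It assumes every instance uses the same keypair and the ubuntu user, and that each machine's results file has a distinct name (such as vm1-out.csv, vm2-out.csv, and so on), so the copies do not overwrite one another in the destination directory. The function name `fetch_results` and the `DRY_RUN` switch are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: copy every *.csv file from the ubuntu user's home
# directory on each host into one local directory, one scp call per host.
# Set DRY_RUN=1 to print the commands instead of running them.
# Usage: fetch_results KEYPAIR DEST_DIR HOST...
fetch_results() {
  keypair=$1
  dest=$2
  shift 2                          # remaining arguments are host names
  for host in "$@"; do
    # The quoted *.csv reaches the remote side unexpanded, as in the
    # single-host command; the remote shell expands the glob.
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo scp -i "$keypair" "ubuntu@$host:*.csv" "$dest"
    else
      scp -i "$keypair" "ubuntu@$host:*.csv" "$dest" || return 1
    fi
  done
}
```

For example, `fetch_results ~/.ec2/book-test.pem /tmp HOST1 HOST2 HOST3 HOST4` runs one scp per host, with HOST1 to HOST4 replaced by your own EC2 host names. The function stops at the first failed transfer, so a missing host is not silently skipped.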

Once all the result files have been transferred, we are done with the virtual machines and can terminate all the instances.

You can either terminate each one individually using vagrant destroy [VM ALIAS NAME] (for example, vagrant destroy vm1 terminates the virtual box aliased vm1), or terminate all the running instances at once using vagrant destroy.
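By default, vagrant destroy asks for confirmation before destroying each machine. Its `-f` (`--force`) flag skips that prompt, which makes it usable from a script. The following minimal sketch destroys a chosen subset of machines. The function name `destroy_vms` and the `DRY_RUN` switch are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: destroy the named Vagrant machines one at a time,
# skipping the confirmation prompt with -f. Set DRY_RUN=1 to only print
# the commands that would run.
destroy_vms() {
  for vm in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo vagrant destroy -f "$vm"
    else
      vagrant destroy -f "$vm" || return 1
    fi
  done
}

# Usage:
#   destroy_vms vm1 vm2 vm3 vm4
```

To tear everything down in one step, `vagrant destroy -f` with no machine name does the same for all machines defined in the Vagrantfile.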

With the results files from all hosts now available locally, we need to merge them to get an aggregate view of response times across all hosts. Any editor that can handle the CSV file format will do: open one file (say, vm1-out.csv) and append the entire contents of the other files (vm2-out.csv, vm3-out.csv, and vm4-out.csv) to it. Alternatively, this can all be done from the command line. On Unix-flavored machines, the cat command does the job. Open a terminal, change to the directory where you transferred the result files, and run the following on the console:

cat vm1-out.csv vm2-out.csv vm3-out.csv vm4-out.csv > merged-out.csv
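Each results file may begin with a header row, depending on how JMeter was configured to save results (for example, via the jmeter.save.saveservice.print_field_names property). If so, a plain cat leaves copies of that header in the middle of the merged file. The following minimal sketch keeps the first file's header and skips the first line of every other file. It assumes all input files share the same columns. The function name `merge_results` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: merge JMeter CSV result files that each start with a
# header row. The first file is copied whole; later files lose their first line.
# Usage: merge_results OUTPUT INPUT1 INPUT2 ...
merge_results() {
  out=$1
  shift
  first=$1
  shift
  {
    cat "$first"              # header row plus data rows of the first file
    for f in "$@"; do
      tail -n +2 "$f"         # data rows only: start printing at line 2
    done
  } > "$out"                  # '>' overwrites, so reruns do not duplicate rows
}

# Usage:
#   merge_results merged-out.csv vm1-out.csv vm2-out.csv vm3-out.csv vm4-out.csv
```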

This creates a file named merged-out.csv that can now be opened in our JMeter GUI client. To do that, perform the following steps:

- Launch the JMeter GUI.

- Add a Summary Report listener by navigating to Test Plan | Add | Listener | Summary Report.

- Click on Summary Report.

- Click on the Browse... button.

- Select the merged-out.csv file.

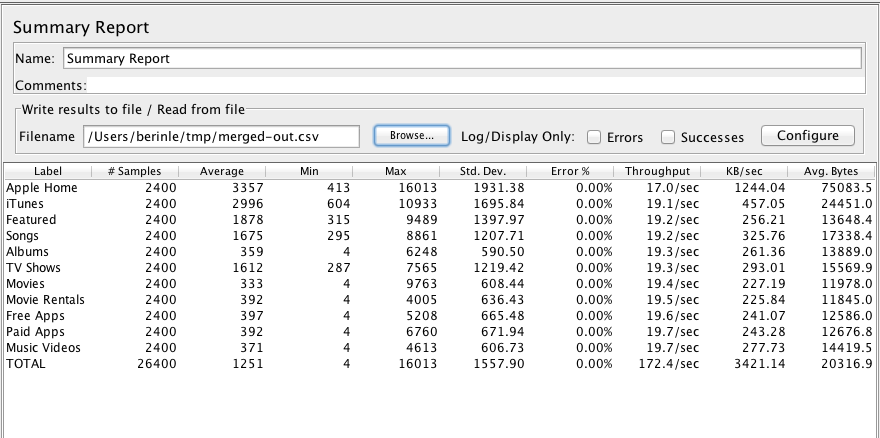

Since our test plan spins up 300 users and runs for two iterations, each virtual node generates 600 samples (300 × 2). Across four nodes, that gives a total of 2,400 samples, as the Summary Report listener shows in the screenshot.
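The expected total of 300 users × 2 iterations × 4 nodes = 2,400 samples can also be cross-checked against the merged file from the command line. The following is a rough sketch, not a replacement for the listener. It makes several assumptions:

- The merged file has exactly one header row at the top.
- That header names an elapsed column and a success column, as JMeter's CSV output normally does.
- Each sample occupies one line.
- No field contains an embedded comma; quoted fields with commas would shift the naive awk column split.

The function name `summarize_results` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print sample count, average and maximum elapsed time
# (ms), and the number of unsuccessful samples from a JMeter CSV results file.
# Assumes ONE header row and plain comma splitting (no quoted commas).
summarize_results() {
  awk -F',' '
    NR == 1 {                             # find columns by their header names
      for (i = 1; i <= NF; i++) {
        if ($i == "elapsed") e = i
        if ($i == "success") s = i
      }
      next
    }
    {
      n++; sum += $e
      if ($e + 0 > max) max = $e + 0
      if ($s != "true") errors++
    }
    END {
      if (n == 0) { print "samples=0"; exit }
      printf "samples=%d avg=%.1f max=%d errors=%d\n", n, sum / n, max, errors
    }' "$1"
}

# Expected total for this run: users x iterations x nodes
expected_samples=$((300 * 2 * 4))         # 2400

# Usage:
#   summarize_results merged-out.csv
```

If the merged file was built with a plain cat from files that each carry a header, the extra header lines are counted as failed samples. In that case, the error count is a hint that the merge needs attention.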

We also see that the Max response time is not too shabby, no errors were reported on any of the nodes, and the throughput was good for our run. These are not bad numbers considering that we used AWS small instances. We can always put more stress on the application or web servers by spinning up more nodes to run test plans or by using higher-capacity machines on AWS. Although we have used only four virtual boxes for illustrative purposes here, nothing prevents you from scaling out to hundreds of machines to run your test plans. As you scale out to more and more servers, however, it can become increasingly difficult and cumbersome to start your test plans simultaneously across all nodes.
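One way to reduce that friction is to start the non-GUI JMeter run on every node from a single script, in parallel, and then wait for all runs to finish. The following minimal sketch makes these assumptions:

- JMeter is on the PATH of each instance.
- The test plan sits in the ubuntu user's home directory under the hypothetical name test-plan.jmx.
- Numbering results files by argument position (vm1-out.csv, vm2-out.csv, ...) matches your machines.

The JMeter options used are the standard command-line flags -n (non-GUI mode), -t (test plan), and -l (results file). The function name `run_everywhere` and the `DRY_RUN` switch are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: start a non-GUI JMeter run on every host over ssh in
# parallel, then wait for all runs to finish. Set DRY_RUN=1 to print commands.
# Usage: run_everywhere KEYPAIR HOST...
run_everywhere() {
  keypair=$1
  shift
  n=0
  for host in "$@"; do
    n=$((n + 1))
    # Results file named by position (vm1-out.csv, vm2-out.csv, ...); this
    # numbering is an assumption and may not match your Vagrant aliases.
    remote_cmd="jmeter -n -t test-plan.jmx -l vm$n-out.csv"
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo ssh -n -i "$keypair" "ubuntu@$host" "$remote_cmd"
    else
      # -n stops the backgrounded ssh from reading the local terminal's stdin
      ssh -n -i "$keypair" "ubuntu@$host" "$remote_cmd" &
    fi
  done
  wait                        # block until every background ssh has exited
}
```

Starting the runs in the background keeps their start times within moments of each other, which is usually close enough for aggregate reporting. It does not provide the tight synchronization of JMeter's own remote-testing mode.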

At the time of writing, we came across another tool that promises to ease the management pain across multiple AWS nodes or in-house networked machines. This tool helps spin up AWS instances (as we have done here), installs JMeter, runs a test plan distributing the load across the instances it started, and gathers the results from all hosts onto your local box, all the while giving you real-time aggregate information on the console. At the end of the tests, it terminates all the AWS instances it started. We gave it a spin but couldn't quite get it working as advertised. It is still worth keeping an eye on the project, and you can find out more about it at https://github.com/oliverlloyd/jmeter-ec2. There are also some web services that aim to make distributed testing easier. Two of these are Flood.io (http://flood.io) and BlazeMeter (http://blazemeter.com/), and we will cover both of these awesome cloud services in the sections that follow.