Human–Computer Interface in Pervasive Environments

Human–computer interaction (HCI) bridges the physical world and the digital world, providing communication channels for humans to send requests to and fetch responses from computer systems. Traditional HCI is restricted to a one–one mode where only one user interacts with one device (such as a personal computer) through a few communication channels such as a mouse and a keyboard. However, in past decades, technological development has diversified the devices with which humans interact (e.g., personal digital assistant [PDA], smartphone, tablet computer, etc.) and the modalities by which humans interact (e.g., speech, gesture, touch, etc.). For this reason, users now require HCIs to migrate their interactions across different devices or modalities in pervasive environments when they are not satisfied with current devices. To provide better user experiences under multidevice and multimodal environments, we propose an HCI service selection algorithm considering not only context information and user preferences but also interservice relations, such as relative location. Simulation results illustrate that the algorithm is effective and scalable for interaction service selection. We then propose a Web service–based HCI migration framework to support an open pervasive space full of dynamically emerging and disappearing devices.

Computer systems play a prime role in information delivery. Generally, the effectiveness of an information system relies largely on two factors: information quality and presentation. Meanwhile, the expansion of the Internet and other sources of digital media has given people access to a wealth of information. This trend, often called information overload, creates new requirements for both high information quality and smart presentation. Moreover, versatile sources of digital media greatly extend computer interfaces and thereby push HCI technologies toward a new generation.

Traditional HCI is restricted to a one–one mode where only one user interacts with one device, such as a personal computer, through a few communication channels, such as a mouse and a keyboard. Research on HCI has promoted new technologies and improvements in user experience. However, in past decades, the rise of mobile devices (e.g., PDA, smartphone, tablet computer, etc.) has disrupted the monotonous interaction pattern between humans and personal computers, and developments in computer vision and sound processing have enriched the communication channels to digital media (e.g., speech, gesture, touch, etc.). Trends such as ubiquitous computing [1] have gradually made traditional HCI technology appear insufficient and inconvenient, while collaborative and distributed HCI technologies using multiple devices and interaction modalities are gaining more and more attention.

We offer two possible scenarios for multimodal and multiplatform HCI in a pervasive computing environment.

■ Scenario 1. Suppose a user is taking a trip and bringing his camera. He takes photos of the landscape and would like to share them on a social network. Before uploading, he wants to edit these photos and select some of them for sharing. He then unlocks his mobile phone where the photos just taken are displayed. He edits some photos and uploads them to Facebook. While he is uploading the photos, he finds a new portrait of his friend. To obtain a larger view of the image, he steps near a public computer and that Web page is automatically displayed on the computer without any login requirements. In this scenario, interaction is migrated among the camera, mobile phone, and public computer.

■ Scenario 2. Suppose a father is reading a business document on his personal computer. The document is displayed on the screen as a traditional PDF file. In a while, his 10-year-old son would like to use his father’s computer to continue reading a story about Peter Pan he had not finished the night before. After the son takes a seat and opens the story, the computer automatically reads it to him aloud and displays it word by word according to the child’s reading speed. In this scenario, interaction is migrated from a visual form to an audio form, according to different user contexts.

These two simple scenarios illustrate the following requirements for HCI within a pervasive environment:

■ One user, many devices. Interaction needs to be migrated across multiple platforms according to context information such as the physical positions of users and devices. The whole interaction system must be able to dynamically detect nearby devices and establish appropriate connections with them. Moreover, interactions may be distributed among several devices.

■ Many users, one device. Interaction needs to be migrated across multiple modalities according to user preferences and contexts.

One solution to these requirements is called interaction migration, which is to migrate the interaction process among multiple devices, and even different modalities, in a graceful manner.

Interaction migration can be achieved at different levels. Process level migration is the most intuitive approach, but its tendency to form bottlenecks when transferring large data and reconstructing processes on various embedded platforms hampers its widespread use. Task level migration, on the other hand, extracts logic tasks and their relationships from an application using a model-based method [2]; migration can then be achieved by distributing tasks to multiple devices. However, although such migration techniques perform well at modeling logic and temporal relationships among tasks, constructing an ontology and categorization of tasks is nontrivial, which places obstacles in the way of migration among interaction modalities. In recent years, a more flexible and natural concept, the service-oriented concept, has been proposed for HCI modeling. The nature and progression of the service encounter has become a key concern of human–computer interface designers, which is similar to a majority of services supported and provided by commercial information systems [3]. This service-oriented HCI concept transforms interaction migration problems into service selection problems and attempts to find solutions from Web service selection techniques.

We adopt the service-oriented concept and present a context-aware HCI service selection process that considers not only context information and user preferences but also interservice relations. Our main contributions are:

1. Context-awareness method for service selection. We take both interservice contexts and user contexts into consideration during migration request submission and in the service matching algorithm, making matching decisions more flexible and intelligent.

2. Service selection algorithm with good scalability. We introduce the interaction hot spot to evaluate service combination results.

3. Formulation of device matching during the migration process as a service combination discovery process. We use the service concept to describe the interaction functionalities of an application. This service-oriented concept generalizes interaction types and makes it possible to migrate interaction to multiple devices and modalities.

We further propose a Web service–based HCI migration framework in which the interaction logic of an application is modeled as an interaction service; through such modeling and an ontology of services, it is possible to migrate interaction to multiple devices and modalities. In addition, user preference and context awareness are addressed in the framework by embedding them in the descriptions of interaction interfaces. Moreover, the framework supports dynamically emerging and disappearing devices in pervasive environments.

5.2 HCI Service and Interaction Migration

The proliferation of mobile devices and networks leads to an increasing demand for multidevice and multimodal interactions. The idea of interaction migration has been proposed to meet this demand: HCI can be migrated across different platforms and modalities to provide better user experiences. Various interaction migration systems take advantage of model-based methods to separate interaction tasks and to generate corresponding interfaces on target platforms [2,4,5]. Some authors, however, use another mechanism based on a Web service structure to provide migration in pervasive computing environments [1,6,7].

Our idea follows a service-oriented architecture (SOA) that models HCI by interaction services and then formulates the semantic matching of devices as a service selection process. To address the issue of service selection or service combination discovery, researchers have developed description languages for Web services such as WSDL [8], BPEL [9], and DAML [10]. These languages successfully unify the structure and provide a formal description of Web service functionality. Their shortfall is that they ignore semantics. Therefore, ontology languages, such as OWL-S [11], are deployed to specify the Web service semantics.

Among these ontology-based service-matching methods [12–16], OWLS-MX [12] is the first hybrid OWL-S service matchmaker. It exploits features of both logic-based and information retrieval (IR)-based approximate matching. It uses IR to handle semantics, thus improving its performance in Web service matching [13], and uses a ranking method to decide the most suitable services. It also presents a device ontology that provides a general framework for device description. When the accuracy and scalability of semantic matching processes are considered, ranking surpasses other methods in robustness. Other researchers look at a finer decomposition of applications. For example, ScudWare [14] proposes a middleware platform for smart vehicle space. Unlike other frameworks, it further divides a service into many interdependent components. These components support migration and replication, so they can be distributed onto different devices. The behaviors of components are crucial during application migration, and performing such partitions is therefore nontrivial.

Besides service modeling, another important factor of concern in service selection is context. A context is “any information that can be used to characterize the situation of an entity. An entity is a person, place, or object that is considered relevant to the interaction between a user and an application, including the user and the application themselves” [17]. Combining contexts into service selection makes the selection framework more flexible and more intelligent in responding automatically to environment changes and user behaviors. One study [18] presents a context-based matching method for Web service combination. The paper adopts an ontology-based categorization, a two-level mechanism for modeling, and a peer-to-peer matching architecture. In another implementation of context awareness [19], the authors add context attributes to the well-known mobile service discovery system JINI. In another study [20], the authors highlight context awareness in mobile network environments and propose an algorithm for context-aware network selection.

Our approach also considers context in semantic matching. Our framework is similar to others [19] because we integrate context attributes into interaction device descriptions and use them to aid in service matching. In addition to context information, we also consider user preferences for each kind of service by recording interaction history. This kind of information helps match the devices a user expects with certain services, thus increasing matching accuracy.

To achieve better accuracy, we make two assumptions: first, that services are well categorized so that different services are distinguished from each other; second, that a powerful middleware is used so that services are described in a standard and unified format, which greatly reduces the difficulty of service matching. We believe that the two assumptions are reasonable. For the first, services in a pervasive computing environment can be categorized according to their corresponding hardware features because they are device-oriented and their service boundaries are definite. For the second, powerful middleware is being well studied, and some of it even takes the form of OS-level platforms. This progress in research and industry practice has made it possible to perform semantic matching at the middleware level.

In another study [21], the authors consider the problem of bottlenecks in centralized dynamic query optimization methods due to messages exchanged on a bad network. They present a decentralized method for the optimization processes. Another study has conducted interesting research on user measurement of the adoption of mobile services [22] and lists some of the constructs that may influence the user acceptance of services. Context, personal initiatives and characteristics, trust, perceived ease of use, perceived usefulness, and intention to use are mentioned as important constructs of their instrument.

Service selection is a classical problem in Web service–related research. Several projects study quality of service (QoS)-aware service selection. In one study [23], the authors present a QoS-aware middleware supporting quality-driven Web service combination. They propose two service selection approaches for constructing composite services: local optimization and global planning. Another study [24] offers similar approaches to service selection with QoS constraints in a global view. Both methods are based on linear programming and are best suited to small problems because their complexity increases exponentially with problem size. In a further study [25], the authors present an autonomic service-provisioning framework to establish QoS-assured end-to-end communication paths across independent domains. They model the domain composition and adaptation problem as classical k-multiconstrained optimal path (MCOP) problems. These works all use Internet-wide service selection and thus do not consider the service location during selection. In HCI, service location is an important factor in service selection. We use a local matching procedure to obtain the matching degree for each service and a global selection procedure to find the best service combination. A service combination is selected when its services can effectively cover some areas, defined as interaction hot spots, where a user can effectively meet the HCI requirements.

In another study [26], the authors introduce a context-based collaborative selection of SOA services. Although it takes service locations into account, a purely distance-based notion of location is not sufficient for HCI service selection because most devices/services have not only an interaction range requirement but also a best interaction angle. In two more studies [27,28], the authors give an introduction to HCI migration. They mention the problem of device location but do not define location constraints.

Based on the idea of interaction service, we further design a framework using Web service technologies to support HCI migration in environments composed of dynamically emerging and disappearing devices.

Previous works have designed several frameworks for multiplatform collaborative Web browsing. A good example of collaborative Web browsing can be found in WebSplitter [29], an XML-based framework that splits Web pages and delivers appropriate partial views to different users. Bandelloni and Paternò [30], adopting similar ideas, implemented Web page migration between a PC and a PDA by recording system states and creating pages with XHTML. This research lets users browse Web pages on various types of devices. However, the framework is only for collaborative Web browsing.

The main obstacle to designing a framework for general application user interfaces is the heterogeneity of different platforms. With various limitations and functionalities, it is hard to decide the suitable UI elements regarding the target platforms. One model-based approach [5] tried to define application tasks and modeled temporal operators between tasks. This ConcurTask Trees (CTT) model divides the whole interaction process into tasks and describes the logic structure among them, which makes the responsibilities of target platform user interfaces clear. Paternò and Santoro made great contributions within this realm and offered detailed support for applying the model [31,32]. Another important issue is automatic generation of user interfaces. Unfortunately, automatic generation is not a general solution due to the varying factors that have to be considered within the design process, although a semiautomatic mechanism is more general and flexible [33].

Based on a CTT model and an XML-based language, TERESA [34] supports transformation from task models to abstract user interfaces and then to user interfaces on specific platforms. The principle is to extract an abstract description of the user interface on a source platform by a task model and then to design a specific user interface according to the environment on the target platform. TERESA is able to recognize suitable platforms for each task set and to describe the user interfaces using an XML-based language, which is finally parsed along with platform-dependent information to generate user interfaces. The TERESA tools rapidly deploy applications that can be migrated over multiple devices, but they address only the diversity of multiple platforms while neglecting the task semantics of users. With these insights, Bandelloni and Paternò [35] integrated TERESA to develop a service to support runtime migration for Web applications. However, the application migration service designed by Bandelloni needs a centralized server and device registration, which is costly and poorly suited to newly arriving devices. Moreover, because it is based on a task model, which is constrained by the task functionality, it is unable to handle multimodal interactions such as visual to audio.

5.3 Context-Driven HCI Service Selection

The basic idea of our design is that, when encountering interaction migration, users are usually ill-equipped to decide on the target platform because they lack insight into device capability and compatibility. Therefore, our selection method aims to provide an automatic selection mechanism, aided by context information and user preference, rather than a user-initiated selection. We also separate interaction tasks from application logic and model them as interaction services. With the help of service description technology, we propose a structure for interaction service selection to achieve multimodal and multiplatform migration.

5.3.1 Interaction Service Selection Overview

Figure 5.1 shows an overall description of the selection process in our framework. Three main components comprise the interaction environment: the user device, which contains user identification and personal information; the context manager, which maintains context information caught by various sensors; and the interaction device, which performs service matching and provides interaction interfaces. The following steps are involved in the whole process.

1. Initiate migration request. Migration requests can either be driven by context or announced by users. In both situations, those requests are sent to the context manager. In our design, the context manager takes charge of all sensor networks in the environment, while the user device holds the user identification. The context manager is able to trace the changing contexts of a certain user by monitoring parameters such as user position, facial expressions, and gestures. A key idea is that it is often natural for a user to migrate interaction when context changes. For instance, a user may express the need for interaction migration by performing a certain gesture or by simply approaching target devices. Techniques such as human behavior modeling [36] are potential solutions for such context detection. For a user-driven migration request, the user device directly forwards the migration request to the context manager. Notably, although initiated by the user, the migration requests do not directly specify target devices; this is different from a user-initiated selection method. On the other hand, when a switch in context is detected (the context-driven situation), the context manager notifies the user device to forward the migration request. This seemingly redundant behavior is necessary because the context manager does not hold any user information (for privacy reasons).

Figure 5.1 Selection process overview.

2. Dispatch requests. The whole service selection process is divided into two parts: local service matching and global combination selection. Local service matching is performed on device terminals in a distributed manner. Therefore, after receiving migration requests from a user device, the context manager dispatches requests to devices near the user for local service matching. The degree of matching will be sent back to the context manager after calculation. Future improvements such as device filtering and request reforming can be implemented within this step to address accuracy and performance concerns.

3. Local service matching. Local service matching is performed on device terminals and returns the degree of matching to the context manager. Service functionality requirements, user preferences, and contexts are taken into account in the algorithm. Those interaction devices that cannot provide these services will not respond to the request, thus alleviating burdens on network transmission within a device-rich environment. Context information can be retrieved from the context manager if needed in local selection matching.

4. Global combination selection. After receiving the matching degrees calculated by device terminals, the context manager selects the best service combination for the user’s requests. User request, interservice contexts, and local matching degrees are integrated here to come up with the best service combination. Due to the complexity of the combination problem, we provide an approximate algorithm for finding the optimal service combination. The final selected service combination results are returned to the user device.

5. Migration. After the user device obtains the target interaction devices, it multicasts a connection request to those targets. Selected devices then send back Web service descriptions so that connections between the user device and the targets can be established. Subsequent interaction processes are not covered in this section because we focus on service selection.
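The five steps above can be sketched as a small simulation. This is only an illustrative outline under stated assumptions: the class names, the precomputed degrees, and the per-service "pick the highest degree" rule are hypothetical stand-ins, and the last of these is a placeholder for the approximate global combination algorithm, not a description of it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InteractionDevice:
    name: str
    # Hypothetical precomputed matching degree per service type.
    degrees: dict[str, float]

    def local_match(self, service: str) -> Optional[float]:
        # Step 3: a device that cannot provide the service stays silent (None).
        return self.degrees.get(service)


class ContextManager:
    def __init__(self, nearby: list[InteractionDevice]):
        self.nearby = nearby

    def handle_request(self, services: list[str]) -> dict[str, str]:
        selection = {}
        for service in services:
            # Step 2: dispatch the request to every device near the user.
            replies = {d.name: d.local_match(service) for d in self.nearby}
            replies = {n: v for n, v in replies.items() if v is not None}
            # Step 4 (placeholder): keep the device with the highest degree.
            if replies:
                selection[service] = max(replies, key=replies.get)
        return selection


devices = [
    InteractionDevice("wall_display", {"video_output": 0.9}),
    InteractionDevice("phone", {"video_output": 0.4, "keyboard_input": 0.7}),
]
manager = ContextManager(devices)
# Step 1: the user device forwards the request; step 5 would connect to targets.
targets = manager.handle_request(["video_output", "keyboard_input"])
```

In this toy run the video output is assigned to the wall display and the keyboard input to the phone; a real global selection would also weigh interservice relations such as the distance between the two devices.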

5.3.2.1 Service-Oriented Middleware Support

Although it was proposed decades ago, the concept of interaction migration has not been implemented in practical applications. The major obstacle is that it is hard for application developers to cover tedious details of interaction processes and to modify existing applications to support such migration. To solve this problem, we propose an HCI migration support environment (MSE, refer to Section 5.5), which sits between the application level and the OS level, acting as middleware, hiding platform-dependent details from users, and providing interaction migration Application Program Interfaces (APIs) for upper applications. Here, we first assume there is middleware in which HCI processes can be fulfilled by calling interaction services. In the system, local and remote interaction services provided by interaction devices can be called with a unified interface. Therefore, when encountering interaction logic, the upper level applications just call APIs provided by the middleware and let it select target interaction services. Hence, interaction migration can be treated as seeking proper remote service combinations that can fulfill the requested interaction requirements. The service-oriented middleware then needs to take charge of extracting service descriptions based on an application’s interaction behavior. Two questions should be answered in the descriptions: “what services are needed?” and “what are the basic properties of these services?” The system can answer the two questions by tracing the APIs invoked by upper level applications. We categorize APIs into several interaction service types (such as video display service and keyboard input service) and record key parameters as service properties. Therefore, once an application calls an API, our middleware can specify the corresponding types and properties of the services called by the application (shown in Figure 5.2).

Figure 5.2 Service-oriented middleware support.
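As a minimal sketch of how tracing API calls could yield service descriptions, the following code maps each invoked API to a service type and records its parameters as service properties. The API names (`show_video`, `read_keys`), the mapping table, and the `Middleware` class are hypothetical and are not taken from the MSE itself.

```python
from dataclasses import dataclass

# Hypothetical categorization of middleware APIs into interaction service types.
API_TO_SERVICE_TYPE = {
    "show_video": "video_output",
    "read_keys": "keyboard_input",
}


@dataclass
class ServiceDescription:
    service_type: str   # answers "what services are needed?"
    properties: dict    # answers "what are the basic properties?"


class Middleware:
    def __init__(self):
        self.requested = []  # descriptions extracted from traced calls

    def call(self, api_name, **params):
        # Record the service type and the key parameters of this call.
        description = ServiceDescription(API_TO_SERVICE_TYPE[api_name], dict(params))
        self.requested.append(description)
        return description


mw = Middleware()
mw.call("show_video", width=1280, height=720)
mw.call("read_keys", layout="qwerty")
```

After these two calls, `mw.requested` holds one video output description and one keyboard input description, which is the information a migration request would carry.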

5.3.2.2 User History and Preference

We assume that different users have different preferences for interaction devices, and such preferences can be acquired from users’ interaction histories. For example, a user who prefers large screens may have a higher average screen size in his previously used devices. Therefore, capturing the parameters of the device a user expects from the history is crucial in selecting appropriate interaction devices. In our method, we adopt a service-oriented concept and associate parameters of device hardware with interaction services. In addition, the interaction services are categorized into independent groups such as video output and keyboard input to facilitate service matching.

Assume that there are k service categories C = {c1, c2, …, ck}. Let d be a device with hardware feature set Fd = {f1, f2, …, fn}. We assume that it can provide services Sd = {s1, s2, …, sm}. The properties Pi of service si are a subset of the hardware feature set; that is, Pi ⊆ Fd. We then extract general properties from the set of service properties, since each service can be assigned to a service category. General properties actually belong to a service category because they are used by all services in the same category. For instance, a desktop computer commonly has two service categories: video output and audio output. If it has two displays, then there are two interaction services on the computer that both belong to the video output category. Screen size and resolution are general properties of these two services.
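The definitions above can be illustrated with a small data model. This is a sketch under assumptions: the feature identifiers (such as `display0.size`) and the choice to derive general properties as the property names shared by every service in a category are illustrative, not the chapter's implementation.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    category: str
    # Property name -> hardware feature it is drawn from (the values form P_i).
    properties: dict


def properties_within_features(service, features):
    # Checks P_i ⊆ F_d for one service.
    return set(service.properties.values()) <= features


def general_properties(services, category):
    # Property names used by all services in the given category.
    names = [set(s.properties) for s in services if s.category == category]
    return set.intersection(*names) if names else set()


# F_d for a desktop computer with two displays and one speaker.
features = {
    "display0.size", "display0.resolution",
    "display1.size", "display1.resolution", "display1.touch",
    "speaker.volume",
}
services = [
    Service("display0", "video_output",
            {"screen_size": "display0.size", "resolution": "display0.resolution"}),
    Service("display1", "video_output",
            {"screen_size": "display1.size", "resolution": "display1.resolution",
             "touch": "display1.touch"}),
    Service("speaker", "audio_output", {"volume": "speaker.volume"}),
]
video_general = general_properties(services, "video_output")
```

Here the touch capability of the second display is not a general property because the first display lacks it, while screen size and resolution are shared by both video output services.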

In user devices, we maintain a service property set Uc to represent user preferences and to describe a user’s expected properties for service category c∈C. Notably, we record only general properties for a service category because only those common properties can be used for comparisons among the services in a category. User preferences are formed from the records of devices used in the past. After a user selects a service s from category c, the user device records the general properties of the service and updates the ith property of the corresponding user preferences by:

$$u_{c,i}^{(k)} = (1 - \alpha)\, u_{c,i}^{(k-1)} + \alpha\, c_{i,k} \qquad (5.1)$$

where $u_{c,i}^{(k)}$ denotes the ith property in user preferences for category c after using a service from c for the kth time; α denotes the update rate for the newly incoming device descriptions; and $c_{i,k}$ denotes the ith general property value of the service selected the kth time. Suppose the initial user preference is $u_{c,i}^{(0)} = 0$; then a candidate value for α is 1/k, which means the user preferences are calculated by averaging past device parameters. When requesting migration to some services, a user device packages the user preferences for these services and sends them to nearby devices for service matching.
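A minimal sketch of the preference update follows, assuming the update is a weighted blend of the form new = (1 − α) · old + α · observed, which is consistent with the description of α as an update rate and with α = 1/k reducing to a running average. The function name and the sample screen sizes are hypothetical.

```python
def update_preference(previous, observed, alpha):
    """Blend the stored preference with the newly used service's properties.

    previous: current preference values, one per general property
    observed: general property values of the service just selected
    alpha:    update rate in [0, 1]
    """
    return [(1 - alpha) * u + alpha * c for u, c in zip(previous, observed)]


# One general property (screen size in inches), initial preference 0.
preference = [0.0]
for k, size in enumerate([20.0, 24.0, 28.0], start=1):
    # With alpha = 1/k the preference equals the mean of all sizes seen so far.
    preference = update_preference(preference, [size], alpha=1.0 / k)
```

After the three selections the stored preference is the average of the three screen sizes. A fixed α instead of 1/k would weight recent devices more heavily, which may suit users whose tastes change.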

Our goal is to propose a context-aware method for selecting proper interaction services, which makes it necessary to deploy a context manager to monitor the environment and users, to record and analyze contexts, and to provide global access for interaction devices. Another important responsibility of the context manager is to integrate multimodal context information such as speech, gestures, writings, facial expressions, or combinations thereof. The context manager runs a software system connected with user devices and sensors. We do not put much emphasis on how the context manager connects with sensors, although we do provide insight into what contexts should be provided and how we use the information. We assume that the context manager is able to monitor data from sensors and to detect context switches along with user behaviors. The context manager should maintain two major types of context information:

■ Environment context. Including environment temperature, moisture, brightness, current time, and so on, environment context is crucial to those interaction devices whose operation is constrained by environmental conditions. For example, high temperature and moisture may greatly influence some sensitive devices, decreasing their processing ability and thus harming user experiences. Another interesting use of environment context is related to time records. For instance, a device may record when its services are being used, compare the current time with the records, and judge whether it is appropriate to provide such services.

■ User context. Researchers have made great progress in HCI technology [37], such as face detection [38], expression analysis [39], gesture and large-scale body movement recognition [40], and even eye tracking [41]. For a smart pervasive environment, it is usual to deploy these technologies together with the corresponding sensors and algorithms; these are called context probers in our framework. Our context manager monitors these context probers and fetches context information from them. A simple example is how user position is matched to the nearest interaction devices. We can also apply user face orientation information based on detection technology to match proper screens in a user’s field of vision.

In our method, context information mainly aids interaction migration in two ways. First, context information helps determine the moment when to launch interaction migrations. Second, a comparison between contexts and device parameters improves service matching accuracy. Moreover, the context manager is responsible for the algorithm of service combination selection that will be discussed in the following section.

Local service matching in Figure 5.1 is performed on each interaction device that receives migration requests from the context manager. Matching degrees are then sent back to the context manager for further selection of service combinations. Interaction devices are designed to calculate matching degrees because the context manager will be a network bottleneck if too much device and service information needs to be transmitted back. A device profile is maintained on each interaction device to compare with service descriptions and with contexts. The service matching procedure contains the following three parts:

■ Service property matching. The top priority is to judge whether the current interaction device is capable of providing the service required; that is, to determine whether the device can meet the basic properties of required service. Our solution is to keep device capability information in a device profile, including supported service types and properties for each service type. Hence, the interaction device merely needs to judge whether requested services exist in supported service categories and whether corresponding service properties are within the capabilities of the HCI service. If service property matching fails, the device will not reply to the user device. Otherwise, the following two matching processes will be performed, and a matching degree will be returned.

■ User preference matching. Our goal is to select the services that are potentially the most satisfying for users. Therefore, this part calculates to what extent the interaction device satisfies a user’s expectation (namely, the user preference) when considering certain services. User preferences are enclosed in the migration request, whereas device features for its services are recorded in a device profile.

■ Context matching. The main contribution of our method is the utilization of context. We first retrieve context information from the context manager, and then we evaluate environment and user context information with a context evaluation function, preset in the interaction devices, to measure whether a device is suitable to provide services under the current circumstances. The context evaluation function fc(x) for a context c varies according to the actual semantics of the context. For example, Euclidean distance

$$f_c(x) = \lVert x - p \rVert_2 \tag{5.2}$$

where p denotes the position of a device, performs well for position context to measure whether a user is close to the device, while the cosine similarity metric

$$f_c(x) = \frac{x \cdot o}{\lVert x \rVert \, \lVert o \rVert} \tag{5.3}$$

where o denotes the orientation of a device, is suitable for context variables such as user face orientation and screen orientation. Interaction devices can also specify a weight for each context c to denote how significantly the context influences the selection of service si on device d.

The final matching degree is summed up from the results of user preference matching and context matching. Matching degrees are calculated for each requested service. After collecting matching degrees, the context manager starts a global service combination selection.
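The three matching steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the dictionary layout of the request and device profile, the preference score (share of preferred features the device offers), the conversion of distance into a closeness score via 1/(1 + d), the negation of the user's face orientation before comparing it with the screen orientation, and all function and key names are hypothetical.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two points (position context)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine(a, b):
    """Cosine similarity between two direction vectors (orientation context)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def local_matching_degree(request, profile, context):
    """Return a matching degree, or None when service property matching fails."""
    service = profile["services"].get(request["service"])
    if service is None:
        return None  # requested service type is not supported by this device
    # 1. Service property matching (assumed: numeric properties, device must meet each).
    for name, required in request["properties"].items():
        if service["capabilities"].get(name, 0) < required:
            return None
    # 2. User preference matching (assumed: share of preferred features offered).
    prefs = request["preferences"]
    matched = sum(1 for k, v in prefs.items() if service["features"].get(k) == v)
    preference_score = matched / len(prefs) if prefs else 0.0
    # 3. Context matching: weighted sum of per-context evaluation functions.
    closeness = 1.0 / (1.0 + euclidean(context["user_position"], profile["position"]))
    # Assumption: a screen faces the user when the two orientations are opposite.
    reversed_face = [-c for c in context["user_orientation"]]
    facing = max(cosine(reversed_face, profile["orientation"]), 0.0)
    w = service["context_weights"]
    context_score = w["position"] * closeness + w["orientation"] * facing
    # The final degree sums the preference and context results, as in the text.
    return preference_score + context_score
```

Returning `None` on a failed property match mirrors the rule that a device that cannot provide the service does not reply with a matching degree.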

5.3.5 Global Combination Selection

We have presented a local service matching procedure by which matching degrees can be derived for each requested service. In simple scenarios, the information from service matching degrees is enough for a successful interaction migration. However, there are limitations that may lead to unreasonable migration. For example, because the local service matching procedure takes little account of interservice contexts such as the relative distance between two target devices, it may result in a bad user experience if that distance is too great. Therefore, we propose a global selection procedure to find the best service combination. We focus on relative locations as an example of processing interrelations among services; more general interservice relations can be modeled in our framework using similar approaches. To represent the relationship among different services in terms of relative distance, we develop a service-coverage model and a corresponding search algorithm. The coverage idea is similar to the target coverage scheduling proposed by Han et al. [42]. Each interaction service in our framework resembles a directional sensor in that work, but we use soft evaluation rather than hard classification for the effective region of an interaction service.

In reality, different services on different devices have different ranges to provide effective interaction service. We use effective region to represent the range within which a user can interact with a service under a QoS guarantee. The effective region for a device’s interaction service without any physical barrier can be reasonably modeled as a sector centered at the device. The sector angle represents the device’s interaction angle. As illustrated in Figure 5.3, a slashed area denotes the computer’s effective region within which users can use the computer effectively.

For a given device, different services may have different effective regions. For example, visual service may have a smaller effective region than audio service. Even for a given device’s interaction service, HCI effectiveness may vary from point to point within its effective region. In Figure 5.3, users may feel it is more convenient to use the computer at point A than at point B. Therefore, we use function EVi for service i to represent the HCI effectiveness at point p:

$$EV_i(p) \in [0, 1] \tag{5.4}$$

The effectiveness is zero if p lies outside a device’s effective region; if the same point lies within the effective region of another device, that device’s function returns a device- and service-dependent score in (0, 1].
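A sector-shaped effective region with a soft score can be sketched as below. The text only requires a value of zero outside the region and a score in (0, 1] inside it; the linear decay with distance, the parameter names, and the factory function `make_sector_ev` are assumptions made for illustration.

```python
import math

def make_sector_ev(center, facing_deg, angle_deg, radius):
    """Build an EV function for a sector centered at the device.

    center     -- device position (x, y)
    facing_deg -- direction the device faces, in degrees
    angle_deg  -- full interaction angle of the sector, in degrees
    radius     -- maximum effective distance
    """
    def ev(p):
        dx, dy = p[0] - center[0], p[1] - center[1]
        dist = math.hypot(dx, dy)
        if dist > radius:
            return 0.0  # too far away: outside the effective region
        if dist == 0:
            return 1.0  # at the device itself
        bearing = math.degrees(math.atan2(dy, dx))
        # Smallest absolute angular difference to the facing direction.
        diff = abs((bearing - facing_deg + 180) % 360 - 180)
        if diff > angle_deg / 2:
            return 0.0  # outside the interaction angle
        # Assumed decay: 1.0 at the device, 0.5 at the edge of the sector.
        return 1.0 - 0.5 * dist / radius
    return ev
```

With this shape, a point closer to the device (such as point A in Figure 5.3) scores higher than a point near the boundary (such as point B), and different services on one device can simply use different radii and angles.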

Figure 5.3 The effective area is modeled as a sector.

Interaction migration happens only when the user enters a different area, which is called a user active scope (UAS). The UAS information is maintained by the context manager. We assume that the context manager can properly divide the user space into different UASs according to information about the building’s interior structure, which can be set initially in the system. Meanwhile, users are inclined to move within a UAS for effective interactions.

The definition of a UAS is flexible and depends on both the environment and the applications. For example, a room can be a single UAS in some situations while, in other applications, it may be divided into several UASs.

In Figure 5.4, a UAS is covered by several continuous squares. A UAS square is an atomic unit that users can step in or out of to interact with some devices. The UAS square will be used in the service combination selection algorithm we present later. It is obvious that a large UAS square can speed up our selection algorithm, but it will decrease its accuracy.

Figure 5.4 User active scope is represented by continuous squares.

5.3.5.3 Service Combination Selection Algorithm

Service location is concerned with HCI migration. Actually, two individually best matched services usually cannot be combined to provide HCI services in one application if they are not close enough. Therefore, we develop a global combination selection algorithm to take service location into account. Our algorithm includes the following two procedures:

1. Service-coverage coloring procedure. Suppose there are M different services in a UAS and, for each square unit, we use an M-bit string to represent whether users can effectively interact with the corresponding service in that square. If the effective region of service i covers square p, then sc[p][i] is set to 1; otherwise, it is set to 0. We call this step coloring, and it is performed on the context manager. The coloring procedure includes the following three steps:

a. The context manager retrieves information about a user’s current UAS.

b. The context manager divides the UAS into N continuous squares.

c. For each of the M services, the context manager colors the squares within its effective region.

Figure 5.5 shows the result of the coloring procedure for the UAS in Figure 5.4. For a given UAS, the coloring procedure needs to be rerun only when a device position changes.
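The coloring steps can be sketched as follows. This is a minimal illustration that assumes a rectangular UAS, tests coverage at the center of each square, and represents each service's effective region as a predicate; these choices and the name `color_squares` are assumptions rather than details given in the text.

```python
def color_squares(width, height, square_size, effective_regions):
    """Return (centers, sc): square centers and the coverage matrix sc[p][i].

    effective_regions holds one predicate per service; predicate(point) is
    True when the point lies inside that service's effective region.
    """
    cols = int(width // square_size)
    rows = int(height // square_size)
    # Step b: divide the UAS into N = rows * cols continuous squares.
    centers = [((c + 0.5) * square_size, (r + 0.5) * square_size)
               for r in range(rows) for c in range(cols)]
    # Step c: color square p for service i if its center is covered (assumption).
    sc = [[1 if covers(p) else 0 for covers in effective_regions]
          for p in centers]
    return centers, sc

# Two hypothetical services: one covers the left half, one covers everything.
regions = [lambda p: p[0] < 2, lambda p: True]
centers, sc = color_squares(4, 2, 1, regions)
bit_strings = ["".join(map(str, row)) for row in sc]  # M-bit string per square
```

Because the result depends only on device positions and effective regions, it can be cached per UAS and reused across migration requests.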

2. Service combination selection procedure: Suppose there are K categories of services required to be migrated. Different devices can provide the same category of services. So we need to find out the best service for each required service category. We denote services in current UAS as set S and the service category set as C. Therefore, we have ∀c∈C, c⊆S and ∀s∈S, ∃c∈C, s.t. s∈c. A subset S′ denotes those returned (matching) services. Denote the service category set of S′ as C′. Then ∀c′∈C′, c′⊆S′ and ∀s′∈S′, ∃c′∈C′, s.t. s′∈c′. Matching degree ds, s∈S’ denotes a local matching degree for service s. Denote position (square) set as P. Then the coverage information for service s and position p can be denoted as sc[p][s], p∈P, s∈S. We now suppose the context manager has derived matching degrees for the current migration through a local service matching procedure. The context manager then performs as follows:

a. For each square p (p∈P), find the most suitable service i for each service category c, that is, the service that satisfies:

$$i = \arg\max_{s \in c} \; sc[p][s] \cdot d_s \tag{5.5}$$

b. Calculate the overall matching degree for square p by:

$$t(p) = \sum_{c \in C'} w_c \cdot \max_{s \in c} \left( sc[p][s] \cdot d_s \right) \tag{5.6}$$

Figure 5.5 Results of the coloring procedure are represented by continuous squares.

where t(p) is the overall matching degree for square p and wc is the weight of the service category c. wc is determined by applications.

c. Select the square sel with the maximum overall matching degree:

$$sel = \arg\max_{p \in P} \; t(p) \tag{5.7}$$

The square sel will be the best position, where the user can have the most effective interaction, and the corresponding services will compose the best service combination.
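Steps a to c can be sketched as a single pass over the squares. This is an illustration under assumptions: a service is scored at a square by multiplying its coverage bit by its local matching degree, services are identified by integer indices, and the function name and argument layout are hypothetical.

```python
def select_combination(sc, degrees, categories, weights):
    """Pick the square and service combination with the highest overall degree.

    sc[p][s]   -- 1 if service s covers square p, else 0 (coloring result)
    degrees    -- {service index: local matching degree} for returned services
    categories -- {category name: list of service indices}
    weights    -- {category name: category weight}
    """
    best_square, best_score, best_combo = None, float("-inf"), {}
    for p, row in enumerate(sc):
        combo, total = {}, 0.0
        for cat, services in categories.items():
            candidates = [s for s in services if s in degrees]
            if not candidates:
                continue
            # Step a: best service of this category at square p (assumed score).
            s_best = max(candidates, key=lambda s: row[s] * degrees[s])
            score = row[s_best] * degrees[s_best]
            if score > 0:
                combo[cat] = s_best
            # Step b: accumulate the weighted overall matching degree of p.
            total += weights[cat] * score
        # Step c: keep the square with the maximum overall matching degree.
        if total > best_score:
            best_square, best_score, best_combo = p, total, combo
    return best_square, best_score, best_combo
```

A service that is individually strong but does not cover a square contributes nothing there, so combinations whose services are far apart score poorly at every square.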

5.4 Scenario Study: Video Calls at a Smart Office

In this section, we study a specific scenario, having video calls with a customer at a smart office, to illustrate how our context-aware service selection framework works to provide better user experiences.

5.4.1.1 The Smart Office Environment

As smart devices become more and more common in daily life, the smart office has become a popular concept in pervasive computing. Thus, we start our scenario study in such a typical smart environment.

One smart office may consist of the following devices:

1. Displays, projectors, and so on, categorized as visual devices because they can output visual images.

2. Loudspeakers, wireless headphones, and so forth, categorized as audio devices because they can output audio.

3. Microphones, categorized as voice devices because they can capture voice input.

4. Video cameras, categorized as video input devices.

5. Desktop computers, laptops, and tablet computers, categorized as compound devices because they can provide multiple services.

6. Printers and other unrelated kinds of devices.

Figure 5.6 shows the layout of our smart office. There are four working blocks in the office with a desktop computer in each block. Computer 01 has an embedded video camera and microphone. Near Computer 02, there is an external video camera and a wireless microphone. The devices and corresponding services contained are listed in Table 5.1.

Figure 5.6 Layout of the smart office.

To construct this smart environment, these devices must satisfy two basic requirements. First, they themselves must be smart, which means that certain computing ability is required so that they can think independently. Second, they must be connected together, either in a wired or wireless way, so that they can communicate with each other in order to act together. Development in mobile and wireless networks has presented several approaches for connecting these devices [43].

A smart office should also be aware of the status of both users and the environment. Thus, sensors become its most important part. For example, heat sensors can be used to collect information about temperatures; surveillance cameras can be used to track users’ motions and their face orientations. The data collected by these sensors are called context information.

Table 5.1 Devices and Services Used in Simulation

| Device Name | Service Name | Category |
|---|---|---|
| Projector | projector_display | VO |
| Loudspeaker | loudspeaker_sound | AO |
| Computer 01 | comp01_display | VO |
| | comp01_sound | AO |
| | comp01_camera | VI |
| | comp01_micro | AI |
| Computer 02 | comp02_display | VO |
| | comp02_sound | AO |
| HD VideoCam | ext_video_camera | VI |
| Wireless Micro | microphone | AI |
| Computer 03 | comp03_display | VO |
| | comp03_sound | AO |
| Computer 04 | comp04_display | VO |
| | comp04_sound | AO |

Note: AI, audio input; AO, audio output; VI, video input; VO, video output.

Suppose there is a user, named Bob, having a video call on his smartphone. Due to the small screen of his phone, he may not be satisfied. So he walks into the office that has better devices. In such a scenario, our framework should be able to detect Bob’s intentions, find the devices that can better satisfy Bob’s need, and migrate interaction to these new devices.

An HCI migration request is sent by a user device. A user device is a core device in our framework. It can be used to identify the user and to decide whether to start an application migration when the user’s context is changing. A typical user device is a smartphone because it is portable, makes user preferences easy to obtain, and has good processing capability. People frequently use smartphones to check e-mail, surf the Internet, and connect with different devices. All these activities imply users’ preferences toward certain services and devices, which the smartphone can record as user preferences in order to facilitate service matching.

After the user device decides to start an HCI migration, requests are sent to the context manager and then dispatched to device terminals. The request is written in XML. It is made up of three parts: the service description, the user preference, and user identification information. The service description should contain descriptions of the application’s related services. These descriptions must contain the essential requirements, namely service properties, so that receivers can decide whether they can offer such services based on these requirements. The user preference contains information for devices to decide whether their services can meet the user’s needs. Finally, user identification information is provided to identify who initiated the request.

In this scenario, Bob is having a video call on his smartphone, which is poorly suited to presenting video and audio. Video output service, audio output service, video input service, and audio input service are therefore the four service categories to be contained in the migration request. Bob’s requirements can be expressed as the migration of these four services in our framework. Figure 5.7 illustrates the structure of the migration request within our scenario. Among the four service categories, video output service is described in the most detail. Three properties (format, resolution, and bandwidth) denote the supported video format, video resolution, and transmission bandwidth, respectively. An XML version of this request can be found in Appendix 5.7.1.
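To make the three-part request structure concrete, the sketch below builds such a request with Python's standard `xml.etree.ElementTree` module. It is only an illustration: every element name, attribute name, and property value here is a hypothetical placeholder, and the authors' actual schema is the one given in Appendix 5.7.1.

```python
import xml.etree.ElementTree as ET

def build_migration_request(user_id, services, preferences):
    """Build a request with service description, user preference, and user ID.

    All element and attribute names are hypothetical placeholders.
    """
    root = ET.Element("migrationRequest")
    ET.SubElement(root, "userIdentification").text = user_id
    desc = ET.SubElement(root, "serviceDescription")
    for category, properties in services.items():
        svc = ET.SubElement(desc, "service", {"category": category})
        for name, value in properties.items():
            ET.SubElement(svc, "property", {"name": name}).text = str(value)
    prefs = ET.SubElement(root, "userPreference")
    for name, value in preferences.items():
        ET.SubElement(prefs, "preference", {"name": name}).text = str(value)
    return root

# Four service categories for the video call; property values are made up.
request = build_migration_request(
    "bob",
    {
        "videoOutput": {"format": "H.264", "resolution": "1280x720",
                        "bandwidth": "2Mbps"},
        "audioOutput": {},
        "videoInput": {},
        "audioInput": {},
    },
    {"screenSize": "large"},
)
xml_text = ET.tostring(request, encoding="unicode")
```

Keeping service properties separate from user preferences lets a receiving device reject a request on properties alone before it spends any effort on preference or context matching.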

As mentioned earlier, the context environment is made up of the data collected from different sensors. These data are stored on a local server called the context manager, which is responsible for monitoring context information and for providing interaction devices with access to it. Context information consists of two parts: environment context and user context. In our scenario, several user contexts (such as user position and face orientation) need to be considered by our framework because a device that offers video display service and whose screen is close to the user, or nearly face to face with the user, will achieve a high matching degree in service matching. The structure of the context information retrieved from the context manager is relatively simple (as shown in Figure 5.8). An XML version is given in Appendix 5.7.2.

Figure 5.7 Structure of service migration request in video call scenario.

The device profile is essential to the local service-matching algorithm, as mentioned in Section 5.3.4. Each device has its own device description (the device profile) that describes its abilities. The profile contains several service descriptions, each of which describes the device’s features when offering one service. Similar to the format of a request, the service description in a device profile also contains corresponding parts for matching service properties and user preferences, as mentioned in Section 5.3.2. The difference is that context information is also considered here. The relevant context information may vary with different devices and services. For example, in our scenario, Computer 02 can receive input from a keyboard, display videos, and play sound tracks. These three abilities are categorized into three different services: keyboard input service, video output service, and audio output service. Figure 5.9 illustrates the schema structure of Computer 02’s device profile, along with some essential properties and contexts of the video output service.

Figure 5.8 Structure of context information in video call scenario.

Figure 5.9 Structure of device profile of Computer 02 in video call scenario.

In our scenario, when Computer 02 receives a migration request and context information from the context manager, it starts a local service matching process. It first compares the service names and properties in the request with those in its device profile to determine whether it can offer each service. The result is that video output service and audio output service are within the matching scope; keyboard input service is unused; and video input service and audio input service are not supported. Second, for the successfully matched services, device information regarding these services is compared with the user preferences in the request to measure the divergence from the device the user expects. Finally, Computer 02 considers context information by calculating its distance to the user and the cosine similarity between the screen orientation and the user’s face orientation. The final matching degree is then sent back to the context manager. After receiving matching degrees, the context manager performs a global service combination selection. In the following section, we illustrate the simulation result of this process for our scenario.

We illustrate simulation results of our context-aware HCI service selection algorithm applied to the smart office scenario discussed earlier. We compare our results with the method presented in Wang et al. [44] and show that modeling context information and user preferences alone is insufficient for discovering an optimal service combination. Our service selection algorithm, which considers interrelations among services, is much more effective in searching for target service combinations for interaction migration. As mentioned earlier, we categorize interaction services into several groups and pick the best match in each group to form the best service combination for a position unit. In this simulation, we predefined four service categories: video output service, video input service, audio output service, and audio input service. Each device in the simulation contains several services from one or more service categories. Services provided by different devices may have different descriptions or EV functions due to the diversity in device parameters. It is easy to extend this simulation to more complex situations by simply adding customized service descriptions and registering them through a uniform service interface. The devices and the corresponding services they contain are listed in Table 5.1.

Our simulation aims to give solutions to the smart office scenario described in Section 5.4. Our service selection algorithm first scans each continuous square in a user’s UAS and finds the best service combination. Figure 5.10 shows the overall matching degrees computed by Equation 5.6 for each square in the room with regard to different selected services. The room setup is shown in Figure 5.6. The room is divided into many squares (which are not shown due to their large number), and each square is colored on a scale from #000000 to #FFFFFF corresponding to the matching degree in that square. A deeper color means a higher matching degree in that square for the selected services and therefore a better user experience with those services in that square. The matching degrees are normalized to [0, 1]. Figure 5.10a shows matching degrees for the locally optimal service combination of each square in the room. The graph shows two potential areas for interaction migration, marked by solid and dashed circles in Figure 5.10a. The best service combination selected in the solid circle is {comp01_display, comp01_sound, comp01_camera, comp01_micro}, and the best one in the dashed circle is {comp02_display, comp02_sound, ext_video_camera, microphone}. It is reasonable to have multiple high-scoring areas in a UAS because different service combinations may satisfy a user’s migration requests. For the final decision on interaction migration, one may list all potential service combinations in matching degree order, or one may just pick the service combination with the highest score.

Figure 5.10 Overall matching degrees (Equation 5.6) of each square in the room with regard to different selected services. (a) Matching degrees for the locally optimal service combination of each square in the room; (b) matching degrees of each square for the globally optimal service combination; (c) matching degrees of each square using the selection algorithm of [44].

After local matching degrees are assigned to each square, the best service combination is picked from the square with the highest matching degree (the global selection procedure). In our simulation, the squares with the best matching degrees occur near Computer 01, whose service combination is {comp01_display, comp01_sound, comp01_camera, comp01_micro}. The target device for interaction migration is therefore Computer 01. Figure 5.10b illustrates the matching degrees of each square for the globally optimal service combination. One observation is that the chosen services are close to each other (in fact, on the same device). This is an ideal situation for interaction migration because users can easily access the devices, which improves the user experience.

For algorithm comparison, we apply the service selection algorithm from another study [44] to this smart office scenario and present the result in Figure 5.10c. The selected services in Figure 5.10c are {projector_display, loudspeaker_sound, ext_video_camera, microphone}, and matching degrees are indicated by gray density. Each individual service selected is the one that best matches the user’s requests (e.g., the selected projector is powerful in video output due to its large screen and high resolution). However, the overall matching degree in the room for this service combination is much lower than that of the combination we selected (Figure 5.10b). The reason is that our algorithm takes interrelations among services into consideration, which helps it discover a good service combination.

Response latency is always a crucial factor in HCI technology. For service-oriented interaction migration, the selection algorithm may be a bottleneck for the overall migration process. An efficient service selection algorithm needs to scale with an increasing number of services. In our algorithm, we compute matching degrees for each square in the UAS by selecting the best service within each service category. Let the number of service categories be C, let each service category contain S services, and let the total number of squares in the UAS be N. The overall complexity of our service selection algorithm is O(CSN). The computing time grows quadratically on average as more services are added because C is linearly related to S. Another key factor influencing computation time is the number of squares. For an interaction migration area of fixed size, dividing it into more squares usually means more accurate service selection results but higher computing costs. Fortunately, the computing time grows linearly with the number of squares (as shown in Figure 5.11). Moreover, a square usually does not need to be very small because users may feel no difference between two adjacent squares if the squares are smaller than the users’ sensitivity criterion.
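As a rough worked example of the linear growth in N, the sketch below counts how many service evaluations one selection pass performs, using the per-category service counts that can be read off Table 5.1. The helper name and the square counts are assumptions chosen for illustration; no timing claim is implied.

```python
# Services per category, counted from Table 5.1.
SERVICES_PER_CATEGORY = {"VO": 5, "AO": 5, "VI": 2, "AI": 2}

def count_evaluations(num_squares, services_per_category):
    """Each square scans every service of every category once."""
    return num_squares * sum(services_per_category.values())

for n in (100, 400):
    print(n, count_evaluations(n, SERVICES_PER_CATEGORY))
# prints "100 1400" then "400 5600"
```

Quadrupling the number of squares quadruples the work, which matches the linear trend reported for Figure 5.11.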

5.5 A Web Service–Based HCI Migration Framework

Building on the HCI service selection described above, we propose a Web service–based HCI migration framework to support an open pervasive space full of dynamically emerging and disappearing devices. To avoid costly centralized services and improve scalability, our framework resides on each device, composing a peer-to-peer structure. With user preferences and context awareness implemented in each device, we do not need a centralized context manager. Therefore, there are some differences between the HCI migration framework and the service selection structure presented earlier, including system components and interaction processes.

Figure 5.11 Computing time for increasing number of squares divided with a fixed number of services.

5.5.1 HCI Migration Support Environment

Traditional implementations of application user interface migration rely on a centralized service, which is costly and awkward when faced with newly emerging devices. Static device registration is inadequate in open pervasive surroundings because, unlike in a local smart zone, we can never know what devices will be involved in an interaction area. Today, however, users tend to prefer services that can support dynamic updates of device information; otherwise, they would instead use one device to finish the work regardless of its inconvenience [45]. Therefore, our framework design for interaction migration aims at the hot-swapping of interaction devices. As shown in Figure 5.12, the HCI migration support environment (MSE) lies between the application level and the OS level, acting as middleware that hides platform-dependent details from users and provides interaction migration APIs for upper applications. For each platform within the interaction zone, an MSE defines the boundary of the application and frees it from the burdensome details of creating multiplatform interactions, such as communication with other MSEs. The application merely needs to submit interaction requirements and tasks and then leave the details to the MSE. With each device represented as an interaction Web service (discussed in the following sections), the whole connection structure resembles a peer-to-peer framework and thus avoids centralized services.

Figure 5.12 Framework position between application and OS system.

Figure 5.13 Framework of HCI migration support environment.

The framework can be divided into two ends (shown in Figure 5.13):

1. A front end that is platform-independent and responsible for handling user preferences and context awareness.

2. A back end that is platform-dependent and in charge of interaction migration and communication with other devices.

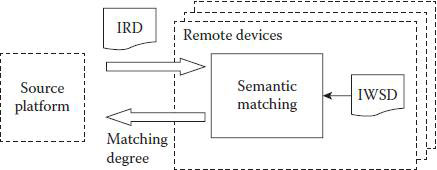

The front end formalizes user preferences, context information, and application constraints as the interaction requirements description. The back end then uses this description as input for the interaction devices manager (IDM), which fetches device information from the network and performs semantic matching to select appropriate devices for migration. The IDM also maintains platform-dependent information and acts as an interaction device to respond to requests from other platforms. Devices that provide interaction are represented as interaction Web services and are made available for semantic matching. After target devices are selected, interactions are performed over the network.

5.5.2 Interaction Requirements Description

HCI involves both human behavior and application behavior. With this in mind, we need to formalize a description, namely an interaction requirements description (IRD), that includes both user requirements and application responses.

Figure 5.14 demonstrates the composition of an application in general: program logic, interaction elements, and interaction constraints. Program logic assures the correct reaction of an application to certain interaction input. Therefore, program logic can be separated from the interaction framework, which makes our framework independent of application functionality. The IRD includes three parts:

1. Definition of interaction elements. For a graphical user interface (GUI), interaction elements include buttons, links, and other interface elements. In our more general setting, the definition covers all input and output items during interaction, together with descriptions of their names, types, and functionality.

2. Interaction constraints. There are two sources of constraints. First, requirements on interaction devices proposed by users and context-driven constraints are formalized as constraint descriptions used to select proper interaction devices. For example, the physical position relationship between a user and devices is taken into consideration. The second kind of constraint arises from the application interaction process. For example, if the application interface contains audio material, the constraints should require support for audio output. When a conflict occurs between user-defined and application-dependent constraints, we grant user-defined constraints a higher priority because interactions should first and foremost satisfy users’ expectations.

Figure 5.14 General compositions of applications.

3. Interaction display moment. Defined by program logic, the moment for interaction display ensures the continuity of the application process. When the moment for interaction display is reached, the application sends interaction requests through the MSE. All the elements displayed at the same moment make up the distributed HCI.
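The priority rule for conflicting constraints in the IRD can be sketched as a simple merge in which user-defined values override application-dependent ones. The flat key-value representation, the constraint names, and the function `merge_constraints` are assumptions for illustration; the text does not prescribe a data format.

```python
def merge_constraints(application_constraints, user_constraints):
    """Combine both constraint sources; user-defined values win on conflict.

    Returns the merged constraints and the sorted names of conflicting keys.
    """
    merged = dict(application_constraints)
    conflicts = sorted(
        key for key in user_constraints
        if key in merged and merged[key] != user_constraints[key]
    )
    # User-defined constraints take priority over application-dependent ones.
    merged.update(user_constraints)
    return merged, conflicts

# Hypothetical constraints for an application with audio material.
app = {"audio_output": True, "min_resolution": "1280x720"}
user = {"min_resolution": "1920x1080", "max_distance_m": 3}
merged, conflicts = merge_constraints(app, user)
```

Reporting the conflicting keys alongside the merged result makes it possible to log or surface overrides instead of applying them silently.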

5.5.3 Interaction Devices Manager

Heterogeneous device structures always tax multiplatform interaction because it is hard to design a universally compatible interface, and it is costly to maintain several copies for multiplatform support. Another crucial problem for interaction migration is the matching of appropriate platforms. An intuitive idea is to analyze the migration requests from application users and fetch the best-fitting device service according to the device descriptions. Fortunately, the IRD discussed earlier combines user requests, physical contexts, and application interaction elements, which contributes to the accurate and swift matching of interaction devices. Nevertheless, problems remain in managing device information and providing a proper matching mechanism.

Our solution is to deploy an IDM to take charge of each connected device. When a new device emerges, the IDM on each device within the network creates a new instance to represent it. The instance is a skeleton representation of the device, which reduces the storage burden; its contents are fetched when applications request migration. When receiving a migration request, the IDM first creates an IRD based on applications, user behaviors, and context, and then sends the IRD to connected devices. Each remote device is represented as an interaction Web service. Based on this concept, the IDM on each remote device maintains an interaction Web service description (IWSD), which includes interaction-related information such as display resolution, window size, and support for other interaction modes. Semantic analysis is then carried out to calculate the matching degree between the device’s own IWSD and the provided IRD. Semantic similarity measures the ability of the device to fulfill the constraints of this interaction. All matching degrees are returned to the source platform for a final decision. For a successfully matched device (the one with the highest similarity), the MSE notifies the device and locks its relevant resources to prevent them from being occupied by other interaction requests. It is entirely possible to match multiple devices (devices of the same kind), and the MSE on the source platform will decide, according to interaction semantics, whether to distribute parts of the interaction or the whole interaction process to those devices. Once the interaction devices are decided, migration requests are sent to the device terminals and a connection is established.

The key feature of our framework structure is the absence of a centralized server, which is replaced by an HCI MSE on each device. Such a framework is similar to the well-known peer-to-peer structure. The devices that receive interaction requests from the MSE on a source platform are represented to application users as interaction Web services (IWSs). For each IWS, an IDM takes charge of maintaining the IWSD of the device (by static configuration or auto-generation), which is then compared with the IRD received from the source platform. The matching degrees are returned to the source platform. The MSE on the source platform selects the devices whose Web service descriptions best fit the IRD and decides the target platforms (see Figure 5.15). The key consideration of such a design is that we distribute the semantic matching process to remote devices, in a parallel-computing form, which largely relieves the data processing burden on the source platform. Traditional semantic technologies, such as Web Service Description Language–Semantics (WSDL-S), can be applied to implement this module. Another advantage of this framework is that it is independent of communication protocols, which reduces the difficulty of implementation.
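The distributed matching flow can be simulated in a single process as below. This is only a sketch under loud assumptions: real devices would compute their degrees remotely and apply semantic analysis, whereas here a thread pool stands in for the remote IDMs and the matching degree is simply the share of IRD constraints that an IWSD satisfies. All names and the dictionary format are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def matching_degree(iwsd, ird):
    """Stand-in for semantic matching: share of IRD constraints satisfied."""
    if not ird:
        return 0.0
    satisfied = sum(1 for key, needed in ird.items() if iwsd.get(key) == needed)
    return satisfied / len(ird)

def select_target(ird, remote_iwsds):
    """Collect a degree from every remote device and return the best match."""
    names = list(remote_iwsds)
    with ThreadPoolExecutor() as pool:
        # Each call stands in for the IDM running on one remote device.
        degrees = list(pool.map(
            lambda name: matching_degree(remote_iwsds[name], ird), names))
    # The source platform makes the final decision from the returned degrees.
    return max(zip(names, degrees), key=lambda pair: pair[1])

# Hypothetical IRD and device descriptions.
ird = {"audio_output": True, "display": "1920x1080"}
devices = {
    "tablet": {"audio_output": True, "display": "1280x800"},
    "tv": {"audio_output": True, "display": "1920x1080"},
}
target = select_target(ird, devices)
```

Only a single number travels back from each device, so the source platform never needs to fetch or store the full device descriptions.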

5.5.5 Runtime Migration Demonstration

Supporting multiplatform interaction migration means integrating platform information and creating appropriate interactions under the correct circumstances. Our migration service resides on each device, composing a peer-to-peer structure that avoids static registration and supports dynamic device awareness. Such a design, though heavy for small devices [35], shows potential, especially as the data processing capacity of embedded devices develops. To ensure practicality, our framework is designed to meet three main requirements: dynamic device awareness, user preference, and the usability criterion. To meet the usability criterion and relieve the burden of data processing on the client side, we follow two principles in our design:

1. Less data storage on each device. Each device only stores its own platform information. Device awareness is accomplished by using negotiation among devices. Based on this mechanism, device descriptions are not fetched by other devices, thus saving storage.

Figure 5.15 Semantic matching.

2. Less data processing on each device. Task model tools such as TERESA may exceed the processing capability of current small devices. Therefore, we recommend semantic analysis of Web service descriptions for device selection. Our distributed semantic matching process also follows this principle to ease the pressure on the client side.

With these design choices, our migration process consists of the following main steps (the sequence of output interaction migration is demonstrated in Figure 5.16):

1. Request for interaction migration. The application requests interaction migration through APIs provided by the MSE. The MSE receives interaction elements from the application and combines them with user preferences to formalize interaction constraints. Requests can also specify the moment for interaction migration (if not specified, the migration takes effect immediately). User preferences can be obtained from manual input or from physical environment parameters, such as the positional relationship between the user and devices as detected by GPS, wireless network, or static configuration. After extracting an IRD from the request, the MSE sends an interaction message to devices in the vicinity, asking for device information.

2. Interaction device selection. Device information is represented in a Web service description language (such as WSDL-S), and the IDM is in charge of the semantics of these Web services. After receiving an IRD from a source platform, all IDMs calculate semantic matching degrees according to the IRD, lock themselves, and return the results. The MSE collects these semantic matching degrees from the devices and selects the highest matches among them. The MSE then multicasts the matching results to these devices to establish an interaction connection and notifies the devices not chosen to release their locks.

Figure 5.16 The migration process.

3. Interaction rendering. Once the MSE has determined the interaction devices within the network, the remote devices represented by the matched Web services receive messages from the source platform. The interaction contents carried in the messages are rendered on the remote devices by calling device drivers. The IRD is also transmitted to the remote devices through the network. For a GUI, remote devices can deploy auto-generation tools to create the GUI from the given interaction elements. Moreover, callback instances are also transmitted to remote devices for interaction feedback.

4. Interaction termination. In our framework design, the display moment for interaction elements is determined by program logic. For the interaction output process, the application invokes the interaction termination API provided by the MSE to instruct remote devices to clear output. For the interaction input process, the interaction finishes once input results are returned from remote devices to the source platform. Resource locks on remote devices are released after the interaction’s termination.

For input interaction migration, the whole process is similar, except that input results are returned to the application at the end of the process. The migration process can also be divided according to physical position. The application and MSE objects on the left side of the sequence diagram reside on the source platform, which requests the migration. The IDM, IWS, and device objects on the right side of the sequence diagram reside on the target platforms (or remote devices), which receive requests and provide interaction.
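The four steps of output migration, and in particular the way resource locks are taken and released, can be traced with a small in-memory sketch. The classes below are hypothetical stand-ins for the IDM and MSE. The matching degree is passed in as a fixed number rather than computed, and method calls replace network messages.

```python
class IDM:
    """Hypothetical stand-in for the manager on one remote device."""

    def __init__(self, name: str, degree: float):
        self.name = name
        self.degree = degree  # precomputed matching degree, for brevity
        self.locked = False
        self.output = None

    def match_and_lock(self):
        """Step 2: report the matching degree and lock, or decline if busy."""
        if self.locked:
            return None
        self.locked = True
        return self.degree

    def render(self, content: str):
        """Step 3: show the migrated interaction content."""
        self.output = content

    def release(self):
        """Clear any output and free the device for other requests."""
        self.output = None
        self.locked = False


class MSE:
    """Hypothetical stand-in for the engine on the source platform."""

    def __init__(self, idms):
        self.idms = idms
        self.targets = []

    def migrate(self, content: str):
        # Steps 1-2: query every device; only devices that were free answer.
        replies = {}
        for idm in self.idms:
            degree = idm.match_and_lock()
            if degree is not None:
                replies[idm] = degree
        if not replies:
            return []
        best = max(replies.values())
        self.targets = [idm for idm, d in replies.items() if d == best]
        # Devices that answered but were not chosen release their locks.
        for idm in replies:
            if idm not in self.targets:
                idm.release()
        # Step 3: render on the chosen device(s).
        for idm in self.targets:
            idm.render(content)
        return [idm.name for idm in self.targets]

    def terminate(self):
        # Step 4: clear output and release the remaining locks.
        for idm in self.targets:
            idm.release()
        self.targets = []


tv, phone = IDM("tv", 0.9), IDM("phone", 0.4)
mse = MSE([tv, phone])
print(mse.migrate("slides"))     # ['tv']
print(tv.locked, phone.locked)   # True False
mse.terminate()
print(tv.locked, tv.output)      # False None
```

A device that is already locked by another request simply does not answer, so it is never selected and its existing lock is left untouched.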

We proposed a service-oriented framework for HCI migration and provided a context-driven method for service selection within a multimodal environment. Our method extracts user preferences from users’ interaction history to describe the devices that users expect to interact with. Moreover, two procedures, local service matching and global combination selection, are proposed to discover the best combination of interaction services. By integrating user preferences, service contexts, and environment contexts, our service matching algorithm can handle context changes and provide a better user experience. We also presented a Web service–based HCI migration framework, which avoids centralized servers and is thus able to support interactions with dynamically emerging and disappearing devices.

Future work will improve the continuity of migrated interactions and conflict resolution for busy devices. We are also interested in applying a more flexible service description format to support more accurate semantics and user-customized service types.

<?xml version="1.0"?>
<xsd:schema xmlns:xsd="http://www.w3.org/2001/XMLSchema">
  <xsd:element name="Request">
    <xsd:complexType>
      <xsd:sequence>
        <xsd:element name="App" type="xsd:string"/>
        <xsd:element name="UserID" type="xsd:string"/>
        <!-- Only VideoOutputService is defined in this listing. -->
        <xsd:element ref="VideoOutputService"/>
        <xsd:element ref="AudioOutputService"/>
        <xsd:element ref="VideoInputService"/>
        <xsd:element ref="AudioInputService"/>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
  <xsd:element name="VideoOutputService">
    <xsd:complexType>
      <xsd:sequence>
        <xsd:element ref="Properties"/>
        <xsd:element ref="Preferences"/>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
  <xsd:element name="Properties">
    <xsd:complexType>
      <xsd:sequence>
        <xsd:element name="Format" type="xsd:string"/>
        <xsd:element name="Resolution" type="xsd:string"/>
        <xsd:element name="Bandwidth" type="xsd:string"/>
        <xsd:element name="Others" type="xsd:string"/>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
  <xsd:element name="Preferences">
    <xsd:complexType>
      <xsd:sequence>
        <xsd:element name="DeviceInfo" type="xsd:string"/>
        <xsd:element name="Others" type="xsd:string"/>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
</xsd:schema>
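As a rough illustration of what an instance of the request schema above might look like, the following sketch assembles one with Python's standard xml.etree.ElementTree module. Element names are written without spaces, since XML names cannot contain them. Only the video output service is filled in because it is the only service the listing defines, so the result is a partial instance. The function name build_request and the sample values are assumptions.

```python
import xml.etree.ElementTree as ET


def build_request(app: str, user_id: str, video_props: dict, device_info: str) -> ET.Element:
    """Assemble a partial Request element carrying one video output service."""
    request = ET.Element("Request")
    ET.SubElement(request, "App").text = app
    ET.SubElement(request, "UserID").text = user_id

    service = ET.SubElement(request, "VideoOutputService")
    properties = ET.SubElement(service, "Properties")
    # The schema fixes the order of these four children.
    for name in ("Format", "Resolution", "Bandwidth", "Others"):
        ET.SubElement(properties, name).text = video_props.get(name, "")

    preferences = ET.SubElement(service, "Preferences")
    ET.SubElement(preferences, "DeviceInfo").text = device_info
    ET.SubElement(preferences, "Others").text = ""
    return request


request = build_request(
    app="SlideViewer",                      # sample value
    user_id="user-01",                      # sample value
    video_props={"Format": "H.264", "Resolution": "1920x1080"},
    device_info="wall display",
)
print(ET.tostring(request, encoding="unicode"))
```

Properties missing from video_props are emitted as empty elements so that the child order required by the schema's sequence is preserved.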

<?xml version="1.0"?>
<xsd:schema xmlns:xsd="http://www.w3.org/2001/XMLSchema">
  <xsd:element name="Context">
    <xsd:complexType>
      <xsd:sequence>
        <xsd:element ref="EnvironmentContext"/>
        <xsd:element ref="UserContext"/>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
  <xsd:element name="EnvironmentContext">
    <xsd:complexType>
      <xsd:sequence>
        <xsd:element name="Time" type="xsd:string"/>
        <xsd:element name="Temperature" type="xsd:string"/>
        <xsd:element name="Brightness" type="xsd:string"/>
        <xsd:element name="Others" type="xsd:string"/>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
  <xsd:element name="UserContext">
    <xsd:complexType>
      <xsd:sequence>
        <xsd:element name="Position" type="xsd:string"/>
        <xsd:element name="Orientation" type="xsd:string"/>
        <xsd:element name="Others" type="xsd:string"/>
      </xsd:sequence>
      <!-- Attribute declarations must follow the content model. -->
      <xsd:attribute ref="UserIdentification"/>
    </xsd:complexType>
  </xsd:element>
</xsd:schema>

<?xml version="1.0"?>
<xsd:schema xmlns:xsd="http://www.w3.org/2001/XMLSchema">
  <xsd:element name="Profile">
    <xsd:complexType>
      <xsd:sequence>
        <!-- Only VideoOutputService is defined in this listing. -->
        <xsd:element ref="VideoOutputService"/>
        <xsd:element ref="AudioOutputService"/>
        <xsd:element ref="KeyboardInputService"/>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
  <xsd:element name="VideoOutputService">
    <xsd:complexType>
      <xsd:sequence>
        <xsd:element ref="Properties"/>
        <xsd:element ref="Context"/>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
  <xsd:element name="Properties">
    <xsd:complexType>
      <xsd:sequence>
        <xsd:element name="Others" type="xsd:string"/>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
  <xsd:element name="Context">
    <xsd:complexType>
      <xsd:sequence>
        <xsd:element name="Position" type="xsd:string"/>
        <xsd:element name="Orientation" type="xsd:string"/>
        <xsd:element name="Others" type="xsd:string"/>
      </xsd:sequence>
    </xsd:complexType>
  </xsd:element>
</xsd:schema>

Human–Computer Interaction

J. A. Jacko and A. Sears, Eds., The Human-Computer Interaction Handbook: Fundamentals, Evolving Technologies and Emerging Applications. Hillsdale, NJ: L. Erlbaum Associates Inc., 2003.

This book provides HCI theories, principles, advances, and case studies, and it captures the current and emerging subdisciplines within HCI related to research, development, and practice.

Multimodal Interaction

This is the homepage of the W3C Multimodal Interaction Working Group. It provides information such as the definition of multimodal interaction, the multimodal architecture, and the specifications of multimodal interaction (e.g., EMMA, InkML, and EmotionML).

ConcurTaskTrees

http://giove.cnuce.cnr.it/tools/CTTE/CTT_publications/index.html

ConcurTaskTrees is a notation for task model specifications used to design interactive applications. This site provides a variety of information about the ConcurTaskTrees features, tools to develop task models, and related publications.

Web Service

This page contains information about several Apache projects related to Web services such as Axiom, SOAP, XML-RPC, and WSS4J.

WSDL

WSDL is an XML language for describing Web services. This page describes the Web Services Description Language Version 2.0 (WSDL 2.0).

Semantic Matching

This site provides information about concepts, technologies, tools, and even evaluation datasets of semantic matching.

1. M. Weiser, The computer for the 21st century, Scientific American, vol. 265, no. 3, pp. 94–104, 1991.

2. R. Bandelloni, F. Paternò, Flexible interface migration, in Proceedings of ACM IUI, 2004.

3. A. Sarmento, Issues of Human Computer Interaction, IRM Press, 2004.

4. F. Paternò, Model-Based Design and Evaluation of Interactive Applications, Springer-Verlag, Berlin, Germany, 1999.

5. K. Luyten, J. Van den Bergh, C. Vandervelpen, K. Coninx, Designing distributed user interfaces for ambient intelligent environments using models and simulations, Computers and Graphics, vol. 30, no. 5, pp. 702–713, 2006.

6. Y. Shen, M. Wang, M. Guo, Towards a web service based HCI migration framework, in Proceedings of the 6th International Conference on Embedded and Multimedia Computing (EMC-11), 2011.

7. F. Paternò, C. Santoro, A. Scorcia, A migration platform based on web services for migratory web applications, Journal of Web Engineering, vol. 7, no. 3, pp. 220–228, 2008.

8. R. Chinnici, J.-J. Moreau, A. Ryman, S. Weerawarana, Web services description language (WSDL) version 2.0 part 1: Core language, World Wide Web Consortium, Recommendation REC-wsdl20-20070626, June 2007.

9. R. Khalaf, N. Mukhi, S. Weerawarana, In Proc. of the WWW Conference (Alternate Paper Tracks), Budapest, Hungary, May 20–24, 2003.

10. S.A. McIlraith, T.C. Son, H. Zeng, Semantic web services, IEEE Intelligent Systems, vol. 16, no. 2, pp. 46–53, 2001.

11. D.L. Martin, M. Paolucci, S.A. McIlraith, M.H. Burstein, D.V. McDermott, D.L. McGuinness, B. Parsia, et al., Bringing semantics to web services: The OWL-S approach, in Proceedings of SWSWPC, pp. 26–42, 2004.

12. M. Klusch, B. Fries, K.P. Sycara, Automated semantic web service discovery with OWLS-MX, in Proceedings of AAMAS, pp. 915–922, 2006.

13. A. Bandara, T.R. Payne, D. De Roure, T. Lewis, A semantic framework for priority-based service matching in pervasive environments, in Proceedings of OTM Workshops, pp. 783–793, 2007.

14. Z. Wu, Q. Wu, H. Cheng, G. Pan, M. Zhao, J. Sun, Scudware: A semantic and adaptive middleware platform for smart vehicle space, IEEE Transactions on Intelligent Transportation Systems, vol. 8, no. 1, pp. 121–132, 2007.

15. S.B. Mokhtar, A. Kaul, N. Georgantas, V. Issarny, Efficient semantic service discovery in pervasive computing environments, in Proceedings of Middleware, pp. 240–259, 2006.

16. D. Chakraborty, A. Joshi, Y. Yesha, T.W. Finin, Toward distributed service discovery in pervasive computing environments, IEEE Transactions on Mobile Computing, vol. 5, no. 2, pp. 97–112, 2006.

17. A.K. Dey, Providing Architectural Support for Building Context-Aware Applications, PhD thesis, Georgia Institute of Technology, College of Computing, Atlanta, GA, 2000.

18. B. Medjahed, Y. Atif, Context-based matching for web service composition, Distributed and Parallel Databases, vol. 21, no. 1, pp. 5–37, 2007.

19. C. Lee, A. Helal, Context attributes: An approach to enable context-awareness for service discovery, in Proceedings of SAINT, pp. 22–30, 2003.

20. P. TalebiFard, V.C.M. Leung, Context-aware mobility management in heterogeneous network environments, Journal of Wireless Mobile Networks, Ubiquitous Computing, and Dependable Applications, vol. 2, no. 2, pp. 19–32, 2011.

21. F. Morvan, A. Hameurlain, A mobile relational algebra, Mobile Information Systems, vol. 7, no. 1, pp. 1–20, 2011.

22. S. Gao, J. Krogstie, K. Siau, Developing an instrument to measure the adoption of mobile services, Mobile Information Systems, vol. 7, no. 1, pp. 45–67, 2011.