14 Laphroaig screams high five to the heavens!

Gott bewahre mich vor jemand, der nur ein Büchlein gelesen hat; эTO самиздат.

The MD5 hash of this PDF is5EAF00D25C14232555A51A50B126746C. March 20, 2017.

€0, $0 USD, $0 AUD, 10s 6d GBP, 0 RSD, 0 SEK, $50 CAD, 6 × 1029 Pengő (3 × 108 Adőpengő).

Neighbors, please join me in reading this fifteenth release of the International Journal of Proof of Concept or Get the Fuck Out, a friendly little collection of articles for ladies and gentlemen of distinguished ability and taste in the field of reverse engineering and the study of weird machines. This release is a gift to our fine neighbors in Heidelberg, Canberra, and Miami.

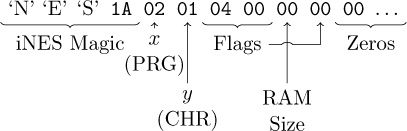

After our paper release, and only when quality control has been passed, we will make an electronic release with a filename of pocorgtfo14.pdf. It is a valid PDF, ZIP, and a cartridge ROM for the Nintendo Entertainment System (NES).

On page 14, Vicki Pfau shares with us the story of how she reverse engineered the Pokémon Z-Ring, an accessory for the Nintendo 3DS whose wireless connection uses audio, rather than radio. In true PoC||GTFO spirit, she then re-implements this protocol for the classic GameBoy.

Pastor Manul Laphroaig is back with a new sermon on page 32 concerning Liet Kynes, Desert Studies, and the Weirding Way.

Taylor Hornby on page 37 shares with us some handy techniques for communicating between processors by reading shared memory pages, without writes.

Mike Myers on page 46 shares some tricks for breaking Windows user-mode keyloggers through the injection of fake events.

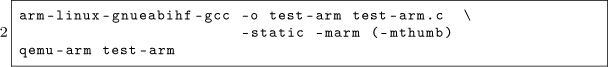

Niek Timmers and Albert Spruyt consider a rather specific, but in these days important, question in exploitation: suppose that there is a region of memory that is encrypted, but not validated or write-protected. You haven’t got the key, so you’re able to corrupt it, but only in multiples of the block size and only without a clue as to which bits will become what. On page 66, they calculate the odds of that corrupted code becoming the equivalent of a NOP sled in ARM and Thumb, in userland and kernel, on bare metal and in emulation.

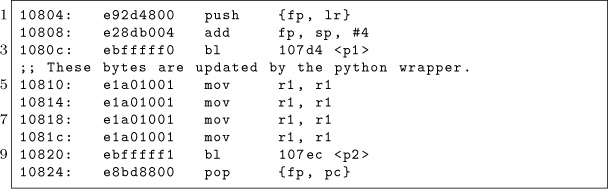

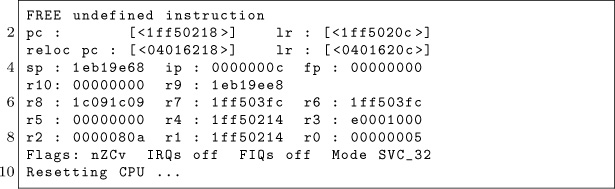

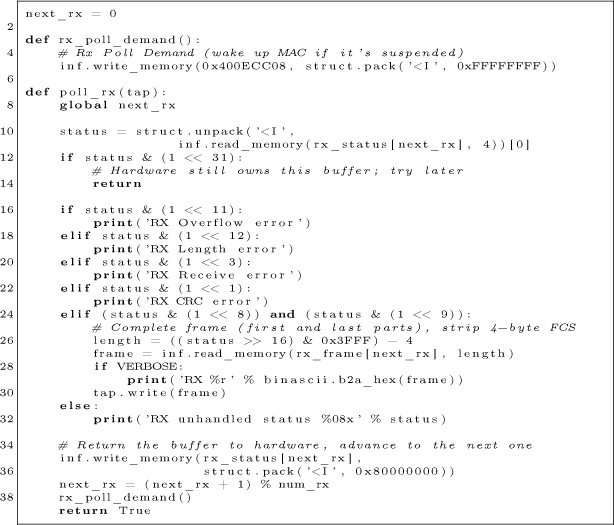

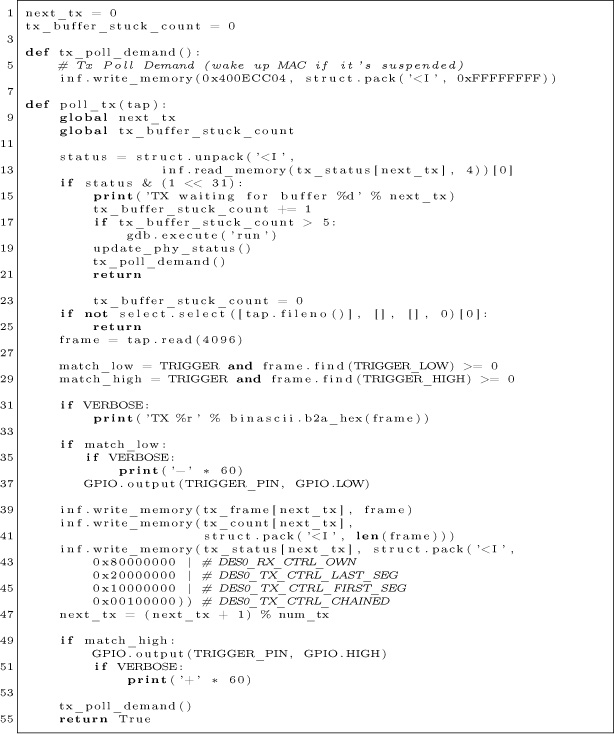

In PoC||GTFO 13:4, Micah Elizabeth Scott shared with us her epic tale of hacking a Wacom tablet. Her firmware dump in that article depended upon voltage-glitching a device over USB, which is made considerably easier by underclocking both the target and the USB bus. That was possible because she used the synchronous clock on an SPI bus to shuffle USB packets between her underclocked domain and real-time. In her latest article, to be found on page 74, she explains how to bridge an underclocked Ethernet network by routing packets over GDB, OpenOCD, and a JTAG/SWD bus.

Geoff Chappell is back again, ready to take you to a Windows Wonderland, where you will first achieve a Mad Hatter’s enlightenment, then wonder what the Caterpillar was smoking. Seven years after the Stuxnet hype, you will finally get the straight explanation of how its Control Panel shortcuts were abused. Just as in 2010, when he warned that bugs might remain, and in 2015 when Microsoft admitted that bugs did in fact remain, Geoff still thinks that some funny behaviors are lurking inside of the Control Panel and .LNK files. You will find his article on page 89, and remember what the dormouse said!

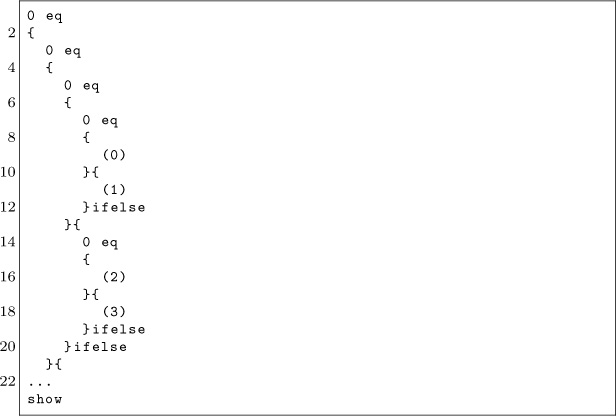

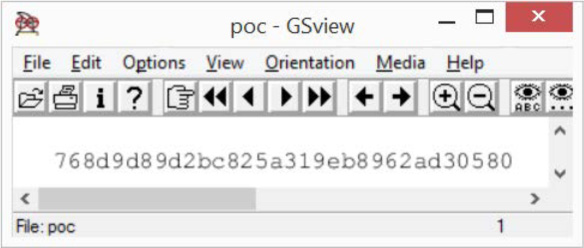

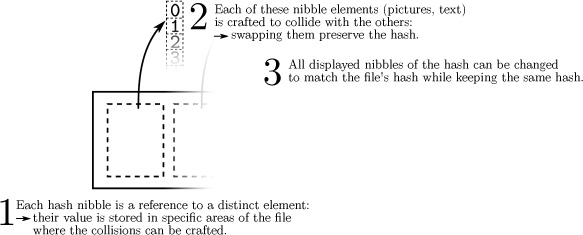

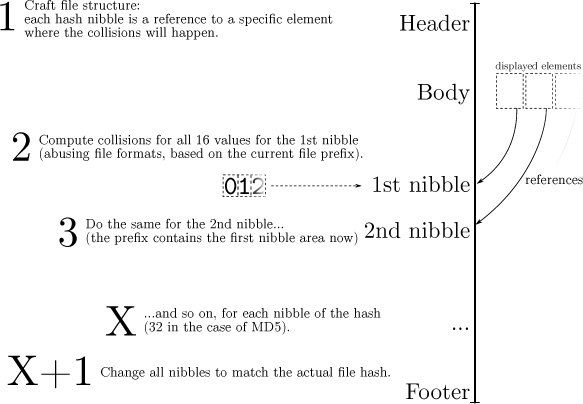

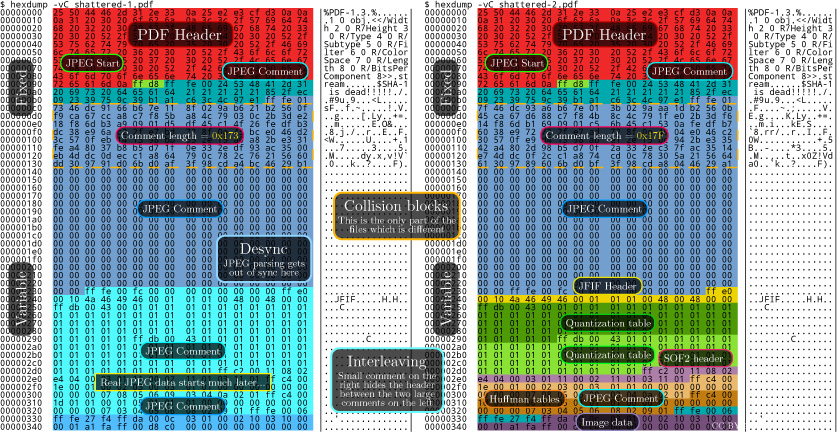

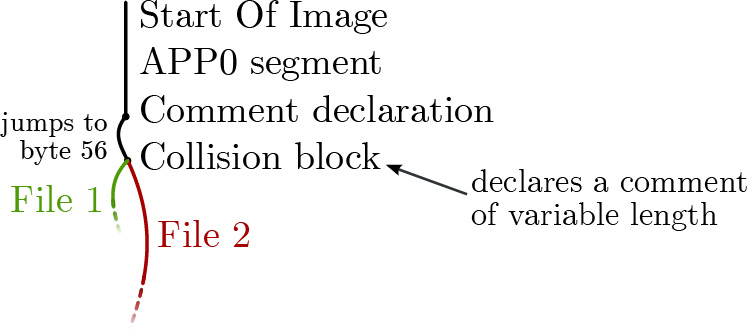

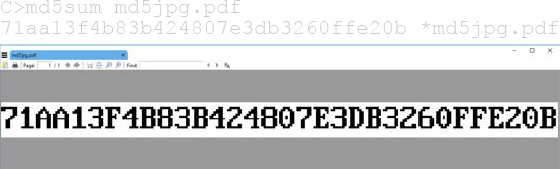

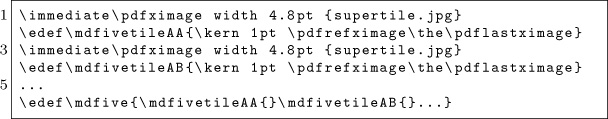

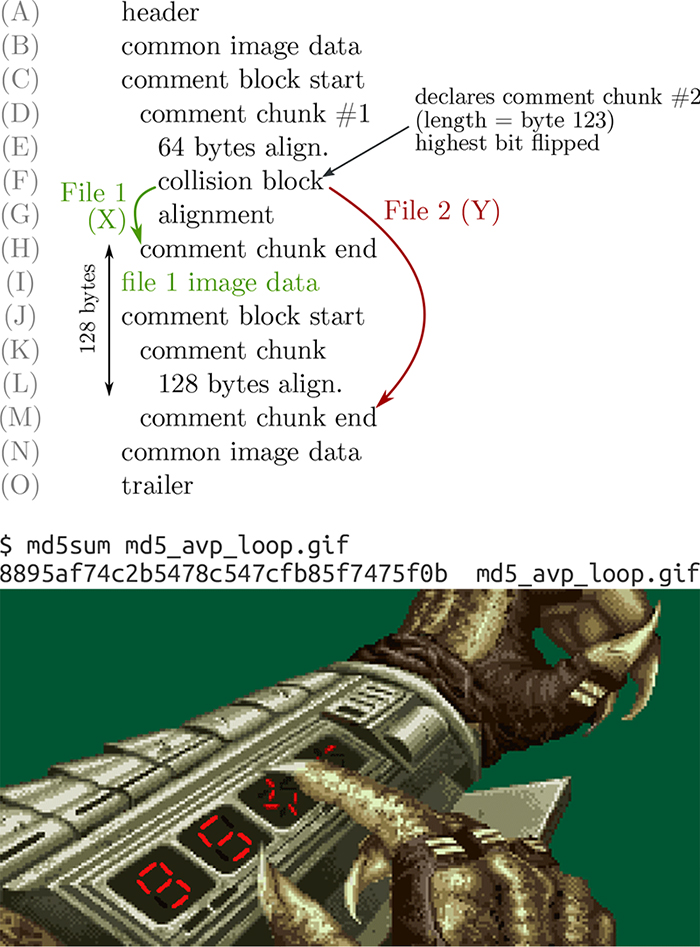

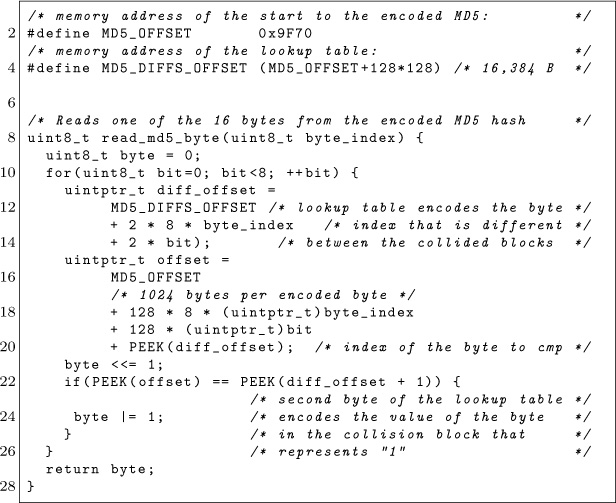

With the recent publication of a collided SHA1 PDF by the good neighbors at CWI and Google Research, folks have asked us to begin publishing SHA1 hashes instead of the MD5 sums that we traditionally publish. We might begin that in our next release, but for now, we received a flurry of nifty MD5 collisions. On page 112, Greg Kopf will show you how to make a PostScript image that contains its own checksum. On page 122, Mako describes a nifty trick for doing the same to a PDF, and on page 130 is Kristoffer Janke’s trick for generating a GIF that contains its own MD5 checksum.

On page 138, the Evans Sultanik and Teran describe how they coerced this PDF to be an NES ROM that, when run, prints its own MD5 checksum.

14:02 Z-Ring Phreaking from a Gameboy

by Vicki Pfau

At the end of last year, following their usual three-year cycle, Nintendo released a new generation of Pokémon games for their latest portable console, the Nintendo 3DS. This time, their new entry in the series spectacularly destroyed several sales records, becoming the most pre-ordered game in Nintendo’s history. And of course, along with a new Pokémon title, there are always several things that follow suit, such as a new season of the long running anime, a flood of cheapo toys, and datamining the latest games into oblivion. This article is not about the anime or the datamining; rather, it’s about one of the cheapo toys.

The two new games, Pokémon Sun and Pokémon Moon, focus on a series of four islands known as Alola in the middle of the ocean. Alola is totally not Hawaii.0 The game opens with a cutscene of a mysterious girl holding a bag and running away from several other mysterious figures. Near the beginning of the game, the player character runs into this mystery girl, known as Lillie, as she runs up to a bridge, and a rare Pokémon named Nebby pops out of the bag and refuses to go back in. It shudders in fear on the bridge as it’s harried by a pack of birds—sorry, Flying type Pokémons. The player character runs up to protect the Pokémon, but instead gets pecked at mercilessly.

Nebby responds by blowing up the bridge. The player and Nebby fall to their certain doom, only to be saved by the Guardian Pokémon of the island, Tapu Koko, who grabs them right before they hit the bottom of the ravine. Tapu Koko flies up to where Lillie is watching in awe, and delivers the pair along with an ugly stone that happens to have a well-defined Z shape on it. This sparkling stone is crafted by the kahuna of the island1 into what is known as a Z-Ring. So obviously there’s a toy of this.

In the game, the Z-Ring is an ugly, bulky stone bracelet given to random 11-year old children. You shove sparkling Z-Crystals onto it, and it lets you activate special Z-Powers on your Pokémon, unlocking super-special-ultimate Z-Moves to devastate an opponent. In real life, the Z-Ring is an ugly, bulky plastic bracelet given to random 11-year old children. You shove plastic Z-Crystals onto it, and it plays super-compressed audio as lights flash, and the ring vibrates a bit. More importantly, when you activate a Z-Power in-game, it somehow signals the physical Z-Ring to play the associated sound, regardless of which cheap plastic polyhedron you have inserted into it at the time. How does it communicate? Some people speculated about whether the interface was Bluetooth LE or a custom wireless communication protocol, but I had not seen anyone else reverse it. I decided to dig in myself.

The toy is overpriced compared to its build quality, but having seen one at a store recently, I decided to pick it up and take a look. After all, I’d done only minimal hardware reversing, and this seemed to be a good excuse to do more. The package included the Z-Ring bracelet, three Z-Crystals, and a little Pikachu toy. Trying to unbox it, I discovered that the packaging was horrendous. It’s difficult to remove all of the components without breaking anything. I feel sorry for all of the kids who got this for Christmas and then promptly broke off Pikachu’s tail as they eagerly tried to remove it from the plastic.

The bracelet itself has slots on the sides to hold six Z-Crystals and one on the top that has the signature giant Z around it. The slot on the top has three pogo pins, which connect to pads on a Z-Crystal. The center of these is GND, with one pin being used to light the LED through a series resistor (R1, 56 Ω) and the other pin being used to sense an identity resistor (R2, 18 kΩ for green).

It also has a tri-state switch on the side. One setting (Mode I) is for synchronizing to a 3DS, another (Mode II) is for role-play and synchronizes with six tracks on the Sun/Moon soundtrack, and the final (neutral) setting is the closest thing it has to an off mode. A button on the side will still light up the device in the neutral setting, presumably for store demo reasons.

My first step in reverse engineering the device was figuring out how to pair it with my 3DS. Having beaten my copy of Pokémon Sun already, I presumably had everything needed in-game to pair with the device, but there was no explicit mention of the toy in-game. Included in the toy’s packaging were two tiny pamphlets, one of which was an instruction manual. However, the instruction manual was extremely minimal and mostly just described how to use the toy on its own. The only thing I could find about the 3DS interface was an instruction to turn up the 3DS volume and set the audio to stereo. There was also a little icon of headphones with a line through them. I realized that it didn’t pair with the 3DS at all. It was sound-triggered!

I pulled out my 3DS, loaded up the game, and tried using a Z-Power in-game with the associated Z-Crystal inserted into the top of the toy. Sure enough, with the sound all the way up, the Z-Ring activated and synchronized with what the game was doing.

Now that I knew I’d need to record audio from the game, I pulled up Audacity on my laptop and started recording game audio from the speakers. Expecting the audio to be in ultrasonic range, I cranked up the sample rate to 96 kHz (although whether or not my laptop microphone can actually detect sound above 22 kHz is questionable) and stared at it in Audacity’s spectrogram mode. Although I saw a few splotches at the top of the audible range, playing them back did not trigger the Z-Ring at all. However, playing back the whole recording did. I tried playing subsets of the sample until I found portions that triggered the Z-Ring. As I kept cropping the audio shorter and shorter, I finally found what I was looking for. The trigger wasn’t ultrasonic. It was in fact completely audible!

When you activate a Z-Power in the game, a short little jingle always plays. I had previously assumed that the jingle was just for flavor, but when I looked at it, there were several distinctive lines on the spectrogram. The very beginning of the jingle included seven different tones, so I tried playing back that section. Sure enough, the Z-Ring activated. I cropped it down to the first four tones, and the Z-Ring would reliably activate and play a specific sample whenever I played the audio back. Rearranging the tones, I got it to play back a different sample. That was how to signal the toy, but now the task was finding all of the samples stored on the Z-Ring without dumping the ROM.

Looking at the recording in the spectrogram, it was pretty clear that the first tone, which lasts all of 40 milliseconds and is a few hundred hertz lower than the rest of the signal, is a marker indicating that the next few tones describe which sample to play back. I quickly reconstructed the four tones as just sine waves in Audacity to test my hypothesis, and sure enough, I was able to trigger the tones using the constructed signal as well. However, that was a tedious process and did not lend itself to being able to explore and document all of the tone combinations. I knew I needed to write some software to help me quickly change the tones, so I could document all the combinations. Since it looked as if the signal was various combinations of approximately four different frequencies, it would take some exploration to get everything.

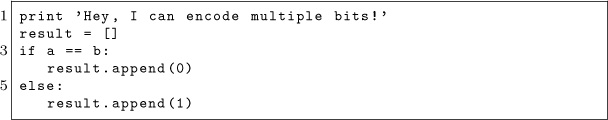

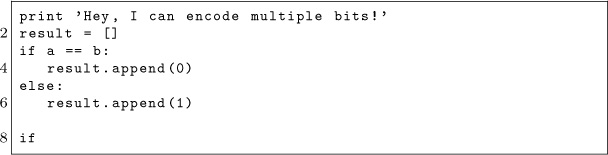

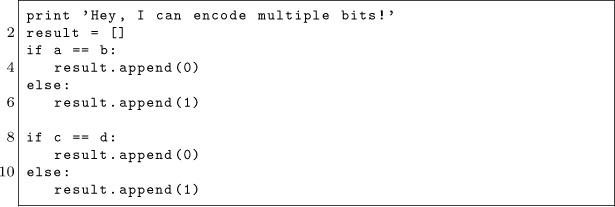

I’m lazy and didn’t feel like writing a tone generator and hooking it up to an audio output device and going through all of the steps I’d need to get sine waves of programmatically-defined frequencies to come out of my computer. However, I’m a special kind of lazy, and I really appreciate irony. The game is for the 3DS, right? What system is Pokémon famous for originating on? The original Game Boy, a platform with hardware for generating audible tones! Whereas the 3DS also has a microphone, the audio communication is only used in one direction. Perfect!

Now, I’d never written a program for the Game Boy, but I had implemented a Game Boy emulator. Fixing bugs on an emulator requires debugging both the emulator and the emulated software at the same time, so I’m quite familiar with the Game Boy’s unique variant of Z80, making the barrier of entry significantly lower than I thought it would be. I installed Rednex GameBoy Development System,2 one of the two most popular toolchains for compiling Game Boy homebrew ROMs, and wrote a few hundred lines of assembly. I figured the Game Boy’s audio channel 3, which uses 32-sample wavetables of four-bit audio, would be my best chance to approximate a sine wave. After a bit of experimenting, I managed to get it to create the right tones. But the first obstacle to playing back these tones properly was the timing. The first tone plays for 40 milliseconds, and the remaining tones each last 20 milliseconds. A frame on the gameboy is roughly 16 milliseconds long, so I couldn’t synchronize on frame boundaries, yet I found a busy loop to be impractical. (Yes, gameboy games often use busy loops for timing-sensitive operations.)

Fortunately, the gameboy has a built-in timer that can fire an interrupt after a given number of cycles, so, after a bit of math, I managed to get the timing right. Success! I could play back a series of tones from a table in RAM with the right timing and the right frequencies.

Sure enough, when I played this back in an emulator, the Z-Ring activated! The ROM plays the tones upon boot and had no user interface for configuring which tones to play, but recompiling the ROM was fast enough that it wasn’t really an issue.

The natural next step was uploading the program to a real Game Boy. I quickly installed the program onto a flash cart that I had purchased while developing the emulator. I booted up my original Game Boy, the tones played, and. . . the Z-Ring did not activate. No matter how many times I restarted the program, the tones would not activate the Z-Ring. I recorded the audio it was playing, and the tones were right. I was utterly confused until I looked a bit closer at the recording: the signal was getting quieter with every subsequent tone. I thought that this must be a bug in the hardware, as the Game Boy’s audio hardware is notorious for having different quirks between models and even CPU revisions. I tried turning off the audio channel and turning it back on again a few cycles later to see if that fixed anything. It still worked in the emulator, so I put it back on the flash cart, and this time it worked! I could consistently trigger one of the samples I’d seen, but some of the other ones seemed to randomly select one of three tones to play. Something wasn’t quite right with my tone generation, so I decided to halve the sample period, which would give me more leeway to finely adjust the frequency. This didn’t appear to help at all, unfortunately. Scoping out all of the combinations of the tones I thought were in range yielded about thirty responses out of the 64 combinations I tried. Unfortunately, many of the responses appeared to be the same, and many of them weren’t consistent. Additionally, samples I knew the Z-Ring had were not triggered by any of these combinations. Clearly something was wrong.

I needed a source of several unique known-good signals, so I scoured YouTube and found an “All Z-Moves” video. Sure enough, it triggered from the Z-Ring a bunch of reactions I hadn’t seen yet. Taking a closer look, I saw that the signal was actually all seven tones (not four), and extending the program to use seven tones suddenly yielded much more consistent results. Great! The bad news was that beyond the first, fixed tone, there were four variations of each subsequent tone, leading to a total of 46 combinations. That’s 4,096, a hell of a lot to scope out.

I decided to take another route and catalog every signal in the video as a known pattern. I could try other signals later. Slowly, I went through the video and found every trigger. It seemed that there were two separate commands per move: one was for the initial half of the scene, where the Pokémon is “surrounded by Z-Power,” and then the actual Z-Move was a separate signal. Unfortunately, three of the former signals had been unintentionally cropped from the video, leaving me with holes in my data. Sitting back and looking at the data, I started noticing patterns. I had numbered each tone from 0 (the lowest) to 3 (the highest), and every single one of the first 15 signals (one for each of the 18 Pokémon types in-game, minus the three missing types) ended with a 3. Some of the latter 18 (the associated Z-Powers per type) ended with a 1, but most ended with a 3. I wasn’t quite sure what that meant until I saw that other tones were either a 0 or a 2, and the remainder were either a 1 or a 3. Each tone encoded only one bit, and they were staggered to make sure the adjacent bits were differentiable!

This reduced the number of possibilities from over four thousand to a more manageable sixty-four. It also lent itself to an easy sorting technique, with the last bit being MSB and the first being LSB. As I sorted the data, I noticed that the first 18 fell neatly into the in-game type ordering, leaving three holes for the missing types, and the next 18 all sorted identically. This let me fill in the holes and left me with 36 of the 64 combinations already filled in. I also found 11 special, Pokémon-specific (instead of type-specific) Z-Moves, giving me 47 total signals and 17 holes left. As I explored the remaining holes, I found five audio samples of Pikachu saying different things, and the other 12 didn’t correspond to anything I recognized.

In the process, I added a basic user interface to the Game Boy program that lets you either select from the presets or set the tones manually. Given the naming scheme of these Z-Crystals,3 I naturally decided to name it Phreakium-Z.4

I thought I had found all of the Z-Ring’s sound triggers, but it was pointed out to me while I was preparing to publish my results that the official soundtrack release had six “Z-Ring Synchronized” tracks that interfaced with the Z-Ring. I had already purchased the soundtrack, so I took a look and tried playing back the tracks with the Z-Ring nearby. Nothing happened. More importantly, the distinctive jingle of the 5 kHz tones was completely absent from the tracks. So what was I missing? I tried switching it from Mode I into Mode II, and the Z-Ring lit up, perfectly synchronizing with the music. But where were the triggers? There was nothing visible in the 4–6 kHz range this time around. Although I could clip portions of tracks down to specific triggers, I couldn’t see anything in the spectrogram until I expanded the visible range all the way up to 20 kHz. This time the triggers were indeed ultrasonic or very nearly so.

Human hearing caps out at approximately 20 kHz, but most adults can only hear up to about 15 kHz. The sample rates of sound devices are typically no greater than 48 kHz, allowing the production of frequencies up to 24 kHz, including only a narrow band of ultrasonic frequencies. Given the generally poor quality of speakers at extremely high frequencies, you can imagine my surprise when I saw a very clear signal at around 19 kHz.

Zooming in, I saw the distinctive pattern of a lower, longer initial tone followed by several staggered data tones. However, this time it was a 9-bit signal, with a 60 ms initial tone at exactly 18.5 kHz and a 20 ms gap between the bits. Unfortunately, 18 kHz is well above the point at which I can get any fine adjustments in the Game Boy’s audio output, so I needed to shift gears and actually write something for the computer. At first I wrote something quick in Rust, but this proved to be a bit tedious. I realized I could make something quite a bit more portable: a JavaScript web interface using WebAudio.5

After narrowing down the exact frequencies used in the tones and debugging the JavaScript (as it turns out, I’ve gotten quite rusty), I whipped up a quick interface that I could use to explore commands. After all, 512 commands is quite a bit more than the 64 from Mode I.

Despite being a larger number of combinations, 512 was still a reasonable number to explore in a few hours. After I got the WebAudio version working consistently, I added the ability to take a number from 0 to 511 and output the correspondingly indexed tone, and I began documenting the individual responses generated.

I noticed that the first 64 indices of the 512 were in fact identical to the 64 Mode I tones, so that was quick to document. Once I got past those, I noticed that the responses from the Z-Ring no longer corresponded to game actions but were instead more granular single actions. For example, instead of a sequence of vibrations and light colors that corresponded to the animation of a Z-Move in game, a response included only one sound effect coupled with one lighting effect or one lighting effect with one vibration effect. There was also a series of sound effects that did not appear in Mode I and that seemed to be linked to individual Pokémon types. Many of the responses seemed randomly ordered, almost as though the developers had added the commands ad hoc without realizing that ordering similar responses would be sensible. Huge swaths of the command set ended up being the Cartesian product of a light color with a vibration effect. This ended up being enough of the command set that I was able to document the remainder of the commands within only a handful of hours.

Most of the individual commands weren’t interesting, but I did find eight additional Pikachu voice samples and a rather interesting command that — when played two or three times in a row — kicked the Z-Ring into what appeared to be a diagnostic mode. It performed a series of vibrations followed by a series of tones unique to this response, after which the Z-Ring stopped responding to commands. After a few seconds, the light on the bottom, which is completely undocumented in the manual and had not illuminated before, started blinking, and the light on top turned red. However, it still didn’t respond to any commands. Eventually I discovered that switching it to the neutral mode would change the light to blue for a few seconds, and then the toy would revert to a usable state. I’m still unsure of whether this was a diagnostic mode, a program upload mode, or something completely different.

By this point I’d put in several hours over a few days into figuring out every nook and cranny of this device. Having become bored with it, I decided to bite the bullet and disassemble the hardware. I found inside a speaker, a microphone, a motor with a lopsided weight for generating the vibrations, and a PCB. The PCB, although rather densely populated, did not contain many interesting components other than an epoxy blob labeled U1, an MX25L8006E flash chip labeled U2, and some test points. You will find a dump of this ROM attached.6 At this point, I decided to call it a week and put the Z-Ring back together; it was just a novelty, after all.

These are the 512 commands of the Z-Ring.

000: Normalium-Z

001: Firium-Z

002: Waterium-Z

003: Grassium-Z

004: Electrium-Z

005: Icium-Z

006: Fightium-Z

007: Poisonium-Z

008: Groundium-Z

009: Flyium-Z

00A: Psychium-Z

00B: Buginium-Z

00C: Rockium-Z

00D: Ghostium-Z

00E: Dragonium-Z

00F: Darkium-Z

010: Steelium-Z

011: Fairium-Z

012: Breakneck Blitz

013: Inferno Overdrive

014: Hydro Vortex

015: Bloom Doom

016: Gigavolt Havoc

017: Subzero Slammer

018: All-Out Pummeling

019: Acid Downpour

01A: Tectonic Rage

01B: Supersonic Skystrike

01C: Shattered Psyche

01D: Savage Spin-Out

01E: Continental Crush

01F: Never-Ending Nightmare

020: Devastating Drake

021: Black Hole Eclipse

022: Corkscrew Crash

023: Twinkle Tackle

024: Sinister Arrow Raid (Decidium-Z)

025: Malicious Moonsault (Incinium-Z)

026: Oceanic Operetta (Primarium-Z)

027: Catastropika (Pikachunium-Z)

028: Guardian of Alola (Tapunium-Z)

029: Stoked Sparksurfer (Aloraichium-Z)

02A: Pulverizing Pancake (Snorlium-Z)

02B: Extreme Evoboost (Eevium-Z)

02C: Genesis Supernova (Mewium-Z)

02D: Soul-Stealing 7-Star Strike (Marshadium-Z)

02E: (unknown)

02F: (unknown)

030: 10,000,000 Volt Thunderbolt (Pikashunium-Z)

031: (unknown)

032: (unknown)

033: (unknown)

034: (unknown)

035: (unknown)

036: (unknown)

037: (unknown)

038: (unknown)

039: Pikachu 1

03A: Pikachu 2

03B: Pikachu 3

03C: Pikachu 4

03D: Pikachu 5

03E: (unknown)

03F: (no response)

040: SFX/Light (Normal)

041: SFX/Light (Fire)

042: SFX/Light (Water)

043: SFX/Light (Grass)

044: SFX/Light (Electric)

045: SFX/Light (Ice)

046: SFX/Light (Fighting)

047: SFX/Light (Poison)

048: SFX/Light (Ground)

049: SFX/Light (Flying)

04A: SFX/Light (Psychic)

04B: SFX/Light (Bug)

04C: SFX/Light (Rock)

04D: SFX/Light (Ghost)

04E: SFX/Light (Dragon)

04F: SFX/Light (Dark)

050: SFX/Light (Steel)

051: SFX/Light (Fairy)

052: (no response)

053: Vibration (soft, short)

054: Vibration (soft, medium)

055: Vibration (pattern 1)

056: Vibration (pattern 2)

057: Vibration (pattern 3)

058: Vibration (pattern 4)

059: Vibration (pattern 5)

05A: Vibration (pattern 6)

05B: Vibration (pattern 7)

05C: Vibration (pattern 8)

05D: Vibration (pattern 8)

05E: Vibration (pattern 9)

05F: Vibration (pattern 10)

060: Vibration (pattern 11)

061: Vibration (pattern 12)

062: Vibration (pattern 13)

063: Vibration (pattern 14)

064: Light (yellow)

065: Light (pale blue)

066: Light (white)

067: Light (pattern 1)

068: Light (pattern 2)

069: Vibration (pattern 15)

06A: Vibration (pattern 16)

06B: Light/Vibration (red, very short)

06C: Light/Vibration (red, short)

06D: Light/Vibration (red, medium)

06E: Light (red)

06F: Light (yellow/green)

070: Light (green)

071: Light (blue)

072: Light (purple)

073: Light (pale purple)

074: Light (magenta)

075: Light (pale green)

076: Light (cyan)

077: Light (pale blue/purple)

078: Light (gray)

079: Light (pattern purple, pale purple)

07A: Light/Vibration (pale yellow, short)

07B: Light/Vibration (pale yellow, short)

07C: (no response)

07D: (no response)

07E: Self test/program mode? (reboots afterwards)

07F: Light (pale yellow)

080: Light (pale blue)

081: Light (pale magenta)

082: SFX/Vibration (Normal)

083: SFX/Vibration (Fire)

084: SFX/Vibration (Water)

085: SFX/Vibration (Grass)

086: SFX/Vibration (Electric)

087: SFX/Vibration (Ice)

088: SFX/Vibration (Fighting)

089: SFX/Vibration (Poison)

08A: SFX/Vibration (Ground)

08B: SFX/Vibration (Flying)

08C: SFX/Vibration (Psychic)

08D: SFX/Vibration (Bug)

08E: SFX/Vibration (Rock)

08F: SFX/Vibration (Ghost)

090: SFX/Vibration (Dragon)

091: SFX/Vibration (Dark)

092: SFX/Vibration (Steel)

093: SFX/Vibration (Fairy)

094: Pikachu 1

095: Pikachu 2

096: Pikachu 3

097: Pikachu 4

098: Pikachu 5

099: Vibration (speed 1, hard, 2x)

09A: Vibration (speed 1, hard, 4x)

09B: Vibration (speed 1, hard, 8x)

09C: Vibration (speed 1, hard, 16x)

09D: Vibration (speed 1, pattern, 2x)

09E: Vibration (speed 1, pattern, 4x)

09F: Vibration (speed 1, pattern, 8x)

0A0: Vibration (speed 1, pattern, 16x)

0A1: Vibration (speed 2, hard, 2x)

0A2: Vibration (speed 2, hard, 4x)

0A3: Vibration (speed 2, hard, 8x)

0A4: Vibration (speed 2, hard, 16x)

0A5: Vibration (speed 2, pattern, 2x)

0A6: Vibration (speed 2, pattern, 4x)

0A7: Vibration (speed 2, pattern, 8x)

0A8: Vibration (speed 2, pattern, 16x)

0A9: Vibration (speed 3, hard, 2x)

0AA: Vibration (speed 3, hard, 4x)

0AB: Vibration (speed 3, hard, 8x)

0AC: Vibration (speed 3, hard, 16x)

0AD: Vibration (speed 3, pattern, 2x)

0AE: Vibration (speed 3, pattern, 4x)

0AF: Vibration (speed 3, pattern, 8x)

0B0: Vibration (speed 3, pattern, 16x)

0B1: Vibration (speed 4, hard, 2x)

0B2: Vibration (speed 4, hard, 4x)

0B3: Vibration (speed 4, hard, 8x)

0B4: Vibration (speed 4, hard, 16x)

0B5: Vibration (speed 4, pattern, 2x)

0B6: Vibration (speed 4, pattern, 4x)

0B7: Vibration (speed 4, pattern, 8x)

0B8: Vibration (speed 4, pattern, 16x)

0B9: Vibration (speed 5, hard, 2x)

0BA: Vibration (speed 5, hard, 4x)

0BB: Vibration (speed 5, hard, 8x)

0BC: Vibration (speed 5, hard, 16x)

0BD: Vibration (speed 5, pattern, 2x)

0BE: Vibration (speed 5, pattern, 4x)

0BF: Vibration (speed 5, pattern, 8x)

0C0: Vibration (speed 6, hard, 16x)

0C1: Vibration (speed 6, hard, 2x)

0C2: Vibration (speed 6, hard, 4x)

0C3: Vibration (speed 6, hard, 8x)

0C4: Vibration (speed 6, hard, 16x)

0C5: Vibration (speed 6, pattern, 2x)

0C6: Vibration (speed 6, pattern, 4x)

0C7: Vibration (speed 6, pattern, 8x)

0C8: Vibration (speed 6, pattern, 16x)

0C9: Vibration (speed 7, hard, 2x)

0CA: Vibration (speed 7, hard, 4x)

0CB: Vibration (speed 7, hard, 8x)

0CC: Vibration (speed 7, hard, 16x)

0CD: Vibration (speed 7, pattern, 2x)

0CE: Vibration (speed 7, pattern, 4x)

0CF: Vibration (speed 7, pattern, 8x)

0D0: Vibration (speed 7, pattern, 16x)

0D1: Vibration (speed 8, hard, 2x)

0D2: Vibration (speed 8, hard, 4x)

0D3: Vibration (speed 8, hard, 8x)

0D4: Vibration (speed 8, hard, 16x)

0D5: Vibration (speed 8, pattern, 2x)

0D6: Vibration (speed 8, pattern, 4x)

0D7: Vibration (speed 8, pattern, 8x)

0D8: Vibration (speed 8, pattern, 16x)

0D9: Vibration (speed 9, hard, 2x)

0DA: Vibration (speed 9, hard, 4x)

0DB: Vibration (speed 9, hard, 8x)

0DC: Vibration (speed 9, hard, 16x)

0DD: Vibration (speed 9, pattern, 2x)

0DE: Vibration (speed 9, pattern, 4x)

0DF: Vibration (speed 9, pattern, 8x)

0E0: Vibration (speed 9, pattern, 16x)

0E1: Vibration (speed 10, hard, 2x)

0E2: Vibration (speed 10, hard, 4x)

0E3: Vibration (speed 10, hard, 8x)

0E4: Vibration (speed 10, hard, 16x)

0E5: Vibration (speed 10, pattern, 2x)

0E6: Vibration (speed 10, pattern, 4x)

0E7: Vibration (speed 10, pattern, 8x)

0E8: Vibration (speed 10, pattern, 16x)

0E9: Vibration (speed 11, hard, 2x)

0EA: Vibration (speed 11, hard, 4x)

0EB: Vibration (speed 11, hard, 8x)

0EC: Vibration (speed 11, hard, 16x)

0ED: Vibration (speed 11, pattern, 2x)

0EE: Vibration (speed 11, pattern, 4x)

0EF: Vibration (speed 11, pattern, 8x)

0F0: Vibration (speed 11, pattern, 16x)

0F1: Vibration (speed 12, hard, 2x)

0F2: Vibration (speed 12, hard, 4x)

0F3: Vibration (speed 12, hard, 8x)

0F4: Vibration (speed 12, hard, 16x)

0F5: Vibration (speed 12, pattern, 2x)

0F6: Vibration (speed 12, pattern, 4x)

0F7: Vibration (speed 12, pattern, 8x)

0F8: Vibration (speed 12, pattern, 16x)

0F9: Vibration (speed 13, hard, 2x)

0FA: Vibration (speed 13, hard, 4x)

0FB: Vibration (speed 13, hard, 8x)

0FC: Vibration (speed 13, hard, 16x)

0FD: Vibration (speed 13, pattern, 2x)

0FE: Vibration (speed 13, pattern, 4x)

0FF: Vibration (speed 13, pattern, 8x)

100: Vibration (speed 13, pattern, 16x)

101: Vibration (speed 14, hard, 2x)

102: Vibration (speed 14, hard, 4x)

103: Vibration (speed 14, hard, 8x)

104: Vibration (speed 14, hard, 16x)

105: Vibration (speed 14, pattern, 2x)

106: Vibration (speed 14, pattern, 4x)

107: Vibration (speed 14, pattern, 8x)

108: Vibration (speed 14, pattern, 16x)

109: Vibration (speed 15, hard, 2x)

10A: Vibration (speed 15, hard, 4x)

10B: Vibration (speed 15, hard, 8x)

10C: Vibration (speed 15, hard, 16x)

10D: Vibration (speed 15, pattern, 2x)

10E: Vibration (speed 15, pattern, 4x)

10F: Vibration (speed 15, pattern, 8x)

110: Vibration (speed 15, pattern, 16x)

111: Vibration (speed 16, hard, 2x)

112: Vibration (speed 16, hard, 4x)

113: Vibration (speed 16, hard, 8x)

114: Vibration (speed 16, hard, 16x)

115: Vibration (speed 16, pattern, 2x)

116: Vibration (speed 16, pattern, 4x)

117: Vibration (speed 16, pattern, 8x)

118: Vibration (speed 16, pattern, 16x)

119: Vibration (speed 17, hard, 2x)

11A: Vibration (speed 17, hard, 4x)

11B: Vibration (speed 17, hard, 8x)

11C: Vibration (speed 17, hard, 16x)

11D: Vibration (speed 17, pattern, 2x)

11E: Vibration (speed 17, pattern, 4x)

11F: Vibration (speed 17, pattern, 8x)

120: Vibration (speed 17, pattern, 16x)

121: Vibration (speed 18, hard, 2x)

122: Vibration (speed 18, hard, 4x)

123: Vibration (speed 18, hard, 8x)

124: Vibration (speed 18, hard, 16x)

125: Vibration (speed 18, pattern, 2x)

126: Vibration (speed 18, pattern, 4x)

127: Vibration (speed 18, pattern, 8x)

128: Vibration (speed 18, pattern, 16x)

129: Vibration (speed 19, hard, 2x)

12A: Vibration (speed 19, hard, 4x)

12B: Vibration (speed 19, hard, 8x)

12C: Vibration (speed 19, hard, 16x)

12D: Vibration (speed 19, pattern, 2x)

12E: Vibration (speed 19, pattern, 4x)

12F: Vibration (speed 19, pattern, 8x)

130: Vibration (speed 19, pattern, 16x)

131: Vibration (speed 20, hard, 2x)

132: Vibration (speed 20, hard, 4x)

133: Vibration (speed 20, hard, 8x)

134: Vibration (speed 20, hard, 16x)

135: Vibration (speed 20, pattern, 2x)

136: Vibration (speed 20, pattern, 4x)

137: Vibration (speed 20, pattern, 8x)

138: Vibration (speed 20, pattern, 16x)

139: Vibration (speed 21, hard, 2x)

13A: Vibration (speed 21, hard, 4x)

13B: Vibration (speed 21, hard, 8x)

13C: Vibration (speed 21, hard, 16x)

13D: Vibration (speed 21, pattern, 2x)

13E: Vibration (speed 21, pattern, 4x)

13F: Vibration (speed 21, pattern, 8x)

140: Vibration (speed 21, pattern, 16x)

141: Vibration (speed 22, hard, 2x)

142: Vibration (speed 22, hard, 4x)

143: Vibration (speed 22, hard, 8x)

144: Vibration (speed 22, hard, 16x)

145: Vibration (speed 22, pattern, 2x)

146: Vibration (speed 22, pattern, 4x)

147: Vibration (speed 22, pattern, 8x)

148: Vibration (speed 22, pattern, 16x)

149: Vibration (soft, very long)

14A: Pikachu 6

14B: Pikachu 7

14C: Pikachu 8

14D: Pikachu 9

14E: Pikachu 10

14F: Pikachu 11

150: Pikachu 12

151: Light/Vibration (red, pattern 1)

152: Light/Vibration (red, pattern 2)

153: Light/Vibration (red, pattern 3)

154: Light/Vibration (red, pattern 4)

155: Light/Vibration (red, pattern 5)

156: Light/Vibration (red, pattern 6)

157: Light/Vibration (red, pattern 7)

158: Light/Vibration (red, pattern 8)

159: Light/Vibration (red, pattern 9)

15A: Light/Vibration (red, pattern 10)

15B: Light/Vibration (red, pattern 11)

15C: Light/Vibration (red, pattern 12)

15D: Light/Vibration (red, pattern 13)

15E: Light/Vibration (red, pattern 14)

15F: Light/Vibration (red, pattern 15)

160: Light/Vibration (red, pattern 16)

161: Light/Vibration (red, pattern 17)

162: Pikachu 13

163: Light (pale magenta)

164: Vibration (pattern 15)

165: Light/Vibration (pattern)

166: Light (pale yellow/green)

167: Light (pale blue/purple)

168: Light (magenta)

169: Light (yellow/green)

16A: Light (cyan)

16B: Light (pale blue)

16C: Light (very pale blue)

16D: Light (pale magenta)

16E: Light (pale yellow)

16F: Light/Vibration (blue, pattern 1)

170: Light/Vibration (blue, pattern 2)

171: Light/Vibration (blue, pattern 3)

172: Light/Vibration (blue, pattern 4)

173: Light/Vibration (blue, pattern 5)

174: Light/Vibration (blue, pattern 6)

175: Light/Vibration (blue, pattern 7)

176: Light/Vibration (blue, pattern 8)

177: Light/Vibration (blue, pattern 9)

178: Light/Vibration (blue, pattern 10)

179: Light/Vibration (blue, pattern 11)

17A: Light/Vibration (blue, pattern 12)

17B: Light/Vibration (blue, pattern 13)

17C: Light/Vibration (blue, pattern 14)

17D: Light/Vibration (blue, pattern 15)

17E: Light/Vibration (blue, pattern 16)

17F: Light/Vibration (blue, pattern 17)

180: Light/Vibration (blue, pattern 18)

181: Light/Vibration (green, pattern 1)

182: Light/Vibration (green, pattern 2)

183: Light/Vibration (green, pattern 3)

184: Light/Vibration (green, pattern 4)

185: Light/Vibration (green, pattern 5)

186: Light/Vibration (green, pattern 6)

187: Light/Vibration (green, pattern 7)

188: Light/Vibration (green, pattern 8)

189: Light/Vibration (green, pattern 9)

18A: Light/Vibration (green, pattern 10)

18B: Light/Vibration (green, pattern 11)

18C: Light/Vibration (green, pattern 12)

18D: Light/Vibration (green, pattern 13)

18E: Light/Vibration (green, pattern 14)

18F: Light/Vibration (green, pattern 15)

190: Light/Vibration (green, pattern 16)

191: Light/Vibration (green, pattern 17)

192: Light/Vibration (green, pattern 18)

193: Light/Vibration (yellow/green, pattern 1)

194: Light/Vibration (yellow/green, pattern 2)

195: Light/Vibration (yellow/green, pattern 3)

196: Light/Vibration (yellow/green, pattern 4)

197: Light/Vibration (yellow/green, pattern 5)

198: Light/Vibration (yellow/green, pattern 6)

199: Light/Vibration (yellow/green, pattern 7)

19A: Light/Vibration (yellow/green, pattern 8)

19B: Light/Vibration (yellow/green, pattern 9)

19C: Light/Vibration (yellow/green, pattern 10)

19D: Light/Vibration (yellow/green, pattern 11)

19E: Light/Vibration (yellow/green, pattern 12)

19F: Light/Vibration (yellow/green, pattern 13)

1A0: Light/Vibration (yellow/green, pattern 14)

1A1: Light/Vibration (yellow/green, pattern 15)

1A2: Light/Vibration (yellow/green, pattern 16)

1A3: Light/Vibration (yellow/green, pattern 17)

1A4: Light/Vibration (yellow/green, pattern 18)

1A5: Light/Vibration (purple, pattern 1)

1A6: Light/Vibration (purple, pattern 2)

1A7: Light/Vibration (purple, pattern 3)

1A8: Light/Vibration (purple, pattern 4)

1A9: Light/Vibration (purple, pattern 5)

1AA: Light/Vibration (purple, pattern 6)

1AB: Light/Vibration (purple, pattern 7)

1AC: Light/Vibration (purple, pattern 8)

1AD: Light/Vibration (purple, pattern 9)

1AE: Light/Vibration (purple, pattern 10)

1AF: Light/Vibration (purple, pattern 11)

1B0: Light/Vibration (purple, pattern 12)

1B1: Light/Vibration (purple, pattern 13)

1B2: Light/Vibration (purple, pattern 14)

1B3: Light/Vibration (purple, pattern 15)

1B4: Light/Vibration (purple, pattern 16)

1B5: Light/Vibration (purple, pattern 17)

1B6: Light/Vibration (purple, pattern 18)

1B7: Light/Vibration (yellow, pattern 1)

1B8: Light/Vibration (yellow, pattern 2)

1B9: Light/Vibration (yellow, pattern 3)

1BA: Light/Vibration (yellow, pattern 4)

1BB: Light/Vibration (yellow, pattern 5)

1BC: Light/Vibration (yellow, pattern 6)

1BD: Light/Vibration (yellow, pattern 7)

1BE: Light/Vibration (yellow, pattern 8)

1BF: Light/Vibration (yellow, pattern 9)

1C0: Light/Vibration (yellow, pattern 10)

1C1: Light/Vibration (yellow, pattern 11)

1C2: Light/Vibration (yellow, pattern 12)

1C3: Light/Vibration (yellow, pattern 13)

1C4: Light/Vibration (yellow, pattern 14)

1C5: Light/Vibration (yellow, pattern 15)

1C6: Light/Vibration (yellow, pattern 16)

1C7: Light/Vibration (yellow, pattern 17)

1C8: Light/Vibration (yellow, pattern 18)

1C9: Light/Vibration (white, pattern 1)

1CA: Light/Vibration (white, pattern 2)

1CB: Light/Vibration (white, pattern 3)

1CC: Light/Vibration (white, pattern 4)

1CD: Light/Vibration (white, pattern 5)

1CE: Light/Vibration (white, pattern 6)

1CF: Light/Vibration (white, pattern 7)

1D0: Light/Vibration (white, pattern 8)

1D1: Light/Vibration (white, pattern 9)

1D2: Light/Vibration (white, pattern 10)

1D3: Light/Vibration (white, pattern 11)

1D4: Light/Vibration (white, pattern 12)

1D5: Light/Vibration (white, pattern 13)

1D6: Light/Vibration (white, pattern 14)

1D7: Light/Vibration (white, pattern 15)

1D8: Light/Vibration (white, pattern 16)

1D9: Light/Vibration (white, pattern 17)

1DA: Light/Vibration (white, pattern 18)

1DB: Light/Vibration (red, medium)

1DC: Light/Vibration (yellow/green, medium)

1DD: Light/Vibration (green, medium)

1DE: Light/Vibration (blue, very short)

1DF: Light/Vibration (blue, short)

1E0: Light/Vibration (blue, medium)

1E1: Light/Vibration (green, very short)

1E2: Light/Vibration (green, short)

1E3: Light/Vibration (green, medium)

1E4: Light/Vibration (yellow/green, very short)

1E5: Light/Vibration (yellow/green, short)

1E6: Light/Vibration (yellow/green, medium)

1E7: Light/Vibration (purple, very short)

1E8: Light/Vibration (purple, short)

1E9: Light/Vibration (purple, medium)

1EA: Light/Vibration (yellow, very short)

1EB: Light/Vibration (yellow, short)

1EC: Light/Vibration (yellow, medium)

1ED: Light/Vibration (white, very short)

1EE: Light/Vibration (white, short)

1EF: Light/Vibration (white, medium)

1F0: Light/Vibration (red, pattern 18)

1F1: Light (red, indefinite)

1F2: Light (yellow, indefinite)

1F3: Light (green, indefinite)

1F4: Light (blue, indefinite)

1F5: Light (purple, indefinite)

1F6: Light (pattern, indefinite)

1F7: SFX/Light (sparkle, gray)

1F8: (turn off light)

1F9: Light/Vibration (blue, medium)

1FA: Light/Vibration (pale purple, medium)

1FB: Light/Vibration (pattern, medium)

1FC: (no response)

1FD: (no response)

1FE: (no response)

1FF: (no response)

14:03 Concerning Desert Studies, Cyberwar, and the Desert Power

by Naib Manul Laphroaig0

Gather round, neighbors, as we close the moisture seals and relax the water discipline. Take off your face masks and breathe the sietch air freely. It is time for a story of the things that were and the things that will come.

Knowledge and water. These are the things that rule the universe. They are alike—and one truly needs to lack them to appreciate their worth. Those who have them in abundance proclaim their value—and waste them thoughtlessly, without a care. They make sure their wealth and their education degrees are on display for the world, and ever so hard to miss; they waste both time and water to put us in our place. Yet were they to see just one of our hidden caches, they would realize how silly their displays are in comparison.

For while they pour out the water and the time of their lives, and treat us as savages and dismiss us, we are working to change the face of this world.

Their scientists have imperial ranks, and their city schools teach—before and above any useful subject—respect for these ranks and for those who pose as “scientists” on the imperial TV. And yet, guess who knows more physics, biology, and planetary ecology that matters. Guess who knows how their systems actually work, from the smallest water valve in a stillsuit to the ecosystems of an entire planet. They mock Shai-hulud and dismiss us Fremen as the unwashed rabble tinkering to survive in the desert—yet their degrees don’t impress the sand.

The works of the ignorant are like sand. When yet sparse, they merely vex and irritate like loose grains; when abundant, they become like dunes that overwhelm all water, life, and knowledge. Verily, these are the dunes where knowledge goes to die. As the ignorant labor, sand multiplies, until it covers the face of the world and pervades every breath of the wind.

And then there was a Dr. Kynes. To imperial paymasters, he was just another official on the long roll getting ever longer. To the people of the city he was just another bureaucrat to avoid if they could, or to bribe if they couldn’t. To his fellow civil servants—who considered themselves scholars, yet spent more time over paperwork than most clerks—he was an odd case carrying on about things that mattered nothing to one’s career, as absolutely everybody knew; in short, they only listened to him if they felt charitable at the moment.

For all these alleged experts, the order of life was already scientifically organized about the best it could be. One would succeed by improving the standard model of a stillsuit, or just as well by selling a lot of crappy ones.

One did not succeed by talking about changing a planet. Planets were already as organized as they could be. A paper could be written, of course, but, to be published, the paper had to have both neatly tabulated results and a summary of prior work. There was no prior published work on changing planets, no journals devoted to it, and no outstanding funding solicitations. One would not even get invited to lecture about it. It was a waste of time, useless for advancement in rank.

Besides, highly ranked minds must have already thought about it, and did not take it up; clearly, the problem was intractable. Indeed, weren’t there already dissertations on the hundred different aspects of sand, and of desert plants, and of the native animals and birds? There were even some on the silly native myths. Getting on the bad side of the water-sellers, considering how much they were donating to the cause of higher learning, was also not a wise move.

But Kynes knew a secret: knowledge was water, and water was knowledge. The point of knowledge was to provide what was needed the most, not ranks or lectures. And he knew another secret: one could, in fact, figure out a thing that many superior minds hadn’t bothered with, be it even the size of the planet. And he may have guessed a third secret: if someone didn’t value water as life, there was no point of talking to them about water, or about knowledge. They would, at best, nod, and then go about their business. It is like spilling water on the sand.

That did not leave Kynes with a lot of options. In fact, it left him with none at all. And so he did a thing that no one else had done before: he left the city and walked out onto the sand. He went to find us, and he became Liet.

For those who live on the sand and are surrounded by it understand the true value of water, and of figuring things out, be they small or large. This Kynes sought, and this he found—with us, the Fremen.

His manner was odd to us, but he knew things of the sand that no city folk cared to know; he spoke of water in the sand as we heard none speak before. He must have figured it out—and there were just enough of us who knew that figuring things out was water and life. And so he became Liet.

His knowledge, rejected by bureaucrats, already turned into a water wealth no bureaucrat can yet conceive of. His peers wrote hundreds of thousands of papers since he left, and went on to higher ranks—and all of these will be scattered by the desert winds. A lot of useless technology will be sold and ground into dust on the sand—while Liet’s words are changing the desert slowly but surely.

Something strange has been going of late in their sheltered cities. There is talk of a “sand-war,” and of “sand warriors,” and of “sand power.” They are giving sand new names, and new certifications of “desert moisture security professionals” to their city plumbers. Their schools are now supposed to teach something they called SANDS, “Science, Agronomy, Nomenclature,1 Desert Studies,” to deliver a “sand superiority.” Their imperial news spread rumors of “anonymous senior imperial officials” unleashing “sand operations,” the houses major building up their “sand forces” and the houses minor demanding an investigation in the Landsraat.

Little do they know where the true sand power lies, and where the actual water and knowledge are being accumulated to transform the desert.

The sand will laugh at them—and one day the one who understands the true source of power will come after Liet, the stored water will come forth, the ecology will change—and a rain will fall.

Until then, we will keep the water and the knowledge. Until then, we, the Fremen, will train the new generations of those who know and those who figure things out!

14:04 Texting with Flush+Reload

by Taylor Hornby

Dear Editors and Readers of PoC||GTFO,

You’ve been lied to about how your computer works. You see, in a programming class they teach you just enough for you to get on with your job and no more. What you learn is a mere abstraction of the very complicated piece of physics sitting under your desk. To use your computer to its fullest potential, you must forget the familiar abstraction and finally see your computer for what it really is. Come with me, as we take a small step towards enlightenment.

You know what makes a computer—or so you think. There is a processor. There is a bank of main memory, which the processor reads, writes, and executes from. And there are processes, those entities that from time to time get loaded into the processor to do their work.

As we know, processes shouldn’t be trusted to play well together, and need to be kept separate. Many of the processor’s features were added to keep those processes isolated. It would be quite bad if one process could talk to another without the system administrator’s permission.

We also know that the faster a computer is, the more work it can do and the more useful it is. Even more features were introduced to the processor in order to make it go as fast as possible.

Accordingly, your processor most likely has a memory cache sitting between main memory and the processor, remembering recently-read data and code, so that the next time the processor reads from the same address, it doesn’t have to reach all the way out to main memory. The vendors will say this feature was added to make the processor go faster, and it does do a great job of that. But I will show you that the cache is also a feature to help hackers get around those annoying access controls that system administrators seem to love.

What I’m going to do is show you how to send a text message from one process to the other, using only memory reads. What!? How could this be possible? According to your programming class, you say, reads from memory are just reads, they can’t be used to send messages!

The gist is this: the cache remembers recently executed code, which means that it must also remember which code was recently executed. Processes are in control of the code they execute, so what we can do is execute a special pattern of code that the cache will remember. When the second process gets a chance to run, it will read the pattern out of the cache and recover the message. Oh how thoughtful it was of the processor designers to add this feature!

The undocumented feature we’ll be using is called “Flush + Reload,” and it was originally discovered by Yuval Yarom and Katrina Falkner.0 It’s available in most modern Intel processors, so if you’ve got one of those, you should be able to follow along.

It works like this. When Sally the Sender process gets loaded into memory, one copy of all her executed code gets loaded into main memory. When Robert the Receiver process loads Sally’s binary into his address space, the operating system isn’t going to load a second copy: that would be wasteful. Instead, it’s just going to point Robert’s page tables at Sally’s memory. If Sally and Robert could both write to the memory, it would be a huge problem since they could simply talk by writing messages to each other in the shared memory. But that isn’t a problem, because one of those processor security features stops both Sally and Robert from being able to write to the memory. How do they communicate then?

When Sally the Sender executes some of her code, the cache— the last-level cache, to be specific—is going to remember her most recently executed code. When Robert the Receiver reads a chunk of code in Sally’s binary, the read operation is going to be sent through the very same cache. So: if Sally ran the code not too long ago, Robert’s read will happen very fast. If Sally hasn’t run the code in a while, Robert’s read is going to be slow.

Sally and Robert are going to agree ahead of time on 27 locations in Sally’s binary. That’s one location for each letter of the alphabet, and one left over for the space character. To send a message to Robert, Sally is going to spell out the message by executing the code at the location for the letter she wants to send. Robert is going to continually read from all 27 locations in a loop, and when one of them happens faster than usual, he’ll know that’s a letter Sally just sent.

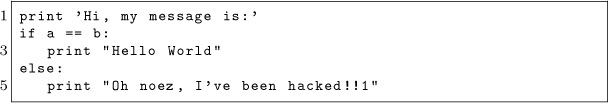

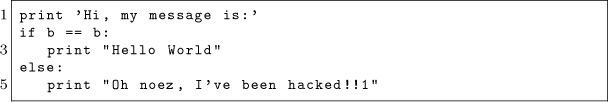

Page 14:04 contains msg.c, the source code for Sally’s binary. Notice that it doesn’t even explicitly make any system calls.

This program takes a message to send on the command-line and simply passes the processor’s thread of execution over the probe site corresponding to that character. To have Sally send the message “THE QUICK BROWN FOX JUMPS OVER THE LAZY DOG” we just compile it without optimizations, then run it.

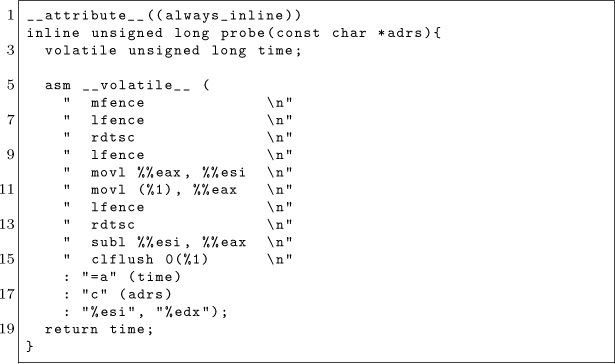

But how does Robert receive the message? Robert runs the program whose source code is at flush-reload/myversion. The key to that program is this bit of code, which times how long it takes to read from an address, and then flushes it from the cache.

By repeatedly running this code on those special probe sites in Sally’s binary, Robert will see which letters Sally is sending. Robert just needs to know where those probe sites are. It’s a matter of filtering the output of objdump to find those addresses, which can be done with this handy script:

Assuming this script works, it will output a list of command-line arguments for the receiver, enumerating which addresses to watch for getting entered into the cache:

The letter before the colon is the name of the probe site, followed by the address to watch after the colon. With those addresses, Robert can run the tool and receive Sally’s messages.

The -e option is the path to Sally’s binary, which must be exactly the same path as Sally executes. The -t parameter is the threshold that decides what’s a fast access or not. If the memory read is faster than that many clock cycles, it will be considered fast, which is to say that it’s in the cache. The -s option is how often in clock cycles to check all of the probes.

With Robert now listening for Sally’s messages, Sally can run this command in another terminal as another user to transmit her message.

$ ./ msg "The quick brown fox jumps over the lazy dog"

Robert sees the following output from the spy tool, where pipe characters separate successive scans over the probes, and between the pipe characters are all the names of the probes found to be in the cache during that scan.

WARNING: This processor does not have an invariant TSC.

Detected ELF type: Executable.

T|H|E|_|Q|U|I|C|K|_|_|B|B|R|O|W|N|_|F|O|X|_|J|U|M|P|S|_|

O|V|E|R|_|T|H|E|_|L|A|Z|Y|_|D|O|G|

There’s a bit of noise in the signal (note the replicated B’s), but it works! Don’t take my word for it, try it for yourself! It’s an eerie feeling to see one process send a message to another even though all they’re doing is reading from memory.

Now you see what the cache really is. Not only does it make your computer go faster, it also has this handy feature that lets you send messages between processes without having to go through a system call. You’re one step closer to enlightenment.

This is just the beginning. You’ll find a collection of tools and experiments that go much further than this.1 The attacks there use Flush+Reload to find out which PDF file you’ve opened, which web pages you’re visiting, and more.

I leave two open challenges to you fine readers:

1. Make the message-sending tool reliable, so that it doesn’t mangle messages even a little bit. Even cooler would be to make it a two-way reliable chat.

2. Extend the PDF-distinguishing attack in my poppler experiment2 to determine which page of pocorgtfo14.pdf is being viewed. As I’m reading this issue of PoC‖GTFO, I want you to be able to tell which page I’m looking at through the side channel.

Best of luck!

—Taylor Hornby

14:05 Anti-Keylogging with Noise

by Mike Myers

In PoC||GTFO 12:7, we learned that malware is inherently “drunk,” and we can exploit its inebriation. This time, our entonnoir de gavage will be filled with random keystrokes instead of single malt.

Gather ’round, neighbors, as we learn about the mechanisms behind the various Windows user-mode keylogging techniques employed by malware, and then investigate a technique for thwarting them all.

Background

Let’s start with a primer on the data flow path of keyboard input in Windows.

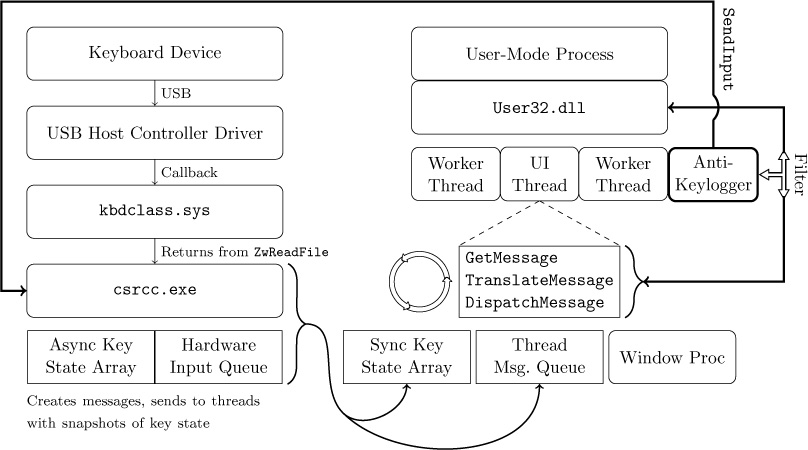

Figure 14.1 is a somewhat simplified diagram of the path of a keystroke from the keyboard peripheral device (top left), into the Windows operating system (left), and then into the active application (right). In more detail, the sequence of steps is as follows:

- The user presses down on a key.

- The keyboard’s internal microcontroller converts key-down activity to a device-specific “scan code,” and issues it to keyboard’s internal USB device controller.

- The keyboard’s internal USB device controller communicates the scan-code as a USB message to the USB host controller on the host system. The scan code is held in a circular buffer in the kernel.

- The keyboard driver(s) converts the scan code into a virtual key code. The virtual key code is applied as a change to a real-time system-wide data struct called the Async Key State Array.

- Windows OS process Csrcc.exe reads the input as a virtual key code, wraps it in a Windows “message,” and delivers it to the message queue of the UI thread of the user-mode application that has keyboard focus, along with a time-of-message update to a per-thread data struct called the Sync Key State Array.

- The user application’s “message pump” is a small loop that runs in its UI thread, retrieving Windows messages with GetMessage(), translating the virtual key codes into usable characters with TranslateMessage(), and finally sending the input to the appropriate callback function for a particular UI element (also known as the “Window proc”) that actually does something with the input, such as displaying a character or moving the caret.

Figure 14.1: Data flow of keyboard input in Windows.

For more detail, official documentation of Windows messages and Windows keyboard input can be found in MSDN MS632586 and MS645530.

User-Mode Keylogging Techniques in Malware

Malware that wants to intercept keyboard input can attempt to do so at any point along this path. However, for practical reasons input is usually intercepted using hooks within an application, rather than in the operating system kernel.

There are many reasons for this. First, hooking in the kernel requires Administrator privilege; including, today, a way to meet or circumvent the driver code-signing requirement. Hooking in the kernel before the keystroke reaches the keyboard driver only obtains a keyboard device-dependent “scan code” version of the keystroke, rather than its actual character or key value. Hooking in the kernel after the keyboard driver but before the application obtains only a “virtual key code” version of the keystroke, contextual with regard to the keyboard “layout” or language of the OS. Finally, hooking in the kernel means that the malware doesn’t know which application is receiving the keyboard input, because the OS has not yet dispatched the keystrokes to the active/focused application.

This is why, practically speaking, malware only has a handful of locations where it can intercept keyboard input: upon entering or leaving the system message queue, or upon entering or leaving the thread message queue.

Now that we know the hooking will likely be in user-mode, we can learn about the methods to do user-mode keystroke logging, which include:

- Hooking the Windows message functions TranslateMessage(), GetMessage(), and PeekMessage() to capture a copy of messages as they are retrieved from the per-thread message queue.

- Creating a Windows message hook for the WH_KEYBOARD message using SetWindowsHookEx().

- Similarly, creating a Windows message hook for the so-called “LowLevel Hook” (WH_KEYBOARD_LL) message with SetWindowsHookEx().

- Similarly, creating a Windows message hook for WH_JOURNAL-RECORD, in order to create a Journal Record Hook. Note that this method has been disabled since Windows Vista.

- Polling the system with GetAsyncKeyState().

- Similarly, polling the system with GetKeyboardState() or GetKeyState().

- Similarly, polling the system with GetRawInputData().

- Using DirectX to capture keyboard input (somewhat lower-level method).

- Stealing clipboard contents using, e.g., GetClipboardData().

- Stealing screenshots or enabling a remote desktop view (multiple methods).

The following table lists some pieces of malware and which method they use.

MALWARE |

KEYLOGGING TECHNIQUE |

Zeus |

Hooks TranslateMessage(), GetMessage(), PeekMessage(), and GetClipboardData(); uses GetKeyboardState().0 |

Sality |

GetMessage(), GetKeyState(), PeekMessage(), TranslateMessage(), GetClipboardData(). |

SpyEye |

Hooks TranslateMessage(), then uses GetKeyboardState(). |

Poison Ivy |

Polls GetKeyboardLayout(), GetAsyncKeyState(), GetClipboardData(), and uses SetWindowsHookEx(). |

Gh0st RAT |

Uses SetWindowsHookEx() with WH_GETMESSAGE, which is another way to hook GetMessage(). |

Anti-Keylogging with Keystroke Noise

One approach to thwarting keyloggers that might seem to have potential is to insert so many phantom keyboard devices into the system that the malware cannot reliably select the actual keyboard device for keylogging. However, based upon our new understanding of how common malware implements keylogging, it is clear that this approach will not be successful, because mal-ware does not capture keyboard input by reading it directly from the device. Malware is designed to intercept the input at a layer high enough as to be input device agnostic. We need a different technique.

Our idea is to generate random keyboard activity “noise” emanating at a low layer and removed again in a high layer, so that it ends up polluting a malware’s keylogger log, but does not actually interfere at the level of the user’s experience. Our approach, shown in Figure 14.2, is illustrated as a modification to the previous diagram.

Technical Approach

What we have done is create a piece of dynamically loadable code (currently a DLL) which, once loaded, checks for the presence of User32.dll and hooks its imported DispatchMessage() API. From the DispatchMessage hook, our code is able to filter out keystrokes immediately before they would otherwise be dispatched to a Window Proc. In other words, keystroke noise can be filtered here, at a point after potential malware would have already logged it. The next step is to inject the keystroke noise: our code runs in a separate thread and uses the SendInput() API to send random keystroke input that it generates. These keystrokes are sent into the keyboard IO path at a point before the hooks typically used by keylogging malware.

Figure 14.2: A noise generating anti-keylogger plugged into the Windows keyboard data flow.

In order avoid sending keystroke noise that will be delivered to a different application and therefore not filtered, our code must also use the SetWindowsHookEx() API to hook the WindowProc, in order to catch the messages that indicate our application is the one with keyboard focus. WM_SETFOCUS and WM_KILLFOCUS messages indicate gaining or losing keyboard input focus. We can’t catch these messages in our DispatchMessage() hook because, unlike keyboard, mouse, paint, and timer messages, the focus messages are not posted to the message queue. Instead they are sent directly to WindowProc. By coordinating the focus gained/lost events with the sending of keystroke noise, we prevent the noise from “leaking” out to other applications.

Related Research

In researching our concept, we found some prior art in the form of a European academic paper titled NoisyKey.1 They did not release their implementation, though, and were much more focused on a statistical analysis of the randomness of keys in the generated noise than in the noise channel technique itself. In fact, we encountered several technical obstacles never mentioned in their paper. We also discovered a commercial product called KeystrokeInterference. The trial version of KeystrokeInterference definitely defeated the keylogging methods we tested it against, but it did not appear to actually create dummy keystrokes. It seemed to simply cause keyloggers to gather incomplete data— depending on the method, they would either get nothing at all, only the Enter key, only punctuation, or they would get all of the keystroke events but only the letter “A” for all of them.

Thus, KeystrokeInterference doesn’t obfuscate the typing dynamics, and it appears to have a fundamentally different approach than we took. (It is not documented anywhere what that method actually is.)

Challenges

For keystroke noise to be effective as interference against a keylogger, the generated noise should be indistinguishable from user input. Three considerations to make are the rate of the noise input, emulating the real user’s typing dynamics, and generating the right mix of keystrokes in the noise.

Rate is fairly simple: the keystroke noise just has to be generated at a high enough rate that it well outnumbers the rate of keys actually typed by the user. Assuming an expert typist who might type at 80 WPM, a rough estimate is that our noise should be generated at a rate of at least several times that. We estimated that about 400 keystrokes per minute, or about six per second, should create a high enough noise to signal ratio that it is effectively impossible to discern which keys were typed. The goal here is to make sure that random noise keys separate all typed characters sufficiently that no strings of typed characters would appear together in a log.

Addressing the issue of keystroke dynamics is more complicated. Keystroke dynamics is a term that refers to the ability to identify a user or what they are typing based only on the rhythms of keyboard activity, without actually capturing the content of what they are typing. By flooding the input with random noise, we should break keystroke rhythm analysis of this kind, but only if the injected keystrokes have a random rhythm about them as well. If the injected keystrokes have their own rhythm that can be distinguished, then an attacker could theoretically learn to filter the noise out that way. We address this issue by inserting a random short delay before every injected keystroke. The random delay interval has an upper bound but no lower bound. The delay magnitude here is related to the rate of input described previously, but the randomness within a small range should mean that it is difficult or impossible to distinguish real from injected keystrokes based on intra-keystroke timing analysis.

Another challenge was detecting when our application had (keyboard) input focus. It is non-trivial for a Windows application to determine when its window area has been given input focus: although there are polling-based Windows APIs that can possibly indicate which Window is in the foreground (GetActiveWindow, GetForegroundWindow), they are neither efficient nor sufficient for our purposes. The best solution we have at the moment is that we installed a “Window Proc” hook to monitor for WM_SETFOCUS and other such messages. We also found it best to temporarily disable the keystroke noise generation while the user was click-dragging the window, because real keyboard input is not simultaneously possible with dragging movements. There are likely many other activation and focus states that we have not yet considered, and which will only be discovered through extensive testing.

Lastly, we had to address the need to generate keystroke noise that included all or most of the keys that a user would actually strike, including punctuation, some symbols, and capital letters. This is where we encountered the difficulty with the Shift key modifier. In order to create most non-alphanumeric keystrokes (and to create any capital letters, obviously), the Shift key needs to be held in concert with another key. This means that in order to generate such a character, we need to generate a Shift key down event, then the other required key down and up events, then a Shift key up event. The problem lies in the fact that the system reacts to our injected shift even if we filter it out: it will change the capitalization of the user’s actual keystrokes. Conversely, the user’s use of the Shift key will change the capitalization of the injected keys, and our filter routine will to fail recognize them as the ones we recently injected, allowing them through instead.

The first solution we attempted was to track every time the user hit the Shift key and every time we injected a Shift keystroke, and deconflict their states when doing our filter evaluation. Unfortunately, this approach was prone to failure. Subtle race conditions between Async Key State (“true” or “system” key state, which is the basis of the Shift key state’s affect on character capitalization) and Sync Key State (“per-thread” key state, which is effectively what we tracked in our filter) were difficult to debug. We also discovered that it is not possible to directly set and clear the Shift state of the Async Key State table using an API like SetKeyboardStateTable().

We considered using BlockInput() to ignore the user’s keyboard input while we generated our own, in order to resolve a Shift state confusion. However, in practice, this API can only be called from a High Integrity Level process (as of Windows Vista), making it impractical. It would probably also cause noticeable problems with keyboard responsiveness. It would not be acceptable as a solution.

Ultimately, the solution we found was to rely on a documented feature of SendInput() that will guarantee non-interleaving of inputs. Instead of calling SendInput() four times (Shift down, key down, key up, Shift up) with random delays in between, we would instead create an array of all four key events and call SendInput once. SendInput() then ensures that there are no other user inputs that intermingle with your injected inputs, when performed this way. Additionally, we use GetAsyncKeyState() immediately before SendInput in order to track the actual Shift state; if Shift were being held down by the user, we would not also inject an interfering Shift key down/up sequence. Together, these precautions solved the issue with conflicting Shift states. However, this has the downside of taking away our ability to model a user’s key-down-to-up rhythms using the random delays between those events as we originally intended.

Once we had made the change to our use of SendInput(), we noticed that these injected noise keys were no longer being picked up by certain methods of keylogging! Either they would completely not see the keystroke noise when injected this way, or they saw some of the noise, but not enough for it to be effective anymore. What we determined was happening is that certain keylogging methods are based on polling for keyboard state changes, and if activity (both a key down and its corresponding key up) happens in between two subsequent polls, it will be missed by the keylogger.

When using SendInput to instantaneously send a shifted key, all four key events (Shift key down, key down, key up, Shift key up) pass through the keyboard IO path in less time than a keylogger using a polling method can detect (at practical polling rates) even though it is fast enough to pick up input typed by a human. Clearly this will not work for our approach. Unfortunately, there is no support for managing the rate or delay used by SendInput; if you want a key to be “held” for a given amount of time, you have to call SendInput twice with a wait in between. This returns us to the problem of user input being interleaved with our use of the Shift key.

Our compromise solution was to put back our multiple SendInput() calls separated by delays, but only for keys that didn’t need Shift. For keys that need Shift to be held, we use the single SendInput() call method that doesn’t interleave the input with user input, but which also usually misses being picked up by polling-based keyloggers. To account for the fact that polling-based keyloggers would receive mostly only the slower unshifted key noise that we generate, we increased the noise amount proportionately. This hybrid approach also enables us to somewhat model keystroke dynamics, at least for the unshifted keystrokes whose timing we can control.

CPU and RAM usage of the PoC keystroke noise generator.

PoC Results

Our keystroke noise implementation produces successful results as tested against multiple user-mode keylogging methods.

Input-stealing methods that do not involve keylogging (such as screenshots and remote desktop) are not addressed by our approach. Fortunately, these are far less attractive methods to attackers: they are high-bandwidth and less effective in capturing all input. We also did not address kernel-mode keylogging techniques with our approach, but these too are uncommon in practical malware, as explained earlier.

Because the keystroke noise technique is an active technique (as opposed to a passive configuration change), it was important to test the CPU overhead incurred. As seen on page 60, the CPU overhead is incredibly minimal: it is less than 0.3% of one core of our test VM running on an early 2011 laptop with a second generation 2GHz Intel Core i7. Some of that CPU usage is due to the GUI of the demo app itself. The RAM overhead is similarly minimal; but again, what is pictured is mostly due to the demo app GUI.

Conclusions

Although real-time keyboard input is effectively masked from keyloggers by our approach, we did not address clipboard-stealing malware. If a user were to copy and paste sensitive information or credentials, our current approach would not disrupt malware’s ability to capture that information. Similarly, an attacker could take a brute-force approach of capturing what the user sees, and grab keyboard input that way. For approaches like these, there are other techniques that one could use. Perhaps they would be similar to the keystroke noise concept,2 but that is research that remains to be done.

Console-mode applications don’t rely on Windows messages, and as such, our method is not yet compatible with them. Console mode applications retrieve keyboard input differently, using the kbhit() and getkey() APIs. Likewise, any Windows application that checks for keyboard input without any use of Windows Messages (rare, but theoretically possible), by polling GetKeyboardState(), is also not yet compatible with our approach. There is nothing fundamentally incompatible; we would just need to instrument a different set of locations in the input path in order to filter out injected keyboard input before it is observed by console-mode applications or “abnormal” keyboard state checking of this sort.

Another area for further development is in the behavior of SendInput(). If we reverse engineer the SendInput API, we may be able to reimplement it in a way specifically suited for our task. Specifically we would like the timing between batched input elements to be controllable, while maintaining the input interleaving protection that it provides when called using batched input.

We discovered during research that a “low-level keyboard hook” (SetWindowsHookEx() with WH_KEYBOARD_LL) can check a flag on each callback called LLKHF_INJECTED, and know if the keystroke was injected in software, e.g., by a call to SendInput(). So in the future we would also seek a way to prevent win32k.sys from setting the LLKHF_INJECTED flag on our injected keystrokes. This flag is set in the kernel by win32k.sys!XxxKeyEvent, implying that it may require kernel-level code to alter this behavior. Although this would seem to be a clear way to defeat our approach, it may not be so. Although we have not tested it, any on-screen keyboard or remotely logged-on user’s key inputs supposedly come through the system with this flag set, so a keylogger may not want to filter on this flag. Once we propose loading kernel code to change a flag, though, we may as well change our method of injecting input and just avoid this problem entirely. By so doing we could also likely address the problem of kernel-mode keyloggers.

Acknowledgments

Funding for this work was provided in part by the Halting Attacks Via Obstructing Configurations (HAVOC) project under Mudge’s DARPA Cyber Fast Track program, Digital Operatives IR&D, and our famous Single Malt Gavage Funnel. With that said, all opinions and hyperbolic, metaphoric, gastronomic, trophic analogies expressed in this article are the author’s own and do not necessarily reflect the views of DARPA or the United States government.

14:06 How likely are random bytes to be a NOP sled on ARM?

by Niek Timmers and Albert Spruyt

Howdy folks!