Chapter 20. Telerobotics and Telepresence

The enabling technologies of virtual and augmented reality also hold significant promise in telerobotics, telepresence, and the control of semi-autonomous robots and systems from a distance. In this chapter we will explore three such applications, demonstrating use of these technologies in settings that range from high-end professional systems to capabilities recently made available to the general hobbyist.

Defining Telerobotics and Telepresence

The objective of a telerobotic system is to connect humans and robots to reproduce operator actions at a distance (Ferre, 2007). The field is a branch of general robotics, the roots of which can be found in the need to perform work in hazardous or otherwise unreachable environments. The modern foundations of the field can be traced back to the 1950s and 1960s at Argonne National Laboratory and the handling of nuclear waste (Goertz, 1964). Today telerobotics has grown significantly to encompass a large number of fields including explosive ordnance disposal, control of remotely piloted vehicles, undersea exploration, and more.

Telepresence is defined as the experience or perception of presence within a physically remote environment by means of a communication medium (Steuer, 1992). Although in recent years the phrase has been liberally applied to a variety of disparate technologies such as video conferencing, face-to-face mobile chat, and even high-definition television, all the examples we provide in this chapter involve the use of some form of stereoscopic visualization technique, with head-mounted displays as the primary interface in most instances.

Space Applications and Robonaut

The International Space Station (ISS) is an extremely complex microgravity laboratory orbiting the Earth at an altitude of approximately 250 miles. Measuring 357 feet in length (just short of the size of a football field), the internal living volume is larger than a six-bedroom home. Fifty-two computers control the basic functionality of the station. In the U.S. segment alone, 1.5 million lines of software code run on 44 computers communicating via 100 different data networks. Tremendous amounts of information are passed between the different onboard systems and Earth stations. Nearly an acre of solar panels provide 75–90 kilowatts of power to the overall structure. Now think of the time and effort it takes to maintain this basic infrastructure, and this is before you consider the complex collection of experiments and engineering systems.

Early in the Space Shuttle program, NASA recognized the need for a means to assist astronauts in carrying out maintenance projects, experiments, and tasks deemed either too dangerous for crew members or too mundane to be a good use of an astronaut’s time (NASA, 2011). In 1996, the Dexterous Robotics Laboratory at NASA’s Johnson Space Center began collaboration with the Defense Advanced Research Projects Agency (DARPA) to develop a robotic assistant to help meet these needs.

The result of these collaborations is NASA’s Robonaut, a humanoid robot designed to function autonomously, as well as side by side with humans, carrying out some of these tasks. The newest model, developed by NASA and General Motors, is called Robonaut 2 (R2). As shown in Figure 20.1, the anthropomorphic R2 has a head, torso, arms, and dexterous hands capable of handling and manipulating many of the same tools as an astronaut would use to carry out different tasks. Delivered to the ISS in February 2011 aboard STS-133 (one of the final flights of the Space Shuttle), R2 is the first humanoid robot in space.

Credit: Image courtesy of NASA

Figure 20.1 Robonaut 2 is the next-generation dexterous humanoid robot, developed through a Space Act Agreement by NASA and General Motors. Controlled by an astronaut or ground personnel using a stereoscopic head-mounted display, vest, and specialized gloves, the system is being developed to perform a variety of tasks both inside and outside the International Space Station.

R2 stands 3 feet, 4 inches tall (from waist to head) and incorporates more than 350 processors. The system can move at rates up to 7 feet per second. Between the fingers, arms, and head, the system is capable of movement across 42 degrees of freedom.

R2 has two primary operating modes: astronauts can give R2 a task to perform that is carried out autonomously, or the system can be controlled via teleoperation. In the case of the latter, as shown in Figure 20.2, an astronaut on the space station or a member of the ground crew dons a stereoscopic head-mounted display and a pair of CyberGlove motion-capture data gloves. (See Chapter 12, “Devices to Enable Navigation and Interaction.”) Movement of R2’s head, arms, and hands is slaved precisely to that of the human operator.

Credit: Image courtesy of NASA

Figure 20.2 In the International Space Station’s Destiny laboratory, NASA astronaut Karen Nyberg wears a stereoscopic head-mounted display and CyberGloves while testing Robonaut 2’s capabilities.

In 2014, R2 received its first pair of legs, which give the robot a fully extended leg span of 9 feet, to help the system move around the ISS to complete simple tasks. Instead of feet, the legs end in clamps that allow the robot to latch onto objects and maneuver through the interior of the ISS.

Robotic Surgery

Efforts are also underway to develop the techniques and procedures that would eventually enable the Robonaut system to carry out basic surgery and medical procedures in case of an emergency. As an example of the emerging capabilities of the system, in 2015 researchers with Houston Methodist Institute for Technology, Innovation & Education (MITIE) used the R2 platform to perform multiple ground-based medical and surgical tasks via teleoperation. These tasks included intubation, assisting during simulated laparoscopic surgery, performing ultrasound-guided procedures, and executing a SAGES (Society of American Gastrointestinal and Endoscopic Surgeons) training exercise (Dean and Diftler, 2015).

One of the many challenges that engineers face in fielding an effective robotic helper, and in particular one that can carry out telemedicine procedures, is the time lag between a human operator’s movement or command and the resulting action of the robotic system. In a lab setting or across town, this delay is negligible, but between a surgeon or physician on the ground and an orbiting space station, it could reach several seconds depending on the path the signal has to take (data relay satellites, ground stations, and so on). For deep space travel, these times increase dramatically. As an example, communications with spacecraft operating on Mars could have round-trip delays of up to 31 minutes depending on the planets’ relative positions (Dunn, 2015).
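To get a feel for the scale of these delays, the light-travel component can be estimated from path length alone. The following is a minimal sketch, not a model of any actual NASA link: the function name and the example path lengths are illustrative assumptions. Light-travel time is only a lower bound, so any additional delay from relay hops, processing, and routing is not modeled here.

```python
# Speed of light in vacuum, in kilometers per second (a defined constant).
SPEED_OF_LIGHT_KM_S = 299_792.458


def round_trip_delay_s(path_length_km: float) -> float:
    """Return the minimum round-trip signal delay in seconds for a one-way
    path of the given length. Only light-travel time is counted."""
    if path_length_km < 0:
        raise ValueError("path length must be non-negative")
    return 2.0 * path_length_km / SPEED_OF_LIGHT_KM_S


# Illustrative one-way path lengths. These are assumed values chosen for
# the example, not measured link data.
EXAMPLE_PATHS_KM = {
    "across town (assumed 20 km)": 20.0,
    "ground to orbit via a geostationary relay (assumed 72,000 km)": 72_000.0,
    "Earth to Mars (assumed 225 million km separation)": 225_000_000.0,
}

if __name__ == "__main__":
    for label, km in EXAMPLE_PATHS_KM.items():
        delay = round_trip_delay_s(km)
        print(f"{label}: {delay:.4f} s ({delay / 60.0:.2f} min) round trip")
```

Because the delay scales linearly with distance, no improvement in equipment can remove it for deep space operations. This is one reason an autonomous operating mode matters alongside teleoperation.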

As R2 technology matures, NASA is considering a wide variety of additional areas where similar robots could be utilized in the space program, including sending them farther away from Earth to test the system in harsher conditions. Ultimately, NASA envisions the potential use of such systems in servicing weather and reconnaissance satellites and, eventually, in traveling ahead to remote locations such as Mars to pave the way for human explorers (NASA, 2014).

Undersea Applications

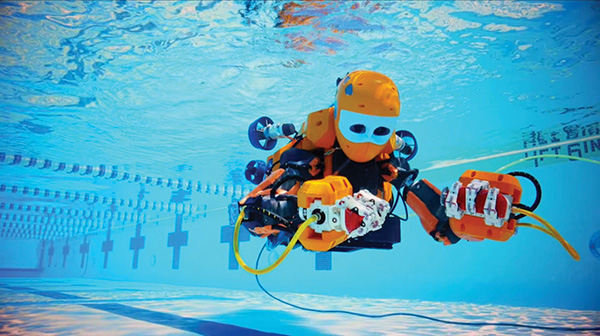

About 70% of the Earth’s surface is covered with water. Similar to space, the world’s oceans present innumerable opportunities for exploration and discovery, but along with these opportunities come unique challenges and dangers. On one hand, robotic submarines, autonomous underwater vehicles (AUVs), and underwater ROVs don’t have the dexterity or finesse of human divers. On the other hand, human divers are unable to reach certain ocean depths without significant expense and danger. To address these challenges and open the doors to exploration and study of a greater portion of our planet, researchers with Stanford University have developed a highly advanced telerobotic system known as OceanOne, shown in Figure 20.3.

Credit: Image courtesy of Kurt Hickman / Stanford News Service

Figure 20.3 The OceanOne highly dexterous robot from Stanford University uses artificial intelligence, haptic feedback systems, and stereo vision to enable human operators to work and explore at remote underwater task sites.

Designed with the original intent of conducting studies of deep coral reefs in the Red Sea, the highly dexterous OceanOne system measures approximately 5 feet in length and includes two fully articulating arms, a torso, and a head with stereo video cameras capable of motion across two degrees of freedom (DOF). A tail section neatly combines redundant power units, onboard computers, controllers, and a propulsion system made up of eight multidirectional thrusters.

The end effectors of OceanOne’s arms include an array of sensors that send force feedback information to human operators located on the surface, enabling the pilot to delicately manipulate objects such as artifacts from shipwrecks, elements of a coral reef, sensors, and other instrumentation.

The system is outfitted with an array of sensors to measure conditions in the water and is able to automatically adjust for currents and turbulence to maintain position (known as station keeping).
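Station keeping can be illustrated with a very simple feedback loop. The sketch below is a one-dimensional proportional-derivative (PD) controller holding a simulated vehicle against a steady current. It is not OceanOne's actual control system, which the source does not describe. The mass, drag, gains, and thrust limit are all assumed values chosen only to make the example behave sensibly.

```python
from dataclasses import dataclass


@dataclass
class VehicleState:
    position_m: float = 0.0    # displacement from the hold point
    velocity_m_s: float = 0.0  # velocity along the same axis


def pd_thrust(state: VehicleState, kp: float, kd: float, max_thrust_n: float) -> float:
    """PD command that pushes the vehicle back toward position 0,
    limited to what the (hypothetical) thrusters can deliver."""
    command = -kp * state.position_m - kd * state.velocity_m_s
    return max(-max_thrust_n, min(max_thrust_n, command))


def simulate(current_force_n: float, steps: int = 6000, dt: float = 0.01,
             mass_kg: float = 200.0, drag_n_per_m_s: float = 50.0,
             kp: float = 400.0, kd: float = 300.0,
             max_thrust_n: float = 100.0) -> VehicleState:
    """Integrate the vehicle's motion under a constant current force."""
    state = VehicleState()
    for _ in range(steps):
        thrust = pd_thrust(state, kp, kd, max_thrust_n)
        drag = -drag_n_per_m_s * state.velocity_m_s  # linear drag, an assumption
        accel = (thrust + current_force_n + drag) / mass_kg
        state.velocity_m_s += accel * dt
        state.position_m += state.velocity_m_s * dt
    return state


if __name__ == "__main__":
    held = simulate(current_force_n=20.0)
    drifting = simulate(current_force_n=20.0, kp=0.0, kd=0.0)
    print(f"with station keeping: {held.position_m:.3f} m from hold point")
    print(f"without control:      {drifting.position_m:.3f} m from hold point")
```

With a constant current, a PD controller settles at a small residual offset equal to the current force divided by the proportional gain. A practical controller would typically add an integral term or a current estimate to remove that offset.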

The OceanOne system has already proven its capabilities and usefulness during a maiden dive in early 2016. Controlled by human operators on the surface, the system was used to explore La Lune, the flagship of King Louis XIV located 100 meters below the surface of the Mediterranean Sea approximately 20 miles off the southern coast of France. It is at this location that the vessel sank in 1664. During the dive, OceanOne was used to recover and return to the surface a delicate vase untouched by human hands for more than 350 years (Carey, 2016).

Terrestrial and Airborne Applications

In many Earth-based applications, experiencing a true sense of presence at a remote task site does not need to cost millions of dollars, nor does it have to take the form of the increasingly laughable model of a pole on wheels with a tablet computer on top. The recent introduction of consumer-priced wide field of view (FOV) stereoscopic displays such as Oculus, HTC Vive, and OSVR is opening a host of opportunities for companies large and small to introduce alternative, highly useful, and cost-effective remote visualization solutions.

DORA (Dexterous Observational Roving Automaton)

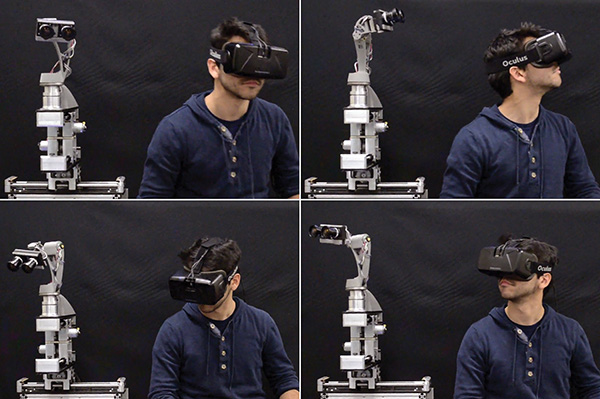

One such system is an immersive teleoperated robotic platform known as DORA (Dexterous Observational Roving Automaton), developed by an early-stage start-up team of student roboticists from the University of Pennsylvania. Sensors in the Oculus display system track the orientation of the operator’s head. This data is wirelessly transmitted to the robot’s microcontrollers, prompting actuators to move the stereo camera–equipped head to follow the user’s movements.

The idea behind DORA is pretty straightforward. As shown in Figure 20.4, the user puts on a stereoscopic head-mounted display, the video signals for which are supplied from the DORA platform. Sensors in the display are used to slave the motion of the DORA system to that of the wearer’s head. One of the most impressive features of the system was the original development price of less than $2,000 USD.

Credit: Image courtesy of Daleroy Sibanda / DORA

Figure 20.4 The DORA telerobotics platform gives users an immersive view of a remote task site by slaving the movement of stereo cameras to movement of an Oculus display, and thus, the operator’s head.
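The head-slaving scheme described above can be sketched in a few lines. The example below assumes the display reports head orientation as a unit quaternion and converts it to yaw, pitch, and roll angles, which are then clamped to the mechanical range of the camera head. The axis convention (yaw about Z, pitch about Y, roll about X), the function names, and the limit values are assumptions made for illustration. They are not taken from DORA's software or from any particular headset SDK.

```python
import math

# Hypothetical mechanical limits of the camera head, in degrees.
CAMERA_LIMITS_DEG = {
    "yaw": (-90.0, 90.0),
    "pitch": (-45.0, 45.0),
    "roll": (-30.0, 30.0),
}


def quaternion_to_yaw_pitch_roll(w, x, y, z):
    """Convert a unit quaternion to (yaw, pitch, roll) in degrees using the
    common Z-Y-X (yaw-pitch-roll) convention."""
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Guard against rounding error pushing the asin argument outside [-1, 1].
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return math.degrees(yaw), math.degrees(pitch), math.degrees(roll)


def head_to_camera_angles(quaternion, limits=CAMERA_LIMITS_DEG):
    """Map a head orientation to camera-head target angles, clamped so the
    actuators are never commanded past their assumed travel."""
    yaw, pitch, roll = quaternion_to_yaw_pitch_roll(*quaternion)
    angles = {"yaw": yaw, "pitch": pitch, "roll": roll}
    return {axis: max(lo, min(hi, angles[axis]))
            for axis, (lo, hi) in limits.items()}


if __name__ == "__main__":
    # Operator turns their head 120 degrees to the left (pure yaw).
    half = math.radians(120.0) / 2.0
    head = (math.cos(half), 0.0, 0.0, math.sin(half))
    print(head_to_camera_angles(head))
```

Clamping is the important design choice here: a person can turn their head farther than a small camera mount can travel, so the robot follows the operator only within the range its actuators support.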

The team envisions its first markets to be museums, which could offer remote tour rentals, and first responders, disaster relief workers, and combat engineers, who could use the platform to enter areas deemed too dangerous for a human operator. In each case the system adds the benefit of a variable stereoscopic first-person point of view, something woefully lacking in most current robotics platforms specifically designed for that purpose.

Transporter3D DIY Telepresence System

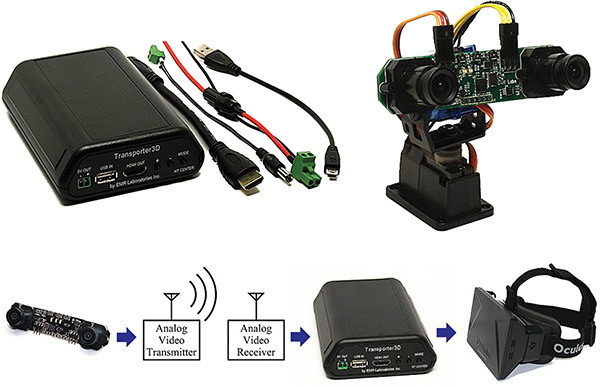

Although consumer-level wide-field-of-view immersive displays have only recently become available, systems are already appearing on the market that enable technophiles to rapidly construct their own low-cost, first-person view (FPV) stereoscopic telepresence systems. One such offering of particular note is the Transporter3D system from EMR Laboratories of Waterloo, Ontario, Canada, shown in Figure 20.5.

Credit: Image courtesy of EMR Laboratories, Inc

Figure 20.5 The Transporter3D system converts remotely transmitted stereoscopic 3D video to real-time output over HDMI for viewing within stereoscopic head-mounted displays. The system is specifically designed for hobbyists and radio controlled aircraft enthusiasts.

The Transporter3D is a device that supports the use of head-mounted displays by converting remotely transmitted stereoscopic 3D video to real-time output over HDMI. It is specifically designed for use with the Oculus Rift and other HD HMDs. (See Chapter 6, “Fully Immersive Displays.”)

The accompanying 3D-Cam FPV camera is capable of both serial 3D (field-sequential 3D over a single video transmitter) and parallel 3D (dual-channel output), can be used in either 2D or 3D mode, and has remotely adjustable exposure and white balance locking for keeping ground and sky colors true under adverse lighting conditions. The camera lenses are positioned at the mean human interocular distance for correct parallax offsets and ease of viewing. The interpupillary distance (IPD) of the images shown in the Oculus display can also be easily adjusted for precise convergence alignment. Vertical and horizontal alignment of the onscreen images can be varied by the user, as can the aspect ratio of the displayed imagery.

A distinctive feature of this system is support for mounting the 3D-Cam FPV camera on a 3-axis pan/tilt/roll mount such as the FatShark gimbal shown in Figure 20.5. In this configuration, the system uses the Oculus display’s internal IMU-based head tracker to slave movement of the camera to the operator’s head pan, tilt, and roll motions, completely independent of any other controls. Thus, if the camera is mounted on an RC aircraft or helicopter, the operator can swivel their head to look in any direction without affecting the flight of the aircraft.
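One way to picture how head motion can drive a gimbal independently of the flight controls is to map each head angle to its own servo channel. The sketch below uses the common hobby-servo convention of a pulse width between 1000 and 2000 microseconds, centered at 1500. It illustrates that general convention only. The source does not describe the Transporter3D's actual head-tracker output, and the function names, angle range, and channel layout here are hypothetical.

```python
def angle_to_pulse_us(angle_deg: float, max_angle_deg: float = 90.0,
                      center_us: float = 1500.0, span_us: float = 500.0) -> int:
    """Map an angle in [-max_angle_deg, +max_angle_deg] to a servo pulse
    width in microseconds, clamping angles outside that range."""
    clamped = max(-max_angle_deg, min(max_angle_deg, angle_deg))
    return round(center_us + span_us * clamped / max_angle_deg)


def gimbal_channels(pan_deg: float, tilt_deg: float, roll_deg: float) -> dict:
    """Build the three camera-gimbal channel values from head angles.
    These channels are separate from the channels that fly the aircraft
    (throttle, ailerons, and so on), so head motion never alters flight
    commands."""
    return {
        "pan": angle_to_pulse_us(pan_deg),
        "tilt": angle_to_pulse_us(tilt_deg),
        "roll": angle_to_pulse_us(roll_deg),
    }


if __name__ == "__main__":
    # Operator looks 45 degrees right and 20 degrees down, head level.
    print(gimbal_channels(pan_deg=45.0, tilt_deg=-20.0, roll_deg=0.0))
```

Keeping the gimbal on dedicated channels is what makes the camera view independent of the aircraft's heading: the pilot's sticks and the pilot's head feed different outputs.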

Conclusion

Telerobotics and telepresence are not new fields, but until recently they have been the realm of defense and university labs due to the traditionally high cost of enabling technologies, and in particular, specialty wide FOV stereoscopic head-mounted displays. The introduction of consumer displays such as Oculus, HTC Vive, and OSVR will likely have a dramatic impact on this field, resulting in the introduction of a host of new systems and applications in the coming few years.