Building Customer Propensity Models

This chapter will provide a practical guide for building machine learning models. It focuses on buyer propensity models, showing how to apply the data science process to this business problem. Through a step-by-step guide, this chapter will explain how to apply key concepts and leverage the capabilities of Microsoft Azure Machine Learning for propensity modeling.

The Business Problem

Imagine that you are a marketing manager of a large bike manufacturer. You have to run a mailing campaign to entice more customers to buy your bikes. You have a limited budget and your management wants you to maximize the return on investment (ROI). So the goal of your mailing campaign is to find the best prospective customers who will buy your bikes.

With an unlimited budget the task is easy: you can simply buy lists and mail everyone. However, this brute force approach is wasteful and will yield a limited ROI since it will simply amount to junk mail for most recipients. It is very unlikely that you will meet your goals with this untargeted approach since it will lead to very low response rates.

A better approach is to use predictive analytics to target the best potential customers for your bikes, that is, the customers who are most likely to buy. This class of predictive analytics is called buyer propensity models or customer targeting models. With this approach, you build models that predict the likelihood that a prospective customer will respond to your mailing campaign.

In this chapter, we will show you how to build this class of models in Azure Machine Learning. With the finished model you will score prospective customers and only mail those who are most likely to respond to your campaign. We will also show how you can maximize the ROI on your limited marketing budget.

Note You will need to have an account on Azure Machine Learning. Refer to Chapter 2 for instructions to set up your new account if you do not have one yet.

As you saw in Chapter 1, the data science process typically follows these five steps.

- Define the business problem.

- Data acquisition and preparation.

- Model development.

- Model deployment.

- Monitor model performance.

Having defined the business problem, you will explore data acquisition and preparation, model development, and evaluation in the rest of this chapter.

Data Acquisition and Preparation

In this section, you will see how to load data from different sources and analyze it in Azure Machine Learning. Azure Machine Learning enables you to load data from several sources including your local machine, the Web, a SQL database on Azure, Hive tables, or Azure Blob Storage.

Loading Data from Your Local File System

The easiest way to load data is from your local machine. Follow these steps.

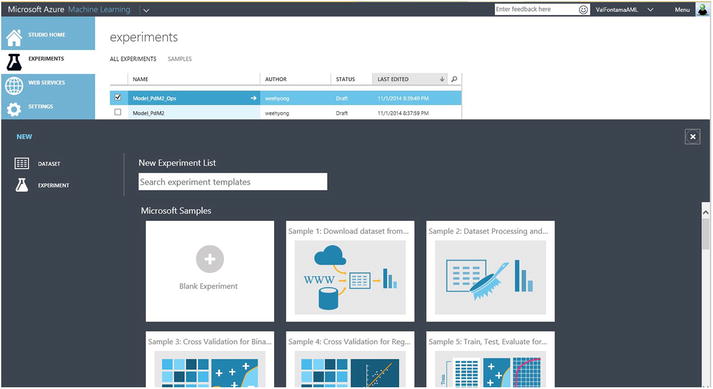

- Point your browser to the URL https://studio.azureml.net and log into your workspace in Azure Machine Learning.

- Next, click the Experiments item from the menu on the left pane.

- Click the +New button at the bottom left side of the window, and then select Dataset from the new menu shown in Figure 5-1.

Figure 5-1. Loading a new dataset from your local machine

- When you choose the option From Local File you will be prompted to select the data file to load from your machine.

You used these steps to load the BikeBuyer.csv file from your local file system into Azure Machine Learning.

Loading Data from Other Sources

You can load data from non-local sources using the Reader module in Azure Machine Learning. Specifically, with the Reader module you can load data from the Web, a SQL database on Azure, Azure table, Azure Blob storage, or Hive tables. To do this you will have to create a new experiment before calling the Reader module. Use the following steps to load data from any of the above sources with the Reader module.

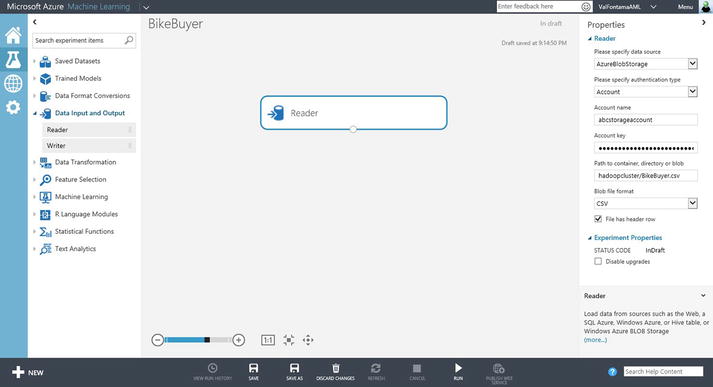

- Point your browser to the URL https://studio.azureml.net and log into your workspace in Azure Machine Learning.

- Next, click the Experiments item from the menu on the left pane.

- Click the +New button at the bottom left side of the window, and then select Experiment from the new menu. A new experiment is launched with a blank canvas, as shown in Figure 5-2.

Figure 5-2. Starting a new experiment in Azure Machine Learning

- Open the Data Input and Output menu item and you will see two modules, Reader and Writer.

- Drag the Reader module from the menu to any position on the canvas.

- Click the Reader module in the canvas and its properties will be shown on the right pane under Reader. Now specify the data source in the dropdown menu and then enter the values of all required parameters. Figure 5-3 shows the completed parameters we entered to read the BikeBuyer.csv file from our Azure Blob storage account. The Account Name and Account Key refer to a storage account on Microsoft Azure. You can obtain these parameters from the Azure portal as follows.

- Log in to Microsoft Azure at http://azure.microsoft.com.

- On the top menu, click Portal. This takes you to the Azure portal.

- Select storage from the menu on the left pane. This lists all your storage accounts in the right pane.

- Click the storage account that contains the data you need for your machine learning model.

- At the bottom of the screen, click the key icon labeled Manage Access Keys. Your storage account name and access keys will be displayed. Use the Primary Access Key as your Account Key in the Reader module in Azure Machine Learning.

Figure 5-3. Loading the BikeBuyer.csv file from Azure Blob storage using the Reader module

Note You can load several datasets from multiple sources into Azure Machine Learning using the above instructions. Note, however, that each Reader module only loads a single dataset at a time.

Data Analysis

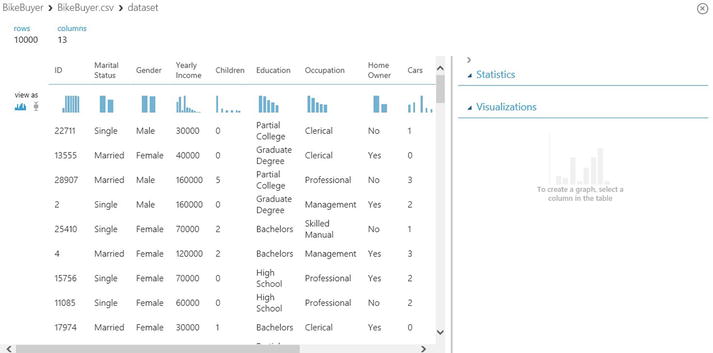

With the data loaded in Azure Machine Learning, the next step is to do pre-processing to prepare the data for modeling. It is always very useful to visualize the data as part of this process. You can visualize the Bike Buyer dataset either from the Reader module or by selecting BikeBuyer.csv from the Saved Datasets menu item in the left pane. When you hover over the small circle at the bottom of the Reader module, a menu is opened giving you two options: Download or Visualize. See Figure 5-4 for details. If you choose the Download option, you can save the data to your local machine and open it in Excel to view the data. Alternatively, you can visualize the data in Azure Machine Learning by choosing the Visualize option.

Figure 5-4. Two options for visualizing data in Azure Machine Learning

If you choose the Visualize option, the data will be rendered in a new window, as shown in Figure 5-5. Figure 5-5 shows the BikeBuyer.csv dataset that has historical sales data on all customers. You can see that this dataset has 10,000 rows and 13 columns including demographic variables such as marital status, gender, yearly income, number of children, occupation, age, etc. Other variables include home ownership status, number of cars, and commute distance. The last column, called Bike Buyer, is very important since it shows which customers bought bikes from your stores in the past.

Figure 5-5. Visualizing the Bike Buyer dataset in Azure Machine Learning

Figure 5-5 also shows descriptive statistics such as mean, median, standard deviation, etc. for all variables in the dataset. In addition, it shows the data type of each variable and the number of missing values. By scrolling down you will see a sample of the dataset showing actual values.
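To make these summary statistics concrete, here is a minimal sketch in plain Python, outside Azure Machine Learning, that computes the mean, median, standard deviation, and missing-value count for one column. The inline sample data and the column names (`Yearly Income`, `Age`) are assumptions for illustration and are not taken from BikeBuyer.csv.

```python
import csv
import io
import statistics

# Hypothetical sample rows; the real BikeBuyer.csv has more columns and rows.
SAMPLE_CSV = """ID,Yearly Income,Age,Bike Buyer
1,40000,42,0
2,30000,,1
3,80000,60,1
4,70000,41,0
"""

def describe(rows, column):
    """Summarize one numeric column, counting empty cells as missing."""
    raw = [row[column] for row in rows]
    values = [float(v) for v in raw if v != ""]
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "std": statistics.stdev(values),  # sample standard deviation
        "missing": len(raw) - len(values),
    }

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
for column in ("Yearly Income", "Age"):
    print(column, describe(rows, column))
```

Reading from a file instead of the inline string only requires passing an open file object to `csv.DictReader`.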

The first row at the top of the feature list is a set of thumbnails showing the distribution of each variable. You can see the full distribution by clicking on the thumbnail. For instance, if you click the thumbnail above yearly income, Azure Machine Learning opens the full histogram in the right pane of the screen; this is shown in Figure 5-6. This histogram shows you the distribution of the selected variable, and you can start to think about the ways in which it can be used in your model. For instance, in Figure 5-6, you can see clearly that yearly income is not drawn from a normal distribution. If anything, it looks more like a lognormal distribution. You can also spot outliers in the histogram. Azure Machine Learning lets you overlay a probability density function or a cumulative distribution on the histogram.

Figure 5-6. A histogram of yearly income

With Azure Machine Learning, you can also transform your data to a log scale. This is an important technique for capturing valid outliers that cannot be dropped. For instance, if your prospective targets include high income customers, it would be unwise to simply drop high income people as outliers. Log transformation is a better way to capture extreme incomes. Figure 5-7 shows the yearly income transformed with the log scale on the x-axis.

Figure 5-7. Transforming yearly income on a log scale

Another useful visualization tool in Azure Machine Learning is the box-and-whisker plot that is widely used in statistics. You can visualize your continuous variables with a box-and-whisker plot instead of a histogram. To do this, select the box-and-whisker icon in the first thumbnail labeled view as.

Figure 5-8 shows yearly income as a box-and-whisker plot instead of a histogram. On this plot, the y-value at the bottom of the box is the 25th percentile and the value at the top of the box is the 75th percentile. The line in the middle of the box is the median yearly income. The box-and-whisker plot shows outliers much more clearly: in Figure 5-8 the outliers are shown as three dots above the edge of the whisker. How are outliers determined? A rule of thumb is that any point that lies more than 1.5*IQR above the 75th percentile, or more than 1.5*IQR below the 25th percentile, is an outlier. IQR is the interquartile range, measured as the 75th percentile minus the 25th percentile. On the box-and-whisker plot, IQR is the distance between the top and bottom of the box. Since you don’t plan to drop the outliers in this case, a good alternative is to transform yearly income with the log function. The result is shown in Figure 5-9. After the log transformation the three dots disappear from the box-and-whisker plot, which means the three extreme yearly incomes no longer appear as outliers. This is a great way to include valid extreme values in your model. This treatment can also increase the power of your predictive model.

Figure 5-8. A box-and-whisker plot of yearly income

Figure 5-9. A box-and-whisker plot of the log of yearly income
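The 1.5*IQR rule and the effect of the log transformation can be sketched in a few lines of plain Python. This is an illustration only, not how Azure Machine Learning draws its plots; the income values are made up and the quartile method (`inclusive`) is an assumption, since different tools interpolate percentiles slightly differently.

```python
import math
import statistics

def iqr_outliers(values):
    """Return the values lying more than 1.5 * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [v for v in values if v < lower or v > upper]

# Hypothetical yearly incomes with one extreme but valid value.
incomes = [20000, 30000, 30000, 40000, 40000,
           50000, 60000, 70000, 80000, 170000]
log_incomes = [math.log(v) for v in incomes]

print(iqr_outliers(incomes))      # [170000]
print(iqr_outliers(log_incomes))  # []
```

On the raw scale the highest income is flagged; on the log scale it falls inside the whiskers, so the customer stays in the data without distorting the distribution.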

More Data Treatment

In addition to visualization, Azure Machine Learning provides many other options for data pre-processing. The menu on the left pane has many modules organized by function. Many of these modules are useful for data pre-processing.

The Data Format Conversions item has five different modules for converting data formats. For instance, the module named Convert to CSV converts data from different types such as Dataset, DataTableDotNet, etc., to CSV format.

The Data Transformation item in the menu has sub-categories for data filtering, data manipulation, data sampling, and scaling. The menu item named Statistical Functions also has many relevant modules for data pre-processing.

Figure 5-10 shows some of these modules in action. The Join module (found under the Manipulation sub-category) enables you to join datasets. For instance, if there were a second relevant dataset for the propensity model, you could use the Join module to join it with the Bike Buyer dataset.

Figure 5-10. More options for data preparation

The module named Descriptive Statistics shows descriptive statistics of your dataset. For example, if you click the small circle at the bottom of this module, Azure Machine Learning shows descriptive statistics such as mean, median, standard deviation, skewness, kurtosis, etc. of the Bike Buyer dataset. It also shows the number of missing values in each variable. As a rule of thumb, if a variable has 40% or more missing values, it can be dropped from the analysis, unless it is business critical.

You can resolve missing values with the module named Missing Values Scrubber. Like any other module in Azure Machine Learning, when you select this module, its parameters are shown on the right pane. This module allows you to handle missing values in a number of ways. First, you can remove columns with missing values. By default, the tool keeps all variables with missing values. Second, you can replace missing values with a hard-coded value in the parameter box. By default, the tool will replace any missing values with the number 0. Alternatively, you can replace missing values with the mean, median, or mode of the given variable.
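The replacement strategies described above can be illustrated with a short plain-Python sketch. The function name `fill_missing` and its parameters are hypothetical and do not correspond to the Missing Values Scrubber's actual interface; `None` stands in for a missing value.

```python
import statistics

def fill_missing(values, strategy="constant", constant=0):
    """Replace None entries with a constant or the mean, median, or mode."""
    present = [v for v in values if v is not None]
    if strategy == "constant":
        fill = constant
    elif strategy == "mean":
        fill = statistics.mean(present)
    elif strategy == "median":
        fill = statistics.median(present)
    elif strategy == "mode":
        fill = statistics.mode(present)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]

# Hypothetical ages with two missing entries.
ages = [30, None, 40, 40, None, 70]
print(fill_missing(ages))                     # constant 0, like the default
print(fill_missing(ages, strategy="mean"))
print(fill_missing(ages, strategy="median"))
```

Replacing with 0 is rarely sensible for a variable such as age, which is why the mean, median, or mode is often the safer choice.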

The Linear Correlation module is also useful for computing the correlation of variables in your dataset. If you click on the small circle at the bottom of the Linear Correlation module, and then select Visualize, the tool displays a correlation matrix. In this matrix, you can see the pairwise correlation of all variables in the dataset. This module calculates the Pearson correlation coefficient. So other correlation types such as Spearman are not supported by this module. In addition, note that this module only calculates the correlation for continuous variables. For categorical variables, the module shows NaN.
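For reference, the Pearson correlation coefficient between two continuous variables can be computed as in the sketch below. This is the textbook formula written in plain Python, not the Linear Correlation module's own code, and the sample values are invented.

```python
import math

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical values: income rises with age, cars falls with commute distance.
age = [25, 35, 45, 55]
income = [30000, 50000, 70000, 90000]
print(pearson(age, income))
```

A value near 1 or -1 indicates a strong linear relationship, while a value near 0 indicates little or no linear relationship.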

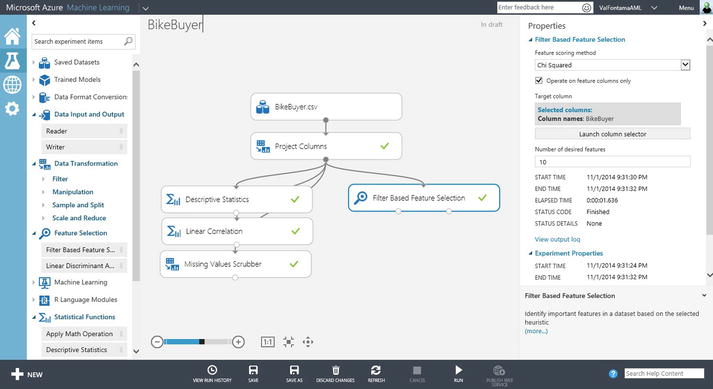

Feature Selection

Feature selection is a very important part of data pre-processing. Also known as variable selection, this is the process of finding the right variables to use in the predictive model. It is particularly critical when dealing with large datasets involving hundreds of variables. Throwing over 600 variables at a predictive model wastes computing resources and can also reduce its predictive accuracy. Through feature selection you can find the most influential variables for the prediction. Since the Bike Buyer dataset only has 13 variables, you could skip this step. However, let’s see how to do feature selection in Azure Machine Learning because it is very important for large datasets.

To do feature selection in Azure Machine Learning, drag the module named Filter Based Feature Selection from the list of modules in the left pane. You can find this module by searching for it in the search box or by opening the Feature Selection category. To use this module, you need to connect it to a dataset as the input. Figure 5-11 shows it in use as a feature selection for the Bike Buyer dataset. Before running the experiment, use the Launch column selector in the right pane to define the target variable for prediction. In this case, choose the column Bike Buyer as the target since this is what you have to predict.

Figure 5-11. Feature selection in Azure Machine Learning

You also need to choose the scoring method that will be used for feature selection. Azure Machine Learning offers the following options for scoring:

- Pearson correlation

- Mutual information

- Kendall correlation

- Spearman correlation

- Chi Squared

- Fisher score

- Count based

The correlation methods find the set of variables that are highly correlated with the output, but have low correlation among themselves. The correlation is calculated using Pearson, Kendall, or Spearman correlation coefficients, depending on the option you choose.

The Fisher score uses the Fisher criterion from statistics to rank variables. In contrast, the mutual information option is an information-theoretic approach that uses mutual information to rank variables. Mutual information measures the dependence between the probability density of each variable and that of the outcome variable.

Finally, the Chi Squared option selects the best features using a test for independence; in other words, it tests whether each variable is independent of the outcome variable. It then ranks the variables based on the Chi Squared test.
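As a rough illustration of ranking by a test for independence, the sketch below computes the Pearson chi-squared statistic between each categorical feature and the outcome, then sorts the features by that score. It is a simplified stand-in, not the Filter Based Feature Selection module's implementation, and the feature names and values are hypothetical.

```python
from collections import Counter

def chi_squared_score(feature, target):
    """Chi-squared statistic for independence of two categorical lists."""
    n = len(feature)
    observed = Counter(zip(feature, target))
    feature_totals = Counter(feature)
    target_totals = Counter(target)
    score = 0.0
    for f, f_count in feature_totals.items():
        for t, t_count in target_totals.items():
            expected = f_count * t_count / n  # count expected if independent
            score += (observed[(f, t)] - expected) ** 2 / expected
    return score

# Hypothetical data: 1 means the customer bought a bike.
bike_buyer = [1, 1, 1, 0, 0, 0, 1, 0]
features = {
    "commute": ["short", "short", "short", "long",
                "long", "long", "short", "long"],
    "gender": ["M", "F", "M", "F", "M", "F", "M", "F"],
}

ranking = sorted(features,
                 key=lambda name: chi_squared_score(features[name], bike_buyer),
                 reverse=True)
print(ranking)  # ['commute', 'gender']
```

A higher score means stronger evidence that the feature and the outcome are not independent, so the feature ranks higher.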

Note See http://en.wikipedia.org/wiki/Feature_selection#Correlation_feature_selection or http://jmlr.org/papers/volume3/guyon03a/guyon03a.pdf for more information on feature selection strategies.

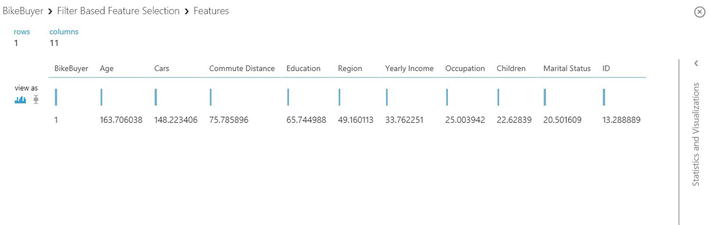

When you run the experiment, the Filter Based Feature Selection module produces two outputs. First, the filtered dataset lists the actual data for the most important variables. Second, the module shows a list of the variables by importance with the scores for each selected variable. Figure 5-12 shows the results of feature selection. In this case, you set the number of features to five and used Chi Squared for scoring. Figure 5-12 shows six columns since the result set includes the target variable (i.e., Bike Buyer) plus the top five variables: age, cars, commute distance, education, and region. The last row of the results shows the score for each selected variable. Since the variables are ranked, the scores decrease from left to right.

Figure 5-12. The results of feature selection for the Bike Buyer dataset with the top variables

Note that the selected variables will vary based on the scoring method. So it is worth experimenting with different scoring methods before choosing the final set of variables. The Chi Squared and Mutual Information scoring methods produced a similar ranking of variables for the BikeBuyer dataset.

Training the Model

Once the data pre-processing is done, you are ready to train the predictive model. The first step is to choose the right type of algorithm for the problem at hand. For a propensity model, you will need a classification algorithm since your target variable is Boolean. The goal of your model is to predict whether a prospective customer will buy your bikes or not. Hence, it is a great example of a binary classification problem. As you saw in Chapters 1 and 4, classification algorithms use supervised learning for training. The training data has known outcomes for each feature set; in other words, each row in the historical data has the Bike Buyer field that shows whether the customer bought a bike or not. The Bike Buyer field is the dependent variable (also known as the response variable) that you have to predict. The input variables are the predictors (also known as independent variables or regressors) such as age, cars, commuting distance, education, region, etc. If you use logistic regression, your model can be represented as follows:

$$\ln\left(\frac{\text{BikeBuyer}}{1 - \text{BikeBuyer}}\right) = b_0 + b_1 \cdot \text{Age} + b_2 \cdot \text{Cars} + b_3 \cdot \text{Commute\_distance} + \dots + e$$

where b0 is a constant, the intercept of the regression line; b1, b2, b3, etc. are the coefficients of the independent variables; and e is the error that represents the variability in the data that is not explained by the selected variables. In this equation, BikeBuyer is the probability that a customer will buy a bike, so its value ranges from 0 to 1 and the left-hand side is its log-odds. Age, Cars, and Commute_distance are the predictor variables.
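To see how a logistic model turns predictor values into a probability between 0 and 1, consider the minimal sketch below. It applies the standard logistic (sigmoid) function to a weighted sum of three predictors. The coefficient values are invented for illustration and are not the weights learned by the experiment in this chapter.

```python
import math

def buy_probability(age, cars, commute_distance, b):
    """Logistic model: sigmoid of the weighted sum of the predictors."""
    z = b[0] + b[1] * age + b[2] * cars + b[3] * commute_distance
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical coefficients (b0, b1, b2, b3), not fitted to any data.
coefficients = (0.5, 0.01, -0.4, -0.05)

p = buy_probability(age=40, cars=1, commute_distance=5, b=coefficients)
print(round(p, 3))
```

With these made-up weights, each additional car lowers the predicted probability, because the coefficient on cars is negative.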

During training, you present both the input and the output variables to the selected algorithm. At each learning iteration, the algorithm will try to predict the known outcome, using the current weights that have been learned so far. Initially, the prediction error is high because the weights have not been learned adequately. In subsequent iterations, the algorithm will adjust its weights to reduce the predictive error to the minimum. Note that each algorithm uses a different strategy to adjust the weights such that predictive error can be reduced. See Chapter 4 for more details on the statistical and machine learning algorithms in Azure Machine Learning.

Azure Machine Learning offers a wide range of classification algorithms from multiclass decision forest and jungle to two-class logistic regression, neural network, and support vector machines. For a customer propensity model, a two-class classification algorithm is appropriate since the response variable (Bike Buyer) has two classes. So you can use any of the two-class algorithms under the Classification sub-category. To see the full list of algorithms, expand the category named Initialize Model in the left pane. Expand the sub-category named Classification and Azure Machine Learning will list all available classification algorithms. We recommend experimenting with a few of these algorithms until you find the best one for the job. Figure 5-13 shows a simple but complete experiment for the bike buyer propensity model.

Figure 5-13. A simple experiment for customer propensity modeling

The Project Columns module simply excludes the column named ID since it is not relevant to the model. Use the Missing Values Scrubber module to handle missing values as discussed in the previous section. The Split module splits the data into two samples, one for training and the second for testing. In this experiment, you reserved 70% of the data for training and the remaining 30% for testing. This is one strategy commonly used to avoid over-fitting. Without the test sample, the model can easily memorize the data and noise. In that case, it will show very high accuracy for the training data but will perform poorly when tested with unseen data in production. Another good strategy to avoid over-fitting is cross-validation; this will be discussed later in this chapter.
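The 70/30 split can be sketched in plain Python as follows. This is only an illustration of the idea of a randomized hold-out split; the function name `split_rows` and the fixed seed are assumptions and do not describe how the Split module works internally.

```python
import random

def split_rows(rows, train_fraction=0.7, seed=42):
    """Shuffle a copy of the rows and split it into train and test parts."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded for repeatable splits
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

# Hypothetical customer IDs standing in for full rows of data.
customers = list(range(1, 11))
train, test = split_rows(customers)
print(len(train), len(test))  # 7 3
```

Shuffling before splitting matters when the file is sorted, for example by purchase date or by the target column, since an unshuffled split would then give unrepresentative samples.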

Two modules are used for training the predictive model: the Two-class Logistic Regression module implements the logistic regression algorithm, while the Train Model module actually does the training. The Train Model module trains any suitable algorithm to which it is connected. So it can be used to train any of the classification modules discussed earlier, such as Two-class Boosted Decision Tree, Two-class Decision Forest, Two-class Decision Jungle, Two-class Neural Network, or Two-class Support Vector Machine.

Model Testing and Validation

After the model is trained, the next step is to test it with a hold-out sample to check for over-fitting. In this example, your test set is the 30% sample you created earlier with the Split module. Figure 5-13 shows how you use the module named Score Model to test the trained model.

Finally, the module named Evaluate Model is used to evaluate the performance of the model. Figure 5-13 shows how to use this module to evaluate the model’s performance on the test sample. The next section also provides more details on model evaluation.

As mentioned earlier, another strategy for avoiding over-fitting is cross-validation, where you use not just two but multiple samples of the data to train and test the model. Azure Machine Learning uses 10-fold cross-validation, where the original dataset is split into 10 samples. Figure 5-14 shows a modified experiment that uses cross-validation as well as the train and test set approach. On the right track of the experiment you replace the two modules, Train Model and Score Model, with the Cross Validate Model module. You can check the results of the cross-validation by clicking the small circle on the bottom right-hand side of the Cross Validate Model module. This shows the performance of each of the 10 models.

Figure 5-14. A modified experiment with cross-validation
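The mechanics of 10-fold cross-validation can be sketched as a generator of row indices: each fold serves once as the test sample while the other nine are used for training. This is a simplified illustration with a deterministic fold assignment, which is an assumption and not a description of how the Cross Validate Model module partitions the data.

```python
def k_fold_indices(n_rows, k=10):
    """Yield (train_indices, test_indices) pairs, one per fold."""
    folds = [list(range(i, n_rows, k)) for i in range(k)]
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i
                     for j in fold]
        yield train_idx, test_idx

# With 20 hypothetical rows, each fold holds out 2 rows and trains on 18.
for train_idx, test_idx in k_fold_indices(20, k=10):
    print(len(train_idx), test_idx)
```

Because every row is used for testing exactly once, the ten performance figures together give a more stable estimate than a single train and test split.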

Model Performance

The Evaluate Model module is used to measure the performance of a trained model. This module takes two datasets as inputs. The first is a scored dataset from a tested model. The second is an optional dataset for comparison. After running the experiment you can check your model’s performance by clicking the small circle at the bottom of the module Evaluate Model. This module provides the following metrics to measure the performance of a classification model such as the propensity model:

- The Receiver Operating Characteristic (ROC) curve that plots the true positive rate against the false positive rate.

- The Lift curve (also known as the Gains curve) that plots the number of true positives versus the positive rate. This is popular in marketing.

- Precision versus recall chart.

- Confusion matrix that shows type I and II errors.

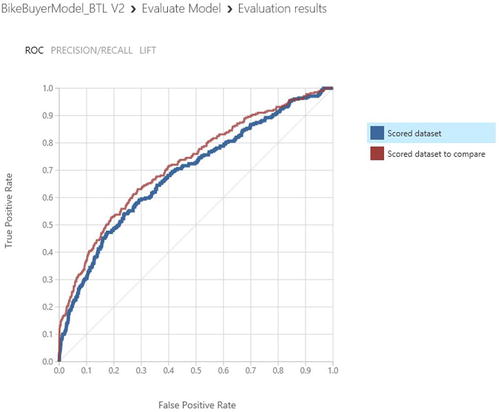

Figure 5-15 shows the ROC curve for the propensity model you built earlier. The ROC curve visually shows the performance of a predictive binary classification model. The diagonal line from (0,0) to (1,1) on the chart shows the performance of random guessing; so if you randomly selected who to target, your response would be on this diagonal line. A good predictive model should do much better than random guesses. Hence, on the ROC curve, a good model should fall above the diagonal line. The ideal model that is 100% accurate will have a vertical line from (0,0) to (0,1), followed by a horizontal line from (0,1) to (1,1).

Figure 5-15. The ROC curve for the customer propensity model

One way to measure the performance from the ROC curve is to measure the area under the curve (AUC). The higher the area under the curve, the better the model’s performance. The ideal model will have an AUC of 1.0, while a random guess will have an AUC of 0.5. The logistic regression model you built has an AUC of 0.689, which is clearly better than a random guess.
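One way to build intuition for AUC is its rank interpretation: it equals the probability that a randomly chosen buyer receives a higher score than a randomly chosen non-buyer, with ties counted as half. The sketch below computes AUC that way in plain Python. It is a simple quadratic-time illustration with invented labels and scores, not the calculation used by the Evaluate Model module.

```python
def auc(labels, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly."""
    positives = [s for l, s in zip(labels, scores) if l == 1]
    negatives = [s for l, s in zip(labels, scores) if l == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(positives) * len(negatives))

# Hypothetical scored customers: 1 = bought a bike, score = model output.
labels = [1, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.1]
print(auc(labels, scores))  # 0.75
```

A model that scores every buyer above every non-buyer reaches 1.0, while a model that assigns the same score to everyone gets 0.5.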

Figure 5-16 shows the confusion matrix for the logistic regression model you built earlier. The confusion matrix has four cells, namely

- True positives: These are cases where the customer actually bought a bike and the model correctly predicts this.

- True negatives: In the historical dataset these customers did not buy bikes, and the model correctly predicts that they would not buy.

- False positives: In this case, the model incorrectly predicts that the customer would buy a bike when in fact they did not. This is commonly referred to as Type I error. The logistic regression you built had only two false positives.

- False negatives: Here the model incorrectly predicts that the customer would not buy a bike when in real life the customer did buy one. This is also known as Type II error. The logistic regression model had 276 false negatives.

Figure 5-16. Confusion matrix and more performance metrics

In addition, Figure 5-16 also shows the accuracy, precision, and recall of the model. Here are the formulas for these metrics.

Precision is the rate of true positives in the results:

$$\text{Precision} = \frac{tp}{tp + fp}$$

Recall is the percentage of buyers that the model identifies and is measured as

$$\text{Recall} = \frac{tp}{tp + fn}$$

Finally, the accuracy measures how well the model correctly identifies buyers and non-buyers, as in

$$\text{Accuracy} = \frac{tp + tn}{tp + tn + fp + fn}$$

where tp = true positive, tn = true negative, fp = false positive, and fn = false negative.
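These three metrics follow directly from the four cells of the confusion matrix, using their standard definitions. The short sketch below shows the arithmetic in plain Python; the counts are hypothetical and are not the values from Figure 5-16.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return (precision, recall, accuracy) from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    return precision, recall, accuracy

# Hypothetical counts for 100 scored customers.
precision, recall, accuracy = classification_metrics(tp=30, tn=50, fp=10, fn=10)
print(precision, recall, accuracy)  # 0.75 0.75 0.8
```

For a mailing campaign, precision tells you what share of the mailed customers actually buy, while recall tells you what share of all potential buyers the campaign reaches.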

You can also compare the performance of two models on a single ROC chart. As an example, let’s modify the experiment in Figure 5-14 to use the Two-class Boosted Decision Tree module as the second trainer instead of the Two-class Logistic Regression module. Now your experiment has two different classifiers, the Two-class Logistic Regression on the left branch and the Two-class Boosted Decision Tree on the right branch. You connect the scored datasets from both models into the same Evaluate Model module. The updated experiment is shown in Figure 5-17 and the results are illustrated in Figure 5-18. On this chart, the curve labeled “scored dataset to compare” is the ROC curve for the Two-class Boosted Decision Tree model, while the one labeled “scored dataset” is the one for the Two-class Logistic Regression. You can see clearly that the boosted decision tree model outperforms the logistic regression model, as its curve lies further above the diagonal line for random guesses. The area under the curve (AUC) for the boosted decision tree model is 0.722, which is better than the 0.689 of the logistic regression model that you saw earlier.

Figure 5-17. Updated experiment that compares two models with the same Evaluate Model module

Figure 5-18. ROC curves comparing the performance of two predictive models, the boosted decision tree versus logistic regression

Summary

In this chapter, we provided a practical, step-by-step guide on how to build buyer propensity models with the Microsoft Azure Machine Learning service. You learned how to perform data pre-processing and analysis, which are critical steps towards understanding the data that you are using for building customer propensity models. With that understanding of the data, you used a two-class logistic regression and a two-class boosted decision tree algorithm to perform classification. You also saw how to evaluate the performance of your models and avoid over-fitting.