QA engineer walks into a bar. Orders a beer. Orders 0 beers. Orders 999999999 beers. Orders a lizard. Orders –1 beers. Orders a sfdeljknesv.

—Bill Sempf (@sempf) on Twitter

In the second part of the book, we will focus on a powerful programming technique: automated testing. Automated testing is considered an essential Best Practice by many Python programmers. Why is that? This chapter gives a gentle introduction to what automated testing is and what it is good for. In the chapters on debugging, we checked whether our program worked by simply executing it. We compared the output to our own expectations, sometimes intuitively, sometimes using a prepared table of input/output values. However, this strategy does not scale well with the size of our program: imagine we were adding more elements to the game (types of blocks, levels, puzzles, etc.). Every time we add something, we need to play through the game and check

whether the new feature works.

whether the rest of the program still works.

However good our game is, manual testing quickly becomes a daunting task. Here, automated testing comes to the rescue. Testing is a boring, repetitive task, and as such we can (and should) automate it. What is automated testing? Basically, in automated testing we write a program to test another program. In a sense, we have already done this: in the first chapters, each of our modules contained a __main__ block that we used to execute each module to see whether it is working. But automated testing has a few differences. The term automated testing usually means that

The test code gives us a clear answer: The test passes or fails.

We test small units of code at a time.

We use a specialized testing framework that makes the test code simple.
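As a minimal sketch of these three properties, consider the following example. The function `count_crates` and its test are hypothetical illustrations and not part of MazeRun. The test exercises one small unit of code and has an unambiguous outcome: each `assert` either passes silently or raises an `AssertionError`. A framework would find and call the test function for us; here we call it by hand.

```python
def count_crates(row):
    """Count the crate symbols ('o') in one row of a maze (hypothetical helper)."""
    return row.count('o')


def test_count_crates():
    # One small unit under test, one clear outcome: pass or fail.
    assert count_crates('#.o*o.#') == 2
    assert count_crates('#.....#') == 0


# A test framework would discover and call this function automatically.
test_count_crates()
```

If either assertion were false, the call in the last line would stop with an `AssertionError`; otherwise nothing is printed, which means the test passed.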

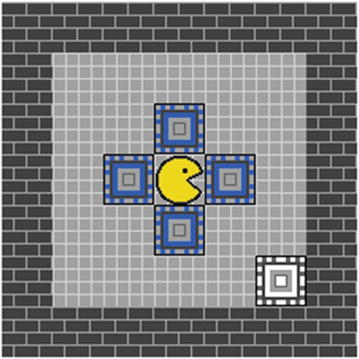

In this book, we are going to use the test framework py.test to write automated tests. The automated tests work like a scaffolding that keeps our code in place as it grows. Let us consider an example: we want to add movable crates to our mazes. Some corridors contain crates that we can push but not pull. That means that if a crate ends up in a corner, we won’t ever get it out again. We may only move one crate at a time. In our example, we have to move a crate once in order to reach the exit (see Figure 8-1).

Figure 8-1. The four blue crates around the player shall be pushed. At least one needs to be moved in order to reach the exit.

Installing py.test

We will test the crate-moving feature using the py.test framework. First, we need to install py.test:

    sudo pip install pytest

To run the tests successfully, py.test needs to be able to import modules from the maze_run package. To make that possible, we need to add a file __init__.py that imports our modules:

    from . import load_tiles
    from . import moves
    from . import draw_maze

We also need to set the PYTHONPATH variable to the directory. You can do this on a bash console with

    export PYTHONPATH=.

We will have to do this every time we start a new console session. Alternatively, we can add the variable in our .bashrc file by adding the line

    export PYTHONPATH=$PYTHONPATH:/home/krother/projects/maze_run/maze_run

Of course, you need to adjust the path to your own maze_run directory.

Writing a Test Function

Next, we create the test itself in a file named test_crate.py. For now, we place that file in the same directory as the code for MazeRun. First, we import a few objects from earlier chapters and create a maze, using ’o’ as a symbol for crates:

    from draw_maze import parse_grid
    from moves import move
    from moves import LEFT, RIGHT, UP, DOWN

    LEVEL = """#######
    #.....#
    #..o..#
    #.o*o.#
    #..o..#
    #.....#
    #######"""

Next, we implement a small Python function named test_move_crate_right that checks whether our code works correctly (if you try this at home, you may choose a different name as long as the function name starts with test_). In the function, we move the player once:

    def test_move_crate_right():
        maze = parse_grid(LEVEL)
        move(maze, RIGHT)
        assert maze[3][4] == '*'

The assert statement in the last line checks whether the player symbol (’*’) has moved one position to the right. If we run this program with the regular Python interpreter, nothing will happen, because the test_move_crate_right function isn’t called anywhere. Let’s see what py.test does with the function.

Running Tests

We execute the test by typing in the Unix terminal

    py.test

and obtain the following output:

    ============================= test session starts ==============================
    platform linux -- Python 3.4.0, pytest-2.9.2, py-1.4.31, pluggy-0.3.1
    rootdir: /home/krother/projects/maze_run/maze_run, inifile:
    collected 1 items

    test_crate.py .

    =========================== 1 passed in 0.06 seconds ===========================

What happened? py.test reports that it collected one test. It automatically found our test function in our test module (both identified by the prefix test_). Then it executed the tests in test_crate.py. The test passed, meaning the condition in the assert statement evaluated to True. Our first test was successful!
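The discovery step can be pictured with a toy runner. This is only a sketch of the naming convention, not how py.test is actually implemented; `run_tests` and the three example functions are hypothetical. It calls every function whose name starts with `test_`, treats an `AssertionError` as a failure, and ignores everything else.

```python
def test_addition():
    assert 1 + 1 == 2


def test_upper():
    assert 'crate'.upper() == 'CRATE'


def helper():
    """Not collected: the name does not start with 'test_'."""
    raise RuntimeError('helpers are never called by the runner')


def run_tests(namespace):
    """Call every function whose name starts with 'test_' and count outcomes."""
    passed, failed = 0, 0
    for name, obj in sorted(namespace.items()):
        if name.startswith('test_') and callable(obj):
            try:
                obj()
                passed += 1
            except AssertionError:
                failed += 1
    return passed, failed


# Hand-built namespace; a real framework inspects the test modules instead.
print(run_tests({'test_addition': test_addition,
                 'test_upper': test_upper,
                 'helper': helper}))   # prints (2, 0)
```

The helper function is never executed, which is why a test function that lacks the `test_` prefix silently drops out of the test run.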

Writing a Failing Test

Of course, checking whether the player moved is not sufficient. We need to check whether the crate moved as well. To check the new position of the crate, we add a second assertion to our test function:

    assert maze[3][5] == 'o'

When we run the py.test command again, it finds something to complain about:

    =================================== FAILURES ===================================
    _____________________________________test_crate_____________________________________

        def test_move_crate_right():
            maze = parse_grid(LEVEL)
            move(maze, RIGHT)
            assert maze[3][4] == '*'
    >       assert maze[3][5] == 'o'
    E       assert '.' == 'o'
    E         - .
    E         + o

    test_crate.py:19: AssertionError
    =========================== 1 failed in 0.14 seconds ===========================

The test failed. In the output, we see that the assert in line 19 is the one that failed. If the condition fails, assert raises an AssertionError, an Exception that is, honestly, not very helpful on its own. However, py.test hijacks the Exception and tells us explicitly what the values on both sides of the comparison were. Instead of a crate (’o’) we see a dot (’.’). Apparently, the crate has not moved (instead, the player eats the crate). Of course the crate did not move: we haven’t implemented the moving crate yet. If you would like to see it, you can start the game with the test level and chomp a few crates yourself.
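To see the baseline that py.test improves on, the following sketch runs two checks with the plain Python interpreter. The function names are hypothetical. A bare `assert` raises an `AssertionError` without any message, while the optional second operand of `assert` attaches one by hand. With py.test, this manual message is usually unnecessary because the framework reports both sides of the comparison on its own.

```python
def check_crate(tile):
    # Plain assert: on failure, the AssertionError carries no message.
    assert tile == 'o'


def check_crate_verbose(tile):
    # Optional second operand of assert: a message attached to the error.
    assert tile == 'o', 'expected crate (o), found %r' % tile


for check in (check_crate, check_crate_verbose):
    try:
        check('.')
    except AssertionError as err:
        # Show which check failed and what the exception tells us.
        print('%s -> %r' % (check.__name__, str(err)))
```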

Making the Test Pass

To move the crate and make the test pass, we need to edit the move() function in moves.py. We need to add an extra if condition for the ’o’ symbol. Let’s move the crate one tile to the right:

    def move(level, direction):
        """Handles moves on the level"""
        oldx, oldy = get_player_pos(level)
        newx = oldx + direction[0]
        newy = oldy + direction[1]
        if level[newy][newx] == 'x':
            sys.exit(0)
        if level[newy][newx] == 'o':
            level[newy][newx + 1] = 'o'
        if level[newy][newx] != '#':
            level[oldy][oldx] = ' '
            level[newy][newx] = '*'

When we rerun the test, it passes again.

    =========================== 1 passed in 0.06 seconds ===========================

Passing Versus Failing Tests

The last example should make us concerned, or at least make us think. The preceding code only takes care of moves to the right. The code is, at best, incomplete. We know that the code does not work yet, and still the test passes. We must therefore take note of a fundamental fact about automated testing: testing does not prove that code is correct.

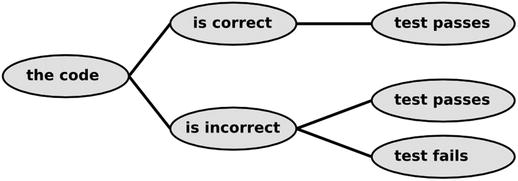

What is testing good for, then? Let us consider the alternatives in Figure 8-2: if the code is correct, the test passes. If the code is incorrect (buggy, wrong, incomplete, badly designed, etc.), both test outcomes are possible. Thus, if we observe that our test passes, this gives us little information. We cannot decide whether the code is correct or not, because the tests might be incomplete. It works better the other way around: whenever we observe a test that fails, we know for sure something is wrong. We still don’t know whether the code is wrong, whether the tests are incomplete, or both. But we have hard evidence that the situation requires further examination. In brief: automated testing proves the presence of defects, not their absence. Knowing that passing tests don’t mean much makes automated testing a bit tricky. A Best Practice that helps us to write meaningful tests: you may have noticed in the preceding section that we ran the test even before writing the code for moving crates. Writing a failing test first helps us prove that the code we write makes a difference. If the test switches from failed to passed we know that our code is more correct than it was before. We will revisit this idea in Chapter 11.

Figure 8-2. Possible outcomes when testing code. Testing does not prove that code is correct (with incorrect code, both outcomes are possible), but failing tests prove that something is wrong.

Writing Separate Test Functions

What can we do to push our code more firmly toward the correct state? The answer is astonishingly simple: Write more tests. You may notice that at the moment we have only implemented moving crates to the right. Technically, we can implement the other directions in the same way as the first. We implement each direction as a separate test function. All four function names start with test_, so that py.test discovers them automatically. To avoid code duplication, we place the call to move and the assertions in a helper function move_crate:

    def move_crate(direction, plr_pos, crate_pos):
        """Helper function for testing crate moves"""
        maze = parse_grid(LEVEL)
        move(maze, direction)
        assert maze[plr_pos[0]][plr_pos[1]] == '*'
        assert maze[crate_pos[0]][crate_pos[1]] == 'o'

    def test_move_crate_left():
        move_crate(LEFT, (3, 2), (3, 1))

    def test_move_crate_right():
        move_crate(RIGHT, (3, 4), (3, 5))

    def test_move_crate_up():
        move_crate(UP, (2, 3), (1, 3))

    def test_move_crate_down():
        move_crate(DOWN, (4, 3), (5, 3))

Using helper functions to keep test code short and simple is a Best Practice, because code duplication is to be avoided generally, and test code is no exception to that rule. Also, automated tests ought to be simpler than the code being tested (otherwise we might end up debugging two complex programs instead of one). But why do we define four one-line functions instead of grouping all four calls in a single function? Calling py.test on this code again gives us an answer:

    3 failed, 1 passed in 0.10 seconds

Only test_move_crate_right passes, because when implementing the move() function, we assumed that crates will always move to the right, which of course is nonsense. The output given by py.test not only tells us that three out of four tests fail, it also tells us precisely which of them. This gives us much more information than a single bigger test function would. Clearly, it is a Best Practice to write many small tests. The test results give us the information to rewrite the if condition handling crates in the move() function. The updated function is

    def move(level, direction):
        """Handles moves on the level"""
        oldx, oldy = get_player_pos(level)
        newx = oldx + direction[0]
        newy = oldy + direction[1]
        if level[newy][newx] == 'x':
            sys.exit(0)
        if level[newy][newx] == 'o':
            cratex = newx + direction[0]
            cratey = newy + direction[1]
            level[cratey][cratex] = 'o'
        if level[newy][newx] != '#':
            level[oldy][oldx] = ' '
            level[newy][newx] = '*'

And now all four tests pass:

    ============================= test session starts ==============================
    platform linux -- Python 3.4.0, pytest-2.9.2, py-1.4.31, pluggy-0.3.1
    rootdir: /home/krother/projects/maze_run/part1_debugging, inifile:
    collected 4 items

    test_crate.py ....

    =========================== 4 passed in 0.07 seconds ===========================

Note

Many programmers argue that one test function should contain only one assertion. This is generally a good practice, but at this point it would unnecessarily inflate our code. We will see a more elegant way to structure our tests and simplify our code in Chapter 9.

Assertions Provide Helpful Output

The assert statement will evaluate any valid boolean expression in Python. This allows us to test many different situations, a few of which are applied in the following to maze, a two-dimensional list:

    def test_assert_examples():
        maze = parse_grid(LEVEL)
        assert len(maze) <= 7                            # comparison operator
        assert 1 < len(maze) < 10                        # range check
        assert maze[0][0] == '#' and maze[1][1] == '.'   # logical operators
        assert maze[0].count('#') == 7                   # using methods

As mentioned previously, py.test changes the output of assert from an AssertionError to a more meaningful output. A practical side of this is that we can compare lists, dictionaries, and other composite types in assert statements. As an example, we write another test using the minimalistic maze ’*o#’ consisting of just three tiles:

    def test_push_crate_to_wall():
        maze = parse_grid("*o#")
        move(maze, RIGHT)
        assert maze[0] == ['*', 'o', '#']

The test fails when running py.test! Apparently, we have not yet taken care of walls blocking the movement of crates. py.test reports the location of the mismatch between the two lists (actually, all three positions mismatch, but the first one is sufficient for the test to fail):

    E       assert [' ', '*', 'o'] == ['*', 'o', '#']
    E         At index 0 diff: ' ' != '*'

Similarly, we can write a test to make sure a crate blocks movement as well. This time, we compare two nested lists in the assert statement:

    def test_push_crate_to_crate():
        maze = parse_grid("*oo")
        move(maze, RIGHT)
        assert maze == [['*', 'o', 'o']]

For a two-dimensional list, we get a precise report on the mismatching items as well:

    E       assert [[' ', '*', 'o']] == [['*', 'o', 'o']]
    E         At index 0 diff: [' ', '*', 'o'] != ['*', 'o', 'o']

This kind of precise output is a lifesaver when testing large lists and similar composite types. Comparing the expected versus the factual output can be done in a single assert, and we won’t have to compare the lists manually in order to find the mismatch. The assert statement used by py.test is quite versatile. py.test often manages to create a meaningful output from the expression in case the test fails (with the -v option it gets even a bit better). Because we may use any kind of boolean expression in assert, we can construct most of the tests needed in practice.

Testing for Exceptions

There is one situation in which the assert statement does not work: if we want to test whether an Exception was raised. Let us consider an example: what should happen if we call the move() function with the direction parameter set to None? (We encountered this situation in Chapter 7.) Assume we want a TypeError to be created and want to test whether move raises the Exception. Testing that no Exception is generated would be easy:

    move(maze, None)
    assert True

But it does not work the other way around. There is no syntactically correct way to write a single assert statement that calls move with None and passes if an Exception is raised inside the function. The problem is that if we want the TypeError to occur if everything works correctly, the assert will always fail because of that Exception. We could help ourselves with a clumsy workaround:

    try:
        move(maze, None)
    except TypeError:
        assert True
    else:
        assert False

Now the test fails if (and only if) the Exception does not occur. For this kind of situation, py.test provides a shortcut that is a recommended Best Practice. The context manager pytest.raises allows us to specifically test for an Exception:

    import pytest

    def test_move_to_none():
        """direction=None generates an Exception"""
        maze = parse_grid(LEVEL)
        with pytest.raises(TypeError):
            move(maze, None)

Why would we want to test against Exceptions? There are a couple of good reasons for doing so:

First, we do not want our code to show any random behavior. If the code fails, we want it to fail in a way we have specified before. The pytest.raises function is a great way to do that.

Second, it helps us to contain bugs—if we know what a wrong input behaves like, it will be less likely that an error propagates through our code.

Third, the source of an error becomes easier to find.

Fourth, it helps us to spot design flaws, things we simply did not think of before.

If the test failed, we would see the message ’Failed: DID NOT RAISE’. With our current code, however, it passes nicely.
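The idea behind such a context manager can be sketched in a few lines of plain Python. The class below is a simplified, hypothetical stand-in and not the actual implementation of pytest.raises; `first_step` is likewise an invented replacement for move() that fails in the same way when the direction is None. The `__exit__` method swallows the expected Exception, lets any other Exception propagate, and raises an error of its own if nothing was raised at all.

```python
class raises:
    """Toy context manager that mimics the idea behind pytest.raises."""

    def __init__(self, expected):
        self.expected = expected

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        if exc_type is None:
            # The block finished without any exception: the check must fail.
            raise AssertionError('DID NOT RAISE %s' % self.expected.__name__)
        # Returning True swallows the expected exception;
        # returning False lets any other exception propagate.
        return issubclass(exc_type, self.expected)


def first_step(direction):
    """Hypothetical stand-in for move(): indexing None raises TypeError."""
    return direction[0]


with raises(TypeError):
    first_step(None)          # raises TypeError, so the check passes

try:
    with raises(TypeError):
        first_step((1, 0))    # no exception, so the check fails
except AssertionError as err:
    print(err)                # prints: DID NOT RAISE TypeError
```

In real test code, the context manager shipped with py.test should be preferred; the sketch only shows why a `with` block can express what a single assert statement cannot.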

Border Cases

How well do the tests we have written so far perform? Out of eight test functions, six pass and two fail. With py.test -v we obtain

    ============================= test session starts ==============================
    platform linux -- Python 3.4.0, pytest-2.9.2, py-1.4.31, pluggy-0.3.1
    rootdir: /home/krother/projects/maze_run/part1_debugging, inifile:
    collected 8 items

    test_crate.py::test_move_crate_left PASSED
    test_crate.py::test_move_crate_right PASSED
    test_crate.py::test_move_crate_up PASSED
    test_crate.py::test_move_crate_down PASSED
    test_crate.py::test_assert_examples PASSED
    test_crate.py::test_push_crate_to_wall FAILED
    test_crate.py::test_push_crate_to_crate FAILED
    test_crate.py::test_move_to_none PASSED

    ==================================== FAILURES ==================================
    ________________________________test_push_crate_to_wall__________________________

        def test_push_crate_to_wall():
            maze = parse_grid("*o#")
            move(maze, RIGHT)
    >       assert maze[0] == ['*', 'o', '#']
    E       assert [' ', '*', 'o'] == ['*', 'o', '#']
    E         At index 0 diff: ' ' != '*'
    E         Full diff:
    E         - [' ', '*', 'o']
    E         + ['*', 'o', '#']

    test_crate.py:51: AssertionError
    _______________________________test_push_crate_to_crate_____________________________

        def test_push_crate_to_crate():
            maze = parse_grid("*oo")
            move(maze, RIGHT)
    >       assert maze == [['*', 'o', 'o']]
    E       assert [[' ', '*', 'o']] == [['*', 'o', 'o']]
    E         At index 0 diff: [' ', '*', 'o'] != ['*', 'o', 'o']
    E         Full diff:
    E         - [[' ', '*', 'o']]
    E         + [['*', 'o', 'o']]

    test_crate.py:57: AssertionError
    =============================== 2 failed, 6 passed in 0.11 seconds =============

If we examine the mismatching lists in the two failing tests, we notice that the crates can be moved too easily. A crate can be pushed through everything, including walls and other crates. Pushing it further out of the playing field would crash the program. We have neglected several possible situations that can arise. These are called border cases or edge cases. Generally, border cases aim at covering as many of the possible inputs with as little test code as possible. Good border cases cover a diverse range of inputs. For instance, typical border cases for float numbers include typical values and extreme values like the biggest and smallest value, empty and erroneous data, or wrong data types. In our case, the border cases we have not covered explicitly include

Pushing a crate into a wall.

Pushing a crate into another crate.

Pushing a crate into the exit.

To satisfy our border cases, we need to update the move() function once more:

    def move(level, direction):
        """Handles moves on the level"""
        oldx, oldy = get_player_pos(level)
        newx = oldx + direction[0]
        newy = oldy + direction[1]
        if level[newy][newx] == 'x':
            sys.exit(0)
        if level[newy][newx] == 'o':
            cratex = newx + direction[0]
            cratey = newy + direction[1]
            if level[cratey][cratex] in '. ':
                level[cratey][cratex] = 'o'
                level[newy][newx] = ' '
            elif level[cratey][cratex] == 'x':
                raise NotImplementedError("Crate pushed to exit")
        if level[newy][newx] in '. ':
            level[oldy][oldx] = ' '
            level[newy][newx] = '*'

The last addition makes all our tests pass:

    =========================== 7 passed in 0.08 seconds ===========================

We might test the same set of border cases for the other directions as well. But since the code we are testing is not very long or complex, we decide that our seven tests cover the possible situations well enough for now (also see Figure 8-3).

Figure 8-3. “I admit the chance of finding a seven-tentacled monster below the house was low, but I found it worth testing anyway.”
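For readers who want to try the border cases without the full MazeRun package, here is a self-contained sketch. The move() function repeats the version with border-case handling from this section; parse_grid, get_player_pos, and the direction tuples are assumed stand-ins written for this example and may differ from the modules of earlier chapters.

```python
import sys

# Assumed (dx, dy) offsets; the real constants live in the moves module.
RIGHT, LEFT, UP, DOWN = (1, 0), (-1, 0), (0, -1), (0, 1)


def parse_grid(data):
    """Assumed stand-in: turn a multi-line string into a list of lists."""
    return [list(row) for row in data.splitlines() if row]


def get_player_pos(level):
    """Assumed stand-in: return the (x, y) position of the player '*'."""
    for y, row in enumerate(level):
        if '*' in row:
            return row.index('*'), y
    raise ValueError('no player in level')


def move(level, direction):
    """Handles moves on the level"""
    oldx, oldy = get_player_pos(level)
    newx = oldx + direction[0]
    newy = oldy + direction[1]
    if level[newy][newx] == 'x':
        sys.exit(0)
    if level[newy][newx] == 'o':
        cratex = newx + direction[0]
        cratey = newy + direction[1]
        if level[cratey][cratex] in '. ':
            # Free tile behind the crate: push the crate one step.
            level[cratey][cratex] = 'o'
            level[newy][newx] = ' '
        elif level[cratey][cratex] == 'x':
            raise NotImplementedError("Crate pushed to exit")
    if level[newy][newx] in '. ':
        level[oldy][oldx] = ' '
        level[newy][newx] = '*'


# Free floor, wall, and second crate behind the pushed crate.
for row in ('*o.', '*o#', '*oo'):
    maze = parse_grid(row)
    move(maze, RIGHT)
    print(repr(row), '->', maze[0])
```

Only the first maze changes; in the other two, both the crate and the player stay where they are.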

Complex Border Cases

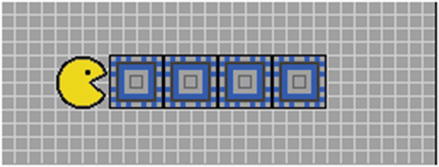

In the previous example, the border cases were relatively easy to identify. We simply considered what would happen if a certain tile got in the way of a crate. Often, border cases are not that simple to find. The reason is that the input contains many dimensions. To explore this idea, let us conduct a thought experiment: imagine we added power-ups that make the player superstrong, so that it can move multiple crates (see Figure 8-4). Let’s say each player has a strength, represented as a floating-point number, and a player can move up to the square root of strength crates at a time.

Figure 8-4. When we add the strength of the player as an extra dimension, the border cases become a lot more complex.

This small addition raises a bunch of questions about how exactly the crates are moved:

What numerical precision is required for the strength value?

What is the maximum number of crates that are moved in practice?

What is the minimum number? Is it possible that the player is too weak to push anything?

How is the rounding of the square root to be done (up, down, mathematical)?

Can the strength ever be negative (here be imaginary numbers)?

Can the strength be infinite?

If we wanted to cover all border cases for the preceding example, we would have to consider at least one representative strength value for each of these six situations. What is more, imagine we wanted to make sure the power-up feature works in all possible situations. Then we would have to combine each representative strength value with each of the game situations we tested previously. The result would be dozens of additional tests. With a few more dimensions like the movement directions, power-up strength, tiles that could get in the way, and so on, it becomes infeasible to exhaustively cover all situations by testing. Fortunately, a crate-pushing game is not that complex in practice, but many real-world applications are. The situations with nasty border cases range from mathematical calculations and reading input files to all kinds of pattern matching. In more than one of my programming projects, maintaining a clean, representative collection of border cases for testing (and forcing the program to understand them) was a major portion of the work invested. A general strategy is to pick border cases that represent our inputs well enough. If we imagine the possible inputs of our program as a multidimensional space, by choosing border cases we try to define the outline of that space with as few tests as possible and as many as necessary.
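How quickly the combinations grow can be estimated with a short sketch. All names and values here are assumptions made for the thought experiment: `max_crates` rounds the square root down and rejects negative, infinite, and not-a-number strengths, which is only one of several possible answers to the questions above.

```python
import itertools
import math


def max_crates(strength):
    """Hypothetical rule: floor of the square root, rejecting invalid input."""
    if math.isnan(strength) or math.isinf(strength) or strength < 0:
        raise ValueError('invalid strength: %r' % strength)
    return math.floor(math.sqrt(strength))


# A few representative strength values (assumed for illustration).
STRENGTHS = [0.0, 0.99, 1.0, 3.9999999, 4.0, 1e6]
INVALID = [-1.0, float('inf'), float('nan')]
DIRECTIONS = ['left', 'right', 'up', 'down']
OBSTACLES = ['floor', 'wall', 'crate', 'exit']

# Values just below and exactly at a perfect square end up in different bins.
assert [max_crates(s) for s in STRENGTHS] == [0, 0, 1, 1, 2, 1000]

# Combining the dimensions multiplies the number of situations to test.
cases = list(itertools.product(STRENGTHS + INVALID, DIRECTIONS, OBSTACLES))
print(len(cases))   # prints 144 (9 strengths * 4 directions * 4 obstacles)
```

Even with this small selection, an exhaustive combination already exceeds what one would want to write by hand, which is why a representative subset is usually chosen instead.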

Benefits of Automated Testing

With the five tests we have written so far, we have an automatic procedure in place that allows us (or other developers) to check whether the moving crates work. There are several reasons the tests could fail during future development of the program:

We add more features to the game and accidentally break the crate feature.

We fix a defect and accidentally break the crate feature.

We rename the move function, so that our tests don’t find it any more.

We change the characters encoding wall and floor tiles, so that move_crate, our helper function, checks nonsense.

We try to run the test on a computer where Pygame is not installed.

Of course, our simple tests are unable to figure out automatically what exactly went wrong. But the remarkable thing about automated testing is that in all these situations, py.test will produce failing tests, which tells us: “Something went wrong, please investigate the problem further.” Isn’t that a nice thing to have?

Without doubt, writing automated tests creates extra work up front. How does that work pay off? There are three more benefits worth mentioning. First, automated testing saves time. We started this chapter with the premise that manual testing does not scale. Imagine you had to go through all border cases manually each time you change something in your code. You would either spend a lot of time testing manually or skip a few tests and spend more time working on your code. With reproducible automated tests, the invested effort pays off after running the tests just a few times. Second, automated testing promotes well-structured code. It is worth pointing out that we could test the move() function entirely without initializing graphics or the event loop. The code is written in a way that is easy to test independently. If we were to test a single big function, high-quality tests would be complicated if not impossible to write. Third, automated testing is rewarding. When you execute your tests and see failing tests turn to passing, you get a feeling of accomplishment. Often, this kind of positive feedback keeps you going, especially when biting through a difficult piece of code. At least this is what repeatedly happened to me and many other Python programmers. All three benefits are essential to the Best Practice of automated testing. To understand them in depth, we will need to revisit them when we have seen more automated testing techniques in the following chapters.

Other Test Frameworks in Python

There are a few alternatives to py.test worth mentioning:

unittest

unittest is the default testing framework installed with Python. Because unittest doesn’t require any installation, it is good to know how it works. Practically, unittest provides a small subset of the possibilities of py.test, mostly grouping tests into classes.

nose

The test framework nose extends unittest by automatic test discovery and a couple of other convenient features. nose and py.test are distant cousins, and py.test is the more powerful of the two. We limit the description to a link to nose: http://nose.readthedocs.io .

doctest

The doctest framework works in a fundamentally different way. doctests are Python shell sessions written into Python documentation strings. The great thing about doctests is that they make it easy to include testable code examples in your documentation. How to include doctests in documentation is described in Chapter 17.
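As a brief sketch of what a doctest looks like, the following hypothetical helper `crate_target` carries two shell-session examples in its docstring. The code below the function parses and runs them explicitly with classes from the standard doctest module; for a whole module, running `python -m doctest` on the file or calling doctest.testmod() is the more common route.

```python
import doctest


def crate_target(pos, direction):
    """Return the tile a crate at `pos` is pushed to (hypothetical helper).

    >>> crate_target((4, 3), (1, 0))
    (5, 3)
    >>> crate_target((3, 2), (0, -1))
    (3, 1)
    """
    return (pos[0] + direction[0], pos[1] + direction[1])


# Parse the docstring into a test object and run its examples.
parser = doctest.DocTestParser()
test = parser.get_doctest(crate_target.__doc__,
                          {'crate_target': crate_target},
                          'crate_target', None, 0)
results = doctest.DocTestRunner(verbose=False).run(test)
print(results)   # reports the number of failed and attempted examples
```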

Writing a __main__ block

In some modules, you find test code in the __main__ block. If a program does not need the __main__ block for regular execution, we might as well write some tests for the functions in the module. For instance, we could test for the moving crate:

    if __name__ == '__main__':
        maze = parse_grid(LEVEL)
        move(maze, RIGHT)
        if maze[3][5] == 'o':
            print('OK')

This is an acceptable strategy in very small programs to test modules that are not executed directly. On one hand, we avoid a heavier infrastructure for some time. On the other hand, this kind of test quickly becomes cumbersome as the project grows. To name one thing, you cannot test multiple modules this way. As soon as the code grows beyond 100 lines, it is worth switching to a “real” test framework.

Best Practices

Automated tests consist of code that checks whether a program works.

py.test is a framework for writing automated tests in Python.

To import modules under test, they need to be imported in the file __init__.py.

Assertions decide whether a test passes or fails.

py.test provides detailed information why an assertion fails.

The function pytest.raises allows testing for failures explicitly.

Writing many small test functions provides more information than a few big tests.

Failing tests are the ones that provide the most useful information. Testing proves the presence, not the absence, of defects.

Writing a failing test first and then making it pass is a more reliable approach.

Tests should cover diverse border cases.

The work to collect and maintain border cases can be considerable.

Automated testing saves time, promotes maintainable code, and is rewarding.