3

Transfer

Using the experience gained on solving an earlier problem to solve a new one of the same type is called analogical transfer. The seeming ease with which human beings are able to generalise, classify and indeed to generate stereotypes suggests that transfer of learning is a simple and straightforward affair. As Jill Larkin (1989) puts it:

Everyone believes in transfer. We believe that through experience in learning we get better at learning. The second language you learn (human or computer) is supposed to be easier than the first … All these common beliefs reflect the sensible idea that, when one has acquired knowledge in one setting, it should save time and perhaps increase effectiveness for future learning in related settings.

(p. 283)

However, there are some surprising limits to our ability to transfer what we learn, and much research has gone into the study of the conditions under which transfer occurs or fails to occur.

At the beginning of the 20th century, and for a long time before that, it was assumed that what children learned from one subject would help them learn a host of others. The prime example of a subject that was supposed to transfer to all sorts of other disciplines was Latin. Its “logical” structure was thought to improve the learning of mathematics and the sciences as well as languages. In the 1980s and ’90s, the computer programming language LOGO replaced Latin as the domain that was believed by some to produce transfer of learning to other domains (Klahr & Carver, 1988; Papert, 1980, 1993). However, results appear somewhat mixed. Some researchers found that training on LOGO enhanced thinking skills in other areas (e.g., Au & Leung, 1991) – an example of general transfer – whereas others found no such effect (e.g., Mitterer & Rose-Krasnor, 1986) or found that training in LOGO transferred to another programming language such as BASIC (a degree of specific transfer) but not to a different area such as statistics (Smith, 1986).

Another view at the beginning of the 20th century, which goes back to Thorndike’s theory of identical elements (Thorndike, 1913; Thorndike & Woodworth, 1901), is that we cannot expect transfer when there are no similar surface elements, even if two problems share the same underlying features. Two tasks must share the same perceptually obvious features, such as colour or shape, before one can be used to cue the solution to the other, or they must share the same specific stimulus–response associations. A consequence of this view is that transfer is relatively rare. Latin cannot help one do mathematics because they don’t share identical surface elements. Learning a computer language such as Lisp is not going to help anyone learn a new language such as C# and so on.

Obviously, then, there is a degree of tension between whether transfer is usefully considered as general or specific. General transfer involves the learning of generalisable skills or habits. If you learn to write essays that get good marks in, say, politics or economics, then the chances are that you will be able to use your knowledge of how to structure an argument when you come to write essays in a totally different field such as history or psychology. You don’t have to learn to write essays from scratch when you shift to a different field. Furthermore, there is a phenomenon known as learning to learn: when someone performs a novel task once, such as learning a list of words or a list of paired associates (pairs of words in which the first word of the pair is often later used to cue the second), then performance on this type of task is greatly improved the second time it is performed, even though the words used are entirely different (Postman & Schwartz, 1964; Thune, 1951).

Specific transfer is related to Thorndike’s idea of identical elements. Some mathematics textbooks provide extensive practice at solving problems that are very similar. Once the students have learned how to solve the example they should, in principle, have little difficulty in solving the remaining problems. However, it should be noted that transfer really refers to transferring what one has learned on one task to a task that is different in some way from the earlier one. If two problems are very similar or identical, then what one is likely to find is improvement rather than transfer.

Have a look at the two problems in Activity 3.1. Feel free to try to solve them, but more importantly try to ascertain what makes one appear more difficult than the other.

Activity 3.1

Monster problems

A Move version

Three five-handed extraterrestrial monsters were holding three crystal globes. Because of the quantum-mechanical peculiarities of their neighbourhood, both monsters and globes come in exactly three sizes with no others permitted: small, medium and large. The medium-sized monster was holding the small globe; the small monster was holding the large globe; and the large monster was holding the medium-sized globe. Since this situation offended their keenly developed sense of symmetry, they proceeded to transfer globes from one monster to another so that each monster would have a globe proportionate to his own size. Monster etiquette complicated the solution of the problem since it requires:

1. That only one globe may be transferred at a time;

2. That if a monster is holding two globes, only the larger of the two may be transferred;

3. That a globe may not be transferred to a monster who is holding a larger globe.

By what sequence of transfers could the monsters have solved the problem?

A Change version

Three five-handed extraterrestrial monsters were holding three crystal globes. Because of the quantum-mechanical peculiarities of their neighbourhood, both monsters and globes come in exactly three sizes with no others permitted: small, medium and large. The medium-sized monster was holding the small globe; the small monster was holding the large globe; and the large monster was holding the medium-sized globe. Since this situation offended their keenly developed sense of symmetry, they proceeded to shrink and expand the globes so that each monster would have a globe proportionate to his own size. Monster etiquette complicated the solution of the problem since it requires:

1. That only one globe may be changed at a time;

2. That if two globes are of the same size, only the globe held by the larger monster can be changed;

3. That a globe may not be changed by a monster who is holding a larger globe.

By what sequence of changes could the monsters have solved the problem?

(from Hayes & Simon, 1977, p. 24)

We are constantly using knowledge we have gained in the past in new situations. In case you hadn’t noticed, the two problems in Activity 3.1 have the same underlying solution structure and the same one as the Tower of Hanoi problem. Transfer means applying what you have learned in one context to another context that is similar but which is also different enough so that it necessarily involves adapting what you have learned, or involves learning something new through generating an inference. Sometimes the contexts are very similar and the solution structures are similar, in which case you have an example of near transfer. On other occasions the contexts or domains may be entirely different which would involve far transfer. When a solver can successfully use a solution procedure used in the past to solve a target problem, this is known as positive transfer. Positive transfer means that learning to drive a Citroën reduces the length of time it will take you to learn to drive a Ford. This is because it is easy to transfer what has been learned from one situation (learning to drive a Citroën) to the new situation (learning to drive a Ford) since the two tasks are similar. At the same time there are likely to be differences between the two cars that necessitate learning something new, such as the layout of the dashboard, the position of levers, light switches, the feel of the pedals and so forth. However, it is also possible that a procedure learned in the past can impede one’s learning of a new procedure. This is known as negative transfer. In this case what you have learned prevents you from solving a new problem or at least prevents you from seeing an optimal solution. Imagine, for example, that you have learned to drive a car where the indicator lever is on the right of the steering column and the windscreen washer is on the left. After a few years you buy a new car where the indicator lever is on the left and the windscreen washer is on the right. 
In this case learning to use the levers in your old car might make it harder to learn to use the levers in your new one, and when you try to flick your headlights at another road user you find instead that water has just sprayed all over your windscreen. You try to apply what you have previously learned (a habit, for example) in the wrong circumstances.

It should be borne in mind that negative transfer is usually very hard to find. It is often confused with partial positive transfer. In the early days of word processing, different applications required different key combinations for cutting and pasting and the like. Suppose you learn to do word processing with one of these old applications that uses certain key combinations to do certain tasks, such as cutting and pasting, until you are quite proficient at it. You are then presented with a totally new word processing package that uses different sets of key combinations. Just as one might get confused in a car whose indicator and windscreen washer controls are reversed, so you might find it irritatingly hard to learn the new key combinations. You might readily feel that the old knowledge is interfering with the new. However, if you were to compare the time it took you to learn the original word processor for the first time with the time taken to learn the new one, you would probably find that you learned the new one faster than the old one despite the initial confusion (see e.g., Singley & Anderson, 1985, 1989; VanLehn, 1989). Whether this is true of the windscreen washer/indicator scenario is a moot point.

Negative transfer – mental set

As was mentioned in Chapter 1, the Gestalt psychologists were interested in how one used previous experience in dealing with a new situation. Successfully using what you have learned was often reproductive thinking – using learned procedures to solve new problems of the same type. One of the potential side effects of such thinking is a form of mental set where previous experience prevents you from seeing a simpler, and possibly novel, solution, so mental set is an example of negative transfer. Information Box 3.1 provides an example of the kind of mental set that can occur.

Information Box 3.1 The Water Jars problem (Luchins & Luchins, 1959)

Rationale

The aim of much of Luchins’s work was to examine the effects of learning a solution procedure on subsequent problem solving, particularly when the subsequent problems can be solved using a far simpler procedure. In such cases learning actually impedes performance on subsequent tasks.

Method

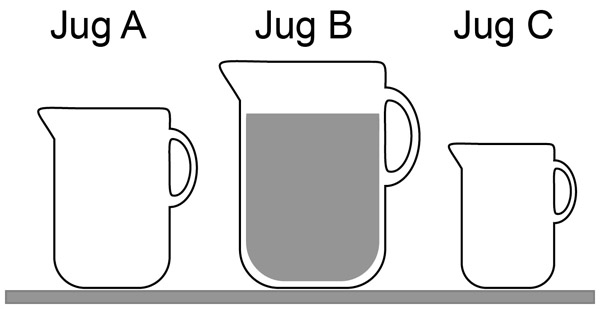

In these experiments, subjects were given a series of problems based on water jars that could hold different amounts of water (see Figure 3.1). Using those jars as measuring jugs, the participants had to end up with a set amount of water. For example, if jar A can hold 18 litres, jar B can hold 43 litres and jar C can hold 10 litres, how can you end up with 5 litres?

The answer is to fill jar B with 43 litres, from it fill jar A and pour out the water from A, then fill C from B twice, emptying C each time. You end up with 5 litres in jar B.

After a series of such problems, subjects begin to realise that pouring water from one jar to another always follows the same pattern. In fact, the pattern or rule for pouring is B-A-2C: from the contents of jar B take out enough to fill A, and enough to fill C twice; that is, 43 − 18 − (2 × 10) = 5. Examples 1, 2 and 3 all follow that rule (try 2, 3 and 4 for yourself).

| Example | Jug A | Jug B | Jug C | Goal |
|---|---|---|---|---|
| 1 | 18 | 43 | 10 | 5 |
| 2 | 9 | 42 | 6 | 21 |
| 3 | 14 | 36 | 8 | 6 |
| 4 | 28 | 76 | 3 | 25 |

Results and discussion

When subjects reached problems at the very end of the series, the rule changed, as in example 4. Subjects who had induced the rule B-A-2C did much worse than control subjects on this problem. In fact, it can be solved very easily following the rule A-C, which is pretty trivial. Not only that, but when the problem could be solved by either rule (i.e., B-A-2C or A-C), subjects who had learned the complicated rule applied it without noticing that the simpler rule applied. For instance, both rules will work in example 3. Did you notice the easier solution in example 3?

The results showed the effects of what Gestalt psychologists referred to as Einstellung. It refers to a predisposition to use a learned procedure to solve a problem when a simpler solution could be used. Furthermore, those who had learned a rule got stuck on problems such as example 4 for far longer than those who had not learned a rule, showing the deleterious effects of reproductive thinking in this case.
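The arithmetic behind the two rules is easy to check. The short Python sketch below is an illustration added here, not part of Luchins's procedure, and its function and variable names are hypothetical. It takes the four examples listed in Information Box 3.1 and reports which of the two rules, B-A-2C or A-C, leaves exactly the goal amount.

```python
# Jar capacities and goal for each example: (A, B, C, goal), in litres.
EXAMPLES = {
    1: (18, 43, 10, 5),
    2: (9, 42, 6, 21),
    3: (14, 36, 8, 6),
    4: (28, 76, 3, 25),
}


def learned_rule(a, b, c):
    """B-A-2C: fill B, then pour off enough to fill A once and C twice."""
    return b - a - 2 * c


def simple_rule(a, b, c):
    """A-C: fill A, then pour off enough to fill C once."""
    return a - c


def rules_that_work(a, b, c, goal):
    """Return the names of the rules that leave exactly the goal amount."""
    rules = {"B-A-2C": learned_rule, "A-C": simple_rule}
    return [name for name, rule in rules.items() if rule(a, b, c) == goal]


if __name__ == "__main__":
    for number, (a, b, c, goal) in EXAMPLES.items():
        print(number, rules_that_work(a, b, c, goal))
```

Only example 3 satisfies both rules, and example 4 satisfies only A-C, which is why a solver who applies B-A-2C out of habit stalls there.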

To try to make sense of why human problem solving is affected by negative and positive transfer, Sweller (Sweller, 1980; Sweller & Gee, 1978) conducted a series of neat little experiments to show how the same set of training examples could produce both positive and negative transfer. According to Sweller (1980), positive transfer accounts for the sequence effect (Hull, 1920). The sequence effect means simply that if there is a series of similar problems graded in complexity, then it is easier to solve them in an easy-to-complex sequence than in a complex-to-easy sequence. A solver’s experience of solving the early examples speeds up the solution to the later ones because the solver sees the problems as being related – if the solution procedure has worked in the past, then a slight adaptation of it will likely work in the future. However, if having learned this easy-to-complex sequence a new problem appears that looks similar but has a different and much simpler solution, people get stumped and fail to solve a very simple problem due to negative transfer. Sweller’s results showed that you can solve a difficult problem if you have had experience with similar problems involving related rules. The downside of this effect is that a simple problem can be made difficult for the same reason. The same rule learning ability that makes an otherwise insoluble problem soluble produces a kind of mental set – a kind of programmed mental habit (Einstellung) – so that when a new problem is presented that requires a different although simpler hypothesis, the solver is stuck in this mental set.

Sweller’s studies are important for the light they cast on human thinking. He viewed the process by which we induce rules from experience as a natural adaptation. As a result, positive and negative transfer are two sides of the same coin. Einstellung is not an example of human rigid or irrational thinking but a consequence of the otherwise rather powerful thinking processes we have evolved to induce rules that in turn make our world predictable. The power of Sweller’s findings is attested to by the fact that recent studies keep coming back to the same assumption – that seeing similarities, however superficial, between problems allows people to generate hypotheses about how to solve them that are usually successful.

Quick access on the basis of surface content, even if it is not guaranteed to be correct, may be an attractive initial hypothesis given the longer time required for determining the deep structure … experts would be able to begin formulating the problem for solution while still reading it, thus saving time in solving the problem.

(Blessing & Anderson, 1996, p. 806)

Mechanisms of knowledge transfer

Nokes (2009) has claimed that there are three knowledge transfer mechanisms: analogy, knowledge compilation and constraint violation. The particular mechanism used by the learner largely depends on what knowledge needs to be transferred and the relative difficulty of the processing required. Apart from analogical transfer (dealt with later), the second mechanism, knowledge compilation (e.g., Anderson, 1983), translates declarative knowledge such as the instructions for driving a car into procedures for carrying out tasks which, in the case of problem solving, is in the form of production rules (if … then or condition … action rules). Thus a declarative representation is turned into a procedural representation for attaining a goal (driving, solving a category of problems, typing) that can be applied to new problems and situations. For example, E = mc² is a declarative statement that can be turned into a procedure such as: IF the goal is to determine the energy of an object THEN multiply its mass by the square of the speed of light. Nokes’s third mechanism is constraint violation which involves a generate-evaluate-revise cycle. This mechanism involves transferring one’s knowledge of domain constraints to a new task whereby “the learner uses her or his prior constraint knowledge to identify and remedy the errors generated while performing new tasks” (Nokes, 2009, p. 4). Evaluation of these errors would lead to a revision of what needs to be done. Nokes provides the example of constraints in chess, where specific procedures for what moves to make in a given game can be generated using knowledge of the moves that the various pieces can make and other constraints such as avoiding checkmate. Thus this process is also a form of declarative-to-procedural transfer.
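The IF … THEN rule for energy can be written out as a toy production to show what "turning a declarative statement into a procedure" amounts to. The Python sketch below is a minimal illustration under stated assumptions: the `Production` class, the working-memory keys (`goal`, `mass_kg`, `energy_joules`) and the `fire_first_match` helper are all hypothetical names, and this is not the production-system architecture described by Anderson (1983).

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

Memory = Dict[str, object]  # a very simple stand-in for working memory


@dataclass
class Production:
    """A condition-action pair: IF condition(memory) THEN action(memory)."""
    name: str
    condition: Callable[[Memory], bool]
    action: Callable[[Memory], Memory]


def energy_action(memory: Memory) -> Memory:
    """THEN part: multiply the mass by the square of the speed of light."""
    updated = dict(memory)
    updated["energy_joules"] = memory["mass_kg"] * SPEED_OF_LIGHT ** 2
    updated["goal"] = None  # the goal has been achieved
    return updated


ENERGY_RULE = Production(
    name="compute-energy",
    # IF part: the goal is to determine the energy and a mass is known.
    condition=lambda m: m.get("goal") == "determine energy" and "mass_kg" in m,
    action=energy_action,
)


def fire_first_match(rules: List[Production], memory: Memory) -> Memory:
    """Apply the first rule whose condition matches; otherwise change nothing."""
    for rule in rules:
        if rule.condition(memory):
            return rule.action(memory)
    return memory


if __name__ == "__main__":
    start = {"goal": "determine energy", "mass_kg": 2.0}
    print(fire_first_match([ENERGY_RULE], start))
```

The rule does nothing unless its condition matches the current goal, which is the sense in which compiled knowledge is tied to the situations in which it applies.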

Transfer in well-defined problems

Using the methods described in Chapter 2 to analyse problem structures, we can tell if two problems have the same structure or not. If there are differences in people’s ability to solve two problems that share the same structure, then the disparity must lie in some aspect of problems other than the structure itself. Problems that have an identical structure and have the same restrictions are known as isomorphs. The Monster problems in Activity 3.1 described two such isomorphs. Have a look now at Activity 3.2.

Activity 3.2

The Himalayan Tea Ceremony problem

In the inns of certain Himalayan villages is practised a most civilised and refined tea ceremony. The ceremony involves a host and exactly two guests, neither more nor less. When his guests have arrived and have seated themselves at his table, the host performs three services for them. These services are listed here in the order of the nobility which the Himalayans attribute to them:

- stoking the fire,

- fanning the flames,

- passing the rice cakes.

During the ceremony, any of those present may ask another, “Honoured Sir, may I perform this onerous task for you?” However, a person may request of another only the least noble of the tasks which the other is performing. Further, if a person is performing any tasks, then he may not request a task which is nobler than the noblest task he is already performing. Custom requires that by the time the tea ceremony is over, all of the tasks will have been transferred from the host to the most senior of his guests.

How may this be accomplished?

(adapted from Simon & Hayes, 1976)

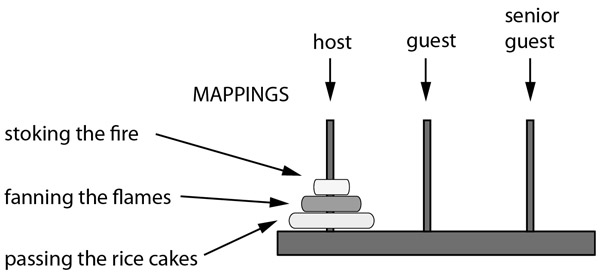

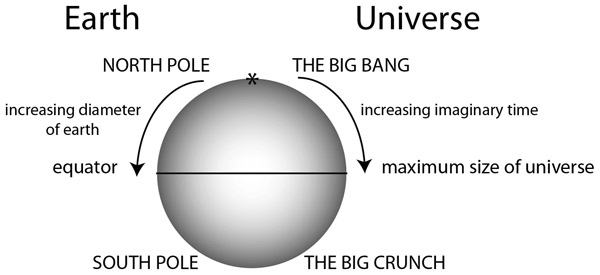

Activity 3.2 illustrates one further variant of the Tower of Hanoi problem. The only difference between the Tower of Hanoi problem and the various isomorphs you have seen (the Parking Lot problem, the Monster problems and the Himalayan Tea Ceremony problem) is in their cover stories. Because the problems look so different on the surface you may not always have realised that their underlying structure was identical with the Tower of Hanoi problem. All of these problems therefore differ in terms of their surface features but are similar in their underlying structural features, and it tends to be the surface features that most influence people (but see later in the chapter). The mappings between the Tower of Hanoi problem and the Himalayan Tea Ceremony problem are shown in Figure 3.2. The Himalayan Tea Ceremony problem is an example of a transfer problem, where tasks are transferred from one participant to another as in the first Monster problem in Activity 3.1. Simon and Hayes (1976) used 13 isomorphs of the Tower of Hanoi problem, all of which were variations of the Monster problem with two forms: Change and Transfer. They found that subjects were strongly influenced by the way the problem instructions were written. None of their subjects tried to map the Monster problem onto the Tower of Hanoi problem to make it easier to solve, and “only two or three even thought of trying or noticed the analogy” (p. 166).
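One way to see what the cover stories conceal is to write out the state space directly, in the manner of Chapter 2. The sketch below is an illustrative assumption rather than anything taken from Simon and Hayes: it encodes the Move version of the Monster problem from Activity 3.1 and searches it breadth-first for a shortest sequence of legal transfers. The representation (a tuple recording which monster holds each globe) and all the names are hypothetical.

```python
from collections import deque

SIZES = ("small", "medium", "large")  # index 0, 1, 2 for monsters and globes

# state[g] is the monster currently holding globe g.
START = (1, 2, 0)  # small globe with medium monster, medium with large, large with small
GOAL = (0, 1, 2)   # every monster holds the globe of its own size


def legal_moves(state):
    """Yield (globe, target_monster) pairs allowed by the three etiquette rules."""
    for globe, holder in enumerate(state):
        for target in range(3):
            if target == holder:
                continue
            larger_globes = range(globe + 1, 3)
            # Rule 2: the globe must be the largest one its holder has.
            # Rule 3: the target must not be holding a larger globe.
            if all(state[g] not in (holder, target) for g in larger_globes):
                yield globe, target


def apply_move(state, globe, target):
    """Return the state after handing one globe to the target monster (rule 1)."""
    return state[:globe] + (target,) + state[globe + 1:]


def solve(start=START, goal=GOAL):
    """Breadth-first search; returns a shortest list of (globe, target) moves."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            break
        for globe, target in legal_moves(state):
            nxt = apply_move(state, globe, target)
            if nxt not in parents:
                parents[nxt] = (state, globe, target)
                queue.append(nxt)
    if goal not in parents:
        return None
    moves = []
    state = goal
    while parents[state] is not None:
        state, globe, target = parents[state]
        moves.append((globe, target))
    return moves[::-1]


if __name__ == "__main__":
    for globe, target in solve():
        print(f"give the {SIZES[globe]} globe to the {SIZES[target]} monster")
```

For the starting position in Activity 3.1, `solve()` returns a sequence of five transfers. Re-labelling monsters as pegs and globes as discs, with the size ordering reversed, gives the Tower of Hanoi rules, so the same search would serve for both problems; only the cover story changes.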

The following problem (Activity 3.3) should, however, be recognisable.

Activity 3.3

The Jealous Husbands problem

Three jealous husbands and their wives having to cross a river at a ferry find a boat, but the boat is so small that it can contain no more than two persons. Find the simplest schedule of crossings that will permit all six people to cross the river so that none of the women shall be left in company with any of the men, unless her husband is present. It is assumed that all passengers on the boat disembark before the next trip, and at least one person has to be in the boat for each crossing.

This problem was used by Reed, Ernst and Banerji (1974) in their study of transfer between similar problems. The Jealous Husbands and Missionaries and Cannibals problems have an identical structure (see Figure 2.10 in Chapter 2). However, notice that there is one further restriction in the Jealous Husbands problem. A woman cannot be left in the company of other men unless her husband is present.

Moving two missionaries corresponds to moving any of three possible pairs of husbands since all husbands are not equivalent. But only one of the three possible moves may be legal, so there is a greater constraint on moves in the Jealous Husbands problem.

(Reed et al., 1974, p. 438)

This added constraint means that the Missionaries and Cannibals and the Jealous Husbands problems are not exactly isomorphic but are homomorphic. That is, the problems are similar but not identical. Reed et al.’s experiments are outlined in Information Box 3.2.

Information Box 3.2 The Jealous Husbands problem (Reed et al., 1974)

Rationale

The aim of Reed et al.’s experiments was “to explore the role of analogy in problem solving”. They examined under what circumstances there would be an improvement on a second task.

Method

In experiment 1 subjects were asked to solve both the Missionaries and Cannibals (MC) problem and the Jealous Husbands (JH) problem. Approximately half did the MC problem before doing the JH problem and approximately half did them in the reverse order. No transfer was found between the two problems. Their next two experiments were therefore designed to find out how transfer could be produced. Experiment 2 looked for signs of improvement between repetitions of the same problem. Half the subjects did the MC problem twice and half did the JH problem twice. Experiment 3 tested whether being told the relationship between the problems would produce transfer. The dependent variables were the time taken, the number of moves, and the number of illegal moves made by the subjects.

Results

In all cases there was no significant difference in the number of moves made, but in some conditions there was a reduction in time to solve the problem and a reduction in the number of illegal moves in the second presentation of a problem. As one might expect there was some improvement on the same problem presented twice. However, the only evidence for any transfer was from the Jealous Husbands problem to the Missionaries and Cannibals problem and then only when there was a hint that the two problems were the same.

Discussion

When a hint was given there was substantial transfer between the Jealous Husbands problem and the Missionaries and Cannibals problem, but not vice versa. Two conclusions can be drawn from this:

Transfer is unlikely unless:

- solvers are explicitly told that the earlier problem would help them;

- the first problem is harder than the second.

Reed et al. found a number of things. First, the extra constraint imposed by the Jealous Husbands problem made it harder to tell whether a move was legal. The effect of this was to increase the length of time subjects took to make a move and the number of illegal moves made. Second, transfer was asymmetrical; that is, there was some evidence of transfer only from Jealous Husbands to Missionaries and Cannibals but not the other way round. Third, there was no transfer between the two different problems unless the subjects were explicitly told to use the earlier problem (“Whenever you moved a husband previously, you should now move a missionary,” etc.). Fourth, subjects claimed to make little use of the earlier problem even when a hint was given to use it. No one claimed to remember the correct sequence of moves from the earlier problem. Instead subjects must have remembered the earlier problem at a “more global level”. That is, they remembered some general strategies (“Balance missionaries and cannibals,” “Move all missionaries first,” etc.).

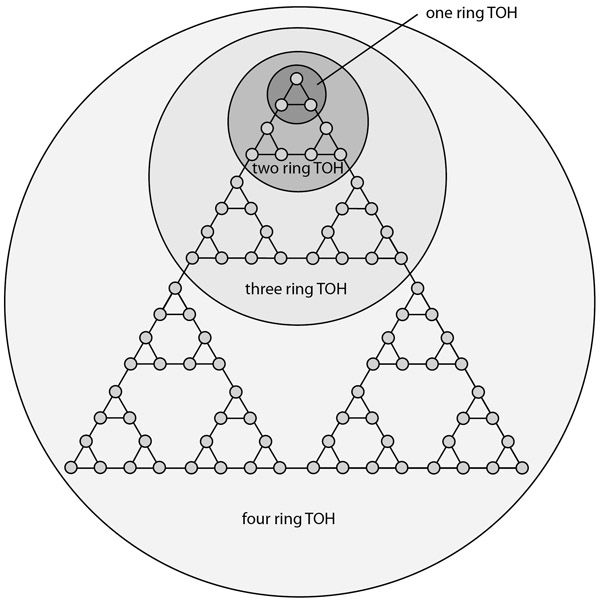

One other point emerges from Reed et al.’s experiments. As with Simon and Hayes’s (1976) Tower of Hanoi problem isomorphs, it was not the problem structure that was the source of the difficulty in problem solving. The main difficulty was knowing whether a particular move was legal or not. This aspect was examined further by Luger and Bauer (1978). They found no transfer asymmetry between the Tower of Hanoi problem and the Himalayan Tea Ceremony problem – there was a transfer of learning no matter which of the two problems was presented first. One reason they give for the difference between their results and those of Reed et al. is that the Tower of Hanoi problem has “an interesting substructure of nested isomorphic subproblems” (p. 130) (see Figure 3.3). What this means is that learning to solve one of the two variants involves decomposing the problem into subproblems, the solution of which can be used in the other isomorphic problem. The Missionaries and Cannibals variants lack this kind of problem substructure.

From Luger and Bauer (1978).

Studies of analogical problem solving

So far we have looked at transfer in well-defined problems. Although transfer of learning is also known as analogical transfer, the term analogical problem solving has tended to be used for ill-defined problems.

There are many definitions of analogy. Holyoak (2012) refers to it as “an inductive mechanism based on structured comparisons of mental representations” (p. 234), and refers to analogies as ranging from the “mundane to the metaphorical” (Holyoak, 1985). “The essence of analogical thinking is the transfer of knowledge from one situation to another by a process of mapping – finding a set of one-to-one correspondences (often incomplete) between aspects of one body of information and aspects of another” (Gick & Holyoak, 1983, p. 2).

Ah, but you have to find the right analogue first …

Before going on, try to solve the Radiation problem in Activity 3.4.

Activity 3.4

Duncker’s (1945) Radiation problem

Suppose you are a doctor faced with a patient who has a malignant tumour in his stomach. It is impossible to operate on the patient, but unless the tumour is destroyed the patient will die. There is a kind of ray that can be used to destroy the tumour. If the rays reach the tumour all at once at a sufficiently high intensity, the tumour will be destroyed. Unfortunately, at this intensity the healthy tissue that the rays pass through will also be destroyed. At lower intensities the rays are harmless to healthy tissue, but they will not affect the tumour either. What type of procedure might be used to destroy the tumour with the rays, and at the same time avoid destroying the healthy tissue? (Gick & Holyoak, 1980, pp. 307–308).

The Radiation problem has the same solution structure as the Fortress problem you encountered in Activity 1.1 in Chapter 1. The solution was to split up the large force (the army) into groups, and get them to converge simultaneously (attack) on the target (the fortress).

Gick and Holyoak (1980, 1983) were interested in the effect of previous experience with an analogous problem on solving Duncker’s Radiation problem. They used various manipulations. Some subjects were given different solutions to the Fortress problem (the source problem) to find out what effect that would have on the solutions they gave for the Radiation problem (the target). For example, when their subjects were given a solution to the Fortress problem whereby the general attacked down an “open supply route” – an unmined road – they tended to suggest a solution to the Radiation problem involving sending rays down the oesophagus. If the general dug a tunnel, then more subjects suggested operating on the patient with the tumour. The type of solution required for the Radiation problem based on the solution given previously for the Fortress problem is known as the “divide and converge” solution (or the “convergence” solution). Thus the type of solution presented in the early problem influenced the types of solution suggested for the later one. For the Radiation problem the convergence solution involves reducing the intensity of the rays and using several ray machines to focus on the tumour.

Another important point about their studies was that their subjects were often very poor at noticing an analogy and would only use one when they were given a hint to do so. Only about 10% of those in a control group who did not receive an analogy managed to solve the problem using the “divide and converge” solution. Of those who were given an analogy, only 30% used the Fortress problem analogue without being given a hint to do so; and between 75% and 80% used the analogy when given a hint. So, if you subtract the 10% who manage to solve the problem spontaneously, this means that only 20% noticed that the two problems were similar. This is in line with the findings by Simon and Hayes (1976) and Reed et al. (1974) (and many subsequent studies), who found that their subjects were very poor at noticing that the well-defined problems with which they were presented were analogous. Although the Fortress and Radiation problems are not well-defined they nevertheless have the same underlying solution structure. That is, they differ in their surface features (general, army, fortress vs. surgeon, rays, tumour) but the similarity lies in the underlying structural features of the problems and therefore at a more abstract level. Gick and Holyoak have pointed out this structural similarity, as shown in Table 3.1.

As a result of solving the problems, solvers abstract out the more general “divide and converge” solution schema.

| Military problem | |
|---|---|
| Initial state | |
| Goal | Use army to capture fortress |
| Resources | Sufficiently large army |
| Constraint | Unable to send entire army along one road |
| Solution plan | Send small groups along multiple roads simultaneously |
| Outcome | Fortress captured by army |
| Radiation problem | |
| Initial state | |
| Goal | Use rays to destroy tumour |
| Resources | Sufficiently powerful rays |
| Constraint | Unable to administer high-intensity rays from one direction safely |
| Solution plan | Administer low-intensity rays from multiple directions simultaneously |
| Outcome | Tumour destroyed by rays |
| Convergence schema | |
| Initial state | |
| Goal | Use force to overcome a central target |
| Resources | Sufficiently great force |
| Constraints | Unable to apply force along one path safely |
| Solution plan | Apply weak force along multiple paths simultaneously |
| Outcome | Central target overcome by force |

Reprinted from Gick, M. L., & Holyoak, K. J. (1983). Schema induction and analogical transfer. Cognitive Psychology, 15(1), 1–38 with permission from Elsevier.

Cognitive processes in analogical problem solving

Retrieval

As we have seen, the first major obstacle to using relevant past experience to help solve a current problem is accessing or retrieving the relevant past experience in the first place. Why should this be the case? Notice that the two cover stories differ. The Fortress problem is about military strategy and the Radiation problem involves a surgical procedure. They are from different domains so there is no obvious reason for us to connect the two. It would be time consuming, not to say foolish, to try to access information from our knowledge of mediaeval history when trying to solve a problem in thermodynamics. We are similarly not predisposed to seek an answer to a surgical problem in the domain of military strategy.

That said, when such cross-domain analogies are made they can be very fruitful. Since the 1980s, pilots have been using checklists to ensure things don’t get missed. For example, there was the case of an experienced pilot operating a new plane who forgot to perform a necessary step, causing the plane to crash shortly after take-off. You might have heard “doors to manual and cross-check” when in a plane. Such checklist procedures among pilots and cabin crew have reduced the kinds of errors that used to be made. One pilot whose wife died in the operating theatre has been campaigning for the use of such checklists in health care settings, including operating theatres. The introduction of the World Health Organization’s surgical safety checklists, based largely on experience in the domain of aviation, has been effective at improving communication between health care staff and reducing complications for patients (Pugel, Simianu, Flum, & Dellinger, 2015).

In studies of analogical problem solving such as those by Gick and Holyoak, the target problem is presumed to be in working memory and the source in long-term memory. In order for the source to be accessed there needs to be a useful memory probe; that is, information in working memory needs to be encoded (represented) in a way that can improve access to relevant analogues. Given what was said earlier about the difficulty of retrieving a relevant analogue, especially in an entirely different domain of knowledge, Kurtz and Loewenstein (2007) had participants compare two target problems to find out whether this helped them spontaneously retrieve a previously encountered story. That is, they attempted to see if the particular encoding of the targets allowed participants to access an earlier problem setting (a “problem schema”), which they distinguished from the solution strategy in the source (the “solution schema”). Their studies showed that encoding problems at a “deeper” level, as a result of comparing the two target problems and eliciting a partial schema, facilitated analogical access to a prior source.

Mapping

Once a relevant analogue is retrieved, the process of mapping is often (but not always) straightforward. Mapping involves forming a “structural alignment” between two problems or situations (e.g., Doumas, Hummel, & Sandhofer, 2008; Gentner, 1983; Gentner & Colhoun, 2010; Holyoak & Koh, 1987). This process includes matching elements (e.g., objects, characters) in the two problems that play the same roles in the problems (there is a one-to-one correspondence between the mapped elements: general–surgeon, armies–rays, etc.) which in turn requires the analogiser to look at the relations between the elements to determine what elements play what roles in the two problems. As a result of this type of matching, “candidate inferences are projected from the base [source] to the target” (Gentner & Colhoun, 2010, p. 37). So the inferences that are made in the source analogue that give it some coherence are carried across to the target, bearing in mind that the whole point of analogising is to generate inferences in the target.

Adaptation and application

The second major obstacle to using past experience is that of adapting the past experience so that the solution procedure can be applied to the current (target) problem. Despite being told that the Fortress problem and the Radiation problem involved the same “divide and converge” solution strategy, there were nevertheless about 20% of subjects in Gick and Holyoak’s study who still failed to solve the Radiation problem. The difficulty here is adapting one to solve the other, and there are various reasons why people might fail to map one solution onto the other. These include the effects of general knowledge, imposing constraints on a solution that are not in the problem statement, and the fact that there is not always a clear mapping between objects in one problem and objects in the other.

World knowledge. Various bits of general knowledge might prevent one from seeing how a solution might work. For example, a ray machine may be thought of as being possibly very large – you might expect one such machine in an operating theatre but perhaps not several. Where is the surgeon going to get hold of several ray machines? Alternatively, the solver may have a faulty mental model of how rays work. Is it possible to reduce the intensity of the rays? Someone once told me that he couldn’t imagine the effect of several weak rays focussing on a tumour – he wasn’t sure their effect would be cumulative – and therefore failed to see the analogy. There could be many other such interferences from other world knowledge on the ability to generate a solution.

Imposing unnecessary constraints. Sometimes solvers place constraints on problem solving that are not actually stated in the problem. For example, in the Radiation problem it says: “There is a kind of ray that can be used to destroy the tumour.” This might be taken to mean that there is only one ray machine (although it doesn’t explicitly say that). If there is only one machine then the Fortress solution will not work since several sources of weak rays are needed.

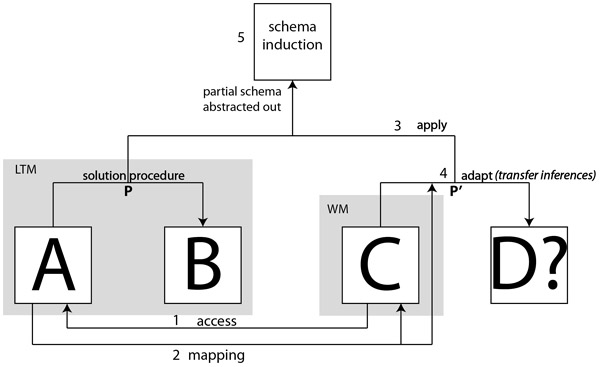

In Figure 3.4, A–B represents a previous problem assumed to be in long-term memory. C–D? is a target problem assumed to be in working memory, where C is a statement of the problem (the initial state) and D? is the goal to be achieved. Retrieval of an earlier problem is normally based on surface features (1), hence the link between the C and the A. (In Kurtz and Loewenstein’s study the link would be between the two goals and the solution procedure linking A and B.) The second stage is mapping where elements of the source (A) are mapped to the target (C) constrained by the role they play in the problem structure, through the “adapt” link (4). Analogising involves taking the solution procedure (P) in the source and applying it (3) to the target which would require some adaptation (4) of the procedure leading to an amended procedure (P′), assuming the problems are not identical. Adaptation involves generating inferences in the target based on the structure of the source. The result of the whole process is the abstraction of a partial schema (5). As more and more problems of the same type are encountered, the schema would become more decontextualised and more abstract and a problem category is thereby induced.

Although mapping and adapting are separated out here, they tend to work in parallel. According to Gentner and Colhoun (2010) adaptation includes an evaluation of the analogy to ensure structural soundness and factual validity given that inferences are not guaranteed to be correct but are more in the form of working hypotheses. Another aspect of evaluation is whether the analogy meets pragmatic constraints (Holyoak & Thagard, 1989a); that is, does the analogy meet the analogiser’s goals? Adaptation can also involve a re-representation of the relations in the analogues to create a better match between them (Gentner & Colhoun, 2010; Gentner & Smith, 2013).

Types of similarity

If we are to understand what is going on when analogies are noticed and used, we need to elaborate further on what is meant by similarity. That is, we need some way to express precisely in what way two (or more) problems or ideas can be said to be similar and in what ways they can be said to differ.

Holyoak (1984) has described four ways in which two problems can be said to be similar or different.

- 1 Identities. These are elements which are the same in both the source and the target (the analogues). They are more likely to be found in within-domain analogies. For example, if you are shown an example in mechanics involving a pulley and a slope and then are given a problem also involving a pulley and a slope, then there are likely to be several elements in the two problems that are identical. However, identities also include generalised rules that apply to both analogues such as “using a force to overcome a target”. These identities are equivalent to the schema that is deemed to be “implicit” in the source.

- 2 Indeterminate correspondences. These are elements of a problem that the problem solver has not yet mapped. In the course of problem solving, the solver may have to work at mapping elements from one problem to another – what role do “mines” play in the Radiation problem? It may be that some elements have been mapped and others have not yet been considered. The ones that have not yet been considered (or that the solver is unsure of) are the indeterminate correspondences. Trying to map the elements of the Monster Change problem in Activity 3.1 onto the Tower of Hanoi problem can be quite tricky and there may be aspects whose mappings are unclear.

- 3 Structure-preserving differences. These refer to the surface features of problems which, even when changed, do not affect the solution. Examples would be “armies” and “rays”, and “fortress” and “tumour”. Although entirely different, such surface features do not affect the underlying solution structure. Nevertheless, they play the same roles in both problems: armies and rays are agents of destruction; fortress and tumour are the respective targets. Structure-preserving differences also include those irrelevant aspects of a word problem that play no part in the solution. If the Fortress problem had included the line, “One fine summer’s day, a general arrived with an army,” the weather would be completely irrelevant.

- 4 Structure-violating differences. These differences do affect the underlying solution structure. The Fortress and Radiation problems use a solution involving division and convergence. However, whereas armies can be divided into smaller groups, a ray machine cannot; nor is it immediately obvious how the rays can be divided. The solution to the Radiation problem involves getting hold of several machines and reducing the intensity of the rays. The operators involved in the Fortress problem have to be modified in the Radiation problem.

Some of the objects in both problems play the same roles in the solution structure. Others do not. Table 3.2 shows where there are (relatively) obvious mappings and where the mappings are likely to break down. Matches can be found for tyrant, army and villages and for converging the groups simultaneously onto the fortress. However, look at 4, 5, 6 and 7 in Table 3.2. In 6 and 7 in particular it is not obvious how one might go about dividing a ray machine or rays or dispersing whatever results from the dividing around the tumour.

| Source | | Target |
|---|---|---|
| Fortress problem | | Radiation problem |
| objects | | |
| 1. tyrant | → | tumour |
| 2. army | → | rays |
| 3. villages | → | healthy tissue |
| 4. roads | → | ? |
| 5. mines | → | ? |
| relations | | |
| 6. divide(army, groups) | → | divide(rays, ?) |
| 7. disperse(groups, ends_of_roads) | → | ? |
| 8. converge(groups, fortress) | → | converge(rays, tumour) |

Another point that Table 3.2 brings out is that to adapt a solution one often has to find the most appropriate level of abstraction. As Kahney (1993, p. 77) pointed out: “If abstraction processes are taken to the deepest level, then all problems are analogous.”

Surface similarity

A problem involving a car travelling at a certain speed for a certain length of time is similar to another problem involving a car travelling at the same speed but for a different length of time. Here all the objects (cars, speeds, times) are identical but the value of one of them (time) is different. If car were to be replaced by truck, the problems are still likely to be recognised as similar since the objects (car and truck) are closely semantically related.

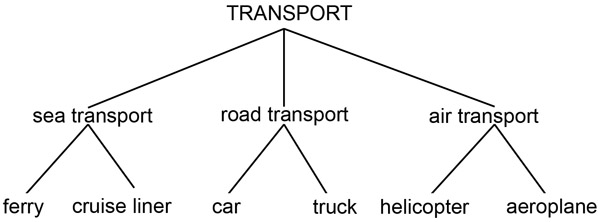

How, then, can we describe or represent semantic similarity? One way objects can be represented is as a hierarchy of semantically related concepts. “Car” is similar to “truck” as both are examples of road transport. “Aeroplanes” and “helicopters” are examples of air transport. “Ferries” and “cruise liners” are examples of sea transport. These examples are similar to each other since they are all forms of transport (Figure 3.5). The general term “transport” at the top of the hierarchy is the superordinate category. “Road transport” not only has a superordinate but also contains subordinate categories at a level below it. Because of their semantic similarity, a problem involving a helicopter travelling over a certain distance at a certain speed may remind you of the earlier problem involving the car.
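As a rough illustration of how such a hierarchy could be represented, the sketch below stores each concept with a pointer to its superordinate category and counts the links two concepts must climb before they meet. The `PARENT` table, the function names and the use of path length as a stand-in for semantic similarity are all assumptions made for this example; they are not taken from Figure 3.5 or from any particular model of semantic memory.

```python
# Hypothetical encoding of the transport hierarchy described in the text:
# each concept points to its superordinate category.
PARENT = {
    "car": "road transport",
    "truck": "road transport",
    "aeroplane": "air transport",
    "helicopter": "air transport",
    "ferry": "sea transport",
    "cruise liner": "sea transport",
    "road transport": "transport",
    "air transport": "transport",
    "sea transport": "transport",
}


def ancestors(concept):
    """Return the chain from a concept up to the top of the hierarchy."""
    chain = [concept]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def semantic_distance(a, b):
    """Count the links from a and b up to their nearest shared category."""
    chain_a, chain_b = ancestors(a), ancestors(b)
    for steps_a, node in enumerate(chain_a):
        if node in chain_b:
            return steps_a + chain_b.index(node)
    return None  # no shared superordinate in this toy hierarchy


print(semantic_distance("car", "truck"))       # 2: they meet at "road transport"
print(semantic_distance("car", "helicopter"))  # 4: they meet only at "transport"
```

On this toy measure a car is closer to a truck than to a helicopter, although all three still share the superordinate category "transport".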

Semantic constraints on analogising

Holyoak and Koh (1987) studied subjects’ ability to access and use analogous problems involving the convergence solution used in the Fortress and Radiation problems. What they found was that the more semantically similar the objects in the analogues were, the more subjects were likely to access and use the earlier problem to solve the target. For example, subjects given a problem involving a broken light bulb filament that required converging laser beams to repair it were more likely to solve the Radiation problem, where X-rays are used to destroy a tumour, than the subjects who were first given the Fortress problem, where armies attack a castle. This is because laser beams and X-rays are similar sorts of things – both problems involve converging beams of radiation. Furthermore, their semantic similarity suggests that they probably play similar roles in the problems and therefore have high transparency. “Armies” and “rays” are dissimilar objects, and have low transparency. Accessing a previous problem has been found to depend strongly on the amount of semantic similarity between objects in different problems (Gentner & Kurtz, 2006; Gentner, Rattermann, & Forbus, 1993; Gentner & Toupin, 1986; Holyoak & Koh, 1987; Ross, 1987).

Another aspect of surface similarity relates to the attributes of the objects being mapped. Chen, Mo and Honomichl (2004) examined very long-term analogical transfer from a folk tale learned in childhood to a target problem presented to participants as adults. The Chinese version of the folk tale was “Weigh the Elephant”, where an emperor received a large elephant as a gift and wanted to know its weight. Since there was no scale big enough to weigh the elephant, the emperor’s son came up with a solution involving putting the elephant in a boat and marking the point at which the water came up the side of the boat. The elephant was removed and stones were placed in the boat until the boat sank to the mark. The stones could then be weighed individually in a small scale and the weights added together to give the weight of the elephant. An analogue was presented to participants involving weighing an asteroid. An elephant and an asteroid are not all that semantically related but there was no problem in mapping them, as the salient aspect of the elephant and asteroid is that they are both big, heavy things.

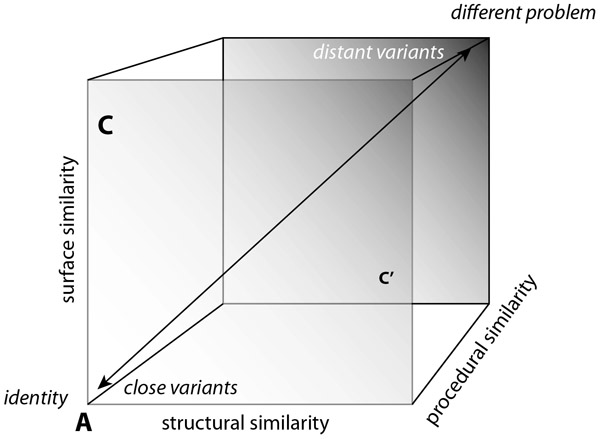

Most of the time we are likely to encounter problems that are neither identical to ones we have already solved nor so different that all we can say about them is that they are problems. Usually the problems we encounter are variants of ones we have encountered before. These variants may be close to one we know or they may be distant variants (Figure 3.6). The degree to which problems of a particular type vary is likely to have an effect on our ability to notice that the problem we are engaged in is similar to an earlier one and on our ability to use that source problem to solve the target.

Figure 3.6 also illustrates that there are three respects in which problems can vary. Their surface features can change while the underlying solution procedure remains the same; or the surface features may be the same but the underlying solution procedure may differ. Table 3.3 shows examples of problems varying in their surface and structural features.

In Table 3.3 notice that some of the surface features or “objects” play a role in the solution (400 miles, 50 mph) whereas others do not (Glasgow, London). Notice also that, despite the similarity in the objects in the “similar-surface / dissimilar-structure” box, the solution procedure requires you to multiply two quantities whereas the source problem requires you to divide two quantities.

In Table 3.2 the objects in one problem are mapped to objects that play the same or a similar role in the other. This is “role-based relational reasoning” which depends on “explicit relational representations” (Holyoak, 2012). So problems can be similar in terms of their relational structure. To these, Chen (2002) has added procedural similarity (Figure 3.6) and has argued, based on a series of experiments, that the main obstacle to solving analogous problems was in the execution (apply in Figure 3.4) rather than in accessing or mapping. How these various forms of similarity interrelate is shown in Figure 3.6, which combines the ideas from researchers such as Gentner and Chen.

| Source | | | |
|---|---|---|---|
| A car travels 400 miles from Glasgow to London at an average speed of 50 mph. How long does it take to get there? | | | |
| Surface “objects”: car, 400, miles, Glasgow, London, 50, mph, “how long …” | | | |
| Solution structure | time = distance ÷ speed | a = b ÷ c | |
| Target | | | |
| | | Structure varies | |
| | | similar | dissimilar |
| Surface varies | similar | A truck travels 480 kilometres from Paris to Lyon at an average speed of 80 kilometres per hour. How long does it take to get there? | A car takes 10 hours to travel from Glasgow to London at an average speed of 40 miles per hour. What distance does it travel? |
| | dissimilar | A tourist exchanges $300 for pounds sterling at an exchange rate of $1.50 to the pound. How many pounds does she get? | A tourist exchanges her dollars for £200 pounds sterling at an exchange rate of $1.50 to the pound. How many dollars did she have? |
| | Solution structure | a = b ÷ c | b = a × c |
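The two solution structures in Table 3.3 can be made concrete with a short sketch. The function names `divide` and `multiply` are simply labels chosen here for the abstract procedures a = b ÷ c and b = a × c; the numbers come from the problems in the table.

```python
def divide(b, c):
    """Source solution structure: a = b / c."""
    return b / c


def multiply(a, c):
    """Structurally different procedure: b = a * c."""
    return a * c


# Source problem: car, 400 miles at 50 mph.
source_hours = divide(400, 50)    # 8.0 hours

# Similar structure: the same procedure applies whatever the surface objects.
truck_hours = divide(480, 80)     # similar surface: 6.0 hours
pounds = divide(300, 1.50)        # dissimilar surface: 200.0 pounds

# Dissimilar structure: the source procedure no longer applies.
car_miles = multiply(10, 40)      # similar surface: 400 miles
dollars = multiply(200, 1.50)     # dissimilar surface: 300.0 dollars

print(source_hours, truck_hours, pounds, car_miles, dollars)
```

The truck and the first tourist problem are solved by calling exactly the same procedure as the source, whereas the second car problem, despite sharing almost all of its surface objects with the source, needs a different one.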

Relational similarity

So far we have seen how we can map objects in two situations or problems that are similar in that the objects are identical, semantically related and/or share salient attributes. Attribute mapping allows us to recognise and use similarities at a level above the level of the objects themselves and therefore to map the attributes of one object onto the attributes of another, such as the attribute “heavy” that can be applied to the asteroid and the elephant. However, we can go even further and look at similarities in the relations between two or more objects. In the sentence “Joe opened the wine bottle” there are two objects, “Joe” and “wine bottle”, and there is a relation between them represented by the verb “opened”. This proposition, which can be represented in natural language as “Joe opened the wine bottle,” can also be represented by

- opened(Joe, wine_bottle).

In this example there are two “slot fillers” – Joe and “wine_bottle”, and the term before the brackets is a relation rather than an attribute. The relations and attributes that are outside the brackets are collectively known as predicates. The things inside the brackets are arguments. A predicate can have many arguments. The verb “give”, for example, involves a giver, a receiver and an object that is given. “Charles gave Daphne a bunch of flowers” can be represented as

- gave(Charles, Daphne, bunch_of_flowers).
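For readers who find it easier to see this notation as a data structure, here is a minimal sketch in which a proposition is a predicate name plus an ordered tuple of arguments. The `Proposition` class is a hypothetical helper written for this illustration; it is not part of any published model.

```python
from typing import NamedTuple, Tuple


class Proposition(NamedTuple):
    """A predicate (relation or attribute) applied to its arguments."""
    predicate: str
    arguments: Tuple[str, ...]

    def __str__(self):
        return f"{self.predicate}({', '.join(self.arguments)})"


opened = Proposition("opened", ("Joe", "wine_bottle"))
gave = Proposition("gave", ("Charles", "Daphne", "bunch_of_flowers"))

print(opened)               # opened(Joe, wine_bottle)
print(gave)                 # gave(Charles, Daphne, bunch_of_flowers)
print(len(gave.arguments))  # 3: giver, receiver and object given
```

Keeping the arguments in a fixed order matters, because it is the position of each slot filler that records the role it plays in the relation.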

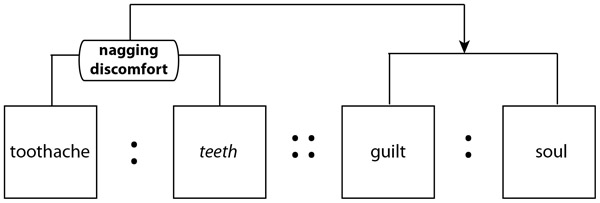

It is because we can understand the nature of relational similarity that we can make sense of analogies such as “guilt is the toothache of the soul” (Cotton, 2014). “Guilt” can be mapped onto “toothache” since guilt and toothache are specifically asserted as being the same. However, that mapping is insufficient and needs to be broken down further so that guilt maps to ache, and tooth to soul. We are then expected to infer from the statement that guilt is a constant, nagging, painful feeling based on what we know about toothaches. How are we able to make these inferences? In fact, there is something missing from the statement that we have to put back in for the sake of completeness. Guilt is not a physical pain caused by tooth decay. It is an emotional response to a past event. So another way of understanding the statement is to read it as “guilt bears the same relation to the soul (consciousness?) as toothache does to teeth” (or toothache:teeth::guilt:soul – toothache is to teeth as guilt is to soul – see Figure 3.7).

Relational similarity – reproductive or productive?

A preliminary requirement to finding a relation between two concepts involves a search through our semantic system. Compare the following two analogies:

- 1 uncle:nephew::aunt:?

- 2 alcohol:proof::gold:?

In the first case there is a well-known set of relations between uncle and nephew. The latter is the offspring of the former’s brother or sister. Both are male. The next item, “aunt”, invites us to generate a parallel known relation for a female offspring. We are reconstructing known sets of relations when we complete this analogy.

In the second case, as in the case of metaphors, the solver has possibly never juxtaposed these items before. Any solution the solver generates will therefore be constructed for the very first time. That is, the similarity between the relations (here the second item is a measure of the purity of the first item) is a novel one. Indeed if we couldn’t invent new relations on the spur of the moment, then we wouldn’t be able to understand what Richard Kadrey was going on about in his book Kill the Dead with his analogy “memories are bullets. Some whiz by and only spook you. Others tear you open and leave you in pieces” (Kadrey, 2010). For this reason, our ability to generate and understand a presumably infinite number of possible relations is seen as a productive ability (Bejar, Chaffin, & Embretson, 1991; Chaffin & Herrmann, 1988; Johnson-Laird, Herrmann, & Chaffin, 1984).

Bejar et al. (1991) argue that relations are of two types: ones that the person generating the relation already knows and ones that have to be constructed on the spot.

The variety of relations suggests that people are capable of recognizing an indefinitely large number of distinct semantic relations. In this case, the explicit listing of all possible relations required in a network representation of relations will not be possible.

(p. 18)

Structural similarity

So far we have seen that two things can be seen as similar if they are closely semantically related (e.g., trucks and vans); they can also be similar if they share the same attributes (yellow trucks and yellow books); and the relations between objects can also be regarded as similar. We have been climbing up a hierarchy of similarity, as it were. However, we can go even further and look at similarity between even more complex relational structures. A useful example of how similar hierarchical structures can be mapped can be seen in the work of Dedre Gentner.

Gentner’s structure mapping theory

According to Gentner, an analogy is not simply saying that one thing is like another. “Puppies are like kittens” or “milk is like water” are not analogies. They are in Gentner’s terms “literally similar” since they share the same attributes, such as “small” in the first case and “liquid” in the second, as well as the same relations (Gentner, 1989). Gentner and others (e.g., Falkenhainer, Forbus, & Gentner, 1989; Gentner, 1989; Gentner & Toupin, 1986; Holyoak, 2012; Holyoak, Lee, & Lu, 2010; Vosniadou, 1989) argue that real analogies involve a causal relation. In the analogy “puppies are to dogs as kittens are to cats,” there is a relation between “puppies” and “dogs” which also applies between “kittens” and “cats”, and this relation can readily be explained. An analogy properly so-called involves mapping an explanatory structure from a base domain (puppies and dogs) to a target (kittens and cats).

According to Gentner (1983), mapping a relational structure that holds in one domain onto another has to conform to the principle of systematicity, which states that people prefer to map hierarchical systems of relations, specifically conceptual relations (Gentner & Markman, 2005), in which the higher-order relations constrain the lower-order ones. Kevin Ashton (2015) uses many metaphors in his book How to Fly a Horse when discussing the history of how individuals came up with creative products. One is used to warn about the perils of being certain about something when healthy doubt would be more appropriate, and another to point out the usefulness of confidence in one’s abilities and ideas: “Confidence is a bridge. Certainty is a barricade.” In other words, it is only because we can infer that a bridge helps us get over an obstacle and that a barricade prevents us from doing so that we can map the bridge to confidence and the barricade to certainty, and make the further inferences that to succeed we need to be persistent, and to test our assumptions and beliefs rather than assume we are correct.

Gentner’s structure mapping theory is implemented as the structure mapping engine (Falkenhainer et al., 1989; Gentner & Kurtz, 2006; Gentner & Markman, 2005) and uses a predicate calculus of various orders to represent the structure of an analogy. At the lowest order, order 0, are the objects of the analogy (the salient surface features), such as “army”, “fortress”, “roads”, “general”. When an example is being used as an analogy, the objects in one domain are assumed to be put in correspondence with the objects in another to obtain the best match that fits the structure of the analogy.

At the next level, the objects at level 0 can become arguments to a predicate. So “army” and “fortress” can be related using the predicate “attack”, giving “attack(army, fortress)”. This would have order 1.

A predicate has the order 1 plus the maximum of the order of its arguments. “Army” and “fortress” are order 0; “greater_than(X, Y)” would be order 1; but “CAUSE[greater_than(X, Y), break(Y)]” would be order 2, since at least one of its arguments is already order 1.

CAUSE, IMPLIES and DEPENDS ON are typical higher-order relations. “On this definition, the order of an item indicates the depth of structure below it. Arguments with many layers of justifications will give rise to representation structures of higher order” (Gentner, 1989, p. 208).
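The rule for computing order can be written as a short recursive function. In this sketch, which is only an illustration and not the representation used in the structure mapping engine itself, an object is a plain string and a predicate is a tuple whose first element is the predicate name and whose remaining elements are its arguments.

```python
def order(term):
    """Objects have order 0; a predicate has order 1 plus the
    maximum order of its arguments."""
    if isinstance(term, str):
        return 0
    _predicate, *arguments = term
    return 1 + max((order(arg) for arg in arguments), default=0)


greater_than = ("greater_than", "X", "Y")
breaks = ("break", "Y")
cause = ("CAUSE", greater_than, breaks)

print(order("army"))        # 0: an object
print(order(greater_than))  # 1: a relation between objects
print(order(cause))         # 2: a relation between relations
```

The value returned is the depth of structure beneath the term, which is the sense of "order" in the quotation from Gentner (1989).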

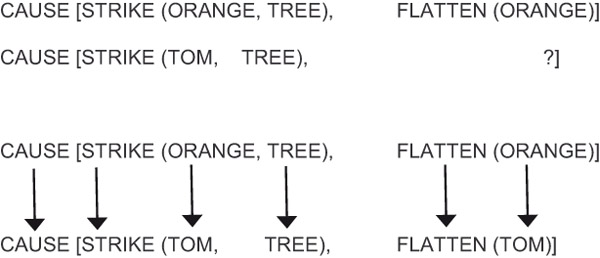

Mapping an explanatory structure allows one to make inferences in the new domain or problem, since the relations which apply in the source can be applied in the target. Notice that the inferences are based on purely structural grounds. A structure such as: CAUSE [STRIKE (ORANGE, TREE), FLATTEN (ORANGE)] can be used in a Tom and Jerry cartoon to decide what happens when Tom smashes into a tree – CAUSE [STRIKE (TOM, TREE), ?] – to generate the inference CAUSE [STRIKE (TOM, TREE), FLATTEN (TOM)]. The missing part concerning what happens when Tom strikes a tree can be filled in by referring to the structure expressing what happens when an orange hits the tree. The predicate FLATTEN (ORANGE) is inserted into the target with ORANGE mapped to TOM (Figure 3.8).
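The Tom and Jerry inference can be sketched in a few lines. This is a toy illustration under strong assumptions, not the algorithm of the structure mapping engine: terms are tuples as in predicate notation, the helper names `align`, `substitute` and `project_inference` are invented for this example, and only identical predicates are allowed to match.

```python
def align(source, target, mapping):
    """Extend `mapping` so that source lines up with target, or return None."""
    if isinstance(source, str) or isinstance(target, str):
        if not (isinstance(source, str) and isinstance(target, str)):
            return None  # an object cannot align with a predicate
        if mapping.get(source, target) != target:
            return None  # source object is already mapped elsewhere
        if target in mapping.values() and mapping.get(source) != target:
            return None  # target object is taken: keep mapping one-to-one
        return {**mapping, source: target}
    if source[0] != target[0] or len(source) != len(target):
        return None  # predicates (and their number of arguments) must match
    for s_arg, t_arg in zip(source[1:], target[1:]):
        mapping = align(s_arg, t_arg, mapping)
        if mapping is None:
            return None
    return mapping


def substitute(term, mapping):
    """Rewrite a source term using the object correspondences found."""
    if isinstance(term, str):
        return mapping.get(term, term)
    predicate, *arguments = term
    return (predicate, *(substitute(arg, mapping) for arg in arguments))


def project_inference(source, target_fact):
    """Carry the consequent of a source CAUSE structure over to the target."""
    relation, antecedent, consequent = source
    mapping = align(antecedent, target_fact, {})
    if mapping is None:
        return None
    return (relation, target_fact, substitute(consequent, mapping))


source = ("CAUSE", ("STRIKE", "ORANGE", "TREE"), ("FLATTEN", "ORANGE"))
target_fact = ("STRIKE", "TOM", "TREE")

inference = project_inference(source, target_fact)
print(inference)
# ('CAUSE', ('STRIKE', 'TOM', 'TREE'), ('FLATTEN', 'TOM'))
```

Nothing in the code knows anything about oranges, cats or trees. The inference follows purely from the shape of the structure, and like any candidate inference it remains a working hypothesis to be evaluated.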

For Gentner, analogising involves a one-to-one systematic mapping of the structure of the base domain (source) onto the target. The surface features – the object descriptions – are not mapped onto the target since they play no role in the relational structure of the analogy. For example, the colour of the orange or of Jerry is irrelevant since it plays no part in the structure. The problems dealt with by Gick and Holyoak (1980, 1983) are therefore analogous under this definition.

Structure mapping has been used to explain and model the process of analogising in a number of areas such as metaphor (Gentner & Bowdle, 2008; Gentner, Bowdle, Wolff, & Boronat, 2001); writing processes (Nash, Schumacher, & Carlson, 1993); and learning and generating new knowledge (Gentner & Colhoun, 2010). Sagi, Gentner and Lovett (2012) found that structure mapping could explain the differences in response times to assessing whether two images were different or similar and why.

When relational structures are ignored

There are cases where, rather than using a relational structure to generate analogical transfer, people including children have used the superficial features of objects to generate inferences. Gentner (Gentner & Colhoun, 2010; Gentner & Jeziorski, 1993) has argued that the alchemists from the 11th to the 17th century used a different form of analogical reasoning from modern scientists and used superficial similarity as the basis for generating inferences since they believed that, when objects resembled each other due to surface features such as colour, they were necessarily linked. Thus yellow flowers and yellow roots could be used to combat jaundice and red plants were effective against problems of the blood. This was an essentially magical belief in a form of cause and effect that was not based on any underlying causal relationship between the features of the base and the target. Similarly for hundreds of years the planets were associated with metals based mostly on colour (the Sun with gold, the Moon with silver, Mars with iron, etc.). Saturn was slow, therefore weighty, therefore linked to lead. There were seven known planets and seven known metals up to the mid-18th century. When platinum was discovered in Colombia, the conclusion by some was that there must be an as yet undiscovered planet. However, by this time analogies based on superficial features were being challenged and chemistry was emerging from alchemy. That said, homeopathy uses the same form of reasoning and is still prevalent today (Gentner & Colhoun, 2010).

Young children are also prone to use superficial features as the basis for making inferences about situations. An example is shown in Information Box 3.3.

Information Box 3.3 Systematicity and surface similarity in the development of analogy (Gentner and Toupin, 1986)

Rationale

The aim of the study was to examine the effects of varying the systematicity of a storyline and the transparency of objects in the stories (the degree of semantic similarity between the characters in the stories and the role they played in them). Since the study was conducted with children there was a further aim of finding out if there was a developmental trend in children’s ability to use structure mapping in analogising.

Method

Gentner and Toupin (1986) presented two groups of children aged 5–7 and 8–10 with stories they had to act out. The transparency of object correspondences and the systematicity of the first (source) story were varied in later stories. Transparency was varied by changing the characters that appeared in them. For example, the original story (Gentner refers to the source as the base) included characters such as a chipmunk who helped a moose to escape from a frog.

- In the high transparency condition, characters became squirrel, elk and toad.

- In the medium transparency condition, different types of animals (not semantically related to the ones in the source) were used.

- In the low transparency condition the roles of the characters were reversed (leading to “cross-mapping”): in the new story the elk played the role of the chipmunk in the original, the toad played the role of the moose and the squirrel that of the frog. (Figure 9.4 in Chapter 9 shows another example.)

Systematicity was varied by adding a sentence to the beginning to provide a setting and a final sentence to the story in the form of a moral summary of the tale (the systematic condition). The non-systematic condition had neither a moral nor a setting.

The children were given certain roles to play and asked to act out the original story and then to act it out again with different characters.

Results and discussion

Both age groups did well when there was high transparency – similar characters playing similar roles – in spite of a lack of systematicity. However, for the medium and low transparency conditions the younger age group were more influenced by the similarity between the characters than the older age group; that is, the surface similarity led them to produce a non-systematic solution. For the older age group the systematicity allowed them to use the proper mappings.

Where the children understood the story’s underlying rationale they were able to adapt their characters to fit the story’s structure. The rest of the time they simply imitated the sequence of actions taken by the semantically similar counterparts in the earlier story. The younger children may not have had an adequate understanding of the earlier story to be able to apply the rationale behind it.

These results are not confined to children. Gentner and Schumacher (Gentner & Schumacher, 1987; Schumacher & Gentner, 1988) found the same results with adults. Their subjects had to learn a procedure for operating a computer-simulated device and then use it to learn a new device. Once again the systematicity and transparency were manipulated. The systematicity was varied by either providing a causal model of the device or simply a set of operating procedures. The transparency referred to the type of device components. The results showed that systematicity constrained learning and transfer to the target device. Transparency also had strong effects on transfer. The speed of learning the new device was greater when corresponding pairs of components were similar than when they were dissimilar.

Expository analogies

Instructing with analogies refers to “the presentation of analogical information that can be used in the form of analogical reasoning in learning” (Simons, 1984, p. 513). Another aim of using analogies in texts is to make the prose more interesting, and the two aims often overlap. However, there is always a point at which analogies break down. As a result, writers have to be very careful about the analogies they use.

In expository texts writers make much use of analogies to explain new concepts, and they are often quite effective in helping students understand them. The flow of water, for instance, is traditionally used to explain the flow of current in electricity. Indeed, “flow” and “current” applied to electricity derive from that analogy. Rutherford made the structure of the atom more comprehensible by drawing an analogy to the structure of the solar system. When analogies are used in a pedagogical context, their aim is often to allow the student to abstract out the shared underlying structure between the analogy and the new concept or procedure the student has to learn. Writers hope that it can thereafter be used to solve new problems or understand new concepts involving that shared structure.

The kind of analogy people develop or are told can have a strong influence on their subsequent thinking. If you think that some countries in the Far East stand like a row of dominoes, then when one falls under communist control the rest will fall inevitably one after the other – that’s what happens to rows of dominoes. If you think that Saddam Hussein is like Hitler and that the invasion of Kuwait was like the invasion of Poland in the Second World War, then this will influence your response to the invasion and to your dealings with Iraq. If your theory of psychosexual energy (Freud) or of animal behaviour (Tinbergen) takes hydraulics as an analogy, then you will naturally see aspects of human behaviour as the results of pressure building up in one place (suppressing sexual urges) and finding release in another (dreams and neuroses).

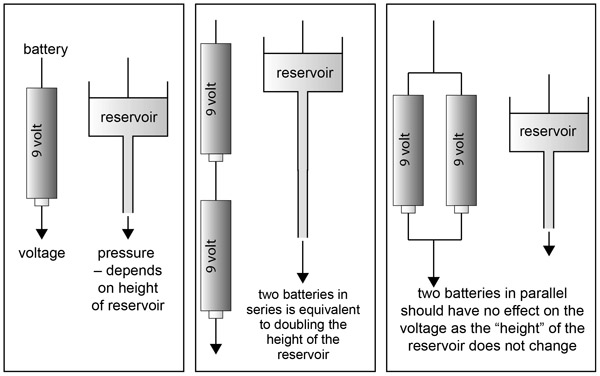

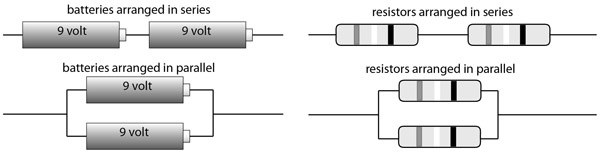

Flowing waters or teeming crowds: mental models of electricity

Our understanding of physical systems is often built on analogy. There are, for example, two useful analogies of electricity: one that involves the flow of water and one that involves the movement of people. These analogies can either help or hinder one’s understanding of the flow of electricity. Gentner and Gentner (1983) used these two analogies to examine their effects on how the flow of electricity is understood. The analogy with a plumbing system can be quite useful in understanding electricity. Several aspects of plumbing systems map onto electrical systems (see Table 3.4 and Figures 3.9 and 3.10). Gentner and Gentner found that subjects who were given an analogy with flowing water were more likely to make inferences about the effects of the flow of current in batteries that were in series and in parallel than those subjects who were given a moving people analogy. However, when the problem concerned resistors, more subjects who had been given the moving people analogy were able to infer the effects of the resistors than those who had been given the flowing water analogy. Those who were reasoning from a flowing water analogy believed the flow would be restricted no matter how the resistors were arranged. People using the analogy with people moving through turnstiles had a better mental model of the flow of electricity through the resistors.
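The contrast between the two arrangements of resistors can be made concrete with Ohm's law. The following is a minimal worked sketch, assuming two identical ideal resistors of resistance $R$ connected to an ideal battery of voltage $V$; the symbols $R$, $V$ and $I$ are illustrative and are not taken from Gentner and Gentner's materials.

$$
\begin{aligned}
\text{one resistor:} \quad & I_{1} = \frac{V}{R} \\
\text{two in series:} \quad & R_{\text{series}} = R + R = 2R, \quad I_{\text{series}} = \frac{V}{2R} = \tfrac{1}{2} I_{1} \\
\text{two in parallel:} \quad & \frac{1}{R_{\text{parallel}}} = \frac{1}{R} + \frac{1}{R}, \quad R_{\text{parallel}} = \frac{R}{2}, \quad I_{\text{parallel}} = \frac{2V}{R} = 2 I_{1}
\end{aligned}
$$

Under these assumptions a second resistor halves the current when added in series but doubles it when added in parallel. A second turnstile beside the first lets more people through, which fits the parallel case, whereas a model in which every narrow section of pipe simply restricts the flow predicts less current in both arrangements.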

| target | | source: flowing water | source: teeming crowds |
|---|---|---|---|
| resistor | → | narrow section of pipe | turnstile |
| current | → | rate of flow of water | movement of people |
| voltage | → | pressure | number of people |
| battery | → | reservoir | ? |

Issing, Hannemann and Haack (1989) performed an experiment in which they examined the effects of different types of representation on transfer. The representations were pictorial analogies of the functioning of a transistor. They presented subjects with an expository text alone, the text plus a sluice analogy, the text plus a human analogy, and finally the text plus an electronics diagram of the transistor. Issing et al. found that the sluice analogy (involving the flow of water) led to better understanding of the function of the transistor. The human analogy was less effective and the diagram and text alone were the least effective.

Issing et al. argue that analogies depicting human-like situations are regarded as artificial and take on “more a motivating than cognitive function”. This, they say, explains why the human analogy fails to be as effective as the flow of water. This is, perhaps, a strange conclusion given that they are taking into account Gentner’s view of structure mapping. A more likely reason is that the water analogy shares more higher-order relations with the operation of transistors than the human analogy, and this would account for the stronger effect of the sluice analogy.

Donnelly and McDaniel (1993) performed a series of experiments on the effects of analogies on learning scientific concepts. In experiment 4 in their study, subjects were given either a literal version of the scientific concept, an analogy version or a familiar-domain version. Three examples are given in Table 3.5.

The study showed several important features of the effectiveness of analogies in teaching. First, the analogies helped subjects answer inference questions but did not help with the recall of facts in the target domain. One can understand this in terms of the goals of learning: providing an analogy obliges the learner to look at how the structure of the analogy can help understand the new concept. Providing just a literal version obliges the learner to concentrate more on the surface features. To put it yet another way, people will tend to concentrate on the surface features of new concepts or situations or problems unless given a way of re-analysing the concept or situation or problem (see Chapter 4).

Second, the analogies helped novices but not advanced learners. If the concept being tested is in a domain that is already known, then the analogy serves no useful purpose, since one can solve problems using one’s pre-existing domain-relevant knowledge (see also Novick & Holyoak, 1991). Analogies work for novices because they provide an anchor, or “advance organiser” (Ausubel, 1968). That is, novices can use a domain they know to make inferences about a domain they do not know.

| Version | Passage |
|---|---|
| Literal Version | Collapsing Stars. Collapsing stars spin faster and faster as they fold in on themselves and their size decreases. This phenomenon of spinning faster as the star’s size shrinks occurs because of a principle called “conservation of angular momentum”. |
| Analogy Version | Collapsing Stars. Collapsing stars spin faster and faster as their size shrinks. Stars are thus like ice skaters, who pirouette faster as they pull in their arms. Both stars and skaters operate by a principle called “conservation of angular momentum”. |
| Familiar-Domain Version | Imagine how an ice skater pirouettes faster as he or she pulls in his or her arms. This ice skater is operating by a principle called “conservation of angular momentum”. |
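The principle named in all three versions can be written out briefly. This is a sketch under simplifying assumptions that are not part of Donnelly and McDaniel's materials: the star is treated as a uniform sphere of constant mass $M$ with no external torque acting on it, and $R$, $\omega$ and $L$ are illustrative symbols for radius, angular velocity and angular momentum.

$$
\begin{aligned}
L &= I\omega, \qquad I = \tfrac{2}{5} M R^{2} \\
\tfrac{2}{5} M R_{1}^{2}\,\omega_{1} &= \tfrac{2}{5} M R_{2}^{2}\,\omega_{2}
\quad\Longrightarrow\quad
\omega_{2} = \omega_{1}\left(\frac{R_{1}}{R_{2}}\right)^{2}
\end{aligned}
$$

On these assumptions, halving the radius quadruples the rate of spin, which is the relation the skater analogy is meant to convey.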

Third, Donnelly and McDaniel suggest that subjects were able to argue (make inferences) from the analogy prior to the induction of a schema. That is, there was no integration of the source analogy with the target. This emphasises that schema induction is a by-product of analogising and that schemas are built up slowly (depending on the complexity of the domain).

There have been many further studies of between-domain analogies used to explain scientific concepts. There are also studies of how they are used in many fields. Pena and de Souza Andrade-Filho (2010) describe analogies in medicine and list their use as being “valuable for learning, reasoning, remembering and naming”.

The analogical reasoning also underlies modeling strategies, as long as we test a hypothesis in a model, and then try to extrapolate the results to other analog situations. The relation between the model and the “real” situation should operate within the same constraints of similarity, structure and purpose, proposed for an analogy. Guiot et al. (2007), for example, found that the analogy between cancer invasion and a “splashing water drop” leads to an understanding of tumor invasion as controlled by a parameter that is proportional to confining pressure and tumor radius and inversely proportional to its surface tension.

(Pena & de Souza Andrade-Filho, 2010, p. 613)

Niebert, Marsch and Treagust (2012) claim that it is not possible to teach, reason about or understand concepts in physics without analogies, and explaining physics concepts to the public requires a careful choice of analogy and metaphor (Brookes & Etkina, 2007).

Influencing thought

It is obvious from the preceding discussion that analogies can play an important role in influencing thinking. One way that analogies can operate is by activating a schema that in turn influences the way we think about a current situation. Several years ago genetically modified (GM) food was labelled “Frankenfood” by one British newspaper and the metaphor has stuck. The phrase probably helped influence the direction in which the debate about GM foods in Britain was going at the time. Britain’s bid to renegotiate membership of the EU in 2015 was at first met with a flurry of metaphors: French Economy Minister Emmanuel Macron argued that we can’t have an “à la carte” European Union and also referred to Britain’s stance as wishing to “dismantle a mansion”. French Foreign Minister Laurent Fabius used an often reported metaphor: “One can’t join a football club and decide in the middle of the match we are now going to play rugby.” Similarly, Keane (1997, p. 946) points out that using a war analogy when discussing drug pushing is likely to bring to mind “solutions that are based on police action and penal legislation rather than solutions that involve the funding of treatment centres or better education (an illness-of-society analogy might have the opposite effect)”.

In the business world, Cornelissen, Holt and Zundel (2011) have outlined ways in which organisational practices might be improved through persuasion using metaphors and analogies from outside the corporate or business domain. Landau, Meier and Keefer (2010) refer to the kinds of metaphors we use every day, such as “cleanliness” referring to morality, “altitude” referring to social or economic status, “temperature” referring to degrees of friendliness and so on. They argue that such conceptual metaphors constitute “a unique cognitive mechanism that shapes social thought and attitudes” (p. 1046).

Thus we have to be extremely careful about the nature of the analogies we present when demonstrating something since they can have a profound effect on thinking about the current situation: