5

Developing skill

As a result of solving problems within a domain we learn something about that domain. Learning manifests itself as a permanent change in a person’s behaviour as a result of experience, although such a change can be further modified or even interfered with by further experience. The more experience one has, the more one is likely to develop a degree of expertise in a specific field. One can also acquire a depth of knowledge about the world around us as well as a deep knowledge about a particular domain. Fisher and Keil (2015) refer to the first as passive expertise influenced by our place in the world including such personal attributes as age and gender, and they refer to the second as formal expertise developed through deliberate study or practice. When we think of expertise it often tends to be formal expertise that comes to mind, although it need not refer to that.

Another definition of expertise is that of the sociologists who look at expertise in terms of social standing and through the conferment of awards and certificates. In this view, experts are people with a degree of prestige and concomitant power, and their status as experts is bestowed upon them by others in their field or even by the public. Collins and Evans (2015) and Collins, Evans and Weinel (2015) distinguish between interactional expertise and contributory expertise. The former is the kind of expertise that one might acquire, almost second-hand as it were, by examining the expertise of practitioners (those with contributory expertise) without engaging in that field of expertise oneself. Interactional experts may, for example, be journalists or social science researchers who gain a lot of knowledge about the field of the experts they are researching. In a written test there may be little to distinguish between the interactional expert and the contributory expert, but when it comes to practice there is a distinction between the two.

While Collins and Evans regard interactional expertise as being related to linguistic expertise in their conception of expertise, from a cognitive point of view the distinction between interactional and contributory expertise would seem to be more like the distinction between declarative knowledge and procedural knowledge. Both develop as a result of learning: one learns facts about a field of knowledge (declarative knowledge) and also increases one’s related skill in that field (procedural knowledge). The two are necessarily interconnected since procedural knowledge often develops as a result of using one’s declarative knowledge in specific contexts. Declarative knowledge can come about as a result of instruction, reading and being told, but much is the result of the process of induction. With experience of the world, including all kinds of problems, we induce schemas that help us to recognise and categorise problem types and hence to access the relevant solution procedure. With repeated practice a procedure can become automated. This automaticity, in turn, frees up resources to deal with any novel aspects of a particular problem or situation. Learning and continual practice in a particular domain can lead eventually to expertise. Indeed, it is often said that an expert is someone who has had 10 years’ experience in a domain (Simon & Chase, 1973). There is obviously a continuum between absolute beginner and complete mastery of a field; but for many purposes, whatever the domain, someone whose knowledge and performance are superior to those of the general population can be considered a relative expert.

There is a variety of ways in which expertise can be and has been studied. For example, one can look at the development of expertise over time; one can compare directly experts with novices on a number of dimensions; one can look at individual examples of genius or of “ordinary” experts; or one can find out the kinds of things that experts do well and what they do to improve. Nevertheless, whatever aspect one concentrates on, there is at least an implicit comparison between experts and novices, the topic of Chapter 6. We begin, however, with how knowledge and skill are acquired in the first place.

Induction

Inductive reasoning allows us to generalise from our experience of the world. We don’t have to encounter every single piece of coal in the world to learn that coal burns – a few instances will do. The ability to reason inductively is extremely important for our survival. It allows us to reason from specific examples to reach a (probable) conclusion which in turn allows us to make predictions: “I’m cold. If I set fire to those black rocks I’ll get warm.” To put it another way, what appears to be true for a sample of a population or category is assumed to be true for the whole population or category. This is known as enumerative induction and is based on the argument form:

- Some As are Bs

- Therefore all As are Bs

Induction is not therefore a very sophisticated form of reasoning or inferencing, but for purposes of our survival it doesn’t have to be. Most organisms are innately predisposed to associate event A with event B. A rat can learn that pressing a lever means that food pellets will appear. Cats learn what kinds of things they can eat and what not to eat, what situations are dangerous, and that they can get fresh food at 5 o’clock in the morning by bursting into bedrooms and meowing loudly. Along with other animals we are even predisposed to make generalisations from single instances. It usually takes only a single attempt to eat a particular poisonous mushroom to ensure we avoid all mushrooms that look the same thereafter. This is liberal induction (Robertson, 1999), and we may even over-generalise and avoid any kind of mushroom forever after. Indeed, the newspapers are often full of stories of people tarring entire populations with the same brush as a result of the actions of a tiny unrepresentative sample from that population. The main problem with induction, as far as achieving our goals is concerned, is that there is no guarantee that the inference is correct. Inducing things too readily can be dangerous: “induction should come with a government health warning,” as Johnson-Laird has put it (Johnson-Laird, 1988a, p. 234).

However, the kind of induction that is more pertinent to problem solving in technical domains is conservative induction (Medin & Ross, 1989). This kind of induction means that people are very careful about just how far they think it is safe to generalise. In terms of problem solving, conservative induction means that we rely heavily on the context and the surface details of problems when using an example to solve another problem of the same type. As a result the generalisations we gradually form contain a great deal of specific, and probably unnecessary, information. Furthermore, according to Bassok (1997), different individuals are likely to induce different structures from different problem contents. Spencer and Weisberg (1986) and Catrambone and Holyoak (1989) found evidence for redundant and irrelevant specific information in whatever schema subjects had derived from the examples they had seen. Chen, Yanowitz and Daehler (1995) argued that children found it easier to use abstract principles when they were bound to a specific context. Bernardo (1994) also found that generalisations derived from problems included problem-specific information (see also Perkins & Salomon, 1989). Reeves and Weisberg (1993) argued that the details of problems are necessary to guide the transfer of a solution principle from one problem to another. This kind of inductive reasoning tends to be used only for “unnatural” or “biologically secondary” problems (see Chapter 1). Induction is conservative in this way because we tend to base our early categorisations of (unnatural) objects, problems or whatever on the features of previously encountered examples rather than on abstractions. If those features vary little, then learning can be quite fast and the schema formed relatively “fixed” (Chen & Mo, 2004). If the features vary, then schema induction is more conservative.

Schema induction

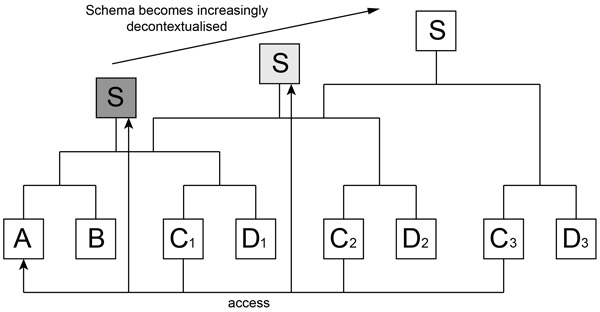

Figure 5.1 shows the effect of repeated exposure to similar problems. With experience the schema becomes increasingly decontextualised. Eventually the solver can access either the schema directly (which means, in effect, that the solver knows how to solve the problem and does not need an example to work from) or a paradigmatic example that instantiates the schema.

The literature on induction tends to concentrate on the induction of categories (such as fruit, animals, furniture, etc.), but the findings are equally applicable to learning problem categories (Cummins, 1992; Ross, 1996; VanLehn, 1986). Problem categories are generally characterised by what you have to do. More specifically, a problem type is usually characterised not only by the set of features it exhibits but also by the relationships between those features. Those relationships, in turn, tend to relate to or be determined by what you have to do – i.e., the kinds of operators that should be applied to them.

One model of how we induce both categories and rules from experience is that of Holland, Holyoak, Nisbett and Thagard (1986). They have produced a general cognitive architecture that lays emphasis on induction as a basic learning mechanism. An important aspect of Holland et al.’s model is the emphasis placed on rules representing categorisations derived from experience. Rules that lead to successful attainment of a goal are strengthened and are more likely to fire in future. Information Box 5.1 presents some of the main features of the model.

Information Box 5.1 Processes of induction

Holland, Holyoak, Nisbett and Thagard (1986) present a model of induction based on different types of rules. Synchronic rules represent the general features of an object or its category membership. Diachronic rules represent changes over time. There are two kinds of synchronic rule: categorical and associative (Holland et al., 1986, p. 42).

Categorical rules include rules such as:

- If an object is a dog, then it is an animal.

- If an object is a large slender dog with very long white and gold hair, then it is a collie.

- If an object is a dog, then it can bark.

Note that these rules encompass both a hierarchy (dogs are examples of animals) and the properties of individual members (dogs belong to the set of animals that bark), in contradistinction to the conceptual hierarchy of Collins and Quillian (1969) where properties are attached to examples at each level of a hierarchy.

Associative rules include:

- If an object is a dog then activate the “cat” concept.

- If an object is a dog then activate the “bone” concept.

Associative rules therefore represent the effects of spreading activation or priming.

Diachronic rules are also of two kinds: “predictor” and “effector”.

Predictor rules permit expectations of what is likely to occur in the world. Examples of predictor rules are:

- If a person annoys a dog then the dog will growl.

- If a person whistles to a dog then the dog will come to the person.

Effector rules tell the system how to act in a given situation. They include rules such as:

- If a dog chases you then run away.

- If a dog approaches you with its tail wagging then pet it.

I toss a pen in the air and catch it when it falls back down. I kick a ball in the air; it rises then falls to Earth. I toss a set of keys to someone who catches it as it drops into her hand. The pen, the ball and the keys represent a set of objects (let’s call it S) that get thrown up and come down again due to the influence of gravity. This state of affairs is represented in Figure 5.2.

The set of objects represented by the ball in Figure 5.2 goes through a change of state from time t when it is rising to time (t + 1) when it is falling. Something acts on the upward moving object to produce a change of state (gravity, in this case). This change of state comes about through the action of a transition function (T). Thus if T acts on S(t) it brings about a change of state in the world which can be represented as S(t + 1). We therefore derive the equation:

T[S(t)] = S(t + 1).

When we are referring to human behaviour we are interested in the output of a cognitive system (our own actions on the world) so the equation could be modified thus:

T[S(t), O(t)] = S(t + 1),

where O(t) is the output of the cognitive system at time t. O(t) can also be regarded as applying a mental operator to a state of affairs in the world at time t just as gravity operated on the ball in Figure 5.2 at time t.
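As a rough illustration only, the modified equation can be read as a function that takes the current state and the system's output and returns the next state. The sketch below is not part of Holland et al.'s model: the state labels, the lookup table and the names `transition` and `run` are hypothetical, chosen simply to mirror the pen-tossing example.

```python
# Hypothetical sketch of T[S(t), O(t)] = S(t + 1).
# States and outputs are plain strings; the table is invented for illustration.
TRANSITIONS = {
    ("pen in hand", "toss"): "pen rising",
    ("pen rising", "wait"): "pen falling",   # gravity does the work here
    ("pen falling", "catch"): "pen in hand",
}


def transition(state, output):
    """Return S(t + 1) given S(t) and O(t); unknown pairs leave the state unchanged."""
    return TRANSITIONS.get((state, output), state)


def run(state, outputs):
    """Apply a sequence of outputs O(1)..O(n) and return every state visited."""
    history = [state]
    for output in outputs:
        state = transition(state, output)
        history.append(state)
    return history


states = run("pen in hand", ["toss", "wait", "catch"])
```

Here `states` lists the successive states of the world, beginning and ending with the pen in the hand. Treating an unknown state and output pair as "no change" is a simplifying assumption of the sketch.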

Our mental representation of the world tends to simplify the real world by categorising aspects of it. To make sense of the world and not overload the cognitive system a categorisation function “maps sets of world states into a smaller number of model states”. The model of the world produced by such a “many-to-one” mapping is known as a homomorphism.

Now sometimes generalisations (categorisations) can lead to errors. If you say “Boo” to a bird it will fly away. However, no amount of booing will cause a penguin to fly away despite the penguin being a bird. To cope with penguins another more specific rule is required. You end up with a hierarchy from superordinate (more general) categories to subordinate (more specific) categories and instances. Higher-level categories provide default expectations (birds fly away when you say “Boo!”). An exception (penguins waddle swiftly away when you say “Boo!”) evokes a lower level of the hierarchy. A hierarchical model that includes a set of transition functions (one for each level) is known as a quasi-homomorphism or q-morphism for short.
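The idea of default expectations that are overridden lower down a hierarchy can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Holland et al.'s formalism: the dictionaries `PARENT` and `RESPONSE` and the function `predict` are hypothetical names, and the categories are taken from the "Boo!" example.

```python
# Hypothetical default hierarchy: each category points to its superordinate.
PARENT = {"penguin": "bird", "sparrow": "bird", "bird": "animal"}

# Expected response to "Boo!", stored only at the level where it is needed.
RESPONSE = {
    "bird": "flies away",               # default expectation
    "penguin": "waddles swiftly away",  # exception held at a lower level
}


def predict(category):
    """Walk up from the most specific category until an expectation is found."""
    while category is not None:
        if category in RESPONSE:
            return RESPONSE[category]
        category = PARENT.get(category)
    return None  # no expectation at any level
```

Calling `predict("sparrow")` falls through to the default for birds, whereas `predict("penguin")` is answered by the more specific rule before the default is ever consulted.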

Figure 5.3 represents a mental model based on a state of affairs in the world (a sequence of operators applied to a sequence of problem states). The top half represents the world including transitions between states, and the bottom half is a mental model of that world.

Holland, John H., Keith J. Holyoak, Richard E. Nisbett, and Paul R. Thagard., Induction: Processes Of Inference, Figure 2.5, p. 40, © 1986 Massachusetts Institute of Technology, by permission of The MIT Press.

A problem model is valid only if (1) given a model state S′i, and (2) given a model state S′g that corresponds to a goal state Sg in the environment, then (3) any sequence of actions (operator categories) in the model, [O′ (1), O′ (2), … O′(n)], which transforms S′i into S′g in the model, describes a sequence of effector actions that will attain the goal Sg in the environment. An ideal problem model thus is one that describes all those elements in the world necessary and sufficient for the concrete realization of a successful solution plan. The process of induction is directed by the goal of generating mental models that increasingly approximate this ideal.

(Holland et al., 1986, p. 40)

In the homomorphism represented in Figure 5.4, P is a categorisation function that serves to map elements in the world to elements in the model.

Analogical problem solving can be modelled in this system by assuming a “second-order morphism”. Figure 5.4 shows the original (source) model as World A and the target problem as World B. Analogical problem solving takes place by using the model of the source to generate a model of the target.

The categorisation function PA and transition function T′A in the source model provide a morphism for some aspects of the world (problem). There is also an analogical mapping function P* that allows a model of the target problem to be generated by mapping aspects of the source to the target.

Generalisation

Similar conditions producing the same action can trigger condition-simplifying generalisation. Suppose there are learned rules such as “If X has wings and X is brown then X can fly” and “If X has wings and X is black then X can fly.” A simplifying generalisation would remove the “X is brown” and “X is black” conditions, since they do not seem to be relevant to flying.
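One way to picture condition-simplifying generalisation is as keeping only the conditions that two rules with the same action have in common. The following is a sketch under that assumption; the rule representation (a set of conditions paired with an action) and the function `simplify` are hypothetical and are not taken from Holland et al.

```python
def simplify(rule_a, rule_b):
    """Generalise two rules that share an action by keeping only shared conditions.

    Each rule is a (conditions, action) pair, with conditions as a frozenset.
    Returns None when the actions differ or no condition is shared.
    """
    conditions_a, action_a = rule_a
    conditions_b, action_b = rule_b
    if action_a != action_b:
        return None
    shared = conditions_a & conditions_b
    return (shared, action_a) if shared else None


brown = (frozenset({"has wings", "is brown"}), "can fly")
black = (frozenset({"has wings", "is black"}), "can fly")
general = simplify(brown, black)
```

The resulting rule retains only "has wings" as its condition, since colour differs between the two source rules and so appears irrelevant to flying.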

People have beliefs about the degrees to which instances of objects that form categories tend to vary. That is, the generalisations that we form depend on the nature of the categories and our beliefs about the variability of features of instances of the category. Induction therefore ranges from liberal to conservative. Holland et al.’s PI (“processes of induction”) system takes this variability into account in inducing instance-based generalisation.

Specialisation

It is in the nature of our world that there are exceptions to rules. Once we have learned a rule that says that if an animal has wings then it can fly and we encounter a penguin our prediction is going to be wrong. PI can generate an exception rule (the q-morphism is redefined) that seems to cover the case of penguins. The unusual properties of penguins can be built into the condition part of the rule.
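Specialisation can be sketched as building a new rule when a prediction fails, with the unusual properties of the instance added to the condition. This is an illustrative sketch only: the functions `specialise` and `predict`, the feature labels, and the convention that the most specific matching rule wins are all assumptions made for the example rather than a description of how the model is implemented.

```python
def specialise(rule, instance_features, observed, unusual):
    """Build an exception rule if the rule applied but its prediction failed.

    The exception's condition is the old condition plus the unusual properties.
    """
    conditions, prediction = rule
    if not conditions <= instance_features or observed == prediction:
        return None  # the rule did not apply, or its prediction held
    return (conditions | unusual, observed)


def predict(rules, features):
    """Return the prediction of the most specific rule that matches."""
    matching = [rule for rule in rules if rule[0] <= features]
    if not matching:
        return None
    return max(matching, key=lambda rule: len(rule[0]))[1]


wings_rule = (frozenset({"has wings"}), "can fly")
penguin = frozenset({"has wings", "swims", "black and white"})
exception = specialise(
    wings_rule, penguin, "cannot fly", frozenset({"swims", "black and white"})
)
rules = [wings_rule, exception]
```

With both rules in place, a penguin is covered by the exception while an ordinary winged animal is still covered by the general rule.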

Expertise

The acquisition of expertise can be modelled by assuming that the sequence of rules that led to a successful solution is strengthened by a “bucket brigade” algorithm. This algorithm allows strength increments to be passed back along a sequence of linked rules. The final rule in the chain is the one that gets the most strength increments. Since PI is a model designed to show how we learn from experience of instances, and since it includes algorithms for both generalisation and specialisation, it is a general model that helps explain some of the processes involved in the development of expertise.
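The passing back of strength along a chain of rules can be illustrated with a deliberately simplified sketch. It should not be read as the bucket brigade algorithm itself, which is more elaborate: here the final rule simply receives the whole reward and each earlier rule receives a fixed fraction of its successor's increment. The function name, the `pass_back` parameter and the rule labels are all hypothetical.

```python
def bucket_brigade(strengths, chain, reward, pass_back=0.5):
    """Return updated strengths after a successful chain of rules.

    The last rule gets the full reward; each earlier rule gets a fixed
    fraction (pass_back) of the increment given to the rule after it.
    """
    updated = dict(strengths)
    increment = reward
    for rule in reversed(chain):
        updated[rule] = updated.get(rule, 0.0) + increment
        increment *= pass_back
    return updated


strengths = {"find umbrella": 1.0, "take umbrella": 1.0, "open umbrella": 1.0}
chain = ["find umbrella", "take umbrella", "open umbrella"]
updated = bucket_brigade(strengths, chain, reward=4.0)
```

In this example the final rule gains 4.0, the one before it 2.0 and the first 1.0, so the rule closest to the goal is strengthened most, as the text describes.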

There are various kinds of knowledge that can be encapsulated in schemas since schemas can represent structured knowledge from the specific to the abstract. Marshall (1995) has listed some of the types of knowledge captured by problem schemas. These include knowledge of typical features or configurations of features, abstractions and exemplars, planning knowledge and problem solving algorithms. Information Box 5.2 gives these in more detail and also identifies five basic word problem schemas that can be derived from a problem’s textbase.

Information Box 5.2 Problem schemas (Marshall, 1995)

Marshall states that there are four knowledge types associated with schemas:

Identification knowledge. This type of knowledge is made up of a configuration of several problem features. It is the aspect of a problem schema that allows pattern recognition. For example, in the river problems that Hinsley, Hayes and Simon’s (1977) subjects recognised, which involved boats or rafts travelling at different speeds and being pulled along by the current, they would include those aspects mentioned by expert subjects such as speeds of the boats and river, direction of travel and so forth.

Elaboration knowledge. This is declarative knowledge of the main features of a problem. It includes both specific examples and more general abstractions. Both of these allow the creation of a mental model (a problem model) in a given situation. For example, there may be a specific paradigmatic river crossing example that allows people to access the relevant solution schema.

Planning knowledge. This is an aspect of problem solution knowledge used to create plans, goals, and sub-goals. Someone could recognise a problem type (through identification knowledge and elaboration knowledge) but not have the planning knowledge to solve it. For example, “This is a recursion problem, isn’t it? But I have no idea what to do.”

Execution knowledge. This is the knowledge of the procedure for solving a problem allowing someone to carry out the steps derived through the planning knowledge. The solver is here following a relevant algorithm, for example, or has developed a certain procedural skill related to this type of problem. This would include the kind of skill required to carry out a plan to cap a blown oil well, or mount a takeover bid, or whatever.

Marshall identifies five basic word problem schemas (based on the problems’ textbase):

- Change: Stan had 35 stamps in his stamp collection. His uncle sent him eight more for a birthday present. How many stamps are now in his collection?

- [Operator: add quantities mentioned]

- Group: In Mr Harrison’s third grade class there were 18 boys and 17 girls. How many children are in Mr Harrison’s class?

- [Operator: add quantities mentioned]

- Compare: Bill walks a mile in 15 minutes. His brother Tom walks the same distance in 18 minutes. Which one is the faster walker?

- [Operator: subtract quantities mentioned]

- Restate: At the pet store there are twice as many kittens as puppies in the store window. There are eight kittens in the window. How many puppies are also in the window?

- [Operator: divide quantity]

- Vary: Mary bought a package of gum that had five sticks of gum in it. How many sticks would she have if she bought five packages of gum?

- [Operator: multiply quantities mentioned]
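The pairing of each schema with an operator can be expressed as a simple lookup, which makes the point that recognising the problem type is what selects the arithmetic. The sketch below is only an illustration of that pairing: the table `SCHEMA_OPERATORS` and the function `solve` are hypothetical names, and the quantities come from the five example problems above.

```python
import operator

# Hypothetical mapping from schema name to the operator listed for it.
SCHEMA_OPERATORS = {
    "change": operator.add,
    "group": operator.add,
    "compare": operator.sub,
    "restate": operator.truediv,
    "vary": operator.mul,
}


def solve(schema, first, second):
    """Apply the operator associated with the identified schema."""
    return SCHEMA_OPERATORS[schema](first, second)


stamps = solve("change", 35, 8)      # Stan's stamps after the present
children = solve("group", 18, 17)    # boys plus girls
difference = solve("compare", 18, 15)  # minutes by which Tom is slower
puppies = solve("restate", 8, 2)     # kittens divided by two
sticks = solve("vary", 5, 5)         # packages times sticks per package
```

Note that the sketch assumes the schema has already been identified and the quantities extracted in the right order; in Marshall's terms that is the work of identification and elaboration knowledge, which the code does not attempt to model.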

In Piagetian terms, a problem that appears to match a pre-existing schema can be assimilated into the schema. However, when aspects of the context or the solution procedure differ, the schema may need to be adapted through some form of re-representation or restructuring – “accommodation” in Piaget’s terms. Chen and Mo (2004) found evidence that solving problems whose procedures varied little led to a schema formation with a limited range of applicability and, indeed, to mental set (they used variations of Luchins’s (1942) water jugs experiment). Where problems required adaptations to the procedural operations required (the detailed implementation of procedures that instantiate an abstract solution principle), a schema was formed at a level of abstraction that allowed the students to solve far transfer problems. They argue that exposure to problems that vary in multiple dimensions such as solution procedure, quantities involved, semantic context or combinations of the three leads to the induction of a schema that is flexible and has a wide range of applicability: “The provision of diverse, representative source instances along different dimensions allows for the construction of a less context-embedded and/or procedure bound, and thus more flexible and powerful, schema” (Chen & Mo, 2004, p. 596).

Marshall includes execution knowledge as a specific type of schematic knowledge structure. Anderson (1993) prefers to separate the schema knowledge and the procedural knowledge required to solve it. Generally speaking, schemas serve to identify the problem type and indicate at a fairly general level how this type of problem should be solved. For a more detailed view of how a certain category of problems should be solved it is best to look at the specific procedures for solving them. In a sense this means that problems can be viewed at different grain sizes. Anderson (1993) has argued that, if we are interested in skill acquisition rather than problem categorisation, then we would be better to look at the specific solution method used. It is to this aspect of problem solving skill acquisition that we now turn.

Schema development and the effects of automatisation

Schemas are useful since they allow aspects of knowledge to be abstracted out of the context in which the knowledge was originally gained. That knowledge can therefore be transferred to another context. The benefits are that the abstracted knowledge can be applied to a wide variety of similar situations. However, there are drawbacks to schematisation.

being abstractions, they are reductive of the reality from which they are abstracted, and they can be reductive of the reality to which they are applied … An undesirable result is that these mental structures can cause people to see the world as too orderly and repeatable, causing intrusion on a situation of expectations that are not actually there.

(Feltovich, Spiro, & Coulson, 1997, p. 126)

One would therefore expect that one of the effects of schema acquisition, and of knowledge compilation in general, would be a potential rigidity in expert performance. As we have seen (Chapter 3), rule learning can lead to functional fixedness or Einstellung (Frensch & Sternberg, 1989; Sternberg & Frensch, 1992). Sternberg and Frensch, for example, have argued that experts’ automaticity frees up resources to apply to novel problems. That is, their knowledge is compiled and no longer accessible to consciousness (Anderson, 1987). Automaticity can therefore lead to lack of control when a learned routine is inappropriate to the task in hand. When a routine procedure is required, there is probably little difference between expert and novice performance. Indeed there is an argument that the routinisation of behaviour can lead experts to make more mistakes than novices (or at least mistakes that novices are unlikely to make).

In Reason’s Generic Error-Modelling System (GEMS) (Reason, 1990) there are three types of error: skill-based (SB) slips and lapses (that occur in automatised actions), rule-based (RB) mistakes, and knowledge-based (KB) mistakes. SB and RB errors are likely to be caused by following well-learned “strong but wrong” routines, and hence are more likely with increased skill levels. In SB errors “the guidance of action tends to be snatched by the most active motor schema in the ‘vicinity’ of the node at which an attentional check is omitted or mistimed” (p. 57). RB errors are likewise triggered by mismatching environmental signs to well-known “troubleshooting” rules. KB mistakes are unpredictable as

they arise from a complex interaction between “bounded rationality” and incomplete or inaccurate mental models … No matter how expert people are at coping with familiar problems, their performance will begin to approximate that of novices once their repertoire of rules has been exhausted by the demands of a novel situation.

(Reason, 1990, p. 58)

There are therefore circumstances when novices may do better or at least no worse than experts. Since novices and experts differ in the way they represent information, Adelson (1984) hypothesised that expert programmers would represent programs in a more abstract form than novices. Novices, she argued, would represent programs in a more concrete form than experts who would represent them in terms of what the program does. An entailment of this theory is that they would differ in how they dealt with questions based on a concrete or an abstract representation of a program. In one experiment she represented a program as a flowchart (an abstract representation) or described how it functioned (a concrete representation). She then asked questions about either what the program did (abstract) or how it did it (concrete). She found that she could induce experts to make more errors than novices if they were asked a concrete question when presented with an abstract representation. Likewise, novices made more errors in the abstract condition.

Cognitive architectures

Problem solving, learning and the ultimate development of expertise are behaviours that require explanation. One can try to explain them by looking solely at the behaviour emitted given the context (stimuli) in which an organism is embedded, and thus paying no attention to what is going on inside the head of the solver. We would therefore end up with more of a description than an explanation. Alternatively, one could look at the internal workings of the brain to see what is going on when people try to solve problems. However, the firing of neurons does not explain the difficulty a student has with algebra or an expert’s intuition. As Marr (1982; 2010, p. 27) put it in relation to visual perception: “Trying to understand perception by studying only neurons is like trying to understand bird flight by studying only feathers.” Somewhere between the details of the firings of neurons in the brain and overt human behaviour lies a level of abstraction that is useful for understanding how the mind works in terms of the representations and processes involved.

Marr’s computational model of visual perception involved three levels of analysis:

- 1 The computational level:

- What problems does the visual system need to solve – how to navigate around the world without bumping into things and how to avoid being eaten.

- 2 The algorithmic level:

- How does the system or device do what it does – what representations and processes are used to navigate and avoid danger.

- 3 The implementation level:

- How is the system or device physically realised – what neural structures and neuronal activities are used to implement those representations and processes.

Since Marr’s account, cognitive psychologists have focussed on the algorithmic level, and for our purposes this means the level of the architecture of cognition allowing cognitive mechanisms to be described irrespective of their implementation, which in turn allows for models of cognition to be used to test theories of cognition. Just as an architect may produce a two-dimensional plan or a three-dimensional model that shows how the different parts of a building are interconnected and serviced, so a psychologist can build a model that incorporates a theory of how we think and learn. One can build a model to examine specific aspects of cognition, such as face recognition or sentence parsing, or one can build a general model or architecture in which a variety of cognitive processes can be modelled. Anderson (2007) provides an analogy with the architecture of a house where the raw materials are such things as bricks and mortar, the specific structure is dictated by the market and the function is habitation. In a mind, the raw materials are neurons, neurotransmitters and the like, the structure is the result of evolution and the function is cognition. Such a cognitive architecture describes the functional aspects of a thinking system. For example, a cognitive system has to have input modules that encode information coming in from the outside world; there also has to be some kind of storage system that holds information long enough to use it either in the short term or in the long term; there also has to be some kind of executive control module that allows the system to make decisions about what information is relevant to its current needs and goals and to make inferences based on stored knowledge; and finally, it also has to be able to solve problems and learn from experience.

Several such general models of cognition exist. One class of them is based around the production systems introduced in Chapter 3. An example of a production rule would be “if it rains, and you want to go outside and you want to stay dry, then take an umbrella.” You might see the “if” part and the “then” part referred to in various ways, such as the left side of a rule and the right side of a rule, or goal and sub-goal, or condition–action. The condition part of a rule specifies the circumstances (“it is raining” – an external event; “I want to stay dry” – an internal state) under which the action part (“take an umbrella”) is triggered. If a set of circumstances matches the condition part of a rule then the action part is said to “fire”. A production system is therefore a set of condition–action rules and a production system architecture has some form of production memory, a declarative memory store and some form of executive control and working memory along with connections between them and the outside world.

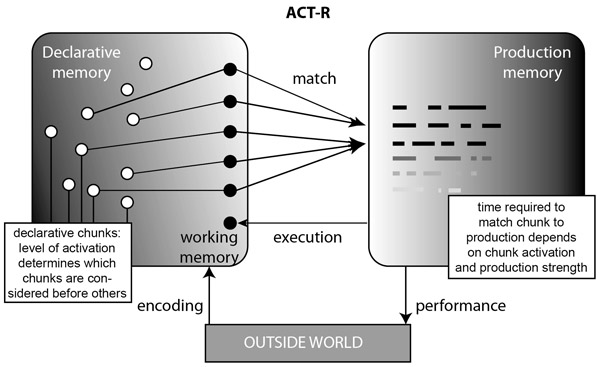

Anderson’s (1983, 1993) Adaptive Control of Thought–Rational (ACT-R) architecture includes these three memory systems. Information entering working memory from the outside world or retrieved from declarative memory is matched with the condition parts of rules in production memory and the action part of the production is then executed. Declarative memory can be verbalised, described or reported and items are stored in a “tangled hierarchy”. Procedural memory can be observed and is expressed in a person’s performance. People can get better at using procedural skills but forget the declarative base from which those skills were created.

When the executed part of the production rule enters working memory it may in turn lead to the firing of other rules. For example, information entering the senses from the world (“it is raining”) may enter working memory. This information may match with one of the conditions of a production rule (“if it is raining and you need to go outside and you want to stay dry then take an umbrella”), in which case the action part of the rule fires (“take an umbrella”), and this in turn enters working memory (“you have an umbrella”) and may in turn trigger a further production (“if you have an umbrella and it is raining then open the umbrella”), and so on.
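The chain of firings described above can be sketched in a few lines of Python. This is only an illustrative toy, not ACT-R itself: the `Production` type, the `run` function and the representation of working memory as a set of strings are assumptions made for the example.

```python
from typing import NamedTuple

class Production(NamedTuple):
    name: str
    conditions: frozenset  # facts that must all be present in working memory
    additions: frozenset   # facts the action part places in working memory

# The two umbrella rules from the text, written as condition-action pairs.
RULES = [
    Production(
        "take-umbrella",
        frozenset({"it is raining", "you need to go outside", "you want to stay dry"}),
        frozenset({"you have an umbrella"}),
    ),
    Production(
        "open-umbrella",
        frozenset({"you have an umbrella", "it is raining"}),
        frozenset({"the umbrella is open"}),
    ),
]

def run(working_memory, rules):
    """Fire every rule whose conditions match until nothing new is added."""
    memory = set(working_memory)
    fired = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            # A rule fires only if its conditions match and it would add something new.
            if rule.conditions <= memory and not rule.additions <= memory:
                memory |= rule.additions
                fired.append(rule.name)
                changed = True
    return fired, memory

fired, memory = run(
    {"it is raining", "you need to go outside", "you want to stay dry"}, RULES
)
print(fired)
```

The result of the first rule enters working memory and supplies the missing condition for the second, which is the sense in which one production can trigger another.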

In some architectures, however, declarative knowledge can be expressed as productions. Thus in the SOAR architecture (Laird, Newell, & Rosenbloom, 1987; Laird & Rosenbloom, 1996) there is no separate declarative memory store. Both SOAR and ACT-R claim to model a wide variety of human thinking. Anderson has produced a series of models that attempt to approximate as closely as possible to a general theory of cognition. Anderson’s model has evolved over several decades and has gone through a number of versions. The most recent is known as ACT-R 7, where the “7” refers to the 7th iteration of the architecture. The term “rational”, in the sense used in the architecture, does not mean “logically correct reasoning”; rather, it refers to the fact that organisms attempt to act in their own best interests. Chapter 2 referred to Newell and Simon’s idea of “intendedly rational behaviour” or “limited rationality”. Laird and Rosenbloom (1996) refer to the “principle of rationality” ascribed to Newell that governs the behaviour of an intelligent “agent” whereby “the actions it intends are those that its knowledge indicates will achieve its goals” (p. 2). Anderson’s term is based on the sense used by economists. In this sense “human behavior is optimal in achieving human goals” (Anderson, 1990, p. 28). Anderson’s General Principle of Rationality states: “The cognitive system operates at all times to optimize the adaptation of the behavior of the organism” (Anderson, 1990, p. 28).

Certain aspects of cognition seemed to be designed to optimize the information processing of the system … optimization means maximizing the probability of achieving [one’s] goals while minimizing the cost of doing so or, to put it more precisely, maximizing the expected utility, where this is defined as expected gain minus expected cost.

(Anderson, 1993, p. 47)

In a sense, where Anderson’s definition emphasises the evolutionary process that shapes our thinking, Laird and Rosenbloom’s emphasises the results of that process. Our cognitive system has evolved to allow us to adapt as best we can to the exigencies of the environment in which we find ourselves and in line with our goals. This entails a balance between the costs involved in, say, a memory search, and the gains one might get from such a search (the usefulness of the retrieved memory). As a result of taking this view of rationality, the cognitive mechanisms built into ACT-R are based on Bayesian probabilities (see Information Box 5.3). For example, the world we inhabit has a certain structure. Features of objects in the world tend to co-vary. If we see an animal with wings and covered in feathers there is a high likelihood that the animal can fly. It would be useful for a cognitive system to be constructed to make that kind of assumption relatively easily. The assumption is sometimes wrong, but only in a very small percentage of cases. The gains of having a system that can make fast inductions of this kind outweigh the costs of being wrong on the rare occasion. Assume that you are an experienced driver and you are waiting at a junction. A car is coming from the left signalling a right turn into your road. There is a very high probability that the car will, indeed, turn right. In this case, however, the costs of being wrong are rather high, so you might wait for other features to show themselves such as the car slowing down before you decide to pull out.
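The trade-off between gain and cost can be made concrete with a small calculation. The sketch below treats expected utility as expected gain minus expected cost, as in the quotation above. All of the probabilities and values are hypothetical numbers chosen for illustration, and the function name `expected_utility` is likewise an assumption.

```python
def expected_utility(p_success, gain, p_failure, cost):
    """Expected gain minus expected cost for one candidate action."""
    return p_success * gain - p_failure * cost

# Feathered animal: assuming it flies is usually right and rarely costly.
# (Hypothetical values: 97% of such animals fly; being wrong costs little.)
assume_it_flies = expected_utility(0.97, 1, 0.03, 1)

# Driver at the junction: the signalling car very probably turns,
# but the cost of being wrong is a collision. (Hypothetical values.)
P_TURNS = 0.95
options = {
    "pull out now": expected_utility(P_TURNS, 10, 1 - P_TURNS, 1000),
    "wait for the car to slow": expected_utility(1.0, 0, 1.0, 2),
}
best = max(options, key=options.get)

print(assume_it_flies > 0)
print(best)
```

With these assumed values the fast induction about the bird pays off, whereas at the junction the rare but expensive error makes waiting the better choice, even though the probability of being right is high in both cases.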

Information Box 5.3 Bayes’s theorem

Almost all events or features in the world are based on probabilities rather than certainties. The movement of billiard balls on a table becomes rapidly unpredictable the more the cue ball strikes the other balls; uncertainties govern the movement and position of fundamental particles; if someone has long hair then that person is probably a woman; most (but not all) fruits are sweet; and so on. Fortunately, some events or co-variations of features are more likely than others, otherwise the world would be even more unpredictable than it already is. Our beliefs reflect the fact that some things are more likely to occur than others. Aeschylus probably did not weigh up the benefits of going out in a boat with the potential costs of being killed by a falling tortoise.

Bayes’s theorem allows us to combine our prior beliefs or the prior likelihood of something being the case with changes in the environment. When new evidence comes to light, or if a particular behaviour proves to be useful in achieving our goals, then our beliefs (or our behaviour) can be updated based on this new evidence. The theorem is expressed as:

Odds(A given B) = LR × Odds(A),

where A refers to one’s beliefs, a theory, a genetic mutation being useful, or whatever; B refers to the observed evidence or some other type of event. Odds(A given B) could reflect the odds of an illness (A) given a symptom (B), for example. In the equation, Odds(A) refers to what is known as the “prior odds” – a measure of the plausibility of a belief or the likelihood of an event. For example, a suspicion that someone is suffering from malaria might be quite high if that person has just returned from Africa. The LR is the Likelihood Ratio and is given by the formula:

LR = P(B given A) / P(B given not-A),

where P is the probability, B is an event (or evidence) and A is the aforementioned belief, theory or other event. The Likelihood Ratio therefore takes into account both the probability of an event (B) happening in conjunction with another event (A) (a symptom accompanying an illness) and in the absence of A (the probability of a symptom being displayed for any other reason).
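A short worked example may help to show how the odds form of the theorem is applied. The sketch below takes the Likelihood Ratio to be the probability of the evidence given A divided by the probability of the evidence given not-A, as described above. The malaria figures are invented for illustration only and the function names are assumptions.

```python
def likelihood_ratio(p_b_given_a, p_b_given_not_a):
    """LR: how much more probable the evidence B is when A holds than when it does not."""
    return p_b_given_a / p_b_given_not_a

def posterior_odds(prior_odds, lr):
    """Odds(A given B) = LR x Odds(A)."""
    return lr * prior_odds

def odds_to_probability(odds):
    return odds / (1 + odds)

# Hypothetical figures: A = "has malaria", B = "has a fever".
prior_probability = 0.02         # assumed prior belief that the traveller has malaria
p_fever_given_malaria = 0.9      # assumed
p_fever_given_no_malaria = 0.1   # assumed

prior_odds = prior_probability / (1 - prior_probability)
lr = likelihood_ratio(p_fever_given_malaria, p_fever_given_no_malaria)
posterior_probability = odds_to_probability(posterior_odds(prior_odds, lr))

print(round(lr, 2), round(posterior_probability, 3))
```

Under these assumed numbers the fever multiplies the odds of malaria ninefold, yet the updated probability remains fairly low because the prior odds were low to begin with.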

Anderson has used Bayesian probabilities in his rational analysis of behaviour and cognition. For example, in the domain of memory, the probability that an item will be retrieved depends on how recently the item was used, the number of times it has been used in the past and the likelihood it will be retrieved in a given context. In problem solving there is an increased likelihood that the inductive inferences produced by using an example problem with a similar goal will be relevant in subsequent similar situations.

Memory structures in ACT-R

The current version of ACT-R makes use of both “symbolic” and “subsymbolic” levels. Anderson (2007, p. 33) describes the distinction between the two in the ACT-R system thus: “The symbolic level in ACT-R is an abstract characterization of how the brain structures encode knowledge. The subsymbolic level is an abstract characterization of the role of neural computation in making that knowledge available.” Both the declarative module and the procedural module consist of symbolic and subsymbolic levels. In the procedural module, the decision about which rule should be applied is made at the subsymbolic level, based on the utility of the production rules given the prevailing conditions (see also Simena & Polk, 2010, concerning the interface between symbolic and subsymbolic systems).

Declarative memory in ACT-R

The “chunk” is the basic unit of knowledge in declarative memory. Chunks are data structures also known as working memory elements (WMEs or “wimees”). Only a limited number of elements can be combined into a chunk – generally three. Examples would be USA, BSE, AIDS. Chunks have configural properties such that the different component elements have different roles. For example, 1066 is a chunk since it is a meaningful unit of knowledge (assuming you know about the Norman Conquest), but the elements rearranged to form 6106 would no longer constitute a chunk. Chunks can be hierarchically organised. Broadbent (1975) found that 54% of people split items entering working memory into pairs, 29% split them into three items, 9% into four items, and 9% into longer groups. French phone numbers, being divided into pairs of numbers, are therefore ideal. Generally speaking, two or three items make a reasonably good chunk size.

Chunks can also be used to represent schemas in declarative memory. Information Box 5.4 represents a schema for an addition problem. In the first part the problem is given a name (problem1). This is followed by a series of “slots”, the first one (the isa slot) being the problem type. The next row has a slot for columns which are listed in brackets. This part also shows a simple example of a hierarchical arrangement since the columns themselves are represented as schematic chunks. If we take column1 as an example we can see that this represents configural information. The chunk type (represented by the isa slot) determines to an extent the types of associated slots that follow. The chunk size is also determined by the number of associated slots.

Information Box 5.4 Schema representation of the problem 264 + 716 (Anderson, 1993, p. 30)

264

+716

- problem1
  - isa numberarray
  - columns (column0 column1 column2 column3)
- column0
  - isa column
  - toprow blank
  - bottomrow +
  - answerrow blank
- column1
  - isa column
  - toprow two
  - bottomrow seven
  - answerrow blank
- column2
  - isa column
  - toprow six
  - bottomrow one
  - answerrow blank
- column3
  - isa column
  - toprow four
  - bottomrow six
  - answerrow blank
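The slot structure of this schema maps naturally onto a small data structure. The sketch below is one possible rendering in Python, not ACT-R’s own notation: the `Chunk` class, the `WORD_TO_DIGIT` table and the `row_value` helper are assumptions introduced for the example.

```python
from dataclasses import dataclass, field

@dataclass
class Chunk:
    name: str
    isa: str                                   # the chunk type (the "isa" slot)
    slots: dict = field(default_factory=dict)  # the remaining slots and their values

def column(name, top, bottom):
    return Chunk(name, "column", {"toprow": top, "bottomrow": bottom, "answerrow": "blank"})

column0 = column("column0", "blank", "+")
column1 = column("column1", "two", "seven")
column2 = column("column2", "six", "one")
column3 = column("column3", "four", "six")

# The problem chunk refers to other chunks, giving a simple hierarchy.
problem1 = Chunk("problem1", "numberarray",
                 {"columns": [column0, column1, column2, column3]})

WORD_TO_DIGIT = {"one": 1, "two": 2, "four": 4, "six": 6, "seven": 7}

def row_value(problem, row):
    """Read one row of the array back as an integer, skipping non-digit slots."""
    digits = [WORD_TO_DIGIT[c.slots[row]]
              for c in problem.slots["columns"]
              if c.slots[row] in WORD_TO_DIGIT]
    return int("".join(str(d) for d in digits))

print(row_value(problem1, "toprow"), row_value(problem1, "bottomrow"))
```

Each column chunk holds configural information (which digit sits in which row), and the chunk type determines which slots are present.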

Chunks have a certain inherent strength or base-level activation depending on the extent to which they have been activated (needed) in the past. The activation of a chunk at a given moment is governed by an equation that incorporates the base level activation, the context which includes elements currently in the buffers, the weight of attention focussed on one of the elements in the buffers and the strength of association between the chunk and that element.
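The text does not give the equation itself. The form usually reported in the ACT-R literature adds a base-level term to a weighted sum of associative strengths, with the base level growing with the number of past uses and decaying with the time since each use. The sketch below assumes that form; the function names and the default decay of 0.5 are assumptions for illustration.

```python
import math

def base_level(times_since_use, decay=0.5):
    """Base-level activation: log of the summed, time-decayed traces of past uses.

    times_since_use: time elapsed since each past use of the chunk (all > 0).
    """
    return math.log(sum(t ** -decay for t in times_since_use))

def activation(base, sources):
    """Total activation: base level plus attention-weighted associative strengths.

    sources: (attention weight, strength of association) pairs, one per
    element currently in the buffers.
    """
    return base + sum(weight * strength for weight, strength in sources)

recent_and_frequent = base_level([1.0, 5.0, 20.0])
old_and_rare = base_level([200.0])

print(recent_and_frequent > old_and_rare)
print(activation(base_level([1.0]), [(0.5, 2.0)]))
```

A chunk that has been used often and recently therefore starts with a higher activation than one used once long ago, and a strongly associated element in the current context raises it further.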

Working memory in ACT-R

Working memory is simply that part of declarative memory that is currently active and that maintains the current goal state. Information can enter working memory from the environment, through spreading activation in declarative memory through associative priming, or as a result of the firing of a production in production memory. For example, if your goal is to do three-column addition and you haven’t yet added the rightmost column, then add the rightmost column. If your goal is to do three-column addition and you have added the rightmost column (now in working memory along with any carry), then add the middle column. If your goal is to do three-column addition and you have added the rightmost column and the middle column (both in working memory), then add the leftmost column and so on. The architecture of ACT-R, including the brain regions assumed to underpin the different components of the architecture, is shown in Figure 5.5.

Production memory in ACT-R

The production is the basic unit of knowledge in procedural memory and declarative knowledge is the basis for procedural knowledge. ACT-R requires declarative structures to be active to support procedural learning in the early stages of learning a new skill. Productions in ACT-R are modular. That is, deleting a production rule will not cause the system to crash. There will, however, be an effect on the behaviour of the system. If you had a complete model of two-column subtraction and deleted one of the production rules that represented the subtraction problem then you would generate an error in the subtraction. This way you can model the kinds of errors that children make when they learn subtraction for the first time (Brown & Burton, 1978; Young & O’Shea, 1981).
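The effect of deleting a production can be illustrated with a toy model of two-column subtraction. This sketch is only loosely inspired by the studies cited above and is not a reconstruction of them: the two rules, their names and the state representation are all assumptions.

```python
def borrow(state):
    """IF the top digit is smaller than the bottom digit THEN borrow ten from the left."""
    col = state["col"]
    if col > 0 and state["top"][col] < state["bottom"][col]:
        state["top"][col] += 10
        state["top"][col - 1] -= 1
        return True
    return False

def write_difference(state):
    """IF a column remains THEN write the difference between its two digits and move left."""
    col = state["col"]
    if col >= 0:
        state["answer"][col] = abs(state["top"][col] - state["bottom"][col])
        state["col"] -= 1
        return True
    return False

def subtract(top, bottom, rules):
    """Two-column subtraction (top >= bottom); the first matching rule fires on each cycle."""
    state = {
        "top": [top // 10, top % 10],
        "bottom": [bottom // 10, bottom % 10],
        "answer": [None, None],
        "col": 1,  # start with the rightmost column
    }
    while any(rule(state) for rule in rules):
        pass
    return state["answer"][0] * 10 + state["answer"][1]

print(subtract(52, 17, [borrow, write_difference]))  # full rule set
print(subtract(52, 17, [write_difference]))          # borrow rule deleted
```

With the borrow rule removed the model still runs, but on problems that need borrowing it takes the smaller digit from the larger in each column, a kind of systematic error commonly described in children’s subtraction. On problems that need no borrowing the two versions behave identically.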

Procedural memory in ACT-R can respond to identical stimulus conditions in entirely different ways depending on the goals of the system. The interplay between declarative and production memory in ACT-R is shown in Figure 5.6.

Learning in ACT-R

Anderson has consistently argued that all knowledge enters the system in a declarative form. Using that knowledge in context generates procedural knowledge which is represented as a set of productions in production memory. At the initial stage of skill acquisition (the cognitive stage), the creation of procedural knowledge comes about through knowledge compilation which involves proceduralisation, where domain-specific declarative knowledge replaces items in general-purpose procedures. Thereafter the composition process collapses multiple procedures required to achieve a particular goal into a single procedure (Anderson, 1982; Taatgen & Lee, 2003). The process of generalisation can be modelled by replacing values with variables. The process of specialisation does the opposite by replacing variables with specific values. The production compilation process involves the replacing of very general productions with specific rules. The example in Information Box 5.5 is from Taatgen and Lee (2003, p. 64) and demonstrates how production compilation works in the domain of air traffic control (ATC).

Information Box 5.5 General procedures that can be applied to any task

- Retrieve instruction:
  - IF you have to do a certain task,
  - THEN send a retrieval request to declarative memory for the next instruction for this task.
- Move attention:
  - IF you have to do a task AND an instruction has been retrieved to move attention to a certain place,
  - THEN send a retrieval request to declarative memory for the location of this place.
- Move to location:
  - IF you have to do a task AND a location has been retrieved from declarative memory,
  - THEN issue a motor command to the visual system to move the eyes to that location.

The air traffic controller combines the declarative instruction specific to ATC with the general procedures above to produce the rules below by combining pairs of the general rules:

- Instruction & attention:
  - IF you have to land a plane,
  - THEN send a retrieval request to declarative memory for the location of Hold Level 1.
- Attention & location:
  - IF you have to do a task AND an instruction has been retrieved to move attention to Hold Level 1,
  - THEN issue a motor command to the visual system to move the eyes to the bottom left of the screen.

“Combining either of these two rules with the rule from the original set (i.e., combining ‘instruction & attention’ with ‘move to location’ or ‘retrieve instruction’ with ‘attention & location’) produces the following task-specific rule for landing a plane:”

- All three:
  - IF you have to land a plane,
  - THEN issue a motor command to the visual system to move the eyes to the bottom left of the screen.
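The idea behind these rules can be sketched in code. The example below is a deliberate simplification: it collapses all three general rules in a single step rather than pairwise, and the names `DECLARATIVE_MEMORY`, `retrieve`, `interpret` and `compile_task` are assumptions made for illustration.

```python
# Declarative knowledge specific to the ATC task (contents taken from the box above).
DECLARATIVE_MEMORY = {
    ("next instruction", "land a plane"): ("move attention", "Hold Level 1"),
    ("location", "Hold Level 1"): "bottom left of the screen",
}

retrieval_log = []  # records every request sent to declarative memory

def retrieve(kind, key):
    retrieval_log.append((kind, key))
    return DECLARATIVE_MEMORY[(kind, key)]

def interpret(task):
    """Apply the three general rules in turn, retrieving at each step."""
    _, place = retrieve("next instruction", task)  # retrieve instruction
    location = retrieve("location", place)         # move attention
    return f"move the eyes to the {location}"      # move to location

def compile_task(task):
    """Build a task-specific rule with the retrieved values substituted in."""
    action = interpret(task)
    def compiled_rule(current_task):
        # No retrievals: the declarative content is now part of the rule.
        return action if current_task == task else None
    return compiled_rule

land_plane = compile_task("land a plane")
retrievals_while_interpreting = len(retrieval_log)
retrieval_log.clear()
result = land_plane("land a plane")

print(result, retrievals_while_interpreting, len(retrieval_log))
```

The compiled rule produces the same action as the interpreted sequence but no longer consults declarative memory, which is one way of picturing why compiled skills run faster and why they become tied to the task for which they were built.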

Retrieving a declarative fact such as “Paris is the capital of France” or “7 × 8 = 56” can become faster and more accurate with repeated retrievals of the fact. This is the process of declarative strengthening that takes place at the associative stage of learning (Anderson, 2007; Tenison & Anderson, 2015).

When we encounter a novel problem for the first time we might hit an impasse – we don’t immediately know what to do. We might therefore attempt to recall a similar problem we have encountered in the past and try to use that to solve the current one. According to Anderson, this process involves interpretive problem solving. This means that our problem solving is based on a declarative account of a problem solving episode. This would include, for example, using textbook examples or information a teacher might write on a board. Anderson argues that even if we have only instructions rather than a specific example to hand, then we interpret those instructions by means of an imagined example and attempt to solve the current problem by interpreting this example. “The only way a new production can be created in ACT-R is by compiling the analogy process. Following instructions creates an example from which a procedure can be learned by later analogy” (Anderson, 1993, p. 89). In short, Anderson is arguing that learning a new production – and, by extension, all skill learning – occurs through analogical problem solving. In this sense the model is similar to Holland et al.’s (1986) Processes of Induction model described in Information Box 5.1.

Figure 5.7 shows the analogy mechanism in ACT-R in terms of the problem representation used throughout this book. In this model, the A and C terms represent goals (the problem statement including the problem’s goal). The B term is the goal state and the line linking the A and B terms is the “response”: the procedure to be followed to achieve the goal state. As in other models of analogy, mapping (2 in the Figure) involves finding correspondences between the example and the current problem.

Before looking in a little more detail at ACT-R’s analogy mechanism, try Activity 5.1.

Activity 5.1

Imagine you are given the problem of writing a Lisp function that will add 712 and 91. Imagine also that you know very little about Lisp. However, you have been given an example that shows you how to write a Lisp function that will multiply two numbers together:

Defun multiply-them(2 3)

* 2 3

You know that “defun” defines a function and that “multiply-them” is a name invented for that function and that * is the multiplication symbol. How would you write a function in Lisp that will add 712 and 91?

The mapping of the elements in the example onto elements in the current problem is shown in more detail in Figure 5.8. The structure in the example shows you that the problem involves a Lisp operation, that the operation involves multiplication, that there are two “arguments” (arg1 and arg2) and that there is a method for doing it shown by response1. These elements can be mapped onto the elements of the current problem. The problem type is different but nevertheless both are arithmetic operations. They are similar because they are semantically related and because they belong to the same superordinate category type.

Potential criticisms of cognitive models

Cognitive models have been criticised for being descriptive rather than explanatory. Clancey (1997) argues that architectures such as ACT-R and SOAR are descriptive and language based, whereas human knowledge is built from activities: “Ways of interacting and viewing the world, ways of structuring time and space, pre-date human language and descriptive theories” (Clancey, 1997, p. 270). He also argues that production system architectures leave out too much, such as the cultural and social context in which experts operate. However, Taatgen, Huss, Dickison and Anderson (2008) have developed a model of skilled behaviour that integrates the traditional view of skill development through increasing experience in a domain leading to specialised knowledge with the embedded cognition view that knowledge is developed through interaction with the world. Thus the environment, which could include the social environment, often determines what action to take next, but since the environment does not always provide all the information needed the solver needs to maintain some form of mental representation and cognitive control.

Another potential problem is that the cognitive model is under-constrained. For example, Roberts and Pashler (2000) list a number of models of human behaviour that include “free parameters” – variables in the model that can potentially be adjusted by the modeller to make the model fit reality. Taatgen and Anderson (2008) point out the difficulties that exist in ensuring that models are valid. As well as the need to reduce the number of free parameters, they also include the need to ensure the model can predict behaviour rather than produce a post-hoc description of behaviour. A further validity criterion is that, rather than being based on expert knowledge, a model should start at the level of the novice and acquire knowledge on its own. Anderson makes a strong argument for his production system architecture being more than descriptive and predictive. Because of their explanatory power and ability to learn, both SOAR (Newell, 1990) and ACT-R (Anderson, 1983, 1993, 2007; Anderson & Lebiere, 1998) claim to be unified theories of cognition.

Johnson-Laird (1988a) has suggested that production system architectures can explain a great deal of the evidence that has accrued about human cognition. However, their very generality makes them more like a programming language than a theory and hence difficult to refute experimentally.

When I turn out an omelette to perfection, is this because my brain has come to acquire an appropriate set of if–then rules, which I am unconsciously following, or is my mastery of whisk and pan grounded in some entirely different sort of mechanism? It is certainly true that my actions can be described by means of if–then sentences: if the mixture sticks then I flick the pan, and so on. But it doesn’t follow from this that my actions are produced by some device in my brain scanning through lists of if–then rules of this sort (whereas that is how some computer expert systems work).

(Copeland, 1993, p. 101)

Johnson-Laird (1988b) also argues that condition–action rules are bound to content (the “condition” part of the production) and so are poor at explaining human abstract reasoning. Furthermore, he has argued that regarding expertise as compiled procedures suggests a rigidity of performance that experts do not exhibit. This topic is addressed in the next chapter.

Criticisms of ACT-R

Anderson has argued that there is an asymmetry in production rules such that the conditions of rules will cause the actions to fire but the actions will not cause conditions to fire. The conditions and actions in a condition–action rule cannot swap places. Learning Lisp for coding does not generalise to using Lisp for code evaluation. The implication is that one can become very skilled at solving crossword puzzles, but that skill should not in theory translate into making up a crossword for other people to solve. The skills are different. We saw in Chapter 4 that transfer was likely only where productions overlapped between the old and new task (e.g., Singley & Anderson, 1989). Practising a skill creates use-specific or context-specific production rules. McKendree and Anderson (1987) and Anderson and Fincham (1994) have provided experimental evidence for this use-specificity in production rules (see Table 5.1). ACT-R successfully models this transfer or retrieval asymmetry.

In Table 5.1, V1 is a variable which has the value (A B C). CAR is a “function call” in Lisp that returns the first element of a list. The list in this case is (A B C) and the first element of that list is “A”. When Lisp evaluates (CAR V1) the result is therefore “A”. The middle column in the table represents a task where a Lisp expression is evaluated. The third column represents a situation where someone has to generate a function call that will produce “A” from the list (A B C). McKendree and Anderson argue that the production rules involved in the evaluation task and in the generation task are different.

| Type of information | Evaluation | Generation |
|---|---|---|
| V1 | (A B C) | (A B C) |
| Function call | (CAR V1) | ? |
| Result | ? | A |

- P1: If the goal is to evaluate (CAR V1) And A is the first element of V1 Then produce A.

- P2: If the goal is to generate a function call And ANSWER is the first element of V1 Then produce (CAR V1).
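The use-specificity of P1 and P2 can be illustrated with a minimal sketch in which each production is a function that returns nothing when its condition is not met. The goal representation as a pair and the function names are assumptions made for the example.

```python
V1 = ["A", "B", "C"]

def p1_evaluate(goal, v1):
    """P1: IF the goal is to evaluate (CAR V1) THEN produce the first element of V1."""
    if goal == ("evaluate", "(CAR V1)"):
        return v1[0]
    return None  # condition not matched, so the rule does not fire

def p2_generate(goal, v1):
    """P2: IF the goal is to generate a call yielding ANSWER and ANSWER is first in V1
    THEN produce (CAR V1)."""
    kind, answer = goal
    if kind == "generate" and v1[0] == answer:
        return "(CAR V1)"
    return None

evaluation_goal = ("evaluate", "(CAR V1)")
generation_goal = ("generate", "A")

print(p1_evaluate(evaluation_goal, V1), p2_generate(generation_goal, V1))
print(p1_evaluate(generation_goal, V1), p2_generate(evaluation_goal, V1))
```

Each rule handles its own task, but neither fires when given the other task’s goal, even though both draw on the same fact about CAR.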

Because the condition sides of these two productions do not match, transfer between them would be limited, bearing in mind that the knowledge they represent is compiled. A similar finding was made by Anderson and Fincham (1994), who got participants to practise transformations of dates for various activities such as: “Hockey was played on Saturday at 3 o’clock. Now it is Monday at 1 o’clock.”

Müller (1999, 2002, 2004), however, challenged the idea of use-specificity of compiled knowledge. One effect of such use-specificity is that skilled performance should become rather inflexible, yet expertise, if it is of any use, means that knowledge can be used flexibly. Müller also used Lisp concepts such as LIST, INSERT, APPEND, DELETE, MEMBER and LEFT. He also got his participants to learn either a generation task or an evaluation task using those concepts in both. His study was designed to distinguish between the results that would be obtained if the use of knowledge was context bound and those that follow on from his own hypothesis of conceptual integration. According to this hypothesis concepts mediate between problem givens and the requested answers. Concepts have a number of features that aggregate together to form an integrated conceptual unit. The basic assumptions of the hypothesis are:

(a) conceptual knowledge is internally represented by integrative units; (b) access to the internal representation of conceptual knowledge depends on the degree of match between presented information and conceptual features; (c) conceptual units serve to monitor the production of adequate answers to a problem; and (d) the integration of a particular feature into the internal representation depends on its salience during instruction, its relevance during practice, or both.

(Müller, 1999, p. 194)

Whereas ACT-R predicts that transfer between different uses of knowledge would decrease with practice, the hypothesis of conceptual integration predicts that transfer between tasks involving the same concepts would increase with practice. Müller found typical learning curves in his experiments but did not find that this presumably compiled knowledge was use specific. There was a relatively high transfer rate between evaluation and generation tasks. Thus the overlap and relevance of conceptual features was more important in predicting transfer and allowed flexibility in skilled performance.

Summary

- 1 There are several explanatory models of how we learn from our experience of the world. Most acknowledge the supremacy of analogising as the prime mechanism for inducing schemas and for learning new skills.

- 2 Much of our learning involves induction – a powerful and ubiquitous learning mechanism whereby we abstract out the commonalities of experience and use them thereafter as the basis of our deductions and predictions about the world. Repeated exposure to problem types causes us to develop a schema for that problem type in much the same way we learn categories in general.

- 3 Holland et al. (1986) developed a cognitive architecture that uses induction as its basic learning mechanism and rules as the basis for representing categories. The strengthening of successful rules leads to the development of expertise.

- 4 Marshall (1995) listed the different types of knowledge that can be associated with schemas.

- 5 Once a category of problems has been identified, we also need to access the relevant procedure for solving it. Several models exist that assume that much of human thinking is rule based. Production system architectures based on if–then or condition–action rules have been used to model the development of expertise from the acquisition of declarative information and its use by novices to the automated categorisations and skills of the expert.

- 6 One potential side effect of skill learning is automatisation; that is, learning and knowledge compilation could lead to a rigidity of performance and to certain types of error based on habit.

- 7 Cognitive architectures are a means of theorising about and understanding the functional structure of the mind.

- 8 ACT-R is such a production system architecture based on the view that skill learning can be understood as the learning of condition–action rules (productions). It includes a procedural (production) memory, a declarative memory and a working memory that is the currently active part of declarative memory. The most recent version includes buffers (visual, manual, goal, retrieval) linked to specific areas of neural anatomy.

- 9 Criticisms of production system architectures as general models of learning and problem solving have centred on:

- The idea that cognition can best be modelled as if–then rules;

- Not including in enough detail the role of conceptual knowledge in underpinning solutions and in the development of productions;

- The variable influence of context, especially social context.

- 10 Studies involving ACT-R since just before the beginning of the century have tried to relate the functional aspects of the architecture to neuroanatomy.

References

Adelson, B. (1984). When novices surpass experts: The difficulty of a task may increase with expertise. Journal of Experimental Psychology: Learning, Memory, and Cognition, 10(3), 483–495. doi:10.1037/0278-7393.10.3.483

Anderson, J. R. (1982). Acquisition of cognitive skill. Psychological Review, 89(4), 369–406. doi:10.1037/0033-295X.89.4.369

Anderson, J. R. (1983). The Architecture of Cognition. Cambridge, MA: Harvard University Press.

Anderson, J. R. (1987). Skill acquisition: Compilation of weak method problem situations. Psychological Review, 94(2), 192–210.

Anderson, J. R. (1990). The Adaptive Character of Thought. Hillsdale, NJ: Erlbaum.

Anderson, J. R. (1993). Rules of the Mind. Hillsdale, NJ: Erlbaum.

Anderson, J. R. (2007). How Can the Human Mind Occur in the Physical Universe? Oxford: Oxford University Press.

Anderson, J. R., & Fincham, J. M. (1994). Acquisition of procedural skills from examples. Journal of Experimental Psychology: Learning, Memory, and Cognition, 20, 1322–1340.

Anderson, J. R., & Lebiere, C. (1998). The Atomic Components of Thought. Mahwah, NJ: Lawrence Erlbaum.

Bassok, M. (1997). Two types of reliance on correlations between content and structure in reasoning about word problems. In L. D. English (Ed.), Mathematical Reasoning: Analogies, Metaphors, and Images. Studies in Mathematical Thinking and Learning (pp. 221–246). Mahwah, NJ: Lawrence Erlbaum Associates, Inc.

Bernardo, A.B.I. (1994). Problem specific information and the development of problem-type schemata. Journal of Experimental Psychology: Learning, Memory, and Cognition, 20, 379–395.

Broadbent, D. E. (1975). The magical number seven after fifteen years. In R. A. Kennedy & A. Wilkes (Eds.), Studies in Long-Term Memory (pp. 2–18). New York: Wiley.

Brown, J. S., & Burton, R. R. (1978). Diagnostic models for procedural bugs in basic mathematical skills. Cognitive Science, 2(2), 155–192. doi:10.1207/s15516709cog0202_4

Catrambone, R., & Holyoak, K. J. (1989). Overcoming contextual limitations on problem-solving transfer. Journal of Experimental Psychology: Learning, Memory, & Cognition, 15(6), 1147–1156. doi:10.1037/0278-7393.15.6.1147

Chen, Z., & Mo, L. (2004). Schema induction in problem solving: A multidimensional analysis. Journal of Experimental Psychology: Learning, Memory, and Cognition, 30(3), 583–600. doi:10.1037/0278-7393.30.3.583

Chen, Z., Yanowitz, K. L., & Daehler, M. W. (1995). Constraints on accessing abstract source information: Instantiation of principles facilitates children’s analogical transfer. Journal of Educational Psychology, 87(3), 445–454.

Clancey, W. J. (1997). The conceptual nature of knowledge, situations, and activity. In P. J. Feltovich, K. M. Ford, & R. R. Hoffman (Eds.), Expertise in Context (pp. 247–291). London: MIT Press.

Collins, A. M., & Quillian, M. R. (1969). Retrieval time from semantic memory. Journal of Verbal Learning and Verbal Behavior, 8, 240–248.

Collins, H., & Evans, R. (2015). Expertise revisited, Part I – Interactional expertise. Studies in History & Philosophy of Science Part A, 54, 113–123. doi:10.1016/j.shpsa.2015.07.004

Collins, H., Evans, R., & Weinel, M. (2015). Expertise revisited, Part II: Contributory expertise. Studies in History and Philosophy of Science, 56, 103–110. doi:10.1016/j.shpsa.2015.07.003

Copeland, B. J. (1993). Artificial Intelligence: A Philosophical Introduction. Oxford: Blackwell.

Cummins, D. D. (1992). Role of analogical reasoning in the induction of problem categories. Journal of Experimental Psychology: Learning, Memory, and Cognition, 18(5), 1103–1124. doi:10.1037/0278-7393.18.5.1103

Feltovich, P. J., Spiro, R. J., & Coulson, R. L. (1997). Issues in expert flexibility in contexts characterized by complexity and change. In P. J. Feltovich, K. M. Ford, & R. R. Hoffman (Eds.), Expertise in Context (pp. 126–146). London: MIT Press.

Fisher, M., & Keil, F. C. (2015). The curse of expertise: When more knowledge leads to miscalibrated explanatory insight. Cognitive Science, 40(5), 1251–1269. doi:10.1111/cogs.12280

Frensch, P. A., & Sternberg, R. J. (1989). Expertise and intelligent thinking: When is it worse to know better? In R. J. Sternberg (Ed.), Advances in the Psychology of Human Intelligence (Vol. 5, pp. 157–188). Hillsdale, NJ: Erlbaum.

Hinsley, D. A., Hayes, J. R., & Simon, H. A. (1977). From Words to Equations: Meaning and Representation in Algebra Word Problems. Hillsdale, NJ: Erlbaum.

Holland, J. H., Holyoak, K. J., Nisbett, R. E., & Thagard, P. (1986). Induction: Processes of Inference, Learning and Discovery. Cambridge, MA: MIT Press.

Johnson-Laird, P. N. (1988a). The Computer and the Mind: An Introduction to Cognitive Science. London: Fontana Press.

Johnson-Laird, P. N. (1988b). A taxonomy of thinking. In R. J. Sternberg & E. E. Smith (Eds.), The Psychology of Human Thought (pp. 429–457). Cambridge: Cambridge University Press.

Laird, J. E., Newell, A., & Rosenbloom, P. S. (1987). SOAR: An architecture for general intelligence. Artificial Intelligence, 33, 1–64.

Laird, J. E., & Rosenbloom, P. S. (1996). The evolution of the SOAR cognitive architecture. In D. Steier & T. M. Mitchell (Eds.), Mind Matters: A Tribute to Allen Newell (pp. 1–50). Mahwah, NJ: Erlbaum.

Luchins, A. S. (1942). Mechanization in problem solving: The effect of Einstellung. Psychological Monographs, 54(248), i–95.

Marr, D. (1982; 2010). Vision. New York: Freeman.

Marshall, S. P. (1995). Schemas in Problem Solving. Cambridge: Cambridge University Press.

McKendree, J., & Anderson, J. R. (1987). Effect of practice on knowledge and use of Basic LISP. In J. M. Carroll (Ed.), Interfacing Thought (pp. 236–259). Cambridge, MA: MIT Press.

Medin, D. L., & Ross, B. H. (1989). The Specific Character of Abstract Thought: Categorization, Problem Solving and Induction (Vol. 5). Hillsdale, NJ: Lawrence Erlbaum Associates.

Müller, B. (1999). Use specificity of cognitive skills: Evidence for production rules? Journal of Experimental Psychology: Learning, Memory, and Cognition, 25(1), 191–207. doi:10.1037/0278-7393.25.1.191

Müller, B. (2002). Single-use versus mixed-use learning of transformations: Evidence for conceptual integration. Experimental Psychology, 49(1), 45–56. doi:10.1027/1618-3169.49.1.45

Müller, B. (2004). A multinomial model to assess central characteristics of mental operators. Experimental Psychology, 51(3), 201–213. doi:10.1027/1618-3169.51.3.201

Newell, A. (1990). Unified Theories of Cognition. Cambridge, MA: Harvard University Press.

Perkins, D. N., & Salomon, G. (1989). Are cognitive skills context bound? Educational Researcher, 18, 16–25.

Reason, J. (1990). Human Error. Cambridge: Cambridge University Press.

Reeves, L. M., & Weisberg, R. W. (1993). Abstract versus concrete information as the basis for transfer in problem solving: Comment on Fong and Nisbett (1991). Journal of Experimental Psychology: General, 122, 125–128.

Roberts, S., & Pashler, H. (2000). How persuasive is a good fit? A comment on theory testing. Psychological Review, 107(2), 358–367. doi:10.1037/0033-295X.107.2.358

Robertson, S. I. (1999). Types of Thinking. London: Routledge.

Ross, B. H. (1996). Category learning as problem solving. In D. L. Medin (Ed.), The Psychology of Learning and Motivation (Vol. 35, pp. 165–192). New York: Academic Press.

Simen, P., & Polk, T. (2010). A symbolic/subsymbolic interface protocol for cognitive modeling. Logic Journal of the IGPL, 18(5), 705–761. doi:10.1093/jigpal/jzp046

Simon, H. A., & Chase, W. G. (1973). Skill in chess. American Scientist, 61, 394–403.

Singley, M. K., & Anderson, J. R. (1989). The Transfer of Cognitive Skill. Cambridge, MA: Harvard University Press.

Spencer, R. M., & Weisberg, R. W. (1986). Context dependent effects on analogical transfer. Memory and Cognition, 14(5), 442–449.

Sternberg, R. J., & Frensch, P. A. (1992). On being an expert: A cost benefit analysis. In R. R. Hoffman (Ed.), The Psychology of Expertise: Cognitive Research and Empirical AI (pp. 191–203). New York: Springer-Verlag.

Taatgen, N. A., & Anderson, J. R. (2008). Constraints in cognitive architectures. In R. Sun (Ed.), Handbook of Computational Psychology (pp. 170–185). New York: Cambridge University Press.

Taatgen, N. A., Huss, D., Dickison, D., & Anderson, J. R. (2008). The acquisition of robust and flexible cognitive skills. Journal of Experimental Psychology: General, 137(3), 548–565. doi:10.1037/0096-3445.137.3.548

Taatgen, N. A., & Lee, F. J. (2003). Production compilation: Simple mechanism to model complex skill acquisition. Human Factors, 45(1), 61–76. doi:10.1518/hfes.45.1.61.27224

Tenison, C., & Anderson, J. R. (2015). Modeling the distinct phases of skill acquisition. Journal of Experimental Psychology: Learning, Memory, and Cognition. doi:10.1037/xlm0000204

VanLehn, K. (1986). Arithmetic procedures are induced from examples. In J. Hiebert (Ed.), Conceptual and Procedural Knowledge: The Case of Mathematics (pp. 133–179). Hillsdale, NJ: Erlbaum.

Young, R. M., & O’Shea, T. (1981). Errors in children’s subtraction. Cognitive Science, 5(2), 153–177. doi:10.1207/s15516709cog0502_3