1

What is involved in problem solving

We face problems of one kind or another every day of our lives. Two-year-olds face the problem of how to climb out of their cot unaided. Teenagers face the problem of how to live on less pocket money than all their friends. We have problems thinking what to have for dinner, how to get to Biarritz, what to buy Poppy for Christmas, how to find Mr Right, how to deal with climate change. Problems come in all shapes and sizes, from the small and simple to the large and complex and from the small and complex to the large and simple. Some are discovered: for example, we might discover there is no milk for our breakfast cereal in the morning, or that we are surrounded by the enemy. Some are deliberately created: we might wonder how to store energy generated by windmills. Some are deliberately chosen: you might decide to do a Sudoku puzzle or play chess with someone. Some are thrust upon us: in an exam you might find this question, “Humans are essentially irrational. Discuss in no more than 2,000 words.” In some cases, at least, it can be fairly obvious to those with the relevant knowledge and experience what people should do to solve the problem. This book deals with what people actually do.

To help get a handle on the issues involved in studying the psychology of human (and occasionally animal) problem solving, we need a way of defining our terms and classifying problems in ways that would help us see how they are typically dealt with. Historically, problem solving has been studied using a variety of methods and from a range of philosophical perspectives. The aim of this chapter is to touch on some of these questions and to explain how this book is structured and the kinds of things you will find in it.

What exactly is a problem?

You are faced with a problem when there is a difference between where you are now (e.g., your vacuum cleaner has stopped sucking) and where you want to be (e.g., you want a clean floor). In each case “where you want to be” is an imagined state that you would like to be in. In other words, a distinguishing feature of a problem is that there is a goal to be reached through some action on your part but how to get there is not immediately obvious.

There are several definitions of a problem and of problem solving. Frensch and Funke (1995, pp. 5–6) provide a list of definitions, including very broad ones such as “problem solving is defined as any goal-directed sequence of cognitive operations” (Anderson, 1980, p. 257 – in an early version of his cognitive psychology textbook) and a more restrictive one by Wheatley, who states that problem solving is “what you do, when you don’t know what to do” (Wheatley, 1984, p. 1). Anderson’s definitions of problem solving have distinguished between early attempts at solving a problem type and later automated episodes that can still be regarded as problem solving (Anderson, 2000a). For the purposes of this book Wheatley’s definition is closest to what is meant when someone is faced with a problem. However, a fuller definition might be more appropriate:

A problem arises when a living creature has a goal but does not know how this goal is to be reached. Whenever one cannot go from the given situation to the desired situation simply by action, then there is recourse to thinking … Such thinking has the task of devising some action which may mediate between the existing and the desired situations.

(Duncker, 1945, p. 1)

According to this definition, a problem exists when there is an obstacle or a gap between where you are now and where you want to be. “If no obstacle hinders progress toward a goal, attaining the goal is no problem” (Reese, 1994, p. 200). We are not faced with much of a problem when we can use learned behaviours to overcome or get round the blocked goal. In fact, some apparent problems do not involve a real block or obstacle at all. If you speak French and are asked to translate “maman” into English, that is not a problem. Neither is multiplying 4 by 5. If you were posed questions like those, your responses would be automatic, relying on retrieval from memory. We do not need to work anything out. For a different reason, multiplying 48 by 53 is not, strictly speaking, a problem either, because the solution involves using a learned procedure or algorithm – we know what we have to do to get the solution (although it would fit Anderson’s definition, as it involves a sequence of cognitive operations). It’s only when you don’t have a ready response and have to take some mediating action to attain a goal that you have a problem (Wheatley’s definition).
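To make concrete what a “learned procedure or algorithm” looks like, here is a minimal Python sketch of the schoolbook long-multiplication method applied to 48 × 53. It assumes non-negative whole numbers, and the function name `long_multiply` is purely illustrative. Each digit of the multiplier produces a partial product built from single-digit times-table facts plus carries, and the shifted partial products are then added.

```python
def long_multiply(a: int, b: int) -> int:
    """Schoolbook long multiplication for non-negative integers."""
    a_digits = [int(d) for d in reversed(str(a))]  # units digit first
    total = 0
    # One partial product per digit of b: units, then tens, then hundreds...
    for place, b_char in enumerate(reversed(str(b))):
        b_digit = int(b_char)
        carry = 0
        partial_digits = []
        for a_digit in a_digits:
            product = a_digit * b_digit + carry  # a times-table fact plus the carry
            partial_digits.append(product % 10)  # write down the units digit
            carry = product // 10                # carry the tens digit
        if carry:
            partial_digits.append(carry)
        partial = int("".join(str(d) for d in reversed(partial_digits)))
        total += partial * 10 ** place  # shift the partial product one place per row
    return total


print(long_multiply(48, 53))  # 2544 (144 + 2400)
```

Every step is fixed in advance, which is why, on the definition adopted here, such a calculation counts as an exercise rather than a problem.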

Furthermore, for a problem to exist, there needs to be a “felt need” to remove obstacles to a goal (Arlin, 1990). That is, people need to be interested enough to search for a solution; otherwise it’s not a problem for that person in the first place. If we do not have the requisite knowledge to solve a particular type of problem – say, in a domain of knowledge with which we are unfamiliar – then it would not be appropriate to say we have a problem there, either. If I were given a piece of Chinese text to translate into Korean, I would be unable to do it. It is not an appropriate problem for me to try to solve. We would be highly unlikely to be motivated to solve problems that are of no interest or relevance to us. “It is foolish to answer a question that you do not understand. It is sad to work for an end that you do not desire. Such foolish and sad things often happen, in and out of school” (Polya, 1957, p. 6).

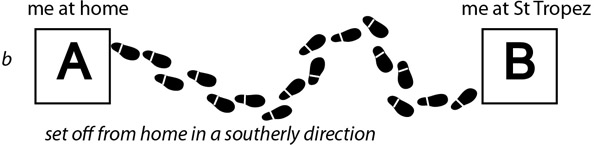

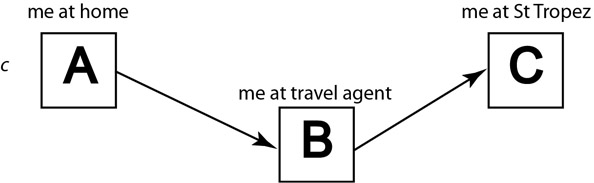

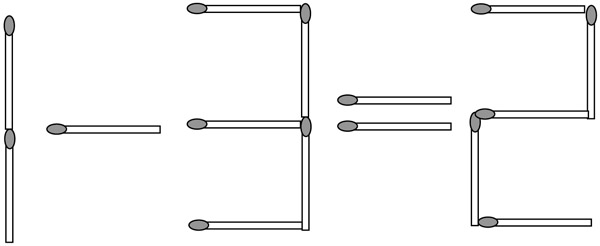

Figure 1.1 illustrates in an abstract form what is involved in a problem and two forms of mediating action. Starting from where I am initially (represented by “A”), one possible action (Figure 1.1b) is to try to take steps along a path that seems to lead in the direction of the goal (represented by “B”). This is not a particularly sensible thing to do if your problem is how to get to St Tropez. The second form of mediating action is to do something that will make it easier to get to the goal (Figure 1.1c), in this case performing an action that will lead to a sub-goal (“B”), such as visiting a travel agent. This is a reasonable thing to do, since you will probably get everything you need to know from the travel agent.

As mentioned earlier, “real” problems exist when learned behaviours are not sufficient to solve the problem. Cognitivist and behavioural accounts converge on this view. For example, from the behaviourist side, Davis (1973, p. 12) has stated that “a problem is a stimulus situation for which an organism does not have a ready response.” In discussing cases mainly in the domain of mathematics, where the organism does have a reasonably ready response, Schoenfeld (1983, p. 41) and Bodner (1990, p. 2) have stated that a problem that can be solved using a familiar sequence of steps is an “exercise”. While “exercise” in the realm of mathematics is a useful way of denoting a problem that involves a familiar procedure guaranteed to get a correct answer (an algorithm), it is too restrictive a term to use more generally. Many familiar tasks in our working or domestic environment are algorithmic, but making dinner or decorating a room are not normally classed as exercises even though they involve a sequence of steps that may be very familiar. An exercise tends to involve working forward through a task step by step, as when a skilled mechanic fixes a flat tyre.

One of the earliest systematic analyses of mathematical problem solving was done by Polya (1957), whose view of the phases of problem solving is shown in Information Box 1.1.

Information Box 1.1 Polya’s (1957) problem solving phases

Polya (1957) listed four problem solving phases which have had a strong influence on subsequent academic and instructional texts. These phases involve a number of cognitive processes which were not always spelled out but which have been teased apart by later researchers. The four phases are:

- First, we have to understand the problem; we have to see clearly what is required.

- Second, we have to see how the various items are connected, how the unknown is linked to the data, in order to obtain the idea of the solution, to make a plan.

- Third, we carry out our plan.

- Fourth, we look back at the completed solution, we review and discuss it.

(p. 5)

While this statement implies a focus on mathematical problem solving – for example, devising a plan means having some idea of the “calculations, computations or constructions we have to perform in order to obtain the unknown” (Polya, 1957, p. 8) – much of what he said applies to the nature of problem solving in general. The way in which we understand any problem – how we mentally represent it – determines the actions we take to attempt to solve it. Polya also makes the point that problem solving is an iterative process involving false starts and re-representations – we have to work at it, and this, as we shall see in Chapters 7 and 8, can include creative problem solving and insight: “Trying to find the solution, we may repeatedly change our point of view, our way of looking at the problem. We have to shift our position again and again” (Polya, 1957, p. 5). He also pointed out that solving problems from examples or instructions involves learning by doing: “Trying to solve problems, you have to observe and to imitate what other people do when solving problems and, finally, you learn to do problems by doing them” (Polya, 1957, p. 5).

Other phases have been added by later theorists. For example, Hayes (1989) added “finding the problem” to the beginning and “consolidating gains” (learning from the experience via schema induction) at the end (see Chapter 5), and the phases have acquired different labels in some studies such as “orientation, organization, execution, and verification” (Carlson & Bloom, 2005).

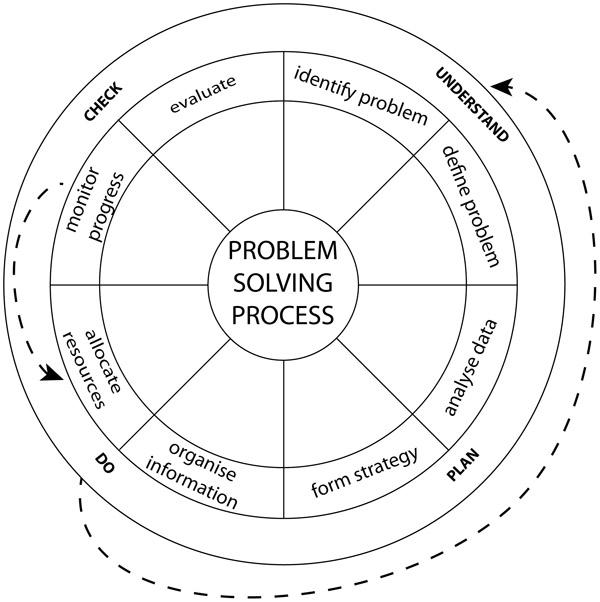

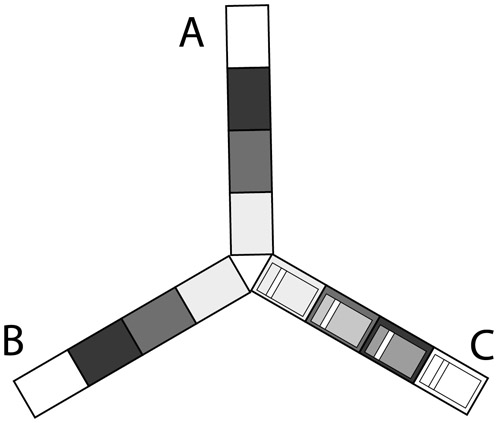

There are many models of the problem solving process, devised principally from Polya’s phases. Figure 1.2 represents a composite view of many such models. The dotted arrows represent the fact that solvers may reach an impasse or encounter constraints that mean they may have to go back to an earlier phase and start again.

Where do problems come from?

According to Getzels (1979, p. 168), problems can be presented, discovered or created, “depending on whether the problem already exists, who propounds it, and whether it has a known formulation, known method of solution, or known solution”. A presented problem is simply one that someone or some circumstance presents you with. The teacher gives you homework, the boss asks you to write a report, the weather disrupts your travel plans.

A discovered problem is one that you yourself identify or pose. “Why is the sky blue?” “How does a microwave oven work?” “Why do burrs stick to my dog’s fur so well?” These are phenomena that already exist, and you have found yourself wondering about them and then trying to determine what is going on. Something that has been irritating you can be reclassified as a problem to be solved, and something that intrigues you can lead to a discovery and a creative product such as Velcro.

A created problem situation is one that “does not exist at all until someone invents or creates it”. This is the domain of the creative artist, scientist, mathematician, musician and so forth. The individual in this case tries to find a problem to solve in her domain of expertise. A symphony, a Turner prize–winning installation, a theory of the structure of the cosmos are all solutions to problem situations that have been created by the individual.

Whatever the kind of problem we are faced with, we are obliged to use the information available to us, information from memory and whatever information we can glean from the environment we find ourselves in, particularly where that information appears salient – it stands out for some reason. In some cases you don’t know what the answer looks like in advance and you have to find it. You might have a list of things to buy and only a fixed amount of money; do you have enough to buy the things you want? How you find the answer in that example is not particularly relevant; you just need to know what the answer is. In other problems it is precisely how you get the answer that is important: “I have to get this guy into checkmate.” The point of doing exercise problems in textbooks is to learn how to solve problems of a particular type and not just to provide the answers. If the answer were all you were interested in, you could look it up at the back of the book. In cases where you have to prove something, the “something” is given and it is the proof that is important, as with the proof of Fermat’s Last Theorem.

“Natural” and “unnatural” problems

There are things we find easy to do and others we find hard. Some of this can be explained by the way our environment has shaped our evolution. Within any population there is a degree of variability. Because of this variety there may be some individuals who are better able to cope with novel environments than others. Such individuals have a better chance of surviving and possibly even passing on their genes – including the ones with survival value – to a future generation. Every single one of your ancestors was successful in this respect. None was a failure, because failures don’t survive to produce offspring, and you wouldn’t be here reading this. If our behaviour – including our thinking behaviour – is to be of any use for our survival, evolutionarily speaking, then it should allow us to fulfil three aims. First, it should allow us to attain our goals (such as getting food, water, sex, etc.) by expending the least amount of energy. Second, it should help us avoid getting killed for as long as possible. And third, it should allow us to pass on our genes to succeeding generations. Having said that, we often find that humans try to fulfil the third aim, consciously or unconsciously, at the expense of the other two. Some men and women like to demonstrate their fitness by engaging in high-risk activities, such as climbing high mountains, smoking, and crossing frozen wastes on foot, and surviving (for more on this theme see Diamond, 1992).

When it comes to solving problems and thinking about the “unnatural environment”, such as trying to understand a textbook on C# programming or learning to pilot an aircraft, we impose a heavy load on our working memory. Such topics can be difficult because we are often unable to call upon strategies that have evolved for dealing with the natural environment – those practical everyday problems we needed to solve in the past to ensure our survival. The distinction between what I have termed “natural” and “unnatural” problems has been referred to by Geary (2008) as biologically primary knowledge and biologically secondary knowledge (see Chapter 4).

While we can usually reach reasonable and sensible conclusions based on experience, we can be pretty poor at dealing with problems in abstract formal logic. We are good at learning what features of animals tend to go together and we can make generalisations from single examples and experiences. Any animal in the past that ate a poisonous berry and survived and decided to eat another berry of the same kind is not likely to have left many offspring. (However, generalising from a single example is not always appropriate. When a newspaper reported that a beggar on the streets of London was making a great deal of money from begging, some people naturally generalised from that instance and assumed that beggars were making lots of money and so stopped giving them any or even started beating them up.) Apart from categorising objects and people, natural problems include finding your way around, dealing with practical problems such as tool making or sewing, and managing people (it may be natural, but no one said it had to be easy). “Unnatural” problems requiring biologically secondary knowledge are ones we did not encounter in the savannah, such as trigonometry or designing video games.

What’s involved in solving problems?

A car leaves city A at 10.20 am and travels to city B arriving at 16.40. If the cities are 320 miles apart, what was the average speed of the car?

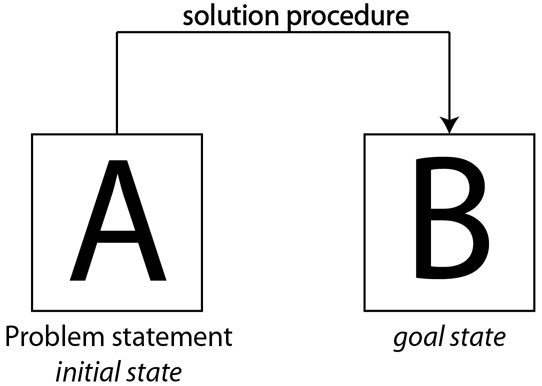

A problem statement provides the solver with a set of givens. In this problem, the givens include the times of departure and arrival, the distance between the cities and so forth. Faced with those givens, you need to apply some kind of operations to reach the goal state. Your starting point is the initial state of the problem (Figure 1.3). The set of operations you perform to get to the goal state constitutes the solution procedure. In this problem the operators are not specified in the problem statement, but we can infer from our prior knowledge that they are mathematical operators. There can be many kinds of operators depending on the problem type and how we mentally represent a problem: different people may represent a problem in different ways, which would involve the use of different operators. Furthermore, the operators are actually mental representations of the actions you can perform on the givens at any particular point in a problem. In this example, the calculations need to be done in our heads, and other problem types will require other types of mental operator.
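The problem-space vocabulary can be illustrated with the car problem. The Python sketch below is one possible representation, not the only one: the givens form the initial state, and each step applies a mathematical operator (subtraction, then division) until the goal state, the average speed, is reached. It assumes both times refer to the same day on a 24-hour clock (the problem mixes “10.20 am” and “16.40”), and the helper names are hypothetical. Exact fractions are used so that the 20-minute remainders are not rounded away.

```python
from fractions import Fraction


def minutes_since_midnight(clock_time: str) -> int:
    """Convert a 24-hour 'HH:MM' string into minutes after midnight."""
    hours, minutes = clock_time.split(":")
    return int(hours) * 60 + int(minutes)


def average_speed(depart: str, arrive: str, distance_miles: int) -> Fraction:
    # Operator 1 (subtraction): elapsed travel time in minutes
    elapsed_minutes = minutes_since_midnight(arrive) - minutes_since_midnight(depart)
    # Operator 2 (division): convert minutes to hours
    elapsed_hours = Fraction(elapsed_minutes, 60)
    # Operator 3 (division): speed = distance / time, the goal state
    return distance_miles / elapsed_hours


# Initial state: the givens taken from the problem statement
givens = {"depart": "10:20", "arrive": "16:40", "distance_miles": 320}
speed = average_speed(**givens)
print(f"{float(speed):.1f} mph")  # 50.5 mph
```

The journey takes 6 hours 20 minutes, or 19/3 hours, so the answer is 320 ÷ 19/3 = 960/19, roughly 50.5 miles per hour. A solver who represented the problem differently, for example by working in minutes throughout, would apply different operators but reach the same goal state.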

In order to solve problems we use a number of cognitive processes, some conscious and some unconscious, making problem solving an activity that is “more complex than the sum of its component parts” (Jonassen, 1997, p. 65). To solve the preceding example we would need to use domain knowledge, which may include:

- Mathematical concepts (division, addition, multiplication and so on);

- Rules and principles (for balancing equations, for example);

- Problem categorisation (this is a Rate × Time problem);

- Domain-relevant semantics (how a concept relates to other concepts).

Then there are more general skills such as:

- Inferencing;

- Case-based reasoning (using previously learned examples to solve a current problem);

- Analysis and synthesis;

- Progress monitoring;

- Decision making;

- Abstraction of the underlying problem structure (through repeated examples);

- Generalisation (ability to apply what you have learned to new examples).

At an even more general level there are metacognitive skills related to motivation, goals, and allocating cognitive resources such as attention and effort (Jonassen, 1997). In short, a lot of knowledge, skills and the cognitive resources underpinning them are involved in much of our problem solving.

Approaches to the study of problem solving

Studying some forms of problem solving is relatively straightforward. Experimenters tend to present their participants with problems and then sit back and watch what happens. Problems can be manipulated in various ways. Typically, researchers have manipulated the way instructions are presented, whether hints or clues are given, the number and nature of problems presented, and so on. Experimenters can take a variety of measures such as the number of problems correctly solved, the time taken to solve them, the number and type of errors made, how much they can remember, variations in the speed of problem solving during a problem solving task and so forth. Relatively recently, researchers have also looked at what is going on in various regions of the brain as people perform problem solving tasks. That said, studying complex problem solving in everyday life (legal decision making, medical diagnosis, house renovation, etc.) can be very tricky.

For well over a century different approaches based on different philosophical traditions have produced explanations of problem solving behaviour. In some cases this has led to different vocabulary being used to explain much the same phenomena. Before moving on to the most recent approaches based on a computational view of problem solving and on neuroscience, it might be useful to locate these in some historical context.

The (neo)behaviourist approach

The early behaviourist approach to problem solving focussed on a cause–effect model of problem solving involving a stimulus (S) and a consequent response (R). Thorndike (1898) placed hungry cats in a variety of puzzle boxes to see how they learned to escape, if at all. The boxes had a variety of mechanisms that would allow the door of the box to be opened, such as a wire loop or a wooden latch on the outside of the box that the cat’s paw could reach. Thorndike found that the cats would randomly claw at the door and through the bars of the box before managing to escape by pulling on the loop or moving the latch, whereupon the cat was rewarded with some food. When replaced in the box repeatedly, the cat was able to escape increasingly quickly until it was eventually able to escape immediately after it was put in the box. Thorndike found no evidence that the cats “understood” how their actions allowed the door to be opened; they simply learned that a specific action freed them. (One could argue that the behaviour of the cats is not really trial and error behaviour, as the cats weren’t trying something to see if it worked or not.)

Trial and error problem solving and learning underpinned Watson’s (1920) view of problem solving, which he regarded as a form of verbal, usually subvocal, behaviour based on learned habits (although what subvocal behaviour the cats were using is unclear). He suggested that “thinking might become our general term for all subvocal behavior” (p. 89). Unfamiliar problems, he argued, require “trial verbal behaviour” (p. 90), for example, applying one mathematical formula after another until a correct response is elicited.

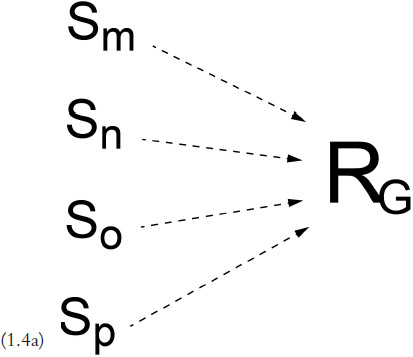

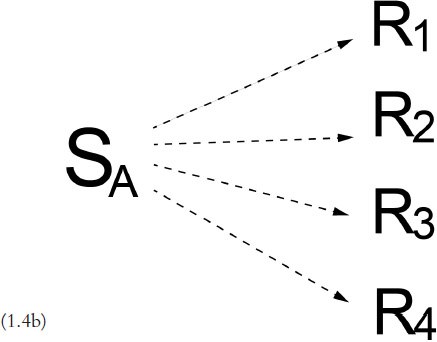

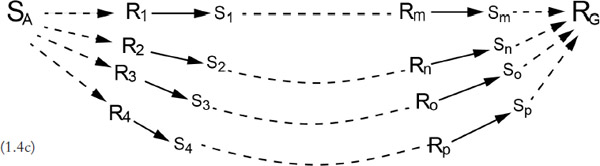

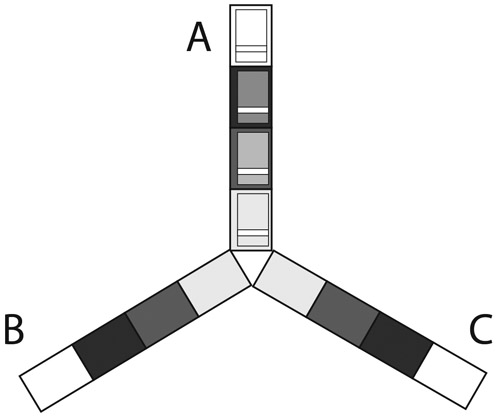

Skinner (e.g., 1984) criticised Thorndike’s trial and error explanation and claimed his results “do not represent any useful property of behavior” (p. 583). He regarded problem solving as based on two types of behavioural control: contingencies of reinforcement (patterns of reward based on an organism’s behaviour), either alone or together with culturally generated rules. A solver reading a problem identifies cues that can lead to responses, which in turn produce “discriminative stimuli” that act as further cues until a solution is reached. Neo-behaviourists, for their part, regarded complex problem solving as not fully explicable using simple stimulus–response (S–R) contingencies. Hence they postulated chains of intermediate S–R connections such that an initial stimulus (corresponding to the initial state of a problem) triggered a (covert) response that in turn generated a new stimulus leading to a further response, and so on, eventually producing (overt) behaviour. This idea is based on Hull’s (1934) habit-family hierarchy, where a stimulus, through experience, could lead to several responses of different strengths (Figure 1.4a). Similarly, several possible stimuli could lead to a single specific response (Figure 1.4b). Both of these mechanisms can join together, producing a “compound habit mechanism” via a hierarchy of associated chains of different strengths (Figure 1.4c). According to Maltzman (1955), these hierarchies can be combined into hierarchies of hierarchies (Gilhooly, 1996). When faced with a particular problem situation, a person would first make the response with the greatest “habit strength”, and if that didn’t work, the next response in the hierarchy would be tried. The point, however, is that there is no appeal in this approach to any form of “thought” or mental processes.
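The way a habit-family hierarchy is supposed to drive behaviour can be caricatured in a few lines of Python. This is an illustrative sketch only: the stimulus, the responses and their numerical strengths are invented for the example (loosely modelled on Thorndike’s puzzle box), and the `works` function stands in for whatever the environment does. Responses are simply tried in descending order of habit strength until one succeeds; nothing in the code represents understanding, which is the point the behaviourist account makes.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical habit-family hierarchy: one stimulus linked to several
# responses, each with an invented habit strength.
HabitHierarchy = Dict[str, Dict[str, float]]

hierarchy: HabitHierarchy = {
    "shut in box": {"claw at door": 0.6, "push latch": 0.3, "pull loop": 0.1},
}


def respond(
    stimulus: str,
    hierarchy: HabitHierarchy,
    works: Callable[[str], bool],
) -> Tuple[Optional[str], List[str]]:
    """Try responses from strongest to weakest habit until one succeeds."""
    tried: List[str] = []
    ranked = sorted(hierarchy[stimulus].items(), key=lambda item: item[1], reverse=True)
    for response, _strength in ranked:
        tried.append(response)
        if works(response):
            return response, tried
    return None, tried  # every habit in the family failed


# Suppose only pulling the loop opens this particular box.
success, attempts = respond("shut in box", hierarchy, lambda r: r == "pull loop")
print(success, attempts)  # pull loop ['claw at door', 'push latch', 'pull loop']
```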

The Gestalt approach

In the first half of the 20th century Gestalt psychology flourished alongside behaviourism and in contradistinction to it. Gestalt psychologists were interested in how our everyday experience was organised, both in how we perceived the world and in how we understood situations or problems. More specifically, they were interested in the relationship between the elements or parts that made up our experience and how we saw whole objects independent of the parts – that is, we form a Gestalt. Indeed the parts only really make sense if one perceives or understands the “whole”, a principle known as “von oben nach unten” (“from above to below”, although “top-down” would have been a good translation if cognitive psychology hadn’t taken it over and given the phrase another meaning). Kurt Koffka referred to this as the whole being other than the sum of the parts (Koffka, 1935 [reprinted 1999], p. 174).

The basic thesis of gestalt theory might be formulated thus: there are contexts in which what is happening in the whole cannot be deduced from the characteristics of the separate pieces, but conversely, what happens to a part of the whole is, in clear-cut cases, determined by the laws of the inner structure of its whole.

(Wertheimer, 1924, p. 84)

Wertheimer (1959) has argued that we can solve problems by perceiving the whole problem situation rather than by following, perhaps blindly, a learned procedure, which he termed reproductive thinking. By following a learned procedure, the solver need not understand why the procedure works; in other words, no sense of meaning is involved. Understanding, on the other hand, requires an insight into the structure of a problem, thereby forming a complete Gestalt, which he termed productive thinking. Gestalt psychologists were also very interested in what prevented people from solving problems, including situations where what you have learned interfered with problem solving. Wertheimer also pointed out that the solver and the context were important, as problem solving takes place “within the general process of knowledge and insight, within the context of a broad historical development, within the social situation, and also within the subject’s personal life” (p. 240) – a view that prefigured many current approaches to problem solving. How we represent problems is discussed in Chapter 2 and how such representations relate to insight is discussed in Chapter 5.

Cognitive psychology and information processing

From the 1950s a “cognitive revolution” took place, stimulated by advances in neuroscience, linguistics, information theory and the relatively new appearance of the programmable computer. The study of problem solving became the study of how a variety of cognitive processes are exploited to attain our goals and thereby ensure our survival. Cognitive psychology deals with perceiving, allocating attentional resources, encoding relevant information, storing it, retrieving it under certain conditions, skill learning (automatisation) and expertise, mental representation and planning, conscious and unconscious influences on behaviour, language, decision making and so on. Each of those areas involves some kind of data or information that has to be processed: that is, there is a sequence of stages where information is transformed or encoded from one type of representation to another. For a written algebra word problem we have to detect the visual features of the letters, combine these to identify words using a stored orthographic lexicon (a mental store of word spellings), use grapheme-phoneme or spelling-sound correspondence to encode these as representations of the spoken word, use grammatical knowledge representations to make sense of sentences, and use pragmatic knowledge to make sense of what we are being asked to do. There is a constant to-and-fro movement of information stored in memory – concept-driven (top-down) processing and data-driven (bottom-up) processing – but we are aware only of the outcome of these, mostly unconscious, processes.

A computer is also an information processing system. As I type in these words the computer encodes them as strings of 0s and 1s. These strings can be understood as information. As I type I also make mistakes; for example, I frequently type “teh” for “the”. This means I have to go back, double-click on “teh” to highlight it and type over it. That is the observed effect; the computer, however, has to perform computations on the strings of 0s and 1s to perform that edit. I could also get the computer to go through the whole text replacing all instances of “teh” with “the”; once again the computer would perform computations on the strings of digits to perform that action. Performing computations on strings of digits is the way the computer processes information. The actual processes are invisible to the writer, but the effects are visible on the computer screen.
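The “teh” example can be made concrete with a short Python sketch. It shows the letters of “the” as the strings of 0s and 1s the computer actually stores (assuming a plain ASCII encoding), and a global find-and-replace carried out as a computation over the text. The word-boundary pattern `\bteh\b` is an illustrative choice, so that only the whole mistyped word is changed and not longer words that happen to contain those letters.

```python
import re

# How the word "the" is stored: one byte (eight binary digits) per letter in ASCII
bits = " ".join(f"{byte:08b}" for byte in "the".encode("ascii"))
print(bits)  # 01110100 01101000 01100101

# A global "replace all": every whole-word "teh" becomes "the"
draft = "Put teh kettle on and fetch teh tea."
corrected = re.sub(r"\bteh\b", "the", draft)
print(corrected)  # Put the kettle on and fetch the tea.
```

As in the text, the writer sees only the corrected sentence; the operations on the underlying bits are never displayed.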

The arrival of the digital computer allowed psychologists to describe human behaviour in terms of the encoding, storage, retrieval and manipulation of information, and to specify the mechanisms that are presumed to underlie these processes. A computer or any other “universal Turing machine” can be made to perform a huge number of actions by changing its software, so although the hardware and software are notionally different, they are both incorporated into the one information processing system. Sloman (e.g., 2009) has argued that biological evolution has produced many types of such “active virtual machines” before humans ever thought of them. For cognitive scientists, therefore,

understanding this is important (a) for understanding how many biological organisms work and how they develop and evolve, (b) for understanding relationships between mind and brain, (c) for understanding the sources and solutions of several old philosophical problems, (d) for major advances in neuroscience, (e) for a full understanding of the variety of social, political and economic phenomena, and (f) for the design of intelligent machines of the future.

(Sloman, 2008)

The first major paper using the language of information processing applied to organisms as well as to computers was produced by Newell, Shaw and Simon (1958). It followed on from their attempts at devising a program that solved problems in symbolic logic in the way humans are presumed to solve them. The aim of the theory outlined in their paper was to explain human problem solving behaviour, or indeed the problem solving behaviour of any organism, in terms of simple information processes. Newell and Simon’s later magnum opus, Human Problem Solving (1972), provided the basis for much subsequent research on problem solving, using information processing as the dominant paradigm (see Chapter 2).

Cognitive neuroscience

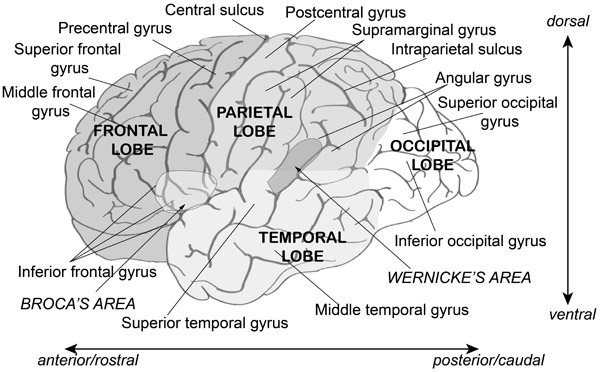

Several techniques are used to identify what’s going on in the brain, and where it’s happening, when people engage in cognitive tasks. One of the oldest is cognitive neuropsychology, where the functions of various brain areas can be identified as a result of some form of brain damage. A classic example is a deficit known as Broca’s aphasia, caused by damage – often due to a stroke – to a region in the lower part of the left frontal lobe (Broca’s area in Figure 1.5). Broca’s area and Wernicke’s area are the main language processing centres of the brain. People with damage to Broca’s area may struggle to produce coherent speech. The content words are there, but the syntactic glue that holds them together may be lost; speech comprehension, however, is usually largely preserved. Damage to Wernicke’s area can lead to an inability to comprehend speech. The patient’s speech may be grammatically correct, but the words used are largely meaningless. Similarly, damage to different brain areas can cause disruption to different types of problem solving.

The second main technique is brain imaging. There have been huge recent advances in imaging technology and in our understanding of molecular biology at the neuronal level that have allowed neuroscientists to watch what is going on in the brain as people perform complex tasks. When we think or plan, we are aware of our “mind” doing the thinking for us. Now we are able to see what the brain is up to when it generates our mind. Even areas such as human creativity can now be analysed in terms of the neuroanatomy involved, along with decision making, inspiration, reasoning, planning and problem solving. As a result we are at last beginning to answer the question posed by Anderson (2007): “How can the human mind occur in the physical universe?”

Verbal reports as data

If we are interested in thinking, it would seem to make sense to examine someone’s thinking by asking them to review and report their thoughts and feelings, a view that goes back thousands of years (e.g., Plato ca. 369 BCE). In the 1890s, Külpe and the Würzburg School assessed thinking by means of “systematic experimental introspection”, whereby participants were asked to give an account of their thoughts and feelings after taking part in an experiment. Introspection has been criticised as a methodology by several groups. The behaviourists, for example, criticised it for being unscientific. Cognitivists regarded it as an unsuitable method for accessing higher-order mental processes (e.g., Neisser, 1967). Social psychologists argued that it is potentially inaccurate, since what people think they are doing is not the same as what they are really doing (e.g., Nisbett & Wilson, 1977). Nisbett and Wilson (1977, p. 233) have argued that “the accuracy of subjective reports is so poor as to suggest that any introspective access that may exist is not sufficient to produce generally correct or reliable reports.” More recently, researchers and philosophers have argued that introspective reports still provide valuable information, even if causal connections cannot be introspected. (Goldman, 1993, has stated that no one had ever really argued that causal connections could be introspected in any case.) Such reports show how people deal with complex problems in everyday life and provide evidence of what metacognitive processes they are using (Funke, 2014; Jäkel & Schreiber, 2013).

A distinction should be drawn between introspection and think-aloud reporting. The use of verbal reports as data is based on the idea that human thinking can be construed as information processing. The human information processing system has a limited-capacity short-term working memory and a vast long-term memory. The limitations of short-term memory mean that we can attend to and store only a few things at a time (Miller, 1956). Furthermore, we tend to encode information primarily visually or phonologically (e.g., Baddeley, 1981, 2007) – we can see images in our mind’s eye and hear an inner voice. As with the computer, the processes that produce these images or voices are not accessible, since they are very fast and below the level of consciousness. What we do have access to are the results of those processes, and those results, Ericsson and Simon (1993) argued, can be verbalised. The job of the researcher is to analyse such verbal data in line with some theoretical rationale that allows the researcher to make inferences about the processes underlying the verbal report.

In the early behaviourist tradition, Watson (1920) regarded verbal reports as important forms of data: “The present writer has often felt that a good deal more can be learned about the psychology of thinking by making subjects think aloud about definite problems, than by trusting to the unscientific method of introspection” (p. 91). This is, at least, consistent with Watson’s view that thinking is a form of verbal behaviour. However, whereas he regarded problem solving as a situation that has to be worked out verbally, nowadays cognitive science is no longer reluctant to replace “verbally” with “mentally”. Nor are cognitivists averse to inferring mental processes from the verbal accounts people give while solving problems. Furthermore, since verbal reports display the sequential nature of problem solving, they can be used as the basis of computer models of problem solving.

Verbal protocols generally provide explicit information about the knowledge and information heeded in solving a problem rather than about the processes used. Consequently it is usually necessary to infer the processes from the verbal reports of information heeded instead of attempting to code processes directly.

(Simon & Kaplan, 1989, p. 23)

Ericsson and Simon’s (1993) theory of thinking aloud is described in more detail in Information Box 1.2.

Information Box 1.2 Ericsson and Simon’s (1993) theory of think-aloud protocols

Ericsson and Simon’s theory of thinking aloud asserts that there are three kinds of verbalisation. Type I verbalisations are direct verbalisations. This is where subjects simply speak out loud what their inner voice is “saying”. You can, for example, look up a phone number and keep it in short-term memory long enough to dial the number by rehearsing it; that is, by repeating it to yourself. It is quite easy therefore to say the number out loud since it is already in a verbal code in short-term memory. Similarly, most people would use a verbal code to solve a problem such as 48 × 24 in their heads. Saying it out loud instead is direct verbalisation. This kind of verbalisation does not involve reporting on one’s own thought processes, for example by saying things such as “I am imagining the 4 below the 8.” Type I direct verbalisations should not therefore interfere with normal problem solving by either slowing it down or affecting the sequence of problem solving steps.
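The 48 × 24 example can be worked in the head as shown below. This is one possible decomposition, assumed here for illustration. People use several strategies, and this one is not taken from Ericsson and Simon. A Type I protocol would consist of the solver simply voicing intermediate results such as 960 and 192 as they arise. It would not describe how the digits are arranged in the mind’s eye.

$$
\begin{aligned}
48 \times 24 &= 48 \times 20 + 48 \times 4 \\
&= 960 + 192 \\
&= 1152
\end{aligned}
$$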

Type II verbal reports involve verbally recoding the contents of short-term memory. This type of verbalisation does slow down problem solving to some extent, since it requires the subject to recode information. The most common example is where subjects are asked to verbalise their thoughts while performing an imagery task: describing an image involves recoding it into a verbal code. When the processing load becomes too great, subjects find it harder to verbalise, since verbalising uses up the attentional resources they are trying to devote to the imagery task (e.g., Kaplan & Simon, 1990). Ericsson and Simon’s theory predicts that Type II reports should not affect the sequence of problem solving.

Type III verbal reports involve explanations. Unlike Type I and Type II verbal reports, verbalisations that include explanations or reasons for doing something can have a strong effect on problem solving. Subjects instructed to give a verbal or written rationale for why they performed a particular action improved on their subsequent problem solving (Ahlum-Heath & DiVesta, 1986; Berry, 1983). Similarly, subjects asked to elaborate on a text aloud recalled more than a silent control group (Ballstaedt & Mandl, 1984). Elaborating and providing reasons benefit problem solving and recall, but such verbalisations are liable to disrupt the task in hand, because providing an explanation means interrupting the task to explain an action. Furthermore, we cannot always be sure that subjects’ explanations accurately reflect the processes they actually used. For these reasons, Type I and Type II protocols have been the most common in analysing human problem solving.

Artificial Intelligence (AI) models

To the extent that human thinking can be regarded as information processing, it should be possible to simulate or model human thinking on other information processing devices (e.g., Anderson, 1993; Cassimatis, Bello, & Langley, 2008; Cooper, 2002; Doumas & Hummel, 2005, 2012; Newell & Simon, 1972). The information processing device in most common use is the digital computer, and so it has been used to model aspects of human thinking. Such models are only as good as the theory behind them and can only be built if the theory is presented in enough detail. If our theory of human thinking is specific enough, then it can be used as the basis of a computer program that instantiates (incorporates in a concrete form) that theory. There are two main contenders for modelling the structure of the mind, each of which tends to emphasise different aspects of human cognition. Because they represent the structure of the mind, they are known as cognitive architectures.

To understand what a cognitive architecture is, one can think of the architecture of a house (Anderson, 1993, 2007). The structure of a house is designed to perform certain functions. The occupants of the house need to be protected from the rain, so some form of roof is required. Structures are needed to hold this roof up and walls are needed to keep the occupants warm. Certain parts of the house have to be reserved for certain activities: the occupants need somewhere to prepare food, to sleep, to relax and so on. There also needs to be some way of getting in and out, and there need to be windows to let the light in. Like a house, a cognitive architecture contains structures that are required to support cognition. Different parts have different functions; some parts might store information, others might process information coming from the outside and so on. In short, “A cognitive architecture is a specification of the structure of the brain at a level of abstraction that explains how it achieves the function of the mind” (Anderson, 2007, p. 7).

One architecture, known as production systems, places the emphasis on the fact that much of our behaviour, particularly problem solving behaviour, is rule-governed, or can be construed as being rule-governed. It is also often sequential in nature: we think of one thing after another. In the other corner is connectionism. This architecture tends to focus on the ways in which we can learn and spontaneously generalise from examples and instances, and recognise and categorise entities. Furthermore, we can access a whole memory from any part of it. This is different from the way a typical computer works, since a computer needs an “address” to be able to access a particular memory. General models of cognition tend to be hybrids in that they use both symbolic systems (see Chapter 2), usually production systems, and connectionist systems. Cognitive architectures are dealt with in more detail in Chapter 7.
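The Python sketch below makes the contrast more concrete. It is a minimal illustration under simplifying assumptions, not an implementation of any architecture discussed in Chapter 7.

The first half is a toy production system. Condition-action rules are checked in order and one rule fires per cycle, so behaviour unfolds sequentially. The rules themselves (halve an even number, otherwise multiply by three and add one) are invented purely to show rules firing.

The second half is a tiny Hopfield-style network, one simple kind of connectionist model. It retrieves a whole stored pattern when given only part of it. A Python list or dictionary, by contrast, needs an index or key to look something up.

The names `RULES`, `PATTERN_A`, `PATTERN_B` and `W` are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# --- Production system: condition-action rules applied one at a time ---
# Each rule: (name, condition on working memory, action that changes it).
Rule = Tuple[str, Callable[[Dict[str, int]], bool], Callable[[Dict[str, int]], None]]


def run_production_system(rules: List[Rule], memory: Dict[str, int],
                          max_cycles: int = 50) -> List[str]:
    """Recognise-act cycle: fire the first matching rule, then look again."""
    fired = []
    for _ in range(max_cycles):
        for name, condition, action in rules:
            if condition(memory):
                action(memory)
                fired.append(name)
                break
        else:  # no rule's condition matched, so the system halts
            break
    return fired


def halve(m: Dict[str, int]) -> None:
    m["n"] //= 2


def triple_plus_one(m: Dict[str, int]) -> None:
    m["n"] = 3 * m["n"] + 1


# Toy rules invented for illustration (not a model of any real task).
RULES: List[Rule] = [
    ("halve", lambda m: m["n"] % 2 == 0, halve),
    ("triple_plus_one", lambda m: m["n"] % 2 == 1 and m["n"] > 1, triple_plus_one),
]

# --- Content-addressable memory: a tiny Hopfield-style network ---
PATTERN_A = [1, 1, 1, 1, -1, -1, -1, -1]
PATTERN_B = [1, -1, 1, -1, 1, -1, 1, -1]


def train(patterns: List[List[int]]) -> List[List[int]]:
    """Hebbian weights: units that are active together get a positive connection."""
    n = len(patterns[0])
    return [[sum(p[i] * p[j] for p in patterns) if i != j else 0
             for j in range(n)] for i in range(n)]


def recall(weights: List[List[int]], cue: List[int], max_steps: int = 10) -> List[int]:
    """Complete a partial cue (0 = unknown) by repeatedly updating every unit."""
    state = list(cue)
    for _ in range(max_steps):
        new_state = [1 if sum(w * s for w, s in zip(row, state)) >= 0 else -1
                     for row in weights]
        if new_state == state:
            break
        state = new_state
    return state


W = train([PATTERN_A, PATTERN_B])
print(run_production_system(RULES, {"n": 6}))
print(recall(W, [1, 1, 1, 1, 0, 0, 0, 0]) == PATTERN_A)  # half the pattern retrieves the whole
```

Running it first prints the sequence of rules fired: `['halve', 'triple_plus_one', 'halve', 'triple_plus_one', 'halve', 'halve', 'halve', 'halve']`. It then prints `True`, because the first four elements of `PATTERN_A` were enough to recover the rest.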

Schemas

We are constantly being bombarded with potentially vast amounts of information about our surroundings. To cope with this, we are able to focus our attention on a very small subset of that information. If you wanted, you could switch your attention to your left foot, the noises around you, the colour and texture of the paper in front of you and so on. In order to make sense of the quantity of information available we rely on information already stored in memory. Here again, though, there is a vast store of information that could (potentially) be called up when needed. However, our memories would be unhelpful unless we had a way to organise them. One way in which the organisation of long-term memory can be understood is to assume a framework or semantic structure known as a schema.

To help explain what a schema is, take a few moments to draw a house, or try to imagine how you would draw one if you have no pencil and paper handy. If what you drew or imagined looks anything like a typical house, then you and I share the same schema for what a house looks like: houses have roofs, windows, walls and a door. It’s hard to imagine a house without one of these features. These are the “fixed values” that would be incorporated into a house schema. You can also use your knowledge of houses to understand me if I tell you I locked myself out. You can mentally represent my predicament because you know that houses have doors, doors have locks that need keys, and I don’t have my key. These are “default” assumptions you can make. So if information is missing from an account, you can fill in the missing bits from your general knowledge of houses – your house schema. Now it could be that I locked myself out because I have one of these fancy push-button locks and I forgot the number, but that is not what you would immediately assume. That would be a specific value that would replace the default value of a normal lock requiring a key.


Schemas have been proposed for a number of domains including problem solving. With experience of houses or cinemagoing or Distance = Rate × Time problems, you learn to recognise and classify them and to have certain expectations about what goes on in a cinema or what you are likely to have to do to solve a particular type of problem.
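A schema can be thought of as a structure with slots. The sketch below is a hypothetical Python illustration of the house example. It assumes that a schema can be reduced to two kinds of slot:

- fixed values, which every instance has;
- default values, which are assumed unless a specific value is supplied.

Real schema theories are considerably richer than this. The class and slot names are invented for the example.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class Schema:
    name: str
    fixed: Dict[str, Any]     # features every instance is assumed to have
    defaults: Dict[str, Any]  # assumptions made when information is missing

    def instantiate(self, **specific: Any) -> Dict[str, Any]:
        """Build a representation of one instance.

        Later entries win: defaults are replaced by any specific values
        supplied, but fixed values cannot be overridden.
        """
        return {**self.defaults, **specific, **self.fixed}


house = Schema(
    name="house",
    fixed={"roof": True, "walls": True, "windows": True, "door": True},
    defaults={"lock": "needs a key"},
)

# "I locked myself out": nothing is said about the lock, so the default is assumed.
print(house.instantiate()["lock"])                          # needs a key
# A specific value replaces the default.
print(house.instantiate(lock="push-button code")["lock"])   # push-button code
```

Putting the fixed values last in the merge is a design choice: it lets the sketch express the idea that a house without a door simply does not fit the schema.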

Categorising problems

To understand a problem means to mentally represent it in some way, and different people may represent the same problem in different ways depending on their knowledge, expertise and their ability to notice salient elements of a problem. Furthermore, different problem types may influence the way we represent them, so it would be useful to identify what it is about a problem type that causes us to think about it or tackle it in a particular way.

Before we look at how we might categorise problems, try some of the ones in Activity 1.1 as briefly as you like and, as you think about each one, think also about how you might try to solve it, and how you might categorise it (familiar/unfamiliar, hard/easy and so on).

Activity 1.1

- 1 A car travelling at an average speed of 40 mph reaches its destination after 5 hours. How far has it travelled?

- 2 The equation in Figure 1.6 is incorrect. Move one match to create a correct equation.

- 3 Solve: 3(x + 4) + x = 20

- 4 Write a reverse-funcall macro that calls a function with its arguments reversed (use &rest to handle the function’s arguments and ,@ to pass them to funcall):

  ? (rev-funcall #'list 'x 'y 'z 4 3 2 1)
  (1 2 3 4 Z Y X)

- 5 What economic policies should the government adopt?

- 6 A tourist in St Tropez wants to convert £100 to euros. If the exchange rate is €1.35 to the pound, how many euros will she get?

- 7 You are driving down the road in your car on a wild, stormy night when you pass by a bus stop and you see three people waiting for the bus:

- a An old lady who looks as if she is about to die;

- b An old friend who once saved your life;

- c The perfect partner you have been dreaming about.

Knowing that there can only be one passenger in your car, whom would you choose?

- 8 Some trucks are parked in parking bays in A in Figure 1.7a. Unfortunately, they have to be moved to lane C in Figure 1.7b.

Trucks can only move to a parking space of their own colour. You can only move one truck at a time.

- 9 A small country fell under the iron rule of a dictator. The dictator ruled the country from a strong fortress. The fortress was situated in the middle of the country, surrounded by farms and villages. Many roads radiated outward from the fortress like spokes on a wheel. A great general arose who raised a large army at the border and vowed to capture the fortress and free the country of the dictator. The general knew that if his entire army could attack the fortress at once it could be captured. His troops were poised at the head of one of the roads leading to the fortress, ready to attack. However, a spy brought the general a disturbing report. The ruthless dictator had planted mines on each of the roads. The mines were set so that small bodies of men could pass over them safely, since the dictator needed to be able to move troops and workers to and from the fortress. However, any large force would detonate the mines. Not only would this blow up the road and render it impassable, but the dictator would then destroy many villages in retaliation. A full-scale direct attack on the fortress therefore appeared impossible (Gick & Holyoak, 1980, p. 351). How did the general succeed in capturing the fortress?

- 10 How do I write a book on problem solving that everyone can understand?

- 11 “Your task is in two parts … One, to locate and destroy the landlines in the area of the northern MSR [main supply route, Iraq]. Two, to find and destroy Scud … We’re not really bothered how you do it, as long as it gets done” (McNab, 1994, p. 35).

- 12 The tale is told of a young man who once, as a joke, went to see a fortune teller to have his palm read. When he heard her predictions, he laughed and exclaimed that fortune telling was nonsense. What the young man did not know was that the fortune teller was a powerful witch, and unfortunately, he had offended her so much that she cast a spell on him. Her spell turned him into both a compulsive gambler and also a consistent loser. He had to gamble but he never won a penny. The young man may not have been lucky at cards, but it turned out that he was exceedingly lucky in love. In fact, he soon married a wealthy businesswoman who took great delight in accompanying him every day to the casino. She gave him money, and smiled happily when he lost it all at the roulette table. In this way, they both lived happily ever after. Why was the man’s wife so happy to see him lose?

A problem can be categorised according to:

- 1 Whether the problem tells you everything you need to know to solve it or whether you need to work out for yourself what you are supposed to do;

- 2 The prior knowledge required to solve it;

- 3 Whether you need to know a lot about the subject or domain the problem comes from (physics, chess, football, Hiragana, cake decorating, etc.) before you can solve it;

- 4 The nature of the goal involved;

- 5 Its complexity;

- 6 Whether it is the same as one you’ve solved before;

- 7 Whether it needs a lot of working out or whether you can solve it in one step if you could only think what that step was;

- 8 Whether there is only one solution or multiple possible solutions.

Well-defined and ill-defined problems

Problems such as problems 2 and 8 contain all the information needed to solve them, in that they describe the problem as it stands now (the initial state), what the situation should be when you have solved the problem (the goal state) and exactly what you have to do to solve it (the operations). In both problems the operation is move – move a match, move a single truck. You are also told exactly what you are not allowed to do (the operator restrictions). Because thinking is done in your head, the operations are performed by mental operators. Although multiplication, say, is an arithmetic operation, you still have to know about it in order to apply it. Operators are therefore knowledge structures.
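One way to see what it means for a problem to contain all this information is to write the four components down explicitly for a program. The Python sketch below uses a hypothetical toy problem, not one from Activity 1.1:

- initial state: the number 1;
- goal state: the number 10;
- operators: add one, and double;
- restriction: no state may exceed 12.

Breadth-first search is used here only as a simple way for a program to explore the possibilities. It is not a claim about how people search.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Problem:
    initial: int                               # initial state
    goal: int                                  # goal state
    operators: Dict[str, Callable[[int], int]]  # named operators
    allowed: Callable[[int], bool]             # operator restrictions on resulting states


def solve(problem: Problem) -> Optional[List[str]]:
    """Breadth-first search: return the shortest sequence of operator names, or None."""
    frontier = deque([(problem.initial, [])])
    seen = {problem.initial}
    while frontier:
        state, path = frontier.popleft()
        if state == problem.goal:
            return path
        for name, op in problem.operators.items():
            nxt = op(state)
            if problem.allowed(nxt) and nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, path + [name]))
    return None  # goal unreachable under the restrictions


toy = Problem(
    initial=1,
    goal=10,
    operators={"add one": lambda s: s + 1, "double": lambda s: s * 2},
    allowed=lambda s: s <= 12,
)
print(solve(toy))  # ['add one', 'double', 'add one', 'double']
```

The path found corresponds to 1, 2, 4, 5, 10. Knowing the operators is not the same as knowing which to apply, or in what order. That is exactly what the search has to work out.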

A problem that provides all the information required to solve it is well-defined. (A useful mnemonic is to remember that the initial state, goal state, operators and restrictions form the acronym IGOR.) Actually, although you may know where you are starting from and where you are going to and what actions to perform to get there, it is not quite true to say that you have been given all the necessary information, since you are not told what objects to perform the action on or in what order. For example, in the algebra problem (problem 3) there are four basic arithmetic operations: multiplication, division, addition and subtraction, but you might not know which of the operators to apply to get the answer, nor in what order to apply them.
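For problem 3, one sequence of operators that reaches the goal is shown below. Other orders are possible, and choosing among them is exactly the decision the solver faces:

$$
\begin{aligned}
3(x + 4) + x &= 20 \\
3x + 12 + x &= 20 && \text{(multiply out the brackets)} \\
4x + 12 &= 20 && \text{(add the } x \text{ terms)} \\
4x &= 8 && \text{(subtract 12 from both sides)} \\
x &= 2 && \text{(divide both sides by 4)}
\end{aligned}
$$

Substituting back gives 3(2 + 4) + 2 = 18 + 2 = 20, so the goal state has been reached.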

Problems 7 and 12 are different in that the initial state of the problem is given but you don’t know what the goal state looks like. In problem 12, the goal is to find a reason why the man’s wife is apparently happy to watch him lose money, but you are not told what operators to apply to solve the problem nor what the restrictions are, if any. Since these two elements are missing, this problem is ill-defined.

This way of categorising problems has also been referred to as well-structured or ill-structured, and these labels have sometimes been used synonymously with well-defined and ill-defined. However, you can have an initial state that is well-defined, as in a game of chess, but the problem itself remains ill-structured. According to Jonassen (1997):

Well-structured problems are constrained problems with convergent solutions that engage the application of a limited number of rules and principles within well-defined parameters. Ill-structured problems possess multiple solutions, solution paths, fewer parameters which are less manipulable, and contain uncertainty about which concepts, rules, and principles are necessary for the solution or how they are organized and which solution is best.

(p. 65)

For example, Simon (1973, p. 186) has argued that although the game of chess is well-defined, “playing a game of chess – viewing this activity as solving a single problem – involves continually redefining what the problem is,” which makes a game of chess ill-structured. Every move your opponent makes is literally a game changer, leaving you with a new problem to solve, and there is an immense number of possible solutions and solution paths.

Ill-structured problems may have a “hidden unknown”. In problem 12 the answer is in the problem statement: the gambler is a consistent loser, so anyone who bets against him will be a consistent winner – which is precisely what his wife does.

If you thought that problem 3 was well-defined then you are assuming that the solver knows what operators are available. You would also have to assume that the solver has a rough idea what the goal state should look like. In this case, if you can assume that the solver can readily infer the relevant missing bits of information (e.g., the operators, the goal state), then the problem is well-defined; otherwise the problem is ill-defined. For most of you, problem 4 is ill-defined since you probably haven’t a clue how to start writing a Lisp function, whereas for a Lisp programmer it may be perfectly obvious what the operators and goal are (although this does not of itself make the problem easy). As a result, problem definition is not one or the other but forms a continuum from well-defined to ill-defined and from well-structured to ill-structured (Simon, 1973). How problems are mentally represented is the topic of Chapter 2.

Experience needed to solve novel problems

Solving problems normally requires some factual knowledge (declarative knowledge) about a topic or knowledge domain, such as tyres are made of rubber, ovens can get hot, E = mc² and so on. With experience you can learn procedures – sequences of actions that you can take using this knowledge. So faced with a novel problem, we bring to bear our declarative and procedural knowledge in order to solve it. At times we may be unfamiliar with the problem or can remember nothing like it in the past. For example, problems 7 and 8 may be new to you. However, to solve them we can fall back on a few general strategies that have worked in the past. In problem 8 you might start moving trucks to see where it gets you and try to get closer and closer to the goal one step at a time. In problem 9 you might start generating hypotheses and testing them out and keep going till you find one that works. These strategies often work in a variety of spheres of knowledge, or domains, so they can be called domain general.
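The two domain-general strategies just described can be sketched in a few lines of Python. To keep the sketch self-contained, it uses problem 3 rather than problems 8 or 9.

- Generate-and-test tries candidate answers in turn until one passes.
- Hill climbing makes one small move at a time and keeps it only if it brings the current state closer to the goal.

The function names `generate_and_test`, `hill_climb` and `lhs` are illustrative, not standard library calls.

```python
from typing import Callable, Iterable, Optional


def lhs(x: int) -> int:
    """Left-hand side of problem 3: 3(x + 4) + x."""
    return 3 * (x + 4) + x


def generate_and_test(candidates: Iterable[int],
                      test: Callable[[int], bool]) -> Optional[int]:
    """Generate hypotheses one at a time and keep the first that passes the test."""
    for candidate in candidates:
        if test(candidate):
            return candidate
    return None


def hill_climb(start: int, distance: Callable[[int], int], max_steps: int = 100) -> int:
    """Take single steps (+1 or -1) only while they bring us closer to the goal."""
    current = start
    for _ in range(max_steps):
        best = min((current - 1, current + 1), key=distance)
        if distance(best) >= distance(current):
            break  # no neighbouring step improves matters, so stop here
        current = best
    return current


print(generate_and_test(range(-10, 11), lambda x: lhs(x) == 20))  # 2
print(hill_climb(0, lambda x: abs(lhs(x) - 20)))                  # 2
```

Both calls return 2. Hill climbing works here because every step towards x = 2 reduces the distance to the goal. On problems where the solver must temporarily move away from the goal, it can get stuck.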

To solve problems 4 or 5, on the other hand, you would need a fair amount of knowledge specific to that kind of problem. Most new problems in a familiar domain remind us of similar problems we have had experience with in the past, or they may trigger problem schemas for that category of problem. In such cases we can use domain-specific strategies: ones that work only in this particular type of situation. For example, if you have to organise your very first holiday in Crete, your strategy might be to go along to a travel agent. This strategy only works in the domain of organising holidays. The travel agent would be rather confused if your problem was what to eat at a dinner party.

Using examples encountered in the past is the topic of Chapter 3 and learning is the topic of Chapter 6.

Semantically rich and semantically lean problems

Another way of characterising problems is in terms of the body of knowledge a person brings to bear to solve them. When someone is presented with the puzzle in problem 8 for the first time, the puzzle is semantically lean as far as the solver is concerned; that is, there is very little knowledge or prior experience that the solver can call upon. The same would be true of someone learning to play chess for the first time without a large body of knowledge and experience on which to draw. For a chess expert, on the other hand, the game of chess is semantically rich and the expert can bring to bear a vast body of knowledge about varieties of opening moves, defensive positions, strategies, thousands of previously encountered patterns of pieces and even whole games (expertise is discussed in Chapter 8).

Different types of goal

Some problems explicitly state what the goal state looks like. The task is to find a means of getting there. The Parking Lot problem in problem 8 presents a picture of what the goal should look like, although a written statement of the goal would perform the same function. The problem here involves finding the procedure – the sequence of moves in this case – for getting there. The goal of the arithmetic problem in problem 1 is different in that the procedure for getting to the goal is not particularly important. It’s just asking for the answer. The algebra problem in problem 3 is also asking for an answer, but in the context of a schoolroom the teacher would probably expect to see the correct procedure written out as well. In all cases you need to evaluate the solution against the criteria in the problem statement. This is straightforward for problem 8 which provides a picture, but a major headache in, for example, problems 5 and 11 (about economic policies and destroying Scud missiles, respectively). There may be very many possible solutions to problem 5 and, whatever one is chosen, it may be very difficult to know if it is the best one. For example, a government may claim that the good economic performance of the country is due to their policies – their solution to economic problems – but since we only live in this one universe we may never know if the good economic performance is because of their policies, despite their policies, whether the performance would be just as good if they had done nothing, or would have been much better if they had done something else.

Problem 4 requires the solver to write a piece of code that generates the specified output. To find out whether the goal has been achieved, you would have to run the program and check that it produces the desired outcome. Once you have found an answer to the equation in problem 3 and you feel reasonably confident you have a correct answer, it may have to be checked by a teacher or by looking it up in the back of a textbook. Problem 10 about writing a problem solving book has a potentially infinite number of solutions that are probably rather hard to evaluate by the solver – it may well be an insoluble problem, unfortunately.
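Checking a program against its goal can itself be automated. The sketch below is a Python analogue of problem 4, written as an ordinary function rather than a macro because Python has no macros. The names `rev_funcall`, `as_list` and `goal_reached` are hypothetical. The goal test runs a candidate on the example from the problem statement and compares the result with the expected output. Lisp prints symbols in upper case, so the expected `Z Y X` appear here as the lowercase strings `"z"`, `"y"`, `"x"`.

```python
from typing import Any, Callable


def rev_funcall(f: Callable[..., Any], *args: Any) -> Any:
    """Call f with its arguments in reverse order (a function, not a macro)."""
    return f(*reversed(args))


def as_list(*xs: Any) -> list:
    """Stands in for Lisp's #'list: collect the arguments into a list."""
    return list(xs)


def goal_reached(candidate: Callable[..., Any]) -> bool:
    """Goal test: run the candidate on the example in problem 4 and compare outputs."""
    result = candidate(as_list, "x", "y", "z", 4, 3, 2, 1)
    return result == [1, 2, 3, 4, "z", "y", "x"]


print(goal_reached(rev_funcall))            # True
print(goal_reached(lambda f, *a: f(*a)))    # False: arguments not reversed
```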

In some cases there may be a strict limit on what you are allowed to do to attain your goal. In problem 11, although you can probably readily evaluate whether you have reached your goal or not, there are very few limits on how you get to it. You would probably impose your own constraints, such as avoiding getting caught, and you might want to add a further goal of getting out alive at the end. In fact, in real-world problems the solver usually has to define the problem components, and the potential goals may depend on the individual (Bassok & Novick, 2012).

Simple and complex problems

Some of the problems in the list are relatively simple; that is, they have a simple structure. Problems 2 and 8 have very simple rules and a clear description of how everything is set up at the beginning of the problem and of what the goal state should look like. Puzzle problems such as these are examples of knowledge-lean problems that require very little knowledge to solve them. Problem 4, on the other hand, is a knowledge-rich problem, as it requires a lot of previous knowledge of Lisp programming. If you have that knowledge, then it is probably a simple problem.

Complex problems have highly interrelated elements and the initial state may be “intransparent” (Fischer, Greiff, & Funke, 2012; Funke, 2012); that is, the solver may have to gain more information in order to define the problem and identify what the goal should look like, as not all relevant information is provided in the initial state (e.g., in problem 11). Such problems may also have a complex problem structure or involve multiple potentially interfering goals, and solving them requires prioritising those goals (Funke, 2012, p. 683). Complex problems are often semantically rich and knowledge-rich, requiring the acquisition of a degree of declarative and procedural knowledge about complex systems (tax law, thermodynamics, classical music, operating behind enemy lines, etc.). Problems in these areas require expertise, which is the subject of Chapter 6. How to help people cope with complex problems in terms of instructional design is the subject of Chapter 4.

Problems sharing the same structure

Have another look at problems 1 and 6. On the surface the problem about the distance travelled by the car and the problem about exchanging pounds sterling for euros seem completely different. However, both problems share the same underlying equation in their solution: a = b × c. In the car problem, the distance travelled = the speed the car travels × the time taken. In the currency problem, the number of euros = the rate of exchange × the number of pounds sterling. Although these problems differ in terms of their surface features, they both nevertheless share the same underlying structural features (see Chapter 3). Despite the fact that they both involve the same simple equation, the chances are that you wouldn’t have noticed their similarity until it was pointed out.
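The shared structure can be made explicit by writing the equation once and reusing it for both problems. This is a minimal Python sketch; the function name is hypothetical.

```python
def a_equals_b_times_c(b: float, c: float) -> float:
    """The structure shared by problems 1 and 6: a = b × c."""
    return b * c


distance_miles = a_equals_b_times_c(40, 5)   # speed (mph) × time (hours)
euros = a_equals_b_times_c(1.35, 100)        # exchange rate (€ per £) × pounds sterling

print(distance_miles)   # 200
print(f"{euros:.2f}")   # 135.00
```

Nothing in the function mentions cars or currency. Those are surface features, supplied only through the arguments.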

Multistep problems and insight

Some problems can be characterised by the fact that they have a crucial step necessary for their solution. Once that step has been taken, the solution becomes obvious either immediately or very quickly afterwards. These are referred to as insight problems (Chapter 5). You may have to think for a while about problem 7 – for example, you may imagine taking each individual in the car and examining the consequences – before you suddenly see the answer, probably because you have thought of the problem in a different way. Similarly in problem 2 you may have been shuffling the matchsticks around for a while before an answer suddenly hits you. Problem 12 may be puzzling for a long time before the penny drops.

The metaphors in the last three sentences (“you suddenly see the answer,” “an answer suddenly hits you,” “the penny drops”) serve to emphasise that insight is usually regarded as a sudden phenomenon in which the solution appears before you without any obvious step-by-step progression towards a solution, such as would be necessary in problems 3 and 8, say. It is because of the immediacy of the phenomenon of insight (sometimes referred to as the “Aha!” or “Eureka!” experience) that some psychologists, particularly the Gestalt psychologists in the first half of the 20th century, have regarded insight as something special in problem solving. Indeed, it was one of the first types of problem solving to be systematically studied.

One or more solutions

Some types of problems can be solved by following a clear and complete set of rules that will allow you, in principle, to get from the situation described at the start of the problem (the initial state) to the goal. Often these are well-structured problems leading to a single solution. Many “insight” or “lateral thinking” puzzles also have a single solution. In both cases, many of these problems (but not all) are also knowledge-lean, requiring very little domain knowledge to solve them. Most problems we encounter every day, by contrast, have multiple potential solutions.

No matter what kind of problem we are faced with, the first step is to try to represent the problem in a way that allows us to proceed. This is the topic of Chapter 2.

Summary

- 1 Problem solving involves finding your way towards a goal. Sometimes the goal is clearly defined, and sometimes you will recognise it only when you see it. Problem solving has been examined from a variety of perspectives, including (but not restricted to):

- Behaviourists (e.g., Thorndike, Watson, Skinner, Davis);

- Gestalt psychologists (e.g., Koffka, Duncker, Wertheimer);

- Educationalists and educational psychologists (e.g., Polya, Schoenfeld, Bodner, Geary);

- Cognitive psychologists (e.g., Anderson, Jonassen, Newell, Simon, Sweller).

- 2 Approaches to investigating problem solving include:

- Experimentation, using classical laboratory experiments in which variables are controlled;

- Analysis of verbal protocols, where people talk aloud while solving problems and the resulting protocol is then analysed;

- Artificial intelligence models, where theories of human problem solving are built into a computer program and tested by running the program. Different types of “architecture” can model different aspects of human thinking.

- 3 Schemas are semantic memory structures that organise long-term memory so that we can make sense of and retrieve information, and make predictions and assumptions about things and events. In problem solving, the relevant schemas usually represent problem types.

- 4 Problems can be categorised as being:

- Knowledge-lean, where little prior knowledge is needed to solve them; you may be able to get by using only domain-general knowledge – general knowledge of strategies and methods that applies to many types of problem;

- Knowledge-rich, where a lot of prior knowledge is usually required; such knowledge is domain specific – it applies only to that domain;

- Well-defined, where all the information needed to solve it is either explicitly given or can be inferred;

- Ill-defined, where some aspect of the problem, such as what you are supposed to do, is only vaguely stated;

- Semantically lean, where the solver has little experience of the problem type;

- Semantically rich, where the solver can bring to bear a lot of experience of the problem type;

- Insight problems, where the solution is usually preceded by an “Aha!” experience.

References

Ahlum-Heath, M. E., & DiVesta, F. J. (1986). The effects of conscious controlled verbalization of a cognitive strategy on transfer in problem solving. Memory and Cognition, 14, 281–285.

Anderson, J. R. (1980). Cognitive Psychology and Its Implications. New York: Freeman.

Anderson, J. R. (1993). Rules of the Mind. Hillsdale, NJ: Erlbaum.

Anderson, J. R. (2000a). Cognitive Psychology and Its Implications (5th ed.). New York: W. H. Freeman.

Anderson, J. R. (2000b). Learning and Memory: An Integrated Approach. New York: Wiley.

Anderson, J. R. (2007). How Can the Human Mind Occur in the Physical Universe? Oxford: Oxford University Press.

Arlin, P. K. (1990). Wisdom: The Art of Problem Finding. Cambridge: Cambridge University Press.

Baddeley, A. D. (1981). The concept of working memory: A view of its current state and probable future development. Cognition, 10, 17–23.

Baddeley, A. D. (2007). Working Memory, Thought and Action. Oxford: Oxford University Press.

Ballstaedt, S.-P., & Mandl, H. (1984). Elaborations: Assessment and analysis. In H. Mandl, N. L. Stein, & T. Trabasso (Eds.), Learning and Comprehension of Text (pp. 331–353). Hillsdale, NJ: Erlbaum.

Bassok, M., & Novick, L. R. (2012). Problem solving. In K. J. Holyoak & R. G. Morrison (Eds.), The Oxford Handbook of Thinking and Reasoning (pp. 413–432). New York: Oxford University Press.

Berry, D. C. (1983). Metacognitive experience and transfer of logical reasoning. Quarterly Journal of Experimental Psychology Section A: Human Experimental Psychology, 35A(1), 39–49.

Bodner, G. M. (1990). Toward a unified theory of problem solving: A view from chemistry. In M. U. Smith & V. L. Patel (Eds.), Toward a Unified Theory of Problem Solving: Views From the Content Domain (pp. 21–34). Hillsdale, NJ: Lawrence Erlbaum.

Carlson, M. P., & Bloom, I. (2005). The cyclic nature of problem solving: An emergent multidimensional problem-solving framework. Educational Studies in Mathematics, 58, 45–75.

Cassimatis, N. L., Bello, P., & Langley, P. (2008). Ability, breadth, and parsimony in computational models of higher-order cognition. Cognitive Science, 32(8), 1304–1322.

Cooper, R. P. (2002). Modeling High-Level Cognitive Processes. Mahwah, NJ: Lawrence Erlbaum.

Davis, G. A. (1973). Psychology of Problem Solving. New York: Basic Books.

Diamond, J. (1992). The Rise and Fall of the Third Chimpanzee. London: Vintage.

Doumas, L. A. A., & Hummel, J. E. (2005). Approaches to modeling human mental representations: What works, what doesn’t, and why. In K. J. Holyoak & R. G. Morrison (Eds.), The Cambridge Handbook of Thinking and Reasoning (pp. 73–91). Cambridge: Cambridge University Press.

Doumas, L. A. A., & Hummel, J. E. (2012). Computational models of higher cognition. In K. J. Holyoak & R. G. Morrison (Eds.), The Oxford Handbook of Thinking and Reasoning (pp. 52–66). New York: Oxford University Press.

Duncker, K. (1945). On problem solving. Psychological Monographs, 58 (Whole no. 270).

Ericsson, K. A., & Simon, H. A. (1993). Protocol Analysis: Verbal Reports as Data (revised ed.). Cambridge, MA: MIT Press.

Fischer, A., Greiff, S., & Funke, J. (2012). The process of solving complex problems. Journal of Problem Solving, 4(1). doi:10.7771/1932-6246.1118

Frensch, P. A., & Funke, J. (1995). Definitions, traditions, and a general framework for understanding complex problem solving. In P. A. Frensch & J. Funke (Eds.), Complex Problem Solving: The European Perspective (pp. 3–26). Hove, UK: Lawrence Erlbaum.

Funke, J. (2012). Complex problem solving. In N. M. Seel (Ed.), Encyclopedia of the Sciences of Learning (pp. 682–685). Heidelberg: Springer Verlag.

Funke, J. (2014). Problem solving: What are the important questions? Paper presented at the 36th Annual Conference of the Cognitive Science Society, Austin, TX.

Geary, D. C. (2008). An evolutionarily informed education science. Educational Psychologist, 43(4), 179–195. doi:10.1080/00461520802392133

Getzels, J. W. (1979). Problem finding: A theoretical note. Cognitive Science, 3, 167–172.

Gick, M. L., & Holyoak, K. J. (1980). Analogical problem solving. Cognitive Psychology, 12(3), 306–356.

Gilhooly, K. J. (1996). Thinking: Directed, Undirected and Creative (3rd ed.). London: Academic Press.

Goldman, A. I. (Ed.). (1993). Readings in Philosophy and Cognitive Science. Cambridge, MA: MIT Press.

Hayes, J. R. (1989). The Complete Problem Solver (2nd ed.). Hillsdale, NJ: Erlbaum.

Hull, C. L. (1934). The concept of the habit-family hierarchy and maze learning. Psychological Review, 41, 33–54.

Jäkel, F., & Schreiber, C. (2013). Introspection in problem solving. Journal of Problem Solving, 6(1), 20–33.

Jonassen, D. H. (1997). Instructional design models for well-structured and ill-structured problem-solving learning outcomes. Educational Technology Research and Development, 45(1), 65–94.

Kaplan, C. A., & Simon, H. A. (1990). In search of insight. Cognitive Psychology, 22(3), 374–419. doi:10.1016/0010-0285(90)90008-R

Koffka, K. (1935, reprinted 1999). Principles of Gestalt Psychology. London: Routledge.

Maltzman, I. (1955). Thinking: From a behavioristic point of view. Psychological Review, 62(4), 275–286. doi:10.1037/h0041818

McNab, A. (1994). Bravo Two Zero. London: Corgi.

Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63, 81–97.

Neisser, U. (1967). Cognitive Psychology. New York: Appleton-Century-Crofts.

Newell, A., Shaw, J. C., & Simon, H. A. (1958). Elements of a theory of human problem solving. Psychological Review, 65, 151–166.

Newell, A., & Simon, H. A. (1972). Human Problem Solving. Upper Saddle River, NJ: Prentice Hall.

Nisbett, R. E., & Wilson, T. D. (1977). Telling more than we can know: Verbal reports on mental processes. Psychological Review, 84, 231–259.

Polya, G. (1957). How to Solve It: A New Aspect of Mathematical Method. Princeton, NJ: Princeton University Press.

Reese, H. W. (1994). Cognitive and behavioral approaches to problem solving. In S. C. Hayes, L. J. Hayes, M. Sato, & K. Ono (Eds.), Behavior Analysis of Language and Cognition (pp. 197–258). Reno, NV: Context Press.

Schoenfeld, A. H. (1983). Beyond the purely cognitive: Belief systems, social cognitions, and metacognitions as driving forces in intellectual performance. Cognitive Science, 7(4), 329–363.

Simon, H. A. (1973). The structure of ill-structured problems. Artificial Intelligence, 4, 181–201.

Simon, H. A., & Kaplan, C. A. (1989). Foundations of cognitive science. In M. I. Posner (Ed.), Foundations of Cognitive Science (pp. 1–47). Cambridge, MA: MIT Press/Bradford Books.

Skinner, B. F. (1984). An operant analysis of problem solving. Behavioral and Brain Sciences, 7, 583–613.

Sloman, A. (2008). Why Virtual Machines Really Matter – For Several Disciplines. Retrieved from http://www.cs.bham.ac.uk/research/projects/cogaff/misc/talks/information.pdf

Sloman, A. (2009). What cognitive scientists need to know about virtual machines. Paper presented at the 31st Annual Conference of the Cognitive Science Society, Vrije Universiteit Amsterdam, Netherlands.

Thorndike, E. L. (1898). Animal intelligence: An experimental study of the associative process in animals. Psychological Review Monograph, 2(8), 551–553.

Watson, J. B. (1920). Is thinking merely the action of language mechanisms? British Journal of Psychology, 11, 87–104.

Wertheimer, M. (1924). “Über Gestalttheorie”. Lecture before the Kant Gesellschaft. Reprinted in translation in W. D. Ellis (Ed.), A Source Book of Gestalt Psychology (1938) (pp. 1–11). New York: Harcourt Brace.

Wertheimer, M. (1959). Productive Thinking. New York: Harper and Row.

Wheatley, G. H. (1984). Problem Solving in School Mathematics (MEPS Technical Report 84.01). West Lafayette, IN: Purdue University, School Mathematics and Science Center.