Memory management primarily deals with the administration of available memory and the prediction of additional memory required for smoother and faster execution of functions. The current section will cover the concept of memory allocation, which deals with storage of an object in the R environment.

During memory allocation R allocates memory differently to different objects in its environment. Memory allocation can be determined using the object_size function from the pryr package. The pryr package can be installed from the CRAN repository using install.packages("pryr"). The package is available for R (≥ 3.1.0). The object_size function in pryr is similar to the object.size function in the base package. However, it is more accurate as it takes into account the:

- Environment size associated with the current object

- Shared elements within a given object under consideration.

The following are examples of using the object_size function in R to evaluate memory allocation:

> object_size(1) ## Memory allocated for a single numeric vector 48 B > object_size("R") ## Memory allocated for a single character vector 96 B > object_size(TRUE) ## Memory allocated for a single logical vector 48 B > object_size(1i) ## Memory allocated for a single complex vector 56 B

The storage required by an object can be attributed to the following parameters:

- Metadata: Metadata of an object is defined by the type of object used such as character, integers, logical, and so on. The type can also usually be helpful during debugging.

- Node pointer: The node pointer maintains the link between the different nodes, and depending on the number of node pointers used, memory requirement changes. For example, a doubly linked list requires more memory than a singly linked list, as it uses two node pointers to connect to the previous and next nodes.

- Attribute pointer: Pointer to keep reference for attributes; this helps to reduce memory allocation, especially the data stored by a variable.

- Memory allocation: Length of the vector representing the currently used space.

- Size: Size represent the true allocated space length of the vector.

- Memory padding: Padding applied to a component, for example, each element begins after an 8-byte boundary.

The Object_size() command is also used to see the inherent memory allocation as shown in the following table:

Figure 2.2: Memory allocated during initialization of different data types in R

The preceding table shows inherent memory allocated by each data structure/type.

Let's simulate scenarios with varying lengths of a vector with different data types such as integer, character, Boolean, and complex. The simulation is performed by varying vector length from 0 to 60 as follows:

> vec_length <- 0:60 > num_vec_size <- sapply(vec_length, function(x) object_size(seq(x))) > char_vec_size <- sapply(vec_length, function(x) object_size(rep("a",x))) > log_vec_size <- sapply(vec_length, function(x) object_size(rep(TRUE,x))) > comp_vec_size <- sapply(vec_length, function(x) object_size(rep("2i",x)))

Num_vec_size computes the memory requirement for each numeric vector from zero to 60 number of elements. These elements are integers increasing sequentially, as stated in the function. Similarly, incremental memory requirements are calculated for character (char_vec_size), logical (log_vec_size), and complex (comp_vec_size) vectors. The result obtained from the simulation can be plotted using code:

> par(mfrow=c(2,2)) > plot(num_vec_size ~ vec_length, xlab = "Numeric seq vector", ylab = "Memory allocated (in bytes)", + type = "n") > abline(h = (c(0,8,16,32,48,64,128)+40), col = "grey") > lines(num_vec_size, type = "S")

The result obtained on running the preceding code is shown in Figure 2.3. It can be observed that memory allocated to a vector is a function of its length and the object type used. However, the relationship does not seem to be linear rather, it seems to increase in step. This is due to the fact that for better and consistent performance, R initially assigns big blocks of memory from RAM and handles them internally. These memory blocks are individually assigned to vectors based on the type and the number of elements within. Initially, memory blocks seem to be irregular towards a particular level (128 bytes for numeric/logical vector, and 176 bytes for character/complex vectors), and later become stable with small increments of 8 bytes as can be seen in the plots:

Figure 2.3: Memory allocation based on length of vector

Due to initial memory allocation differences, numeric and logical vectors show similar memory allocation patterns, and complex vectors behave similarly to the character vectors. Memory management helps to efficiently run an algorithm however before the execution of any program, we should evaluate it based on its runtime. In the next sub-section, we will discuss the basic concepts involved in obtaining the runtime of any function, and its comparison with similar functions.

System runtime is essential for benchmarking different algorithms. The process helps us to compare different options, and to pick the best algorithm. Benchmarking of different algorithms will be dealt with in detail in subsequent chapters.

The microbenchmark package on CRAN is used to evaluate the runtime of any expression/function/code at an accuracy of a sub-millisecond. It is an accurate replacement to the system.time() function. Also, all the evaluations are performed in C code to minimize any overhead. The following methods are used to measure the time elapsed:

- The

QueryPerformanceCounterinterface on Windows OS - The

clock_gettimeAPI on Linux OS - The

mach_absolute_timefunction on MAC OS - The

gethrtimefunction on Solaris OS

In our current example, we will be using the mtcars data, which is in the package datasets. This data is obtained from 1974 Motor Trend US magazine, which comprises of fuel consumption comparison along with 10 automobile designs and the performance of 32 automobiles (1973-74 models).

Now, we would like to perform an operation in which a specific numeric attribute (miles per gallon (mpg) needs to be averaged to the corresponding unique values in an integer attribute (carb means no of carburetors). This can be performed using multiple ways such as aggregate, group_by, by, split, ddply(plyr), tapply, data.table, dplyr, sqldf, dplyr and so on. For illustration, we have used the following four ways:

aggregatefunction:aggregate(mpg~carb,data=mtcars,mean)ddplyfromplyrpackage:ddply( mtcars, .(carb),function(x) mean(x$mpg))data.tableformat:library(data.table) mtcars_tb = data.table(mtcars) mtcars_tb[,mean(mpg),by=carb]

group_byfunction:library(dplyr) summarize(group_by(mtcars, carb), mean(mpg))

Then, microbenchmark is used to determine the performance of each of the four ways mentioned in the preceding list. Here, we will be evaluating each expression 100 times.

> library(microbenchmark) > MB_res <- microbenchmark( + Aggregate_func=aggregate(mpg~carb,data=mtcars,mean), + Ddply_func=ddply( mtcars, .(carb),function(x) mean(x$mpg)), + Data_table_func = mtcars_tb[,mean(mpg),by=carb], + Group_by_func = summarize(group_by(mtcars, carb), mean(mpg)), + times=1000 + )

The output table is as follows:

The output plot demonstrating distribution of execution time from each approach is shown in Figure 2.4:

> library(ggplot2) > autoplot(MB_res)

Figure 2.4: Distribution of time (microseconds) for 1000 iterations in each type of aggregate operation

Among these four expressions and for the given dataset, data.table has performed effectively in less possible time as compared to the others. However, expressions need to be tested under scenarios with a high number of observations, high number of attributes, and prior to finalizing the best operator.

Based on the performance in terms of system runtime, a code can be classified under best, worst or average category for a particular algorithm. Let's consider a sorting algorithm to understand this in detail. A sorting algorithm is used to arrange a numeric vector in an ascending order, wherein the output vector should have the smallest number as its first element and largest number as its last element with intermediate elements in subsequent increasing order. Currently, we will be implementing insertion sorting, however Chapter 5, Sorting Algorithms, will cover various types of sorting algorithms in detail. In insertion sorting algorithm, the elements within a vector are arranged based on moving positions. The best, worst and average cases are data dependent. Now, let's define best, worst and average case scenarios for insertion sorting algorithm.

- Best case: A best case is one which requires the least running time. For example-a vector with all elements arranged in increasing order requires the least amount of time for sorting.

- Worst case: A worst case is one which requires the maximum possible runtime to complete sorting a vector. For example-a vector with all the elements sorted in decreasing order requires the most amount of time for sorting.

- Average case: An average case is one which requires an intermediate time to complete sorting a vector. For example-a vector with half elements sorted in increasing order and the remaining in decreasing order. An average case is assessed using multiple vectors of differently arranged elements.

Generally, the best-case scenarios are not considered to benchmark an algorithm, since it evaluates an algorithm most optimistically. However, if the probability of occurrence of best case is high, then algorithms can be compared using the best-case scenarios. Similar to best-case, worst-case scenarios evaluate the algorithm most pessimistically. It is only used to benchmark algorithms which are used in real-time applications, such as railway network controls, air traffic controls, and the like. Sometimes, when we are not aware of input data distributions, it is safe to assess the performance of the algorithm based on the worst-case scenario.

Most of the time, average-case scenario is used as a representative measure of algorithm's performance; however, this is valid only when we are aware of the input data distribution. Average-case scenarios may not evaluate the algorithm properly if the distribution of input data is skewed. In the case of sorting, if most of the input vectors are arranged in descending order, the average-case scenario may not be the best form of evaluating the algorithm.

In a nutshell, realtime application scenarios, along with input data distribution, are major criterions to analyze the algorithms based on best, worst, and average cases.

This section primarily deals with details on the trade-off between a computer's configuration and an algorithm's runtime. Let's consider two computers A and B, with B being 10 times faster than A, along with an algorithm whose system runtime is around 60 minutes in computer A for a dataframe of 100,000 observations. The functional form of algorithm's system runtime is n3*. However, this functional form can be considered as an equivalent to the growth in the number of operations required to complete the running of the algorithm. In other words, the functional form of system runtime and the growth rate is same. The following situations will help us understand the trade-off in detail:

Situation 1: Will computer B, which is ten times faster than computer A, be able to reduce the system runtime of the algorithm to six minutes from the current 60 minutes?

This is perhaps yes, provided the size of the dataset remains consistent in both computers A and B. However, if we increase the size of the dataframe by 10 times, the following situation arises.

Situation 2: Will the algorithm in computer B be able to run the increased dataframe of 1,000,000 observations in 60 minutes, as computer B is 10 times faster than computer A?

This becomes tricky, as we are dealing not only with a change in computer configuration but also change in the size of input data, which makes the algorithm perform non-linearly (in our case-cubic form). The following table elucidates the capacity of computer B, which is 10 times faster than computer A, to handle the increase in size of the input dataframe, which can be run in a fixed time period for a given functional form of the algorithm's growth rate. Assume that computer A can perform 100,000 operations in 60 minutes, whereas computer B can perform 1,000,000 operations in 60 minutes. The *k is a constant positive real number ∼ same time period of x minutes for computer A and computer B.

Figure 2.5: Performance comparison of widely used growth rate functions using two different sizes of dataframes

Let's understand each functional form of algorithm's growth rate:

- Linear form: From Figure 2.5, it can be seen that for any constant k, computer B can process 10 times bigger input dataframe within the same time period of 60 minutes. In other words, the processing speed of an algorithm with a linear runtime functional form is independent of the constant k, which affects the runtime behavior of the absolute size of input data. Also, for a given fixed runtime, if a system is i times faster than another system, then the data handling capacity of the faster system is also i times higher than the slower system. Hence, relative performance of the two computers is independent of the algorithm's growth rate constant k.

- Square and cubic form: We can see that for any constant k, computer B can process only the square root of 10 (3.16) and cube root of 10 (2.15) times the input dataframe within the same time period of 60 minutes. Here also, the performance of computer B is not affected by the constant k, which affects the absolute size of the input data size. In other words, computer B, which is 10 times faster than computer A, can run only 3.6 (square root of performance increase in case of square function form) times of the data in a given fixed time period, unlike 10 times as in the case of linear form. Hence, as computers perform much faster, the benefit attained towards the size of input data becomes highly disproportionate due to the inherent nature of ith root (where i is 2,3,4, and so on).

- Logarithmic form: For this functional form two variants are widely used:

- Log(n): The increment in size of the input dataframe is dependent on two factors-one being the increment in the system's computing performance, and other being the constant k. However, disparity between the system's increase in computing configuration and its performance continues as the increase in size of input data is directly proportional to kth root of increment in the system's performance.

- nLog(n): The enhancement in handling higher input data size upon increase in the system's computing performance is greater than the improvement obtained using the quadratic functional form, but lower than algorithms with a linear functional form.

- Power form (exponential): In power form, the system runtime of the algorithm increases exponentially upon increase in size of input data. For k= 1, the size of the input data to perform 100,000 operations in computer A is ∼11. Similarly, the size of the input data to perform 1,000,000 operations in computer B is ∼14. Hence, n2 = n1 + 3. This clearly shows that a system with 10 times increase in performance can handle only a marginal increase in data size within a given, fixed runtime period. The increase in size of the input data for an algorithm with an exponential or power functional form is almost additive rather than multiplicative. In other words, if the algorithm in computer A has a system runtime of 60 minutes for a data size of 100,000 observations, then computer B, which is 10 times faster than computer A, can run only an input data of size 100,003 observations in 60 minutes. Thus, the performance of algorithms with an exponential functional form is much different than the remaining growth functional forms.

Now, let's dive deep into situation 3, which deals with comparing the trade-off between algorithms and computers.

Situation 3: Which scenario is better for an algorithm with a growth rate functional form of n3- to increase the computer's performance capability,or to reconfigure the algorithm to change its growth rate functional form?

As we have already assessed the scenario of increasing the system's performance capability under Situation 2, let's now try to analyze the situation of reconfiguring the algorithm's growth rate functional form.

Currently, our algorithm possesses a functional form of n3. For an input data of size 1,000, the total number of operations required is 1,0003. Suppose, if the current algorithm can be reconfigured to nLog10(n), then the total number of operations would reduce to 3,000, which is much lower than 1,0003. As the number of operations using n3 is more than 10 times the number of operations using nLog10(n) for every n>2, it is more advisable to reconfigure the growth functional form of the algorithm rather than increase the computational performance capability by 10 times.

To summarize the trade-off:

- Algorithms with slower growth rate show a better performance in handling larger data observations upon upgrading the computer's computational configuration

- The rate of handling larger data sets by algorithms with a faster growth rate may not be proportionately handled upon increasing the computer's computational capability

As we learned earlier, an algorithm is a step by step procedure designed to analyze and compute a given problem in a language understandable by a computer. Asymptotic analysis of an algorithm is a mathematical representation to determine its runtime performance or growth rate with the necessary boundary conditions. The boundary conditions depend on factors such as computer configurations, growth in the size of input data, coefficient of the growth rate function (also referred to as constant (k) in section Computer versus algorithm), and others. However, the capability to handle larger data sets is more dependent on the increment in computational performance of computers rather than on the constant term in the growth rate functional form. Also, the curves of different growth rate functional forms do intersect irrespective of the value of the constant in those equations. Thus, the constants in the growth rate or system runtime functional forms are generally ignored while comparing performances at computer level or at the algorithm level. Nevertheless, it is desirable to consider constants in the following situations:

- If the data size is very small, and the algorithm is designed optimally for larger datasets.

- If we need to compare algorithms whose constants differ by a very large factor. However, this happens very rarely, since the algorithms with a very slow growth rate are generally not considered.

Asymptotic analysis is also used to determine the best, worst, and average case of an algorithm, as it is a function of input size which evaluates the runtime of the algorithm. For example, the performance of a sorting algorithm can be evaluated using the incremental length of input vectors. The following are asymptotic functions for standard insertion sorting and merge sorting:

- Standard insertion sorting: f(n) = α+ c*n2

- Standard merge sorting: f(n) = α + c*n*log2(n)

In the preceding two functions, α and c are constants and n is the length of the input vector.

One needs to bear in mind that asymptotic analysis provides only a ballpark estimation of the algorithm's performance in terms of system runtime consumption.

The following asymptotic notations are commonly used to determine the complexity in calculating the runtime of an algorithm.

The upper bound of an algorithm's running time is denoted as O. It is used in evaluating worst-case scenarios, and determines the longest running time for any given length of an input vector. In other words, it is the maximum growth rate of an algorithm.

Figure 2.6: f(n) is Big O of g(n) for all n>k

Let us consider two functions, f and g, which determine an algorithm's runtime t based on varying input vector length n. These functional forms f(n) and g(n) should be non-negative or non-decreasing, because as the length of the input vector increases, the running time of the algorithm practically increases. These functional forms are equivalent to the running time of best, average, and worst-case scenarios of any given algorithm.

As we can see, initially c*g(n) is lower than f(n) for values of n<k, and subsequently, c*g(n) is higher than f(n) for n>k. Thus, the upper bound of the algorithm can be represented as follows:

f(n) = O(g(n)) that is-f(n) < c*g(n) for n>k>0 and c>0.

Therefore an algorithm with a growth rate f(n) is known as Big O of g(n) only when f(n) executes faster than g(n) for all possible inputs n (n>k>0) and any constant c (c>0).

Now, let's consider an algorithm whose running time can be expressed as f(n) of polynomial order 2, and we need to determine g(n), which represents the upper bound for f(n):

f(n) = 25 + 12n + 32n2+ 4*log(n)

Now, for every n>0:

f(n) < 25 n2 + 12 n2 + 32n2+ 4 n2

f(n) < (25+ 12+ 32+ 4)n2

f(n) = O(n2), wherein g(n) is n2 and c=(25+12+32+4)

However, there exists a limitation with this approach. If the coefficient of the linear function is very high, then a polynomial of higher order or an exponential with a smaller coefficient is preferred in practical scenarios.

The following are some of the growth orders widely used to assess an algorithm's performance. Both 2O(n) and O(2n) yield different results and different interpretations as shown in the following figure:

Figure 2.7: Big O representation of various growth order functions

The lower bound of an algorithm's running time is denoted as Ω. It is used in evaluating the least running time of an algorithm, or the best-case scenario for any given length of input vector. In other words, it is the minimum growth rate of an algorithm.

Figure 2.8: f(n) is Big- Ω of g(n) for all n>k

Let us consider two non-negative and non-decreasing functions f(n) and g(n), which determine an algorithm's runtime t based on a varying input vector length n. These functional forms are an equivalent to the running time of best, average, and worst-case scenarios of any given algorithm.

As we can see, initially c*g(n) is higher than f(n) for values of n<k, and subsequently, c*g(n) becomes lower than f(n) for n>k. Thus, the lower bound of the algorithm can be represented as follows:

f(n) = Ω (g(n)) that is-f(n) > c*g(n) for n>k>0 and c>0.

Hence an algorithm with growth rate f(n) is known as Big O of g(n) only when f(n) executes faster than g(n) for all possible input n (n>k>0) and any constant c (c>0).

Now, let's consider an algorithm whose running time can be expressed as f(n) of polynomial order 2, and we need to determine g(n), which represents the lower bound for f(n):

f(n) = 25 + 12n + 32n2+ 4*log(n)

Now for every n>0, the largest of the lower bound is as follows:

f(n) > 25 n2

f(n) > Ω (n2) wherein g(n) is n2 and c=25

The smallest of lower bound is as follows:

f(n) > 25

f(n) > Ω (25)

Here, g(n) is a constant and c=25.

As you just learned, about O and Ω, which describe the upper (maximum) and lower (minimum) bound of an algorithm's running time respectively, θ is used to determine both the upper and lower bound of the algorithm's runtime, using the same function. In other words, it is asymptotically tight bound on the running time. Asymptotically because it is significant only for large number of observations, and tight bound because the running time is within constant factor bounds:

Figure 2.9: f(n) is Big- θ of g(n) for all n>k

Let us consider two non-negative and non-decreasing functions f(n) and g(n) which determine an algorithm's run time t based on varying input vector length n.

Then, for every n>k>0 and c>0,

f(n) = θ(g(n)) if and only if O(g(n)) = Ω (g(n)).

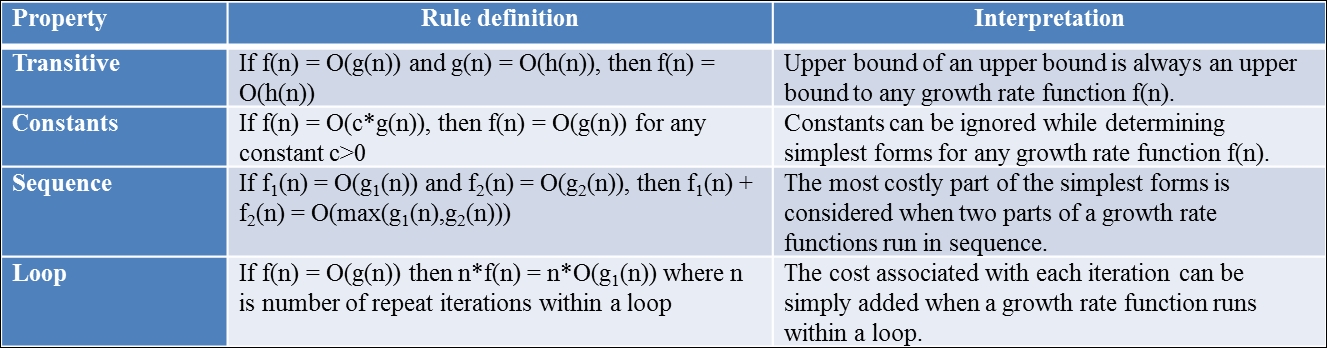

Big O (upper bound), Big Omega (lower bound), and Big Theta (average) are the simplest forms of functional equations, which represent an algorithm's growth rate or its system runtime. Simplifying rules can be used to determine these simplest forms without worrying much about formal asymptotic analysis. These rules are applicable to all the three simplest forms. However, the examples shown in the following table are based on the Big O asymptote.

Figure 2.10: Definition of simplifying rules along with their interpretations

These simplifying rules are widely used in the following chapters while evaluating costs for an algorithm's growth rate or system runtime functional form.

Let's consider two algebraic growth rate functions f(n) and g(n). The classifying rules are then used to determine which functional form has a better performance over the other. This can be evaluated using the limit theorem, which is as follows:

The following three scenarios are used to classify f(n) and g(n):

Figure 2.11: Classifying rule forms

Let's' evaluate the computations of different components within a program or algorithm using asymptotic analysis.

Assigning an element (numeric, character, complex, or logical) to an object requires a constant amount of time. The element can be a vector, dataframe, matrix and others.

int_Vector <- 0:60

Hence, the asymptote (Big Theta notation) of the assignment operation is θ(1).

Consider a simple for loop with assignment operations.

a <- 0 for(i in 1:n) a <- a + i

The following are asymptotes for each line of execution in the code:

Figure 2.12: Asymptotic analysis of a simple for loop

Hence, the total cost of this for loop using simplifying rules is θ(n).

Consider a complex loop using a while loop, and a nested for loop using assignment operations.

a <- 1 i <- 1 b <- list() while(i<=n ) { a <- a + i i<- i+1 } for(j in 1:i) for(k in 1:i) { b[[j]] <- a+j*k }

The following are asymptotes for each line of execution in the code:

Figure 2.13: Asymptotic analysis of a complex loop

Hence, the total cost of this complex loop using simplifying rules is θ(n2).

Consider a for loop with a nested if...else condition, as shown in the following example code:

a <- 1 for(i in 1:n) { if(i <= n/2) { for(j in 1:i) a <- a+i }else{ a <- a*i } }

The following are the asymptotes for each line of execution in the code:

Figure 2.14: Asymptotic analysis of a conditional loop

The total cost of this loop with if...else conditions using simplifying rules is θ(n2). The cost assessment of an if...else condition is evaluated using the worst-case scenario. Here, the worst-case scenario is when the if condition is True, and the nested for loop is executed instead of a simple for loop in the else condition. Hence, maximum growth rate (or system runtime) is considered for evaluating the asymptote of the conditional statements.

A statement which iterates in a loop using the same function till a condition is satisfied is called a recursive statement. The most commonly used recursive statement is the factorial function. The following code calculates the factorial of an integer n.

fact_n <- 1 for(i in 2:n) { fact_n <- fact_n * i }

The following are the asymptotes for each line of execution in the code:

Figure 2.15: Asymptotic analysis of a recursive statement

The total cost of a recursive statement using simplifying rules is θ(n).

Algorithms form an intrinsic base for analyzing a problem, and each problem can be analyzed using multiple algorithms. These algorithms are further evaluated based on their functional performances, as covered under previous sections. However, there arises a basic question-how to evaluate a problem which has many solutions vis-à-vis many algorithms.

Consider a problem with m number of algorithms, where m tends to infinity. Then, the upper bound or the worst-case scenario cannot be lower than the upper bound of the best algorithm, and the lower bound or the best-case scenario cannot be higher than the lower bound of the worst algorithm. In other words, it is easier to define the lower and upper bounds for an algorithm, but it becomes tricky when it is to be defined for a problem, since there might be algorithms which might not have been explored at all.

More details along with examples will be covered in subsequent chapters.

So far, the performance of an algorithm was evaluated using only its functional form of system runtime. Another functional form can be a key constraint for algorithm developers in system space or available memory. The space functional form depends on both the type and size of data structure unlike the runtime functional form, which depends primarily on the size of the input data structure. As an example, a vector of n elements requires k*n (θ(n)) bytes of memory provided that each element requires k bytes. The space required by each data structure depends on the mode of data storage for efficient data access within.

For example, a linked list not only stores a list of elements but also pointers for easy navigation within. These pointers are additional storage elements, which act as overheads and require additional space allocation. Thus, a data structure with lower overheads can enhance the performance of algorithms in terms of space functional form.

However, there needs to be a trade-off between the system's runtime and space requirement for effective evaluation of an algorithm. The best algorithm is one which requires less space and less runtime. But in reality, satisfying both criteria is difficult for algorithm developers. In order to reduce space requirement, developers tend to encode data information, which, in turn, requires additional time to decode, thereby increasing the system runtime. On the other hand, developers tend to restructure data storage information while executing algorithms to decrease the system runtime at the expense of greater space.

More details along with examples will be covered in subsequent chapters.