Stand-alone PolyBase installation on Windows

Big Data Cluster using kubeadm on Linux

Big Data Cluster using Azure Kubernetes Service (AKS)

It is perfectly fine to run all options from the same box. But as it is likely that you will not be using all of them, we figured it would make sense to start fresh each time.

We will be covering the installation using the Microsoft Windows operating system. The goal of this guide is to get your Big Data Cluster up and running as quick as possible, so we will configure some options that may not be best practice (like leaving all the service accounts and directories on default). Feel free to modify those as it may fit your needs.

If you opt for the AKS installation, you will need an active Azure subscription. If you do not already have an Azure subscription, you can create one for free which includes credits which you can spend free of charge.

A Little Helper: Chocolatey

Home page of Chocolatey

From a simple user perspective though, there is no need to create an account or to download any installer.

Install script for Chocolatey in PowerShell

Once the respective command has completed, choco is installed and ready to be used.

Installation of an On-Premises PolyBase Instance

In case you’re only interested in the data virtualization feature of SQL Server 2019 Big Data Clusters, the installation is actually much easier and lightweight than for a full environment. The PolyBase feature, which enabled the data virtualization feature, can be installed during the regular setup routine of SQL Server 2019 on any platform.

If you want to use Teradata through PolyBase, the C++ Redistributable 2012 is required to actually communicate with our SQL Server instance. Having SQL Server Management Studio (SSMS) may be helpful in either case and is nice to have it installed and ready to replay the examples we are showing throughout this book.

Install script for PolyBase prerequisites

With our prerequisites installed, we can get to the actual SQL Server installation. Navigate to www.microsoft.com/en-us/evalcenter/evaluate-sql-server-2019 and follow the instructions to download.

Run the downloaded file, as shown in Figure 3-2.

SQL Server 2019 installer – Installation type selection

SQL Server 2019 installer – Download Media dialog

SQL Server 2019 installer – Download Media successful

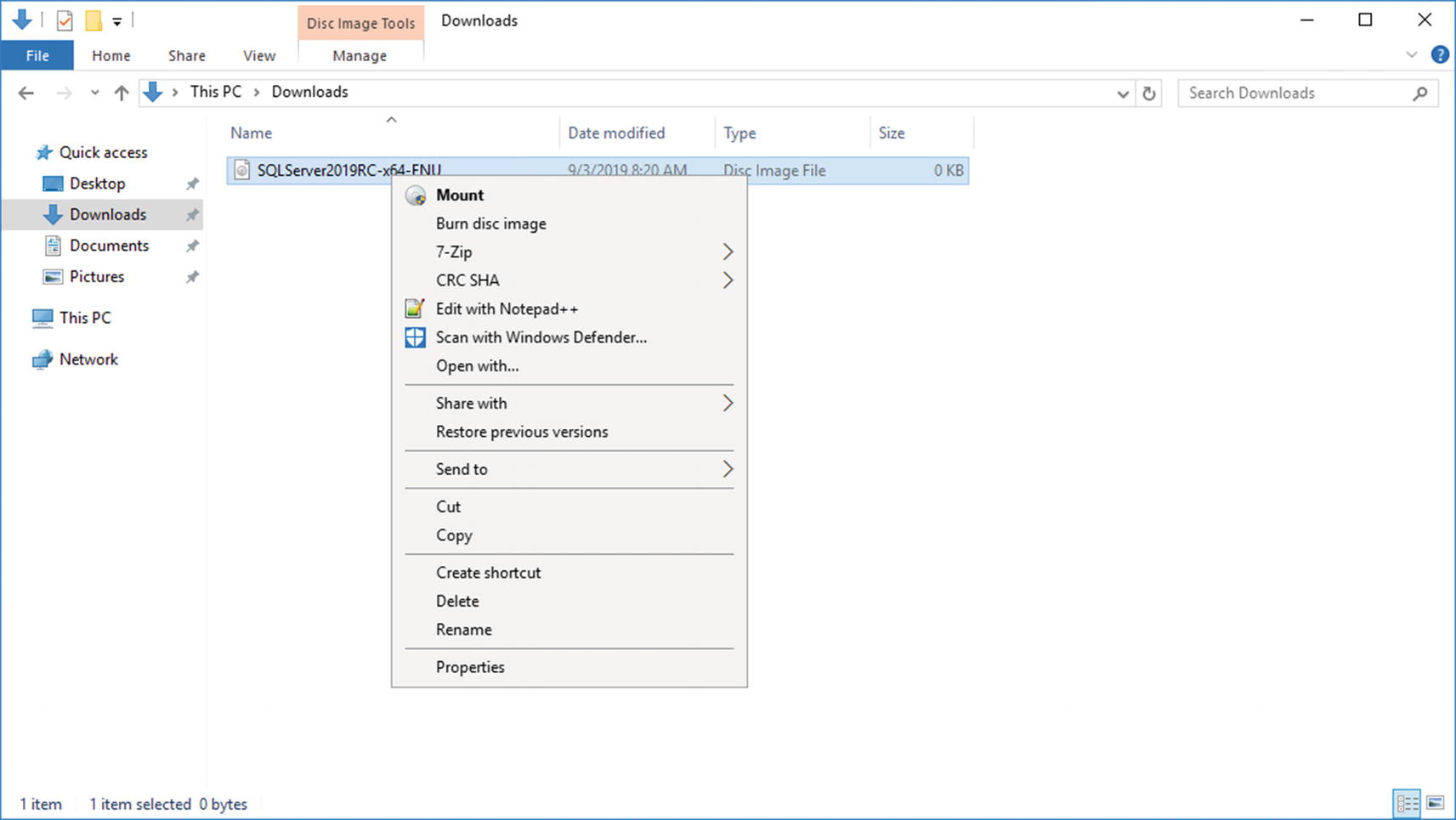

SQL Server 2019 installer – mount ISO

SQL Server 2019 installer – main screen

SQL Server 2019 installer – edition selection

SQL Server 2019 installer – Install Rules

SQL Server 2019 installer – Feature Selection

SQL Server 2019 installer – Instance Configuration

SQL Server 2019 installer – PolyBase Configuration

SQL Server 2019 installer – Java Install Location

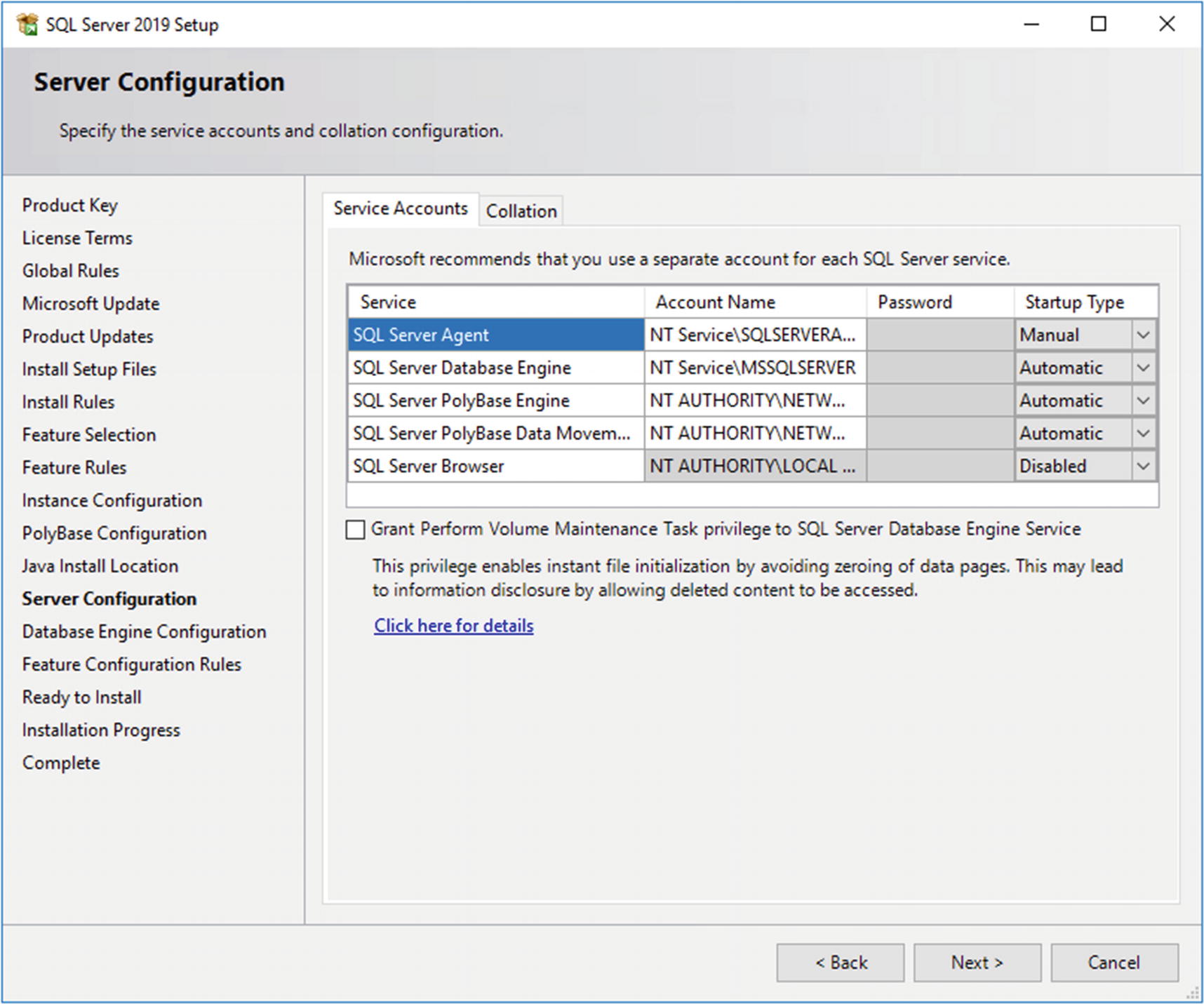

SQL Server 2019 installer – Server Configuration

SQL Server 2019 installer – Database Engine Configuration

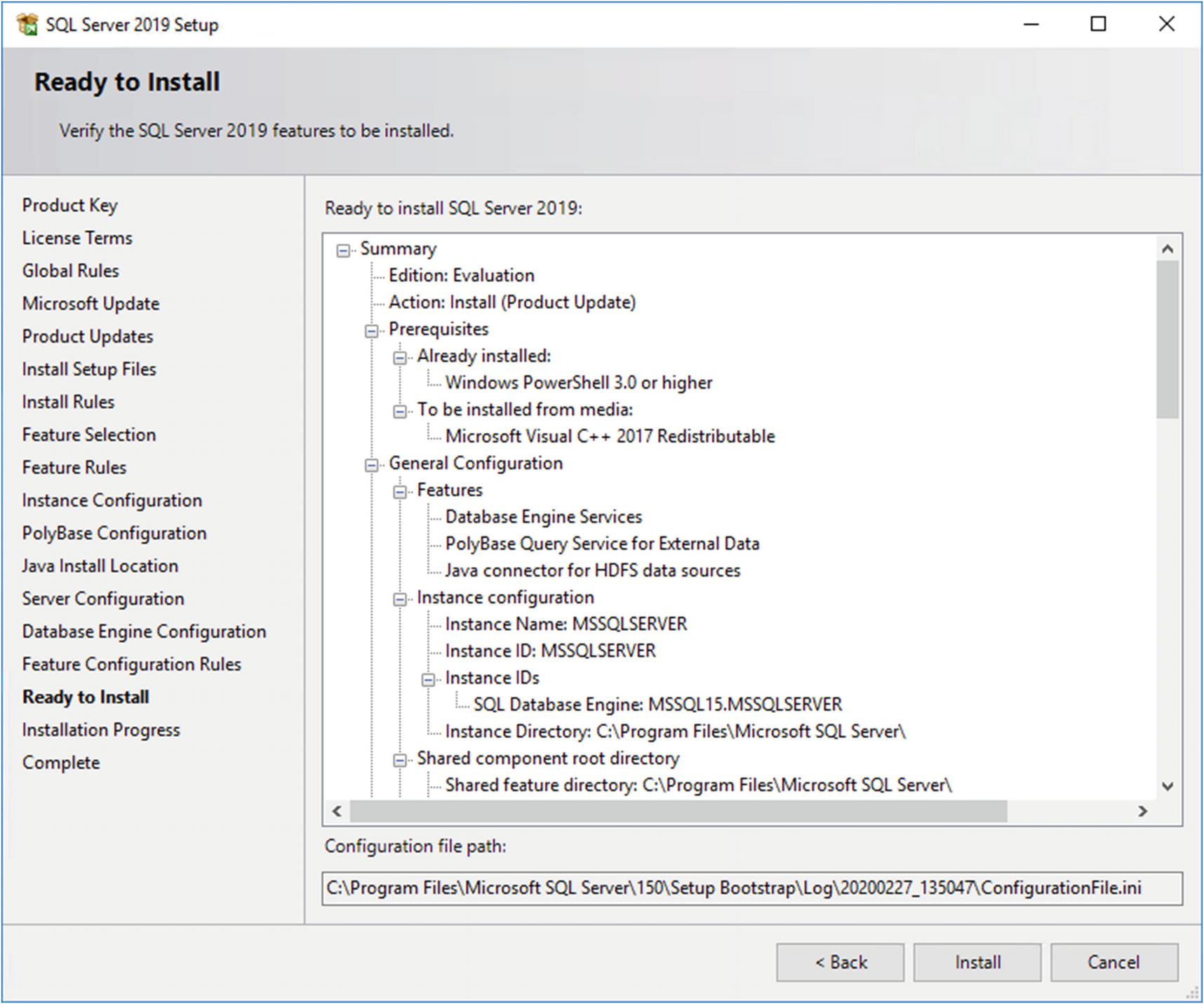

SQL Server 2019 installer – overview

SQL Server 2019 installer – Complete

Enable PolyBase through T-SQL

Restart SQL Server Instance

You’re done – you do now have access to a PolyBase-enabled SQL Server 2019 installation.

Using Azure Data Studio to Work with Big Data Clusters

As part of Microsoft’s SQL Client tool strategy, it may not surprise you that most of the tasks necessary to work with a Big Data Cluster are achieved through Azure Data Studio (ADS) rather than SQL Server Management Studio or other tools. For those of you who are not familiar with this tool, we’re going to start with a little introduction including how to get your hands on this tool.

What Is Azure Data Studio?

Azure Data Studio is a cross-platform (Windows, MacOS, and Linux), extendable, and customizable tool which can be used for classic T-SQL queries and commands, as well as multiple new functions like notebooks. The latter can be enabled through extensions which are usually installed through a VSIX file, which you might be familiar with from working with other extensions for Visual Studio or Visual Studio Code.1

It was originally made public in 2017 as SQL Operations Studio but was rebranded before its official release in 2018. While the product name is a bit misleading, it is not only for cloud (Azure)-based services but for on-premises solutions and needs as well.

The fact that it, for example, does not come with an out-of-the-box interface for SQL Server Agent, but in exchange offers built-in charting, shows that it is not so much a replacement but more of a complimenting tool for SQL Server Management Studio (SSMS). SSMS is targeting an administrating and managing group (database administrators), whereas Azure Data Studio is more suitable for data professionals of all kinds, including data scientists.

Getting and Installing Azure Data Studio

You can get your free copy of Azure Data Studio directly from Microsoft at https://docs.microsoft.com/en-us/sql/azure-data-studio/download. Download the installer of your choice for your platform and run it.

Install ADS via choco

Installation of a “Real” Big Data Cluster

If you want to make use of the full Big Data Cluster feature set, you will need a full installation including all the different roles and pools.

kubeadm on Linux

A very easy way to deploy a Big Data Cluster is using kubeadm on a vanilla (freshly installed) Ubuntu 16.04 or 18.04 virtual or physical machine.

Microsoft provides a script for you that does all the work, so besides the Linux installation itself, there is not much to do for you, which makes this probably the easiest way to get your first Big Data Cluster up and running.

Patch Ubuntu

Download and execute the deployment script

Deployment on Linux with kubeadm

Deployment on Linux with kubeadm

Successful deployment on Linux with kubeadm

Your cluster is now fully deployed and ready!

Azure Kubernetes Service (AKS)

Another straightforward way to deploy your cluster is to use Azure Kubernetes Service, in which the Kubernetes cluster is set up and provided in the Microsoft Azure cloud. While the deployment is started and controlled through any machine (either your local PC or a VM), the cluster itself will run in Azure, so this means that deployment requires an Azure account and will result in cost on your Azure subscription.

Python

Curl and the SQL Server command-line utilities

So we can communicate with the cluster and upload data to it.

The Kubernetes CLI

azdata

This will create, maintain, and delete a big data cluster.

Notepad++ and 7Zip

These are not actual requirements, but if you want to debug your installation, you will get a tar.gz file with potentially huge text files. Windows does not handle these out of the box.

Install script for Big Data Cluster prerequisites

While the respective vendors obviously supply visual/manual installation routines for most of these tools, the scripted approach just makes the whole experience a lot easier.

Install azure-cli

While you technically could prepare everything (we need a resource group, the Kubernetes cluster, etc.) in the Azure Portal or through manual PowerShell scripts, there is a much easier way: get the Python script from https://github.com/Microsoft/sql-server-samples/tree/master/samples/features/sql-big-data-cluster/deployment/aks, which will automatically take care of the whole process and setup for you.

Download deployment script

Of course, you can also modify and review the script as per your needs, for example, to make some parameters like the VM size static rather than a variable or to change the defaults for some of the values.

Trigger login to azure from command prompt

Azure logon screen

Azure logon confirmation

Input of parameters in Python deployment script

The script now runs through all the required steps. Again, this can take from a couple of minutes to hours, depending on the size of VM, number of nodes, and so on.

Output of Python deployment script

The script will also use azdata bdc config to create your JSON file.

Final output of Python deployment script including IP addresses

Retrieve Kubernetes service IPs using kubectl

Retrieve Kubernetes namespaces using kubectl

Python script to retrieve endpoints of a Big Data Cluster

Output of IP.py

You’re done! Your Big Data Cluster in Azure Kubernetes Service is now up and running.

Note Whether you use it or not, this cluster will accumulate cost based on the number of VMs and their size so it’s a good idea not to leave it idling around!

Deploy Your Big Data Cluster Through Azure Data Studio

New Deployment in ADS

On the following screen, select “SQL Server Big Data Cluster.” The wizard will ask you to accept the license terms, select a version, and also pick a deployment target. Supported targets for this wizard are currently a new Azure Kubernetes Service (AKS) cluster, an existing AKS cluster, or an existing kubeadm cluster. If you plan to deploy toward an existing cluster, the Kubernetes contexts/connections need to be present in your Kubernetes configuration. If the Kubernetes cluster was not created from the same machine, it’s probably still missing. In this case, you can either copy the .kube file to your local machine or configure Kubernetes manually as described at https://kubernetes.io/docs/tasks/access-application-cluster/access-cluster/.

Deploy a BDC through ADS – intro

Deploy a BDC through ADS – Step 1

Deploy a BDC through ADS – Step 2

Deploy a BDC through ADS – Step 3

Deploy a BDC through ADS – Step 4

Deploy a BDC through ADS – Summary

Deploy a BDC through ADS – install Python

Wait for the installation to complete. All your settings have been populated to a Python notebook which you could either save and store for later or run right away. To execute the notebook, simply click “Run Cells” as shown in Figure 3-35. Just make sure that the Python installation has finished. The kernel combo box should read “Python 3”. If it’s still showing “Loading kernels…”, be patient.![]()

Deploy a BDC through ADS – notebook predeployment

Deploy a BDC through ADS – notebook postdeployment

What Is azdata?

As mentioned before, no matter which path of deployment you choose, the deployment of your Big Data Cluster will always be controlled through a tool call azdata. It’s a command-line tool that will help you to create a Big Data Cluster configuration, deploy your Big Data Cluster, and later potentially delete or upgrade your existing cluster.

Create cluster config using azdata

Target is just the folder name for your config files (bdc.json and control.json). The src is one of the existing base templates to start with.

aks-dev-test

aks-dev-test-ha

kubeadm-dev-test

kubeadm-prod

Output of azdata bdc config init without specifying a source

The source to choose will depend on your deployment type.

Modify cluster config using azdata

Sample control.json

Sample bdc.json

As you can see, the file allows you to change quite a lot of settings. While you may leave many of them at their default, this comes in quite handy, especially in terms of storage. You can change the disk sizes as well as the storage type. For more information on storage in Kubernetes, we recommend reading https://kubernetes.io/docs/concepts/storage/.

All deployments use persistent storage by default. Unless you have a good reason to change that, you should keep it that way as nonpersistent storage can leave your cluster in a nonfunctioning state in case of restarts, for example.

Create cluster using azdata

Output of azdata bdc create

Depending on the size of your machine, this may take anywhere from minutes to hours.

Others

There are multiple other Kubernetes environments available – from Raspberry Pi to VMWare. Many but not all of them support SQL Server 2019 Big Data Clusters. The number of supported platforms will grow over time, but there is no complete list of compatible environments. If you are looking at a specific setup, the best and easiest way is to just give it a try!

Advanced Deployment Options

Besides the configuration options mentioned earlier, we would like to point your attention to two additional opportunities to make more out of your Big Data Cluster: Active Directory authentication and HDFS tiering.

Active Directory Authentication for Big Data Clusters

AD parameters in control.json

At the time of writing, there are quite a few limitations though. For example, AD authentication is only supported on kubeadm, not on AKS deployments , and you can only have one Big Data Cluster per domain. You will also need to set up a few very specific objects in your AD before deploying the Big Data Cluster. Please see the official documentation at https://docs.microsoft.com/en-us/sql/big-data-cluster/deploy-active-directory?view=sql-server-ver15 for detailed steps on how to enable this.

HDFS Tiering in Big Data Clusters

Should you already have an existing HDFS stored in either Azure Data Lake Store Gen2 or Amazon S3, you can mount this storage as a subdirectory of your Big Data Cluster’s HDFS. This will be achieved through a combination of environment variables, kubectl and azdata command. As the process differs slightly per source type, we refer you to the official documentation which can be found at https://docs.microsoft.com/en-us/sql/big-data-cluster/hdfs-tiering?view=sql-server-ver15.

Unlike enabling AD authentication, which happens at deployment, HDFS tiering will be configured on an existing Big Data Cluster.

Summary

In this chapter, we’ve installed SQL Server 2019 Big Data Clusters using various methods and to different extents.

Now that we have our Big Data Cluster running and ready for some workload, let’s move on to Chapter 4 where we’ll show and discuss how the cluster can be queried and used.