Vision-based sensing for assessing and monitoring civil infrastructures

Y.F. Ji, Tongji University, China

C.C. Chang, Hong Kong University of Science and Technology, Hong Kong

Abstract:

Recently, vision-based measurement techniques have emerged as an important non-destructive evaluation (NDE) method, owing to the availability of low-cost, high-resolution commercial digital cameras and effective image processing algorithms. In this chapter, some important issues that might affect the accuracy of vision-based measurement techniques are presented and discussed. Results from laboratory and field tests are presented to illustrate the accuracy and applicability of some vision-based measurement techniques.

Key words

displacement measurement; photogrammetry; videogrammetry; computer vision

14.1 Introduction

A growing number of research efforts have been devoted to the area of structural health monitoring (SHM) over the past two decades to assess the safety and integrity of civil infrastructures. One SHM approach, global vibration-based technique, relies on the analysis of structural dynamic responses for assessment. Generally, these dynamic responses are in terms of acceleration, as they can be easily measured by accelerometers. Structural displacement response remains difficult to measure directly, although it is important for structural model updating and damage detection (Doebling et al., 1996; Sohn et al., 2004). Currently available displacement measurement techniques can be roughly classified into two categories: indirect measurement and direct measurement. Indirect measurement techniques include double integration of recorded acceleration time history and inferring from mathematical models using measured quantities such as strains (Kim and Cho, 2004). The accuracy of indirect measurement techniques, however, has always been a concern. Hudson (1979) commented that the double integration of acceleration is not readily automated and requires selection of filters and baseline correction and the use of judgment when anomalies exist in the records. Applicable direct measurement techniques include the global positioning system (GPS) and the laser Doppler vibrometers. GPS can provide real-time displacement measurement with millimeter-level accuracy at a frequency up to 20 Hz. Xu et al. (2002) showed that a real-time kinematic GPS system installed on a suspension bridge achieved 5 and 10 mm resolution along the horizontal and the vertical direction, respectively. The laser Doppler vibrometer can provide accurate displacement measurement at multiple locations within its applicable distance (Nassif et al., 2005). These direct measurement instruments, however, are quite costly. 
When displacements at a large number of locations are desired, measuring them using these sensors can be prohibitively expensive, if not impossible (Fu and Moosa, 2002).

With the advent of inexpensive and high-performance cameras and associated image processing techniques, a measurement technique based on images acquired from these cameras has attracted significant interest for its potential application in various engineering disciplines. Compared with the other measurement sensors, this vision-based measurement technique has several advantages: (1) it is a non-contact three-dimensional (3D) measurement technique; (2) it is economical in terms of both installation and cost; and (3) it can measure simultaneously a large number of points, or even a continuous spatial displacement profile on a structure.

The development of vision-based measurement techniques can be traced to classical photogrammetry, which uses photographs to establish the geometrical relationship between a 3D object and its two-dimensional (2D) photographic images. Photogrammetry has passed through four development cycles (Konecny, 1985): plane table photogrammetry (1850–1900); analog photogrammetry (1900–1960); analytical photogrammetry (1960–present); and digital photogrammetry (1990–present). Photogrammetric techniques are usually classified as aerial or terrestrial, depending on the types of images used in the analysis (Mikhail et al., 2001). The aerial photogrammetric technique uses images acquired overhead from aircraft, satellites, or hot air balloons, whereas the terrestrial photogrammetric technique uses images from ground-based cameras. When the camera-to-object distance is within the range of 100 mm to 100 m, terrestrial photogrammetry is further defined as close-range photogrammetry (Atkinson, 2003; Luhmann et al., 2007). Most civil engineering applications fall under this category.

In the earlier development of the photogrammetric technique, researchers used high-precision industrial grade metric cameras for the image acquisition. These metric cameras have precisely known internal geometries and very low lens distortion, but are quite expensive and require factory calibration. Also, the principal distance of these metric cameras needs to be fixed during application, which limits their distance range during application. While it is possible that image measurement accuracy using these metric cameras can exceed 1:200 000 of the cameras’ field of view, using non-metric, even ‘amateur’ cameras is not excluded for some special measurement cases with a lower demand of accuracy, say, 1:1000 to 1:20 000. Along with the advancements in electronics, optics, and computer technologies in recent years, close-range photogrammetry has undergone a remarkable evolution and has been transformed to digital close-range photogrammetry (also called videogrammetry) using non-metric video components such as digital charge coupled device (CCD) cameras, video recorders, and frame grabbers for image acquisition. The evolution of videogrammetry has involved many special skills, such as geometric modeling of sensor imaging process, digital image analysis, feature extraction, and object reconstruction.

Computer vision, the study of enabling computers to understand and interpret visual information from static images and video sequences, emerged in the late 1950s and early 1960s and is expanding rapidly throughout the world. It belongs to the broader field of image-related computation, and relates to areas such as image processing, robot vision, machine vision, medical imaging, image databases, pattern recognition, computer graphics, artificial intelligence, psychophysics, and virtual reality (Bebis et al., 2003). Computer vision produces measurements or abstractions from geometrical properties recorded in images. The goal of computer vision is then completed by interpreting the obtained measurements or abstractions. Computer vision is becoming a mainstream subject of study in computer science and engineering with the rapid explosion of multimedia and the extensive use of video- and image-based communications over the World Wide Web.

While photogrammetrists developed accurate instruments as well as solid fundamental theories on camera calibration and camera-object geometry, computer vision researchers further contributed theories on multiview geometry, image flow, ego-motion, etc. from the image-understanding viewpoint. These two areas of research, although not intensively interactive in the past, have started to become synergized and intertwined. Generally, a videogrammetric task can be fulfilled through the following steps: (1) control target layout and camera network setting; (2) target survey and camera calibration with lens distortion; (3) image acquisition and processing; and (4) point or object coordinate reconstruction using images. These steps center on a precise understanding of the camera models and their intrinsic and extrinsic parameters, as well as the geometrical relationship between the acquired images and the 3D object. The introduction of computer vision theories further makes it possible to eliminate the target requirement and to compensate for camera movement. These developments add flexibility to videogrammetric measurement techniques and create ample opportunities for application to various engineering disciplines, including civil engineering.

14.2 Vision-based measurement techniques for civil engineering applications

In the past 20 years, photogrammetric and videogrammetric techniques have been applied to various measurement tasks in civil engineering. Bales (1985) measured the deflection of a continuous three-span steel bridge under dead load using a close-range photogrammetric technique. Although the reported results matched those of conventional methods very well, with an average difference of 3.2 mm, the whole process was labor-intensive, time-consuming, and not cost-effective due to the need for a metric camera, special processing equipment, and well-trained workers. Li and Yuan (1988) developed a 3D photogrammetric vision system consisting of TV cameras and 3D control points to identify bridge deformation. The Direct Linear Transform method was employed for camera calibration using 3D control-point information, which produced an accuracy of 0.32 mm. Aw and Koo (1993) used three standard CCD cameras to determine 3D coordinates of pre-marked targets. An averaged accuracy of 2.24 mm was obtained by a bundle adjustment method. Whiteman et al. (2002) developed a two-camera videogrammetric system with a precision of 0.25 mm to measure the vertical deflection of a concrete beam during a destructive test. It should be noted that, in the above three studies, the measurement systems were neither flexible nor cost-effective, as the coordinates of the control points had to be obtained by other surveying equipment. 
Some similar metrology applications employing videogrammetry have been realized successfully, such as concrete deformation measurement during dehydration process (Niederöst and Maas, 1997), thermal deformation of steel beams (Fraser and Riedel, 2000), vertical deflection measurement of existing bridges of different types (Jáuregui et al., 2003), and development of the Image Based Integrated Measurement (IBIM) system based on a low-cost stereo-vision-based sensing system for structural 3D modeling (Ohdake and Chikatsu, 2004). An image-based deformation measurement technique has been developed by Take et al. (2005) for real-time monitoring of construction settlements. The technique combined the technologies of remote digital photography, automated file transfer, the image processing technique of Particle Image Velocimetry, and a web-based reporting system. Alba et al. (2010) presented the development and results of a vision-based method for displacement measurement that allowed rapid deformation analysis along the cross-sections of a tunnel.

There have also been a few research activities involving structural dynamic measurement and system identification using the videogrammetric principle. Olaszek (1999) developed a videogrammetric method to investigate the dynamic characteristics of bridges. The method was applied to real-time displacement measurement of chosen points on a bridge structure using CCD cameras with a telephoto lens. The motive for adopting the videogrammetric technique was to resolve the difficulty of measuring hard-to-access points on the structure. Patsias and Staszewski (2002) used a videogrammetric technique to measure mode shapes of a cantilever beam. The wavelet edge detection technique was adopted to detect the presence of damage in the beam. A Kodak high-speed professional camera system with a maximum sampling rate of 600 frames per second (fps) was used to capture the beam vibration. The system, however, was limited to 2D planar vibration measurement since only one camera was used. Yoshida et al. (2003) used a videogrammetric technique to capture the 3D dynamic behavior of a membrane. Their measurement system consisted of three progressive CCD cameras with 1.3 million pixels and 30 fps. The cameras were calibrated using a calibration plate anchored on a linear guide system with accurate positioning capability.

Chung et al. (2004) used digital image techniques for identifying non-linear characteristics in systems. Applications showed that digital image processing could identify the Coulomb friction coefficient of a pendulum as well as the nonlinear behavior of a base-isolated model structure. Poudel et al. (2005) proposed a video imaging technique for damage detection of a prismatic steel beam. A high-speed complementary-metal-oxide-semiconductor (CMOS) camera was employed to capture the dynamic response of the beam. Mode shapes of the beam were extracted by wavelet transform and were used for damage localization. A monocular-vision-based measurement system was developed by Lee et al. (2007) for health monitoring of bridges. A specially designed plane pattern was used for simple camera calibration as well as target tracking. As the system used only one camera, it could only measure the in-plane 2D displacement of a specially designed target. Chang and Ji (2007) developed a binocular-vision system to measure 3D structural vibration response. A two-step plane-based calibration process, including individual and stereo calibration, was proposed to effectively obtain the camera parameters. Caetano et al. (2007) reported the application of a monocular-vision-based technique for a target-camera distance of 850 m and a field of view of 300 m. A detailed review of vision-based sensing in bridge measurement was conducted by Jiang et al. (2008). Based on a monocular-vision theorem, Chang and Xiao (2010) developed a novel measurement technique to capture structural 3D displacement. Results of a field test showed that the method could measure quite accurately the 3D translation and rotation of a planar target attached to a bridge. Ozbek et al. (2010) discussed the pros and cons of using the photogrammetric technique for health monitoring of wind turbines. The 3D dynamic response of the rotor was captured at 33 different locations simultaneously using 4 CCD cameras. Jeon et al. (2011) proposed a paired vision-based system comprising lasers, a camera, and a screen to measure displacement of large structures. Kim and Kim (2011) proposed a digital image correlation technique to measure multi-point displacement response for civil infrastructures. Recently, an image-based technique was used to measure minimum vertical under-clearance for bridges during routine inspection (Riveiro et al., 2012).

Note that the development of vision-based measurement techniques is not limited to the academic community; some commercial systems have been developed in the last two decades for various applications. PhotoModeler (Eos, 2012) is a software system that performs image-based modeling and close-range photogrammetry for tasks such as performing accurate measurement, creating CAD-like models, and modeling man-made, organic, or natural shapes. The Australis software suite (Photometrix, 2012) is designed to perform automated image-based 3D coordinate measurements from recorded digital images. The software can provide fully automatic measurement of targeted objects to high accuracy or low-to-moderate accuracy semi-automatic or manual measurements in photogrammetric networks comprising natural feature points and images from off-the-shelf cameras. The iWitness photogrammetry software systems (DCS, 2012) produce 3D measurements and models from digital camera images and scanned analog photographs using coded target technology. The systems have been used in engineering measurement, architectural, and archaeological measurement, and biomedical measurement, as well as 3D documentation in support of virtual reality modeling. Vic-3D 2010 (Correlated, 2012) is a turnkey system for measuring the shape, displacement, and strain of surfaces in three dimensions. It uses the digital image correlation principle and can measure displacements and strains from 50 micro-strains to 2000% strain for specimen sizes ranging from 1 mm to 10 m. The Bersoft Image Measurement (BIM) (Bersoft, 2012) is Windows-based software that acquires, measures, stores, compares, and analyzes digital images. BIM performs image analysis functions that include gray scale and 24-bit color measurements: angle, distance, perimeter, area, point, line, pixel profile, object counting, histogram, and statistics.

14.3 Important issues for vision-based measurement techniques

In this section, several important core issues that affect the accuracy of vision-based measurement techniques are discussed.

14.3.1 Camera calibration

Camera calibration is the recovery of the intrinsic parameters of a camera. Traditionally, cameras, especially metric cameras, are calibrated in laboratories in a well-controlled environment. Laboratory calibration can be done using either a goniometer or a multicollimator (Mikhail et al., 2001). Although the camera parameters can be obtained with high precision, the calibration is rather intensive and costly. In addition, the principal distance of the calibrated cameras needs to be fixed during application, which limits the flexibility of these laboratory-calibrated cameras. More and more uses of non-metric cameras have demanded more flexible, and preferably on-site, calibration.

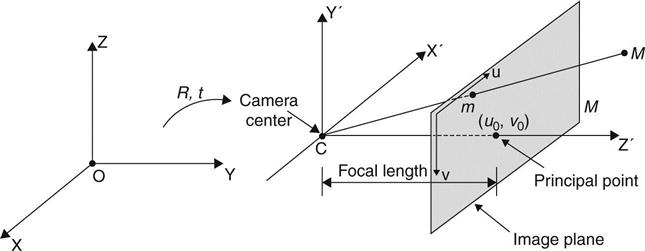

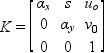

A general projective camera, such as the one equipped with CCD sensors, can be described using a pinhole model as (see Fig. 14.1):

$$\lambda\,\mathbf{m} = \mathbf{P}\,\mathbf{M} \qquad [14.1]$$

where $\mathbf{m} = (u, v, 1)^T$ is a 2D homogeneous image coordinate vector defined in pixels, $\mathbf{M} = (X, Y, Z, 1)^T$ is the homogeneous vector of a 3D real point, $\lambda$ is a scale factor, and $\mathbf{P}$ is a 3 × 4 matrix called the camera projective matrix, which can be described by the following relationship:

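$$\mathbf{P} = \mathbf{K}\,\mathbf{R}\,[\,\mathbf{I} \mid \mathbf{t}\,] \qquad [14.2]$$

$$\mathbf{K} = \begin{bmatrix} \alpha_x & s & u_0 \\ 0 & \alpha_y & v_0 \\ 0 & 0 & 1 \end{bmatrix} \qquad [14.3]$$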

Note that $\alpha_x$ and $\alpha_y$ are the focal lengths of the camera in terms of pixel dimensions in the u and v directions, respectively; s is the skew parameter; $u_0$ and $v_0$ are the coordinates of the principal point in terms of pixel dimensions; R is a 3 × 3 rotation matrix representing the orientation of the camera coordinate frame; I is a 3 × 3 identity matrix; and t is a 3 × 1 translation vector relating the image and object coordinate systems. There are a total of 11 parameters to be calibrated for a single camera. Since each control point contributes two equations, at least six control points have to be pre-measured (i.e., known XYZ coordinates relative to one another in an arbitrary Cartesian reference system). These camera parameters can be found by the least squares solution of Equation [14.1]. In addition, the camera lens might suffer from distortion that could affect the accuracy when used for videogrammetric applications. Assume an ideal image point (u, v, 1) is distorted by an unknown amount; its corresponding distorted and observed image coordinates can be expressed as:

[14.4a]

[14.4b, c]

where k1 and k2 are coefficients of radial lens distortion, and k3 and k4 are coefficients of decentering lens distortion. With the selection of these additional distortion parameters, the minimum requirement for one camera calibration should increase to eight control points.
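The least squares solution of Equation [14.1] mentioned above can be sketched as a Direct Linear Transform. The following is a minimal illustration under stated assumptions, not the authors' implementation: lens distortion is ignored, the function names `dlt_calibrate` and `project` are hypothetical, the composition P = K R [I | t] is assumed for the synthetic camera, and all numerical values are invented for the check.

```python
import numpy as np

def dlt_calibrate(world_pts, image_pts):
    """Estimate the 3x4 projective matrix P (up to scale) from >= 6 control points."""
    world_pts = np.asarray(world_pts, dtype=float)
    image_pts = np.asarray(image_pts, dtype=float)
    if len(world_pts) < 6:
        raise ValueError("at least six non-coplanar control points are required")
    rows = []
    for (X, Y, Z), (u, v) in zip(world_pts, image_pts):
        M = [X, Y, Z, 1.0]
        # Eliminating lambda from lambda*m = P*M gives two linear equations per point.
        rows.append(M + [0.0] * 4 + [-u * c for c in M])
        rows.append([0.0] * 4 + M + [-v * c for c in M])
    A = np.array(rows)
    # Least-squares solution of A p = 0 with |p| = 1: last right singular vector.
    _, _, vt = np.linalg.svd(A)
    return vt[-1].reshape(3, 4)

def project(P, world_pts):
    """Project 3D points to pixel coordinates with the pinhole model."""
    world_pts = np.asarray(world_pts, dtype=float)
    M = np.hstack([world_pts, np.ones((len(world_pts), 1))])
    m = (P @ M.T).T
    return m[:, :2] / m[:, 2:3]

# Synthetic check with hypothetical camera parameters (not taken from the chapter).
K = np.array([[1200.0, 0.0, 640.0],
              [0.0, 1200.0, 360.0],
              [0.0, 0.0, 1.0]])
angle = np.deg2rad(10.0)
R = np.array([[np.cos(angle), 0.0, np.sin(angle)],
              [0.0, 1.0, 0.0],
              [-np.sin(angle), 0.0, np.cos(angle)]])
t = np.array([0.0, 0.0, 5.0])
P_true = K @ R @ np.hstack([np.eye(3), t[:, None]])  # assumed form of [14.2]

rng = np.random.default_rng(0)
control_pts = rng.uniform(-1.0, 1.0, size=(8, 3))    # eight non-coplanar points
observed = project(P_true, control_pts)
P_est = dlt_calibrate(control_pts, observed)
reproj_error = np.abs(project(P_est, control_pts) - observed).max()
```

With noise-free observations the recovered matrix reproduces the control points to numerical precision. With real measurements, the coordinates are usually normalized before the decomposition and the result is refined nonlinearly together with the distortion coefficients.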

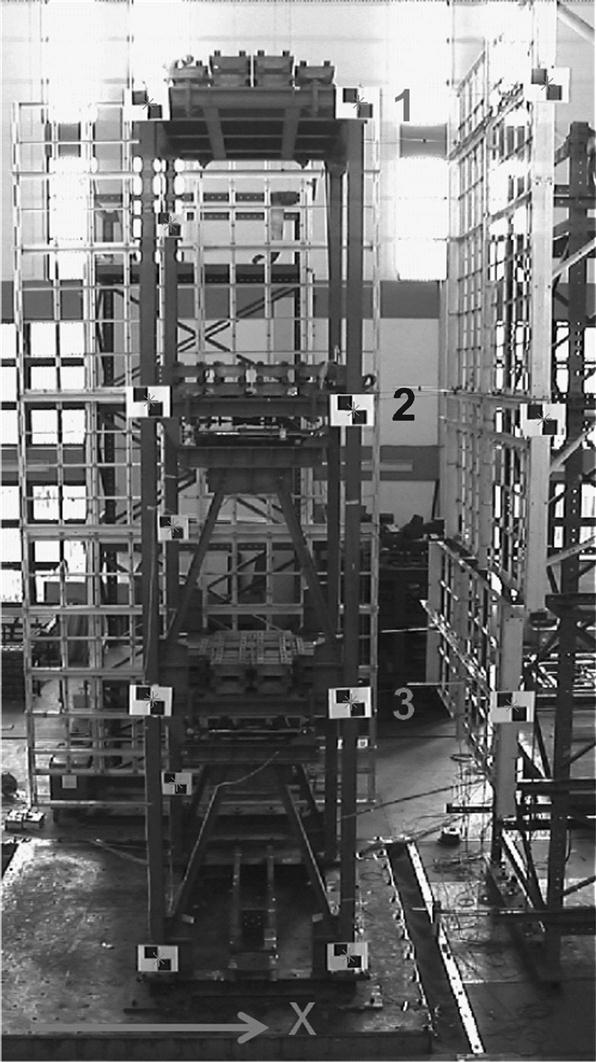

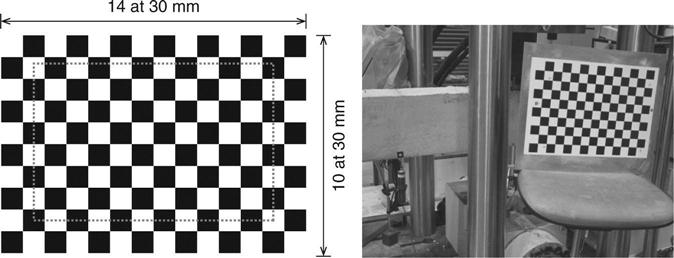

The calibration can be performed based on control targets that are spatially distributed in 3D space and not coplanar, as shown in Fig. 14.2. The difficulty with this kind of calibration lies in the appropriate setting of cameras and targets and in the tedious geometric survey of the control points. Therefore, when the measured object or its motion is not very large, a plane-based method is considered more suitable for camera calibration because of its reliability and flexibility. Plane-based camera calibration lies between photogrammetric calibration and self-calibration: it uses the relationships between the known geometry of a real plane, observed in different views, and the corresponding image coordinates to determine all the parameters in the camera projective matrices for all the views. Figure 14.3 shows one typical plane pattern for camera calibration. The corner points of the black and white squares are used as control targets for the calibration.

There are several important issues related to the calibration (Kwon, 1998): (i) the calibration frame must be large enough to fully include the space of motion; (ii) the camera setting must not be altered once calibration is done; (iii) as many control points as possible should be included and spread uniformly throughout the control volume; and (iv) the calibration frame should be set properly to align the axes well in relation to the direction of motion. The camera calibration methods currently being developed in computer vision have been moving toward more flexibility with less use of artificial control points. For example, Deutscher et al. (2000) proposed an automatic method for obtaining the approximate calibration of a camera from a single image of an unknown scene that contains three orthogonal directions (the so-called Manhattan World image).

14.3.2 Target and correspondence

Vision-based sensing is often concerned with identifying and locating pre-defined targets. These targets are used for two purposes: to provide coordinates for the point of interest, and to establish correspondence for the point of interest in an image sequence. A number of techniques can be used to locate a predefined target. These techniques depend on the type and shape of the target, whether its image is affected by perspective effects, and how much its average gray level differs from the background.

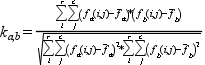

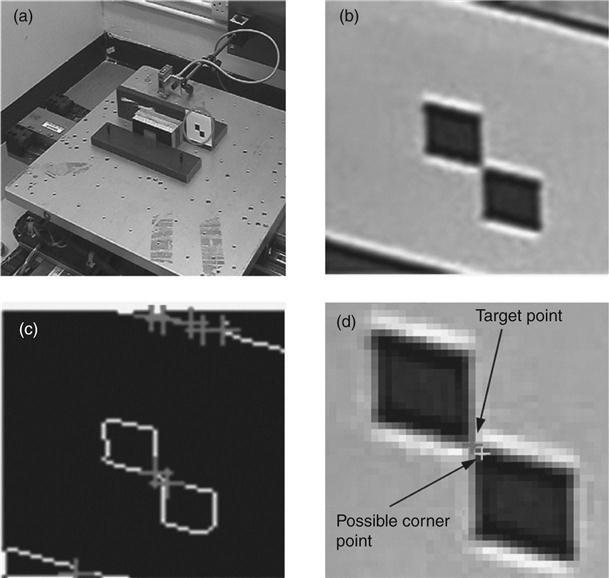

Figure 14.4 shows the identification of one common target. The algorithm includes the following tasks: (i) convert images to binary form; (ii) obtain intersections of the image skeleton using image morphology techniques; (iii) filter out the outliers and determine the most likely corner point; and (iv) use the point detected in Step (iii) as an initial guess to find the exact corner point using corner detection techniques such as the Harris corner detection method. After a target point has been identified in one image frame, it is necessary to track this target point through the subsequent images. A point matching criterion can be introduced to solve this correspondence problem. Assume that the target point is the center pixel of a square mask with r rows and c columns as shown in Fig. 14.5. A cross correlation coefficient between square mask a in Image I and square mask b in Image II can be calculated as follows:

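$$k_{a,b} = \frac{\sum_{i=1}^{r}\sum_{j=1}^{c}\left[f_a(i,j)-\bar{f}_a\right]\left[f_b(i,j)-\bar{f}_b\right]}{\sqrt{\sum_{i=1}^{r}\sum_{j=1}^{c}\left[f_a(i,j)-\bar{f}_a\right]^2\;\sum_{i=1}^{r}\sum_{j=1}^{c}\left[f_b(i,j)-\bar{f}_b\right]^2}} \qquad [14.5]$$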

where f is the pixel gray level, $\bar{f}$ denotes the mean gray level of the square mask, and $k_{a,b}$ is the correlation coefficient, which satisfies $k_{a,b} \le 1$. Among the candidate points, the point that gives the maximum $k_{a,b}$ value is the one matching the reference. A triangulation method can then be used to determine the 3D coordinates of the target point from its image points in two calibrated views.
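The matching criterion described above can be sketched as an exhaustive search over a small window. This is a minimal illustration assuming a zero-mean normalized cross-correlation; the function names `ncc` and `match_point`, the mask half-width, and the search radius are hypothetical choices, and the test images are synthetic.

```python
import numpy as np

def ncc(mask_a, mask_b):
    """Zero-mean normalized cross-correlation of two equally sized masks."""
    a = mask_a.astype(float) - mask_a.mean()
    b = mask_b.astype(float) - mask_b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return 0.0 if denom == 0 else float((a * b).sum() / denom)

def match_point(img1, img2, point, half=3, search=5):
    """Find the pixel in img2 whose mask best matches the mask centered at `point` in img1.

    `point` is (row, col); the mask has (2*half + 1) rows and columns.
    """
    r0, c0 = point
    ref = img1[r0 - half:r0 + half + 1, c0 - half:c0 + half + 1]
    best_k, best_rc = -np.inf, None
    for r in range(r0 - search, r0 + search + 1):
        for c in range(c0 - search, c0 + search + 1):
            if r - half < 0 or c - half < 0:
                continue  # mask would leave the image
            cand = img2[r - half:r + half + 1, c - half:c + half + 1]
            if cand.shape != ref.shape:
                continue  # mask truncated at the far border
            k = ncc(ref, cand)
            if k > best_k:
                best_k, best_rc = k, (r, c)
    return best_rc, best_k

# Synthetic check: the second image is the first one shifted by (2, -3) pixels,
# with a brightness and contrast change that the coefficient should tolerate.
rng = np.random.default_rng(1)
img1 = rng.random((40, 40))
img2 = 0.5 * np.roll(img1, shift=(2, -3), axis=(0, 1)) + 0.2
best_rc, best_k = match_point(img1, img2, (20, 20))
```

Because the mean is removed and the result is normalized, a uniform change in lighting between the two images does not alter the coefficient, which is why the shifted mask still scores essentially 1 here.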

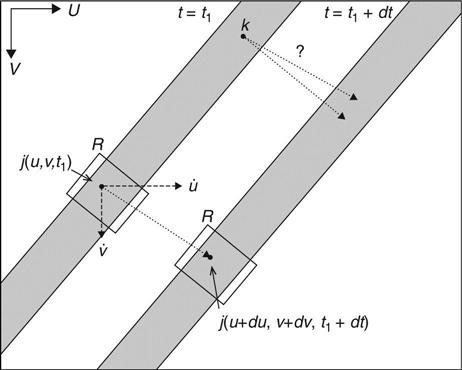

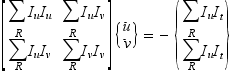

When no target is available, an approach developed in computer vision, the optical flow technique, can be used (Jain et al., 1995). Optical flow is the velocity field resulting from an intensity change on the image plane. Figure 14.6 shows a schematic illustration of the optical flow technique. Two segments of cable images, one from time t = t1 and the other from time t = t1 + dt, are overlaid in the figure, where dt is a time increment. Without a specific target, it is impossible to know precisely where a point, say point k, would move to at the next time instant. Assume that the intensity of a point j(u, v, t1) at t = t1 is expressed as I(u, v, t1), where u and v are the coordinates on the image plane. At t = t1 + dt, this point moves to j(u + du, v + dv, t1 + dt) with an intensity of I(u + du, v + dv, t1 + dt), where du and dv are the displacement increments along u and v, respectively. These two intensities can be approximately related through a Taylor series expansion as:

$$I(u+du,\,v+dv,\,t_1+dt) \approx I(u,v,t_1) + I_u\,du + I_v\,dv + I_t\,dt \qquad [14.6]$$

where Iu, Iv, and It denote the partial derivatives of the intensity with regard to u, v, and t, respectively. Assuming the lighting on the cable segment is the same in the two images, the two intensity values can be assumed to be equal. Dividing the remaining first-order terms by dt then gives the governing equation of pixel movement:

$$I_u\,\dot{u} + I_v\,\dot{v} + I_t = 0 \qquad [14.7]$$

where $\dot{u} = du/dt$ and $\dot{v} = dv/dt$ are the velocity components of the image point j(u, v, t1), and $(\dot{u}, \dot{v})^T$ is termed the optical flow vector. Furthermore, on the assumption that there is a region of interest (ROI) R in which all points have the same constant optical flow vector, this optical flow vector should satisfy the following equation:

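$$\begin{bmatrix} \sum_{R} I_u^2 & \sum_{R} I_u I_v \\ \sum_{R} I_u I_v & \sum_{R} I_v^2 \end{bmatrix} \begin{bmatrix} \dot{u} \\ \dot{v} \end{bmatrix} = -\begin{bmatrix} \sum_{R} I_u I_t \\ \sum_{R} I_v I_t \end{bmatrix} \qquad [14.8]$$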

This equation can be used to compute the optical flow vector. The direction and the magnitude of the optical flow vector then indicate the direction and the magnitude of motion for the ROI in the image plane.
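The ROI computation can be sketched as a least-squares solve over all pixels in the region. The following is a minimal illustration, assuming that the constant-flow condition is enforced in the least-squares sense (the Lucas-Kanade form) and that dt equals one frame; the function name `roi_optical_flow` and the synthetic blob images are hypothetical.

```python
import numpy as np

def roi_optical_flow(frame1, frame2, roi):
    """Estimate one constant flow vector (du, dv) in pixels per frame for an ROI.

    roi = (row_start, row_stop, col_start, col_stop); u runs along columns
    and v along rows.
    """
    r0, r1, c0, c1 = roi
    f1 = frame1.astype(float)
    f2 = frame2.astype(float)
    I_v, I_u = np.gradient(f1)      # axis 0 is v (rows), axis 1 is u (columns)
    I_t = f2 - f1                   # temporal derivative with dt = 1 frame
    Iu = I_u[r0:r1, c0:c1].ravel()
    Iv = I_v[r0:r1, c0:c1].ravel()
    It = I_t[r0:r1, c0:c1].ravel()
    # Normal equations of min sum (Iu*du + Iv*dv + It)^2 over the ROI.
    A = np.array([[Iu @ Iu, Iu @ Iv],
                  [Iu @ Iv, Iv @ Iv]])
    b = -np.array([Iu @ It, Iv @ It])
    return np.linalg.solve(A, b)

# Synthetic check: a smooth blob moves by (+0.3, -0.2) pixels between frames.
v_grid, u_grid = np.mgrid[0:64, 0:64]

def blob(center_u, center_v, sigma=6.0):
    return np.exp(-((u_grid - center_u) ** 2 + (v_grid - center_v) ** 2)
                  / (2.0 * sigma ** 2))

frame1 = blob(32.0, 32.0)
frame2 = blob(32.3, 31.8)
flow = roi_optical_flow(frame1, frame2, roi=(8, 56, 8, 56))
```

The first-order expansion only holds for small motions between frames, so for larger displacements the estimate is typically iterated or computed on a coarser image first. The 2 × 2 matrix also becomes singular when the ROI contains intensity gradients in one direction only, as along a straight, uniformly lit cable edge.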

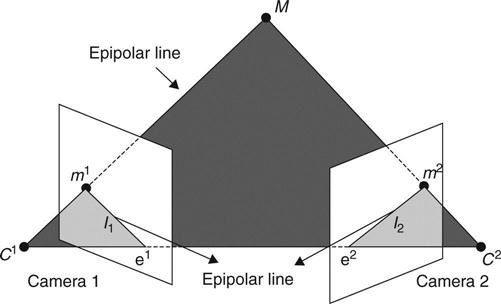

For the case of an object moving in the 3D domain, the optical flow technique can still be adopted using two or more cameras. For a two-camera image acquisition system, the epipolar geometry condition requires that two optical centers of the cameras C1 and C2, the point M, and its image projection points m1 and m2 should lie on the so-called epipolar plane as shown in Fig. 14.7. The two image points should satisfy the following equation (Hartley and Zisserman, 2003):

$$\mathbf{m}_2^{T}\,\mathbf{F}\,\mathbf{m}_1 = 0 \qquad [14.9]$$

where F is defined as the fundamental matrix. This equation essentially defines a line on the second image if the image coordinates on the first image are given and vice versa. It is then possible to establish a point correspondence from the two images without the use of any target.
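To make the epipolar constraint concrete, the sketch below builds F from two known projective matrices and checks that the image of a 3D point in the second camera lies on the epipolar line predicted from the first. It is an illustration only: the construction of F from the camera matrices follows the standard multiview-geometry result, the composition P = K R [I | t] is assumed, and the helper names and camera values are hypothetical.

```python
import numpy as np

def camera_matrix(K, R, t):
    """Assumed composition P = K R [I | t]."""
    return K @ R @ np.hstack([np.eye(3), np.reshape(t, (3, 1))])

def rot_y(deg):
    a = np.deg2rad(deg)
    return np.array([[np.cos(a), 0.0, np.sin(a)],
                     [0.0, 1.0, 0.0],
                     [-np.sin(a), 0.0, np.cos(a)]])

def skew(v):
    x, y, z = v
    return np.array([[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]])

def fundamental_from_cameras(P1, P2):
    """F = [e2]x P2 pinv(P1), where e2 is the image of camera centre 1 in camera 2."""
    _, _, vt = np.linalg.svd(P1)
    C1 = vt[-1]                       # homogeneous camera centre: P1 @ C1 = 0
    e2 = P2 @ C1
    return skew(e2) @ P2 @ np.linalg.pinv(P1)

def project(P, X):
    m = P @ np.append(X, 1.0)
    return m[:2] / m[2]

def epipolar_line(F, m1):
    """Line (a, b, c) in image 2 with a*u + b*v + c = 0, scaled so a^2 + b^2 = 1."""
    line = F @ np.append(m1, 1.0)
    return line / np.hypot(line[0], line[1])

def point_line_distance(line, m):
    return abs(line @ np.append(m, 1.0))

# Two hypothetical cameras looking at the origin, about 30 degrees apart.
K = np.array([[1200.0, 0.0, 640.0], [0.0, 1200.0, 360.0], [0.0, 0.0, 1.0]])
P1 = camera_matrix(K, np.eye(3), [0.0, 0.0, 5.0])
R2 = rot_y(30.0)
P2 = camera_matrix(K, R2, R2.T @ np.array([0.0, 0.0, 5.0]))

M = np.array([0.2, -0.1, 0.3])        # an arbitrary object point
m1, m2 = project(P1, M), project(P2, M)
F = fundamental_from_cameras(P1, P2)
distance_px = point_line_distance(epipolar_line(F, m1), m2)
```

In a target-free measurement, the search for the point corresponding to m1 can therefore be restricted to this one line in the second image instead of the whole frame.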

14.3.3 Camera movement

Most vision-based sensing techniques were developed based on the assumption that the camera was fixed on the ground. On the other hand, more and more applications in the computer vision community have demanded an investigation into the effect of camera motion, termed the ego-motion, on image understanding. The object motion recorded on an image sequence is a combined result of the actual object motion and the ego-motion. The effect of ego-motion becomes an issue of great concern for civil engineering applications. Civil infrastructures are normally large, and mounting cameras on fixed supports becomes a challenge. Several algorithms have been developed to extract the motion of a camera moving with respect to a fixed scene. These methods can be categorized either as discrete-time methods or as instantaneous-time methods (Tian et al., 1996). Simultaneous recovery of object motion and ego-motion has also been studied (Georgescu and Meer, 2002).

14.4 Applications for vision-based sensing techniques

To illustrate the applicability of vision-based measurement techniques, some measurement examples are presented in this section. These examples used only commercial grade non-metric cameras equipped with 1.18 million-pixel progressive CCD and could record high-definition (HD) images with a pixel resolution of 1280 × 720 at 29.97 fps.

14.4.1 Small-scale building model test

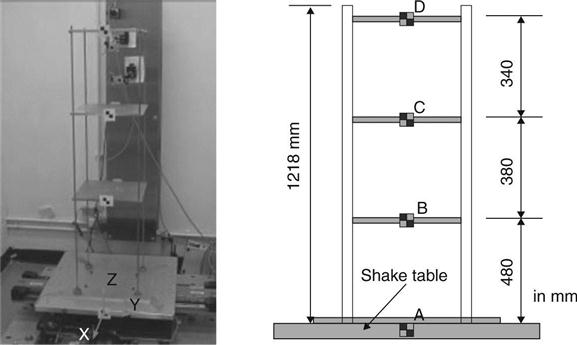

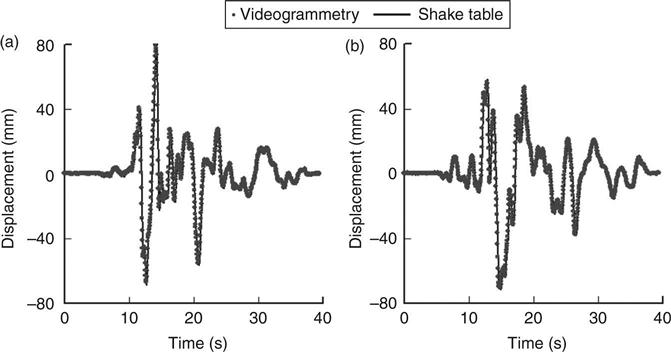

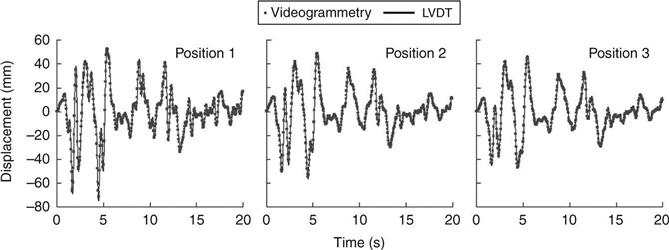

In this example, the plane-based method introduced above was used to calibrate two non-metric cameras. The small-scale three-story building model was made out of aluminum with a uniform story height of 0.38 m and a total height of 1.21 m as shown in Figure 14.8. Four targets, A, B, C, and D were attached to the base and the three stories. The model was excited by the NS component (i.e. the earthquake component in the ‘North-South’ direction) of the 1940 El Centro earthquake ground displacement record and the 1995 Kobe earthquake 2D ground displacement record, respectively. Four laser linear variable differential transformers (LVDTs) were set up to measure the displacement time histories for the shake table and the three stories. Figures 14.9 and 14.10 show the two sets of results. These results indicate that image-based measurement can achieve good agreement with the LVDT measurement.
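The chapter does not spell out the reconstruction step used in this test. One common option for recovering a target's 3D position from two calibrated cameras is linear triangulation, sketched below under stated assumptions: the camera matrices, the composition P = K R [I | t], the target coordinates, and the helper names are all hypothetical, and noise-free image points are used.

```python
import numpy as np

def camera_matrix(K, R, t):
    """Assumed composition P = K R [I | t]."""
    return K @ R @ np.hstack([np.eye(3), np.reshape(t, (3, 1))])

def rot_y(deg):
    a = np.deg2rad(deg)
    return np.array([[np.cos(a), 0.0, np.sin(a)],
                     [0.0, 1.0, 0.0],
                     [-np.sin(a), 0.0, np.cos(a)]])

def project(P, X):
    m = P @ np.append(X, 1.0)
    return m[:2] / m[2]

def triangulate(P1, P2, m1, m2):
    """Linear triangulation: each view contributes two equations in the unknown point."""
    A = np.array([m1[0] * P1[2] - P1[0],
                  m1[1] * P1[2] - P1[1],
                  m2[0] * P2[2] - P2[0],
                  m2[1] * P2[2] - P2[1]])
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]

# Two hypothetical calibrated cameras viewing the model.
K = np.array([[1200.0, 0.0, 640.0], [0.0, 1200.0, 360.0], [0.0, 0.0, 1.0]])
P1 = camera_matrix(K, np.eye(3), [0.0, 0.0, 5.0])
R2 = rot_y(30.0)
P2 = camera_matrix(K, R2, R2.T @ np.array([0.0, 0.0, 5.0]))

# A target at story height 0.38 m moving horizontally (invented displacements, in m).
true_disp = np.array([0.0, 0.004, -0.003])
positions = [np.array([0.1 + d, 0.38, 0.0]) for d in true_disp]

recovered = np.array([triangulate(P1, P2, project(P1, X), project(P2, X))
                      for X in positions])
est_disp = recovered[:, 0] - recovered[0, 0]
```

Applying the same step to every frame of a synchronized image pair yields a displacement time history for each target, which is the quantity compared against the reference sensors in tests of this kind.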

14.4.2 Large-scale steel building frame test

As shown in Fig. 14.2, a three-story steel building frame was mounted on a shake table in the National Center for Research on Earthquake Engineering of Taiwan. This frame was excited by the 1940 El Centro earthquake 2D ground displacement record. The frame model had dimensions of 3 m × 4 m × 12 m with three stories evenly distributed in height. Fourteen control targets were attached on and around the frame for calibrating the two cameras. Some of these targets were used as target points for the displacement measurement. All control points were carefully surveyed by a total station. The camera calibration results showed that the mean reconstruction error of all targets was around 0.5–0.8 mm, which provided an indication on the measurement error for the set-up. Figure 14.11 shows the comparison of measurement results between the image-based technique and the LVDT. It can be seen that the proposed videogrammetric technique is able to track the dynamic displacement quite accurately.

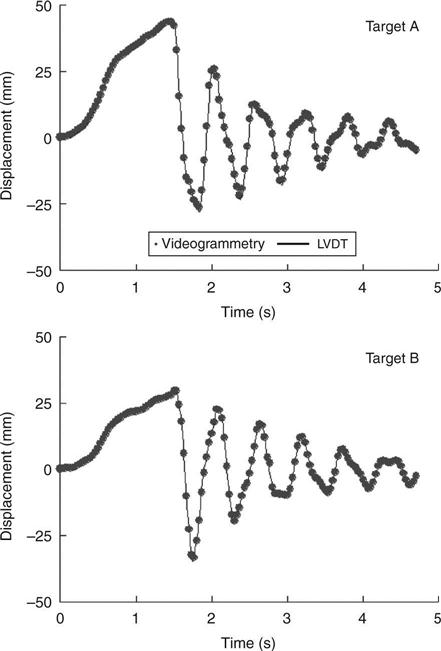

14.4.3 Wind tunnel bridge sectional model test

Figure 14.12 shows a wind tunnel set-up for a bridge sectional model test. The sectional model consisted of two wooden box girders connected by crossbars. The model was rigidly connected to circular rotatable shafts. The shafts were then attached to rigid rectangular bars, which were supported by four linear springs to provide the necessary stiffness for the section model. Two laser LVDTs were positioned at locations as shown in Fig. 14.12 to measure the displacement time histories during the test. Two targets were placed at locations as shown to validate the applicability of the vision-based sensing technique for such a test. The cameras were placed at about 1.2 m from the targets and the angle between the two cameras was about 30°. The focal lengths of the two cameras were both set at 15.6 mm. The model was pulled by a string and released to vibrate under a mild and steady wind condition. As the supporting rectangular bars were rigid, the readings from the laser LVDTs could be linearly scaled to the deformations at the two target locations for comparison. Figure 14.13 shows the measurement results at the two target locations. The close match between the two measured results indicates that the vision-based sensing technique is able to track the two targets quite accurately.

14.4.4 Bridge cable test

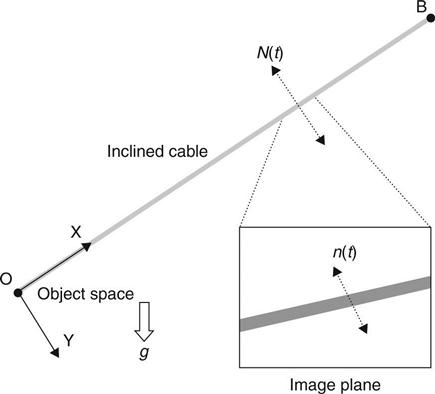

In this example, the use of the optical flow approach for measuring bridge cable vibration (in-plane motion) with a monocular-vision-based sensing technique is demonstrated. Only one camera is used for the image acquisition. Assume that a bridge cable vibrates predominantly in the in-plane direction perpendicular to the chord, as shown in Fig. 14.14. Any point on the cable can then be regarded as undergoing a 1D motion. Define the line trajectory of this point in the 3D object space and in the 2D image plane as N(t) and n(t), respectively, where t is time. For a projective camera, the mapping between the 1D motion in the 3D object space and the corresponding 1D motion in the 2D image plane can be obtained via a pinhole camera model. For a line-to-line projection, the camera matrix P becomes a 2 × 2 matrix:

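$$\lambda \begin{Bmatrix} n(t) \\ 1 \end{Bmatrix} = \begin{bmatrix} p_1 & p_2 \\ p_3 & p_4 \end{bmatrix} \begin{Bmatrix} N(t) \\ 1 \end{Bmatrix}$$ [14.10]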

where pi (i = 1, …, 4) are the camera projection coefficients. Eliminating λ in Equation [14.10] gives:

$$n(t) = \frac{p_1 N(t) + p_2}{p_3 N(t) + p_4}$$ [14.11]

It can be seen that the line N(t) in 3D space and the line n(t) on 2D image plane are nonlinearly correlated. Under the affine camera assumption, p3 in the camera matrix is equal to 0. Hence, Equation [14.11] becomes:

$$n(t) = p'_1 N(t) + p'_2$$ [14.12]

where the scaling factor p′1 = p1 / p4 and the initial factor p′2 = p2 / p4.

It can be seen that the 1D motion in the 3D object space N(t) and its corresponding 1D motion in the 2D image plane n(t) are linearly correlated, so their frequency contents are the same. Under such conditions, it is possible to obtain the natural frequencies of stay cables from the cable image motion recorded by a single camera. The object motion N(t) can be obtained from the image motion n(t) if the scaling factor p′1 can be determined. Setting the initial factor p′2 = 0, the 1D motion in the object space N(t) can be obtained from the 1D motion on the image plane n(t) as:

$$N(t) = \frac{n(t)}{p'_1}$$ [14.13]

It should be noted that the estimation of cable displacement presented above is applicable to those cables without a noticeable sag effect.
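The relationship between image motion and object motion described above can be illustrated numerically. The sketch below is a minimal example under stated assumptions: the projection coefficients, the frame rate, and the sinusoidal cable motion are all hypothetical values chosen for illustration, and the function names are not from the chapter. It projects a 1D motion with the line-to-line model, recovers it under the affine assumption (p3 = 0, zero offset), and compares the dominant frequency of the image motion in the affine case with that in a mildly projective case.

```python
import numpy as np

def project_1d(N, p1, p2, p3, p4):
    """Line-to-line projective mapping n = (p1*N + p2) / (p3*N + p4)."""
    N = np.asarray(N, dtype=float)
    return (p1 * N + p2) / (p3 * N + p4)

def recover_object_motion(n, scale):
    """Affine inverse with zero offset: N = n / p1'."""
    return np.asarray(n, dtype=float) / scale

def dominant_frequency(x, fs):
    """Frequency (Hz) of the largest FFT magnitude after removing the mean."""
    x = np.asarray(x, dtype=float)
    spectrum = np.abs(np.fft.rfft(x - x.mean()))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    return freqs[np.argmax(spectrum)]

# Hypothetical values, chosen only for illustration
fs = 30.0                                  # assumed frame rate, Hz
t = np.arange(600) / fs                    # 20 s record
N = 30.0 * np.sin(2 * np.pi * 2.05 * t)    # assumed object motion, mm

p1, p4 = 8.0, 1.0                          # assumed coefficients (p1/p4 in pixels per mm)
n_affine = project_1d(N, p1, 0.0, 0.0, p4)     # p3 = 0 (affine), p2 = 0
N_hat = recover_object_motion(n_affine, p1 / p4)

n_proj = project_1d(N, p1, 0.0, 1e-3, p4)      # small nonzero p3: mildly projective

print("max recovery error (affine):", np.max(np.abs(N_hat - N)))
print("dominant frequencies:", dominant_frequency(n_affine, fs),
      dominant_frequency(n_proj, fs))
```

In the affine case the recovery is an exact rescaling. With a nonzero p3 the mapping is nonlinear, so dividing by a constant scale would no longer recover N(t) exactly.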

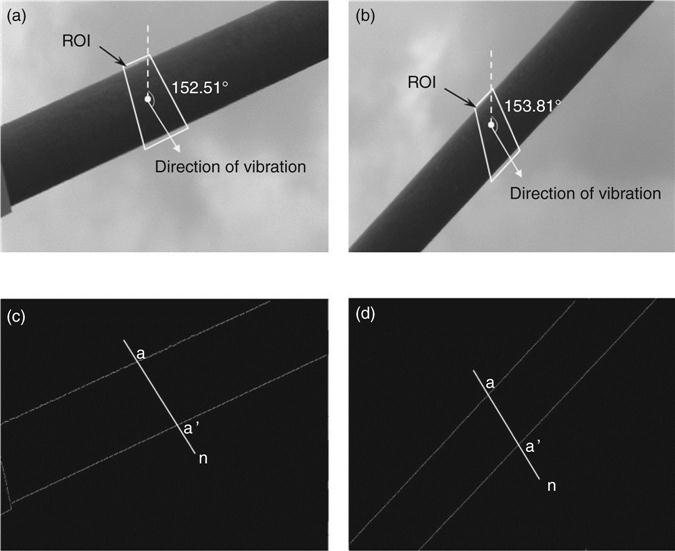

To illustrate, one cable of a small cable-stayed pedestrian bridge (see Fig. 14.15) was selected for measurement. The diameter of the cable was measured to be 40 mm. This cable was subjected to an initial deformation in the vertical plane and underwent free vibration. Two camcorders, placed at 2 and 3 m distance, respectively, were employed to measure the vibration of the cable separately. The focal lengths for both camcorders were set at 52 mm, and the ratios of the field of view to the cable-camera distance were both around 0.07 to meet the assumption of an affine camera. The two camcorders recorded the motion of the same segment on the cable so that comparison could be made. A total station was used to measure the coordinates of the two camcorders as well as the two anchorage locations of the cable. An accelerometer was also attached to the cable to obtain the cable frequencies for comparison.

Figures 14.16a and 14.16b show one of the images captured by Camcorders 1 and 2, respectively. The directions of the optical flow vectors obtained for the ROIs from Camcorder 1 and Camcorder 2 are shown in Figs 14.16c and 14.16d. Plate XIa (in the color section between pages 294 and 295) shows the displacement time histories of the cable segment obtained from both camcorders. It can be seen that the cable segment oscillates between −30 and 30 mm. Plate XIb shows the power spectral densities of the two displacement time histories shown in Plate XIa. In the frequency range of 0 to 8 Hz, the image-based technique identifies three frequencies: 2.05, 3.81, and 5.84 Hz. Three frequencies are also obtained from the accelerometer: 2.05, 3.81, and 5.76 Hz. The first two frequencies coincide and the third differs by 0.08 Hz (about 1.4%), which indicates that the vision-based sensing technique is capable of measuring the natural frequencies of this bridge cable quite accurately.
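Identifying cable frequencies from a displacement time history amounts to locating peaks in its power spectral density. The sketch below shows one simple way to do this with a Hann-windowed periodogram and local-maximum picking. It is an illustration only, not the processing used in the chapter. The signal is synthetic: the three frequencies and the 30 mm amplitude are taken from the text, while the frame rate, record length, higher-mode amplitudes, and noise level are assumptions.

```python
import numpy as np

def periodogram(x, fs):
    """Hann-windowed periodogram; returns (frequencies in Hz, spectral estimate).

    The one-sided factor of 2 is omitted because only peak locations are used.
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    w = np.hanning(x.size)
    psd = np.abs(np.fft.rfft(x * w)) ** 2 / (fs * np.sum(w ** 2))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    return freqs, psd

def pick_peaks(freqs, psd, f_max, n_peaks):
    """Return the n_peaks strongest local maxima at or below f_max, sorted by frequency."""
    band = np.flatnonzero(freqs <= f_max)
    band = band[(band > 0) & (band < psd.size - 1)]   # keep bins with two neighbours
    is_peak = (psd[band] > psd[band - 1]) & (psd[band] >= psd[band + 1])
    candidates = band[is_peak]
    strongest = candidates[np.argsort(psd[candidates])[-n_peaks:]]
    return np.sort(freqs[strongest])

# Synthetic cable displacement (mm); all values other than the three
# frequencies and the 30 mm amplitude are assumed for illustration.
fs = 30.0                                   # assumed frame rate, Hz
t = np.arange(3000) / fs                    # assumed 100 s record
rng = np.random.default_rng(0)
x = (30.0 * np.sin(2 * np.pi * 2.05 * t)
     + 10.0 * np.sin(2 * np.pi * 3.81 * t)
     + 5.0 * np.sin(2 * np.pi * 5.84 * t)
     + rng.normal(0.0, 0.5, t.size))        # assumed measurement noise

freqs, psd = periodogram(x, fs)
print(pick_peaks(freqs, psd, f_max=8.0, n_peaks=3))
```

The frequency resolution of such an estimate is the reciprocal of the record length, so closely spaced modes need a sufficiently long image sequence to be separated.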

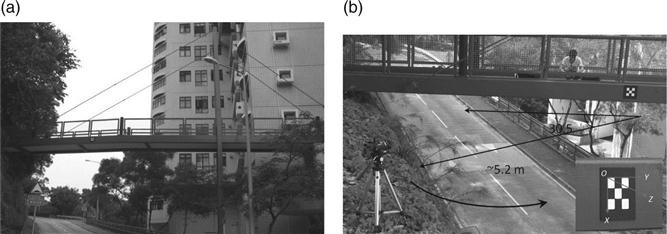

14.4.5 Pedestrian bridge test

This test was conducted on a small-scale single-pylon pedestrian bridge. The 3D dynamic displacement of the bridge deck was measured using the monocular-vision sensing technique. The planar target used for camera calibration and target tracking was placed at one side of the bridge deck (see Fig. 14.17). The camera was placed about 5.2 m from the target at about the same height, and the extrinsic parameters of the camera from the first frame indicated that the inclination angle between the Z and −Zc axes was about 59.5°. The bridge was excited by two persons jumping on the bridge deck. A 20 s image sequence was recorded to measure the three-dimensional motion of the target. Based on the predefined world coordinate system, the displacement of the bridge deck was predominantly along the X direction.

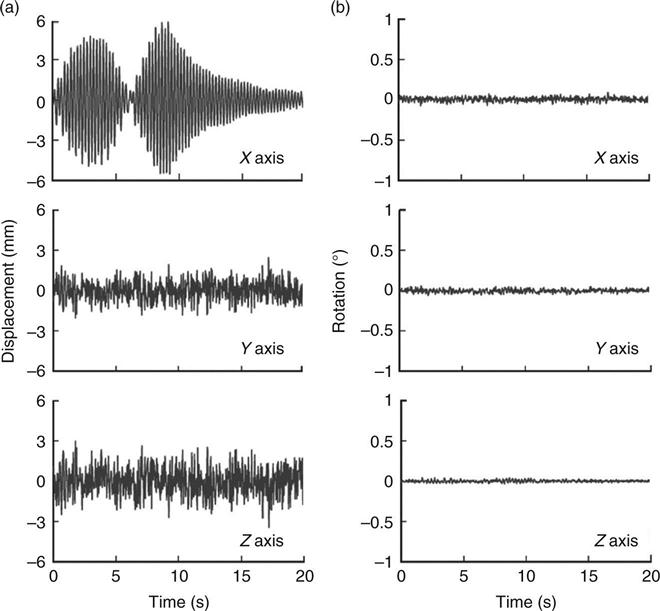

Figure 14.18a shows the measured displacement time histories along the X, Y, and Z axes. It can be seen that the displacements along the X direction are larger than those along the other two directions. The first 9 s of the X displacement record correspond to the period when the bridge vibration was being built up by the two persons jumping continuously on the deck. After the jumping stopped, the bridge underwent free decay vibration, as shown in Fig. 14.18a. The maximum displacement amplitude along the X direction is about 6 mm. The measured displacements along the Y and Z directions, however, do not show the build-up and free decay behavior seen along the X direction. These Y and Z displacements can be attributed more to measurement noise than to actual displacement of the bridge deck. The standard deviations of the displacements along the Y and Z directions are found to be 0.76 and 1.09 mm, respectively. Figure 14.18b shows the measured rotation angles about the three axes, which were all found to be less than 0.1°.
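The standard deviations quoted above serve as a noise-floor estimate for directions in which the deck does not move appreciably. The sketch below shows how such an estimate, and a rough ratio of peak amplitude to noise, could be computed. It is illustrative only: the Y record is synthetic Gaussian noise, the 30 frames per second rate is an assumption, and comparing the X amplitude with the Y and Z noise levels presumes that the noise is of similar size in all directions, which the source does not state.

```python
import numpy as np

def noise_std(d):
    """Sample standard deviation (mm) of a displacement record."""
    return float(np.std(np.asarray(d, dtype=float), ddof=1))

def amplitude_to_noise(peak_amplitude, sigma):
    """Ratio of peak displacement amplitude to noise standard deviation."""
    return peak_amplitude / sigma

# Synthetic stand-in for the Y record: 20 s at an assumed 30 frames per second
rng = np.random.default_rng(1)
y = rng.normal(0.0, 0.76, 600)

print("estimated Y noise std (mm):", noise_std(y))
# Values from the text: 6 mm peak along X; 0.76 and 1.09 mm std along Y and Z
print("amplitude-to-noise, using Y:", amplitude_to_noise(6.0, 0.76))
print("amplitude-to-noise, using Z:", amplitude_to_noise(6.0, 1.09))
```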

14.5 Conclusions

Measuring the static and dynamic displacement of in-service structures is important for design validation, performance monitoring, and safety assessment. Currently available measurement techniques either cannot provide sufficient accuracy or become quite expensive when a large number of measurement locations is required. Recently, vision-based measurement techniques have emerged as an important non-destructive evaluation (NDE) method, owing to the availability of low-cost, high-resolution commercial digital cameras and effective image processing algorithms. These techniques combine research developments from the photogrammetry and computer vision disciplines and offer direct displacement measurement in both the temporal and spatial domains for civil engineering structures. Furthermore, the cameras used in these techniques can be calibrated on-site, which offers great flexibility and significantly simplifies installation. In this chapter, some important issues that might affect the accuracy of vision-based measurement techniques have been presented and discussed. Results from laboratory and field tests show that vision-based measurement techniques can remotely capture the displacement response of structures with accuracy comparable to that of GPS. With the rapid development of digital image acquisition and processing, vision-based measurement techniques are expected to continue to improve in accuracy and to offer a viable, cost-effective solution for obtaining three-dimensional displacement responses of civil engineering structures. Despite their advantages and potential, current vision-based techniques that use commercial digital cameras still suffer from several limitations, including low sampling frequency, high measurement noise, and weather and lighting constraints, which might restrict their field applications. Further research efforts are needed to circumvent some of these limitations, perhaps by integrating these vision-based measurement techniques with other sensors available to the civil engineering community.

14.6 Acknowledgment

This study is supported by the Hong Kong Research Grants Council Competitive Earmarked Research Grant 611409.