Chapter 13

SA Forum Middleware Implementations

13.1 Introduction

This chapter presents the two leading open source implementations of the Service Availability (SA) Forum Service Availability Interface specifications:

- OpenHPI, which implements the Hardware Platform Interface (HPI) [35]; and

- OpenSAF, which delivers the services of the SA Forum Application Interface Specification (AIS).

Both projects were initiated and are driven by leading telecom and computing companies, many of whom contributed to the specifications themselves. These implementations can be deployed by themselves or together to provide a complete SA Forum solution.

13.1.1 OpenHPI

OpenHPI, an open source project [71, 105], aims to implement the SA Forum HPI specification. The project started in 2004 and quickly provided its first HPI implementation. With the release of OpenHPI 2.0 the project implemented the HPI B.01.01 version of the interface specification in the same year as it was published by the SA Forum. The project has remained well on track in implementing the new versions of the specification. At the time of writing it provides the HPI interface according to the B.03.02 version of the SA Forum standard [35]. The only exceptions are the diagnostics initiator management instrument (DIMI) and the firmware upgrade management instrument (FUMI) functions introduced by the B.02.01 version of the specification, which are not yet provided by some plugins.

OpenHPI adopted a design that makes it easily adaptable to different hardware architectures. The project also goes a few steps further than just implementing the HPI specification. HPI users can take advantage of various additional support programs the project provides:

- There is an extensive set of client programs—examples that show the use of the different HPI functions. These same examples can also be used for testing and/or monitoring the hardware.

- The hpi_shell provides an interactive interface to the hardware using HPI functions; and

- Different graphical user interfaces allow for browsing the hardware.

These support programs greatly helped the adoption of the HPI specification by different hardware manufacturers and implementers of hardware management software.

13.1.2 OpenSAF

The OpenSAF [91] open source project was launched in mid-2007. It is the most complete and most up-to-date implementation of the SA Forum AIS specifications that can be deployed even in carrier-grade and mission critical systems. OpenSAF is an open-source high-availability middleware consistent with SA Forum AIS developed within the OpenSAF Project. Anyone can contribute to the codebase and it is freely available under the LGPLv2.1 license [106].

OpenSAF has a modular build, packaging, and runtime architecture, which makes it adaptable to different deployment cases, whether the full set or only a subset of the services is required. Besides the standard C Application Programming Interfaces (APIs), it also contains the standard Java bindings for a number of services as well as Python bindings for all the implemented AIS services, which facilitates a wide range of application models.

In the rest of the chapter we take a more detailed look at each of these open source projects. We start with the OpenHPI project. We continue with the OpenSAF project and the evolution of the middleware from the start of the project until version 4.1, the latest stable version at the time of writing. We also describe the architecture and the main implementation aspects of OpenSAF, as well as its management aspects, and illustrate the process of deploying OpenSAF on target environments.

13.2 The OpenHPI Project

OpenHPI is one of the first implementations of SA Forum's HPI specification discussed in Chapter 5 and it is a leading product in this area. It has also become part of many Linux distributions.

This section describes the architecture and codebase of this open source project.

13.2.1 Overview of the OpenHPI Solution

The idea of an open source implementation of HPI immediately faces the problem that an interface to hardware must be hardware-specific. At the same time, an open source implementation has the goal to provide a common implementation of the interface.

OpenHPI addressed this problem by introducing the plugin concept. Plugins contain the hardware-specific part and use a common interface toward the OpenHPI infrastructure.

This approach created a new de-facto standard of the plugin application binary interface (ABI).

The client programs provided within the OpenHPI project can be used to exercise OpenHPI as well as third party HPI implementations. This demonstrates the portability of client applications that use the standardized HPI interfaces and the advantage of standard interfaces in general.

OpenHPI uses a client-server architecture, which defines an interface between the server (the OpenHPI daemon) and the client (the library linked to the HPI user process). Because OpenHPI is an open source project, other implementations of the HPI specification can take advantage of this architecture by using the same interfaces; in this way the project also created a new de facto standard. This gives the industry a desirable flexibility.

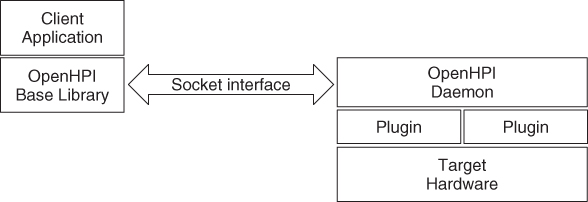

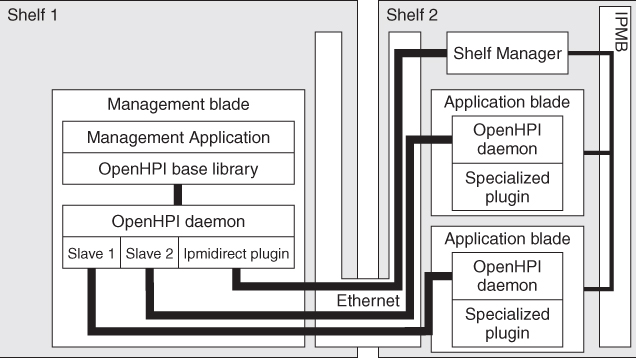

The OpenHPI client-server architecture is illustrated in Figure 13.1. This solution also provides remote management access to the hardware. As shown in the figure OpenHPI has introduced the hardware specific plugin concept on the server side. This makes the solution easily adaptable to different hardware architectures.

Figure 13.1 OpenHPI architecture.

Some commercial HPI implementations use the interface between the OpenHPI base library and the OpenHPI daemon as defined by the OpenHPI client-server architecture. This allows them to combine OpenHPI components with their own implementation. Nevertheless, in most cases hardware vendors do not yet automatically provide HPI implementations or OpenHPI plugins in their deliveries.

13.2.1.1 Base Library

The OpenHPI base library provides the user interface with all HPI interfaces as defined in the SA Forum specification. It opens the sessions to the HPI domains by connecting to the correct OpenHPI daemon.

The initial configuration of the base library is given in a configuration file, which defines the Internet Protocol (IP) address and port at which the library finds the daemon for each specific domain. This configuration can be modified at runtime by dynamically adding new domains to the library configuration or deleting existing ones from it.
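The runtime-modifiable domain configuration described above can be pictured as a small in-memory map. The following is a minimal sketch under stated assumptions: the names `DaemonAddress` and `DomainTable`, their methods, and the example addresses and port are hypothetical illustrations and are not part of the OpenHPI API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DaemonAddress:
    host: str
    port: int


class DomainTable:
    """Hypothetical in-memory view of the base library's domain configuration."""

    def __init__(self, initial):
        # Start from the entries that would be read from the configuration file.
        self._domains = dict(initial)

    def add_domain(self, domain_id, host, port):
        if domain_id in self._domains:
            raise ValueError(f"domain {domain_id} already configured")
        self._domains[domain_id] = DaemonAddress(host, port)

    def delete_domain(self, domain_id):
        del self._domains[domain_id]

    def lookup(self, domain_id):
        # A session open for this domain would connect to the returned address.
        return self._domains[domain_id]

    def domain_ids(self):
        return sorted(self._domains)


# Example values only: documentation addresses and an arbitrary port.
table = DomainTable({1: DaemonAddress("192.0.2.10", 4743)})
table.add_domain(2, "192.0.2.20", 4743)
table.delete_domain(1)
```

In this sketch the changes live only in memory, so the configuration file that seeded the table is left untouched.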

13.2.1.2 Socket Interface

The message interface between the base library and the OpenHPI daemon uses a special marshaling, which supports differences in endianness and data alignment. Thus, the client together with the base library may run on a hardware architecture that is different from the one hosting the OpenHPI daemon.
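To illustrate why explicit marshaling makes the two sides independent of each other's byte order, here is a minimal sketch using Python's `struct` module. The message layout (a one-byte byte-order flag, a 32-bit resource identifier, and a 16-bit severity code) is a hypothetical example and is not the actual OpenHPI wire format.

```python
import struct

# Hypothetical layout: 32-bit id followed by a 16-bit code, packed without
# padding. "<" selects little-endian and ">" selects big-endian encoding.
LITTLE = "<IH"
BIG = ">IH"


def marshal(resource_id, severity, byte_order_flag):
    """Encode in the sender's byte order and prefix a one-byte flag."""
    fmt = LITTLE if byte_order_flag == 0 else BIG
    return bytes([byte_order_flag]) + struct.pack(fmt, resource_id, severity)


def demarshal(message):
    """Decode by reading the flag first, independent of the local CPU."""
    fmt = LITTLE if message[0] == 0 else BIG
    return struct.unpack(fmt, message[1:])


from_little = marshal(0x01020304, 2, 0)
from_big = marshal(0x01020304, 2, 1)
```

The two encodings differ on the wire, yet `demarshal` recovers the same values from both because the format is stated explicitly rather than inherited from the host.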

13.2.1.3 OpenHPI Daemon

Every OpenHPI daemon implements one HPI domain. This includes the data structures as defined by the HPI specification; namely, the domain reference table (DRT), the resource presence table (RPT), and the domain event log. It is also responsible for the uniqueness of the HPI entity paths.

The OpenHPI daemon is capable of hosting multiple plugins to access different hardware. Having multiple plugins loaded at the same time is especially important in cases where the hardware architecture provides a separate management controller, as is the case with the Advanced Telecommunication Computing Architecture (ATCA) shelf manager. Thus, a single daemon can manage multiple shelves using multiple instances of the ATCA ipmidirect plugin and at the same time host a specialized plugin to manage the local hardware, which cannot be accessed via the ATCA IPMI bus (see an example configuration in Section 13.2.2.4). This principle also applies to all other bladed or non-bladed architectures where there is a separate management controller with out-of-band management.

The OpenHPI daemon is configured with an initial configuration file, which specifies the plugins to be loaded at startup and their plugin specific parameters. As in case of the base library, plugins can be loaded or unloaded dynamically at runtime as necessary.

13.2.1.4 OpenHPI Plugins

Plugins are at the heart of OpenHPI as they serve as proxies to the different hardware management interfaces and protocols.

The plugin ABI allows a plugin to provide only a subset of the functions. This way plugins can also share the management tasks for some hardware, for example, by having a specialized plugin for diagnosis via DIMI.
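The idea that each plugin implements only part of the interface can be sketched as a dispatcher that looks for the first plugin offering the requested function. This is a toy model under stated assumptions: the class and function names are hypothetical, and the real plugin ABI is a C interface that this sketch does not reproduce.

```python
class SensorPlugin:
    """Hypothetical plugin that implements only sensor access."""

    def get_sensor_reading(self, resource_id):
        return {"resource": resource_id, "value": 42.0}


class DiagnosticsPlugin:
    """Hypothetical plugin that implements only a diagnostics entry point."""

    def start_diagnostics(self, resource_id):
        return f"diagnostics started on resource {resource_id}"


class Daemon:
    """Toy dispatcher: forwards a call to the first plugin that provides it."""

    def __init__(self, plugins):
        self._plugins = list(plugins)

    def call(self, function_name, *args):
        for plugin in self._plugins:
            handler = getattr(plugin, function_name, None)
            if callable(handler):
                return handler(*args)
        # No loaded plugin offers this function.
        raise NotImplementedError(function_name)


daemon = Daemon([SensorPlugin(), DiagnosticsPlugin()])
reading = daemon.call("get_sensor_reading", 7)
status = daemon.call("start_diagnostics", 7)
```

Neither plugin is complete on its own, but together they cover both tasks for the same resource.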

The open source project provides the following plugins:

- ipmidirect

An Intelligent Platform Management Interface (IPMI) plugin designed specifically for ATCA chassis. It implements IPMI commands directly within the plugin.

- snmp_bc

A Simple Network Management Protocol (SNMP) based plugin that can communicate with the International Business Machines (IBM) BladeCenter, as well as with IBM xSeries servers with Remote Supervisor Adapter (RSA) 1 adapters.

- ilo2_ribcl

An OpenHPI plugin supporting the Hewlett-Packard (HP) ProLiant Rack Mount Servers. This plugin connects to the iLO2 on the HP ProLiant Rack Mount Server using a Secure Socket Layer (SSL) connection and exchanges information via the Remote Insight Board Command Language (RIBCL).

- oa_soap

An OpenHPI plugin supporting HP BladeSystems c-Class. This plugin connects to the Onboard Administrator (OA) of a c-Class chassis using an SSL connection and manages the system using an eXtensible Markup Language (XML) encoded simple object access protocol (SOAP) interface.

- rtas

The Run-Time Abstraction Services (RTAS) plugin.

- sysfs

An OpenHPI plugin, which reads the system information from sysfs.

- watchdog

An OpenHPI plugin providing access to the Linux watchdog device interface.

In addition the following plugins have been provided to ease testing and to support complex hardware architectures:

- simulator

An OpenHPI plugin, which reports fake hardware used for testing the core library.

- dynamic_simulator

An OpenHPI plugin, which reports fake hardware defined in the simulation.data file and which is used to test the core library.

- slave

An OpenHPI plugin, which allows the aggregation of resources from different domains (slave domains) and provides these aggregated resources as part of one domain (master domain).

13.2.2 User Perspective

The OpenHPI architecture is very flexible in supporting different distributed system architectures because it is the user who gets to choose the components on which the OpenHPI daemons should run, and the ways the necessary plugins are deployed.

In this section we demonstrate this flexibility on a few ATCA-based examples. The principles that we present apply also for other hardware architectures that use separate management controllers.

13.2.2.1 Typical OpenHPI Deployment in ATCA

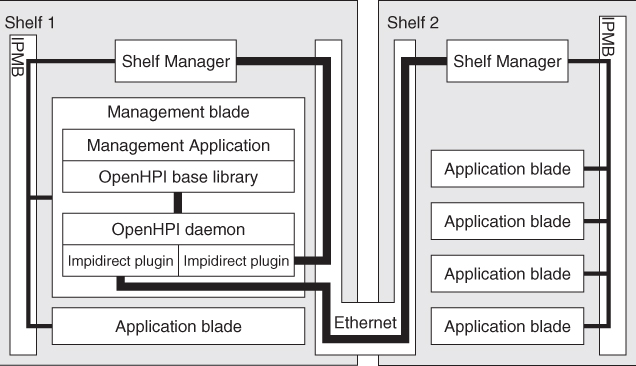

The diagram of Figure 13.2 shows the OpenHPI deployment in a typical ATCA configuration with two shelves. The management application, that is, the HPI user, runs on a blade within one of the shelves (Shelf 1 in this example). This blade also hosts the HPI daemon with two ipmidirect plugin instances talking to the shelf managers of both shelves.

Figure 13.2 Typical OpenHPI deployment in ATCA.

Please note that in this deployment example the two shelves are managed as a single HPI domain.

13.2.2.2 OpenHPI Deployment in ATCA with Multiple Domains

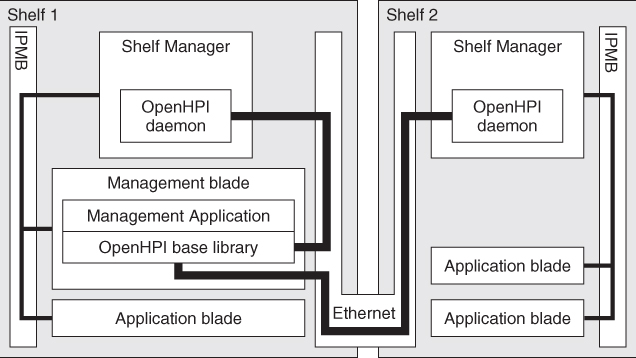

The next example, shown in Figure 13.3, illustrates that the OpenHPI daemon can also run on the shelf manager, with a separate daemon in each shelf manager. This configuration can manage the same hardware as in the previous example, but the HPI user, that is, the management application in Shelf 1, must work with two HPI domains.

Figure 13.3 OpenHPI deployment in ATCA with multiple domains.

The OpenHPI daemon on the shelf manager may use the ipmidirect plugin or a shelf manager specific plugin.

This configuration provides better start-up times than the first one, because the discovery is performed on the shelf manager, and there the communication is faster.

13.2.2.3 OpenHPI Deployment Using the Slave Plugin

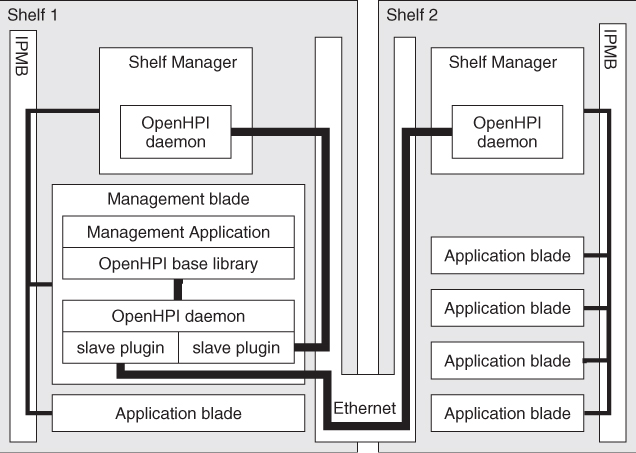

The configuration in Figure 13.4 is again very similar to the previous one. But it avoids creating different HPI domains by using the slave plugin with an additional instance of the OpenHPI daemon running on a blade of the first shelf. It is configured with two instances of the slave plugin, each talking to an OpenHPI daemon on a shelf manager.

Figure 13.4 OpenHPI deployment in ATCA using slave plugin.

Again the OpenHPI daemon on the shelf manager may use the ipmidirect plugin or a shelf manager specific plugin.

This configuration combines the advantages of the first two examples, that is, there is a single HPI domain yet the startup time is still good.
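The aggregation performed in this deployment can be sketched as follows. This is a simplified illustration, assuming hypothetical class names and a simple name prefix to keep aggregated resources unique; it does not show how the real slave plugin is configured or how it maps entity paths.

```python
class ShelfDaemon:
    """Stand-in for an OpenHPI daemon on a shelf manager (one HPI domain)."""

    def __init__(self, resources):
        self._resources = list(resources)

    def discover(self):
        # Discovery runs close to the hardware, on the shelf manager.
        return list(self._resources)


class SlaveInstance:
    """Hypothetical slave plugin instance bound to one remote daemon."""

    def __init__(self, remote, root):
        self._remote = remote
        self._root = root

    def resources(self):
        # Prefix each remote resource so names stay unique in the master domain.
        return [f"{self._root}/{name}" for name in self._remote.discover()]


class MasterDaemon:
    """Central daemon exposing all aggregated resources as a single domain."""

    def __init__(self):
        self._slaves = []

    def load_slave(self, slave):
        self._slaves.append(slave)

    def resource_table(self):
        table = []
        for slave in self._slaves:
            table.extend(slave.resources())
        return table


master = MasterDaemon()
master.load_slave(SlaveInstance(ShelfDaemon(["blade1", "blade2"]), "shelf1"))
master.load_slave(SlaveInstance(ShelfDaemon(["blade1"]), "shelf2"))
```

The management application sees one resource table, while each shelf daemon still performs its own discovery.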

13.2.2.4 In-Band and Out-of-Band Management

High availability systems often provide hardware management via a separate management bus, such as the IPMI bus in ATCA. However, such out-of-band management typically cannot access all the hardware components. The OpenHPI plugin concept allows the in-band and the out-of-band communication paths to be used in parallel, as shown in Figure 13.5.

Figure 13.5 In-band and out-of-band management.

For the specialized plugin, we need a local daemon on every blade with this specialized hardware. The specialized plugin can access the hardware components without a direct connection to the IPMI management. Thus, management applications could connect to the local daemons via a separate HPI session. But it is easier for the applications if slave plugins are used in the central daemon as this way all hardware resources can be managed using a single HPI domain.

In case an application blade is added at run time, it is also necessary to change the OpenHPI configuration dynamically: It needs to load a new instance of the slave plugin to manage the new local daemon.

13.2.3 OpenHPI Tools

13.2.3.1 Dynamic Configuration Functions

OpenHPI provides a few functions in addition to those of the HPI specification, which are mainly needed for dynamic configuration. These functions allow us to:

- display the configured plugins;

- find the plugin, which manages a particular resource;

- create an additional plugin configuration;

- delete a plugin from the configuration;

- display the configured domains for the base library (this is different from displaying the DRT, since the base library is not itself part of any HPI domain);

- add a domain to the configuration;

- delete a domain from the configuration.

These functions only temporarily change the OpenHPI configuration and do not change the configuration files.

13.2.3.2 Command Line Tools

OpenHPI provides an extensive set of command line utilities that allow calling HPI functions from a shell. These utilities can be used during testing or even as a simple hardware management interface. Most of the HPI functions can be called using these clients or from the hpi_shell, which works more interactively.

There are also clients to invoke the OpenHPI extensions for dynamic configuration.

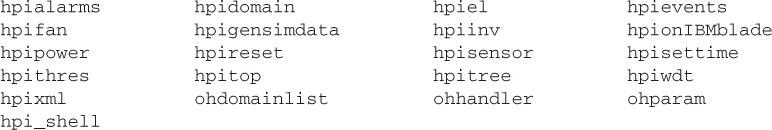

Here is a list of the client programs:

13.2.4 Open Issues and Recommendations

Like any open source project, OpenHPI depends on contributions by companies or by individuals. Therefore features are sometimes prepared in the architecture but never completely implemented. Similarly, the HPI standard may include a feature, but if none of the OpenHPI contributors needs this feature, it will not be implemented.

13.2.4.1 Related Domains and Peer Domains

Related domains and peer domains as defined in the HPI specification are not really used by OpenHPI. OpenHPI implements all the functions for exploring the DRT, but there is no possibility of configuring related domains or peer domains. Thus, strictly speaking, OpenHPI is compliant with the standard even with respect to the domain discovery as described in the specification by the SA Forum. But there were no users for these features, and thus the implementation is incomplete.

13.2.4.2 Diagnostics and Firmware Upgrade

HPI B.02 introduced new management instruments for diagnostics and firmware upgrade. This was a major step, since hardware vendors provide different solutions for this.

OpenHPI in its main parts implements the necessary interfaces for both the DIMI and the FUMI. But unfortunately, the functions are not supported by the major plugins provided by the project. Only the dynamic simulator plugin and the slave plugin support DIMI and FUMI.

The full support of DIMI and FUMI will only be possible when more implementations of the hardware specific plugins become available.

On the positive side, OpenHPI, being an open source project, provides easy access to an implementation of the SA Forum HPI specification. It is also easy to use OpenHPI for different hardware architectures, and the supporting tools provided within the project improve the usability at runtime as well as during application development.

13.3 The OpenSAF Project

In the rest of the chapter we give a high level overview of the OpenSAF project and middleware. First we provide some background information on how the project came about and the evolution of the middleware implementations up until the latest stable version, which is 4.1 at the time of writing this book. Subsequently we describe the main architectural, implementation, and management aspects of OpenSAF. Finally we illustrate the process of deploying OpenSAF on different target environments.

13.3.1 Background

13.3.1.1 How It All Started

The OpenSAF open source project was launched in mid-2007 by an informal group and subsequently led to the establishment of the OpenSAF Foundation [64] on 22 January 2008 with Emerson Network Power, Ericsson, HP, Nokia Siemens Networks, and SUN Microsystems as founding members. Soon after, GoAhead Software, Huawei, IP Infusion, Montavista, Oracle, Rancore Technologies, Tail-f, and Wind River also joined the foundation.

The initial codebase of the project was contributed by the Motorola Embedded Communication and Computing business by open-sourcing their Netplane Core Services, a high availability (HA) middleware software. This initial contribution included some SA Forum compliant APIs, but mostly legacy services. Since the project launch the OpenSAF architecture has been streamlined and completely aligned with the SA Forum AIS specifications.

To achieve portability across different Linux distributions, OpenSAF is consistent with the Linux Standard Base (LSB) [88] APIs. This way OpenSAF uses only those operating system (OS) APIs that are common to all LSB compliant Linux distributions. Porting to other, non-Linux operating systems is still easy, and has been done by some OpenSAF users, because OpenSAF uses the Portable Operating System Interface for Unix (POSIX) [73] as its OS abstraction layer.

13.3.1.2 OpenSAF 2.0 and 3.0: Pre-4.0 Era

The first release of the project, OpenSAF 2.0, was in August 2008. Its main highlights were the addition of the SA Forum Log service (LOG) implementation and the 64-bit adaptation of the entire codebase. While working on this first release the development processes and infrastructure were settled: OpenSAF uses the Mercurial distributed version control system and Trac for the web-based project management and wiki.

The implementations of the SA Forum Information Model Management (IMM) and Notification (NTF) services were added in OpenSAF 3.0, released in June 2009. At the same time most of the existing SA Forum services were also updated to match the latest specification versions. In addition the complete build system was overhauled to be based on the GNU autotools.

13.3.1.3 OpenSAF 4.0 Aka ‘Architecture’ Release

OpenSAF 4.0 was released in July 2010 and has been, so far, the biggest and most significant release earning the nickname the ‘Architecture’ release. It addressed three key areas: closing the last functional gaps with the SA Forum services, streamlining architecture, and improving the modularity.

The functional gaps with the SA Forum services were closed by the contribution of

- the Software Management Framework (SMF), which enabled in-service upgrades with minimal service disruption; and

- the Platform Management service (PLM) allowing the IMM-based management of hardware and low-level software.

Streamlining the architecture meant refactoring of the OpenSAF internals so that the OpenSAF services themselves became aligned with the specification and used the SA Forum service implementations instead of the legacy services. This allowed the removal of eight of the legacy services and despite the increased functionality (i.e., the addition of SMF and PLM) resulted in the reduction of the total code volume by almost two-thirds.

In this release modularity was a strong focus as well:

- The established modular architecture facilitates the evolution of OpenSAF without compromising the support for users requiring a minimum set of services.

- In the fully redesigned packaging each service is packaged in its own set of RPM Package Manager (RPM) packages to further support the wide range of deployment cases that different OpenSAF users might have.

13.3.1.4 OpenSAF 4.1: Post-4.0 Era

OpenSAF 4.1, which was released in March 2011, focused mainly on functional improvements of the existing services. These included the addition of rollback support in SMF; adding Transmission Control Protocol (TCP) as an alternative communication mechanism while still keeping Transparent Inter-Process Communication (TIPC) as the default transport protocol; some substantial improvements of IMM with regard to the handling of large IMM contents and schema upgrades; as well as improved handling of large Availability Management Framework (AMF) models.

Additionally the release delivers a Java Agent compliant with the JSR 319 (Java Specification Request) specification, which makes OpenSAF ready for integration with any Java Application Server compliant with JSR 319 [100].

13.3.2 OpenSAF Architecture

We look at the OpenSAF architecture from the process, deployment, and logical views. In the process and deployment views we describe the architectural patterns used by the implemented OpenSAF services. In the logical view we expand further on the different services and how they use the corresponding architectural patterns.

13.3.2.1 Process View

Within OpenSAF the different service implementations use one of the following architectural patterns.

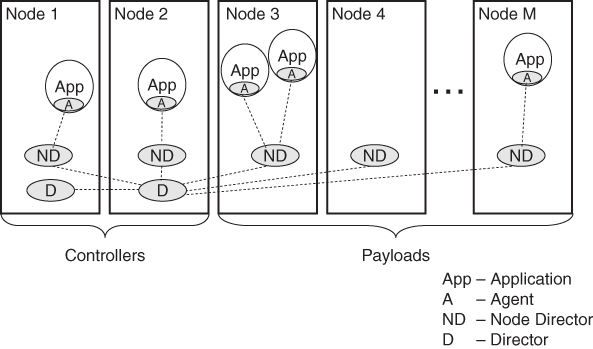

Three-Tier Architecture

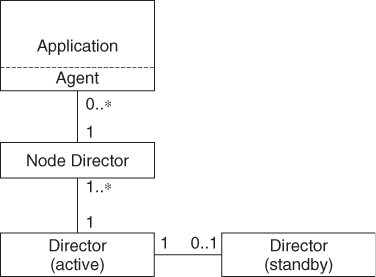

The three-tier architecture decomposes the service functionality into three parts as shown in Figure 13.6: the director, the node director, and the agent.

Figure 13.6 The three-tier architecture.

Director

A director for a particular service implementation manages and coordinates the key data among the other distributed parts of this service implementation. It communicates with one or more node directors. Directors are implemented as daemons and use the Message Based Checkpoint service (MBCSv) discussed in Section 13.3.4.2 to replicate the service state between the active and the standby servers running on the system controller nodes.

Node Director

Node directors handle all the activities within the scope of a node such as interacting with the central director and with the local service agent. They are implemented as daemons.

Agent

A service agent makes the service functionality available to the service users such as client applications, by means of shared linkable libraries, which expose the SA Forum APIs. Agents execute within the context of the application process using the service interface.

The main advantage of the three-tier architecture is that by offloading the node scoped activities from the singleton directors to the node directors it offers better scalability.
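The scalability argument can be made concrete with a toy model in which node-scoped requests never reach the director. This is a minimal sketch, assuming hypothetical class names and a simple "node" versus "cluster" scope tag; it is not how any particular OpenSAF service is implemented.

```python
class Director:
    """Cluster-wide coordinator (a singleton in this sketch)."""

    def __init__(self):
        self.cluster_state = {}
        self.requests_seen = 0

    def update(self, key, value):
        self.requests_seen += 1
        self.cluster_state[key] = value


class NodeDirector:
    """Per-node daemon: serves node-scoped work and forwards the rest."""

    def __init__(self, director):
        self._director = director
        self.local_state = {}

    def handle(self, scope, key, value):
        if scope == "node":
            # Handled locally; the central director is not involved.
            self.local_state[key] = value
        else:
            self._director.update(key, value)


class Agent:
    """Library linked into the application process."""

    def __init__(self, node_director):
        self._nd = node_director

    def set_local(self, key, value):
        self._nd.handle("node", key, value)

    def set_cluster(self, key, value):
        self._nd.handle("cluster", key, value)


director = Director()
node_director = NodeDirector(director)
agent = Agent(node_director)
agent.set_local("heartbeat", 1)
agent.set_local("heartbeat", 2)
agent.set_cluster("leader", "node-1")
```

Of the three requests issued by the application, only the cluster-scoped one is counted by the director; the load of the other two stays on the node.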

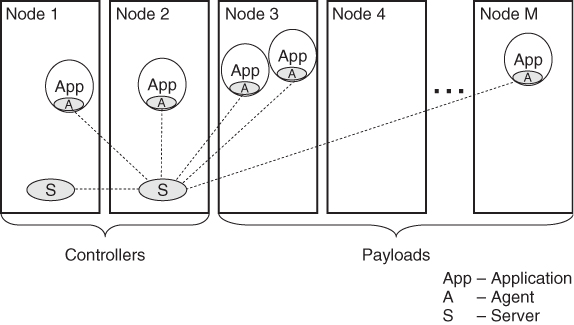

Two-Tier Architecture

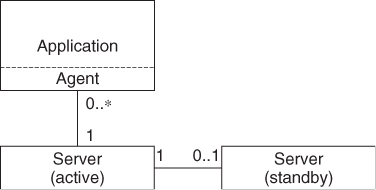

Not surprisingly the two-tier architecture decomposes the service functionality into two parts: the server and the agent shown in Figure 13.7.

Figure 13.7 The two-tier architecture.

Servers

The server provides the central intelligence for a particular service combining the roles of the director and the node directors of the three-tier architecture. The server communicates directly with service agents. Servers are implemented as daemons. Servers also use MBCSv discussed in Section 13.3.4.2 to replicate the service state between the active and the standby server instances running on the system controller nodes.

Agents

As in the case of the three-tier architecture, agents expose the SA Forum APIs toward the client applications, making the service functionality available to them in the form of shared linkable libraries. Agents execute in the context of the application processes.

This architecture is simple and suitable for services that are not load-intensive. Using this architecture for load-intensive services would limit their scalability.
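The state replication between the active and the standby server instances mentioned above can be sketched as follows. This is a simplified illustration of message-based checkpointing in general, with hypothetical names; it does not use or reproduce the MBCSv interface.

```python
class ServerInstance:
    """Toy server that checkpoints every accepted update to its peer."""

    def __init__(self, name, role):
        self.name = name
        self.role = role
        self.state = {}
        self.peer = None

    def apply(self, key, value):
        if self.role != "active":
            raise RuntimeError("only the active instance accepts updates")
        self.state[key] = value
        if self.peer is not None:
            # Send the change to the standby as a checkpoint message.
            self.peer.receive_checkpoint(key, value)

    def receive_checkpoint(self, key, value):
        self.state[key] = value


def fail_over(active, standby):
    """Promote the standby after the active instance has failed."""
    active.role = "failed"
    standby.role = "active"
    standby.peer = None
    return standby


active = ServerInstance("controller-1", "active")
standby = ServerInstance("controller-2", "standby")
active.peer = standby
active.apply("subscriptions", 3)
new_active = fail_over(active, standby)
new_active.apply("subscriptions", 4)
```

Because each update was checkpointed before the failure, the promoted instance continues from the replicated state rather than from an empty one.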

One-Tier Architecture

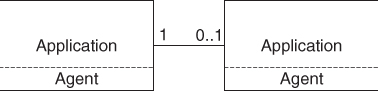

In this architecture a service is implemented as a pure library, that is, as an agent only, as shown in Figure 13.8. It is the least used architecture; only some OpenSAF infrastructure services use it.

Figure 13.8 The one-tier architecture.

13.3.2.2 Deployment View

Node Types

For the easier development of distributed services including SA Forum services themselves, OpenSAF defines two categories of nodes within an OpenSAF cluster:

- System controller node

There are at most two designated nodes within the OpenSAF cluster that host the centralized functions of the various OpenSAF services. Application components may or may not be configured on a system controller node depending on the application's requirements.

- Payload node

All nodes which are not system controller nodes are termed payload nodes. From the perspective of the middleware, these nodes contain only functions of the various OpenSAF services, which are limited in scope to the node.

Mapping of Processes to Node Types

The processes and components described in the process view in Section 13.3.2.1 are mapped to node types of the deployment view described in section ‘Node Types’ as follows:

Directors as well as servers run on the system controller nodes and they are configured with the 2N redundancy model. An error in a director or a server will cause the failure of the hosting system controller node and the fail-over of all of the services of the node as a whole. There is one director component per OpenSAF service per system controller node.

Node directors run on all cluster nodes (i.e., system controller and payload nodes), and are configured with the no-redundancy redundancy model. On each node there is one node director component for each OpenSAF service implemented according to the three-tier architecture.

Agents are available on all cluster nodes and since they are pure libraries their redundancy model or the exact location where they execute are fully determined by the actual components using them.
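The mapping rules above can be summarized in a few lines of code. This is a sketch only: the function name and the generated process names are hypothetical and do not correspond to the actual OpenSAF daemon names. Agents are omitted because, as libraries, they run wherever the applications using them run.

```python
def processes_on(node_type, three_tier_services, two_tier_services):
    """Return the middleware daemons placed on a node of the given type."""
    daemons = []
    if node_type == "controller":
        # Directors and servers run only on the system controller nodes.
        daemons += [f"{s}-director" for s in three_tier_services]
        daemons += [f"{s}-server" for s in two_tier_services]
    # Node directors run on every node, controllers and payloads alike.
    daemons += [f"{s}-node-director" for s in three_tier_services]
    return daemons


controller = processes_on("controller", ["imm"], ["ntf"])
payload = processes_on("payload", ["imm"], ["ntf"])
```

With one three-tier and one two-tier service, a controller node hosts three daemons in this model while a payload node hosts only the node director.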

Figure 13.9 illustrates the process mapping of a service implemented according to the three-tier architecture (e.g., IMM) to the nodes of a cluster. It also indicates some application processes using this three-tier service. In this particular case the active director runs on Node 2.

Figure 13.9 Mapping of the process view to the deployment view in case of a three-tier service.

Figure 13.10 shows the process mapping of a two-tier service such as NTF to the cluster nodes, as well as some application processes using the service. As in the previous example, the active server runs on Node 2.

Figure 13.10 Mapping of the process view to the deployment view in case of a two-tier service.

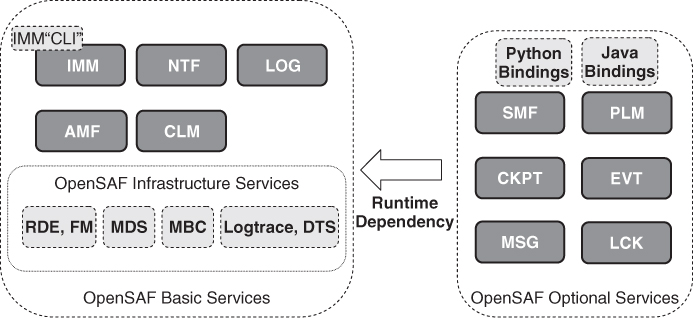

13.3.2.3 Logical View

The logical view of the architecture distinguishes between two groups of services: The OpenSAF services implementing the SA Forum specifications, that is, the SA Forum compliant services and the OpenSAF supporting services providing the infrastructure.

Only the first group of services is intended to be used by applications. The second group supports the implementation of the OpenSAF middleware itself, and is not intended for use by applications. These are nonstandard services, for which OpenSAF provides no backward compatibility guarantees.

A subset of the SA Forum compliant services and the OpenSAF infrastructure services together compose the core of the OpenSAF implementation and are referred to as the OpenSAF Basic Services in Figure 13.11. They need to be deployed in any system using OpenSAF. The remaining SA Forum compliant services are optional and can be deployed as needed on the target system. They have a runtime dependency on the Basic Services.

Figure 13.11 The OpenSAF services.

This modular architecture allows the growth of the OpenSAF project without compromising the minimum system size for those who need only the basic services.

In the following section we take a closer look at each of the existing OpenSAF services and highlight some implementation specifics without going into details on the functional aspects for which we refer the reader to the corresponding chapters of the book.

13.3.3 SA Forum Compliant Services

13.3.3.1 Availability Management Framework

The OpenSAF AMF service is the implementation of the SA Forum AMF discussed in detail in Chapter 6. It is implemented according to the three-tier architecture described in section ‘Three-Tier Architecture’.

Besides the director, node directors, and agents, it contains an additional element, an internal watchdog, which guarantees the high availability of the AMF node director (AmfND) by monitoring its health at regular intervals using the AMF-invoked healthcheck mechanism.

The AMF Director (AmfD) is responsible for the deployment of the AMF configuration specified in IMM at the system level. It receives the configuration and through the node directors it deploys the logical entities of the model in the system. That is, it coordinates the implementation of the AMF nodes, applications, their service groups with their service units and constituent components, as well as the corresponding component service instances and service instances.

The main task of the AmfND is to deploy and maintain those logical entities of the AMF information model that belong to a single node.

The AmfND is in control of the life-cycle of the components of the node it manages. It is capable of disengaging, restarting, and destroying any component within its scope. This may occur in accordance with the instructions of the AmfD, as a result of an administrative action, or automatically based on the applicable configured policies.

The AmfND performs fault detection, isolation, and repairs of components on the node and coordinates these actions with the AmfD.

The AmfND interacts with the component through the AMF Agent (AmfA) as necessary; it maintains the states of the components and the encapsulating service units. It keeps the AmfD informed about the current status and any changes.

In the OpenSAF 4.1 release the AMF API and the functional scope are compliant with the SAI-AIS-AMF-B.01.01 version of the specification, while the AMF information model is consistent with the SAI-AIS-AMF-B.04.01 specification.

13.3.3.2 Cluster Membership Service

The OpenSAF CLM service implements the SA Forum CLM presented in Section 5.4. It is implemented using the two-tier architecture discussed in section ‘Two-Tier Architecture’. It also includes an additional element, the CLM Node Agent, which runs on each node and allows the node to join the cluster only if it is a configured node.

The CLM Server (ClmS) maintains the database of all the nodes in the cluster as well as of all application processes that have registered for cluster tracking. When the CLM is deployed together with the PLM, the ClmS subscribes with PLM for the readiness track callbacks to track the status of the underlying execution environments for all cluster nodes.

The ClmS considers a CLM node as a node qualifying for CLM when all of the following conditions are met:

- the CLM node is configured in IMM;

- the CLM node is not locked administratively;

- there is connectivity between the ClmS and CLM node over the Message Distribution Service (MDS) (see Section 13.3.4.1); and

- the readiness state of the execution environment hosting the CLM node is not out-of-service, provided the ClmS is a subscriber to the PLM track API.
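The four conditions above combine as a simple conjunction, with the last one applying only when PLM tracking is in use. The following is a minimal sketch of that logic; the `ClmNodeView` type, its field names, and the `qualifies_for_clm` function are hypothetical names chosen for illustration and are not part of OpenSAF or the CLM API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClmNodeView:
    """Hypothetical snapshot of what the ClmS knows about one node."""
    configured_in_imm: bool
    admin_locked: bool
    mds_reachable: bool
    # None models a ClmS that is not subscribed to the PLM track API.
    ee_out_of_service: Optional[bool] = None


def qualifies_for_clm(node: ClmNodeView) -> bool:
    """Return True only when every condition listed in the text holds."""
    if not node.configured_in_imm:
        return False
    if node.admin_locked:
        return False
    if not node.mds_reachable:
        return False
    # The readiness condition is checked only when PLM tracking is in use.
    if node.ee_out_of_service is not None and node.ee_out_of_service:
        return False
    return True
```

With this sketch, a configured, unlocked, reachable node qualifies when no PLM information is available, and stops qualifying as soon as PLM reports its execution environment as out-of-service.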

The OpenSAF 4.1 CLM implementation supports two versions of the API specification: SAI-AIS-CLM-B.04.01 and SAI-AIS-CLM-B.01.01.

13.3.3.3 Information Model Management Service

The OpenSAF IMM service implements the SA Forum IMM, which we presented in Section 8.4. The service is implemented according to the three-tier architecture of section ‘Three-Tier Architecture’.

IMM is implemented as an in-memory database, which replicates its data on all nodes. This replication pattern is suitable for configuration data, as configuration data are frequently read but seldom updated. Thus reading the data is fast and does not require inter-node communication, while writing the data is more costly since the change is propagated to all nodes in the cluster.

Although it is an in-memory database, IMM can use SQLite as its persistent backend, in which case IMM also writes any committed transaction to the persistent storage. In this case none of the committed transactions is lost even in case of a system restart.

Each IMM Node Director (ImmND) process contains the IMM repository (with the complete SA Forum information model), and all ImmNDs that are in sync are identical. The ImmNDs replicate their data using the reliable multicast service provided by the IMM Director (ImmD). The ImmD also elects one of the ImmNDs as a coordinator for driving any sync process required if any of the ImmNDs goes out of sync, for example, because its node has been restarted or because the node is just joining the cluster.

While such synchronization is in progress, the IMM repository (i.e., the SA Forum information model) is not writable; it still serves read requests, however.
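The trade-off described above (local reads, cluster-wide writes, and a read-only window during synchronization) can be illustrated with a toy model. This is a sketch under stated assumptions, not the OpenSAF implementation: the class `ToyReplicatedStore` and all of its methods are hypothetical, and a plain loop stands in for the reliable multicast provided by the ImmD.

```python
class ToyReplicatedStore:
    """Toy model of a fully replicated in-memory store (illustrative only)."""

    def __init__(self, node_names):
        # Every node holds a complete copy of the data.
        self.replicas = {name: {} for name in node_names}
        self.sync_in_progress = False

    def read(self, node, key):
        # Served from the local replica: no inter-node communication.
        return self.replicas[node].get(key)

    def write(self, key, value):
        # Rejected while a sync is running, mirroring the read-only window.
        if self.sync_in_progress:
            raise RuntimeError("repository is read-only during sync")
        # A write reaches every replica, which is why it costs more than a read.
        for replica in self.replicas.values():
            replica[key] = value

    def sync_node(self, node, source):
        """Bring a restarted or newly joined node in line with `source`."""
        self.sync_in_progress = True
        try:
            self.replicas[node] = dict(self.replicas[source])
        finally:
            self.sync_in_progress = False
```

In this model the cost of a write grows with the number of replicas while the cost of a read stays constant, which is the property that makes the pattern attractive for configuration data.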

In the OpenSAF 4.1 release the IMM API and functional scope are compliant with the SAI-AIS-IMM-A.02.01 version of the specification.

13.3.3.4 Notification Service

The OpenSAF NTF service implements the SA Forum NTF described in Section 8.3 according to the two-tier architecture presented in section ‘Two-Tier Architecture’.

The NTF Server (NtfS) maintains the list of subscribers in the cluster together with their subscriptions. Based on this information the NtfS compares each incoming notification with the subscriptions and, if there is a match, it forwards the notification to the corresponding subscribers. The NtfS also caches the last 1000 alarms published in the cluster. These can be read by using the NTF reader API.
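The matching and caching behavior just described can be sketched as follows. The sketch is illustrative only: `ToyNotificationServer`, its methods, and the dictionary layout of a notification are hypothetical and do not reflect the NTF API; only the cache size of 1000 alarms is taken from the text. A bounded double-ended queue is used here so that the oldest alarm is dropped automatically once the limit is reached.

```python
from collections import deque

ALARM_CACHE_SIZE = 1000  # figure taken from the text


class ToyNotificationServer:
    """Toy model of subscription matching plus a bounded alarm cache."""

    def __init__(self):
        # subscriber id -> set of notification types, or None for "all types"
        self.subscriptions = {}
        # Once full, appending a new alarm silently drops the oldest one.
        self.alarm_cache = deque(maxlen=ALARM_CACHE_SIZE)
        # (subscriber, notification) pairs, recorded instead of real delivery
        self.delivered = []

    def subscribe(self, subscriber, types=None):
        self.subscriptions[subscriber] = None if types is None else set(types)

    def publish(self, notification):
        if notification["type"] == "alarm":
            self.alarm_cache.append(notification)
        # Forward the notification to every subscriber whose filter matches.
        for subscriber, types in self.subscriptions.items():
            if types is None or notification["type"] in types:
                self.delivered.append((subscriber, notification))

    def read_cached_alarms(self):
        """Stand-in for a reader interface over the cached alarms."""
        return list(self.alarm_cache)
```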

The OpenSAF 4.1 NTF service API and functional scope are compliant with the SAI-AIS-NTF-A.01.01 version of the specification.

13.3.3.5 Log Service

The OpenSAF LOG service implements the SA Forum LOG presented in Section 8.2 according to the two-tier architecture (see section ‘Two-Tier Architecture’).

It is intended for logging high-level information of cluster-wide significance and is suited to troubleshooting issues related, for example, to misconfigurations, network disconnects, or unavailable resources.

The LOG Server (LogS) maintains a database with all the log streams currently open in the cluster. Using this database it writes each incoming log record to the file corresponding to the log stream indicated as the destination of the log record.

The LogS also implements the file management required by the specification: It creates a configuration file for each new log stream. It also handles the file rotation for the log streams according to their configuration.

In the OpenSAF 4.1 release the LOG implementation is fully compliant with the SAI-AIS-LOG-A.02.01 specification.

13.3.3.6 Software Management Framework

The SA Forum SMF presented in Chapter 9 is implemented by the OpenSAF SMF service according to the three-tier architecture (see section ‘Three-Tier Architecture’).

The SMF Director (SmfD) is responsible for parsing the upgrade campaign specification XML file and controlling its execution. The servers and directors of the OpenSAF service implementations discussed so far use the MBCSv (see Section 13.3.4.2) to maintain a ‘hot’ standby state in their redundant peer. The SmfD instead stores its runtime state in IMM to achieve a similar ‘hot’ standby behavior.

An SMF Node Director (SmfND) performs all the upgrade actions that need to be executed locally on the nodes it is responsible for, such as the installation of software bundles. The SmfND acts only by direct order from the SmfD.

The SMF service implementation of the OpenSAF 4.1 release is compliant with the SAI-AIS-SMF-A.01.02 specification with respect to the API and functionality.

However, it extends the scope of the specification, which applies only to AMF entities, to some PLM entities. In particular, it introduces an additional set of upgrade steps to cover the scenarios of low-level software (OS and middleware itself) upgrades and to support systems with RAM disk based file systems.

13.3.3.7 Checkpoint Service

The OpenSAF CKPT service is the implementation of the SA Forum CKPT specification discussed in Section 7.4. It uses the three-tier architecture pattern described in section ‘Three-Tier Architecture’.

The CKPT provides a facility for user processes to record their checkpoint data incrementally, for example, to protect the application against failures.

The OpenSAF 4.1 CKPT implementation is compliant with the SAI-AIS-CKPT-B.02.02 specification with respect to its API and functionality. However, it provides additional non-SAF APIs to facilitate the implementation of a hot-standby component. Namely, it allows the tracking of changes in checkpoints and receiving callbacks whenever the content is updated.

The CKPT Director (CkptD) maintains a centralized repository of some control information for all the checkpoints created in the cluster. It also maintains the location information of the active replicas for all checkpoints opened in the cluster. In case of a non-collocated checkpoint, the CkptD designates a particular node to manage an active replica for that checkpoint and also decides on the number of replicas to be created. If a non-collocated checkpoint is created by an opener residing on a controller node then there will be two replicas, one on each system controller node. If the creator-opener resides on a payload node an additional replica is created on that payload node.
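The placement rule for non-collocated checkpoints stated above can be written down in a few lines. This is only a sketch of the rule as the text describes it; the function name `replica_nodes` and the node names used in the usage note are hypothetical, and the real CkptD takes further factors into account that are not modeled here.

```python
def replica_nodes(opener_node, controller_nodes):
    """Nodes holding a replica of a non-collocated checkpoint.

    One replica is placed on each system controller node. If the
    creator-opener runs on a payload node, that node gets one more.
    """
    nodes = list(controller_nodes)
    if opener_node not in nodes:
        nodes.append(opener_node)
    return nodes
```

For example, with controllers named `SC-1` and `SC-2`, an opener on `SC-1` yields two replicas, while an opener on a payload node `PL-3` yields three.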

To reduce the amount of data copied between an application process and the CKPT Node Director, the OpenSAF CKPT uses shared memory for storing checkpoint replicas. This choice also significantly improves the read performance.

13.3.3.8 Message Service

The SA Forum MSG presented in Section 7.3 is implemented by the OpenSAF MSG service according to the three-tier architecture pattern discussed in section ‘Three-Tier Architecture’.

The MSG provides a multipoint-to-multipoint communication mechanism for processes residing on the same or on different nodes. Application processes using this service send messages to queues and not to the receiver application processes themselves. This means that if the receiver process dies, the message stays in the queue and can be retrieved by the restarted process or by another one on the same or a different node.
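The decoupling of senders from receivers can be shown with a very small model. This sketch is illustrative only: `ToyMessageQueue` is a hypothetical in-process class, whereas the actual service keeps the queue outside the application process so that it survives the death of that process.

```python
from collections import deque


class ToyMessageQueue:
    """A queue addressed by senders instead of a receiver process."""

    def __init__(self):
        self._messages = deque()

    def send(self, message):
        # The sender only needs the queue, not the identity of the receiver.
        self._messages.append(message)

    def receive(self):
        # Any process that opens the queue may retrieve the next message.
        return self._messages.popleft() if self._messages else None


queue = ToyMessageQueue()
queue.send("request-1")
# Assume the original receiver dies here without having read anything.
# The message is held by the queue, so a restarted or different process
# can still retrieve it.
restarted_receiver_got = queue.receive()
```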

The MSG Director maintains a database for the message queues and queue groups existing in the system. It contains the location, state, and some other information for each of them.

A message queue itself is implemented by an MSG Node Director. It uses Linux message queues to preserve the messages irrespective of the application process life-cycle.

In the OpenSAF 4.1 release the MSG API and functional scope are compliant with the SAI-AIS-MSG-B.03.01 specification.

13.3.3.9 Event Service

The SA Forum EVT discussed in Section 7.2 is implemented according to the two-tier architecture by the OpenSAF EVT service.

The EVT Server (EvtS) is a process that distributes the published events based on subscriptions and associated filtering criteria. It maintains the publisher and subscriber information. If an event is published with a nonzero retention time, the EvtS also retains the event for this retention time period. From this repository the EvtS will deliver to new subscribers any event that matches their subscription.
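The retention behavior can be sketched as follows. This is a simplified model under stated assumptions: `ToyEventServer` and its methods are hypothetical, the current time is passed in explicitly to keep the example deterministic, and delivery to subscribers that already exist at publish time is left out so that only the retention path is shown.

```python
class ToyEventServer:
    """Toy model of event retention for late subscribers."""

    def __init__(self):
        self.retained = []  # list of (expiry_time, event) pairs

    def publish(self, event, retention_time, now):
        # Only events published with a nonzero retention time are kept.
        if retention_time > 0:
            self.retained.append((now + retention_time, event))

    def subscribe(self, matches, now):
        """Return the retained, unexpired events accepted by `matches`."""
        # Drop events whose retention period has elapsed.
        self.retained = [(t, e) for t, e in self.retained if t > now]
        return [e for _, e in self.retained if matches(e)]
```

A subscriber arriving within the retention period receives the matching retained events; one arriving after the period has elapsed receives none of them.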

The OpenSAF 4.1 EVT implementation is compliant with the SAI-AIS-EVT-B.03.01 specification.

13.3.3.10 Lock Service

The OpenSAF LCK service implements the SA Forum LCK using the three-tier architecture presented in section ‘Three-Tier Architecture’. The LCK is a distributed lock service, which allows application processes running on a multitude of nodes to coordinate their access to some shared resources.

The LCK Director (LckD) maintains the details about the different resources opened by each node represented by an LCK Node Director (LckND), and about the different nodes from which a particular resource has been opened. For each particular resource the LckD elects a master LckND, which is responsible for controlling the access to this resource.

In OpenSAF 4.1 the LCK service API and functional implementation are compliant with the SAI-AIS-LCK-B.01.01 specification.

13.3.3.11 Platform Management Service

The OpenSAF PLM service implements the SA Forum PLM discussed in Section 5.3. The service is implemented according to the two-tier architecture (see section ‘Two-Tier Architecture’). The architecture includes an extra element called the PLM Coordinator, which executes on each node.

The PLM provides a logical view of the hardware and low-level software of the system.

The PLM Server (PlmS) is the object implementer for the objects of the PLM information model and it is responsible for mapping the hardware elements of the model to the hardware entities reported by the HPI implementation.

The PlmS also maintains the PLM state machines for the PLM entities, manages the readiness tracking and the associated entity groups. It also maps HPI events to PLM notifications.

The PLM Coordinator coordinates the administrative operations and in particular the validation and start phases of their execution. It performs its task in an OS-agnostic way.

The OpenSAF 4.1 PLM service implementation is compliant with the SAI-AIS-PLM-A.01.02 specification with respect to the provided functionality and APIs.

13.3.4 OpenSAF Infrastructure Services

These services are used within the OpenSAF middleware as support services; they are not intended for use by applications. Of course, since OpenSAF is an open source implementation, one can find and access the APIs these services provide; however, they are not SA Forum compliant. Moreover, OpenSAF does not provide any backward compatibility guarantees with respect to these services.

13.3.4.1 Message Distribution Service

The OpenSAF MDS is a non-standard service providing the inter-process communication infrastructure within OpenSAF. By default it relies on the TIPC [107] protocol as the underlying transport mechanism. It is implemented using the one-tier architecture discussed in section ‘One-Tier Architecture’.

By changing its default configuration MDS can also use TCP [108] instead of TIPC. This is convenient:

- for OSs which do not have a TIPC port; or

- when layer three connectivity is required between the nodes of the cluster, for example, because the cluster is geographically distributed.

MDS provides both synchronous and asynchronous APIs and supports data marshaling. An MDS client can subscribe to track the state changes of other MDS clients. The MDS then informs the subscriber about the arrival, exit, and state change of other clients.

13.3.4.2 Message Based Checkpoint Service

The OpenSAF Message Based Checkpointing Service (MBCSv) is a non-standard service that provides a lightweight checkpointing infrastructure within OpenSAF.

MBCSv also uses the one-tier architecture presented in section ‘One-Tier Architecture’.

MBCSv provides a facility that processes can use to exchange checkpoint data between the active and the standby entities to protect their service against failures. The checkpoint data helps a standby entity that becomes active to resume the execution from the state recorded at the moment the active entity failed.

For its communication needs MBCSv relies on the MDS discussed in Section 13.3.4.1. Among its clients MBCSv dynamically discovers the peer entities and establishes a session between one active and possibly multiple standby entities. Once the peers are established MBCSv also drives the synchronization of the checkpoint data maintained at the active and standby entities.

13.3.4.3 Logtrace

Logtrace is a simple OpenSAF-internal infrastructure service offering logging and tracing APIs. It maps logging to the syslog service provided by the OS, while tracing, which is disabled by default, writes to a file.

It is implemented using the one-tier architecture discussed in section ‘One-Tier Architecture’.

13.3.4.4 Role Determination Engine

The Role Determination Engine (RDE) service determines the HA role for the controller node on which it runs based on platform-specific logic. It informs AMF about the initial role of the controller node and also checks the controller state in case of a switchover. In its decision-making process it relies on TIPC.

13.3.5 Managing OpenSAF

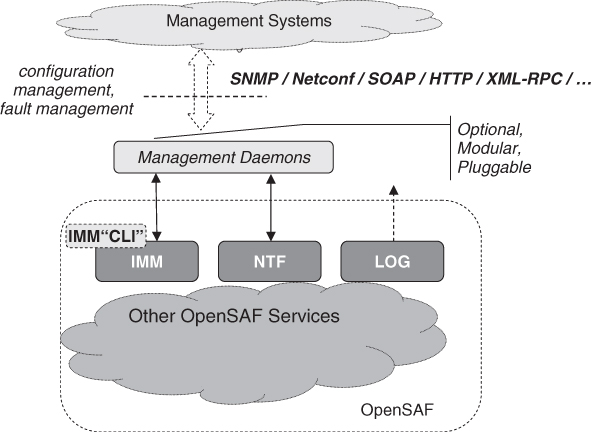

The OpenSAF management architecture is aligned with SA Forum principles. That is, the configuration of OpenSAF is managed via the IMM service; for fault management the middleware services use the NTF; and the SMF is used to orchestrate in-service upgrades of the middleware itself as well as its applications. Note that even the upgrades and SMF itself are controlled via IMM since it manages the SMF information model.

The management architecture is illustrated in Figure 13.12.

Figure 13.12 The OpenSAF management architecture.

IMM and NTF each expose their standard C APIs. The OpenSAF project has made the deliberate architectural choice not to limit itself to a single management and/or transport protocol such as Netconf, SNMP, CORBA (Common Object Request Broker Architecture), HTTP (Hypertext Transfer Protocol), SOAP, and so on. This enables users of OpenSAF to use management access agents best suited to their needs and preferences.

However, to improve its usability (e.g., for evaluation and testing), OpenSAF offers a simple management client in the form of a few Linux shell commands that allow the manipulation of the IMM content in a user-friendly way, as well as the generation of and subscription to notifications. It also includes a few high-level shell commands for typical AMF and SMF use cases.

13.3.5.1 IMM Shell Commands

These are the commands of the simple Object Management client:

- immcfg: It creates, modifies, or deletes an IMM object. It can also load IMM classes and/or objects from a file.

- immadm: It performs an administrative operation on an IMM object.

- immlist: It prints the attributes of IMM objects.

- immfind: It finds one or more IMM objects satisfying the search criteria.

- immdump: It dumps the IMM content to a file.

13.3.5.2 NTF Shell Commands

These two commands are implemented by the simple NTF client:

- ntfsubscribe: This command subscribes for all incoming notifications or notifications of a certain type.

- ntfsend: This command does not qualify as a management command; rather, it can be used by applications or, more likely, by test programs and scripts to send notifications.

13.3.5.3 AMF Shell Commands

The AMF shell commands are implemented based on the IMM shell commands. While each of the operations that can be performed with the AMF shell commands can also be performed directly with IMM shell commands, these commands were defined with syntax and semantics suited to the domain of AMF. Thus, they simplify the management operations on the AMF information model.

The commands are:

- amf-adm: It executes different administrative operations on objects of the AMF information model.

- amf-find: It searches for objects in the AMF information model.

- amf-state: It displays different state information of objects of the AMF information model.

13.3.5.4 SMF Shell Commands

Similarly to the AMF commands described in the previous section, the SMF shell commands are also implemented based on the IMM shell commands. The commands are:

- smf-adm: It executes different administrative operations on an upgrade campaign object.

- smf-find: It searches for objects in the SMF information model.

- smf-state: It displays different state information of objects of the SMF information model.

13.3.6 Deploying OpenSAF

13.3.6.1 Real or Virtualized Target System

In this section we go through the steps involved with the initial deployment of OpenSAF on a target system. As target system we consider a set of computers (nodes) interconnected at layer-two such as by a switched Ethernet subnet.

The OpenSAF tarball can be fetched from the OpenSAF project web page [91]. Alternatively, it can be cloned from the Mercurial repository.

To bootstrap the build system execute:

$ ./bootstrap.sh

Note: OpenSAF has package dependencies, therefore it is recommended to check the README file in the OpenSAF source tree to find out about them.

$ ./configure

$ make rpm

To simplify the installation, OpenSAF provides the ‘opensaf-controller’ and the ‘opensaf-payload’ meta-packages, whose dependencies on other packages have been set up so that it is clear which packages need to be installed on the controller nodes and which on the payload nodes. This way, if OpenSAF is installed from a supported yum server, yum resolves the dependencies.

To install OpenSAF on the controller nodes:

$ yum install opensaf-controller

To install OpenSAF on the payload nodes:

$ yum install opensaf-payload

OpenSAF provides the support needed to generate the initial AMF information model for OpenSAF itself for the specific target. This includes:

Adjusting the model to the actual size of the cluster

$ immxml-clustersize -s 2 -p 6

The example above prepares a configuration for an eight-node cluster consisting of two system controller nodes and six payload nodes and stores it in the nodes.cfg file.

From this file the

$ immxml-configure

command generates the actual OpenSAF configuration, which is then copied to the /etc/opensaf/imm.xml file.

These commands also make sure that on each node of the cluster the /etc/opensaf/node_name matches the value in the nodes.cfg file in the row corresponding to that node.

OpenSAF is started after rebooting the node, or by executing the following command as root:

# /etc/init.d/opensafd start

That's it! With these seven easy steps OpenSAF is up and running.

13.3.6.2 User-Mode Linux Environment

OpenSAF can run in a very convenient User-Mode Linux (UML) environment included in the distribution. This environment is primarily used by the OpenSAF developers themselves, but users can use it to get some quick hands-on experience with OpenSAF.

The first three steps of getting OpenSAF, bootstrapping, and configuring it are the same as for a real target system presented in Section 13.3.6.1. So here we start with:

$ make

$ cd tools/cluster_sim_uml

$ ./build_uml

$ ./build_uml generate_immxml 4

$ ./opensaf start 4

Now we have a cluster consisting of four nodes running OpenSAF.

To stop the cluster:

$ ./opensaf stop

13.4 Conclusion

In this chapter we reviewed the two leading implementations of the SA Forum specifications: OpenHPI and OpenSAF. Both are developed within open source projects with contributions from companies leading in the HA and service availability domain that were also the main contributors to the SA Forum specifications themselves.

With the OpenHPI project there is easy access to an implementation of the SA Forum HPI specification. This fact facilitated the acceptance of the specification by vendors enormously. As a result OpenHPI has become a key factor and plays a significant role in the industry, in the domain of carrier grade and high availability systems. Its position is also promoted by its architectural solution, which makes OpenHPI easy to use for many different hardware architectures. The supporting tools provided within the project improve the usability of the HPI specification at deployment time as well as during the development of hardware management applications.

OpenSAF has had an impressive development and evolution since the project launch. To reach maturity, the major focus has been on streamlining its architecture and aligning it with the SA Forum architecture and the latest releases of the specifications. This makes OpenSAF the open source middleware that is most consistent with the SA Forum AIS.

Besides improving the architecture, significant effort has been made to improve its robustness, scalability, maintainability, and modularity. OpenSAF also includes Python bindings for all its services, making it an attractive solution for quick system integration and prototyping. Together with the existing standard C and Java support, OpenSAF is able to address the needs of a wide range of applications.

All the above, together with the emerging ecosystem of components and supporting tools, ranging from commercial management clients to tools for automatic generation of AMF configurations as well as SMF upgrade campaign specifications, make OpenSAF the number one choice for applications that do not want to compromise service availability and that do not want to be locked into proprietary solutions.

1 This plugin replaces the ‘ipmi’ plugin that used the open source code OpenIPMI to generate the commands.