CHAPTER 1: Transmission

TRANSMISSION GOALS

Transmission is the conveyance of a waveform from one place to another. Transmission quality is judged by how faithfully the arriving signal tracks the original waveform. We capture the original acoustic or electronic waveform, with the intent of eventually reconstituting it into an acoustic waveform for delivery to our ears. The odds are against us. In fact there is absolutely zero probability of success. The best we can hope for is to minimize the distortion of the waveform, i.e., damage control. This is the primary goal of all efforts described in this book. This may sound dispiriting, but it is best to begin with a realistic assessment of the possibilities. Our ultimate goal is one that can be approached, but never reached. There will be large numbers of decisions ahead, and they will hinge primarily on which direction provides the least damage to the waveform. There are precious few avenues that will provide none, and often the decision will be a very fine line.

transmission n. transmitting or being transmitted; broadcast program

transmit v.t. 1. pass on, hand on, transfer, communicate. 2. allow to pass through, be a medium for, serve to communicate (heat, light, sound, electricity, emotion, signal, news)Concise Oxford Dictionary

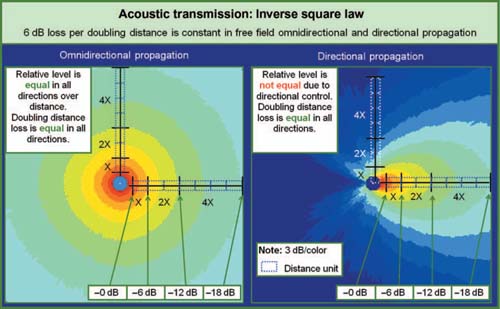

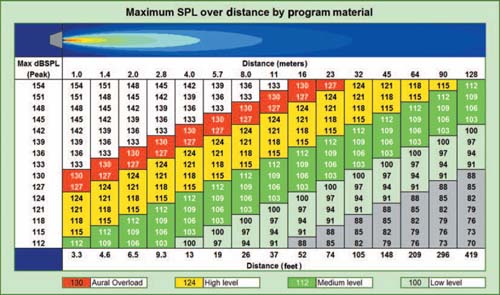

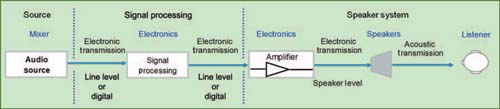

Our main study of the transmission path will look at three modes of transmission: line level electronic, speaker level electronic, and acoustic. If any link in the transmission chain fails, our mission fails. By far the most vulnerable link in the chain is the final acoustical journey from the speaker to the listener. This path is fraught with powerful adversaries in the form of copies of our original signal (namely reflections and arrivals from the other speakers in our system), which will distort our waveform unless they are exact copies and exactly in time. We will begin with a discussion of the properties of transmission that are common to all parts of the signal path (Fig. 1.1).

AUDIO TRANSMISSION DEFINED

An audio signal undergoes constant change, with the motion of molecules and electrons transferring energy away from a vibrating source. When the audio signal stops changing, it ceases to exist as audio. As audio signals propagate outwards, the molecules and electrons are displaced forward and back but never actually go anywhere, always returning to their

FIGURE 1.1

Transmission flow from the signal source to the listener.

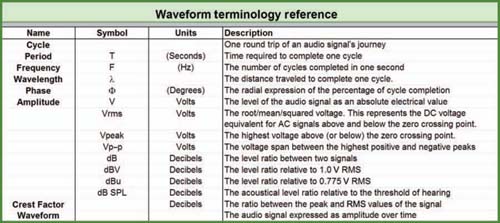

point of origin. The parameter that describes the extent of the change is the amplitude, also referred to as magnitude. A single round trip from origin and back is a cycle. The round trip takes time. That length of time is the period and is given in seconds, or for practical reasons in milliseconds (ms). The reciprocal of the period is frequency, the number of cycles completed per second, given in hertz (Hz). The round trip is continuous with no designated beginning or end. The trip can begin anywhere on the cycle and is completed upon our return to the same position. The radial nature of the round trip requires us to find a means of expressing our location around the circle. This parameter is termed the phase of the signal. The values are expressed in degrees, ranging from 0° (point of origin) to 360° (a complete round trip). The half-cycle point in the phase journey, 180°, will be of particular interest to us as we move forward.

All transmission requires a medium, i.e., the entity through which the audio signal passes from point to point, made of molecules or electrons. In our case, the primary media are wire (electronic) and air (acoustic), but there are interim media as well such as magnetic and mechanical. The process of transferring the audio energy between media is known as transduction. The physical distance required to complete a cycle in a particular medium is the wavelength and is expressed in some form of length, typically meters or feet. The size of the wavelength for a given frequency is proportional to the transmission speed of our medium.

The physical nature of the waveform's amplitude component is medium-dependent. In our acoustical case, the medium is air and the vibrations are expressed as a change in pressure. The half of the cycle with higher than the ambient pressure is termed pressurization, while the low-pressure side is termed rarefaction. A loudspeaker's forward motion into the air creates pressurization and its rearward movement creates rarefaction.

The movements of speaker cones do not push air across the room in the manner of a fan. If a room is hot, it is unlikely that loud music will cool things down. Instead air is moved forward and then it is pulled back to its original position. The transmission passes through the medium, which is an important distinction. Multiple transmissions can pass through the medium simultaneously even from different directions.

Electrical pressure change is expressed as positive and negative voltage. This movement is also termed alternating current (AC) since it fluctuates above and below the ambient voltage. A voltage that maintains a constant value over time is termed direct current (DC).

Our design and optimization strategies require a thorough understanding of the relationships between frequency, period, and wavelength.

Time and frequency

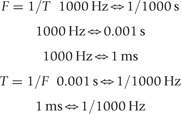

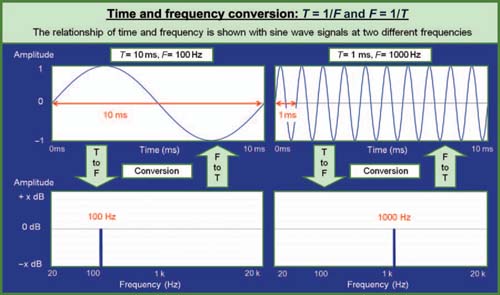

Let's start with a simple tone, a sine wave, and the relationship of frequency (F) and period (T):

T = 1/F and F = 1/T

where T is the time period of a single cycle in seconds and F is the number of cycles per second (Hz).

To illustrate this point, we will use a convenient frequency and delay for clarity: 1000 Hz (or 1 kHz) and 1/1000th of a second (or 1 ms).

If we know the frequency, we can solve for time. If we know time, we can solve for frequency. Therefore

For most of this text, we abbreviate the time period to the term “time” to connote the cycle duration of a particular frequency (Fig. 1.2).

Frequency is the best-known parameter since it is closely related to the musical term “pitch.” Most audio engineers relate first in musical terms since few of us got into this business because of a lifelong fascination with acoustical physics. We must go beyond frequency/pitch, however, since our job is to “tune” the sound system, not tune the musical instruments. Optimization strategies require an ever-present three-way link between frequency, period, and wavelength. The frequency 1 kHz exists only with its reciprocal sister 1 ms. This is not medium-dependent, nor temperature-dependent, nor waiting upon a standards committee ruling. This is one of audio's few undisputed absolutes. If the audio is traveling in a wire, those two parameters will be largely sufficient for our discussions. Once in the air, we will need to add the third dimension: wavelength. A 1 kHz signal only exists in air as a wavelength about as long as the distance from our elbow to our fist. All behaviors at 1 kHz are governed by the physical reality of the signal's time period and its wavelength. The first rule of optimization is to never think of an acoustical signal without consideration of all three parameters.

Wavelength

Wavelength is proportional to the medium's unique transmission speed. A given frequency will have a different wavelength in its electronic form (over 500,000 × larger) than its

FIGURE 1.2

Amplitude vs. time converted to amplitude vs. frequency.

TABLE 1.1 Speed of sound reference

| Plain language | Imperial/American measurement | Metric measurement |

| Speed of sound in air at 0° | 1052 ft/s | 331.4 m/s |

| +Adjustment for ambient air temperature | +(1.1 × T) Temperature T in °F | +(0.607 × T) Temperature T in °C |

| = Speed of sound at ambient air temperature | = c feet/second | = c meters/second |

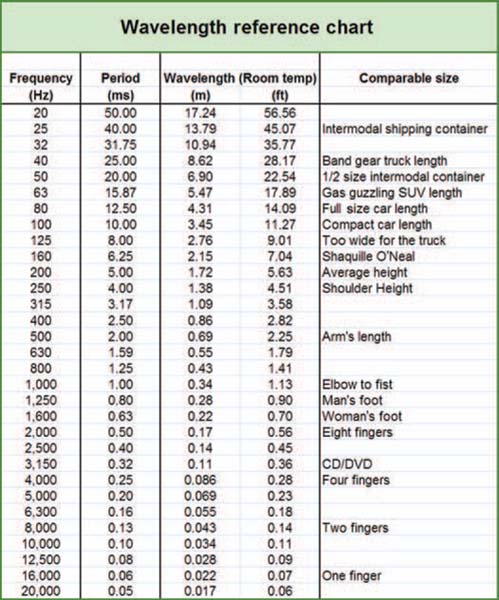

FIGURE 1.3

Chart of frequency, period, and wavelength (at room temperature) for standard one-third octave frequencies.

acoustic version. If the medium is changed, its transmission speed and all the wavelengths change with it.

The wavelength formula is

L = c/F

where L is the wavelength in meters, c is the transmission speed of the medium, and F is the frequency (Hz).

Transmission speed through air is among the slowest. Water is a far superior medium in terms of speed and high-frequency (HF) response; however, the hazards of electrocution and drowning make this an unpopular sound reinforcement medium (synchronized swimming aside). We will stick with air.

The formulas for the speed of sound in air are as shown in Table 1.1.

For example, at 22°C:

c = (331.4 + 0.607 × 22)m/s

c = 344.75 m/s

The audible frequency range given in most books is 20 Hz to 20 kHz. Few loudspeakers are able to reproduce the 20 Hz or 20 kHz extremes at a power level sufficient to play a significant role. It is more useful to limit the discussion to those frequencies we are likely to encounter in the wild: 31 Hz (the low B note on a five-string bass) up to 18 kHz. The wavelengths within this band fall into a size range of between the width of a finger and a standard intermodal shipping container. The largest wavelengths are about 600 times larger than the smallest (Figs 1.3–1.5).

Why it is that we should be concerned about wavelength? After all, there are no acoustical analyzers that show this on their display. There are no signal-processing devices that depend on this for adjustment. In practice, there are some applications where we can be blissfully ignorant of wavelength, for example: when we use a single loudspeaker in a reflection-free environment. For all other applications, wavelength is not simply relevant: it is decisive. Wavelength is the critical parameter in acoustic summation. The combination of signals at a given frequency is governed by the number of wavelengths that separate them. There is a lot at stake here, as evidenced by the fact that Chapter 2 is dedicated exclusively to this subject: summation. Combinations of wavelengths can range from maximum addition to maximum cancellation. Since we are planning on doing lots of combining, we had best become conscious of wavelength.

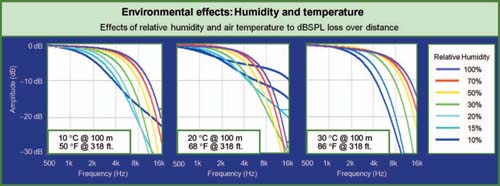

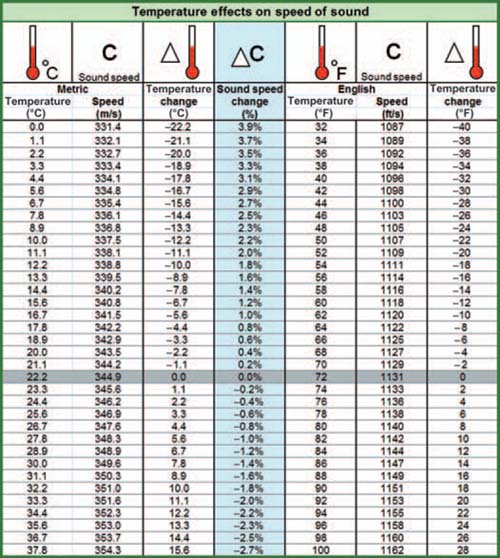

TEMPERATURE EFFECTS

As we saw previously, the speed of sound in air is slightly temperature-dependent. As the ambient temperature rises, sound speed increases and therefore the wavelengths expand. This behavior may slightly affect the response of our systems over the duration of a performance, since the temperature is subject to change even in the most controlled environments. However, although it is often given substantial attention, this is rarely a major factor in the big picture. A poorly designed system is not likely to find itself rescued by weather changes. Nor is it practical to provide ongoing environmental analysis over the widespread areas of an audience to compensate for drafts in the room. For our discussion, unless otherwise specified, we will consider the speed of sound to be fixed approximately at room temperature.

FIGURE 1.4

Handy reference for short wavelengths.

The relationship between temperature and sound speed can be approximated as follows: a 1% change in the speed of sound occurs with either a 5°C or 10°F change in temperature.

Waveform

There is no limit to the complexity of the audio signal. Waves at multiple frequencies may be simultaneously combined to make a new and unique signal that is a mixture of the contributing signals. This composite signal is the waveform, containing an unlimited combination of audio frequencies with variable amplitude and phase relationships. The waveform's complex shape depends upon the components that make it up and varies constantly as they do. A key parameter is how the frequency content of the contributing signals affects the combined waveform (Figs 1.6–1.8). When signals at different frequencies are added, the combined waveform will carry the shape of both components independently. The higher frequency is added to the shape of the lower-frequency waveform. The phase of the individual frequencies will affect the overall shape but the different frequencies maintain their separate identities. These signals can be separated later by a filter (as in your ear) and heard as separate sounds. When two signals of the same frequency are combined, a new and unique signal is created that cannot be filtered apart. In this case, the phase relationship has a decisive effect upon the nature of the combined waveform.

Analog waveform types include electronic, magnetic, mechanical, optical, and acoustical. Digital audio signals are typically electronic, magnetic, or optical, but the mechanics of the digital data transfer are not critical here. It could be punch cards as long as we can move them fast enough to read the data. Each medium tracks the waveform in different forms of energy, suitable for the particulars of that transmission mode, complete with its own vulnerabilities and limitations. Digital audio is most easily understood when viewed as a mathematical rendering of the waveform. For these discussions, this is no different from analog, which in any of its resident energy forms can be quantified mathematically.

FIGURE 1.5

Chart of speed of sound, period, and wavelength at different temperatures.

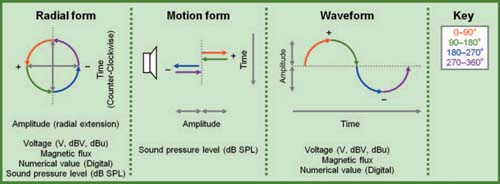

The audio signal can be visualized in three different forms as shown in Fig. 1.9. A single cycle is broken into four quadrants of 90° each. This motion form illustrates the movement of the signal from a point of rest to maximum amplitude in both directions and finally returning to the origin. This is representative of the particle motion in air when energized by a sound source such as a speaker. It also helps illustrate the point that the motion is back and forth rather than going outward from the speaker. A speaker should not be confused with a blower. The maximum displacement is found at the 90° and 270° points in the cycle. As the amplitude

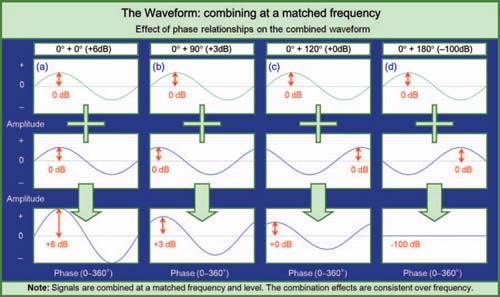

FIGURE 1.6

Reference chart of some of the common terms used to describe and quantify an audio waveform.

FIGURE 1.7

Combination of waveforms of the same frequency at the same level with different phase relationships: (a) 0° relative phase combines to +6 dB amplitude, (b) 90° relative phase combines to +3 dB amplitude, (c) 120° relative phase combines to +0 dB, (d) 180° relative phase cancels.

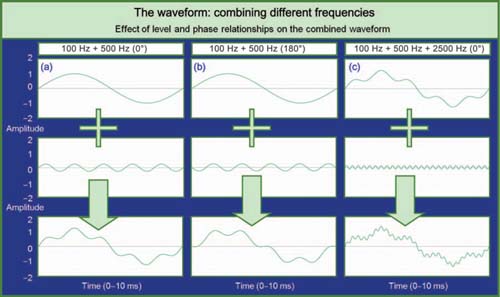

FIGURE 1.8

Combination of waveforms of different frequencies with different levels and phase relationships. (a) Second frequency is 5 × higher and 12 dB down in level from the first. Phase relationship is 0°. Note that both frequencies can be seen in the combined waveform. (b) Same as (a) but with relative phase relationship at 180°. Note that there is no cancellation in the combined waveform. The orientation of the HF trace has moved but the LF orientation is unchanged. (c) Combined waveform of (a) with third frequency added. The third frequency is 25 × the lowest frequency and 18 dB down in level. The phase relationship is matched for all frequencies. Note that all three frequencies can be distinguished in the waveform.

FIGURE 1.9

Three representations of the audio waveform.

increases, the displacement from the equilibrium point becomes larger. As the frequency rises, the time elapsed to complete the cycle decreases.

The radial form represents the signal as spinning in a circle. The waveform origin point corresponds to the starting point phase value, which could be any point on the circle. A cycle is completed when we have returned to the phase value of the point of origin. This representation shows the role that phase will play. The difference in relative positions on this radial chart of any two sound sources will determine how the systems will react when combined.

The sinusoidal waveform representation is the most familiar to audio engineers and can be seen on any oscilloscope. The amplitude value is tracked over time and traces the waveform in the order in which the signal passes through. This is representative of the motion over time of transducers and changing electrical values of voltage over time. Analog-to-digital (A/D) converters capture this waveform and create a mathematical valuation of the amplitude vs. time waveform.

TRANSMISSION QUANTIFIED

Decibels

Perspectives: I have tried to bring logic, reasoning, and physics to my audio problem applications. What I have found is that, when the cause of an event is attributed to “magic,” what this really means is that we do not have all the data necessary to understand the problem, or we do not have an understanding of the forces involved in producing the observed phenomena.

Dave Revel

Transmission amplitudes, also known as levels, are most commonly expressed in decibels (dB), a unit that describes a ratio between two measures. The decibel is a logarithmic scaling system used to describe ratios with a very large range of values. Using the decibel has the added benefit of closely matching our perception of sound levels, which is generally logarithmic. There are various dB scales that apply to transmission. Because decibels are based on ratios, they are always a relative scale. The question is: relative to what? In some cases, we compare to a fixed standard. Because audio is in constant change, it is also useful to have a purely relative scale that compares two unknown signals. An example of the latter type is the ratio of output to input level. This ratio, the gain of the device, can be quantified even though a drive signal such as music is constantly changing. If the same voltage appears at the output and input, the ratio is 1, also known as unity gain, or 0 dB. If the voltage at the output is greater, the gain value exceeds 1, and expressed in dB, is positive. If the input is greater, the gain ratio is less than 1 and expressed in dB is a negative number, signifying a net loss. The actual value at the input or output is unimportant. It is the change in level between them that is reflected by the dB gain value.

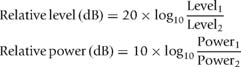

There are two types of log formulas applicable in audio:

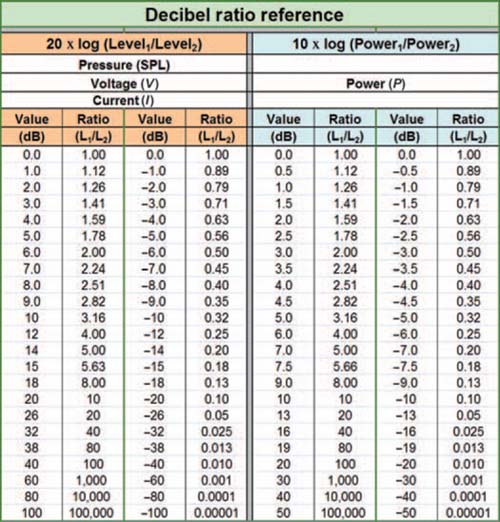

FIGURE 1.10

The ratio of output to input can be converted to dB with this easy reference chart. To compare a given level to a standard one, Level1 is the given level and Level2 is the standard. To derive the gain of a device, Level1 is the output level and Level2 is the input level. Likewise the power gain can be found in the same manner by substituting the power parameters.

Power-related equations use the 10 log version, while pressure- (SPL) and voltage-related equations use the 20 log version. It is important that the proper formula be used since a doubling of voltage is a change of 6 dB while a doubling of power is a change of 3 dB. For the most part, we will be using the 20 log version since acoustic pressure (dB SPL) and voltage are the primary drivers of our decision-making. Figure 1.10 provides a reference chart to relate the ratios of values to their decibel equivalents.

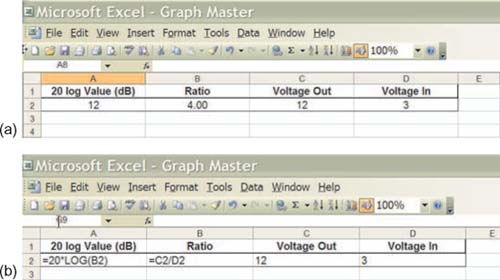

Here is a handy tip for Microsoft Excel users. Figure 1.11 shows the formula format for letting Excel do the log calculations for us.

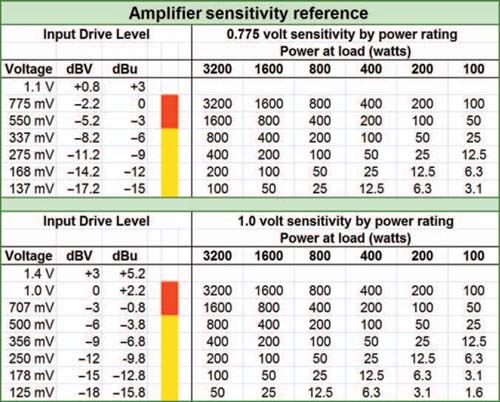

THE ELECTRONIC DECIBEL: dBV AND dBu

Electronic transmission utilizes the decibel scale to characterize the voltage levels. The decibel scale is preferred by operators over the linear scale for its relative ease of expression. Expressed linearly, we would find ourselves referring to the signal in microvolts, millivolts, and volts with various sets of number values and ranges. Such scaling makes it difficult to track a variable signal such as music. If we wanted to double the signal level, we would have to first know the voltage of the original signal and then compute its doubling. With dynamically changing signals such as music, the level at any moment is in flux, making such calculations impractical. The decibel scale provides a relative change value independent of the absolute value. Hence the desire to double the level can be achieved by a change of 6 dB, regardless of the original value. We can also relate the decibel to a fixed standard, which we designate as “0 dB.” Levels are indicated by their relative value above (+dB) or below (−dB) this standard. This would be simplest, of course, if there were a single standard, but tradition in our industry is to pick several. dBV and dBu are the most common currently. These are referenced to different values of 1.0 and 0.775 V (1mW across a 600Ω load) respectively. The difference between these is a fixed amount of 2.21 dB. Note: For ease of use, we will use dBV as the standard in this text. Those who prefer the dBu standard should apply +2.21 dB to the given dBV values.

FIGURE 1.11

Microsoft Excel log formula reference.

The voltage-related dB scales serve the important purpose of guidance toward the optimal operating range of the electronic devices. The upper and lower limits of an electronic device are absolute, not relative values. The noise floor has a steady average level and the clip point is a fixed value. These are expressed in dBV. The absolute level of our signal will need to pass between these two limits in order to prevent excess noise or distortion. The area enclosed by these limits is the linear operating area of the electronic device. Our designs will need to ensure that the operating levels of electronic devices are appropriately scaled for the signal levels passing through.

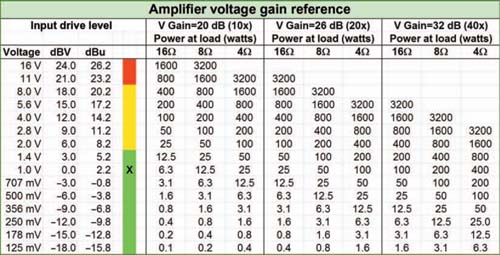

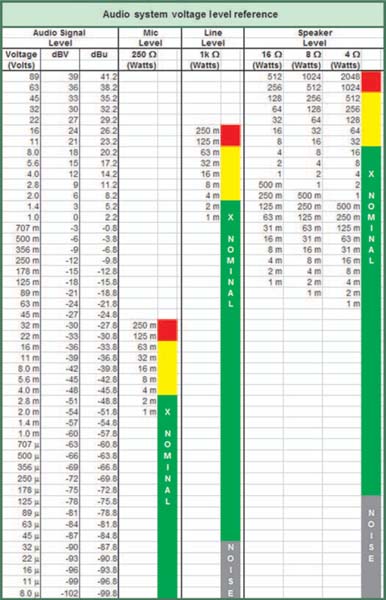

Once we have captured the audio waveform in its electronic form, it will be passed through the system as a voltage level with negligible current and therefore minimal power dissipation. Low-impedance output sections, coupled with high-impedance inputs, give us the luxury of not considering the power levels until we have reached the amplifier output terminals. Power amplifiers can then be seen as voltage-driven input devices with power stage outputs to drive the speakers. The amplifier gives a huge current boost and additional voltage capability as well. Figure 1.12 provides a reference chart showing the standard operating voltage levels for all stages of the system signal flow. The goal is to transmit the signal through the system in the linear operating voltage range of all of the devices, without falling into the noise floor at the bottom.

FIGURE 1.12

Reference chart for the typical operational voltage and wattage levels at various stages of the audio transmission. All voltages are RMS.

There is still another set of letter appendices that can be added on to the dB voltage formulas. These designate whether the voltage measured is a shortterm peak or the average value. AC signals are more complex to characterize than DC signals. DC signals are a given number of volts above or below the reference common. AC signals, by nature, go both up and down. If an average were taken over time, we would conclude that the positive and negative travels of the AC signal average out to 0 V. Placing our fingers across the AC mains will quickly alert us to the fact that averaging out to 0 V over time does not mean there is zero energy there. Kids, don't try this at home.

The AC waveform rises to a maximum, returns to zero, falls to a minimum, and then returns again to zero. The voltage between the peak and the zero point, either positive or negative, is the peak voltage (Vpk). The voltage between the positive and negative peaks is the peak-to-peak voltage (Vp−p) and is naturally double that of the peak value. The equivalent AC voltage to that found in a DC circuit is expressed as the root-mean-square (RMS) voltage (VRMS). The relationship between the peak and RMS values varies depending on the shape of the AC waveform. The RMS value is 70.7% of the peak value.

All of these factors translate over to the voltage-related dB formulas and are found as dBVpk, dBVp−p, and dBVRMS respectively. The 70.7% difference between peak and RMS is equivalent to 3 dB.

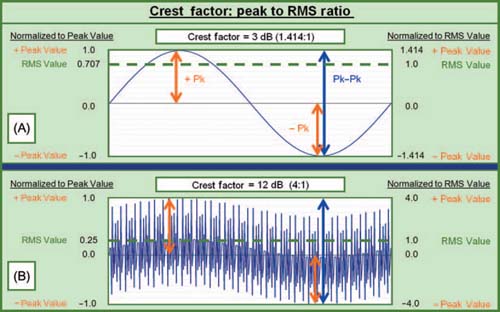

Crest factor

The term to describe the variable peak-to-RMS ratio found in different program materials is the crest factor (Fig. 1.13). The waveform with the lowest possible crest factor (3 dB) is a sine wave, which has a peak/RMS value of 1.414 (or RMS/peak value of 0.707). The presence of multiple frequencies creates momentary confluences of signals that can sum together into a peak that is higher than any of the individual parts. This is known as a transient peak. Most audio signals are transient by nature since we can't dance to sine waves. A strong transient, like a pulse, is one that has a very high peak value and a minimal RMS value. Transient peaks are the opposite extreme of peak-to-RMS ratio from the sine wave. Theoretically, there is no limit to the maximum, but our audio system will require more dynamic range as crest factor increases. The typical crest factor for musical signals is 12 dB. Since our system will be transmitting transients and continuous signals, we must ensure that the system has sufficient dynamic range to allow the transient peaks to stay within the linear operating range. The presence of additional dynamic range above the ability to pass a simple sine wave is known as headroom, with 12 dB being a common target range.

Perspectives: System optimization starts with good system design. From there it is a process that should be guided by structured thinking and critical listening.

Sam Berkow, founder, SIA Acoustics LLC & SIA Software Company, Inc.

ACOUSTIC DECIBEL: dB SPL

The favored expression for acoustic transmission is dB SPL (sound pressure level). This is the quantity of measure for the pressure changes above and below the ambient air pressure. The standard is the threshold of the average person's hearing (0 dB SPL). The linear unit of expression is one of pressure, dynes/square centimeter, with 0 dB SPL being

FIGURE 1.13

Crest factor, RMS, peak, and peak-to-peak values. (a) A sine wave has the lowest crest factor of 3 dB. (b) An example complex waveform with transients with a 12 dB crest factor.

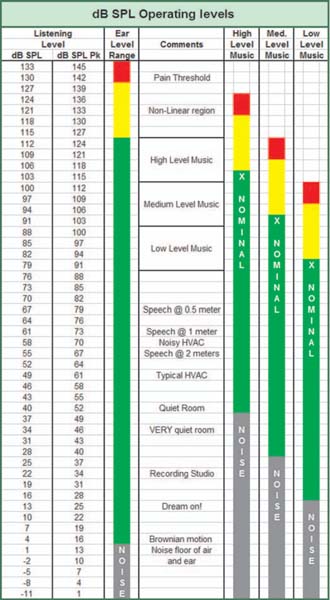

a value of 0.0002 dynes/cm2 (1 μbar). This lower limit approaches the noise level of the air medium, i.e., the level where the molecular motion creates its own random noise. It is comforting to know we aren't missing out on anything. At the other end of the scale is the threshold of pain in our hearing system. The values for this are somewhat inconsistent but range from 120 to 130 dB SPL, with more modern texts citing the higher numbers (Fig. 1.14). In any case, this number represents the pain threshold, and with that comes the obvious hazards of potential hearing damage:

where P is the RMS pressure in microbars (dynes/ square centimeter).

Excuse me: did you say microbars and dynes per square centimeter?

FIGURE 1.14

Typical operational level over the dynamic range of the ear.

These air pressure measurement terms are unfamiliar to most audio engineers, along with the alternate and equally obscure term of 20 μPa. For most audio engineers, the comprehension of dB SPL is relative to their own perspective: an experiential correlation to what we hear with what we have read over the years on SPL meters. The actual verification of 0 dB SPL is left to the Bureau of Standards and the people at the laboratories. Few of us have met a dyne, a microbar, or a micropascal at the venue or ever will. Even fewer are in a position to argue with someone's SPL meter as to whether it is calibrated correctly, unless we have an SPL meter and a calibrator of our own to make the case. Then we can argue over whose calibrator is accurate and eventually someone must yield or we will have to take a trip to the Bureau of Standards. This is one place where, in our practical world, we will have to take a leap of faith and trust the manufacturers of our measurement microphones. For our part, we will be mindful of even small discrepancies between different measurement instruments, microphones, etc., but we will not be in a good position to question the absolute dB SPL values to the same extent.

The 130 dB difference between the threshold of audibility and the onset of pain can be seen as the dynamic range of our aural system. Our full range will rarely be utilized, since there are no desirable listening spaces with such a low noise floor. In addition, the ear system is generating substantial harmonic distortion before the pain threshold which degrades the sonic experience (for some) before actual pain is sensed. In practical terms, we will need to find a linear operating range, as we did for the electronic transmission. This range runs from the ambient noise floor to the point where our hearing becomes so distorted that the experience is unpleasant. Our sound system will need to have a noise floor below the room and sufficient continuous power and headroom to reach the required maximum level.

dB SPL subunits

dB SPL has average and peak level in a manner similar to the voltage units. The SPL values differ, however, in that there may be a time constant involved in the calculation.

■ dB SPL peak: The highest level reached over a measured period is the peak (dB SPLpk).

■ dB SPL continuous (fast): This is the average SPL over a time integration of 250 ms. The integration time is used in order to mimic our hearing system's perception of SPL. Our hearing system does not perceive SPL on an instantaneous level but rather over a period of approximately 100 ms. The fast integration is sufficiently long enough to give an SPL reading that corresponds to that perception.

■ dB SPL continuous (slow): This is the average SPL over a time integration of 1 s. The slower time constant mimics the perception of exposure to extended durations of sound.

■ dB SPL LE (long term): This is the average SPL over a very long period of time, typically minutes. This setting is typically used to monitor levels for outdoor concert venues that have neighbors complaining about the noise. An excessive LE reading can cost the band a lot of money.

dB SPL can be limited to a specific band of frequencies. If a bandwidth is not specified, the full range of 20 Hz–20 kHz is assumed. It is important to understand that a reading of 120 dB SPL on a sound level meter does not mean that a speaker is generating 120 dB SPL at all frequencies. The 120 dB value is the integration of all frequencies (unless otherwise specified) and no conclusion can be made regarding the behavior over the range of speaker response. The only case where the dB SPL can be computed regarding a particular frequency is when only that frequency is sent into the system. dB SPL can also be determined for a limited range of frequencies, a practice known as banded SPL measurements. The frequency range of the excitation signal is limited, commonly in octave or one-third octave bands, and the SPL over that band can be determined. The maximum SPL for a device over a given frequency band is attained in this way. It is worth noting that the same data cannot be attained by simply band-limiting on the analysis side. If a fullrange signal is applied to a device, its energy will be spread over the full band. Bandlimited measurements will show lower maximum levels for a given band if the device is simultaneously charged with reproducing frequencies outside of the measured band.

THE UNITLESS DECIBEL

Perspectives: Regardless of the endeavor, excellence requires a solid foundation on which to build. A properly optimized sound system allows the guest engineer to concentrate on mixing, not fixing.

Paul Tucci

The unitless decibel scale is available for comparison of like values. Everything expressed in the unitless scale is purely relative. This can be applied to electronic or acoustic transmission. A device with an input level of +20 dBV and an output level of +10 dBV has a gain of +10 dB. Notice that no letter is appended to the dB term here, signifying a ratio of like quantities. Two seats in an auditorium that receive 94 and 91 dB SPL readings respectively are offset in level by 3 dB. This is not expressed as 3 dB SPL, a level just above our hearing threshold. If the levels were to rise to 98 and 95 respectively, the level offset remains at 3 dB.

The unitless dB scale will be by far the most common decibel expression in this book. The principal concern of this text is relative level, rather than absolute levels. Simply put, absolute levels are primarily an operational issue, inside the scope of mix engineering, whereas relative levels are primarily a design and optimization issue, under our control. The quality of our work will be based on how closely the final received signal resembles the original. Since the transmitted signal will be constantly changing, we can only view our progress in relative terms.

Power

Electrical energy is a combination of two measurable quantities: voltage and current. The electrical power in a DC circuit is expressed as:

P = EI

where P is the power in watts, E is the voltage in volts, and I is the current in amperes.

Voltage corresponds to the electrical pressure while current corresponds to the rate of electron flow. A simplified analogy comes from a garden hose. With the garden hose running open, the pressure is low and the flow is a wide cylinder of water. Placing our thumb on the hose will greatly increase the pressure and reduce the width of the flow by a proportional amount. The former case is low voltage and high current, while the latter is the reverse.

The power is the product of these two factors; 100 watts (W) of electrical power could be the result of 100 volts (V) at 1 amperes (A), 10 V at 10 A, 1 V at 100 A, etc. The power could be used to run one heater at 100 W, or 100 heaters at 1 W each. The heat generated, and the electrical bill, is the same either way. Both voltage and current must be present for power to flow. Voltage with no current is potential for power, but none is transferred until current flows.

A third factor plays a decisive role in how the voltage and current are proportioned: resistance. Electrical resistance limits the amount of current flowing through a circuit and thereby affects the power transferred. Provided that the voltage remains constant, the power dissipation will be reduced as the resistance stems the flow of current. This power reduction can be compensated by an increase in voltage proportional to the reduced current. Returning to the garden hose analogy, it is the thumb that acts as a variable resistance to the circuit, a physical feeling that is quite apparent as we attempt to keep our thumb in place. This resistance reapportions the hose power toward less current flow and higher pressure. This has very important effects upon how the energy can be put to use. If we plan on taking a drink out of the end of the hose, it would be wise to carefully consider the position of our thumb.

The electrical power in a DC circuit is expressed as:

P = IE

P = I2R

P = E2/R

where P is the power in watts, E is the voltage in volts, I is the current in amperes, and R is the resistance in ohms.

These DC formulas are applicable to the purely resistive components of the currentlimiting forces in the electrical circuit. In the case of our audio waveform, which is by definition an AC signal, the measure of resistive force differs over frequency. The complex term for resistance with a frequency component is impedance. The impedance of a circuit at a given frequency is the combination of the DC resistance and the reactance. The reactance is the value for variable resistance over frequency and comes in two forms: capacitive and inductive. The impedance for a given circuit is a combination of the three resistive values: DC resistance, capacitive reactance, and inductive reactance. These factors alter the frequency response in all AC circuits; the question is only a matter of degree of effect. For our discussion here, we will not go into the internal circuit components but rather limit the discussion of impedance and reactance to the interconnection of audio devices. All active audio devices present an input and output impedance and these must be configured properly for optimal transmission. The interconnecting cables also present variable impedance over frequency and this will be discussed.

Frequency response

If a device transmits differently at one frequency than another, it has a frequency response. A device with no differences over frequency, also known as a “flat” frequency response, is actually the absence of a frequency response. In our practical world, this is impossible, since all audio devices, even oxygen-free hypoallergenic speaker cable, change their response over frequency. The question is the extent of detectable change within the frequency and dynamic range of our hearing system. Frequency response is a host of measurable values but we will focus our discussion in this section on two representations: amplitude vs. frequency and phase vs. frequency. No audio device can reach an infinitely low frequency (we would have to go all the way back to the Big Bang to measure the lowest frequency) and none can reach an infinitely high frequency. Fortunately this is not required. The optimal range is some amount beyond that of the human hearing system. (The exact extent is subject to ongoing debate.) It is generally accepted that HF extension beyond the human hearing limits is preferable to those systems that limit their response to exactly 20 Hz to 20 kHz. This is generally attributed to the reduced phase shift of the in-band material, which leaves the upper harmonic series intact. Anyone familiar with the first generations of digital audio devices will remember the unnatural quality of the band-limited response of those systems. The debate over 96 kHz, 192 kHz, and higher sampling rates for digital audio will be reserved for those with ears of gold.

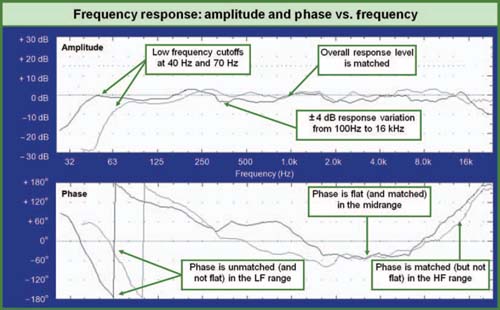

AMPLITUDE VS. FREQUENCY

Amplitude vs. frequency (for brevity, we will call this the amplitude response) is a measure of the level deviation over frequency. A device is specified as having an operational frequency range and a degree of variation within that range. The frequency range is generally given as the −3 dB points in electronic devices, while −6 and −10 dB figures are most often used for speakers. The quality of the amplitude response is determined by its degree of variance over the transmission range, with minimum variance corresponding to the maximum quality. The speakers shown in Fig. 1.15 have an amplitude response that is ±4 dB over their operating ranges. The operating ranges (between −6 dB points) differ in the low frequency (40 and 70 Hz) and high frequency (18 and 20 kHz).

PHASE VS. FREQUENCY

Phase vs. frequency (for brevity, we will call this the phase response) is a measure of the time deviation over frequency. A device is specified as having a degree of variation within the operational range governed by the amplitude response. The quality of the phase response is determined by its degree of variance over the transmission range, with minimum variance again corresponding to the maximum quality. The phase responses of the two speakers we compared previously in Fig. 1.15 are shown as an example.

The phase response over frequency is, to a large extent, a derivative of amplitude response over frequency. Leaving the rare exception aside for the moment, we can say that a flat phase response requires a flat amplitude response. Deviations in the amplitude response over frequency (peak and dip filters, high- and low-pass filters, for example) will cause predictable deviations in phase. Changes in amplitude that are independent of frequency are also independent of phase; i.e., an overall level change does not affect phase.

The exception cited above refers to filter circuits that delay a selective range of frequencies, thereby creating phase deviations unrelated to changes in amplitude. This creates interesting possibilities for phase compensation in acoustical systems with physical displacements between drivers. The final twist is the possibility of filters that change

FIGURE 1.15

Relative amplitude and relative phase responses of two loudspeakers. The two systems are matched in both level and phase over most of the frequency range, but become unmatched in both parameters in the LF range.

amplitude without changing phase. The quest for such circuits is like a search for the Holy Grail in our audio industry. We will revisit this quest later in Chapter 10. Phase will be covered from multiple perspectives as we move onward. For now, we will simply introduce the concept.

Phase response always merits a second-place finish in importance to amplitude response for the following reason: if the amplitude value is zero, there is no level, and the phase response is rendered academic. In all other cases, however, the response of phase over frequency will need to be known.

There has been plenty of debate in the past as to whether we can discern the phase response over frequency of a signal. The notion has been advanced that we cannot hear phase directly and therefore a speaker with extensive phase shift over frequency was equivalent to one that exhibited flat phase. This line of reasoning is absurd and has few defenders left. Simply put, a device with flat phase response sends all frequencies out in the temporal sequence as they went in. A device with nonlinear phase selectively delays some frequencies more than others. These discussions are typically focused on the performance of loudspeakers, which must overcome tremendous challenges in order to maintain a reasonably flat phase response for even half of their frequency range. Consider the following question: all other factors being equal, would a loudspeaker with flat phase over a six-octave range sound better than one that is different for every octave? The answer should be self-evident unless we subscribe to the belief that loudspeakers create music instead of recreating it. If this is the case, then I invite you to consider how you would feel about your console, cabling or amplifiers contributing the kind of wholesale phase shift that is justified as a musical contribution by the speaker.

A central premise of this book is that the loudspeaker is not given any exception for musicality. Its job is as dry as the wire that feeds it an input signal: track the waveform. There will be no discussion here as to which forms of coloration in the phase or amplitude response sound “better” than another.

Let's apply this principle to a musical event: a piano key is struck and the transient pressure peak contains a huge range of frequency components, arranged in the distinct order that our ear recognizes as a piano note. To selectively delay some of the portions of that transient peak rearranges the sequences into a waveform that is very definitely not the original and is less recognizable as a piano note. As more phase shift is added, the transient becomes increasingly stretched over time. The sense of impact from a hammer striking a string will be lost.

While linear phase over frequency is important, it is minor compared to the most critical phase parameter: its role in summation. This subject will be the detailed in Chapter 2.

Polarity

The polarity of a signal springs from its orientation to the origin point of the waveform. All waveforms begin at the “ambient” state in the medium and proceed back and forth from there. The same waveform shape can be created in opposite directions, one going back and forth while the other goes forth and back. There is plenty of debate as to whether we can discern the absolute polarity of a signal. A piano key is struck and the pressure peak arrives first as a positive pressure followed by a negative. If this is reproduced by an otherwise perfect speaker but reversed in polarity would we hear the difference? The debate continues.

Perspectives: Every voodoo charm and ghost in the machine that I dismissed early in my career about why anything audio was the way it was, has little by little been replaced by hard science. I have no reason to believe that the voodoo and ghosts that persist can't be replaced as well by experience and truth.

Martin Carillo

Perspectives: Keeping your gain structure optimized will ensure the whole system will clip simultaneously, thus ensuring the best signalto- noise ratio.

Miguel Lourtie

In our case, the critical parameter regarding polarity is ensuring that no reversals occur in parts of the transmission chain that will be combined either electrically or acoustically. Combining out of polarity signals will result in cancellation, a negative effect of which there is little debate.

Latency

Transmission takes time. Every stage in the path from the source to the listener takes some amount of time to pass the signal along. This type of delay, known as latency, occurs equally at all frequencies, and is measured in (hopefully) ms. The most obvious form of latency is the “flight time” of sound propagating through the air. The electronic path is also fraught with latency issues, and this will be of increasing importance in the future. In purely analog electronic transmission, the latency is so small as to be practically negligible. For digital systems, the latency can never be ignored. Even digital system latencies as low as 2 ms can lead to disastrous results if the signal is joined with others at comparable levels that have analog paths (0 ms) or alternate network paths (unknown number of ms). For networked digital audio systems, latency can be a wide-open variable. In such systems, it is possible to have a single input sent to multiple outputs each with different latency delays, even though they are all set to “0 ms” on the user interface.

ANALOG AUDIO TRANSMISSION

We have discussed the frequency, period, wavelength, and the audio waveform above. It is now time to focus on the transmission of the waveform through the electronic and acoustic media. We will begin with the far less challenging field of electronic transmission.

Electronic audio signals are variations in voltage, current, or electromagnetic energy. These variations will finally be transformed into mechanical energy at the speaker. The principal task of the electronic transmission path is to deliver the original signal from the console to the mechanical/acoustic domain. This does not mean that the signal should enter the acoustic domain as an exact copy of the original. In most cases, it will be preferable to modify the electrical signal in anticipation of the effects that will occur in the acoustic domain. Our goal is a faithful copy at the final destination: the listening position. To achieve this, we will need to precompensate for the changes caused by interactions in the space. The electronic signal may be processed to compensate for acoustical interaction, split apart to send the sound to multi-way speakers and then acoustically recombined in the space.

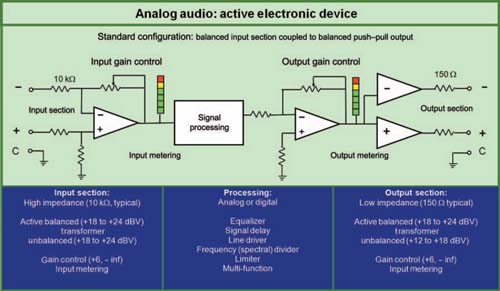

The analog audio in our transmission path runs at two standard operational levels: line and speaker. Each of these categories contains both active and passive devices. Active line level devices include the console, signal processing such as delays, equalization, level controls, and frequency dividers and the inputs of power amplifiers. Passive devices include the cables, patchbays, and terminal panels that connect the active devices together. Active devices are categorized by their maximum voltage and current capability into three types: mic, line, and speaker level. Mic and line both operate with high-impedancebalanced inputs (receivers) and low-impedance-balanced outputs (sources). Input impedances of 5–100 kΩ and output drives of 32–200Ω are typical. Mic level devices overload at a lower voltage than line level, which should be capable of approximately 10 V (+20 dBV) at the inputs and outputs. Since our discussion is focused on the transmission side of the sound system, we will be dealing almost exclusively with line level signals. Power amplifiers have a high-impedance line level input and an extremely low-impedance speaker level output. Speaker level can range to over 100 V and is potentially hazardous to both people and test equipment alike.

Line level devices

Each active device has its own dedicated functionality, but they all share common aspects as well. All of these devices have input and output voltage limits, residual noise floor, distortion, and frequency–response effects such as amplitude and phase variations. Looking deeper, we find that each device has latency, low-frequency (LF) limits approaching DC, and HF limits approaching light. In analog devices, these factors can be managed such that their effects are practically negligible—but this cannot be assumed. The actual values for all of the above factors can be measured and compared to the manufacturer's specification and to the requirements of our project.

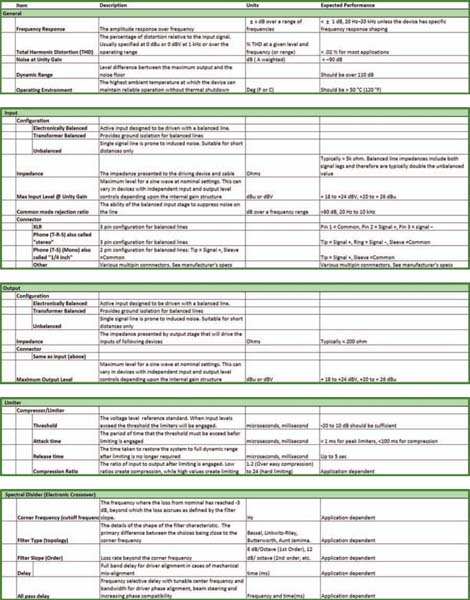

The typical electronic device will have three stages: input, processing, and output, as shown in Fig. 1.16. The nature of the processing stage depends upon the function of the device. It could be an equalizer, delay, frequency divider, or any audio device. It could be analog or digital in the processing section but the input and output stages are analog. Professional line level electronic devices utilize a fairly standard input and output configuration: the balanced line. Balanced lines provide a substantial degree of immunity from noise induced on the line (electromagnetic interference) and grounding problems (hum) by virtue of the advantages of the differential input (discussed later in this chapter). The standard configuration is a voltage source system predicated on lowimpedance outputs driving high-impedance inputs. This relationship allows the interconnection to be relatively immune to distance and number of devices.

Now let's look at some of the particular types of devices in common use in our systems. The list that follows is by no means comprehensive. The descriptions for this limited list are mostly generic and established features and applications of these devices. There are simply too many devices to describe. The description of and advocacy for (or against) the specific features and benefits of any particular make and model will be left to the manufacturers. Therefore, consider the insertion of text such as “in most cases,” “typically but not always,” “usually,” “in every device I ever used in the last 20 years,” or “except for the model X” as applicable to any of the descriptions that would otherwise seem to exclude a particular product.

FIGURE 1.16

Typical analog electronic device flow chart.

AUDIO SOURCES

The device transmitting our original transmitted signal must be capable of delivering the signal in the working condition. The most common delivery devices are the mix console outputs. The console outputs should meet the above criteria in order for us to have an input signal worth transmitting.

SIGNAL PROCESSING

The signal-processing devices will be used during the optimization procedures. The processing may be in a single chassis, or separated, but will need the capability to perform the basic functions of calibration: level setting, delay setting, and equalization. Since these will be covered separately, we will consider the functions of the signal processor as the combination of individual units.

LEVEL-SETTING DEVICES

Level-setting devices adjust the voltage gain through the system and optimize the level for maximum dynamic range and minimum level variance by adjusting subsystem relative levels. The level-setting device ensures that the transition from the console to the power amplifier stage is maintained within the linear operating range. The resolution of a levelsetting device for system optimization should be at least 0.5 dB.

DELAY LINES

Just as level controls adjust the relative levels, the delay lines control relative phase. They have the job of time management. They aid the summation process by phase aligning acoustical crossovers for maximum coupling and minimum variance. Most delay lines have a resolution of 0.02 ms or less, which is sufficient. The minimum latency value is preferred. Most delay line user interfaces give false readings as to the amount of delay they are actually adding to the signal. The indicator on the device gives the impossible default setting of 0 ms, which is accurate only as an indication of the amount of delay added to the latency base value. An accurate unit of expression for this would be 0 ms(R); i.e., relative. If every device in the system is the same model and they are not routing signals through each other on a network, the relative value will be sufficient for our purposes. If we are mixing delay line models or networking them together, that luxury is gone. Any latency variable in our transmission path will need to be measured on site and taken into account.

EQUALIZERS

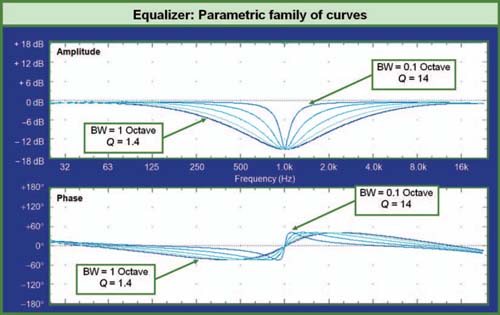

Filter types

Equalizers are a user-adjustable bank of filters. Filters, in the most basic sense, are circuits that modify the response in a particular frequency range, and leave other areas unchanged. The term equalization filters generally refers to two primary types of filters: shelving and parametric. Shelving filters affect the upper and lower extremes and leave the middle unaffected. Parametric filters do the opposite, affecting the middle and leaving the extremes unaffected. Used together, these two filter types can create virtually any shape we require for equalization.

Parametric-type equalization filter characteristics:

■ Center frequency: The highest or lowest point in the response, specified in Hz.

■ Magnitude: The level, in dB, of the center frequency above or below the unity level outside of the filter's area of interaction.

■ Bandwidth (or Q): The width of the affected area above and below the center frequency. This has some complexities that we will get to momentarily. In simple terms, a “wide” bandwidth filter affects a broader range of frequencies on either side of the center than a “narrow” bandwidth filter with all other parameters being equal.

Shelving-type equalization filter characteristics:

■ Corner frequency: The frequency range where the filter action begins. For example, a shelving filter with a corner frequency of 8 kHz will affect the range above this, while leaving the range below largely unaffected.

■ Magnitude: The level, in dB, of the shelved area above or below the unity level outside of the filter's area of interaction.

■ Slope (in dB/octave or filter order): This controls the transition rate from the affected and unaffected areas. A low slope rate, like the wide band filter described above, would affect a larger range above (or below) the corner frequency than a high slope.

There are a great variety of different subtypes of these basic filters. For both filter types, the principal differences between subtypes are in the nature of the transitional slope between the specified frequency and the unaffected area(s). Advances in circuit design will continue to create new versions and digital technology opens up even more possibilities. It is beyond the scope of this text to describe each of the current subtypes, and fortunately it is not required. As it turns out, the equalization needs for the optimized design are decidedly unexotic. The filter shapes we will need are quite simple and are available in most standard parametric equalizers (analog or digital) manufactured since the mid-1980s.

Filter functions

Since these devices are called “equalizers,” it is important to specify what it is they will be making equal. The frequency response, of course. But how did it get unequal? There are three principal mechanisms that “unequalize” a speaker system in the room: an unequal frequency response in the speaker system's native response (a manufacturing or installation issue), air loss over frequency, and acoustic summation. For the moment, we will assume that a speaker system with a flat free-field response has been installed in a room. The equalization scope consists of compensation for frequency–response changes due to air loss over distance, summation response with the room reflections, and summation response with other speakers carrying similar signals.

Any of these factors can (and will) create peaks and dips in the response at frequencies of their choosing and at highly variable bandwidth and magnitudes. Therefore, we must have the maximum flexibility in our filter set. The most well-known equalizer is the “graphic” equalizer. The graphic is a bank of parametric filters with two of the three parameters (center frequency and bandwidth) locked at fixed settings. The filters are spread evenly across the log frequency axis in octave or one-third octave intervals. The front panel controls are typically a series of sliders, which allows the user to see their slider settings as a response readout (hence the name “graphic”). The questionable accuracy of the readout is a small matter compared to the principal limitation of the graphic: fixed filter parameters in a variable filter application. This lack of flexibility severely limits our ability to accurately place our filters at the center frequency and bandwidth required to compensate for the measured speaker system response in the room. The principal remaining professional audio application for these is in the full combat conditions of onstage monitor mixing, where the ability to grab a knob and suppress emerging feedback may exceed all other priorities. Otherwise, such devices are tone controls suitable for artists, DJs, audiophiles, and automobiles.

The ability to independently and continuously adjust all three parameters—center frequency, bandwidth, and level—give the parametric equalizer its name. Boost and cut maxima of 15 dB have proven more than sufficient. Bandwidths ranging from 0.1 to 2 octaves will provide sufficient resolution for even precise studio applications. There is no limit to the number of filters that can be placed in the signal path; however, the point of diminishing returns is reached rapidly. If we find ourselves using large quantities of filters (more than six) in a single subsystem feed of a live sound system tuning application, it is worth considering whether solutions other than equalization have been fully explored. Recording studios, where the size of the audience is only as wide as a head, can benefit from higher numbers of filters. The equalization process will be discussed in detail in Chapter 12.

Complementary phase

The phase response of the filters is most often the first derivative of the amplitude response, a relationship known as “minimum phase.” This relationship of phase to amplitude is mirrored in the responses for which the equalizer is the proper antidote. This process of creating an inverse response in both amplitude and phase is known as complementary phase equalization.

It is inadvisable to use notch filters for system optimization. Notch filters create a cancellation and thereby remove narrow bands completely from the system response. A notch filter is a distinct form of filter topology, not simply a narrow parametric band. The application of notch filters is not equalization, it is elimination. A system that has eliminated frequency bands can never meet our goal of minimum variance.

Some equalizers have filter topologies that create different bandwidth responses depending on their peak and dip settings; a wide peak becomes a narrow dip as the gain setting changes from boost to cut. Is the bandwidth marking valid for a boost or a dip (or either)? Such filters can be a nuisance in practice since their bandwidth markings are meaningless. However, as long as we directly monitor the response with measurement tools, such filters should be able to create the complementary shapes required.

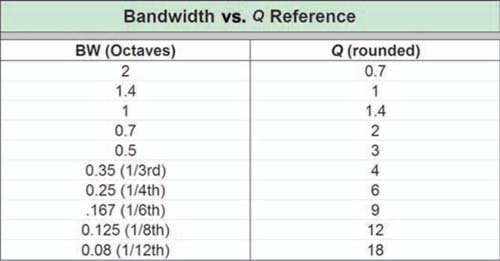

Bandwidth and Q

There are two common descriptive terms for the width of filters: bandwidth (actually percentage bandwidth) and Q or “quality factor.” Both refer to the frequency range between the −3 dB points compared to the center frequency level. Neither of these descriptions provides a truly accurate representation of the filter as it is implemented. Why? What is the bandwidth of a filter that has only a 2.5 dB boost? There is no −3dB point. The answer requires a brief peek under the hood of an equalizer. The signal path in an equalizer follows two paths: direct from input to the output bus and alternately through the filter section. The level control on our filter determines how much of the filtered signal we are adding (positively or negatively) to the direct signal. This causes a positive summation (boost) or a negative summation (cut) to be added to the full-range direct signal. Filter bandwidth specifications are derived from the internal filter shape (a band pass filter) before it has summed with the unfiltered signals. The bandwidth reading on the front panel typically reflects that of the filter before summation, since its actual bandwidth in practice will change as level is modified. Some manufacturers use the measured bandwidth at maximum boost (or cut) as the front panel marking. This is the setting that most closely resembles the internal filter slope.

Perspectives: I have had to optimize a couple of systems using one-third octave equalizers and I don't recommend it. I don't think that there is any argument about what can be done with properly implemented octave-based equalizers, but much more can be done with parametric equalizers that have symmetrical cut/boost response curves. The limitless possibilities for frequency and filter skirt width make fitting equalizers to measured abnormalities possible. With fixed filters the problems never seem to fall under the filters.

Alexander Yuill-Thornton II (Thorny)

The principal difference between the two standards is one of intuitive understanding. Manufacturers seem to favor Q, which plugs directly into filter design equations. Most audio system operators have a much easier time visualizing one-sixth of an octave than they do a Q of 9 (Fig. 1.17).

All that will matter in the end is that the shape created by a series of filters is the right one for the job. As we will see much later in Chapter 12, there is no actual need to ever look at center frequency level or bandwidth on the front panel of an equalizer. Equalization will be set by visually observing the measured result of the equalizer response and viewing it in context with the acoustic response it is attempting to equalize (Fig. 1.18).

FIGURE 1.17

Bandwidth vs. Q conversion reference (after Rane Corporation, www.rane.com/library.html).

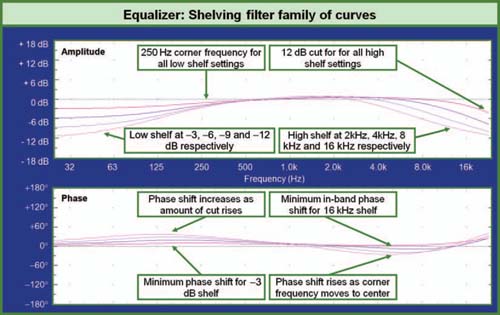

An additional form of filter can be used to create broad flat changes in frequency response. Known as shelving filters, they differ from the parametric by their lack of bandwidth control. The corner frequency sets the range of action and the magnitude control sets the level to which the shelf will flatten out. This type of filter provides a gentle shaping of the system response with minimal phase shift (Fig. 1.19).

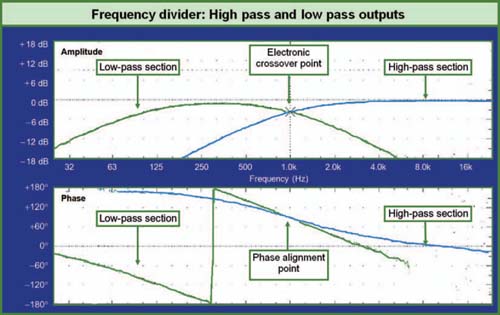

FREQUENCY DIVIDERS

The job of the frequency divider (also termed the spectral divider in this text) is to separate the audio spectrum so that it can be optimally recombined in the acoustic space (Fig. 1.20). The separation is to accommodate the physics that makes it impossible (currently) to have any one device that can reproduce the full audio range with sufficient quality and power.

Note: This device is commonly known as an electronic crossover, which is a misnomer. The electronic device divides the signal, which is then recombined acoustically. It is in the

FIGURE 1.18

Standard parametric equalizer curve family.

FIGURE 1.19

Shelving filter family of curves.

FIGURE 1.20

Frequency divider curve family.

acoustical medium that the “crossing over” occurs, hence the term “acoustical crossover.” This is not just a case of academic semantics. Acoustical crossovers are present in our room at any location where signals of matched origin meet at equal level. This includes much more than just the frequency range where high and low drivers meet, and also includes the interaction of multiple full-range speakers and even room reflections. A central component of the optimized design is the management of acoustic crossovers. Frequency dividers are only one of the components that combine to create acoustic crossovers.

Frequency dividers have user-settable corner frequency, slope, and filter topology. Much is made in the audio community of the benefits of the Bessel, Butterworth, Linkwitz–Riley, or some other filter topologies. Each topology differs somewhat around the corner frequency but then takes on the same basic slope as the full effects of the filter order become dominant. There is no simple answer to the topology question since the acoustic properties of the devices being combined will play their own role in the summation in the acoustical crossover range. That summation will be determined by the particulars of the mechanical devices as well as the settings on the frequency divider. A far more critical parameter than topology type is that of filter order, and the ability to provide different orders to the high- and low-pass channels. Placing different slope orders into the equation allows us to create an asymmetrical frequency divider, an appropriate option for the inherent asymmetry of the transducers being combined. Any frequency divider that can generate up to fourth order (24 dB per octave) should be more than sufficient for our slope requirements.

An additional parameter that can be found in some frequency dividers is phase alignment circuitry. This can come in the form of standard signal delay or as a specialized form of phase filter known as an all-pass filter. The standard delay can be used to compensate for the mechanical offset between high and low drivers so that the most favorable construction design can be utilized. The all-pass filter is a tunable delay that can be set to a particular range of frequencies. The bandwidth and center frequency are user-selectable.

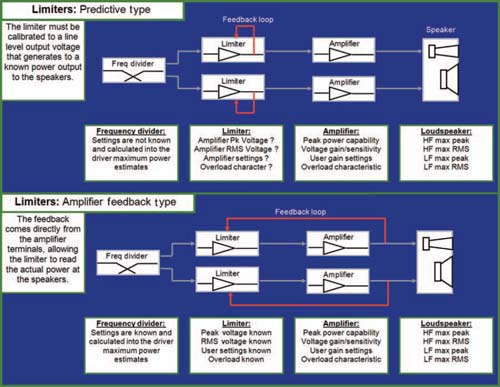

FIGURE 1.21

Predictive limiter scheme (upper). The limiter is calibrated independently of the amplifier. For this scheme to be effective, the limiter must be calibrated to the known voltage gain and peak power characteristics of the amplifier. Changes in the amplifier input level control will de-calibrate the limiter. Negative feedback scheme (lower). The limiter is calibrated to the output of the amplifier. The limiter remains calibrated even with changes in the amplifier level control.

All-pass filters can be found in dedicated speaker controllers and active speakers, where conditions are sufficiently controlled such that the parameters can be optimized.

The all-pass has gained popularity of late as an additional tool for system optimization. There are some promising applications such as the modification of one speaker's phase response in order to make it compatible with another. There is also some exciting potential for LF beam steering in arrays by selectively applying delay to some elements. It is important, however, to understand that such a tool will require much greater skill for practical application than traditional filters. Exotic solutions such as this should not take precedence over the overall task of uniformity over the space. The belief that an all-pass filter tuned for the mix position will benefit the paying customers is as unfounded an idea of any of the single-point strategies. It will be a happy day in the future when we reach a point where we have speaker systems in the field that are so well optimized that the only thing left to do is to fine-tune all-pass delays.

LIMITERS

Limiters are voltage regulation devices that reduce the dynamic range of the signal passing through (Fig. 1.21). They can be applied at any point in the signal chain including individual input channels, output channels, or the post-frequency-divider signal driving a power amplifier. Our scope of interest is limiters that manage the input signal to the power amplifiers in order to provide protection for the loudspeakers. Limiters are not a requirement in the transmission path. If the system is always operated safely within its linear range, there is no need for limiting. This could happen in our lifetime, as could world peace. But unfortunately, we have to assume the worst-case scenario: that the system will be subjected to the maximum level of abuse fathomable, plus 6 dB. Overload conditions are a strain on both the amplifiers and the speakers and have many undesirable sonic characteristics (to most of us). Limiters are devices with a thresholdcontrolled variable voltage gain. The behavior of the device is characterized by its two operating ranges: linear and nonlinear and by the timing parameters associated with the transition between the states: attack and release. The ranges are separated by the voltage threshold and the associated time constants that govern the transition between them. If the input signal exceeds the threshold for a sufficient period of time, the limiter gain becomes nonlinear. The voltage gain of the circuit decreases because the output becomes clamped at the threshold level, despite rising levels at the input. If the input level recedes to below the threshold for a sufficient duration, the “all clear” sounds and the device returns to linear gain.

Limiters may be found inside the mix console where the application is principally for dynamic control of the mix, which falls outside of our concern for system protection. Additional locations include the signal-processing chain both before and after active frequency dividers. A limiter applied before frequency division is difficult to link to the physics of a particular driver, unless the limiter has frequency-sensitive threshold parameters. The most common approach to system protection is after the frequency divider, where the limiters are charged with a particular driver model operating in a known and restricted frequency range. Such limiters can be found as stand-alone devices, as part of the active frequency divider (analog or digital) or even inside the power amplifier itself.

Where does the limiter get its threshold and time constant settings? Most modern systems utilize factory settings based on the manufacturer-recommended power and excursion levels. There are two principal causes of loudspeaker mortality: heat and mechanical trauma. The heat factor is managed by RMS limiters, which can monitor the long-term thermal conditions based upon the power dissipated. The mechanical trauma results from over-excursion of the drivers, resulting in collision with the magnet structure or from the fracturing of parts of the driver assembly. Trauma protection must be much faster than heat protection; therefore the time constants are much faster. These types of limiters are termed peak limiters. Ideally, limiters will be calibrated to the particular physics of the drivers. If the limiters are not optimized for the drivers, they may degrade the dynamic response with excess limiting or possibly fail (insufficient limiting) in their primary mission of protection. The best designed systems incorporate the optimal balance of peak and RMS limiting. This is most safely done with the use of amplifiers with voltage limits that fall within the driver's mechanical range and peak limiters that are fast enough to prevent the amplifier from exceeding those limits or clipping. The results are peaks that are fully and safely realized and RMS levels that can endure the long-term thermal load.

A seemingly safe approach would be to use lower-power amplifiers or set the limiting thresholds well below the full capability so as to err on the side of caution. This is not necessarily the best course. Overly protective limiters can actually endanger the system because the lack of dynamic range causes operators searching for maximum impact to push the system into a continual state of compression. This creates a worst-case long-term heat scenario as well as the mechanical challenges of tracking square waves if the amplifiers or drive electronics are allowed to clip. The best chances of long-term survival and satisfaction are a combination of responsible operation and limiters optimized for maximum dynamic range.

Limiters can be split into two basic categories: predictive or negative feedback loop. Predictive limiters are inserted in the signal path before the power amplifier(s). They have no direct correlation to the voltage appearing at the amplifier outputs. Therefore, their relationship to the signal they are managing is an open variable that must be calibrated for the particulars of the system. For such a scheme to be successful, the factors discussed above must become known and enacted in a meaningful way into the limiter. Such practices are common in the industry. I do not intend to be an alarmist. Thousands of speakers survive these conditions, night after night. The intention here is to heighten awareness of the factors to be considered in the settings. Satisfactory results can be achieved as long as the limiters can be made to maintain an appropriate tracking relationship to the output of the power amplifiers. Consult the manufacturers of speakers, limiters and amplifiers for their recommended settings and practices.

Required known parameters for predictive limiters:

■ Voltage limits (maximum power capability) of the amplifier

■ Voltage gain of the amplifier (this includes user-controlled level settings)

■ Peak voltage maximum capability of the loudspeaker

■ Excursion limits of the loudspeaker over its frequency range of operation

■ Long-term RMS power capability of the loudspeaker?

Negative feedback systems employ a loop that returns the voltage from the amplifier terminals. This voltage is then used for comparison to the threshold. In this way the voltage gain and clipping characteristics of the amplifier are incorporated into the limiting process. This is common practice in dedicated speaker controllers and can afford a comparable or greater degree of protection with lesser management requirements for the power amplifiers; e.g., the amplifier input level controls may be adjusted without readjusting the limit threshold.

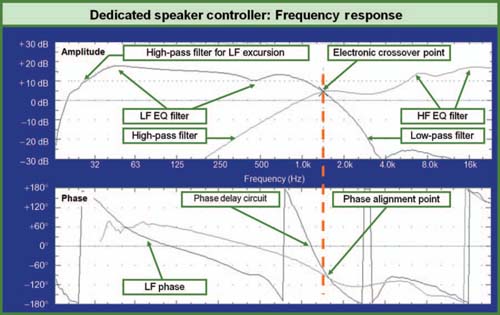

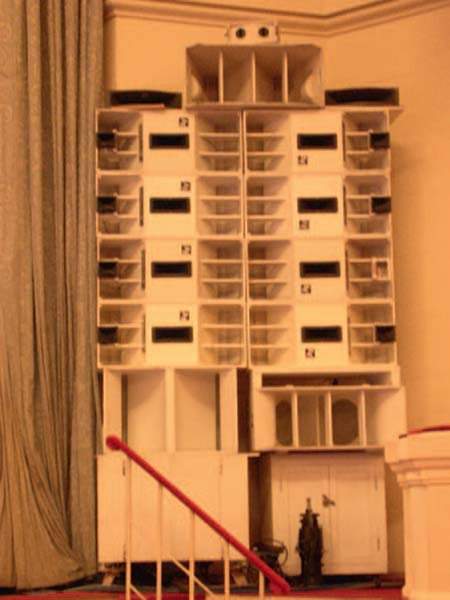

DEDICATED SPEAKER CONTROLLERS

Many speaker manufacturers also make dedicated speaker controllers (Fig. 1.22). The controllers are designed to create the electronic-processing parameters necessary to obtain optimal performance of the speaker. The parameters are researched in the manufacturer's controlled environment and a speaker “system” is created from the combination of optimized electronics and known drivers. The dedicated speaker controller contains the same series of devices already described above: level setting, frequency divider, equalization, phase alignment, and limiters, all preconfigured and under one roof. The individual parameters are manufacturer- and model-dependent so there is little more that needs be said here. This does not mean that equalizers, delays, and level-setting devices can be dispensed with. We will still need these tools to integrate the speaker system with other speakers and to compensate for their interaction in the room. We will, however, have reduced the need for additional frequency dividers and limiters and should have a much lighter burden in terms of equalization.

Two recent trends have lessened the popularity of these dedicated systems in the current era. The first is the trend toward third-party digital signal processors (DSPs). These units are able to provide all of the functionality of the dedicated controllers, usually with the exception of negative feedback limiters. Manufacturers supply users with factory settings that are then programmed into the DSP. These tools have the advantage of relatively low cost and flexibility but the disadvantages include a lack of standardized response since users of a particular system may choose to program their own custom settings instead of those recommended by the manufacturer. There is also considerable latitude for user error

FIGURE 1.22

Frequency and phase response of an example two-way dedicated speaker controller.

since the programming, copying and pasting of user settings is often poorly executed even by the best of us. The second trend is toward completely integrated systems, inclusive of the amplifier in the speaker cabinet.

ACTIVE SPEAKERS

The ultimate version of the dedicated speaker controller is this: a frequency divider, limiter, delay line, level control, equalizer, and power amplifier in a single unit directly coupled to the speaker itself. This type of system is the self-powered speaker, also termed the “active” speaker. Active speakers operate with a closed set of variables: known drivers, enclosure, known physical displacement, maximum excursion, and dissipation. As a result, they are designed to be fully optimized for the most linear amplitude and phase response over the full frequency range and to be fully protected over their full dynamic range. Active speakers, like power amplifiers, have an open polarity variable, since they are electronically balanced at the input and moving air at the output. Since most active speakers came on the market after industry polarity standardization, it would be rare to find a nonstandard system.

From our design and optimization perspective, active speakers give us unlimited flexibility in terms of system subdivision; i.e., the number of active speakers equals the number of channels and subdivision options. Externally powered speakers by contrast may, for economic reasons, often share up to four drivers on a common power amplifier channel and thereby reduce subdivision flexibility.

There will be no advocacy here as to the superiority of either active or externally powered (passive) loudspeakers. We will leave this to the manufacturers. The principal differences will be noted as we progress, since this choice will affect our optimization and design strategies. In this text, we will consider the speaker system to be a complete system with frequency divider, limiters, and power amplifiers inclusive. In the case of the active speaker, this is a physical reality, while in the case of the externally powered system, the components are separated. The techniques required to verify the proper configuration of these two different system types will be covered in Chapter 11.

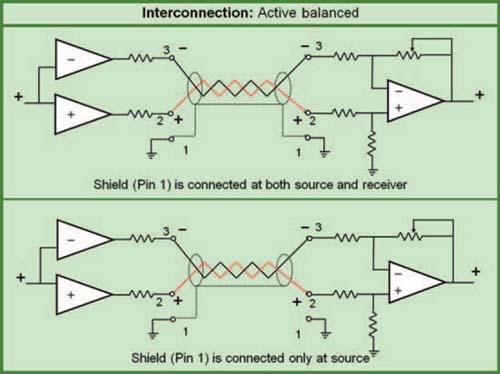

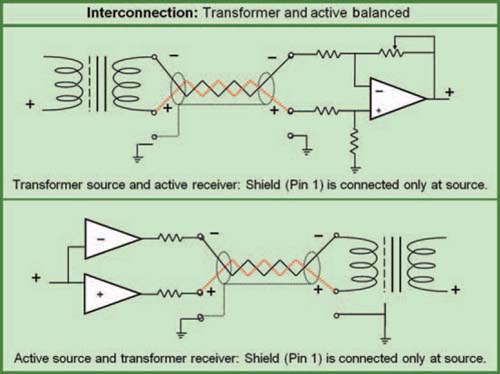

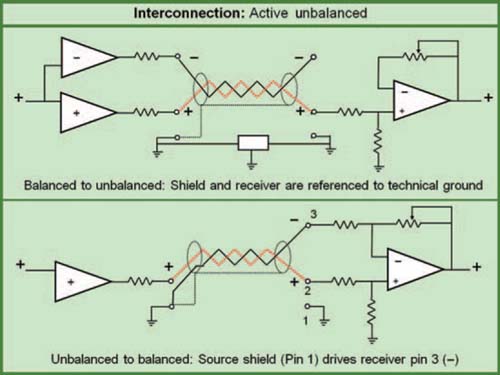

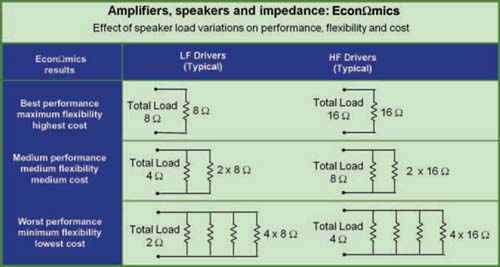

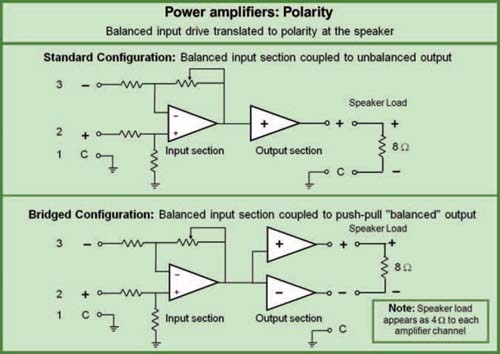

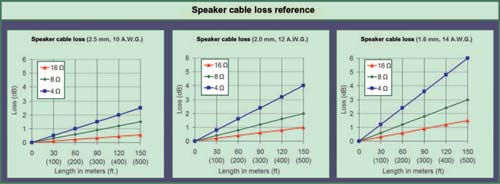

Line level interconnection