IBM i implementation

In this chapter, we describe the implementation of IBM i on HyperSwap LUNs.

4.1 Creating the LUNs for IBM i

We create eight HyperSwap LUNs to attach to IBM i. The created LUNs are shown in Figure 4-1.

Figure 4-1 HyperSwap LUNs for IBM i

The HyperSwap LUNs are in a Metro Mirror consistency group, as shown in Figure 4-2.

Figure 4-2 Metro Mirror consistency group of HyperSwap volumes

Table 4-1 shows the preferred node that was automatically assigned to each IBM i LUN at each site.

Table 4-1 Preferred nodes of IBM i LUNs

| LUN | Site 1 preferred node | Site 2 preferred node |
|---|---|---|
| HyperSwap_IBM_i_1 | 1 | 4 |
| HyperSwap_IBM_i_2 | 2 | 3 |
| HyperSwap_IBM_i_3 | 1 | 4 |
| HyperSwap_IBM_i_4 | 2 | 3 |
| HyperSwap_IBM_i_5 | 1 | 4 |
| HyperSwap_IBM_i_6 | 2 | 3 |
| HyperSwap_IBM_i_7 | 1 | 4 |
| HyperSwap_IBM_i_8 | 2 | 3 |
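The assignment in Table 4-1 follows a simple alternation: odd-numbered LUNs prefer node 1 at Site 1 and node 4 at Site 2, and even-numbered LUNs prefer node 2 and node 3. The Python sketch below only reproduces this observed pattern to show that each node ends up preferred for the same number of LUNs. It is not the algorithm that the Storwize V7000 uses to assign preferred nodes, and the function names are hypothetical.

```python
from collections import Counter

# Preferred nodes as listed in Table 4-1: nodes 1 and 2 are preferred at Site 1,
# nodes 3 and 4 at Site 2. Odd-numbered LUNs pair node 1 with node 4,
# even-numbered LUNs pair node 2 with node 3.
SITE1_NODES = (1, 2)
SITE2_NODES = (4, 3)


def preferred_nodes(lun_number):
    """Return (site1_node, site2_node) for a 1-based LUN number, reproducing the table."""
    slot = (lun_number - 1) % 2  # alternate between the two node pairs
    return SITE1_NODES[slot], SITE2_NODES[slot]


def assignment_table(count=8, prefix="HyperSwap_IBM_i_"):
    """Build a {LUN name: (site1_node, site2_node)} dictionary."""
    return {f"{prefix}{n}": preferred_nodes(n) for n in range(1, count + 1)}


def luns_per_node(table):
    """Count how many LUNs each node is preferred for."""
    return Counter(node for pair in table.values() for node in pair)


if __name__ == "__main__":
    table = assignment_table()
    for lun, (site1, site2) in table.items():
        print(f"{lun:<20} Site 1: node {site1}  Site 2: node {site2}")
    print(dict(luns_per_node(table)))
```

Running it prints the eight rows of Table 4-1 followed by a count of four LUNs for each of the four nodes.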

The preferred nodes of a HyperSwap LUN can also be seen in the LUN properties, as shown in Figure 4-3.

Figure 4-3 Preferred nodes in LUN properties

4.2 Connecting the IBM i LUNs

The IBM i partition that acts as the Storwize V7000 host has four virtual Fibre Channel (FC) adapters, numbered 40, 41, 42, and 43. Two of them are assigned to VIOS1 and two to VIOS2. Each VIOS is connected through Fabric-A and Fabric-B: virtual FC (VFC) adapter 40 uses VIOS1 Fabric-A, VFC 41 uses VIOS1 Fabric-B, VFC 42 uses VIOS2 Fabric-A, and VFC 43 uses VIOS2 Fabric-B.

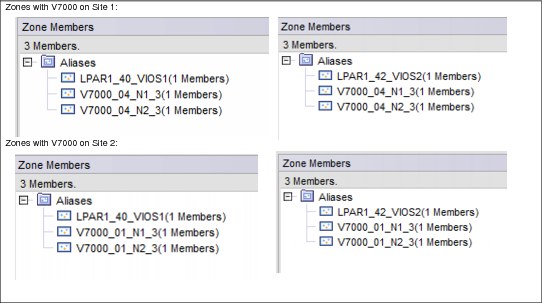

Figure 4-4 and Figure 4-5 show the zoning of the switches.

Figure 4-4 Zoning of Fabric A

Figure 4-5 Zoning of Fabric B

The attachment and zoning scheme is shown in Figure 4-6.

Figure 4-6 Attachment scheme

In the Storwize V7000 cluster, we create a host that contains the four WWPNs of the IBM i virtual FC adapters, as shown in Figure 4-7.

Figure 4-7 IBM i Host on Site 1

We map the HyperSwap LUNs to the IBM i host, as shown in Figure 4-8.

Figure 4-8 Mapping IBM i LUNs to the Host
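The host definition and mappings in Figure 4-7 and Figure 4-8 were made in the GUI. As a hedged alternative, the Python sketch below only generates Storwize V7000 CLI command strings that should give an equivalent configuration: `mkhost` to define the host with its four WWPNs and `mkvdiskhostmap` for each LUN. The host name, site name, and WWPNs are hypothetical placeholders, nothing is sent to the storage system, and you should verify the syntax against the CLI reference for your code level before running the output.

```python
import re

# A Fibre Channel WWPN is 16 hexadecimal digits.
WWPN_PATTERN = re.compile(r"[0-9A-Fa-f]{16}")


def host_and_mapping_commands(host, site, wwpns, volumes):
    """Return CLI command strings that define a host and map volumes to it.

    All values are supplied by the caller; the function only builds strings.
    """
    invalid = [w for w in wwpns if not WWPN_PATTERN.fullmatch(w)]
    if invalid:
        raise ValueError(f"invalid WWPN(s): {invalid}")
    # mkhost takes a colon-separated WWPN list in -fcwwpn
    commands = [
        f"mkhost -name {host} -site {site} -fcwwpn {':'.join(w.upper() for w in wwpns)}"
    ]
    # One host mapping per HyperSwap volume
    commands += [f"mkvdiskhostmap -host {host} {volume}" for volume in volumes]
    return commands


if __name__ == "__main__":
    # Hypothetical WWPNs of virtual FC adapters 40-43
    example_wwpns = ["C050760000000040", "C050760000000041",
                     "C050760000000042", "C050760000000043"]
    luns = [f"HyperSwap_IBM_i_{n}" for n in range(1, 9)]
    for cmd in host_and_mapping_commands("IBM_i_Site1", "site1", example_wwpns, luns):
        print(cmd)
```

Generating the commands rather than running them keeps the sketch safe to try and lets you review the output before you paste it into a CLI session.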

4.3 Prepared partition on Site 2

The prepared partition IBM_i_Site2, in another Power server, is connected to the Storwize cluster through two VIOS using N_Port ID Virtualization (NPIV). Four virtual FC adapters are used to connect to the Storwize cluster. SAN cabling and zoning are the same as for the production partition; see Figure 4-6 on page 27.

The host in the Storwize cluster contains the WWPNs of the four virtual FC adapters of IBM_i_Site2, as shown in Figure 4-9. Normally, no LUNs are mapped to this host.

Figure 4-9 IBM i Host on Site 2

4.4 Installing IBM i on HyperSwap LUNs

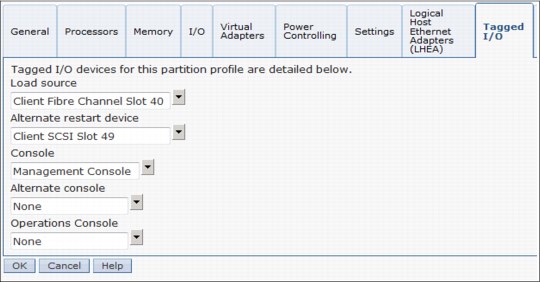

We install IBM i from the installation images in the VIOS repository. Figure 4-10 shows the tagged virtual adapters in the HMC. Figure 4-11 shows the settings of the IBM i LPAR on Site 1. Figure 4-12 on page 30 shows the activation of the partition to install IBM i.

Figure 4-10 Tagged virtual adapters on Site 1

Figure 4-11 Installation settings

Figure 4-12 Activation of IBM i LPAR

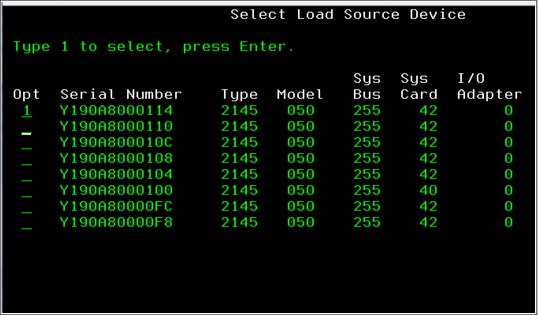

After the installation starts, we are prompted to select a LoadSource device, as shown in Figure 4-13.

Figure 4-13 Select LoadSource

After IBM i is installed, we check the paths to the LUNs by using the STRSST (Start Service Tools) command and selecting option 3 (Work with disk units), then option 1 (Display disk configuration), then option 9 (Display disk path status).

Each DMPxxx resource represents one path to the LUN. Each LUN has four active and 12 passive paths, as shown in Figure 4-14. The active paths go to the preferred node on Site 1, one path through each combination of VIOS and fabric. The passive paths go to the non-preferred node on Site 1 and to both nodes on Site 2.

Figure 4-14 Paths to an IBM i LUN
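The path counts follow from the attachment scheme: four virtual FC adapters (two VIOS times two fabrics) each reach the four nodes of the cluster, which gives 16 paths per LUN, and only the four paths to the preferred node on Site 1 are active. The Python sketch below enumerates the paths under the assumption that each virtual FC adapter is zoned to one port on each node, which is consistent with the 16 paths reported. The function name and tuple layout are hypothetical, and the sketch models the counting only, not the multipath driver itself.

```python
from itertools import product

# Virtual FC adapters of the IBM i partition and the VIOS and fabric each one uses.
VFC_ADAPTERS = {
    40: ("VIOS1", "Fabric-A"),
    41: ("VIOS1", "Fabric-B"),
    42: ("VIOS2", "Fabric-A"),
    43: ("VIOS2", "Fabric-B"),
}
# Site of each node: nodes 1 and 2 on Site 1, nodes 3 and 4 on Site 2.
NODE_SITE = {1: 1, 2: 1, 3: 2, 4: 2}


def classify_paths(preferred_node, host_site=1):
    """List every adapter-to-node path and mark it active or passive.

    Assumes one zoned port per node per adapter, so a LUN has
    len(VFC_ADAPTERS) * len(NODE_SITE) paths. Only paths to the preferred
    node at the host's site are active.
    """
    if NODE_SITE.get(preferred_node) != host_site:
        raise ValueError("preferred_node must be a node at the host's site")
    paths = []
    for vfc, node in product(VFC_ADAPTERS, NODE_SITE):
        vios, fabric = VFC_ADAPTERS[vfc]
        state = "active" if node == preferred_node else "passive"
        paths.append((vfc, vios, fabric, node, state))
    return paths


if __name__ == "__main__":
    paths = classify_paths(preferred_node=1)  # for example, HyperSwap_IBM_i_1
    active = [p for p in paths if p[4] == "active"]
    print(len(active), "active,", len(paths) - len(active), "passive")
    for vfc, vios, fabric, node, _ in active:
        print(f"VFC {vfc}: {vios} {fabric} -> node {node}")
```

With preferred node 1, the sketch prints `4 active, 12 passive`, followed by one active path for each VIOS and fabric combination.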

4.5 Using Independent Auxiliary Storage Pool (IASP)

When using solutions with an IASP, make sure that both Sysbas and the IASP of the production LPAR reside on HyperSwap LUNs, and that Sysbas of the disaster recovery (DR) partition also resides on HyperSwap LUNs. Placing only the IASP on HyperSwap LUNs brings no benefit of HyperSwap over Metro Mirror.
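The placement rule can be expressed as a simple check. The Python sketch below models each partition with two flags and reports which of the three required placements is missing. `PartitionLayout`, `check_iasp_layout`, and the partition names are hypothetical and are not part of any IBM i interface.

```python
from dataclasses import dataclass


@dataclass
class PartitionLayout:
    """Where the disk pools of one partition reside (illustrative model)."""
    name: str
    sysbas_on_hyperswap: bool
    iasp_on_hyperswap: bool = False  # only checked for the production partition


def check_iasp_layout(production, dr):
    """Return placement problems; an empty list means the layout follows the rule."""
    problems = []
    if not production.sysbas_on_hyperswap:
        problems.append(f"{production.name}: Sysbas is not on HyperSwap LUNs")
    if not production.iasp_on_hyperswap:
        problems.append(f"{production.name}: IASP is not on HyperSwap LUNs")
    if not dr.sysbas_on_hyperswap:
        problems.append(f"{dr.name}: Sysbas is not on HyperSwap LUNs")
    return problems


if __name__ == "__main__":
    # IASP-only layout: HyperSwap adds nothing over Metro Mirror here
    prod = PartitionLayout("PROD", sysbas_on_hyperswap=False, iasp_on_hyperswap=True)
    dr = PartitionLayout("DR", sysbas_on_hyperswap=False)
    for problem in check_iasp_layout(prod, dr):
        print(problem)
```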

4.6 Migrating IBM i to HyperSwap LUNs

We suggest the following options to migrate an IBM i partition from existing LUNs or disk units to HyperSwap LUNs:

• Save / Restore

• ASP balancing and copy LoadSource

• Using FlashCopy

4.6.1 Save / Restore

With this method, you save the IBM i system to tape and then restore it from tape to an LPAR whose disk capacity is on HyperSwap LUNs. The migration is straightforward and does not require any additional resources, but it requires a relatively long downtime.

4.6.2 ASP balancing and copy LoadSource

This migration method requires a relatively short downtime, but it might require you to temporarily connect additional FC adapters to IBM i. Perform the following steps:

1. Connect the HyperSwap LUNs and the existing internal disks or LUNs to the IBM i LPAR.

2. Using the ASP balancing function, migrate the data from the currently used disks or LUNs, except the LoadSource, to the HyperSwap LUNs. ASP balancing does not require any downtime; it runs while the IBM i partition is active. Depending on the installation's needs, you can run the balancing relatively quickly with some impact on performance, or slowly with minimal impact on performance.

3. After the data (except the LoadSource) is migrated to the HyperSwap LUNs, copy the LoadSource to a HyperSwap LUN that is at least as big as the current LoadSource. This action is disruptive and requires careful planning.

4. After the LoadSource is copied to a HyperSwap LUN, IBM i works with its entire disk capacity on HyperSwap LUNs.

5. Disconnect the previous storage system from IBM i, or remove the internal disk.
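Step 2 is commonly performed with the IBM i STRASPBAL command: TYPE(*ENDALC) marks the old units so that no new data is allocated on them, and TYPE(*MOVDTA) then moves their existing data to the other units in the ASP. The text does not say which commands were used, so treat the following Python sketch as an assumption: it only builds the CL command strings for step 2 and picks a LoadSource target for step 3. Unit numbers, LUN sizes, and function names are hypothetical, and the STRASPBAL parameters should be checked against the CL reference for your IBM i release. The LoadSource copy itself is a disruptive service-tools operation and is not modeled here.

```python
# On IBM i the load source is disk unit 1 of the system ASP; it is excluded
# from ASP balancing and copied separately in step 3.
LOAD_SOURCE_UNIT = 1


def straspbal_commands(old_units, time_limit="*NOMAX"):
    """Build CL commands that empty the old disk units, except the load source.

    TYPE(*ENDALC) stops new allocations on the listed units, and
    TYPE(*MOVDTA) then moves their data to the remaining units in the ASP.
    """
    units = sorted(u for u in set(old_units) if u != LOAD_SOURCE_UNIT)
    if not units:
        return []
    unit_list = " ".join(str(u) for u in units)  # CL lists are space-separated
    return [
        f"STRASPBAL TYPE(*ENDALC) UNIT({unit_list})",
        f"STRASPBAL TYPE(*MOVDTA) TIMLMT({time_limit})",
    ]


def pick_load_source_target(current_size_gb, hyperswap_luns):
    """Return the smallest HyperSwap LUN at least as big as the current load source, or None."""
    fitting = [(size, name) for name, size in hyperswap_luns.items() if size >= current_size_gb]
    return min(fitting)[1] if fitting else None


if __name__ == "__main__":
    # Hypothetical unit numbers and LUN sizes in GB
    for cmd in straspbal_commands([1, 2, 3, 4]):
        print(cmd)
    print(pick_load_source_target(70, {"HyperSwap_IBM_i_1": 80, "HyperSwap_IBM_i_2": 100}))
```

Choosing the smallest LUN that fits keeps the larger HyperSwap LUNs available for data, while still meeting the requirement that the new LoadSource is at least as big as the current one.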

4.6.3 Using FlashCopy

When the existing IBM i LUNs reside on the same Storwize V7000 cluster as the HyperSwap LUNs that you plan to use, you might be able to use FlashCopy to copy the existing LUNs to the HyperSwap LUNs. Perform the following steps to migrate:

1. Power down IBM i.

2. Use FlashCopy with background copy to copy the existing IBM i LUNs to the HyperSwap LUNs.

3. Start IBM i from the HyperSwap LUNs and resume the workload.

Important: We did not test this migration method. We strongly recommend that you perform a proof of concept (PoC) before using it.
