Chapter 7. The Internet in a Changing Climate

Climate change is already here, and it’s already impacting the reliability of the web. It’s important for us to recognize that this is just the beginning. Because of a phenomenon called climate lag, emissions don’t impact the planet’s climate instantaneously. James Hansen, climate scientist and former director of NASA’s Goddard Institute for Space Studies, puts typical climate lag at between twenty-five and fifty years (http://bkaprt.com/swd/07-01/). That means that today we are experiencing the effects of emissions generated between the 1970s and the 1990s. Even if we stopped emitting greenhouse gases today, the vast amounts we’ve already emitted over the past few decades guarantee that climate change will get significantly worse over the next few. We are locked in.

We currently design for the web on the assumption that the internet will only ever improve, and improve for everyone. Yet much of the infrastructure we depend on for internet services was never designed with climate change in mind.

We therefore need to ensure that the internet is designed to function in a changing and less hospitable world. As we look ahead to an exciting future with AI, VR, and every imaginable object connected, we need to plan ahead and prioritize resilience before we need it.

Water, Wind, and Heat

London Docklands, stretching along the River Thames in London, is the epicenter of internet data flowing through, into, and out of the UK. It’s home to a number of large data centers and points of presence, where individual data centers connect to the wider internet. It also faces serious flood risk from sea level rise (Fig 7.1). Nick Mailer told me his company looked at the Docklands before deciding instead to locate its data center on higher ground in Cambridgeshire:

The Docklands is on a floodplain, and it’s only thanks to the Thames Barrier that it doesn’t actually flood. But the Thames Barrier is not infallible, never more so than when sea levels rise. We noted that quite a few emergency generators were in basements of the London Dockland data centers, with grating for their vents. We did indeed imagine what would happen were the area to be flooded, and we were not reassured. In effect, the Docklands is the UK’s internet “switchboard.” If the London Docklands were to be damaged or disconnected, then the result for the UK’s internet would be calamitous.

Rising sea levels won’t affect only data centers, but also the cables that connect us to the web. A study by the University of Oregon and the University of Wisconsin-Madison looked at internet infrastructure in the United States and found that, in the next fifteen years, 4,067 miles of fiber internet cables are likely to be permanently underwater, and 1,101 nodes (points of presence and colocation centers) will be surrounded by sea water (http://bkaprt.com/swd/07-03/, PDF). That’s just in the United States. Of course, it won’t happen overnight, but in the long run it could disrupt the performance and reliability of the web we depend on.

The risks of coastal flooding from rising sea levels are exacerbated by storms that push the sea inland. These storms are growing in frequency and strength, and bring with them high winds that threaten other internet infrastructure, such as cell towers and power supplies to data centers.

And this is all before we even mention the basic fact that the world is getting warmer. One of the big costs of running data centers is keeping the machines cool. As global temperatures rise, the energy required to cool data centers will rise significantly, creating a feedback loop in which climate change increases the energy use and carbon emissions of the internet, which in turn accelerate climate change. It seems that one of our key strategies to keep the internet running smoothly in an increasingly hostile climate will be to burn more fuel. As Hurricane Florence approached the East Coast of the United States in 2018, Robert Fiordaliso, director of critical infrastructure at data center operator CenturyLink, described the backup power plans in place:

I have 50,000 gallons of fuel on site to refuel generators…In addition we have several 10,000-gallon tankers, or motherships, just outside the predicted storm strike zone, and those are serviced by smaller trucks containing 2,500 or 5,000 gallons that can be pulled to sites by trucks. (http://bkaprt.com/swd/07-04/)

That’s a lot of fossil fuel ready to be burned in backup generators when the data center is hit by the worst effects of climate change.

Communication in Crisis

As wildfires swept through Australia in late 2019 and early 2020, destroying an area of land twice the size of Belgium, many people in danger found they had limited or no access to mobile phone networks and data. As cell towers were destroyed or damaged by fire, access to information and communication channels was cut off precisely when and where people needed it most. The issue was compounded by the fact that intense fire releases plasma, which interferes with certain radio frequencies, degrading signal strength even when the towers are operational (http://bkaprt.com/swd/07-05/). Telco operators asked the Australian government to add them to a priority list for fuel during emergencies so they could keep emergency generators running, stating that power loss is the largest cause of communications blackouts in wildfire areas (Fig 7.2).

It wasn’t just mobile towers that failed, though; the National Broadband Network (NBN) also struggled during the wildfires. Amanda Leck, director of community safety at Australia’s National Council for Fire and Emergency Services, said they had been expressing concern about the NBN’s ability to withstand a natural disaster for a couple of years. In her words, “We all knew that the NBN would crap out almost immediately, and it did” (http://bkaprt.com/swd/07-07/).

The COVID-19 pandemic in 2020 highlighted a new challenge, as millions of office workers began working from home. While this temporarily had a positive impact on the environment and air quality, it also put extra strain on the web, with peak internet traffic up by 30 percent in Italy, according to Cloudflare (http://bkaprt.com/swd/07-08/). Thankfully, the internet held up well overall, but many people experienced degraded performance. It’s a glimpse into a future where we may need to travel a lot less and rely on internet services to a far greater extent.

As the impacts of climate change grow, large tech companies will have the financial resources to protect their infrastructure and commercial services, while smaller businesses will struggle to maintain resilient systems. In an article for the New Republic, Greenpeace IT specialist Gary Cook suggested that this economic disparity will also be apparent among web users: “Customers with the wherewithal to pay for more reliable services will still get [them], and there will be a wider divide between those who do and don’t” (http://bkaprt.com/swd/07-09/). On a typical day, that might not make much difference, but in crisis situations, it will have a profoundly negative impact on small businesses and vulnerable citizens.

But web services can be designed to be more resilient to our changing climate. As Hurricane Irma approached the United States in 2017, CNN announced that they had launched a text-only version of their website to ensure that people affected by the storm could still access critical news with only a minimal data connection required (Fig 7.3). The homepage of the text-only site is 97.5 percent smaller than the main CNN website, making it not just low-carbon, but also far more accessible. It was extremely well received, as it ensured that many people had access to critical information even when the wired broadband and 3G/4G networks were down (http://bkaprt.com/swd/07-10/).
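To put the 97.5 percent figure in proportion, here is the arithmetic, writing $S$ for page size (our own notation, not figures CNN published):

$$
S_{\text{text}} = (1 - 0.975)\,S_{\text{full}} = 0.025\,S_{\text{full}} = \tfrac{1}{40}\,S_{\text{full}}
$$

At a fixed bandwidth $B$, transfer time is roughly $S/B$, so the text-only homepage needs about one-fortieth of the download time. Treat that as an upper bound on the speedup: latency and connection setup add a fixed cost that a smaller page does not remove.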

CNN’s text-only site is a great example of the type of solution we need to develop in the future, pairing our rich, immersive digital experiences with simple, no-frills versions of the services we depend on.

Low bandwidth, better experience

That said, creating a secondary version of a website to cater to suboptimal conditions is not ideal. Just as we’ve moved away from building separate mobile websites to building responsive sites that work on all devices, we should aim to follow the same approach when it comes to websites that can function on low-bandwidth connections. If we embrace efficiency as a core requirement from the very outset of our projects, we can create rich web experiences that are also low-bandwidth. The result isn’t just a more resilient web service, but faster load times for everyone, even in perfect conditions.

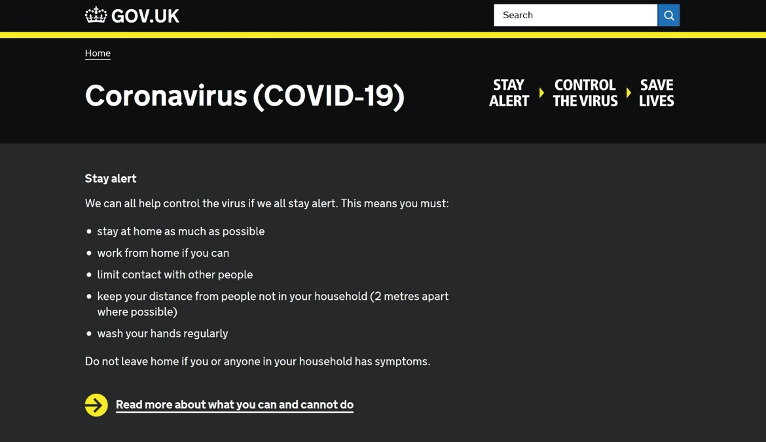

The COVID-19 guide from Gov.UK provides key information to the general public about how to stay safe during the pandemic (Fig 7.4) (http://bkaprt.com/swd/07-11/). It needs to be accessible to the widest possible audience, no matter what device or connection they have. The full page is just 263 KB and achieves a score of 100 in Google PageSpeed Insights. It’s a good example of efficient design and development supporting the public during a crisis by making information easy to access.
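One way to hold a page to a figure like 263 KB is a page-weight budget that is checked as part of development. The sketch below is a minimal TypeScript illustration, not how Gov.UK works: the `Resource` shape, the `checkPageWeight` function, and the sample sizes are all hypothetical, and it assumes 1 KB = 1,000 bytes.

```typescript
// One resource a page loads and its size in bytes as transferred over the network.
interface Resource {
  url: string;
  bytes: number;
}

interface BudgetReport {
  totalKB: number;
  budgetKB: number;
  withinBudget: boolean;
  heaviest: Resource[]; // largest resources first: the best places to trim
}

// Assumption: 1 KB = 1,000 bytes.
const BYTES_PER_KB = 1000;

// Sum the page's resources and compare the total with a weight budget.
function checkPageWeight(resources: Resource[], budgetKB: number, topN = 3): BudgetReport {
  const totalBytes = resources.reduce((sum, r) => sum + r.bytes, 0);
  // Copy before sorting so the caller's array is left untouched.
  const heaviest = [...resources].sort((a, b) => b.bytes - a.bytes).slice(0, topN);
  return {
    totalKB: totalBytes / BYTES_PER_KB,
    budgetKB,
    withinBudget: totalBytes <= budgetKB * BYTES_PER_KB,
    heaviest,
  };
}

// Illustrative numbers only, not measurements of any real page.
const report = checkPageWeight(
  [
    { url: "/index.html", bytes: 40000 },
    { url: "/styles.css", bytes: 20000 },
    { url: "/hero.jpg", bytes: 250000 },
  ],
  263,
);
console.log(report.withinBudget, report.totalKB, report.heaviest[0].url);
// prints: false 310 /hero.jpg
```

In a browser, the input could come from `performance.getEntriesByType("resource")`, whose entries expose `transferSize`. That value is 0 for resources served from cache and for cross-origin resources that don't send a `Timing-Allow-Origin` header, so checking the files you actually deploy at build time is often the more dependable option.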

We also need to eliminate single points of failure. The team behind Digital.gov has embraced JAMstack for a new version of their site, using the static site generator Hugo (Fig 7.5). By serving static files from a CDN, they’ve improved load times for users and ensured that crucial content remains accessible even if a data center goes offline. Decoupling the CMS from the front end also avoids the risk of overloading the server hosting the CMS during high-traffic crises. Yes, you can get some of these benefits without using a JAMstack solution, but there’s an inherent resiliency with this approach that isn’t found in more traditional website configurations.
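The core idea behind a static build is that pages are rendered once, ahead of time, so visitor traffic only ever touches plain files on a CDN. Digital.gov does this with Hugo; the TypeScript below is not Hugo, just a minimal sketch of the same idea under stated assumptions. The `ContentItem` shape is a hypothetical export from a headless CMS, and `renderPage` and `buildSite` are illustrative names.

```typescript
import { mkdirSync, writeFileSync } from "node:fs";
import { join } from "node:path";

// Hypothetical shape of a content entry exported from a headless CMS.
interface ContentItem {
  slug: string; // becomes the output path, e.g. "about" -> about/index.html
  title: string;
  body: string; // plain text; escaped before being written into HTML
}

// Escape the characters that would otherwise be interpreted as markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render one entry to a complete HTML document with no runtime dependencies.
function renderPage(item: ContentItem): string {
  return [
    "<!doctype html>",
    '<html lang="en">',
    `<head><meta charset="utf-8"><title>${escapeHtml(item.title)}</title></head>`,
    `<body><h1>${escapeHtml(item.title)}</h1><p>${escapeHtml(item.body)}</p></body>`,
    "</html>",
  ].join("\n");
}

// Write every page to outDir/<slug>/index.html. The output folder is what gets
// uploaded to a CDN; the CMS is only contacted when the build runs.
function buildSite(items: ContentItem[], outDir: string): string[] {
  const written: string[] = [];
  for (const item of items) {
    const dir = join(outDir, item.slug);
    mkdirSync(dir, { recursive: true });
    const file = join(dir, "index.html");
    writeFileSync(file, renderPage(item), "utf8");
    written.push(file);
  }
  return written;
}
```

Calling `buildSite(items, "public")` after each content change regenerates the site; if the CMS server is later overwhelmed or offline, the published pages are unaffected because nothing at request time depends on it.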

Improved resilience has benefits beyond the duty-bound services of government and news websites—it’s a key pillar of good customer service for all sorts of organizations serving customers online. Take none other than Starbucks, for example. When creating a browser-based ordering system, Starbucks wanted to ensure that customers’ orders wouldn’t be disrupted by unreliable mobile connections. Their digital agency, Formidable, used Progressive Web App technology to enable as much of the ordering process as possible to function offline:

In order to create the offline functionality for the PWA, complex caching had to be set up to download the information for each menu item, store information on customers’ previous orders, and store static pages from the website. (http://bkaprt.com/swd/07-13/)

Ordering a coffee may seem like an overly simple use case, but this type of approach is exactly what we’ll need to create a resilient web across any number of services.
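A common building block for this kind of offline support is a cache-first strategy: precache what the flow needs while the connection is good, then answer from the cache and only go to the network on a miss. The sketch below is one such strategy in TypeScript, not Formidable's implementation. To keep it runnable outside a browser, `CacheLike` and `Fetcher` are simplified, hypothetical stand-ins for the browser's Cache API and `fetch()` that store plain strings, and the URLs in the demo are made up.

```typescript
// Simplified stand-ins for the browser Cache API and fetch(), using strings
// instead of Request/Response objects so the logic runs anywhere.
interface CacheLike {
  match(key: string): Promise<string | undefined>;
  put(key: string, body: string): Promise<void>;
}
type Fetcher = (url: string) => Promise<string>;

// In-memory cache used for the demo and tests.
class MemoryCache implements CacheLike {
  private store = new Map<string, string>();
  async match(key: string): Promise<string | undefined> {
    return this.store.get(key);
  }
  async put(key: string, body: string): Promise<void> {
    this.store.set(key, body);
  }
}

// Install step: fetch and store everything the ordering flow needs offline.
async function precache(urls: string[], cache: CacheLike, fetcher: Fetcher): Promise<void> {
  for (const url of urls) {
    await cache.put(url, await fetcher(url));
  }
}

// Cache-first: answer from the cache when possible, go to the network only on
// a miss, and keep a copy of the network response for next time.
async function cacheFirst(url: string, cache: CacheLike, fetcher: Fetcher): Promise<string> {
  const cached = await cache.match(url);
  if (cached !== undefined) return cached;
  const fresh = await fetcher(url); // rejects if offline and not cached
  await cache.put(url, fresh);
  return fresh;
}

// Demo: precache while online, then read the menu back while "offline".
async function demo(): Promise<void> {
  const cache = new MemoryCache();
  let online = true;
  const fetcher: Fetcher = async (url) => {
    if (!online) throw new Error(`offline: cannot fetch ${url}`);
    return `contents of ${url}`;
  };
  await precache(["/menu.json", "/orders/previous.json"], cache, fetcher);
  online = false;
  console.log(await cacheFirst("/menu.json", cache, fetcher)); // prints: contents of /menu.json
}
demo();
```

In a real service worker, the same pattern maps onto the Cache Storage API: `caches.open()` and `cache.addAll()` during the `install` event, and `event.respondWith()` with `caches.match()` falling back to `fetch()` in the `fetch` event. Cache-first suits data that changes rarely, like menu items; anything that must be fresh would need a network-first variant instead.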

Fig 7.4: Efficient and accessible web design supporting the public in a crisis.

Fig 7.5: Digital.gov uses the Hugo static site generator (http://bkaprt.com/swd/07-12/).

Beyond the browser

The previous examples assume that the user has some access to the internet via a data connection and web browser. But how would we provide information to users who can’t access websites at all?

WeFarm is an SMS-based crowdsourcing service that helps farmers in developing countries (who rarely have internet access and tend to live in isolated areas) find the information they need, source supplies, and determine pricing (http://bkaprt.com/swd/07-14/). Farmers send questions to WeFarm via SMS; using machine learning, the WeFarm system interprets the questions and forwards them to farmers with relevant experience, who then send answers back via SMS. The system allows farmers to access information quickly, without an internet connection, and for only the cost of a standard SMS.
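The routing step described above can be illustrated with something much simpler than machine learning. The TypeScript below is a hypothetical stand-in, not WeFarm's system: it scores each farmer by how many of their expertise topics appear as words in the question and forwards it to the best matches. The `Farmer` shape, `routeQuestion`, and the sample data are invented for illustration, and the tokenizer assumes plain ASCII text.

```typescript
// Hypothetical profile of a farmer who can answer questions by SMS.
interface Farmer {
  id: string; // hypothetical contact handle used to send the SMS
  expertise: string[]; // topics the farmer has experience with
}

// Lower-case the text and split it into words, ignoring punctuation.
// Assumes plain ASCII text; accented or non-Latin words would be dropped.
function tokenize(text: string): Set<string> {
  return new Set(text.toLowerCase().match(/[a-z]+/g) ?? []);
}

// Forward the question to the farmers whose expertise overlaps most with it.
function routeQuestion(question: string, farmers: Farmer[], maxRecipients = 2): string[] {
  const words = tokenize(question);
  return farmers
    .map((f) => ({
      id: f.id,
      score: f.expertise.filter((topic) => words.has(topic.toLowerCase())).length,
    }))
    .filter((candidate) => candidate.score > 0) // nobody relevant: send to no one
    .sort((a, b) => b.score - a.score) // stable sort keeps input order on ties
    .slice(0, maxRecipients)
    .map((candidate) => candidate.id);
}

const sampleFarmers: Farmer[] = [
  { id: "farmer-a", expertise: ["maize", "pests"] },
  { id: "farmer-b", expertise: ["coffee"] },
  { id: "farmer-c", expertise: ["maize"] },
];
console.log(routeQuestion("What kills pests on my maize?", sampleFarmers));
// prints: [ 'farmer-a', 'farmer-c' ]
```

The point of the sketch is the shape of the service rather than the matching technique: the question and answers travel as SMS, and the only "web" component is a routing step on the server.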

We must challenge our own assumptions and think deeply about the true problems we are trying to solve. Is our job to design a website, or is it to provide users with access to information and services? By focusing on the true problems to be solved, we might just find some creative solutions.

Orbiting possibilities

If Earth’s climate poses such a great threat to infrastructure, we might also ask whether we could somehow remove the climate from the equation. One interesting idea is high-speed, low-latency satellite internet, a growing possibility as tech companies such as SpaceX enter a new space race. Although climate change isn’t the motivating factor for these new networks, they may provide a far more resilient way of transferring data around the globe, one that isn’t affected by wind, floods, wildfires, and rising temperatures. Plus, they would offer far greater coverage in rural parts of the world where it’s simply too expensive to build high-speed cable or mobile infrastructure.

You might be wondering how ecofriendly it is to put satellites into space. The SpaceX program plans to launch forty-two thousand satellites into Earth’s orbit. That sounds like a lot of rocket fuel, but the CO2 released by launching these satellites is roughly equivalent to that of five full passenger planes flying from London to Sydney (http://bkaprt.com/swd/07-15/).

That’s a fraction of what it would take to build physical infrastructure on the ground covering the entire globe. Plus, the satellites themselves are solar-powered, minimizing the energy and emissions required for transferring data twenty-four hours a day once they’re in operation. The downside is that, by definition, these networks will be owned and controlled by a small number of powerful companies, and that brings about its own challenges.

For web designers, a satellite network could help us deliver web services to a much wider audience currently excluded from our digital world. And by designing for resilience, so that communication holds steady amid changing climate conditions, we can maximize the benefits for these new web users. This is how we can create a web that adapts to what users need, rather than demanding they adapt to limited networks and service providers.

A Resilient Future

Ensuring fast, reliable, and open access to the internet for the world’s citizens is going to be a growing challenge as the large-scale impacts of climate change begin to take effect. The good news is that many of the approaches we can use to create a more climate-friendly web are the same approaches that will help us create a more resilient web. When we pull together approaches such as static web technology, content delivery networks, offline functionality, and communication technologies like SMS, we can create web services that stand up to almost anything.

If we approach it positively, this challenge will lead us to more creative solutions for a web that is better for everyone.