10

Common Issues and Pitfalls

Test automation, with all its benefits, helps engineering teams save time, effort, and resources. It takes highly collaborative and skilled engineers to get test automation working at a large scale, and even high-performing teams tend to go through multiple iterations before settling on a stable framework. Teams typically encounter a wide variety of issues along the way, and each team’s journey is unique. In this chapter, I have tried to compile a list of lessons to guide you through this process and help minimize the hurdles in your test automation undertaking. The main topics we will be covering in this chapter are the following:

- Recurrent issues in test automation

- Test automation anti-patterns

Recurrent issues in test automation

Being successful in test automation involves getting a lot of things right. In this section, we will look at a collection of common issues encountered when executing test automation projects. These items shed light on concerns that anyone undertaking test automation at a large scale should keep in mind. This is not meant to be a comprehensive list; rather, it covers the issues I have witnessed most often over time.

Unrealistic expectations of automated testing

Engineering teams quite often come under pressure to meet unrealistic expectations of automated tests. It is the duty of SDETs and quality engineers to educate stakeholders about the initial investment it takes to see results from test automation. Going all in on a framework without understanding what it can or cannot do for your organization often leads to wasted resources. Another common issue is stakeholders questioning the need for multiple frameworks to cover the application stack. Multiple frameworks also demand engineers who are available and skilled in each of them. The initial leg of the test automation journey is critical and needs strong collaboration between management and engineering teams.

Let us now look at the importance of manual testing before kicking off automation.

Inadequate manual testing

Good test automation coverage usually springs from extensive manual testing. Every feature included in a test automation suite should be thoroughly tested manually and considered stable enough for automation. Manual testing gives us the essential confidence to move to test automation. In fact, most bugs are found during the manual testing of a feature, and automation is an ally for catching regression bugs at a later point. Manual testing should not be limited to functionality; it should also help find issues with application configuration and test environments, because debugging a test automation script becomes trickier when there are multiple layers of failure. Another important aspect of manual testing is that it produces a large pool of test cases to choose from for test automation. Therefore, test cases should be created early in the development cycle and executed against a stable build. At this point, the foundational work of test automation can begin, and once we know the feature is fully working, the flow can be automated and added to the corresponding test suite.

Let us review the right candidates for test automation next.

Not focusing on automating the right things

Having a framework in place and the resources lined up for automation does not mean we should automate everything. It is imperative to avoid going down the path of 100% test automation. A lot of consideration should go into identifying the right candidates for automation. Some of the key items to consider are as follows:

- Pay special attention when automating tests that do not need to be run frequently. Automate rare cases only when absolutely necessary.

- Automation costs are high initially, and we only start seeing the ROI after several rounds of execution, so it is important to focus on stable business scenarios that reap the benefits in the long run.

- Minimize automating tests at the UI layer due to the brittleness of the frontend. Place as many tests as possible at the base of the test automation pyramid.

- Do not automate the usability aspects of your application.

- Avoid automating big and complex scenarios in the early phases of an automation framework. Automating a complex scenario in a single flow will impact test stability and eventually result in higher maintenance costs if the framework is not mature enough to handle it.

- Brittle selectors are the biggest cause of flakiness in the UI tests. Working with your team and identifying a solid selector strategy saves a lot of time in the long run.
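A selector strategy can be as simple as agreeing that tests only locate elements through dedicated test attributes. The following is a minimal sketch, assuming the team adds `data-testid` attributes to the elements tests interact with; `byTestId` and `loginPage` are hypothetical names, not part of any library:

```javascript
// Hypothetical helper that centralizes a data-testid selector strategy.
// Tests ask for elements by a stable test ID instead of by CSS classes
// or auto-generated IDs, which tend to change between builds.
function byTestId(testId) {
  // Reject IDs that would produce a malformed attribute selector.
  if (!/^[a-z0-9_-]+$/i.test(testId)) {
    throw new Error(`Invalid test id: ${testId}`);
  }
  return `[data-testid="${testId}"]`;
}

// One place to look up the selectors a page exposes (names are illustrative).
const loginPage = Object.freeze({
  userName: byTestId("user-name"),
  submit: byTestId("login-submit"),
});

// In a Cypress spec, this would be used as: cy.get(loginPage.submit).click();
```

When the markup changes, only the page map needs updating, not every test that touches the element.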

Test engineers should strive to only automate the required and right set of test cases and should push back if anyone says otherwise.
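To make the ROI point from the list above concrete, here is a rough break-even calculation. It is a sketch under simplifying assumptions: a one-time build cost, a fixed manual cost per run, and a fixed automated cost per run (including maintenance). The figures and the `breakEvenRuns` name are hypothetical:

```javascript
// Rough break-even estimate for automating one test case.
// All inputs are hypothetical effort figures in the same unit (e.g., minutes).
function breakEvenRuns(buildCost, manualCostPerRun, automatedCostPerRun) {
  const savingPerRun = manualCostPerRun - automatedCostPerRun;
  if (savingPerRun <= 0) {
    return Infinity; // automation never pays back
  }
  // Smallest whole number of runs whose savings cover the build cost.
  return Math.ceil(buildCost / savingPerRun);
}
```

With these inputs, `breakEvenRuns(480, 30, 5)` returns 20: the 480 minutes spent automating are recovered after 20 runs that each save 25 minutes. A test that runs only twice a year would take a decade to break even, which is why rarely run cases are poor candidates.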

Let us now look at how important the architecture of the underlying system is to test automation.

A lack of understanding of the system under test

Every software application is unique, and so must be the test suite designed to validate its features. Test engineers often struggle to automate features reliably because they lack a deeper understanding of the various aspects of the application. Test engineers should collaborate with software engineers at every stage of the development process. Subtle backend changes can cause intermittent UI test failures, so test engineers should strive to stay on top of the code changes being made. A single API call or a web page can no longer be viewed as a black box, as it may trigger various asynchronous activities behind the scenes as the application evolves. If the test suite is not ready for these changes, a lot of refactoring work might be needed to get it operational again. Therefore, it is essential for engineers to take the time to get an in-depth understanding of the system before designing the automated tests.

Let us understand the importance of test maintenance next.

Overlooking test maintenance

One of the easiest aspects to overlook in test automation is its maintenance. A lot of teams try to ramp up test automation infrastructure only once product delivery is in full swing and regression defects start leaking into production. They just want to get up and running with a test automation infrastructure as quickly as possible, without giving much thought to maintenance. This is a recipe for disaster, as any design work that doesn’t consider maintenance tends not to scale well. In the early stages of a software project, it is vital to think through what kind of effort will go into maintaining a test automation framework after it is fully built and functional. This usually includes items such as refreshing test data, keeping test libraries updated, addressing major architectural changes, and so on.

Let us now understand how important the right tools are for automation work.

Not choosing the right tools

The test automation market is flooded with a wide variety of tools, both licensed and open source, and it is essential to pick the one that suits the needs of your organization. Avoiding tools chosen purely for their popularity, and instead thinking through what you practically expect from a tool, saves a lot of effort down the road. Test coverage lapses often stem from not analyzing how the selected tool addresses the entire application stack, so it is essential to examine how the tool provides coverage for every layer of the test pyramid. Not all the tools on the market come with every capability needed, and most often, it is up to the end users to customize their choices to fit their needs.

Let’s review the impact of insufficient investment in test environments next.

Under-investing in test environments

A test environment is arguably the key factor that decides the success of a test automation endeavor. Not investing properly in the test environment and still expecting automated tests to be stable is usually wishful thinking. Depending on the type of automated tests, the test environment should have all the necessary pieces installed and ready to use. For example, the environment used for end-to-end testing should be close to production in all aspects except load handling. The current infrastructure landscape offers tools such as Garden.io, which can be used to spin up production-like environments at will for local development and testing. Software engineers should have the flexibility to bring the test environment as close as possible to their code rather than testing first in a full-fledged environment.

Another critical failure point concerning test environments is not considering how they work with a Continuous Integration (CI) tool. Automated tests are usually packaged and deployed on the CI platform to run against an environment. It is vital for these three components (the tests, the CI platform, and the environment) to work together without any incompatibility.

Let us look at the value of taking a whole team approach to test automation next.

Taking a siloed approach

A successful test automation approach often involves the collaboration of the entire team. A shared quality-first mindset brings different skill sets and viewpoints to the table and increases the testability of the product. Taking a whole-team approach to test automation problems results in higher test coverage, efficient testing processes, and, eventually, higher confidence in the product released. It forces every engineer to think about how to test a piece of code, so quality evolves as the product is being built rather than as an afterthought. It also fosters better collaboration and ownership.

Let us now look at how being lean with automation helps overall.

Not taking a lean approach

A general recommendation for test automation efforts is to avoid working on any single large-scale change for more than two iterations. It is important to keep trying something new, but it is also wise to take the time to appraise what is going right and what is not working every iteration or two. This gives us the flexibility to change direction when things are not going right or accelerate when the results are encouraging. The last thing we need is to spend too much time on a tool or a framework only to find it sub-optimal. There is no one-size-fits-all solution when it comes to test automation, and it is crucial to keep the approach lean.

Let us next look at the importance of having a plan for test data.

Not having a plan for test data needs

Sound test automation strategies devote ample time to collecting, sanitizing, and managing test data. Test data is at the core of most test automation frameworks. Ignoring test data needs and only focusing on other technical aspects often results in a framework that cannot be scaled. I would go one step further and say that test data is as important as the framework itself. Having a plan for data seeding at every layer of the application goes a long way in building robust automated tests. It is equally important to acknowledge the areas where data is hard to simulate and, in turn, look for alternatives such as mocking or stubbing techniques. Test results are deterministic only when the input data is reliable and predictable.
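Stubbing can be illustrated with a minimal sketch. Here, a hypothetical `convertTotal` function depends on an exchange rate that would normally come from an external service; injecting a stub provider with fixed rates makes the result predictable. All names and rates are assumptions for illustration:

```javascript
// Hypothetical code under test: converts an order total using an exchange
// rate that, in a real environment, would come from an external service.
function convertTotal(amount, currency, rateProvider) {
  const rate = rateProvider.getRate("USD", currency);
  // Round to two decimal places, as a currency amount.
  return Math.round(amount * rate * 100) / 100;
}

// Stub provider: returns fixed, known rates so results are deterministic.
function createStubRateProvider(rates) {
  return {
    getRate(from, to) {
      const key = `${from}->${to}`;
      if (!(key in rates)) {
        throw new Error(`No stubbed rate for ${key}`);
      }
      return rates[key];
    },
  };
}

const stubProvider = createStubRateProvider({ "USD->EUR": 0.5 });
```

Because the stub fails loudly for any rate it was not given, a test cannot silently fall back to live data.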

Frequently baselining production data and migrating it to test environments helps build a reliable test data bed on which automated tests can be executed. Automated tests should also be self-contained when it comes to handling test data, and proper clean-up steps should be built into the frameworks to keep tests stable. More often than not, proper test coverage equates to having sensible data to drive automated tests.
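A self-contained test seeds the data it needs and removes it afterward, even when it fails. The following minimal sketch uses a hypothetical in-memory `FakeAccountStore` in place of a real test environment's data store; `withSeededAccount` is likewise an illustrative helper, not a framework API:

```javascript
// In-memory stand-in for a test environment's data store (hypothetical).
class FakeAccountStore {
  constructor() {
    this.accounts = new Map();
  }
  create(id, balance) {
    this.accounts.set(id, { id, balance });
  }
  get(id) {
    return this.accounts.get(id);
  }
  delete(id) {
    this.accounts.delete(id);
  }
}

// Runs a test body with its own seeded data and always cleans up,
// even when the body throws, so later tests start from a known state.
function withSeededAccount(store, account, testBody) {
  store.create(account.id, account.balance);
  try {
    return testBody(store.get(account.id));
  } finally {
    store.delete(account.id);
  }
}

const store = new FakeAccountStore();
const seenBalance = withSeededAccount(
  store,
  { id: "acc-test-1", balance: 100 },
  (account) => account.balance
);
```

The `finally` block is the important design choice: clean-up runs whether the test body passes or throws.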

This brings us to the end of this section. In the next sections, let us review certain commonly occurring anti-patterns in the world of test automation.

Test automation anti-patterns

An anti-pattern is a practice that goes against the grain of what should be done in a project. It is important to be mindful of anti-patterns so that you can recognize and avoid them. These anti-patterns can be coding-, design-, or process-oriented. Let us begin with a few coding- and design-based anti-patterns in the next section.

Coding and design anti-patterns in test automation

Test automation code should maintain high standards when it comes to stability and reliability. Poorly designed tests result in more time spent debugging tests rather than developing new product features. This has a ripple effect across teams, leading to an overall degradation of quality and productivity. It is essential to focus on the basics and get them right from the bottom up. Let us begin with test code quality.

Compromising the code quality in tests

There should be no differentiation between tests and core application logic when it comes to code quality. Test code must be treated the same way as any other code in the repository. Applying coding principles such as DRY and SOLID to test code helps keep the standards up. Test engineers are often tasked with getting the team up and running quickly with an automation framework. Even though this is important, immediate follow-up tasks should be created to make the framework more extensible and maintainable. Eventually, most of the test framework maintenance work ends up in the backlog of test engineers, so they should think through code quality at every step.

Let us now understand the effects of tightly coupled code.

Tightly coupled test code

Coupling and cohesion are principal facets of coding that deserve special attention in test code. Coupling refers to how a particular class is associated with other classes and what happens when a change must be made to a specific class. High coupling results in a lot of refactoring due to strong relationships between classes. On the other hand, loose coupling promotes scalability and maintainability in test automation frameworks.
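One common way to loosen coupling is to inject dependencies rather than construct them inside a class. In this minimal sketch, a hypothetical `UserApiHelper` depends only on a small contract (a transport function that takes a path and returns a response), so the real HTTP client can be swapped for a fake without touching the helper. The class, the contract, and the response shape are all assumptions for illustration:

```javascript
// Loosely coupled helper: the transport is injected, so the helper only
// depends on a small contract, a function(path) returning { status, body }.
// A tightly coupled version would construct its own HTTP client here,
// forcing edits to this class whenever the client changes.
class UserApiHelper {
  constructor(transport) {
    this.transport = transport;
  }

  getUserName(userId) {
    const response = this.transport(`/users/${userId}`);
    return response.body.name;
  }
}

// A fake transport for exercising the helper itself (hypothetical data).
const fakeTransport = (path) => ({ status: 200, body: { name: `user at ${path}` } });
const helper = new UserApiHelper(fakeTransport);
```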

Cohesion refers to how closely the various components of a class are knit together. If the logic that belongs to a class is spread out across multiple classes, or a class mixes unrelated responsibilities, it gets harder to refactor, resulting in high code maintenance efforts. For example, let us say we have a class that contains all the methods to assert various responses from a single API endpoint. Adding a utility to the same class to generate reports from these assertions would lower its cohesion. It might seem related at a high level, but a reporting utility belongs in its own class, where it can be reused for other API endpoints as well.
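The example in the previous paragraph can be sketched as two classes: one that only asserts on responses from a single endpoint and one that only records and summarizes results. `OrderEndpointAssertions`, `AssertionReport`, and the response shape are hypothetical names chosen for illustration:

```javascript
// Cohesive: every method here asserts on responses from one endpoint.
class OrderEndpointAssertions {
  static hasStatus(response, expected) {
    if (response.status !== expected) {
      throw new Error(`Expected status ${expected}, got ${response.status}`);
    }
  }

  static hasOrderId(response) {
    if (typeof response.body?.orderId !== "string") {
      throw new Error("Response body is missing a string orderId");
    }
  }
}

// Reporting lives in its own class, so checks for any endpoint can reuse it.
class AssertionReport {
  constructor() {
    this.results = [];
  }

  record(name, fn) {
    try {
      fn();
      this.results.push({ name, passed: true });
    } catch (err) {
      this.results.push({ name, passed: false, message: err.message });
    }
  }

  summary() {
    const passed = this.results.filter((r) => r.passed).length;
    return `${passed}/${this.results.length} checks passed`;
  }
}

const report = new AssertionReport();
const response = { status: 201, body: { orderId: "A-1" } };
report.record("status is 201", () => OrderEndpointAssertions.hasStatus(response, 201));
report.record("has order id", () => OrderEndpointAssertions.hasOrderId(response));
```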

Let us next review the impact of code duplication.

Code duplication

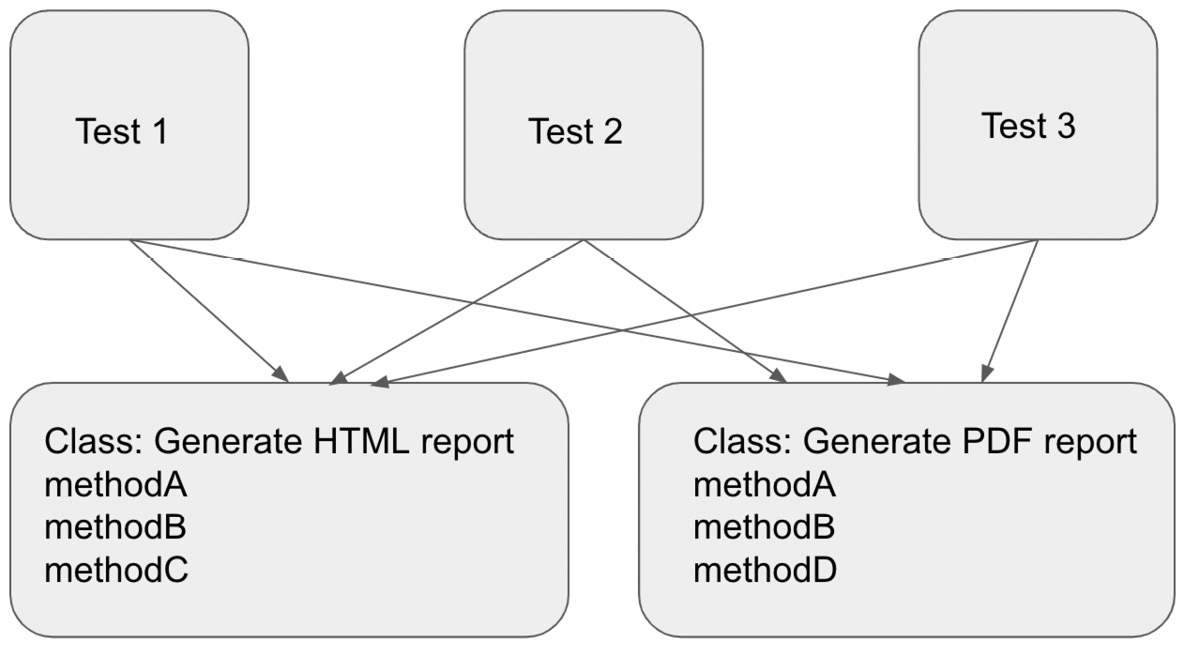

Code duplication happens in a lot of test frameworks, most often to get the job done quickly rather than taking time to design it the right way. This hurts the maintainability of automation frameworks eventually. It is essential to refactor and optimize test code frequently. Figure 10.1 illustrates a simplified case of code duplication where there are two classes to generate distinct kinds of reports. In this case, methodC and methodD are the only differences between the classes. The rest of the class body is duplicated, forcing multiple tests to instantiate both these classes every time they need to generate reports. This results in a maintenance nightmare when there is a change in the way reports are generated or when these classes are combined.

Figure 10.1 – Code duplication

It is important to remember the rule of three when it comes to code duplication. Whenever there is a need to duplicate a chunk of code a third time, it needs to be abstracted into a separate method or class.
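One way to remove the duplication described for Figure 10.1 is to move the shared body into a base class and let each report override only the step that differs, a template-method style refactoring. Composition would work equally well. The following is a minimal sketch; the class and method names are illustrative and do not reproduce the figure exactly:

```javascript
// Shared report steps live in one base class; only format() varies.
class BaseReport {
  constructor(results) {
    this.results = results;
  }

  // Shared logic that was previously duplicated in both report classes.
  countPassed() {
    return this.results.filter((r) => r.passed).length;
  }

  // Template method: the fixed sequence, delegating the varying step.
  generate() {
    return this.format(this.countPassed(), this.results.length);
  }

  format() {
    throw new Error("Subclasses must implement format()");
  }
}

class TextReport extends BaseReport {
  format(passed, total) {
    return `${passed} of ${total} tests passed`;
  }
}

class JsonReport extends BaseReport {
  format(passed, total) {
    return JSON.stringify({ passed, total });
  }
}
```

Tests now instantiate only the report they need, and a change to how results are counted is made in one place.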

Let us now look at how lengthy and complex tests affect test maintainability.

Lengthy complex tests

Extending existing tests and making them more complex often results in brittleness and fragility in the test suite. Engineers tend to add to the existing tests as the project scope increases and more features are being delivered. This may seem like a viable solution in the short term, but it results in complicated code for the rest of the team to follow. We have a code snippet here that shows a long test that navigates to four different screens and validates multiple fields in the process. This can be easily broken down into single tests in their own blocks or spec files if necessary:

```javascript
// Anti-pattern: one long test that covers three different pages
describe("Visit packt home page, ", () => {
  beforeEach(() => {
    cy.visit("https://www.packtpub.com");
  });

  it("search, terms and contact pages", () => {
    const search_string = "quality";
    const result_string = "Filter Results ";

    // test search on the home page
    cy.get('#__BVID__324').should("have.value", "");
    cy.get('#__BVID__324').type(`${search_string}`, { delay: 500 });
    cy.get('.form-inline > .btn-parent > .btn > .fa').click();
    cy.get(".filter-results").contains(result_string);
    cy.get(".reset-button", { timeout: 10000 }).should("be.disabled");
    cy.get("#packt-navbar").should("have.class", "navbar-logout");

    // test terms-conditions page
    cy.visit("https://www.packtpub.com/terms-conditions");
    cy.get('.form-inline > .btn-parent > .btn > .fa').click();
    cy.get(".terms-button", { timeout: 10000 }).should("be.enabled");

    // test contact us page
    cy.visit("https://www.packtpub.com/contact");
    cy.get('.form-inline > .btn-parent > .btn > .fa').click();
    cy.get(".send-button", { timeout: 10000 }).should("be.disabled");
  });
});
```

Long and complex tests eventually result in adding timeouts in multiple places to account for slowness. This results in increased flakiness and long-running test suites. Therefore, it is important to break down the test code to follow the single responsibility principle as much as possible. It is hard to achieve this, especially in end-to-end tests, where there is usually a business flow involved, but constant efforts must be made to keep it as close to a single responsibility as possible.

Let us next review the ways to employ assertions.

The incorrect use of assertions

Assertions are our primary instruments for validating the application logic in a test. They should be used correctly, sparingly, and sensibly. One surprising area of concern is when assertions are not used at all. Engineers sometimes use conditionals or write to the console instead of employing assertions, and this should be avoided at all costs, because such a test never fails on a wrong result. Another pain point is packing multiple assertions into a single function or test. This dilutes the primary purpose of the test and, since most frameworks stop a test at its first failed assertion, can hide the results of the checks that follow. A third way in which assertions can be misused is when the right type of assertion is not employed.

The following code snippet illustrates a case where no assertions are being used. This is strongly discouraged in a test:

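```javascript
// Anti-pattern: the result is only logged, so this test can never fail
function compute_product() {
  // ...Test logic...
  if (product === 10) {
    console.log('product is 10');
  } else if (product === 20) {
    console.log('product is 20');
  } else {
    console.log('product is unknown');
  }
  return product;
}
```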

The next code snippet illustrates the use of multiple assertions in a single test. Use them sparingly based on the test type and scenario:

```javascript
cy.get('[data-testid="user-name"]').should('have.length', 7)
cy.get('[data-testid="bank_name"]').should('have.text', 'BOA Bank')
cy.get('[data-testid="form_checkbox"]')
  .should('be.enabled')
  .and('not.be.disabled')
```

Lastly, the following code snippet shows the use of the same type of assertion for multiple UI elements. Explore and employ the appropriate type of assertion based on the element being validated:

```javascript
cy.get('#about').contains('About')
cy.get('.terms').contains('terms-conditions')
cy.get('#home').contains('Home')
```

Let us look at data handling within test frameworks next.

Mishandling data in automation

Data can be mishandled easily – sometimes with dire consequences. There are different types of data that a test automation framework handles and stores. Each type of data has its place within the framework and it should be handled as such. Mixing them up will result in an unorganized and inextensible framework. Some of the common types are listed with examples:

- Functional test data: This drives the application logic and is seeded within the framework or comes from a test environment.

- Dynamic test suite data: This is data required by the test scripts for execution, such as secrets:

```shell
node test-script.js -secret='HAGSDH' -timeout=30000
```

- Global data: This is configuration data specific to particular environments, stored in config files and the CI system:

```
DEV_URL=https://test-development.com
STAGING_URL=https://test-staging.com
AWS_KEY=test-aws-key
```

- Framework level constants: These are constant values required by the tests and stored within the framework in a non-extendable base class:

```javascript
const swift_code = 111222333,
  routing_number = 897654321;

class BankConstants {
  static get swift_code() {
    return swift_code;
  }

  static get routing_number() {
    return routing_number;
  }
}
```
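Global data is best read from the environment at runtime instead of being hardcoded in test scripts. The following is a minimal sketch of a loader, assuming the CI system or a local config file exports the `DEV_URL`, `STAGING_URL`, and `AWS_KEY` variables shown earlier; `loadGlobalConfig` and `REQUIRED_KEYS` are hypothetical names:

```javascript
// Hypothetical loader for environment-specific (global) configuration.
// Values come from environment variables set by config files or the CI
// system, so URLs and keys never live inside test scripts.
const REQUIRED_KEYS = ["DEV_URL", "STAGING_URL", "AWS_KEY"];

function loadGlobalConfig(env = process.env) {
  // Fail fast, naming every missing key, instead of failing mid-run.
  const missing = REQUIRED_KEYS.filter((key) => !env[key]);
  if (missing.length > 0) {
    throw new Error(`Missing configuration: ${missing.join(", ")}`);
  }
  // Freeze the result so tests cannot mutate shared configuration.
  return Object.freeze({
    devUrl: env.DEV_URL,
    stagingUrl: env.STAGING_URL,
    awsKey: env.AWS_KEY,
  });
}
```

Accepting `env` as a parameter keeps the loader itself testable without touching the real process environment.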

Let us now understand the importance of refactoring.

Not refactoring according to changing requirements

It is necessary to refactor test code according to changing requirements. Unfortunately, there are times when additional requirements get added or existing requirements are modified beyond the scope of an iteration. If the framework is not flexible enough to accommodate refactoring, it is extremely difficult to keep up with these changes. In an Agile world, it should be a top priority to make the test automation framework extensible so that these changes can be made.

Let us review the use of UI tests to validate business logic next.

Validating business rules through the UI

UI test automation should be restricted to testing high-level business flows, and business rule validations should happen at the API level as much as possible. Many teams have wasted valuable resources covering low-level business rules with UI automation, only to see their scripts break at minor code changes. The initial overhead is far too high to realize any ROI with this approach, and it is extremely hard to acquire fine control over the system under test through UI components. UI tests are also the slowest-running tests in the test automation pyramid and hence deprive teams of valuable rapid feedback.
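Validating rules below the UI lets a table of cases run in milliseconds. The following is a minimal sketch, assuming a hypothetical `applyDiscount` rule of the kind an API endpoint might expose; the tiers and percentages are invented for illustration:

```javascript
// Hypothetical business rule, e.g., the logic behind a discount endpoint.
const DISCOUNT_PERCENT = { standard: 0, silver: 5, gold: 10 };

function applyDiscount(orderTotal, customerTier) {
  if (orderTotal < 0) {
    throw new RangeError("orderTotal must be non-negative");
  }
  if (!(customerTier in DISCOUNT_PERCENT)) {
    throw new Error(`Unknown tier: ${customerTier}`);
  }
  // Integer percentages keep the arithmetic exact for whole-dollar totals.
  return Math.round(orderTotal * (100 - DISCOUNT_PERCENT[customerTier])) / 100;
}

// Table-driven checks: many rule combinations, no browser or selectors.
const cases = [
  { total: 100, tier: "standard", expected: 100 },
  { total: 100, tier: "silver", expected: 95 },
  { total: 100, tier: "gold", expected: 90 },
];

function failingCases() {
  return cases.filter((c) => applyDiscount(c.total, c.tier) !== c.expected);
}
```

A single UI test can then confirm that the discounted total is displayed, while the rule combinations stay at this faster layer.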

Let us now look at the effects of inefficient code reviews.

Inefficient code reviews

Code reviews, when not done correctly, let through code that does not meet the quality standards of an organization. Test automation code is usually not reviewed as rigorously as the code delivered to customers. An inefficient code review process may lead to a lack of code quality standards and the introduction of new bugs into the existing framework. Chief items to look for in a test automation code review are as follows:

- Proper commenting and descriptions wherever necessary

- Formatting and typos

- Test execution failures

- The hardcoded and static handling of variables

- Potential for test flakiness

- Design pattern deviations

- Code duplication

- Any invalid structural changes to the framework

- The correct handling of config files and their values

That brings us to the end of our exploration of coding- and design-oriented anti-patterns. Let us now explore a few process-oriented anti-patterns in test automation.

Process-oriented anti-patterns in test automation

Building a test automation suite and maintaining it operationally is no easy task. Apart from the technical obstacles, there are a variety of process-related constraints that hinder efforts. In this section, let us review a few process-oriented test automation anti-patterns.

Test automation efforts not being assessed

It is a widespread practice for software engineers to assess the effort required to complete the work of building a feature. In the Agile landscape, this is usually represented in story points. However, this pattern unfortunately is broken quite often for test automation, resulting in the accumulation of technical debt. Every feature or story being worked on should be evaluated the same way for test automation as it is done for manual testing and release. It is a common occurrence in the industry to punt the test automation efforts to a later iteration due to tight deadlines and other technical limitations. This can simply be avoided by considering test automation as an integral part of completing a feature. A feature should not be considered complete unless the team feels they have sufficient automated test coverage for it.

Table 10.1 shows a sample matrix that can be used to estimate test automation efforts in an Agile setup. It can be customized to suit the team’s needs and skill sets:

| Story points | Test type | The complexity of the task | Dependencies | Time estimate |
|---|---|---|---|---|
| 1 | API integration | Very minor | Nothing | Less than 3 hours |
| 2 | API integration | Simple | Some | Half a day |
| 3 | UI end-to-end | Medium | Some | |
| 5 | UI end-to-end | Difficult | More than a few | 3 to 5 days |
| Split into smaller tasks | API/UI | Very complex | Unknown | More than a week |

Table 10.1 – Sample test automation effort matrix

Test engineers should be vocal about this aspect, as it takes time, effort, and commitment from the whole team to get quality right. It is a process, and it evolves over time to yield fruitful results.

Let us understand the impact of starting automation efforts late next.

Test automation efforts commencing late

It is a frequent occurrence even in today’s Agile landscape that test automation doesn’t start until the development of a feature is fully complete. Test engineers wait for the feature to be completed and then begin planning for automation. This eventually demands a big push from the team to get tests automated and, in turn, affects the quality of the output. It is true that there might be a few unknowns in terms of the exact implementation details when the feature is being developed, but the ancillary tasks required for test automation can be started and some may even be completed while the development is in progress. Figure 10.2 illustrates how various test automation tasks can fit into a development cycle.

Figure 10.2 – Test automation tasks

Teams often find it hard to squeeze all the test automation activities into the period after feature completion, which results in project delays or, even worse, sub-par product quality as defects leak into production.

Let us look at how proper tracking helps with test automation next.

Infrequent test automation tracking

It is a common project management practice to track as much information as possible in an ongoing project, but this pattern gets broken in the Agile landscape, where there is a lot of emphasis on face-to-face communication rather than documentation. Engineers spend all their time in the code bases and do not devote time to tracking what has been done so far. Certain basic aspects must be documented for several reasons. For example, teams might be reshuffled when an organization is restructured, and new members need to know where to start. Another common case in which the team suffers is when an experienced test engineer leaves the organization. Some critical items to keep track of when it comes to test automation are as follows:

- Documenting what is automated and not automated

- Noting which tests are not worth automating

- Mapping automated tests to manual test cases

- Listing any bugs identified through test automation

- Listing deferred test cases for automation
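These items can be kept in something as lightweight as a structured inventory checked into the test repository. The following minimal sketch maps hypothetical manual test case IDs to their automation status and summarizes them; the IDs, file names, statuses, and bug numbers are invented for illustration:

```javascript
// Hypothetical automation inventory: each manual test case is mapped to
// its automation status, so the record survives team changes.
const inventory = [
  { manualCase: "TC-101", status: "automated", automatedTest: "login.spec.js" },
  { manualCase: "TC-102", status: "not-worth-automating", reason: "usability check" },
  { manualCase: "TC-103", status: "deferred", reason: "feature still changing" },
  { manualCase: "TC-104", status: "automated", automatedTest: "search.spec.js", bugsFound: ["BUG-7"] },
  { manualCase: "TC-105", status: "not-automated" },
];

// Counts entries per status and collects bugs found through automation.
function summarize(items) {
  const byStatus = {};
  for (const item of items) {
    byStatus[item.status] = (byStatus[item.status] || 0) + 1;
  }
  const bugs = items.flatMap((item) => item.bugsFound || []);
  return { byStatus, bugs };
}
```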

Let us now understand the importance of test selection.

Creating tests for the sake of automation

I have witnessed a number of projects in which engineers have automated tests just because they were easy to automate. This ties back to our discussion in the previous section about selecting the right tests, but it deserves a special mention, as it could easily send the test automation suite into a downward spiral. The most important consideration here is to add tests that are likely to catch bugs. Adding tests just to increase the test coverage renders the suite inextensible and unmaintainable. It creates more work for the future, when tests have to be trimmed down for various reasons.

This brings us to the end of our survey on process-oriented test automation anti-patterns. Let us quickly summarize what we have learned in this chapter.

Summary

Our focus in this chapter was to understand the common issues and anti-patterns in test automation. We started by looking at how unrealistic expectations affect test automation and also how not selecting the right things to automate can impact test maintenance. We also reviewed the significance of investing in test environments and taking a whole-team and lean approach to test automation. We concluded the section by understanding the need for planning around test data. Next, we reviewed a few coding- and process-oriented anti-patterns in test automation. Some of the notable coding and design anti-patterns are compromising the code quality in tests, code duplication, incorrect use of assertions, and inefficient code reviews. Subsequently, we reviewed process-oriented anti-patterns such as test automation efforts not being assessed, test automation commencing late, and so on. This brings us to the end of our joint exploration of test automation. We have come a long way from learning about the basics of test automation to reviewing design anti-patterns in test automation frameworks. As you may have noticed, test automation involves putting together several moving parts of a complex software application. The knowledge about tools and techniques acquired throughout this book should act as a stepping stone for readers to explore test automation further.

Some potential next steps would be to dive deeper into each of the tools that we looked at and think about how you can apply them in your current role and organization. The more hands-on experience you gain with these tools and techniques, the more you will be ready to address new challenges in test automation.

Happy exploring!

Questions

- How can test engineers and SDETs manage unrealistic expectations of test automation?

- What is the importance of test framework maintenance?

- How do we select the right things to automate?

- How does code duplication affect a test framework and how do we address it?

- What are some of the correct ways to use assertions?

- What are some items to look for in a test automation code review?

- What are some tasks to keep track of in test automation?