17. A Computer Scientist’s Dream System for Designing Houses—Mind to Machine

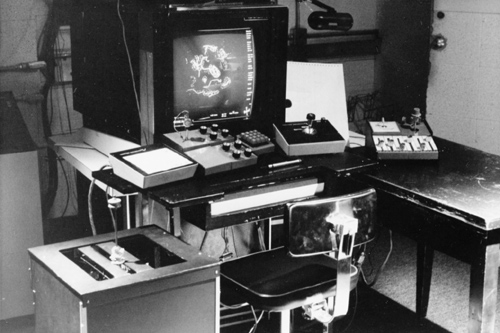

UNC GRIP Molecular Graphics System user console

James Lipscomb

Pyramids, cathedrals, and rockets exist not because of geometry, theory of structures, or thermodynamics, but because they were first pictures—literally visions—in the minds of those who conceived them.

EUGENE FERGUSON [1992], ENGINEERING AND THE MIND’S EYE

The Challenge

Suppose one could imagine into existence an ideal computer system for the architectural design of houses and other buildings. What would it be like?

A Vision

A professional architect is obviously better equipped than I to envision an entire “building design” system. Yet because I’ve done a little amateur house design, because building architecture is more concrete and accessible to a general audience than is software architecture, and because I have for more than 50 years worked on human-computer interfaces, I presume to postulate my version of the designer-computer interface for a Dream System for Designing Houses.

The interface proposed will heavily reflect the experience of my students and me in evolving the UNC–Chapel Hill GRIP Molecular Graphics System. Evolution of this real-time interactive graphics system took place over years, as we worked with brilliant protein chemists on tools for challenging tasks.1

This essay will treat only the process of functional design of houses, not the processes for structural or systems engineering, although I think the same system would be useful for the structural engineering, the mechanical and electrical systems, and even the furnishings.

There have been other dream systems for design. Ullman [1962] devotes an essay to such a system for mechanical design.2 Yet our scopes are quite different. Ullman seeks a program to handle as much of the knowledge management as possible, a valuable objective. I am here principally concerned with the communication between the designer’s mind and the automatic system, for I consider the designer’s mind to be paramount.

Progressive Truthfulness

Good design is top-down. One starts a prose document with an outline that identifies the key ideas and then the subordinate ones. One begins a program by thinking about a data structure and an algorithm. One starts thinking about a house plan by identifying the functional spaces based on the use cases, and then their connectivity. One early on addresses a building’s esthetics in terms of massing.

Great designers, even the most iconoclastic, rarely start from scratch—they build on the rich inheritance from their predecessors.3 They take an idea from here, an idea from there, add some of their own, and wrestle the mix into a design that has conceptual integrity and a coherent style of its own.

The usual technique is to begin a design with precedential ideas but blank paper. One sketches in the big units and then proceeds by progressive refinement, adjusting dimensions and adding more and more detail.

Turner Whitted in 1986 proposed that another, perhaps better, way to build models of physical objects (originally in the context of computer graphics) is to start with a model that is fully detailed but only resembles what is wanted. Then, one adjusts one attribute after another, bringing the result ever closer to the mental vision of the new creation, or to the real properties of a real-world object.

Whitted called his technique progressive truthfulness.4 In a very real sense, progressive truthfulness is precisely the program of the natural sciences over the past few centuries, as their models approach the existing natural creation.5

With human design of artifacts, the very process of designing causes changes in the mental ideal that the design approaches. Progressive truthfulness radically helps. One has at every step a prototype to study. The prototype is initially valid; that is, there are no inconsistencies in structure. The prototype is always fully detailed, so that visual and aural perceptions of it do not mislead.

So one can imagine a house design sequence such as this:

• “Give me the Three-Bedroom Georgian House.”

• “Face it north.”

• “Mirror-flip it left to right.”

• “Make the living room 14 feet wide.”

• “Shrink the kitchen depth by a foot.”

• “Make the exterior white stucco instead of brick.”

• “Make the roof pantiles instead of shingles.”
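The utterance sequence above can be sketched as a chain of small edits to a model that is complete at every step. This is only an illustration: the `House` fields, the `LIBRARY` dictionary, and the starting dimensions are hypothetical, invented here to make the idea concrete.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class House:
    """A toy, fully detailed house model: every attribute always has a value."""
    style: str
    facing: str
    mirrored: bool
    living_room_width_ft: float
    kitchen_depth_ft: float
    exterior: str
    roof: str


# Hypothetical exemplar library; the dimensions are invented for illustration.
LIBRARY = {
    "Three-Bedroom Georgian House": House(
        style="Georgian", facing="south", mirrored=False,
        living_room_width_ft=16.0, kitchen_depth_ft=12.0,
        exterior="brick", roof="shingles",
    )
}


def design_sequence() -> list:
    """Apply the utterances one by one, keeping every intermediate prototype."""
    start = LIBRARY["Three-Bedroom Georgian House"]
    steps = [start]
    changes = (
        {"facing": "north"},
        {"mirrored": not start.mirrored},
        {"living_room_width_ft": 14.0},
        {"kitchen_depth_ft": start.kitchen_depth_ft - 1.0},
        {"exterior": "white stucco"},
        {"roof": "pantiles"},
    )
    for change in changes:
        # replace() returns a new complete model; nothing is ever half-specified.
        steps.append(replace(steps[-1], **change))
    return steps
```

Each element of the returned list is a whole house that could be studied, which is the property the approach depends on.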

I find Whitted’s vision convincing, and I will postulate that approach for this Dream System. It could bring about a change in how one does design. New tools can lead us to better ways of thinking.

The Model Library

Hence the Dream System starts with a rich library of fine specimens of fully detailed designs. Starting with exemplars that themselves have consistency of style ensures that such consistency is the designer’s to lose. The model library itself grows as the system is used. For remodeling, computer-vision methods of capturing a 3-D object and reducing it to a structured model will be necessary.

In our first imagined house-uttering sequence, the designer acted as if intimately familiar with the exemplar library: he called out an exemplar by name.

No matter how experienced the designer, as the library grows, this easy familiarity will be lost. As with any large terrain, one will be quite at home in parts, passably competent in others, and an explorer elsewhere. The novice will explore everywhere. Hence the ability to scan the library hierarchically and otherwise is crucial.

Structuring the library most helpfully is a prime taxonomic task, but much terminology and conceptual structure already exist.6

Hazards of the Progressive Truthfulness Mode

Although I postulate that progressive truthfulness is how the most productive and easy-to-use design systems must be built, it has its inherent hazards.

Some will argue that broad exposure to exemplars will implicitly limit the designer’s creativity. Could the designs of a Brunelleschi, a Le Corbusier, a Gehry, a Gaudí, emerge from the minds of designers so indoctrinated?

I submit that they did. None were amateurs. All trained by studying precedents. Like Bach, they innovated from mastery, not ignorance. The Grandma Moseses of the world are few.

Perhaps more relevant, these “but what about?” examples represent a minute fraction of designs, and a great tool doesn’t have to provide for discontinuities.

The true hazards of progressive truthfulness lurk in the library. Bad models, too few models, too narrow a variety of models—these shortcomings will most limit the emerging designs. This hazard will be worst at the beginning.

A Vision for Input from Mind to Machine

Whether designing from an exemplar or de novo, how does one transform thought-stuff into a computer model?

One wants to utter one’s castle in the air into existence, using the voice, both hands, the head, and conceivably the feet. Buxton, his colleagues at Alias Systems, and his students at the University of Toronto have pioneered two-handed interfaces.7 The dominant hand makes the precise manipulations; the non-dominant hand provides framing context (hereafter right and left, for simplicity). Both hands together provide approximate dimension: “So big.”

The Noun-Verb Rhythm

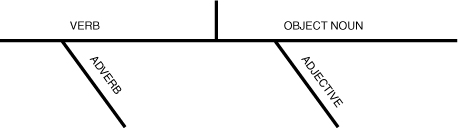

In design languages, as in imperative languages in general, each utterance has a verb and a noun, the object. The noun may have selecting “which” adjectives, phrases, or clauses. The verb may have an adverbial phrase (Figure 17-1). A linguistic curiosity is that many verbs assign adjectives to the object noun: “Make this door 32 inches wide.” “Color the west wall green.”

Figure 17-1 Structure of an imperative sentence
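One way to picture the sentence structure is as a small data type: a verb, a noun with its selecting modifiers, and optional adverbials. The class and field names below are assumptions made for this sketch, not part of any existing design language.

```python
from dataclasses import dataclass, field


@dataclass
class Noun:
    head: str                                      # e.g. "wall", "door"
    selectors: list = field(default_factory=list)  # the selecting "which" modifiers


@dataclass
class Command:
    verb: str
    noun: Noun
    adverbials: dict = field(default_factory=dict)  # role -> phrase

    def render(self) -> str:
        """Spell the command back out as an imperative sentence."""
        obj = " ".join(self.noun.selectors + [self.noun.head])
        tail = " ".join(self.adverbials.values())
        return f"{self.verb} the {obj} {tail}".strip() + "."


# The verb "Color" assigns an adjective ("green") to the selected object.
cmd = Command("Color", Noun("wall", ["west"]), {"result": "green"})
```

Here `cmd.render()` gives back the sentence "Color the west wall green."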

In spatial design languages, one usually wants to specify the noun by pointing—a task for the hand(s). The voice is the natural instrument for verbs; the Windows-Icons-Menus-Pointing (WIMP) interface uses an unnatural input, the menu. To be sure, the menu has the tremendous advantage of showing the options. For the user traversing familiar tasks, however, this is not necessary.

Users at work at a WIMP interface proceed with a regular rhythm: point to or key in a noun specification; then point to a menu command (verb). Thus, in editing a document, one selects a block of text, points to “Cut”; perhaps selects another, points to “Copy”; selects an inter-character location, points to “Paste.”

Specifying Verbs

The one-handed rhythm is in fact irritating and counterproductive. By moving the pointer away from the noun field to the verb menu, one loses one’s place. The next noun is usually close to the previous one—back goes the pointer to about where it was. To avoid this disorientation, special ways have been developed for high-frequency verb specification. Double-clicking becomes an “Open” verb; keying specifies an “Insert.”

The most common verbs have keyboard equivalents, executed with the left hand. This technique is a brilliant invention. The novice always has available the standard technique—verb selection from a menu. The expert has techniques that are faster, exploit both hands, and leave the pointer positioned in the noun field. Best of all, the novice can acquire expertise one verb at a time, according to his own use frequencies.

Voice Commands

But voice is the natural mode for giving commands. So our Dream System will have a limited-vocabulary voice recognizer with wide tolerance for voice differences, a rich synonym vocabulary, and a user-modifiable dictionary. The menu option is kept active, as is the keyboard one. The hoarse user has lost no options.

General Verbs

Today’s architectural CAD systems embody a richly developed set of general verbs, based on years of customer experience. There are perhaps too many, but if any user can choose a personal palette and thereafter select most readily from it, usability is not hampered.

Examples include

• Rotate

• Replicate

• Group

• Snap

• Align

• Space Apart

• Scale

• Select Object from Library

• Name

Select Object from Library is perhaps the most useful of these, whether or not one takes the progressive truthfulness approach. We do, and that approach elevates library selection in frequency and importance.

The library will have all objects in one scale; the frequent verb will be Select and Scale, where the scaling is automatically set by the current working scale.

Specifying Nouns

What a variety of ways we use for specifying objects and regions of the space and time continuum!

By Name

In most conversation we specify objects by an explicit name or a name implicit via a pronoun. We want to do the same in the Dream System. Even with 100 percent effective voice recognition, this is still harder than it looks. To what does “the left one” refer? “The front one”? “The red one”? “The biggest one”? “It”? Even when the pointing is unambiguous, the scope of selection is often not. Making a system as smart and natural as a three-year-old requires syntactic analysis, semantic analysis, and the saving and use of context.

Moreover, the same object is called by different names, and different objects by the same name. Deriving standard definitions for a joint Army–Navy–Air Force database proved a huge job. Just “What time is it?” was challenging. The Air Force used Greenwich time; the Army used local time; each Navy ship used the local time where its battle-group aircraft carrier was.

So our Dream System must enable individual users and user groups to create individualized synonym dictionaries that supplement and override the system dictionary. Rationalizing model library nomenclature is much too deep a morass for our system builders; the users must have and wield the tools.
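A minimal sketch of such layered dictionaries, assuming three layers (user, group, system) in which the earlier layer wins. The entries are invented examples, and `make_resolver` is a hypothetical helper name.

```python
from collections import ChainMap

# Hypothetical dictionary entries, for illustration only.
SYSTEM = {"lounge": "living room", "loo": "bathroom"}
GROUP = {"great room": "living room"}
USER = {"lounge": "family room"}  # this user overrides the system meaning


def make_resolver(*layers):
    """Build a lookup in which earlier layers override later ones."""
    lookup = ChainMap(*layers)

    def resolve(term: str) -> str:
        key = term.strip().lower()
        # Unknown terms pass through unchanged rather than failing.
        return lookup.get(key, key)

    return resolve


resolve = make_resolver(USER, GROUP, SYSTEM)
```

With these layers, "lounge" resolves to the user's "family room", while "loo" still falls through to the system entry.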

By Pointing in 2-D

The stunning success of the WIMP interface demonstrates the power of pointing for selecting defined and visible objects. This mode will indeed have very high use frequency.

But it is not sufficient. When we built the GRIP system, I assumed our first client chemist would select one of several hundred amino-acid residues in a protein by pointing to it. No, he wanted a keypad so he could specify a residue by a three-digit residue number. He had worked with that particular protein for years; he knew the numeric names of those residues as well as he knew the names of his children! Pointing required adjusting the viewpoint until the target residue was clearly visible in the 3-D tangle. Moreover, extending the arm holding the light pen was fatiguing.

By Sketching in 2-D

Architects design 3-D entities. But their principal mode is 2-D drawing, both rigorous and sketchy. This is true even though their fanciest 2-D projections are such limited portrayals of 3-D objects. Sketching appears essential to the thought process.8

I do not believe this primacy of 2-D drawing will ever change, no matter how rich and handy the 3-D modeling tools become. The retina is 2-D. The flat surfaces that so easily guide the hand are 2-D. Therefore, our Dream System must feature 2-D pointing and sketching, as with a pen pad that detects not only position but also pressure.

For defined 2-D spaces, such as maps or blueprints, one often needs to specify both where a region is and how much of the space it covers. Hierarchical subdivisions such as states, countries, or rooms make this “how much” specification much easier.

Precise specification of both where and how much calls for a two-handed operation. Consider drawing a line of a precisely specified length. The right hand holds the pen telling where; the left works the numeric keypad telling how long.
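The line-drawing example can be sketched as follows, assuming the pen supplies the start point and a direction, and the keypad supplies the exact length. The function name and the coordinate convention are hypothetical.

```python
import math


def line_endpoint(start, pen, length):
    """End point of a line from `start` toward `pen` with the keyed-in `length`.

    start, pen: (x, y) positions given by the pen hand.
    length:     the exact length typed on the numeric keypad.
    """
    dx, dy = pen[0] - start[0], pen[1] - start[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        raise ValueError("the pen must move away from the start to give a direction")
    scale = length / dist
    return (start[0] + dx * scale, start[1] + dy * scale)
```

The pen never has to land on the exact end point; it only has to indicate the direction, and the keypad fixes the length.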

Pointing and Sketching in 3-D

All of the above considerations for 2-D apply in 3-D. Pointing is inherently more fatiguing, since one must hold the hand up. Finger-wrist-elbow movements are much more precise than hand-elbow-shoulder movements, so one wants a primary working volume where both elbows rest most of the time.9

Specifying arbitrary spatial regions and their rotations is more difficult than specifying objects and their positioning. In conversation we usually specify arbitrary regions with two hands in motion: “The cloud was shaped like this.” That’s how our Dream System should work. Much work has been done on widgets and affordances for 3-D specification.10 Much of the work aims to yield freehand specification; much of it just provides more awkward substitutes for it.

Specifying Text

Most text blocks will be short strings, mostly names and dimensions, on the design drawings. Voice recognition is the tool of choice for these, as with verbs.

Specifications will consist of blocks of text, standard paragraphs chosen from a database and parameterized. For creating new substantial text, dictation has largely died out because one can type and edit paragraphs much faster than one can dictate and edit. For both of these kinds of text tasks, one needs an alphanumeric keyboard.

In our UNC GRIP system, we found it advantageous to slide the keyboard away under the work surface. In practice, it stayed there most of the time; I would expect the same to be true in the Dream System.

Specifying Adverbs

“Move.”

“Which way? How far? To where?”

“Rotate.”

“Which way? How far?”

“Duplicate.”

“How many? Which direction? Spaced how?”

“Select door and scale.”

“How wide?”

The command dialog consists of not only verbs and nouns; most verbs have adverbial modifiers, usually prepositional phrases.11
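The dialog above suggests a table of the adverbial slots each verb expects. The sketch below lists the questions still unanswered for a command, and lets the prompting be switched off for experts. The slot names and the `FOLLOW_UPS` table are assumptions made for illustration.

```python
# Hypothetical slot names; the questions are those of the dialog above.
FOLLOW_UPS = {
    "move": {"direction": "Which way?", "distance": "How far?"},
    "rotate": {"direction": "Which way?", "angle": "How far?"},
    "duplicate": {
        "count": "How many?",
        "direction": "Which direction?",
        "spacing": "Spaced how?",
    },
}


def follow_ups(verb, given, prompting=True):
    """Return the questions for adverbial slots not yet supplied.

    given:     dict of slots the designer has already specified.
    prompting: experts can turn the questions off entirely.
    """
    if not prompting:
        return []
    slots = FOLLOW_UPS.get(verb.lower(), {})
    return [question for slot, question in slots.items() if slot not in given]
```

For example, `follow_ups("Rotate", {"direction": "clockwise"})` leaves only "How far?" to ask.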

Most Such Adverbs Will Be Quantitative

Moreover, those must be precise; pointing on a tablet rarely suffices. Much of this precision is specified indirectly by auxiliary verbs: Snap To, Align With, and so on.

Much is specified by selection from limited menus: color palettes, materials lists, finish schedules. Menu selection is cognitively and economically cheap. House designs, like computer memory accesses, exhibit very strong locality—for any given design decision, there may be many independent choice alternatives, but most choices are made from a small subset. Customizable menus are essential.
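The locality argument can be illustrated with a menu that promotes whatever a designer actually picks. This is a sketch under assumptions: the `Palette` class, its `size` parameter, and the ranking rule (most used first) are hypothetical, not taken from any real CAD system.

```python
from collections import Counter


class Palette:
    """A short personal menu drawn from a longer list of options."""

    def __init__(self, options, size=3):
        self.options = list(options)
        self.size = size
        self.uses = Counter()

    def choose(self, option):
        """Record one use of an option from the full list."""
        if option not in self.options:
            raise KeyError(option)
        self.uses[option] += 1

    def short_menu(self):
        """Most-used options first; ties keep their original order."""
        ranked = sorted(self.options, key=lambda o: -self.uses[o])
        return ranked[: self.size]
```

After a few choices the short menu holds the small subset from which most selections are made, while the full list remains available.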

The remaining quantitative precision is best specified by a numeric keyboard. In our experience, this gets much use. One doesn’t want to put it away as one does the alphanumeric keyboard.

Specifying Viewpoint and View

Most creative architectural work is done on plans and sections, but one checks the work by looking at the 3-D design whole, and from many vantages including walking through it. Specifying the current view of a 3-D house design is an important special case of noun + adjective specification.

One changes some viewing parameters continually and some infrequently. This distinction is not the same as dynamic versus static. One wants even infrequently changed parameters to change dynamically and smoothly.

Interior Views

In simulating a walk through a house, x, y location and head yaw change continually. One slides a viewpoint about on a 2-D drawing representing one building floor and turns the gaze in the same plane.

Eye height above the floor changes rarely. Most often, one moves to the same height on a different floor. Infrequently, one adjusts eye height proper, to fit a different designer or to envision the perspectives of different users.

Roll is rarely changed. We just don’t roll our heads much. Pitch movements are much more common—look down, look up—but they are far less frequent than x, y and yaw movements.

The EyeBall

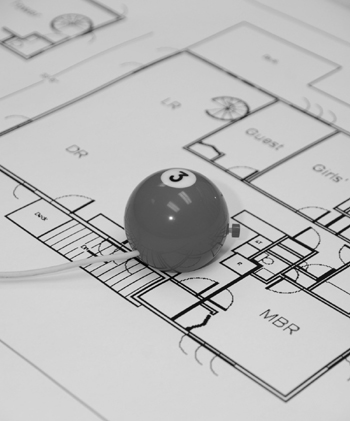

We found a special I/O device to be ideal for specifying interior views in architecture. The EyeBall consists of a six-degrees-of-freedom tracker housed in a billiard ball whose bottom half inch has been sliced off. It is equipped with two buttons readily pushed by the forefinger and the middle finger (Figure 17-2). One glides it over a work surface to move a V-shaped cursor on the plan.

Figure 17-2 UNC–Chapel Hill EyeBall viewpointer device

Kelli Gaskill, University of North Carolina

This device enables natural continual specification of all six view parameters—viewpoint (3 parameters), view direction (2 parameters), and view roll (1 parameter)—while heavily favoring x, y, yaw. The forefinger’s button is that of a one-button mouse; the other button is a clutch. One moves the viewpoint to an upper floor by raising the EyeBall, clutching, lowering it to the table, and unclutching. Eye height above the floor stays unchanged. A nice property is that the viewpoint continually moves in z, as if one is in a glass elevator; there is no visual discontinuity, so one doesn’t get lost in the model so often.
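The clutch behavior described above can be sketched for the z axis alone. The assumptions here are that the device height has already been scaled into model units and that a single offset is re-anchored on release; the class and method names are hypothetical.

```python
class ClutchedHeight:
    """Tracks viewpoint height from a device that can be clutched and re-seated."""

    def __init__(self, device_z=0.0):
        self.device_z = device_z
        self.offset = 0.0
        self.clutched = False
        self.view_z = device_z

    def press_clutch(self):
        # While clutched, device motion no longer moves the viewpoint.
        self.clutched = True

    def release_clutch(self):
        # Re-anchor so the viewpoint does not jump when tracking resumes.
        self.offset = self.view_z - self.device_z
        self.clutched = False

    def move_device(self, z):
        self.device_z = z
        if not self.clutched:
            self.view_z = self.offset + z
        return self.view_z
```

Raising the device moves the viewpoint smoothly upward; clutching, lowering, and releasing leaves the viewpoint on the upper floor with the device back on the table.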

The EyeBall has another mode of use besides gliding over plans to specify interior views. Assume one always has, as a context index, a 3-D model on one side of one’s workstation, with a “You are here” light showing viewpoint. Then, one can easily point within this index and say, “Put me there, looking that way.”12

In either mode, eye height is set by a slider that is rarely touched. Two buttons default it to that of the 5th-percentile woman or the 95th-percentile man.

Exterior Views

The “Toothpick” Viewpointer

As I elaborate in the next chapter, any design workstation needs multiple views of the object being designed: the workpiece on which one is manipulating plus context views.

For a house, one context view may be a view from the outside, or a view of a whole room or interior wall. For specifying an arbitrary exterior view of an object, we have found the most useful device to be a two-degrees-of-freedom positional joystick, as shown in Figure 17-3.

Figure 17-3 Toothpick viewpoint device; copy of a UNC device made by Professor Charles Molnar’s group at Washington University, St. Louis

This is readily manipulated, usually by the left hand, to specify the direction from which one is looking at the object. The rest position has the Toothpick pointing to the user. To give a full 4π-steradian selection of views, we doubled the angle of displacement of the Toothpick from its rest position. Thus, when it is moved completely to the left or right, one’s view moves around the object to look at it from the very back. Our users found this doubling easy and natural, rather to my surprise. This is supplemented with frequently used default buttons specifying the four elevations and some three-quarter views (from the corner of an aligned encompassing cube), and a rarely used slider that specifies the viewing distance.
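The angle doubling can be written out as a short calculation. This sketch assumes the stick can tilt at most 90 degrees from its rest position, and it uses a coordinate convention chosen here for illustration: +z runs from the object toward the user, +x to the user's right, +y up. The function name is hypothetical.

```python
import math


def toothpick_view_direction(tilt_deg, bearing_deg):
    """Unit vector from the object toward the eye.

    tilt_deg:    stick displacement from rest, 0 to 90 degrees (assumed range).
    bearing_deg: which way the stick leans, measured from +x toward +y.
    """
    if not 0.0 <= tilt_deg <= 90.0:
        raise ValueError("tilt must lie between 0 and 90 degrees")
    view = math.radians(2.0 * tilt_deg)  # the doubling: 90 degrees of tilt -> 180 of view
    bearing = math.radians(bearing_deg)
    return (
        math.sin(view) * math.cos(bearing),
        math.sin(view) * math.sin(bearing),
        math.cos(view),
    )
```

With the stick at rest the eye is directly in front of the object; at full tilt in any direction the eye is directly behind it, so the whole sphere of views is reachable.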

Depth Perception

Massing, the relative placement of filled and empty volumes, is an important consideration in architectural esthetics. Perceiving it requires 3-D perception. The most powerful depth cue is the kinetic depth effect, the relative motion of eye and scene. This cue is stronger even than stereopsis, and the two together are very powerful.

So how does one specify the motion wanted? Clearly the EyeBall can be used to move the viewpoint arbitrarily, but that requires work. One would like to study massing while thinking. On the UNC–Chapel Hill GRIP Molecular Graphics System, we found that rocking the entire scene about the vertical axis greatly helped perception while thinking, so we provided a rocking mode in which the scene behaved like a torsion pendulum whenever no action was being taken. The controls enabled the user to specify both the amplitude and the period of the rocking, although usually default values were used.
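A rocking mode of this kind can be sketched as a sinusoidal yaw about the vertical axis that starts whenever the user goes idle. The default amplitude and period below are invented placeholders, not the GRIP values, and the function name is hypothetical.

```python
import math


def rocking_angle(idle_seconds, amplitude_deg=15.0, period_s=4.0):
    """Scene yaw in degrees after the user has been idle for `idle_seconds`.

    amplitude_deg and period_s are user-adjustable; the defaults are assumptions.
    """
    if idle_seconds <= 0:
        return 0.0  # no rocking while the user is acting
    return amplitude_deg * math.sin(2.0 * math.pi * idle_seconds / period_s)
```

Calling this once per frame with the time since the last user action gives a scene that swings smoothly to either side and passes through its rest orientation each half period.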

Notes and References

1. Brooks [1977], “The computer ‘scientist’ as toolsmith”; Britton [1981], The GRIP-75 Man-Machine Interface; and Brooks [1985], “Computer graphics for molecular studies,” describe the system. The user interface was remarkable in that it had 21 degrees of freedom for modifying the model and controlling the display, plus keyboards. All were in fact used. No device had more than three degrees of freedom; there was no device overloading. Users instinctively reached to known locations to change values.

2. Ullman [1962], “The foundations of the modern design environment,” in Cross [1962b], Research in Design Thinking.

3. Alexander, Notes on the Synthesis of Form [1964], A Pattern Language [1977], and The Timeless Way of Building [1979], are all highly relevant here.

4. Turner Whitted, personal communication [1986].

5. Computer graphics also used progressive refinement in scene illumination, back when computers were slow. In the first 1/30 second, one renders an image with simple ambient lighting. In subsequent frame times, one iteratively calculates the more sophisticated radiositized illumination. The visual effect is dramatic—in a building interior image, for example, the shadows flee away to the corners of a room as the lighting sweetens from ambient to radiositized.

6. See, for example, the 40 styles of houses at http://www.houseplans.net/house-plans-with-photos/ (accessed July 30, 2009).

7. Buxton [1986], “A study in two-handed input.”

8. Goel [1991], “Sketches of thought.”

9. Arthur [1998], “Designing and building the PIT.”

10. For example, Conner [1992], “Three-dimensional widgets.”

11. One can imagine building the system so that it asks such follow-up questions after a noun or verb is incompletely specified. If so, one must be able to turn it off; expert designers will know implicitly what must come next, and the distraction would be intrusive.

12. Stoakley [1995], “Virtual reality on a WIM.”