Chapter 2. Contrasting Technologies

How do service meshes contrast with one another? How do service meshes contrast with other related technologies?

You might already have a healthy understanding of the technologies within the service mesh ecosystem, like API gateways, ingress controllers, container orchestrators, client libraries, and so on. But how do these technologies relate to, overlap with, or deploy alongside service meshes? Where do service meshes fit in, and why are there so many of them?

Let’s begin with an examination of client libraries, the predecessor to the service mesh.

Client Libraries

Client libraries (sometimes referred to as microservices frameworks) became very popular when microservices gained a foothold in modern application design, as a means to avoid rewriting the same logic in every service. Example frameworks include the following:

- Twitter Finagle

  An open source remote procedure call (RPC) library built on Netty for engineers who want a strongly typed language on the Java Virtual Machine (JVM). Finagle is written in Scala.

- Netflix Hystrix

  An open source latency and fault tolerance library designed to isolate points of access to remote systems, services, and third-party libraries; stop cascading failure; and enable resilience. Hystrix is written in Java.

- Netflix Ribbon

  An open source Inter-Process Communication (IPC) library with built-in software load balancers. Ribbon is written in Java.

- Go kit

  An open source toolkit for building microservices (or elegant monoliths) with RPC as the primary messaging pattern and with pluggable serialization and transport. Go kit is written in Go.

Other examples include Dropwizard, Spring Boot, Akka, and so on.

Note

See the Layer5 service mesh landscape for a comprehensive perspective on and characterization of all popular client libraries.

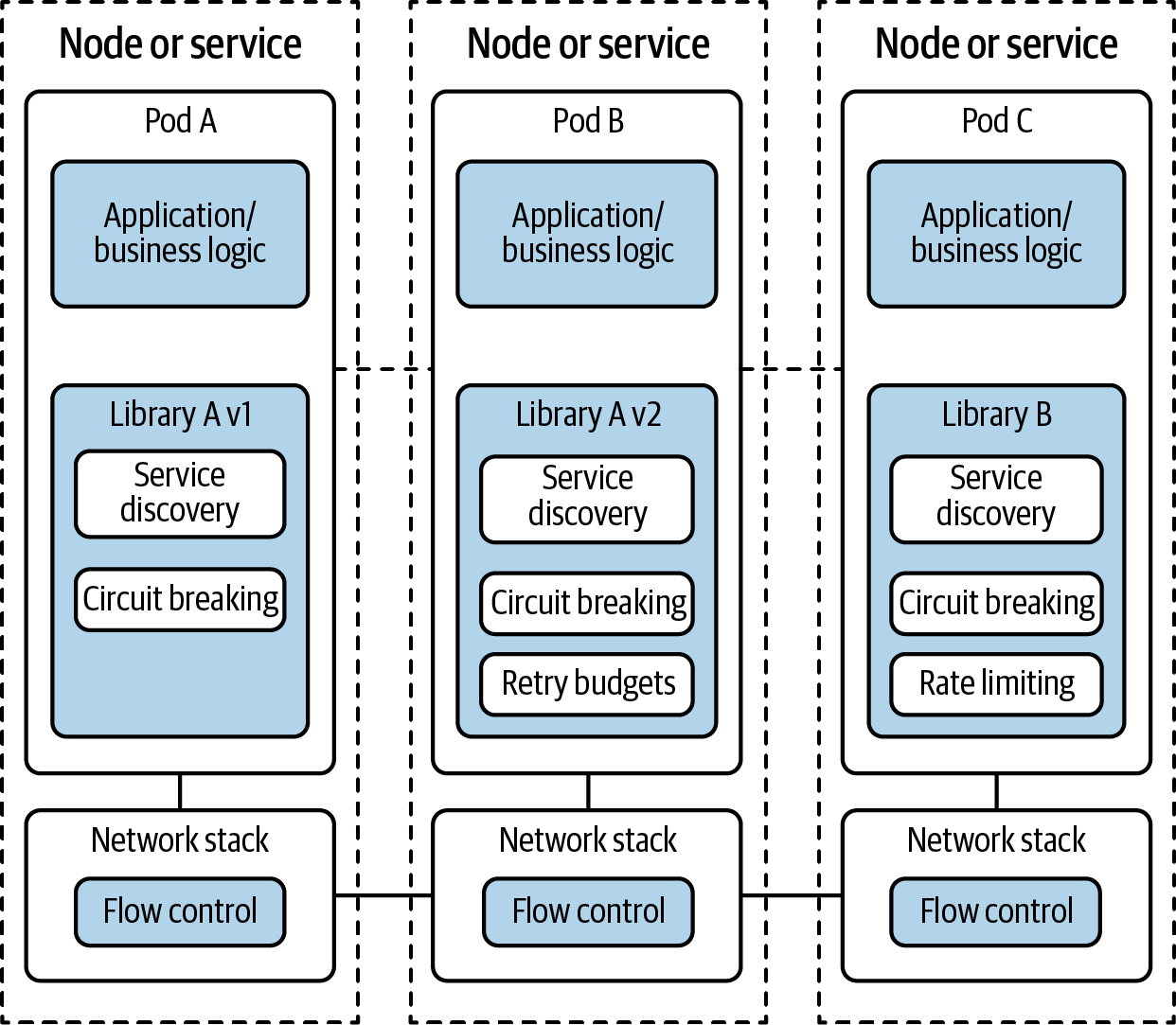

Prior to the general availability of service meshes, developers commonly turned to language-specific microservices frameworks to improve the resiliency, security, and observability of their services. The problem with client libraries is that their use means embedding infrastructure concerns into your application code. In the presence of a service mesh, services that embed the same client library carry duplicative code. Services that embed different client libraries, or different versions of the same client library, suffer from inconsistency in what each framework or version provides and in how the libraries behave. Use of different client libraries is most often seen in environments with polyglot microservices.

Getting teams to update their client libraries can be an arduous process. When these infrastructure concerns are embedded into your service code, you need to chase down your developers to update and/or reconfigure these libraries, of which there might be a few in use and used to varying degrees. Aligning on the same client library, client library version, and client library configuration can consume time unnecessarily. Enforcing consistency is challenging. These frameworks couple your services with the infrastructure, as seen in Figure 2-1.

Figure 2-1. Services architecture using client libraries coupled with application logic

Note

Astute developers understand that service meshes not only alleviate many distributed systems concerns but also greatly enable runtime application logic, further removing the need for developers to spend time on concerns not core to the business logic they truly need to advance. The concept of service meshes providing and executing application and business logic is an advanced topic that is lightly covered in Chapter 4.

When infrastructure code is embedded in the application, different services teams must get together to negotiate things like timeouts and retries. A service mesh decouples not only infrastructure code from application code, but more importantly, it decouples teams. Service meshes are generally deployed as infrastructure that resides outside your applications, but with their adoption mainstreaming, this is changing, and their use for influencing or implementing business logic is on the rise.
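To make the coupling concrete, here is a minimal sketch of the kind of retry-and-deadline policy that a client library typically embeds in each service. It is not taken from any of the frameworks named above; `RetryPolicy`, `call_with_retries`, and `flaky_inventory_lookup` are hypothetical names used only for illustration.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class RetryPolicy:
    """Hypothetical policy object; each service team tunes its own copy."""
    max_attempts: int = 3
    backoff_seconds: float = 0.0
    deadline_seconds: float = 1.0


def call_with_retries(operation: Callable[[], T], policy: RetryPolicy) -> T:
    """Retry `operation` until it succeeds, attempts run out, or the deadline passes."""
    started = time.monotonic()
    last_error: Optional[Exception] = None
    for attempt in range(1, policy.max_attempts + 1):
        if time.monotonic() - started > policy.deadline_seconds:
            break  # overall deadline exceeded; stop retrying
        try:
            return operation()
        except ConnectionError as error:
            last_error = error
            if attempt < policy.max_attempts:
                time.sleep(policy.backoff_seconds * attempt)  # linear backoff
    raise TimeoutError("remote call did not succeed") from last_error


# Stand-in for a remote call that fails twice before succeeding.
attempts = []


def flaky_inventory_lookup() -> str:
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("inventory service unavailable")
    return "in stock"


result = call_with_retries(flaky_inventory_lookup, RetryPolicy(max_attempts=3))
```

Every value in `RetryPolicy` lives in application code here, so changing a timeout means changing, rebuilding, and redeploying the service. With a service mesh, the same policy would usually be expressed as proxy configuration outside the application.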

API Gateways

How do API gateways interplay with service meshes?

This is a very common question, and the nuanced answer puzzles many, particularly given that within the category of API gateways lies a subspectrum. API gateways come in a few forms:

- Traditional (e.g., Kong)
- Cloud-hosted (e.g., Azure Load Balancer)
- L7 proxy used as an API gateway and microservices API gateways (e.g., Traefik, NGINX, HAProxy, or Envoy)

L7 proxies used as API gateways generally can be represented by a collection of microservices-oriented, open source projects, which have taken the approach of wrapping existing L7 proxies with additional features needed for an API gateway.

NGINX

As a stable, efficient, ubiquitous L7 proxy, NGINX is commonly found at the core of API gateways. It may be used on its own or wrapped with additional features to facilitate container orchestrator native integration or additional self-service functionality for developers. For example:

- API Umbrella uses NGINX
- Kong uses NGINX
- OpenResty uses NGINX

Envoy

The Envoy project has also been used as the foundation for API gateways:

- Ambassador
  - With its basis in Envoy, Ambassador is an API gateway for microservices that functions standalone or as a Kubernetes Ingress Controller.
  - Open source. Written in Python. From Ambassador Labs. Ambassador is currently proposed to join the CNCF.
- Contour
  - Based on Envoy and deployed as a Kubernetes Ingress Controller. Hosted in the CNCF.
  - Open source. Written in Go. From Heptio. Contour joined the CNCF in July 2020.
- Enroute
  - Envoy Route Controller. API gateway created for Kubernetes Ingress Controller and standalone deployments.
  - Open source. Written primarily in Go. From Saaras.

Additional differences between traditional API gateways and microservices API gateways revolve around which team is using the gateway: operators or developers. Operators tend to focus on measuring API calls per consumer, metering usage and disallowing calls when a consumer exceeds its quota. Developers, on the other hand, tend to measure L7 latency, throughput, and resilience, limiting API calls when a service is not responding.
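The operator-oriented behavior described above, metering calls per consumer and rejecting calls over quota, can be sketched in a few lines. This is an illustrative assumption rather than how any particular gateway implements it; `QuotaMeter` and the consumer IDs are hypothetical, and a real gateway would also reset counts per billing or rate window.

```python
from collections import Counter


class QuotaMeter:
    """Counts calls per consumer and rejects calls beyond a fixed quota."""

    def __init__(self, quota_per_consumer: int) -> None:
        self.quota_per_consumer = quota_per_consumer
        self.calls = Counter()  # consumer ID -> number of admitted calls

    def allow(self, consumer_id: str) -> bool:
        """Return True and record the call if the consumer is under quota."""
        if self.calls[consumer_id] >= self.quota_per_consumer:
            return False
        self.calls[consumer_id] += 1
        return True


meter = QuotaMeter(quota_per_consumer=2)
decisions = [meter.allow("partner-a") for _ in range(3)]
other_consumer_allowed = meter.allow("partner-b")
```

The third call from `partner-a` is refused while `partner-b` is unaffected, which is the per-consumer view an operator cares about. A developer-oriented gateway would instead key its decisions on the health of the upstream service.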

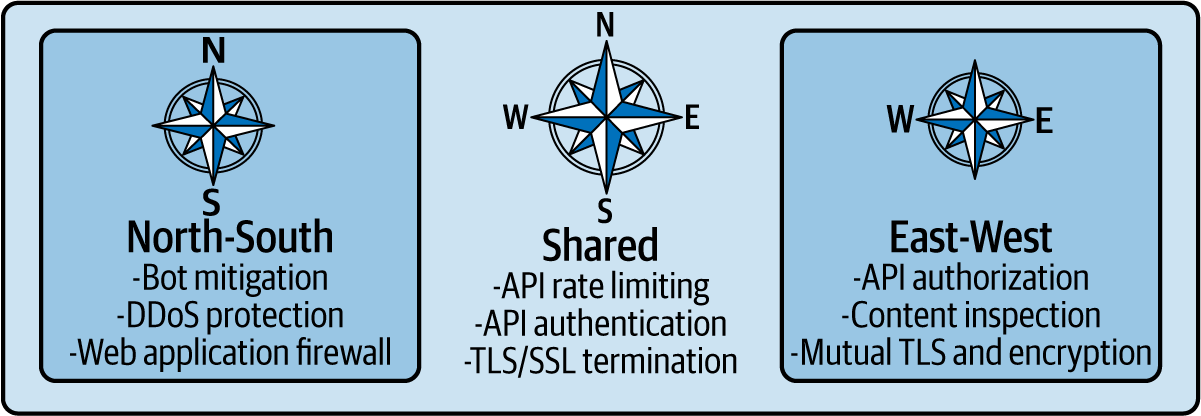

With respect to service meshes, one of the more notable lines of delineation is that API gateways, in general, are designed for accepting traffic from outside of your organization/network and distributing it internally. API gateways expose your services as managed APIs, focused on transiting north-south traffic (in and out of the service mesh). They aren’t as well suited for traffic management within the service mesh (east-west) because they require traffic to travel through a central proxy and add a network hop. Service meshes are designed foremost to manage east-west traffic internal to the service mesh.

Note

North-south (N-S) traffic refers to traffic between clients outside the Kubernetes cluster and services inside the cluster, while east-west (E-W) traffic refers to traffic between services inside the Kubernetes cluster.

Given their complementary nature, API gateways and service meshes are often found deployed in combination. Service meshes are on a path to ultimately offering much, if not all, of the functionality provided by API gateways.

API Management

API gateways complement other components of the API management ecosystem, such as API marketplaces and API publishing portals—both of which are surfacing in service mesh offerings. API management solutions provide analytics, business data, adjunct provider services like single sign-on, and API versioning control. Many of the API management vendors have moved API management systems to a single point of architecture, designing their API gateways to be implemented at the edge.

An API gateway can call downstream services via service mesh by offloading application network functions to the service mesh. Some API management capabilities that are oriented toward developer engagement can overlap with service mesh management planes in the following ways:

- A developer portal for discovering available APIs, reviewing API documentation, and exercising code against the APIs in a sandbox.
- API analytics for tracking KPIs and generating reports on usage and adoption trends.
- API life-cycle management to secure APIs (allocate keys) and promote or demote APIs.
- Monetization for tracking payment plans and enforcing quotas.

Today, there’s overlap and underlap among service mesh capabilities and API gateways and API management systems. The overlap is rapidly increasing as service meshes are deployed and API management functionality is brought into the service mesh—which makes intuitive sense. In the presence of a service mesh, why run API gateways and API management systems separately? As service meshes gain new capabilities, use cases (shown in Figure 2-2) will overlap more and more.

Figure 2-2. The underlap and overlap of API gateway and service proxy security functions by traffic direction

Container Orchestrators

Why is my container orchestrator not enough? What if I’m not using containers? What do you need to continuously deliver and operate microservices? Leaving CI and deployment pipelines aside for the moment, you need much of what the container orchestrator provides at the infrastructure level, plus what it doesn’t provide at the services level. Table 2-1 takes a look at these capabilities.

| Core capabilities [a] | Missing service-level needs |
|---|---|
| Cluster management | L7 granular traffic routing |
| - Host discovery | - HTTP redirects |
| - Host health monitoring | - CORS handling |
| Scheduling | Circuit breaking |
| Orchestrator updates and host maintenance | Chaos testing |
| Service discovery | Canary deploys |
| Networking and load balancing | Timeouts, retries, budgets, deadlines |
| Stateful services | Per-request routing |
| Multitenant, multiregion | Backpressure |
| | Transport security (encryption) |
| | Quota management |
| | Protocol translation (REST, gRPC) |
| | Policy |

[a] Must have this to be considered a container orchestrator.

Additional key capabilities include simple application health and performance monitoring, application deployments, and application secrets.

Service meshes are a dedicated layer for managing service-to-service communication, whereas container orchestrators have necessarily had their start and focus on automating containerized infrastructure and overcoming ephemeral infrastructure and distributed systems problems. Applications are why we run infrastructure, though. Applications have been and are still the North Star of our focus. There are enough service and application-level concerns that additional platforms/management layers are needed.
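Circuit breaking, one of the service-level needs listed in Table 2-1, is a good example of logic that a container orchestrator does not supply. The following is a minimal sketch of the pattern, not the implementation used by any particular service proxy; `CircuitBreaker`, `CircuitOpenError`, and the injected `clock` are hypothetical names chosen for illustration.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    """Fails fast after repeated failures, then probes again after a cooldown."""

    def __init__(self, failure_threshold, reset_after_seconds, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_seconds = reset_after_seconds
        self.clock = clock  # injectable so the example needs no real waiting
        self.consecutive_failures = 0
        self.opened_at = None

    @property
    def state(self):
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_after_seconds:
            return "half-open"  # cooldown elapsed; allow a trial call
        return "open"

    def call(self, operation):
        if self.state == "open":
            raise CircuitOpenError("failing fast; upstream marked unhealthy")
        try:
            result = operation()
        except Exception:
            self.consecutive_failures += 1
            # A failed trial call, or too many failures in a row, opens the circuit.
            if self.state == "half-open" or self.consecutive_failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.consecutive_failures = 0
        self.opened_at = None
        return result


now = [0.0]  # fake clock value, advanced by hand below
breaker = CircuitBreaker(failure_threshold=2, reset_after_seconds=30.0, clock=lambda: now[0])


def failing_call():
    raise ConnectionError("upstream unavailable")


observed_states = []
for _ in range(2):
    try:
        breaker.call(failing_call)
    except ConnectionError:
        pass
    observed_states.append(breaker.state)

now[0] = 31.0  # pretend the cooldown has elapsed
observed_states.append(breaker.state)
recovered = breaker.call(lambda: "ok")
observed_states.append(breaker.state)
```

In a service mesh, this state machine typically lives in the service proxy and is driven by configuration, so every service gets the same behavior regardless of the language it is written in.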

Container orchestrators like Kubernetes have different mechanisms for routing traffic into the cluster. Ingress Controllers in Kubernetes expose the services to networks external to the cluster. Ingresses can terminate Secure Sockets Layer (SSL) connections, execute rewrite rules, and support WebSockets and sometimes TCP/UDP, but they don’t address the rest of service-level needs.

API gateways address some of these needs and are commonly deployed on a container orchestrator as an edge proxy. Edge proxies provide services with Layer 4 to Layer 7 management while using the container orchestrator for reliability, availability, and scalability of container infrastructure.

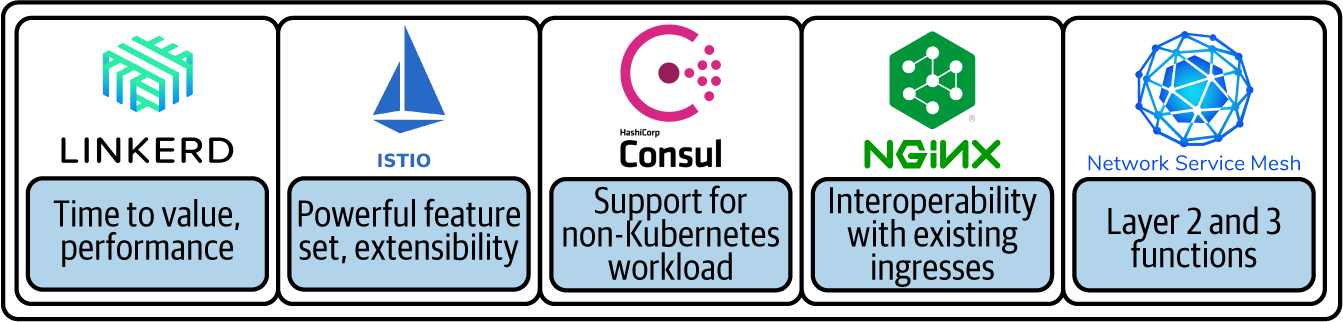

Service Meshes

In a world of many service meshes, you have a choice when it comes to which service mesh(es) to adopt. Many organizations will end up with more than one type of service mesh. Every organization I’ve been in has multiple hypervisors for running VMs, multiple container runtimes, different container orchestrators in use, and so on. Infrastructure diversity is a reality for enterprises.

This diversity is driven by a broad set of workload requirements. Workloads vary from those that are process-based to those that are event-driven in their design. Some run on bare metal, while others execute as functions. Others represent every style of deployment artifact (container, virtual machine, and so on) in between. Different organizations need different scopes of service mesh functionality. Consequently, different service meshes are built with slightly divergent use cases in mind, and their architecture and deployment models differ accordingly (see Figure 2-3).

Service meshes are capable of enabling cloud, hybrid, on-premises, and edge deployments, and their development is driven by the needs of each. Microservice patterns and technologies, together with the requirements of different edge devices and of ephemeral cloud-based workloads, provide myriad opportunities for service mesh differentiation and specialization. Cloud vendors also build their own service meshes or partner with others to offer a service mesh as a managed service on their platforms.

Figure 2-3. A comparative spectrum of the differences among some service meshes, based on their individual strengths

Open source governance and corporate interests dictate a world of multiple meshes, too. A huge range of microservice patterns drives service mesh opportunity. Open source projects and vendors create features to serve microservice patterns (they splinter the landscape and function differently), and some accommodate hybrid workloads. Noncontainerized workloads need to integrate and benefit from a service mesh as well.

As the number of microservices grows, so too does the need for service meshes, including meshes native to specific cloud platforms. This leads to a world where many enterprises use multiple service mesh products, whether separately or together.

Service Mesh Abstractions

Because there are any number of service meshes available, independent specifications have cropped up to provide abstraction and standardization across them. Three service mesh abstractions exist today:

- Service Mesh Performance (SMP)

  This is a format for describing and capturing service mesh performance. Created by Layer5; Meshery is the canonical implementation of this specification.

- Multi-Vendor Service Mesh Interoperation (Hamlet)

  This is a set of API standards for enabling service mesh federation. Created by VMware.

- Service Mesh Interface (SMI)

  This is a standard interface for service meshes on Kubernetes. Created by Microsoft; Meshery is the official SMI conformance tool used to ensure that a cluster is properly configured and that its behavior conforms to official SMI specifications.

Service Mesh Landscape

With a sense now of why it’s a multi-mesh world, let’s begin to characterize different service meshes. Some service meshes support noncontainerized workloads (services running on a VM or on bare metal), whereas some focus solely on layering on top of a container orchestrator, most notably Kubernetes. All service meshes support integration with service discovery systems. The subsections that follow provide a very brief survey of service mesh offerings within the current technology landscape.

Note

The list included here is neither exhaustive nor intended to be a detailed comparison but rather a simple overview of some of the available service meshes and related components. Many service meshes have emerged, and more will emerge after the publication of this book. See the Layer5 service mesh landscape for a comprehensive perspective and characterization of all of the service meshes, service proxies, and related tools available today. This landscape is community-maintained and contrasts service meshes so the reader can make the most informed decision about which service mesh best suits their needs.

Data Plane

Service proxies (gateways) are the elements of the data plane. How many are present is a function of both the number of services you’re running and the design of the service mesh’s deployment model. Some service mesh projects have built new proxies, while many others have leveraged existing proxies. Envoy is a popular choice as the data plane element.

- BFE

  BFE is a modern proxy written in Go and is now hosted by the CNCF. It supports different load balancing algorithms and multiple protocols, including HTTP, HTTPS, SPDY, HTTP/2, WebSocket, TLS, and FastCGI. BFE defines its own domain-specific language in which users can configure rule-based and content-based routing.

- Envoy

  Envoy is a modern proxy written in C++ and hosted by the CNCF. Envoy’s ability to hot reload both its configuration and itself (upgrade itself in place while handling connections) contributed to its initial popularity. Any number of projects have been built on top of Envoy, including API gateways, ingress controllers, service meshes, and managed offerings by cloud providers. Istio, App Mesh, Kuma, Open Service Mesh, and other service meshes (discussed in the Control Plane section) have been built on top of Envoy.

- Linkerd v2

  The Linkerd2-proxy is built specifically for the service mesh sidecar use case. Linkerd can be significantly smaller and faster than Envoy-based service meshes. The project chose Rust as the implementation language to be memory-safe and highly performant. This service proxy claims a sub-1ms p99 traffic latency. Open source. Written in Rust. From Buoyant.

- NGINX

  Launched in September 2017, the nginMesh project deploys NGINX as a sidecar proxy in Istio. The nginMesh project has been set aside as of October 2018. Open source. Written primarily in C and Rust. From NGINX.

The following are a couple of early, and now antiquated, service mesh–like projects, forming control planes around existing load balancers:

- SmartStack

  Comprising two components: Nerve for health-checking and Synapse for service discovery. Open source. From AirBnB. Written in Ruby.

- Nelson

  Takes advantage of integrations with Envoy, Prometheus, Vault, and Nomad to provide Git-centric, developer-driven deployments with automated build-and-release workflow. Open source. From Verizon Labs. Written in Scala.

Control Plane

Control plane offerings include the following:

- Consul
  - Announced its intention to become a service mesh in v1.5 and became a full service mesh in v1.8. Consul uses Envoy as its data plane and offers multicluster federation.
  - Open and closed source. From HashiCorp. Primarily written in Go.

- Linkerd

  Linkerd is hosted by the Cloud Native Computing Foundation (CNCF) and has undergone two major releases, with significant architectural changes and entirely different code bases between the two versions.

  - Linkerd v1
    - The first version of Linkerd was built on top of Twitter Finagle. Pronounced “linker-dee,” it includes both a proxying data plane and a control plane, Namerd (“namer-dee”), all in one package.
    - Open source. Written primarily in Scala.
    - Data plane can be deployed in a node proxy model (common) or in a proxy sidecar (not common). Proven scale, having served more than one trillion service requests.
    - Supports services running within container orchestrators and as standalone virtual or physical machines.
    - Service discovery abstractions to unite multiple systems.
  - Linkerd v2
    - The second major version of Linkerd is based on a project formerly known as Conduit, a Kubernetes-native and Kubernetes-only service mesh announced as a project in December 2017. In contrast to Istio and in learning from Linkerd v1, Linkerd v2’s design principles revolve around a minimalist architecture and zero configuration philosophy, optimizing for streamlined setup.
    - Open source. From Buoyant. Control plane written in Go. Hosted by the CNCF.
    - Support for gRPC, HTTP/2, and HTTP/1.x requests, plus all TCP traffic. Currently only supports Kubernetes.

- Istio
  - Announced as a project in May 2017, Istio is considered to be a “second explosion after Kubernetes” given its architecture and surface area of functional aspiration.
  - Supports services running within container orchestrators and as standalone virtual or physical machines.
  - Was the first service mesh to promote the model of supporting automatic injection of service proxies as sidecars using a Kubernetes admission controller.
  - Many projects have been built around Istio. Commercial, closed source offerings built around Istio include AspenMesh, VMware Tanzu Service Mesh, and Octarine (acquired by VMware in 2020).
  - Many projects have been built within Istio. Commercial, closed source offerings built inside Istio include Citrix Service Mesh. To be built “within Istio” means to offer the Istio control plane with an alternative service proxy. Citrix Service Mesh displaces Envoy with CPX.
  - An open source data plane proxy, MOSN released support for running under Istio as the control plane, while displacing Envoy as the service proxy.
  - Mesher
    - Layer 7 (L7) proxy that runs as a sidecar deployable on HUAWEI Cloud Service Engine.
    - Open source. Written primarily in Go. From HUAWEI.

- NGINX Service Mesh
  - Released in September 2020, NGINX Service Mesh is a more recent entrant into the service mesh arena. Its data plane is NGINX Plus, augmented to interface natively with Kubernetes, and it supports ingress and egress gateways through NGINX Plus Kubernetes Ingress Controllers. Using the Service Mesh Interface (SMI) specification as its API, NGINX Service Mesh presents its control plane as a CLI, meshctl.
  - Open and closed source. From NGINX. Primarily written in C.

Other examples include Open Service Mesh, Maesh, Kuma, and App Mesh.

Many other service meshes are available. This list is intended to give you a sense of the diversity of service meshes available today. See the community-maintained, Layer5 service mesh landscape page for a complete listing of service meshes and their details.

Management Plane

The management plane resides a level above the control plane and offers a range of potential functions spanning operational patterns, business systems integration, and enhancement of application logic, all while operating across different service meshes. Among its uses, a management plane can validate workload and mesh configuration, whether in preparation for onboarding a workload onto the mesh or in continuously vetting configuration as you update to new versions of the components running your control and data planes or to new versions of your applications. Management planes help organizations running a service mesh get the most out of their investment. One aspect of managing service meshes is performance management, a function at which Meshery excels.

- Meshery
  - The service mesh management plane for adopting, operating, and developing on different service meshes, Meshery integrates business processes and application logic into service meshes by deploying custom WebAssembly (WASM) modules as filters in Envoy-based data planes. It provides governance, policy and performance, and configuration management of service meshes with a visual topology for designing service mesh deployments and managing the fine-grained traffic control of a service mesh.
  - Open source. Created by Layer5. Primarily written in Go. Proposed for adoption in the CNCF.

Conclusion

Client libraries (microservices frameworks) come with their own set of challenges. Service meshes move these concerns into the service proxy and decouple them from the application code. API gateways are the technology with the most overlap in functionality, but they are deployed at the edge, not on every node or within every pod. As service mesh deployments evolve, I’m seeing an erosion of separately deployed API gateways and an inclusion of them within the service mesh. Between client libraries and API gateways, service meshes consolidate enough functionality to either diminish the need for them or replace them entirely with a single layer of control.

Container orchestrators have so many distributed systems challenges to address within lower-layer infrastructure that they’ve yet to holistically address services and application-level needs. Service meshes offer a robust set of observability, security, traffic, and application controls beyond that of Kubernetes. Service meshes are a necessary layer of cloud native infrastructure. There are many service meshes available along with service mesh specifications to abstract and unify their functionality.