Chapter 4. Customization and Integration

How do I fit a service mesh into my existing infrastructure, operational practices, and observability tooling?

Some service meshes are designed with simplicity as their foremost design principle. While simple isn’t necessarily synonymous with inflexible, there is a class of service meshes that are less extensible than their more powerful counterparts. Maesh, Kuma, Linkerd, and Open Service Mesh are designed with some customizability in mind; however, they generally focus on out-of-the-box functionality and ease of deployment. Istio is an example of a service mesh designed with customizability in mind. Service mesh extensibility comes in different forms: swappable sidecar proxies, telemetry and authorization adapters, identity providers (certificate authorities), and data plane filters.

Service mesh sidecar proxies are the real workhorse, doing the heavy lifting of traffic bytes and bits from one destination to the next. Their manipulation of packets prior to invoking application logic makes the transparent service proxies of the data plane an intriguing point of extensibility. Service proxies are commonly designed with extensibility in mind. While they are customizable in many different ways (e.g., access logging, metric plug-ins, custom authentication and authorization plug-ins, health-checking functions, rate-limiting algorithms, and so on), let’s focus our exploration on the ways service proxies are customizable by way of creating custom traffic filters (modules).

The Power of the Data Plane

Control planes bring much-needed element management to operators. Data planes composed of any number of service proxies need control planes to go about the task of applying service mesh-specific use cases to their fleet of service proxies. Configuration management, telemetry collection, infrastructure-centric authorization, identity, and so on are common functions delivered by a control plane. However, a control plane’s true power is drawn largely from the service proxy. Users commonly find themselves in need of customizing the chain of traffic filters (modules) that service proxies use to do much of their heavy lifting. Different technologies are used to provide data plane extensibility and, consequently, additional custom data plane intelligence, including:

- Lua: A scripting language for execution inside a Just-In-Time compiler, LuaJIT

- WebAssembly (WASM): A virtual stack machine as a compilation target for different languages to use as an execution environment
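To make the idea of a chain of traffic filters concrete, here is a minimal sketch in Python. It is not how any particular proxy implements its filter chain; the `Request`, `Response`, `require_auth`, `add_trace_header`, and `run_chain` names are hypothetical and exist only for illustration. Each filter sees the request before the application does and can either pass it along or answer on the application's behalf.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Request:
    path: str
    headers: Dict[str, str] = field(default_factory=dict)


@dataclass
class Response:
    status: int
    body: str = ""


# A filter inspects (and may mutate) the request. Returning a Response
# stops the chain early; returning None hands off to the next filter.
Filter = Callable[[Request], Optional[Response]]


def add_trace_header(req: Request) -> Optional[Response]:
    # Fixed ID for illustration only; a real proxy would generate one.
    req.headers.setdefault("x-request-id", "req-0001")
    return None


def require_auth(req: Request) -> Optional[Response]:
    if "authorization" not in req.headers:
        return Response(401, "missing credentials")
    return None


def run_chain(filters: List[Filter], req: Request,
              upstream: Callable[[Request], Response]) -> Response:
    """Run each filter in order, then call the application (upstream)."""
    for traffic_filter in filters:
        early = traffic_filter(req)
        if early is not None:
            return early  # the application logic is never invoked
    return upstream(req)


def echo_service(req: Request) -> Response:
    # Hypothetical application sitting behind the proxy.
    return Response(200, f"hello from {req.path}")
```

Calling `run_chain([add_trace_header, require_auth], Request("/cart"), echo_service)` rejects the request inside the chain, which is the kind of logic a service can shed to the data plane.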

NGINX and Lua

NGINX provides the ability to write dynamic modules that can be loaded at runtime based on configuration files. These modules can be unloaded by editing the configuration files and reloading NGINX. NGINX supports embedding custom logic into dynamic modules using Lua.

Lua is a lightweight, embeddable scripting language that supports procedural, functional, and object-oriented programming. Lua is dynamically typed and runs by interpreting bytecode with a register-based virtual machine.

NGINX provides the ability to integrate dynamic Lua scripts using the ngx_lua module. Using NGINX with ngx_lua helps you offload logic from your services and hand their concerns off to an intelligent data plane. Leveraging NGINX’s subrequests, the ngx_lua module allows the integration of Lua threads (or coroutines) into the NGINX event model. Instead of passing logic to an upstream server, the Lua script can inspect and process service traffic. ngx_lua modules can be chained to be invoked at different phases of NGINX request processing.

Envoy and WebAssembly

WebAssembly, or WASM, is an open standard that defines a binary format for executable programs. Through WebAssembly System Interface (WASI), it also defines interfaces for facilitating interaction with host environments. The initial focus of these host environments was browsers and large web applications with the intention of securely running programs to improve performance. As an open standard, WASM is maintained by the W3C and has been adopted by all modern browsers. After HTML, CSS, and JavaScript, WebAssembly is the fourth language to natively run in web browsers.

WASM support is coming to Envoy through the efforts of Google and Envoy maintainers embedding Google’s open source high-performance JavaScript and WebAssembly engine, V8, into Envoy. Through the WebAssembly System Interface, Envoy exposes an Application Binary Interface (ABI) to WASM modules so that they can operate as Envoy filters. The way WASI works is straightforward. You write your application in your favorite language, such as Rust, C, or C++. Then, you compile it into a WebAssembly binary targeting the host environment. The generated binary requires the WebAssembly runtime to provide the necessary interfaces to system calls for the binary to execute. Conceptually, this is similar to the JVM. If you have a JVM installed, you can run any language that compiles to Java bytecode on it. Similarly, with a runtime, you can run the WebAssembly binary.
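The host and module relationship described above can be sketched in a few lines of Python. This is a conceptual model only, not Envoy's actual ABI: `HostABI`, `ProxyHost`, `TenantTagger`, and the `on_request_headers` callback are hypothetical names chosen for this illustration. The point is that the module never reaches system resources directly; it can only call what the host chooses to expose.

```python
from typing import Dict, List, Protocol


class HostABI(Protocol):
    """The narrow set of calls the host agrees to provide to a module."""

    def get_header(self, name: str) -> str: ...
    def set_header(self, name: str, value: str) -> None: ...
    def log(self, message: str) -> None: ...


class ProxyHost:
    """Stands in for the proxy: owns request state and exposes host calls."""

    def __init__(self, headers: Dict[str, str]) -> None:
        self.headers = {k.lower(): v for k, v in headers.items()}
        self.logs: List[str] = []

    def get_header(self, name: str) -> str:
        return self.headers.get(name.lower(), "")

    def set_header(self, name: str, value: str) -> None:
        self.headers[name.lower()] = value

    def log(self, message: str) -> None:
        self.logs.append(message)

    def dispatch(self, module) -> str:
        # The host decides when the module runs and hands it only the ABI.
        return module.on_request_headers(self)


class TenantTagger:
    """Stands in for a compiled module: it acts only through the host."""

    def on_request_headers(self, host: HostABI) -> str:
        tenant = host.get_header("x-tenant") or "unknown"
        host.set_header("x-tenant-tag", f"tenant={tenant}")
        host.log(f"tagged request for {tenant}")
        return "continue"
```

In a real deployment the module would be a compiled WebAssembly binary and the host calls would cross the runtime boundary; here both sides are ordinary Python objects so that the contract between them is easy to see.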

Envoy provides the ability to integrate additional filters in one of two ways:

- Natively, by incorporating your custom filter into Envoy’s C++ source code and compiling a new Envoy version. The drawback is that you need to maintain your own version of Envoy, while the benefit is that your custom filter runs at native speed.

- Via WASM, by incorporating your custom filter as a WebAssembly binary written in C++, Rust, AssemblyScript, or Go. The drawback is that WASM-based filters incur some overhead, while the benefit is that you can dynamically load and reload WASM-based filters in Envoy at runtime.

Envoy configuration is initialized via bootstrap on startup. Envoy’s xDS APIs allow configuration to be loaded and reloaded dynamically during runtime, as shown in Figure 4-1. Envoy configuration has different sections (e.g., LDS, which is for configuring listeners, and CDS, which is for configuring clusters). Each of the sections can configure WASM plug-ins (programs).

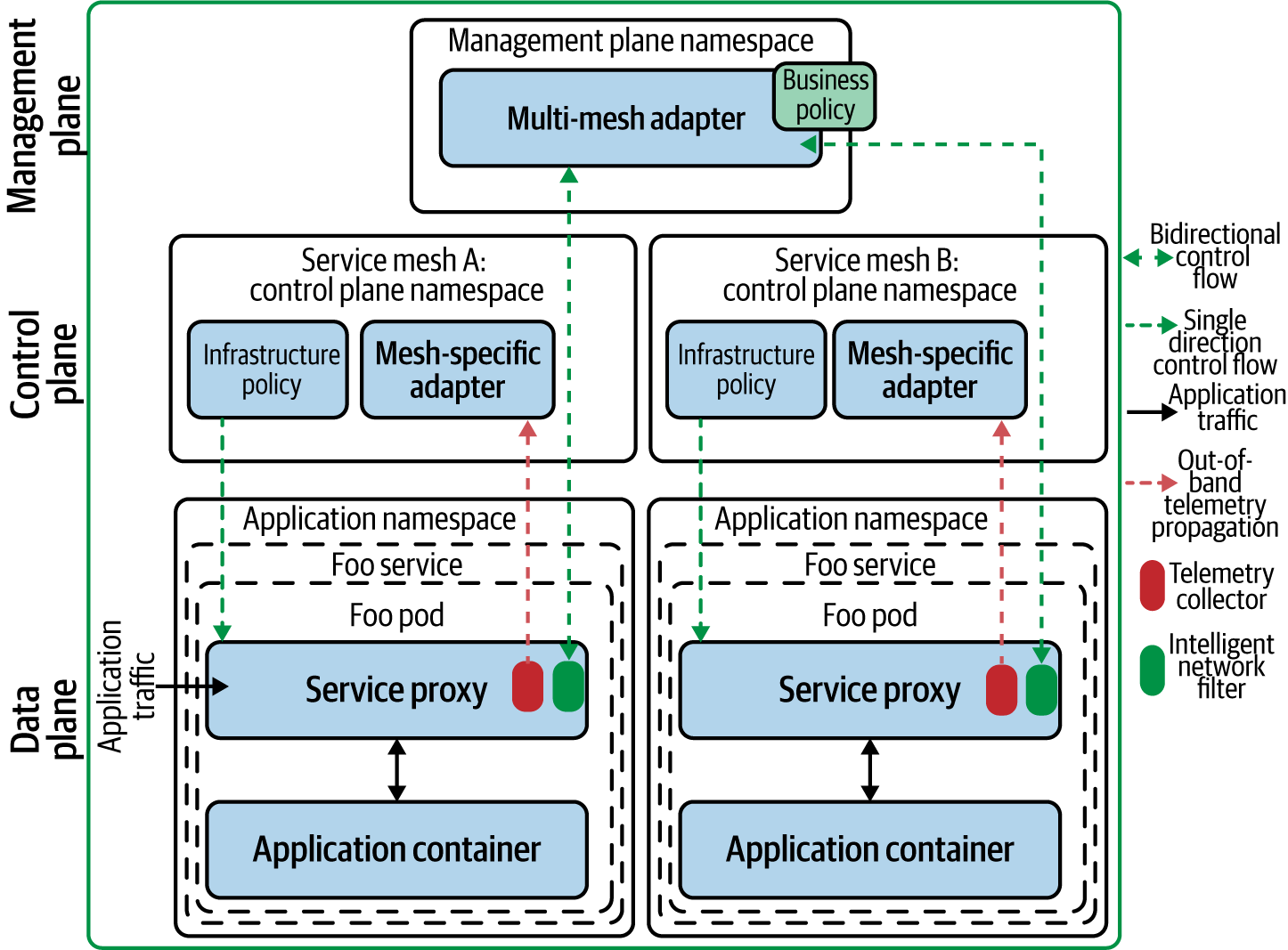

Figure 4-1. How the intelligence of the service mesh management plane and the power of the service mesh data plane combine to deliver application infrastructure logic
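The split between bootstrap configuration and dynamic updates can be modeled roughly as follows. This is a simplified sketch, not Envoy's xDS protocol: the `ProxyConfig` class, its integer version numbers, and the `wasm_filters` key are assumptions made for illustration. It shows one section (here, listeners under LDS) being replaced at runtime while the other sections stay untouched.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ProxyConfig:
    # Section name ("LDS", "CDS") -> named resources in that section.
    resources: Dict[str, Dict[str, dict]] = field(default_factory=dict)
    # Section name -> last applied version (integers are an assumption).
    versions: Dict[str, int] = field(default_factory=dict)

    @classmethod
    def from_bootstrap(cls, bootstrap: Dict[str, Dict[str, dict]]) -> "ProxyConfig":
        """Initialize every section from static bootstrap data at startup."""
        cfg = cls()
        for section, items in bootstrap.items():
            cfg.resources[section] = dict(items)
            cfg.versions[section] = 0
        return cfg

    def apply_update(self, section: str, version: int,
                     items: Dict[str, dict]) -> bool:
        """Replace one section at runtime; ignore stale or repeated versions."""
        if version <= self.versions.get(section, -1):
            return False
        self.resources[section] = dict(items)
        self.versions[section] = version
        return True


cfg = ProxyConfig.from_bootstrap({
    "LDS": {"ingress": {"port": 8080}},
    "CDS": {"cart": {"endpoints": ["10.0.0.5:9000"]}},
})

# A later listener update attaches a (hypothetical) WASM plug-in without
# restarting the proxy or touching the cluster section.
cfg.apply_update("LDS", 1, {
    "ingress": {"port": 8080, "wasm_filters": ["tenant_tagger.wasm"]},
})
```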

WebAssembly is exciting in part because of its performance characteristics, which vary based on the type of program/filter being used. Some run between 10% and 20% overhead as compared to natively executed code for network filtering use cases (see “The Importance of Service Mesh Performance (SMP)”). WebAssembly bears some resemblance to Docker given its high degree of portability. Like the Java Virtual Machine (JVM), WASM’s virtual stack machine is becoming a write once, run anywhere (WORA) platform. WASM executables are precompiled, and a healthy variety of languages (currently about 40) support WASM as a compilation target.
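To put the 10% to 20% figure in perspective, the arithmetic can be worked through in a short Python sketch. The 0.5 ms native filter time and the two proxies on the request path are made-up inputs, not measurements, and the function names are hypothetical.

```python
def wasm_filter_time(native_ms: float, overhead_pct: float) -> float:
    """Time a filter takes as WASM, given its native time and the overhead."""
    return native_ms * (1 + overhead_pct / 100)


def added_latency(native_ms: float, overhead_pct: float,
                  proxies_on_path: int) -> float:
    """Extra time per request when every proxy on the path runs the filter."""
    extra_per_proxy = wasm_filter_time(native_ms, overhead_pct) - native_ms
    return extra_per_proxy * proxies_on_path


# Assumed inputs: a filter costing 0.5 ms natively, traversed twice
# (client-side sidecar and server-side sidecar).
NATIVE_MS = 0.5
for pct in (10, 20):
    print(f"{pct}% overhead: "
          f"filter {wasm_filter_time(NATIVE_MS, pct):.2f} ms, "
          f"added per request {added_latency(NATIVE_MS, pct, 2):.2f} ms")
```

Under these assumptions the penalty is a fraction of a millisecond per request, which is why the trade-off against dynamic loading is often considered acceptable; your own numbers will depend on the filter and the workload.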

Swappable Sidecars

Functionality of the service mesh’s proxy is one of the more important considerations when adopting a service mesh. From the perspective of a developer, much significance is given to a proxy’s cloud-native integrations (e.g., with OpenTelemetry/OpenTracing, Prometheus, and so on). Surprisingly, a developer may not be very interested in a proxy’s APIs. The service mesh control plane is the point of…well, control for managing the configuration of proxies. A developer will, however, be interested in a management plane’s APIs. A top item on the list of developers’ demands for proxies is protocol support. Generally, protocol considerations can be broken into two types:

- TCP, UDP, HTTP: A network team-centric consideration in which efficiency, performance, offload, and load balancing algorithm support are evaluated. Support for HTTP/2 often takes top billing.

- gRPC, NATS, Kafka: A developer-centric consideration in which the top item on the list is application-level protocols, specifically those commonly used in modern distributed application designs.

The reality is that selecting the perfect proxy involves more than protocol support. Your proxy should meet all key criteria:

- High performance and low latency

- High scalability and small memory footprint

- Deep observability at all layers of the network stack

- Programmatic configuration and ecosystem integration

- Thorough documentation to facilitate an understanding of expected proxy behavior

Any number of service meshes have adopted Envoy as their service proxy. Envoy is the default service proxy within Istio. Using Envoy’s APIs, different projects have demonstrated the ability to displace Envoy as the default service proxy with the choice of an alternative.

In early versions of Istio, Linkerd demonstrated an integration in which Istio was the control plane providing configuration to Linkerd proxies. Also, in more than a demonstration, NGINX hosted a project known as nginMesh in which, again, Istio functioned as the control plane while NGINX proxies ran as the data plane.

With many service proxies in the ecosystem, outside of Envoy only two have currently demonstrated integration with Istio. Linkerd is not currently designed as a general-purpose proxy; instead, it is focused on being lightweight, placing extensibility as a secondary concern by offering extensions via gRPC plug-in. Consul uses Envoy as its proxy. Why use another service proxy? Following are some examples:

- NGINX: While you won’t be able to use NGINX as a proxy to displace Envoy (recall that the nginMesh project was set aside), you might still want to use NGINX because of your operational expertise, your need for a battle-tested proxy, or your integration with an F5 load balancer. You might be looking for caching, web application firewall (WAF), or other functionality available in NGINX Plus, as well. An enhanced version of NGINX Plus that interfaces natively with Kubernetes is the service proxy used in the NGINX Service Mesh data plane.

- CPX: You might choose to deploy the Citrix Service Mesh (which is an Istio control plane with a CPX data plane) if you have an existing investment in Citrix’s Application Delivery Controllers and run them across your diverse infrastructure, including new microservices and existing monoliths.

- MOSN: MOSN can deploy as an Istio data plane. You might choose to deploy MOSN if you need to highly customize your service proxy and are a Golang shop. MOSN supports a multiprotocol framework, so you can access private protocols through a unified routing framework. Its multiprocess plug-in mechanism lets you extend MOSN with plug-ins that run as independent processes and add management, bypass, and other functional module extensions.

The arrival of choice in service proxies for Istio has generated a lot of excitement. Linkerd’s integration was created early in Istio’s 0.1.6 release. Similarly, the ability to use NGINX as a service proxy through the nginMesh project (see Figure 4-2) was provided early in the Istio release cycle.

Note

You might find this article on “How to Customize an Istio Service Mesh” and its adjoining webcast helpful in further understanding Istio’s extensibility with respect to swappable service proxies.

Without configuration, proxies are without instructions to perform their tasks. Pilot is the head of the ship in an Istio mesh, so to speak, keeping synchronized with the underlying platform by tracking and representing its services to istio-proxy. istio-proxy contains the proxy of choice (e.g., Envoy). Typically, the same istio-proxy Docker image is used by Istio sidecar and Istio ingress gateway, which contains not only the service proxy but also the Istio Pilot agent. The Istio Pilot agent pulls configuration down from Pilot to the service proxy at frequent intervals so that each proxy knows where to route traffic. In this case, nginMesh’s translator agent performs the task of configuring NGINX as the istio-proxy. Pilot is responsible for the life cycle of istio-proxy.

Figure 4-2. Example of swapping proxies—Istio and nginMesh.
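The role of a translator agent, taking the control plane's view of services and rendering it into the proxy's native configuration, can be sketched as follows. This is not nginMesh's implementation; `render_upstreams`, `TranslatorAgent`, and the shape of the service table are hypothetical. The only real syntax used is NGINX's `upstream` block with `server` entries.

```python
from typing import Dict, List, Optional


def render_upstreams(services: Dict[str, List[str]]) -> str:
    """Render a service -> endpoints table as NGINX upstream blocks."""
    blocks = []
    for name in sorted(services):  # sorted for stable, diffable output
        lines = [f"upstream {name} {{"]
        lines += [f"    server {endpoint};" for endpoint in services[name]]
        lines.append("}")
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks) + "\n"


class TranslatorAgent:
    """Keeps the proxy's configuration in step with the control plane."""

    def __init__(self) -> None:
        self.current: Optional[str] = None
        self.reloads = 0

    def sync(self, services: Dict[str, List[str]]) -> bool:
        """Re-render the config; reload the proxy only when it changed."""
        rendered = render_upstreams(services)
        if rendered == self.current:
            return False
        self.current = rendered
        self.reloads += 1  # a real agent would signal the proxy to reload
        return True
```

Skipping the reload when the rendered output is unchanged matters in practice, because an agent that polls at frequent intervals would otherwise restart worker processes for no reason.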

Extensible Adapters

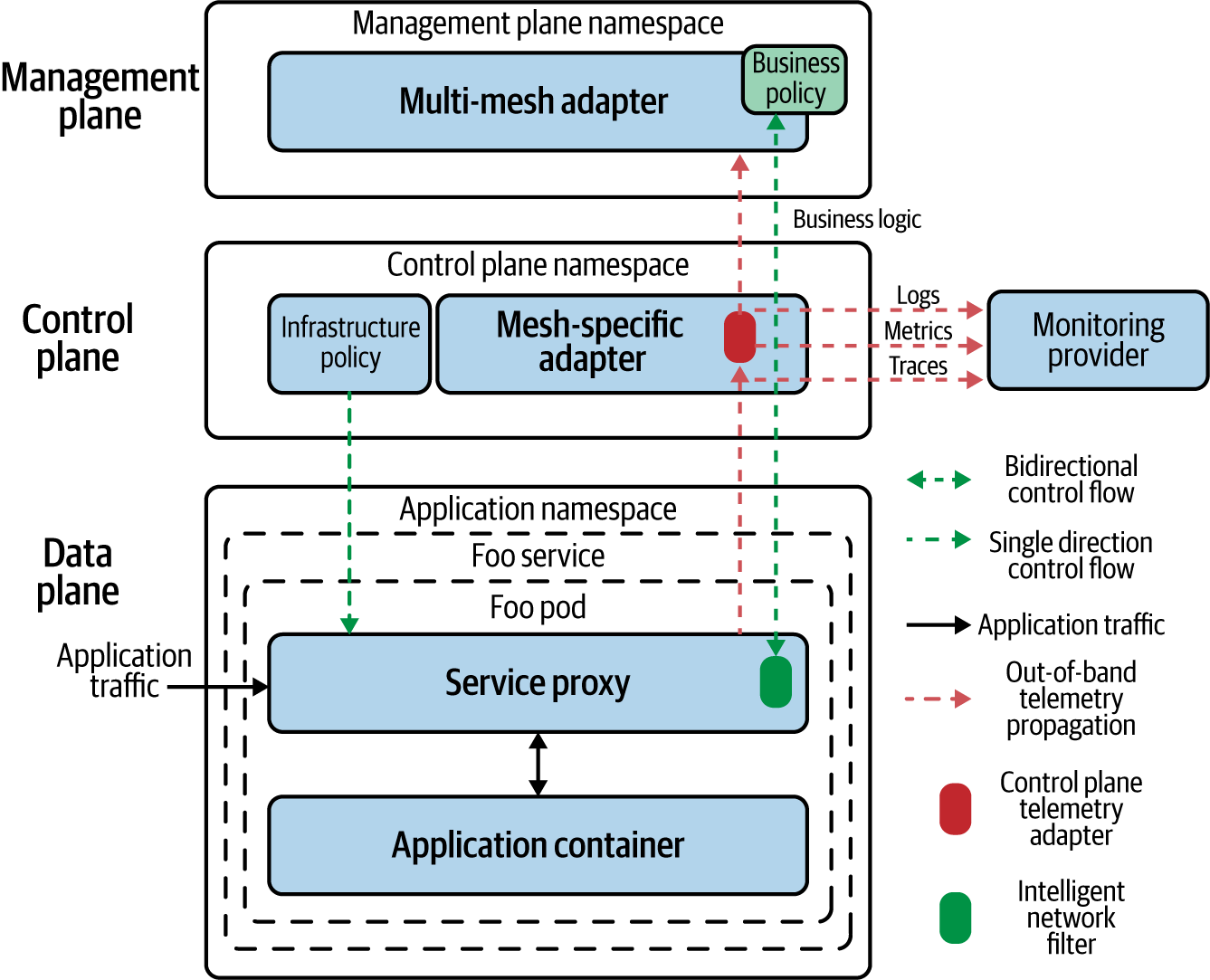

Management planes and control planes are responsible for enforcing access control, usage, and other policies across the service mesh. In order to do so, they collect telemetry data from service proxies. Some service meshes gather telemetry as shown in Figure 4-3. The service mesh control plane uses one or more telemetry adapters to collect and pass along these signals. The control plane will use multiple adapters either for different types of telemetry (traces, logs, and metrics) or for transmitting telemetry to external monitoring providers.

Figure 4-3. An example of a data plane proxy generating and directly sending telemetry to the control plane. Control plane adapters are points of extensibility, acting as an attribute processing engine, collecting, transforming, and transmitting telemetry.

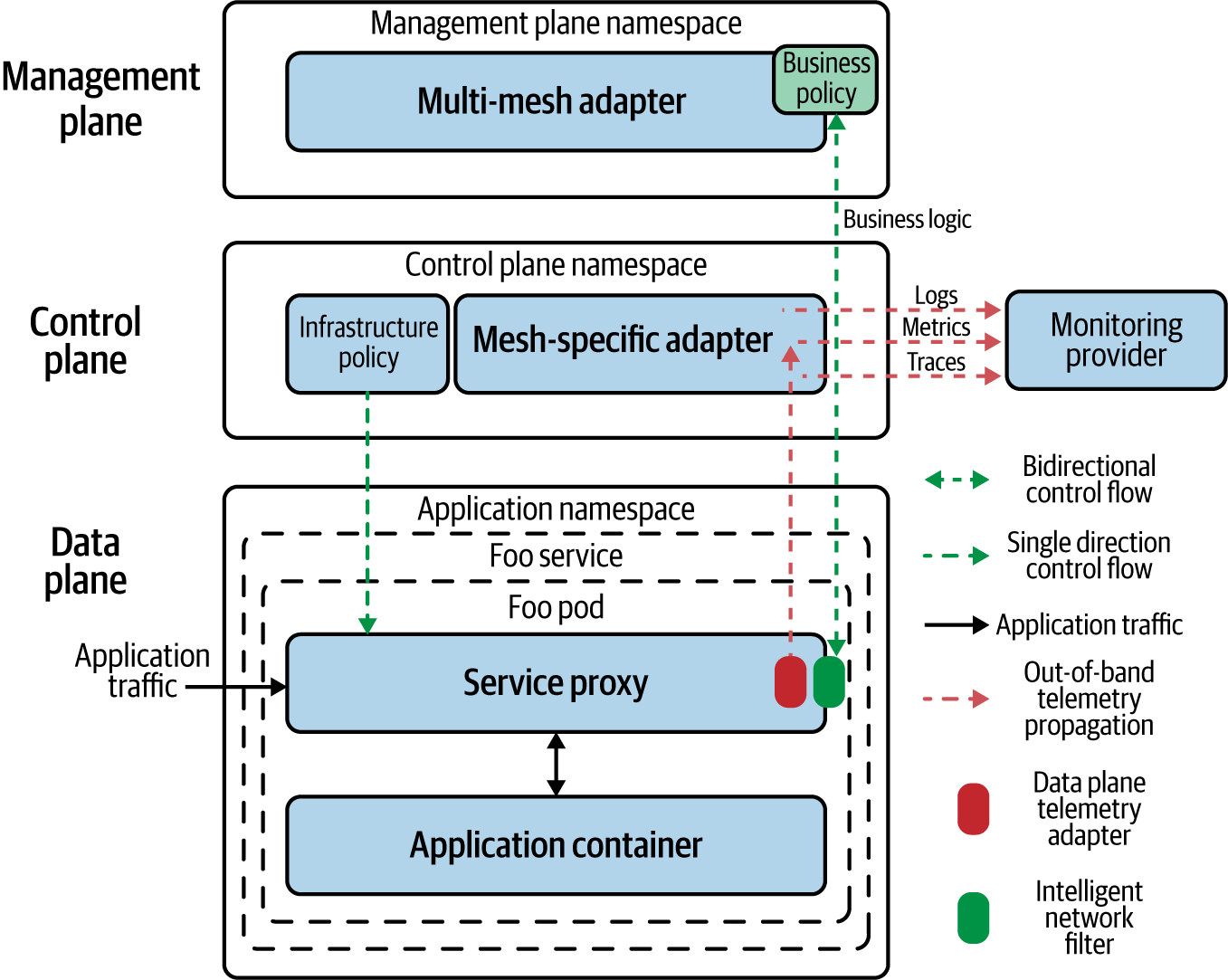

Some service meshes gather telemetry through service mesh-specific network filters residing in the service proxies in the data plane, as shown in Figure 4-4. Here, we also see that management planes are capable of receiving telemetry and sending control signals to affect the behavior of the application at a business-logic level. The service mesh control plane uses one or more telemetry adapters to collect and pass along these signals. The control plane will use multiple adapters either for different types of telemetry (traces, logs, or metrics) or for transmitting telemetry to external monitoring providers.
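The adapter model can be illustrated with a small Python sketch. It does not reproduce any mesh's adapter API: `TelemetryRouter`, `CollectingAdapter`, and the attribute names are hypothetical. Each adapter is registered for one telemetry type, transforms the attributes it receives, and passes the result on to its own backend.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List


class CollectingAdapter:
    """Transforms incoming attributes and stores them for a backend."""

    def __init__(self, transform: Callable[[Dict], object]) -> None:
        self.transform = transform
        self.sent: List[object] = []  # stands in for an external provider

    def __call__(self, attributes: Dict) -> None:
        self.sent.append(self.transform(attributes))


class TelemetryRouter:
    """Routes each signal to every adapter registered for its type."""

    def __init__(self) -> None:
        self._adapters: DefaultDict[str, List[Callable[[Dict], None]]] = defaultdict(list)

    def register(self, signal_type: str, adapter: Callable[[Dict], None]) -> None:
        self._adapters[signal_type].append(adapter)

    def handle(self, signal_type: str, attributes: Dict) -> int:
        """Deliver one signal; return how many adapters received it."""
        adapters = self._adapters.get(signal_type, [])
        for adapter in adapters:
            adapter(attributes)
        return len(adapters)


# Two adapters for different telemetry types, each with its own format.
metrics = CollectingAdapter(lambda a: (a["service"], a["latency_ms"]))
logs = CollectingAdapter(lambda a: f'{a["service"]} took {a["latency_ms"]} ms')

router = TelemetryRouter()
router.register("metrics", metrics)
router.register("logs", logs)
```

Adding a new monitoring provider in this model means registering one more adapter, without changing the proxies that emit the signals.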

Later versions of Istio are shifting this model of adapter extensibility out of the control plane and into the data plane, as shown in Figure 4-4.

Figure 4-4. Data plane performing the heavy lifting to ensure efficient processing of packets and telemetry generation. “Mixerless Istio” is an example of this model.

The default model of telemetry generation in Istio 1.5 and onward is done through Istio proxy filters, which are compiled to WebAssembly (WASM) modules and shipped with Istio proxy. This architectural shift was primarily done for performance reasons. Performance management is a recurring theme in consideration of deploying and managing service meshes.

The Performance of the Data Plane

Considering the possibilities of what can be achieved when the intelligence of the management plane and the power of the data plane are combined, service meshes pack quite a punch in terms of facilitating configuration management, central identity, telemetry collection, traffic policy, application infrastructure logic, and so on. With this understanding, consider that the more value you try to derive from a service mesh, the more work you will ask it to do. The more work a service mesh does, the more its efficiency becomes a concern. While benefiting from a service mesh’s features, you may ponder the question of what overhead your service mesh is incurring. This is one of the most common questions service mesh users have.

Carefully consider the quantifiable performance aspects of your service mesh in the context of the value you are deriving from it. If you were to acquire this same functionality outside of a service mesh, what would it “cost” you in terms of time to implement and the operational inefficiency of not having a single point of control for all that the service mesh provides?

Conclusion

The NGINX Service Mesh and Citrix Service Mesh draw much interest as many organizations have broad and deep operational expertise built around these battle-tested proxies.

Service mesh management and control and data planes are highly extensible and customizable to your environment. Data plane intelligence can be extended by executing traffic filters (modules) using technologies like Lua and WebAssembly.

Do not underestimate the importance of data plane intelligence. More and more, developers can shed application logic responsibilities to the data plane, which means that they can deliver on core business logic more readily, and it means that operators and service/product owners are empowered with more control over application logic by virtue of simply configuring the service mesh.