A WORD TO THE NERD

Resilience: How The Cloud Stays Up

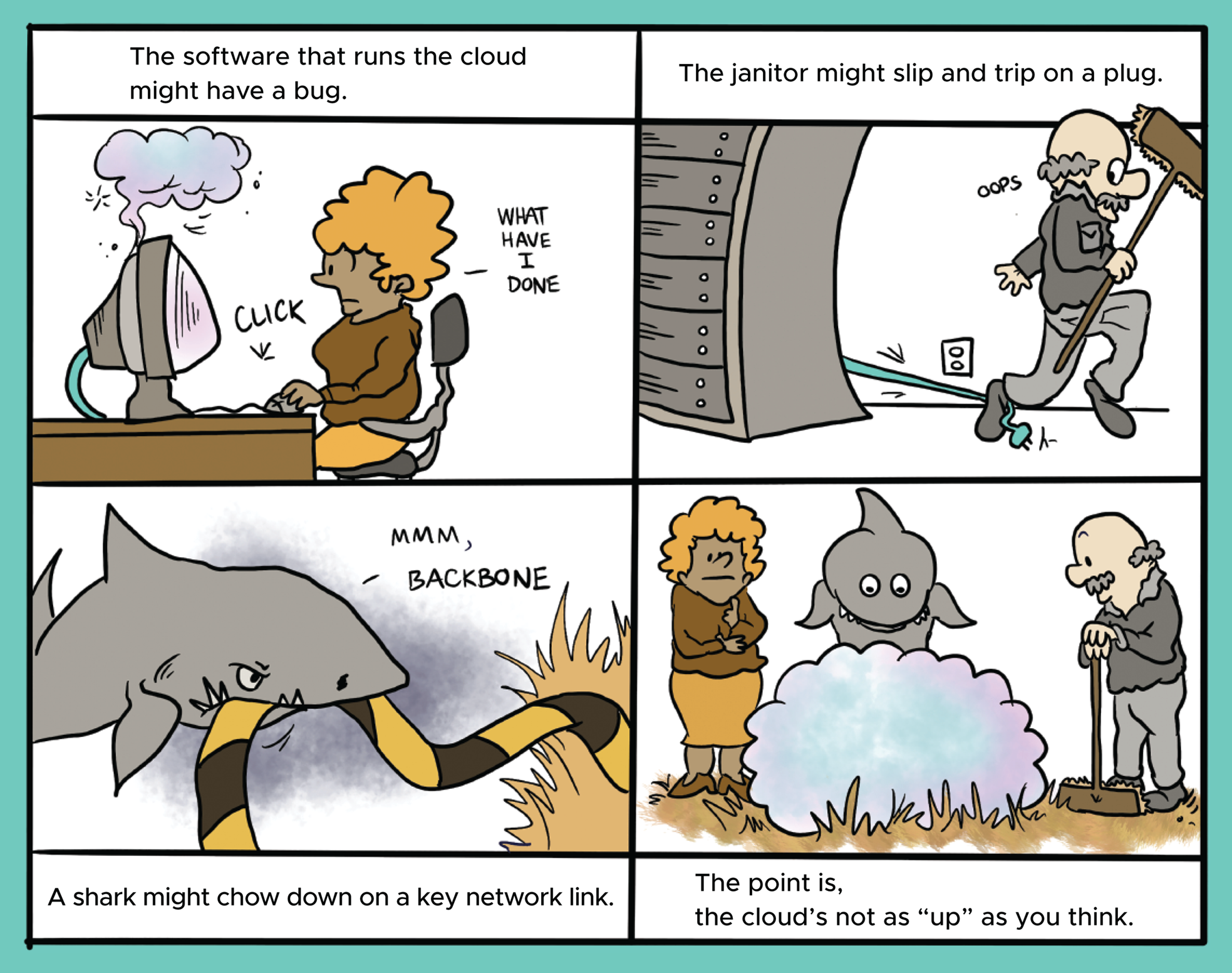

The cloud's not perfect. It isn't supposed to be. In fact, we describe good cloud architectures not as “foolproof,” but “fault-tolerant.”

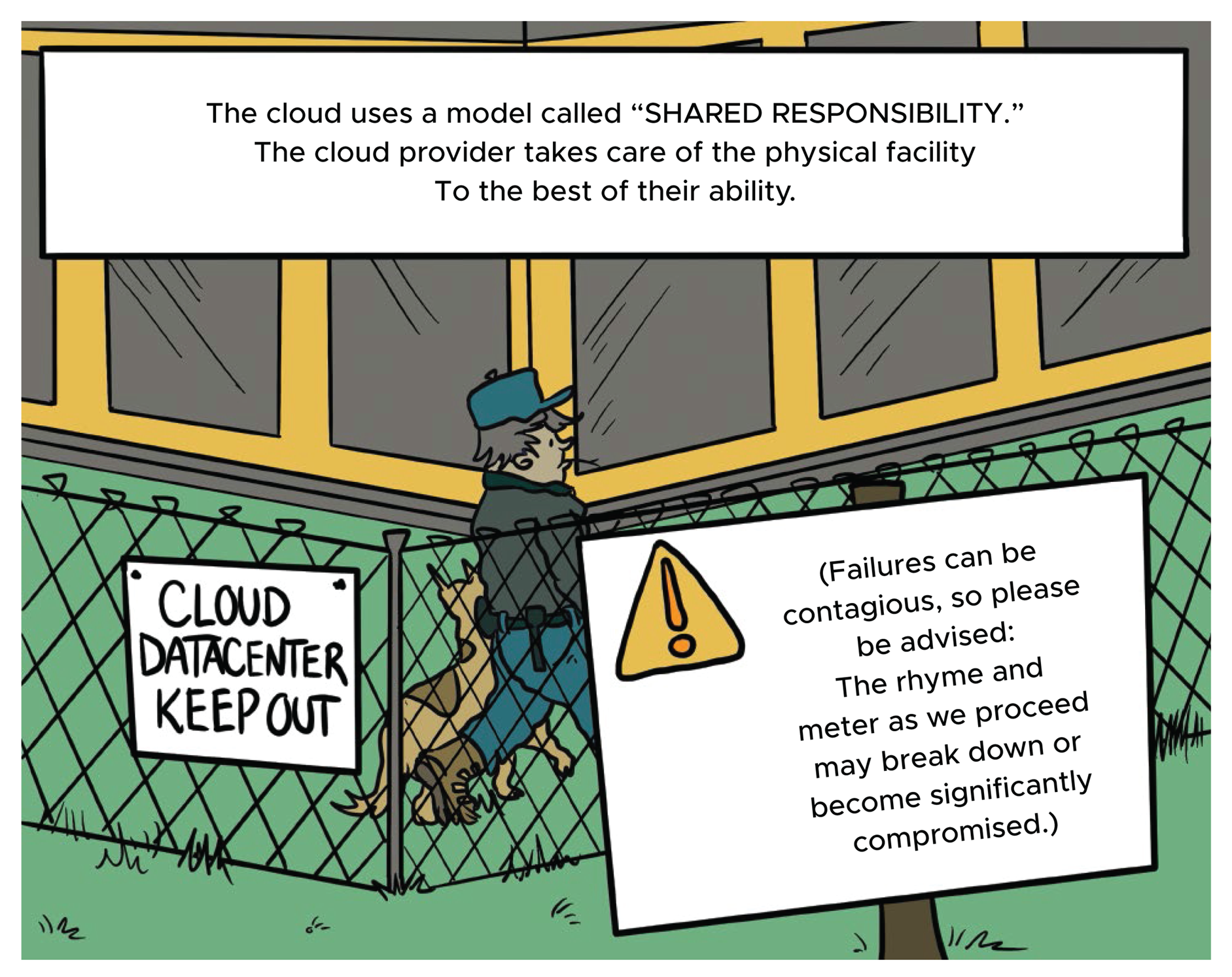

The Shared Responsibility Model

When you make the big decision to put your data on a cloud provider's servers instead of running your own hardware, you give up a certain amount of control—for better and worse. You are still responsible for the software of your cloud applications, like making sure your code doesn't have bugs in it. But the hardware—including the physical security of the data center—is now the responsibility of the cloud provider. You just have to trust that they know what they're doing.

The good news: cloud providers are among the best in the business at taking care of data centers and the machinery inside. After all, they do it at simply enormous scale. Amazon's S3 (Simple Storage Service) hosts data on the order of multiple exabytes—think a trillion copies of David Foster Wallace's Infinite Jest—and they run integrity checks on every single byte to maintain their legendary eleven nines of durability. That's 99.999999999 percent likelihood that your data will not get lost in a given year. You are several hundred times more likely to be struck by a meteor than to lose a file in S3.

Amazon Web Services and other cloud providers are able to sustain these guarantees because their data centers use specialized hardware and software, as well as highly trained technicians, to minimize the consequences of failure. They quickly replace bad drives, provide redundant (duplicate) power supplies and network cables, and probably don't make grilled cheese sandwiches on the toasty backs of the servers, which is what I would do.

Availability Zones and Regions

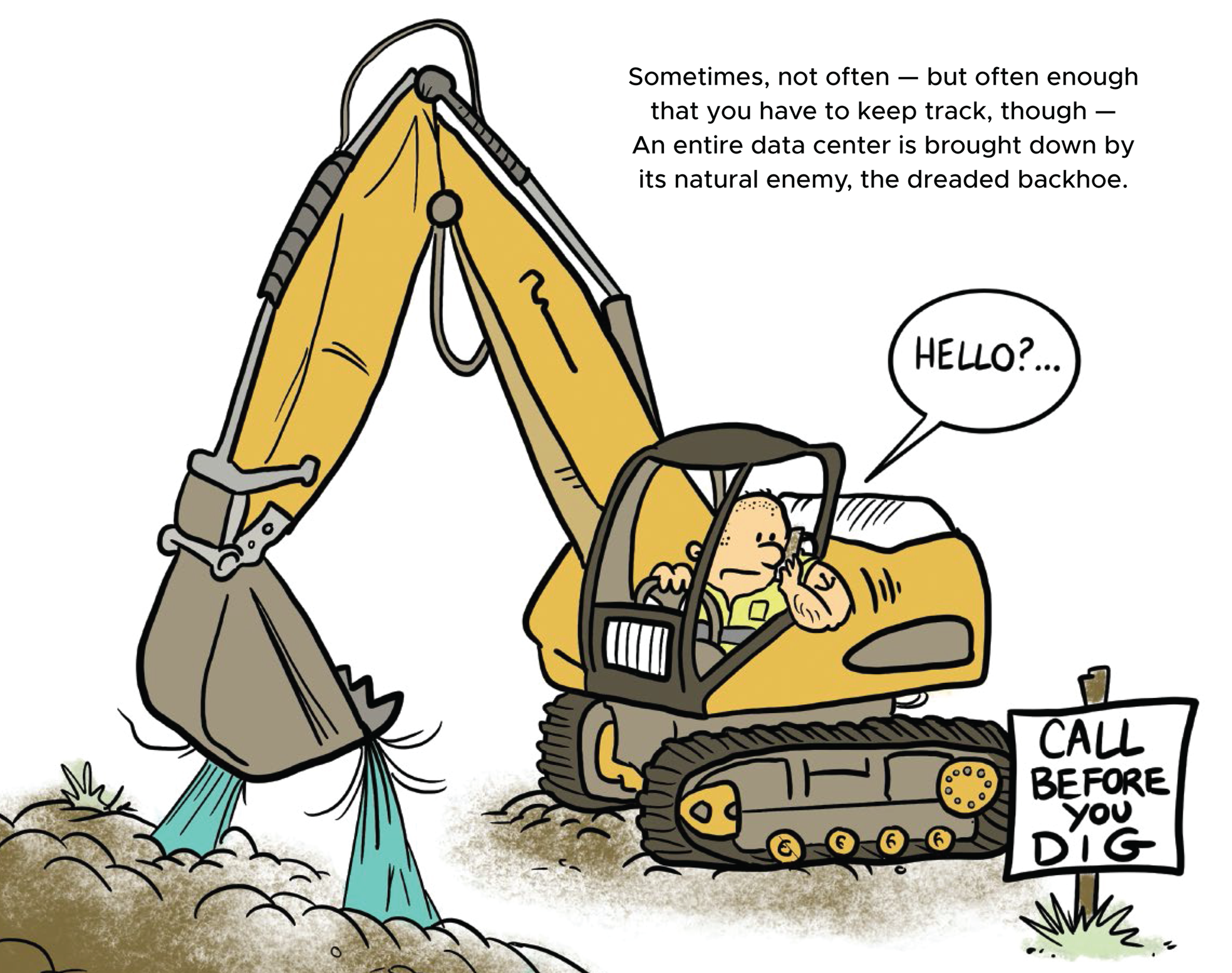

The natural enemy of the data center is the backhoe. Nothing can ruin a clever data backup strategy faster than a giant shovel slicing through the fiber-optic cable outside the building.

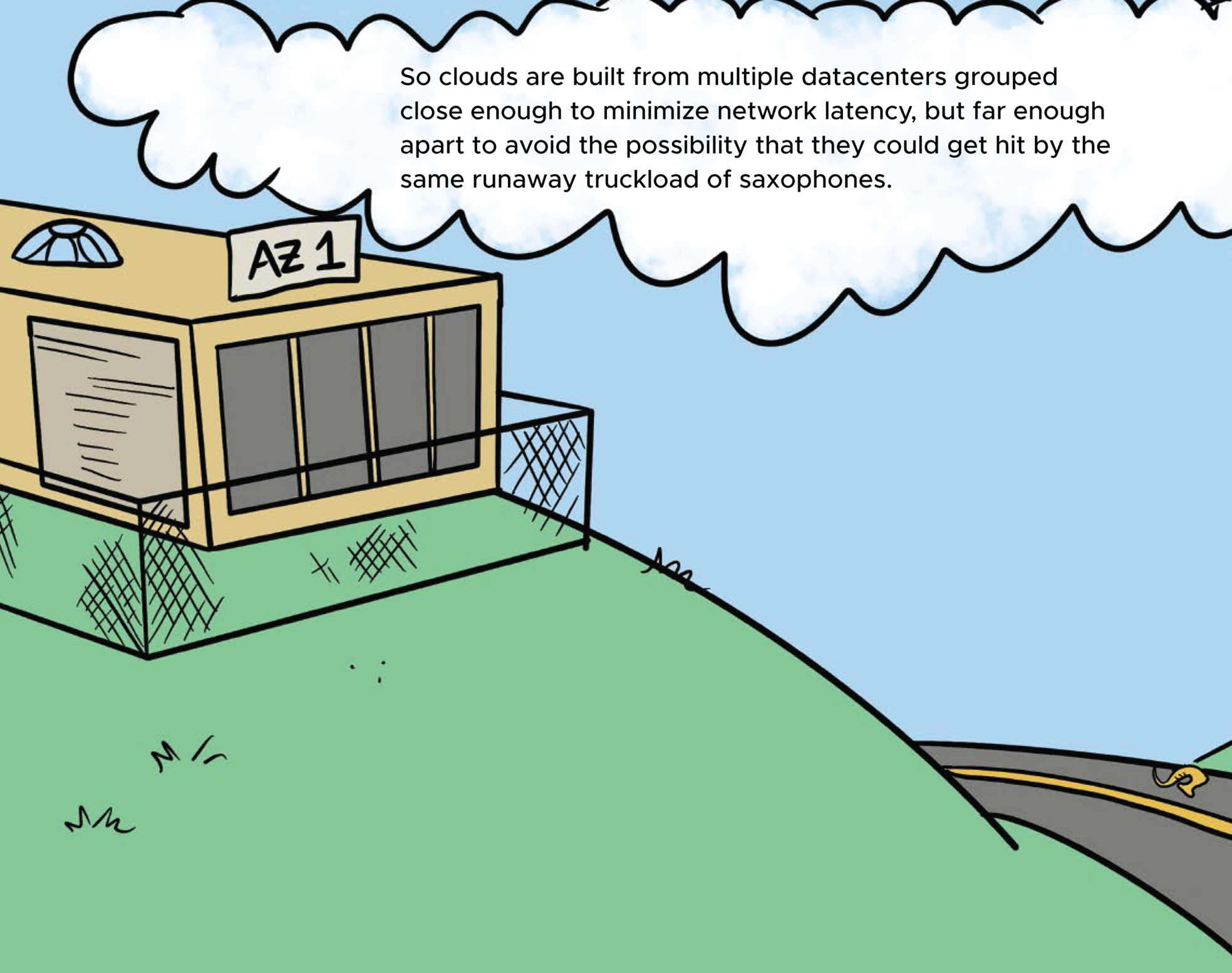

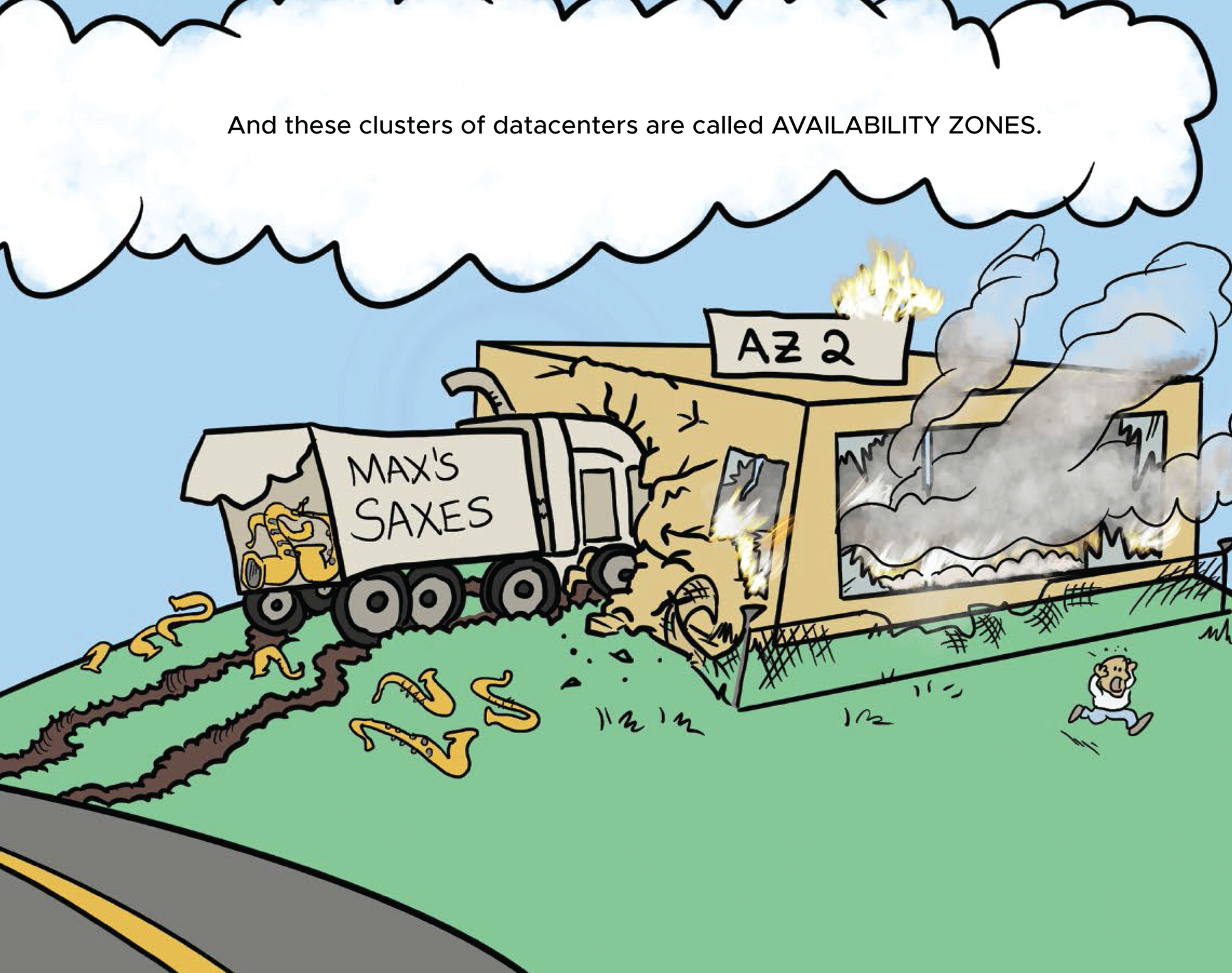

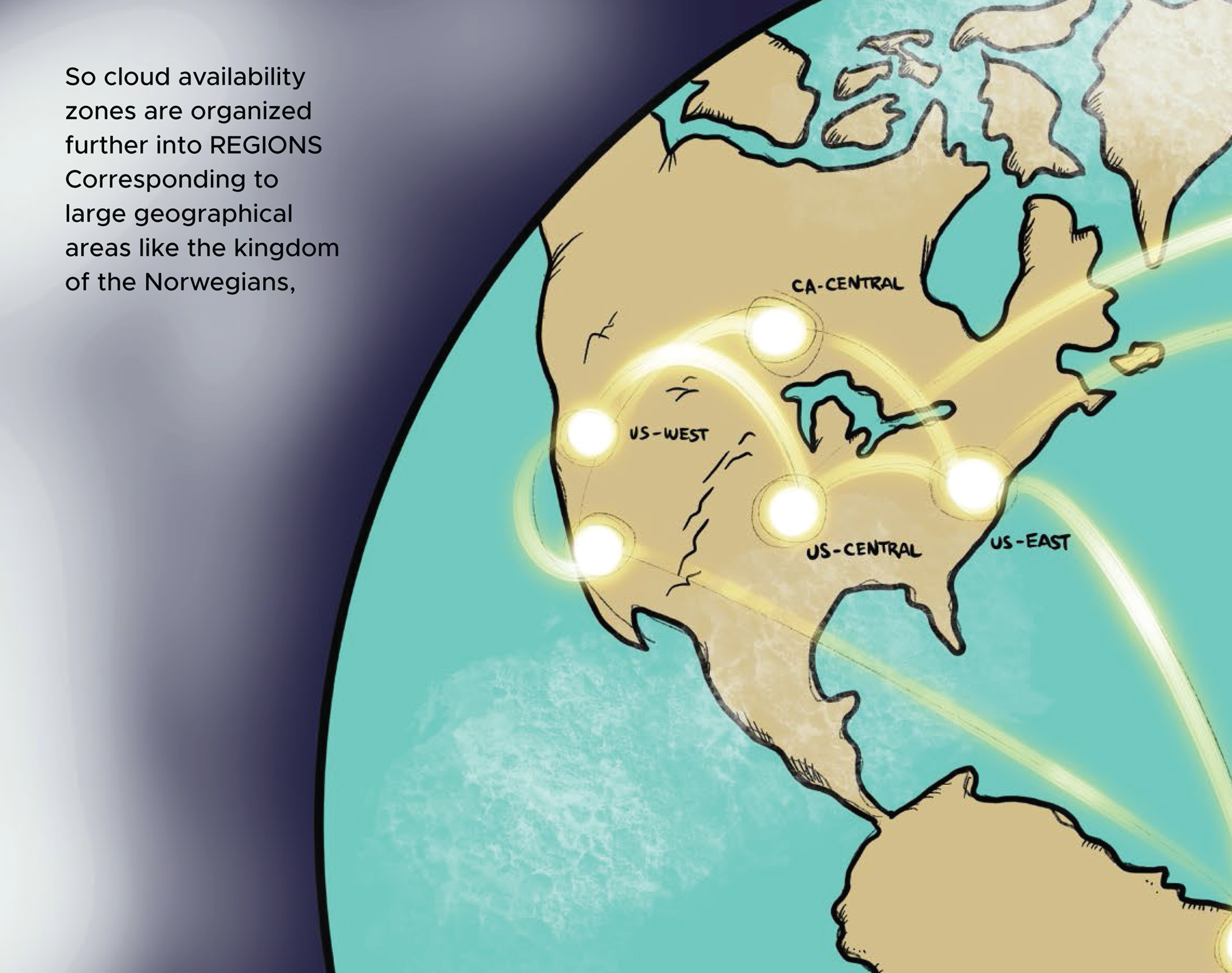

So the major cloud providers all have a concept of “availability zones,” which are groups of one or more data centers that are physically far enough apart to avoid sharing some sort of localized catastrophe like a sliced network link but close enough together that data can travel back and forth without too much latency, or communication delay. (Remember, the cloud has to obey the laws of physics, just like all of us schmucks here on the ground.)

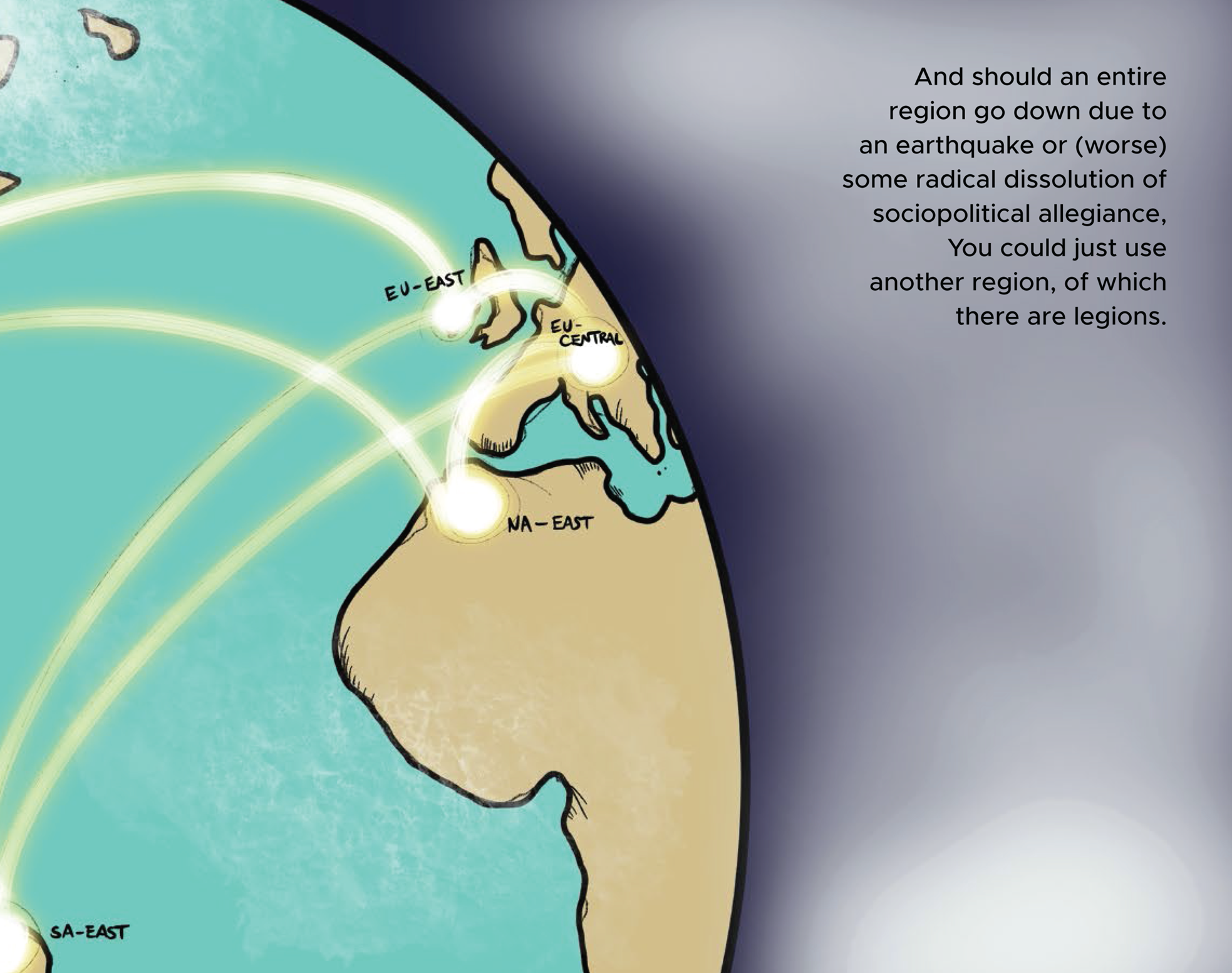

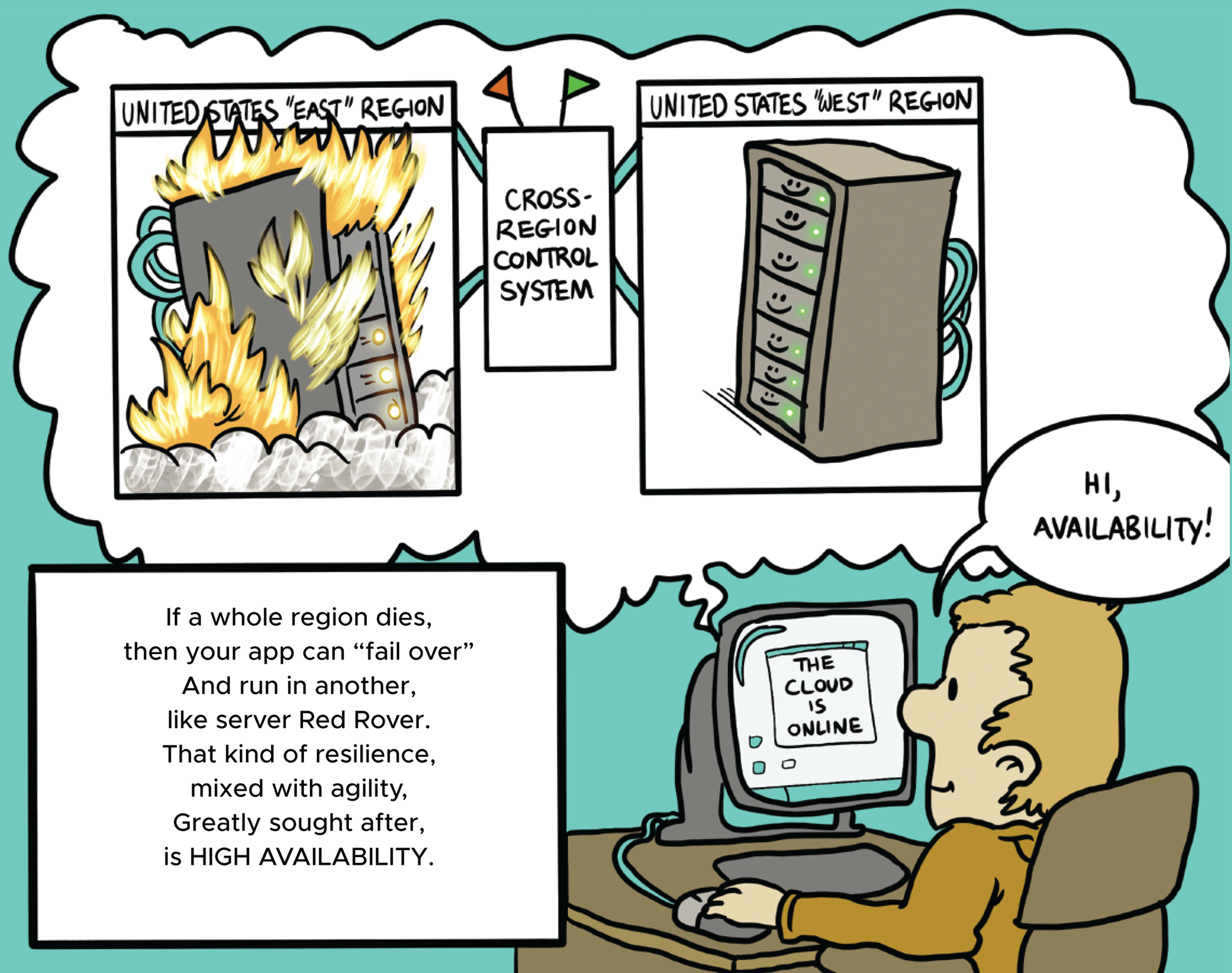

Availability zones themselves are grouped into geographical “regions,” usually comprising two or more zones. If an entire region were to go offline, that would be a bad day. Like if a giant meteor hit the East Coast of the United States. Or, as happened to Amazon in 2017, if some system administrator typed 100 when they meant to type 10 and accidentally restarted too many servers, causing a cascading failure that took down S3 in the US-East region and what seemed like half the Internet with it.

Designing for Failure

You can't control what happens if the cloud provider screws up their end of the shared responsibility model. But you are completely in charge of your end: designing your application for failure.

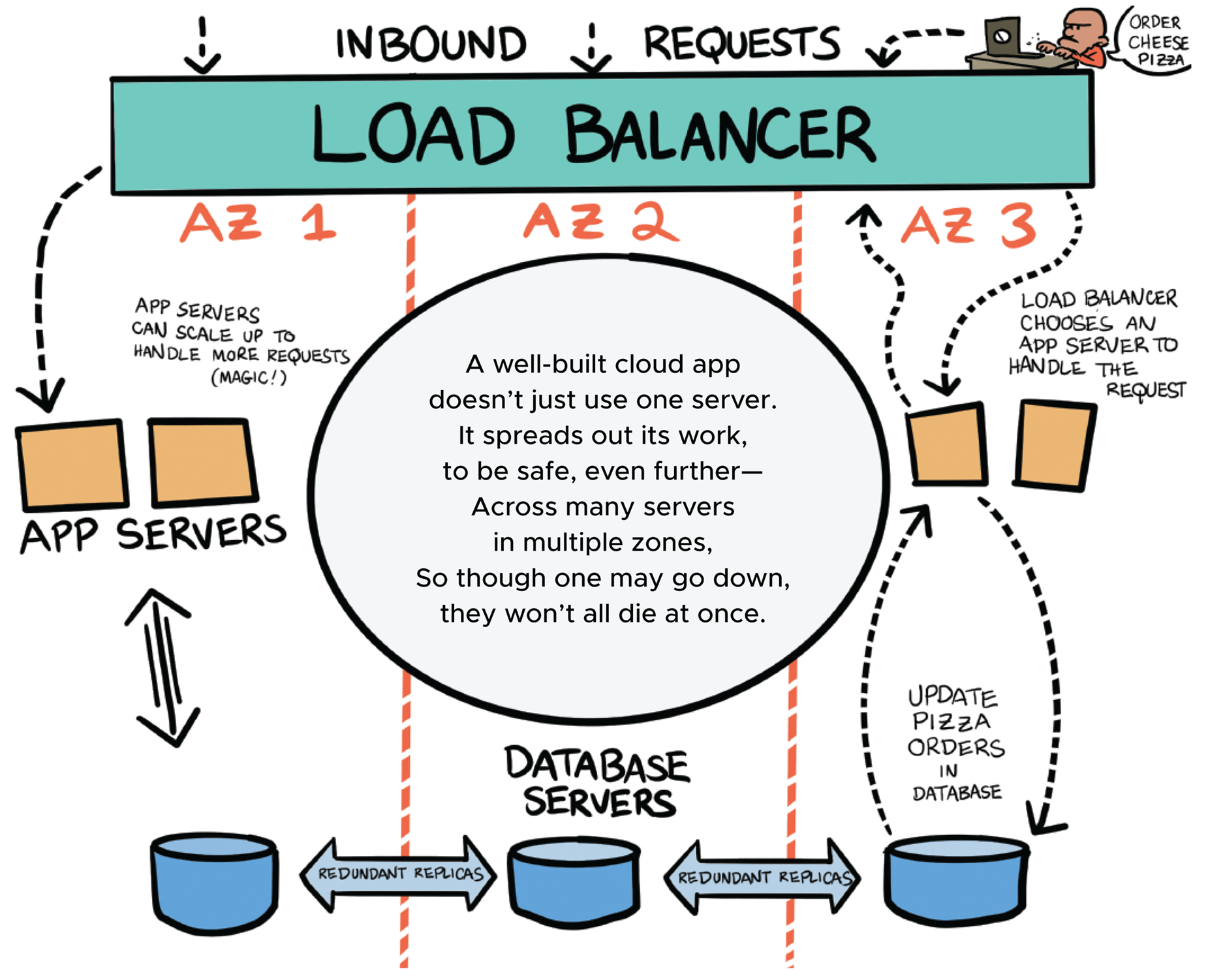

You must assume, at the least, that any given availability zone could get pulled out from under you at any time. So a well-architected cloud application places resources in multiple availability zones and uses a routing device called a “load balancer” to send client requests to whatever servers happen to be online.

The database is the part of your application that's always the easiest thing to screw up in a failure scenario: data can get lost, or just as bad, you could end up with multiple copies of your data that disagree. So databases often have complex “failover” protocols that let you place replicas of your data in different availability zones, though only one of them is actively writing new data at a given time.

Lose a region? You might be in a disaster recovery situation and have to take some downtime, an unscheduled outage, while you bring up a new copy of your application from backups in another region. (You should definitely always have backups!)

So yeah, the cloud takes away some control. But it also gives you a ton of options for how you can protect yourself from failure, whether bugs in your code or sudden hardware suicide. There's even a whole discipline called chaos engineering that breaks parts of systems on purpose to see how resilient they are. The cloud lets you do that at very low cost so that when the big failure happens, you'll be ready.