IPC is an abbreviation for Interprocess Communication, and commonly refers to a set of mechanisms that allow a User Mode process to do the following:

Synchronize itself with other processes by means of semaphores

Send messages to other processes or receive messages from them

Share a memory area with other processes

System V IPC first appeared in a development Unix variant called "Columbus Unix” and later was adopted by AT&T’s System III. It is now found in most Unix systems, including Linux.

IPC data structures are created dynamically when a process requests an IPC resource (a semaphore, a message queue, or a shared memory region). An IPC resource is persistent: unless explicitly removed by a process, it is kept in memory and remains available until the system is shut down. An IPC resource may be used by any process, including those that do not share the ancestor that created the resource.

Since a process may require several IPC resources of the same type, each new resource is identified by a 32-bit IPC key , which is similar to the file pathname in the system’s directory tree. Each IPC resource also has a 32-bit IPC identifier , which is somewhat similar to the file descriptor associated with an open file. IPC identifiers are assigned to IPC resources by the kernel and are unique within the system, while IPC keys can be freely chosen by programmers.

When two or more processes wish to communicate through an IPC resource, they all refer to the IPC identifier of the resource.

IPC resources are created by invoking the semget( ), msgget( ), or shmget( ) functions, depending on whether the new resource is a

semaphore, a message queue, or a shared memory region.

The main objective of each of these three functions is to derive from the IPC key (passed as the first parameter) the corresponding IPC identifier, which is then used by the process for accessing the resource. If there is no IPC resource already associated with the IPC key, a new resource is created. If everything goes right, the function returns a positive IPC identifier; otherwise, it returns one of the error codes listed in Table 19-6.

Table 19-6. Error codes returned while requesting an IPC identifier

|

Error code |

Description |

|---|---|

|

|

Process does not have proper access rights. |

|

|

Process tried to create an IPC resource with the same key as one that already exists. |

|

|

Resource is marked to be deleted. |

|

|

No IPC resource with the requested key exists and the process did not ask to create it. |

|

|

No more storage is left for an additional IPC resource. |

|

|

Maximum limit on the number of IPC resources has been exceeded. |

Assume that two independent processes want to share a common IPC resource. This can be achieved in two possible ways:

The processes agree on some fixed, predefined IPC key. This is the simplest case, and it works quite well for any complex application implemented by many processes. However, there’s a chance that the same IPC key is chosen by another unrelated program. In this case, the IPC functions might be successfully invoked and still return the IPC identifier of the wrong resource.[126]

One process issues a

semget( ),msgget( ), orshmget( )function by specifyingIPC_PRIVATEas its IPC key. A new IPC resource is thus allocated, and the process can either communicate its IPC identifier to the other process in the application[127] or fork the other process itself. This method ensures that the IPC resource cannot be used accidentally by other applications.

The last parameter of the semget( ),

msgget( ), and shmget( )

functions can include two flags. IPC_CREAT

specifies that the IPC resource must be created, if it does not

already exist; IPC_EXCL specifies that the

function must fail if the resource already exists and the

IPC_CREAT flag is set.

Even if the process uses the IPC_CREAT and

IPC_EXCL flags, there is no way to ensure

exclusive access to an IPC resource, since other processes may always

refer to the resource by using its IPC identifier.

To minimize the risk of incorrectly referencing the wrong resource, the kernel does not recycle IPC identifiers as soon as they become free. Instead, the IPC identifier assigned to a resource is almost always larger than the identifier assigned to the previously allocated resource of the same type. (The only exception occurs when the 32-bit IPC identifier overflows.) Each IPC identifier is computed by combining a slot usage sequence number relative to the resource type, an arbitrary slot index for the allocated resource, and an arbitrary value chosen in the kernel that is greater than the maximum number of allocatable resources. If we choose s to represent the slot usage sequence number, M to represent the upper bound on the number of allocatable resources, and i to represent the slot index, where 0≤i<M, each IPC resource’s ID is computed as follows:

IPC identifier = s × M + i

In Linux 2.4, the value of M is set to 32,768

(IPCMNI macro). The slot usage sequence number

s is initialized to 0 and is incremented by 1 at

every resource allocation. When s reaches a

predefined threshold, which depends on the type of IPC resource, it

restarts from 0.

Every type of IPC resource (semaphores, message queues, and shared

memory areas) owns an ipc_ids data structure,

which includes the fields shown in Table 19-7.

Table 19-7. The fields of the ipc_ids data structure

|

Type |

Field |

Description |

|---|---|---|

|

|

|

Current maximum number of IPC resources |

|

|

|

Number of allocated IPC resources |

|

|

|

Maximum slot index in use |

|

|

|

Slot usage sequence number for the next allocation |

|

|

|

Maximum slot usage sequence number |

|

|

|

Semaphore protecting the |

|

|

|

Spin lock protecting the IPC resource descriptors |

|

|

|

Array of IPC resource descriptors |

The size field stores the maximum number of

allocatable IPC resources of the given type. The system administrator

may increase this value for any resource type by writing into the

/proc/sys/kernel/sem,

/proc/sys/kernel/msgmni, and

/proc/sys/kernel/shmmni special files,

respectively.

The entries field points to an array of pointers

to kern_ipc_perm data structures, one for every

allocatable resource (the size field is also the

size of the array). Each kern_ipc_perm data

structure is associated with an IPC resource and contains the fields

shown in Table 19-8. The uid,

gid, cuid, and

cgid fields store the user and group identifiers

of the resource’s creator and the user and group

identifiers of the current resource’s owner,

respectively. The mode bit mask includes six

flags, which store the read and write access permissions for the

resource’s owner, the resource’s

group, and all other users. IPC access permissions are similar to

file access permissions described in Section 1.5.5, except that the Execute permission flag is

not used.

Table 19-8. The fields in the kern_ipc_ perm structure

|

Type |

Field |

Description |

|---|---|---|

|

|

|

IPC key |

|

|

|

Owner user ID |

|

|

|

Owner group ID |

|

|

|

Creator user ID |

|

|

|

Creator group ID |

|

|

|

Permission bit mask |

|

|

|

Slot usage sequence number |

The kern_ipc_perm data structure also includes a

key field (which contains the IPC key of the

corresponding resource) and a seq field (which

stores the slot usage sequence number s used to

compute the IPC identifier of the resource).

The semctl( ), msgctl( ), and

shmctl( ) functions may be used to handle IPC

resources. The IPC_SET subcommand allows a process

to change the owner’s user and group identifiers and

the permission bit mask in the ipc_perm data

structure. The IPC_STAT and

IPC_INFO subcommands retrieve some information

concerning a resource. Finally, the IPC_RMID

subcommand releases an IPC resource. Depending on the type of IPC

resource, other specialized subcommands are also available.[128]

Once an IPC resource is created, a process may act on the resource by

means of a few specialized functions. A process may acquire or

release an IPC semaphore by issuing the semop( )

function. When a process wants to send or receive an IPC message, it

uses the msgsnd( ) and msgrcv( ) functions, respectively. Finally, a process attaches and

detaches an IPC shared memory region in its address space by means of

the shmat( ) and shmdt( )

functions, respectively.

All IPC functions must be implemented

through suitable Linux system calls. Actually, in the 80 ×

86 architecture, there is just one IPC system call named

ipc( ). When a process invokes an IPC function,

let’s say msgget( ), it really

invokes a wrapper function in the C library. This in turn invokes the

ipc( ) system call by passing to it all the

parameters of msgget( ) plus a proper subcommand

code—in this case, MSGGET. The

sys_ipc( ) service routine examines the subcommand

code and invokes the kernel function that implements the requested

service.

The ipc( )

“multiplexer” system call is a

legacy from older Linux versions, which included the IPC code in a

dynamic module (see Appendix B). It did not make

much sense to reserve several system call entries in the

system_call table for a kernel component that

could be missing, so the kernel designers adopted the multiplexer

approach.

Nowadays, System V IPC can no longer be compiled as a dynamic module, and there is no justification for using a single IPC system call. As a matter of fact, Linux provides one system call for each IPC function on Hewlett-Packard’s Alpha architecture and on Intel’s IA-64.

IPC semaphores are quite similar to the kernel semaphores introduced in Chapter 5; they are counters used to provide controlled access to shared data structures for multiple processes.

The semaphore value is positive if the protected resource is available, and 0 if the protected resource is currently not available. A process that wants to access the resource tries to decrement the semaphore value; the kernel, however, blocks the process until the operation on the semaphore yields a positive value. When a process relinquishes a protected resource, it increments its semaphore value; in doing so, any other process waiting for the semaphore is woken up.

Actually, IPC semaphores are more complicated to handle than kernel semaphores for two main reasons:

Each IPC semaphore is a set of one or more semaphore values, not just a single value like a kernel semaphore. This means that the same IPC resource can protect several independent shared data structures. The number of semaphore values in each IPC semaphore must be specified as a parameter of the

semget( )function when the resource is being allocated. From now on, we’ll refer to the counters inside an IPC semaphore as primitive semaphores . There are bounds both on the number of IPC semaphore resources (by default, 128) and on the number of primitive semaphores inside a single IPC semaphore resource (by default, 250); however, the system administrator can easily modify these bounds by writing into the/proc/sys/kernel/semfile.System V IPC semaphores provide a fail-safe mechanism for situations in which a process dies without being able to undo the operations that it previously issued on a semaphore. When a process chooses to use this mechanism, the resulting operations are called undoable semaphore operations. When the process dies, all of its IPC semaphores can revert to the values they would have had if the process had never started its operations. This can help prevent other processes that use the same semaphores from remaining blocked indefinitely as a consequence of the terminating process failing to manually undo its semaphore operations.

First, we’ll briefly sketch the typical steps performed by a process wishing to access one or more resources protected by an IPC semaphore:

Invokes the

semget( )wrapper function to get the IPC semaphore identifier, specifying as the parameter the IPC key of the IPC semaphore that protects the shared resources. If the process wants to create a new IPC semaphore, it also specifies theIPC_CREATEorIPC_PRIVATEflag and the number of primitive semaphores required (see Section 19.3.1 earlier in this chapter).Invokes the

semop( )wrapper function to test and decrement all primitive semaphore values involved. If all the tests succeed, the decrements are performed, the function terminates, and the process is allowed to access the protected resources. If some semaphores are in use, the process is usually suspended until some other process releases the resources. The function receives as parameters the IPC semaphore identifier, an array of integers specifying the operations to be atomically performed on the primitive semaphores, and the number of such operations. Optionally, the process may specify theSEM_UNDOflag, which instructs the kernel to reverse the operations, should the process exit without releasing the primitive semaphores.When relinquishing the protected resources, invokes the

semop( )function again to atomically increment all primitive semaphores involved.Optionally, invokes the

semctl( )wrapper function, specifying theIPC_RMIDcommand to remove the IPC semaphore from the system.

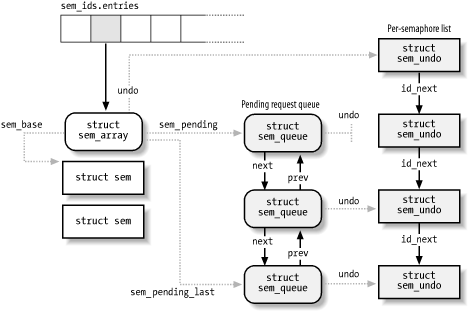

Now we can discuss how the kernel implements IPC semaphores. The data

structures involved are shown in Figure 19-1. The

sem_ids variable stores the

ipc_ids data structure of the IPC semaphore

resource type; its entries field is an array of

pointers to sem_array data structures, one item

for every IPC semaphore resource.

Formally, the array stores pointers to

kern_ipc_perm data structures, but each structure

is simply the first field of the sem_array data

structure. All fields of the sem_array data

structure are shown in Table 19-9.

Table 19-9. The fields in the sem_array data structure

|

Type |

Field |

Description |

|---|---|---|

|

|

|

|

|

|

|

Timestamp of last |

|

|

|

Timestamp of last change |

|

|

|

Pointer to first |

|

|

|

Pending operations |

|

|

|

Last pending operation |

|

|

|

Undo requests |

|

|

|

Number of semaphores in array |

The sem_base field points to an array of

struct sem data structures, one for every IPC

primitive semaphore. The latter data structure includes only two

fields:

-

semval The value of the semaphore’s counter.

-

sempid The PID of the last process that accessed the semaphore. This value can be queried by a process through the

semctl( )wrapper function.

If a process aborts suddenly, it cannot undo the operations that it

started (for instance, release the semaphores it reserved); so by

declaring them undoable, the process lets the kernel return the

semaphores to a consistent state and allow other processes to

proceed. Processes can request undoable operations by specifying the

SEM_UNDO flag in the semop( )

function.

Information to help the kernel reverse the undoable operations

performed by a given process on a given IPC semaphore resource is

stored in a sem_undo data structure. It

essentially contains the IPC identifier of the semaphore and an array

of integers representing the changes to the primitive

semaphore’s values caused by all undoable operations

performed by the process.

A simple example can illustrate how such sem_undo

elements are used. Consider a process that uses an IPC semaphore

resource containing four primitive semaphores. Suppose that it

invokes the semop( ) function to increment the

first counter by 1 and decrement the second by 2. If it specifies the

SEM_UNDO flag, the integer in the first array

element in the sem_undo data structure is

decremented by 1, the integer in the second element is incremented by

2, and the other two integers are left unchanged. Further undoable

operations on the IPC semaphore performed by the same process change

the integers stored in the sem_undo structure

accordingly. When the process exits, any nonzero value in that array

corresponds to one or more unbalanced operations on the corresponding

primitive semaphore; the kernel reverses these operations, simply

adding the nonzero value to the corresponding

semaphore’s counter. In other words, the changes

made by the aborted process are backed out while the changes made by

other processes are still reflected in the state of the semaphores.

For each process, the kernel keeps track of all semaphore resources

handled with undoable operations so that it can roll them back if the

process unexpectedly exits. Furthermore, for each semaphore, the

kernel has to keep track of all its sem_undo

structures so it can quickly access them whenever a process uses

semctl( ) to force an explicit value into a

primitive semaphore’s counter or to destroy an IPC

semaphore resource.

The kernel is able to handle these tasks efficiently, thanks to two lists, which we denote as the per-process and the per-semaphore lists. The first list keeps track of all semaphores operated upon by a given process with undoable operations. The second list keeps track of all processes that are acting on a given semaphore with undoable operations. More precisely:

The per-process list includes all

sem_undodata structures corresponding to IPC semaphores on which the process has performed undoable operations. Thesemundofield of the process descriptor points to the first element of the list, while theproc_nextfield of eachsem_undodata structure points to the next element in the list.The per-semaphore list includes all

sem_undodata structures corresponding to the processes that performed undoable operations on the semaphore. Theundofield of thesemid_dsdata structure points to the first element of the list, while theid_nextfield of eachsem_undodata structure points to the next element in the list.

The per-process list is used when a process terminates. The

sem_exit( ) function, which is invoked by

do_exit( ), walks through the list and reverses

the effect of any unbalanced operation for every IPC semaphore

touched by the process. By contrast, the per-semaphore list is mainly

used when a process invokes the semctl( ) function

to force an explicit value into a primitive semaphore. The kernel

sets the corresponding element to 0 in the arrays of all

sem_undo data structures referring to that IPC

semaphore resource, since it would no longer make any sense to

reverse the effect of previous undoable operations performed on that

primitive semaphore. Moreover, the per-semaphore list is also used

when an IPC semaphore is destroyed; all related

sem_undo data structures are invalidated by

setting the semid field to -1.[129]

The kernel associates a queue of pending requests

with each IPC semaphore to identify processes that are

waiting on one (or more) of the semaphores in the array. The queue is

a doubly linked list of sem_queue data structures

whose fields are shown in Table 19-10. The first and

last pending requests in the queue are referenced, respectively, by

the sem_pending and

sem_pending_last fields of the

sem_array structure. This last field allows the

list to be handled easily as a FIFO; new pending requests are added

to the end of the list so they will be serviced later. The most

important fields of a pending request are nsops

(which stores the number of primitive semaphores involved in the

pending operation) and sops (which points to an

array of integer values describing each semaphore operation). The

sleeper field stores the descriptor address of the

sleeping process that requested the operation.

Table 19-10. The fields in the sem_queue data structure

|

Type |

Field |

Description |

|---|---|---|

|

|

|

Pointer to next queue element |

|

|

|

Pointer to previous queue element |

|

|

|

Pointer to the sleeping process that requested the semaphore operation |

|

|

|

Pointer to |

|

|

|

Process identifier |

|

|

|

Completion status of operation |

|

|

|

Pointer to IPC semaphore descriptor |

|

|

|

Slot index of the IPC semaphore resource |

|

|

|

Pointer to array of pending operations |

|

|

|

Number of pending operations |

|

|

|

Flag indicating that the operation sets the semaphore value |

Figure 19-1 illustrates an IPC semaphore that has

three pending requests. Two of them refer to undoable operations, so

the undo field of the sem_queue

data structure points to the corresponding

sem_undo structure; the third pending request has

a NULL

undo field since the

corresponding operation is not undoable.

Processes can communicate with one another by means of IPC messages. Each message generated by a process is sent to an IPC message queue, where it stays until another process reads it.

A message is composed of a fixed-size header and a variable-length text; it can be labeled with an integer value (the message type), which allows a process to selectively retrieve messages from its message queue.[130] Once a process has read a message from an IPC message queue, the kernel destroys the message; therefore, only one process can receive a given message.

To send a message, a process invokes the msgsnd( )

function, passing the following as parameters:

The IPC identifier of the destination message queue

The size of the message text

The address of a User Mode buffer that contains the message type immediately followed by the message text

To retrieve a message, a process invokes the msgrcv( ) function, passing to it:

The IPC identifier of the IPC message queue resource

The pointer to a User Mode buffer to which the message type and message text should be copied

The size of this buffer

A value t that specifies what message should be retrieved

If the value t is 0, the first message in the queue is returned. If t is positive, the first message in the queue with its type equal to t is returned. Finally, if t is negative, the function returns the first message whose message type is the lowest value less than or equal to the absolute value of t.

To avoid resource exhaustion, there are some limits on the number of

IPC message queue resources allowed (by default, 16), on the size of

each message (by default, 8,192 bytes), and on the maximum total size

of the messages in a queue (by default, 16,384 bytes). As usual,

however, the system administrator can tune these values by writing

into the /proc/sys/kernel/msgmni,

/proc/sys/kernel/msgmnb, and

/proc/sys/kernel/msgmax files, respectively.

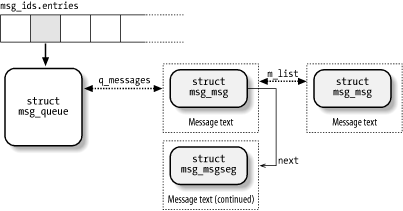

The data structures associated with IPC message queues are shown in

Figure 19-2. The msg_ids variable

stores the ipc_ids data structure of the IPC

message queue resource type; its entries field is

an array of pointers to msg_queue data

structures—one item for every IPC message queue resource.

Formally, the array stores pointers to

kern_ipc_perm data structures, but each such

structure is simply the first field of the

msg_queue data structure. All fields of the

msg_queue data structure are shown in Table 19-11.

Table 19-11. The msg_queue data structure

|

Type |

Field |

Description |

|---|---|---|

|

|

|

|

|

|

|

Time of last |

|

|

|

Time of last |

|

|

|

Last change time |

|

|

|

Number of bytes in queue |

|

|

|

Number of messages in queue |

|

|

|

Maximum number of bytes in queue |

|

|

|

PID of last |

|

|

|

PID of last |

|

|

|

List of messages in queue |

|

|

|

List of processes receiving messages |

|

|

|

List of processes sending messages |

The most important field is q_messages, which

represents the head (i.e., the first dummy element) of a doubly

linked circular list containing all messages currently in the queue.

Each message is broken in one or more pages, which are dynamically

allocated. The beginning of the first page stores the message header,

which is a data structure of type msg_msg; its

fields are listed in Table 19-12. The

m_list field stores the pointers to the previous

and next messages in the queue. The message text starts right after

the msg_msg descriptor; if the message is longer

than 4,072 bytes (the page size minus the size of the

msg_msg descriptor), it continues on another page,

whose address is stored in the next field of the

msg_msg descriptor. The second page frame starts

with a descriptor of type msg_msgseg, which just

includes a next pointer storing the address of an

optional third page, and so on.

Table 19-12. The msg_msg data structure

|

Type |

Field |

Description |

|---|---|---|

|

|

|

Pointers for message list |

|

|

|

Message type |

|

|

|

Message text size |

|

|

|

Next portion of the message |

When the message queue is full (either the maximum number of messages

or the maximum total size has been reached), processes that try to

enqueue new messages may be blocked. The q_senders

field of the msg_queue data structure is the head

of a list that includes the pointers to the descriptors of all

blocked sending processes.

Even receiving processes may be blocked when the message queue is

empty (or the process specified a type of message not present in the

queue). The q_receivers field of the

msg_queue data structure is the head of a list of

msg_receiver data structures, one for every

blocked receiving process. Each of these structures essentially

includes a pointer to the process descriptor, a pointer to the

msg_msg structure of the message, and the type of

the requested message.

The most useful IPC mechanism is shared memory, which allows two or more processes to access some common data structures by placing them in an IPC shared memory region . Each process that wants to access the data structures included in an IPC shared memory region must add to its address space a new memory region (see Section 8.3), which maps the page frames associated with the IPC shared memory region. Such page frames can then be easily handled by the kernel through demand paging (see Section 8.4.3).

As with semaphores and message queues, the shmget( ) function is invoked to get the IPC identifier of a shared

memory region, optionally creating it if it does not already exist.

The shmat( ) function is invoked to

“attach” an IPC shared memory

region to a process. It receives as its parameter the identifier of

the IPC shared memory resource and tries to add a shared memory

region to the address space of the calling process. The calling

process can require a specific starting linear address for the memory

region, but the address is usually unimportant, and each process

accessing the shared memory region can use a different address in its

own address space. The process’s Page Tables are

left unchanged by shmat( ). We describe later what

the kernel does when the process tries to access a page that belongs

to the new memory region.

The shmdt( ) function is invoked to

“detach” an IPC shared memory

region specified by its IPC identifier—that is, to remove the

corresponding memory region from the process’s

address space. Recall that an IPC shared memory resource is

persistent: even if no process is using it, the corresponding pages

cannot be discarded, although they can be swapped out.

As for the other types of IPC resources, in order to avoid overuse of

memory by User Mode processes, there are some limits on the allowed

number of IPC shared memory regions (by default, 4,096), on the size

of each segment (by default, 32 megabytes), and on the maximum total

size of all segments (by default, 8 gigabytes). As usual, however,

the system administrator can tune these values by writing into the

/proc/sys/kernel/shmmni,

/proc/sys/kernel/shmmax, and

/proc/sys/kernel/shmall files, respectively.

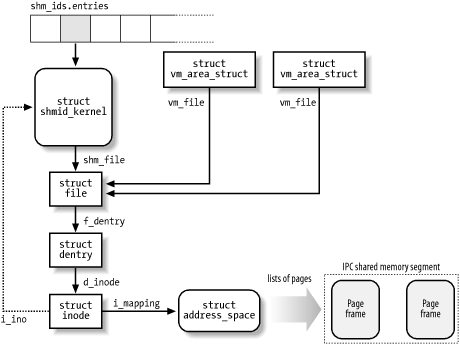

The data structures associated with IPC shared memory regions are

shown in Figure 19-3. The shm_ids

variable stores the ipc_ids data structure of the

IPC shared memory resource type; its entries field

is an array of pointers to shmid_kernel data

structures, one item for every IPC shared memory resource. Formally,

the array stores pointers to kern_ipc_perm data

structures, but each such structure is simply the first field of the

msg_queue data structure. All fields of the

shmid_kernel data structure are shown in Table 19-13.

Table 19-13. The fields in the shmid_kernel data structure

|

Type |

Field |

Description |

|---|---|---|

|

|

|

|

|

|

|

Special file of the segment |

|

|

|

Slot index of the segment |

|

|

|

Number of current attaches |

|

|

|

Segment size in bytes |

|

|

|

Last access time |

|

|

|

Last detach time |

|

|

|

Last change time |

|

|

|

PID of creator |

|

|

|

PID of last accessing process |

The most important field is shm_file, which stores

the address of a file object. This reflects the tight integration of

IPC shared memory with the VFS layer in Linux 2.4. In particular,

each IPC shared memory region is associated with a regular file

belonging to the

shm special

filesystem (see Section 12.3.1).

Since the shm filesystem has no mount point in

the system directory tree, no user can open and access its files by

means of regular VFS system calls. However, whenever a process

“attaches” a segment, the kernel

invokes do_mmap( ) and creates a new shared memory

mapping of the file in the address space of the process. Therefore,

files that belong to the shm special filesystem

have just one file object method, mmap, which is

implemented by the shm_mmap( ) function.

As shown in Figure 19-3, a memory region that

corresponds to an IPC shared memory region is described by a

vm_area_struct object (see Section 15.2); its vm_file field points

back to the file object of the special file, which in turn references

a dentry object and an inode object. The inode number, stored in the

i_ino field of the inode, is actually the slot

index of the IPC shared memory region, so the inode object indirectly

references the shmid_kernel descriptor.

As usual, for any shared memory mapping, page frames that belong to

the IPC shared memory region are included in the page cache through

an address_space object referenced by the

i_mapping field of the inode (you might also refer

to Figure 15-4).

The kernel has to be careful when swapping out pages included in shared memory regions, and the role of the swap cache is crucial (this topic was already discussed in Section 16.3).

As explained in Section 16.5.1, to swap out

a page owned by an address_space object, the

kernel essentially marks the page as dirty, thus triggering a data

transfer to disk, and then removes the page from the

process’s Page Table. If the page belongs to a

shared file memory mapping, eventually the page is no longer

referenced by any process, and the shrink_cache( )

function will release it to the Buddy system (see Section 16.7.5). This is fine because the data in the page is

just a duplicate of some data on disk.

However, pages of an IPC shared memory region map a special inode that has no image on disk. Moreover, an IPC shared memory region is persistent—that is, its pages must be preserved even when the segment is not attached to any process. Therefore, the kernel cannot simply discard the pages when reclaiming the corresponding page frames; rather, the pages have to be swapped out.

The try_to_swap_out( ) function includes no check

for this special case, so a page belonging to the region is marked as

dirty and removed from the process address space. Even the

shrink_cache( ) function, which periodically

prunes the page cache from the least recently used pages, has no

check for this special case, so it ends up invoking the

writepage method of the owner

address_space object (see Section 16.7.5).

How, then, are IPC shared memory regions preserved when their pages

are swapped out? Pages belonging to IPC shared memory regions

implement the writepage method by means of a

custom shmem_writepage( ) function, which

essentially allocates a new page slot in a swap area and moves the

page from the page cache to the swap cache (it’s

just a matter of changing the owner address_space

object of the page). The function also stores the swapped-out page

identifier in a shmem_inode_info structure

embedded in the filesystem-specific portion of the inode object.

Notice that the page is not immediately written onto the swap area:

this is done when the shrink_cache( ) is invoked

again.

The

pages added to a process by shmat( ) are dummy

pages; the function adds a new memory region into a

process’s address space, but it

doesn’t modify the process’s Page

Tables. Moreover, as we have seen, pages of an IPC shared memory

region can be swapped out. Therefore, these pages are handled through

the demand paging mechanism.

As we know, a Page Fault occurs when a process tries to access a

location of an IPC shared memory region whose underlying page frame

has not been assigned. The corresponding exception handler determines

that the faulty address is inside the process address space and that

the corresponding Page Table entry is null; therefore, it invokes the

do_no_page( ) function (see Section 8.4.3). In turn, this function checks whether the

nopage method for the memory region is defined.

That method is invoked, and the Page Table entry is set to the

address returned from it (see also Section 15.2.4).

Memory regions used for IPC shared memory always define the

nopage method. It is implemented by the

shmem_nopage( ) function, which performs the

following operations:

Walks the chain of pointers in the VFS objects and derives the address of the inode object of the IPC shared memory resource (see Figure 19-3).

Computes the logical page number inside the segment from the

vm_startfield of the memory region descriptor and the requested address.Checks whether the page is already included in the swap cache (see Section 16.3); if so, it terminates by returning its address.

Checks whether the

shmem_inode_infoembedded in the inode object stores a swapped-out page identifier for the logical page number. If so, it performs a swap-in operation by invokingswapin_readahead( )(see Section 16.6.1), waits until the data transfer completes, and terminates by returning the address of the page.Otherwise, the page is not stored in a swap area; therefore, the function allocates a new page from the Buddy system, inserts into the page cache, and returns its address.

The do_no_page( ) function sets the entry that

corresponds to the faulty address in the process’s

Page Table so that it points to the page frame returned by the

method.

[126] The ftok( ) function attempts to create a

new key from a file pathname and an 8-bit project identifier passed

as parameters. It does not guarantee, however, a unique key number,

since there is a small chance that it will return the same IPC key to

two different applications using different pathnames and project

identifiers.

[127] This implies, of course, the existence of another communication channel between the processes not based on IPC.

[128] Another IPC design flaw is that a User Mode process cannot atomically create and initialize an IPC semaphore, since these two operations are performed by two different IPC functions.

[129] Notice that they are just invalidated and not freed, since it would be too costly to remove the data structures from the per-process lists of all processes.

[130] As we’ll see, the message queue is implemented by means of a linked list. Since messages can be retrieved in an order different from “first in, first out,” the name “message queue” is not appropriate. However, new messages are always put at the end of the linked list.