Linux implements a memory region by

means of an object of type vm_area_struct; its

fields are shown in Table 8-3.

Table 8-3. The fields of the memory region object

|

Type |

Field |

Description |

|---|---|---|

|

|

|

Pointer to the memory descriptor that owns the region |

|

|

|

First linear address inside the region |

|

|

|

First linear address after the region |

|

|

|

Next region in the process list |

|

|

|

Access permissions for the page frames of the region |

|

|

|

Flags of the region |

|

|

|

Data for the red-black tree (see later in this chapter) |

|

|

|

Pointer to the next element in the file memory mapping list |

|

|

|

Pointer to previous element in the file memory mapping list |

|

|

|

Pointer to the methods of the memory region |

|

|

|

Offset in mapped file, if any (see Chapter 15) |

|

struct file * |

vm_file |

Pointer to the file object of the mapped file, if any |

|

|

|

End of current read-ahead window of the mapped file (see Section 15.2.4) |

|

|

|

Pointer to private data of the memory region |

Each memory region descriptor identifies a linear address interval.

The vm_start field contains the first linear

address of the interval, while the vm_end field

contains the first linear address outside of the interval;

vm_end - vm_start thus denotes the length of the

memory region. The vm_mm field points to the

mm_struct memory descriptor of the process that

owns the region. We shall describe the other fields of

vm_area_struct as they come up.

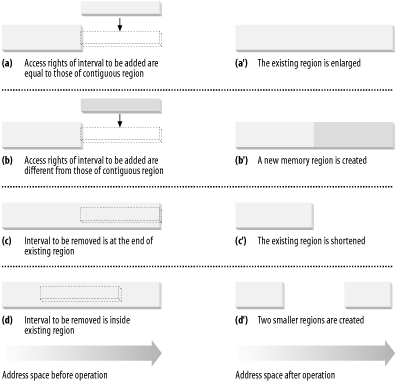

Memory regions owned by a process never overlap, and the kernel tries to merge regions when a new one is allocated right next to an existing one. Two adjacent regions can be merged if their access rights match.

As shown in Figure 8-1, when a new range of linear addresses is added to the process address space, the kernel checks whether an already existing memory region can be enlarged (case a). If not, a new memory region is created (case b). Similarly, if a range of linear addresses is removed from the process address space, the kernel resizes the affected memory regions (case c). In some cases, the resizing forces a memory region to split into two smaller ones (case d ).[58]

The vm_ops field points to a

vm_operations_struct data structure, which stores

the methods of the memory region. Only three methods are defined:

-

open Invoked when the memory region is added to the set of regions owned by a process.

-

close Invoked when the memory region is removed from the set of regions owned by a process.

-

nopage Invoked by the Page Fault exception handler when a process tries to access a page not present in RAM whose linear address belongs to the memory region (see the later section Section 8.4).

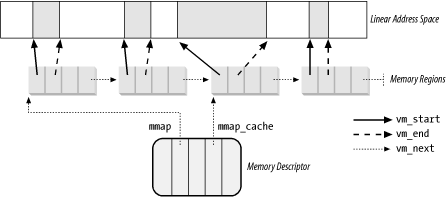

All the regions owned by a process

are linked in a simple list. Regions appear in the list in ascending

order by memory address; however, successive regions can be separated

by an area of unused memory addresses. The vm_next

field of each vm_area_struct element points to the

next element in the list. The kernel finds the memory regions through

the mmap field of the process memory descriptor,

which points to the first memory region descriptor in the list.

The map_count field of the memory descriptor

contains the number of regions owned by the process. A process may

own up to MAX_MAP_COUNT different memory regions

(this value is usually set to 65,536).

Figure 8-2 illustrates the relationships among the address space of a process, its memory descriptor, and the list of memory regions.

A frequent operation performed by the kernel is to search the memory region that includes a specific linear address. Since the list is sorted, the search can terminate as soon as a memory region that ends after the specific linear address is found.

However, using the list is convenient only if the process has very few memory regions—let’s say less than a few tens of them. Searching, inserting elements, and deleting elements in the list involve a number of operations whose times are linearly proportional to the list length.

Although most Linux processes use very few memory regions, there are some large applications, such as object-oriented databases, that one might consider “pathological” because they have many hundreds or even thousands of regions. In such cases, the memory region list management becomes very inefficient, hence the performance of the memory-related system calls degrades to an intolerable point.

Therefore, Linux 2.4 stores memory descriptors in data structures called red-black trees .[59] In an red-black tree, each element (or node ) usually has two children: a left child and a right child . The elements in the tree are sorted. For each node N, all elements of the subtree rooted at the left child of N precede N, while, conversely, all elements of the subtree rooted at the right child of N follow N (see Figure 8-3(a); the key of the node is written inside the node itself.

Moreover, a red-black tree must satisfy four additional rules:

Every node must be either red or black.

The root of the tree must be black.

The children of a red node must be black.

Every path from a node to a descendant leaf must contain the same number of black nodes. When counting the number of black nodes, null pointers are counted as black nodes.

These four rules ensure that any red-black tree with n internal nodes has a height of at most 2log(n + 1).

Searching an element in a red-black tree is thus very efficient because it requires operations whose execution time is linearly proportional to the logarithm of the tree size. In other words, doubling the number of memory regions adds just one more iteration to the operation.

Inserting and deleting an element in a red-black tree is also efficient because the algorithm can quickly traverse the tree to locate the position at which the element will be inserted or from which it will be removed. Any new node must be inserted as a leaf and colored red. If the operation breaks the rules, a few nodes of the tree must be moved or recolored.

For instance, suppose that an element having the value 4 must be inserted in the red-black tree shown in Figure 8-3(a). Its proper position is the right child of the node that has key 3, but once it is inserted, the red node that has the value 3 has a red child, thus breaking rule 3. To satisfy the rule, the color of nodes that have the values 3, 4, and 7 is changed. This operation, however, breaks rule 4, thus the algorithm performs a “rotation” on the subtree rooted at the node that has the key 19, producing the new red-black tree shown in Figure 8-3(b). This looks complicated, but inserting or deleting an element in a red-black tree requires a small number of operations—a number linearly proportional to the logarithm of the tree size.

Therefore, to store the memory regions of a process, Linux uses both a linked list and a red-black tree. Both data structures contain pointers to the same memory region descriptors, When inserting or removing a memory region descriptor, the kernel searches the previous and next elements through the red-black tree and uses them to quickly update the list without scanning it.

The head of the linked list is referenced by the

mmap field of the memory descriptor. Any memory

region object stores the pointer to the next element of the list in

the vm_next field. The head of the red-black tree

is referred by the mm_rb field of the memory

descriptor. Any memory region object stores the color of the node, as

well as the pointers to the parent, the left child, and the right

child, into the vm_rb field of type

rb_node_t.

In general, the red-black tree is used to locate a region including a specific address, while the linked list is mostly useful when scanning the whole set of regions.

Before moving on, we should clarify the relation between a page and a memory region. As mentioned in Chapter 2, we use the term “page” to refer both to a set of linear addresses and to the data contained in this group of addresses. In particular, we denote the linear address interval ranging between 0 and 4,095 as page 0, the linear address interval ranging between 4,096 and 8,191 as page 1, and so forth. Each memory region therefore consists of a set of pages that have consecutive page numbers.

We have already discussed two kinds of flags associated with a page:

A few flags such as

Read/Write,Present, orUser/Supervisorstored in each Page Table entry (see Section 2.4.1).A set of flags stored in the

flagsfield of eachpagedescriptor (see Section 7.1).

The first kind of flag is used by the 80 × 86 hardware to check whether the requested kind of addressing can be performed; the second kind is used by Linux for many different purposes (see Table 7-2).

We now introduce a third kind of flags: those associated with the

pages of a memory region. They are stored in the

vm_flags field of the

vm_area_struct descriptor (see Table 8-4). Some flags offer the kernel information

about all the pages of the memory region, such as what they contain

and what rights the process has to access each page. Other flags

describe the region itself, such as how it can grow.

Table 8-4. The memory region flags

|

Flag name |

Description |

|---|---|

|

|

Pages can be read. |

|

|

Pages can be written. |

|

|

Pages can be executed. |

|

|

Pages can be shared by several processes. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

The region can expand toward lower addresses. |

|

|

The region can expand toward higher addresses. |

|

|

The region is used for IPC’s shared memory. |

|

|

The region maps a file that cannot be opened for writing. |

|

|

The region maps an executable file. |

|

|

Pages in the region are locked and cannot be swapped out. |

|

|

The region maps the I/O address space of a device. |

|

|

The application accesses the pages sequentially. |

|

|

The application accesses the pages in a truly random order. |

|

|

Does not copy the region when forking a new process. |

|

|

Forbids region expansion through |

|

|

Does not swap out the region. |

Page access rights included in a memory region descriptor may be combined arbitrarily. It is possible, for instance, to allow the pages of a region to be executed but not read. To implement this protection scheme efficiently, the read, write, and execute access rights associated with the pages of a memory region must be duplicated in all the corresponding Page Table entries so that checks can be directly performed by the Paging Unit circuitry. In other words, the page access rights dictate what kinds of access should generate a Page Fault exception. As we shall see shortly, the job of figuring out what caused the Page Fault is delegated by Linux to the Page Fault handler, which implements several page-handling strategies.

The initial values of the Page Table flags (which must be the same

for all pages in the memory region, as we have seen) are stored in

the vm_page_ prot field of the

vm_area_struct descriptor. When adding a page, the

kernel sets the flags in the corresponding Page Table entry according

to the value of the vm_page_prot field.

However, translating the memory region’s access rights into the page protection bits is not straightforward for the following reasons:

In some cases, a page access should generate a Page Fault exception even when its access type is granted by the page access rights specified in the

vm_flagsfield of the corresponding memory region. For instance, as we shall see in Section 8.4.4 later in this chapter, the kernel may wish to store two identical, writable private pages (whoseVM_SHAREflags are cleared) belonging to two different processes into the same page frame; in this case, an exception should be generated when either one of the processes tries to modify the page.80 × 86 processors’s Page Tables have just two protection bits, namely the

Read/WriteandUser/Supervisorflags. Moreover, theUser/Supervisorflag of any page included in a memory region must always be set, since the page must always be accessible by User Mode processes.

To overcome the hardware limitation of the 80 × 86 microprocessors, Linux adopts the following rules:

The read access right always implies the execute access right.

The write access right always implies the read access right.

Moreover, to correctly defer the allocation of page frames through the Section 8.4.4 technique (see later in this chapter), the page frame is write-protected whenever the corresponding page must not be shared by several processes. Therefore, the 16 possible combinations of the read, write, execute, and share access rights are scaled down to the following three:

If the page has both write and share access rights, the

Read/Writebit is set.If the page has the read or execute access right but does not have either the write or the share access right, the

Read/Writebit is cleared.If the page does not have any access rights, the

Presentbit is cleared so that each access generates a Page Fault exception. However, to distinguish this condition from the real page-not-present case, Linux also sets thePagesizebit to 1.[60]

The downscaled protection bits corresponding to each combination of

access rights are stored in the protection_map

array.

Having

the basic understanding of data structures and state information that

control memory handling, we can look at a group of low-level

functions that operate on memory region descriptors. They should be

considered auxiliary functions that simplify the implementation of

do_mmap( ) and do_munmap( ).

Those two functions, which are described in Section 8.3.4 and Section 8.3.5 later in this chapter,

enlarge and shrink the address space of a process, respectively.

Working at a higher level than the functions we consider here, they

do not receive a memory region descriptor as their parameter, but

rather the initial address, the length, and the access rights of a

linear address interval.

The

find_vma( ) function acts on two parameters: the

address mm of a process memory descriptor and a

linear address addr. It locates the first memory

region whose vm_end field is greater than

addr and returns the address of its descriptor; if

no such region exists, it returns a NULL pointer.

Notice that the region selected by find_vma( )

does not necessarily include addr because

addr may lie outside of any memory region.

Each memory

descriptor includes a mmap_cache field that stores

the descriptor address of the region that was last referenced by the

process. This additional field is introduced to reduce the time spent

in looking for the region that contains a given linear address.

Locality of address references in programs makes it highly likely

that if the last linear address checked belonged to a given region,

the next one to be checked belongs to the same region.

The function thus starts by checking whether the region identified by

mmap_cache includes addr. If

so, it returns the region descriptor pointer:

vma = mm->mmap_cache;

if (vma && vma->vm_end > addr && vma->vm_start <= addr)

return vma;Otherwise, the memory regions of the process must be scanned, and the function looks up the memory region in the red-black tree:

rb_node = mm->mm_rb.rb_node;

vma = NULL;

while (rb_node) {

vma_tmp = rb_entry(rb_node, struct vm_area_struct, vm_rb);

if (vma_tmp->vm_end > addr) {

vma = vma_tmp;

if (vma_tmp->vm_start <= addr)

break;

rb_node = rb_node->rb_left;

} else

rb_node = rb_node->rb_right;

}

if (vma)

mm->mmap_cache = vma;

return vma;The function uses the rb_entry macro, which

derives from a pointer to a node of the red-black tree the address of

the corresponding memory region descriptor.

The kernel also defines the find_vma_prev( )

function (which returns the descriptor addresses of the memory region

that precedes the linear address given as parameter and of the memory

region that follows it) and the find_vma_prepare( ) function (which locates the position of the new leaf in

the red-black tree that corresponds to a given linear address and

returns the addresses of the preceding memory region and of the

parent node of the leaf to be inserted).

The

find_vma_intersection( ) function finds the first

memory region that overlaps a given linear address interval; the

mm parameter points to the memory descriptor of

the process, while the start_addr and

end_addr linear addresses specify the interval:

vma = find_vma(mm,start_addr);

if (vma && end_addr <= vma->vm_start)

vma = NULL;

return vma;The function returns a NULL pointer if no such

region exists. To be exact, if find_vma( ) returns

a valid address but the memory region found starts after the end of

the linear address interval, vma is set to

NULL.

The

arch_get_unmapped_area( ) function searches the

process address space to find an available linear address interval.

The len parameter specifies the interval length,

while the addr parameter may specify the address

from which the search is started. If the search is successful, the

function returns the initial address of the new interval; otherwise,

it returns the error code -ENOMEM.

if (len > TASK_SIZE)

return -ENOMEM;

addr = (addr + 0xfff) & 0xfffff000;

if (addr && addr + len <= TASK_SIZE) {

vma = find_vma(current->mm, addr);

if (!vma || addr + len <= vma->vm_start)

return addr;

}

addr = (TASK_SIZE/3 + 0xfff) & 0xfffff000;

for (vma = find_vma(current->mm, addr); ; vma = vma->vm_next) {

if (addr + len > TASK_SIZE)

return -ENOMEM;

if (!vma || addr + len <= vma->vm_start)

return addr;

addr = vma->vm_end;

}The function starts by checking to make sure the interval length is

within the limit imposed on User Mode linear addresses, usually 3 GB.

If addr is different from zero, the function tries

to allocate the interval starting from addr. To be

on the safe side, the function rounds up the value of

addr to a multiple of 4 KB. If

addr is 0 or the previous search failed, the

search’s starting point is set to one-third of the

User Mode linear address space. Starting from

addr, the function then repeatedly invokes

find_vma( ) with increasing values of

addr to find the required free interval. During

this search, the following cases may occur:

The requested interval is larger than the portion of linear address space yet to be scanned (

addr + len > TASK_SIZE). Since there are not enough linear addresses to satisfy the request, return-ENOMEM.The hole following the last scanned region is not large enough (

vma != NULL &&vma->vm_start < addr + len). Consider the next region.If neither one of the preceding conditions holds, a large enough hole has been found. Return

addr.

insert_vm_struct( )

inserts a

vm_area_struct structure in the memory region

object list and red-black tree of a memory descriptor. It uses two

parameters: mm, which specifies the address of a

process memory descriptor, and vma, which

specifies the address of the vm_area_struct object

to be inserted. The vm_start and

vm_end fields of the memory region object must

have already been initialized. The function invokes the

find_vma_prepare( ) function to look up the

position in the red-black tree mm->mm_rb where

vma should go. Then insert_vm_struct( ) invokes the vma_link( ) function,

which in turn:

Acquires the

mm->page_table_lockspin lock.Inserts the memory region in the linked list referenced by

mm->mmap.Inserts the memory region in the red-black tree

mm->mm_rb.Releases the

mm->page_table_lockspin lock.Increments by 1 the

mm->map_countcounter.

If the region contains a memory-mapped file, the vma_link( ) function performs additional tasks that are described in

Chapter 16.

The kernel also defines the _ _insert_vm_struct( )

function, which is identical to insert_vm_struct( ) but doesn’t acquire any lock before

modifying the memory region data structures referenced by

mm. The kernel uses it when it is sure that no

concurrent accesses to the memory region data structures can

happen—for instance, because it already acquired a suitable

lock.

The _ _vma_unlink( ) function receives as

parameter a memory descriptor address mm and two

memory region object addresses vma and

prev. Both memory regions should belong to

mm, and prev should precede

vma in the memory region ordering. The function

removes vma from the linked list and the red-black

tree of the memory descriptor.

Now let’s discuss how

new linear

address intervals are allocated. To do this, the do_mmap( ) function creates and initializes a new memory region for

the current process. However, after a successful

allocation, the memory region could be merged with other memory

regions defined for the process.

The function uses the following parameters:

-

fileandoffset File descriptor pointer

fileand file offsetoffsetare used if the new memory region will map a file into memory. This topic is discussed in Chapter 15. In this section, we assume that no memory mapping is required and thatfileandoffsetare bothNULL.-

addr This linear address specifies where the search for a free interval must start.

-

len The length of the linear address interval.

-

prot This parameter specifies the access rights of the pages included in the memory region. Possible flags are

PROT_READ,PROT_WRITE,PROT_EXEC, andPROT_NONE. The first three flags mean the same things as theVM_READ,VM_WRITE,andVM_EXECflags.PROT_NONEindicates that the process has none of those access rights.-

flag This parameter specifies the remaining memory region flags:

-

MAP_GROWSDOWN,MAP_LOCKED,MAP_DENYWRITE, andMAP_EXECUTABLE Their meanings are identical to those of the flags listed in Table 8-4.

-

MAP_SHAREDandMAP_PRIVATE The former flag specifies that the pages in the memory region can be shared among several processes; the latter flag has the opposite effect. Both flags refer to the

VM_SHAREDflag in thevm_area_structdescriptor.-

MAP_ANONYMOUS No file is associated with the memory region (see Chapter 15).

-

MAP_FIXED The initial linear address of the interval must be exactly the one specified in the

addrparameter.-

MAP_NORESERVE The function doesn’t have to do a preliminary check on the number of free page frames.

-

The do_mmap( ) function performs some preliminary

checks on the value of offset and then executes

the do_mmap_pgoff() function. Assuming that the

new interval of linear address does not map a file on disk, the

latter function executes the following steps:

Checks whether the parameter values are correct and whether the request can be satisfied. In particular, it checks for the following conditions that prevent it from satisfying the request:

The linear address interval has zero length or includes addresses greater than

TASK_SIZE.The process has already mapped too many memory regions, so the value of the

map_countfield of itsmmmemory descriptor exceedsMAX_MAP_COUNT.The

flagparameter specifies that the pages of the new linear address interval must be locked in RAM, and the number of pages locked by the process exceeds the threshold stored in therlim[RLIMIT_MEMLOCK].rlim_curfield of the process descriptor.

If any of the preceding conditions holds,

do_mmap_pgoff( )terminates by returning a negative value. If the linear address interval has a zero length, the function returns without performing any action.Obtains a linear address interval for the new region; if the

MAP_FIXEDflag is set, a check is made on theaddrvalue. Otherwise, thearch_get_unmapped_area( )function is invoked to get it:if (flags & MAP_FIXED) { if (addr + len > TASK_SIZE) return -ENOMEM; if (addr & ~PAGE_MASK) return -EINVAL; } else addr = arch_get_unmapped_area(file, addr, len, pgoff, flags);Computes the flags of the new memory region by combining the values stored in the

protandflagsparameters:vm_flags = calc_vm_flags(prot,flags) | mm->def_flags | VM_MAYREAD | VM_MAYWRITE | VM_MAYEXEC; if (flags & MAP_SHARED) vm_flags |= VM_SHARED | VM_MAYSHARE;The

calc_vm_flags( )function sets theVM_READ,VM_WRITE, andVM_EXECflags invm_flagsonly if the correspondingPROT_READ,PROT_WRITE, andPROT_EXECflags inprotare set; it also sets theVM_GROWSDOWN,VM_DENYWRITE, andVM_EXECUTABLEflags invm_flagsonly if the correspondingMAP_GROWSDOWN,MAP_DENYWRITE, andMAP_EXECUTABLEflags inflagsare set. A few other flags are set to 1 invm_flags:VM_MAYREAD,VM_MAYWRITE,VM_MAYEXEC, the default flags for all memory regions inmm->def_flags,[61] and bothVM_SHAREDandVM_MAYSHAREif the memory region has to be shared with other processes.Invokes

find_vma_prepare( )to locate the object of the memory region that shall precede the new interval, as well as the position of the new region in the red-black tree:for (;;) { vma = find_vma_prepare(mm, addr, &prev, &rb_link, &rb_parent); if (!vma || vma->vm_start >= addr + len) break; if (do_munmap(mm, addr, len)) return -ENOMEM; }The

find_vma_prepare( )function also checks whether a memory region that overlaps the new interval already exists. This occurs when the function returns a non-NULLaddress pointing to a region that starts before the end of the new interval. In this case,do_mmap_pgoff( )invokesdo_munmap( )to remove the new interval and then repeats the whole step (see the later section Section 8.3.5).Checks whether inserting the new memory region causes the size of the process address space

(mm->total_vm<<PAGE_SHIFT)+lento exceed the threshold stored in therlim[RLIMIT_AS].rlim_curfield of the process descriptor. In the affirmative case, it returns the error code-ENOMEM. Notice that the check is done here and not in Step 1 with the other checks because some memory regions could have been removed in Step 4.Returns the error code

-ENOMEMif theMAP_NORESERVEflag was not set in theflagsparameter, the new memory region contains private writable pages, and the number of free page frames is less than the size (in pages) of the linear address interval; this last check is performed by thevm_enough_memory( )function.If the new interval is private (

VM_SHAREDnot set) and it does not map a file on disk, invokesvma_merge( )to check whether the preceding memory region can be expanded in such a way to include the new interval. Of course, the preceding memory region must have exactly the same flags as those memory regions stored in thevm_flagslocal variable. If the preceding memory region can be expanded,vma_merge( )also tries to merge it with the following memory region (this occurs when the new interval fills the hole between two memory regions and all three have the same flags). In case it succeeds in expanding the preceding memory region, jump to Step 12.Allocates a

vm_area_structdata structure for the new memory region by invoking thekmem_cache_alloc( )slab allocator function.Initializes the new memory region object (pointed by

vma):vma->vm_mm = mm; vma->vm_start = addr; vma->vm_end = addr + len; vma->vm_flags = vm_flags; vma->vm_page_prot = protection_map[vm_flags & 0x0f]; vma->vm_ops = NULL; vma->vm_pgoff = pgoff; vma->vm_file = NULL; vma->vm_private_data = NULL; vma->vm_raend = 0;If the

MAP_SHAREDflag is set (and the new memory region doesn’t map a file on disk), the region is used for IPC shared memory. The function invokesshmem_zero_setup( )to initialize it (see Chapter 19).Invokes

vma_link( )to insert the new region in the memory region list and red-black tree (see the earlier section Section 8.3.3.4).Increments the size of the process address space stored in the

total_vmfield of the memory descriptor.If the

VM_LOCKEDflag is set, increments the counter of locked pagesmm->locked_vm:if (vm_flags & VM_LOCKED) { mm->locked_vm += len >> PAGE_SHIFT; make_pages_present(addr, addr + len); }The

make_pages_present( )function is invoked to allocate all the pages of the memory region in succession and lock them in RAM. The function, in turn, invokesget_user_pages( )as follows:write = (vma->vm_flags & VM_WRITE) != 0; get_user_pages(current, current->mm, addr, len, write, 0, NULL, NULL);The

get_user_pages( )function cycles through all starting linear addresses of the pages betweenaddrandaddr+len; for each of them, it invokesfollow_page( )to check whether there is a mapping to a physical page in thecurrent’s Page Tables. If no such physical page exists,get_user_pages( )invokeshandle_mm_fault( ), which, as we shall see in Section 8.4.2, allocates one page frame and sets its Page Table entry according to thevm_flagsfield of the memory region descriptor.Finally, terminates by returning the linear address of the new memory region.

The

do_munmap( ) function deletes a linear address

interval from the address space of the current process. The

parameters are the starting address addr of the

interval and its length len. The interval to be

deleted does not usually correspond to a memory region; it may be

included in one memory region or span two or more regions.

The function goes through two main phases. First, it scans the list of memory regions owned by the process and removes all regions that overlap the linear address interval. In the second phase, the function updates the process Page Tables and reinserts a downsized version of the memory regions that were removed during the first phase.

The do_munmap( ) function executes the following steps:

Performs some preliminary checks on the parameter values. If the linear address interval includes addresses greater than

TASK_SIZE, ifaddris not a multiple of 4,096, or if the linear address interval has a zero length, it returns the error code-EINVAL.Locates the first memory region that overlaps the linear address interval to be deleted:

mpnt = find_vma_prev(current->mm, addr, &prev); if (!mpnt || mpnt->vm_start >= addr + len) return 0;If the linear address interval is located inside a memory region, its deletion splits the region into two smaller ones. In this case,

do_munmap( )checks whethercurrentis allowed to obtain an additional memory region:if ((mpnt->vm_start < addr && mpnt->vm_end > addr + len) && current->mm->map_count > MAX_MAP_COUNT) return -ENOMEM;Attempts to get a new

vm_area_structdescriptor. There may be no need for it, but the function makes the request anyway so that it can terminate right away if the allocation fails. This cautious approach simplifies the code since it allows an easy error exit.Builds up a list that includes all descriptors of the memory regions that overlap the linear address interval. This list is created by setting the

vm_nextfield of the memory region descriptor (temporarily) so it points to the previous item in the list; this field thus acts as a backward link. As each region is added to this backward list, a local variable namedfreepoints to the last inserted element. The regions inserted in the list are also removed from the list of memory regions owned by the process and from the red-black tree (by means of therb_erase( )function):npp = (prev ? &prev->vm_next : ¤t->mm->mmap); free = NULL; spin_lock(¤t->mm->page_table_lock); for ( ; mpnt && mpnt->vm_start < addr + len; mpnt = *npp) { *npp = mpnt->vm_next; mpnt->vm_next = free; free = mpnt; rb_erase(&mpnt->vm_rb, ¤t->mm->mm_rb); } current->mm->mmap_cache = NULL; spin_unlock(¤t->mm->page_table_lock);

A while

cycle is used to scan the list of memory regions built in the first

phase, starting with the memory region descriptor that

free points to.

In each iteration, the mpnt local variable points

to the descriptor of a memory region in the list. The

map_count field of the

current->mm memory descriptor is decremented

(since the region has been removed in the first phase from the list

of regions owned by the process) and a check is made (by means of two

question-mark conditional expressions) to determine whether the

mpnt region must be eliminated or simply

downsized:

current->mm->map_count--; st = addr < mpnt->vm_start ? mpnt->vm_start : addr; end = addr+len; end = end > mpnt->vm_end ? mpnt->vm_end : end; size = end - st;

The st and end local variables

delimit the linear address interval in the mpnt

memory region that should be deleted; the size

local variable specifies the length of the interval.

Next, do_munmap( ) releases the page frames

allocated for the pages included in the interval from

st to end:

zap_page_range(mm, st, size);

The zap_page_range( ) function deallocates the

page frames included in the interval from st to

end and updates the corresponding Page Table

entries. The function invokes in nested fashion the

zap_pmd_range( ) and zap_pte_range( ) functions for scanning the Page Tables; the latter

function clears the Page Table entries and frees the corresponding

page frames (or slot in a swap area; see Chapter 14). While doing this, zap_pte_range( ) also invalidates the TLB entries corresponding to the

interval from st to end.

The last action performed in each iteration of the

do_munmap( ) loop is to check whether a downsized

version of the mpnt memory region must be

reinserted in the list of regions of current:

extra = unmap_fixup(mm, mpnt, st, size, extra);

The unmap_fixup( ) function considers four

possible cases:

The memory region has been totally canceled. It returns the address of the previously allocated memory region object (see Step 4 in the earlier section Section 8.3.5.1), which can be released by invoking

kmem_cache_free( ).Only the lower part of the memory region has been removed:

(mpnt->vm_start < st) && (mpnt->vm_end == end)

In this case, it updates the

vm_endfield ofmnpt, invokes_ _insert_vm_struct( )to insert the downsized region in the list of regions belonging to the process, and returns the address of the previously allocated memory region object.Only the upper part of the memory region has been removed:

(mpnt->vm_start == st) && (mpnt->vm_end > end)

In this case, it updates the

vm_startfield ofmnpt, invokes_ _insert_vm_struct( )to insert the downsized region in the list of regions belonging to the process, and returns the address of the previously allocated memory object.The linear address interval is in the middle of the memory region:

(mpnt->vm_start < st) && (mpnt->vm_end > end)

It updates the

vm_startandvm_endfields ofmnptand of the previously allocated extra memory region object so that they refer to the linear address intervals, respectively, frommpnt->vm_starttostand fromendtompnt->vm_end. Then it invokes_ _insert_vm_struct( )twice to insert the two regions in the list of regions belonging to the process and in the red-black tree, and returnsNULL, thus preserving the memory region object previously allocated.

This terminates the description of what must be done in a single

iteration of the second-phase loop of do_munmap( ).

After handling all the memory region descriptors in the list built

during the first phase, do_munmap( ) checks if the

additional extra memory descriptor has been used. If the address

returned by unmap_fixup( ) is

NULL, the descriptor has been used; otherwise,

do_munmap( ) invokes kmem_cache_free( ) to release it. Finally, do_munmap( )

invokes the free_pgtables( ) function: it again

scans the Page Table entries corresponding to the linear address

interval just removed and reclaims the page frames that store unused

Page Tables.

[58] Removing a linear address interval may theoretically fail because no free memory is available for a new memory descriptor.

[59] Up to Version 2.4.9, the Linux kernel used another type of balanced search tree called AVL tree .

[60] You might consider this use of the Page size

bit to be a dirty trick, since the bit was meant to

indicate the real page size. But Linux can get away with the

deception because the 80 × 86 chip checks the

Page size bit in Page Directory entries, but not

in Page Table entries.

[61] Actually, the

def_flags field of the memory descriptor is

modified only by the mlockall( ) system call,

which can be used to set the VM_LOCKED flag, thus

locking all future pages of the calling process in RAM.