In most game engines, we would have the luxury of being able to port inefficient script code into the faster C++ area if we were hitting performance issues. In Unity, this is not an option unless we invest serious cash in obtaining the Unity source code, which is offered as a license separate from the Free/Personal/Pro licensing system, on a per-case, per-title basis. This forces the overwhelming majority of us into a position of needing to make our C# script-level code as performant as possible. So, what does all of this backstory we've been covering mean for us when it comes to the task of performance optimization?

Firstly, we won't be covering anything that is specific to the UnityScript and Boo languages (although much of the knowledge translates to those languages).

Secondly, even though all of our script code might be in C#, we need to be aware that the overall Unity Engine is built from multiple components that each maintain their own memory domains.

Thirdly, only some tasks we perform will awaken the dreaded garbage collector. There are quite a few memory allocation approaches that we can use to avoid it entirely.

Finally, things can change quite a bit depending on the target platform we're running against and every assumption should be tested for validity if we're stumbling into unexpected memory bottlenecks.

The memory space within the Unity Engine can be essentially split into three different memory domains. Each domain stores different types of data and takes care of a very different set of tasks.

The first domain is the Native Domain. This is the underlying foundation of the Unity Engine, which is written in C++ and compiled to native code depending on which platform is being targeted. This area takes care of allocating memory space for things such as Asset data (for example, Textures and Meshes) and memory space for various subsystems, such as the Rendering system, Physics, Input, and so on. Finally, it includes native representations of important gameplay objects such as GameObject and Component. This is where a lot of the base Component classes keep their data, such as the Transform and Rigidbody Components.

The second memory domain, the Managed Domain, is where the Mono platform does its work, and is the area of memory that is maintained by the Garbage Collector. Any scripting objects and custom classes are stored within this memory domain. It also includes wrappers for the very same object representations that are stored within the Native Domain. This is the origin of the bridge between Mono code and Native code: each domain has its own representation for the same entity, and crossing the bridge between them too much can inflict some fairly significant performance hits on our game, as we learned in the previous chapters.

When a new GameObject or Component is instantiated, it involves allocating memory in both the Managed and Native Domains. This allows subsystems such as Physics and Rendering systems to control and render an object through its transform data in the Native Domain, while the Transform Component from our script code is merely a way to reference through the bridge into the Native memory space and change the object's transform data. Crossing back and forth across this bridge should be minimized as much as possible, due to the overhead involved, as we saw with techniques such as caching position/rotation changes before applying them back in Chapter 2, Scripting Strategies.

The third and final memory domain(s) are those of Native and External DLLs, such as DirectX, OpenGL, and any custom DLLs we attach to our project. Referencing from Mono C# code into such DLLs will cause a similar memory space transition as that between Mono code and Native code.

We have no direct control over what is going on in the Native Domain without the Unity Engine source code, but we do have a lot of indirect control by means of various script-level functions. There are technically a variety of memory allocators available, which are used internally for things such as GameObjects, Graphics objects, and the Profiler, but these are hidden behind the Native code wall.

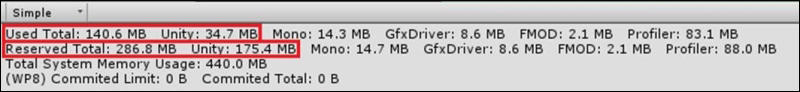

However, we can observe how much memory has been allocated and reserved in this memory domain via the Memory Area of the Profiler. Native memory allocations show up under the values labeled "Unity", and we can even get more information using the Detailed view and sampling the current frame.

Under the Scene Memory section of the Detailed view, we can observe that MonoBehaviour objects always consume a constant amount of memory, regardless of their member data. This is the memory consumed by the Native representation of the object. Note that 376 bytes of memory are consumed by a MonoBehaviour in Editor Mode, while only 156 bytes are consumed when profiling through a standalone application.

We can also use the Profiler.GetRuntimeMemorySize() method to get the Native memory allocation size of a particular object.

Managed object representations are intrinsically linked to their Native representations. The best way to minimize our Native memory allocations is to simply optimize our Managed memory usage.

Most modern operating systems split dynamic memory into two categories: the stack and the heap. The stack is a special reserved space in memory, dedicated to small, short-lived data values, which are automatically deallocated the moment they go out of scope. The stack contains local variables and also handles the loading and unloading of functions as they're called, expanding and contracting along with the call stack. Deallocations in the stack are basically free because the data is instantly forgotten and no longer referenceable. New data simply overwrites the old data, since the start of the next memory allocation is always known, and there's no reason to perform any clean-up operations.

Because data in the stack is very short-lived, the total stack size is usually very small, on the order of megabytes. It's possible to cause a stack overflow by allocating more space than the stack can support. This can occur with exceptionally deep call stacks (for example, infinite recursion) or a very large number of local variables, but in most cases a stack overflow should not be a concern despite the stack's relatively small size.

The heap represents all remaining memory space, and it is used for the overwhelming majority of dynamic memory allocation. Whenever a data type is too big to fit in the stack or must exist outside the function it was declared in, then it must be allocated on the heap. Mono's heap is special in that it is managed by a Garbage Collector (it is sometimes referred to as a Managed Heap). During application initialization, Mono will request a given chunk of memory from the OS and use it to generate the heap. The heap starts off fairly small, less than one Megabyte, but will grow as new blocks of memory are needed by our script code.

We can verify how much memory has been allocated and reserved for the heap using the Memory Area of the Profiler, this time under the values labeled "Mono".

We can also determine the current used and reserved heap space at runtime using the Profiler.GetMonoUsedSize() and Profiler.GetMonoHeapSize() methods, respectively.

When a memory request is made and there is enough empty space in the reserved heap block to satisfy it, Mono allocates the space and hands it over to whoever requested it. But if the heap does not have room for it, the Garbage Collector awakens, scans all existing memory allocations for anything that is no longer being used, and cleans those up first, before attempting to expand the current heap space.

The Garbage Collector in the version of Mono that Unity uses is a type of Tracing Garbage Collector, which uses a Mark-and-Sweep strategy. The algorithm works in two phases and relies on each allocated object being tracked with an additional bit that flags whether the object has been marked or not. These flags start off set to 0 (or false).

When the collection process begins, the first phase marks (sets the flag to 1, or true) all objects that are still reachable by the program. An object is reachable either directly, through a reference such as a static variable or a local variable on the stack, or indirectly, through the fields (member data) of other directly or indirectly reachable objects. In this way, it gathers the set of objects that are still referenceable.

The second phase involves iterating through every object reference in the heap (which Mono will have been tracking throughout the lifetime of the application) and verifying whether or not they are marked. If so, then the object is ignored. But, if it is not marked, then it is a candidate for deallocation. During this phase, all marked objects are skipped over, but not before setting their flag back to false for the first phase of the next garbage collection.

Once the second phase ends, all unmarked objects are deallocated to free space, and then the initial request to create the object is revisited. If there is enough space for the object, then it is allocated in that space and the process ends. But, if not, then it must allocate a new block for the heap by requesting it from the Operating System, at which point the memory allocation request can finally be completed.
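To make the two phases concrete, here is a toy model of a tracing mark-and-sweep collector in plain C#. It is only an illustration under simplifying assumptions: ToyObject and ToyHeap are hypothetical types, the Roots list stands in for static fields and stack locals, and real collectors (including Mono's) work on raw memory rather than on a List<T>.

```csharp
using System.Collections.Generic;

// Hypothetical toy model of mark-and-sweep; NOT Mono's implementation.
public class ToyObject
{
    public bool Marked; // the per-object mark bit, initially false
    public readonly List<ToyObject> Fields = new List<ToyObject>(); // references held in member data
}

public class ToyHeap
{
    public readonly List<ToyObject> AllObjects = new List<ToyObject>(); // every allocation the heap tracks
    public readonly List<ToyObject> Roots = new List<ToyObject>();      // stand-ins for statics and stack locals

    public ToyObject Allocate()
    {
        var obj = new ToyObject();
        AllObjects.Add(obj);
        return obj;
    }

    // Runs one collection and returns how many objects were freed.
    public int Collect()
    {
        // Phase 1: mark everything reachable from the roots, directly or through fields.
        var pending = new Stack<ToyObject>(Roots);
        while (pending.Count > 0)
        {
            ToyObject current = pending.Pop();
            if (current.Marked) continue; // already visited, which also handles cycles
            current.Marked = true;
            foreach (ToyObject child in current.Fields)
                pending.Push(child);
        }

        // Phase 2: sweep the whole heap. Unmarked objects are freed; marked ones
        // survive and have their flag reset for the next collection.
        int freed = AllObjects.RemoveAll(o => !o.Marked);
        foreach (ToyObject survivor in AllObjects)
            survivor.Marked = false;
        return freed;
    }
}
```

For example, if a root references one object and a third object is referenced by nothing (not even through a cycle back to itself), a call to Collect() frees only the third object and leaves both survivors unmarked, ready for the next collection.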

In an ideal world, where we only keep allocating and deallocating objects but only a finite number of them exist at once, the heap would maintain a constant size because there's always enough space to fit the object. However, all objects in an application are rarely deallocated in the same order they were allocated, and even more rarely do they all have the same size in memory. This leads to memory fragmentation.

Fragmentation occurs when objects are allocated and deallocated in different orders. This is best explained through an example that walks through four stages of memory allocation within a typical heap memory space:

- We start with an empty heap space (1).

- We then allocate four objects on the heap, A, B, C and D, each sized at 64 bytes (2).

- At some later time, we deallocate two of the objects A and C (3).

- This technically frees 128 bytes worth of space, but since the objects were not contiguous (adjoining neighbors) in memory, we have really only deallocated two separate 64-byte regions of memory. If, at some point later, we wish to allocate a new object that is larger than 64 bytes, say 128 bytes (4), then we cannot use the space that was previously freed by objects A and C, since neither region is individually large enough to fit the new object (objects must always consume contiguous memory spaces). Therefore, the new object must be allocated in the next available 128 contiguous bytes in the heap space.
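The same scenario can be replayed with a toy first-fit allocator. This is a hypothetical sketch, not how Mono manages its heap: ToyFirstFitHeap tracks named blocks by offset, and the 384-byte capacity is an arbitrary number chosen so that the heap starts with room for four 64-byte objects plus 128 spare bytes.

```csharp
using System.Collections.Generic;

// Hypothetical toy first-fit allocator, used only to illustrate fragmentation.
public class ToyFirstFitHeap
{
    private readonly int _capacity;
    // Live blocks, keyed by a name for readability: name -> (offset, size) in bytes.
    private readonly Dictionary<string, (int Offset, int Size)> _live =
        new Dictionary<string, (int Offset, int Size)>();

    public ToyFirstFitHeap(int capacity) { _capacity = capacity; }

    // Total free bytes, whether or not they are contiguous.
    public int FreeBytes
    {
        get
        {
            int used = 0;
            foreach (var block in _live.Values) used += block.Size;
            return _capacity - used;
        }
    }

    // First fit: returns the offset of the first gap large enough for 'size',
    // or -1 if no single contiguous gap can hold it.
    public int Allocate(string name, int size)
    {
        var blocks = new List<(int Offset, int Size)>(_live.Values);
        blocks.Sort((a, b) => a.Offset.CompareTo(b.Offset));

        int cursor = 0; // start of the gap currently being examined
        foreach (var block in blocks)
        {
            if (block.Offset - cursor >= size) break; // the gap before this block fits
            cursor = block.Offset + block.Size;       // otherwise skip past this block
        }
        if (cursor + size > _capacity) return -1;     // not even the trailing gap fits

        _live[name] = (cursor, size);
        return cursor;
    }

    public void Free(string name) => _live.Remove(name);
}
```

Once A and C are freed, a 128-byte allocation lands at offset 256, after all the existing blocks. A second 128-byte request then fails even though 128 bytes are technically free, because those bytes are split across two 64-byte holes; a 64-byte request, by contrast, reuses A's old slot at offset 0.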

Over time, our heap memory will become riddled with more and more, smaller and smaller empty spaces such as these, as objects of different sizes are deallocated and the system later tries to allocate new objects within the smallest available space they can fit within. The smaller these regions become, the less usable they are for new memory allocations. To use an analogy, the memory space begins to resemble Swiss cheese, with many small holes that become unusable to us. In the absence of background techniques that automatically clean up this fragmentation, this effect would occur in literally any memory space (RAM, heap space, and even hard drives, which are just larger, slower, and more permanent memory storage areas). This is why it's a good idea to defragment our hard drives from time to time!

Memory fragmentation causes two problems. Firstly, it effectively reduces the total usable memory space for new objects over long periods of time, depending on the frequency of allocations and deallocations. Secondly, it makes new allocations take longer to resolve, due to the extra time it takes to find a new memory space large enough to fit the object.

This becomes important when new memory allocations are made in a heap, since where the free spaces are located becomes just as important as how much free space is available. Even if we technically have 128 bytes of free space to fit a new object, if it is not contiguous space, then the heap must either continue searching until it finds a large enough space or the entire heap size must be increased to fit the new object, by requesting a new memory allocation from the OS in order to expand the heap space.

So, in a worst case scenario, when a new memory allocation is being requested by our game, the CPU would have to spend cycles completing the following tasks before the allocation is finally completed:

- Verify if there is enough contiguous space for the new object.

- If not, iterate through all known direct and indirect references, marking them as reachable.

- Iterate through the entire heap, flagging unmarked objects for deallocation.

- Verify a second time if there is enough contiguous space for the new object.

- If not, request a new memory block from the OS, in order to expand the heap.

- Allocate the new object at the front of the newly allocated block.
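The worst-case path above can be summarized as control flow. This is a minimal sketch, assuming hypothetical delegate hooks (HasContiguousSpace, RunMarkAndSweep, GrowHeap, Place) in place of the runtime's internals; its only purpose is to show the order of the checks and where the expensive work happens.

```csharp
using System;

// Control-flow sketch of the worst-case allocation path. All four hooks are
// hypothetical stand-ins for runtime internals and must be supplied by the caller.
public class AllocationPath
{
    public Func<int, bool> HasContiguousSpace; // checks for a large enough contiguous gap
    public Action RunMarkAndSweep;             // marks reachable objects, then sweeps the heap
    public Action<int> GrowHeap;               // requests a new block from the OS
    public Action<int> Place;                  // hands out the space to the requester

    public string Allocate(int size)
    {
        // Cheap case: there is already room.
        if (HasContiguousSpace(size)) { Place(size); return "fast path"; }

        // Expensive case: run a full collection, then check again.
        RunMarkAndSweep();
        if (HasContiguousSpace(size)) { Place(size); return "after collection"; }

        // Worst case: the collection did not help, so the heap has to grow.
        GrowHeap(size);
        Place(size);
        return "after heap growth";
    }
}
```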

This can be a lot of work for the CPU to handle, particularly if the new memory allocation is for an important game object such as a particle effect, a new character entering the scene, a cutscene transition, and so on. Users are likely to notice moments where the Garbage Collector freezes gameplay to handle these tasks. To make matters worse, the garbage collection workload scales as the allocated heap space grows, since sweeping through a few megabytes of space will be significantly faster than scanning several gigabytes of space.

All of this makes it absolutely critical to control our heap space intelligently. The lazier our memory usage tactics are, the worse the Garbage Collector will behave in an almost exponential fashion. So, it's a little ironic that, despite the efforts of managed languages to solve the memory management problem, managed language developers can still find themselves being just as, if not more, concerned with memory consumption than developers of native applications!

The Garbage Collector runs on two separate threads: the Main thread and the Finalizer thread. When the Garbage Collector is invoked, it will run on the Main thread and flag heap memory blocks for future deallocation. This does not happen immediately. The Finalizer thread, controlled by Mono, can have a delay of several seconds before the memory is finally freed and available for reallocation.

We can observe this behavior in the Total Allocated block (the green line, with apologies to that 5 percent of the population with deuteranopia/deuteranomaly) of the Memory Area within the Profiler. It can take several seconds for the total allocated value to drop after a garbage collection has occurred. Because of the delay, we should not rely on memory being available the moment it has been deallocated, and as such, we should never waste time trying to eke out every last byte of memory that we believe should be available. We must ensure that there is always some kind of buffer zone available for future allocations.

Blocks that have been freed by the Garbage Collector may sometimes be given back to the Operating System after some time, which would reduce the reserved space consumed by the heap and allow the memory to be allocated for something else, such as another application. But, this is very unpredictable and depends on the platform being targeted, so we shouldn't rely on it. The only safe assumption to make is that, as soon as the memory has been allocated to Mono, it's then reserved and is no longer available to either the Native Domain or any other application running on the same system.

One strategy to minimize garbage collection problems is concealment: manually invoking the Garbage Collector at opportune moments, when the player is unlikely to notice. A collection can be invoked by simply calling the following method:

```csharp
System.GC.Collect();
```

Good opportunities to invoke a collection include level loading, moments when gameplay is paused, shortly after a menu interface has been opened, the middle of cutscene transitions, or really any break in gameplay where the player would not witness, or care about, a sudden performance drop. We could even use the Profiler.GetMonoUsedSize() and Profiler.GetMonoHeapSize() methods at runtime to determine whether a garbage collection needs to be invoked in the near future.
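A minimal sketch of concealment might look like the following. To keep it runnable outside Unity, it uses .NET's GC.GetTotalMemory() in place of Profiler.GetMonoUsedSize(); the OpportunisticCollector name, the gameplayPaused flag, and the budgetBytes threshold are all hypothetical, and a real game would derive the last two from its own state.

```csharp
using System;

// Hypothetical helper: only force a collection during a pause in gameplay,
// and only once managed memory has grown past a chosen budget.
public static class OpportunisticCollector
{
    public static bool CollectIfOverBudget(bool gameplayPaused, long budgetBytes)
    {
        if (!gameplayPaused) return false;    // never stall during active gameplay
        long used = GC.GetTotalMemory(false); // approximate bytes currently allocated
        if (used < budgetBytes) return false; // not worth the hitch yet
        GC.Collect();
        return true;
    }
}
```

A loading screen could call CollectIfOverBudget(true, budget) once before hiding itself, while ordinary frames would pass false and never trigger a collection.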

We can also cause the deallocation of a handful of specific objects. If the object in question is one of the Unity object wrappers, such as a GameObject or MonoBehaviour component, then the Finalizer will first invoke the Dispose() method within the Native Domain. At this point, the memory consumed by both the Native and Managed Domains will then be freed. In some rare instances, if the Mono wrapper implements the IDisposable interface (that is, it has a Dispose() method available from script code), then we can actually control this behavior and force the memory to be freed instantly.

The only known and useful case of this (that this author is currently aware of) is the WWW class. This class is most often used to connect to a web server and download Asset data during runtime. This class needs to allocate several buffers in the Native Domain in order to accomplish this task. It needs to make room for the compressed file, a decompression buffer, and the final decompressed file. If we kept all of this memory for a long time, it would be a colossal waste of precious space. So, by calling its Dispose() method from script code, we can ensure that the memory buffers are freed promptly and precisely when they need to be.
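WWW itself only exists inside Unity, so the following sketch uses a hypothetical DownloadBuffers class to show the general IDisposable pattern. A using block guarantees that Dispose() is called as soon as the block exits, rather than whenever a collection or finalizer gets around to it. Here the buffer is an ordinary managed array, so Dispose() can only drop the reference; a real native wrapper would release its native memory at that point.

```csharp
using System;

// Hypothetical stand-in for a wrapper such as WWW that owns large buffers.
public sealed class DownloadBuffers : IDisposable
{
    private byte[] _compressed; // stands in for a native buffer in this sketch
    public bool IsDisposed { get; private set; }

    public DownloadBuffers(int size) { _compressed = new byte[size]; }

    public int Length
    {
        get
        {
            if (IsDisposed) throw new ObjectDisposedException(nameof(DownloadBuffers));
            return _compressed.Length;
        }
    }

    public void Dispose()
    {
        if (IsDisposed) return; // safe to call more than once
        _compressed = null;     // stop this object from keeping the buffer alive
        IsDisposed = true;
    }
}
```

Typical usage wraps the object in a using statement, for example `using (var buffers = new DownloadBuffers(1024)) { /* read buffers */ }`, so the cleanup happens at a point in the code we choose.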

For other Assets, Unity offers unloading methods that clean up unused asset data, such as Resources.UnloadUnusedAssets(). The actual asset data is stored within the Native Domain, so the Garbage Collector technically isn't involved here, but the idea is basically the same: the method iterates through the loaded Assets, checks whether each is still being referenced, and, if not, deallocates it. But, again, this is an asynchronous process, and we cannot guarantee when deallocation will occur. This method is automatically called internally after a Scene is loaded, but even this doesn't guarantee instant deallocation.

Where possible, Resources.UnloadAsset() (that is, unloading one specific Asset at a time) is the preferred way to clean up Asset data, since no time is spent iterating through the entire collection. It's also worth noting that these unloading methods were upgraded to be multithreaded in Unity 5, which reduces the performance cost of cleaning up Asset data rather significantly on most platforms.

However, the best strategy for garbage collection will always be avoidance: if we allocate as little heap memory as possible and control its usage carefully, then we won't have to worry about the Garbage Collector inflicting performance costs as frequently or as severely. We will learn many tactics for this during the remainder of the chapter, but first we should cover some theory on how and where memory is allocated.

Not all memory allocations we make within Mono will go through the heap. The .NET Framework (and by extension the C# language, which merely implements the .NET specification) has the concept of Value types and Reference types, and only the latter need to be marked by the Garbage Collector while it performs its Mark-and-Sweep algorithm. Reference types are expected to (or need to) last a long time in memory, either due to their complexity, their size, or how they're used. Large datasets and any kind of object instantiated from a class are Reference types. This also includes arrays (whether they contain Value types or Reference types), delegates, and all classes, such as MonoBehaviour, GameObject, and any custom classes we define.

Value types are normally allocated on the stack. Primitive data types such as bools, ints, and floats are examples of Value types, but they live on the stack only if they're standalone and not a member of a Reference type. As soon as a primitive data type is contained within a Reference type, such as a class or an array, it is implied that it is either too large for the stack or will need to survive longer than the current scope, so it must be allocated on the heap instead, as part of its container.
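We can ask the runtime directly how it classifies a type through System.Type.IsValueType. This sketch uses a hypothetical TypeKinds helper that reports true for primitives such as int, float, and bool, and false for arrays, delegates, and classes, matching the categories described above.

```csharp
// Hypothetical helper that reports whether the runtime treats T as a Value type.
public static class TypeKinds
{
    // true for structs and primitives (int, float, bool, ...),
    // false for classes, arrays, delegates, and other Reference types.
    public static bool IsValueType<T>() => typeof(T).IsValueType;
}
```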

All of this can be best explained through examples. The following code will create an integer as a Value type that exists on the stack only temporarily:

```csharp
using UnityEngine;

public class TestComponent : MonoBehaviour {
    void Start() {
        int data = 5; // allocated on the stack
        DoSomething(data);
    } // integer is deallocated from the stack here

    void DoSomething(int value) { } // placeholder so the snippet compiles
}
```

As soon as the Start() method ends, the integer is deallocated from the stack. This is essentially a free operation since, as mentioned previously, it doesn't bother doing any cleanup; it just moves the stack pointer back to the previous memory location in the call stack. Any future stack allocations simply overwrite the old data. Most importantly, no heap allocation took place to create the data, so the Garbage Collector is completely unaware of its existence.

But, if we created an integer as a member variable of the MonoBehaviour class definition, then it is now contained within a Reference type (a class) and must be allocated on the heap along with its container:

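```csharp
using UnityEngine;

public class TestComponent : MonoBehaviour {
    private int _data = 5; // stored inside the heap-allocated component

    void Start() {
        DoSomething(_data);
    }

    void DoSomething(int value) { } // placeholder so the snippet compiles
}
```

Similarly, if we put the integer into an independent class, the rules for Reference types still apply, and the object is allocated on the heap: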

```csharp
using UnityEngine;

public class TestData {
    public int data = 5;
}

public class TestComponent : MonoBehaviour {
    void Start() {
        TestData dataObj = new TestData(); // allocated on the heap
        DoSomething(dataObj.data);
    } // 'dataObj' is not deallocated here, but it will become a candidate during the next garbage collection

    void DoSomething(int value) { } // placeholder so the snippet compiles
}
```

So, there is a big difference between temporarily allocating memory within a class method and storing long-term data in a class's member data. In both cases, we're using a Reference type (a class) to store the data, which means it can be referenced elsewhere. For example, imagine that DoSomething() took the TestData object itself and stored the reference within a member variable:

```csharp
private TestData _testDataObj;

void DoSomething(TestData dataObj) {
    _testDataObj = dataObj; // a new reference created! The referenced object will now be marked during mark-and-sweep
}
```

In this case, we would not be able to deallocate the object pointed to by dataObj as soon as the Start() method ended, because the number of references to the object would only drop from 2 to 1. This is not 0: the object is still reachable through _testDataObj, so the Garbage Collector would still mark it during mark-and-sweep. We would need to set _testDataObj to null, or make it reference something else, before the object is no longer reachable.

Note that a Value type must have a value and can never be null. If a stack-allocated Value type is assigned into a Reference type (for example, stored in one of its fields or array elements), then the data is simply copied. This is true even for arrays of Value types:

```csharp
public class TestClass {
    private int[] _intArray = new int[1000]; // Reference type full of Value types

    void StoreANumber(int num) {
        _intArray[0] = num; // store a Value within the array
    }
}
```

When the initial array is created (during object initialization), 1,000 integers will be allocated on the heap, each set to a value of 0. When the StoreANumber() method is called, the value of num is merely copied into the zeroth element of the array, rather than storing a reference to it.
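The copy semantics of StoreANumber() can be checked directly. In this sketch, ValueCopyDemo is a hypothetical rework of TestClass with an accessor added so the array contents can be inspected; after the call, element 0 holds a copy of the argument and the untouched elements are still 0.

```csharp
// Hypothetical variant of TestClass with an accessor added for inspection.
public class ValueCopyDemo
{
    private readonly int[] _intArray = new int[1000]; // one heap allocation; all elements start at 0

    public void StoreANumber(int num)
    {
        _intArray[0] = num; // copies the value of num into element 0
        num = 999;          // reassigning the parameter afterwards does not touch the array
    }

    public int ElementAt(int index) => _intArray[index];
}
```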

The subtle change in referencing capability is what ultimately distinguishes a Reference type from a Value type, and we should try to use standalone Value types whenever we have the opportunity, so that they generate stack allocations instead of heap allocations. Any situation where we're just sending around a piece of data that doesn't need to live longer than the current scope is a good opportunity to use a Value type instead of a Reference type. It does not matter whether we pass the data into another method of the same class or a method of another class; it still remains a Value type that will exist on the stack until the method that created it goes out of scope.

Technically, something is duplicated every time a data value is passed as an argument from one method to another, whether it is a Value type or a Reference type. This is known as passing by value. The main difference is that a Reference type variable merely holds a pointer, which consumes only 4 or 8 bytes in memory (32-bit or 64-bit, depending on the architecture) regardless of what it is actually pointing to. When a Reference type is passed as an argument, it is actually the value of this pointer that gets copied, which is very quick since the data is very small.

Meanwhile, a Value type contains the full and complete bits of data stored within the object. Hence, all of the data of a Value type gets copied whenever it is passed between methods or stored in other Value types. This means that passing a large Value type around as an argument too often can be more costly than just using a Reference type. For most Value types this is not a problem, since they are comparable in size to a pointer. But this becomes important when we begin to talk about structs in the next section.
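The following sketch contrasts the two cases, using hypothetical PointStruct and PointClass types that each hold a single int. Passing the struct hands the callee its own copy, so the caller's value is unchanged; passing the class hands over a copy of the reference, so the change is visible to the caller. IntPtr.Size reports the pointer size (4 or 8 bytes) that copying a reference costs on the current platform.

```csharp
// Hypothetical types used only for this comparison.
public struct PointStruct { public int X; }
public class PointClass { public int X; }

public static class PassByValueDemo
{
    // The struct arrives as a full copy; incrementing X changes only the copy.
    public static void Bump(PointStruct p) { p.X += 1; }

    // The class arrives as a copy of the reference; both refer to one heap object.
    public static void Bump(PointClass p) { p.X += 1; }

    // Size in bytes of a reference on this platform: 4 on 32-bit, 8 on 64-bit.
    public static int ReferenceSize => System.IntPtr.Size;
}
```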

Data can be passed around by reference as well, by using the ref keyword, but this is very different from the concept of Value and Reference types, and it is very important to keep them distinct in our mind when we try to understand what is going on under the hood. We can pass a Value type by value, or by reference, and we can pass a Reference type by value, or by reference. This means that there are four distinct data passing situations that can occur depending on which type is being passed and whether the ref keyword is being used or not.

When data is passed by reference (even if it is a Value type!) then making any changes to the data will change the original. For example, the following code would print the value 10:

```csharp
void Start() {
    int myInt = 5;
    DoSomething(ref myInt);
    Debug.Log(String.Format("Value = {0}", myInt)); // prints "Value = 10"
}

void DoSomething(ref int val) {
    val = 10; // writes through to the caller's myInt
}
```

Removing the ref keyword from both places would make it print the value 5 instead. This understanding will come in handy when we start to think about some of the more interesting data types we have access to, namely structs, arrays, and strings.
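The four combinations can be laid out side by side. This sketch assumes a hypothetical Box class holding one int; the comments state what the caller observes after each call.

```csharp
// Hypothetical Reference type used to illustrate the four passing situations.
public class Box { public int Value; }

public static class PassingModes
{
    // Value type by value: the callee edits its own copy; the caller is unaffected.
    public static void ValueByValue(int x) { x = 10; }

    // Value type by reference: the caller's variable changes.
    public static void ValueByRef(ref int x) { x = 10; }

    // Reference type by value: mutating the shared object is visible to the caller,
    // but reassigning the parameter only changes the callee's copy of the reference.
    public static void ReferenceByValue(Box b)
    {
        b.Value = 10;
        b = new Box();
        b.Value = 99;
    }

    // Reference type by reference: the caller's variable now points at the new object.
    public static void ReferenceByRef(ref Box b) { b = new Box { Value = 20 }; }
}
```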

Structs are an interesting special case in C#. If we come to C# from a C++ background, then we would probably assume that the only difference between a struct and a class is that a struct has a default access specifier of public, while a class defaults to private. However, in C#, structs are similar to classes in that they can contain private/internal/public data (though not protected members, since structs cannot be inherited from), have methods, can be instantiated at runtime, and so on. The core difference between the two is that structs are Value types and classes are Reference types.

There are some other important differences between how structs and classes are treated in C#: structs don't support inheritance, their fields cannot be given custom default values (member data always defaults to values such as 0 or null, since it is a Value type), and their default constructors cannot be overridden. This greatly restricts their usage compared to classes, so simply replacing all classes with structs (under the assumption that it will just allocate everything on the stack) is not a wise course of action.

However, if we're using a class in a situation whose only purpose is to send a blob of data to somewhere else in our application, and it does not need to last beyond the current scope, then we should use a struct instead, since a class would result in a heap allocation for no particularly good reason:

```csharp
public class DamageResult {
    public Character attacker;
    public Character defender;
    public int totalDamageDealt;
    public DamageType damageType;
    public int damageBlocked;
    // etc
}

public void DealDamage(Character _target) {
    DamageResult result = CombatSystem.Instance.CalculateDamage(this, _target);
    CreateFloatingDamageText(result);
}
```

In this example, we're using a class to pass a bunch of data from one subsystem (the combat system) to another (the UI system). The only purpose of this data is to be calculated and read by various subsystems, so this is a good candidate to convert into a struct.

Merely changing the DamageResult definition from a class to a struct could save us quite a few unnecessary garbage collections, since it would be allocated on the stack.

```csharp
public struct DamageResult {
    // ...
}
```

This is not a catch-all solution. Because structs are Value types, passing one as an argument between methods has a rather unique consequence. As we previously learned, every time a Value type is passed as an argument from one function to another, it is duplicated, since it is passed by value. This creates a duplicate Value type for the next method to use, which will be deallocated as soon as that method goes out of scope, and so on each time it is passed around. So, if a struct is passed by value through a chain of five methods, then five separate copies will exist on the stack at the same time. Recall that stack deallocations are free, but data copying is not.

The copying is pretty much negligible for small values, such as a single integer or float, but passing ridiculously large datasets around through structs over and over again is obviously not a trivial task and should be avoided. In such cases, it would be wiser to pass the struct by reference using the ref keyword to minimize the amount of data being copied each time (just a 32-bit or 64-bit value for the memory reference). However, this can be dangerous, since passing by reference allows any subsequent method to make changes to the struct, in which case it would be prudent to make its data values readonly (a readonly field can only be initialized in the constructor, and never again, even by the struct's own member functions) to prevent later changes.
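Here is a minimal sketch of that trade-off, assuming a hypothetical LargeStats struct made of eight long fields (64 bytes). Marking the fields readonly stops a callee from assigning them one at a time, but note that a ref parameter still allows the callee to replace the caller's whole struct, as Overwrite() shows.

```csharp
// Hypothetical 64-byte struct: 8 fields x 8 bytes each.
public struct LargeStats
{
    public readonly long A, B, C, D, E, F, G, H; // assignable only in the constructor

    public LargeStats(long seed)
    {
        A = seed; B = seed + 1; C = seed + 2; D = seed + 3;
        E = seed + 4; F = seed + 5; G = seed + 6; H = seed + 7;
    }
}

public static class StatsMath
{
    // By value: all 64 bytes are copied for this call.
    public static long SumByValue(LargeStats s) => s.A + s.B + s.C + s.D + s.E + s.F + s.G + s.H;

    // By reference: only a pointer-sized reference is passed.
    public static long SumByRef(ref LargeStats s) => s.A + s.B + s.C + s.D + s.E + s.F + s.G + s.H;

    // Caveat: readonly fields block "s.A = 0;", but a ref parameter still lets
    // the callee overwrite the caller's entire struct.
    public static void Overwrite(ref LargeStats s) => s = new LargeStats(100);
}
```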

All of the above is also true when structs are contained within Reference types:

```csharp
public struct DataStruct {
    public int val;
}

public class StructHolder {
    public DataStruct _memberStruct;

    public void StoreStruct(DataStruct ds) {
        _memberStruct = ds;
    }
}
```

To the untrained eye, the preceding code appears to be attempting to store a stack-allocated struct within a Reference type. Does this mean that a StructHolder object on the heap can now reference an object on the stack? What will happen when the StoreStruct() method goes out of scope and the struct is erased? It turns out that these are the wrong questions.

What's actually happening is that, while a DataStruct object (_memberStruct) has been allocated on the heap within the StructHolder object, it is still a Value type and does not magically transform into a Reference type. So, all of the usual rules for Value types apply. The _memberStruct variable cannot have a value of null and all of its fields will be initialized to 0 or null values. When StoreStruct() is called, the data from ds will be copied into _memberStruct. There are no references to stack objects taking place, and there is no concern about lost data.
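The behavior described above can be checked with the same DataStruct and StructHolder definitions, repeated here so the block stands alone. Changing the caller's local struct after the call has no effect on the copy stored in the holder.

```csharp
public struct DataStruct { public int val; }

public class StructHolder
{
    public DataStruct _memberStruct; // lives inside the heap object; never null, starts with val == 0

    public void StoreStruct(DataStruct ds) { _memberStruct = ds; } // copies the data, not a reference
}
```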

Arrays can potentially contain a huge amount of data within them, which makes them difficult to treat as a Value type, since there's probably not enough room on the stack to support them. Therefore, they are treated as a Reference type so that the entire dataset can be passed around via a single reference, instead of duplicating the entire array every time it is passed around. This is true irrespective of whether the array contains Value types or Reference types.

This means that the following code will result in a heap allocation:

TestStruct[] dataObj = new TestStruct[1000];
for(int i = 0; i < 1000; ++i) {
    dataObj[i].data = i;
    DoSomething(dataObj[i]);
}

The following functionally equivalent code would not result in any heap allocations, since the structs are Value types, and hence would be created on the stack:

for(int i = 0; i < 1000; ++i) {
    TestStruct dataObj = new TestStruct();
    dataObj.data = i;
    DoSomething(dataObj);
}

The subtle difference in the second code block is that only one TestStruct exists on the stack at a time (at least for this function; DoSomething() can potentially create more), whereas the first block needs to allocate 1,000 of them via an array. Obviously, these methods are kind of ridiculous as they're written, but they illustrate an important point to consider. The compiler isn't smart enough to automatically find these situations for us and make the appropriate changes. Opportunities to optimize our memory usage through Value type replacements will be entirely down to our ability to detect them, and to understand why a change will result in stack allocations, rather than heap allocations.
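One way to verify this outside of Unity is to count managed allocations around each version. The sketch below assumes a .NET Core 3.0+ runtime, because it relies on GC.GetAllocatedBytesForCurrentThread(), which is not available in Unity's bundled Mono. TestStruct and DoSomething() are hypothetical stand-ins for the types used above.

```csharp
using System;

public struct TestStruct
{
    public int data;
}

public static class StackVsHeap
{
    static long _sink;                       // keeps DoSomething's work observable

    static void DoSomething(TestStruct s) { _sink += s.data; }

    // First version: one array of 1,000 structs, allocated on the heap.
    public static void WithArray()
    {
        TestStruct[] dataObj = new TestStruct[1000];
        for (int i = 0; i < 1000; ++i)
        {
            dataObj[i].data = i;
            DoSomething(dataObj[i]);
        }
    }

    // Second version: a single local struct at a time, no heap allocation.
    public static void WithLocal()
    {
        for (int i = 0; i < 1000; ++i)
        {
            TestStruct dataObj = new TestStruct();
            dataObj.data = i;
            DoSomething(dataObj);
        }
    }

    // Bytes allocated on the managed heap by this thread during the second
    // run of 'action'; the first run is a warm-up so JIT work is excluded.
    public static long Measure(Action action)
    {
        action();
        long before = GC.GetAllocatedBytesForCurrentThread();
        action();
        return GC.GetAllocatedBytesForCurrentThread() - before;
    }
}
```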

Note that, when we allocate an array of Reference types, we're creating an array of references, which can each reference other locations on the heap. However, when we allocate an array of Value types, we're creating a packed list of Value types on the heap. Each of these Value types will be initialized with a value of 0 (or equivalent), since they cannot be null, while each reference within an array of Reference types will always initialize to null, since no references have been assigned yet.

We briefly touched upon the subject of strings back in Chapter 2, Scripting Strategies, but now it's time to go into more detail about why proper string usage is extremely important.

Because strings are essentially arrays of characters (chars), they are Reference types, and follow all of the same rules as other Reference types; the value that is actually copied and passed between functions is merely a pointer, and they will be allocated on the heap.

The confusing part really begins when we discover that strings are immutable, meaning they cannot be changed after they've been allocated. Being an array implies that the entire list of characters must be contiguous in memory, which cannot be true if we allowed strings to expand or contract at will within a dynamic memory space (how could we quickly and safely expand the string if we've allocated something else immediately after it?).

This means that, if a string is modified, then a new string must be allocated to replace it, where the contents of the original will be copied and modified as needed into a whole new character array. If nothing else refers to the old version, it will no longer be referenced anywhere, will not be marked during mark-and-sweep, and will therefore eventually be garbage-collected. As a result, lazy string programming can result in a lot of unnecessary heap allocations and garbage collection.
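A quick illustration of immutability (StringImmutability is a hypothetical helper name): "modifying" a string with += actually produces a brand-new string object, while any other reference to the original still sees the unchanged text.

```csharp
public static class StringImmutability
{
    public static bool Demo()
    {
        string original = "Hello";
        string alias = original;           // two references to one string object
        original += " World";              // allocates a NEW string; "Hello" is untouched
        return alias == "Hello"
            && original == "Hello World"
            && !object.ReferenceEquals(alias, original);
    }
}
```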

For example, if we believed that strings worked just like other Reference types, we might be forgiven for assuming the log output of the following to be World!:

void Start() {
    string testString = "Hello";
    DoSomething(testString);
    Debug.Log(testString);
}

void DoSomething(string localString) {
    localString = "World!";
}

However, this is not the case, and it will still print out Hello. What is actually happening is that the localString variable, within the scope of DoSomething(), starts off referencing the same place in memory as testString, due to the reference being passed by value. This gives us two references pointing to the same location in memory as we would expect if we were dealing with any other Reference type. So far, so good.

But, as soon as we change the value of localString, we run into a little bit of a conflict. Strings are immutable and cannot be changed, so localString must instead be pointed at a different string containing the value World!; now the number of references to the Hello string returns to one. The original string (Hello) remains in memory because the value of testString has not been changed, and that is still the value which will be printed by Debug.Log(). All we've succeeded in doing by calling DoSomething() is re-pointing a local reference; had the new string been built at runtime, rather than coming from a literal, we would also have created a new string on the heap that gets garbage-collected without changing anything. This is the textbook definition of wasteful.

If we change the method definition of DoSomething() to pass the string by reference, via the ref keyword, the output would indeed change to World!. This is what we would expect to happen with a Value type, which leads a lot of developers to incorrectly assume that strings are Value types. But, this is an example of the fourth and final data-passing case where a Reference type is being passed by reference, which allows us to change what the original reference is referencing!
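A console-free version of the Start()/DoSomething() example makes the difference easy to check. The StringPassing class and the return values standing in for Debug.Log() are assumptions for this sketch; the by-value call leaves Hello in place, while the ref call re-points the caller's variable to World!.

```csharp
public static class StringPassing
{
    static void DoSomething(string localString)
    {
        localString = "World!";        // re-points only the local copy of the reference
    }

    static void DoSomethingByRef(ref string localString)
    {
        localString = "World!";        // re-points the caller's variable itself
    }

    public static string ByValue()
    {
        string testString = "Hello";
        DoSomething(testString);
        return testString;
    }

    public static string ByRef()
    {
        string testString = "Hello";
        DoSomethingByRef(ref testString);
        return testString;
    }
}
```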

Note

So, to recap: if we pass a Value type by value, we can only change the value of the copy. If we pass a Value type by reference, we can change the actual value attributed to the original version. If we pass a Reference type by value, we can make changes to the object that the original reference is referencing. And finally, if we pass a Reference type by reference, we can change which object the original reference is referencing.
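The four cases can be sketched side by side. ValueBox, RefBox, and PassingCases are hypothetical names; each method corresponds to one of the four data-passing cases in the recap, in the same order.

```csharp
public struct ValueBox { public int Value; }
public class RefBox { public int Value; }

public static class PassingCases
{
    // 1. Value type by value: only the copy changes.
    public static void SetCopy(ValueBox v) { v.Value = 1; }

    // 2. Value type by reference: the caller's struct changes.
    public static void SetOriginal(ref ValueBox v) { v.Value = 2; }

    // 3. Reference type by value: the shared object changes, but
    //    re-pointing 'r' afterwards does not affect the caller's variable.
    public static void MutateObject(RefBox r)
    {
        r.Value = 3;
        r = new RefBox { Value = -1 };
    }

    // 4. Reference type by reference: the caller's variable is re-pointed.
    public static void Repoint(ref RefBox r) { r = new RefBox { Value = 4 }; }
}
```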

Concatenation is the act of appending strings to one another to form a larger string. As we've learned, any such cases are likely to result in excess heap allocations. The biggest offender in string-based memory waste is concatenating strings using the + and += operators, because of the allocation chaining effect they cause.

For example, the following code tries to combine a group of strings together to print some information about a combat result:

void CreateFloatingDamageText(DamageResult result) {
    string outputText = result.attacker.GetCharacterName() + " dealt " +
        result.totalDamageDealt.ToString() + " " + result.damageType.ToString() +
        " damage to " + result.defender.GetCharacterName() +
        " (" + result.damageBlocked.ToString() + " blocked)";
    // ...
}

An example output of this function might be a string that reads:

Dwarf dealt 15 Slashing damage to Orc (3 blocked)

This function features a handful of string literals (hard-coded strings that are allocated once, rather than every time the function runs), such as " dealt ", " damage to ", and " blocked)". But, because of the usage of variables within this combined string, it cannot be compiled away at build time, and therefore must be generated dynamically at runtime.

Reading the code naively, we might expect a new heap allocation each time a + operator is executed: only a single pair of strings is merged at a time, the result of one merger is fed into the next and merged with another string, and so on until the entire string has been built. In practice, the C# compiler collapses a chain of + operators inside a single expression into one String.Concat() call, so this particular statement allocates the final string only once (alongside the temporary strings returned by the ToString() calls and, for this many operands, a temporary string array passed to Concat()). The allocation chaining effect bites whenever a string is built up across several statements instead, for example with += over multiple lines or inside a loop, because each statement must produce a complete, immutable string.

For example, if the same message were built up one piece at a time with +=, then 9 different strings would be allocated along the way, and all of them would eventually need to be garbage collected (note that the pieces are appended from left to right):

"Dwarf dealt "
"Dwarf dealt 15"
"Dwarf dealt 15 "
"Dwarf dealt 15 Slashing"
"Dwarf dealt 15 Slashing damage to "
"Dwarf dealt 15 Slashing damage to Orc"
"Dwarf dealt 15 Slashing damage to Orc ("
"Dwarf dealt 15 Slashing damage to Orc (3"
"Dwarf dealt 15 Slashing damage to Orc (3 blocked)"

That's 263 characters being allocated, instead of 49; because a char is a 2-byte data type, that's 526 bytes of data being allocated when we only need 98 bytes. Chances are that, if this code exists in the codebase once, then it exists all over the place, so for an application that's doing a lot of lazy string concatenation like this, that is a ton of memory being wasted on generating unnecessary strings.
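The piecewise case can be reproduced directly. This sketch (ConcatChainDemo is a hypothetical helper) builds the combat message one += at a time, appending left to right, records every intermediate string that gets allocated, and totals their lengths.

```csharp
using System.Collections.Generic;

public static class ConcatChainDemo
{
    // Builds the message one += at a time and records every intermediate string.
    public static List<string> BuildPiecewise(string[] parts)
    {
        var intermediates = new List<string>();
        string s = parts[0];
        for (int i = 1; i < parts.Length; ++i)
        {
            s += parts[i];              // each += allocates a brand-new string
            intermediates.Add(s);
        }
        return intermediates;
    }

    // Total number of characters across all recorded strings.
    public static int TotalChars(List<string> strings)
    {
        int total = 0;
        for (int i = 0; i < strings.Count; ++i)
            total += strings[i].Length;
        return total;
    }
}
```

Feeding it the ten pieces of the example message ("Dwarf", " dealt ", "15", " ", "Slashing", " damage to ", "Orc", " (", "3", " blocked)") yields the nine intermediate strings listed above.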

Better approaches for generating strings are to use either the StringBuilder class, or one of several string class methods.

The StringBuilder class is effectively a mutable (changeable) string class. It works by allocating a buffer to copy the target strings into, and allocating additional space whenever it is needed. We can retrieve the string using the ToString() method which, naturally, results in a memory allocation for the resultant string, but at least we avoided all of the unnecessary string allocations we would have generated using the + or += operators.

Conventional wisdom says that if we roughly know the final size of the resultant string, then we can allocate an appropriate buffer ahead of time and save ourselves undue allocations. For our example above, we might allocate a buffer of 100 characters to make room for long character names and damage values:

using System.Text;

// ...

StringBuilder sb = new StringBuilder(100);
sb.Append(result.attacker.GetCharacterName());
sb.Append(" dealt ");
sb.Append(result.totalDamageDealt.ToString());
// etc ...
string outputText = sb.ToString();

If we don't know the final size, then using a StringBuilder class is likely to generate a buffer that doesn't fit the size exactly, or closely. We will either end up with a buffer that's too large (wasted allocation time and space), or, worse, a buffer that's too small, which must keep expanding as we generate the complete string. If we're unsure about the total size of the string, then it might be best to use one of the various string class methods.

There are three string class methods available for generating strings: Format(), Join(), and Concat(). Each operates slightly differently, but the overall outcome is the same: a new string is allocated containing the contents of the string(s) we pass into them, and it is all done in a single action, which reduces excess string allocations.
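For comparison, here is the combat message produced with each of the three methods. StringMethods and its flattened parameters (standing in for the DamageResult fields) are hypothetical; the point is that each call produces the final string in one step rather than through a chain of intermediate strings.

```csharp
public static class StringMethods
{
    public static string WithFormat(string attacker, int damage, string type, string defender, int blocked)
    {
        // The int arguments are boxed here, because Format() takes object parameters.
        return string.Format("{0} dealt {1} {2} damage to {3} ({4} blocked)",
                             attacker, damage, type, defender, blocked);
    }

    public static string WithConcat(string attacker, int damage, string type, string defender, int blocked)
    {
        return string.Concat(new string[] {
            attacker, " dealt ", damage.ToString(), " ", type,
            " damage to ", defender, " (", blocked.ToString(), " blocked)" });
    }

    public static string WithJoin(string attacker, int damage, string type, string defender, int blocked)
    {
        // Join() inserts the separator (a single space here) between elements.
        return string.Join(" ", new string[] {
            attacker, "dealt", damage.ToString(), type,
            "damage to", defender, "(" + blocked.ToString(), "blocked)" });
    }
}
```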

It is surprisingly hard to say which one of the two approaches would be more beneficial in a given situation as there are a lot of really deep nuances involved. There's a lot of discussion surrounding the topic (just do a Google search for "c sharp string concatenation performance" and you'll see what I mean), so the best approach is to implement one or the other using the conventional wisdom described previously. Whenever we run into bad performance with one method, we should try the other, profile them both, and pick the best option of the two.

Technically, everything in C# is an object (caveats apply). Even primitive data types such as ints, floats, and bools ultimately derive (via System.ValueType) from System.Object (a Reference Type!), which gives them access to helper methods such as ToString(), so that they can customize their string representation.

But these primitive types are special cases: they are treated as Value types. Whenever a Value type is implicitly treated in such a way that it must act like an object, the CLR automatically creates a temporary object to store, or "box", the value inside so that it can be treated as a typical Reference type object. As we should expect, this results in a heap allocation to create the containing vessel.

For example, the following code would cause the variable i to be boxed inside object obj:

int i = 128; object obj = i;

The following would box a new value, 256, into obj, and then "unbox" it back into an integer, storing it in i. The final value of i would be 256:

obj = 256; i = (int)obj;

The type of the value held in obj can technically change dynamically, too. The following is perfectly legal C# code, which reuses the same object obj as above, originally boxed from an int:

obj = 512f; float f = (float)obj;

The following is also legal:

obj = false; bool b = (bool)obj;

Note that attempting to unbox obj into a type that isn't the most recently assigned type would result in an InvalidCastException. All of this can be a little tricky to wrap our heads around until we remember that, at the end of the day, everything is just bits in memory. What's important is knowing that we can treat our primitive types as objects by boxing them, that an object variable can later hold a boxed value of a different type, and that unboxing must always target the exact type that was boxed.
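The following sketch (BoxingBasics is a hypothetical name) runs the boxing examples above and shows the InvalidCastException that results from unboxing a boxed float as an int.

```csharp
using System;

public static class BoxingBasics
{
    public static int RoundTrip()
    {
        int i = 128;
        object obj = i;        // boxing: a heap object now holds a copy of 128
        obj = 256;             // obj now refers to a new box containing 256
        i = (int)obj;          // unboxing: copy the value back out into i
        return i;
    }

    public static bool WrongTypeThrows()
    {
        object obj = 512f;     // a boxed float
        try
        {
            int bad = (int)obj; // unboxing must target the exact boxed type (float)
            return false;
        }
        catch (InvalidCastException)
        {
            return true;
        }
    }
}
```

Converting the value instead requires unboxing to the right type first, for example (int)(float)obj.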

Boxing can be either implicit, as per the examples above, or explicit, by typecasting to System.Object. Unboxing must always be explicit by typecasting back to its original type. Whenever we pass a Value type into a method which uses System.Object as arguments, boxing will be applied implicitly.

Methods such as String.Format(), which take System.Objects as arguments, are one such example. We typically use them by passing in Value types such as ints, floats, bools and so on, to generate a string with. Boxing is automatically taking place in these situations, causing additional heap allocations which we should be aware of. System.Collections.ArrayList is another such example, since ArrayLists always contain System.Object references.

Any time we use a function definition that takes System.Object as arguments, and we're passing in Value types, we should be aware that we're implicitly causing heap allocations due to boxing.
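To make the cost visible, this sketch fills an ArrayList and a List<int> with the same values and counts the bytes allocated. It assumes a .NET Core 3.0+ runtime for GC.GetAllocatedBytesForCurrentThread(); BoxingDemo is a hypothetical helper. Every int added to the ArrayList is boxed, while the pre-sized List<int> stores the ints directly.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

public static class BoxingDemo
{
    // ArrayList stores System.Object, so every int added is boxed onto the heap.
    public static void FillArrayList(ArrayList list, int count)
    {
        list.Clear();
        for (int i = 0; i < count; ++i)
            list.Add(i);
    }

    // List<int> stores ints directly: no boxing, and no allocation at all
    // as long as the list already has enough capacity.
    public static void FillGenericList(List<int> list, int count)
    {
        list.Clear();
        for (int i = 0; i < count; ++i)
            list.Add(i);
    }

    // Bytes allocated by this thread during the second run of 'action'
    // (the first run is a warm-up).
    public static long Measure(Action action)
    {
        action();
        long before = GC.GetAllocatedBytesForCurrentThread();
        action();
        return GC.GetAllocatedBytesForCurrentThread() - before;
    }
}
```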

The importance of how our data is organized in memory can be surprisingly easy to forget about, but can result in a fairly big performance boost if it is handled properly. Cache misses should be avoided whenever possible, which means that in most cases, arrays of data that are contiguous in memory should be iterated over sequentially, as opposed to any other iteration style.

Data layout is also important for garbage collection, since the Garbage Collector iterates over our data, and if we can find ways to have it skip over problematic areas, then we can potentially save a lot of iteration time.

In essence, we want to keep large groups of Reference types separated from large groups of Value types. If there is even just one Reference type within a Value type, such as a struct, then the Garbage Collector considers the entire object, and all of its data members, to be potentially indirectly referenceable. When it comes time to mark-and-sweep, it must verify all fields of the object before moving on. But, if we separate the various types into different arrays, then we can make the Garbage Collector skip the majority of the data.

For instance, if we have an array of structs containing data like so, then the Garbage Collector will need to iterate over every member of every struct, which could be fairly time consuming:

public struct MyStruct {
    int myInt;
    float myFloat;
    bool myBool;
    string myString;
}

MyStruct[] arrayOfStructs = new MyStruct[1000];

But, if we replace all of this data with simple arrays, then the Garbage Collector will ignore all of the primitive data types, and only check the strings. This would result in a much faster garbage collection sweep:

int[] myInts = new int[1000];
float[] myFloats = new float[1000];
bool[] myBools = new bool[1000];
string[] myStrings = new string[1000];

The reason this works is because we're giving the Garbage Collector fewer indirect references to check. When the data is split into separate arrays (Reference types), it finds three arrays of Value types, marks the arrays, and then immediately moves on because there's no reason to mark Value types. It must still iterate through all of the strings within the string array, since each is a Reference type and it needs to verify that there are no indirect references within it. Technically, strings cannot contain indirect references, but the Garbage Collector works at a level where it only knows if the object is a Reference type or Value type. However, we have still spared the Garbage Collector from needing to iterate over an extra 3,000 pieces of data (all 1,000 ints, floats, and bools).
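If the separate arrays feel unwieldy, they can be wrapped in a small container so the rest of the code still deals with "one record at a time". MyStructArrays and its Set() method are hypothetical names for this sketch; only the string array holds references the Garbage Collector needs to scan.

```csharp
// Struct-of-arrays container replacing MyStruct[]: Value-type data lives in
// arrays that contain no references, so the collector need not scan them.
public class MyStructArrays
{
    public readonly int[] myInts;
    public readonly float[] myFloats;
    public readonly bool[] myBools;
    public readonly string[] myStrings;   // the only array holding references

    public MyStructArrays(int count)
    {
        myInts = new int[count];
        myFloats = new float[count];
        myBools = new bool[count];
        myStrings = new string[count];
    }

    // Writes what would have been arrayOfStructs[index] across the four arrays.
    public void Set(int index, int i, float f, bool b, string s)
    {
        myInts[index] = i;
        myFloats[index] = f;
        myBools[index] = b;
        myStrings[index] = s;
    }
}
```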

There are several instructions within the Unity API which result in heap memory allocations, which we should be aware of. This essentially includes everything that returns an array of data. For example, the following methods allocate memory on the heap:

GetComponents<T>(); // (T[])
Mesh.vertices; // (Vector3[])
Camera.allCameras; // (Camera[])

Such methods should be avoided whenever possible, or at the very least called once and cached so that we don't cause memory allocations more often than necessary.

Note that Unity Technologies has hinted it might come out with allocation-less versions of these methods sometime in the lifecycle of Unity 5. Presumably, they might look something like the way ParticleSystem.GetParticles() provides access to particle data: we pass in a ParticleSystem.Particle[] array, and the method fills it with the current particles. This avoids allocation, since we can reuse the same buffer between calls.
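The two API shapes can be compared in plain C#. VertexSource is a hypothetical class, not a Unity type: its first GetValues() overload returns a fresh array on every call (like Mesh.vertices), while the second fills a buffer supplied by the caller (in the style of ParticleSystem.GetParticles()) and returns how many entries it wrote.

```csharp
using System;

public class VertexSource
{
    private readonly float[] _values;

    public VertexSource(int count)
    {
        _values = new float[count];
        for (int i = 0; i < count; ++i) _values[i] = i * 0.5f;
    }

    // Allocating shape: a brand-new array on every call.
    public float[] GetValues()
    {
        return (float[])_values.Clone();
    }

    // Non-allocating shape: the caller owns the buffer and can reuse it
    // between calls; returns the number of entries written.
    public int GetValues(float[] buffer)
    {
        int count = Math.Min(buffer.Length, _values.Length);
        Array.Copy(_values, buffer, count);
        return count;
    }
}
```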

The foreach loop keyword is a bit of a controversial issue in Unity development circles. It turns out that a lot of foreach loops implemented in Unity C# code will incur unnecessary heap memory allocations during these calls, as they allocate an Enumerator object as a class on the heap, instead of a struct on the stack. It all depends on the given collection's implementation of the GetEnumerator() method.

It turns out that, with the version of Mono that comes with Unity (Mono version 2.6.5), foreach loops over every single collection type result in heap allocations. This includes, but is not limited to, List<T>, LinkedList<T>, Dictionary<K,V>, ArrayList, and so on. For the generic collections, this happens even though their GetEnumerator() methods return struct enumerators, because the bundled compiler ends up boxing the enumerator onto the heap. But, note that it is actually safe to use foreach loops on typical arrays! The Mono compiler secretly converts foreach over arrays into simple for loops.

The cost is fairly negligible, as the heap allocation cost does not scale with the number of iterations. Only one Enumerator object is allocated per loop, and it is reused for every iteration, which only costs a handful of bytes of memory overall. So, unless our foreach loops are being invoked every update (which is typically dangerous in and of itself), the costs will be mostly negligible on small projects, and converting every existing foreach loop into a for loop may not be worth the effort. But it's definitely something to keep in mind for the next project we begin to write.

If we're particularly savvy with C#, Visual Studio, and manual compilation of the Mono assembly, then we can have Visual Studio perform code compilation for us, and copy the resulting assembly DLL into the Assets folder, which will fix this mistake for the generic collections.
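The underlying mechanism, a struct enumerator ending up on the heap, can be reproduced on a modern .NET runtime (an assumption: .NET Core 3.0+, for GC.GetAllocatedBytesForCurrentThread()). With a modern compiler, foreach directly over a List<int> does not allocate, but iterating the same list through an IEnumerable<int> parameter boxes its enumerator once per loop. ForeachAllocations is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;

public static class ForeachAllocations
{
    // foreach on List<int> directly: uses the List<int>.Enumerator struct.
    public static int SumDirect(List<int> list)
    {
        int sum = 0;
        foreach (int x in list) sum += x;
        return sum;
    }

    // foreach through the interface: GetEnumerator() returns IEnumerator<int>,
    // so the struct enumerator is boxed onto the heap once per loop.
    public static int SumViaInterface(IEnumerable<int> items)
    {
        int sum = 0;
        foreach (int x in items) sum += x;
        return sum;
    }

    // Bytes allocated by this thread during the second run of 'action'.
    public static long Measure(Action action)
    {
        action();                                   // warm-up
        long before = GC.GetAllocatedBytesForCurrentThread();
        action();
        return GC.GetAllocatedBytesForCurrentThread() - before;
    }
}
```

On Unity's older bundled compiler, the text above reports that even the direct List<T> version allocates, so measurements inside Unity may differ.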

Note that performing foreach over a Transform Component is a typical shortcut to iterating over a Transform's children. For example:

foreach (Transform child in transform) {
    // do stuff with 'child'
}

However, this results in the same heap allocations mentioned above. As a result, that coding style should be avoided in favor of the following:

for (int i = 0; i < transform.childCount; ++i) {
    Transform child = transform.GetChild(i);
    // do stuff with 'child'
}

Starting a Coroutine costs a small amount of memory to begin with, but note that no further costs are incurred when the method yields. If memory consumption and garbage collection are significant concerns, we should try to avoid having too many short-lived Coroutines, and avoid calling StartCoroutine() too much during runtime.

Closures are useful but dangerous tools. Anonymous methods and lambda expressions are not always Closures, but they can be. It all depends on whether the method uses data outside of its own scope and parameter list, or not.

For example, the following anonymous function would not be a Closure, since it is self-contained and functionally equivalent to any other locally defined function:

System.Func<int,int> anon = (x) => { return x; };
int result = anon(5);

But, if the anonymous function pulled in data from outside itself, then it becomes a Closure, as it "closes the environment" around the required data. The following would result in a Closure:

int i = 1024;
System.Func<int,int> anon = (x) => { return x + i; };
int result = anon(5);

In order to complete this transaction, the compiler must define a new class that can reference the environment where the data value i would be accessible. At runtime it creates the corresponding object on the heap and provides it to the anonymous function. Note that this includes Value types (as per the above example), which were originally on the stack, possibly defeating the purpose of them being allocated on the stack in the first place. So, we should expect each execution of this second block of code (that is, each time the Closure is created) to result in heap allocations and inevitable garbage collection.
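The allocation difference can be measured with a sketch like the following, which assumes a .NET Core 3.0+ runtime for GC.GetAllocatedBytesForCurrentThread(); ClosureAllocations and its methods are hypothetical names. The delegates are returned (and stored) so the allocations cannot be optimized away.

```csharp
using System;

public static class ClosureAllocations
{
    private static Func<int, int> _last;   // keeps results reachable

    // No outside data: not a Closure. C# compilers typically cache this
    // delegate in a static field, so repeated calls reuse the same instance.
    public static Func<int, int> MakeIdentity()
    {
        return (x) => { return x; };
    }

    // Captures i: a Closure. Each call allocates a compiler-generated object
    // holding i, plus a new delegate that points at it.
    public static Func<int, int> MakeAdder(int i)
    {
        return (x) => { return x + i; };
    }

    // Bytes allocated by this thread during the second call of 'factory'.
    public static long Measure(Func<Func<int, int>> factory)
    {
        _last = factory();                  // warm-up (JIT, delegate caching)
        long before = GC.GetAllocatedBytesForCurrentThread();
        _last = factory();
        return GC.GetAllocatedBytesForCurrentThread() - before;
    }
}
```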

The .NET library offers a huge amount of common functionality that helps solve numerous problems that programmers may come across during day-to-day implementation. Most of these classes and functions are optimized for general use cases, which may not be optimal for a specific situation. It may be possible to replace a particular .NET library class with a custom implementation that is more suited to our specific use case.

There are also two big features in the .NET library that often become big performance hogs whenever they're used. This tends to be because they are only included as a quick-and-hacky solution to a given problem without much effort put into optimization. These features are LINQ and Regular Expressions.

LINQ provides a way to treat arrays of data as miniature databases and perform queries against them using SQL-like syntax. The simplicity of its coding style, and complexity of the underlying system (through its usage of Closures), implies that it has a fairly large overhead cost. LINQ is a handy tool, but is not really intended for high-performance, real-time applications such as games, and does not even function on platforms that do not support JIT compilation, such as iOS.

Meanwhile, Regular Expressions, using the Regex class, allow us to perform complex string parsing to find substrings that match a particular format, replace pieces of a string, or construct strings from various inputs. Regular Expressions are another very useful tool, but tend to be overused in places where they are largely unnecessary, or in seemingly "clever" ways to implement a feature such as text localization, when straightforward string replacement would be far more efficient.

Specific optimizations for both of these features go far beyond the scope of this book, as they could fill entire volumes by themselves. We should either try to minimize their usage as much as possible, replace their usage with something less costly, bring in a LINQ or Regex expert to solve the problem for us, or do some Googling on the subject to optimize how we're using them.

Tip

One of the best ways to find the correct answer online is to simply post the wrong answer! People will either help us out of kindness, or take such great offense to our implementation that they consider it their civic duty to correct us! Just be sure to do some kind of research on the subject first. Even the busiest of people are generally happy to help if they can see that we've put in our fair share of effort beforehand.

If we get into the habit of using large, temporary work buffers for one task or another, then it just makes sense that we should look for opportunities to reuse them, instead of reallocating them over and over again, as this lowers the overhead involved in allocation, as well as garbage collection (so-called "memory pressure"). It might be worthwhile to extract such functionality from case-specific classes into a generic god class that contains a big work area for multiple classes to reuse.
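A minimal sketch of such a shared work area, assuming a single-threaded caller; WorkBuffers and GetScratch() are hypothetical names. The buffer is only reallocated when a caller needs more room than any previous caller did, and callers must not hold onto it across calls that might reuse it.

```csharp
using System;

// Hypothetical shared scratch area, reused instead of reallocated per call.
// Not thread-safe: intended for code running on a single (main) thread.
public static class WorkBuffers
{
    private static float[] _scratch = new float[1024];

    // Returns a buffer with at least 'size' elements. The contents are
    // whatever the previous user left behind, so callers must overwrite them.
    public static float[] GetScratch(int size)
    {
        if (_scratch.Length < size)
            _scratch = new float[Math.Max(size, _scratch.Length * 2)];
        return _scratch;
    }
}
```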

Speaking of temporary work buffers, object pooling is an excellent way of both minimizing and establishing control over our memory usage by avoiding deallocation and reallocation. The idea is to formulate our own system for object creation, which hides away whether the object we're getting has been freshly allocated or has been recycled from an earlier allocation. The typical terms to describe this process are to "spawn" and "despawn" the object, rather than creating and deleting them, since any time an object is despawned we're simply hiding it from view until we need it again, at which point it is respawned and reused.

Let's cover a quick implementation of an object pooling system.

The first requirement is to allow the pooled object to decide how to recycle itself when the time comes. The following interface will satisfy the requirements nicely:

public interface IPoolableObject {
    void New();
    void Respawn();
}

This interface defines two methods: New() and Respawn(). These should be called when the object is first created, and when it has been respawned, respectively.

The second requirement is a pool class that handles the bookkeeping involved in creating objects of any type for the first time, and in respawning them afterwards.

The following ObjectPool class definition is a fairly simple implementation of the object pooling concept. It uses generics to support any object type so long as it fits two criteria; it must implement the IPoolableObject interface, and must allow for a parameter-less constructor (the new() keyword in the class declaration).

using System.Collections.Generic;

public class ObjectPool<T> where T : IPoolableObject, new() {
    private List<T> _pool;
    private int _currentIndex = 0;

    public ObjectPool(int initialCapacity) {
        _pool = new List<T>(initialCapacity);
        for(int i = 0; i < initialCapacity; ++i) {
            Spawn(); // instantiate a pool of N objects
        }
        Reset();
    }

    public int Count {
        get { return _pool.Count; }
    }

    // Start handing out pooled objects from the beginning again.
    public void Reset() {
        _currentIndex = 0;
    }

    public T Spawn() {
        if (_currentIndex < Count) {
            // Recycle an existing object; it stays in the pool for later reuse.
            T obj = _pool[_currentIndex];
            _currentIndex++;
            obj.Respawn();
            return obj;
        } else {
            // Pool exhausted: create a new object and keep it in the pool.
            T obj = new T();
            _pool.Add(obj);
            _currentIndex++;
            obj.New();
            return obj;
        }
    }
}

An example Poolable object would look like so. It must implement two public methods, New() and Respawn(), which are invoked by the ObjectPool class at the appropriate times:

public class TestObject : IPoolableObject {
    public void New() {
        // very first initialization here
    }

    public void Respawn() {
        // reset data which allows the object to be recycled here
    }
}

And finally, an example usage to create a pool of 100 TestObject objects:

private ObjectPool<TestObject> _objectPool = new ObjectPool<TestObject>(100);

The first 100 calls to Spawn() on the _objectPool object will cause the pooled objects to be respawned and provided to the caller. If the pool runs out of objects, then it will create more TestObject objects and add them to the pool. Finally, if Reset() is called on _objectPool, then it will begin again from the start, recycling objects and providing them to the caller.

Note that this pooling solution will not work for types we haven't defined and cannot modify or derive from, such as the Vector3 and Quaternion structs, since we cannot make them implement IPoolableObject. In these cases, we would need to define a containing class:

using UnityEngine;

public class PoolableVector3 : IPoolableObject {
    public Vector3 vector = new Vector3();

    public void New() {
        Reset();
    }

    public void Respawn() {
        Reset();
    }

    public void Reset() {
        vector.x = vector.y = vector.z = 0f;
    }
}

We could extend this system in a number of ways, such as defining a Despawn() method to handle destruction of the object, making use of the IDisposable interface and using blocks when we wish to automatically spawn and despawn objects within a small scope, and/or allowing objects instantiated outside the pool to be added to it.
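As one possible direction for those extensions, here is a sketch of a pool with an explicit Despawn() method and an IDisposable handle for using blocks. DespawnPool, Handle, SpawnScoped(), and AvailableCount are hypothetical names; the interface mirrors IPoolableObject from above so the block compiles on its own. It does not guard against an object being despawned twice.

```csharp
using System;
using System.Collections.Generic;

public interface IPoolableObject
{
    void New();
    void Respawn();
}

// Free-list pool: despawned objects go back on a stack and are handed out again.
public class DespawnPool<T> where T : class, IPoolableObject, new()
{
    private readonly Stack<T> _available = new Stack<T>();

    public int AvailableCount { get { return _available.Count; } }

    public T Spawn()
    {
        if (_available.Count > 0)
        {
            T obj = _available.Pop();
            obj.Respawn();
            return obj;
        }
        T fresh = new T();
        fresh.New();
        return fresh;
    }

    public void Despawn(T obj)
    {
        _available.Push(obj);
    }

    // Spawns an object that is despawned automatically at the end of a using block.
    public Handle SpawnScoped() { return new Handle(this, Spawn()); }

    public struct Handle : IDisposable
    {
        private readonly DespawnPool<T> _pool;
        public readonly T Value;

        public Handle(DespawnPool<T> pool, T value) { _pool = pool; Value = value; }

        public void Dispose() { _pool.Despawn(Value); }
    }
}

public class TestObject : IPoolableObject
{
    public int NewCalls, RespawnCalls;
    public void New() { NewCalls++; }
    public void Respawn() { RespawnCalls++; }
}
```

Usage would look like using (var handle = pool.SpawnScoped()) { /* work with handle.Value */ }, after which the object is back in the pool, ready to be respawned.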