Chapter 11

Managing Your Cloud

Now that you have built a vCloud Director environment to deliver services to your consumers and allowed those consumers to use the self-service portal to build vApps, we'll take a look at how best to manage your platform as an operations team.

We will cover monitoring and day-to-day operations as well as show you how and when to expand the infrastructure supporting your vCloud implementation.

In this chapter, you will learn to:

- Support a private cloud

- Manage a private cloud

- Perform common operational tasks and troubleshoot

How Managing vCloud Differs from Managing vSphere

As we've discussed in earlier chapters, vCloud Director is an abstraction layer on top of vSphere that facilitates self-service and on-demand provisioning of computing, storage, and network resources for consumers to build services on. You still need to do the following:

- Manage the underlying vSphere implementation in a similar manner to a normal vSphere platform

- Keep a close eye on the performance of computing and storage components so that load-balancing functions such as Distributed Resource Scheduler (DRS) and vMotion can operate correctly

- Patch, upgrade, and maintain hosts

You still use the vSphere client (or the web client) to manage and provision individual hosts and present storage and networks. But once hosts are allocated to vCloud Director, it handles their subdivision and allocation among various tenants, rather than the vSphere administrator doing so.

Your primary administrative interface for your private cloud is the vCloud Director user interface (UI), whereas the vSphere layer will be your interface for troubleshooting lower-level configuration or physical problems. The vCloud Director UI allows you to view any errors logged, identify the problematic subcomponent, and connect directly to it to troubleshoot further.

For example, if a vApp fails to deploy, you can use the vCloud Director UI to verify that sufficient capacity exists and that the user has the proper permissions. You can also use the log files to identify which vCenter is handling the deployment request, and then connect to that vCenter with the vSphere client to troubleshoot the issue further at the vSphere layer.

If you have a specific application-type problem with one of the vCloud Director management components, such as vCNS Manager or a cell server, you can troubleshoot such issues at the console of the relevant virtual machine or appliance directly.

You need to manage your vCloud platform in three parts:

- Management pod

- Resource pods

- Organization virtual datacenters (Org vDCs)

Let's explore each of these components next.

Management Pod

The vCloud Director management pod is very much like a traditional application that you host for your organization. It consists of resources allocated from a vSphere cluster that provides an underlying high-availability infrastructure for an n-tier application. vCloud Director has a browser-based application front-end, a database back-end, and various network and API interfaces to ancillary systems (like Chargeback or vCNS Manager), and you need to keep all of these available so that the vCloud Director platform remains usable by your consumers.

You can engineer resilience into most of the core components of the vCloud Director service to ensure availability, and there is no real reason to deploy them on physical infrastructure rather than as virtual machines (VMs). However, vSphere Fault Tolerance (FT) in vSphere 5 supports only single-vCPU VMs, and most of the management components of vCloud Director require more than one vCPU, so FT is of limited use in protecting them.

Generally your management pod will be quite static, with infrequent changes. It should be subject to tight change management to ensure a good level of security and availability.

Resource Pod

You will have one or more resource pods. Each pod contains clusters with varying levels of resilience, capacity, or capability that map to your Provider Virtual Datacenter (PvDC) objects in vCloud Director. These PvDC objects and the underlying ESX host clusters will run the vApps that your consumers will build and use.

The resource pods will be managed and configured in the traditional manner via vCenter. However, the management team is also responsible for ensuring that there is sufficient capacity to meet demand, and the team deals with any underlying platform issues, such as hardware failures, security patching, and host upgrades. Because a key principle of cloud computing is self-service, your consumers will be managing, uploading, and deploying vApps using the template and catalog capabilities provided by vCloud Director.

As part of an operations team running a vCloud Director platform, you would not typically be involved in building, configuring, and deploying VMs for your end users beyond possibly setting up the initial catalogs and objects in vCloud Director. Likewise, for day-to-day networking, vCloud Director creates and allocates port groups for your consumers on demand from the network pool you created at setup time.

In a regulated environment where you need to comply with commercial or government regulations, your system administrators may not be allowed access inside VMs deployed by your consumers. vCloud Director allows the owners of these VMs to deploy, configure, and administer them independently of the team responsible for managing the underlying infrastructure.

Your resource pods will generally have a higher level of change than the management pods. You will be continually managing the capacity and performance of the platform, so you may need to frequently add, remove, and reallocate hosts among your various PvDCs. Native vSphere features like maintenance mode, vMotion, and DRS let you do this online without disrupting consumer workloads.

Org vDCs

You will have a number of tenant organizations, each with its own allocation or share of pooled resources. Much of the control and management of the vApps your consumers deploy within their Org vDC is delegated to the consumer. Therefore, they are responsible for ensuring their software solutions are available and meet the demands of their user community.

These containers will have a high level of change, so change management here should focus on the impact on end users rather than on technical or platform impacts. vCloud Director provides a predictable level of performance through its Org vDC constructs, and these allocations can be increased on request if an application needs further resources from the platform. Consumers and end users do not need to get bogged down in the technical aspects of such changes, which the platform team deals with lower down the stack; requests for more or fewer resources can be expressed in easily understood units like GHz, GB, and IOPS.

Rather than working from a vague report such as “My application is slow,” you can see how many resources are allocated to a vApp and how much of that allocation is reserved compared to consumed. This allows you to quickly determine whether adding more resources will in fact improve performance or whether the problem lies elsewhere.
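A minimal sketch of that comparison follows, assuming you have read a vApp's allocation and its current consumption from the vCloud Director UI in the same unit (for example, MHz). The function name `classify_usage` and its 90 percent default threshold are hypothetical; choose a threshold that suits your SLA.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare what a vApp consumes with what it has been
# allocated. Both figures must use the same unit (for example, MHz or MB)
# as read from the vCloud Director UI. The 90% threshold is an assumption.
classify_usage() {
  local allocated=$1 consumed=$2 threshold=${3:-90}
  if (( allocated <= 0 )); then
    echo "allocation must be greater than zero" >&2
    return 2
  fi
  # Integer percentage of the allocation currently in use
  local pct=$(( consumed * 100 / allocated ))
  if (( pct >= threshold )); then
    echo "${pct}% of allocation in use: adding resources may help"
  else
    echo "${pct}% of allocation in use: the problem likely lies elsewhere"
  fi
}
```

For example, `classify_usage 4000 3900` reports that 97 percent of a 4,000 MHz allocation is in use, which suggests the vApp really is short of resources; a result of 25 percent points you toward the application or network instead.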

Understanding Change Management

Change management is important in any production system, but you need to take a pragmatic approach to its implementation in a cloud environment, which is geared up for agility and flexibility.

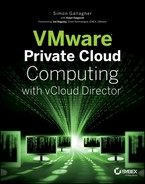

Figure 11.1 illustrates management layers with a strictly defined approval and control process. Note there are decreasing levels of change control to allow more agile adjustment of capacity at the resource level, and no change control is enforced by the cloud provider at the consumer level.

Figure 11.1 The various levels of change control from management to consumer

Particularly at the resource pod layer, you should adopt the concept of preapproved changes and capped quantities. You can define a number of standard changes (such as adding datastores or hosts) that can be fast-tracked through the change process because they can be made online with no disruption to service. The schedule and process for these changes are still communicated to infrastructure stakeholders, but for information only.

Likewise, you should maintain a quota of preapproved changes. For example, the operations team could have the authority to add hosts to a resource pod up to a predefined design limit before they need to seek further design or financial approval.
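The quota reduces to a simple rule. The following sketch, using a hypothetical `can_add_hosts` function and a design limit you would take from your own capacity plan, reports whether a proposed host addition stays inside the preapproved quota.

```shell
#!/usr/bin/env bash
# Hypothetical check for a preapproved change quota: can the operations
# team add hosts to a resource pod without further design or financial
# approval? The design limit is a value from your own capacity plan.
can_add_hosts() {
  local current=$1 requested=$2 design_limit=$3
  if (( current + requested <= design_limit )); then
    echo "preapproved: $(( current + requested )) of ${design_limit} hosts"
  else
    echo "needs approval: would exceed ${design_limit} hosts"
    return 1
  fi
}
```

With a design limit of 16 hosts, adding 2 hosts to a 12-host pod is preapproved, whereas adding 2 to a 15-host pod would need further approval.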

Capacity Planning

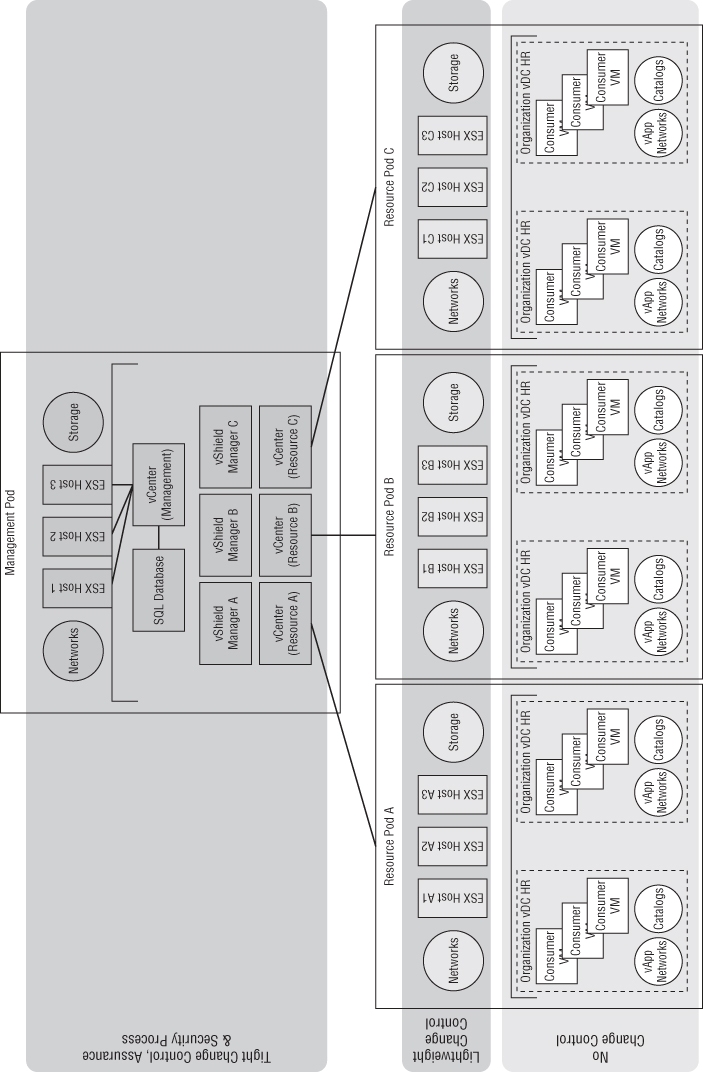

Whenever a new host is added, the change feeds into the capacity-planning team, which reviews the current and projected footprint against the overall architectural and financial plan to ensure that technical or financial constraints are not breached. By maintaining this buffer, or “headroom,” of both capacity and approval, you can still deliver most of the agility consumers want while maintaining control and assurance of the underlying platform.

The amount of headroom you maintain in the platform depends on your business plans and desire to overcommit resources. Your capacity plans will evolve over time in line with business, technical, and operational change. As such, the capacity-planning role needs to pay close attention to planned projects as well as closely monitor actual usage to ensure capacity keeps ahead of potential demand (see Figure 11.2).

Figure 11.2 Headroom capacity delivered by expansion vs. consumer workload over time
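Headroom is the gap between capacity and demand, expressed as a share of capacity. The sketch below computes it for one resource pod; the function name and the minimum-headroom policy value are assumptions for illustration, not figures from any VMware tool.

```shell
#!/usr/bin/env bash
# Hypothetical headroom check for one resource pod.
#   capacity: usable capacity of the pod (for example, in GHz)
#   demand:   current or projected consumer workload, in the same unit
#   min_pct:  minimum headroom your capacity plan calls for (a policy value)
headroom_report() {
  local capacity=$1 demand=$2 min_pct=$3
  if (( capacity <= 0 )); then
    echo "capacity must be greater than zero" >&2
    return 2
  fi
  # Integer percentage of capacity that is still unused
  local pct=$(( (capacity - demand) * 100 / capacity ))
  if (( pct < min_pct )); then
    echo "headroom ${pct}%: below ${min_pct}%, plan an expansion"
  else
    echo "headroom ${pct}%: within plan"
  fi
}
```

With 400 GHz of capacity and 300 GHz of demand, `headroom_report 400 300 20` reports 25 percent headroom; at 360 GHz of demand, headroom drops to 10 percent and the report flags that an expansion should be planned.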

Team Structure

Deploying a private cloud in your enterprise means a slight shift in the mindset of your organization and how it performs conventional operations, from supporting and managing end users to enabling and empowering consumers. IT capability is provided in a less prescriptive manner, which means IT departments can relax their control over the systems and solutions the consumer chooses to deploy. Such transformation can be hard, and you need to ensure your organization and teams give the concept their buy-in. Some tactics for winning this buy-in are outlined in Chapter 2, “Developing Your Strategy.”

A common strategy for operating your private cloud is to split the team into two functions:

vCloud Operations

Members of this team are responsible for supporting vSphere and vCloud and for ensuring capacity and availability.

Transition Support

This team works in the business, helping consumers bring their solutions on board and understand how best to use vCloud and your private cloud facility at a technical level. This may involve liaising with developers and assisting with integration with the vCloud API. It also may involve advising your consumers on how they should best build their solutions and applications to ensure availability and performance.

You should also be prepared to have the transition team work with third parties, business partners, suppliers, and customers where appropriate and work to align them closely with projects that build new solutions on your private cloud to ensure success.

The operations function is a critical part of the overall team, charged with building, maintaining, and operating the platform. The operations manager role is a key part of this management team and is responsible for delivering infrastructure that can meet the service levels offered to consumers.

As we've discussed, if your organization is reasonably experienced at operating vSphere, then you'll find that operating a vCloud platform isn't totally different from what your existing vSphere operations team does on a day-to-day basis:

- Hosts will still need to be patched.

- Failures will need to be resolved.

- Upgrades and enhancements will be continual.

However, there is less reliance on building VMs and directly serving requests from end users; much of this is dealt with through self-service.

In a vCloud environment, your team will be serving two types of consumer:

- End users creating and deploying vApps

- Autonomous systems consuming the APIs you provide

For example, consumers are free to build applications that interact with the vCloud infrastructure via its API to request more instances of a vApp or even to change network and firewall rules (depending on the permissions granted). This level of integration is rare in traditional vSphere deployments, and because it is automated, it bypasses any manual process for change control, capacity planning, and so forth. In such instances, you are relying on the quotas set by the capacity-planning function and configured on each Org vDC to meet your consumers' demands until they request further resources.
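To show what such an autonomous consumer looks like, here is a minimal sketch of a vCloud API login, assuming API version 5.1 and a cell at a host name you supply. The client sends an HTTP POST to /api/sessions with Basic authentication in the user@organization form and receives a session token in the x-vcloud-authorization response header. The function names are hypothetical, and certificate handling is left to your environment.

```shell
#!/usr/bin/env bash
# Minimal sketch of a vCloud API session login (API version 5.1 assumed).

# Build the Basic credential; vCloud API logins use the user@organization form
vcloud_basic_auth() {
  local user=$1 org=$2 password=$3
  printf '%s' "${user}@${org}:${password}" | base64 | tr -d '\n'
}

# POST to /api/sessions and print the token from the x-vcloud-authorization header
vcloud_login() {
  local host=$1 user=$2 org=$3 password=$4
  curl -s -X POST -D - -o /dev/null \
    -H "Accept: application/*+xml;version=5.1" \
    -H "Authorization: Basic $(vcloud_basic_auth "$user" "$org" "$password")" \
    "https://${host}/api/sessions" |
    awk -F': ' 'tolower($1) == "x-vcloud-authorization" { sub(/\r$/, "", $2); print $2 }'
}
```

Subsequent requests pass the token back in an x-vcloud-authorization header. Whatever the client then does is still bounded by the role it was granted and by the quota on its Org vDC.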

vCloud Director does an excellent job of creating abstractions of the complex permissions required to deliver such functionality in a secure manner within the underlying vSphere platform. These abstractions are known as roles and can easily be used by your vCloud operations team as building blocks to control consumer access. That way, your team does not have to spend significant time building and testing low-level access control lists.

Depending on the size of your deployment, you will have a number of subject matter expert (SME) roles within your team. SME roles may be held by a couple of individuals, or you may have dedicated SMEs in larger deployments. The SMEs provide expertise on the following areas:

- Storage: FC, NAS, iSCSI, IOPS/performance troubleshooting

- Servers/virtualization: vSphere

- Network: VLAN, switching, vDS, routing, firewalls, and load balancers (physical and virtual)

- Automation: API support, integration of end-to-end automations

Monitoring Performance and Availability

The private cloud is very much about offering a product to your internal consumers. Part of that product specification is a service level agreement (SLA). To ensure that you are conforming to that SLA, it's important to be able to monitor performance and availability.

vCloud and the underlying vSphere platform have many “moving parts”: servers, networks, storage, applications, and so forth. This complexity can make it difficult to build an end-to-end picture of a solution's health.

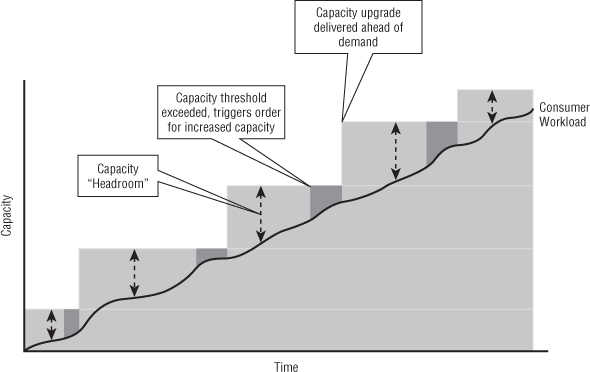

There is no one-size-fits-all monitoring solution. The right approach depends on your individual vendors, and it's likely that you'll need to assemble your own. The architecture shown in Figure 11.3 shows the split between consumer and provider monitoring solutions.

This model reinforces the concept that the consumer cannot see or access the underlying infrastructure components for monitoring and that the provider generally has no visibility into the consumer workload.

VMware provides the vCenter Operations Management Suite (vCOPS), which can provide detailed analysis of the virtual workload. This tool has an extensible adapter architecture that allows integration with vCloud Director as well as with hardware vendors like EMC or NetApp for storage monitoring and alerting. However, vCloud integration for these tools is still evolving, and as of this writing they do not integrate with the native vCloud portal. They have their own interfaces and consoles, which would form part of your management platform.

If you want to build access to these tools for your consumers, you'll likely need to build some custom dashboards within those products and publish them to your consumers. vCOPS isn't yet a multitenant solution, so this could be an issue in a private cloud scenario if you have security requirements to separate visibility of those dashboards between consumers.

vCDAudit is a community-built plug-in for the popular vCheck PowerShell toolkit. This plug-in generates easily customized reports on vCloud workloads and configurations. If your requirements are simple or you need a high level of customization, we recommend you use vCDAudit.

You can download vCDAudit from Alan Renouf's blog at www.virtu-al.net/2012/03/13/vcdaudit-for-vcloud-director/.

Figure 11.3 Demarcation between consumer and provider monitoring

Using Malware Protection

Malware protection is important in any production environment and is often a compliance requirement if you operate in a regulated industry. As with traditional vSphere environments, antimalware vendors are starting to integrate their products with hypervisors, having recognized that purely in-guest solutions are inefficient and difficult to manage in virtualized environments, particularly when the infrastructure team has no control inside the guest OS.

Currently none of the hypervisor-integrated solutions are supported by vCloud. This is generally because they rely on special network-level filter drivers or appliances on ESX hosts that are not understood by vCloud Director's networking automation.

vCNS Edge and Gateway currently provide network-level firewall functionality and packet filtering. However, until hypervisor-integrated antimalware products can be controlled from vCloud Director, you'll need to rely on in-guest solutions, such as antimalware tools and the built-in Windows or Linux firewalls, as part of your strategy for protecting virtual workloads.

Balancing Capacity and Demand

As discussed earlier, your resource pods will likely experience a higher level of change than your management pods. You may need to add more hosts to increase capacity or move hosts between clusters to balance capacity and demand.

Having a consistent configuration for your ESX hosts is critical for vSphere clusters. When you are aggregating multiple hosts and clusters into a computing pool managed by vCloud Director, a consistent configuration is even more important because all of the network and storage settings will need to be identical on all hosts to ensure consistent performance and reliability. You should therefore use an automated method to keep your host configurations consistent. For example, you might use vSphere host profiles or a PowerShell script to ensure that all port groups and datastores are named and configured consistently.
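Whatever tool enforces the configuration, it helps to check for drift. The sketch below assumes you have exported each host's port group (or datastore) names to a text file, one name per line, with whatever tooling you already use; the file layout and the `check_drift` function are hypothetical. It reports any names that differ from a reference host.

```shell
#!/usr/bin/env bash
# Hypothetical drift check: each file lists one port group (or datastore)
# name per line for one host. Reports names missing from, or extra on,
# each host compared with a reference host's file.
check_drift() {
  local reference=$1; shift
  local status=0 host_file diffs
  for host_file in "$@"; do
    # comm needs sorted input; -3 hides names common to both files.
    # Unindented lines are missing from the host; tab-indented lines are extra.
    diffs=$(comm -3 <(sort -u "$reference") <(sort -u "$host_file"))
    if [[ -n $diffs ]]; then
      echo "DRIFT in ${host_file}:"
      echo "$diffs"
      status=1
    fi
  done
  return $status
}
```

For example, `check_drift esx01.txt esx02.txt esx03.txt` compares two hosts against esx01 and returns a nonzero status if either differs, so it can gate a host's preparation for vCloud Director.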

The Storage DRS functionality introduced in vSphere 5 makes scaling and maintaining storage simple. You just add datastores to, or remove them from, a datastore cluster, and Storage DRS (SDRS) automatically takes care of distributing the load. Likewise, if you need to reallocate storage between clusters at the vSphere layer, you can use SDRS maintenance mode to automatically move VMs to alternative datastores, so you can work on the datastores in maintenance mode without affecting the VMs originally stored there. This all happens transparently to vCloud Director and can be carried out by a vSphere administrator as part of business-as-usual activities.

Adding More ESX Hosts to a PvDC

As part of your capacity-planning process, you may decide that you need to add more ESX hosts to your clusters to increase capacity available to PvDCs.

Once the new host is added to the cluster and ready for use, you need to “prepare” it for use with vCloud Director.

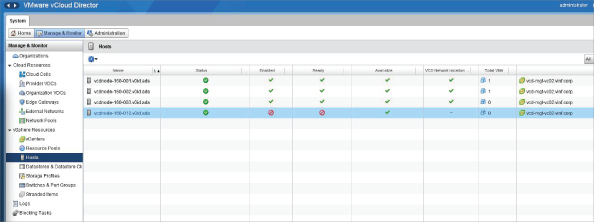

As you can see in Figure 11.4, vCloud Director detects when a host has been added to a PvDC but is not yet ready for use.

vCloud Director has detected a host in the cluster that hasn't been prepared for use. Note that it is not enabled or ready and does not support vCloud Network Isolation (VCNI).

Figure 11.4 A new unprepared host has been detected by vCloud Director.

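The agent that vCloud Director deploys to each host installs extra networking components to support VCNI and VXLAN network encapsulation between hosts (for more information on these technologies, see Chapter 5, “Platform Design for vCloud Director”). To prepare the host, choose Prepare Host for it in the vCloud Director interface (Figure 11.5) and enter the host's root credentials when prompted (Figure 11.6). vCloud Director then installs the agent (Figure 11.7) and, as part of the installation, runs a task to enable host spanning (Figure 11.8).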

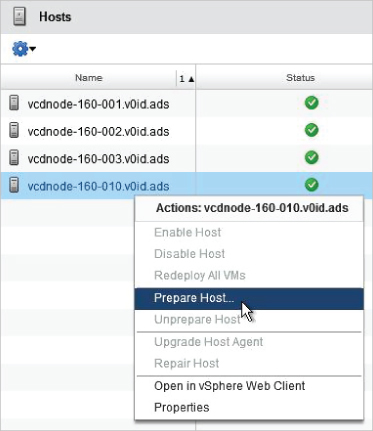

Figure 11.5 Preparing a host for use with vCloud Director. This step initiates the installation of the vCloud Director agent to an ESX host.

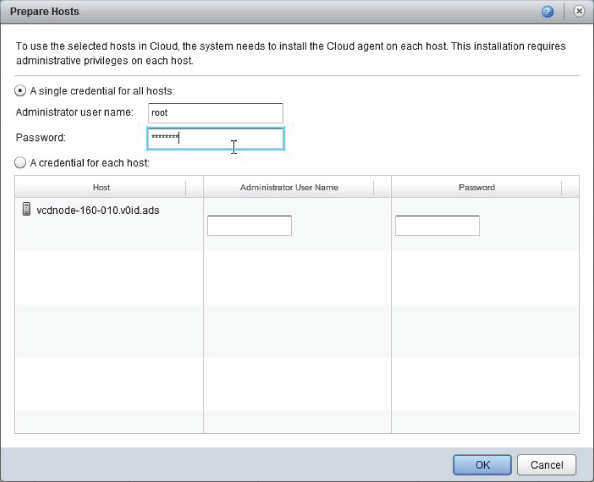

Figure 11.6 Entering root credentials to connect to an ESX host

Figure 11.7 A host is being prepared for use with vCloud Director.

Figure 11.8 Enabling host spanning task during agent installation

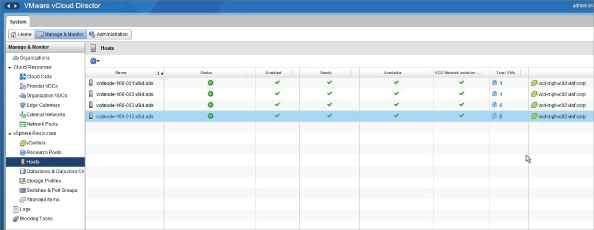

Once the process is complete, the host is ready for use (Figure 11.9). DRS will distribute VM workloads to the host automatically if configured to do so.

Figure 11.9 Host preparation completed

Removing ESX Hosts from a PvDC

You may find it necessary to move one or more physical vSphere hosts to a different cluster or to remove the hosts entirely. vCloud Director is connected to your vCenter servers, so if you put a host into maintenance mode, vCloud Director detects this fact automatically and marks the host as unavailable until you exit maintenance mode (Figure 11.10).

Figure 11.10 vCloud Director detects a host has been placed into maintenance mode.

However, vCloud Director does not automatically remove a host from the inventory if you move the host to a different vSphere cluster or remove it entirely.

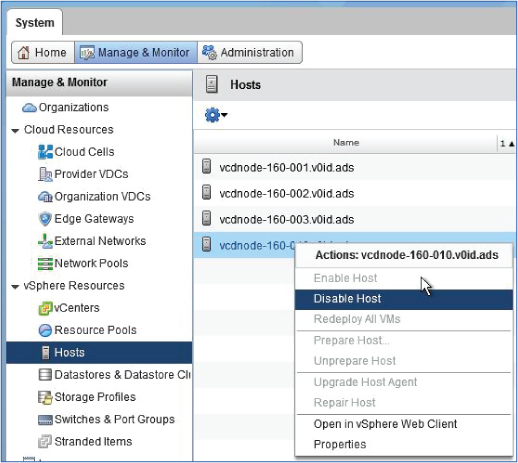

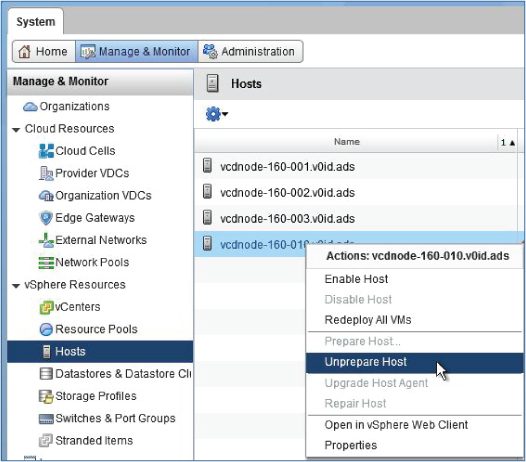

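So, to remove a host, you need to do the following in vCloud Director before you move it to another cluster or remove it in vSphere:

- Disable the host (Figure 11.11).

- Choose Unprepare Host from the host's menu to remove the vCloud Director agent (Figure 11.12).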

Figure 11.11 Disabling a host

Figure 11.12 Choose Unprepare Host from this menu.

Adding Datastores

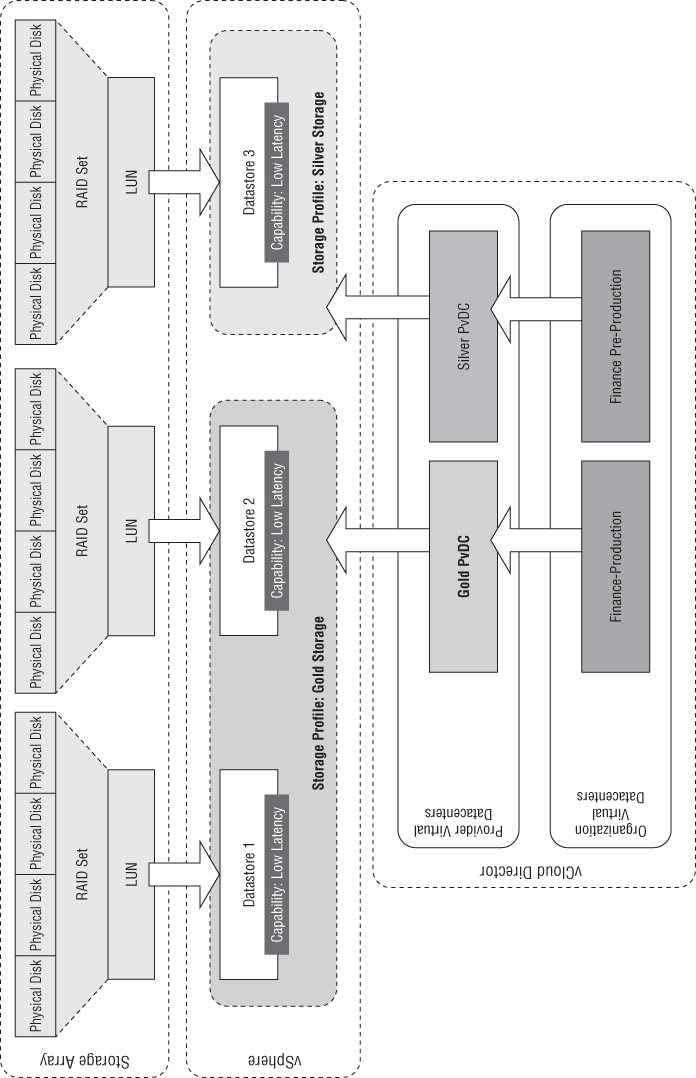

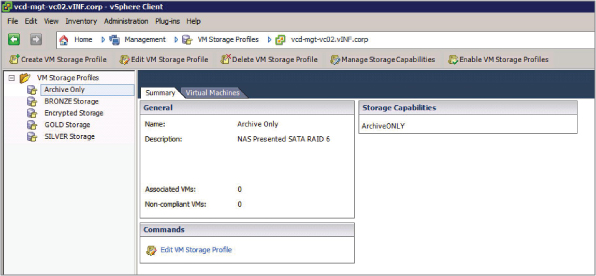

Use the Storage Profiles feature of vSphere to create storage profiles. This feature provides a further level of abstraction to vCloud Director and allows your cloud to consume storage of a particular profile without having to select individual logical unit numbers (LUNs). For example, you can group LUNs with particular capabilities (such as performance, array-based encryption, or different levels of redundancy) into storage profiles.

Figure 11.13 illustrates the mapping of physical disks to storage profiles by capability tags and ultimately into storage that is abstracted to consumers in Org vDCs.

Figure 11.13 Mapping of physical disks to storage profiles by capability tags and abstracting to Org vDCs
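To make the mapping in Figure 11.13 concrete, the sketch below groups datastores by a capability tag, which is essentially what a storage profile does when it presents a set of LUNs to vCloud Director as one pool. It is an illustration only: the input format, datastore names, and tags are hypothetical, and real storage profiles are defined in vSphere, not by a script.

```shell
#!/usr/bin/env bash
# Illustration only: group datastores by capability tag, mirroring how a
# storage profile presents a set of LUNs to vCloud Director as one pool.
# Input lines are "<datastore> <capability>"; all names are hypothetical.
group_by_capability() {
  awk '{
         # Append each datastore to the comma-separated list for its tag
         if ($2 in members) members[$2] = members[$2] "," $1
         else members[$2] = $1
       }
       END { for (tag in members) print tag ": " members[tag] }' "$@" | sort
}
```

Feeding it the lines `gold-lun01 Gold`, `silver-lun01 Silver`, and `gold-lun02 Gold` produces a Gold pool containing two datastores and a Silver pool containing one, which is the view an Org vDC gets of the underlying LUNs.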

The process for adding more storage to your vCloud environment includes two steps. First, the storage needs to be presented at the vSphere layer, as you would for a traditional vSphere environment. LUNs are zoned and masked to a cluster. The vSphere administrator scans for new LUNs and formats them. These LUNs are then added to a storage profile using either user-assigned capabilities or automatically detected capabilities. If you have a storage array that supports vStorage APIs for Storage Awareness (VASA), you can use the automatically detected capabilities to define a storage profile.

Second, if you are using storage profiles as shown in Figure 11.14, LUNs with common capabilities or properties can automatically be used by vCloud Director once you associate a PvDC, and its Org vDCs, with the relevant storage profiles. vCloud Director is then able to consume whatever is provided within each storage profile.

Figure 11.14 vSphere storage profiles

Using storage profiles means that as soon as datastores with matching capabilities are added at the vSphere layer, they are automatically available to vCloud Director for consumption, unless they are in maintenance mode.

Adding Additional vCenter Servers

As your environment grows, you may start to approach the configuration maximums of a single vCenter in a vCloud deployment in terms of the number of VMs or hosts that you can support. Scaling further will require another vCenter server. Or you may have a workload that has a different security profile, which means you'll need to deploy a dedicated vCenter and set of resource pods (clusters).

Deploying a dedicated vCenter and set of resource pods is a straightforward process and is just a case of adding another vCenter and vCNS Manager to your vCloud console. You'll remember from Chapter 3, “Introducing vCloud Director,” that vCloud Director aggregates multiple vSphere resources into a common management and orchestration function. This is how vCloud is able to scale to a significant number of VMs. The process of adding further vCenter servers is the same as the steps shown in Chapter 8, “Delivering Services,” for attaching the first vCenter.

Troubleshooting vCloud Director

From time to time you may encounter issues with your vCloud Director environment. Most of the “brains” of vCloud Director reside in the code running on the cell servers, and issues there are quite straightforward to troubleshoot.

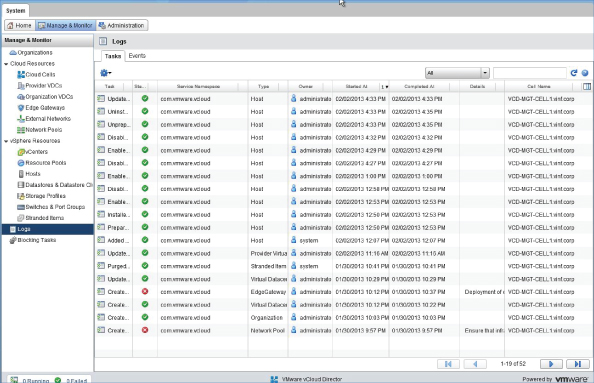

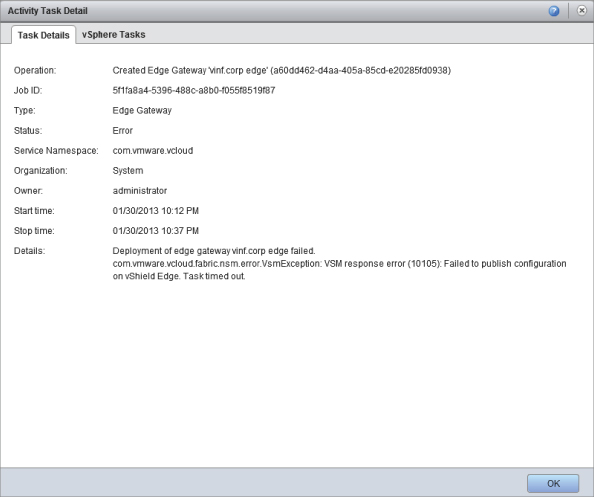

vCloud Director provides a number of logs that are accessible to administrators via the vCloud Director interface. As you can see in Figure 11.15, these logs include tasks and events that are generated by vCloud Director across the implementation. Double-clicking on a task will display more details about the error, as shown in Figure 11.16.

Figure 11.15 vCloud Director logs: tasks and events

Figure 11.16 Task details

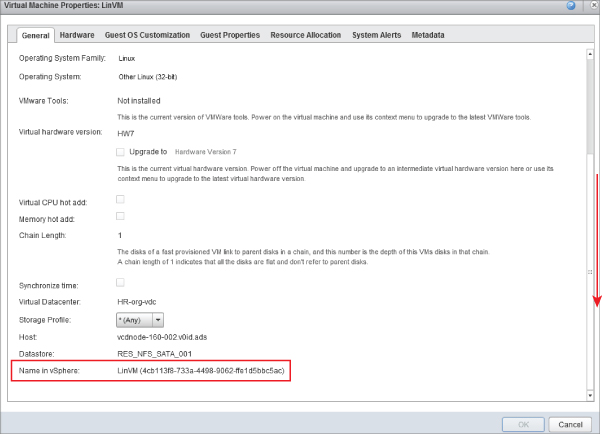

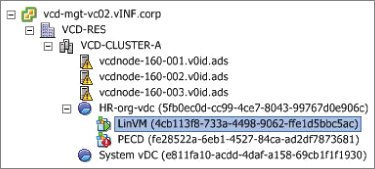

Often it's useful to be able to track vCloud Director–created objects in vSphere. In the properties dialog box of a VM, you'll be able to find its vSphere name toward the bottom of the screen, as shown in Figure 11.17. You can then locate this object in the vSphere client and troubleshoot any issues with that VM at the vSphere layer, as shown in Figure 11.18.

Figure 11.17 Locating the vSphere object name in the VM's properties dialog box

Figure 11.18 vCloud Director managed object viewed from vSphere
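If you need to locate many such objects, a small helper can pull the identifier out of the vSphere name so you can paste it into the vSphere client's search. This sketch assumes the vSphere name takes the form `<VM name> (<UUID>)`, as VMs created by vCloud Director typically appear; check the name shown in your own properties dialog before relying on it. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper, assuming a vSphere name of the form
# "<VM name> (<UUID>)". Prints the 36-character UUID, or nothing
# if the name does not follow that pattern.
vsphere_uuid_from_name() {
  sed -nE 's/.*\(([0-9a-fA-F-]{36})\)[[:space:]]*$/\1/p' <<<"$1"
}
```

For example, `vsphere_uuid_from_name 'web01 (8d7e3a2c-1b4f-4c9a-9e2d-0f6b5a4c3d21)'` prints just the UUID.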

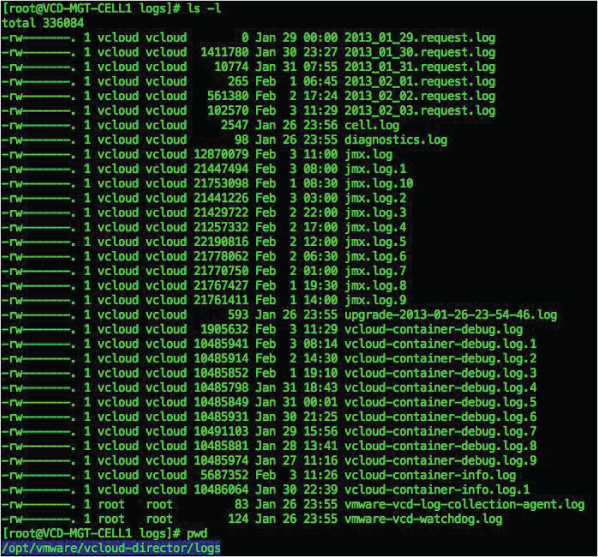

If you experience problems connecting to the vCloud Director interface, this is often a sign of an issue with one or more of your cell servers. Each cell server maintains a number of local log files, held in /opt/vmware/vcloud-director/logs (see Figure 11.19). You can view them by opening a Secure Shell (SSH) connection to the cell server.

The most useful log files for day-to-day running are the cell.log and vcloud-container-debug.log files. If you are experiencing issues, you should review these files first for recent events and errors.
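To pull recent errors from these files without paging through them, you could use something like the following sketch. It assumes error entries include the word ERROR as their log level; the function name is hypothetical, and the default directory is the one given above.

```shell
#!/usr/bin/env bash
# Minimal sketch: show the most recent lines flagged ERROR in a cell log.
# Assumes error entries carry an ERROR level token; adjust if yours differ.
VCD_LOG_DIR=${VCD_LOG_DIR:-/opt/vmware/vcloud-director/logs}

recent_errors() {
  local log=$1 lines=${2:-20}
  # -w matches ERROR as a whole word; tail keeps only the newest matches
  grep -w 'ERROR' -- "$log" | tail -n "$lines"
}
```

For example, `recent_errors "$VCD_LOG_DIR/cell.log"` shows the last 20 error lines from cell.log, and `recent_errors "$VCD_LOG_DIR/vcloud-container-debug.log" 50` the last 50 from the debug log.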

Few aspects of a cell server's behavior can be adjusted while it is online, so if you have problems, it may be necessary to restart the vCloud Director software running on that cell server.

Figure 11.19 vCloud Director cell server log directory

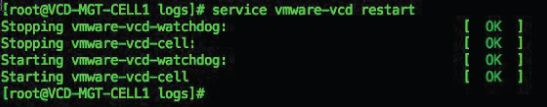

You can perform a full restart of the vCloud Director cell software on a single server by issuing the following command as root (see Figure 11.20). Note that this command will terminate any currently running jobs.

service vmware-vcd restart

Figure 11.20 Restarting vCloud Director software on a cell server

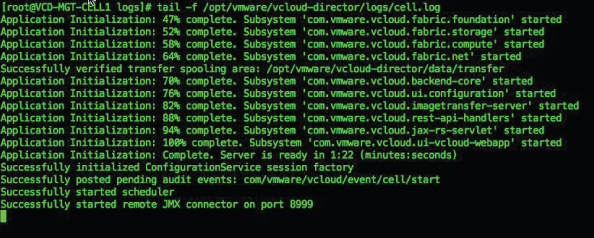

Although the console reports reasonably quickly that the service has restarted, the vCloud Director software is still carrying out its initialization processes in the background. You can check on the progress of this task by viewing the cell.log file (see Figure 11.21). If you use the tail -f command (for example, tail -f /opt/vmware/vcloud-director/logs/cell.log), you will see real-time updates to this log file on the console without having to reopen the file.

Figure 11.21 The cell.log file on a vCloud Director cell server during service initialization
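When you script a restart, you may prefer to wait for initialization to finish rather than watch the log by hand. The sketch below polls cell.log for a completion message. It assumes that a finished startup logs a line containing "Server is ready"; confirm the wording in your own cell.log and pass a different pattern if needed. Passing the log's line count from before the restart stops the function from matching a message left over from a previous start. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: poll cell.log until the cell reports it has finished initializing.
# Arguments: log path, line count before the restart, timeout in seconds,
# and the completion pattern (assumed to be "Server is ready").
wait_for_cell() {
  local log=$1 since=${2:-0} timeout=${3:-600} pattern=${4:-Server is ready}
  local waited=0 total
  while :; do
    total=$(wc -l 2>/dev/null < "$log" || echo 0)
    # If the log was rotated or truncated at startup, scan it from the top
    (( total < since )) && since=0
    # Only look at lines written after the recorded starting point
    if tail -n "+$(( since + 1 ))" "$log" 2>/dev/null | grep -q -- "$pattern"; then
      echo "cell ready"
      return 0
    fi
    if (( waited >= timeout )); then
      echo "cell not ready after ${timeout}s" >&2
      return 1
    fi
    sleep 5
    waited=$(( waited + 5 ))
  done
}
```

For example, with `log=/opt/vmware/vcloud-director/logs/cell.log`, record `before=$(wc -l < "$log")`, run `service vmware-vcd restart`, and then call `wait_for_cell "$log" "$before" 600`.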

If you have problems with the vCloud cell server initializing correctly, you should further investigate the log files and verify that you have connectivity to the server where the vCloud database is held and that DNS resolution is functioning correctly.
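Both checks can be scripted from the cell server. In the sketch below the function names are hypothetical and the database host name is a placeholder; 1521 is the default Oracle listener port and 1433 the default SQL Server port, so substitute the port your vCloud database actually uses.

```shell
#!/usr/bin/env bash
# Sketch of the two checks above, run from a cell server.

# Succeeds if the name resolves through the system resolver (DNS, /etc/hosts)
check_dns() {
  getent hosts "$1" >/dev/null
}

# Succeeds if a TCP connection to host:port opens within 5 seconds,
# using bash's /dev/tcp pseudo-device
check_port() {
  local host=$1 port=$2
  timeout 5 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}
```

For example, `check_dns "$(hostname -f)"` confirms the cell can resolve its own name, and `check_dns vcd-db.example.com && check_port vcd-db.example.com 1433` tests name resolution and reachability of a hypothetical SQL Server database host.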

You can also gracefully shut down a cell server. Doing so allows running processes to complete but disables new connections to the cell server. This approach is useful for performing online maintenance.

You can stop any new jobs from being scheduled on an individual cell server with the following command:

cell-management-tool -u UserName -p YourPassword cell -q true

You can query the number of active jobs using this command (see Figure 11.22):

cell-management-tool -u UserName -p YourPassword cell -t

Figure 11.22 Querying the cell service

When the job count equals 0, you can stop the cell server with the following command (see Figure 11.23):

cell-management-tool -u UserName -p YourPassword cell -s

Figure 11.23 Cell service with no active jobs

Note that you have to run this command twice:

- The first time, it shuts down the watchdog service.

- The second time, it shuts down the actual cell service.

You can double-check the status of the cell service using the following command. The results in Figure 11.24 show the vCloud services are stopped after being gracefully shut down.

service vmware-vcd status

Figure 11.24 vCloud services stopped after graceful shutdown

Once you have completed your maintenance, you can restart the cell service by using this command:

service vmware-vcd start
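The graceful shutdown steps can be combined into a single script. This is a minimal sketch, not a VMware-supplied tool: it assumes it runs as root on the cell, that cell-management-tool is on the PATH (or is called by its full path under /opt/vmware/vcloud-director/bin), and that the -t output contains a line such as "Job count = 3". Check the output format on your own cells before relying on the parsing; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of the graceful shutdown sequence for a single cell.

# Extract the job count from the tool's status output (empty if absent)
job_count() {
  sed -nE 's/^[[:space:]]*Job count[[:space:]]*=[[:space:]]*([0-9]+).*/\1/p' <<<"$1"
}

graceful_cell_shutdown() {
  local user=$1 password=$2 status count
  # Stop new jobs from being scheduled on this cell
  cell-management-tool -u "$user" -p "$password" cell -q true || return 1
  # Poll until the running jobs have drained
  while :; do
    status=$(cell-management-tool -u "$user" -p "$password" cell -t) || return 1
    count=$(job_count "$status")
    if [[ -z $count ]]; then
      echo "could not read the job count" >&2
      return 1
    fi
    (( count == 0 )) && break
    echo "waiting for ${count} job(s) to finish..."
    sleep 30
  done
  # The first -s stops the watchdog service, the second the cell service
  cell-management-tool -u "$user" -p "$password" cell -s
  cell-management-tool -u "$user" -p "$password" cell -s
  service vmware-vcd status
}
```

Once maintenance is complete, start the cell again with service vmware-vcd start.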

Summary

Managing your vCloud environment isn't drastically different from managing traditional IT infrastructure, except much of the focus of the operations teams is shifted to capacity planning and management, rather than day-to-day provisioning activity for customer VMs and applications.

Change control allows you to enforce a level of diligence and planning for change. We suggest that you apply it at varying levels, with the tightest governance and control applied to the management elements of the platform.

To retain agility around the infrastructure elements that service customer workloads directly (the resource pods), we recommend that you develop a set of preapproved changes and processes that are available to the operations teams to invoke as required without having to go through a full change control or approval process.

From a provider point of view, no change control is provided or enforced on consumer workloads. Consumers have a self-service interface to the platform and can create and destroy their own systems as they see fit.

Monitoring is split into two elements: platform (vCloud, vSphere, network, storage) and consumer workload (vApps). The provider is typically interested in monitoring platform performance, ensuring the infrastructure is available and performing to the required SLA.

In an IaaS offering like vCloud, the consumer workload is typically managed and monitored by the consumer. The infrastructure is not visible to the customer, who must rely on the provider to deliver the required (or even contracted) SLA. To manage and monitor vApp performance and availability, the consumer would have to implement their own solution in their Org vDC or integrate the vApps with an existing external enterprise monitoring solution.

Likewise, there are no vCloud-integrated antimalware solutions available as of this writing, but this does not prevent consumers from implementing antimalware solutions in their vApps.

From time to time there may be issues with the vCloud cell servers or the database connections they rely on. Restarting the cell service is a straightforward process. If you have multiple cell servers behind an IP load balancer that distributes connections among them, you can restart them one at a time without an outage for consumers.

Issues with the underlying vSphere platform can be investigated and resolved using the vSphere client. Both vCloud and vSphere maintain a comprehensive set of logs, and forwarding them to a syslog server makes it easier to correlate alerts and event messages.