2

Understanding the Language of Compression

All disciplines have their own specialized terminology and references for explaining various components and practices, and compression is certainly no different. It has its own specific language, and like most languages, it shares elements with other neighboring tongues, especially the video and IT network worlds.

But in some cases, the same terms have different meanings in the world of compression. Talk to a broadcaster or cable operator about video on demand, and they’ll immediately assume you are referring to the video content made available on demand through cable channels. Talk to a streaming service or content provider about the same terminology, and they will assume you mean any online content. Compressionists need to know both definitions and be conscious of the context others refer to when they use these terms.

On the other hand, some words and concepts are specific to compression, and these are the ones that typically cause communication problems between compressionists and others. Perhaps the most confusing terms concern the differences between video players on computers (and embedded devices), video and audio codecs, and video and audio formats. The words that describe these different parts of compression are similar enough to puzzle anyone working in the field and confuse a client who just needs work done and has no inclination or need to learn the compressionist’s lexicon.

The goal of this chapter is to clarify what exactly these three major concepts—players, codecs, and formats—mean, and then we will discuss some specific facets of compression that any compressionist should be prepared to discuss as part of the encoding process.

Compression Parameters

Before we dive into the formats, containers, and codecs you are going to come into contact with, let’s drill into compression parameters. This is a term commonly used to describe the settings available with a codec. Many are global, meaning they are identical or at least similar from codec to codec. Some, however, may be specific to an individual codec family. It’s important to understand all the basic settings because they are directly going to affect the quality of the finished video you are creating.

It’s also a good idea to familiarize yourself with the advanced settings possible within the codecs you work with most often. This will allow you to really get in there and optimize the finished product.

Data Rates (or Bit Rates)

Data rates are the number of bits per second you are allowing the software to use to describe the video. If you use more bits, the detail and picture quality go up, but so does the file size. If you use fewer bits, the size goes down, but the image detail may suffer. Certain codecs are better than others at providing a better-quality image with less data.

TIP

In the world of acronyms, a little b means bits, and a big B means bytes. Don’t get them confused; there are eight bits in a byte, so if you get it wrong, you are off by eight times!

Data rates are typically represented in bits (whereas storage on a hard drive is typically represented in bytes). The two abbreviations you will see most often in reference to this are kilobits per second (kbps) and megabits per second (Mbps). However, in recent years, the Society of Motion Picture and Television Engineers (SMPTE) and other large standards boards have attempted to unify around bit/s instead of bps to avoid confusing bits and bytes. So, the use of kbit/s and Mbit/s would also be appropriate in documentation or software settings.
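To see why mixing up bits and bytes matters, here is a minimal Python sketch that estimates a file size from a data rate. The function name and the sample clip are assumptions made for illustration, and the estimate ignores container overhead.

```python
def file_size_megabytes(bitrate_kbps: float, duration_seconds: float) -> float:
    """Estimate file size in megabytes (MB) from a data rate in kilobits per second."""
    total_bits = bitrate_kbps * 1000 * duration_seconds  # kilobits -> bits
    total_bytes = total_bits / 8                          # eight bits in a byte
    return total_bytes / 1_000_000                        # bytes -> decimal megabytes


# A hypothetical 10-minute clip encoded at 5 Mbps (5,000 kbps) for audio and video combined.
size_mb = file_size_megabytes(bitrate_kbps=5000, duration_seconds=10 * 60)
print(f"{size_mb:.0f} MB")  # prints "375 MB"
```

If you skipped the division by eight, you would predict a 3,000 MB file instead of a 375 MB one, which is exactly the "off by eight times" mistake.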

VBR and CBR

Variable bitrate (VBR) encoding varies the amount of output bits over time, using an average target bitrate as a goal but apportioning different amounts of bits to different portions of an encoded video. VBR allows a higher bitrate (and therefore more storage space) to be allocated to the more complex segments of media files, while less space is allocated to less complex segments. The average of these rates is calculated to produce an average bitrate for the file that will represent its overall audio and video quality.

Constant bitrate (CBR), on the other hand, means that bits used per segment are constant, regardless of the complexity of the audio or image. Why would you use one versus the other? VBR is useful for web-based content that is downloaded, not streamed in real time, because the ratio of image quality to file size is high—you are being as efficient as possible with the bits being used (the most bang for your bits, as it were).

TIP

Need a small-sized video with a decent picture quality? Try a VBR file with a low minimum data rate and a high peak. This will keep the average fairly low while allowing the codec to decide when it needs to spend more bits to get the image right.

So, if that’s true, why would you ever use CBR? Some playback devices expect the same amount of data constantly because it is easier for the player to maintain smooth, continuous playback of the video. Devices with lower processing power than modern DVD and Blu-ray players, as well as portable devices that have to balance processing power against power consumption, are ideal candidates for CBR video because the processor won’t be overwhelmed.
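The difference between the two approaches can be sketched in a few lines of Python. This is a simplified illustration, not how any real encoder performs rate control: the complexity scores are made-up numbers, and the VBR function simply hands out bits in proportion to them.

```python
def allocate_cbr(total_bits: float, segment_count: int) -> list[float]:
    """Constant bitrate: every segment gets the same number of bits."""
    return [total_bits / segment_count] * segment_count


def allocate_vbr(total_bits: float, complexity: list[float]) -> list[float]:
    """Variable bitrate (simplified): bits are shared in proportion to complexity."""
    total_complexity = sum(complexity)
    return [total_bits * score / total_complexity for score in complexity]


# Hypothetical complexity scores for four segments: two static shots,
# one fast action scene, and one moderately busy scene.
complexity = [1, 1, 4, 2]
budget = 8_000_000  # total bits available for the four segments

print(allocate_cbr(budget, len(complexity)))
print(allocate_vbr(budget, complexity))
```

Both allocations spend the same 8,000,000 bits, so the average is identical. The VBR version gives the action scene four times as many bits as a static shot, while the CBR version hands the player the same amount of data for every segment.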

Frame Rate

Frame rate (expressed most often as frames per second or fps) is the frequency, or rate, at which consecutive images are displayed. The term is used for film and video cameras, computer graphics, and motion capture systems. Frame rate is also called the frame frequency and is sometimes expressed in hertz (Hz).

In the early days of online video, it was common to reduce the frame rate to save bandwidth (for example, delivering a clip at 15 fps instead of 30 fps). Because of the improvements in broadband speeds as well as an increase in the processing power of modern devices, most encoded video is delivered at a native frame rate, rather than a reduced one. It is, however, vital to ensure frame rates aren’t accidentally adjusted during the encode process (say from 30 to 24, or vice versa). The effect of this would be to potentially add perceptual motion stutter or what is often called “judder” to the image.
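A short Python sketch shows where the stutter comes from. It assumes the simplest possible conversion, in which each output frame just shows the most recent source frame; real tools may blend frames or use other cadences, so treat this as an illustration only.

```python
def conversion_pattern(source_fps: int, output_fps: int, count: int) -> list[int]:
    """For each output frame, return the index of the source frame it displays."""
    return [index * source_fps // output_fps for index in range(count)]


# 24 fps source delivered at 30 fps: some source frames are shown twice.
print(conversion_pattern(24, 30, 10))  # prints [0, 0, 1, 2, 3, 4, 4, 5, 6, 7]

# 30 fps source delivered at 24 fps: some source frames are skipped.
print(conversion_pattern(30, 24, 10))  # prints [0, 1, 2, 3, 5, 6, 7, 8, 10, 11]
```

In the first case source frames 0 and 4 are held for twice as long as their neighbors, and in the second case frames 4 and 9 never appear. Either way the motion no longer advances evenly, which the viewer perceives as judder.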

Frame Types

NOTE

Interframe compression encodes a frame after looking at data from many frames (between frames) near it, while intraframe compression applies encoding to each individual frame without looking at the others.

When encoding video, there are a few different types of frames that will appear within the content: intra (I) frames (also called key frames), predicted (P) frames, and bipredictive or bidirectional (B) frames. I-frames are frames that do not refer to other frames before or after them in the video. They contain all the information needed to display them. P-frames, however, use previous I-frames or P-frames as reference points to complete them (in other words, they describe the changes made in an image since the reference frame). Because they are describing only changes, P-frames take fewer bits to create. B-frames are predictive frames that can refer to frames both before and after them, which usually means they take even less data to create than P-frames.
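To get a feel for how much the mix of frame types affects size, the following Python sketch totals the bits in a repeating group of frames. The per-frame sizes and the frame pattern are hypothetical values chosen only to reflect the ordering described above (I largest, B smallest); real sizes depend on the codec, the settings, and the content.

```python
from collections import Counter

# Hypothetical average frame sizes in kilobits, for illustration only.
FRAME_KILOBITS = {"I": 400, "P": 150, "B": 60}


def group_kilobits(pattern: str) -> int:
    """Total size of a run of frames written as a string such as 'IBBP'."""
    counts = Counter(pattern)
    return sum(FRAME_KILOBITS[frame_type] * n for frame_type, n in counts.items())


mixed = "IBBPBBPBBPBB"          # one I-frame, three P-frames, eight B-frames
all_intra = "I" * len(mixed)    # the same 12 frames, all encoded as I-frames

print(group_kilobits(mixed))      # prints 1330
print(group_kilobits(all_intra))  # prints 4800
```

With these assumed sizes, the mixed pattern needs a little more than a quarter of the bits that twelve I-frames would need.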

Aspect Ratios and Letterboxing

As discussed in Chapter 1, a number of aspect ratios are available; however, in the video world, 4:3 and 16:9 are the two most common. As part of the compression process, you want to be conscious of preserving the intended aspect ratio as you scale the video resolution; nothing can be as distracting as trying to watch video that has a distorted image.

But there is also one other issue to keep an eye out for: letterboxed video. Letterboxed video is wide-screen content (such as 16:9) that has black bars above and below the image to conform it to 4:3 standards. Letterboxing became popular in the 1970s to help preserve the full image of a feature film being played back on a broadcast TV. Pillarboxing is the complementary case, with black bars on the left and right of a 4:3 image shown on a wide-screen display; it became common with the arrival of wide-screen TVs. Most compression applications have a built-in cropping tool that allows you to remove the black bars from the original image as well as alter the intended aspect ratio to the new dimensions needed to keep the video image from distorting.
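The arithmetic behind both tasks is simple enough to sketch in Python. The function names are made up for this example, and rounding the height down to an even number is an assumption that suits many, but not all, encoders.

```python
def scale_to_width(source_width: int, source_height: int, target_width: int) -> tuple[int, int]:
    """Scale to a new width while preserving the source aspect ratio."""
    height = source_height * target_width // source_width
    height -= height % 2  # assumption: keep the height even
    return target_width, height


def letterbox_bar_height(frame_width: int, frame_height: int,
                         aspect_width: int, aspect_height: int) -> int:
    """Height of each black bar when wide content sits inside a taller frame."""
    content_height = frame_width * aspect_height // aspect_width
    return (frame_height - content_height) // 2


print(scale_to_width(1920, 1080, 1280))         # prints (1280, 720)
print(letterbox_bar_height(640, 480, 16, 9))    # prints 60
```

In the second call, 16:9 content inside a 640 × 480 frame occupies only 360 lines, so cropping 60 lines from the top and 60 from the bottom removes the bars and leaves a 640 × 360 image.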

Square and Nonsquare Pixels

Pixels in the computer world are square. A 100-pixel vertical line is the same length as a 100-pixel horizontal line on a graphics monitor (a 1:1 ratio). However, some formats (such as HDV and DVCPRO HD) use nonsquare pixels internally for image storage as a way to reduce the amount of data that must be processed, thus limiting the necessary transfer rates and maintaining compatibility with existing interfaces.

Directly mapping an image with a certain pixel aspect ratio on a device whose pixel aspect ratio is different makes the image look unnaturally stretched or squashed in either the horizontal or vertical direction. For example, a circle generated for a computer display with square pixels looks like a vertical ellipse on a standard-definition NTSC television that uses vertically rectangular pixels. This issue is even more evident on wide-screen TVs.

As you convert video meant for one delivery format to another, you may need to convert the pixel aspect ratio as part of the process. Many compression programs will already correct for this automatically; however, some may expose an option that allows the users to either select it manually or override the default options available.
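As a rough illustration of that conversion, the Python sketch below computes the square-pixel width needed to display a frame stored with nonsquare pixels. The 1440 × 1080 frame size and 4:3 pixel aspect ratio are the values commonly cited for 1080-line HDV; the function name is an assumption for this example.

```python
from fractions import Fraction


def display_width(stored_width: int, pixel_aspect_ratio: Fraction) -> int:
    """Width in square pixels needed to show the stored image undistorted."""
    return round(stored_width * pixel_aspect_ratio)


# 1080-line HDV stores 1440 pixels per line, each displayed 4/3 as wide as it is tall.
width = display_width(1440, Fraction(4, 3))
print(width, "x", 1080)        # prints "1920 x 1080"
print(Fraction(width, 1080))   # prints "16/9"
```

Skipping this step and showing the 1440 stored pixels as square pixels would produce an image that looks squeezed horizontally.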

Containers

A container (also known as a wrapper or format) is a computer file format that can contain various types of data, compressed by means of standardized audio/video codecs. The container file is used to identify and interleave the different data types and make them accessible to the player. This applies to online and broadcast content. Simpler container formats can contain different types of video or audio codecs, while more advanced container formats can support multiple audio and video streams, subtitles, chapter information, and metadata (tags), along with the synchronization information needed to play back the various streams together.
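The idea of interleaving can be illustrated with a toy model in Python. This is not a real container format: the Packet class, the stream names, and the timestamps are all invented for the example. It only shows how separate streams can be merged into one sequence ordered by time so a player can read them together.

```python
from dataclasses import dataclass
from heapq import merge


@dataclass(frozen=True)
class Packet:
    stream: str        # which stream the data belongs to
    timestamp_ms: int  # when the data should be presented


def interleave(*streams: list[Packet]) -> list[Packet]:
    """Merge already time-ordered streams into one time-ordered sequence."""
    return list(merge(*streams, key=lambda packet: packet.timestamp_ms))


video = [Packet("video", t) for t in (0, 40, 80)]
audio = [Packet("audio", t) for t in (5, 26, 47, 68)]
subtitles = [Packet("subtitle", 50)]

for packet in interleave(video, audio, subtitles):
    print(packet.timestamp_ms, packet.stream)
```

The timestamps stand in for the synchronization information mentioned above: because every packet carries one, the player can keep audio, video, and subtitles aligned during playback.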

Containers for Audio

Some wrappers/containers are exclusive to audio, as listed here:

WAV: This is widely used on the Windows platform.

WMA: This is a common Windows audio format that supports a number of codecs.

AIFF: This is widely used on the macOS platform.

MP3 (MPEG-1 Layer 3): Though it has plateaued in popularity, this format is considered one of the linchpins for the rise of digital music.

M4A and AAC: These are audio formats that offer better audio quality than MP3 and take advantage of the MPEG-4 audio standards.

Containers for Various Media Types

Other, more flexible containers can hold many types of audio and video, as well as other media. Some of the more common containers include the following:

AVI: This is the standard Microsoft Windows container.

WMV: This is a compressed video file format for several proprietary codecs developed by Microsoft.

FLV: This is the format specific to Flash Video. It primarily uses the AVC/H.264 codec, though it also supports VP6.

MOV: This is the standard QuickTime video container from Apple.

MPEG-2 transport stream (TS) (aka MPEG-TS): This is the standard container for digital broadcasting; this typically contains multiple video and audio streams, an electronic program guide, and a program stream (PS).

MP4: This is the standard audio and video container for the MPEG-4 multimedia portfolio.

OGM (“Ogg Media”): This is the standard video container for Xiph.org codecs.

MXF: This is a container format for professional digital video and audio media defined by a set of SMPTE standards.

Matroska/MKV: This is not a standard for any codec or platform but instead is an open standard.

MOX: This is an open source mezzanine container for use in conjunction with open source codecs such as EXR, PNG, and VC5 (CineForm).

Codecs

Ah, here we are, the core component of the compression world: the codec. Codec is a portmanteau of compressor/decompressor or coder/decoder, depending on whom you ask. Though codecs also exist in hardware, when you hear codecs referred to, people are typically speaking about the software codecs that actually make it possible to translate stored video and audio from their digital form into moving images and audio. Knowing when and when not to use different codecs is important throughout the postproduction, compression, and final delivery of your content.

A Time and a Place for Everything

All codecs have advantages and disadvantages. Comparisons are frequently made between which are the “best” (best being a sliding scale depending on who published the findings). Generally speaking, the trade-off between image fidelity, file size, processor usage, and popularity can be used to draw comparisons between various codecs. What do we mean by that?

Image quality: Obviously, preserving image quality is of paramount concern when compressing video.

File size: Quality has to be balanced by how large the file actually is.

Processor usage: As the video is playing back, it is being decoded from its binary form to be displayed. Each codec requires different amounts of processor support to perform this. It can’t be so intensive that it’s impossible for the device or computer to actually keep up with the decoding process, so this should be considered as well.

Popularity: The most popular codecs are the ones that have the widest-reaching audience, though they are not always the best-looking or the ones capable of delivering the best quality in the smallest files. A video format or codec that requires additional steps to install a custom player may not get the viewers that a lower-quality but widely supported player may get.

With these criteria in mind, you can begin organizing codecs into a few categories that make it easy to decide when—or even more importantly, when not—to use a certain codec. The following are the essential categories for video codecs.

Acquisition Codecs

Acquisition codecs are the ones you capture your images in. Acquisition codecs are usually (but not always) associated with cameras. When choosing your acquisition codec, generally speaking, you should aim for the highest-quality codec that your camera (or your budget) supports. In this case, “highest quality” just means you want to capture as much information as possible: less compression, higher bit depth, and less chroma subsampling. The more information you start with, the more flexibility you will have in post, especially in color correction and any visual effects work. In many cases, your choice of camera also dictates your choice of codec (or codec family). Choosing an acquisition codec is one of the most important decisions content producers make. But there are also a lot of pragmatic factors involved with this decision. Otherwise, we would always be shooting uncompressed 8K raw, right?

A few of the current popular acquisition codecs available are the following:

REDCODE: Red Digital Cinema’s proprietary codec and format for camera acquisition.

XF-AVC: Canon’s 4K acquisition codec.

XAVC: Sony’s prosumer line for acquisition. XAVC is based on H.264/AVC and can support up to 4K at 60 fps.

XDCAM: Sony’s line of acquisition codecs based on MPEG-2. Four different product lines—XDCAM SD, XDCAM HD, XDCAM EX, and XDCAM HD422—differ in types of encoder used, frame size, container type, and recording media.

Mezzanine Codecs

The main purpose of these intermediary codecs is to preserve image quality (usually) regardless of file size. These codecs are mostly used during the postproduction process and are usually not suitable for delivery to the end user. These files are often huge or lack wide public support as playback formats. You would use a mezzanine codec if you wanted to export video to be used in another program during the postproduction process but didn’t want to introduce compression artifacts.

A few of the current popular mezzanine codecs are as follows:

Apple ProRes: This is a lossy codec developed by Apple for use in postproduction that supports up to 8K. It is the successor of the Apple Intermediate Codec and was first introduced in 2007 with Final Cut Studio 2. In 2010 it was made available as an encoder on the Windows platform as well, and in 2011 FFmpeg added support for it.

CineForm: This is an open source intermediate codec first developed by CineForm in 2002 and then acquired in 2011 by GoPro. It is a wavelet-based codec, similar in approach to JPEG 2000, and it is currently being standardized by SMPTE under the VC-5 name.

Avid DNxHD: This is a lossy high-definition video postproduction codec developed by Avid for multigeneration compositing with reduced storage and bandwidth requirements. It is an implementation of the SMPTE VC-3 standard.

Delivery Codecs

Delivery codecs are used to distribute video and audio content to an audience. These codecs provide the tightest balance between small file sizes and image quality. They are widely adopted and supported codecs that will reach the widest audience possible.

Current delivery codecs are as follows:

HEVC/H.265: High Efficiency Video Coding (HEVC), also known as H.265 and MPEG-H Part 2, is an industry standard and considered a successor to AVC (H.264 or MPEG-4 Part 10). It was codeveloped by the Moving Picture Experts Group (MPEG) and the Video Coding Experts Group (VCEG). In comparison to AVC, HEVC offers about double the data compression ratio at the same level of video quality, or substantially improved video quality at the same bitrate. It supports resolutions up to 8192 × 4320, including 8K UHD.

VP9: VP9 is an open source and royalty-free format developed by Google, which acquired On2 Technologies in 2010. VP9 has a large user base through YouTube and the Android platform. This code base was also the starting point for the AV1 codec.

MPEG-4 Part 10/H.264/AVC: MPEG-4 Part 10 is a standard technically aligned with the ITU-T’s H.264 and often also referred to as Advanced Video Coding (AVC). This standard is still the most popular codec currently supported on the Web. It uses different profiles and levels to identify different configurations and uses. It contains a number of significant advances in compression capability, and it has been adopted into a number of companies’ products, including the Xbox family, PlayStation, virtually all mobile phones, both macOS and Windows operating systems, as well as high-definition Blu-ray Disc.

AV1: AOMedia Video 1 is an open, royalty-free video-coding format designed for video transmissions over the Internet. It is being developed by the Alliance for Open Media (AOMedia), a consortium of firms from the semiconductor industry, video-on-demand providers, and web browser developers, founded in 2015. It is meant to succeed its predecessor VP9 and compete with HEVC/H.265.
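The "about double the data compression ratio" figure quoted for HEVC in the list above translates into simple arithmetic, sketched here in Python. The factor of 2 is the approximate figure from that description, not a guarantee; actual savings depend on the content and the encoder, and the 8,000 kbps starting point is a made-up example.

```python
def estimated_hevc_bitrate_kbps(avc_bitrate_kbps: float, compression_gain: float = 2.0) -> float:
    """Rough HEVC bitrate for similar quality, assuming a fixed gain over AVC."""
    return avc_bitrate_kbps / compression_gain


# A hypothetical AVC encode at 8,000 kbps.
print(estimated_hevc_bitrate_kbps(8000))  # prints 4000.0
```

Treat the result as a starting point for test encodes rather than a final setting.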

Players

The term player refers to the software that makes it possible to play back video and audio. In essence, video players are the TV within the computer that plays your videos. Player can refer to a stand-alone application, or it can be the software used for playback that is designed for use in web pages and applications that use HTML5. These HTML5 players will vary in capabilities but are developed to be used in web pages, in purpose-built applications, on computers, on console devices, and even on mobile phones (effectively, anywhere HTML works).

Desktop Players

There are many out there; some come free from software and hardware companies, others are sold commercially, and some are open source products supported by a community of developers and enthusiasts. Sometimes these players will have compression capabilities of their own, making it possible to transcode a piece of video in addition to playing it back.

As mentioned, several players are available; there isn’t one universal video player that will seamlessly play back all video and audio formats (though some try hard to do just that). As a compressionist, you want to be familiar with all these players and have them available to test and play back content you are creating to make sure it will work for your clients (and their end users) upon delivery.

If you are widely distributing your content, then you should also be checking this content in these players on multiple types of computers. Don’t test just on your Mac if all your viewers are likely to be playing it back on a PC; you have to mimic the end-viewer experience as closely as possible to assure a quality viewing experience.

Built-in Players

Generally speaking, on a modern consumer PC or Mac, the default player is a fairly generic application that will play back local media but is also tied to a media catalog or store run by the platform and designed to sell you movies and TV shows. It is not expected that the average user is doing much video conversion, so the default consumer experience is merely one of playback.

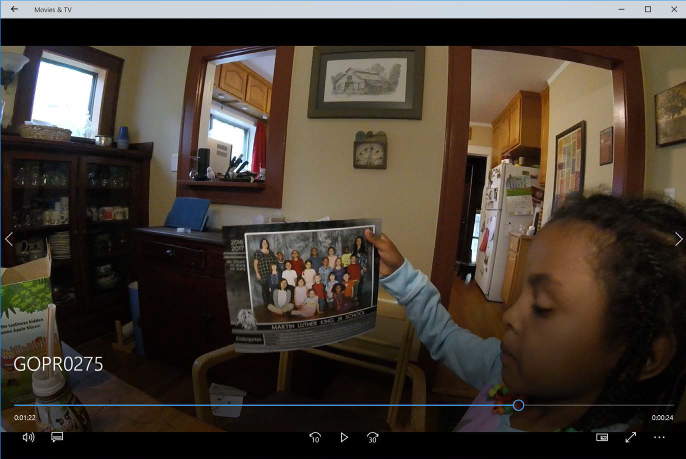

Microsoft Movies and TV App

On a modern Windows 10 device, the default player is a Windows Store app called Movies & TV (Figure 2.1). It is a simple player but supports formats and codecs expected in general content delivery (various flavors of MPEG-4 primarily). Given it is focused on a consumer experience, it also supports closed captions or subtitles in the display and the ability to “cast” or wirelessly push playback to supported wireless devices, such as an Xbox on the same network or a Google Chromecast. It also supports the ability to add new codec support through the Microsoft Store (for example, there is a free extension to add MPEG-2 support to the Movies & TV app).

macOS QuickTime and iTunes

QuickTime is still the default player for video in the Apple ecosystem (Figure 2.2). QuickTime X is the latest version (the previous version was QuickTime 7). The jump in numbering from 7 to 10 (X) was meant to signal a break with previous versions of the product, much as Mac OS X signaled a break with earlier versions of the Mac operating system. QuickTime X is fundamentally different from previous versions in that it is provided as a Cocoa (Objective-C) framework and breaks compatibility with the C-based APIs of QuickTime 7. It was completely rewritten to implement modern audio and video codecs in 64-bit. QuickTime X is a combination of two technologies: QuickTime Kit Framework (QTKit) and QuickTime X Player. QTKit is used by QuickTime Player to display media. QuickTime X does not implement all of the functionality of the previous QuickTime and focuses its codec support primarily on H.264, ProRes, and a few others.

Third-Party Players

Built-in players are not the only option for playback. Several third parties have created both open source and proprietary players that will play back video and audio. The platforms and formats they support will vary for each. These players offer additional features over the default players such as expanded format support, video analysis, and some exporting features.

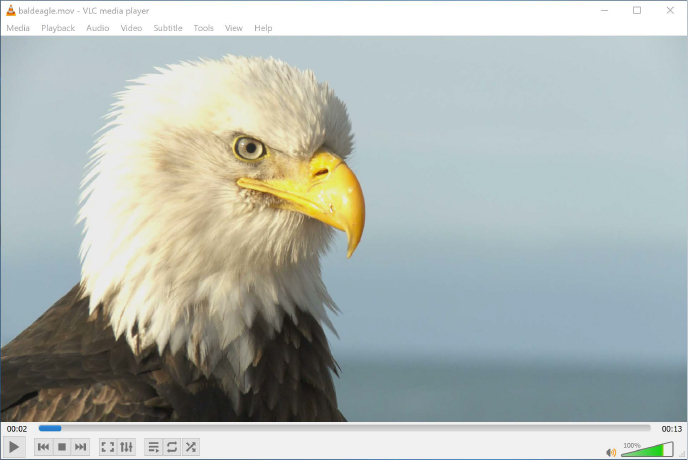

VLC

VLC is an open source software media player from the VideoLAN project (Figure 2.3). It is a highly portable multimedia player, encoder, and streamer supporting many audio and video codecs and file formats as well as DVDs, VCDs, and various streaming protocols. Versions of it exist for Microsoft Windows, macOS, BeOS, BSD, Windows CE, Linux, and Solaris.

It is well known as one of the most flexible video players on the market, able to play back virtually any format. It provides hardware decoding on supported devices and has advanced controls for adding subtitles and resyncing audio and video.

Telestream Switch

Telestream, a company known for both its professional and enterprise video tools, has a player marketed directly to compressionists. Its application, Switch, lets the user play a variety of web and professional media formats and, more importantly, inspect and adjust the properties of those files and then export a new file if needed. Switch enables visual file inspection and single-file transcoding, and it is an affordable software solution for professional media quality control.

Its functionality is broken down into three levels (and price points). The most basic Switch player is $9.99 and plays and inspects movies (providing metadata, and so on). The $199 Switch Plus adds the capability of editing the metadata of the video and gives extended support for HEVC, DNxHD, and many other professional codecs. The most expensive level, Switch Pro ($499), adds many more inspection tools, panels designed for monitoring audio loudness and playback on external monitors, and a timeline that highlights GOP structure and data rate details. The Pro version also includes the ability to compare alternate media (for example, comparing encoded video to source) and allows for exporting video as an iTunes-friendly package for those submitting content to the Apple Store for sale.

Switch is available for both Windows and macOS, which makes it an ideal cross-platform tool for testing and finding issues with your encodes.

HTML5 Players

HTML5 video is intended by its creators to become the new standard way to show video on the Web. The video tag is meant to replace the previous de facto standard, which was the proprietary Adobe Flash plug-in. Early adoption of the tag was hampered by lack of agreement as to which video-coding and audio-coding formats should be supported in web browsers. The <video> element was proposed by Opera Software in February 2007. Opera also released a preview build that was showcased the same day, and its manifesto called for video to become a first-class citizen of the Web.

Other elements closely associated with video playback in HTML5 are Media Source Extensions (MSE) and Encrypted Media Extensions (EME). Both are World Wide Web Consortium (W3C) specifications. MSE allows JavaScript to generate media streams for playback, which makes adaptive streaming possible with HTML5 video. EME provides a secured communication channel between web browsers and digital rights management (DRM) software. This allows the use of HTML5 video to play back DRM-wrapped content without the need of a plug-in like Adobe Flash.

The HTML5 specification does not specify which video and audio formats browsers should support. Browsers are free to support any video formats they feel are appropriate, but content authors cannot assume that any video will be accessible by all complying browsers. Next we want to go through a few of the common HTML5 players you may see referred to. It is worth noting that unlike desktop players, HTML5 players are closer to frameworks that developers use, either in an application or online, to play back the media. Each will have its own supported features and quirks.
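Because no single format is guaranteed to play everywhere, content is often offered in more than one format, and the first one the browser can handle is used. The Python sketch below models that selection logic only; it is not browser code, and the Source class, the URLs, and the support table are all hypothetical.

```python
from typing import NamedTuple, Optional


class Source(NamedTuple):
    url: str
    container: str
    video_codec: str


def first_playable(sources: list[Source],
                   browser_support: set[tuple[str, str]]) -> Optional[Source]:
    """Return the first source whose container and codec the browser supports."""
    for source in sources:
        if (source.container, source.video_codec) in browser_support:
            return source
    return None  # nothing playable: the author needs a fallback


# Hypothetical encodes of the same clip, listed in order of preference.
sources = [
    Source("https://example.com/clip.webm", "webm", "vp9"),
    Source("https://example.com/clip.mp4", "mp4", "h264"),
]

# A made-up browser that supports only H.264 in MP4.
browser_support = {("mp4", "h264")}

print(first_playable(sources, browser_support))
```

For this made-up browser the function skips the VP9 file and returns the MP4 entry. An empty support table would return None, which is the case the content author has to plan for.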

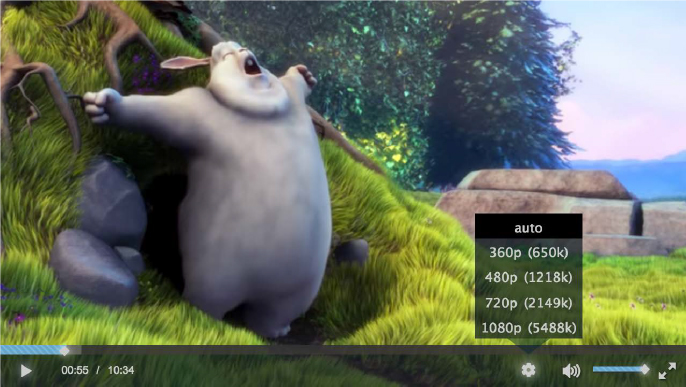

Video.js

Video.js is one of the most popular open source HTML5 players available (Figure 2.4). It is sponsored by Brightcove and is now used in more than 400,000 web sites. Video.js plays both HTML5 and Flash Video and works on mobile devices and desktops.

Projekktor

This is an open source video player project. Projekktor was released under GPLv3 for the Web, and it was written using JavaScript. This platform is capable enough to manage all compatibility issues and cross-browser problems while providing a huge set of powerful features such as preroll and postroll advertising, playlist and channel support, and Flash playback.

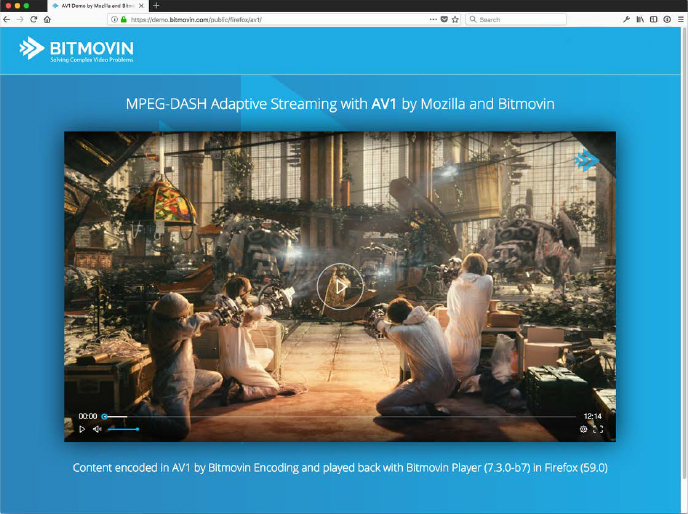

Bitdash

The HTML5 and Flash-based web player Bitdash can be used in web browsers on desktop computers and smartphones (Figure 2.5). This player enables the streaming and playback of MPEG-DASH or Apple’s HTTP Live Streaming, using either MSE or Flash, depending on the platform. DRM is enabled through the usage of EME as well as Flash. Bitdash is a product from Bitmovin, a multimedia technology company that provides services that transcode digital video and audio to streaming formats using cloud computing and streaming media players.

JW Player

JW Player was developed in 2005 as an open source project. The software is named after the founder and initial developer Jeroen Wijering and was initially distributed via his blog. JW Player has several different versions, including a free basic version under Creative Commons and a commercial software as a service (SaaS) version.

JW Player supports MPEG-DASH (in the paid version), digital rights management (DRM), interactive advertisement, and customization of the interface through Cascading Style Sheets (CSS).

Conclusion

These are the basic terms and concepts that make up the language of video compression. To use them effectively, you need to consider how they apply to your daily workflow. Now that you are better informed about the fundamentals of video compression, in Chapter 3 you’ll learn what type of equipment and process you need in place to use that knowledge.