Chapter 2

Wearable Computing Background and Theory

2.1 Wearable Computing History

Depending on how we define the concept of Wearable Computing, how long it has been around can range from hundreds of years to several decades. At some level, even a wrist watch can be considered to be a computing device—it computes the time for you and is always on, ready, and available. But the term computing device or computer is characterized today by the existence of a processor (CPU) within—which is missing in the case of, say, a mechanical watch. At the same time, the earliest computers were mechanical and electromechanical in nature. All this can make it harder to arrive at one definite answer that is widely acceptable.

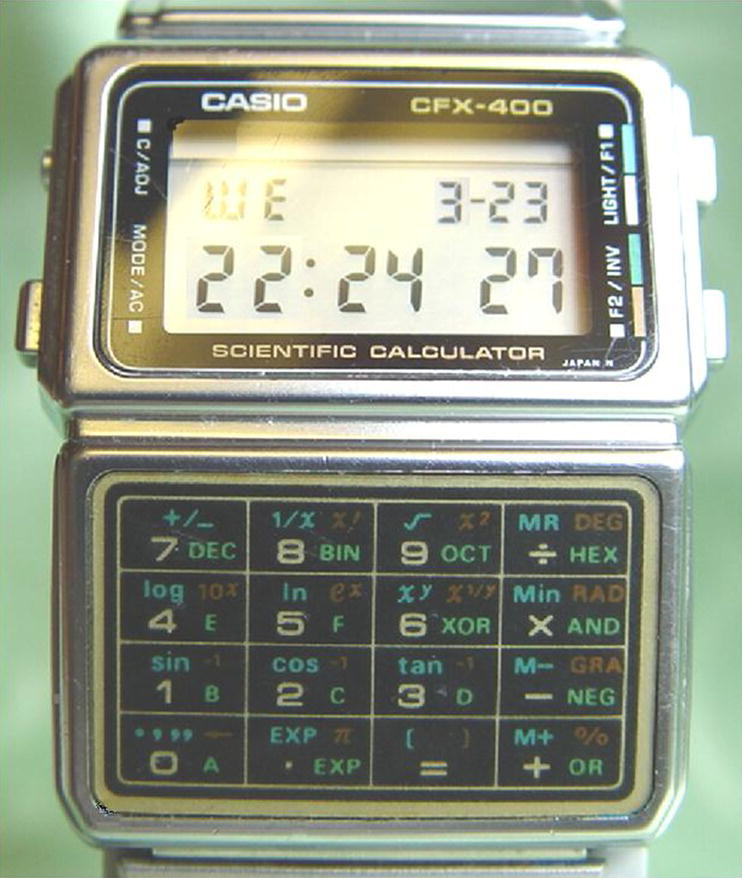

Since the 1980s, the consumer marketplace has certainly seen sophisticated digital watches with scientific calculation, games, audio, and video capabilities. Nelsonic Industries, a US company, produced a “game” watch in the 1980s, which served both as a timepiece and as an electronic game device. Casio, the Japanese electronics company, offered a wide variety of digital watch models including the Casio CFX-400 scientific calculator watch as well as the Casio Databank CD-40, both of which were introduced in the early to mid-1980s (Figure 2-1).

Figure 2-1 Casio CFX-400 watch manufactured circa 1985.

Attribution: By Septagram at en.wikipedia [Public domain], from Wikimedia Commons.

Sophisticated, modern Wearable Computing has been in use in military, industrial, research, and educational settings for the last few decades. For instance, headgear with displays has been used in military and space applications. Similarly, head-mounted displays have been used by surgeons when performing advanced surgeries.

Today, the overall cloud-based ecosystem, economic factors, and also the human/user expectation from mobile devices have evolved to a point where wearables have great potential in the mass consumer market.

2.1.1 Wearable Computing Pioneers

There are several individuals who may be credited as pioneers of modern Wearable Computing. The following is a partial listing of such computer scientists, along with brief highlights of their contributions.

Edward O. Thorp and Claude Shannon, mathematicians who worked together at MIT, have been widely credited with designing the first Wearable Computer in the early 1960s. Thorp pioneered the application of probability theory in various arenas, including hedge fund techniques in financial markets and the mathematics of gambling. Thorp and Shannon developed the first Wearable Computer and used it as a gambling aid when playing the game of roulette, purportedly as a purely academic exercise (at a casino in Las Vegas, Nevada, where gambling was and continues to be legal). Subsequently, laws in the state of Nevada were revised to outlaw the use of wearables and other computing devices to predict the outcomes in betting and gambling.

Steve Mann is a Ph.D. researcher and inventor whose work since the 1980s has contributed immensely toward modern Wearable Computing technologies. Mann designed a backpack-mounted computer to control photographic equipment in the early 1980s while still in high school. Mann was one of the founders of the Wearable Computing Lab at MIT, and he continues to be an active contributor in the field of Wearable Computing.

Thad Starner, a Ph.D. researcher and professor, has been actively contributing to the field of Wearable Computing and the associated topics of contextual awareness, pattern recognition, human–computer interaction (HCI), and artificial intelligence since the 1990s. Starner was one of the founders of MIT’s Wearable Computing Project and has had a key role in the Google Glass project.

Edgar Matias and Mike Ruicci from the University of Toronto have been credited with building a wrist computer in the 1990s. Mik Lamming and Mike Flynn at Xerox PARC demonstrated in the 1990s a wearable device, the Forget-me-not, that could record interactions with people and store them for later reference. Alex “Sandy” Pentland, a Ph.D. computer scientist, is an active faculty member at the MIT Media Lab and one of the pioneers of wearables and data science. His work has focused on wearables and on human and social dynamics studied through data analytics.

As with any arena of complex technology and research, the knowledge base and ecosystem have been built based on the contributions from a multitude of dedicated researchers, professionals, enthusiasts, and hobbyists.

2.1.2 Academic Research at Various Universities

Wearable Computing has been a theoretical subject of academic research for several decades. A wide variety of pioneering work has been done at the MIT Media Lab, which focuses on the convergence of technology, multimedia, science, art, and design. HCI and wearable technologies are some of its core arenas of research. Columbia University too has pioneered work on augmented reality since the 1990s. Numerous universities continue to conduct research in the field of Wearable Computing and the related fields of virtual reality and augmented reality. Many of the innovations seen in consumer market wearables today originated in the academic research of years and decades ago.

At a fundamental level, Wearable Computing research is closely related to both virtual reality and augmented reality. “Virtual reality” provides the user with a simulated, unreal, or virtual sensory input.

Augmented reality on the other hand enhances the real world with additional computer-generated information such as audio, video, and other data, such that the user’s current perception of reality is enhanced. Augmented reality provides additional input that overlays and co-exists with the user’s real-time experience of their real-world environment. Augmented reality often uses computer vision and object recognition. Using virtual reality, NASA astronauts can “experience” being at a beach during the long periods of time in space, and get some relief from the psychological and physical demands of being in space, away from their home planet. The same astronauts benefit from augmented reality-based systems that are typically used while carrying out critical physical missions and maneuvers in space.

2.2 Internet of Things (IoT) and Wearables

The Internet of Things (IoT) may be thought of as an interconnected ecosystem wherein diverse computing devices, large and small, interconnect, collaborate, and cooperate with one another. The IoT represents the proliferation of small embedded computing devices that interconnect with the Internet. “Things” in the IoT include diverse devices such as health monitoring sensors, automobile sensors, pet biochips, indoor plant moisture sensors, home appliances, industrial and smart factory equipment, smart transportation systems, agriculture-related sensors, and so on. There are estimated to be millions of IoT devices today, and according to market research and forecasts by the Harvard Business Review, 28 billion such devices are expected to join the IoT by the year 2020 (Figure 2-2).

Figure 2-2 Internet of things.

Attribution: “Internet of Things” by Wilgengebroed on Flickr, licensed under Creative Commons 2.0.

The world of IoT spans the arenas of consumer, industrial, transportation, safety, farming, and more. Environmental monitoring, infrastructure management, manufacturing and asset tracking, inventory control, safety monitoring, medical and health care, transport systems, fleet management, resource optimization and energy management, etc. are some examples of the application of IoT technology.

A discussion on IoT often brings up the topic of wearables and vice versa. IoT devices include wearables; some of the devices, that is, “things” in the world of IoT, happen to be wearable devices. Some wearable devices may interact with non-wearable IoT devices in order to share information or instructions depending on the needs of the applications.

Many definitions of IoT devices emphasize the aspect of their direct network addressability on the Internet, as in an independent IP address directly on the Internet. In practice, however, it is not necessary for each IoT device to have independent connectivity and addressability on the Internet. This is because IoT devices typically have access to other local networks, which in turn have the capability to connect to the Internet. With the cloud serving as an intermediary, it is possible for IoT devices to connect as client devices to the Internet-based cloud endpoints and maintain long-lived connections over which they can receive data and commands (without needing to possess their independent IP address directly on the Internet). Devices worn on the user’s body, for example, have access to the user’s phone via Bluetooth, which in turn typically has carrier service. Smart thermostats, washers, dryers, refrigerators, sprinkler systems, etc. in a user’s home connect to the local home network over Wi-Fi or wire. Devices in an industrial environment often have a local network. In all these instances, proximate devices and networks can pair, tether, and interconnect in order for the IoT-type devices to bridge the network path to the Internet and connect to Internet cloud-based endpoints as connected client devices and thereby attain two-way communication capabilities.

Internet and phone service providers typically have a business model that benefits from provisioning Internet access for individual devices including IoT devices independently—with the associated costs to the consumer. There are numerous disadvantages of having smaller devices directly on the Internet with their own IP address. For one, they are more susceptible to Internet cyberattacks and hacking. Also, it is typically more expensive to have to provision and provide direct connectivity for every IoT device independently. Traditionally, we have had devices within the local network that do not have their own Internet address, which can remain connected to the Internet all the time—as client devices. For example, personal computing devices within a local home network connect as client devices and have the ability to send and receive information.

The use of the cloud as an intermediary helps devices provide remote networked services without exposing the devices as Internet endpoints. As an example, Google Cloud Print allows you to print from anywhere over the network. The printer is connected to your local network, through which it connects as a client device to Google's cloud on the Internet. It is not exposed as a device on the Internet with its own public IP address, and yet it services requests to print documents over the Internet with the cloud as the intermediary.
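The cloud-as-intermediary pattern described above can be sketched in a few lines of Python. This is a minimal in-memory simulation rather than a real network stack: the names `CloudRelay`, `Device`, `connect`, `send_command`, and `next_command` are hypothetical and do not correspond to any particular vendor's API. The point it illustrates is that the device always initiates the connection, while remote callers only ever talk to the relay.

```python
from collections import defaultdict, deque


class CloudRelay:
    """Hypothetical cloud endpoint: the only party with a public address."""

    def __init__(self):
        # device_id -> commands waiting on that device's open connection
        self._queues = defaultdict(deque)
        self._connected = set()

    def connect(self, device_id):
        # Called BY the device (outbound); models a long-lived client connection.
        self._connected.add(device_id)

    def send_command(self, device_id, command):
        # Called by a remote user; the relay never dials in to the device.
        if device_id not in self._connected:
            return False
        self._queues[device_id].append(command)
        return True

    def next_command(self, device_id):
        # The device reads pending commands over the connection it opened.
        queue = self._queues[device_id]
        return queue.popleft() if queue else None


class Device:
    """Hypothetical device on a private network with no public IP address."""

    def __init__(self, device_id, relay):
        self.device_id = device_id
        self.relay = relay
        self.handled = []

    def start(self):
        self.relay.connect(self.device_id)

    def poll(self):
        # Drain every command currently waiting for this device.
        while (command := self.relay.next_command(self.device_id)) is not None:
            self.handled.append(command)


relay = CloudRelay()
printer = Device("home-printer", relay)
printer.start()
relay.send_command("home-printer", "print report.pdf")
printer.poll()
print(printer.handled)
```

In a real deployment the queue would be replaced by a persistent connection (for example, a socket the device keeps open), but the direction of connection setup stays the same.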

2.2.1 Machine to Machine (M2M)

The term Machine to Machine (M2M) is commonly used alongside the term IoT, and the two overlap significantly in concepts and applications. M2M refers to the direct two-way connectivity between a computing device and a “fellow” computing device. In the world of M2M, devices are typically small, have their own IP address directly on the Internet, and act as peers on the network. M2M was originally used in specialized industrial segments including automation, instrumentation, and process control.

2.3 Wearables’ Mass Market Enablers

Today, a coincidence of many factors has paved the way for the arrival of wearables in the mass consumer market. Manufacturing economies of scale have made small, powerful devices more affordable. Human dependence on and appreciation of mobile computing have expanded the possibilities for wearable devices to provide additional value to users.

2.3.1 “ARM-ed” Revolution

The ARM family of processors powers the vast majority of mobile devices such as mobile phones, tablets, and small embedded devices, including the majority of IoT devices. ARM processors also power set-top boxes, televisions, and netbook computers. ARM family processors have typically required significantly less power than the x86 family of processors. ARM processors are light, portable, and small in size. The success of mobile devices has hinged on the availability of low-cost, lightweight, compact, and power-efficient ARM processors. ARM has played a key role in recent years in the success of affordable mobile devices that can run on battery power for hours on end.

Over 50 billion ARM processors have been manufactured as of 2014, and this appears to be only the beginning. The availability of wearable devices hinges for the most part on ARM-based processors and the system on chip (SoC) designs covered in the next section. Incidentally, ARM processors have recently made an entry into the market segment of servers that reside in data centers on the cloud.

ARM Holdings plc is the British company that bears the ARM name and develops the architecture and design of processors. ARM Holdings licenses the technology to other companies for purposes of production and manufacturing. ARM licensees include companies such as Qualcomm, Broadcom, Marvell, Freescale, Atmel, Nvidia, Texas Instruments, NXP, STMicroelectronics, Applied Micro, AMD, Samsung, and Apple. These are the companies that make ARM processors or chips; some of them also manufacture and market mobile devices.

2.3.1.1 ARM Alternatives

MIPS from MIPS Technologies, Inc. is an instruction set that competes with the ARM family of processors. MIPS has been used by embedded and real-time Linux-based operating systems for decades. Android was ported to MIPS in 2009. MIPS Technologies, Inc. was acquired by Imagination Technologies, a UK-based processor R&D and licensing company that is widely known for its graphics processors. Several prominent companies such as Broadcom offer both MIPS- and ARM-based processors/SoCs for various market segments.

Intel, which is well known for its highly popular x86 family of processors, has been working on creating low-cost, low-power x86 family processors known under monikers such as “Atom,” “Quark,” “Edison,” etc., which are aimed at the IoT and wearable market segments.

2.3.2 System on Chip (SoC)

Although the CPU is the heart of any computing device, the CPU works not in isolation but in conjunction with several other key components such as memory, graphics processing unit (GPU), audio chips, wireless radios (Wi-Fi, 4G), USB controllers, and so on. A system on chip (SoC) integrates the CPU with the other key components such as memory, GPU, USB controller, wireless radio, etc. into a single integrated chip.

While a computer cannot be built based on a single CPU chip alone, it can be built based on a single SoC chip. In recent years, the trend has been in the direction of SoC-based consumer devices, coincident with the proliferation of mobile devices and the success of the ARM-based processors.

The SoC has the advantage of its highly compact size, lower cost, and less wiring. SoCs have now begun to appear inside larger systems such as netbooks and even server systems. The limitation of the SoC can be that it is not conducive toward the upgrade or replacement of individual constituent components, as they have all been integrated upfront.

The mass production and availability of low-cost wearable devices hinges on the availability of SoCs in an innovative and competitive market. Today, there are well over 50 SoC manufacturers who manufacture SoCs based on ARM-, MIPS-, or Intel-based processors.

2.3.3 Human Dependence on Computing

Consumer interaction with and dependence on computing started with the personal computer (PC), but it was the success of the smartphone that deepened this dependence on and appreciation of computing devices on a more engaging, intimate, and somewhat “constant” basis. Now that the smartphone has become an integral part of most users’ lives and users appreciate the value of computing in their daily lives, there is greater interest in, and potential value from, the enhanced functions and specialized use cases that wearables can provide.

2.3.4 Smartphone Extensions

In general, smartphones have tended to provide a software solution and a software-based replacement for many hardware gadgets. At first, and since well over a decade ago, the mass adoption of feature phones and later smartphones tended to cause a progressive decline in the use of hardware devices such as digital watches, alarm clocks, cameras, scanners, simple level instruments, and so much more. In a way and to some extent, the introduction of wearables into the consumer computing ecosystem today seems to run contrary to this trend. Today, our appreciation of and dependence on the smartphone paves the way for devices such as a wearable smart watch. Users experience some of the limitations of the much utilized smartphone—in terms of their intrusiveness toward the user’s real-world activities—and this helps justify wearable devices, some of which aim to elegantly extend the smartphone and act somewhat like an accessory and extension of the smartphone. Still other wearables aim to provide specialized functionality such as fitness sensors and health monitoring.

2.3.5 Sensors

Many smartphones have sensors for motion, position, environment (temperature, pressure, humidity, and light), and so on. Fitness devices have sensors for fitness-related parameters such as heart rate and step count. Sensors play a key role in the world of wearables and IoT. The inference of the user’s context and access to fitness readings, both of which tend to make wearable applications more useful to consumers, are possible due to the availability of low-cost, power-efficient sensors.

2.3.5.1 Micro-Electro-Mechanical Systems (MEMS) Sensors

Much like compact, power-efficient, and economically priced embedded computing devices are made possible in the consumer market by the availability of a wide variety of SoCs, the compact, power-efficient, and economically priced sensors are made possible predominantly by micro-electro-mechanical systems (MEMS) technology. MEMS devices have a size in the range of 20 micrometers to 1 millimeter and are made up of components that have a size in the range of 1–100 micrometers (0.001–0.1 millimeters). MEMS devices typically consist of a central unit as well as micro-sensors and micro-actuators that interact with the surroundings. MEMS devices can serve the functions of sensors and actuators. MEMS at the smaller scale merges into nano-electro-mechanical systems (NEMS). The nanoscale refers to structures in the order of 1–100 nanometers. One nanometer is one billionth of a meter.
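The size ranges quoted above span several orders of magnitude, so a short worked conversion may help. The sketch below only restates the ranges from the text in code; the function names `um_to_mm` and `classify_nm` are hypothetical, and sizes that fall between the two quoted ranges are deliberately left unclassified.

```python
# Unit relationships (exact, by SI definition).
NM_PER_UM = 1_000  # nanometers in one micrometer
UM_PER_MM = 1_000  # micrometers in one millimeter


def um_to_mm(micrometers):
    """Convert micrometers to millimeters."""
    return micrometers / UM_PER_MM


def classify_nm(size_nm):
    """Classify a feature size (whole nanometers) using the ranges in the text."""
    if 1 <= size_nm <= 100:
        return "nanoscale (NEMS)"
    if 1 * NM_PER_UM <= size_nm <= 100 * NM_PER_UM:
        return "MEMS component scale"
    return "outside the quoted ranges"


# 1-100 micrometers corresponds to 0.001-0.1 millimeters.
print(um_to_mm(1), um_to_mm(100))
print(classify_nm(50))               # a 50 nm structure
print(classify_nm(20 * NM_PER_UM))   # a 20 micrometer component
```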

MEMS technology is found in accelerometers, gyroscopes, touch sensors, temperature sensors, humidity sensors, microphones, health and medical sensors, inkjet printers, game controllers, automobiles (dynamic stability control and tire pressure sensors), hard disks (to “park” the head when free fall is detected, in order to protect the disk and prevent damage and data loss), and much more.

2.4 Human–Computer Interface and Human–Computer Relationship

Wearable interaction represents a different flavor of HCI, that is, of the human–computer interface. Both the human–computer interface and the human–computer “relationship” have been evolving over time.

2.4.1 Human–Computer Interface: Over the Years

The human–computer interface refers to the interaction between human and computer. The computer receives input data from the human via various mechanisms such as keyboard, soft keyboard, mouse, touch, gestures, speech, audio, video, vision, and so on. The computer responds with output data such as screen displays, printouts, audio, video, and more.

Until the 1970s, most of the input and output, that is, the interaction between human and computer, was based on “punch cards.” A punch card is a thick paper card that encodes programs and data. Computer scientists, programmers, and operators used a keypunch, a typewriter-like device, to write data onto punch cards, which were then fed to the mainframe computer via a punch card reader. This was the era of the “mainframe” computer that was used in the corporate, industrial, military, and academic worlds. This punch card-based interaction kept the use of computers limited to computer scientists, programmers, and operators and as far as possible from the consumer. At that time, consumers typically had no interaction with or direct use of such computers or any computers at all. The advent of the PC era, which began in the late 1970s and 1980s, changed that model, slowly but profoundly. Incidentally, as of 2012, some voting machines in the United States reportedly still used punch card-based mainframe computers.

In the PC era of the 1980s through to the recent decade, the computer and the consumer interacted via the keyboard, monitor, and mouse and to some degree via speakers and microphones. In this PC era, the user’s computer was located in their home or office, perched on their desk. Soon, the laptop arrived and was easier to carry around for business and leisure.

In the post-PC world of today—the era of mobile and cloud—consumers interact with their mobile computing devices via intuitive mechanisms such as soft keyboards, touch, gestures, voice, audio, video, and so on. Computing resources on the cloud are also typically an important part of this interaction; however, these cloud computing resources are somewhat abstracted out in terms of their location, specification, and power needs. The consumer cares about the quality of experience and service but typically neither knows nor particularly cares where the cloud-based computers that serve their needs physically reside nor what their specifications of CPU, memory, etc. look like. In retrospect, the PC was not all that personal since it was not that close at hand, compared to today’s mobile devices which are close at hand all day and thus are more personal.

2.4.2 Human Computer Interaction (HCI): Demand and Suggest

Two important paradigms in modern human computer interaction (HCI) design are “Demand” and “Suggest,” which are covered in this section.

2.4.2.1 Demand Paradigm

In general, the HCI certainly started out in a “Demand” paradigm wherein the human demands some information or action from the computer, while the computer provides the information or performs the action in response to the “Demand.”

The Demand Paradigm has historically been and still is prevalent in the world today. In the Demand Paradigm, the human is in the driver’s seat and asks the computer for information, while the computer “dumbly” responds. The Demand model in a way underscores a somewhat master–slave relationship between human and computer.

Certainly, the human is the master here, and humans created computers to serve and assist them. Yet the limitation of the Demand model lies in the fact that mundane actions need to be initiated by the human every time, and it is somewhat of a “manual” process for a human to have to remember to initiate something that might be predictable. It is often predictable that a certain Demand is highly likely at a certain time and place or context, and in such a scenario, the human ends up having to ask “manually” for the obvious.

Humans have gotten accustomed to the Demand model, which entails having to remember to perform various tasks such as monitoring their stock portfolio or keeping updated about new homes that have arrived on the market during a home search and home buying project. It can cause fatigue if the human keeps on aggressively demanding information during a period that the information has not changed (e.g., the stock portfolio value has been steady; and no new homes arrived on the market during this period of aggressive “demands”). On the other hand, the human can forget to demand information and miss out on being updated about changes in the portfolio value or new home arrivals on the market.

When the user is on the beach or hiking, for instance, the Demand model requires the user to remember to frequently “demand” information such as news of shark attacks, tides, crimes or violence, fires, or thunderstorms, in order to keep abreast of real-world events pertinent to their current activity and context. Many or most of the demands will tend to return no significant new information, and this is a drawback of the Demand model.

2.4.2.2 Suggest Paradigm

In the Suggest paradigm, the human allows the smart and intelligent agent-based automation to understand the context, analyze the user’s history data as well as other relevant general data, and make reasonable inferences in order to proactively provide information or suggestions that have a high likelihood of being useful, timely, and relevant to the user. In the Suggest model, the user is notified automatically when the computer, driven by an intelligent agent-based system, has detected information and scenarios that justify a suggestion or notification or alarm to the user.

In the case of the stock portfolio and home search examples earlier, the intelligent agent provides suggestions/notifications when something noteworthy and significant has occurred, such as a change in the stock portfolio beyond a threshold or when a new home has arrived on the market that strongly matches the user’s known search criteria.
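The threshold idea in the stock portfolio example can be illustrated with a small sketch. Everything here is an assumption made for illustration: the function names `should_notify` and `suggest`, the 5 percent default threshold, and the sample values are hypothetical, and a real agent would draw on far richer context than a single series of numbers.

```python
def should_notify(last_reported, current, threshold_pct):
    """Return True when the relative change meets or exceeds the threshold."""
    if last_reported == 0:
        return current != 0
    change_pct = abs(current - last_reported) / abs(last_reported) * 100
    return change_pct >= threshold_pct


def suggest(readings, threshold_pct=5.0):
    """Return only the readings that justify a notification to the user."""
    if not readings:
        return []
    notifications = []
    last_reported = readings[0]
    for value in readings[1:]:
        if should_notify(last_reported, value, threshold_pct):
            notifications.append(value)
            # Measure the next change from the value the user last saw.
            last_reported = value
    return notifications


# Hypothetical portfolio values sampled over time.
portfolio = [1000.0, 1002.0, 998.0, 1060.0, 1061.0, 990.0]
print(suggest(portfolio))  # [1060.0, 990.0]
```

Of the five updates, only two cross the threshold, so the user is interrupted twice instead of having to check five times.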

2.4.2.3 Demand or Suggest?

In the Demand-only model, the human on the beach or on a hike will need to repeatedly search the news manually for any recent shark attacks, thunderstorms, fires, and so on in order to keep abreast of such information. This can distract and detract from enjoying their real-world activities. In contrast to the Demand model, when the sophisticated Suggest model is in place, the user can relax and enjoy their real-world activity and be notified automatically when something noteworthy occurs. This can be accomplished in various scenarios such as by using the user’s current location (e.g., Laguna Beach), the user’s current context (on the beach with family), and automated scanning for current news and weather about the location, inferring the sentiment (such as danger), and then making the determination that an alert needs to be pushed out to the user. Such intelligent agent cloud-based computing scales well for a large set of users since the effort of such computation is often expended not for an individual user but for a set of users who are in the “same boat” or on the same beach at a given point in time.
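The scaling argument, that the analysis is done once per location rather than once per user, can be sketched as follows. The function `alerts_by_location`, the user names, and the contents of the hazard feed are all hypothetical; the feed stands in for whatever news and weather analysis a real system would perform.

```python
from collections import defaultdict


def alerts_by_location(user_locations, hazard_feed):
    """Evaluate hazards once per location, then fan out to the users there."""
    users_at = defaultdict(list)
    for user, location in user_locations.items():
        users_at[location].append(user)

    alerts = {}
    for location, users in users_at.items():
        # One lookup per location, however many users are present.
        hazards = hazard_feed.get(location, [])
        if hazards:
            for user in users:
                alerts[user] = hazards
    return alerts


# Hypothetical data: two users share a beach, one is elsewhere.
user_locations = {
    "ana": "Laguna Beach",
    "ben": "Laguna Beach",
    "cy": "Downtown",
}
hazard_feed = {"Laguna Beach": ["thunderstorm warning"]}

alerts = alerts_by_location(user_locations, hazard_feed)
print(sorted(alerts))
```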

2.4.2.4 Demand and Suggest: A Healthy Balance

Demand and Suggest are not mutually exclusive; rather, the new Suggest model ideally coexists with the Demand model. HCI has evolved over these years of mass adoption; it has matured to a point today where a healthy balance and mix of the Demand and Suggest paradigms makes an optimal interaction between human and computer possible. Such a mix saves time, is more efficient, and is ideally based on adaptive algorithms: whenever the user does not enthusiastically consume the routine suggestions of particular categories, back-off policies kick in to make them less frequent.
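One plausible form of such a back-off policy is exponential back-off per suggestion category. The text does not prescribe a particular algorithm, so the doubling rule, the class name `SuggestionBackoff`, and the interval limits below are assumptions chosen for illustration.

```python
class SuggestionBackoff:
    """Hypothetical per-category back-off: ignored suggestions become rarer."""

    def __init__(self, base_interval=1, max_interval=64):
        self.base_interval = base_interval  # minimum gap between suggestions
        self.max_interval = max_interval    # cap so a category never vanishes
        self._interval = {}

    def interval(self, category):
        """Current gap (in arbitrary time units) for this category."""
        return self._interval.get(category, self.base_interval)

    def record(self, category, consumed):
        """Update the gap after the user consumed or ignored a suggestion."""
        if consumed:
            # Interest shown: return to the most frequent schedule.
            self._interval[category] = self.base_interval
        else:
            # Ignored: double the gap, up to the cap.
            doubled = self.interval(category) * 2
            self._interval[category] = min(doubled, self.max_interval)


policy = SuggestionBackoff()
for _ in range(3):
    policy.record("traffic", consumed=False)
print(policy.interval("traffic"))  # 8
policy.record("traffic", consumed=True)
print(policy.interval("traffic"))  # 1
```

Resetting fully on a single consumed suggestion is a simple design choice; a gentler policy could halve the interval instead.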

2.4.3 Evolution of the Human–Computer Relationship

The human–computer relationship has evolved over time in the direction of “progressively deepening intimacy.” For consumers, the computer itself has become smaller in size and weight, and its shape has become more elegant. From being located on a desk in front of the human, at arm’s length, computing devices moved closer by being perched on human laps and closer still by being housed in human pockets and purses or held in human hands for extended periods of time. The next step in the progression of this trend brings us to computing devices that are in contact with the human body for extended periods of time.

2.5 A Multi-Device World

Today, a consumer interacts with a wide variety of personal computing devices. There are often several computing devices per user in developed and developing nations. Such computing devices are located in varying degrees of proximity. Some devices may be worn on the body, such as a smart watch or sensor band, while other devices may be carried in the pocket or handbag. Some devices may be located in the home, some in the automobile, and so on.

2.5.1 Spatial Scope of Computing: Devices Near and Devices Far

In a world of a multitude of devices, networks, and services that a user interacts with, we find that there are some computers and networks that reside closer to the user and some progressively farther away from the user. Proximate networks include interconnected devices close to the user, while wider networks include a company or college campus, a citywide metro network, the Internet, and so on.

By organizing and categorizing the devices and networks in this manner and seeing computing from this perspective, it is easier to see how these various devices and networks can be made to work together and provide synergistic value.

In Figure 2-3, the smaller circles or ellipses represent devices and networks that are “proximate”—physically situated nearer to the user—such as the user’s mobile phone, tablet, and smart watch. The ever-expanding larger ellipses represent computing resources such as cloud-based computing resources that reside farther away from the user, which the user nonetheless interacts with—via their proximate devices. Not all devices that are spatially closest to the user will necessarily connect to the Internet—which is why we find that the smallest ellipse is not wholly contained within the larger ones.

Figure 2-3 Spatial scope of computing—devices near, devices far.

In such a nomenclature and categorization, the devices that the user may wear on their person are denoted as the body area network (BAN), while devices that reside within the user’s home are denoted as the home area network (HAN).

2.5.2 Body Area Network (BAN)

A Body Area Network (BAN) is a wireless network of wearable computing devices that are centered around the human body. Such devices may be surface mounted or even embedded inside the body and are typically connected wirelessly over this BAN to a mobile smartphone or tablet device. The mobile devices can collect data from the body-worn devices and store such data; they may also, in turn, interconnect the BAN to the Internet. Thus, it is technically feasible for such body sensor data to be made available for remote monitoring by medical systems and so on.
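The collect, store, and forward role of the phone in a BAN can be sketched as below. The class `BanHub`, its method names, and the sensor identifiers are hypothetical, and the `uploaded` list merely stands in for a remote monitoring service; no real Bluetooth or network calls are made.

```python
class BanHub:
    """Hypothetical phone-side hub for a body area network."""

    def __init__(self):
        self.stored = []    # readings kept locally on the phone
        self.uploaded = []  # stands in for a remote monitoring service

    def receive(self, sensor_id, value):
        """Accept a reading from a body-worn sensor and store it locally."""
        self.stored.append((sensor_id, value))

    def sync(self, online):
        """Forward stored readings when the phone has Internet access."""
        if not online:
            return 0
        count = len(self.stored)
        self.uploaded.extend(self.stored)
        self.stored.clear()
        return count


hub = BanHub()
hub.receive("heart-rate-band", 62)
hub.receive("skin-temperature", 36.6)
print(hub.sync(online=False))  # nothing forwarded while offline
print(hub.sync(online=True))   # both readings forwarded
```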

Also, consumer fitness sensors and applications can help the user get insight into the various commonly understood metrics such as temperature, resting heart rate, average resting heart rate, and so on. Consumers can potentially share their fitness data with their doctors and health care providers. With the mass consumer usage of bodily fitness sensors in conjunction with cloud-based storage, there is potential for the power of large-scale computation to provide significant prediction, inferences, and forecasting. There is promise of a path to the much needed, affordable, and proactive health care—via use of Internet-based technology. Medical devices and applications are regulated by particular governmental agencies and need to comply with applicable laws, and these generally vary by country. Medical devices and applications are distinct from fitness sensor devices and applications, especially from a legal and regulatory perspective.

Some medical devices and applications merely monitor particular bodily parameters and are technologically quite similar to fitness devices and applications. Legally, however, they are distinct: fitness devices and applications are not regulated by the governmental agencies that regulate medical devices and applications.

Other medical devices and applications regulate, actuate, or control some bodily parameters and functions and these are significantly distinct from fitness devices/applications, which do not control or actuate any bodily function. In this sub-arena, medical devices and fitness devices are technologically dissimilar.

The rate at which technology is evolving makes it somewhat difficult for laws and legislation to catch up or keep up. Legislation can often become the bottleneck in the path of innovation and progress in the health care arena. There is a lot of promise and potential for a more fundamental transformation in the delivery, management, and cost-effectiveness of health care that leverages the technological advances of lower-cost sensors, diagnostic software Apps that can run on generic lower-cost handheld (phone and tablet) devices, and beyond.

2.5.3 Personal Area Network (PAN)

The personal area network (PAN) is a network of interconnected devices that are centered around the user’s living space; it includes devices that users carry with them including mobile devices such as smartphones, tablets, Chromebooks, netbooks, etc.; devices on the desk and devices in the home including home automation; and smart networked devices such as washers, dryers, refrigerators, and so on. The PAN can be considered to include the BAN, but it can also be relevant to think of the PAN as distinct from the BAN. In any case, the PAN and the BAN are spatially proximate and have opportunity for meaningful interconnection.

Bluetooth and IrDA are two of the common technologies that help interconnect the BAN and the PAN. IrDA, which has been around since the 1990s, is an industry standard and a set of protocols that address communication and data transfer over the “last one meter” by using infrared light. Bluetooth is a set of protocols that addresses wireless communication over a distance of a few feet. Bluetooth Low Energy (LE), also known as Bluetooth Smart, aims to reduce power consumption. A recent update to the Bluetooth specifications (version 4.2) addresses the direct connectivity of Bluetooth Smart devices to the Internet.

2.5.4 Home Area Network (HAN)

The Home Area Network (HAN) is a local area network (LAN) that interconnects devices that are within the home or within close proximity to the home. Such a network may include mobile devices and wired computers, televisions and entertainment devices, printers, scanners, thermostats, lamps, sprinklers, and so on. Most Internet service providers provide one IP address for the external network facing router. All the devices within the network typically have a local private IP address. The router that connects to the Internet service providers’ network represents the boundary at which the Internet service provider’s network ends and the home network begins. While the user is at home, the HAN may typically include the PAN and the BAN.

Network address translation (NAT) is a technique that hides the local IP addresses of devices behind a single device such as a router, which may have a public/external IP address. NAT is closely associated with IP masquerading, wherein one device such as a router stands in for several devices behind it; those devices have local private IP addresses but appear to the external public network as a single public/external IP address. Such devices are able to initiate connections to the Internet, but are not directly addressable on the Internet. Most home networks use a NAT-based arrangement.
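The address rewriting described above can be illustrated with a toy translation table. This is only a sketch under stated assumptions: the class name NatRouter, the starting port 40000, and the sample addresses are hypothetical, and a real router also tracks protocols, remote endpoints, and timeouts.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


@dataclass
class NatRouter:
    """Toy NAT/masquerading table (illustrative only, not a real network stack)."""
    public_ip: str
    next_port: int = 40000  # hypothetical start of the router's external port range
    outbound: Dict[Endpoint, int] = field(default_factory=dict)  # private endpoint -> public port
    inbound: Dict[int, Endpoint] = field(default_factory=dict)   # public port -> private endpoint

    def translate_outbound(self, private_src: Endpoint) -> Endpoint:
        """Rewrite the source of an outgoing packet to the router's public address."""
        if private_src not in self.outbound:
            # First packet from this device: allocate a public port and remember it.
            self.outbound[private_src] = self.next_port
            self.inbound[self.next_port] = private_src
            self.next_port += 1
        return (self.public_ip, self.outbound[private_src])

    def translate_inbound(self, public_port: int) -> Optional[Endpoint]:
        """Map a reply back to the private device, or None if no device opened that port."""
        return self.inbound.get(public_port)


router = NatRouter(public_ip="203.0.113.7")  # address from a documentation-only range
thermostat = ("192.168.1.20", 5000)
laptop = ("192.168.1.31", 5000)

print(router.translate_outbound(thermostat))  # both devices share the one public IP
print(router.translate_outbound(laptop))
print(router.translate_inbound(49999))        # unsolicited traffic has no mapping
```

The last call returns None, which mirrors the point made above: devices behind the router can initiate connections, but they are not directly addressable from the Internet.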

2.5.5 Automobile Network

There are devices and networks associated with our automobiles such as entertainment, locks, keys, and diagnostics. These form part of the automobile network and may overlap with the home network, while the automobile is parked within range of the home wireless network. The PAN and the BAN can become part of this automobile network when the user is within the vehicle.

2.5.5.1 Controller Area Network (CAN)

There are formal protocols related to vehicles—a vehicle bus is a specialized internal communications network that interconnects components inside a vehicle (e.g., automobile, bus, train, industrial or agricultural vehicle, ship, or aircraft). Special requirements for vehicle control such as assurance of message delivery, nonconflicting messages, minimum time of delivery, and EMF noise resilience, as well as redundant routing and other characteristics, mandate the use of less common networking protocols. Protocols include controller area network (CAN), local interconnect network (LIN), and others. CAN bus is a vehicle bus standard designed to allow microcontrollers and devices to communicate with each other within a vehicle without a host computer. CAN bus is a message-based protocol, designed specifically for automotive applications but now also used in other areas such as aerospace, maritime, industrial automation, and medical equipment.
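One way CAN keeps simultaneous messages from conflicting is bitwise arbitration on the message identifier: a 0 bit is dominant on the bus, so the frame with the lowest identifier wins and the other nodes retry later. The following is a simplified sketch of that rule, assuming standard 11-bit identifiers; the function name and the sample identifiers are hypothetical, and real controllers perform this in hardware.

```python
from typing import List

ID_BITS = 11  # standard CAN frames carry an 11-bit identifier


def arbitrate(contending_ids: List[int]) -> int:
    """Return the identifier that wins bitwise arbitration.

    Identifier bits are sent most significant bit first. A 0 bit is dominant
    and a 1 bit is recessive, so a node that sends 1 but reads 0 backs off.
    """
    if not contending_ids:
        raise ValueError("at least one identifier is required")
    if any(not 0 <= can_id < (1 << ID_BITS) for can_id in contending_ids):
        raise ValueError("identifiers must fit in 11 bits")

    remaining = list(contending_ids)
    for bit in range(ID_BITS - 1, -1, -1):
        sent = [(can_id >> bit) & 1 for can_id in remaining]
        bus_level = min(sent)  # any dominant 0 pulls the shared bus to 0
        # Nodes whose bit differs from the bus level lose and stop transmitting.
        remaining = [can_id for can_id, b in zip(remaining, sent) if b == bus_level]
    return remaining[0]


# Hypothetical identifiers for three nodes that start transmitting at once.
winner = arbitrate([0x65A, 0x123, 0x3FF])
print(hex(winner))
```

Because lower identifiers always win, designers can assign them to the most time-critical messages.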

2.5.6 Near-Me Area Network (NAN)

A near-me area network (NAN) is a logical communication network that focuses on communication among wireless devices in close proximity. Unlike LANs, in which the devices are in the same network segment and share the same broadcast domain, the devices in a NAN can belong to different proprietary network infrastructures (e.g., different mobile carriers). So, even though two devices are geographically close, the communication path between them might, in fact, traverse a long distance, going from a LAN, through the Internet, and to another LAN. NAN applications focus on two-way communications among people within a certain proximity to each other.

2.5.7 Campus Area Network

A campus area network is a network made of LANs that are interconnected within a geographical area and owned by a single entity such as a corporation or a university. Such a network typically has various relevant network services.

2.5.8 Metro Area Network

A metro area network is a network that spans a metropolitan area such as an entire city and is managed by a single, coordinating organization. Most networks are now aligning with the Ethernet-based metro Ethernet, which is used to connect subscribers to the larger networks including the Internet. There is tremendous potential to leverage metro area networks in many dimensions such as emergency management, community, and services. At a grand scale, the devices on the network and their activity and location provide a reflection of the current state, en masse, of the human population, pets, resources, energy conservation, and so on. Such opportunities for efficiency and optimization of human activity, resources, safety, and so on can help realize the vision of the “smart city.”

2.5.9 Wide Area Network

Wide area networks include telecommunication networks that span national and international boundaries. The Internet, too, can be considered to be a wide area network.

2.5.10 Internet

The Internet is the global, interconnected network of networks that is based on the standard TCP/IP communication protocol. It consists of millions of public, government, academic, and business networks linked by a wide range of electronic, wireless, and optic fiber technologies.

There are two main name spaces in the Internet: the Internet Protocol (IP) address space and the Domain Name System (DNS) maintained by the Internet Corporation for Assigned Names and Numbers (ICANN). The technical standardization of the core IPv4 and IPv6 protocols is managed by the Internet Engineering Task Force (IETF), which is a nonprofit organization.

The modern Internet came into being sometime around the mid-1980s and initially was used predominantly in academic institutions. Commercialization occurred in the 1990s. However, the origins of the modern Internet date back to the 1960s and the research conducted by the US government as well as the UK and France.

2.5.11 Interplanetary Network

Even though an interplanetary, galactic network seems a little like science fiction, an initial form of such a network already exists—the International Space Station is already connected to planet earth’s Internet.

A wider interplanetary network requires a specialized set of protocols built around a store-and-forward approach that handles delays and interruptions that could range from minutes to hours in view of the distances. One such initiative is delay-tolerant networking (DTN), an architecture that endeavors to address technical issues in heterogeneous networks that lack continuous network connectivity, including networks in space. At the core of DTN is the Bundle Protocol family, which plays a role analogous to that of the Internet Protocol (IP) and has been designed to account for the delays and disruptions expected in space communications.
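The store-and-forward idea can be sketched in a few lines. This is a minimal illustration and not the Bundle Protocol itself: the Bundle fields, the StoreAndForwardNode class, and the simulated clock are all assumptions made for the example.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass
class Bundle:
    payload: str
    created_at: int  # seconds on a simulated clock
    lifetime: int    # seconds for which the bundle remains worth delivering


class StoreAndForwardNode:
    """Relay that holds bundles while the link is down and forwards them on contact."""

    def __init__(self) -> None:
        self.stored: Deque[Bundle] = deque()

    def accept(self, bundle: Bundle) -> None:
        # No end-to-end path is required: the node simply takes custody of the data.
        self.stored.append(bundle)

    def contact(self, now: int) -> List[Bundle]:
        """The link is available at time `now`: forward unexpired bundles in arrival order."""
        forwarded = [b for b in self.stored if now - b.created_at <= b.lifetime]
        self.stored.clear()  # expired bundles are dropped, the rest have been sent
        return forwarded


relay = StoreAndForwardNode()
relay.accept(Bundle("telemetry", created_at=0, lifetime=3600))
relay.accept(Bundle("status ping", created_at=0, lifetime=60))

# The next contact window opens 20 minutes later; only the long-lived bundle survives.
forwarded = relay.contact(now=1200)
print([b.payload for b in forwarded])
```

The key difference from an ordinary IP router is visible in accept: data is kept until a link appears rather than discarded when no route is currently available.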

2.6 Ubiquitous Computing

Ubiquitous computing is the computing paradigm of always-available access to computing resources in a coherent manner from any location and via one or more user-facing devices. The mobile era has set the stage for this model of “ubiquitous computing” to come into widespread practice, whereby data and computing are accessible from anywhere and at any time, typically subject to network connectivity. Ubiquitous computing emphasizes universal access to computing as well as collaboration of devices over the network. Ubiquitous computing is known by other names such as “pervasive computing,” “ambient computing,” and so on.

2.7 Collective, Synergistic Computing Value

For several years now, we have been interacting with a wide variety and growing number of computing devices, via varying mechanisms of interaction. Our interaction with computing devices, and the value derived from them, is (or ideally ought to be) less about interacting with one particular device and more about how these various devices might work together by interacting, interconnecting, and collaborating in order to assist us, save time and effort, and improve efficiency and productivity, among many other such dimensions of our lives.

The computing environment, infrastructure, and our mindset have now matured and evolved to the point of being able to answer the questions of what these multitudes and groups of computing devices can do for us collectively and collaboratively, rather than what any particular device can do individually, in isolation.

Wearables can play a key role in such an ecosystem due to their proximity to the user and/or the physical environment.

2.7.1 Importance of the User Centricity and the User Context

User centricity in conjunction with a user context that transcends the existence or uptime of any particular device is particularly important and relevant. The transdevice user context is one of the key foundations of a more interactive, intelligent agent-based, ubiquitous model of computing.

Decades ago, a given user interacted with about one personal device, that is, on a one-to-one basis, such that device centricity happened to be mostly coincident with user centricity. But today, each user interacts with a multitude of devices, that is, on a one-to-many basis, so it becomes important to align with the user-centric model of data and context. A device-centric model tends to become obsolete in a world of many devices per user.

2.7.2 Distributed Intelligent Personal Assistant

An intelligent personal agent performs tasks autonomously and on an ongoing basis in order to make human lives more convenient and safer. In a world of a multitude of diverse devices and in order to serve a user’s needs at all times, the intelligent agent ideally runs not on any particular device, but as a distributed intelligent agent that runs across collaborating devices, which are user context aware at all times. The cloud is certainly the ideal candidate for the “headquarters” for such a distributed intelligent personal agent. The devices that reside close to the user also have great importance due to their ability to provide sensor signals that are the basis for the inferences about the user’s current activity, context, and environment.

2.8 Bright and Cloudy: Cloud-based Intelligent Personal Agent

The foundation of the Suggest model is a sophisticated Intelligent Agent that is aware of the user’s contexts at all times, watches out for the user’s well-being at all times, and adapts its behavior based on learning algorithms. Placing such an intelligent agent on the cloud gives it a bird’s-eye view and much depth and width of perspective.

Given a user who is driving and headed in a certain direction, the cloud-based intelligent agent can not only suggest alternate routes when the route ahead has traffic congestion, but it can also extrapolate all the various possible events such as ongoing car chases, ambulance paths, hurricanes, and so on that could affect the user’s projected route—in order to make timely suggestions and recommendations to keep the user safer and on schedule for appointments and arrival destinations.

Analysis of the user’s current location and context, in the backdrop of the news and events that occur moment to moment, is a full-time job and one that can be best placed in the hands of this intelligent personal agent that resides primarily on the cloud, so the user is freed up to contemplate or engage in matters of deeper significance.

The cloud provides both high-capacity storage and also tremendous processing power. The cloud-based systems can optimize computations for a group of users in the same “boat” or situation and benefit from the economy of scale. Cloud-based data centers are typically located close to sources of electric power, which reduces transmission losses and improves reliability.

2.8.1 Google / Cloud-Based Intelligent Personal Agent

Google, as a search engine, started out by providing us access to the world’s information and knowledge, and has over the years become a prominent repository of that information. Over time, by virtue of mass usage and analysis of patterns and distribution in search terms, Google Insights acquired deep predictive capabilities. For example, Google’s analysis of search terms was used to estimate flu activity ahead of the official reports from the Centers for Disease Control and Prevention (CDC), although the accuracy of those estimates was later called into question.

Google, as the cloud repository of the users’ email, documents, photos, calendar, and location history stored on Google’s secure cloud infrastructure, has the advantage of the best data and the best algorithms to create intelligent computing value for the consumers.

2.9 Leveraging Computer Vision

In the backdrop of IoT and a ubiquitous computing environment, there are sensors that can detect various real-world parameters of interest. These could include sensors embedded into the highway road’s surface to detect traffic volume, sensors embedded in farmed land that detect moisture levels, or moisture sensors embedded in the soil within the potted plant in your living room.

Video and audio sensors include cameras and microphones, which are often IoT devices, but they can include handheld and wearable devices. Video data from a section of highway can, for example, be analyzed in order to infer the traffic density without the need for sensors embedded into the highway road surface. The quality of the road surface can also be inferred by means of video data from a camera that covers a section of the road.

Computer vision is a field that includes the acquisition and processing of image data in order to infer relevant information. Computer vision aims to perceive and understand image data and, to some degree, duplicate the abilities of human vision. Computer vision algorithms can detect real-world objects such as human faces and cars in real time and thereby count cars and people that pass through a section of road or walkway. Some use cases of sensors embedded into surfaces for acquisition of particular real-world information can be addressed via image data and computer vision algorithms. Depending on the specifics, it may be less expensive and easier to install a camera and a vision-based system than multiple specialized embedded sensors.

2.9.1 Enhanced Computer Vision / Subtle Change Amplification

While computer vision started out with the “modest” goal of duplicating human vision, researchers at the Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT, who include Professor William T. Freeman and Michael (Miki) Rubinstein (Ph.D.), have made progress in the arena of analysis and amplification of subtle motion and color changes.

Freeman and Rubinstein share with us the big world of small motions and changes in color, which when detected and amplified can give us “superhuman” vision. Rubinstein, as part of his Ph.D. research with Freeman as his advisor, developed methods to extract and amplify subtle motion and color changes from videos. The beating heart results in the rhythmic flow of blood in the human body, which causes corresponding subtle, rhythmic changes in the color of the human skin. These color changes are generally invisible to the human eye. Using the video signal from a regular camera and by detecting these subtle color changes via signal processing algorithms, it is possible to infer the heart rate. By creating a change-amplified version of the video, it becomes easy to perceive the heart rate visually. This is an instance of vision enhancement via color change amplification. Human breathing results in subtle elevation and movement of the chest and stomach area. Neonatal infants typically need to be monitored for vital signs with minimal touching or disturbance. Amplifying the subtle motion of the belly yields a modified video that makes their breathing, and its rate, visually obvious without the need to touch or disturb them. Rubinstein also demonstrates other applications of this technology, such as recreating a conversation from the movements of a crumpled bag of chips while the conversation is occurring in the vicinity. By zooming in on the small motions of the crumpled bag, amplifying them on the order of 100 times, and converting the motions into sound, the conversation can be reconstructed. A TED talk by Michael Rubinstein “A Big World of Small Motions” as well as other videos on this technology is available at:

Such amplification of subtle motion and color changes can be effectively applied to other arenas such as industrial, transportation, agricultural, and more. In one approach, a grid of moisture sensors embedded into the soil in a section of farmland provides useful input that can be used to time and control the periodicity of watering of the crop. In another approach, the video feed from a camera that “observes the crop” can be analyzed, and the subtle motions of the crop such as the swaying motion in the wind or any slight color changes or wilting can be amplified to detect the commencement of crop dehydration, making it more visible and detectable so that watering can be timed accordingly.

Thus, the ordinary camera’s video feed can be used to infer and reveal various measurements indirectly via sophisticated algorithms and computation.
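As a rough illustration of inferring heart rate from subtle color changes, the sketch below looks for the dominant frequency in a series of per-frame average skin color values. It is not the method developed by Rubinstein and Freeman; the use of a plain discrete Fourier transform, the 0.7 to 3.0 Hz search band, the function name, and the synthetic signal are all assumptions made for the example.

```python
import cmath
import math
from typing import List


def estimate_heart_rate_bpm(samples: List[float], fps: float,
                            min_hz: float = 0.7, max_hz: float = 3.0) -> float:
    """Return the strongest periodic component within a plausible pulse band, in beats per minute."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the constant skin tone

    best_hz, best_power = 0.0, -1.0
    for k in range(1, n // 2):
        hz = k * fps / n
        if not (min_hz <= hz <= max_hz):
            continue  # ignore slow lighting drift and high-frequency noise
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        power = abs(coeff) ** 2
        if power > best_power:
            best_hz, best_power = hz, power
    return best_hz * 60.0


# Synthetic stand-in for the average green value of a skin region in each frame:
# a faint 1.2 Hz pulse (72 beats per minute) on top of a larger, slow lighting drift.
fps = 30.0
frames = 300  # ten seconds of video
signal = [120.0
          + 0.5 * math.sin(2 * math.pi * 1.2 * t / fps)
          + 2.0 * math.sin(2 * math.pi * 0.1 * t / fps)
          for t in range(frames)]

print(round(estimate_heart_rate_bpm(signal, fps)))
```

The pulse component here is four times smaller than the drift, which would make it hard to see directly, yet restricting the search to the pulse band still isolates it.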

2.10 IoT and Wearables: Unnatural and over the top?

So far in this chapter, we have covered the technological aspects of computing and networks. It certainly seems that a world with many devices, clouds, IoT, and wearables is unnatural and represents somewhat of an overdose of technology.

Just how unnatural and over the top is this world of IoT and wearables—smart devices, smart homes, sensors, intelligent agents, ubiquitous computing, and so on? The answer is subjective and depends on one’s perspective. This section covers some correlations seen in nature and human history relating to networks and computation.

At a conceptual level, networks represent paths of transportation or flow of physical matter, services, and information. Tools represent extensions of biological intelligence and abilities. Computations involve sensing and recording of data, analysis, and prediction. All these concepts are present in nature and not unique to human civilization or computer science. There is potential value that can be derived from the use of tools and computation, and each individual can choose whether or not to use particular offerings that come from these technological advancements.

2.10.1 Human History of Tool Use and Computation

What we see today is, probably, merely an acceleration of a trend that has been in place for tens or hundreds of thousands of years. Although technology has advanced in recent decades, there has been no shift away from our fundamental dependence on tools and computation to improve our daily lives and even our chances of survival.

Humans and their ancestors have long been tool-making and computational species. Long ago, tracking the movements of the sun, moon, planets, stars, and constellations helped our ancestors understand and predict celestial and astronomical cycles and the seasons; build clocks and calendars; and plan hunting, migration, and planting.

Observations of various patterns and the associated predictions were a factor that enhanced the chances of human survival. Long ago, the rock chip gave human ancestors the competitive edge for survival. Tool use is thought to have contributed to an increase in brain size, and that bigger brain helped in building the next generation of more sophisticated tools.

The use of tools for hunting helped provide adequate meat more easily, which helped nourish the brain and improve its size and intelligence and provide more time to think and ponder and gaze at the skies and create rock art.

Not too much has changed, it would seem, because today it is the silicon “chip” that gives us the competitive edge, individually and as a species. It extends our memory, helps us consume, manage, create, and share information. It helps predict many aspects of human interest such as weather, finances, health, and more, to help us live more comfortably, safely, and efficiently.

Today, with the highest human population in recorded history inhabiting planet earth, perhaps the computing ecosystem of wearables, IoT, and artificial intelligence can solve many of the problems of infrastructure and resource management, energy efficiency, health monitoring, manufacturing efficiency, city and township management, and so much more.

2.10.2 Communication Networks in Nature

There are numerous examples of networks in nature that enable communication. It turns out that plants interconnect their roots with other plants via the “mycelium,” the branching, threadlike, vegetative part of mushrooms (fungi) that grows in the soil. This forms a communication network via which information such as warning signals of pathogen and aphid attacks is transmitted between plants. A mycelium can be really tiny, and it can also be quite massive.

Mycelium is useful in nature and to the ecosystem in various roles, including the role of a communication network. Paul Stamets, the renowned mycologist and author of Mycelium Running: How Mushrooms Can Help Save the World, shares some of his insight into the world of mycelium, which forms large networks of more than 2,000 acres in the forests of the Pacific Northwest of North America. The mycelial network helps the overall health of the forest by distributing nutrition and information for the overall good of the forest. The mycelial network thrives in a healthy forest, and it strives to keep the forest healthy. Stamets points out in his writing and talks on TED (http://www.ted.com) that fungi are sentient beings that can sense the environment, human presence, and much more. He also notes that the mycelial network makes it possible for particular trees that do not receive adequate sunlight to receive nutrition via the network’s ability to access and transport needed mineral nutrition.

Similar to the networks in nature, the important foundations of modern human civilization include the advanced systems for transportation, power transmission, water distribution, and the information superhighway. Computation and communications have an important place in human civilization and are not necessarily all that artificial in concept. IoT and wearables play the important role of the sensor–actuator network, which addresses sensing, detection, and feedback at the periphery of this network. The periphery of this computational network is what engages people and their relevant, significant “things” of interest directly.

2.10.3 Consumption of Power: by computational systems, biological and artificial

Our computational and artificial “intelligence” is built by, and is an extension of, our human biological brain. It turns out that the Internet somewhat resembles a massive organism and also our nervous system by demonstrating self-healing behavior, adaptation to changes in the flow of packets based on dynamic changes in available routes, redundant connections, and so on.

The smartphones and other user-interacting devices such as IoT and wearables lie on the periphery of this organism-like nervous system of the computational Internet.

The human brain comprises less than 3% of the total body weight, yet it accounts for over 18% of the energy consumption. Much like the human brain has this density of storage and processing, civilization’s data centers have a density of storage and computational power as well as the need for massive amounts of energy to power this processing and data storage.

The subject of the power consumption by the cloud/data centers and the overall information technology industry has attracted much attention in recent years. It is estimated that if one were to count the energy consumption that goes into manufacturing the processors and devices, running the network infrastructure, and the data centers put together, then as much as 10% of the world’s generated electricity is consumed in powering information technology.

It turns out that, much like the human brain has tremendous need for power, oxygen, and nutrition—compared to the rest of the body—the huge computational data centers, networks, and devices that run human civilization have huge needs of power and energy. The energy needs of the computational resources to run our human civilization have perhaps begun to mirror the relative power needs of the human brain with respect to the human body.

2.11 Security and Privacy Issues

In recent years, mass usage of mobile Apps and associated data collection on the cloud have brought the issues of security and privacy to the forefront of consumer attention. IoT and wearable devices raise the issues of security and privacy to an even greater degree, especially if they are directly present on the Internet as independent devices that have their own IP addresses and/or send data to cloud endpoints without the user’s knowledge and control. It’s more difficult for an isolated small IoT device with limited resources to possess an independent IP address and independent presence on the Internet and also defend and protect itself from malicious attack. As described earlier in this chapter, it is safer and more cost-effective for IoT and wearable devices to act as clients that maintain a secure, possibly long-lived connection to cloud endpoints in a user-centric approach that gives the user control over their data. It is also easier for cloud-based endpoints to protect and defend themselves from malicious attack because of their access to computing resources, physical isolation, firewalls, algorithmic monitoring, and adaptive defenses.

Figure 2-4 shows the relationship between users, IoT devices, generated data, and cloud endpoints: a given user may own various IoT devices. IoT devices in turn may generate some form of recorded data and also send such data to various cloud endpoints on the Internet. IoT devices may also receive some data, instructions, or commands from the cloud endpoints.

Figure 2-4 User, IoT devices, generated data, and so on.

In such a world of a multitude of IoT-type smart devices, the user’s various devices may collect various data and send such data to various cloud endpoints. If the user is unaware of what data is collected and who the cloud-based entities are that store, analyze, and potentially share such data with their extended partners, the result is a scenario that has poor user privacy and control.

It does a disservice to the users if their data is collected and stored but not accessible by the users themselves for their own needs and purposes. It is unfair to the users if they are not able to opt in or out, per their needs and choices.

In such a backdrop, the following sections cover some of the important principles that boost user privacy, security, and user control over data.

2.11.1 User Awareness and complete end-to-end Transparency

It is important that users are aware of what data their IoT devices are generating, and to which cloud-based endpoints the data is being sent, as applicable.

There have been many news reports in the media about household smart gadgets that record data and send it to the cloud without the user’s knowledge and function like spyware.

Complete end-to-end transparency makes it very clear to the user what data is being collected and who the data is being sent to, how that data is being used, how securely the data is stored on the cloud, and if it is shared with external parties.

2.11.2 User Control and Choice

It is important that the users have control over the data collection with the ability to turn off the data collection per their needs and preferences—as well as the choice to opt in or opt out of data collection or sharing with external parties.

2.11.3 User Access to Collected Data and Erasure capability

It is important that the user has access to all of their data that is collected and stored on the cloud. It is also important that the user has the ability to erase the data stored on the cloud permanently and also export it out in standard open formats of their choice.

2.11.4 Device side, transit, and cloud side protection: Data Anonymization

It is important that the user is aware of what data is collected, who collects and stores it, the degree of protection that their data enjoys in terms of security on the device side, the encryption standards used, and the algorithmic protection the cloud side infrastructure provides in order to protect their data from unauthorized access and cyberattack. Strong infrastructure boundary protection prevents or reduces the chances of a data breach or unauthorized access. Strong algorithmic protection detects failed attempts promptly and intelligently in real time and blocks further attempts by malicious entities.

It’s also important that the stored data be anonymized. Data anonymization is a technique of encryption and removal of personally identifiable information from sets of data. Anonymization maintains certain data centered around a random ID, rather than an identifiable individual. Sensitive data such as passwords, credit card numbers, and social security numbers need to be stored with strong encryption rather than as “plain text.” Hashing algorithms such as MD5, which has often been used for hashing passwords, are relatively easy to break. The National Institute of Standards and Technology (NIST) recommends PBKDF2 for one-way hashing.
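The two ideas in this section, salted one-way hashing with PBKDF2 and keying stored data by a random ID, can be sketched with the Python standard library. The iteration count, the field names, and the helper function names below are assumptions chosen for illustration; production systems should follow current guidance on work factors and on what counts as personally identifiable information.

```python
import hashlib
import hmac
import secrets
from typing import Dict, Set, Tuple

ITERATIONS = 200_000  # assumed work factor; tune to current guidance and hardware


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest) so the plain-text password never needs to be stored."""
    salt = secrets.token_bytes(16)  # random per-user salt defeats precomputed tables
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)


def anonymize(record: Dict[str, object], pii_fields: Set[str]) -> Dict[str, object]:
    """Drop personally identifiable fields and key the record by a random ID."""
    cleaned = {k: v for k, v in record.items() if k not in pii_fields}
    cleaned["subject_id"] = secrets.token_hex(8)  # not derived from the person
    return cleaned


salt, digest = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, digest))
print(verify_password("guess", salt, digest))

reading = {"name": "A. User", "email": "user@example.com", "resting_heart_rate": 58}
print(sorted(anonymize(reading, {"name", "email"})))
```

Unlike a single pass of MD5, the repeated hashing in PBKDF2 makes each guess deliberately expensive, which is what slows down brute-force attacks on a stolen password database.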

2.11.5 Practical Considerations: User Centricity

It is impractical for users to have login account credentials on a per device and cloud/website basis, especially in a world of such a multitude of devices and cloud accounts. It is important that users use strong passwords that are not reused across different realms. At the same time, it is important that users are able to securely access the historical data collected on the Internet cloud endpoints and websites, control data access and sharing, and delete the data if they so desire. One of the solutions to address this problem is OpenID.

2.11.5.1 OpenID

OpenID is an open standard and protocol that attempts to consolidate users’ online identities so that users can log into various websites without having to register over and over. The OpenID-enabled website acts as the “relying party,” which depends on the OpenID provider to authenticate its users. Users select accounts by first choosing an OpenID provider and the associated credentials.

OpenID providers include major Internet and technology companies such as Google, Yahoo, Facebook, Microsoft, WordPress, and several more. Such consolidation of the online identity is useful because the user can have far fewer login accounts with unique and more secure credentials and remember them more easily. OpenID 2.0 was finalized in December 2007 and OpenID adoption has been growing.

OpenID is decentralized—it does not rely on a central authority to authenticate a user. Furthermore, OpenID does not mandate any particular or specific set of authentication mechanisms—it can work as well with biometric authentication, smart card-based authentication, user name/passwords, and anything else in the future.

OpenID can be a win–win for both website owners and users, because both can depend on the OpenID provider to address user authentication. Websites often find it challenging to maintain user names and passwords and store them securely on their sites. Users find it hard to remember user names and strong, distinct passwords for a large number of websites. It is important for the users’ security that credentials at each site or IoT device be unique and complex.

2.12 Miscellaneous

A few miscellaneous topics are covered in this section.

2.12.1 PhoneBloks: Waste Reduction

PhoneBloks is a modular smartphone design concept created by Dutch designer Dave Hakkens. “Bloks” are modular components that can be attached to the main board of the phone. Such “Bloks” can be upgraded and replaced, while retaining the rest of the device. Since many users end up replacing their entire phone every few years, a huge volume of electronic waste is generated. By selectively upgrading a functional “Blok” such as the camera, battery, or storage, users can improve particular modules without giving up the entire device, thereby reducing electronic waste. PhoneBloks is an independent organization with the general mission of electronic waste reduction. More information on PhoneBloks can be found at https://phonebloks.com.

2.12.1.1 Project “Ara”

Project “Ara” is an initiative from Motorola and Google that is influenced by PhoneBloks and involves some degree of collaboration with it. Project Ara aims to create modular smartphones and devices based on kits with modular components that can be put together like Lego blocks to create devices. Such modular components include common features such as camera and battery as well as specialized features such as game controller buttons, sensors, medical devices, receipt printers, laser pointers, and so on.

2.12.2 Google Cardboard: Inexpensive Virtual Reality

Google Cardboard is an inexpensive cardboard headset designed by Google. It works together with stereoscopic vision and display software on an Android smartphone to provide users with three-dimensional, virtual reality App experiences. Consumers can fold their own headset based on the designs provided by Google or purchase a ready-made version from various manufacturers listed on Google's site. The prices start at about US $15. More information can be found at https://www.google.com/get/cardboard. Google also has an associated virtual reality Cardboard SDK that simplifies the development of virtual reality Apps, the coverage of which is outside of the scope of this book.

References and Further Reading

- http://en.wikipedia.org/wiki/Wearable_computer#History

- http://en.wikipedia.org/wiki/Mechanical_computer

- http://www.media.mit.edu/wearables/

- http://en.wikipedia.org/wiki/Casio_Databank

- http://en.wikipedia.org/wiki/Calculator_watch

- http://en.wikipedia.org/wiki/Nelsonic_Industries#Game_Watches

- http://www.media.mit.edu/wearables/lizzy/timeline.html

- http://www.cs.virginia.edu/~evans/thorp.pdf

- http://www.amazon.com/Beat-Dealer-Winning-Strategy-Twenty-One/dp/0394703103

- http://en.wikipedia.org/wiki/Alex_Pentland

- http://web.media.mit.edu/~sandy/

- http://www.forbes.com/forbes/2010/0830/e-gang-mit-sandy-pentland-darpa-sociometers-mining-reality.html

- http://en.wikipedia.org/wiki/Punched_card

- http://en.wikipedia.org/wiki/Punched_card_input/output

- http://en.wikipedia.org/wiki/Infrared_Data_Association

- http://en.wikipedia.org/wiki/ARM_architecture

- http://en.wikipedia.org/wiki/MIPS_Technologies

- http://en.wikipedia.org/wiki/List_of_system-on-a-chip_suppliers

- http://en.wikipedia.org/wiki/Internet_of_Things

- https://hbr.org/2014/10/the-sectors-where-the-internet-of-things-really-matters

- http://en.wikipedia.org/wiki/Machine_to_machine

- http://en.wikipedia.org/wiki/Computer_vision

- http://en.wikipedia.org/wiki/Microelectromechanical_systems

- http://en.wikipedia.org/wiki/Metro_Ethernet

- http://en.wikipedia.org/wiki/Delay-tolerant_networking

- http://en.wikipedia.org/wiki/Network_address_translation

- https://www.google.com/cloudprint/learn/howitworks.html

- http://www.gartner.com/newsroom/id/2636073

- http://nfc-forum.org

- http://www.ncbi.nlm.nih.gov/pubmed/24129903

- https://phonebloks.com

- https://www.google.com/get/cardboard/