Chapter 2

THE PAST PERFORMANCE CYCLE


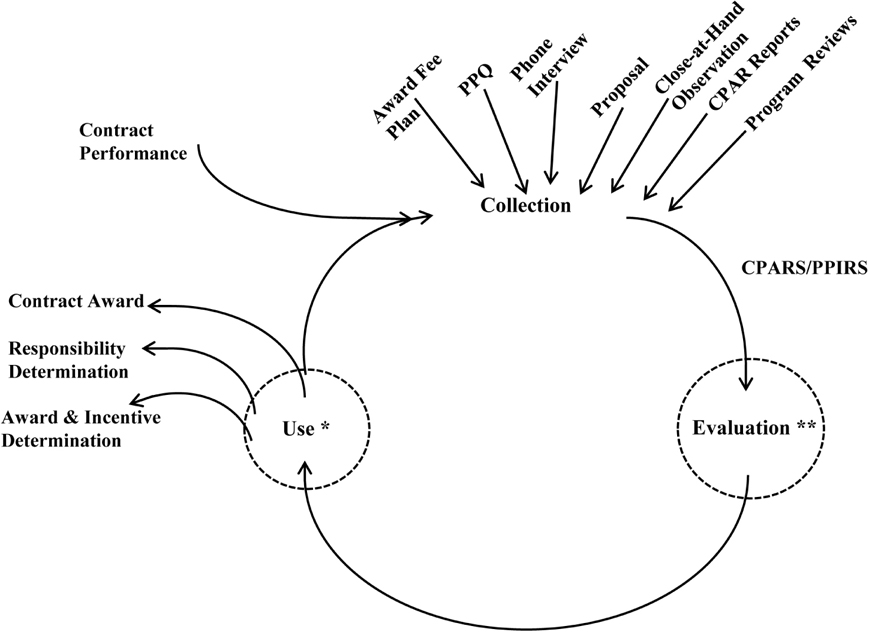

Envisioning past performance as a cycle of activities helps to illustrate its potential power as a self-reinforcing process. By generating feedback that can be used to improve performance, these activities can improve contract outcomes and increase chances of business growth. As used by both buyer and seller, the past performance cycle includes some form of information collection, analogous processes of interpretation or evaluation, and a point or phase in the cycle we will call “use.” These cycles operate continuously and incorporate many levels of activity, including purchases, projects, project phases, and incentives.

The past performance activities of buyers and sellers follow different cycles. As depicted here, the seller past performance cycle is more of a closed loop than the buyer past performance cycle, primarily because contract performance was taken out of the buyer cycle but left in the seller cycle. This was done to emphasize the seller’s primary role in contract performance. It is, admittedly, a simplification: the buyer can and does play an important role in contract performance, for example by providing access to resources critical to that performance. Performance is in truth a shared responsibility; nonetheless, the primary responsibility rests with the seller.

The two cycles also overlap in several ways. External inputs and activities that take place outside the cycles impact each cycle, and both buyer and seller collect from the same sources and inputs, such as a person completing a past performance questionnaire or CPARS report. Additionally, the cycles overlap in the sense that ideally, both buyer and seller are interested in improving performance. Finally, both cycles feed back into themselves.

BUYER PAST PERFORMANCE CYCLE

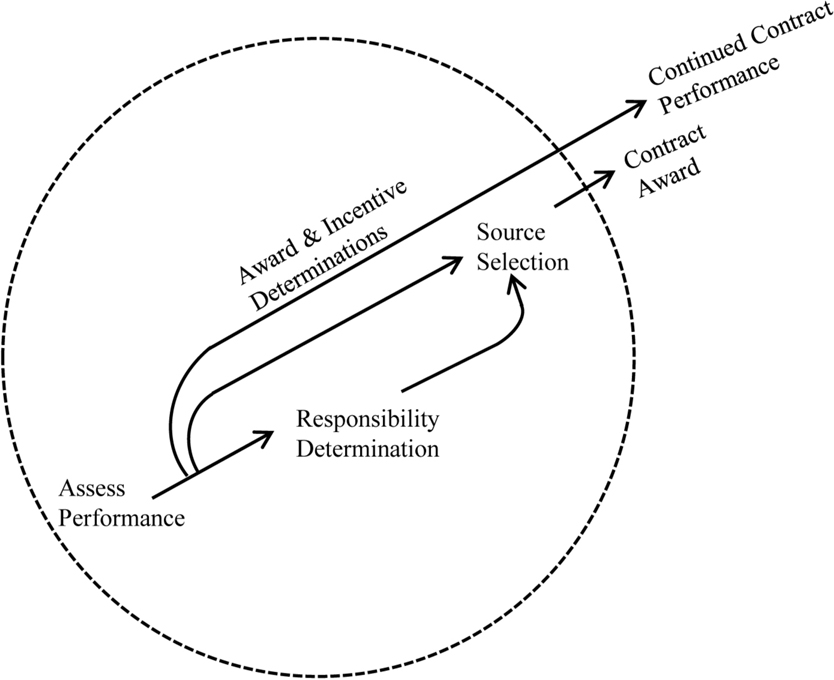

At a high level, the buyer uses past performance information to assess performance: How has this party performed in the past? These assessments are most commonly part of source selection during a competitive procurement. Sellers need a clear understanding of the buyer’s process for obtaining, evaluating, and using past performance information as they develop responses to solicitations, because they seek to showcase their past performance in every source the buyer might consult. Buyers, naturally, should also be aware of their own agency’s processes. The buyer past performance cycle is shown in Figure 2-1. (Not shown directly is the storage or retention of past performance information.)

Figure 2-1: Buyer Past Performance Cycle

Contract award and contract performance are shown as, respectively, output of and input to the buyer past performance cycle. The term contract award means that the government has given notification that it is entering into a contract with a private party. The award decision may have followed a competition in which one or more bidders learned they did not receive an award, or there may have been only one bidder. For sellers, a contract award is the desired outcome of the buyer’s use of past performance information in source selection (the process of choosing which offeror, among a group of offerors or other alternatives, will receive the contract award).

Bidding on and subsequently being awarded a contract means that the seller begins accumulating contract performance experience that can later be converted into past performance information. Thus begins the cycle: collection, evaluation, and use. Bidding on a contract and not being awarded it means that the seller accumulates no contract performance experience that could serve as past performance information in the future.

Collection

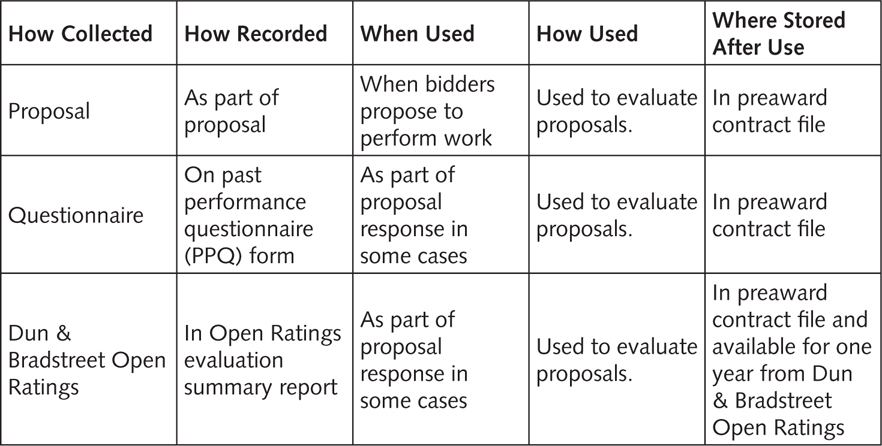

Sellers that do business with the federal government are usually familiar with its three main collection methods: past performance write-ups submitted as part of proposals, past performance questionnaires (PPQs) collected as part of a solicitation response, and contractor performance assessment reports (CPARs) collected during and after contract performance. Past performance information is not solely provided by the bidder and may come from multiple sources.

These sources can have a large impact on an evaluation outcome. Government agencies that frequently buy commercial items and place a significant number of orders under Federal Supply Schedules, governmentwide acquisition contracts, or other task order contracts may conclude that their quest to award contracts to good performers over good proposal writers is better served by evaluations that rely only on past performance and price, and not on extensive technical or management proposals. For a firm competing for work under this scenario, any individual source of past performance information can rise to a critical level when the buyer is deliberating on which firm will receive a contract. Table 2-1 summarizes all the collection methods used by government agencies and when/how past performance data are commonly recorded, used, and retained for later use.

Persistent problems regarding past performance can be categorized as stemming primarily from inputs and from organization. Persistent input problems arise from agencies not completing past performance reports, evaluators avoiding writing narratives, evaluators giving high scores rather than using evaluations to provide meaningful feedback, and written narratives that don’t match or support assigned grades. Additional input problems come from the continued and pervasive use of burdensome collection methods, namely ad hoc, short-fused, and unpredictable (to the party completing them) paper-based past performance questionnaires.

Persistent organization problems regarding past performance come from continued use of a burdensome and disjointed collection, retention, and retrieval system for past performance information. Information is difficult to access, access is limited to a small population of users out of a potentially broader user base, and access is parsed and stovepiped so that the user experience is poor and utility derived from the system is low.

Table 2-1: Buyer Past Performance Information Collection Methods

These persistent issues contribute to a longstanding critique that the past performance information collected by an agency or activity is not made available to others (either within the agency or outside it) and therefore is not used by other agencies or activities in making procurement decisions. This criticism holds true across collection methods despite many years of attempts to address this fundamental problem. In 2006, the General Services Administration observed the following concerning past performance and its nuances:

It has long been a vulnerability that Government agencies would award to a vendor who owes another part of the Government money or services, or is in the process of being debarred. This was due to the fact that information about performance was maintained at the local contracting office level. (GSA, 2006)

A brief discussion of each method and its impact on data usage and retention/retrieval follows.

Proposal

Bidders submit past performance information in proposals, typically in a section or volume separate from the rest of the proposal, and otherwise as directed in the solicitation. The information collected is used for proposal evaluation. A proposal consists of an offer or promise of work, price or cost information, and information about the bidder (Figure 2-2). Past performance information is part of the information about the bidder (proposer capability information). As directed in the solicitation, this information can be provided in a variety of formats, most commonly as written submissions or oral presentations.

Figure 2-2: Components of a Proposal

Past performance information collected in proposals typically goes no further than the preaward contract file, whether paper or electronic. These files generally are not searchable or electronically archived in any manner conducive to access or use for any other purpose.

Past Performance Questionnaire

PPQs are typically collected as part of a proposal response and used during proposal evaluation. Solicitations provide guidance on how they are to be completed and returned to the contracting officer or other government official. The norm is to include a specific PPQ form in the solicitation. This form is not filled out by the bidder but sent by the bidder to several client references of their choice, who are asked to return the completed form directly to the contracting officer or designee. PPQs collected during preaward typically go no further than preaward contract files, which generally are not searchable or electronically archived in any manner conducive to access or use for any other purpose.

Dun & Bradstreet Open Ratings Report

For a fee, Open Ratings, Inc. (a Dun & Bradstreet company), conducts an independent audit of customer references and calculates a rating based on statistical analysis of various performance data and survey responses. Some agencies, notably the General Services Administration (GSA) for Federal Supply Schedule proposals, use Open Ratings for collecting past performance information in proposals and for evaluating past performance during proposal evaluation.

The performance data collected in the Open Ratings survey are reported in an Open Ratings evaluation report and include customer ratings of a contractor’s overall performance, reliability, cost, order accuracy, delivery/timeliness, quality, business relations, personnel, customer support, and responsiveness. The solicitation directs sellers to file with Open Ratings and show evidence of this filing (in some cases including a copy of the evaluation report).

Aside from firm identification data, the primary information supplied to Open Ratings by a seller is contact information for 20 client references. Open Ratings considers four responses from these references to be the minimum needed to complete a rating and declares its past performance evaluation reports to be valid for one year. The soliciting agency will most likely retain Open Ratings reports along with proposals in the preaward contract file. They are also kept on file and are accessible (for a fee) directly from Open Ratings during the one-year validity period.
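The two numeric rules above (a minimum of four responses from the 20 references supplied, and a one-year validity period) can be expressed as a pair of simple checks. This is a minimal sketch assuming the figures exactly as stated in this section; the function names are hypothetical, Open Ratings’ actual business rules may differ or may have changed, and "one year" is approximated here as 365 days.

```python
from datetime import date, timedelta

# Figures as described in the text; the vendor's actual rules may differ.
REFERENCES_SUPPLIED = 20        # client references the seller supplies
MIN_RESPONSES = 4               # responses needed to complete a rating
VALIDITY = timedelta(days=365)  # "valid for one year", approximated as 365 days


def rating_is_complete(responses_received: int) -> bool:
    """True once enough of the supplied references have responded to complete a rating."""
    if not 0 <= responses_received <= REFERENCES_SUPPLIED:
        raise ValueError("responses must be between 0 and the number of references supplied")
    return responses_received >= MIN_RESPONSES


def report_is_valid(issued: date, as_of: date) -> bool:
    """True if a report issued on `issued` is still inside its validity window on `as_of`."""
    return issued <= as_of < issued + VALIDITY


if __name__ == "__main__":
    print(rating_is_complete(3), rating_is_complete(4))          # False True
    print(report_is_valid(date(2014, 3, 1), date(2015, 2, 28)))  # True
    print(report_is_valid(date(2014, 3, 1), date(2015, 3, 1)))   # False
```

For a seller, the practical point is timing: a report issued early in one year may lapse before a later solicitation closes.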

Phone Interview

Phone interviews are occasionally conducted by contracting officers and designees as a part of past performance evaluation during a proposal evaluation for source selection purposes. Phone interviews may be conducted using a standard set of questions—in essence, a PPQ form.

In an evaluation of past performance, there is no legal requirement that a government agency contact all the references submitted by a particular bidder. But the agency must act reasonably when deciding which references to contact: it must make a reasonable effort to reach them, and if that reasonable effort does not result in contact with a reference, the agency can complete its evaluation without that reference.

When instructed to provide contact information for its past performance references in a proposal, a prudent bidder will include references who are familiar with its work, will respond to queries in a timely fashion, and will provide a good reference. Many agencies ask for both a technical and a contracts reference for each contract cited as past performance. In some cases, only contact information is requested (i.e., no past performance write-up).

Phone interviews may be recorded as call notes or on a PPQ (or similar) form. The notes are most likely to be retained in the preaward contract file, with the same level of access as for PPQs.

Email Response

Similar to phone interviews, email responses may be used as part of proposal evaluation and may be solicited directly from the past performance references provided by bidders using a set of questions or a PPQ template. Email responses are recorded in the email body or as an attachment and may be retained in preaward contract files.

Award or Incentive Plan Report

Award fee plans (used in award fee contracts) provide guidance on the collection of performance information for specified contract periods. This past performance information is typically collected in a standard response format (as specified by each plan) that, ideally, contains elements tying important contract objectives to the incentives or awards in the contract. This information is used to determine award fee amounts during and following contract performance; it is kept in the contract files, which are not typically archived or stored electronically in a manner conducive to later retrieval.

FAR 42.1503 was revised in September 2013 to state specifically that incentive and award fee performance information is to be included in past performance ratings and evaluations entered into CPARS. This FAR language does not require CPARS evaluation reports to be produced at the same time as award and incentive fee plan evaluations of contractor performance, and it does not apply to the procedures agencies use to determine fees under award or incentive fee contracts. When past performance on an award or incentive fee contract is evaluated, this element of contract performance is to be rated as part of any CPARs covering the same period, using the adjectival rating scale provided in the same FAR section (see “Evaluation”). The revised section also sets the expectation that the fee amounts paid to contractors under award or incentive fee arrangements should reflect the contractor’s performance, and that the past performance evaluation should closely parallel and be consistent with the fee determinations.

Close-at-Hand Observation

When agency or government staff directly observe contractor performance, this is referred to as close-at-hand observation. Although this type of past performance information is not generally captured or recorded, it is an established precedent in case law that close-at-hand observation of contractor performance cannot be ignored during source selection evaluations (see for example GAO B-280511.2, GTS Duratek, Inc., 1998, and GAO B-401679.4 et al., Shaw-Parsons Infrastructure Recovery Consultants, LLC and Vanguard Recovery Assistance, Joint Venture, 2010) and, if it exists, must be used during proposal evaluations. GAO review precedent is that this information should be considered the same as any other past performance information presented by the seller or found by the buyer.

Some agencies and activities purposefully and systematically collect close-at-hand contract performance information, but the majority do not. The likely means of collection and retention of this type of past performance information, if it is documented, is in any evaluation working papers or decision documents retained in the preaward contract files.

CPARS Reports

CPARS reports are collected periodically as described in Chapter 4. CPARS forms are sometimes reviewed during proposal evaluation or as a reference when completing performance evaluations during contract performance.

The standardization, retention, and retrieval provided by CPARS are intended to make records of past performance information accessible across agencies for the purpose of evaluating contractor past performance. Currently, these records are accessible via CPARS and the Past Performance Information Retrieval System (PPIRS). A planned update to the System for Award Management (SAM) will incorporate the information in PPIRS into SAM. Past performance information in CPARS reports is retained for three years.

Program Review

It is a best practice for government programs to conduct some form of program review periodically. These reviews may generate past performance information regarding contractor performance during a contract period or periods. There is no universally applicable form or format for such reviews, however, and this type of past performance information is not likely to be recorded or retained.

Use of this information is similar to that described for close-at-hand observation. It is unlikely that this information would be retained in any way that is searchable or accessible outside the office that generated the review.

Evaluation

Evaluation refers to a buyer’s evaluation of a bidder’s past performance against stated evaluation criteria.

In the solicitation, the agency is required to describe the evaluation approach it will follow and provide an opportunity for bidders to identify past or current contracts that they performed on and to detail any problems encountered and corrective actions taken. The evaluation of past performance must consider the currency and relevance of the information, the source and context of the information, and general trends in contractor performance. The government must consider information from the offeror and other sources and should consider information about predecessor companies, key personnel, and subcontractors.

As described in Chapter 1, three standard rules in the government’s evaluation of past performance are that (1) if no record of relevant past performance exists, the offeror may not be evaluated either favorably or unfavorably on past performance; (2) the offeror must be provided the opportunity to address adverse past performance information that it has not previously had an opportunity to comment on; and (3) the government cannot reveal the names of individuals providing reference information about an offeror’s past performance.

In addition to these three standards, what is to be evaluated and how it is to be evaluated must be stated in the solicitation, and when conducting the evaluation this “what and how” must be followed. (This information is in Section M of the solicitation.) Typically, the stated evaluation scheme will specify the evaluation criteria for the past performance itself, along with how the relevance of the past performance will be evaluated. Evaluation criteria for past performance may include such things as management performance on the relevant contracts cited, the quality of the products or services delivered, adherence to schedule requirements, and cost control performance on those contracts. Relevance of past performance information can take into consideration such things as the nature of the business areas involved, the required levels of technology, contract types, similarity of materials and products, location of work to be performed, and similarity in size, scope, and complexity.

The government’s basic objective regarding past performance evaluation is to measure the level of confidence in an offeror’s ability to successfully perform based on previous and current contract performance data. This level of confidence is expressed in a performance risk assessment, in which evaluators assign an adjectival rating based on their evaluation of the offeror’s past performance. A typical adjectival rating scheme for assessing performance risk based on past performance is shown in Table 2-2. A thorough past performance evaluation, by also reviewing information from sources other than those submitted in a proposal, ensures that awards are made to good performers rather than to good proposal writers.

| Adjective | Description |
| --- | --- |
| Low Risk | Based on offeror’s past performance record, essentially no doubt exists that the offeror will successfully perform the required effort. |
| Moderate Risk | Based on offeror’s past performance record, some doubt exists that the offeror will successfully perform the required effort. |
| High Risk | Based on offeror’s past performance record, extreme doubt exists that the offeror will successfully perform the required effort. |
| Unknown Risk | No relevant performance record upon which to base a meaningful performance risk prediction is identifiable. |

Table 2-2: Adjectival Rating Scheme for Assessing Performance Risk Based on Past Performance

An important and often misunderstood distinction between past performance and experience can come into play during evaluation. As stated above, government agencies are not allowed to evaluate bidders with no past performance unfavorably. This is sometimes referred to as the neutral rule, because a bidder with no past performance is to be evaluated neutrally (not negatively) on past performance. However, if the solicitation makes this distinction, an agency can rate a bidder with no past performance negatively on experience.

GAO protest decisions are based in part on how the solicitation is written. If no distinction is made between experience and past performance in the solicitation, the decision normally is that the two elements cannot be evaluated separately. If a clear distinction is made in the solicitation between past performance and experience, then a bidder can be evaluated separately on these two elements, and a bidder with no past performance and no relevant experience could be evaluated neutrally on past performance and negatively on experience. The majority of solicitations and evaluation schemes are not this clear, however, and the terms past performance and experience are often conflated or used interchangeably in solicitations.
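The interaction between Table 2-2, the neutral rule, and the experience distinction can be sketched in a few lines. This is an illustration only, assuming the evaluator’s judgment has already been reduced to one of the doubt levels worded in Table 2-2; the labels and function names are hypothetical, and real evaluations are judgmental rather than table lookups.

```python
from enum import Enum
from typing import Optional


class Risk(Enum):
    """The four adjectives in Table 2-2."""
    LOW = "Low Risk"
    MODERATE = "Moderate Risk"
    HIGH = "High Risk"
    UNKNOWN = "Unknown Risk"


# Evaluator's level of doubt, as worded in Table 2-2 (hypothetical labels).
DOUBT_TO_RISK = {
    "essentially none": Risk.LOW,
    "some": Risk.MODERATE,
    "extreme": Risk.HIGH,
}


def past_performance_risk(doubt: Optional[str]) -> Risk:
    """Pass None when no relevant performance record exists."""
    if doubt is None:
        # Neutral rule: no record means neither a favorable nor an unfavorable rating.
        return Risk.UNKNOWN
    return DOUBT_TO_RISK[doubt]


def may_rate_experience_down(has_relevant_experience: bool,
                             solicitation_separates_experience: bool) -> bool:
    """Lack of experience can count against a bidder only if the solicitation
    evaluates experience as an element distinct from past performance."""
    return solicitation_separates_experience and not has_relevant_experience
```

A newcomer with no record and no relevant experience, competing under a solicitation that separates the two elements, would receive `Risk.UNKNOWN` on past performance while `may_rate_experience_down(False, True)` is `True`; under a solicitation that conflates the terms, the second check is `False`.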

Past performance evaluation as a part of source selection for award of a contract can take place as a prequalification, as in a two-step or other phased source selection process. For example, a first round of past performance and capability information submission could result in a down-select decision by the buying agency that limits the final or subsequent participation in this acquisition to a subset of the interested respondents.

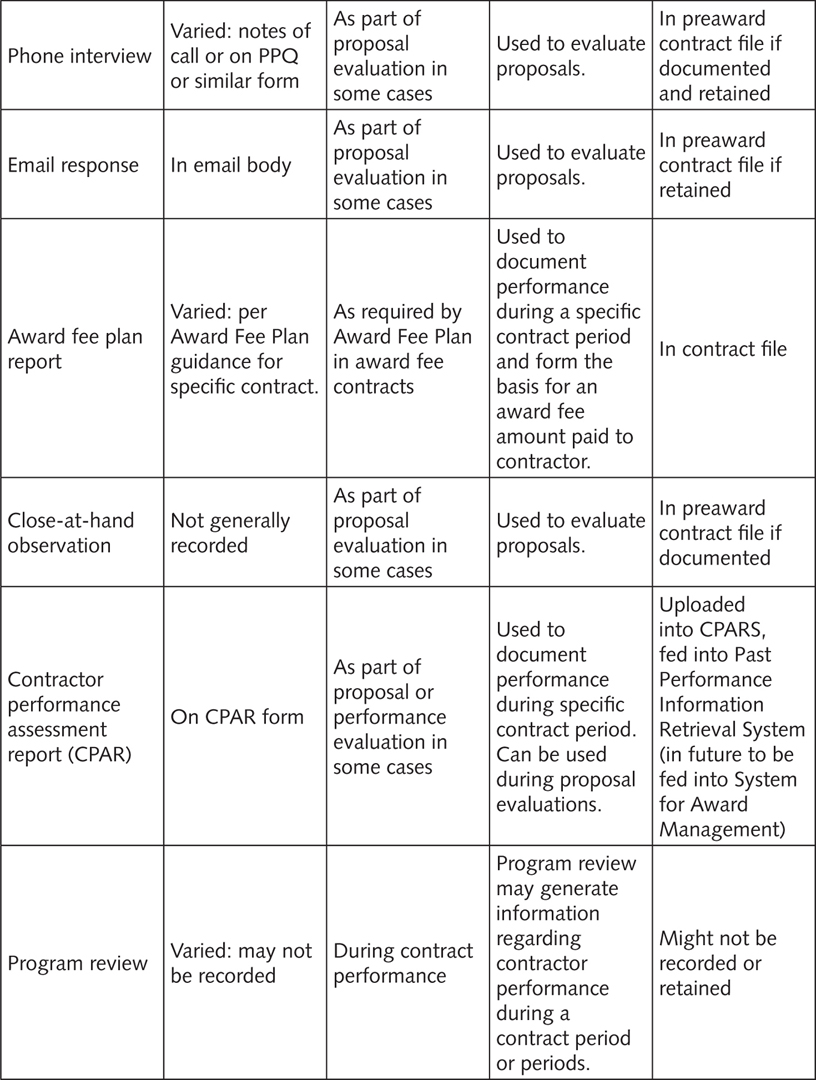

Evaluation of a company’s past performance also takes place outside source selection: during program reviews, during the completion of CPARS reports, during award or incentive fee determinations, and at other, less formal times during or after contract performance. These evaluations appear in the expanded “evaluation” area of the buyer past performance cycle in Figure 2-3.

Figure 2-3: Evaluation, Buyer Past Performance Cycle

Historically, the forms, formats, and procedures used during source selection, when an agency determines which offeror among a group of offerors will receive a contract award, have received a higher level of scrutiny and achieved a higher degree of compliance. At other times, however, such as when evaluating contractor performance during contract performance or assessing a bidder’s past performance in a responsibility determination, less oversight and attention have been applied. This is in part why several issues with past performance evaluation persist across government agencies (GAO, 2009). First, past performance evaluations (or contractor evaluations during contract performance) are not always completed. Second, even when completed, evaluations are either cursory or fail to provide direct, actionable, or constructive critiques of performance. Third, opportunities to collect and retain past performance information and information on contractor performance are missed, rendering past performance information repositories ineffective at best. Weaknesses in the collection, retention, and use of past performance information have been repeatedly decried by oversight and other organizations such as GAO, inspectors general, and industry groups. Some government agencies have dramatically improved their past performance information collection efforts, particularly via CPARS. (It is worth noting that CPARS is for unclassified information only, and intelligence community agencies largely do not use CPARS.)

Additionally, FAR 42.15 on contractor performance information was revised in September 2013 to detail when, where, what, how, and by whom contractor performance is to be evaluated. The when is annually and when contract performance is completed. The where is electronically in CPARS, from which data are automatically migrated to PPIRS after 14 days. The who includes the technical office, contracting office, program management office, and, where appropriate, quality assurance and end users of the product or service who provide inputs to evaluations. If a specific person is not designated as responsible for completing the evaluation (e.g., contracting officer, contracting officer’s representative, project manager, program manager), the contracting officer is responsible for the evaluation.
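The "when" and "where" above lend themselves to simple date arithmetic. The sketch below assumes, as an illustration only, that interim evaluations fall on each anniversary of the performance start date and that the 14-day migration period is counted from a hypothetical `completed_on` date; the actual triggers should be confirmed against FAR 42.1503 and agency procedures. All function names are hypothetical.

```python
from datetime import date, timedelta

MIGRATION_DELAY = timedelta(days=14)  # CPARS-to-PPIRS delay described in the text


def add_years(d: date, years: int) -> date:
    """Same month and day `years` later; Feb 29 falls back to Feb 28 in non-leap years."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


def evaluation_due_dates(start: date, completion: date) -> list[date]:
    """Interim evaluations on each anniversary of `start` (an assumption),
    followed by a final evaluation when performance is completed."""
    due = []
    years = 1
    while add_years(start, years) < completion:
        due.append(add_years(start, years))
        years += 1
    due.append(completion)
    return due


def ppirs_available(completed_on: date) -> date:
    """Date an evaluation completed on `completed_on` would reach PPIRS (assumed trigger)."""
    return completed_on + MIGRATION_DELAY
```

Under these assumptions, a contract performed from January 15, 2014, to June 30, 2016, would see interim evaluations on January 15 of 2015 and 2016 and a final evaluation on June 30, 2016, with that final evaluation reaching PPIRS on July 14, 2016.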

The what is first established in FAR 42.15 by defining past performance information as relevant information, for future source selection purposes, regarding a contractor’s actions under previously awarded contracts or orders and includes the contractor’s record of

1. Conforming to requirements and standards of good workmanship

2. Forecasting and controlling costs

3. Adherence to schedules, including the administrative aspects of performance

4. Reasonable and cooperative behavior and commitment to customer satisfaction

5. Reporting into databases

6. Integrity and business ethics

7. Businesslike concern for the interest of the customer.

The how is by using standardized adjectival rating schemes and specified elements of past performance information to be reviewed and evaluated. Required evaluation factors include (at a minimum):

1. Technical quality of product or service

2. Cost control (not applicable for firm fixed-price or fixed-price with economic price adjustment arrangements)

3. Schedule/timeliness

4. Management or business relations

5. Small business subcontracting (as applicable)

6. Other (as applicable—e.g., late or nonpayment to subcontractors, trafficking violations, tax delinquency, failure to report in accordance with contract terms and conditions, defective cost or pricing data, terminations, suspensions, debarments).

These factors can include subfactors, and each factor and subfactor is required to both be evaluated and have a supporting narrative. The evaluation rating scale and definitions are shown in Figures 2-4 and 2-5, reproduced directly from FAR Tables 42-1 (for prime contractor performance) and 42-2 (for small business and subcontracting performance).
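The requirements just described (a minimum set of factors, a supporting narrative for each rated factor, and cost control marked not applicable for certain fixed-price arrangements) can be checked mechanically before an evaluation is finalized. The following is a minimal sketch, not a CPARS feature: the factor names, the `FactorRating` record, and `validate_evaluation` are hypothetical, the adjectives are the five used in FAR Table 42-1, and treating N/A entries as needing no narrative is an assumption.

```python
from dataclasses import dataclass

# Adjectives from FAR Table 42-1; a trailing "+" or "-" marks a trend (NOTE 1 below).
ADJECTIVES = {"Exceptional", "Very Good", "Satisfactory", "Marginal", "Unsatisfactory"}
# Arrangements for which the text says cost control is not applicable.
FIXED_PRICE = {"firm fixed-price", "fixed-price with economic price adjustment"}
# Hypothetical short names for the factors required at a minimum.
BASE_FACTORS = ["Technical quality", "Schedule/timeliness", "Management or business relations"]


@dataclass
class FactorRating:
    factor: str
    rating: str          # an adjective, optionally with "+"/"-", or "N/A"
    narrative: str = ""


def required_factors(contract_type: str) -> list[str]:
    """Cost control is required except under the fixed-price arrangements above."""
    if contract_type in FIXED_PRICE:
        return BASE_FACTORS
    return BASE_FACTORS + ["Cost control"]


def validate_evaluation(contract_type: str, ratings: list[FactorRating]) -> list[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    present = {r.factor for r in ratings}
    for factor in required_factors(contract_type):
        if factor not in present:
            problems.append(f"{factor}: missing")
    for r in ratings:
        if r.rating == "N/A":
            continue  # assumption: N/A entries need no narrative
        if r.factor == "Cost control" and contract_type in FIXED_PRICE:
            problems.append(f"Cost control: should be N/A for {contract_type}")
        if r.rating.rstrip("+-") not in ADJECTIVES:
            problems.append(f"{r.factor}: unknown rating {r.rating!r}")
        if not r.narrative.strip():
            problems.append(f"{r.factor}: no supporting narrative")
    return problems
```

For a firm fixed-price contract, an evaluation that rates cost control, or that rates schedule without a narrative, would come back with one problem for each; subfactors could be handled the same way by adding them as further `FactorRating` entries.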

Figure 2-4: Past Performance Evaluation Rating and Definitions from FAR Table 42-1

NOTE 1: Plus or minus signs may be used to indicate an improving (+) or worsening (-) trend insufficient to change the evaluation status.

NOTE 2: N/A (not applicable) should be used if the ratings are not going to be applied to a particular area for evaluation.

Figure 2-5: Small Business Subcontracting Past Performance Evaluation Rating and Definitions from FAR Table 42-2

NOTE 1: Plus or minus signs may be used to indicate an improving (+) or worsening (-) trend insufficient to change evaluation status.

NOTE 2: Generally, zero percent is not a goal unless the contracting officer determined when negotiating the subcontracting plan that no subcontracting opportunities exist in a particular socio-economic category. In such cases, the contractor shall be considered to have met the goal for any socioeconomic category where the goal negotiated in the plan was zero.
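NOTE 2 amounts to a simple rule for each socioeconomic category: a negotiated goal of zero counts as met, and any other goal is met only if achievement reaches it. The sketch below assumes goals and achievements are expressed as percentages keyed by category name; the function name and the sample figures are hypothetical, and the comparison ignores the judgment a contracting officer applies when rating subcontracting performance.

```python
def categories_meeting_goal(goals: dict[str, float],
                            achieved: dict[str, float]) -> dict[str, bool]:
    """Map each category in the subcontracting plan to whether its goal was met."""
    result = {}
    for category, goal in goals.items():
        if goal == 0:
            # NOTE 2: a goal negotiated at zero is considered met.
            result[category] = True
        else:
            result[category] = achieved.get(category, 0.0) >= goal
    return result


if __name__ == "__main__":
    goals = {"HUBZone small business": 0.0, "Women-owned small business": 5.0}
    achieved = {"Women-owned small business": 4.2}
    print(categories_meeting_goal(goals, achieved))
```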

Evaluation of past performance information typically precedes its storage and retention—that is, it is not stored or retained unless it has been evaluated. The implication of this statement is that past performance information exists in two forms: information that has been evaluated and information that has not been evaluated. An example of the former is the formal documentation (including the evaluation) of a contractor’s performance in a CPARS report. This evaluated past performance information has a high likelihood of being captured and retained in a past performance repository.

An example of the latter is information generated during a program review conducted in an agency. This illustrative program review likely was conducted using a locally devised framework and may or may not include judgmental evaluations of contractor performance. Therefore, it could include past performance information that has not been evaluated. The likelihood of this unevaluated past performance information being captured and retained in a past performance information repository of any sort is extremely low. Contractors should be aware that such information exists and apply to it the same management principles recommended for evaluated past performance information. Government agencies should likewise take advantage of it.

One could argue that sites and systems holding unevaluated information regarding contract awards, dollar amounts, and so on, such as the Federal Funding Accountability and Transparency Act site, USAspending.gov, the System for Award Management (SAM), and the Federal Procurement Data System, are past performance information repositories. While this is technically true, the extremely abbreviated and encoded nature of these repositories makes them unsuitable for past performance evaluations.

Storage and Retention

The primary repository of past performance information used by the government is CPARS. Per FAR Part 42, all data in CPARS are to be accessible to agencies in PPIRS.

Government agencies are required by regulation to document contractor performance. A more recent addition to the requirements to store and retain past performance information is the requirement to document terminations for cause or default, determination of contractor fault by DoD, determinations of non-responsibility, and defective cost or pricing data in the Federal Awardee Performance and Integrity Information System (FAPIIS). Grant issuers and administrators are also charged with documenting “recipient not qualified” determinations and terminations for material failure to comply in FAPIIS. Storage of past performance information is covered in more detail in Chapter 4.

Use

At a high level, the buyer uses past performance information to assess performance: How has this firm performed in the past? These assessments are most frequently used and thought of as part of source selection during a competitive procurement (as described in “Evaluation”).

The purpose of using past performance information, from the buyer’s perspective, is twofold: first, to make sound business decisions during contractor selection and contract performance; second, to provide feedback to contractors to improve overall contract performance. These purposes are fulfilled during the “use” section of the buyer’s past performance cycle (Figure 2-6). A discussion of the three uses (responsibility determination, source selection, and award or incentive determination) follows.

Figure 2-6: Use, Buyer Past Performance Cycle

Responsibility Determination

The FAR uses the term responsible to describe an offeror that has the capability, tenacity, and perseverance to perform a particular contract. Every contract award requires a determination of responsibility; a contracting officer’s signature on an awarded contract signifies the affirmative determination that the contractor is responsible.

Before awarding a contract, a contracting officer conducts a responsibility determination. An affirmative responsibility determination is when a government agency (i.e., a contracting officer) concludes that an offeror is in fact an acceptable contract awardee. At this point, multiple offerors may be responsible.

A responsibility determination is different from a source selection evaluation, which is the process of choosing which offeror, among a group of offerors or other alternatives, will receive a contract award. Every contract award, including the many made without a formal source selection process (e.g., a sole source award or a small purchase), requires an affirmative responsibility determination.

The Small Business Administration may become involved when a contracting officer determines that a small business offeror is non-responsible. The SBA may then issue a certificate of responsibility, called a certificate of competency, which can override the awarding agency’s non-responsibility finding.

The past performance of a prospective contractor is one element considered during this process. A responsibility determination is a subjective determination made based on seven factors:

1. Have or can get adequate financial resources to perform

2. Have or can get capacity to perform

3. Have a satisfactory performance record (i.e., past performance)

4. Have a satisfactory record of ethics and integrity (i.e., past performance)

5. Have or can get needed business and performance skills to perform

6. Have or can get needed facilities to perform

7. Be qualified and eligible under applicable laws and regulations.

The best evidence that a business “has or can get” these elements is that it has successfully used them on other government contracts similar in size, scope, and complexity; in effect, that it has satisfactory past performance in all seven areas.

Source Selection

Source selection is the government’s most recognizable use of past performance information and the use most often considered when discussing how agencies use past performance. This use is covered in detail in Chapter 5 on past performance evaluations.

Award Fee, Award Term, and Incentive Determinations

Award and incentive fee arrangements used in award and incentive type contracts are intended to:

• Provide for assessments of contractor performance levels

• Focus the contractor on areas of greatest importance

• Communicate assessment procedures and thus government priorities

• Provide for effective, two-way communication between the contractor and evaluators

• Provide for an equitable and timely assessment process

• Establish an effective organizational structure to administer award fee provisions.

Retrospective performance (i.e., recent past performance or performance during the contract award or incentive period just completed) is the primary factor in an award or incentive determination. Award fee, award term, and incentive refer to the specific contract types requiring these determinations. The contract will specify (or should specify) the performance assessment criteria, the periodicity, and other aspects of the administration of the award or incentive. These matters are covered in an award or incentive fee determination plan included as an addendum to the contract.

Incentives and awards can be either subjective or objective. For objective plans, typically associated with incentive-fee arrangements, there is less likely to be an evaluation of past performance as part of the administration of the plan. In subjective plans, typically associated with an award fee or award term plan, there is a high likelihood that subjective measures will be defined in the contract and contractor performance will be assessed against these criteria to determine the amount of the award.
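The objective/subjective distinction can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a model of any real plan: the objective case assumes a cost-plus-incentive-fee style formula in which the fee moves mechanically with cost results through a contractor share ratio, bounded by minimum and maximum fees; the subjective case assumes a hypothetical award fee plan in which evaluators rate each contract-defined criterion from 0.0 to 1.0 and a weighted score sets the share of the award fee pool earned. All names, weights, and dollar figures are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class IncentiveTerms:
    """Hypothetical cost-plus-incentive-fee terms for an objective plan."""
    target_cost: float
    target_fee: float
    contractor_share: float  # contractor's share of any underrun or overrun, e.g., 0.20
    min_fee: float
    max_fee: float


def objective_incentive_fee(terms: IncentiveTerms, actual_cost: float) -> float:
    """Objective plan: the fee follows from a formula, with no evaluator judgment."""
    underrun = terms.target_cost - actual_cost  # negative when costs overrun the target
    fee = terms.target_fee + terms.contractor_share * underrun
    return max(terms.min_fee, min(terms.max_fee, fee))


def subjective_award_fee(pool: float, weights: dict[str, float],
                         ratings: dict[str, float]) -> float:
    """Subjective plan (hypothetical): evaluators rate performance from 0.0 to 1.0
    against criteria defined in the contract; the weighted score sets the fee."""
    total_weight = sum(weights.values())
    score = sum(weights[name] * ratings[name] for name in weights) / total_weight
    return pool * score


terms = IncentiveTerms(target_cost=1_000_000, target_fee=70_000,
                       contractor_share=0.20, min_fee=20_000, max_fee=120_000)
print(objective_incentive_fee(terms, actual_cost=900_000))    # underrun raises the fee
print(objective_incentive_fee(terms, actual_cost=1_300_000))  # overrun, floored at min_fee
print(subjective_award_fee(100_000,
                           {"technical": 0.5, "schedule": 0.3, "management": 0.2},
                           {"technical": 0.9, "schedule": 0.8, "management": 1.0}))
```

Only the second function needs an evaluator’s judgment as input, which is why subjective plans are the ones that generate assessments of past performance.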

These contract types are less frequently used but do generate past performance information that must be documented and stored. As of the September 2013 rewrite of FAR 42.1500, these performance assessments are required to be captured in CPARS, will thus be retained in PPIRS, and will be available for either six years (for construction and architect-engineer contracts) or three years (for all other contracts) for agencies to review as part of past performance evaluations during source selection.
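The availability rule in the previous paragraph reduces to simple date arithmetic. The Python sketch below assumes the six- or three-year period is counted from a single reference date supplied by the caller (which event actually starts the clock should be confirmed in the current text of FAR subpart 42.15) and treats the final day as inclusive; all function names are hypothetical.

```python
from datetime import date


def add_years(start: date, years: int) -> date:
    """Add whole calendar years, mapping Feb 29 to Feb 28 in non-leap years."""
    try:
        return start.replace(year=start.year + years)
    except ValueError:  # start is Feb 29 and the target year is not a leap year
        return start.replace(year=start.year + years, day=28)


def retention_years(construction_or_ae: bool) -> int:
    """Six years for construction and architect-engineer contracts, three otherwise."""
    return 6 if construction_or_ae else 3


def available_until(reference_date: date, construction_or_ae: bool) -> date:
    """Last day an assessment remains available for source selection.
    ASSUMPTION: counted from one caller-supplied reference date."""
    return add_years(reference_date, retention_years(construction_or_ae))


def is_available(reference_date: date, construction_or_ae: bool, on: date) -> bool:
    """True if the assessment is still available on the given date (inclusive end)."""
    return on <= available_until(reference_date, construction_or_ae)


print(available_until(date(2014, 3, 31), construction_or_ae=False))
print(available_until(date(2014, 3, 31), construction_or_ae=True))
```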

Award fee determinations are unilateral decisions by the government, even though a best practice in award fee determination plans is to have the contractor perform a self-assessment and provide input during the review cycle. Award fees are typically paid in three- to six-month increments, so an award or incentive fee contract can generate a number of award fee determinations, as well as past performance evaluation content in CPARS, over the life of the contract.

SELLER PAST PERFORMANCE CYCLE

The seller past performance cycle is shown in Figure 2-7. Sellers that desire to perform well collect and use past and current performance information to stay informed about their performance, monitor and take corrective action as needed to improve performance, measure progress against goals, and revise measures and goals as needed. They also collect and manage past performance information for more purposes than just using it in their next proposal, such as for employee feedback or as part of a performance improvement program. This focus on continually improving performance, combined with ensuring that performance records reflect positively on their past performance, meshes with the government goal of using past performance information to improve contractor performance.

Figure 2-7: Seller Past Performance Cycle

Collection

Sellers collect past performance information from a variety of sources, including debriefings (on both unsuccessful and successful proposals), program reviews, CPARS reports, and customer satisfaction surveys and programs, as well as information collected by staff performing or monitoring their own firm’s performance.

Debriefings

Debriefings are feedback from a buyer to a seller when a contract decision is made. Typically this is a contract award decision, and the seller may or may not be the awardee; other government decisions, such as exclusion from the competitive range or other exclusion from the competition before award, can also provide the basis for a debriefing. Under the FAR, a bidder must request a debriefing within specific, tight deadlines (for negotiated procurements under FAR Part 15, a written request within three days of receiving notice of the exclusion or the award). For preaward debriefing requests, the party requesting the debriefing can ask to be debriefed immediately or after the contract award is made.

Before choosing between a preaward and a postaward debriefing, the bidder should know what it wants to learn and what it wants to do. If the bidder intends to take action based on the preaward decision, it is likely in its best interest to request a preaward debriefing. If, however, the bidder wants to learn as much as possible about the eventual award decision but is unlikely to take immediate action, it should probably request a postaward debriefing, which can reveal more about the source selection decision.

Debriefings are an important part of past performance information collection. Sellers should take full advantage of every debriefing opportunity: they should request a debriefing whenever one is available, ask for and take advantage of in-person debriefings, and come fully prepared. Coming fully prepared means reviewing the solicitation and the submitted proposal, along with any discussion or clarification rounds that have occurred. Sellers should be aware of the varied viewpoints on the buyer side and seek direct participation and feedback from each of them in the debriefing. These viewpoints include those of the contracting officer, the source selection authority, the technical evaluation team lead, and the leads of other evaluation teams (management, past performance, price, or other). Preparation also means that the seller’s participants in the debriefing arrive with a set of questions agreed upon in advance. Feedback during a debriefing can and should cover the competitive field, the evaluation process, the proposal, and the rating of the proposal. Questions for consideration include:

• Was the successful offeror already performing work for the agency?

• What other firms are on the successful offeror’s team?

• Who were the other offerors?

• What did the price and technical score distribution look like?

• Please describe the process by which evaluation scores were derived by the government evaluators.

• Please describe the composition of the evaluation team.

• Please provide a detailed breakdown of our technical score by proposal section, including detail on strengths and weaknesses.

• Please provide specific feedback on the subcontractor plan rating.

• Please describe the perceived strengths and weaknesses in our proposed incumbent personnel retention strategy.

• Please describe the perceived strengths and weaknesses in our proposed transition plan.

• What needed improvement in our proposal, or in the government’s assessment of the risk associated with an award to our firm? How could we have mitigated this perceived risk in each of the following areas?

  ◦ Company experience

  ◦ Management approach

  ◦ Facilities or geographic coverage

  ◦ Key personnel

  ◦ Proposed labor mix and utilization

  ◦ Proposed technical solution

  ◦ Qualifications of proposed personnel

  ◦ Past performance.

As this list of possible questions shows, a debriefing is an opportunity to obtain feedback not only on how the firm’s past performance was perceived and rated but also on other aspects of its proposal that might affect how the firm is viewed.

Program Reviews

The term program review is used here to mean any review, formal or otherwise, conducted by an agency or client on the progress of a project or program. In projects and programs involving contracted support, such reviews normally include comments on contractor performance. These comments give a seller a potential collection point for learning how a particular buyer or agency views its performance.

These views of contractor performance are not likely to be stored in a searchable repository of past performance information, but they could form the basis for close-at-hand information about contractor performance or purposeful collection of past performance information at the activity or agency level on a specific project or program.

CPARS Reports

It is in a firm’s best interest to proactively manage its CPARS reports. Firms are given limited access to CPARS through individual, named account holders. A firm needs to designate an account holder who actively monitors postings and the associated email notifications. Routing notifications to an organizational mailbox with multiple recipients helps ensure that they are received and acted upon, provided that accountability for action is not lost in doing so. A firm should know which of its projects are ongoing and when CPARS reports are or could be completed; it should prompt its clients to complete the reports and to fully document the firm’s performance, and it should comment appropriately on the filed reports. A firm should also collect and store its CPARS reports for its own use.
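A firm tracking its own CPARS exposure might keep something as simple as the following Python sketch: one record per contract, and a query that lists contracts whose rating period ends soon (or has already ended) with no report received, so the account holder knows which clients to prompt. The record fields, the 60-day window, and the sample contract numbers and contacts are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ContractRecord:
    """Hypothetical internal tracking record; field names are illustrative."""
    contract_number: str
    client_contact: str
    period_end: date              # end of the current CPARS rating period
    report_received: bool = False


def needs_follow_up(records: list[ContractRecord], today: date,
                    window_days: int = 60) -> list[ContractRecord]:
    """Records whose rating period ends within `window_days` of today, or has
    already ended, with no report received; overdue items sort first."""
    horizon = today + timedelta(days=window_days)
    pending = [r for r in records if not r.report_received and r.period_end <= horizon]
    return sorted(pending, key=lambda r: r.period_end)


today = date(2015, 6, 1)
portfolio = [
    ContractRecord("HYPOTHETICAL-A", "COR Smith", date(2015, 9, 30)),
    ContractRecord("HYPOTHETICAL-B", "COR Jones", date(2015, 6, 15)),
    ContractRecord("HYPOTHETICAL-C", "COR Lee", date(2015, 5, 20)),
    ContractRecord("HYPOTHETICAL-D", "COR Diaz", date(2015, 6, 30), report_received=True),
]
for record in needs_follow_up(portfolio, today):
    print(record.contract_number, record.period_end, record.client_contact)
```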

Customer Satisfaction Surveys

Customer satisfaction monitoring can be done in a variety of ways. Firms can and should collect customer satisfaction information on a regular, recurring basis. Conventional wisdom regarding this collection method is that the best results are achieved by using a neutral or objective third party to solicit and collect customer satisfaction data in a manner that makes it relatively easy for the customer to provide meaningful feedback. The methods to do this are varied and, compared to the highly scripted nature of CPARS, can be easily tailored to the type and depth of feedback sought, the disposition of the customer toward responding to queries, and the retention and dissemination of the information collected. Customer satisfaction and perceptions can be collected from existing, past, prospective, or representative customers.

Staff Collection

An often overlooked source of past performance information, and of both client perceptions and self-perceptions of current performance, is the one perhaps closest to the client on a regular basis: the staff performing on current projects. Collection opportunities include normal daily interactions, the submission and review of recurring status reports, and program or project reviews. Firms that perform government work often default to, or rely upon, the government to specify the frequency and format of the program and project reviews where this type of information can arise and feedback can be collected. Firms are well served by seizing these opportunities: taking the initiative to schedule and conduct regular reviews, submitting recurring reports and following up on the performance feedback they elicit, and driving the agenda in client interactions to obtain feedback specific enough to inform service delivery and the management of client perceptions.

Interpretation

Unlike the government buyer, the seller is not guided by rule and regulation in the evaluation of its own past performance. Our choice of the term interpretation as opposed to evaluation reflects this distinction. (Evaluation of past performance information is covered in greater detail in Chapter 5.) The seller can choose to evaluate its own past performance against a grading scale or schema. For the purposes of proposals, it should evaluate its past performance information to select the past performance citations to use in a particular proposal, to choose whether to bid on a solicitation, and to determine how to best present its past performance information.
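One possible grading schema for choosing citations is sketched below in Python. It assumes the firm scores each candidate contract’s similarity to the solicitation in size, scope, and complexity on a 0.0 to 1.0 scale, maps the five CPARS adjectival ratings to hypothetical point values, filters out citations unlikely to be judged relevant, and ranks the rest by relevancy-weighted rating. The weights, threshold, and sample contracts are illustrative assumptions, not an evaluation standard.

```python
from __future__ import annotations

from dataclasses import dataclass

# Point values for the CPARS adjectival ratings; the numeric mapping is an assumption.
RATING_POINTS = {
    "Exceptional": 5,
    "Very Good": 4,
    "Satisfactory": 3,
    "Marginal": 2,
    "Unsatisfactory": 1,
}


@dataclass
class Citation:
    name: str
    rating: str        # overall rating the customer gave on this contract
    size: float        # self-assessed similarity to the solicitation, 0.0 to 1.0
    scope: float
    complexity: float


def relevancy(c: Citation) -> float:
    """Equal-weight average of size, scope, and complexity similarity (assumed)."""
    return (c.size + c.scope + c.complexity) / 3


def select_citations(candidates: list[Citation], count: int = 3,
                     min_relevancy: float = 0.5) -> list[Citation]:
    """Drop citations an evaluator is unlikely to find relevant, then rank the
    rest by relevancy-weighted rating and keep the top `count`."""
    relevant = [c for c in candidates if relevancy(c) >= min_relevancy]
    relevant.sort(key=lambda c: relevancy(c) * RATING_POINTS[c.rating], reverse=True)
    return relevant[:count]


candidates = [
    Citation("Contract A", "Exceptional", size=0.9, scope=0.8, complexity=0.7),
    Citation("Contract B", "Very Good", size=0.9, scope=0.9, complexity=0.9),
    Citation("Contract C", "Satisfactory", size=1.0, scope=1.0, complexity=1.0),
    Citation("Contract D", "Exceptional", size=0.2, scope=0.3, complexity=0.1),
]
print([c.name for c in select_citations(candidates, count=2)])
```

If too few candidates clear the relevancy threshold, that shortfall is itself a useful input to the decision whether to bid.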

The seller needs to interpret the past performance information it receives, seeks out, collects, or otherwise encounters. This interpretation includes making sense of the information, both for itself and with the aim of predicting how others (e.g., buyers) will make sense of and evaluate the information—particularly for relevancy and scoring against proposal and CPARS evaluation criteria.

Of primary consideration in a seller’s interpretation is removing or overcoming biases in its analysis. Each collection source, method, and channel comes with its own inherent lean toward a particular view or perspective. One bias that is pervasive and difficult to overcome is the strong emotional response of the party receiving feedback or past performance information when its own “blood, sweat, and tears” went into delivering the goods or services. Additionally, the delivery team itself is highly likely to be graded, judged, compensated, or in some way affected by the nature of the past performance information it receives. Under these circumstances, it is human nature to listen selectively, to lose objectivity, to filter information, or to formulate an explanation or retort instead of actively listening when confronted with feedback and information about past performance. This is particularly true when that feedback or information is critical or seemingly prepared and provided with little thought or effort.

Acknowledging, then, that it is difficult to predict how others will evaluate one’s past performance, and that persistent biases and filters stand between a performance and any later look back at it through past performance information, how can the information be interpreted? One way is to submit it to a proxy for the eventual or actual evaluators: a person with similar attributes (e.g., someone who used to work at the buyer agency, has served in the same or a similar role as an expected evaluator, or has had similar experiences) who can help the seller understand the actual evaluator’s potential disposition and considerations. Another method is to seek direct feedback from prospective evaluators at times other than during a proposal evaluation. This feedback can be sought during formal and informal project and program performance reviews, during and after the submission of status reports, in advance of a CPARS evaluation point, and during data collection as part of a customer satisfaction program.

Storage and Retention

Seller retention and storage of past performance information is not prescribed by any governing or regulatory guidance outside of the firm itself; therefore, there is a range of ways this is accomplished, from doing nothing in particular to store or manage past performance information to tightly controlling the storage and retention of past performance information using all manner of archiving and retrieval systems and topologies. This topic is covered in greater detail in Chapter 4.

Use

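One way to think about how a firm manages its past performance is through the lens of an equation or model. Heskett, Sasser, and Schlesinger provide an equation for customer value (1997):

$$\text{Value} = \frac{\text{Results produced for the customer} + \text{Process quality}}{\text{Price to the customer} + \text{Costs of acquiring the service}}$$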

They use this equation to illustrate that value to the customer is a function of both results achieved and quality of service offset against the price and other costs associated with acquiring the service. In this model, managing past performance means seeking an understanding of the results, quality, price, and costs as seen by the customer to increase value to the customer and thus the value the firm provides in the marketplace. Another model describing how a firm manages its past performance is called a maturity model (see Chapter 4).
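Heskett, Sasser, and Schlesinger’s model treats customer value as a ratio: results produced for the customer plus process quality, divided by price to the customer plus the costs of acquiring the service. A small worked example in Python shows how each lever moves value. The inputs are arbitrary values on a common, hypothetical index chosen only to illustrate the arithmetic; they are not measurements.

```python
def customer_value(results: float, process_quality: float,
                   price: float, acquisition_costs: float) -> float:
    """Customer value as a ratio of benefits (results plus process quality) to
    costs (price plus the costs of acquiring the service). All four inputs are
    assumed to be on one common, hypothetical index."""
    costs = price + acquisition_costs
    if costs <= 0:
        raise ValueError("price plus acquisition costs must be positive")
    return (results + process_quality) / costs


baseline = customer_value(results=8, process_quality=6, price=10, acquisition_costs=4)
easier_to_buy = customer_value(results=8, process_quality=6, price=10, acquisition_costs=2)
better_quality = customer_value(results=8, process_quality=8, price=10, acquisition_costs=4)
print(baseline, easier_to_buy, better_quality)
```

With these sample inputs, cutting acquisition costs from 4 to 2 lifts value from 1.0 to about 1.17, and raising process quality from 6 to 8 lifts it to about 1.14; either lever raises value without lowering price.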

Regardless of the means used to analyze how a firm does or should manage its past performance, the best and highest use of past performance information is to improve performance. Subordinate uses that support this primary objective include improving feedback, improving the documentation of past performance, and improving proposals.

Improve Performance

Excellence in performance is an ongoing endeavor. It is not a static position that, once achieved, allows the means of achieving it to be forgotten. It is a dynamic state requiring constant improvement guided by, among other things, customer perceptions, observations, and feedback. Worthwhile performance improvement efforts use measures of performance to assess performance, set direction and goals, and measure and monitor progress. Past performance information can serve as an important component of these measures.

A firm can decide by itself that it is an “excellent” performer, and this self-appraisal may even have some merit and generate strong convictions and beliefs about the value of the firm’s products and services. However, in a crowded competitive field like the government contracting market, viable competitors are always present, and what matters is not a firm’s own opinion of its value but the perceptions, judgments, and opinions of its customers and prospective customers. Current customers are already generating past performance information about a particular firm that prospective customers will use to evaluate its past performance while making decisions of great importance to the firm. The time and energy spent managing past performance is directed toward improving these perceptions and judgments. Two elements are at play here: managing and improving perceptions of performance, including the documentation of those perceptions, and using this information to improve performance itself, thus improving future perceptions of performance.

Improve Feedback

A critical element of improving performance is robust and objective feedback from a variety of sources, particularly clients, including past, current, and prospective clients. For a large number of government contractors, past performance information represents the only customer satisfaction information they receive outside of direct feedback during performance. Past performance information provided by government agencies is also a low- or no-cost means of receiving customer feedback. Active involvement and management of this information flow is an important element of obtaining actionable customer feedback on performance.

One way a firm can actively participate in this feedback loop, and improve the feedback it receives, is to perform a self-assessment before each CPARS assessment is due; on an ongoing project, the customer’s due dates for CPARS performance assessments are known. Well in advance of a due date, the firm can take a CPARS form, prepopulate the pro forma contract and administrative blocks, and use the elements in the form to assess its own performance, assigning the ratings it feels are appropriate. The next step is to schedule a time with the rating official, most likely the contracting officer’s representative, to go over this self-assessment. The purpose of this session is to have a frank and open discussion about performance and to identify areas where the government evaluator sees a need, or room, for improvement. Armed with this information, the firm can use the remaining time between the discussion and the end of the rating period to address these areas and improve the evaluation relative to what it would have been without the feedback session.
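The self-assessment routine described above works backward from the end of the rating period. The Python sketch below computes the key dates; the 90-day and 75-day lead times and all names are hypothetical defaults, to be adjusted to the contract and to the rating official’s availability.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class SelfAssessmentPlan:
    draft_start: date       # start prepopulating the CPARS form and rating ourselves
    feedback_session: date  # review the self-assessment with the rating official
    period_end: date        # end of the rating period
    improvement_days: int   # time left to act on the official's feedback


def plan_self_assessment(period_end: date, draft_lead_days: int = 90,
                         session_lead_days: int = 75) -> SelfAssessmentPlan:
    """Work backward from the end of the rating period. The default lead
    times are hypothetical and should be tuned to the contract and client."""
    if not 0 < session_lead_days < draft_lead_days:
        raise ValueError("need 0 < session_lead_days < draft_lead_days")
    session = period_end - timedelta(days=session_lead_days)
    return SelfAssessmentPlan(
        draft_start=period_end - timedelta(days=draft_lead_days),
        feedback_session=session,
        period_end=period_end,
        improvement_days=(period_end - session).days,
    )


print(plan_self_assessment(date(2015, 9, 30)))
```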

Improve Documentation of Past Performance

People are more inclined to act than they are to write about an action—particularly the actions of others—and documentation of past performance is essentially writing retrospectively about the actions of others. Human nature runs counter to efforts to improve documentation, as evidenced by low rates of completed CPARS reporting. Sellers in the past performance cycle must overcome this tendency in order to improve their documentation of past performance.

There are a number of means to achieve this goal, some of which include increasing the number of channels and types of feedback received, making CPARS reports more comprehensive and more complete, and increasing the posting of CPARS comments by the seller. The seller must take advantage of every opportunity to “complete the story” in a CPARS report by fully using the comments section or reminding government clients to complete CPARS reports. See Chapter 4 for specifics on how a firm can institutionalize these actions by describing them in plain language and assigning them as specific responsibilities to ensure they are accomplished.

Having an active customer satisfaction program is one way to increase the number of channels and types of feedback received. A customer satisfaction program can be tailored, for instance, to elicit feedback from those who will respond to phone conversations but not to email queries. Eliciting and capturing customer quotes and awards, or “attaboys,” is another example of a concentrated effort to open up and encourage additional types of (positive) feedback that can be incorporated into past performance documentation.

CPARS reports allow approximately one page of contractor comments and a generous allotment of 60 days in which to complete them. Few firms take the time to use these comment sections. Many equate using them with disputing the ratings received, which is neither their stated purpose nor the only way to make use of them. The comments section can be used to complete the story of each record, the story being a complete, stand-alone description of all aspects of the performance during the rating period that a wider audience can understand when relying on this past performance documentation during evaluations of past performance.

Improve Proposals

We have identified improving performance as the primary objective of managing past performance. Contract performance generates the past performance information that will be used in bids and proposals, and improved proposals generally result in more opportunities to perform. Thus we have established a self-reinforcing, positive feedback loop: better performance results in more favorable past performance information that, if managed well, results in improved proposals, which in turn win more opportunities to perform and to improve performance further. How past performance information can best be managed to improve proposals is covered in greater detail in Chapter 4 and Chapter 5.