CHAPTER 4

Too Much of a Good Thing

INFORMATION OVERLOAD, or too much data, was a problem of particular interest to a young man named Herbert Simon. Simon was a business expert who had helped administer the Marshall Plan and who, in 1947, wrote Administrative Behavior, a book about how decisions are made inside organizations.1 In 1978, Simon won the Nobel Prize in economics for demonstrating that economics has as much to do with the brain as it does with math. He spent much of his career trying to build a computer that could think like a brain. There wasn’t as much theory then about how the brain works, but Simon instinctively realized that people don’t make decisions by weighing every possible alternative. They simply don’t have the time. So they make “good enough” decisions based on what has worked before, a strategy Simon called “satisficing.”2

In 1952, while Simon was working as a consultant for the Rand Corporation in Santa Monica, California, he met Allen Newell, a physics graduate of Stanford. Newell felt the same way as Simon about what would later be called artificial intelligence. In 1955 Newell moved to Pittsburgh to join Simon at the Carnegie Institute of Technology, where the two set out to develop a computer program that would exhibit human intelligence.

That program, they realized, had to be based on the way the brain works: the brain, rather than being a precision instrument, runs on rules of thumb. It remembers experience, shapes it into patterns, and then uses the patterns to guess what’s coming down the pike. These guesses could be called hunches or intuitions. But the name that has stuck in the scientific world is heuristics, a term popularized by mathematician George Pólya, who had been Newell’s tutor at Stanford. Heuristics are the rules of thumb (based on experience) that whittle our choices down to manageable numbers, such as deciding to group the stops on a shopping trip so that we first go to all the electronics stores to make price comparisons while they are fresh in our minds.

To test this theory, Newell and Simon wrote a program built on a set of heuristics and ran it on their computer. Done one calculation after another (like checking each person in New York City one at a time), the task would have taken the computer tens of thousands of years. With heuristics, it was completed in one-fourth of a second.
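The contrast between exhaustive calculation and a rule of thumb can be sketched with the shopping-trip example from earlier in this chapter. This is not a reconstruction of Newell and Simon’s program. The store names and coordinates are hypothetical. The rule of thumb is the classic nearest-neighbor heuristic, which always visits the closest unvisited store next, and it is a stand-in chosen for illustration. The exhaustive version checks every possible order of stops, while the heuristic makes one cheap choice per step.

```python
import itertools
import math

# Hypothetical store coordinates on a city grid; illustrative data only.
STORES = {
    "home": (0, 0),
    "electronics_a": (2, 1),
    "electronics_b": (3, 1),
    "grocery": (-2, 3),
    "pharmacy": (-1, 4),
    "hardware": (5, -2),
}


def dist(a, b):
    """Straight-line distance between two named locations."""
    return math.dist(STORES[a], STORES[b])


def route_length(route):
    """Length of a round trip that starts and ends at home."""
    path = ["home", *route, "home"]
    return sum(dist(p, q) for p, q in zip(path, path[1:]))


def exhaustive(stops):
    """Try every ordering of the stops: always optimal, but n! routes."""
    best, checked = None, 0
    for order in itertools.permutations(stops):
        checked += 1
        if best is None or route_length(order) < route_length(best):
            best = order
    return list(best), checked


def nearest_neighbor(stops):
    """Rule of thumb: from wherever you are, go to the closest unvisited stop."""
    route, here, left, checked = [], "home", set(stops), 0
    while left:
        checked += len(left)  # distances compared at this step
        # Break distance ties by name so the result is deterministic.
        nxt = min(left, key=lambda s: (dist(here, s), s))
        route.append(nxt)
        left.remove(nxt)
        here = nxt
    return route, checked


if __name__ == "__main__":
    stops = [s for s in STORES if s != "home"]
    best, n_best = exhaustive(stops)
    quick, n_quick = nearest_neighbor(stops)
    print("exhaustive:", n_best, "orderings,", round(route_length(best), 2))
    print("heuristic: ", n_quick, "comparisons,", round(route_length(quick), 2))
```

With five stops, the exhaustive search checks 120 orderings, while the heuristic makes 15 distance comparisons. With 15 stops, exhaustive search would face about 1.3 trillion orderings, and the heuristic would make 120 comparisons. The heuristic’s route is not guaranteed to be the shortest, only good enough.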

In June 1956, Newell and Simon presented their work at a conference at Dartmouth College. It was the first time that anything approaching “human” intelligence was coaxed out of a machine. John McCarthy, the Dartmouth assistant professor who had organized the meeting, had coined the term artificial intelligence (AI) in his proposal for it, and the name stuck. Before long, researchers were adding other touches of common sense to their algorithms: manipulations such as generalizations, associations, pattern similarities, analogies, and maxims. A related line of work, which lets machines reason with degrees of truth rather than strict true-or-false rules, became known as fuzzy logic.

In July 1979, at the Winter Sports Palace in Monte Carlo, these efforts reached a high-water mark of sorts. Here, Luigi Villa, an Italian backgammon whiz, had just been crowned world backgammon champion. But he stuck around for one more contest. As the theme from Star Wars blared from the speakers, a three-foot-tall robot emerged on stage, tangled itself momentarily in the curtains (causing the cheering crowd to burst into laughter), bumped into a table (evoking more laughter), and then took its place across a backgammon board from Villa. The robot, dubbed Gammonoid, was connected (via satellite) to a computer located at Carnegie Mellon University in Pittsburgh.3

The computer program had been written by Hans Berliner, a world correspondence chess champion and student of Herb Simon’s. When the audience settled down, the game commenced. No world champion, in any board game, had ever been defeated by a software program. But Berliner had carefully shaped the heuristics in the program along the lines of human thinking. He didn’t tell Gammonoid how to win; he didn’t tell it what to do in every circumstance. If he had, Gammonoid would have spent years making those step-by-step calculations until he toppled over in a smoking heap. Rather, Berliner wrote the software to predict patterns that would pare down the possible alternatives, much as the brain does. Six games were played, and in each one Gammonoid beat the world champion. The world was amazed (and rather amused) by the feat. “Gammonoid the Conqueror,” the Washington Post exclaimed the following day.4

Did Gammonoid possess human intelligence? No. He could do one thing: play backgammon. He couldn’t even chew a stick of gum, let alone decide to do so. And that was the problem in trying to build a prediction machine like the mind. But that was OK with Simon. Gammonoid was only the beginning. “It is not my aim to surprise or shock you,” he said, “but the simplest way I can summarize is to say that there are now in the world machines that think, that learn and that create. Moreover, their ability to do these things is going to increase rapidly until—in a visible future—the range of problems they can handle will be coextensive with the range to which the human mind has been applied.”5

After Gammonoid came Deep Blue, the IBM computer that in 1997 beat world chess champion Garry Kasparov. That triumph, as great as it may have seemed, was similar to Gammonoid’s. It didn’t prove that a machine had human intelligence. In fact, it prompted AI pioneer and author Marvin Minsky to note, “Deep Blue might be able to win at chess, but it wouldn’t know to come in from the rain.”6 In a sense, Gammonoid and Deep Blue hampered the quest for the thinking machine, because they instilled in us the idea of “artificial” intelligence. What we want, really, is an artificial thing that creates real intelligence. To get that, we need to make something that can tackle predictions, heuristics, and intuition, as Simon demonstrated years ago.

The Flaw of Information

We tend to assume that the best way to solve a problem is to have perfect information and perfect calculation. But it is prediction in the face of limited information that makes our brains, well, thoughtful. In Blink: The Power of Thinking Without Thinking, author Malcolm Gladwell relates a situation in which prediction triumphs. Gladwell tells the story of the victory of Confederate General Robert E. Lee over Union General Joe Hooker at the Battle of Chancellorsville. Hooker had the upper hand: a larger army that had been divided so that it was squeezing the Confederates in a vise. He had also infiltrated Lee’s army with spies and had an abundance of information.7

But Lee sensed what Hooker was up to, and so he divided his army and quietly sent his forces into place near the Union encampment. When Hooker’s men were eating dinner, the Rebel forces descended on them, sending the Union soldiers into a rout. “It was wisdom that someone acquires after a lifetime of learning and watching and doing,” Gladwell explains. “It’s judgment . . . Lee’s ability to sense Hooker’s indecision, to act on the spur of the moment, to conjure up a battle plan that would take Hooker by surprise—his ability, in other words, to move quickly and instinctively on the field of battle—was so critical that it is what made it possible for him to defeat an army twice the size of his. Judgment matters: it is what separates winners from losers.”8 In short, Lee took advantage of his ability to predict.

Gerd Gigerenzer, who has authored numerous academic articles on prediction, offers another counterintuitive idea.9 According to Gigerenzer, intuition often arises from how little we know of something rather than how much. He notes, “Intuitions based on only one good reason tend to be accurate when one has to predict the future (or some unknown present state of affairs), when the future is difficult to foresee, and when one has only limited information.” In other words, he says, one good reason is better than many. Less is more. And with that one good reason, notes Gigerenzer, we can get “almost unfairly easy insight.”10
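Gigerenzer and his colleagues formalized the “one good reason” idea in a heuristic called take-the-best. To decide which of two options ranks higher, you check cues one at a time, in order of how reliable they are, and decide on the first cue that tells the options apart. The minimal sketch below applies it to guessing which of two cities is larger. The cities, cues, and cue order are all hypothetical.

```python
import random
from typing import Optional

# Cues ordered from most to least reliable. This order (the cue validity)
# is an assumption for illustration, not a measured ranking.
CUES = ["is_capital", "has_major_league_team", "has_university", "on_intercity_rail"]

# Hypothetical cities with yes/no answers for each cue; not real data.
CITIES = {
    "Avalon":   {"is_capital": False, "has_major_league_team": True,
                 "has_university": True, "on_intercity_rail": True},
    "Brookton": {"is_capital": False, "has_major_league_team": False,
                 "has_university": True, "on_intercity_rail": True},
    "Cedar":    {"is_capital": True, "has_major_league_team": False,
                 "has_university": False, "on_intercity_rail": False},
    "Dunmore":  {"is_capital": False, "has_major_league_team": False,
                 "has_university": True, "on_intercity_rail": True},
}


def take_the_best(a: str, b: str, rng: Optional[random.Random] = None) -> str:
    """Guess which of two cities is larger from the first cue that separates them."""
    for cue in CUES:
        has_a, has_b = CITIES[a][cue], CITIES[b][cue]
        if has_a != has_b:
            # One good reason is enough: stop searching and ignore the other cues.
            return a if has_a else b
    # No cue tells the two apart, so fall back on a guess.
    return (rng or random.Random()).choice([a, b])


if __name__ == "__main__":
    print(take_the_best("Avalon", "Brookton"))
    print(take_the_best("Cedar", "Avalon"))
```

Note that `take_the_best("Cedar", "Avalon")` picks Cedar on the strength of a single cue, even though Avalon wins on the other three. The heuristic deliberately ignores the remaining information once one good reason has been found.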

In the case of Hooker and Lee, Hooker had the advantage in facts. He had a network of spies, and he had hot air balloons floating nearly over the Confederates’ heads. He was dead sure of himself. “My battle plans are perfect,” he boasted. “And when I start to carry them out, may God have mercy on Bobby Lee, for I shall have none.”11 Bobby Lee didn’t have the same wealth of information; in fact, he was so blissfully in the dark that Hooker managed to move seventy thousand Union troops behind him without his knowledge.

What caused General Lee to prevail? According to Gladwell, Lee had the blink mojo working for him: the instinct to react fast, move swiftly, and push the element of surprise back on Hooker. Or, as Gigerenzer would argue, Lee had just enough information and nothing more. Lee didn’t need spies and hot air balloons circling the battlefield; he had patterns in his head that predicted the path to take. In World War II, Dwight Eisenhower could be said to have done the same thing: faced with the complexities of D-Day, with the weather deteriorating and the bold invasion of Europe possibly already detected, he made a decision with limited information, and the decision was to go.

We see this kind of decision making play out every day. Some executives become victims of analysis paralysis, thinking they need to weigh every bit of information against all possible outcomes. Those executives rarely make it very far. In contrast, other executives make quick decisions, based on limited information, using the brain’s implicit ability to predict the best path.

Monty Hall and Door Number 3

Have you ever heard of the Monty Hall Dilemma? This is a behavioral science experiment derived from the game show Let’s Make a Deal (whose host was Monty Hall). In the experiment, “Monty” asks the subject to choose among three doors: one hiding a new car, and the others hiding gag prizes. Once the subject has made a choice (say, door number 1), Monty, who knows where the car is, opens one of the unchosen doors (let’s say door number 2) to reveal a gag prize. It is now clear that the car is behind either door number 1 or door number 3. Then comes the dilemma: Monty asks the subject, “Do you want to stay with the original door you chose, or switch?” What would you do?

Most people choose to stay with their original choice. But that is the wrong decision. Switching wins the car two-thirds of the time, while staying wins only one-third of the time, so you are twice as likely to win if you switch. Why do people make the wrong decision? It turns out that Monty Hall is giving people too much information, the kind of information that Gigerenzer and Gladwell were concerned about. The right decision would have been much easier if, after you chose door number 1, Monty had offered to let you trade it for both doors 2 and 3. In that case, everyone would switch, as we demonstrated in research studies while I was at Brown. But the two scenarios are actually identical: in both, you win by switching exactly when your first pick was wrong, which happens two times out of three.12
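A quick simulation makes the two-to-one advantage concrete. This sketch assumes the standard rules described above: Monty knows where the car is and always opens an unchosen door that hides a gag prize. If he opened doors at random, the odds would change. The sketch also simulates the easier framing of trading your door for the other two.

```python
import random


def play_round(switch: bool, rng: random.Random) -> bool:
    """Play one round; return True if the contestant ends up with the car."""
    doors = [1, 2, 3]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # Monty knows where the car is and always opens an unchosen door with a gag prize.
    opened = rng.choice([d for d in doors if d != pick and d != car])
    if switch:
        # Move to the one door that is neither the original pick nor the opened one.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == car


def win_rate(switch: bool, trials: int = 100_000, seed: int = 42) -> float:
    """Fraction of simulated rounds won with a fixed stay-or-switch strategy."""
    rng = random.Random(seed)
    return sum(play_round(switch, rng) for _ in range(trials)) / trials


def trade_for_both_rate(trials: int = 100_000, seed: int = 42) -> float:
    """Easier framing: you win whenever the car is not behind your original door."""
    rng = random.Random(seed)
    wins = sum(rng.choice([1, 2, 3]) != rng.choice([1, 2, 3]) for _ in range(trials))
    return wins / trials


if __name__ == "__main__":
    print(f"stay:           {win_rate(False):.3f}")
    print(f"switch:         {win_rate(True):.3f}")
    print(f"trade for both: {trade_for_both_rate():.3f}")
```

Switching and the trade-for-both framing both come out near 0.667, and staying comes out near 0.333. The exact digits depend on the random seed.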

How about this brainteaser, courtesy of Amos Tversky and Nobel Laureate Danny Kahneman: “‘Linda’ is 31 years old, single, outspoken, and very bright. She majored in philosophy. As a student she was deeply concerned with such issues as discrimination and social justice. She also participated in several anti-nuclear demonstrations. Now, is Linda more likely to be a bank teller or a feminist bank teller?”13

As Tversky and Kahneman’s research has shown, most people say Linda is more likely to be a feminist bank teller. But why? Linda is always at least as likely to be a bank teller, because every feminist bank teller is also a bank teller. The category “bank teller” contains its subcategory “feminist bank teller,” so whether or not she is a feminist is irrelevant. This is an example of information overload, one where extra information (her college major, her concerns, her political actions) causes errors in judgment. Gigerenzer showed that part of the problem stems from how information is presented.14 And with a group of colleagues from Brown, my team showed that decisions could be reversed by reducing the amount of information or even spacing it out appropriately.15 With less information, we more easily attain the right answer.
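The rule behind the right answer, known as the conjunction rule, takes one line of probability. Write T for “Linda is a bank teller” and F for “Linda is a feminist”:

```latex
P(T \land F) = P(T)\,P(F \mid T) \le P(T), \qquad \text{because } 0 \le P(F \mid T) \le 1.
```

For instance, take the hypothetical numbers P(T) = 0.05 and P(F | T) = 0.9. The conjunction then has probability 0.045, which is still below 0.05. Adding a detail to a description can leave it equally probable or make it less probable, but never more probable.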

Companies haven’t yet gotten to the point of limiting people’s access to data, but I suspect they will. The most recent trends are to increase information flow, build larger data warehouses, and spend more time analyzing before making decisions. These practices run counter to the wisdom of the brain and the insight behind Blink. Gladwell’s book is in fact one of a minority of books about decision making. Most are focused on increasing information flow and driving more data into the decision process. More sophisticated tools are being brought to market, not to simplify things, but to increase the complexity of data. But that is the wrong model for business. It is also the wrong model for a prediction machine.

Making the Internet a Prediction Machine

Amazon.com’s prediction system is as sophisticated as they come, precisely because it discards data in favor of pattern recognition. Think about the last time you were presented with a book suggestion from Amazon.com (hopefully this book). My mom recently purchased a book on Amazon.com after seeing it recommended. The suggestion was so insightful and yet so out of left field that she was convinced a human was behind it. Why are the results often so good? They come from Amazon.com’s strong predictive algorithms, which imitate human decision making by working from a few pieces of data rather than an overwhelming amount. It was no accident that this happened: Dan Ariely, my MIT mentor and author of the best-seller Predictably Irrational, helped Amazon.com build some of these intelligent algorithms.16

Steven Johnson, author of Emergence and Mind Wide Open, also cites Amazon.com’s prediction capability: “The recommendation agent that we interact with at Amazon has gotten remarkably smart in a remarkably short time,” he writes. “If you’ve built up a long purchase history with Amazon, you’ll tend to get pretty sophisticated recommendations . . . The software doesn’t know what it’s like to read a book, or what you feel like when you read a particular book. All it knows is that people who bought this book also bought these other ones; or that people who rated these books highly also rated these books highly . . . Out of that elemental data something more nuanced can emerge.”17
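The “people who bought this book also bought these other ones” idea that Johnson describes can be sketched as simple co-occurrence counting. This is a minimal illustration with hypothetical titles and customers, not Amazon.com’s actual system, whose internals the text does not describe. Production recommenders typically also adjust for how popular each item is and work with far larger data.

```python
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical purchase histories: customer -> set of titles bought.
ORDERS = {
    "ann":  {"Intro to Zen", "Clutter-Free Desk", "Tea Basics"},
    "bob":  {"Intro to Zen", "Clutter-Free Desk"},
    "cara": {"Intro to Zen", "Meditation Now"},
    "dev":  {"Clutter-Free Desk", "Filing Made Easy"},
    "erin": {"Intro to Zen", "Clutter-Free Desk", "Meditation Now"},
}


def co_purchase_counts(orders):
    """For every pair of titles, count how many customers bought both."""
    counts = defaultdict(Counter)
    for titles in orders.values():
        for a, b in combinations(sorted(titles), 2):
            counts[a][b] += 1
            counts[b][a] += 1
    return counts


def also_bought(title, counts, k=2):
    """'People who bought this also bought...': the k most co-purchased titles."""
    return [other for other, _ in counts[title].most_common(k)]


if __name__ == "__main__":
    counts = co_purchase_counts(ORDERS)
    print(also_bought("Intro to Zen", counts))  # ['Clutter-Free Desk', 'Meditation Now']
```

The software knows nothing about what either book says. A Zen title ends up pointing to a desk-clutter title purely because the same customers bought both.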

These algorithms work by looking for patterns in how we buy, rate, and recommend books. From this data, patterns emerge that may, as my mom said, come from left field but are incredibly powerful. As Amazon.com founder Jeff Bezos puts it, “I remember one of the first times this struck me. The main book on the page was on Zen. There were other suggestions for Zen books, and in the middle of those was a book on how to have a clutter-free desk.”18 He goes on to say that this is not something a human would do.

But Bezos is wrong: this is exactly the type of association a human would make, and that is what makes it powerful. Amazon.com was able to make the link between a Zen book, past behavior, and the fact that Bezos was looking to clean up his desk, just as a colleague in human resources might do after peering through a stack of papers, only to find a hapless boss feverishly reading a book on Zen.

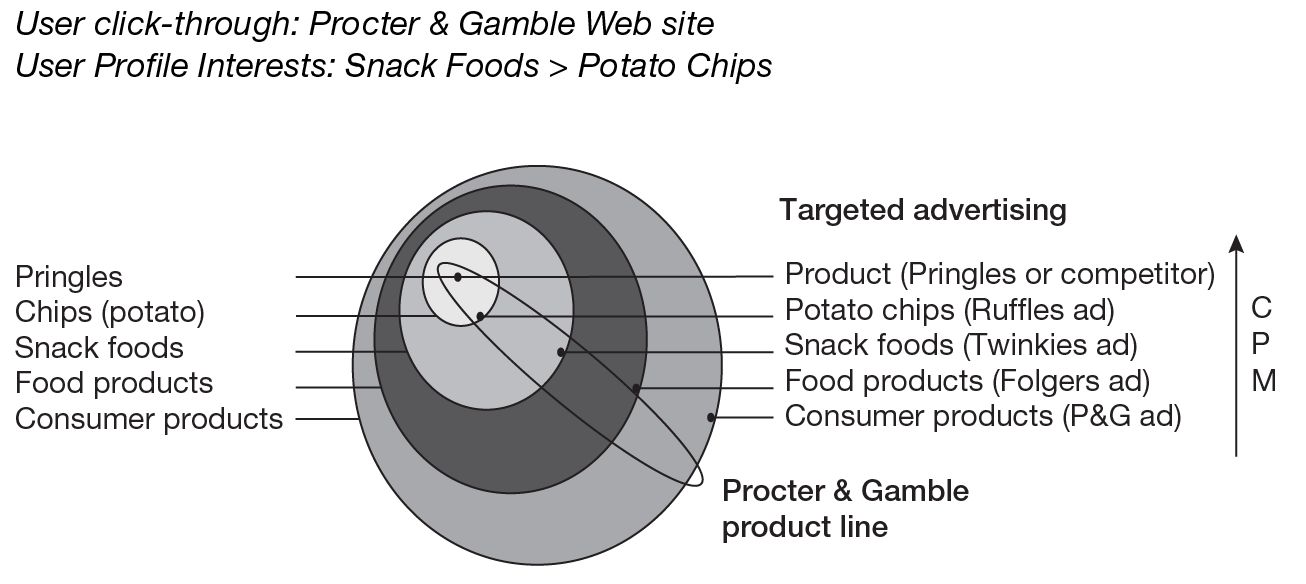

At Simpli.com, we built a prediction engine (developed in part by Jim Anderson and Dan Ariely) that let us compare user searches to advertising. If the user had searched at some point for chips and snacks (versus chips and Intel), the technology would target the user with, say, a Pringles ad. This is roughly the same technology that Google now uses for AdSense, its advertising system.
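The text does not describe how the Simpli.com engine worked internally, so the following is only a toy sketch of the general idea. It uses the other words a user has searched for to decide which sense of an ambiguous word like “chips” is meant, and then picks an ad. The sense word lists, the ad names, and the `pick_ad` function are all hypothetical.

```python
from typing import List, Optional

# Hypothetical context words for two senses of the ambiguous query word "chips".
SENSES = {
    "snack_food": {"snacks", "salsa", "potato", "dip", "party"},
    "semiconductors": {"intel", "processor", "cpu", "silicon", "motherboard"},
}

# Hypothetical ad to show for each sense.
ADS = {"snack_food": "Pringles ad", "semiconductors": "laptop ad"}


def pick_ad(query: str, past_searches: List[str]) -> Optional[str]:
    """Use words from this and earlier searches to decide what 'chips' means."""
    words = set(query.lower().split())
    for search in past_searches:
        words |= set(search.lower().split())
    if "chips" not in words:
        return None
    # Score each sense by how many of its context words the user has used.
    scores = {sense: len(words & cues) for sense, cues in SENSES.items()}
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    (best, top), (_, runner_up) = ranked[0], ranked[1]
    # Only target when one sense clearly wins; otherwise stay undecided.
    return ADS[best] if top > runner_up else None


if __name__ == "__main__":
    print(pick_ad("chips and snacks", []))
    print(pick_ad("chips and Intel", []))
    print(pick_ad("chips", ["potato dip recipes"]))
```

A bare query for “chips” with no useful history returns no targeted ad. The sketch only commits when the surrounding words favor one meaning.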

Using this same technology at NetZero and Juno, we would present people with ads after they visited particular sites. So you would receive a Pringles ad after you visited, say, P&G.com, Lays.com, and KidsSnacks.com. Later we leveraged other useful information: user search terms, click stream data, and buying patterns (see figure 4-1). We increased our most valuable inventory while offering narrower targeting for advertisers.

Figure 4-1: Prediction engine at Simpli.com

The nice thing about running an ISP is that you have endless amounts of data. But as you have seen, prediction is more about eliminating data than using it. So our model identified the universe of possible opportunities and cut it to a narrow set of targeted ad spots. With so much ad inventory on the Internet, reducing the amount available can actually drive revenues higher. So when users’ behavior indicated they might like Pringles, we matched them directly to a Pringles ad; if users were of a broader mind or undecided, we would open up the inventory and give them a more general treat—maybe Pringles, maybe Twinkies, maybe both. And the benefits to the advertisers were striking: more clicks, higher sales, and an overall increase in the efficiency of their marketing programs.
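The narrowing step can be sketched as a simple threshold rule. The site-to-category map, the 0.6 threshold, and `choose_ads` are hypothetical and are not the model used at NetZero and Juno, which the text says also drew on search terms, click stream data, and buying patterns. When one interest clearly dominates a user’s recent visits, the sketch returns a single targeted ad. Otherwise it opens up a broader pool.

```python
from collections import Counter
from typing import List

# Hypothetical mapping from visited sites to an interest category.
SITE_CATEGORY = {"P&G.com": "snacks", "Lays.com": "snacks", "KidsSnacks.com": "snacks"}

# Hypothetical inventory: a narrowly targeted spot per category,
# plus a broader pool for users whose interests are mixed or unclear.
TARGETED = {"snacks": ["Pringles"]}
BROAD_POOL = ["Pringles", "Twinkies"]


def choose_ads(visited: List[str], threshold: float = 0.6) -> List[str]:
    """Narrow to a targeted ad when one interest dominates; otherwise open up."""
    categories = Counter(SITE_CATEGORY.get(site, "other") for site in visited)
    if not categories:
        return list(BROAD_POOL)
    top, count = categories.most_common(1)[0]
    share = count / sum(categories.values())
    if top in TARGETED and share >= threshold:
        return list(TARGETED[top])  # strong signal: one targeted ad spot
    return list(BROAD_POOL)  # weak or mixed signal: a more general treat


if __name__ == "__main__":
    print(choose_ads(["P&G.com", "Lays.com", "KidsSnacks.com"]))
    print(choose_ads(["Lays.com", "news.example"]))
```

The threshold is the design lever. Raising it makes the system commit to a single ad only on stronger evidence, so more users fall into the broader pool.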

Predictions based on limited information, as you can see, are essential to the operation of both the brain and the Internet. But another consideration is in play: in both the brain and the Internet, thoughts and ideas come and go. They are created and destroyed. And the brain has created an interesting way to deal with this deficiency. Don’t believe me? Turn the page. I predict that the next chapter will reveal it all.