A secure product: a product that protects the confidentiality, integrity, and availability of the customers’ information, and the integrity and availability of processing resources, under control of the system’s owner or administrator.

A security vulnerability: a flaw in a product that makes it infeasible—even when using the product properly—to prevent an attacker from usurping privileges on the user’s system, regulating its operation, compromising data on it, or assuming ungranted trust.

As the Internet grows in importance, applications are becoming highly interconnected. In the "good old days," computers were usually islands of functionality, with little, if any, interconnectivity. In those days, it didn’t matter if your application was insecure—the worst you could do was attack yourself—and so long as an application performed its task successfully, most people didn’t care about security. This paradigm is evident in many of the classic best practices books published in the early 1990s. For example, the excellent Code Complete (Microsoft Press, 1993), by Steve McConnell, makes little or no reference to security in its 850 pages. Don’t get me wrong: this is an exceptional book and one that should be on every developer’s bookshelf. Just don’t refer to it for security inspiration.

Times have changed. In the Internet era, virtually all computers—servers, desktop personal computers, and, more recently, cell phones, pocket-size devices, and other form factor devices such as the AutoPC and embedded systems—are interconnected. Although this creates incredible opportunities for software developers and businesses, it also means that these interconnected computers can be attacked. For example, applications not designed to run in highly connected (and thus potentially harsh) environments often render computer systems susceptible to attack because the application developers simply didn’t plan for the applications to be networked and accessible by malicious assailants. Ever wonder why the World Wide Web is often referred to as the Wild Wild Web? In this chapter, you’ll find out. The Internet is a hostile environment, so you must design all code to withstand attack.

Important

Never assume that your application will be run in only a few given environments. Chances are good it will be used in some other, as yet undefined, setting. Assume instead that your code will run in the most hostile of environments, and design, write, and test your code accordingly.

It’s also important to remember that secure systems are quality systems. Code designed and built with security as a prime feature is more robust than code written with security as an afterthought. Secure products are also more immune to media criticism, more attractive to users, and less expensive to fix and support. Because you cannot have quality without security, you must use tact or, in rare cases, subversion to get everyone on your team to be thinking about security. I’ll discuss all these issues in this chapter, and I’ll also give you some methods for helping to ensure that security is among the top priorities in your organization.

If you care about quality code, read on.

On a number of occasions I’ve set up a computer on the Internet just to see what happens to it. Usually, in a matter of days, the computer is discovered, probed, and attacked. Such computers are often called honeypots. A honeypot is a computer set up to attract hackers so that you can see how the hackers operate.

More Information

To learn more about honeypots and how hackers break into systems, take a look at the Honeynet Project at http://project.honeynet.org.

I also saw this process of discovery and attack in mid-1999 when working on the http://www.windows2000test.com Web site, a site no longer functional but used at the time to battle-test Microsoft Windows 2000 before it shipped to users. We silently slipped the Web server onto the Internet on a Friday, and by Monday it was under massive attack. Yet we’d not told anyone it was there.

The point is made: attacks happen. To make matters worse, attackers currently have the upper hand in this ongoing battle. I’ll explain some of the reasons for this in "The Attacker’s Advantage and the Defender’s Dilemma" later in this chapter.

Some attackers are highly skilled and very clever. They have deep computer knowledge and ample time on their hands. They have the time and energy to probe and analyze computer applications for security vulnerabilities. I have to be honest and say that I have great respect for some of these attackers, especially the white-hats, or good guys, many of whom I know personally. The best white-hats work closely with software vendors, including Microsoft, to discover and remedy serious security issues prior to the vendor issuing a security bulletin prompting users to take mitigating action, such as applying a software fix or changing a setting. This approach helps prevent the Internet community from being left defenseless if the security fault is first discovered by vandals who mount widespread attacks.

Many attackers are simply foolish vandals; they are called script kiddies. Script kiddies have little knowledge of security and can attack insecure systems only by using scripts written by more knowledgeable attackers who find, document, and write exploit code for the security bugs they find. An exploit (often called a sploit) is a way of breaking into a system.

This is where things can get sticky. Imagine that you ship an application, an attacker discovers a security vulnerability, and the attacker goes public with an exploit before you have a chance to rectify the problem. Now the script kiddies are having a fun time attacking all the Internet-based computers running your application. I’ve been in this position a number of times. It’s a horrible state of affairs, not enjoyable in the least. People run around to get the fix made, and chaos is the order of the day. You are better off not getting into this situation in the first place, and that means designing secure applications that are intended to withstand attack.

The argument I’ve just made is selfish. I’ve looked at reasons to build secure systems from the software developer’s perspective. Failure to build systems securely leads to more work for you in the long run and a bad reputation, which in turn can lead to the loss of sales as customers switch to a competing product perceived to have better security support. Now let’s look at the viewpoint that really matters: the end user’s viewpoint!

Your end users demand applications that work as advertised and the way they expect them to each time they launch them. Hacked applications do neither. Your applications manipulate, store, and, hopefully, protect confidential user data and corporate data. Your users don’t want their credit card information posted on the Internet, they don’t want their medical data hacked, and they don’t want their systems infected by viruses. The first two examples lead to privacy problems for the user, and the last leads to downtime and loss of data. It is your job to create applications that help your users get the most from their computer systems without fear of data loss or invasion of privacy. If you don’t believe me, ask your users.

Trustworthy computing is not a marketing gimmick. It is a serious push toward greater security within Microsoft and hopefully within the rest of the industry. Consider the telephone: in the early part of the last century, it was a miracle that phones worked at all. We didn’t particularly mind if they worked only some of the time or that we couldn’t call places a great distance away. People even put up with inconveniences like shared lines. It was just a cool thing that you could actually speak with someone who wasn’t in the same room with you. As phone systems improved, people began to use them more often in their daily lives. And as use increased, people began to take their telephones for granted and depend on them for emergencies. (One can draw a similar analogy with respect to electricity.) This is the standard that we should hold our computing infrastructure to. Our computers need to be running all the time, doing the tasks we bought them to do; not crashing because someone sent an evil packet, and not doing the bidding of someone who isn’t authorized to use the system.

We clearly have a lot of work to do to get our computers to be considered trustworthy. There are difficult problems that need to be solved, such as how to make our systems self-healing. Securing large networks is a very interesting and non-trivial problem. It’s our hope that this book will help us all build systems we can truly consider trustworthy.

"Security is a top priority" needs to be a corporate dictum because, as we’ve seen, the need to ship secure software is greater than ever. Your users demand that you build secure applications—they see such systems as a right, not a privilege. Also, your competitor’s sales force will whisper to your potential customers that your code is risky and unsafe. So where do you begin instilling security in your organization? The best place is at the top, which can be hard work. It’s difficult because you’ll need to show a bottom-line impact to your company, and security is generally considered something that "gets in the way" and costs money while offering little or no financial return. Selling the idea of building secure products to management requires tact and sometimes requires subversion. Let’s look at each approach.

The following sections describe arguments you can and should use to show that secure applications are good for your business. Also, all these arguments relate to the bottom line. Ignoring them is likely to have a negative impact on your business’s success.

The idea that secure products are quality products is a simple one to sell to your superiors. All you need to do is ask them if they care about creating quality products. There’s only one answer: yes! If the answer is no, find a job elsewhere, somewhere where quality is valued.

OK, I know it’s not as simple as that, because we’re not talking about perfect software. Perfect software is an oxymoron, just like perfect security. (As is often said in the security community, the most secure system is the one that’s turned off and buried in a concrete bunker, but even that is not perfect security.) We’re talking about software secure enough and good enough for the environment in which it will operate. For example, you should make a multiplayer game secure from attack, but you should spend even more time beefing up the security of an application designed to manipulate sensitive military intelligence or medical records.

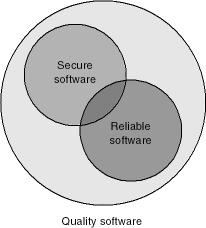

Despite the fact that the need for security and the strength of security are context-driven (different situations call for different solutions), what’s clear in this argument is that security is a subset of quality. A product that is not appropriately secure is inferior to competing products. Some would argue that security is a subset of reliability also; however, that depends on what the user means by security. For example, a solution that protects secret data need not necessarily be reliable. If the system crashes but does so in a manner that does not reveal the data, it can still be deemed secure. As Figure 1-1 shows, if you care about quality or reliability, you care about security.

Like it or not, the press loves to make headlines out of security problems. And sometimes members of the press don’t know what they’re talking about and mischaracterize or exaggerate issues. Why let the facts get in the way of a good story? Because people often believe what they read and hear, if your product is in the headlines because of a security issue, serious or not, you can bet that your sales and marketing people will hear about the problem and will have to determine a way to explain the issue. The old adage that "any news is good news" simply does not hold true for security incidents. Such publicity can lead people to start looking for solutions from your competitors because they offer seemingly more secure products than you do.

Once news gets around that your product doesn’t work appropriately because it’s insecure, some people will begin to shy away from your product or company. Worse yet, people who have a grudge against your product might fan the fire by amassing bad security publicity to prove to others that using your product is dangerous. They will never keep track of the good news, only the bad news. It’s an unfortunate human trait, but people tend to keep track of information that complies with their biases and agendas. Again, if you do not take security seriously, the time will come when people will start looking to your competition for products.

There is a misguided belief in the market that people who can break into systems are also the people who can secure them. Hence, there are a lot of would-be consultants who believe that they need some trophies mounted on their wall for people to take them seriously. You don’t want your product to be a head on someone’s wall!

Like all engineering changes, security fixes are expensive to make late in the development process. It’s hard to determine a dollar cost for a fix because there are many intangibles, but the price of making one includes the following:

The cost of the fix coordination. Someone has to create a plan to get the fix completed.

The cost of developers finding the vulnerable code.

The cost of developers fixing the code.

The cost of testers testing the fix.

The cost of testing the setup of the fix.

The cost of creating and testing international versions.

The cost of digitally signing the fix if you support signed code, such as Authenticode.

The cost to post the fix to your Web site.

The cost of writing the supporting documentation.

The cost of handling bad public relations.

Bandwidth and download costs if you pay an ISP to host fixes for you.

The cost of lost productivity. Chances are good that everyone involved in this process should be working on new code instead. Working on the fix is time lost.

The cost to your customers to apply the fix. They might need to run the fix on a nonproduction server to verify that it works as planned. Once again, the people testing and applying the fix would normally be working on something productive!

Finally, the potential cost of lost revenue, from likely clients deciding to either postpone or stop using your product.

As you can see, the potential cost of making one security fix could easily be in the tens, if not hundreds, of thousands of dollars. If only you had had security in mind when you designed and built the product in the first place!

Note

While it is difficult to determine the exact cost of issuing a security fix, the Microsoft Security Response Center believes a security bug that requires a security bulletin costs in the neighborhood of $100,000.

Another source of good reasons to make security a priority is the Department of Justice’s Computer Crime and Intellectual Property Section (CCIPS) Web site at http://www.cybercrime.gov. This superb site summarizes a number of prosecuted computer crime cases, outlining some of the costs necessitated and damages inflicted by the criminal or criminals. Take a look, and then show it to the CEO. He or she should realize readily that attacks happen often and that they are expensive.

Now let’s turn our attention to something a little more off-the-wall: using subversion to get the message across to management that it needs to take security seriously.

Luckily, I have had to use this method of instilling a security mind-set in only a few instances. It’s not the sort of thing you should do often. The basic premise is you attack the application or network to make a point. For example, many years ago I found a flaw in a new product that allowed an attacker (and me!) to shut down the service remotely. The product team refused to fix it because they were close to shipping the product and did not want to run the risk of not shipping the product on time. My arguments for fixing the bug included the following:

The bug is serious: an attacker can remotely shut down the application.

The attack can be made anonymously.

The attack can be scripted, so script kiddies are likely to download the script and attack the application en masse.

The team will have to fix the bug one day, so why not now?

It will cost less in the long run if the bug is fixed soon.

I’ll help the product team put a simple, effective plan in place with minimal chance of regression bugs.

What’s a regression bug? When a feature works fine, a change is made, and then the feature no longer works in the correct manner, a regression is said to have occurred. Regression bugs can be common when security bugs are fixed. In fact, based on experience, I’d say regressions are the number one reason why testing has to be so intensive when a security fix is made. The last thing you need is to make a security fix, only to find that it breaks some other feature.

Even with all this evidence, the product group ignored my plea to fix the product. I was concerned because this truly was a serious problem; I had already written a simple Perl script that could shut down the application remotely. So I pulled an evil trick: I shut down the application running on the server the team used each day for testing purposes. Each time the application came back up, I shut it down again. This was easy to do. When the application started, it opened a specific Transmission Control Protocol (TCP) port, so I changed my Perl script to look for that port, and as soon as the port was live on the target computer, my script would send the packet to the application and shut it down. The team fixed the bug because they realized the pain and anguish their users would feel. As it turned out, the fix was trivial; the bug was a simple buffer overrun.
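The "look for that port" step described above is ordinary port polling, the same technique deployment scripts use to wait for a service to come up. The following is a minimal Python sketch of that one step only; it is not the original Perl script, and it sends nothing to the service. The function name `wait_for_port` and its parameters are assumptions made for illustration.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout_s: float = 30.0, poll_s: float = 0.5) -> bool:
    """Poll until a TCP connection to (host, port) succeeds or timeout_s elapses.

    Returns True as soon as the port accepts a connection, False on timeout.
    The connection is closed immediately; no data is sent.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            # create_connection performs the TCP handshake; success means the port is live.
            with socket.create_connection((host, port), timeout=poll_s):
                return True
        except OSError:
            pass  # refused or timed out: the service is not listening yet
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)
```

The point of the anecdote is how little effort this takes: a service announces itself the moment it starts listening, so anyone watching the port knows exactly when to act.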

More Information

Refer to Chapter 5 for more information on buffer overruns.

Another trick, which I recommend you never use except in the most dire situations, is to attack the application you want fixed while it’s running on a senior manager’s laptop. A line you might use is, "Which vice president’s machine do I need to own to get this fixed?"

Note

What does own mean? Own is hacker slang for having complete and unauthorized access to a computer. It’s common to say a system is 0wn3d. Yes, the spelling is correct! Hackers tend to mix numerals and letters when creating words. For example, 3 is used to represent e, zero is used to represent o, and so on. You also often hear that a system was rooted or that someone got root. These terms stem from the superuser account under Unix named root. The Administrator or System account on Microsoft Windows NT, Windows 2000, and Windows XP has an equivalent level of access.

Of course, such action is drastic. I’ve never pulled this stunt—or, at least, I won’t admit to it!—and I would probably e-mail the VP beforehand to say that the product she oversees has a serious security bug that no one wants to fix and that if she doesn’t mind, I’d like to perform a live demonstration. The threat of performing this action is often enough to get bugs fixed.

Important

Never use subversive techniques except when you know you’re dealing with a serious security bug. Don’t cry wolf, and pick your battles.

Now let’s change focus. Rather than looking at how to get the top brass into the game, let’s look at some ideas and concepts for instilling a security culture in the rest of your organization.

Now that you have the CEO’s attention, it’s time to cultivate a security culture in the groups that do the real work: the product development teams. Generally, I’ve found that convincing designers, developers, and testers that security is important is reasonably easy because most people care about the quality of their product. It’s horrible reading a review of your product that discusses the security weakness in the code you just wrote. Even worse is reading about a serious security vulnerability in the code you wrote! The following sections describe some methods for creating an atmosphere in your organization in which people care about, and excel at, designing and building secure applications.

Assuming you’ve succeeded in getting the attention of the boss, have him send an e-mail or memo to the appropriate team members explaining why security is a prime focus of the company. One of the best e-mails I saw came from Jim Allchin, Group Vice President of Windows at Microsoft. The following is an excerpt of the e-mail he sent to the Windows engineering team:

I want customers to expect Windows XP to be the most secure operating system available. I want people to use our platform and not have to worry about malicious attacks taking over the Administrator account or hackers getting to their private data. I want to build a reputation that Microsoft leads the industry in providing a secure computing infrastructure—far better than the competition. I personally take our corporate commitment to security very seriously, and I want everyone to have the same commitment.

The security of Windows XP is everyone’s responsibility. It’s not about security features—it’s about the code quality of every feature.

If you know of a security exploit in some portion of the product that you own, file a bug and get it fixed as soon as possible, before the product ships.

We have the best engineering team in the world, and we all know we must write code that has no security problems, period. I do not want to ship Windows XP with any known security hole that will put a customer at risk.

This e-mail is focused and difficult to misunderstand. Its message is simple: security is a high priority. Wonderful things can happen when this kind of message comes from the top. Of course, it doesn’t mean no security bugs will end up in the product. In fact, some security bugs have been found since Windows XP shipped, and no doubt more will be found. But the intention is to keep raising the bar as new versions of the product are released so that fewer and fewer exploits are found.

The biggest call to action for Microsoft came in January 2002 when Bill Gates sent his Trustworthy Computing memo to all Microsoft employees and outlined the need to deliver more secure and robust applications to users because the threats to computer systems have dramatically increased. The Internet of three years ago is no longer the Internet of today. Today, the Net is much more hostile, and applications must be designed accordingly. You can read about the memo at http://news.com.com/2009-1001-817210.html.

Having one or more people to evangelize the security cause—people who understand that computer security is important for your company and for your clients—works well. These people will be the focal point for all security-related issues. The main goals of the security evangelist or evangelists are to

Stay abreast of security issues in the industry.

Interview people to build a competent security team.

Provide security education to the rest of the development organization.

Hand out awards for the most secure code or the best fix of a security bug. Examples include cash, time off, a close parking spot for the month—whatever it takes!

Provide security bug triaging to determine the severity of security bugs, and offer advice on how they should be fixed.

Let’s look at some of these goals.

Two of the best sources of up-to-date information are NTBugTraq and BugTraq. NTBugTraq discusses Windows NT security specifically, and BugTraq is more general. NTBugTraq is maintained by Russ Cooper, and you can sign up at http://www.ntbugtraq.com. BugTraq, the most well-known of the security vulnerability and disclosure mailing lists, is maintained by SecurityFocus, which is now owned by Symantec Corporation. You can sign up to receive e-mails at http://www.securityfocus.com. On average, you’ll see about 20 postings a day. It should be part of the everyday routine for a security guru to see what’s going on in the security world by reading postings from both NTBugTraq and BugTraq.

If you’re really serious, you should also consider some of the other SecurityFocus offerings, such as Vuln-Dev, Pen-Test, and SecProg. Once again, you can sign up for these mailing lists at http://www.securityfocus.com.

In many larger organizations, you’ll find that your security experts will be quickly overrun with work. Therefore, it’s imperative that security work scales out so that people are accountable for the security of the feature they’re creating. To do this, you must hire people who not only are good at what they do but also take pride in building a secure, quality product.

When I interview people for security positions within Microsoft, I look for a number of qualities, including these:

A love for the subject. The phrase I often use is "having the fire in your belly."

A deep and broad range of security knowledge. For example, understanding cryptography is useful, but it’s also a requirement that security professionals understand authentication, authorization, vulnerabilities, prevention, accountability, real-world security requirements that affect users, and much more.

An intense desire to build secure software that fulfills real personal and business requirements.

The ability to apply security theory in novel yet appropriate ways to mitigate security threats.

The ability to define realistic solutions, not just problems. Anyone can come up with a list of problems—that’s the easy part!

The ability to think like an attacker.

Often, the ability to act like an attacker. Yes, to prevent the attacks, you really need to be able to do the same things that an attacker does.

The primary trait of a security person is a love for security. Good security people love to see IT systems and networks meeting the needs of the business without putting the business at more risk than the business is willing to take on. The best security people live and breathe the subject, and people usually do their best if they love what they do. (Pardon my mantra: if people don’t love what they do, they should move on to something they do love.)

Another important trait is experience, especially the experience of someone who has had to make security fixes in the wild. That person will understand the pain and anguish involved when things go awry and will implant that concern in the rest of the company. In 2000, the U.S. stock market took a huge dip and people lost plenty of money. In my opinion, many people lost a great deal of money because their financial advisors had never been through a bear market. As far as they were concerned, the world was good and everyone should keep investing in hugely overvalued .com stocks. Luckily, my financial advisor had been through bad times and good times, and he made some wise decisions on my behalf. Because of his experience with bad times, I wasn’t hit as hard as some others.

If you find someone with these traits, hire the person.

When my wife and I were expecting our first child, we went to a newborn CPR class. At the end of the session, the instructor, an ambulance medic, asked if we had any questions. I put up my hand and commented that when we wake up tomorrow we will have forgotten most of what was talked about, so how does he recommend we keep our newfound skills up-to-date? The answer was simple: reread the course’s accompanying book every week and practice what you learn. The same is true for security education: you need to make sure that your not-so-security-savvy colleagues stay attuned to their security education. For example, the Secure Windows Initiative team at Microsoft employs a number of methods to accomplish this, including the following:

Create an intranet site that provides a focal point for security material. This should be the site people go to if they have any security questions.

Provide white papers outlining security best practices. As you discover vulnerabilities in the way your company develops software, you should create documentation about how these issues can be stamped out.

Perform daylong security bug-bashes. Start the day with some security education, and then have the team review their own product code, designs, test plans, and documentation for security issues. The reason for filing the bugs is not only to find bugs. Bug hunting is like homework—it strengthens the knowledge they learned during the morning. Finding bugs is icing on the cake.

Each week send an e-mail to the team outlining a security bug and asking people to find the problem. Provide a link in the e-mail to your Web site with the solution, details about how the bug could have been prevented, and tools or material that could have been used to find the issue ahead of time. I’ve found this approach really useful because it keeps people aware of security issues each week.

Provide security consulting to teams across the company. Review designs, code, and test plans.

Tip

When sending out a bug e-mail, also include mechanical ways to uncover the bugs in the code. For example, if you send a sample buffer overrun that uses the strcpy function, provide suggestions for tracing similar issues, such as using regular expressions or string search tools. Don’t just attempt to inform about security bugs; make an effort to eradicate classes of bugs from the code!
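As one way to make the tip above concrete, here is a minimal Python sketch of a regular-expression search for calls that commonly lead to buffer overruns. The watch list `RISKY_CALLS` and the function name `find_risky_calls` are assumptions for illustration, not a list taken from this book; adjust the list to your own coding standards.

```python
import re

# Hypothetical watch list; extend or trim it to match your own coding standards.
RISKY_CALLS = ("strcpy", "strcat", "sprintf", "gets")

# \b keeps "fgets(" and "my_strcpy(" from matching; \s*\( requires an actual call.
PATTERN = re.compile(r"\b(" + "|".join(RISKY_CALLS) + r")\s*\(")


def find_risky_calls(source: str):
    """Return a list of (line_number, function_name, line_text) for each match."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in PATTERN.finditer(line):
            hits.append((lineno, match.group(1), line.strip()))
    return hits


if __name__ == "__main__":
    sample = "void f(char *s) {\n  char buf[16];\n  strcpy(buf, s);\n}\n"
    for lineno, name, text in find_risky_calls(sample):
        print(f"line {lineno}: {name}: {text}")
```

A text search like this also matches calls inside comments and string literals, and it cannot tell a safe call from an unsafe one. Treat its output as a list of places to review, not as a list of bugs.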

There are times when you will have to decide whether a bug will be fixed. Sometimes you’ll come across a bug that will rarely manifest itself, that has low impact, and that is very difficult to fix. You might opt not to remedy this bug but rather document the limitation. However, you’ll also come across serious security bugs that should be fixed. It’s up to you to determine the best way to remedy the bug and the priority of the bug fix.

I’ve outlined the requirement to build more secure applications, and I’ve suggested some simple ways to help build a security culture. However, we should not overlook the fact that as software developers we are always on the back foot. Simply put, we, as the defenders, must build better quality systems because the attacker almost certainly has the advantage.

Once software is installed on a computer, especially an Internet-facing system, it is in a state of defense. I mean that the code is open to potential attack 24 hours a day and 7 days a week from any corner of the globe, and it must therefore resist assault such that resources protected by the system are not compromised, corrupted, deleted, or viewed in a malicious manner. This situation is incredibly problematic for all users of computer systems. It’s also challenging for software manufacturers because they produce software that is potentially a point of attack.

Let’s look at some of the reasons why the attackers can have fun at the defender’s expense. You’ll notice as you review these principles that many are related.

Imagine you are the lord of a castle. You have many defenses at your disposal: archers on the battlements, a deep moat full of stagnant water, a drawbridge, and 5-foot-thick walls of stone. As the defender, you must have guards constantly patrolling the castle walls, you must keep the drawbridge up most of the time and guard the gate when the drawbridge is down, and you must make sure the archers are well-armed. You must be prepared to fight fires started by flaming arrows, and you must also make sure the castle is well-stocked with supplies in case of a siege. The attacker, on the other hand, need only spy on the castle to look for one weak point, one point that is not well-defended.

The same applies to software: the attacker can take your software and look for just one weak point, while we, the defenders, need to make sure that all entry points into the code are protected. Of course, if a feature is not there—that is, not installed—then it cannot be attacked.

Now imagine that the castle you defend includes a well that is fed by an underground river. Have you considered that an attacker could attack the castle by accessing the underground river and climbing up the well? Remember the original Trojan horse? The residents of Troy did not consider a gift from the Greeks as a point of attack, and many Trojan lives were lost.

Software can be shipped with defenses only for pretheorized or preunderstood points of attack. For example, the developers of IIS 5 knew how to correctly defend against attacks involving escaped characters in a URL, but they did not prepare a defense to handle an attack taking advantage of a malformed UTF-8 sequence because they did not know the vulnerability existed. The attacker, however, spent much time looking for incorrect character handling and found that IIS 5 did not handle certain kinds of malformed UTF-8 escaping correctly, which led to a security vulnerability. More information is at http://www.wiretrip.net/rfp/p/doc.asp/i2/d57.htm.
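One well-known class of malformed UTF-8 is the overlong encoding, in which a character such as "/" is written with more bytes than necessary so that it slips past a check made on the raw text. The following Python sketch illustrates the general defense of decoding strictly first and validating afterward. It is not how IIS was fixed; the function name `decode_url_path` and the choice to reject any ".." segment are assumptions made for illustration.

```python
from urllib.parse import unquote_to_bytes


def decode_url_path(raw_path: str):
    """Return the decoded path, or None if the request should be rejected."""
    raw_bytes = unquote_to_bytes(raw_path)  # undo %XX escaping exactly once
    try:
        # Strict decoding refuses malformed input, including overlong sequences.
        decoded = raw_bytes.decode("utf-8", errors="strict")
    except UnicodeDecodeError:
        return None
    # Validate only after decoding, so the check sees the same characters
    # that the file system would see.
    segments = decoded.replace("\\", "/").split("/")
    if ".." in segments:
        return None
    return decoded
```

With this ordering, a path containing the escaped bytes `%c0%af` (an overlong form of "/") fails at the decoding step, and a path containing `..%2f` fails at the segment check, while an ordinary path such as `/docs/caf%C3%A9.html` decodes normally.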

The only way to defend against unknown attacks is to disable features unless expressly required by the user. In the case of the Greeks, the Trojan horse would have been a nonevent if there had been no way to get the "gift" inside the city walls.
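In code, "off unless expressly required" usually means that a feature stays disabled until someone explicitly asks for it. The following Python sketch shows one way to express that; the class `FeatureSet` and the feature names are hypothetical and do not describe any real product's configuration.

```python
# Hypothetical feature names, used only for illustration.
KNOWN_FEATURES = frozenset({"static_files", "cgi_scripts", "remote_admin", "webdav"})


class FeatureSet:
    """Tracks which optional features are on. Everything starts off."""

    def __init__(self):
        self._enabled = set()  # nothing is enabled until someone asks for it

    def enable(self, name: str) -> None:
        if name not in KNOWN_FEATURES:
            raise ValueError(f"unknown feature: {name}")
        self._enabled.add(name)

    def is_enabled(self, name: str) -> bool:
        return name in self._enabled

    def attack_surface(self):
        """List the features an attacker could reach, in sorted order."""
        return sorted(self._enabled)
```

A freshly created `FeatureSet` has an empty attack surface, so a flaw in a feature nobody enabled cannot be reached. The cost is that users must turn on what they need, which is the trade-off the paragraph above argues is worth making.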

The defender’s guard must always be up. The attacker’s life, on the other hand, is much easier. She can remain unnoticed and attack whenever she likes. In some instances, the attacker might wait for just the right moment before attacking, while the defender must consider every moment as one in which an attack might occur. This can be a problem for sysadmins, who must always monitor their systems, review log files, and look for and defend against attack. Hence, software developers must provide software that can constantly defend against attack and monitoring tools to aid the user in determining whether the system is under attack.

The defender must play by the rules, whereas the attacker can play dirty. This is not always true in the world of software, but it’s more true than false. The defender has various well-understood white-hat tools (for example, firewalls, intrusion-detection systems, audit logs, and honeypots) to protect her system and to determine whether the system is under attack. The attacker can use any intrusive tool he can find to determine the weaknesses in the system. Once again, this swings the advantage in favor of the attacker.

As you can see, the world of the defender is not a pleasant one. As defenders, software developers must build applications and solutions that are constantly vigilant, but the attackers always have the upper hand and insecure software will quickly be defeated. In short, we must work smarter to defeat the attackers. That said, I doubt we’ll ever "defeat" Internet vandals, simply because there are so many attackers and so many servers to attack, and because many attackers assail Internet-based computers simply because they can! Or, as George Mallory (1886-1924) answered the question, "Why do you want to climb Mt. Everest?": "Because it is there." Nevertheless, we can raise the bar substantially, to a point where the attackers will find software more difficult to attack and use their skills for other purposes.

Finally, be aware that security is different from other aspects of computing. Other than your own developers, few, if any, people are actively looking for scalability or internationalization issues in software. However, plenty of people are willing to spend time, money, and sweat looking for security vulnerabilities. The Internet is an incredibly complex and hostile environment, and your applications must survive there.