Many books that cover building secure applications outline only one part of the solution: the code. This book aims to be different by covering design, coding, testing, and documentation. All of these aspects are important for delivering secure systems, and it’s imperative that you adopt a disciplined process that incorporates these aspects. Simply adding some "good ideas" or a handful of "best practices" and checklists to a poor development process will result in only marginally more secure products. In this chapter, I’ll describe in a general way some methods for improving the security focus of the development process. I’ll then spend a good amount of time on educational issues because education is both crucial to creating secure products and a pet subject of mine. Then I’ll move on to more specific discussion of the techniques you should use to instill security awareness and discipline at each step in the development process.

However, let’s first look at some of the reasons why people choose not to build secure systems and why many perfectly intelligent people make security mistakes. Some of the reasons include the following:

- Security is boring.
- Security is often seen as a functionality disabler, as something that gets in the way.
- Security is difficult to measure.
- Security is usually not the primary skill or interest of the designers and developers creating the product.
- Security means not doing something exciting and new.

Personally, I don’t agree with the first reason—security professionals thrive on building secure systems. Usually, it’s people with little security experience and perhaps little understanding of security who think it’s boring, and designs and code considered boring rarely make for good quality. As I hope you already know or will discover by reading this book, the more you know about security, the more interesting it is.

The second reason is an oft-noted view, and it is somewhat misguided. Security disables functionality that should not be available to the user. For example, if for usability reasons you build an application allowing anyone to read personal credit card information without first being authenticated and authorized, anyone can read the data, including people with less-than-noble intentions! Also, consider this statement from your own point of view. Is security a "disabler" when your data is illegally accessed by attackers? Is security "something that gets in the way" when someone masquerades as you? Remember that if you make it easy for users to access sensitive data, you make it easy for attackers, too.

The third reason is true, but it’s not a reason for creating insecure products. Unlike performance, which has tangible analysis mechanisms—you know when the application is slow or fast—you cannot say a program has no security flaws and you cannot easily say that one application is more secure than another unless you can enumerate all the security flaws in both. You can certainly get into heated debates about the security of A vs. B, but it’s extremely difficult to say that A is 15 percent more secure than B.

That said, you can show evidence of security-related process improvements: for example, the number of people trained on security-related topics, the number of security defects removed from the system, and so on. A product designed and written by a security-aware organization is likely to exhibit fewer security defects than one developed by a more undisciplined organization. Also, you can potentially measure the effective attack surface of a product. I’ll discuss this in Chapter 3 and in Chapter 19.

Note also that the more features included in the product, the more potential security holes in it. Attackers use features too, and a richer feature set gives them more to work with. This ties in with the last reason cited in the previous bulleted list. New functions are inherently more risky than proven, widely used, more mature functionality, but the creativity (and productivity) of many developers is sparked by new challenges and new functions or new ways to do old functions. Bill Gates, in his Trustworthy Computing memo, was pointed about this when he said, "When we face a choice between adding features and resolving security issues, we need to choose security."

OK, let’s look at how we can resolve these issues.

Ignoring for just a moment the education required for the entire development team—I’ll address education issues in detail in the next section, "The Role of Education"—we need to update the software development process itself. What I’m about to propose is not complex. To better focus on security, you can add process improvements at every step of the software development life cycle regardless of the life cycle model you use.

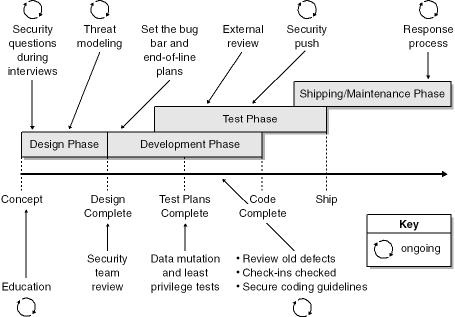

Figure 2-1 shows some innovations that will add more accountability and structure in terms of security to the software development process. If you use a spiral development model, you should just bend the line into a circle, and if you use a waterfall approach, simply place a set of downward steps in the background! I’ll discuss each aspect of these process improvements—and other matters also important during various steps in the process—in detail throughout this chapter.

You’ll notice that many parts of the process are iterative and ongoing. For example, you don’t hire people for your group only at the start of the project; it’s a constant part of the process.

The best example of an iterative step in a software development process that makes security a high priority is the first step: education. I think the most critically important part of delivering secure systems is raising awareness through security education, as described in the next section.

I mentioned that security education is a pet subject of mine or, more accurately, the lack of security education is a pet peeve of mine, and it really came to a head during the Windows Security Push in the first quarter of 2002. Let me explain. During the push, we trained about 8500 people in ten days. The number of people was substantial because we made it mandatory that anyone on a team that contributed to the Windows CD (about 70 groups) had to attend the training seminars, including vice presidents! We had three training tracks, and each was delivered five or six times. One track was for developers, one was for testers, and one was for program managers. (In this case, program managers own the overall design of the features of the product.) Documentation people went to the appropriate track dictated by their area of expertise. Some people were gluttons for punishment and attended all three tracks!

Where am I going with this? We trained all these people because we had to. We knew that if the Windows Security Push was going to be successful, we had to raise the level of security awareness for everybody. As my coauthor, David, often says, "People want to do the right thing, but they often don’t know what the right thing is, so you have to show them." Many software developers understand how to build security features into software, but many have never been taught how to build secure systems. Here’s my assertion: we teach the wrong things in school or, at least, we don’t always teach the right things. Don’t get me wrong, industry has a large role to play in education, but it starts at school.

The best way to explain is by way of a story. In February 2002, I took time out from the Windows Security Push to participate in a panel discussion at the Network and Distributed System Security Symposium (NDSS) in San Diego on the security of Internet-hosted applications. I was asked a question by a professor that led me to detail an employment interview I had given some months earlier. The interview was for a position on the Secure Windows Initiative (SWI) team, which helps other product teams design and develop secure applications. I asked the candidate how he would mitigate a specific threat by using the RSA (Rivest-Shamir-Adleman) public-key encryption algorithm. He started by telling me, "You take two very large prime numbers, P and Q." He was recounting the RSA algorithm to me, not how to apply it. I asked him the question again, explaining that I did not want to know how RSA works. (It’s a black box created and analyzed by clever people, so I assume it works as advertised.) What I was interested in was the application of the technology to mitigate threats. The candidate admitted he did not know, and that’s fine: he got a job elsewhere in the company.

By the way, the question I posed was how you would use RSA to prevent a person selling stock from reneging on the transaction if the stock price rose. One solution is to support digitally signed transactions using RSA and to use a third-party escrow company and timestamp service to countersign the request. When the seller sells the stock, the request is sent to the third party first. The company validates the user’s signature and then timestamps and countersigns the sell order. When the brokerage house receives the request, it realizes it has been signed by both the seller and the timestamp service, which makes it much harder for the seller to deny having made the sell order.
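The sign, countersign, and verify flow just described can be sketched in a few lines. This is a minimal illustration, not a definitive implementation: it uses toy textbook RSA (small fixed primes, no padding) so that it runs with only the Python standard library, and real code should rely on a vetted cryptographic library instead. The function names, the order fields, and the timestamp string are all hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass

# ASSUMPTION: toy textbook RSA for illustration only (no padding, small
# fixed primes). Never use this in production; use a vetted crypto library.
E = 65537  # public exponent shared by both toy key pairs


@dataclass(frozen=True)
class KeyPair:
    n: int  # public modulus
    d: int  # private exponent


def make_keypair(p: int, q: int) -> KeyPair:
    """Build a toy RSA key pair from two primes."""
    return KeyPair(n=p * q, d=pow(E, -1, (p - 1) * (q - 1)))


def canonical(obj) -> bytes:
    """Serialize deterministically so every party hashes the same bytes."""
    return json.dumps(obj, sort_keys=True).encode()


def digest(data: bytes, n: int) -> int:
    """Hash the data and reduce it into the range of the modulus."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n


def sign(data: bytes, key: KeyPair) -> int:
    return pow(digest(data, key.n), key.d, key.n)


def verify(data: bytes, signature: int, n: int) -> bool:
    return pow(signature, E, n) == digest(data, n)


def seller_signs(order: dict, seller: KeyPair) -> dict:
    """Step 1: the seller signs the sell order."""
    return {"order": order, "seller_sig": sign(canonical(order), seller)}


def service_countersigns(signed: dict, seller_n: int,
                         service: KeyPair, now: str) -> dict:
    """Step 2: the third party validates, timestamps, and countersigns."""
    if not verify(canonical(signed["order"]), signed["seller_sig"], seller_n):
        raise ValueError("seller signature is invalid")
    # The countersignature covers the order, the seller's signature,
    # and the timestamp together.
    stamped = canonical([signed["order"], signed["seller_sig"], now])
    return {**signed, "timestamp": now, "service_sig": sign(stamped, service)}


def brokerage_accepts(msg: dict, seller_n: int, service_n: int) -> bool:
    """Step 3: the brokerage requires both signatures to be valid."""
    try:
        stamped = canonical([msg["order"], msg["seller_sig"], msg["timestamp"]])
        return (verify(canonical(msg["order"]), msg["seller_sig"], seller_n)
                and verify(stamped, msg["service_sig"], service_n))
    except KeyError:
        return False  # a signature or the timestamp is missing


# Hypothetical usage with fixed primes chosen only to keep the sketch short.
seller = make_keypair(2**61 - 1, 2**89 - 1)
service = make_keypair(2**107 - 1, 2**127 - 1)
order = {"action": "sell", "symbol": "XYZ", "shares": 100}
stamped_order = service_countersigns(
    seller_signs(order, seller), seller.n, service, "2002-02-06T10:00:00Z")
print(brokerage_accepts(stamped_order, seller.n, service.n))
```

The design point matches the interview question: RSA itself is treated as a black box behind `sign` and `verify`, and the threat is mitigated by who signs what and in which order, not by how the algorithm works internally.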

The principal skill I was looking for in the interview was the ability, in response to a security problem, to apply techniques to mitigate the problem. The candidate was very technical and incredibly smart, but he did not understand how to solve security problems. He knew how security features work, but frankly, it really doesn’t matter how some stuff works. When building secure systems, you have to know how to alleviate security threats. An analogy I like to draw goes like this: you go to a class to learn defensive driving, but the instructor teaches you how an internal combustion engine works. Unless you’re a mechanic, when was the last time you cared about the process of fuel and air entering a combustion chamber and being compressed and ignited to provide power? The same principle applies to building secure systems: understanding how features work, while interesting, will not help you build a secure system.

Important

Make this your motto: Security Features != Secure Features.

Once the panel disbanded, five professors marched up to me to protest such a despicable interview question. I was stunned. They tried convincing me that understanding how RSA worked was extremely important. My contention was that explaining in an exam answer how RSA works is fairly easy and of interest to only a small number of people. Also, the exam taker’s answer is either correct or incorrect; however, understanding threat mitigation is a little more complex, and it’s harder to mark during an exam. After a lively debate, it was agreed by all parties that teaching students how to build secure systems should comprise learning about and mitigating threats and learning how RSA and other security features work. I was happy with the compromise!

Now back to the Windows Security Push. We realized we had to teach people about delivering secure systems because the chances were low that team members had been taught how to build secure systems in school. We realized that many understood how Kerberos, DES (Data Encryption Standard), and RSA worked, but we also knew that this knowledge doesn’t help much if you don’t know what a buffer overrun looks like in C++! As I often say, "You don’t know what you don’t know," and if you don’t know what makes a secure design, you can never ship a secure product. Therefore, it fell to our group to raise the security awareness of 8500 people.

The net of this is that you have to train people about security issues and you have to train them often because the security landscape changes rapidly as new threat classes are found. The saying, "What you don’t know won’t harm you" is simply not true in the area of security. What you do not know can (and probably will) leave your clients open to serious attack. You should make it mandatory for people to attend security training classes (as Microsoft is doing). This is especially true for new employees. Do not assume new hires know anything about secure systems!

Important

Education is critical to delivering secure systems. Do not expect people to understand how to design, build, test, document, and deploy secure systems; they may know how security features work, but that really doesn’t help. Security is one area where "What I don’t know won’t hurt me" does not apply; what you don’t know can have awful consequences.

We were worried that mandatory training would have negative connotations and be poorly received. We were amazed to find we were completely wrong. Why were we wrong? Most software development organizations are full of geeks, and geeks like learning new things. If you give a geek an opportunity to learn about a hot topic such as security, she or he will actively embrace it. So provide your geeks with the education they need! They yearn for it.

Note

While we’re on the subject of geeks, don’t underestimate their ability to challenge one another. Most geeks are passionate about what they do and like to hold competitions to see who can write the fastest, tightest, and smallest code. You should encourage such behavior. One of my favorite examples was when a developer in the Internet Information Services (IIS) 6 team offered a plaster mold of his pinky finger to anyone who could find a security flaw in his code. Even though many people tried—they all wanted the trophy—no one found anything, and the developer’s finger is safe to this day. Now think about what he did for a moment; do you think he cared about losing his trophy? No, he did not; all he wanted was as many knowledgeable eyes as possible to review his code for security defects. He did this because he doesn’t want to be the guy who wrote the code that led to a well-publicized security defect. I call it clever!

It’s unfortunate, but true, that each week we see new security threats or threat variations that could make seemingly secure products vulnerable to attack. Because of this, you must plan ongoing training for your development teams. For example, our group offers monthly training to make people aware of the latest security issues and the reasons for these issues and to teach how to mitigate the threats. We also invite guest speakers to discuss lessons learned in their area of security and to offer product expertise.

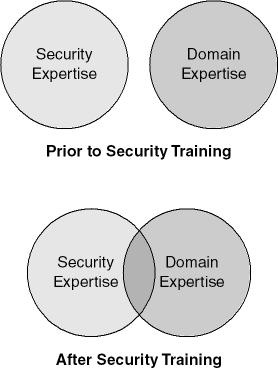

It turns out security education has an interesting side effect. Once you communicate security knowledge to a number of domain experts—for example, in the case of Windows, we have people who specialize in file systems, globalization, HTTP, XML, and much more—they begin thinking about how their feature set can be used by malicious users. Figure 2-2 illustrates this concept.

Figure 2-2. The mind-set change that occurs when you teach security skills to formerly nonsecurity people.

This shift in perspective gave rise to a slogan, "One person’s feature is another’s exploit," as domain experts used their skill and knowledge to come up with security threats in features that were once considered benign.

Tip

If you do not have security skills in-house, hire a security consulting company that offers quality, real-world training courses to upskill your employees.

Important

There are two aspects to security training. The first is to teach people about security issues so that they can look over their current product and find and fix security bugs. However, the ultimate and by far the most important goal of security education is to teach people not to introduce security flaws into the product in the first place!

I often hear that more eyes reviewing code equals more security flaws found and therefore more secure code. This is untrue. The people reviewing the code need to know and understand what security vulnerabilities look like before they can determine whether the code is flawed. Here’s an analogy. While this book was being written, a number of accounting scandals came to light. In short, there’s evidence that a number of companies used some "imaginative" accounting practices. So, now imagine if a company’s CEO is called before the United States Congress to answer questions about the company’s accounting policies and the conversation goes like this:

Congressional representative: "We believe your company tampered with the accounts."

CEO: "We did not."

Congressional representative: "How can you prove it?"

CEO: "We had 10,000 people review our accounts, and no one found a single flaw."

Congressional representative: "But what are the credentials of the reviewers? What is their accounting background, and are they familiar with your business?"

CEO: "Who cares? We had 10,000 people review the books—that’s 20,000 eyes!"

It does not matter how many people review code or specifications for security flaws unless they understand and have experience building secure systems and understand common security mistakes. People must learn before they can perform a truly appropriate review. And once you teach intelligent people about security vulnerabilities and how to think like an attacker, it’s amazing what they can achieve.

In 2001, I performed a simple experiment with two friends to test my theories about security education. Both were technical people with solid programming backgrounds. I asked each of them to review, for security flaws, 1000 lines of real public domain C code I found on the Internet. The first developer found 10 flaws, and the second found 16. I then gave them an intense one-hour presentation about coding mistakes that lead to security vulnerabilities and how to question assumptions about the data coming into the code. Then I asked them to review the code again. I know this sounds incredible, but the first person found another 45 flaws, and the second person found 41. Incidentally, I had spotted only 54 flaws in the code. So the first person, who found a total of 55 flaws, had found one new flaw, and the second person, with 57 total flaws, had found the same new flaw as the first person plus two others!

If it seems obvious that teaching people to recognize security flaws means that they will find more flaws, why do people continue to believe that untrained eyes and brains can produce more secure software?

Important

A handful of knowledgeable people is more effective than an army of fools.

An interesting side effect of raising the security awareness within a development organization is that developers now know where to go if they need help rather than plodding along making the same mistakes. This is evident in the huge volume of questions asked on internal security newsgroups and e-mail distribution lists at Microsoft. People are asking questions about things they may not have normally asked about, because their awareness is greater. Also, there is a critical mass of people who truly understand what it takes to design, build, test, and document secure systems, and they continue to have a positive influence on those around them. This has the effect of reducing the chance that new security defects will be introduced into the code.

People need security training! Security is no longer a skill attained only by elite developers; it must be part of everyone’s daily skill set.

As with all software development, it’s important to get security things right during the design phase. No doubt you’ve seen figures that show it takes ten times more time, money, and effort to fix a bug in the development phase than in the design phase and ten times more in the test phase than in the development phase, and so on. From my experience, this is true. I’m not sure about the actual cost estimates, but I can safely say it’s easier to fix something if it doesn’t need fixing because it was designed correctly. The lesson is to get your security goals and designs right as early as possible. Let’s look at some details of doing this during the design phase.

Hiring and retaining employees is of prime importance to all companies, and interviewing new hires is an important part of the process. You should determine a person’s security skill set from the outset by asking security-related questions during interviews. If you can pinpoint people during the interview process as candidates with good security skills, you can fast-track them into your company.

Remember that you are not interviewing candidates to determine how much they know about security features. Again, security is not just about security features; it’s about securing mundane features.

During an interview, I like to ask the candidate to spot the buffer overrun in a code example drawn on a whiteboard. This is very code-specific, but developers should know a buffer overrun when they see one.

More Information

See Chapter 5 for much more information on spotting buffer overruns.

Here’s another favorite of mine: "The government lowers the cost of gasoline; however, they place a tracking device on every car in the country and track mileage so that they can bill you based on distance traveled." I then ask the candidate to assume that the device uses a GPS (Global Positioning System) and to discuss some of these issues:

- What are the privacy implications of the device?
- How can an attacker defeat this device?
- How can the government mitigate the attacks?
- What are the threats to the device, assuming that each device has embedded secret data?
- Who puts the secrets on the device? Are they to be trusted? How do you mitigate these issues?

I find this a useful exercise because it helps me ascertain how the candidate thinks about security issues; it sheds little light on the person’s security features knowledge. And, as I’m trying hard to convince you, how the candidate thinks about security issues is more important when building secure systems. You can teach people about security features, but it’s hard to train people to think with a security mind-set. So, hire people who can think with a hacking mind-set.

Another view is to hire people with a mechanic mind-set, people who can spot bad designs, figure out how to fix them, and often point out how they should have been designed in the first place. Hackers can be pretty poor at fixing things in ways that make sense for an enterprise that has to manage thousands of PCs and servers. Anyone can think of ways to break into a car, but it takes a skilled engineer to design a robust car, and an effective car alarm system. You need to hire both hackers and mechanics!

More Information

For more on finding the right people for the job, take another look at "Interviewing Security People" in Chapter 1.

You need to determine early who your target audience is and what their security requirements are. My wife has different security needs than a network administrator at a large multinational corporation. I can guess the security needs that my wife has, but I have no idea what the requirements are for a large customer until I ask them what they are. So, who are your clients and what are their requirements? If you know your clients but not their requirements, you need to ask them! It’s imperative that everyone working on a product understands the users’ needs. Something we’ve found very effective at Microsoft is creating personas or fictitious users who represent our target audience. Create colorful and lively posters of your personas, and place them on the walls around the office. When considering security goals, include their demographics, their roles during work and play, their security fears, and risk tolerance in your discussions. Figure 2-3 shows an example persona poster.

By defining your target audience and the security goals of the application, you can reduce "feature creep," or the meaningless, purposeless bloating of the product. Try asking questions like "Does this security feature or addition help mitigate any threats that concern one of our personas?" If the answer is no, you have a good excuse not to add the feature because it doesn’t help your clients. Create a document that answers the following questions:

- Who is the application’s audience?
- What does security mean to the audience? Does it differ for different members of the audience? Are the security requirements different for different customers?
- Where will the application run? On the Internet? Behind a firewall? On a cell phone?
- What are you attempting to protect?
- What are the implications to the users if the objects you are protecting are compromised?
- Who will manage the application? The user or a corporate IT administrator?
- What are the communication needs of the product? Is the product internal to the organization or external, or both?
- What security infrastructure services do the operating system and the environment already provide that you can leverage?
- How does the user need to be protected from his own actions?
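The persona question posed earlier ("Does this security feature or addition help mitigate any threats that concern one of our personas?") can be made mechanical once personas and their security fears are written down. The following is only a sketch under stated assumptions: the `Persona` and `FeatureProposal` types, their fields, and the example data are all hypothetical, and a real team would track far richer information.

```python
from dataclasses import dataclass, field


@dataclass
class Persona:
    """A fictitious user who represents part of the target audience."""
    name: str
    role: str
    security_fears: set[str] = field(default_factory=set)


@dataclass
class FeatureProposal:
    """A proposed feature and the threats it claims to mitigate."""
    name: str
    mitigates: set[str] = field(default_factory=set)


def personas_helped(feature: FeatureProposal,
                    personas: list[Persona]) -> list[str]:
    """Return the names of personas with at least one fear the feature mitigates."""
    return [p.name for p in personas if p.security_fears & feature.mitigates]


# Hypothetical personas and proposals.
personas = [
    Persona("Home user", "nonexpert", {"identity theft", "stolen laptop data"}),
    Persona("IT admin", "corporate administrator", {"privilege escalation"}),
]
useful = FeatureProposal("Encrypted local store", {"stolen laptop data"})
bloat = FeatureProposal("Animated login screen")

print(personas_helped(useful, personas))  # names of the personas it helps
print(personas_helped(bloat, personas))   # an empty list flags feature creep
```

An empty result is the "good excuse not to add the feature" mentioned above: the proposal mitigates nothing that any persona cares about.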

On the subject of the importance of understanding the business requirements, ISO 17799, "Information Technology – Code of practice for information security management" (an international standard that covers organizational, physical, communications, and systems development security policy), describes security requirements in its introduction and in section 10.1, "Security requirements of systems," and offers the following in section 10.1.1:

Security requirements and controls should reflect the business value of the information assets involved, and the potential business damage, which might result from a failure or absence of security.

Note

ISO 17799 is a somewhat high-level document, and its coverage of code development is sketchy at best, but it does offer interesting insights and assistance to the development community. You can buy a copy of the standard from http://www.iso.ch.

More Information

If you use ISO 17799 in your organization, most of this book relates to section 9.6, "Application access control," section 10.2, "Security in application systems," and to a lesser extent section 10.3, "Cryptographic controls."

Security is a feature, just like any other feature in the product. Do not treat security as some nebulous aspect of product development. And don’t treat security as a background task, only added when it’s convenient to do so. Instead, you should design security into every aspect of your application. All product functional specifications should include a section outlining the security implications of each feature. To get some ideas of how to consider security implications, go to http://www.ietf.org and look at any RFC created in the last couple of years—they all include security considerations sections.

Remember, nonsecurity products must still be secure from attack. Consider the following:

- The Microsoft Clip Art Gallery buffer overrun that led to arbitrary code execution (http://www.microsoft.com/technet/security/bulletin/MS00-015.asp).
- A flaw in the Solaris file restore application, ufsrestore, could allow an unprivileged local user to gain root access (http://online.securityfocus.com/advisories/3621).
- The sort command in many UNIX-based operating systems, including Apple’s OS X, could create a denial of service (DoS) vulnerability (http://www.kb.cert.org/vuls/id/417216).

What do all these programs have in common? The programs themselves have nothing to do with security features, but they all had security vulnerabilities that left users susceptible to attack.

Note

One of the best stories I’ve heard is from a friend at Microsoft who once worked at a company that usually focused on security on Monday mornings — after the vice president of engineering watched a movie such as "The Net," "Sneakers," or "Hackers" the night before!

I once reviewed a product that had a development plan that looked like this:

Milestone 0: Designs complete

Milestone 1: Add core features

Milestone 2: Add more features

Milestone 3: Add security

Milestone 4: Fix bugs

Milestone 5: Ship product

Do you think this product’s team took security seriously? I knew about this team because of a tester who was pushing for security designs from the start and who wanted to enlist my help to get the team to work on it. But the team believed it could pile on the features and then clean up the security issues once the features were done. The problem with this approach is that adding security at M3 will probably invalidate some of the work performed at M1 and M2. Some of the bugs found during M3 will be hard to fix and, as a result, will remain unfixed, making the product vulnerable to attack.

This story has a happy conclusion: the tester contacted me before M0 was complete, and I spent time with the team, helping them to incorporate security designs into the product during M0. I eventually helped them weave the security code into the application during all milestones, not just M3. For this team, security became a feature of the product, not a stumbling block. It’s interesting to note the number of security-related bugs in the product. There were very few security bugs compared with the products of other teams who added security later, simply because the product features and the security designs protecting those features were symbiotic. The product was designed and built with both in mind from the start.

Remember the following important points if you decide to follow the bad product team example:

- Adding security later is wrapping security around existing features, rather than designing features with security in mind.
- Adding any feature, including security, as an afterthought is expensive.
- Adding security might change the way you’ve implemented features. This too can be expensive.
- Adding security might change the application interface, which might break the code that has come to rely on the current interface.

Important

Do not add security as an afterthought!

If you’re creating applications for nonexpert users (such as my mom!), you should be even more aware of your designs up front. Even though users require secure environments, they don’t want security to "get in the way." For such users, security should be hidden from view, and this is a trying goal because information security professionals simply want to restrict access to resources and nonexpert users require transparent access. Expert users also require security, but they like to have buttons to click and options to select so long as they’re understandable.

I was asked to review a product schedule recently, and it was a delight to see this:

| Date | Product Milestone | Security Activities |
|---|---|---|
| Sep-1-2002 | Project Kickoff | Security training for team |
| Sep-8-2002 | M1 Start | |
| Oct-22-2002 | Security-Focused Day | |
| Oct-30-2002 | M1 Code Complete | Threat models complete |
| Nov-6-2002 | Security Review I with Secure Windows Initiative Team | |
| Nov-18-2002 | Security-Focused Day | |
| Nov-27-2002 | M2 Start | |
| Dec-15-2002 | Security-Focused Day | |
| Jan-10-2003 | M2 Code Complete | |
| Feb-02-2003 | Security-Focused Day | |
| Feb-24-2003 | Security Review II with Secure Windows Initiative Team | |
| Feb-28-2003 | Beta 1 Zero | Priority 1 and 2 Security Bugs |
| Mar-07-2003 | Beta 1 Release | |
| Apr-03-2003 | Security-Focused Day | |
| May-25-2003 | M3 Code Complete | |
| Jun-01-2003 | Start 4-week-long security push | |
| Jul-01-2003 | Security Review (including push results) III | |
| Aug-14-2003 | Beta 2 Release | |
| Aug-30-2003 | Security-Focused Day | |
| Sep-21-2003 | Release Candidate 1 | |
| Sep-30-2003 | Final Security Overview IV with Secure Windows Initiative Team | |
| Oct-30-2003 | Ship product! | |

This is a wonderful ship schedule because the team is building critical security milestones and events into their time line. The purpose of the security-focused days is to keep the team aware of the latest issues and vulnerabilities. A security day usually involves training at the start of the day, followed by a day of design, code, test plan, and documentation reviews. Prizes are given for the "best" bugs and for the most bugs. Don’t rule out free lattes for the team! Finally, you’ll notice four critical points where the team goes over all its plans and status to see what midcourse corrections should be taken.

Security is tightly interwoven in this process, and the team members think about security from the earliest point of the project. Making time for security in this manner is critical.

I know it sounds obvious, but if you’re spending more time on security, you’ll be spending less time on other features, unless you want to push out the product schedule or add more resources and cost. Remember the old quote, "Features, cost, schedule; choose any two." Because security is a feature, it has an impact on the cost or the schedule, or both. Therefore, you need to add time to or adjust the schedule to accommodate the extra work. If you do this, you won’t be "surprised" as new features require extra work to make sure they are designed and built in a secure manner.

Like any feature, the later you add it in, the higher the cost and the higher the risk to your schedule. Doing security design work early in your development cycle allows you to better predict the schedule impact. Trying to work in security fixes late in the cycle is a great way to ship insecure software late. This is particularly true of security features that mitigate DoS attacks, which frequently require design changes.

Note

Don’t forget to add time to the schedule to accommodate training courses and education.

We have an entire chapter on threat modeling, but suffice it to say that threat models help form the basis of your design specifications. Without threat models, you cannot build secure systems, because securing systems requires you to understand your threats. Be prepared to spend plenty of time working on threat models. They are well worth the effort.

"Software never dies; it just becomes insecure." This should be a bumper sticker, because it’s true. Software does not tire nor does it wear down like stuff made of atoms, but it can be rendered utterly insecure overnight as the industry learns new vulnerabilities. Because of this, you need to have end-of-life plans for old functionality. For example, say you decide that an old feature will be phased out and replaced with a more secure version currently available. This will give you time to work with clients to migrate their application over to the new functionality as you phase out the old, less-secure version. Clients generally don’t like surprises, and this is a great way of telling them to get ready for change.
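One lightweight way to give clients that advance notice is to have the old entry point announce its own retirement each time it is called. The following is only a sketch under stated assumptions: the decorator name `phased_out`, the functions `open_session_v1` and `open_session_v2`, and the version string are all hypothetical and stand in for whatever feature you are retiring.

```python
import functools
import warnings


def phased_out(replacement: str, removed_in: str):
    """Mark a function as scheduled for removal and name its replacement."""
    def decorate(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            # Warn on every call so clients see the notice before the removal.
            warnings.warn(
                f"{func.__name__} will be removed in {removed_in}; "
                f"use {replacement} instead",
                DeprecationWarning,
                stacklevel=2,  # attribute the warning to the caller's line
            )
            return func(*args, **kwargs)
        return wrapper
    return decorate


# Hypothetical old feature that is being phased out.
@phased_out(replacement="open_session_v2", removed_in="version 3.0")
def open_session_v1(user: str) -> str:
    return f"session:{user}"
```

The old function keeps working during the migration window, so the warning is a notice rather than a break. Python hides `DeprecationWarning` in many contexts by default, so clients may need to enable it, and you will probably want to repeat the notice in your release notes.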

You have to be realistic and pragmatic when determining which bugs to fix and which not to fix prior to shipping. In the perfect world, all issues, including security issues, would be fixed before you release the product to customers. In the real world, it’s not that simple. Security is one part, albeit a very important part, of the trade-offs that go into the design and development of an application. Many other criteria must be evaluated when deciding how to remedy a flaw. Other issues include, but are not limited to, regression impact, accessibility to people with disabilities, deployment issues, globalization, performance, stability and reliability, scalability, backward compatibility, and supportability.

This may seem like blasphemy to some of you, but you have to be realistic: you can never ship flawless software, unless you want to charge millions of dollars for your product. Moreover, if you shipped flawless software, it would take you so long to develop the software that it would probably be outdated before it hit the shelves. However, the software you ship should be software that does what you programmed it to do and only that. This doesn’t mean that the software suffers no failures; it means that it exhibits no behavior that could render the system open to attack.

Note

Before he joined Microsoft, my manager was one of the few people to have worked on the development team of a system designed to meet the requirements of Class A1 of the Orange Book. (The Orange Book was used by the U.S. Department of Defense to evaluate system security. You can find more information about the Orange Book at http://www.dynamoo.com/orange.) The high-assurance system took a long time to develop, and although the system was very secure, he canceled the project because by the time it was completed it was hopelessly out of date and no one wanted to use it.

You must fix bugs that make sense to fix. Would you fix a bug that affected ten people out of your client base of fifty thousand if the bug were very low threat, required massive architectural changes, and had the potential to introduce regressions that would prevent every other client from doing their job? Probably not in the current version, but you might fix it in the next version so that you could give your clients notice of the impending change.

I remember a meeting a few years ago in which we debated whether to fix a bug that would solve a scalability issue. However, making the fix would render the product useless to Japanese customers! After two hours of heated discussion, the decision was made not to fix the issue directly but to provide a work-around solution and fix the issues correctly in the following release. The software was not flawless, but it worked as advertised, and that’s good enough as long as the documentation outlines the tolerances within which it should operate.

You must set your tolerance for defects early in the process. The tolerances you set will depend on the environment in which the application will be used and what the users expect from your product. Set your expectations high and your defect tolerance low. But be realistic: you cannot know all future threats ahead of time, so you must follow certain best practices, which are outlined in Chapter 3, to reduce your attack surface. Reducing your attack surface will reduce the number of bugs that can lead to serious security issues. Because you cannot know new security vulnerabilities ahead of time, you cannot ship perfect software, but you can easily raise the bug bar dramatically with some process improvements.

Important

Elevation of privilege attacks are a no-brainer: fix them! Such attacks are covered in Chapter 4.

Finally, once you feel you have a good, secure, and well-thought-out design, you should ask people outside your team who specialize in security to review your plans. Simply having another set of knowledgeable eyes look at the plans will reveal issues, and it’s better to find issues early in the process than at the end. At Microsoft, it’s my team that performs many of these reviews with product groups.

Now let’s move on to the development phase.

Development involves writing and debugging code, and the focus is on making sure your developers write the best-quality code possible. Quality is a superset of security; quality code is secure code. Let’s look at some of the practices you can follow during this phase to achieve these goals.

I’ll keep this short. Revoke everyone’s ability to check in new and updated existing code. The ability to update code is a privilege, not a right. Developers get the privilege back once they have attended "Security Bootcamp" training.

Peer review of new code is, by far, my favorite practice because that peer review is a choke point for detecting new flaws before they enter the product. In fact, I’ll go out on a limb here: I believe that training plus peer review for security of all check-ins will substantially increase the security of your code. This is not just because people are checking the quality of the code from a security viewpoint, but also because the developer knows his peers will evaluate the code for security flaws. This effect is called the Hawthorne effect, named for a factory in Cicero, Illinois, just outside Chicago. Researchers measured the length of time it took workers to perform tasks while under observation. They discovered that people worked faster and more effectively than they did when they weren’t observed by the researchers.

Here’s an easy way to make source code more accessible for review. Write a tool that uses your source control software to build an HTML or XML file of the source code changes made in the past 24 hours. The file should include code diffs, a link that shows all the updated files, and an easy way to view the updated files complete with diffs. For example, I’ve written a Perl tool that allows me to do this against the Windows source code. Using our source control software, I get a list of all affected files and a short diff, and I then link to windiff.exe to show the affected files and the diffs in each file.

Because this method shows a tiny subset of source code, it makes it a reasonably easy task for a security expert to do a review. Note I say security expert. It’s quite normal for all code to be peer-reviewed before it’s checked into the code tree, but security geeks should review code again for security flaws, not generic code correctness.
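To make the idea of a daily change report concrete, here is a minimal sketch in Python. It is not the Perl tool described above. It assumes your code lives in a git repository (the text does not name a source control system), and the function names `recent_diff`, `changed_files`, and `render_report` are hypothetical.

```python
import html
import subprocess


def recent_diff(repo_dir: str, since: str = "24 hours ago") -> str:
    """Return the combined patch text for commits newer than `since`.

    Assumes a git repository; substitute the equivalent command for
    other source control systems.
    """
    result = subprocess.run(
        ["git", "-C", repo_dir, "log", "--patch", "--no-color", f"--since={since}"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


def changed_files(patch: str) -> list[str]:
    """List the files touched by a git patch, in first-seen order."""
    files = []
    for line in patch.splitlines():
        # git starts each file's diff with: diff --git a/<path> b/<path>
        if line.startswith("diff --git "):
            path = line.split(" b/", 1)[-1]
            if path not in files:
                files.append(path)
    return files


def render_report(patch: str) -> str:
    """Build a small HTML page: the file list followed by the escaped diff."""
    items = "\n".join(f"<li>{html.escape(f)}</li>" for f in changed_files(patch))
    return (
        "<html><body>\n"
        "<h1>Recent changes for security review</h1>\n"
        f"<ul>\n{items}\n</ul>\n"
        f"<pre>{html.escape(patch)}</pre>\n"
        "</body></html>\n"
    )
```

A scheduled job could call `render_report(recent_diff("."))` once a day and write the result where reviewers can read it. Note that the diff text is HTML-escaped before it goes into the page; source code is untrusted input to the report like any other data.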

You should define and evangelize a minimum set of coding guidelines for the team. Inform the developers of how they should handle buffers, how they should treat untrusted data, how they should encrypt data, and so on. Remember, these are minimum guidelines and code checked into the source control system should adhere to the guidelines, but the team should strive to exceed the guidelines. Appendix C, Appendix D, and Appendix E offer starting guidelines for designers, developers, and testers and should prove to be a useful start for your product, too.

Reviewing old defects is outlined in greater detail in Chapter 3 in the "Learn from Mistakes" section. The premise is that you must learn from past mistakes so that you do not continue making the same security errors. Have someone on your team own the process of determining why errors occur and what can be done to prevent them from occurring again.

It’s worthwhile to have an external entity, such as a security consulting company, review your code and plans. We’ve found external reviews effective within Microsoft mainly because the consulting companies have an outside perspective. When you have an external review performed, make sure the company you choose to perform the review has experience with the technologies used by your application and that the firm provides knowledge transfer to your team. Also, make sure the external party is independent and isn’t being hired to, well, rubber-stamp the product. Rubber stamps might be fine for marketing but are death for developing more secure code because they can give you a false sense of security.

Microsoft initiated a number of security pushes starting in late 2001. The goals of these security pushes included the following:

Raise the security awareness of everyone on the team.

Find and fix issues in the code and, in some instances, the design of the product.

Get rid of some bad habits.

Build a critical mass of security people across the team.

The last two points are critical. If you spend enough time on a security push (in the case of Windows, it was eight weeks), the work on the push proper is like homework, and it reinforces the skills learned during the training. It gives all team members a rare opportunity to focus squarely on security and shed some of the old insecure coding habits. Moreover, once the initial push is completed, enough people understand what it takes to build secure systems that they continue to have an effect on others around them. I’ve heard from many people that over 50 percent of the time of code review or design review meetings after the security push was spent discussing the security implications of the code or design. (Of course, the meetings I attended after the security push were completely devoted to security, but that’s just the Hawthorne effect at work!)

If you plan on performing a security push, take note of some of the best practices we learned:

Perform threat modeling first. We’ve found that teams that perform threat modeling first experience less "churn" and their process runs smoother than for those teams that perform threat modeling, code, test plan, and design reviews in parallel. The reason is the threat modeling process allows developers and program managers to determine which parts of the product are most at risk and should therefore be evaluated more deeply. Chapter 4 is dedicated to threat modeling.

Keep the information flowing. Inform the entire team about new security findings and insights on a daily basis with updated status e-mails, and keep everyone abreast as new classes of issues are discovered.

Set up a core security team that meets each day to go over bugs and issues and that looks for patterns of vulnerability. It is this team that steers the direction of the push.

The same team should have a mailing list or some sort of electronic discussion mechanism to allow all team members to ask security questions. Remember that the team is learning new stuff; be open to their ideas and comments. Don’t tell someone his idea of a security bug is stupid! You want to nurture security talent, not squash it.

Present prizes for best bugs, most bugs found, and so on. Geeks love prizes!

You’ll find security bugs if you focus on looking for them, but make sure your bug count doesn’t become unmanageable. A rule used by some groups is to allow a developer to have no more than five active bugs at a time. Also, the total number of bugs for the product should be no more than three times the number of developers in the group. Once either of these rules is broken, the developers should switch over to fixing issues rather than finding more security bugs. Once bugs are fixed, the developers can then look for others. This has the positive effect of keeping the developers fresh and productive.
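The two bug-count rules are simple enough to check automatically. The sketch below is one possible encoding, assuming you can export one owner name per active bug from your bug tracker; the function name `should_switch_to_fixing` and the constants are hypothetical.

```python
from collections import Counter

MAX_ACTIVE_PER_DEVELOPER = 5  # per-developer cap quoted in the text
MAX_TOTAL_PER_DEVELOPER = 3   # product-wide cap: three bugs per developer


def should_switch_to_fixing(active_bug_owners: list[str], team: list[str]) -> bool:
    """Return True once either bug-count rule is broken.

    `active_bug_owners` holds one entry per active bug, naming its owner.
    `team` lists every developer in the group.
    """
    per_developer = Counter(active_bug_owners)
    over_personal_cap = any(
        count > MAX_ACTIVE_PER_DEVELOPER for count in per_developer.values()
    )
    over_team_cap = len(active_bug_owners) > MAX_TOTAL_PER_DEVELOPER * len(team)
    return over_personal_cap or over_team_cap
```

For a two-person team, six active bugs split five and one is still within both limits, but a seventh bug, or a sixth bug assigned to the same developer, tips the group over to fixing.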

When a security flaw is found in the design or in the code, you should log an entry in your bug-tracking database, as you would normally do. However, you should add an extra field to the database so that you can define what kind of security threat the bug poses. You can use the STRIDE threat model—explained in Chapter 4—to categorize the threats and at the end of the process analyze why you had, for example, so many denial of service flaws.
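As an illustration of the extra field, the following sketch models a bug record with a STRIDE category and tallies the categories at the end of the process. The record layout (`SecurityBug`) and the helper `threat_summary` are hypothetical; a real bug-tracking database would have its own schema.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Stride(Enum):
    SPOOFING = "Spoofing identity"
    TAMPERING = "Tampering with data"
    REPUDIATION = "Repudiation"
    INFORMATION_DISCLOSURE = "Information disclosure"
    DENIAL_OF_SERVICE = "Denial of service"
    ELEVATION_OF_PRIVILEGE = "Elevation of privilege"


@dataclass
class SecurityBug:
    bug_id: int
    title: str
    threat: Stride  # the extra field: what kind of threat the bug poses


def threat_summary(bugs: list[SecurityBug]) -> list[tuple[Stride, int]]:
    """Count bugs per STRIDE category, most frequent category first."""
    return Counter(bug.threat for bug in bugs).most_common()


# Example data, invented for illustration only.
bugs = [
    SecurityBug(1, "Unbounded request queue", Stride.DENIAL_OF_SERVICE),
    SecurityBug(2, "Service runs as administrator", Stride.ELEVATION_OF_PRIVILEGE),
    SecurityBug(3, "Parser hangs on malformed input", Stride.DENIAL_OF_SERVICE),
]
for threat, count in threat_summary(bugs):
    print(f"{threat.value}: {count}")
```

A summary dominated by one category is the cue to go back and ask why that class of flaw keeps appearing.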

Don’t add any ridiculous code to your application that gives a list of all the people who contributed to the application. If you don’t have time to meet your schedule, how can you meet the schedule when you spend many hours working on an Easter egg? I have to admit that I wrote an Easter egg in a former life, but it was not in the core product. It was in a sample application. I would not write an Easter egg now, however, because I know that users don’t need them and, frankly, I don’t have the time to write one!

Security testing is so important that we gave it its own chapter. Like all other team members pursuing secure development, testers must be taught how attackers operate and they must learn the same security techniques as developers. Testing is often incorrectly seen as a way of "testing in" security. You must not do this. The role of security testing is to verify that the system design and code can withstand attack. Determining that features work as advertised is still a critically important part of the process, but as I mentioned earlier, a secure product exhibits no other "features" that could lead to security vulnerabilities. A good security tester looks for and exploits these extra capabilities. See Chapter 19 for information on these issues, including data mutation and least privilege tests.

The hard work is done, or so it seems, and the code is ready to ship. Is the product secure? Are there any known vulnerabilities that could be exploited? Both of these raise the question, "How Do You Know When You’re Done?"

You are done when you have no known security vulnerabilities that compromise the security goals determined during the design phase. Thankfully, I’ve never seen anyone readjust these goals once they reach the ship milestone; please do not be the first.

As you get closer to shipping, it becomes harder to fix issues of any kind without compromising the schedule. Obviously, security issues are serious and should be triaged with utmost care and consideration for your clients. If you find a serious security issue, you might have to reset the schedule to accommodate the issue.

Consider adding a list of known security issues in a readme file, but keep in mind that people often do not read readme files. Certainly don’t use the readme file as a means to secure customers. Your default install should be secure, and any issues outlined in the document should be trivial at worst.

Important

Do not ship with known exploitable vulnerabilities!

It’s a simple fact that security flaws will be found in your code after you ship. You’ll discover some flaws internally, and external entities will discover others. Therefore, you need a policy and process in place to respond to these issues as they arise. Once you find a flaw, you should put it through a standard triage mechanism during which you determine what the flaw’s severity is, how best to fix the flaw, and how to ship the fix to customers. If a vulnerability is found in a component, you should look for all the other related issues in that component. If you do not look for related issues, you’ll not only have more to fix when the other issues are found but also be doing a disservice to your customers. Do the right thing and fix all the related issues, not just the singleton bug.

If you find a security vulnerability in a product, be responsible and work with the vendor to get the vulnerability fixed. You can get some insight into the process by reading the Acknowledgment Policy for Microsoft Security Bulletins at http://www.microsoft.com/technet/security/bulletin/policy.asp, the RFPolicy at http://www.wiretrip.net/rfp/policy.html, and the Internet Draft "Responsible Vulnerability Disclosure Process" by Christey and Wysopal (http://www.ietf.org).

If you really want to get some ideas about how to build a security response process, take a look at the Common Criteria Flaw Remediation document at http://www.commoncriteria.org/docs/ALC_FLR/alc_flr.html. It’s heavy reading, but interesting nonetheless.

In some development organizations, the person responsible for the code is not necessarily the person who fixes the code if a security flaw is found. This is just wrong. Here’s why. John writes some code that ships as part of the product. A security bug is found in John’s code, and Mary makes the fix. What did John just learn from this process? Nothing! That means John will continue to make the same mistakes because he’s not getting negative feedback and not learning from his errors. It also makes it hard for John’s management to know how well he is doing. Is John becoming a better developer or not?

Important

If a security flaw is found, the person who wrote the code should fix it. That way she’ll know not to make the same mistake again.

A team that knows little about delivering secure systems will not deliver a secure product. A team that follows a process that does not encompass good security discipline will not deliver a secure product. This chapter outlined some of the product development cycle success factors for delivering products that withstand malicious attacks. You should adopt some of these measures as soon as you can. Developer education and supporting the accountability loop should be implemented right away. Other measures can be phased in as you become more adept. Whatever you do, take time to evaluate your process as it stands today, determine what your company’s security goals are, and plan for process changes that address the security goals.

The good news is changing the process to deliver more secure software is not as hard as you might think! The hard part is changing perceptions and attitudes.