13 Programming 3D Graphics in Real Time

Three-dimensional graphics have come a long way in the last 10 years or so. Computer games look fantastically realistic on even an off-the-shelf home PC, and there is no reason why an interactive virtual environment cannot do so, too. The interactivity achievable in any computer game is exactly what is required for a virtual environment, even if it is on a larger scale. Using the hardware acceleration of some of the graphics display devices mentioned in Chapter 12, this is easy to achieve. We will postpone examining the programmability of the display adapters until Chapter 14 and look now at how to use their fixed functionality, as it is called.

When working in C/C++, application programs have two choices for accessing the powerful 3D hardware: the OpenGL or the Direct3D¹ API libraries. To see how these are used, we shall look at an example program which renders a mesh model describing a virtual world. Our program will give its user control over navigation around the model world and over the direction of view. We will assume that the mesh is made up from triangular polygons but, so as not to get bogged down in all the code needed for reading a mesh format, we shall also assume that we have library functions to read the mesh from file and store it as lists of vertices, polygons and surface attributes in the computer’s random access memory (RAM).²

¹ Direct3D is only available on Windows PCs.

² The mesh format that we will use is from our open source 3D animation and modeling software package called OpenFX. The software can be obtained from http://www.openfx.org. Source code to read OpenFX’s mesh model .MFX files is included on the CD.

It always surprises us how richly detailed a 3D environment, modeled as a mesh, can appear with only a few tens or hundreds of polygons. The reason for this is simply that texture mapping (or image mapping) has been applied to the mesh surfaces. So our examples will show you how to use texture maps. If you need to remind yourself of the mathematical principles of texturing (also known as image mapping or shading), review Section 7.6. In the next chapter, we shall see how texturing and shading are taken to a whole new level of realism with the introduction of programmable GPUs.

13.1 Visualizing a Virtual World Using OpenGL

OpenGL had a long and successful track record before appearing on PCs for the first time with the release of Windows NT Version 3.51 in the mid 1990s. OpenGL was the brainchild of Silicon Graphics, Inc. (SGI), who were known as the real-time 3D graphics specialists, both for their applications and for their special workstation hardware. Compared to PCs, the SGI systems were just unaffordable. Today they are a rarity, although still around in some research labs and specialist applications. However, the same cannot be said of OpenGL; it goes from strength to strength. The massive leap in processing power, especially in graphics processing power in PCs, means that OpenGL can deliver almost anything that is asked of it. Many games use it as the basis for their rendering engines, and it is ideal for the visualization aspects of VR. It has, in our opinion, one major advantage: it is stable. Only minor extensions have been added to its API, and they all fit in very well with what was already there. The alternative 3D software technology, Direct3D, has required nine versions to match OpenGL. For a short time, Direct3D seemed to be ahead because of its advanced shader model, but now, with the addition of just a few functions in Version 2, OpenGL is its equal. And, we believe, it is much easier to work with.

13.1.1 The Architecture of OpenGL

It is worth pausing for a moment or two to think about one of the key aspects of OpenGL (and Direct3D too). They are designed for real-time 3D graphics. VR application developers must keep this fact in the forefront of their minds at all times. One must always be thinking about how long it is going to take to perform some particular task. If your virtual world is described by more than 1 million polygons, are you going to be able to render it at 100 frames per second (fps) or even at 25 fps? There is a related question: how does one do real-time rendering under a multitasking operating system? This is not a trivial question, so we shall return to it in Section 13.1.6.
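To put rough numbers on the first of these questions, the short C sketch below works out the time available per frame and the polygon throughput that a given frame rate implies. The function names are ours and purely illustrative; the figures used in the note that follows are the ones quoted above.

```c
#include <assert.h>

/* Time available to render a single frame, in milliseconds. */
double frame_budget_ms(double fps)
{
    assert(fps > 0.0);
    return 1000.0 / fps;
}

/* Polygons that must be processed every second to hold the target rate,
   assuming every polygon in the scene is sent down the pipeline each frame. */
double polygons_per_second(double polygons_per_frame, double fps)
{
    assert(polygons_per_frame >= 0.0 && fps > 0.0);
    return polygons_per_frame * fps;
}
```

For a 1 million polygon world, 100 fps leaves 10 ms per frame and demands 100 million polygons per second; at 25 fps the budget grows to 40 ms and the demand falls to 25 million polygons per second. Everything else the program does in a frame (input, simulation, data transfer) has to fit inside the same budget.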

To find an answer to these questions and successfully craft our own programs, it helps if one has an understanding of how OpenGL takes advantage of 3D hardware acceleration and what components that hardware acceleration actually consists of. Then we can organize our program’s data and its control logic to optimize its performance in real time. Look back at Section 5.1 to review how VR worlds are stored numerically. At a macroscopic level, you can think of OpenGL simply as a friendly and helpful interface between your VR application program and the display hardware.

Since the hardware is designed to accelerate 3D graphics, the elements which are going to be involved are:

• Bitmaps. These are blocks of memory storing images, movie frames or textures, organized as an uncompressed 2D array of pixels. It is normally assumed that each pixel is represented by 3 or 4 bytes. Sometimes the dimensions of the image must be a power of two and/or the pitch³ must be word- or quad-word-aligned. (This makes copying images faster and may be needed for some forms of texture interpolation, as in image mapping.)

• Vertices. Vertex data is one of the main inputs. It is essentially a floating-point vector with two, three or four elements. Whilst image data may only need to be loaded to the display memory infrequently, vertex data may need to change every time the frame is rendered. Therefore, moving vertex data from host computer to display adapter is one of the main sources of delay in real-time 3D. In OpenGL, the vertex is the only structural data element; polygons are implied from the vertex data stream.

• Polygons. Polygons are implied from the vertex data. They are rasterized⁴ internally and broken up into fragments. Fragments are the basic visible unit; they are subject to lighting and shading models and image mapping. The final color of the visible fragments is what we see in the output frame buffer. For most situations, a fragment is what is visible in a pixel of the final rendered image in the frame buffer. Essentially, one fragment equals one screen pixel.

³ The pitch is the number of bytes of memory occupied by a row in the image. It may or may not be the same as the number of pixels in a row of the image.

⁴ Rasterization is the process of finding what screen pixels are filled with a particular polygon after all necessary transformations are applied to its vertices and it has been clipped.
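The bitmap pitch and the idea that polygons are implied by the vertex stream can both be made concrete in a few lines of C. This is a minimal sketch under our own assumptions: the function and type names are hypothetical, the alignment is passed in as a byte count that must be a power of two, and the vertex stream is taken to be a plain list of triangles with three vertices each.

```c
#include <assert.h>
#include <stddef.h>

/* Bytes occupied by one image row when rows are padded up to a multiple of
   `alignment` bytes. `alignment` must be a power of two. */
size_t row_pitch(size_t width_pixels, size_t bytes_per_pixel, size_t alignment)
{
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    size_t row_bytes = width_pixels * bytes_per_pixel;
    return (row_bytes + alignment - 1) & ~(alignment - 1);
}

/* Byte offset of pixel (x, y) from the start of the bitmap. Rows are stepped
   by the pitch, not by width_pixels * bytes_per_pixel. */
size_t pixel_offset(size_t x, size_t y, size_t pitch, size_t bytes_per_pixel)
{
    return y * pitch + x * bytes_per_pixel;
}

/* A three-element floating-point vertex. */
typedef struct { float x, y, z; } Vertex3;

/* In a plain triangle list, every three consecutive vertices imply one
   triangle; there is no separate polygon record in the stream. */
size_t implied_triangles(size_t vertex_count)
{
    return vertex_count / 3;
}
```

As an illustration, a row of 5 pixels at 3 bytes per pixel holds 15 bytes of image data, but with 4-byte alignment row_pitch(5, 3, 4) gives 16, so one padding byte follows each row. The 4-byte figure is only an example value, not one taken from the text.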

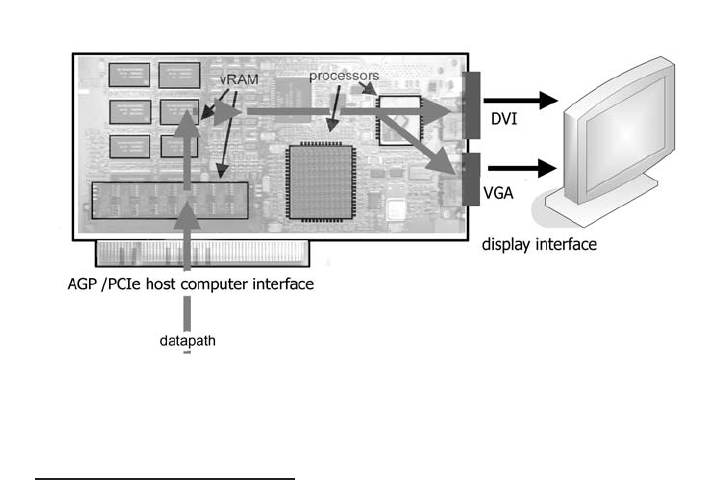

Like any microcomputer, a display adapter has three main components

(Figure 13.1.1):

• I/O. The adapter r eceives its input via one of the system buses. This

could be the AGP or the newer PCI Express interface

5

.Fromthepro-

gram’s viewpoint, the data transfers are blocks of byte memory (images

and textures) or floating-point vectors (vertices or blocks of vertices),

plus a few other state variables. The outputs are from the adapter’s

video memory. These are transferred to the display device through

either the DVI interface or an A-to-D circuit to VGA. The most sig-

nificant bottleneck in rendering big VR environments is often on the

input side where adapter meets PC.

Figure 13.1. The main components in a graphics adapter are the video memory, par-

allel and pipeline vertex and fragment processors and video memory for textures and

frame buffers.

5

The PCI Express allows from much faster read-back from the GPU to CPU and main

memory.

i

i

i

i

i

i

i

i

13.1. Visualizing a Virtual World Using OpenGL 307

• RAM. More precisely video or vRAM. This stores the results of the

rendering process in a frame buffer. U sually two or more buffers are

available. Buffers do not just store color pixel data. The Z depth buffer

is a good example of an essential buffer with its role in the hidden

surface elimination algorithm. Others such as the stencil buffer have

high utility when rendering shadows and lighting effects. vRAM may

also be used for storing vertex vectors and particularly image/texture

maps. The importance of texture map storage cannot be overempha-

sized. Realistic graphics and many other innovative ideas, such as doing

high-speed mathematics, rely on big texture arrays.

• Processor. Before the processor inherited greater significance by becom-

ing externally programmable (as discussed in Chapter 14), its primary

function was to carry out floating-point vector arithmetic on the in-

coming vertex data ,e.g., multiplying the vertex coordinates with one

or more matrices. Since vertex calculations and also fragment calcu-

lations are independent of other vertices/fragments, it is possible to

build several processors into one chip and achieve a degree of paral-

lelism. When one recognizes that there is a sequential element in the

rendering algorithm—vertex data transformation, clipping, rasteriza-

tion, lighting etc.—it is also possible to endow the adapter chipset with

a pipeline structure and reap the benefits that pipelining has for com-

puter processor architecture. (But without many of the drawbacks such

as conditional branching and data hazards.) Hence the term often used

to describe the process of rendering in real time, passing through the

graphics pipeline.
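The vertex arithmetic described for the processor is, at its simplest, a 4 x 4 matrix multiplied by a four-element vector. The following C sketch shows that operation on the CPU, purely to make the arithmetic explicit. The types and function names are our own, and the matrix is stored row-major for readability; OpenGL itself expects matrices in column-major order.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { float x, y, z, w; } Vec4;

/* Row-major 4x4 matrix, indexed as m[row][col] (an assumption of this sketch). */
typedef struct { float m[4][4]; } Mat4;

/* Multiply a single vertex by a matrix: r = A * v. */
Vec4 transform_vertex(const Mat4 *a, Vec4 v)
{
    Vec4 r;
    r.x = a->m[0][0] * v.x + a->m[0][1] * v.y + a->m[0][2] * v.z + a->m[0][3] * v.w;
    r.y = a->m[1][0] * v.x + a->m[1][1] * v.y + a->m[1][2] * v.z + a->m[1][3] * v.w;
    r.z = a->m[2][0] * v.x + a->m[2][1] * v.y + a->m[2][2] * v.z + a->m[2][3] * v.w;
    r.w = a->m[3][0] * v.x + a->m[3][1] * v.y + a->m[3][2] * v.z + a->m[3][3] * v.w;
    return r;
}

/* Each output depends only on its own input vertex, so the loop iterations
   are independent; this is what lets hardware run them in parallel. */
void transform_vertices(const Mat4 *a, const Vec4 *in, Vec4 *out, size_t n)
{
    assert(a != NULL && in != NULL && out != NULL);
    for (size_t i = 0; i < n; ++i)
        out[i] = transform_vertex(a, in[i]);
}

/* Build a matrix that translates by (tx, ty, tz). */
Mat4 translation(float tx, float ty, float tz)
{
    Mat4 t = {{{1.0f, 0.0f, 0.0f, tx},
               {0.0f, 1.0f, 0.0f, ty},
               {0.0f, 0.0f, 1.0f, tz},
               {0.0f, 0.0f, 0.0f, 1.0f}}};
    return t;
}
```

Transforming the point (1, 1, 1, 1) with translation(1, 2, 3) gives (2, 3, 4, 1). On the adapter the same arithmetic is applied to every incoming vertex without the host CPU doing any of the work.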

So, OpenGL drives the graphics hardware. It does this by acting as a state machine. An application program uses functions from the OpenGL library to set the machine into an appropriate state. Then it uses other OpenGL library functions to feed vertex and bitmap data into the graphics pipeline. It can pause, send data to set the state machine into some other state (e.g., change the color of the vertex or draw lines rather than polygons) and then continue sending data. Application programs have a very richly featured library of functions with which to program the OpenGL state machine; they are very well documented in [6]. The official guide [6] does not cover programming with Windows very comprehensively, however; for using OpenGL with Windows, several sources of tutorial material are available in books such as [7].
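The state machine idea can be illustrated without any graphics hardware at all. The toy model below is not OpenGL; every name in it is hypothetical. It only mimics the pattern in which state-setting calls change what subsequent vertex calls mean. The comments note the fixed-function OpenGL calls that play the corresponding role (glColor3f, glBegin and glVertex3f).

```c
#include <assert.h>
#include <stddef.h>

typedef enum { PRIM_TRIANGLES, PRIM_LINES } Primitive;
typedef struct { float r, g, b; } Color;

/* A vertex as it enters the toy pipeline, stamped with the state that was
   current at the moment it was submitted. */
typedef struct { float x, y, z; Color color; Primitive prim; } EmittedVertex;

#define MAX_VERTS 64

typedef struct {
    Color         current_color;  /* state: color applied to new vertices */
    Primitive     current_prim;   /* state: how new vertices are assembled */
    EmittedVertex pipeline[MAX_VERTS];
    size_t        count;
} ToyState;

void toy_init(ToyState *s)
{
    s->current_color = (Color){1.0f, 1.0f, 1.0f};
    s->current_prim = PRIM_TRIANGLES;
    s->count = 0;
}

/* Plays the role of glColor3f: changes state, sends no geometry. */
void toy_set_color(ToyState *s, float r, float g, float b)
{
    s->current_color = (Color){r, g, b};
}

/* Plays the role of choosing GL_TRIANGLES or GL_LINES in glBegin. */
void toy_set_primitive(ToyState *s, Primitive p)
{
    s->current_prim = p;
}

/* Plays the role of glVertex3f: the vertex picks up the current state. */
void toy_vertex(ToyState *s, float x, float y, float z)
{
    assert(s->count < MAX_VERTS);
    s->pipeline[s->count++] =
        (EmittedVertex){x, y, z, s->current_color, s->current_prim};
}
```

A program would set the color to red, submit three vertices for a triangle, then switch the primitive to lines and the color to blue before submitting two more. The first three vertices in the pipeline carry red and the triangle mode, the last two carry blue and the line mode, even though the vertex call itself never mentions either.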

Figure 13.2 illustrates how the two main data streams, polygon geometry and pixel image data, pass through the graphics hardware and interact with
