2. Chip-to-Chip Timing and Simulation

As signal integrity engineers, we need to understand and quantify the dominant failure mechanisms present in each digital interface if we want to achieve the goal of adequate operating margins across future manufacturing and operating conditions. Ideally, we would have the time and resources to perform a quantitative analysis of the operating margins for each digital interface in the system, but most engineers do not live in an ideal world. Instead, they must rely on experience and sound judgment for the less-critical interfaces and spend their analysis resources wisely on the interfaces that they know will have narrow operating margins. Regardless of company size or engineering resources available, the goal of any successful signal integrity department is to perform the analysis and testing required to ensure that each interface has enough operating margin to avoid the associated failure mechanisms.

Most signal integrity engineers would agree about the fundamental causes of signaling problems in modern digital interfaces: reflections, crosstalk, attenuation, resonances, and power distribution noise. Although these physical phenomena may be part of a complex and unique failure mechanism process, the end result of each process is the same: A storage element on the receiving chip fails to capture the data bit sent by the transmitting chip. Because on-chip storage elements (flip-flops and registers) are typically beyond the scope of the models used in signal integrity simulations, it is easy to forget that the actual process of failure involves not just voltage waveforms at the input pin of a chip, but also a timing relationship between those waveforms and the clock that samples the waveform. In fact, the appearance of the waveform itself may be horrible so long as its voltage has the correct value when the clock is sampling it.

2.1. Root Cause

A virtual tour of an integrated circuit (IC) chip would uncover one or more flip-flops in close proximity to each signal IO pin. A driver or receiver circuit connects directly to the chip IO pin, and a flip-flop usually resides on the other side of the driver or receiver. The purpose of a digital interface is to transmit a data bit stored on the driving chip and reliably capture that same data bit some time later on the receiving chip. However, the process has one caveat: The data signal must be in a stable high or low state at the moment when the flip-flop samples it—that is, when the clock ticks. Because IC chips are subject to variations in manufacturing and operating conditions, the moment of stability translates to a window in time during which the data signal must remain stable. In essence, the job of the signal integrity engineer is to ensure that the data does not switch during this window.

The next few paragraphs offer a functional description of one type of complementary metal oxide semiconductor (CMOS) latch and the associated flip-flop. Not all CMOS flip-flops are identical, but this particular circuit lends itself well to description. It might seem that a discussion of latches and flip-flops is too fundamental for a book on timing for signal integrity. Careful consideration of these fundamentals, though, lays a foundation for understanding more complicated topics such as writing IO timing specifications for an ASIC or measuring bit error rate.

2.2. CMOS Latch

At the heart of a latch lies a pair of pass gates. When the clock is low, the lower pass gate (P1 in Figure 2-1) allows data at the input, D, to flow through the latch to the output, Q. When the clock rises to a high state, the lower pass gate closes to block the data from the input, while the upper pass gate (P2 in Figure 2-2) opens to hold the output in the state that it was in just before the clock switched high. The mechanism for accomplishing data storage is positive feedback through inverters I4 and I5, which sample the outputs of both the lower and upper pass gates and feed them back to the input of the upper pass gate. When the lower pass gate is no longer open because the clock switched high, the upper pass gate turns on and activates the positive feedback loop. This allows the output of the upper pass gate to reinforce its input and hold the state of the data until the clock switches low again. The inverters I1 and I2 provide differential copies of the clock to the gates of the pass gates, as is necessary for their operation. The inverter I6 ensures that the sensitive internal data node is isolated from the next stage of logic; it also preserves the polarity of the output data, Q, with respect to the input data, D.

Figure 2-1. CMOS latch in open state.

Figure 2-2. CMOS latch in closed state.

The problem with a latch is that it stores data only for half the clock cycle, which is not terribly efficient. During the other half, the output simply mimics the input, which is not useful. Figure 2-3 depicts this behavior. If one had a latch that stored data when the clock was high and another kind of latch that stored data when the clock was low, as described in Figure 2-4, this would cover the entire clock cycle. This is precisely what a flip-flop does. It is easy enough to arrive at a circuit that stores data during the low part of the clock cycle by swapping the connections from the clock inverters to the pass gates inside the latch. Connecting these two kinds of latches in series creates a flip-flop. But how does a flip-flop work?

Figure 2-3. CMOS latch timing diagram.

Figure 2-4. CMOS flip-flop timing diagram.

When the clock transitions from a low state to a high state, the lower pass gate in the first latch closes off, while the upper pass gate in the first latch opens to "capture" the data that was waiting at the input to the flip-flop when the clock switched high. During the time before the clock switched high, the data at the input of the flip-flop also showed up at the input of the second latch but did not make it any further because the second latch was in its closed state at the time. Now that the clock is high, any change in data at the input of the flip-flop goes no further than the first inverter. The flip-flop is essentially regulating the activity of all circuits that precede it.

Because the pass gates in the second latch have inverted copies of the clock, the second latch goes into its open state at the same time the first latch goes into its closed state. This means that the data stored by the first latch immediately passes through the second latch and shows up at the output of the flip-flop—after some brief propagation delay. When the clock switches high, it also "launches" the data that was present at the input of the flip-flop on to whatever circuitry is connected to the output of the flip-flop.

The first latch holds the data that was present at its input when the clock switched high for the remainder of the clock cycle. When the clock falls low, the second latch transitions back into its closed state, blocking the data path again and storing the data that the first latch was holding for it. Now the first latch goes back into its open state and allows the new data to enter it, ready to be latched again the next time the clock rises.
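The latch and flip-flop behavior just described can be sketched as a small behavioral model. This is only an illustration under simplifying assumptions: the class names `Latch` and `FlipFlop` are hypothetical, signals are ideal 0/1 values, and propagation delay, setup time, and hold time are ignored.

```python
class Latch:
    """Level-sensitive latch: transparent at one clock level, holding at the other."""

    def __init__(self, transparent_when_low=True, q=0):
        self.transparent_when_low = transparent_when_low
        self.q = q  # stored value, also the output

    def update(self, d, clk):
        transparent = (clk == 0) if self.transparent_when_low else (clk == 1)
        if transparent:
            self.q = d   # open state: output follows input
        return self.q    # closed state: output holds the stored value


class FlipFlop:
    """Two latches in series; the second one uses the opposite clock phase."""

    def __init__(self):
        self.first = Latch(transparent_when_low=True)
        self.second = Latch(transparent_when_low=False)

    def update(self, d, clk):
        return self.second.update(self.first.update(d, clk), clk)


ff = FlipFlop()
clk_seq = [0, 0, 1, 1, 0, 0, 1, 1]
d_seq = [1, 1, 1, 0, 0, 0, 0, 1]
q_seq = [ff.update(d, c) for d, c in zip(d_seq, clk_seq)]
print(q_seq)
```

In this sequence the output changes only at the two rising clock edges, and the data changes that occur while the clock is high never reach the output.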

2.3. Timing Failures

The previous discussion assumed that the data at the input of the flip-flop was in a stable state when the clock switched high. What would happen if the data and the clock were to switch at the same instant in time? CMOS logic is made from charge-coupled circuits. To change the state of the feedback pass gate in a flip-flop, it is necessary to move charge from one intrinsic capacitor to another. Some of this capacitance is a part of the transistor itself; a gate insulator sandwiched between a conductor and a semiconductor forms a capacitor. Some of the capacitance is associated with the metal that wires transistors together. It takes work to move electrons, and the alternating clock signals on either side of the feedback pass gate provide the energy to do the work.

If the data happens to change at the same time the clock is doing its work, it robs the circuit of the energy that it was using to change the state of the latch. This is not good. The result is metastability, an indeterminate state that is neither high nor low. This state may persist for several clock cycles, and it throws a wrench into the otherwise orderly digital state machine. Chip designers strive to avoid this troublesome situation by running timing analyzer programs during the process of placing and routing circuits. Signal integrity engineers strive to avoid the same situation, although their tools differ. Many of us are used to running voltage-time simulators, but few of us use timing analyzers.

The dual potential well diagram in Figure 2-5 is a useful analogy for visualizing the physics of metastability. Imagine a ball sitting at the bottom of a curved trough that is separated from an identical trough by a little hill. The first trough represents a latch that is in a zero state, and the second trough represents the same latch in a one state. Moving the ball from the first trough to the second requires the addition of some kinetic energy to get over the hump between the troughs. If the kinetic energy is just shy of the minimum threshold required, the ball may find itself "hung up" momentarily on the hill between the two troughs in a state of unstable equilibrium. A little bit of energy in the form of noise will push the ball into either of the two troughs.

Figure 2-5. Dual potential well model for CMOS latch.

2.4. Setup and Hold Constraints

To prevent the condition of metastability from occurring in a flip-flop, the circuit designers run a series of simulations at various silicon manufacturing, temperature, and voltage conditions. They carefully watch the race between the data and clock signals on their way from their respective inputs to the four pass gates inside the flip-flop, as well as the time it takes for the upper pass gates to change state. Based on the sensitivity of these timing parameters to manufacturing and operating conditions, they establish a "safety zone" in time around the rising edge of the clock during which the data must not switch if the flip-flop is to settle in a predictable state when the clock is done switching. The beginning of this safety zone is called the setup time of the flip-flop, and the end is called the hold time (see Figure 2-6). These two fundamental circuit timing parameters form the foundation for the performance limitations of a digital interface.

Figure 2-6. CMOS flip-flop setup and hold times.
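The safety zone around the clock edge can be expressed as a simple interval test. The sketch below assumes ideal, instantaneous edges; the function name `violates_window` and all numeric values are hypothetical and chosen only for illustration.

```python
def violates_window(data_edges_ns, clock_edge_ns, setup_ns, hold_ns):
    """Return the data transitions that fall inside the setup/hold window."""
    window_start = clock_edge_ns - setup_ns  # setup time marks the beginning
    window_end = clock_edge_ns + hold_ns     # hold time marks the end
    return [t for t in data_edges_ns if window_start < t < window_end]


# Hypothetical example: clock edge at 7.0 ns, 0.2 ns setup, 0.1 ns hold.
bad_edges = violates_window([1.0, 6.95, 7.5], clock_edge_ns=7.0,
                            setup_ns=0.2, hold_ns=0.1)
print(bad_edges)
```

Only the transition at 6.95 ns lands inside the window; the other two are safely outside it.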

The modern CMOS chip is a gigantic digital "state machine" that is governed by flip-flops, each of which gets its clock from a common source through a distribution network. In between the flip-flops lie various CMOS logic circuits that perform functions such as adding two binary numbers or selecting which of two data streams to pass along to the next stage of logic. Naturally, data does not pass through these logic circuits instantaneously. A circuit's characteristic propagation delay varies with the number and size of transistors and the metal connecting the transistors together.

Consider this simplest of state machines in Figure 2-7. When the left flip-flop launches a data bit into the first inverter in the chain at the tick of the clock, the data must arrive at the input of the right flip-flop before the tick of the next clock—less the setup time. If the data arrives after the setup time, the right flip-flop may go metastable or the data may actually be captured in the following clock cycle. In either case, the timing error destroys the fragile synchronization of the state machine, and the whole system comes to a grinding halt. This failure mechanism is called a setup time failure, and Figure 2-8 illustrates the timing relationship between data and clock. Depending on where you work, it may also be called a late-mode failure or max-path failure.

Figure 2-7. On-chip timing path.

Figure 2-8. Setup time failure.

Now imagine that the clock signal does not arrive at both flip-flops at the same time. This is not an uncommon problem because so many flip-flops all get their clocks from the same source. Just like everything else in the world, it is impossible to design a perfectly symmetrical clock distribution scheme that delivers a clock to each flip-flop in a chip at the same time. The difference in arrival times of two copies of the same clock at two different flip-flops is called clock skew.

Imagine that the clock arrives at the capturing flip-flop later than the launching flip-flop. Now imagine that the propagation delay of the logic circuits, including the launching flip-flop, is smaller than the clock skew. In this case, the second flip-flop will capture the data during the same cycle it was launched—that is, one cycle early. Figure 2-9 depicts this condition. This failure mechanism is called a hold time, early-mode, or min-path failure, and it is the more insidious of the two failures because the only way to fix it is to improve the clock skew or add more logic circuit delay, both of which require a chip design turn. The setup time failure can be alleviated by slowing the clock. To prevent a hold time failure from occurring, it is necessary to ensure that the logic propagation delay is longer than the sum of the clock skew and the flip-flop hold time.

Figure 2-9. Hold time failure.
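The difference between the two remedies can be seen in a few lines of arithmetic. This is a minimal sketch, assuming worst-case skew is expressed as a positive magnitude; the function names and all numbers are hypothetical.

```python
def setup_margin_ns(path_delay, setup, skew, period):
    """What is left of the clock period after path delay, setup time, and skew."""
    return period - (path_delay + setup + skew)


def hold_margin_ns(path_delay, hold, skew):
    """Path delay minus the sum of clock skew and hold time (no period term)."""
    return path_delay - (skew + hold)


# Hypothetical numbers in ns; path_delay includes the launching flip-flop.
SETUP, HOLD, SKEW = 0.2, 0.1, 0.5

for period in (5.0, 10.0):
    slow_path = setup_margin_ns(path_delay=5.3, setup=SETUP, skew=SKEW, period=period)
    fast_path = hold_margin_ns(path_delay=0.3, hold=HOLD, skew=SKEW)
    print(f"period {period:4.1f} ns: setup margin {slow_path:+.2f} ns, "
          f"hold margin {fast_path:+.2f} ns")
```

Doubling the period turns the negative setup margin positive, while the hold margin stays negative because the clock period never enters the hold calculation.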

Perhaps this illustration will be helpful. Imagine a long hallway of doors spaced at regular intervals. The doors all open at the same instant and remain open for a fixed period of time. A man named Sven is allowed to run through exactly one door at a time, after which all doors slam shut again at the same instant and remain closed for two seconds before opening again and sending Sven on his merry way. Consider four cases, as follows:

- If Sven is in good physical shape and times his steps, this process can continue indefinitely.

- If Sven needs a membership to the health club, he may find himself in between the same two doors for two slams—or longer.

- If Sven happens to be an overachiever, he may make it through two doors before hearing the last one slam behind him.

- Sven runs into the door just as it is slamming in his face.

These four cases correspond, respectively, to reliable data exchange, setup time failure, hold time failure, and metastability.

2.5. Common-Clock On-Chip Timing

The name common clock describes an architecture in which all storage elements derive their clocks from a single source. In a common-clock state machine, the clock enters the chip at a single point, and a clock tree repowers the signal to drive all the flip-flops in the state machine. The clock originates at an oscillator, and a fan-out chip distributes multiple copies of that clock to each chip in the system. The problem with the common-clock architecture is that copies of the clock do not arrive at their destination flip-flops at exactly the same instant. When clock skew began consuming more than 20% or 30% of the timing budget, people started looking for other ways to pass data between chips.

The following SPICE simulation "On-Chip Common Clock Timing" illustrates setup and hold failures. This simple network comprises two flip-flops separated by a noninverting buffer whose delay the user may alter by selectively commenting out two of the three instances of subcircuit x2. Clock sources vclk1 and vclk2 also need adjustment. Clock vclk1 (node 31) launches data from flip-flop x1, and clock vclk2 (node 32) samples data at flip-flop x3. In the default configuration of this deck, whose waveforms are shown in Figure 2-10, vclk1 launches data on the first clock cycle, vclk2 captures that data on the second clock cycle 5 ns later, and all is well.

On-Chip Common Clock Timing
*----------------------------------------------------------------------
* circuit and transistor models
*----------------------------------------------------------------------
.include ../models/circuit.inc
.include ../models/envt_nom.inc

*----------------------------------------------------------------------
* main circuit
*----------------------------------------------------------------------
vdat 10 0 pulse (0 3.3V 1.0ns 0.1ns 0.1ns 6.0ns 100ns)

* nominal clocks
vclk1 31 0 pulse (0 3.3V 2.0ns 0.1ns 0.1ns 2.4ns 5ns)
vclk2 32 0 pulse (0 3.3V 2.0ns 0.1ns 0.1ns 2.4ns 5ns)

* hold time clocks
*vclk1 31 0 pulse (0 3.3V 2.0ns 0.1ns 0.1ns 2.4ns 5ns)
*vclk2 32 0 pulse (0 3.3V 2.5ns 0.1ns 0.1ns 2.4ns 5ns)

* setup time clocks
*vclk1 31 0 pulse (0 3.3V 2.0ns 0.1ns 0.1ns 2.4ns 5ns)
*vclk2 32 0 pulse (0 3.3V 1.5ns 0.1ns 0.1ns 2.4ns 5ns)

*  d  q  clk vss vdd
x1 10 20 31  100 200 flipflop

* nominal delay buffer
*  a  z  vss vdd
x2 20 21 100 200 buf1ns

* hold time delay buffer
*   a  z  vss vdd
*x2 20 21 100 200 buf0ns

* setup time delay buffer
*   a  z  vss vdd
*x2 20 21 100 200 buf5ns

*  d  q  clk vss vdd
x3 21 22 32  100 200 flipflop

*----------------------------------------------------------------------
* run controls
*----------------------------------------------------------------------
.tran 0.05ns 20ns
.print tran v(31) v(32) v(21) v(22)
.option ingold=2 numdgt=4 post csdf
.nodeset v(x1.60)=3.3 v(x1.70)=0.0
.nodeset v(x3.60)=3.3 v(x3.70)=0.0
.end

Figure 2-10. Nominal on-chip timing.

2.6. Setup and Hold SPICE Simulations

In the setup time failure depicted in Figure 2-11, the first flip-flop launches its data during the first clock cycle, but the data does not arrive at the second flip-flop until after the second clock cycle, which means the second flip-flop does not capture the data until the third clock cycle. Clock skew exacerbates the problem by advancing the position of the second clock with respect to the first (clock skew = 2.0 ns - 1.5 ns = 0.5 ns). The sum of the flip-flop delay, noninverting buffer delay, and setup time is greater than the clock period, and a setup time failure occurs.

Figure 2-11. On-chip setup time failure.

Figure 2-12 demonstrates the other extreme: the hold time failure. The combined delay of the first flip-flop and the noninverting buffer is less than the sum of the hold time of the second flip-flop and the clock skew (clock skew = 2.0 ns - 2.5 ns = -0.5 ns). The second flip-flop captures the data during the same clock cycle in which the first flip-flop launched the data, and a hold time failure occurs.

Figure 2-12. On-chip hold time failure.

2.7. Timing Budget

The simple spreadsheet timing budget in Table 2-1 expresses the relationship between each of these delay elements and the corresponding timing constraints: one column for setup time and one column for hold time. Notice that the setup column contains an entry for the clock period while the hold column does not. That is because a hold time failure occurs between two rising clock edges separated by skew in the same clock cycle; it is entirely independent of clock period, which is why you cannot fix this devastating problem by simply lengthening the clock cycle. (Take it from one who knows: Devastating is no exaggeration.) The setup time failure, on the other hand, occurs when the sum of all timing parameters is longer than the clock period.

Table 2-1. On-Chip Timing Budget

The second thing to notice is that flip-flop hold time appears only in the hold column, while flip-flop setup time appears only in the setup column. These two numbers represent the boundaries of the metastability window. The setup column numerically describes the physical constraint that data needs to switch to the left of the metastability window as marked by the setup time at the next clock cycle. The hold column conveys a different idea: Data cannot switch during the same clock cycle it was launched before the metastability window, as marked by the hold time.

Finally, the hold column contains negative numbers because the hold time failure is a race between delay elements (protagonists) on one side and the sum of clock skew and hold time (antagonists) on the other side. Therefore, the delay elements are positive, while the clock skew and hold time are negative. Summing all hold timing parameters in rows 1 through 5 results in a positive operating margin when the hold constraints are met and a negative margin when they are not. The numbers in rows 1 through 5 of the setup column are all positive, and setup operating margin is the difference between the clock period and the sum at row 6. Clock skew always detracts from operating margin.
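The sign conventions of the two columns can be sketched as a tiny spreadsheet. The 5 ns clock period and 0.5 ns skew come from the example deck; every other value, and the row order, is a hypothetical placeholder rather than the contents of Table 2-1.

```python
CLOCK_PERIOD_NS = 5.0  # clock period used in the example deck

# (parameter, setup column, hold column); None means the row does not apply.
# Delay rows use hypothetical maximum values for setup and minimum values for hold.
rows = [
    ("flip-flop delay", 0.4, 0.2),
    ("buffer delay", 1.2, 0.8),
    ("flip-flop setup", 0.2, None),
    ("flip-flop hold", None, -0.1),
    ("clock skew", 0.5, -0.5),
]

setup_sum = sum(s for _, s, _ in rows if s is not None)
hold_margin = sum(h for _, _, h in rows if h is not None)
setup_margin = CLOCK_PERIOD_NS - setup_sum

print(f"setup margin: {setup_margin:.2f} ns")
print(f"hold margin:  {hold_margin:.2f} ns")
```

With these placeholder numbers both margins are positive. Note that clock skew reduces both of them, and that the clock period appears only in the setup margin.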

The preceding somewhat artificial example swaps three different delay buffers into the x2 subcircuit under nominal conditions that, of course, would never happen in a real chip. Setup and hold timing analysis on an actual design proceeds by subjecting the logic circuits between two flip-flops to the full range of process, temperature, and voltage variations. To accomplish this in SPICE, the example decks in this chapter call an include file, which in turn calls one of three transistor model parameter files for fast, slow, and nominal silicon. It also sets the junction temperature and power supplies.

*----------------------------------------------------------------------
* transistor model parameters
*----------------------------------------------------------------------
.include proc_nom.inc

*----------------------------------------------------------------------
* junction temperature (C)
*----------------------------------------------------------------------
.temp 55

*----------------------------------------------------------------------
* power supplies
*----------------------------------------------------------------------
vss 100 0 0.0
vdd 200 0 3.3
vssio 1000 0 0.0
vddio 2000 0 3.3
rss 100 0 1meg
rdd 200 0 1meg
rssio 1000 0 1meg
rddio 2000 0 1meg

The fast and slow transistor model parameter files call the same set of parameters as the nominal file but pass different values representing the predicted extreme manufacturing conditions over the lifetime of the semiconductor process. A typical range of junction temperatures for commercial chips might be 25 to 85 °C. Processor chips often run hotter. Chip designers commonly use ±10% for power supply voltage variation, but a well-designed power distribution network seldom sees more than ±5% from dc to 100 MHz. Transient IO voltages may droop more than 5% when many drivers switch at the same time, but that is the topic of another book.
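One way to manage the corners is to generate the environment include file from a short script. The sketch below is an assumption-laden illustration: the file names `proc_fast.inc` and `proc_slow.inc` are hypothetical (only `proc_nom.inc` appears in the deck above), and pairing fast silicon with low temperature and high supply voltage is a common convention for CMOS of this era, not something the model files themselves dictate.

```python
NOMINAL_VDD = 3.3  # volts, as in the nominal include file


def envt_include(process, temp_c, vdd):
    """Return include-file text for one process/temperature/voltage corner."""
    lines = [
        f".include proc_{process}.inc",  # proc_fast/proc_slow names are assumed
        f".temp {temp_c:g}",
        "vss 100 0 0.0",
        f"vdd 200 0 {vdd:.2f}",
        "vssio 1000 0 0.0",
        f"vddio 2000 0 {vdd:.2f}",
        "rss 100 0 1meg",
        "rdd 200 0 1meg",
        "rssio 1000 0 1meg",
        "rddio 2000 0 1meg",
    ]
    return "\n".join(lines)


# Temperature range and +/-10% supply tolerance taken from the text above.
corners = {
    "fast": envt_include("fast", 25, NOMINAL_VDD * 1.10),
    "nom": envt_include("nom", 55, NOMINAL_VDD),
    "slow": envt_include("slow", 85, NOMINAL_VDD * 0.90),
}

for name, text in corners.items():
    print(f"* ---- envt_{name}.inc ----")
    print(text)
```

Hold analysis would typically use the fast corner for the data path, and setup analysis the slow corner.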

2.8. Common-Clock IO Timing

Several factors make timing a common-clock digital interface more complicated than timing an on-chip path:

• Delay from the chip clock input pin to the flip-flop (clock insertion delay)

• Skew between clock input pins on the driving and receiving chips

• Combination of driver and interconnect delay

• Receiver delay

A good part of this additional complexity is hidden beneath chip timing specifications. However, it is important to understand these underlying complexities, especially when writing timing specifications for a new ASIC.

*  a  oe  pad vss vdd vssio vddio
x2 20 200 21  100 200 100   200   drv
t1 21 0 22 0 td=1ns z0=50
*  a  z  vss vdd
x3 22 23 100 200 rcv

The differences between the on-chip and IO SPICE decks are not many. A driver, transmission line, and receiver replace the string of inverters. In addition to data input and output pins, the driver also has an output enable input pin (oe) that, when high, takes the driver out of its high-impedance state and allows the output transistors to drive the transmission line. An extra set of power supply rails (vssio and vddio) isolates the final stage of the driver from the core power and ground buses; the transient currents moving through the final stage are so large that the voltage noise they generate is best kept away from the core circuitry of the chip.

The ideal transmission line models a point-to-point connection between two chips on a printed circuit board. Typical common-clock interconnect simulations involve a multidrop net topology, lossy transmission lines, chip packages, and maybe a connector or two. The clock trees in Figure 2-13 appear in the timing budget but not in the simulation; they are simply too large to include in signal integrity simulations. The clock distribution chip does not appear in this simulation, either, although it is important to simulate these nets separately, as clocks are some of the most critical nets in any system.

Figure 2-13. Common-clock interface.

When the input to the clock distribution chip switches, a clock signal travels down the wire from the output of the clock chip to the clock input of Chip 1, where the clock tree distributes it to every flip-flop on the chip. When the flip-flop in front of the driver sees the rising edge of the clock, it grabs the data at its input and launches it out toward the driver, which repowers the data and sends it off the chip and down the interconnect—a 1 ns 50 ohm transmission line, in this case. After a delay of 1 ns, the data signal shows up at the input of the receiver.

Meanwhile, a clock signal from the second tick of the clock travels from the clock chip to Chip 2, through its clock tree, and arrives at the clock input of the flip-flop on the other side of the receiver. If all goes well, the data signal will arrive at the data input of the flip-flop on Chip 2 slightly before the clock signal does—with a difference of at least the setup time. To ensure that this is always the case, you must construct a timing budget similar to Table 2-1 but with extra terms to account for the additional complexity. Table 2-2 adds three new lines.

Table 2-2. IO Timing Budget

As was the case with the on-chip timing budget, clock skew comes right off the top and counts against the margin. The IO budget must account for system clock skew in addition to variations in two on-chip clock trees (rows 2 and 5 in Table 2-2). System clock skew has at least three possible origins. The output pins on the clock distribution chip never switch at exactly the same instant in time, and there is a specification in the chip datasheet for this. There are also differences in propagation delay down the segments L1 and L2. Even though these may be on the same printed circuit board, there will be layer-to-layer variation in dielectric material and trace geometry. Finally, the two chips will not have identical loading.

The timing budget in Table 2-1 assumed both flip-flops were on the same chip and neglected the clock insertion delay, defined as the difference in time between the switching of the chip's clock input and the arrival of that clock at the flip-flop. (This definition of clock insertion delay encompasses on-chip clock skew.) If clock insertion delay were identical for both chips, it would add to Chip 1 delay and subtract the same number from Chip 2 delay. However, silicon processing and operating conditions introduce variations that complicate things.

Assume the two chips depicted in Figure 2-13 are identical, and their nominal clock insertion delay is 0.3 ns. This delay is probably realistic for a 0.5 µm CMOS technology, which was state-of-the-art at the same time as 200 MHz common-clock systems. Also assume that the clock tree has a process-temperature-voltage tolerance of 50%, a little worse than the transistor models predict for this imaginary CMOS process.

In the hold timing budget, worst-case timing occurs when the data path looking back from the capturing flip-flop is fast and the clock path looking back from the capturing flip-flop is slow. Tracing from the data input to the flip-flop on Chip 2 all the way to the source of the clock, which is the clock distribution chip, the clock tree on Chip 1 is actually in the data path. This implies that the clock insertion delay number in row 2 of the timing budget needs to be at its smallest possible value to generate worst-case hold conditions. Worst-case conditions for a setup time failure are the converse of those for a hold time failure. A large clock insertion delay on Chip 1 implies that the data arrives at the target flip-flop on Chip 2 later in time; this condition aggravates setup margin.

The flip-flop delay, setup, and hold times are present as they were in the on-chip budget. New to the budget is the combined delay of the driver and transmission line, considered here as one unit because the delay through the driver is very much a function of its loading. This delay is defined as the difference between the time when the driver input crosses its threshold, usually VDD/2, and the time when the receiver input exits its threshold window, usually some small tolerance around VDD/2 for a simple CMOS receiver. Note that the percent tolerance of this delay is less than it is for circuits on silicon because the transmission line delay is a large portion of the total delay, and its tolerance is zero for the purposes of this analysis.

Things get a little tricky on rows 5 and 6 because there is a race condition between the clock path and the data path on Chip 2. Using worst-case numbers for both these delays would result in an excessively conservative budget because process-temperature-voltage variations are much smaller when clock and data circuits reside on the same chip. A crude assumption would be to simply take the difference between the nominal clock insertion delay of 0.3 ns and the nominal receiver delay of 0.17 ns. The clock would arrive at the flip-flop 0.13 ns later than the data if they both arrived at the pins of Chip 2 simultaneously. Clock insertion delay at Chip 2 is favorable for setup timing but detrimental for hold timing. To obtain a more accurate number, consult a friendly ASIC designer and ask for a static timing analysis run.

As in the on-chip budget, the hold timing margin is simply the sum of rows 1 through 8. Clock period does not come into play in a hold budget because all the action happens between two rising edges of the same clock cycle that are skewed in time. Setup timing margin is the clock period, 5 ns, less the sum of rows 1 through 8.
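The IO budget arithmetic can be sketched the same way as the on-chip budget. The 5 ns period, the 0.3 ns nominal clock insertion delay with its 50% tolerance, and the 0.17 ns nominal receiver delay come from the text; the remaining values and the row order are hypothetical and do not reproduce Table 2-2. Chip 2 uses the crude nominal assumption described above.

```python
PERIOD_NS = 5.0
CLK_INS_NOM, CLK_INS_TOL = 0.30, 0.50  # Chip 1 clock insertion delay and tolerance
RCV_NOM = 0.17                         # Chip 2 nominal receiver delay

clk_ins_max = CLK_INS_NOM * (1 + CLK_INS_TOL)  # worst case for setup
clk_ins_min = CLK_INS_NOM * (1 - CLK_INS_TOL)  # worst case for hold

# (parameter, setup column, hold column); None means the row does not apply.
# Values not taken from the text are hypothetical placeholders.
rows = [
    ("system clock skew", 0.30, -0.30),
    ("Chip 1 clock insertion delay", clk_ins_max, clk_ins_min),
    ("flip-flop delay", 0.40, 0.20),
    ("driver and interconnect delay", 2.00, 1.60),
    ("Chip 2 receiver delay", RCV_NOM, RCV_NOM),
    ("Chip 2 clock insertion delay", -CLK_INS_NOM, -CLK_INS_NOM),
    ("flip-flop setup", 0.20, None),
    ("flip-flop hold", None, -0.10),
]

setup_sum = sum(s for _, s, _ in rows if s is not None)
hold_margin = sum(h for _, _, h in rows if h is not None)
setup_margin = PERIOD_NS - setup_sum

print(f"setup margin: {setup_margin:.2f} ns")
print(f"hold margin:  {hold_margin:.2f} ns")
```

The Chip 2 clock insertion delay carries a negative sign in both columns: it lowers the setup sum, which helps setup margin, and it lowers the hold sum, which hurts hold margin.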

Early CMOS system architects realized that clock insertion delay was a pinch-point for CMOS interfaces, so they began using phase-locked loop circuits (PLLs) to "zero out" clock insertion delay at the expense of jitter and phase error. Contemporary digital interfaces rely heavily on PLLs, and signal integrity engineers need to pay close attention to PLL analog supplies to keep jitter in check.

2.9. Common-Clock IO Timing Using a Standard Load

Unless they are directly involved in designing a new ASIC, signal integrity engineers do not typically have access to on-chip nodes in their simulations. If they succeed in convincing a chip manufacturer to part with transistor-level driver and receiver models, they can connect a voltage source to the input of a driver and monitor the output of a receiver. But that is where it ends. IO Buffer Information Specification (IBIS) models are the standard for interfaces running slower than 1 Gbps (they are capable of running faster), and IBIS only facilitates modeling that part of the IO circuit connected directly to the chip IO pad. This situation is not entirely unfavorable since chip vendors are forced to write their specifications to the package pin, a point accessible to both manufacturing final test and the customer. Having access to only the driver output and receiver input simplifies the chip-to-chip timing problem considerably—assuming the chip vendor did a thorough job writing the chip timing specifications.

How does a chip designer arrive at IO timing specifications that are valid at the package pins rather than the flip-flop? For input setup and hold specifications, they begin with the flip-flop setup and hold specifications and take into consideration the difference between the receiver delay and the clock insertion delay, including both the logic gate delays and the effects of wiring. Looking back from the flip-flop on the receive chip, the receiver circuit is in the data path, and the clock tree is in the clock path.

Therefore, to specify a setup time requirement at the package pins, they consider the longest receiver delay and the shortest clock insertion delay. Conversely, the hold time specification involves the shortest receiver delay and the longest clock insertion delay. Because both the receiver and the clock tree are on the same chip, their delays will track each other and never see the full chip-to-chip process variation. In addition to the on-chip delays, the setup and hold time specifications must also account for variations in package delay.
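The bookkeeping described above can be sketched in a few lines of Python. This is only an illustration: the function name and every delay value are hypothetical rather than taken from a datasheet, and package delay variation is left out for brevity.

```python
def pin_setup_hold(ff_setup, ff_hold, rx_delay, clk_insertion):
    """Translate flip-flop setup/hold times to the package pins.

    rx_delay and clk_insertion are (min, max) tuples; all values in ns.
    """
    rx_min, rx_max = rx_delay
    clk_min, clk_max = clk_insertion
    # Setup: slowest data path (longest receiver delay) against the
    # earliest clock (shortest clock insertion delay).
    setup_at_pin = ff_setup + rx_max - clk_min
    # Hold: fastest data path (shortest receiver delay) against the
    # latest clock (longest clock insertion delay).
    hold_at_pin = ff_hold - rx_min + clk_max
    return setup_at_pin, hold_at_pin


# Hypothetical numbers for illustration only (ns).
setup_pin, hold_pin = pin_setup_hold(
    ff_setup=0.10, ff_hold=0.05,
    rx_delay=(0.30, 0.50), clk_insertion=(0.40, 0.60))
print(f"setup at pin = {setup_pin:.2f} ns, hold at pin = {hold_pin:.2f} ns")
```

Because the receiver and the clock tree sit on the same die and track each other, the minimum and maximum values fed to a calculation like this would normally span less than the full chip-to-chip process range.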

Chip output timing specifications are also relative to the package pins. The output data valid delay for a chip, sometimes called clock-to-output delay, is a combination of clock insertion delay, flip-flop clock-to-Q delay, and driver output delay with one caveat: The delay of the driver is a function of its load, and the driver may see a wide variety of loading conditions unknown to the chip designer.

The standard load or timing load resolves this ambiguity. The standard load usually comprises some combination of transmission line, capacitor, and termination resistor(s) intended to match a typical load as the chip designer perceives it. Along with the standard load, the vendor also specifies a threshold voltage that defines the crossing points for both the clock input and the data output waveforms. The output data valid specification quantifies the delay between the clock input switching and the data output switching, each through their corresponding thresholds, with the standard load connected to the package output pin. As a signal integrity engineer, your job is to understand and quantify the difference between the standard load and your actual load.

Extracting timing numbers from simulation using a standard load is straightforward. Simulate the driver with its standard load alongside the driver, interconnect, and receiver in the same input deck, as shown in Figure 2-14, using the same driver input signal for both copies of the driver. The interconnect delay timing interval begins when the output of the standard load driver crosses the threshold, VT, specified in the chip datasheet and ends when the input of the receiver crosses one of the two threshold values, VIH and VIL, that define its switching window.

Figure 2-14. Actual load and standard load.

The interconnect timing interval in Figure 2-15 begins when the standard load crosses VT and ends when the actual load crosses VIL for the first time. Since the fast case corresponds to a hold time analysis, we use the earliest possible time the receiver could switch. In Figure 2-16, interconnect delay begins when the standard load crosses VT and ends when the actual load crosses VIH for the last time. The longest possible interconnect delay is consistent with a setup time analysis. Of course, some pathological combination of reflections could always result in the fast case producing the worst-case setup delay, so it pays to consider the possibilities.
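A minimal sketch of this measurement, assuming the simulator exports each waveform as sampled time and voltage lists, might look like the following. The function names, the piecewise-linear waveforms, and the threshold values (VT = 1.65 V, VIL = 0.8 V, VIH = 2.0 V) are hypothetical and chosen only to exercise the first-crossing and last-crossing rules for a rising edge.

```python
def crossings(times, volts, threshold):
    """Return linearly interpolated times at which the waveform crosses threshold."""
    found = []
    for i in range(len(times) - 1):
        a = volts[i] - threshold
        b = volts[i + 1] - threshold
        if a * b < 0.0:  # sign change between adjacent samples
            frac = a / (a - b)
            found.append(times[i] + frac * (times[i + 1] - times[i]))
    return found


def interconnect_delay(std_load, receiver, vt, vil, vih):
    """Fast and slow interconnect delay for a rising edge.

    std_load and receiver are (times, volts) tuples.
    """
    t_ref = crossings(std_load[0], std_load[1], vt)[0]
    # Fast case (hold analysis): first time the receiver input crosses VIL.
    fast = crossings(receiver[0], receiver[1], vil)[0] - t_ref
    # Slow case (setup analysis): last time the receiver input crosses VIH.
    slow = crossings(receiver[0], receiver[1], vih)[-1] - t_ref
    return fast, slow


# Hypothetical waveforms: time in ns, voltage in V.
std_load = ([0.0, 1.0, 2.0, 3.0], [0.0, 0.0, 3.3, 3.3])
receiver = ([0.0, 1.2, 1.6, 1.8, 2.2, 3.0], [0.0, 0.0, 2.4, 1.8, 3.3, 3.3])
fast, slow = interconnect_delay(std_load, receiver, vt=1.65, vil=0.8, vih=2.0)
print(f"fast = {fast:.3f} ns, slow = {slow:.3f} ns")
```

In this made-up case the receiver input has a reflection that dips back below VIH, so the slow delay is set by the final VIH crossing, while the fast delay comes out negative because the receiver input crosses VIL before the standard load crosses VT.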

Figure 2-15. Fast interconnect delay with standard load.

Figure 2-16. Slow interconnect delay with standard load.

The following analysis assumes no crosstalk-induced delay. Further discussion of the relationship between crosstalk and timing can be found in Chapter 4, "DDR2 Case Study," and Chapter 9, "PCI Express Case Study."

Note that it is possible to have a negative interconnect delay if the actual loading conditions are lighter than the standard load. This does not mean the signal is traveling faster than the speed of light! Rather, it is simply an artifact of the vendor's choice for standard load and the signal integrity engineer's choice of net topology. If the vendor chose a 50 pF standard load and you are timing a T net topology with two 5 pF loads at the end of a 4-inch transmission line, the actual load will switch before the standard load. In fact, the choice of standard load is not terribly significant so long as the simulations use the same load specified in the component datasheet.

It is also possible for the fast interconnect delay to be larger than the slow interconnect delay, as is the case for this configuration of driver and net. This looks strange in the budget, but rest assured that the sum of the fast driver and interconnect delays is actually smaller than the same sum in the slow case, as Table 2-3 proves. This is just another artifact of the choice of standard load.

Table 2-3. Driver and Interconnect Delay

The IO timing budget in Table 2-4 closely resembles the on-chip timing budget in Table 2-1 with Chip 1 taking the role of the first flip-flop, Chip 2 taking the role of the second flip-flop, and interconnect delay replacing the noninverting buffer delay. It is somewhat unusual to see a 0.41 ns setup and hold window in a datasheet for a chip running at 200 MHz. Although this is not difficult to achieve in silicon with a 0.5 µm CMOS process, chip vendors prosper when specifications are conservative and final test yields are high.

Table 2-4. IO Timing Budget Using Standard Load

Signal integrity engineers prosper when they successfully design and implement reliable digital interfaces. This fundamental conflict of interests often makes it difficult for signal integrity engineers to close timing budgets on paper. Fortunately, contemporary industry standard specifications that evolved with input from the user community have more realistic timing numbers.

Receiver thresholds are another area of creeping conservatism. Chapter 3 demonstrates that the actual receiver input window within which a receiver's output will transition from low to high is very narrow, on the order of 10 mV. Yet a typical VIH/VIL range for 3.3 V CMOS logic is 1.2 V (0.8 V to 2.0 V), which is an artifact of transistor-transistor logic (TTL) legacy.

The two dashed lines in Figures 2-15 and 2-16 represent VIH and VIL at fast and slow process-voltage-temperature corners. At least two philosophies exist for defining interconnect timing. In the first philosophy, setup timing uses the longest interconnect interval defined by the latest receiver threshold crossing; that is, VIH for the rising edge and VIL for the falling edge. Hold timing uses the first VIL crossing for the rising edge and the first VIH crossing for the falling edge.

The second camp maintains the position that thresholds track with VDD by a factor of 50% for a typical CMOS receiver. Why time the receiver to fast thresholds when the driver is at low VDD? The two will never be off by more than 1% or 2% for a well-designed power delivery system. This example uses this second approach, but there is not much difference between the two because the receiver thresholds came from simulations of the receiver. When receiver thresholds come from a conservative component specification, the difference can be substantial.

2.10. Limits of the Common-Clock Architecture

The common-clock IO timing budget demonstrates how large-scale distribution of a low-skew clock caps system performance somewhere between 200 and 300 MHz. Supercomputer makers were able to push this to 400 MHz, but not without a huge investment in custom technology. Multidrop net topologies impose their own set of limitations. In the past decade, point-to-point source synchronous and high-speed serial interfaces became the bridges that enabled the next level of system performance.

The common-clock architecture is still alive and well in digital systems everywhere. Although it may not be exciting to work on these nets, ignoring them is sure to bring more trouble than any signal integrity engineer would care to deal with. Any time the input to a flip-flop violates the setup and hold window, a timing failure will occur, whether the data is switching once every 10 ns or once every 200 ps.

2.11. Inside IO Circuits

At first glance, it may seem that signal integrity engineering has more to do with packaging and interconnect technology than it has to do with silicon. After all, signal integrity engineers worry about things such as skin effect, dielectric losses, and coupling in three-dimensional structures; transporting electromagnetic energy from point A to point B is the primary goal. However, the output driver circuit is the source of the electromagnetic disturbances, and the receiver circuit must convert the electromagnetic energy incident on its input transistors into digital information. An elementary understanding of IO circuits helps us make decisions about what simulations are necessary to ensure the reliability of a digital interface.

For example, skin effect is a frequency-dependent phenomenon. The frequency content of a driver's output waveform determines whether a lossy transmission line model is required or whether an ideal transmission line is sufficient. Knowledge of a driver's rise time is necessary to make this decision. Unfortunately, the electrical characteristics of an IO circuit are often difficult to come by since many chip datasheets still contain only the most rudimentary dc specifications.

An IBIS model can fill in the gaps, but effective use of any component model implies a basic knowledge of the assumptions beneath the model. Some circuits make the transition between transistor-level modeling and behavioral modeling naturally, whereas others get trapped in between because the fundamental set of behavioral modeling assumptions is not consistent with the circuit function. This chapter provides an overview of the "workhorse" CMOS IO circuits and a platform from which to reach the more esoteric circuits.

2.12. CMOS Receiver

Although they often go overlooked, receiver thresholds are critical parameters for any digital IO interface. Perhaps the reason they go overlooked is that reliable and useful numbers remain so elusive. They don't need to be, though. Characterizing the input thresholds of a receiver is not difficult.

A receiver circuit interprets the signal on the net and translates it into a language the chip can understand—1s and 0s. The simple CMOS receiver in Figure 2-17 is a pair of inverters. If the n-channel and p-channel transistors have the right dimensions (that is, resistance), the inverter will change states when the input crosses VDD/2 and all four transistors are biased on. At this point, both the n- and p-channels have equal resistance, and the entire circuit functions as a pair of voltage dividers. The output will also be VDD/2.

Figure 2-17. CMOS receiver.

The dc transfer characteristic in Figure 2-18 plots receiver output voltage against the input voltage, and a close look shows that the receiver does not switch exactly at VDD/2 in a vertical line; there is some region of uncertainty called the threshold window. Traditional circuit analysis defines the boundaries of the threshold window using the unity gain points.

Figure 2-18. Receiver dc transfer characteristic.

Consider the receiver circuit as an amplifier. If biased near the threshold, the receiver will amplify a small signal superimposed on the dc bias level. By moving the dc bias level around, you will find two values at which the gain of the amplifier is equal to one—that is, output amplitude equals input amplitude. These are the unity gain points, and the receiver amplifies all signals that are biased between these points. An easier way to find these points is to plot a line whose slope is one on the dc transfer characteristic and find where this line is tangent to the curve. The input voltages that correspond to these two points of tangency are the unity gain points.
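The tangent-line construction is equivalent to finding where the slope of the dc transfer characteristic equals one, which is easy to do numerically on swept data. The sketch below assumes a synthetic tanh-shaped transfer curve with a hypothetical gain constant; a real curve would come from a dc sweep of the receiver model.

```python
import math


def unity_gain_points(vin, vout):
    """Return input voltages where the slope of vout(vin) crosses 1."""
    # Central-difference slope at each interior sample; slope[i] belongs to vin[i + 1].
    slope = [(vout[i + 1] - vout[i - 1]) / (vin[i + 1] - vin[i - 1])
             for i in range(1, len(vin) - 1)]
    points = []
    for i in range(len(slope) - 1):
        a = slope[i] - 1.0
        b = slope[i + 1] - 1.0
        if a * b < 0.0:  # slope passes through one between these samples
            frac = a / (a - b)
            points.append(vin[i + 1] + frac * (vin[i + 2] - vin[i + 1]))
    return points


# Synthetic noninverting transfer curve (hypothetical shape and gain constant).
VDD = 3.3      # volts
K = 1000.0     # 1/V, sets how abruptly the receiver switches
vin = [i * 1e-4 for i in range(33001)]   # 0 to 3.3 V in 0.1 mV steps
vout = [0.5 * VDD * (1.0 + math.tanh(K * (v - 0.5 * VDD))) for v in vin]

low, high = unity_gain_points(vin, vout)
print(f"threshold window: {low:.4f} V to {high:.4f} V "
      f"({(high - low) * 1e3:.2f} mV wide)")
```

The sweep step has to be much finer than the threshold window itself; a coarse sweep would step right over a window that is only a few millivolts wide.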

The distance between unity gain points for the CMOS receiver in this fictional 0.5 µm circuit library is on the order of 10 mV, next to nothing. Variations in process and temperature affect the size of the threshold window very little, but the receiver threshold for this circuit does track differences between VDD and VSS by a factor of one half. This makes the thresholds sensitive to dc variation in supply voltage, as well as high-frequency ac noise from within the chip and mid- to low-frequency ac noise from without.

Chip designers must account for high-frequency on-chip ac noise either in the timing specifications or in the receiver thresholds. If they choose timing specifications, then typical receiver thresholds for a 0.5 µm CMOS process might vary ±165 mV around 1.65 V (that is, tracking a ±10% tolerance at the 3.3 V VDD pins by one half). If they choose to account for high-frequency on-chip ac noise in the dc input characteristics, the threshold window will probably be slightly larger. They had better not choose both ways!

In either case, the actual receiver threshold window is much narrower than the ancient 0.8 V and 2.0 V TTL specifications commonly seen in 3.3 V parts. If you use TTL specifications to define the right-hand side of an interconnect delay interval, as shown previously in Figures 2-15 and 2-16, this extra conservatism translates directly into lower system performance. We might expect IBIS models to contain more accurate threshold voltage numbers since the standard was intended to meet the needs of the signal integrity community, but that is not necessarily the case. It would appear that the authors of many IBIS models simply copy the receiver thresholds directly from the component datasheet rather than extracting them from simulations.

2.13. CMOS Differential Receiver

As data rates climbed and supply voltages dropped, low-swing differential signaling technologies became attractive. The CMOS differential receiver resembles the emitter-coupled logic NOR gate (also known as the bipolar differential amplifier) found in Motorola's "MECL System Design Handbook." It is a current-mode circuit in which the two input transistors, M1 and M2, in Figure 2-19 are alternately biased into conduction. Beneath them sits a current source. The two resistors hanging from the VDD rail are really transistors biased into conduction and sized to give the desired resistance.

Figure 2-19. CMOS differential receiver.

When M1 and M2 are driven differentially, the current from the source sloshes back and forth between the two parallel branches of the circuit, generating a voltage drop across whichever resistor happens to be carrying the current. An alternate implementation biases M2 with a dc reference voltage (Vref) and drives M1 with a single-ended signal.

The differential configuration has the advantage of an effective input edge rate that is twice as high as the single-ended configuration, making the circuit less sensitive to delay modulation induced by crosstalk or reflections. The single-ended implementation uses half as many package pins but requires distribution of a high-quality reference voltage, which is sensitive to noise and requires close attention to layout.

2.14. Pin Capacitance

Pin capacitance is yet another source of conservatism. A wide tolerance on a pin capacitance in a simulation model may not make or break a point-to-point net, but multiply that tolerance by eight for a memory address net and the simulation results can indicate that the interface will never function at the desired performance level when the actual system may never fail. This leaves the signal integrity engineer with the uncomfortable decision of accepting the risk of a design that is inconsistent with the component specification (the vendor could ship hardware to that spec) or lowering the performance target. A third solution exists: Write a purchase specification that calls for hardware-verified models and design to those models.

Pin capacitance originates in several places. Looking into a chip output pin, we see the sources and drains of the parallel output transistors that make up the driver's final stage. Both drivers and receivers require electrostatic discharge (ESD) protection devices, which are often just large transistors connected in such a way as to expose their intrinsic source-to-well or drain-to-well junction capacitances to the IO pad. In a wire bond package, the bond pad and the underlying substrate form a parallel plate capacitor. In a flip-chip package, the solder ball contributes capacitance, as does the metal between the IO circuit and the solder ball.

Finally, there is the package itself, which may take on many different forms. Because the chip datasheet specifies parameters at the package pins, the pin capacitance specification also includes the package. However, the package capacitance and the silicon-related capacitance are separate in most chip models used by signal integrity engineers. At rise times below 500 ps, it doesn't make much sense to model a 0.5-inch stripline in a ball grid array (BGA) package as a lumped capacitance.

With the exception of the package, these various sources of capacitance lie buried inside the model of an IO circuit. Even if the SPICE code for the circuit model were unencrypted, it would be difficult to calculate the pin capacitance from the transistor sizes, device parameters, and transistor model equations. Fortunately, there is a relatively easy way to extract IO pin capacitance from a SPICE simulation if the equivalent model for this circuit is a simple capacitor and a high impedance. For a driver, this implies that there is a tri-state enable function. For a receiver, this implies that there are no on-die termination devices. Agilent Technologies has published an excellent application note on this technique titled, "Measuring Parasitic Capacitance and Inductance Using TDR."

The reflection of a rising step from an ideal capacitor is a negative-going pulse whose area is proportional to the value of the capacitor. The SPICE simulation network that produced the pulse shown in Figure 2-20 is simple: an ideal 50 ohm source driving an ideal 50 ohm transmission line with an ideal 50 ohm termination. Place the device-under-test (driver or receiver) in the middle of the transmission line, which must be long enough relative to the pulse width and the rise time of the source to allow reflection to occur well after the source is done switching. First, integrate the area between dc level and the negative pulse. Second, multiply by two and divide by the transmission line impedance. This calculation yields the equivalent capacitance of the IO circuit. Test this technique using a known capacitor prior to using it to extract circuit capacitance.

Equation 2-1

Figure 2-20. TDR extraction of IO pin capacitance.
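The two-step recipe above can be checked numerically before applying it to a real IO circuit. In the sketch below, the function name and waveform are hypothetical, and the division by the incident step amplitude is an added assumption: it reduces to the recipe in the text when the step is normalized to 1 V. The test waveform is the ideal exponential dip that a known shunt capacitor would produce in the middle of a matched line.

```python
import math


def tdr_capacitance(times, volts, v_dc, v_step, z0):
    """Estimate shunt capacitance from the dip in a reflected TDR waveform.

    Integrates the area between the dc level and the waveform (trapezoid
    rule), normalizes by the incident step amplitude, multiplies by two,
    and divides by the transmission line impedance.
    """
    area = 0.0
    for i in range(len(times) - 1):
        d0 = v_dc - volts[i]
        d1 = v_dc - volts[i + 1]
        area += 0.5 * (d0 + d1) * (times[i + 1] - times[i])
    return 2.0 * area / (z0 * v_step)


# Known-capacitor check (hypothetical values): 5 pF in the middle of a 50 ohm line.
C_KNOWN = 5e-12    # farads
Z0 = 50.0          # ohms
V_STEP = 1.0       # volts, incident step amplitude at the capacitor
tau = Z0 * C_KNOWN / 2.0   # the capacitor sees two 50 ohm lines in parallel

times = [i * 1e-12 for i in range(5001)]   # 0 to 5 ns in 1 ps steps
volts = [V_STEP - V_STEP * math.exp(-t / tau) for t in times]

c_est = tdr_capacitance(times, volts, v_dc=V_STEP, v_step=V_STEP, z0=Z0)
print(f"extracted capacitance = {c_est * 1e12:.3f} pF")
```

With a real driver or receiver model, the integration window should cover only the reflected pulse, which is why the line must be long enough to separate the reflection from the source edge.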

2.15. Receiver Current-Voltage Characteristics

If a receiver has some form of on-die termination (ODT), its current-voltage (IV) curves indicate how well it can absorb excess energy present on a net. There is strong motivation to study the current-voltage characteristics of a new IO circuit before putting it to use.

On-die termination is a useful solution to two common problems: 1) a transmission line stub between the package pin and the termination resistor, and 2) scarce printed circuit board real estate for large buses. Common configurations for on-die termination are parallel 100 ohm resistors to VSS and VDDIO for push-pull drivers, 50 ohm to VTT for open-drain drivers, or 100 ohm differential for low-voltage differential signaling (LVDS). Active termination is a more exotic circuit that turns on when the input passes some set of thresholds but draws no dc power when the input is below the thresholds.

The aggregate IV curve for a receiver with on-die termination is the superposition of the ESD diode curve and the termination curve. One word of caution: Resistors on silicon typically have a much wider tolerance (~30%) than what you might expect from a resistor pack or discrete component available to a PC board designer, so it is essential to ensure that the interface will still function with these wide tolerances when using on-die termination. See Chapter 4, "DDR2 Case Study," for a practical example of DDR2 ODT.
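To get a feel for what a 30% tolerance means, the sketch below computes the equivalent termination of the split 100 ohm configuration and the resulting reflection coefficient. It assumes a 50 ohm line, ideal resistors, and that both on-die resistors drift together because they share a die; these are illustrative assumptions, not properties of any particular part.

```python
def thevenin_odt(r_to_vddio, r_to_vss):
    """Equivalent ac termination of a split (VDDIO/VSS) on-die terminator."""
    return r_to_vddio * r_to_vss / (r_to_vddio + r_to_vss)


def reflection_coefficient(r_term, z0):
    """Reflection coefficient of a resistive termination on a line of impedance z0."""
    return (r_term - z0) / (r_term + z0)


Z0 = 50.0           # ohms, assumed line impedance
R_NOMINAL = 100.0   # ohms, each leg of the split termination
TOLERANCE = 0.30    # on-die resistor tolerance quoted in the text

results = {}
for label, scale in (("low", 1.0 - TOLERANCE),
                     ("nominal", 1.0),
                     ("high", 1.0 + TOLERANCE)):
    r_term = thevenin_odt(R_NOMINAL * scale, R_NOMINAL * scale)
    results[label] = (r_term, reflection_coefficient(r_term, Z0))
    print(f"{label:8s} R = {r_term:5.1f} ohm, gamma = {results[label][1]:+.3f}")
```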

2.16. CMOS Push-Pull Driver

Driver IV characteristics naturally vary with IO circuit design, the most common of which is the CMOS push-pull driver. A pair of inverters accomplishes the physical function of a push-pull driver, which is to move large quantities of charge on and off the die. A few extra logic gates give this circuit tri-state capability.

The output stage of a push-pull driver is just a large number of parallel n-channel devices and a larger number of p-channel devices connected to the IO pad. In Figure 2-21, the multipliers indicate how many 0.5 µm x 20 µm transistors are in parallel. The current through the channel of a MOSFET transistor is a function of its drain-source voltage, Vds (the voltage across the channel resistance), and its gate-source voltage, Vgs. Once Vgs reaches a critical threshold, the channel comes alive and begins to conduct. Raising Vgs beyond the threshold increases the conductivity of the channel. As the output of the driver switches from a low to a high voltage, the Vgs voltages for the n-channel and p-channel output transistors transition through an entire continuous family of curves!

Figure 2-21. CMOS push-pull driver.

This family of curves is not visible from the output pin of the chip because the user cannot directly control the internal nodes within the driver circuit. The output of a chip under dc test conditions is either in a high or a low state, and the user can only see one curve from the family of curves—the curve that represents the driver's final state after the transient event of switching has passed (dashed lines in Figure 2-22). It is not possible to vary the voltage at the gate of the output transistors and produce the classical family of curves. This important fact influences the generation and simulation of behavioral models.

Figure 2-22. CMOS driver output IV curves.

Note that the solid curves in Figure 2-22 correspond to Vgs voltages spaced evenly at 0.5 V intervals beginning at 1.0 V. The threshold of the p-channel transistor is higher than the n-channel transistor, and one of the p-channel curves is flat at zero.

The output IV curves for a driver obey a nonlinear relationship that is difficult to quantify. In fact, device physicists have divided the IV space into two regions in which different sets of equations apply. These equations and their derivatives must remain continuous across the boundary between the two regions; if they are not, nonconvergence of SPICE simulations will occur, and this never made anybody's day.

2.17. Output Impedance

Is it possible to extract any useful information from these curves without running a simulation? Imagine holding the input of the driver in a low state and connecting a resistor between the output and VDDIO. What will the output voltage and current be? An easy way to answer this question graphically is to draw the linear IV characteristic of the resistor on top of the "final" IV curve of the n-channel pull-down transistor—that is, the curve that represents the largest value of Vgs achieved when switching is complete. The intersection of the line and the curve is the operating point of the driver with that particular load. Dividing the voltage by the current gives the output impedance of the driver—at that operating point.
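The graphical load-line construction can also be carried out numerically. The following sketch assumes the "final" pull-down curve is available as a table of voltage and current points (the table values here are invented for illustration) and finds the intersection with the resistor line by bisection.

```python
def interp(table, v):
    """Linearly interpolate current from a list of (voltage, current) points."""
    for (v0, i0), (v1, i1) in zip(table, table[1:]):
        if v0 <= v <= v1:
            return i0 + (i1 - i0) * (v - v0) / (v1 - v0)
    raise ValueError("voltage outside table range")


def operating_point(pulldown_table, r_load, vddio, tol=1e-12):
    """Intersect the pull-down IV curve with the load line of a resistor to VDDIO."""
    def mismatch(v):
        # Positive when the resistor supplies more current than the driver sinks.
        return (vddio - v) / r_load - interp(pulldown_table, v)

    lo, hi = pulldown_table[0][0], pulldown_table[-1][0]
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mismatch(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    v = 0.5 * (lo + hi)
    return v, interp(pulldown_table, v)


# Hypothetical pull-down curve: (output voltage in V, sink current in A).
PULLDOWN = [(0.0, 0.0), (0.5, 0.020), (1.0, 0.035), (1.65, 0.045), (3.3, 0.050)]
VDDIO = 3.3
R_LOAD = 50.0

v_op, i_op = operating_point(PULLDOWN, R_LOAD, VDDIO)
z_out = v_op / i_op
print(f"operating point: {v_op:.3f} V, {i_op * 1e3:.1f} mA, Zout = {z_out:.1f} ohm")
```

Bisection works here because the driver current rises with output voltage while the resistor current falls, so the two curves cross only once.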

What does output impedance mean, and what is its relevance? If we load the driver with an ideal resistor whose value is the same as the output impedance of the driver, the output voltage will be VDDIO/2 by virtue of the voltage divider equation. If the value of the resistor is the same as the impedance of the transmission line connected to the driver in a system application, the output voltage will be the same as the input to the transmission line when the driver is in the middle of the switching event (that is, before the reflected wave has returned to it). This is a useful thing to know because it is important that the driver be strong enough to deliver the energy it takes to cause a full-swing waveform at the receiver input.

The driver will deliver its maximum power to the load when its impedance is equal to that of the load. Keep in mind that the output impedance of the driver is a function of its load. The IV curve of another resistor will intersect the IV curve of the driver at a different point. Another important point is that there are really two output impedances for a push-pull driver: one for the p-channel devices and one for the n-channel devices. In a well-designed driver, these two impedances will be nearly equal, but this is not always the case. An unbalanced driver can be difficult to work with because optimizing the net topology for the rising edge will cause the falling edge to be too strong or too weak.

The dc operating point simulations in Figures 2-23 and 2-24 define another method to extract driver output impedance using the voltage divider equation to calculate the equivalent resistance of the driver given a particular resistive load. This technique is also useful in a transient simulation or in the lab where sweeping the output current of a driver requires special test equipment.

Figure 2-23. Calculation of output high impedance.

Figure 2-24. Calculation of output low impedance.
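The voltage divider calculation behind these two figures reduces to a pair of one-line formulas. The sketch below assumes an ideal load resistor tied to VSS for the output-high case and to VDDIO for the output-low case; the measured output voltages are hypothetical.

```python
def pullup_impedance(v_out, r_load, vddio):
    """Driver high, load resistor tied to VSS (0 V).

    The load current v_out / r_load flows through the pull-up, which drops
    (vddio - v_out).
    """
    return r_load * (vddio - v_out) / v_out


def pulldown_impedance(v_out, r_load, vddio):
    """Driver low, load resistor tied to VDDIO.

    The load current (vddio - v_out) / r_load flows through the pull-down,
    which drops v_out.
    """
    return r_load * v_out / (vddio - v_out)


# Hypothetical dc operating points with a 50 ohm load and VDDIO = 3.3 V.
VDDIO = 3.3
R_LOAD = 50.0
z_high = pullup_impedance(v_out=1.60, r_load=R_LOAD, vddio=VDDIO)
z_low = pulldown_impedance(v_out=1.55, r_load=R_LOAD, vddio=VDDIO)
print(f"output high impedance = {z_high:.1f} ohm")
print(f"output low impedance  = {z_low:.1f} ohm")
```

Each result is valid only at the operating point set by that particular load, so the calculation is most meaningful when the load resistor matches the impedance of the line the driver will see in the system.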

2.18. Output Rise and Fall Times

Output rise and fall time are, in a sense, a combination of output impedance and capacitance. When a driver switches, it must move charge between its own intrinsic capacitance and the power supply rails. The size of these capacitors and the channel resistance of the transistors govern the rate at which the driver is able to move charge. We can define the intrinsic rise and fall times of the driver as the fastest possible time that a driver can change states when no load is present. The switching time of the pre-drive stage—that is, the transistors immediately preceding the final output stage—also influences the output rise and fall times. As is the case with output impedance, the rise and fall times may be significantly different if the circuit designer did not intentionally make them the same.

Output rise and fall times have a domino effect on the system design. At a bare minimum, the knee frequency or corner frequency associated with a given rise or fall time determines the bandwidth requirement for all interconnect between the driving and receiving chips. Likewise, it also determines the bandwidth requirements for models used in simulation, and this bandwidth is typically higher than that required for the actual interconnect since simulators must accurately reproduce the corners of waveforms and noise pulses of short duration. The minimum rise or fall time determines the length at which a piece of wire begins behaving like a transmission line.
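As a rough illustration, the sketch below applies two widely used rules of thumb that are not taken from this text: a knee frequency of 0.5 divided by the rise time, and a critical length of one-sixth of the distance the edge travels during the rise time. The propagation delay of 170 ps per inch and the 500 ps rise time are hypothetical values.

```python
def knee_frequency(rise_time_s):
    """Rule-of-thumb knee frequency in Hz for a given rise time in seconds."""
    return 0.5 / rise_time_s


def critical_length(rise_time_ps, delay_ps_per_inch):
    """Rule-of-thumb length (inches) beyond which a wire acts like a transmission line."""
    return rise_time_ps / (6.0 * delay_ps_per_inch)


RISE_TIME_PS = 500.0     # hypothetical driver rise time
DELAY_PS_PER_INCH = 170.0   # hypothetical board propagation delay

f_knee = knee_frequency(RISE_TIME_PS * 1e-12)
l_crit = critical_length(RISE_TIME_PS, DELAY_PS_PER_INCH)
print(f"knee frequency  = {f_knee / 1e9:.2f} GHz")
print(f"critical length = {l_crit:.2f} inch")
```

Other authors use different constants (for example 0.35 instead of 0.5), so numbers like these are best treated as a first screening pass rather than a specification.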

On the negative side, the instantaneous changes in current that accompany sharp edges produce disturbances on the power distribution system that may ultimately result in the violation of flip-flop setup and hold times. Rapidly changing electric and magnetic fields surrounding a discontinuity such as a connector cause coupling between adjacent signal conductors—another detractor from system performance.

As signal integrity engineers, one of the first tasks we face at the beginning of a new project is cataloging rise and fall times for each bus and each chip, for they will dictate the interconnect technology and model requirements (refer to Table 1-2). The intrinsic rise and fall times will be misleading, however. The actual rise and fall times measured at a package pin will most certainly be slower as the wave encounters extra capacitance, attenuation from conductor and dielectric losses, and perhaps the inductance of a bond wire array. After passing through the first layers of interconnect, the corners of the waveform will no longer be as sharp.

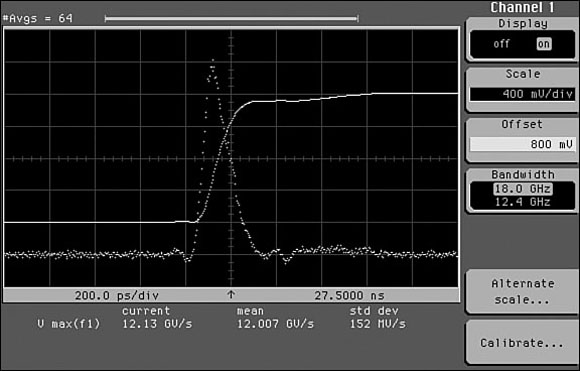

It is no coincidence that the derivative of a typical digital waveform has a Gaussian-like shape—the same shape as a crosstalk pulse. Coupled noise is a direct function of dV/dt. Cataloging this metric in mV/ns will help you understand where crosstalk hotspots are and how to control them. Be aware that instantaneous dV/dt can vary as much as 30% from a linear estimate using the 20% and 80% crossing points. Lower speed interfaces are not sensitive to this subtlety, but it can make a difference worth paying attention to when you're counting mV. The dV/dt in Figure 2-25 is 9.1 V/ns using the linear approximation; the actual instantaneous dV/dt in Figure 2-26 is 12 V/ns.

Figure 2-25. Edge rate calculated at 20% and 80% points.

Figure 2-26. Instantaneous dV/dt.
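Both metrics are straightforward to compute from a sampled waveform. The sketch below uses a synthetic rising edge whose derivative is exactly Gaussian; the function names, supply voltage, and edge width are hypothetical, and a real waveform would come from simulation or measurement.

```python
import math


def crossing_time(times, volts, level):
    """First time a rising waveform crosses level (linear interpolation)."""
    for i in range(len(times) - 1):
        if volts[i] < level <= volts[i + 1]:
            frac = (level - volts[i]) / (volts[i + 1] - volts[i])
            return times[i] + frac * (times[i + 1] - times[i])
    raise ValueError("level not crossed")


def edge_rates(times, volts, v_low, v_high):
    """Return (linear 20%/80% estimate, peak instantaneous dV/dt)."""
    swing = v_high - v_low
    t20 = crossing_time(times, volts, v_low + 0.2 * swing)
    t80 = crossing_time(times, volts, v_low + 0.8 * swing)
    linear = 0.6 * swing / (t80 - t20)
    peak = max((volts[i + 1] - volts[i]) / (times[i + 1] - times[i])
               for i in range(len(times) - 1))
    return linear, peak


# Synthetic edge (hypothetical): error-function shape, so dV/dt is Gaussian.
VDD = 3.3      # volts
SIGMA = 0.1    # ns, width of the Gaussian derivative
times = [i * 0.001 - 0.5 for i in range(1001)]   # -0.5 ns to 0.5 ns
volts = [0.5 * VDD * (1.0 + math.erf(t / (SIGMA * math.sqrt(2.0))))
         for t in times]

linear, peak = edge_rates(times, volts, 0.0, VDD)
print(f"linear 20/80 estimate = {linear:.2f} V/ns")
print(f"peak instantaneous    = {peak:.2f} V/ns")
```

For this idealized edge, the peak comes out roughly 12% above the linear estimate; edges with sharper corners or a steeper middle section can differ by more.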

2.19. CMOS Current Mode Driver

Most high-speed serial interfaces do not use CMOS push-pull drivers; they use current mode drivers that bear some resemblance to the schematic in Figure 2-27. This is really just the same circuit as the differential receiver shown previously in Figure 2-19, except the transistors are much larger and the resistors are in the neighborhood of 50 ohm.

Figure 2-27. CMOS current mode driver.

Let's do a quick dc analysis of this circuit. The predrive stage drives M1 and M2 differentially, so when M1's channel is conducting, M2's channel is off. The equivalent circuit is shown in Figure 2-28. To get the voltage at the positive output pin, calculate the parallel resistance of R1 combined with R2 + RT. It's 37.5 ohm, which means the voltage at node P is 750 mV. Because the right branch has three times the resistance of the left branch, it carries one quarter of the current, or 5 mA. The drop across RT is 500 mV. The mirror analysis applies when M2 is on, and each output swings between 750 mV and 1250 mV for a net differential swing of 1000 mV at the receiver input.

Figure 2-28. DC analysis of CMOS current mode driver.
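The arithmetic in this dc analysis can be reproduced with a short script. The component values below are assumptions inferred from the numbers quoted in the text rather than read from the figure: R1 = R2 = 50 ohm, RT = 100 ohm, a 20 mA current source, and VDDIO = 1.5 V.

```python
def current_mode_dc(vddio, i_source, r1, r2, rt):
    """DC operating point with M1 conducting and M2 off."""
    # The source current splits between R1 and the series path R2 + RT.
    r_parallel = r1 * (r2 + rt) / (r1 + r2 + rt)
    v_p = vddio - i_source * r_parallel          # output on the conducting side
    i_right = i_source * r1 / (r1 + r2 + rt)     # current divider
    v_rt = i_right * rt                          # drop across the termination
    v_n = vddio - i_right * r2                   # output on the non-conducting side
    return r_parallel, v_p, v_n, i_right, v_rt


# Assumed values, inferred from the figures quoted in the text.
r_par, v_p, v_n, i_right, v_rt = current_mode_dc(
    vddio=1.5, i_source=0.020, r1=50.0, r2=50.0, rt=100.0)

print(f"parallel resistance  = {r_par:.1f} ohm")
print(f"output levels        = {v_p * 1e3:.0f} mV and {v_n * 1e3:.0f} mV")
print(f"right-branch current = {i_right * 1e3:.1f} mA")
print(f"drop across RT       = {v_rt * 1e3:.0f} mV")
print(f"differential swing   = {2.0 * v_rt * 1e3:.0f} mV peak to peak")
```

The receiver sees +500 mV in one state and -500 mV in the other, which is where the 1000 mV net differential swing comes from.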

This circuit has some favorable qualities. Ideally, there is no net change in current through the source when the output switches states. If the legs are balanced properly and driven "exactly" out of phase, the net change in current through the VDDIO supply will be zero as well. This means no simultaneous switching noise between VDDIO and VSS on the die and no noise-induced jitter. Well, almost none. If any circuit were ideal, we would all be out of jobs.

The CMOS push-pull driver shown previously in Figure 2-21 uses both p-channel and n-channel FETs. In an ideal circuit, both channels would have the same resistance. In real life, the p-channel and n-channel resistances mistrack each other, leading to asymmetrical rise and fall times. The current mode driver solves this problem by using only n-channel transistors.

2.20. Behavioral Modeling of IO Circuits

Behavioral modeling has not always enjoyed a good reputation. In the early days of the signal integrity boom, many die-hard proponents of SPICE simulation (myself included) could not understand how such a simple model could accurately reproduce the behavior of dozens of transistors in a complex IO circuit. The complexity of the two models seemed to be different by one or two orders of magnitude. The remarkable fact, however, is how closely a behavioral model does mimic the characteristics of an IO circuit—if certain assumptions are satisfied and if the author of the model did his or her homework.

In hindsight, this should not be too surprising. For all its complexity, the set of device equations that SPICE solves for every instance of a transistor is really a behavioral model, too, albeit a much more complicated behavioral model. The more fundamental equation that describes the behavior of quantum mechanical systems in semiconductors is the Schrödinger Equation, and no one would ever consider using it to simulate transistors. In a sense, every mathematical model of a physical system is a behavioral model. Einstein said we should strive to make these models as simple as possible—but no simpler!

IBIS emerged in 1993 with a mission to satisfy the modeling needs of the growing signal integrity community while preserving IO circuit and semiconductor process intellectual property. Today, another urgent need presses the community toward advanced time- and frequency-domain simulation techniques: the need for simulations that will run at all. The combination of advanced CMOS device technologies, lossy transmission lines, s-parameters, and large-scale coupling has rendered an alarming number of SPICE simulations a quivering blob of pudding.

The primary job responsibility of a signal integrity engineer is to ensure the reliability of chip-to-chip interfaces, not to debug models. Time spent resolving nonconvergence problems and waiting for multiday simulations to finish is time wasted. In a more efficient paradigm, we would use behavioral simulation to define the boundaries of the design space, which probably accounts for 80% of all simulations. Then, for the most demanding interfaces, SPICE simulation would provide back-end verification that the behavioral modeling was indeed correct.

IBIS version 1.1 is a natural platform on which to build an understanding of behavioral modeling and simulation of IO circuits. A variety of industry-standard bus technologies is available to signal integrity engineers today: Gunning Transceiver Logic (GTL), High-Speed Transceiver Logic (HSTL), and Stub Series Terminated Logic (SSTL), to name a few of the JEDEC standards. There is an even wider variety of circuit design techniques, some of which are company jewels and may never be known to the world at large. Among the well-known techniques are the simple push-pull driver, open-drain driver, multiple phased output stages, dynamic termination, de-emphasis, equalization, and so on. HSTL and SSTL both use a push-pull output, while classic GTL is an open-drain output with a feedback network.

Any behavioral model assumes one of these known circuit design techniques as a template. The information contained in an IBIS model is really not a model at all; the model resides under the hood of the simulator. An IBIS model is merely a database of circuit parameters and tables that a simulator will load into its own unique behavioral model before initiating the simulation. If the circuit template in the simulator does not match the information in the IBIS model, the results of the simulation may not be worth much at all.

2.21. Behavioral Model for CMOS Push-Pull Driver

The 50 ohm CMOS push-pull driver from the previous SPICE simulations will serve as a demonstration vehicle for understanding how behavioral simulation works, how it is different from SPICE simulation of the same circuit, and what its accuracy limitations are. How do the transistor model and the behavioral model compare to one another?

The p-channel and n-channel transistor symbols in Figure 2-29 represent many parallel transistors that share a common gate, source, and drain. Beneath each of those transistors lies one instance of the device equations that govern the current-voltage behavior of that transistor. The SPICE model statement personalizes the device equations for a particular semiconductor process. In the behavioral model, a single time-dependent voltage-controlled current source (VCCS), whose current-voltage characteristics are defined by a look-up table, replaces all the p-channel transistors in the output stage.

Figure 2-29. Behavioral model for CMOS push-pull driver.

The behavioral simulator ensures that whenever a given current is flowing through the source, the voltage across it will be the same value found in the look-up table for that current. The lower VCCS represents all of the n-channel transistors. Herein lies one of the fundamental assumptions of behavioral modeling: Although SPICE has access to the continuous family of IV curves for any transistor, the behavioral model has only one curve and must make assumptions about what all the other curves in the family look like. This is true for both the n-channel and p-channel devices.
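As a rough illustration of the look-up-table idea, the following Python sketch interpolates linearly in a small IV table. The table values are invented for illustration and do not come from any real IBIS file, and `iv_lookup` is a hypothetical helper rather than a function of any particular simulator.

```python
from bisect import bisect_right

# Hypothetical pull-down IV table: (voltage across the source in V, current in A).
# The numbers are illustrative only; a real table comes from the IBIS file.
PULLDOWN_IV = [
    (0.0, 0.000),
    (0.5, 0.010),
    (1.0, 0.018),
    (2.0, 0.026),
    (3.3, 0.030),
]


def iv_lookup(table, voltage):
    """Return the current at `voltage` by piecewise-linear interpolation.

    Outside the tabulated range the end segments are extended linearly.
    """
    volts = [v for v, _ in table]
    # Index of the segment's right-hand point, clamped to a valid segment.
    hi = min(max(bisect_right(volts, voltage), 1), len(table) - 1)
    (v0, i0), (v1, i1) = table[hi - 1], table[hi]
    slope = (i1 - i0) / (v1 - v0)
    return i0 + slope * (voltage - v0)
```

A simulator works with the same kind of table in both directions: given the voltage it finds the current, and given the current it finds the voltage. Only the first direction is sketched here.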

Another fundamental assumption involves the predrive stage of the IO circuit, represented in this diagram by the two triangular buffers that independently drive the pull-up and pull-down transistors. (Recall that in the CMOS driver, these were actually NAND and NOR gates rather than simple buffers.) Together with the IV curves and loading, the switching rates of these predrive buffers determine the rise and fall times of the output stage.

Programs that generate IBIS models do not have access to node voltages that are internal to the driver SPICE subcircuit. This is particularly true if the SPICE subcircuit is encrypted or if the IBIS model was extracted from lab measurements. Therefore, the behavioral simulator must make further assumptions about the rise and fall times at the input of the driver final stage based on rise and fall times of the output.

In the case of an IBIS 1.1 model, the simulator also assumes that the pull-up and pull-down transistors switch at the same time, which is not necessarily the case if the predrive stage has two independent buffers. This also is a fundamental assumption. In the diagram of the behavioral model shown previously in Figure 2-29, the third terminal on the VCCS represents the time-domain waveform that turns the source on and off, which is analogous to the Vgs waveform at the input of the final stage in a SPICE driver subcircuit.

Two sets of voltage-time waveforms are to the left of the VCCS enclosed by dashed boxes. One set of waveforms controls the pull-up transistors, and the other controls the pull-down transistors. In IBIS 1.1, both sets of waveforms begin switching at the same time. IBIS 2.1 allows independent control over the pull-up and pull-down transistors via a set of voltage-time waveforms rather than a simple rise and fall time.
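One way to picture the simultaneous-switching assumption is to scale each IV curve by a time-dependent coefficient and solve for the pad voltage at each instant. The Python sketch below does this for a rising edge under several simplifying assumptions that are mine, not the source's: both IV curves are plain 50 ohm resistances, the coefficients ramp linearly, the load is a 50 ohm resistor to ground, and C_comp is ignored. All names and values are hypothetical, and real simulators differ in how they derive the coefficients.

```python
VCC = 3.3          # supply voltage in volts (assumed)
R_LOAD = 50.0      # resistive load to ground in ohms (assumed)
T_RAMP = 1.0e-9    # switching time in seconds (assumed)


def pullup_current(v_across):
    """Hypothetical pull-up IV curve: a plain 50 ohm resistance."""
    return v_across / 50.0


def pulldown_current(v_across):
    """Hypothetical pull-down IV curve: a plain 50 ohm resistance."""
    return v_across / 50.0


def switching_coefficients(t):
    """Rising edge: pull-up turns on while pull-down turns off, starting together."""
    k = min(max(t / T_RAMP, 0.0), 1.0)
    return k, 1.0 - k


def pad_voltage(t):
    """Solve the pad node current balance at time t by bisection."""
    ku, kd = switching_coefficients(t)

    def net_current(v):
        # Current delivered by the pull-up minus current drawn by the
        # pull-down and by the load resistor.
        return (ku * pullup_current(VCC - v)
                - kd * pulldown_current(v)
                - v / R_LOAD)

    lo, hi = 0.0, VCC
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if net_current(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

With these assumptions the pad starts at 0 V and settles at half of `VCC`, as expected for a 50 ohm source driving a 50 ohm load. Giving the pull-up and pull-down coefficients independent start times would mimic the extra freedom described for IBIS 2.1.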

The second set of voltage-controlled current sources corresponds to the two ESD protection devices. Compared to the complexity of the pull-up and pull-down elements, these seem trivial as they have no time-dependent waveform turning them on and off. Each VCCS draws current from its corresponding supply rail. If the circuit were a receiver with some form of on-die termination, the IV curves for the termination would be superimposed on top of the ESD curves.

Finally, the lumped capacitor, called C_comp in IBIS, represents all capacitances that are connected to the output node of the circuit: transistors, ESD protection diodes, metal, and bond pad or solder ball. The value of this capacitor is not a function of time or voltage, which is a good assumption if the semiconductor junctions are reverse biased. As soon as they begin to conduct, this assumption breaks down.

The physical location of C_comp may also be relevant. Drivers with on-chip series termination will have their capacitance distributed on either side of the resistor, and this can become an accuracy issue. In simultaneous switching simulations, the assumption that the other node of the capacitor is connected to ground breaks down. In the case of the CMOS driver, however, a constant lumped C_comp remains a valid assumption.

2.22. Behavioral Modeling Assumptions

In summary, the fundamental assumptions for an IBIS 1.1-compatible behavioral model of the CMOS driver are as follows:

- The behavioral model topology that the simulator employs is consistent with the IO circuit design (in this case, a simple CMOS push-pull final output stage).

- The behavioral simulator interpolates between a single pull-up (p-channel) IV curve and a single pull-down (n-channel) IV curve.

- The behavioral simulator infers the Vgs waveform at the input to the final stage from the rise and fall times measured at the output of the final stage with a 50 ohm load.

- The pull-up and pull-down transistors begin switching at the same time.

- A single constant-value capacitor represents all capacitances seen looking into the pad node.

2.23. Tour of an IBIS Model

Armed with an understanding of the IO circuit models used in signal integrity simulations, you can confidently assess the quality and accuracy of the models needed to perform your own analysis of the timing and voltage margins for a given interface. Model assessment is a significant step toward engineering a reliable digital interface.

A close look at an example IBIS model points out the differences and similarities between a behavioral model and a SPICE transistor model. The data structures within this IBIS model file illustrate the assumptions a behavioral simulator makes about the electrical characteristics of an IO circuit and how those assumptions compare to a SPICE model of the same circuit. The IBIS Committee has published a good deal of educational material, including instructions on how to create IBIS models.

The following simple IBIS model has three main sections: the header, the pin table, and the model data. IBIS is fundamentally a chip-centric specification, so there is a one-to-one correspondence between an IBIS model and the chip it represents. In some cases, ASICs for example, a chip manufacturer may publish a library of individual models from which the user can assemble a complete IBIS model.

The header contains various bits of background information about the file. The pin table lists each pin in the chip and, if the pin is a signal, associates a model with it. In the language of IBIS, keywords are enclosed in brackets, [ ], and may be followed by subparameters that are associated with the preceding keyword. For example, the [Model] keyword defines a new set of IO circuit model parameters, including pin capacitance, rise and fall times, and IV curves. The "Model Type" subparameter is hierarchically below the [Model] keyword, and it can take on the values Input, Output, I/O, 3-state, or Open_drain. The [Model] keyword covers the first assumption of behavioral modeling: It defines the circuit topology that the simulator will use.
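The keyword and subparameter hierarchy can be sketched with a few lines of Python. The fragment being parsed is invented for illustration (the model names, values, and the helper `parse_keywords` are all hypothetical), and the parser handles only the simplest case of one subparameter per line. It assumes the default IBIS comment character, the vertical bar, and the underscore spelling `Model_type` used in IBIS files.

```python
import re

# Illustrative fragment only; names and values are hypothetical.
IBIS_TEXT = """\
| lines starting with a vertical bar are comments
[Model] drv50
Model_type 3-state
C_comp 5.0pF 4.0pF 6.0pF
[Model] rcv
Model_type Input
"""

KEYWORD = re.compile(r"^\[(?P<name>[^\]]+)\]\s*(?P<arg>.*)$")


def parse_keywords(text):
    """Group subparameter lines under the bracketed keyword that precedes them."""
    blocks = []
    for raw in text.splitlines():
        line = raw.split("|", 1)[0].strip()   # drop comments and whitespace
        if not line:
            continue
        match = KEYWORD.match(line)
        if match:
            blocks.append({"keyword": match["name"], "arg": match["arg"], "sub": {}})
        elif blocks:
            name, *values = line.split()
            blocks[-1]["sub"][name] = values
    return blocks


blocks = parse_keywords(IBIS_TEXT)
```

Each entry in `blocks` pairs one keyword with the subparameters that follow it, which mirrors the way a simulator associates a circuit topology with each model name.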

2.24. IBIS Header

The header stores some valuable information. The version keyword allows the syntax checker to decide which features are legal. The filename keyword and the actual name of the file must match. This file happens to represent a fictional low-voltage CMOS (LVC) dual tri-state buffer in a standard 125 form factor that uses the CMOS push-pull driver circuit, drv50.

The source, notes, and disclaimer keywords are of special interest. A conscientious author will give some indication of the conditions under which he or she created the IBIS model. Did the information come from production-level SPICE models that were extracted from an integrated circuit layout? Or perhaps the source was lab measurements of an actual component, which only apply to one process-temperature-voltage point. If the author's name does not appear under the source keyword, the user might wonder whether anyone is willing to support the IBIS model.

Many signal integrity engineers find this common disclaimer to be particularly disturbing: "...for modeling purposes only." This suggests that the vendor does not stand behind the IBIS model in the same way it stands behind the component datasheet. This is disconcerting because model parameters are in some ways even more influential in determining the success or failure of a design than the simpler parameters found in a component datasheet. However, if the quality of an IBIS model is not consistent with the needs of the design, signal integrity engineers have a tool for effecting change that is more potent than complaining: Include model quality and accuracy in the purchase order.

2.25. IBIS Pin Table

The pin table, designated by the [Pin] keyword, associates a [Model] keyword with a chip pin number. Remember, the information found in an IBIS model is not really the model itself but rather the input parameters to the model; the model resides inside the simulator. The language can be a bit confusing on this point. The first two columns in the table store the pin number and name as found in the component datasheet. If the user is running automated post-route simulation, the software will match the pin number in the logical netlist with a pin number from this table. Every model name found in the third column must have a matching [Model] keyword under the model section of the IBIS file. The remaining three columns contain lumped RLC parameters for the purpose of modeling the package pin.
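The requirement that every model name in the pin table has a matching [Model] keyword lends itself to a simple consistency check. The Python sketch below assumes the pin table and the set of model names have already been read from the file. The pin rows are hypothetical, and `missing_models` is an illustrative helper, not part of any IBIS tool. The names POWER, GND, and NC are treated as reserved names that need no [Model] section.

```python
# Hypothetical [Pin] table rows: (pin, signal_name, model_name).
PINS = [
    ("1", "OE1", "rcv"),
    ("2", "A1", "rcv"),
    ("3", "Y1", "drv50"),
    ("4", "GND", "GND"),
    ("8", "VCC", "POWER"),
]
MODELS = {"rcv", "drv50"}            # names that have a [Model] keyword
RESERVED = {"POWER", "GND", "NC"}    # reserved names that need no [Model]


def missing_models(pins, models):
    """Return the sorted model names used in the pin table but never defined."""
    used = {model for _, _, model in pins}
    return sorted(used - models - RESERVED)


complete = missing_models(PINS, MODELS)
broken = missing_models(PINS, {"rcv"})
```

Here `complete` is empty because every signal pin refers to a defined model, while `broken` names the driver model that the second call deliberately leaves out.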

There are three ways to code IC package model data in IBIS. If the optional per-pin parameters do not exist under the pin table, the simulator assigns the default values found under the [Package] keyword to every pin. IBIS 2.1 offers a more sophisticated means of coding package models using the [Define Package Model] keyword. In the following example, the model for pin 10 of a BGA package comprises three sections: a 2 nH lumped inductance for the bond wire, a 0.5 in length of 50 ohm transmission line for the copper wire, and a 1 pF lumped capacitance for the solder ball and pad. In this case, the information truly does define a circuit-based model for the package; there is no ambiguity left for the simulator to resolve.

10 Len=0 L=2nH / Len=0.5 C=3pF L=9nH R=1m / Len=0 C=1pF /
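To see what the distributed middle section implies electrically, the following Python sketch parses the section list for pin 10 and computes the characteristic impedance and delay of the transmission line section. It assumes that L and C in a section with nonzero Len are per-unit-length values and that Len uses the same length unit; the helper names are hypothetical. With the values as printed, the impedance comes out at roughly 55 ohms, in the neighborhood of the nominal 50 ohms quoted in the text.

```python
import math
import re

SCALE = {"f": 1e-15, "p": 1e-12, "n": 1e-9, "u": 1e-6, "m": 1e-3, "k": 1e3}


def parse_value(token):
    """Convert a token such as '2nH', '1m', or '0.5' to a float in base units."""
    number, suffix = re.fullmatch(r"([0-9.]+)([A-Za-z]*)", token).groups()
    return float(number) * SCALE.get(suffix[:1], 1.0)


def parse_sections(description):
    """Split a '/'-separated section list into dicts of numeric parameters."""
    sections = []
    for chunk in description.split("/"):
        fields = dict(item.split("=") for item in chunk.split())
        if fields:
            sections.append({k: parse_value(v) for k, v in fields.items()})
    return sections


def line_properties(section):
    """Return (Z0 in ohms, delay in seconds) for a distributed section."""
    z0 = math.sqrt(section["L"] / section["C"])
    delay = section["Len"] * math.sqrt(section["L"] * section["C"])
    return z0, delay


# Section list for pin 10, copied from the example without the pin number.
PIN_10 = "Len=0 L=2nH / Len=0.5 C=3pF L=9nH R=1m / Len=0 C=1pF /"
sections = parse_sections(PIN_10)
z0, delay = line_properties(sections[1])
```

The first and last sections have Len=0, so they are lumped elements and `line_properties` does not apply to them. Under the stated assumptions the delay of the middle section works out to about 82 ps.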

2.26. IBIS Receiver Model

The first set of model data following the pin table corresponds to the receiver circuit, rcv. The previous section on IO circuit characteristics discussed three primary attributes of a simple CMOS receiver, and each has its place in the IBIS receiver model: threshold voltages, pin capacitance, and IV curves. Most IBIS models for low-voltage CMOS (3.3 V) use the default TTL thresholds commonly found in component datasheets, that is, 0.8 V and 2.0 V. I extracted the thresholds for this IBIS model from SPICE simulations of the receiver circuit, which means they are more accurate and result in less conservatism in the system design.

The [Temperature Range] and [Voltage Range] keywords document the conditions under which the model author derived the other keywords and subparameters. Some degree of confusion has surrounded these keywords because the values found under the min column are not always the smallest numerical values, and the values found under the max column are not always the largest. The values found under the min column for the [Temperature Range] and [Voltage Range] keywords correspond to the conditions that gave the highest channel resistance, also called weak, slow, or worst, depending on convention at your place of employment. For a CMOS process, high temperature and low voltage go along with weak silicon, and these conditions are the ones that produced the IV curves found in the min column of the [GND_clamp] and [POWER_clamp] keywords. Conversely, the values found under the max column for the [Temperature Range] and [Voltage Range] keywords represent the simulation conditions that gave low channel resistance, also called strong, fast, or best.
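The column convention is easier to remember with concrete numbers. The Python sketch below uses hypothetical temperature and voltage values, stored in the IBIS column order of typ, min, and max, to show that the min column can hold the numerically largest temperature. The values and the helper `corner_conditions` are illustrative assumptions and do not come from the model discussed here.

```python
# Hypothetical values, listed in IBIS column order: typ, min, max.
TEMPERATURE_RANGE = {"typ": 50.0, "min": 100.0, "max": 0.0}   # degrees C
VOLTAGE_RANGE = {"typ": 3.3, "min": 3.0, "max": 3.6}          # volts


def corner_conditions(column):
    """Return the (temperature, voltage) pair behind one column of the model."""
    return TEMPERATURE_RANGE[column], VOLTAGE_RANGE[column]


weak = corner_conditions("min")     # highest channel resistance
strong = corner_conditions("max")   # lowest channel resistance
```

In this example the weak corner pairs the hottest temperature with the lowest supply voltage, which is why sorting the columns by numerical value would give the wrong answer for a CMOS process.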