Chapter 1. Introduction to ACE

ACE is a rich and powerful toolkit with a distinctively nontraditional history. To help you get a feel for where ACE comes from, this chapter gives a brief history of ACE's development. Before going into programming details, we cover some foundational concepts: class libraries, patterns, and frameworks. Then we cover one of ACE's less glamorous but equally useful aspects: its facilities for smoothing out the differences among operating systems, hardware architectures, C++ compilers, and C++ runtime support environments. It is important to understand these aspects of ACE before we dive into the rest of ACE's capabilities.

1.1 A History of ACE

ACE (the ADAPTIVE—A Dynamically Assembled Protocol Transformation, Integration, and eValuation Environment—Communication Environment) grew out of the research and development activities of Dr. Douglas C. Schmidt at the University of California at Irvine. Doug's work was focused on design patterns, implementation, and experimental analysis of object-oriented techniques that facilitate the development of high-performance, real-time distributed object computing frameworks. As is so often the case, that work began on a small handful of hardware and OS (operating system) platforms and expanded over time. Doug ran into the following problems that still vex developers today.

• Trying to rearrange code to try out new combinations of processes, threads, and communications mechanisms is very tedious. The process of ripping code apart, rearranging it, and getting it working again is very boring and error prone.

• Working at the OS API (application programming interface) level adds accidental complexity. Identifying the subtle programming quirks and resolving these issues takes an inordinate amount of time in development schedules.

• Successful projects need to be ported to new platforms and platform versions. Standards notwithstanding, no two platforms or versions are the same, and the complexities begin again.

Doug, being the smart guy he is, came up with a plan. He invented a set of C++ classes that worked together, allowing him to create templates and strategize his way around the bumps inherent in rearranging the pieces of his programs. Moreover, these classes implemented some very useful design patterns for isolating the changes in the various computing platforms he kept getting, making it easy for his programs to run on all the new systems his advisors and colleagues could throw at him. Life was good. And thus was born ACE.

At this point, Doug could have grabbed his Ph.D. and some venture funding and taken the world of class libraries by storm. We'd all be paying serious money for the use of ACE today. Fortunately for the world's C++ developers, Doug decided to pursue further research in this area. He created the large and successful Distributed Object Computing group at Washington University in St. Louis. ACE was released as Open Source,1 and its development continued with the aid of more than US$7 million in funding from visionary commercial sponsors.

Today, most of the research-related work on ACE is carried out at the Institute for Software Integrated Systems (ISIS) at Vanderbilt University, where Doug is now a professor and still heavily involved in ACE's day-to-day development. ACE is increasingly popular in commercial development as well. It enjoys a reputation for solving the cross-platform issues that face all high-performance networked application and systems developers. Additionally, commercial-level technical support for ACE is now available worldwide.

ACE is freely available for any use, including commercial, without onerous licensing requirements. For complete details, see the COPYING file in the top-level directory of the ACE source kit. Another interesting file in the top-level directory is THANKS. It lists all the people who have contributed to ACE over the years—more than 1,700 at the time of this writing! If you contribute improvements or additions to ACE, you too can get your name listed.

1.2 ACE's Benefits

The accidental complexity and development issues that led to ACE reflect lessons learned by all of us who've written networking programs, especially portable, high-performance networking programs.

• Multiplatform source code is difficult to write. The many and varied standards notwithstanding, each new port introduces a new set of problems. To remain flexible and adaptive to new technology and business conditions, software must be able to quickly change to take advantage of new operating systems and hardware. One of the best ways to remain that flexible is to take advantage of libraries and middleware that absorb the differences for you, allowing your code to remain above the fray. ACE has been ported to a wide range of systems—from handheld to supercomputer—running a variety of operating systems and using many of the available C++ compilers.

• Networked applications are difficult to write. Introducing a network into your design introduces a large set of challenges and issues throughout the development process. Network latency, byte ordering, data structure layout, network instability and performance, and handling multiple connections smoothly are a few typical problems that you must be concerned with when developing networked applications. ACE makes dozens of frameworks and design pattern implementations available to you, so you can take advantage of solutions that many other smart people have already come up with.

• Most system-provided APIs are written in terms of C API bindings because C is so common and the API is usable from a variety of languages. If you're considering working with ACE, you've already committed to using C++, though, and the little tricks that seemed so slick with C, such as overlaying the sockaddr structure with sockaddr_in, sockaddr_un, and friends, are just big opportunities for errors. Low-level APIs have many subtle requirements, which sometimes change from standard to standard, as in the series of drafts leading to POSIX 1003.1c Pthreads, and are sometimes poorly documented and difficult to use. (Remember when you first figured out that the socket(), bind(), listen(), and accept() functions were all related?) ACE provides a well-organized, coherent set of abstractions to make IPC, shared memory, threads, synchronization mechanisms, and more easy to use.

1.3 ACE's Organization

ACE is more than a class library. It is a powerful, object-oriented application toolkit. Although it is not apparent from a quick look at the source or reference pages, the ACE toolkit is designed using a layered architecture composed of the following layers:

• OS adaptation layer. The OS adaptation layer provides wrapper functions for most common system-level operations. This layer provides a common base of system functions across all the platforms ACE has been ported to. Where the native platform does not provide a desired function, it is emulated, if possible. If the function is available natively, the calls are usually inlined to maximize performance.

• Wrapper facade layer. A wrapper facade consists of one or more classes that encapsulate functions and data within a type-safe, object-oriented interface [5]. ACE's wrapper facade layer makes up nearly half of its source base. This layer provides much of the same capability available natively and via the OS adaptation layer but in an easier-to-use, type-safe form. Applications make use of the wrapper facade classes by selectively inheriting, aggregating, and/or instantiating them.

• Framework layer. A framework is an integrated collection of components that collaborate to produce a reusable architecture for a family of related applications [4] [7]. Frameworks emphasize the integration and collaboration of application-specific and application-independent components. By doing this, frameworks enable larger-scale reuse of software rather than simply reusing individual classes or stand-alone functions. ACE's frameworks integrate wrapper facade classes and implement canonical control flows and class collaborations to provide semicomplete applications. It is very easy to build applications by supplying application-specific behavior to a framework.

• Networked services layer. The networked services layer provides some complete, reusable services, including the distributed logging service we'll see in Chapter 3.

Each layer reuses classes in lower layers, abstracting out more common functionality. This means that any given task in ACE can usually be done in more than one way, depending on your needs and design constraints. Although we sometimes approach a problem “bottom up” because existing knowledge helps you transition smoothly to ACE programming techniques, we generally cover the higher-level approaches in more depth than the lower-level ones. It is relatively easy, however, to find the lower-level classes and interfaces when you need them. The ACE reference documentation on the included CD-ROM describes all class relationships and provides complete programming references.

1.4 Patterns, Class Libraries, and Frameworks

Computing power and network bandwidth have increased dramatically over the past decade. However, the design and implementation of complex software remains expensive and error prone. Much of the cost and effort stem from the continuous rediscovery and reinvention of core concepts and components across the software industry. In particular, the growing heterogeneity of hardware architectures and diversity of operating system and communication platforms make it difficult to build correct, portable, efficient, and inexpensive applications from scratch. Patterns, class libraries, and frameworks are three tools the software industry is using to reduce the complexity and cost of developing software.

Patterns represent recurring solutions to software development problems within a particular context. Patterns and frameworks both facilitate reuse by capturing successful software development strategies. The primary difference is that frameworks focus on reuse of concrete designs, algorithms, and implementations in a particular programming language. In contrast, patterns focus on reuse of abstract designs and software microarchitectures.

Frameworks can be viewed as a concrete implementation of families of design patterns that are targeted for a particular application domain. Likewise, design patterns can be viewed as more abstract microarchitectural framework elements that document and motivate the semantics of frameworks in an effective way. When patterns are used to structure and document frameworks, nearly every class in the framework plays a well-defined role and collaborates effectively with other classes in the framework.

Like frameworks, class libraries are implementations of useful, reusable software artifacts. Frameworks extend the benefits of OO (object-oriented) class libraries in the following ways.

• Frameworks define “semicomplete” applications that embody domain-specific object structures and functionality. Components in a framework work together to provide a generic architectural skeleton for a family of related applications. Complete applications are composed by inheriting from and/or instantiating framework components. In contrast, class libraries are less domain specific and provide a smaller scope of reuse. For instance, class library components, such as classes for strings, complex numbers, arrays, and bitsets, are relatively low level and ubiquitous across many application domains.

• Frameworks are active and exhibit “inversion of control” at runtime. Class libraries are typically passive; that is, they are directed to perform work by other application objects, in the same thread of control as those application objects. In contrast, frameworks are active; that is, they direct the flow of control within an application via event dispatching patterns, such as Reactor and Observer. The “inversion of control” in the runtime architecture of a framework is often referred to as the Hollywood Principle: “Don't call us; we'll call you.”
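To make the contrast concrete, here is a minimal sketch of inversion of control in standard C++. The Toy_Dispatcher class and its register_handler() and dispatch() methods are hypothetical names invented for this illustration; they are not ACE's Reactor API. The application hands the dispatcher a callback and then gives up control: the dispatcher, not the application, decides when that callback runs.

```cpp
#include <functional>
#include <string>
#include <vector>

// Hypothetical toy framework: it owns the dispatch loop and calls back
// into application code (inversion of control). Not ACE's Reactor API.
class Toy_Dispatcher {
public:
  typedef std::function<void (const std::string &)> Handler;

  // The application registers behavior...
  void register_handler (const Handler &h) { this->handlers_.push_back (h); }

  // ...and the framework decides when that behavior runs:
  // every registered handler is called for every event, in order.
  void dispatch (const std::vector<std::string> &events)
  {
    for (const std::string &event : events)
      for (const Handler &h : this->handlers_)
        h (event);
  }

private:
  std::vector<Handler> handlers_;
};
```

A class library call such as std::string::size() runs only when your code invokes it; a handler registered with the dispatcher runs only when the dispatcher chooses to call it.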

In practice, frameworks and class libraries are complementary technologies. For instance, frameworks typically use class libraries internally to simplify the development of the framework. (Certainly, ACE's frameworks reuse other parts of the ACE class library.) Likewise, application-specific code invoked by framework event handlers can use class libraries to perform such basic tasks as string processing, file management, and numerical analysis.

So, to summarize, ACE is a toolkit packaged as a class library. The toolkit contains many useful classes. Many of those classes are related and combined into frameworks, such as the Reactor and Event Handler, that embody semicomplete applications. In its classes and frameworks, ACE implements many useful design patterns.

1.5 Porting Your Code to Multiple Operating Systems

Many software systems must be designed to work correctly on a range of computing platforms. Competitive forces and changing technology combine to make it a nearly universal requirement that today's software systems be able to run on a range of platforms, sometimes changing targets during development. Networked systems have a stronger need to be portable, owing to the inherent mix of computing environments needed to build and configure competitive systems in today's marketplace. Standards provide some framework for portability; however, marketing messages notwithstanding, standards do not guarantee portability across systems. As Andrew Tanenbaum said, “The nice thing about standards is that there are so many to choose from” [3]. And rest assured, vendors often choose to implement different standards at different times. Standards also change and evolve, so it's very unlikely that you'll work on more than one platform that implements all the same standards in the same way.

In addition to operating system APIs and their associated standards, compiling and linking your programs and libraries is another area that differs among operating systems and compilers. Over the years, the ACE developers have created an effective system for building ACE based on the GNU Make tool. Even on systems for which the vendor supplies a make utility, not all makes are created equal. GNU Make provides a common, powerful tool on which ACE's build facility is based. This allows ACE to be built on systems that don't supply native make utilities but to which GNU Make has been ported. Don't worry if you're a Windows programmer using Microsoft or Borland compilers and don't have GNU Make. The native Microsoft and Borland build utilities are supported as well.

Data type differences are a common area of porting difficulty that experienced multiplatform developers have found numerous ways to engineer around. ACE provides a set of data types it uses internally, and you are encouraged to use them as well. These are described later, in the discussion of compilers.

ACE's OS adaptation layer provides the lowest level of functionality and forms the basis of ACE's wide range of portability. This layer uses the Wrapper Facade [4] and Façade [3] patterns to shield you from platform differences. The Wrapper Facade pattern forms a relatively simple wrapper around a function, and ACE uses this pattern to unify the programming interfaces for common system functions where small differences in APIs and semantics are smoothed over. The Façade pattern presents a single interface to what may, on some platforms, be a complicated set of system calls. For example, the ACE_OS::thr_create() method creates a new thread with a caller-specified set of attributes: scheduling policy, priority, state, and so on. The native system calls to do all that's required during thread creation vary widely among platforms in form, semantics, and the combination and order of calls needed. The Façade pattern allows the presentation of one consistent interface across all platforms to which ACE has been ported.

For relatively complex and obviously nonportable actions, such as creating a thread, you would of course think to use the ACE_OS methods—well, at least until you read about the higher-level ACE classes and frameworks later in the book. But what about other functions that are often taken for granted, such as printf() and fseek()? Even when you need to perform a basic function, it is safest to use the ACE_OS methods rather than native APIs. This usage guarantees that you won't be surprised by a small change in arguments to the native calls when you compile your code on a different platform.

The ACE OS adaptation layer is implemented in the ACE_OS class. The methods in this class are all static. You may wonder why a separate namespace wasn't used instead of a class, as that's basically what the class achieves. As we'll soon see, one of ACE's strengths is that it works with a wide variety of old and new C++ compilers, some of which do not support namespaces at all. We are not going to list all the supplied functions here. You won't often use these functions directly. They are still at a very low level, not all that much different from writing in C. Rather, you'll more often use high-level classes that themselves call ACE_OS methods to perform the requested actions. Therefore, we're going to leave a detailed list of these methods to the ACE_OS reference pages.
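As an illustration of the idea, and not of ACE's actual code, the following sketch builds a miniature OS adaptation layer in the same shape as ACE_OS: a class used purely as a namespace for static methods, each presenting one signature while calling a different native API underneath. The Portable_OS class and its process_id() and sleep_msec() methods are hypothetical. For brevity the sketch tests the vendor macro _WIN32 directly, which is exactly the habit ACE avoids by centralizing such checks in its configuration file.

```cpp
#if defined (_WIN32)
#  include <windows.h>
#else
#  include <time.h>
#  include <unistd.h>
#endif

// Hypothetical miniature OS adaptation layer in the style of ACE_OS:
// a class used only as a namespace of static methods.
class Portable_OS {
public:
  // Wrapper Facade: one signature, a different native call per platform.
  static long process_id ()
  {
#if defined (_WIN32)
    return static_cast<long> (::GetCurrentProcessId ());
#else
    return static_cast<long> (::getpid ());
#endif
  }

  // Sleep for msec milliseconds; returns 0 on success, -1 on error.
  static int sleep_msec (unsigned long msec)
  {
#if defined (_WIN32)
    ::Sleep (static_cast<DWORD> (msec));
    return 0;
#else
    struct timespec req;
    req.tv_sec = static_cast<time_t> (msec / 1000);
    req.tv_nsec = static_cast<long> ((msec % 1000) * 1000000L);
    return ::nanosleep (&req, nullptr) == 0 ? 0 : -1;
#endif
  }

private:
  Portable_OS ();   // not meant to be instantiated
};
```

Callers write Portable_OS::sleep_msec (10) on every platform; only this one class knows which native call is behind it.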

As you might imagine, ACE contains quite a lot of conditionally compiled code, especially in the OS adaptation layer. ACE does not make heavy use of vendor-supplied compiler macros for this, for a couple of reasons. First, a number of the settings deal with features that are missing or broken in the native platform or compiler. The missing or broken features may change over time, for example, when the OS is fixed or the compiler is updated. Rather than try to find a vendor-supplied macro for each possible item and use those macros in many places in ACE, any setting and vendor-supplied macro checking is done in one place and the result remembered for simple use within ACE. The second reason that vendor-supplied macros are not used extensively is that they may not exist or may conflict with another vendor's macros. Rather than use a complicated combination of ACE-defined and vendor-supplied macros, ACE uses a set of ACE-defined macros extensively, only occasionally augmented by vendor-supplied macros.
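The centralization described above can be sketched as follows, under stated assumptions. The first section stands in for a hypothetical my_config.h: it is the only place that examines vendor macros, and it records the result as a project-defined macro, MY_LACKS_STRNLEN (a hypothetical name). SOME_VENDOR_OLD_LIBC is a placeholder, not a real vendor macro. The rest of the code tests only the project macro and falls back to an emulation when the native function is missing.

```cpp
#include <cstddef>
#include <string.h>

// ---- Stand-in for a hypothetical my_config.h --------------------------
// The ONLY place that inspects vendor macros. SOME_VENDOR_OLD_LIBC is a
// placeholder; a real config file would name the platforms known to lack
// strnlen().
#if defined (SOME_VENDOR_OLD_LIBC)
#  define MY_LACKS_STRNLEN
#endif
// ---- end of hypothetical my_config.h -----------------------------------

// Everywhere else, code tests only the project's own macro.
inline std::size_t my_strnlen (const char *s, std::size_t maxlen)
{
#if defined (MY_LACKS_STRNLEN)
  // Emulation for platforms without a native strnlen().
  std::size_t n = 0;
  while (n < maxlen && s[n] != '\0')
    ++n;
  return n;
#else
  return ::strnlen (s, maxlen);
#endif
}
```

When a platform's library is later fixed, only the config section changes; every caller of my_strnlen() stays untouched.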

The setting of all the ACE-defined compile-time macros is done in one file, ace/config.h, which we'll look at in Chapter 2. Many varieties of this configuration file are supplied with ACE, matching all the platforms ACE has been ported to. If you obtain a prebuilt version of ACE for installation in your environment, you most likely will never need to read or change this file. But sometime when you're up for some adventure, read through it anyway for a sampling of the range of features and issues ACE handles for you.

1.6 Smoothing the Differences among C++ Compilers

You're probably wondering why it's so important to smooth over the differences among compilers, as the C++ standard has finally settled down. There are a number of reasons.

• Compiler vendors are at various levels of conformance to the standard.

• ACE works with a range of C++ compilers, many of which still implement relatively early drafts of the C++ standard.

• Some compilers are simply broken, and ACE works around the problems.

Many adjustments for compiler capabilities and deficiencies are needed to build ACE properly, but from a usage point of view, ACE helps you work with or around four primary areas of compiler differences:

- Templates, both use and instantiation

- Data types, both hardware and compiler

- Runtime initialization and shutdown

- Allocating heap memory

1.6.1 Templates

C++ templates are a powerful form of generic programming, and ACE makes heavy use of them, allowing you to combine and customize functionality in powerful ways. This brief discussion introduces you to class templates. If templates are a new feature to you, we suggest that you also read an in-depth discussion of their use in a good C++ book, such as Bjarne Stroustrup's The C++ Programming Language, 3rd Edition [11] or C++ Templates: The Complete Guide by David Vandevoorde and Nicolai M. Josuttis [14]. As you're reading, keep in mind that the dialect of C++ described in your book and that implemented by your compiler may be different. Check your compiler documentation for details, and stick to the guidelines documented in this book to be sure that your code continues to build and run properly when you change compilers.

C++'s template facility allows you to generically define a class or function, and have the compiler apply your template to a given set of data types at compile time. This increases code reuse in your project and enables you to reuse code across projects. For example, let's say that you need to design a class that remembers the largest value of a set of values you specify over time. Your system may have multiple uses for such a class—for example, to track integers, floating-point numbers, text strings, and even other classes that are part of your system. Rather than write a separate class for each possible type you want to track, you could write a class template:

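template <class T> class max_tracker {
public:
  max_tracker () : has_val_ (false), max_val_ () {}

  // Remember val if it is the largest value seen so far.
  void track_this (const T val);

  // Return the largest value tracked so far (a value-initialized T if none).
  T max_val () const { return this->max_val_; }

private:
  bool has_val_;  // false until the first value is tracked
  T max_val_;
};

template <class T>
void max_tracker<T>::track_this (const T val)
{
  if (!this->has_val_ || val > this->max_val_)
    {
      this->max_val_ = val;
      this->has_val_ = true;
    }
}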

Then when you want to track the maximum temperature of your home in integer degrees, you declare and use one of these objects:

max_tracker<int> temperature_tracker;

// Get a new temperature reading and track it
int temp_now = Thermometer.get_temp ();
temperature_tracker.track_this (temp_now);

The use of the template class would cause the compiler to generate code for the max_tracker class, substituting the int type for T in the class template. You could also declare a max_tracker<float> object, and the compiler would instantiate another set of code to use floating-point values. This magic of instantiating class template code brings us to the first area ACE helps you with—getting the needed classes instantiated correctly.

Template Instantiation

Many modern C++ compilers automatically “remember” which template instantiations your code needs and generate them as a part of the final link phase. In these cases, you're all set and don't need to do anything else. For one or more of the following reasons, however, you may need to take further steps regarding instantiation.

• You need to port your system to another platform on which the compiler isn't so accommodating.

• Your compiler is really slow when doing its magical template autoinstantiation, and you'd like to control it yourself, speeding up the build process.

Compilers provide a variety of directives and methods to specify template instantiation. Although the intricacies of preprocessors and compilers make it extremely difficult to provide one simple statement for specifying instantiation, ACE provides a boilerplate set of source lines you should use to control explicit template instantiation. The best way to explain it is to show its use. In the preceding example code, the following code would be added at the end of the source file:

#if defined (ACE_HAS_EXPLICIT_TEMPLATE_INSTANTIATION)
template class max_tracker<int>;
#elif defined (ACE_HAS_TEMPLATE_INSTANTIATION_PRAGMA)
#pragma instantiate max_tracker<int>
#endif /* ACE_HAS_EXPLICIT_TEMPLATE_INSTANTIATION */

This code will successfully build on all compilers that correctly handle automatic instantiation, as well as all compilers ACE has been ported to that customarily use explicit template instantiation for one reason or another. If you have more than one class to instantiate, you add more template class and #pragma instantiate lines to cover them.

Be careful when deciding to explicitly instantiate templates in projects that use the standard C++ library. The templates in the standard library often use other, not so obvious, templates, and it's easy to end up in a maze of instantiation statements that change between compilers. It's much safer to let the compiler automatically instantiate template classes whenever possible.

Use of Types Defined in Classes That Are Template Arguments

A number of ACE classes define types as traits of that class. For example, the ACE_SOCK_Stream class defines ACE_INET_Addr as the PEER_ADDR type. The other stream-abstracting classes also define a PEER_ADDR type internally to define the type of addressing information needed for that class. This use of traits will be seen in more detail in Section 7.6 when using the ACE_Svc_Handler class and in Chapter 17 when discussing ACE_Malloc. For now, though, view the use of traits as an example of this problem: If a template class can be used with one of these trait-defining classes as a template argument, the template class may need to know and use the trait type.

You'd think that a trait type could be reached through the template argument, just as it is when the class is used by itself (for example, as ACE_SOCK_Stream::PEER_ADDR). But not so; some older compilers disallow that. ACE works around the limitation and keeps your code compiler neutral by defining a set of macros for the cases in ACE when the information is required together. In the case of the ACE_SOCK_Stream class's usage, when the addressing trait is also needed, ACE defines ACE_SOCK_STREAM, which expands to include the addressing type for compilers that don't support typedefs in classes used as template arguments. It expands to ACE_SOCK_Stream for compilers that do support it. The many uses of this tactic in ACE will be noted for you at the points in the book where their use comes up.
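For comparison, this is what the trait lookup looks like on a compiler that supports it. Inet_Addr, Sock_Stream, and Peer_Handler are hypothetical stand-ins for ACE_INET_Addr, ACE_SOCK_Stream, and a handler template such as ACE_Svc_Handler. The sketch does not reproduce ACE's macros, which exist precisely for compilers that reject this form.

```cpp
#include <string>
#include <type_traits>

// Hypothetical stand-ins for ACE_INET_Addr and ACE_SOCK_Stream.
struct Inet_Addr {
  std::string host;
  unsigned short port;
};

class Sock_Stream {
public:
  typedef Inet_Addr PEER_ADDR;   // addressing trait
};

// A template that needs its argument's addressing trait.
template <class STREAM>
class Peer_Handler {
public:
  // On a conforming compiler the trait is reached through the argument;
  // 'typename' tells the compiler that STREAM::PEER_ADDR names a type.
  typedef typename STREAM::PEER_ADDR addr_type;

  void peer_addr (const addr_type &a) { this->peer_ = a; }
  const addr_type &peer_addr () const { return this->peer_; }

private:
  STREAM stream_;
  addr_type peer_;
};

static_assert (std::is_same<Peer_Handler<Sock_Stream>::addr_type,
                            Inet_Addr>::value,
               "handler picks up the stream's PEER_ADDR trait");
```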

1.6.2 Data Types

Programs you must port to multiple platforms need a way to avoid implicit reliance on the hardware size of compiler types and the relationships between them. For example, on some hardware/compiler combinations, an int is 16 bits; in others, it is 32 bits.2 On some platforms, a long int is the same as an int, which is the same length as a pointer; on others, they're all different sizes. ACE provides a set of type definitions for use when the size of a data value really matters and must be the same on all platforms your code runs on. They are listed in Table 1.1, with some other definitions that are useful in multiple-platform development. If you use these types, your code will use properly sized types on all ACE-supported platforms.
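In ACE code you would use the ACE-provided types. To show the underlying idea with nothing but standard C++, the sketch below defines hypothetical project typedefs (my_int16, my_uint32, my_uint64) on top of <cstdint> and lets the compiler verify their sizes. The total_bytes() helper, also hypothetical, shows why the sizes matter: widening to an explicitly 64-bit type before multiplying gives the same result whether long is 32 or 64 bits on the target.

```cpp
#include <climits>
#include <cstdint>

// Hypothetical project typedefs in the spirit of ACE's fixed-size types,
// built here on the standard <cstdint> types.
typedef std::int16_t  my_int16;
typedef std::uint32_t my_uint32;
typedef std::uint64_t my_uint64;

// Fail the build, rather than misbehave at run time, if a size is wrong.
static_assert (sizeof (my_int16)  * CHAR_BIT == 16, "my_int16 must be 16 bits");
static_assert (sizeof (my_uint32) * CHAR_BIT == 32, "my_uint32 must be 32 bits");
static_assert (sizeof (my_uint64) * CHAR_BIT == 64, "my_uint64 must be 64 bits");

// Total size of 'count' records of 'record_size' bytes. The product is
// computed in 64 bits, so it cannot overflow for any pair of 32-bit inputs.
inline my_uint64 total_bytes (my_uint32 count, my_uint32 record_size)
{
  return static_cast<my_uint64> (count) * record_size;
}
```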

1.6.3 Runtime Initialization and Rundown

One particular area of platform difference and incompatibility is runtime initialization of objects and the associated destruction of those objects at program rundown. This difference is particularly true when multiple threads are involved, as there is no compiler-added access serialization to the time-honored method of automatic initialization and destruction of runtime data: static objects. So remember this ACE saying: Statics are evil! Fortunately, ACE provides a portable solution to this problem in the form of three related classes:

• ACE_Object_Manager. As its name implies, this class manages objects. The ACE library contains a single instance of this class, which when initialized instantiates a set of ACE objects that are needed to properly support the ACE internals and also destroys them at rundown time. ACE programs can make use of the Object Manager by registering objects that must be destroyed. The Object Manager destroys all the objects registered with it at rundown time, in reverse order of their registration.

Table 1.1. Data Types Defined by ACE

• ACE_Cleanup. ACE_Object_Manager uses this class's interface to manage object life cycles. Each object to be registered with the Object Manager must be derived from ACE_Cleanup.

• ACE_Singleton. The Singleton pattern [3] is used to provide a single instance of an object to a program and provide a global way to access it. This object instance is similar to a global object in that it's not hidden from any part of the program. However, the instantiation and deletion of the object are under the control of your program, not the platform's runtime environment. ACE's singleton class also adds thread safety to the basic Singleton pattern by using the Double-Checked Locking Optimization pattern [5]. The double-checked lock ensures that only one thread initializes the object in a multithreaded system.
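The Object Manager's central job, destroying registered objects in reverse order of registration, can be sketched in a few lines. Toy_Cleanup and Toy_Object_Manager are hypothetical, simplified analogs of ACE_Cleanup and ACE_Object_Manager, not their real implementations. Unlike ACE_Object_Manager::at_exit(), which is static, the toy's at_exit() is a member function, so the sketch needs no static state; the manager is an ordinary object whose destructor performs the rundown. Named_Resource is a hypothetical registered object that records when it is destroyed.

```cpp
#include <string>
#include <vector>

// Hypothetical analog of ACE_Cleanup. By default a registered object is
// assumed to be heap-allocated, and cleanup() deletes it.
class Toy_Cleanup {
public:
  virtual ~Toy_Cleanup () {}
  virtual void cleanup () { delete this; }
};

// Hypothetical analog of ACE_Object_Manager: its destructor runs down
// every registered object, most recently registered first.
class Toy_Object_Manager {
public:
  Toy_Object_Manager () {}
  ~Toy_Object_Manager () { this->run_down (); }

  void at_exit (Toy_Cleanup *obj) { this->objects_.push_back (obj); }

  void run_down ()
  {
    while (!this->objects_.empty ())
      {
        Toy_Cleanup *obj = this->objects_.back ();  // reverse order
        this->objects_.pop_back ();
        obj->cleanup ();
      }
  }

private:
  Toy_Object_Manager (const Toy_Object_Manager &);             // not copyable
  Toy_Object_Manager &operator= (const Toy_Object_Manager &);
  std::vector<Toy_Cleanup *> objects_;
};

// Hypothetical registered object that logs its own destruction.
class Named_Resource : public Toy_Cleanup {
public:
  Named_Resource (const std::string &name, std::vector<std::string> &log)
    : name_ (name), log_ (log) {}
  ~Named_Resource () { this->log_.push_back (this->name_); }

private:
  std::string name_;
  std::vector<std::string> &log_;
};
```

For example, registering heap-allocated resources "first" and then "second" with a Toy_Object_Manager on the stack destroys "second" before "first" when the manager goes out of scope.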

The two most common ways to be sure that your objects are properly cleaned up at program rundown are described next. Which one you use depends on the situation. If you want to create a number of objects of the same class and be sure that each is cleaned up, use the ACE_Cleanup method. If you want one instance of your object accessible using the Singleton pattern, use the ACE_Singleton method.

• ACE_Cleanup method. To be able to create a number of objects of a class and have them be cleaned up by the ACE Object Manager, derive your class from ACE_Cleanup, which has a cleanup() method that the Object Manager calls to do the cleanup. To tell the Object Manager about your object—so it knows to clean it up—you make one simple call, probably from your object's constructor:

ACE_Object_Manager::at_exit (this);

• ACE_Singleton method. Use the ACE_Singleton class to create one and only one instance of your object. This class uses the Adapter pattern [3] to turn any ordinary class into a singleton optimized with the Double-Checked Locking Optimization pattern. ACE_Singleton is a template class with this definition:

template <class TYPE, class ACE_LOCK>
class ACE_Singleton : public ACE_Cleanup

TYPE is the name of the class you are turning into a singleton. ACE_LOCK is the type of lock the Double-Checked Locking Optimization pattern implementation uses to serialize checking when the object needs to be created. For code that operates in a multithreaded environment, use ACE_Recursive_Thread_Mutex. If you are writing code without threads, you should use ACE_Null_Mutex, which provides the proper interface but does not lock anything. ACE_Null_Mutex is useful when your main reason for choosing the Singleton pattern is its “instantiate when needed” semantics, along with having the Object Manager clean up the object. As an example, assume that you have a class named SystemController and that you want a single instance of it. You would define your class and then define a singleton type for it:

typedef ACE_Singleton<SystemController,
                      ACE_Recursive_Thread_Mutex> TheSystemController;

When you need access to the instance of that object, you get a pointer to it from anywhere in your system by calling its instance() method. For example:

SystemController *s = TheSystemController::instance();
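To see what ACE_Singleton does on your behalf, here is a minimal sketch of a singleton template parameterized by its lock type and using the Double-Checked Locking Optimization. Toy_Singleton and Null_Mutex are hypothetical analogs of ACE_Singleton and ACE_Null_Mutex, written with C++11 std::atomic and std::mutex rather than ACE's own primitives; std::mutex plays the role of the thread mutex, and SystemController here is a placeholder class. Unlike ACE_Singleton, the sketch never destroys its instance, because it omits registration with an Object Manager.

```cpp
#include <atomic>
#include <mutex>

// No-op lock for single-threaded code (hypothetical analog of ACE_Null_Mutex).
class Null_Mutex {
public:
  void lock () {}
  void unlock () {}
};

// Hypothetical Toy_Singleton<TYPE, LOCK>, shaped like ACE_Singleton.
// The lock is taken only while the instance is still missing; once it
// exists, callers see it on the first, lock-free check.
template <class TYPE, class LOCK>
class Toy_Singleton {
public:
  static TYPE *instance ()
  {
    TYPE *p = instance_.load (std::memory_order_acquire);  // 1st check, no lock
    if (p == nullptr)
      {
        std::lock_guard<LOCK> guard (lock_);
        p = instance_.load (std::memory_order_relaxed);    // 2nd check, locked
        if (p == nullptr)
          {
            p = new TYPE;
            instance_.store (p, std::memory_order_release);
          }
      }
    return p;
  }

private:
  static std::atomic<TYPE *> instance_;
  static LOCK lock_;
};

template <class TYPE, class LOCK>
std::atomic<TYPE *> Toy_Singleton<TYPE, LOCK>::instance_ (nullptr);

template <class TYPE, class LOCK>
LOCK Toy_Singleton<TYPE, LOCK>::lock_;

// Placeholder class standing in for the text's SystemController.
class SystemController {
public:
  int status () const { return 0; }
};

typedef Toy_Singleton<SystemController, std::mutex> TheSystemController;
```

Instantiating the template with Null_Mutex instead of std::mutex keeps the same “instantiate when needed” behavior while removing the locking cost in single-threaded code.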

As we've seen, the ACE Object Manager is quite a useful and powerful object. You need to remember only two rules in order to successfully make use of this facility.

- Never call exit() directly.

- Make sure that the Object Manager is initialized successfully.

The first is easy. Remember all the registrations with the Object Manager to request proper cleanup at rundown time? If you call exit(), your program will terminate directly, without the Object Manager's having a chance to clean anything up. Instead, have your main() function simply do a return to end. If your program encounters a fatal condition inside a function call tree, either return, passing error indications, or throw an exception that your main() function will catch so it can cleanly return after cleaning up. In a pinch, you may call ACE_OS::exit() instead of the “naked” exit(). That will ensure that the Object Manager gets a chance to clean up.
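The “return, don't exit()” advice can be sketched like this. Fatal_Error, load_config(), and app_main() are hypothetical names; app_main() holds what would be the body of your main(), and the final comment shows how main() would call it. A failure deep in the call tree becomes an exception that propagates back to the top level, which then returns normally instead of terminating the process on the spot.

```cpp
#include <iostream>
#include <stdexcept>
#include <string>

// Hypothetical exception type for unrecoverable conditions.
class Fatal_Error : public std::runtime_error {
public:
  explicit Fatal_Error (const std::string &what)
    : std::runtime_error (what) {}
};

// Deep in the call tree: report the problem instead of calling exit().
void load_config (bool config_readable)
{
  if (!config_readable)
    throw Fatal_Error ("configuration file is unreadable");
}

// Body of main(): catch fatal errors and return, so that objects built
// around main() (such as ACE's Object Manager) are run down normally.
int app_main (bool config_readable)
{
  try
    {
      load_config (config_readable);
      // ... normal processing ...
      return 0;
    }
  catch (const Fatal_Error &e)
    {
      std::cerr << "fatal: " << e.what () << '\n';
      return 1;
    }
}

// int main (int, char *[]) { return app_main (true); }
```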

Object Manager initialization is an important concept to understand. Once you understand it, you can often forget about it for a number of platforms. The Object Manager is, effectively, a Singleton. Because it is created before any code has a chance to create threads, the Object Manager doesn't need the same protection that Singletons generally require. And, what object would the Object Manager register with for cleanup? The cleanup chain has to end somewhere, and this is where. As we said, statics are generally evil.

However, on some platforms, statics are not completely evil, and the Object Manager is in fact a static object, initialized and destroyed properly by the platform's runtime environment, even when used in combination with shared libraries, some of which are possibly loaded and unloaded dynamically. On these platforms,3 you can usually ignore the rule for properly initializing the Object Manager.4 On the others, however, the Object Manager needs to be initialized and terminated explicitly. For use cases in which you are writing a regular C++ application that has the standard int main (int argc, char *argv[]) entry point or the wide-character-enabled ACE_TMAIN entry point shown in Section 1.7, ACE magically redefines your main() function and inserts its own, which instantiates the Object Manager on the runtime stack and then calls your main() function. When your main() function returns, the Object Manager is run down as well. (This is an example of why your code should not call exit(): You'd bypass the Object Manager cleanup.)
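As a rough model of what the substituted main() does, the sketch below places a hypothetical, greatly simplified object manager on the runtime stack, calls the application's own entry point, and runs registered cleanups when that entry point returns. ACE's real Object Manager does far more, and the wrapping happens automatically rather than through an explicit call; SketchObjectManager, at_exit(), and run_with_object_manager() are illustrative names only.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical, greatly simplified stand-in for the ACE Object Manager:
// it records cleanup actions and runs them, newest first, when destroyed.
class SketchObjectManager {
public:
  SketchObjectManager () { current_ = this; }
  SketchObjectManager (const SketchObjectManager &) = delete;
  SketchObjectManager &operator= (const SketchObjectManager &) = delete;

  ~SketchObjectManager () {
    // Rundown: run registered cleanups in reverse order of registration.
    while (!cleanups_.empty ()) {
      std::function<void ()> cleanup = std::move (cleanups_.back ());
      cleanups_.pop_back ();
      cleanup ();
    }
    current_ = nullptr;
  }

  // Access point for code running inside the application's entry point.
  static SketchObjectManager *instance () { return current_; }

  // Register an action to run when the manager is run down.
  void at_exit (std::function<void ()> cleanup) {
    cleanups_.push_back (std::move (cleanup));
  }

private:
  std::vector<std::function<void ()>> cleanups_;
  static SketchObjectManager *current_;
};

SketchObjectManager *SketchObjectManager::current_ = nullptr;

// Conceptually what the substituted main() does: create the manager on the
// runtime stack, call the application's own entry point, and let the
// manager's destructor run everything down once that entry point returns.
int run_with_object_manager (int (*user_main) (int, char *[]),
                             int argc, char *argv[]) {
  SketchObjectManager manager;
  return user_main (argc, argv);
}
```

Because the manager lives in the wrapper's stack frame, anything that bypasses the normal return path, such as a direct exit() call, also bypasses its destructor and therefore every registered cleanup.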

If your application does not have a standard main() function but needs to initialize and run down the Object Manager, you need to call two functions:

• ACE::init() to initialize the Object Manager before any other ACE operations are performed.

• ACE::fini() to run down the Object Manager after all your ACE operations are complete. This call will trigger the cleanup of all objects registered with the Object Manager.

This may be necessary for Windows programs that have a WinMain() function rather than the standard main() function and for libraries that use ACE when the library's users do not. For libraries, it is advantageous to add initialize and finalize functions to the library's API and have those functions call ACE::init() and ACE::fini(), respectively. In case you're writing a Windows DLL (dynamic link library) and thinking you can make the calls from DllMain(), don't go there. It's been tried.

You can also build ACE on Windows with

#define ACE_HAS_NONSTATIC_OBJECT_MANAGER 0

in your config.h file before including ace/config-win32.h. (Chapter 2 describes ACE's configuration.) This removes the need to explicitly call ACE::init() and ACE::fini() but may cause problems when using dynamic services.

1.6.4 Allocating Heap Memory

Dynamic memory allocation from the runtime heap is a common need in most programs. If you've used memory allocation functions from C, you've certainly written code like this:

char *c = (char *)malloc (64);
if (c == 0)
  exit(1); /* Out of memory! */

When transitioning to C++, this sort of code was replaced by:

char *c = new char[64];
if (c == 0)
  exit(1); /* Out of memory! */

Straightforward stuff, until the C++ standard settled down. Now operator new() throws an exception (std::bad_alloc) if the memory allocation request fails. However, you probably need to develop code that runs both in C++ runtime environments that return 0 on failure and in those that throw an exception. ACE also supports a mix of these environments, so it uses a set of macros that adapt the compiler's model to the one ACE expects. Because ACE was built in the days when a failed allocation returned a 0 pointer, it uses that model internally and adapts the newer exception-throwing model to it. With the macros, the preceding code example becomes:

char *c;
ACE_NEW_NORETURN (c, char[64]);
if (c == 0)
  exit(1); /* Out of memory! */

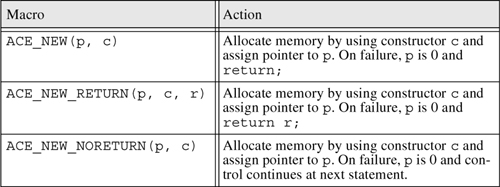

The complete set of ACE memory allocation macros is listed in Table 1.2.
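To show the kind of adaptation described above, here is a hypothetical macro, SKETCH_NEW_NORETURN, that turns a throwing operator new into the "0 on failure" model by catching std::bad_alloc and assigning 0 to the pointer; it also sets errno to ENOMEM. It is a sketch in the spirit of ACE_NEW_NORETURN, not ACE's actual definition, which depends on how ACE is configured for your compiler.

```cpp
#include <cassert>
#include <cerrno>
#include <new>

// Hypothetical macro illustrating the adaptation: on allocation failure it
// sets errno to ENOMEM, sets POINTER to 0, and lets execution continue
// instead of propagating std::bad_alloc.
#define SKETCH_NEW_NORETURN(POINTER, CONSTRUCTOR) \
  do {                                            \
    try {                                         \
      POINTER = new CONSTRUCTOR;                  \
    } catch (const std::bad_alloc &) {            \
      errno = ENOMEM;                             \
      POINTER = 0;                                \
    }                                             \
  } while (0)

// The text's example, wrapped in a function: returns a 64-byte buffer,
// or 0 if memory is exhausted.
char *make_buffer () {
  char *c;
  SKETCH_NEW_NORETURN (c, char[64]);
  return c;  // the caller tests for 0 rather than catching std::bad_alloc
}
```

The do { ... } while (0) wrapper lets the macro be used like a single statement, including in an unbraced if/else.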

1.7 Using Both Narrow and Wide Characters

Developers outside the United States are acutely aware that many character sets in use today require more than one byte, or octet, to represent each character. Characters that require more than one octet are referred to as “wide characters.” The most popular wide-character standard is ISO/IEC 10646, the Universal Multiple-Octet Coded Character Set (UCS). Unicode is a separate standard, but its character repertoire is kept synchronized with UCS; its original 16-bit form corresponds to UCS-2, which uses two octets for each character. Many Windows programmers are familiar with Unicode.

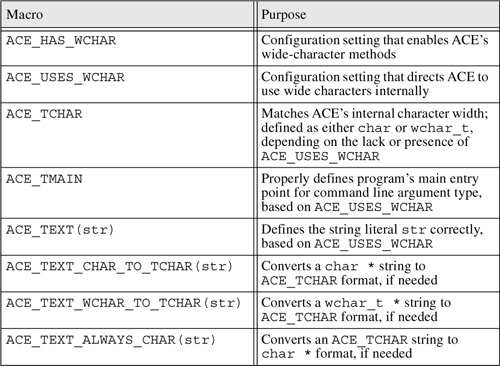

C++ represents wide characters with the wchar_t type, which enables methods to offer multiple signatures that are differentiated by their character type. Wide characters have a separate set of C string manipulation functions, however, and existing C++ code, such as code containing string literals, must be changed for wide-character use. As a result, programming applications to use wide-character strings can become expensive, especially when applications written initially for U.S. markets must be internationalized for other countries. To improve portability and ease of use, ACE uses C++ method overloading and the macros described in Table 1.3 to use different character types without changing APIs.

For applications to use wide characters, ACE must be built with the ACE_HAS_WCHAR configuration setting, which most modern platforms are capable of. Moreover, ACE must be built with the ACE_USES_WCHAR setting if ACE should also use wide characters internally. The ACE_TCHAR and ACE_TEXT macros are illustrated in examples throughout this book.
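The following standard C++ sketch shows how a character-type typedef, a string-literal macro, and overloads let one body of source build as either narrow or wide. SKETCH_USES_WCHAR, SKETCH_TCHAR, SKETCH_TEXT, and text_length() are hypothetical stand-ins for ACE_USES_WCHAR, ACE_TCHAR, ACE_TEXT, and ACE's overloaded methods; ACE's own definitions are more involved.

```cpp
#include <cassert>
#include <cstring>
#include <cwchar>

// Hypothetical build switch standing in for ACE_USES_WCHAR; define it
// (for example, -DSKETCH_USES_WCHAR) to build the same source wide.
#if defined (SKETCH_USES_WCHAR)
typedef wchar_t SKETCH_TCHAR;      // plays the role of ACE_TCHAR
#  define SKETCH_TEXT(s) L##s      // plays the role of ACE_TEXT
#else
typedef char SKETCH_TCHAR;
#  define SKETCH_TEXT(s) s
#endif

// Overloads differentiated only by character type, so callers need not
// know which width the build selected.
inline std::size_t text_length (const char *s) { return std::strlen (s); }
inline std::size_t text_length (const wchar_t *s) { return std::wcslen (s); }

// Written once against SKETCH_TCHAR; compiles in either configuration.
inline std::size_t greeting_length () {
  const SKETCH_TCHAR *greeting = SKETCH_TEXT ("hello");
  return text_length (greeting);
}
```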

Table 1.2. ACE Memory Allocation Macros

Table 1.3. Macros Related to Character Width

ACE also supplies two string classes—ACE_CString and ACE_WString—that hold narrow and wide characters, respectively. These classes are analogous to the standard C++ string class but can be configured to use custom memory allocators and are more portable. ACE_TString is a typedef for one of the two string types depending on the ACE_USES_WCHAR configuration setting.
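One way to express the ACE_TString idea with the standard library is sketched below: narrow and wide string typedefs, plus a third that follows a build setting. SKETCH_USES_WCHAR and the Sketch* names are hypothetical; std::string and std::wstring stand in for ACE_CString and ACE_WString, and std::conditional selects the type here rather than a preprocessor-chosen typedef.

```cpp
#include <cassert>
#include <string>
#include <type_traits>

// Hypothetical compile-time flag standing in for the ACE_USES_WCHAR setting.
#if defined (SKETCH_USES_WCHAR)
constexpr bool kUsesWideChars = true;
#else
constexpr bool kUsesWideChars = false;
#endif

// Narrow and wide string types, plus a "T" alias that follows the build
// setting in the way the text describes for ACE_TString.
typedef std::string  SketchCString;
typedef std::wstring SketchWString;
typedef std::conditional<kUsesWideChars, SketchWString, SketchCString>::type
    SketchTString;

// Code written against SketchTString works with whichever width was chosen.
inline SketchTString::size_type count_char (const SketchTString &s,
                                            SketchTString::value_type ch) {
  SketchTString::size_type n = 0;
  for (SketchTString::value_type c : s)
    if (c == ch)
      ++n;
  return n;
}
```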

1.8 Where to Find More Information and Support

The number and scope of items ACE helps with, from compiling to runtime, a perusal of the Contents, and not least the size of this book all show how much ground ACE covers. You may be starting to wonder where you can find more information about ACE, how to use all its features, and where to get more help. A number of resources are available: ACE reference documentation, ACE kits, user forums, and technical support.

The ACE reference documentation is generated from specially tagged comments in the source code itself, using a tool called Doxygen.5 That reference documentation is available in the following places:

• On the CD-ROM included with this book

• At Riverace's web site: http://www.riverace.com/docs/

• At the DOC group's web site, which includes the reference documentation for ACE and TAO (The ACE ORB) for the most recent stable version, the most recent beta version, and the nightly development snapshot: http://www.dre.vanderbilt.edu/Doxygen/

ACE is, of course, freely available in a number of forms from the following locations.

• The complete ACE 5.3b source and prebuilt kits for selected platforms are on the included CD-ROM.

• Kits for the currently supported ACE versions are available at Riverace’s web site: http://www.riverace.com.

• Complete sources for the current release, current BFO (bug fix only) beta, and the most recent beta versions of ACE are available from the DOC group's web site: http://download.dre.vanderbilt.edu/. The various types of kits are explained in Section 2.1.

A number of ACE developer forums are available via e-mail and Usenet news. The authors and the ACE developers monitor traffic to these forums. These people, along with the ACE user community at large, are very helpful with questions and problems. If you post a question or a problem to any of the following forums, please include the information in the PROBLEM-REPORT-FORM file located in the top-level directory of the ACE source kit:

• comp.soft-sys.ace newsgroup.

• [email protected] mailing list. To join this list, send a request to [email protected]. Include the following command in the body of the e-mail:

subscribe ace-users [emailaddress@domain]

You must supply emailaddress@domain only if your message's From address is not the address you wish to subscribe. If you use this alternative address method, the list server will require an extra authorization step before allowing you to join the list.

Messages posted to the ace-users list are forwarded to the comp.soft-sys.ace newsgroup, but not vice versa. You can search an archive of the comp.soft-sys.ace newsgroup at http://groups.google.com.

Although the level of friendly assistance on the ACE user forums is extraordinary, it is all on a best-effort, volunteer basis. If you're developing commercial projects with ACE, you may benefit greatly from the technical support services that are available. Using technical support allows you and your development team to stay focused on your project instead of needing to learn all about ACE's internals and to get involved in toolkit maintenance.

Riverace Corporation is the premier support provider for the ACE toolkit. You can learn all about Riverace's services at http://www.riverace.com. A number of other companies have recently begun providing support services for ACE and TAO worldwide. A current list is available at http://www.cs.wustl.edu/~schmidt/commercial-support.html.

1.9 Summary

This chapter introduced the ACE toolkit's organization and described some foundational concepts and techniques that you'll need to work with ACE. The chapter covered some helpful macros and facilities provided to smooth out the differences among C++ compilers and runtime environments, showed how to take advantage of both narrow and wide characters, and, finally, listed some sources of further information about ACE and the available services to help you make the most of ACE.